- 1Department of Mathematics, Queens College, CUNY, Queens, NY, United States

- 2Department of Statistics, The Wharton School of the University of Pennsylvania, Philadelphia, PA, United States

- 3Department of Psychology, University of Pennsylvania, Philadelphia, PA, United States

We present methodological advances in understanding the effectiveness of personalized medicine models and supply easy-to-use open-source software. Personalized medicine involves the systematic use of individual patient characteristics to determine which treatment option is most likely to result in a better average outcome for the patient. Why is personalized medicine not done more in practice? One of many reasons is because practitioners do not have any easy way to holistically evaluate whether their personalization procedure does better than the standard of care, termed improvement. Our software, “Personalized Treatment Evaluator” (the R package PTE), provides inference for improvement out-of-sample in many clinical scenarios. We also extend current methodology by allowing evaluation of improvement in the case where the endpoint is binary or survival. In the software, the practitioner inputs 1) data from a single-stage randomized trial with one continuous, incidence or survival endpoint and 2) an educated guess of a functional form of a model for the endpoint constructed from domain knowledge. The bootstrap is then employed on data unseen during model fitting to provide confidence intervals for the improvement for the average future patient (assuming future patients are similar to the patients in the trial). One may also test against a null scenario where the hypothesized personalization are not more useful than a standard of care. We demonstrate our method’s promise on simulated data as well as on data from a randomized comparative trial investigating two treatments for depression.

1 Introduction

Personalized medicine, sometimes called “precision medicine” or “stratified medicine” (Smith, 2012), is a medical paradigm offering the possibility for improving the health of individuals by judiciously treating individuals based on his or her heterogeneous prognostic or genomic information (Zhao and Zeng, 2013). These approaches have been described under the umbrella of “P4 medicine,” a systems approach that combines predictive, personalized, preventive and participatory features to improve patient outcomes (Weston and Hood, 2004; Hood and Friend, 2011). And the interest in such personalization is exploding.

Fundamentally, personalized medicine is a statistical problem. However, much recent statistical research has focused on how to best estimate dynamic treatment regimes or adaptive interventions (Collins et al., 2004; Chakraborty and Murphy, 2014). These are essentially strategies that vary treatments administered over time as more is learned about how particular patients respond to one or more interventions. Elaborate models are often proposed that purport to estimate optimal dynamic treatment regimes from multi-stage experiments (Murphy, 2005b) as well as the more difficult situation of inference in observational studies.

Thus, the extant work, at least in the field of statistics, is highly theoretical. There is a dearth of software that can answer two fundamental questions practitioners will need answered before they can personalize future patients’ treatments: 1) How much better is this personalization model expected to perform when compared to my previous “naive” strategy for allocating treatments? 2) How confident can I be in this estimate? Can I reject a null hypothesis that it will perform no better than the standard of care? Chakraborty and Moodie (2013), page 168 believe that “more targeted research is warranted” on these important questions; and the goal of our paper is to provide a framework and usable software that fills in part of this gap.

Personalized medicine is a broad paradigm encompassing many real-world situations. The setting we focus on herein is a common one: using previous randomized comparative/controlled trial (RCT) data to be able to make better decisions for future patients. We consider RCTs with two treatment options (two-arm), with one endpoint measure (also called the “outcome” or “response” which can be continuous, binary or survival) and where the researchers also collected a variety of patient characteristics to be used for personalization. The practitioner also has an idea of a model of the response (usually a simple first-order interaction model). Although this setting is simplistic, our software can then answer the two critical questions listed above.

Our advances are modest but important for practitioners. 1) Practitioners now have easy-to-use software that automates the testing of their personalization models. 2) We introduce a more intuitive metric that gauges how well the personalization is performing: “improvement” vs. a baseline strategy. 3) Our estimates and hypothesis tests of improvement are all cross-validated, making the estimates honest even when the data at hand was overfit and thereby generalizable to future patients. This external validity is only possible if future patients can be thought to come from the same population as the clinical trial, a generalization that is debated but beyond the scope of our work. 4) We have extended this well-established methodology to the setting of binary and survival endpoints, the most common endpoints in clinical trials.

The paper proceeds as follows. In Section 2, we review the modern personalized medicine literature and locate our method and its limitations within. Section 3 describes our methods and its limitations in depth, by describing the conceptual framework emphasizing our methodological advances. We then carefully specify the data and model inputs, define the improvement metric, and illustrate a strategy for providing practitioners with estimates and inference. Section 4 applies our methods to 1) a simple simulated dataset in which the response model is known, 2) a more complicated dataset characterized by an unknown response model and 3) a real data set from a published clinical trial investigating two treatments for major depressive disorder. Section 5 demonstrates the software for all three types of endpoints: continuous, binary and survival. Section 6 concludes and offers future directions of which there are many.

2 Background

Consider an individual seeking one of two treatments, neither of which is known to be superior for all individuals. “What treatment, by whom, is most effective for this individual with that specific problem, and under which set of circumstances?” (Paul, 1967).1 Sometimes practitioners will select a treatment based informally on personal experience. Other times, practitioners may choose the treatment that their clinic or peers recommend. If the practitioner happens to be current on the research literature and there happens to be a published RCT whose results have clear clinical implications, the study’s superior treatment (on average) may be chosen.

Each of these approaches can sometimes lead to improved outcomes, but each also can be badly flawed. For example, in a variety of clinical settings, “craft lore” has been demonstrated to perform poorly, especially when compared to very simple statistical models (Dawes, 1979). It follows that each of these “business-as-usual” treatment allocation procedures can in principle be improved if there are patient characteristics available which are related to how well an intervention performs.

Personalized medicine via the use of patient characteristics is by no means a novel idea. As noted as early as 1865, “the response of the average patient to therapy is not necessarily the response of the patient being treated” (translated by Bernard, 1957). There is now a substantial literature addressing numerous aspects of personalized medicine. The field is quite fragmented: there is literature on treatment-covariate interactions, locating subgroups of patients as well as literature on personalized treatment effects estimation. However, a focus on inference is rare in the literature and available software for inference is negligible.

Byar (1985) provides an early review of work involving treatment-covariate interactions. Byar and Corle (1977) investigates tests for treatment-covariate interactions in survival models and discusses methods for treatment recommendations based on covariate patterns. Shuster and van Eys (1983) considers two treatments and proposes a linear model composed of a treatment effect, a prognostic factor, and their interaction. Using this model, the authors create confidence intervals to determine for which values of the prognostic factor one of two treatments is superior.

Many researchers also became interested in discovering “qualitative interactions,” which are interactions that create a subset of patients for which one treatment is superior and another subset for which the alternative treatment is superior. Gail and Simon (1985) develop a likelihood ratio test for qualitative interactions which was further extended by Pan and Wolfe (1997) and Silvapulle (2001). For more information and an alternative approach, see Foster (2013).

Much of the early work in detecting these interactions required a prior specification of subgroups. This can present significant difficulties in the presence of high dimensionality or complicated associations. More recent approaches such as Su et al. (2009) and Dusseldorp and Van Mechelen (2014) favor recursive partitioning trees that discover important nonlinearities and interactions. Dusseldorp et al. (2016) introduce an R package called QUINT that outputs binary trees that separate participants into subgroups. Shen and Cai (2016) propose a kernel machine score test to identify interactions and the test has more power than the classic Wald test when the predictor effects are non-linear and when there is a large number of predictors. Berger et al. (2014) discuss a method for creating prior subgroup probabilities and provides a Bayesian method for uncovering interactions and identifying subgroups. Berk et al. (2020) uses trees to both locate subgroups and to provides valid inference on the local heterogeneous treatment effects. They do so by exhaustively enumerating every tree and every node, running every possible test and then providing valid post-selection inference (Berk et al., 2013b).

In our method, we make use of RCT data. Thus, it is important to remember that “clinical trials are typically not powered to examine subgroup effects or interaction effects, which are closely related to personalization … even if an optimal personalized medicine rule can provide substantial gains, it may be difficult to estimate this rule with few subjects” (Rubin and van der Laan, 2012). This is why a major bulk of the literature does not focus on finding covariate-treatment interactions or locating subgroups of individuals, but instead on the entire model itself (called the “regime”) and that entire model is then used to sort individuals. Holistic statements can then be made on the basis of this entire sorting procedure. We turn to some of this literature now.

Zhang et al. (2012a) consider the context of treatment regime estimation in the presence of model misspecification when there is a single-point treatment decision. By applying a doubly robust augmented inverse probability weighted estimator that under the right circumstances can adjust for confounding and by considering a restricted set of policies, their approach can help protect against misspecification of either the propensity score model or the regression model for patient outcome. Brinkley et al. (2010) develop a regression-based framework of a dichotomous response for personalized treatment regimes within the rubric of “attributable risk.” They propose developing optimal treatment regimes that minimize the probability of a poor outcome, and then consider the positive consequences, or “attributable benefit,” of their regime. They also develop asymptotically valid inference for a parameter similar to improvement with business-as-usual as the random (see our Section 3.3), an idea we extend. Within the literature, their work is the closest conceptually to ours. Gunter et al. (2011b) develop a stepwise approach to variable selection and in Gunter et al. (2011a) compare it to stepwise regression. Rather than using a traditional sum-of-squares metric, the authors’ method compares the estimated mean response, or “value,” of the optimal policy for the models considered, a concept we make use of in Section 3. Imai and Ratkovic (2013) use a modified Support Vector Machine with LASSO constraints to select the variables useful in an optimal regime when the response is binary. van der Laan and Luedtke (2015) uses a loss-based super-learning approach with cross-validation.

Also important within the area of treatment regime estimation, but not explored in this paper, is the estimation of dynamic treatment regimes (DTRs). DTRs constitute a set of decision rules, estimated from many experimental and longitudinal intervals. Each regime is intended to produce the highest mean response over that time interval. Naturally, the focus is on optimal DTRs—the decision rules which provide the highest mean response. Murphy (2003) and Robins (2004) develop two influential approaches based on regret functions and nested mean models respectively. Moodie et al. (2007) discuss the relationship between the two while Moodie and Richardson (2009) and Chakraborty et al. (2010) present approaches for mitigating biases (the latter also fixes biases in model parameter estimation stemming from their non-regularity in SMART trials). Robins et al. (2008) focus on using observational data and optimizing the time for administering the stages—the “when to start”—within the DTR. Orellana et al. (2010) develop a different approach for estimating optimal DTRs based on marginal structural mean models. Henderson et al. (2010) develop optimal DTR estimation using regret functions and also focus on diagnostics and model checking. Barrett et al. (2014) develop a doubly robust extension of this approach for use in observational data. Laber et al. (2014) demonstrate the application of set-valued DTRs that allow balancing of multiple possible outcomes, such as relieving symptoms or minimizing patient side effects. Their approach produces a subset of recommended treatments rather than a single treatment. Also, McKeague and Qian (2014) estimate treatment regimes from functional predictors in RCTs to incorporate biosignatures such as brain scans or mass spectrometry.

Many of the procedures developed for estimating DTRs have roots in reinforcement learning. Two widely used methods are Q-learning Murphy (2005a) and A-learning (see Schulte et al., 2014 for an overview of these concepts). One well-noted difficulty with Q-learning and A-learning are their susceptibility to model misspecification. Consequently, researchers have begun to focus on “robust” methods for DTR estimation. Zhang et al. (2013) extend the doubly robust augmented inverse probability weighted method (Zhang et al., 2012a) by considering multiple binary treatment stages.

Many of the methods mentioned above can be extended to censored survival data. Zhao et al. (2015) describe a computationally efficient method for estimating a treatment regimes that maximizes mean survival time by extending the weighted learning inverse probability method. This method is doubly robust; it is protected from model misspecification if either the censoring model or the survival model is correct. Additionally, methods for DTR estimation can be extended. Goldberg and Kosorok (2012) extend Q-learning with inverse-probability-of-censoring weighting to find the optimal treatment plan for individual patients, and the method allows for flexibility in the number of treatment stages.

It has been tempting, when creating these treatment regime models, to directly employ them to predict the differential response of individuals among different treatments. This is called in the literature “heterogeneous treatment effects models” or “individualized treatment rules” and there is quite a lot of interest in it.

Surprisingly, methods designed for accurate estimation of an overall conditional mean of the response may not perform well when the goal is to estimate these individualized treatment rules. Qian and Murphy (2011) propose a two-step approach to estimating “individualized treatment rules” based on single-stage randomized trials using

One current area of research in heterogeneous effect estimation is the development of algorithms that can be used to create finer and more accurate partitions. Kallus (2017) presents three methods for the case of observational data: greedily partitioning data to find optimal trees, bootstrap aggregating to create a “personalization forest” a la Random Forests, and using the tree method coupled with mixed integer programming to find the optimal tree. Lamont et al. (2018) build on the prior methods of parametric multiple imputation and recursive partitioning to estimate heterogeneous treatment effects and compare the performance of both methods. This estimation can be extended to censored data. Henderson et al. (2020) discuss the implementation of Bayesian Additive Regression Trees for estimating heterogeneous effects which can be used for continuous, binary and censored data. Ma et al. (2019) proposes a Bayesian predictive method that integrates multiple sources of biomarkers.

One major drawback of many of the approaches in the literature reviewed is their significant difficulty evaluating estimator performance. Put another way, given the complexity of the estimation procedures, statistical inference is very challenging. Many of the approaches require that the proposed model be correct. There are numerous applications in the biomedical sciences for which this assumption is neither credible nor testable in practice. For example, Evans and Relling (2004) consider pharmacogenomics, and argue that as our understanding of the genetic influences on individual variation in drug response and side-effects improves, there will be increased opportunity to incorporate genetic moderators to enhance personalized treatment. But we will ever truly understand the endpoint model well enough to properly specify it? Further, other biomarkers (e.g. neuroimaging) of treatment response have begun to emerge, and the integration of these diverse moderators will require flexible approaches that are robust to model misspecification (McGrath et al., 2013). How will the models of today incorporate important relationships that can be anticipated but have yet to be identified? Further, many proposed methods employ non-parametric models that use the data to decide which internal parameters to fit and then in turn estimates these internal parameters. Thus a form of model selection that introduces difficult inferential complications (see Berk et al., 2013b).

At the very least, therefore, there should be an alternative inferential framework for evaluating treatment regimes that do not require correct model specification (and thereby obviating the need for model checking and diagnostics) nor knowledge of unmeasured characteristics (see discussion in Henderson et al., 2010) accompanied by easy-to-use software. This is the modest goal herein.

3 Methods

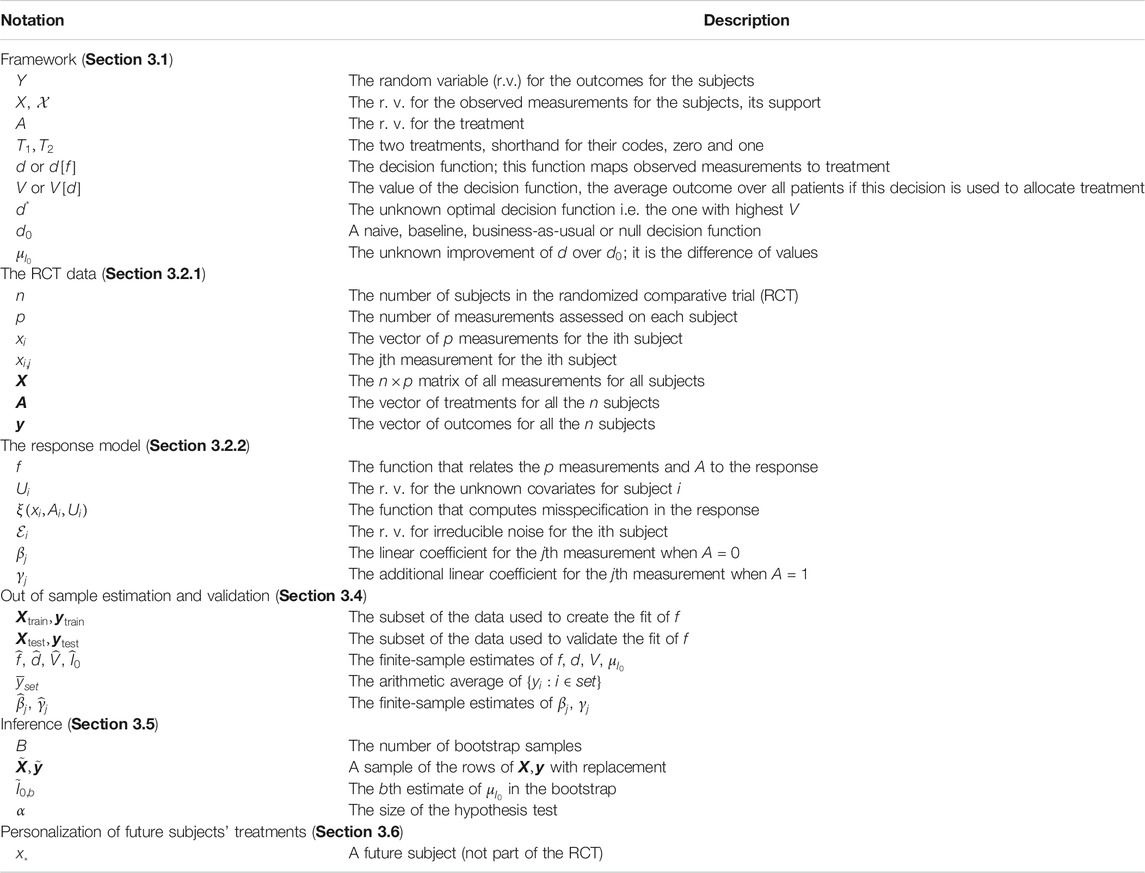

This work seeks to be didactic and thus carefully explains the extant methodology and framework (Section 3.1), data inputs (Section 3.2), estimation (Section 3.4) and a procedure for inference (Section 3.5). For those familiar with this literature, these sections can be skipped. Our methodological contributions then are to 1) employ out-of-sample validation to (Section 3.4) specifically to 2) improvement, the metric defined as the difference in how patients allocated via personalized model fare in their outcome and a patients allocated via business-as-usual model fare in their outcome (Section 3.3) and 3) to extend this validation methodology to binary and survival endpoints (Sections 3.4.2 and 3.4.3). Table 1 serves as a guide to the main notation used in this work, organized by section.

3.1 Conceptual Framework

We imagine a set of random variables having a joint probability distribution that can be also be viewed as a population from which data could be randomly and independently realized. The population can also be imagined as all potential observations that could be realized from the joint probability distribution. Either conception is consistent with our setup.

A researcher chooses one of the random variables to be the response Y which could be continuous, binary or survival (with a corresponding variable that records its censoring, explained later). We assume without loss of generality that a higher-valued outcome is better for all individuals. Then, one or more of the other random variables are covariates

All potential observations are hypothetical study subjects. Each can be exposed to a random treatment denoted

A standard estimation target in RCTs is the population average treatment effect (PATE), defined here as

For personalization, we want to make use of any association between Y and

Although the integral expression appears complicated, when unpacked it is merely an expectation of the response averaged over

We have considered all covariates to be random variables because we envision future patients for whom an appropriate treatment is required. Ideally, their covariate values are realized from the same joint distribution as the covariate values for the study subjects, an assumption that is debated and discussed in the concluding section.

In addition, we do not intend to rely on estimates of the two population response surfaces. As a practical matter, we will make do with a population response surface approximation for each. No assumptions are made about the nature of these approximations and in particular, how well or poorly either population approximation corresponds to the true conditional response surfaces.

Recall that much of the recent literature has been focused on finding the optimal rule,

which is sometimes called “benefit” in the literature. Since our convention is that higher response values are better, we seek large, positive improvements that translate to better average performance (as measured by the response). Note that this is a natural measure when Y is continuous. When Y is incidence or survival, we redefine

The metric

3.2 Our Framework’s Required Inputs

Our method depends on two inputs 1) access to RCT data and 2) either a prespecified parametric model

3.2.1 The RCT Data

Our procedure strictly requires RCT data to ensure there is a causal effect of the heterogeneous parameters. Much of the research discussed in the background (Section 2) applies in the case of observational data. We realize this limits the scope of our proposal. The RCT data must come from an experiment undertaken to estimate the PATE for treatments

There are n subjects each with p covariates which are denoted for the ith subject as

We assume the outcome measure of interest

We will be drawing inference to a patient population beyond those who participated in the experiment. Formally, new subjects must be sampled from that same population as were the subjects in the RCT. In the absence of explicit probability sampling, the case would need to be made that the model can generalize. This requires subject-matter expertise and knowledge of how the study subjects were recruited.

3.2.2 The Model for the Response Based on Observed Measurements

The decision rule d is a function of

As in Berk et al. (2014), we assume the model f provided by the practitioner to be an approximation using the available information,

where f differs from the true response expectation by a term dependent on

We wish only to determine whether an estimate of f is useful for improving treatment allocation for future patients (that are similar to the patients in the RCT) and do not expect to recover the optimal allocation rule

What could f look like in practice? Assume a continuous response (binary and survival are discussed later) and consider the conventional linear regression model with first order interactions. Much of the literature we reviewed in Section 2 favored this class of models. We specify a linear model containing a subset of the covariates used as main effects and a possibly differing subset of the covariates to be employed as first order interactions with the treatment indicator,

These interactions induce heterogeneous effects between

We stress that our models are not required to be of this form, but we introduce them here mostly for familiarity and pedagogical simplicity. There are times when this linear model will perform terribly even if

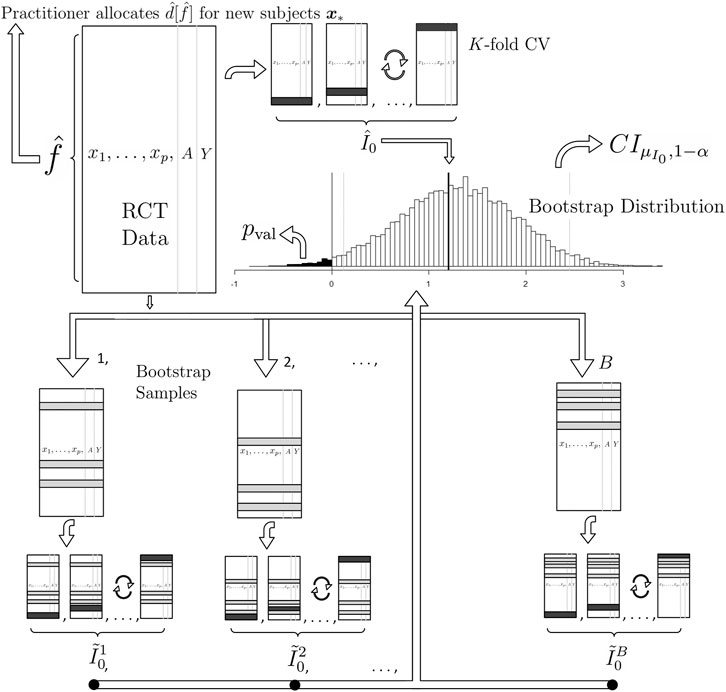

FIGURE 1. A graphical illustration of (1) our proposed method for estimation and (2) our proposed method for inference on the population mean improvement of an allocation procedure and (3) our proposed future allocation procedure (top left of the illustration). To compute the best estimate of the improvement

We also stress that although the theory for estimating linear models’ coefficients (as well as those for logistic regression and Weibull regression) is well-developed, we are not interested in inference for these coefficients in this work as our goal is only estimation and inference for overall usefulness of the personalization scheme, i.e. the unknown parameter

3.3 Other Allocation Procedures

Although

The second business-as-usual procedure we call best. This procedure gives all patients the better of the two treatments as determined by the comparison of the sample average for all subjects who received

3.4 Estimating the Improvement Scores

3.4.1 For a Continuous Response

How well do unseen subjects with treatments allocated by d do on average compared to the same unseen subjects with treatments allocated by

where

In order to properly estimate

Given the estimates

Recall that in the test data, our allocation prediction

The average of the lucky subjects’ responses should estimate the average of the response of new subjects who are allocated to their treatments based on our procedure d and this is the estimate of

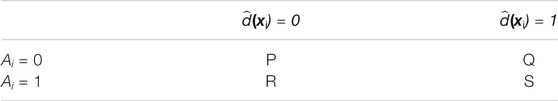

TABLE 2. The elements of

The diagonal entries of sets P and S contain the “lucky” subjects. The notation

How do we compute

Note that the plug-in estimate of value

There is one more conceptual point. Recall that the value estimates

In practice, how large should the training and test splits be? Depending on the size of the test set relative to the training set, CV can trade bias for variance when estimating an out-of-sample metric. Small training sets and large test sets give more biased estimates since the training set is built with less data than the n observations given. However, large test sets have lower variance estimates since they are composed of many examples. There is no consensus in the literature about the optimal training-test split size (Hastie et al., 2013, page 242) but 10-fold CV is a common choice employed in many statistical applications and provides for a relatively fast algorithm. In the limit, n models can be created by leaving each observation out, as done in DeRubeis et al. (2014). In our software, we default to 10-fold cross validation but allow for user customization.

This estimation procedure outlined above is graphically illustrated in the top of Figure 1. We now extend this methodology to binary and survival endpoints in the next two sections.

3.4.2 For a Binary Response

In the binary case, we let V be the expected probability of the positive outcome under d just like in Kang et al. (2014). We consider three improvement metrics 1) the probability difference, 2) the risk ratio and 3) the odds ratio:

and the estimate of all three (the probability difference, the risk ratio and the odds ratio) is found by placing hats on each term in the definitions above (all V’s, d’s and

Following the example in the previous section we employ the analogous model, a logistic linear model with first order treatment interactions where the model f now denotes the probability of the positive outcome

This model, fit via maximum likelihood numerically (Agresti, 2018), is the default in our software implementation. Here, higher probabilities of success imply higher logit values so that algebraically we have the same form of the decision rule estimate,

If the risk ratio or odds ratio improvement metrics are desired, Eqs. 6, 7 are modified accordingly but otherwise estimation is then carried out the same as in the previous section.

3.4.3 For a Survival Response

Survival responses differ in two substantive ways from continuous responses: 1) they are always non-negative and 2) some values are “censored” which means it appropriates the value of the last known measurement but it is certain that the true value is greater. The responses

To obtain

Under the exponential model, the convention is that the noise term

Moreso than for continuous and incidence endpoints, parameter estimation is dependent on the choice of error distribution. Following Hosmer and Lemeshow (1999), a flexible model is to let

Some algebra demonstrates that the estimated decision rule is the same as those above, i.e.

Subjects are then sorted in cells like Table 2 but care is taken to keep the corresponding

Of course we can reemploy a new Weibull model and define improvement as we did earlier as the difference in expectations (Eq. 1). However, there are no more covariates needed at this step as all subjects have been sorted based on

For our default implementation, we have chosen to employ the difference of the Kaplan-Meier median survival statistics here because we intuitively feel that a non-parametric estimate makes the most sense. Once again, the user is free to employ whatever they feel is most appropriate in their context. Given this default, please note that the improvement measure of Eq. 1 is no longer defined as the difference in survival expectations, but now the difference in survival medians. This makes our framework slightly different in the case of survival endpoints.

3.5 Inference for the Population Improvement Parameter

Regardless of the type of endpoint, the

In the context of our proposed methodology, the bootstrap procedure works as follows for the target of inference

In this application, the bootstrap approximates the sampling of many RCT datasets. Each

To create a

If a higher response is better for the subject, we set

We would like to stress once again that we are not testing for qualitative interactions—the ability of a covariate to “flip” the optimal treatment for subjects. Tests for such interactions would be hypothesis tests on the γ parameters of Eqs. 4, 9, 10, which assume model structures that are not even required for our procedure. Qualitative interactions are controversial due to model dependence and entire tests have been developed to investigate their significance. In the beginning of Section 2 we commented that most RCTs are not even powered to investigate these interactions. “Even if an optimal personalized medicine rule [based on such interactions] can provide substantial gains it may be difficult to estimate this rule with few subjects” (Rubin and van der Laan, 2012). The bootstrap test (and our approach at large) looks at the holistic picture of the personalization scheme without focus on individual covariate-treatment interaction effects to determine if the personalization scheme in totality is useful, conceptually akin to the omnibus F-test in an OLS regression.

3.5.1 Concerns With Using the Bootstrap for This Inference

There is some concern in the personalized medicine literature about the use of the bootstrap to provide inference. First, the estimator for V is a non-smooth functional of the data which may result in an inconsistent bootstrap estimator (Shao, 1994). The non-smoothness is due to the indicator function in Eq. 8 being non-differentiable, similar to the example found in (Horowitz, 2001, Chapter 52, Section 4.3.1). However, “the value of a fixed [response model] (i.e., one that is not data-driven) does not suffer from these issues and has been addressed by numerous authors” (Chakraborty and Murphy, 2014). Since our

There is an additional concern. Some bootstrap samples produce null sets for the “lucky subjects” (i.e.

3.6 Future Subjects

The implementation of this procedure for future patients is straightforward. Using the RCT data, estimate f to arrive at

It is important to note that

4 Data Examples

We present two simulations in Sections 4.1 and 4.2 that serve only as illustrations that our methodology both works as purported but degrades in the case of pertinent information that goes missing. We then demonstrate a real clinical setting in Section 4.3.

4.1 Simulation With Correct Regression Model

Consider a simulated RCT dataset with one covariate x where the true response function is known:

where

We further assume

We simulate under a simple scenario to clearly highlight features of our methodology. If

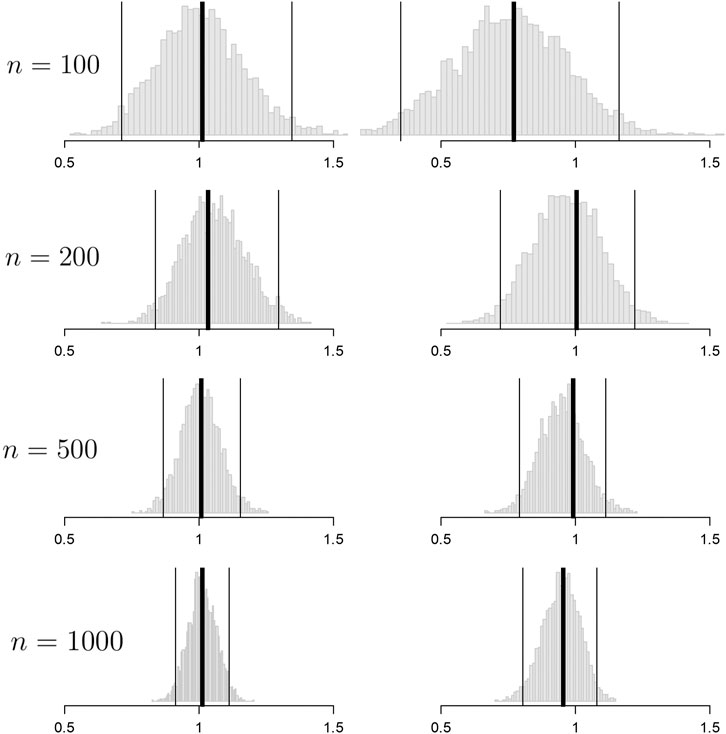

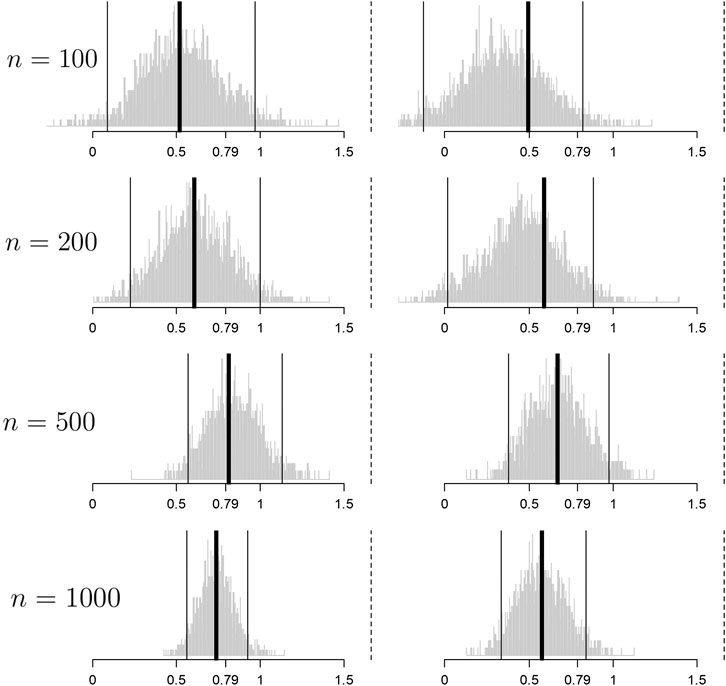

FIGURE 2. Histograms of the bootstrap samples of the out-of-sample improvement measures for

Convergence to

In this section we assumed knowledge of f and thereby had access to an optimal rule. In the next section we explore convergence when we do not know f but pick an approximate model yielding a non-optimal rule that would still provide clinical utility.

4.2 Simulation With an Approximate Regression Model

Consider RCT data with a continuous endpoint where the true response model is

where X denotes a covariate recorded in the RCT and U denotes a covariate that is not included in the RCT dataset. The optimal allocation rule

which is different from the true response model due to (a) the misspecification of X (linear instead of cubic) and (b) the absence of covariate U (see Eq. 3). This is a realistic scenario; even with infinite data,

To simulate, we set the X’s, U’s and

Figure 3 demonstrates results for

FIGURE 3. Histograms of the bootstrap samples of the cross-validated improvement measures for

Convergence toward

The point of this section is to illustrate what happens in the real world: the response model is unknown and important measurements are missing and thus any personalized medicine model falls far short of optimal. However, the effort can still yield an improvement that can be clinically significant and useful in practice.

There are many cases where our procedure will not find signal yielding improvement in patient outcomes either because it does not exist or we are underpowered to detect it. For example 1) in cases where there are many variables that are important and a small sample size. Clinical trials are not usually powered to find even single interaction effects, let alone many. The small sample size diminishes power to find the effect, similar to any statistical test. 2) If the true heterogeneity in the functional response cannot be approximated by a linear function. For instance, parabolic or sine functions cannot be represented whatsoever by best fit lines.

In the next section, we use our procedure in RCT data from a real clinical trial where both these limitations apply. The strategy is to approximate the response function using a reasonable model f built from domain knowledge and the variables at hand and hope to find demonstrate a positive, clinically meaningful

4.3 Clinical Trial Demonstration

We consider an illustrative example in psychiatry, a field where personalization, called “precision psychiatry, promises to be even more transformative than in other fields of medicine” (Fernandes et al., 2017). However, evaluation of predictive models for precision psychiatry has been exceedingly rare, with a recent systematic review identifying 584 studies in which prediction models had been developed, with only 10.4% and 4.6% having conducted proper internal and external validation, respectively (Salazar de Pablo et al., 2020).

We will demonstrate our method (that provides this proper validation) on the randomized comparative trial data of DeRubeis et al. (2005). In this depression study, there were two treatments with very different purported mechanisms of action: cognitive behavioral therapy (

The primary outcome measure y is the continuous Hamilton Rating Scale for Depression (HRSD), a composite score of depression symptoms where lower means less depressed, assessed by a clinician after 16 weeks of treatment. A simple t test revealed that there was no statistically significant HRSD difference between the cognitive behavioral therapy and paroxetine arms, a well-supported finding in the depression literature. Despite the seeming lack of a population mean difference among the two treatments, practitioner intuition and a host of studies suggest that the covariates collected can be used to build a principled personalized model with a significant negative

We now must specify a model, f. For the purpose of illustration, we employ a linear model with first-order interactions with the treatment (as in Eq. 4). Which of the 28 variables should be included in the model? Clinical experience and theory should suggest both prognostic (main effect) and moderator (treatment interaction) variables (Cohen and DeRubeis, 2018). We should not use variables selected using methods performed on this RCT data such as the variables found in DeRubeis et al. (2014), Table 3. Such a procedure would constitute data snooping and it will invalidate the inference provided by our method. The degree of invalidation is not currently known and is much needed to be researched.

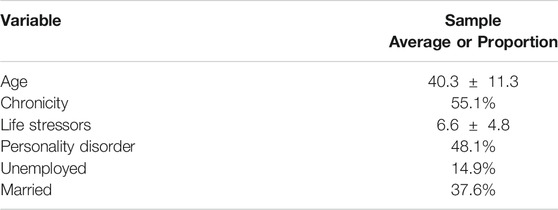

TABLE 3. Baseline characteristics of the subjects in the clinical trial example for the moderating variables employed in our personalization model. These statistics differ slightly from those found in the table of DeRubeis et al. (2005, page 412) as here they are tabulated for subjects only after dropout (

Of the characteristics measured in this RCT data, previous researchers have found significant treatment moderation in age and chronicity (Cuijpers et al., 2012), early life trauma (Nemeroff et al., 2003) (which we approximate using a life stressor metric), presence of personality disorder (Bagby et al., 2008), employment status and marital status (Fournier et al., 2009) but almost remarkably baseline severity of the depression does not moderate (Weitz et al., 2015) and baseline severity is frequently the most important covariate in response models (e.g. Kapelner and Krieger, 2020, Figure 1B). We include these

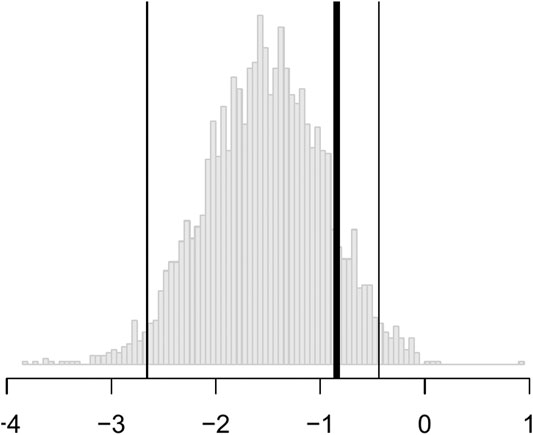

The output from 3,000 bootstrap samples are shown in Figure 4. From these results, we anticipate that a new subject allocated using our personalization model will be less depressed on average by 0.84 HRSD units with a 95% confidence interval of [0.441, 2.657] compared to that same subject being allocated randomly to cognitive behavioral therapy or paroxetine. We can easily reject the null hypothesis that personalized allocation over random is no better for the new subject (p value = 0.001).

FIGURE 4. Histograms of the bootstrap samples of

In short, the results are statistically significant, but the estimated improvement may not be clinically significant. According to the criterion set out by the National Institute for Health and Care Excellence, three points on the HRSD is considered clinically important. Nevertheless, this personalization scheme can be implemented in practice with new patients for a modest improvement in patient outcome at little cost.

5 The PTE Package

5.1 Estimation and Inference for Continuous Outcomes

The package comes with two example datasets. The first is the continuous data example. Below we load the library and the data whose required form is 1) a vector of length n for the responses,

The endpoint y is continuous and the RCT data has a binary treatment vector appropriately named (this is required) and five covariates, four of which are factors and one is continuous. We can run the estimation for the improvement score detailed in Section 3.4.1 and the inference of Section 3.5 by running the following code:

Here, 1,000 bootstrap samples were run on four cores in parallel to minimize runtime. The f model defaults to a linear model where all variables included are interacted with the treatment and fit with least squares. Below are the results.

Note how the three bootstrap methods are different from another. The percentile method barely includes the actual observed statistic for the random comparison (see discussion in Section 3.5). The software also plots the

To demonstrate the flexibility of the software, consider the case where the user wishes to use

Here, the user passes in a custom function that builds the ridge model to the argument personalized_model_build_function. The specification for ridge employed here uses the package glmnet (Friedman et al., 2010) that picks the optimal ridge penalty hyperparameter automatically. Unfortunately, there is added complexity: the glmnet package does not accept formula objects and thus model matrices are generated both upon model construction and during prediction. This is the reason why a custom function is also passed in via the argument predict_function which wraps the default glmnet predict function by passing in the model matrix.

5.2 Estimation and Inference for Binary Outcomes

In order to demonstrate our software for the incidence outcome, we use the previous data but threshold its response arbitrarily at its 75th percentile to create a mock binary response (for illustration purposes only).

We then fit a linear logistic model using all variables as fixed effects and interaction effects with the treatment. As discussed in Section 3.4.2, there are three improvement metrics for incidence outcomes. The default is the odds ratio. The following code fits the model and performs the inference.

Note that the response type incidence has to be explicitly made known otherwise the default would be to assume the endpoint is continuous and perform regression. Below are the results.

The p value is automatically calculated for H_0_mu_equals argument. Here, the test failed to reject incidence_metric = “risk_ratio” (or “probability_difference”).

5.3 Estimation and Inference for Survival Outcomes

Our package also comes with a mock RCT dataset with a survival outcome. In addition to the required input data

There are four covariates, one factor and three continuous. We can run the estimation for the improvement score detailed in Section 3.4.3 and inference for the true improvement by running the following code.

The syntax is the same as the above two examples except here we pass in the binary

In the default implementation for the survival outcome, improvement is defined as median survival difference of personalization vs. standard of care. The median difference can be changed via the user passing in a new function with the difference_function argument. The median difference results are below.

It seems that the personalized medicine model increases median survival by 0.148 vs.

6 Discussion

We have provided a methodology to test the effectiveness of personalized medicine models. Our approach combines RCT data with a statistical model f of the response for estimating improved outcomes under different treatment allocation protocols. Using the non-parametric bootstrap and cross-validation, we are able to provide confidence bounds for the improvement and hypothesis tests for whether the personalization performs better compared to a business-as-usual procedure. We demonstrate the method’s performance on simulated data and on data from a clinical trial on depression. We also present our statistical methods in an open source software package in R named PTE which is available on CRAN. Our package can be used to evaluate personalization models generally e.g. in heart disease, cancer, etc. and even outside of medicine e.g. in Economics and Sociology.

6.1 Limitations and Future Directions

Our method and corresponding software have been developed for a particular kind of RCT design. The RCT must have two arms and one endpoint (continuous, incidence or survival). An extension to more than two treatment arms is trivial as Eq. 2 is already defined generally. Implementing extensions to longitudinal or panel data are simple within the scope described herein. And extending the methodology to count endpoints would also be simple.

Although we agree that a “once and for all” treatment strategy [may be] suboptimal due to its inflexibility” (Zhao et al., 2015), this one-stage treatment situation is still common in the literature and the real world and this is the setting we chose to research. We consider an extended implementation for dynamic treatment regimes on multi-stage experiments fruitful future work. Consider being provided with RCT data from sequential multiple assignment randomized trials (“SMARTs,” Murphy, 2005b) and an a priori response model f. The estimate of

Our choices of

It might also be useful to consider how to extend our methodology to observational data. The literature reviewed in Section 2 generally does not require RCT data but “only” a model that accurately captures selection into treatments e.g. if “the [electronic medical record] contained all the patient information used by a doctor to prescribe treatment up to the vagaries and idiosyncrasies of individual doctors or hospitals” (Kallus, 2017, Section 1). This may be a very demanding requirement in practice. In this paper, we do not even require valid estimates of the true population response surface. In an observational study one would need that selection model to be correct and/or a correct model of the way in which subjects and treatments were paired (Freedman and Berk, 2008). Although assuming one has a model that captures selection, it would be fairly straightforward to update the estimators of Section 3.4 to inverse weight by the probability of treatment condition (the “IPWE”) making inference possible for observational data (Zhang et al., 2012b; Chakraborty and Murphy, 2014; Kallus, 2017).

Another extension would be to drop the requirement of specifying the model f whose specification is a tremendous constraint in practice: what if the practitioner cannot construct a suitable f using domain knowledge and past research? It is tempting to use a machine learning model that will both specify the structure of f and provide parameter estimates within e.g. Kallus’s personalization forests (Kallus, 2017) or convolutional neural networks (LeCun and Bengio, 1998) if the raw subject-level data had images. We believe the bootstrap of Section 3.5 will withstand such a machination but are awaiting a rigorous proof. Is there a solution in the interim? As suggested as early as Cox (1975), we can always pre-split the data in two where the first piece can be used to specify f and the second piece can be injected into our procedure. The cost is less data for estimation and thus, less power available to prove that the personalization is effective.

If we do not split, all the data is to be used and there are three scenarios that pose different technical problems. Under one scenario, a researcher is able to specify a suite of possible models before looking at the data. The full suite can be viewed as comprising a single procedure for which nonparametric bootstrap procedures may in principle provide simultaneous confidence intervals (Buja and Rolke, 2014). Under the other two scenarios, models are developed inductively from the data. This problem is more acute for instance in Davies (2015) where high-dimensional genomic data is incorporated for personalization (e.g. where there are many more SNPs than patients in the RCT). If it is possible to specify exactly how the model search is undertaken (e.g., using the lasso), some forms of statistical inference may be feasible. This is currently an active research area; for instance, Lockhart et al. (2014) and Lee et al. (2016) develop a significance test for the lasso and there is even some evidence to suggest that the double-peeking is not as problematic as the community has assumed (Zhao et al., 2020).

Our method’s generalizability to future patients is also in question as our validation was done within the patients of a RCT. The population of future patients is likely not the same as the population of patients in the RCT. Future patients will likely have wider distributions of the p covariates as typical RCTs feature strict inclusion criteria sometimes targeting high risk patients for higher outcome event rates. A good discussion of these issues is found in Rosenberger and Lachin (2016), Chapter 6. The practitioner will have to draw on experience and employ their best judgment to decide if the estimates our methodology provides will generalize.

And of course, the method herein only evaluates if a personalization scheme works on average over an entire population. “Personalized medicine” eponymously refers to personalization for an individual. Ironically, that is not the goal herein, but we do acknowledge that estimates and inference at an individual level coupled to valid inference for the improvement score is much-needed. This is not without difficulty as clinical trials are typically not powered to examine subgroup effects. A particularly alarming observation is made by Cuijpers et al. (2012), page 7, “if we want to have sufficient statistical power to find clinically relevant differential effect sizes of 0.5, we would need …. about 23,000 patients”.

Data Availability Statement

The datasets presented in this article in Section 4.3 is not readily available because the clinical data cannot be anonymized sufficiently according to the IRB guidelines of the institutions where the study was performed. For more information, contact the authors of the study.

Author Contributions

All authors were responsible for development of methodology and drafting the manuscript. Kapelner and Bleich did the data analysis of Section 4.

Funding

Adam Kapelner acknowledges support for this project provided by a PSC-CUNY Award, jointly funded by The Professional Staff Congress and The City University of New York. ZC and RD acknowledge support from MQ: Transforming mental health (MQDS16/72).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We would like to thank our three reviewers and the editor whose input greatly improved this work. We would like to thank Abba Krieger, Larry Brown, Bibhas Chakraborty, Andreas Buja, Ed George, Dan McCarthy, Linda Zhao and Wei-Yin Loh for helpful discussions and comments. Earlier versions of this manuscript were released as a preprint on arXiv (Kapelner et al., 2014).

Footnotes

1Note that this problem is encountered in fields outside of just medicine. For example, finding the movie that will elicit the most enjoyment to the individual (Zhou et al., 2008) or assessing whether a certain unemployed individual can benefit from job training (LaLonde, 1986). Although the methods discussed herein can be applied more generally, we will employ examples and the vocabulary from the medical field.

2Note also that we do not have the additional non-smoothness created by Q-learning during the maximization step (Chakraborty et al., 2010, Section 2.4).

3Although this is standard linear modeling practice, it is not absolutely essential in our methodology, where our goal is neither inference for the variables nor prediction of the endpoint.

References

Bagby, R. M., Quilty, L. C., Segal, Z. V., McBride, C. C., Kennedy, S. H., and Costa, P. T. (2008). Personality and Differential Treatment Response in Major Depression: a Randomized Controlled Trial Comparing Cognitive-Behavioural Therapy and Pharmacotherapy. Can. J. Psychiatry 53, 361–370. doi:10.1177/070674370805300605

Barrett, J. K., Henderson, R., and Rosthøj, S. (2014). Doubly Robust Estimation of Optimal Dynamic Treatment Regimes. Stat. Biosci. 6, 244–260. doi:10.1007/s12561-013-9097-6

Berger, J. O., Wang, X., and Shen, L. (2014). A Bayesian Approach to Subgroup Identification. J. Biopharm. Stat. 24, 110–129. doi:10.1080/10543406.2013.856026

Berk, R. A., Brown, L., Buja, A., Zhang, K., and Zhao, L. (2013b). Valid Post-selection Inference. Ann. Stat. 41, 802–837. doi:10.1214/12-aos1077

Berk, R., Brown, L., Buja, A., George, E., Pitkin, E., Zhang, K., et al. (2014). Misspecified Mean Function Regression. Sociological Methods Res. 43, 422–451. doi:10.1177/0049124114526375

Berk, R., Olson, M., Buja, A., and Ouss, A. (2020). Using Recursive Partitioning to Find and Estimate Heterogenous Treatment Effects in Randomized Clinical Trials. J. Exp. Criminol., 1–20. doi:10.1007/s11292-019-09410-0

Berk, R., Pitkin, E., Brown, L., Buja, A., George, E., and Zhao, L. (2013a). Covariance Adjustments for the Analysis of Randomized Field Experiments. Eval. Rev. 37, 170–196. doi:10.1177/0193841x13513025

Bernard, C. (1957). An Introduction to the Study of Experimental Medicine. New York, NY: Dover Publications.

Box, G. E. P., and Draper, N. R. (1987). Empirical Model-Building and Response Surfaces. New York, NY: Wiley.

Brinkley, J., Tsiatis, A., and Anstrom, K. J. (2010). A Generalized Estimator of the Attributable Benefit of an Optimal Treatment Regime. Biometrics 66, 512–522. doi:10.1111/j.1541-0420.2009.01282.x

Buja, A., and Rolke, W. (2014). Calibration for Simultaneity: (Re)sampling Methods for Simultaneous Inference with Applications to Function Estimation and Functional Data. University of Pennsylvania working paper.

Byar, D. P. (1985). Assessing Apparent Treatment-Covariate Interactions in Randomized Clinical Trials. Statist. Med. 4, 255–263. doi:10.1002/sim.4780040304

Byar, D. P., and Corle, D. K. (1977). Selecting Optimal Treatment in Clinical Trials Using Covariate Information. J. chronic Dis. 30, 445–459. doi:10.1016/0021-9681(77)90037-6

Chakraborty, B., Laber, E. B., and Zhao, Y.-Q. (2014). Inference about the Expected Performance of a Data-Driven Dynamic Treatment Regime. Clin. Trials 11, 408–417. doi:10.1177/1740774514537727

Chakraborty, B., and Moodie, E. E. M. (2013). Statistical Methods for Dynamic Treatment Regimes. New York, NY: Springer.

Chakraborty, B., and Murphy, S. A. (2014). Dynamic Treatment Regimes. Annu. Rev. Stat. Appl. 1, 447–464. doi:10.1146/annurev-statistics-022513-115553

Chakraborty, B., Murphy, S., and Strecher, V. (2010). Inference for Non-regular Parameters in Optimal Dynamic Treatment Regimes. Stat. Methods Med. Res. 19, 317–343. doi:10.1177/0962280209105013

Cohen, Z. D., and DeRubeis, R. J. (2018). Treatment Selection in Depression. Annu. Rev. Clin. Psychol. 14, 209–236. doi:10.1146/annurev-clinpsy-050817-084746

Collins, L. M., Murphy, S. A., and Bierman, K. L. (2004). A Conceptual Framework for Adaptive Preventive Interventions. Prev. Sci. 5, 185–196. doi:10.1023/b:prev.0000037641.26017.00

Cox, D. R. (1975). A Note on Data-Splitting for the Evaluation of Significance Levels. Biometrika 62, 441–444. doi:10.1093/biomet/62.2.441

Cuijpers, P., Reynolds, C. F., Donker, T., Li, J., Andersson, G., and Beekman, A. (2012). Personalized Treatment of Adult Depression: Medication, Psychotherapy, or Both? a Systematic Review. Depress. Anxiety 29, 855–864. doi:10.1002/da.21985

Davies, K. (2015). The $1,000 Genome: The Revolution in DNA Sequencing and the New Era of Personalized Medicine. New York, NY: Simon & Schuster.

Davison, A. C., and Hinkley, D. V. (1997). Bootstrap Methods and Their Application. 1st Edn. Cambridge, UK: Cambridge University Press.

Dawes, R. M. (1979). The Robust Beauty of Improper Linear Models in Decision Making. Am. Psychol. 34, 571–582. doi:10.1037/0003-066x.34.7.571

DeRubeis, R. J., Cohen, Z. D., Forand, N. R., Fournier, J. C., Gelfand, L., and Lorenzo-Luaces, L. (2014). The Personalized Advantage Index: Translating Research on Prediction into Individual Treatment Recommendations. A. Demonstration. PLoS One 9, e83875. doi:10.1371/journal.pone.0083875

DeRubeis, R. J., Hollon, S. D., Amsterdam, J. D., Shelton, R. C., Young, P. R., Salomon, R. M., et al. (2005). Cognitive Therapy vs Medications in the Treatment of Moderate to Severe Depression. Arch. Gen. Psychiatry 62, 409–416. doi:10.1001/archpsyc.62.4.409

DiCiccio, T. J., and Efron, B. (1996). Bootstrap Confidence Intervals. Stat. Sci. 11, 189–212. doi:10.1214/ss/1032280214

Dusseldorp, E., Doove, L., and Mechelen, I. v. (2016). QUINT: An R Package for the Identification of Subgroups of Clients Who Differ in Which Treatment Alternative Is Best for Them. Behav. Res. 48, 650–663. doi:10.3758/s13428-015-0594-z

Dusseldorp, E., and Van Mechelen, I. (2014). Qualitative Interaction Trees: a Tool to Identify Qualitative Treatment-Subgroup Interactions. Statist. Med. 33, 219–237. doi:10.1002/sim.5933

Efron, B. (1987). Better Bootstrap Confidence Intervals. J. Am. Stat. Assoc. 82, 171–185. doi:10.1080/01621459.1987.10478410

Evans, W. E., and Relling, M. V. (2004). Moving towards Individualized Medicine with Pharmacogenomics. Nature 429, 464–468. doi:10.1038/nature02626

Faraway, J. J. (2016). Does Data Splitting Improve Prediction?. Stat. Comput. 26, 49–60. doi:10.1007/s11222-014-9522-9

Fernandes, B. S., Williams, L. M., Steiner, J., Leboyer, M., Carvalho, A. F., and Berk, M. (2017). The New Field of “precision Psychiatry”. BMC Med. 15, 1–7. doi:10.1186/s12916-017-0849-x

Foster, J. C. (2013). Subgroup Identification and Variable Selection from Randomized Clinical Trial Data. PhD thesis. Ann Arbor, MI: The University of Michigan.

Fournier, J. C., DeRubeis, R. J., Shelton, R. C., Hollon, S. D., Amsterdam, J. D., and Gallop, R. (2009). Prediction of Response to Medication and Cognitive Therapy in the Treatment of Moderate to Severe Depression. J. consulting Clin. Psychol. 77, 775–787. doi:10.1037/a0015401

Freedman, D. A., and Berk, R. A. (2008). Weighting Regressions by Propensity Scores. Eval. Rev. 32, 392–409. doi:10.1177/0193841x08317586

Friedman, J., Hastie, T., and Tibshirani, R. (2010). Regularization Paths for Generalized Linear Models via Coordinate Descent. J. Stat. Softw. 33, 1–22. doi:10.18637/jss.v033.i01

Gail, M., and Simon, R. (1985). Testing for Qualitative Interactions between Treatment Effects and Patient Subsets. Biometrics 41, 361–372. doi:10.2307/2530862

Goldberg, Y., and Kosorok, M. R. (2012). Q-learning with Censored Data. Ann. Stat. 40, 529. doi:10.1214/12-aos968

Gunter, L., Chernick, M., and Sun, J. (2011a). A Simple Method for Variable Selection in Regression with Respect to Treatment Selection. Pakistan J. Stat. Operations Res. 7, 363–380. doi:10.18187/pjsor.v7i2-sp.311

Gunter, L., Zhu, J., and Murphy, S. (2011b). Variable Selection for Qualitative Interactions in Personalized Medicine while Controlling the Family-wise Error Rate. J. Biopharm. Stat. 21, 1063–1078. doi:10.1080/10543406.2011.608052

Hastie, T., Tibshirani, R., and Friedman, J. H. (2013). The Elements of Statistical Learning. 10th Edn. Berlin, Germany: Springer Science.

Henderson, N. C., Louis, T. A., Rosner, G. L., and Varadhan, R. (2020). Individualized Treatment Effects with Censored Data via Fully Nonparametric Bayesian Accelerated Failure Time Models. Biostatistics 21, 50–68. doi:10.1093/biostatistics/kxy028

Henderson, R., Ansell, P., and Alshibani, D. (2010). Regret-regression for Optimal Dynamic Treatment Regimes. Biometrics 66, 1192–1201. doi:10.1111/j.1541-0420.2009.01368.x

Hood, L., and Friend, S. H. (2011). Predictive, Personalized, Preventive, Participatory (P4) Cancer Medicine. Nat. Rev. Clin. Oncol. 8, 184–187. doi:10.1038/nrclinonc.2010.227

Horowitz, J. L. (2001). Chapter 52 - the Bootstrap (Elsevier), of Handbook of Econometrics, Vol. 5, 3159–3228. doi:10.1016/s1573-4412(01)05005-xThe Bootstrap

Hosmer, D. W., and Lemeshow, S. (1999). Applied Survival Analysis: Time-To-Event. Hoboken, NJ: Wiley.

Imai, K., and Ratkovic, M. (2013). Estimating Treatment Effect Heterogeneity in Randomized Program Evaluation. Ann. Appl. Stat. 7, 443–470. doi:10.1214/12-aoas593

Kallus, N. (2017). Recursive Partitioning for Personalization Using Observational Data. Proc. 34th Int. Conf. on Machine Learning 70, 1789–1798.

Kang, C., Janes, H., and Huang, Y. (2014). Combining Biomarkers to Optimize Patient Treatment Recommendations. Biom 70, 695–707. doi:10.1111/biom.12191

Kapelner, A., Bleich, J., Levine, A., Cohen, Z. D., DeRubeis, R. J., and Berk, R. (2014). Inference for the Effectiveness of Personalized Medicine with Software. Available at: http://arxiv.org/abs/1404.7844.

Kapelner, A., and Krieger, A. (2020). A Matching Procedure for Sequential Experiments that Iteratively Learns Which Covariates Improve Power. Available at: http://arxiv.org/abs/2010.05980.

Laber, E. B., Lizotte, D. J., and Ferguson, B. (2014). Set-valued Dynamic Treatment Regimes for Competing Outcomes. Biom 70, 53–61. doi:10.1111/biom.12132

LaLonde, R. J. (1986). Evaluating the Econometric Evaluations of Training Programs with Experimental Data. Am. Econ. Rev. 76, 604–620.

Lamont, A., Lyons, M. D., Jaki, T., Stuart, E., Feaster, D. J., Tharmaratnam, K., et al. (2018). Identification of Predicted Individual Treatment Effects in Randomized Clinical Trials. Stat. Methods Med. Res. 27, 142–157. doi:10.1177/0962280215623981

LeCun, Y., and Bengio, Y. (1998). “Convolutional Networks for Images, Speech, and Time Series,” in The Handbook of Brain Theory and Neural Networks. Editor M. A. Arbib (Cambridge: MIT Press), 255–258.

Lee, J. D., Sun, D. L., Sun, Y., and Taylor, J. E. (2016). Exact Post-selection Inference, with Application to the Lasso. Ann. Stat. 44, 907–927. doi:10.1214/15-aos1371

Lockhart, R., Taylor, J., Tibshirani, R. J., and Tibshirani, R. (2014). A Significance Test for the Lasso. Ann. Stat. 42, 413–468. doi:10.1214/13-aos1175

Lu, W., Zhang, H. H., and Zeng, D. (2013). Variable Selection for Optimal Treatment Decision. Stat. Methods Med. Res. 22, 493–504. doi:10.1177/0962280211428383

Ma, J., Stingo, F. C., and Hobbs, B. P. (2019). Bayesian Personalized Treatment Selection Strategies that Integrate Predictive with Prognostic Determinants. Biometrical J. 61, 902–917. doi:10.1002/bimj.201700323

McGrath, C. L., Kelley, M. E., Holtzheimer, P. E., Dunlop, B. W., Craighead, W. E., Franco, A. R., et al. (2013). Toward a Neuroimaging Treatment Selection Biomarker for Major Depressive Disorder. JAMA psychiatry 70, 821–829. doi:10.1001/jamapsychiatry.2013.143

McKeague, I. W., and Qian, M. (2014). Estimation of Treatment Policies Based on Functional Predictors. Stat. Sin 24, 1461–1485. doi:10.5705/ss.2012.196

Moodie, E. E. M., and Richardson, T. S. (2009). Estimating Optimal Dynamic Regimes: Correcting Bias under the Null: [Optimal Dynamic Regimes: Bias Correction]. Scand. Stat. Theor. Appl 37, 126–146. doi:10.1111/j.1467-9469.2009.00661.x

Moodie, E. E. M., Richardson, T. S., and Stephens, D. A. (2007). Demystifying Optimal Dynamic Treatment Regimes. Biometrics 63, 447–455. doi:10.1111/j.1541-0420.2006.00686.x

Murphy, S. A. (2005b). An Experimental Design for the Development of Adaptive Treatment Strategies. Statist. Med. 24, 1455–1481. doi:10.1002/sim.2022

Murphy, S. A. (2003). Optimal Dynamic Treatment Regimes. J. R. Stat. Soc. Ser. B (Statistical Methodology) 65, 331–355. doi:10.1111/1467-9868.00389

Nemeroff, C. B., Heim, C. M., Thase, M. E., Klein, D. N., Rush, A. J., Schatzberg, A. F., et al. (2003). Differential Responses to Psychotherapy versus Pharmacotherapy in Patients with Chronic Forms of Major Depression and Childhood Trauma. Proc. Natl. Acad. Sci. 100, 14293–14296. doi:10.1073/pnas.2336126100

Orellana, L., Rotnitzky, A., and Robins, J. M. (2010). Dynamic Regime Marginal Structural Mean Models for Estimation of Optimal Dynamic Treatment Regimes, Part I: Main Content. The Int. J. biostatistics 6, 1–47. doi:10.2202/1557-4679.1200

Pan, G., and Wolfe, D. A. (1997). Test for Qualitative Interaction of Clinical Significance. Statist. Med. 16, 1645–1652. doi:10.1002/(sici)1097-0258(19970730)16:14<1645::aid-sim596>3.0.co;2-g

Paul, G. L. (1967). Strategy of Outcome Research in Psychotherapy. J. consulting Psychol. 31, 109–118. doi:10.1037/h0024436

Qian, M., and Murphy, S. A. (2011). Performance Guarantees for Individualized Treatment Rules. Ann. Stat. 39, 1180–1210. doi:10.1214/10-aos864

Robins, J. M. (2004). “Optimal Structural Nested Models for Optimal Sequential Decisions,” in Proceedings of the Second Seattle Symposium on Biostatistics. (Springer), 189–326. doi:10.1007/978-1-4419-9076-1_11

Robins, J., Orellana, L., and Rotnitzky, A. (2008). Estimation and Extrapolation of Optimal Treatment and Testing Strategies. Statist. Med. 27, 4678–4721. doi:10.1002/sim.3301

Rolling, C. A., and Yang, Y. (2014). Model Selection for Estimating Treatment Effects. J. R. Stat. Soc. B 76, 749–769. doi:10.1111/rssb.12043

Rosenberger, W. F., and Lachin, J. M. (2016). Randomization in Clinical Trials: Theory and Practice. 2nd Edn. Hoboken, NJ: John Wiley & Sons.

Rubin, D. B. (1974). Estimating Causal Effects of Treatments in Randomized and Nonrandomized Studies. J. Educ. Psychol. 66, 688–701. doi:10.1037/h0037350

Rubin, D. B., and van der Laan, M. J. (2012). Statistical Issues and Limitations in Personalized Medicine Research with Clinical Trials. Int. J. biostatistics 8, 1–20. doi:10.1515/1557-4679.1423

Salazar de Pablo, G., Studerus, E., Vaquerizo-Serrano, J., Irving, J., Catalan, A., Oliver, D., et al. (2020). Implementing Precision Psychiatry: A Systematic Review of Individualized Prediction Models for Clinical Practice. Schizophrenia Bulletin 47, 284–297. doi:10.1093/schbul/sbaa120

Schulte, P. J., Tsiatis, A. A., Laber, E. B., and Davidian, M. (2014). Q-and A-Learning Methods for Estimating Optimal Dynamic Treatment Regimes. Stat. Sci. a Rev. J. Inst. Math. Stat. 29, 640–661. doi:10.1214/13-sts450

Shao, J. (1994). Bootstrap Sample Size in Nonregular Cases. Proc. Amer. Math. Soc. 122, 1251. doi:10.1090/s0002-9939-1994-1227529-8

Shen, Y., and Cai, T. (2016). Identifying Predictive Markers for Personalized Treatment Selection. Biom 72, 1017–1025. doi:10.1111/biom.12511

Shuster, J., and van Eys, J. (1983). Interaction between Prognostic Factors and Treatment. Controlled Clin. trials 4, 209–214. doi:10.1016/0197-2456(83)90004-1

Silvapulle, M. J. (2001). Tests against Qualitative Interaction: Exact Critical Values and Robust Tests. Biometrics 57, 1157–1165. doi:10.1111/j.0006-341x.2001.01157.x

Smith, R. (2012). British Medical Journal Group Blogs. Stratified, Personalised or Precision Medicine. Available at: https://blogs.bmj.com/bmj/2012/10/15/richard-smith-stratified-personalised-or-precision-medicine/. Online. (Accessed July 01, 2021).

Su, X., Tsai, C. L., and Wang, H. (2009). Subgroup Analysis via Recursive Partitioning. J. Machine Learn. Res. 10, 141–158.

van der Laan, M. J., and Luedtke, A. R. (2015). Targeted Learning of the Mean Outcome under an Optimal Dynamic Treatment Rule. J. causal inference 3, 61–95. doi:10.1515/jci-2013-0022

Weitz, E. S., Hollon, S. D., Twisk, J., Van Straten, A., Huibers, M. J. H., David, D., et al. (2015). Baseline Depression Severity as Moderator of Depression Outcomes Between Cognitive Behavioral Therapy vs Pharmacotherapy. JAMA psychiatry 72, 1102–1109. doi:10.1001/jamapsychiatry.2015.1516

Weston, A. D., and Hood, L. (2004). Systems Biology, Proteomics, and the Future of Health Care: toward Predictive, Preventative, and Personalized Medicine. J. Proteome Res. 3, 179–196. doi:10.1021/pr0499693

Yakovlev, A. Y., Goot, R. E., and Osipova, T. T. (1994). The Choice of Cancer Treatment Based on Covariate Information. Statist. Med. 13, 1575–1581. doi:10.1002/sim.4780131508

Zhang, B., Tsiatis, A. A., Davidian, M., Zhang, M., and Laber, E. (2012a). Estimating Optimal Treatment Regimes from a Classification Perspective. Stat 1, 103–114. doi:10.1002/sta.411

Zhang, B., Tsiatis, A. A., Laber, E. B., and Davidian, M. (2012b). A Robust Method for Estimating Optimal Treatment Regimes. Biometrics 68, 1010–1018. doi:10.1111/j.1541-0420.2012.01763.x

Zhang, B., Tsiatis, A. A., Laber, E. B., and Davidian, M. (2013). Robust Estimation of Optimal Dynamic Treatment Regimes for Sequential Treatment Decisions. Biometrika 100, 681–694. doi:10.1093/biomet/ast014

Zhao, S., Witten, D., and Shojaie, A. (2020). Defense of the Indefensible: A Very Naive Approach to High-Dimensional Inference. arXiv.

Zhao, Y.-Q., Zeng, D., Laber, E. B., and Kosorok, M. R. (2015). New Statistical Learning Methods for Estimating Optimal Dynamic Treatment Regimes. J. Am. Stat. Assoc. 110, 583–598. doi:10.1080/01621459.2014.937488

Zhao, Y., and Zeng, D. (2013). Recent Development on Statistical Methods for Personalized Medicine Discovery. Front. Med. 7, 102–110. doi:10.1007/s11684-013-0245-7

Keywords: personalized medicine, inference, bootstrap, treatment regimes, randomized comparative trial, statistical software

Citation: Kapelner A, Bleich J, Levine A, Cohen ZD, DeRubeis RJ and Berk R (2021) Evaluating the Effectiveness of Personalized Medicine With Software. Front. Big Data 4:572532. doi: 10.3389/fdata.2021.572532

Received: 14 June 2020; Accepted: 03 February 2021;

Published: 18 May 2021.

Edited by:

Jake Y. Chen, University of Alabama at Birmingham, United StatesReviewed by:

Sunyoung Jang, Princeton Radiation Oncology Center, United StatesHimanshu Arora, University of Miami, United States

Copyright © 2021 Kapelner, Bleich, Levine, Cohen, DeRubeis and Berk. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Adam Kapelner, a2FwZWxuZXJAcWMuY3VueS5lZHU=

Adam Kapelner

Adam Kapelner Justin Bleich2

Justin Bleich2 Zachary D. Cohen

Zachary D. Cohen Richard Berk

Richard Berk