
ORIGINAL RESEARCH article

Front. Cardiovasc. Med. , 04 August 2023

Sec. Cardiovascular Imaging

Volume 10 - 2023 | https://doi.org/10.3389/fcvm.2023.1195235

This article is part of the Research Topic Echocardiography in Cardiovascular Medicine

Chi-Yung Cheng1,2,†, Cheng-Ching Wu3,4,5,†, Huang-Chung Chen6, Chun-Hui Hung7, Tien-Yu Chen6, Chun-Hung Richard Lin1 and I-Min Chiu1,2*

Objectives: The aim of this study was to develop a deep-learning pipeline for the measurement of pericardial effusion (PE) based on raw echocardiography clips, as current methods for PE measurement can be operator-dependent and present challenges in certain situations.

Methods: The proposed pipeline consisted of three distinct steps: moving window view selection (MWVS), automated segmentation, and width calculation from a segmented mask. The MWVS model utilized the ResNet architecture to classify each frame of the extracted raw echocardiography files into selected view types. The automated segmentation step then generated a mask for the PE area from the extracted echocardiography clip, and a computer vision technique was used to calculate the largest width of the PE from the segmented mask. The pipeline was applied to a total of 995 echocardiographic examinations.

Results: The proposed deep-learning pipeline showed high agreement with manual measurements of PE width, with intraclass correlation coefficient (ICC) values of 0.867 for internal validation and 0.801 for external validation. The pipeline also detected PE accurately, with an area under the receiver operating characteristic curve (AUC) of 0.926 (95% CI: 0.902–0.951) for internal validation and 0.842 (95% CI: 0.794–0.889) for external validation.

Conclusion: The machine-learning pipeline developed in this study can automatically calculate the width of PE from raw ultrasound clips. The novel concepts of moving window view selection for image quality control and computer vision techniques for maximal PE width calculation seem useful in the field of ultrasound. This pipeline could potentially provide a standardized and objective approach to the measurement of PE, reducing operator-dependency and improving accuracy.

Pericardial effusion (PE) is a condition characterized by the accumulation of fluid within the pericardial space, which is typically diagnosed using transthoracic echocardiography. The buildup of fluid increases pressure within the pericardial sac, potentially leading to cardiac tamponade and decreased cardiac output. PE is a serious condition that requires timely intervention, making early detection and accurate measurement of the width of the effusion critical (1).

Echocardiography is considered the gold standard for the detection of PE because of its accessibility, portability, and ability to provide a comprehensive assessment of both anatomy and function (2–5). However, the presence and severity of PE can be uncertain; for example, an apparent mild effusion may actually represent pericardial fat rather than true effusion (6). Additionally, image quality can be compromised by breast tissue or by obscuration from bone or lung. These challenges highlight the need for an accurate and reliable method of measuring PE that does not depend on operator experience.

Artificial intelligence (AI) has been applied in various clinical settings to aid diagnosis from echocardiograms, with considerable effort devoted to areas such as left ventricular function assessment, regional wall motion abnormality, right ventricular function, valvular heart disease, cardiomyopathy, and intracardiac mass (7–10). In particular, a 2020 study used a deep learning model to detect PE on echocardiography and achieved accuracies of 0.87–0.91 (11). In clinical practice, information on PE width and severity is crucial for initiating appropriate interventions. To the best of our knowledge, no study has yet graded PE using machine learning. Therefore, our study aimed to develop a deep learning model using echocardiography for PE detection and PE width measurement. Additionally, to facilitate deployment of the deep learning model, we proposed an end-to-end pipeline that outputs detection results directly from raw ultrasound files.

The data collection and protocols utilized in this study were authorized by the Institutional Review Board of E-Da Hospital (EDH; no: EMRP24110N) and the Institutional Review Board of Kaohsiung Chang Gung Memorial Hospital (CGMH; no: 20211889B0 and 202101662B0).

In this study, images from routine echocardiography were obtained at two medical centers in southern Taiwan, EDH and CGMH. The deep learning model was trained and internally validated on EDH data and externally validated on CGMH data.

During data collection, we searched the Hybrid Picture Report System at EDH with the keyword “pericardial effusion” to compile a list of examination records. We obtained raw data from transthoracic echocardiography examinations with a diagnosis of pericardial effusion performed at EDH between January 1, 2010, and June 30, 2020. These data were divided into development and internal validation datasets by examination date: examinations performed on or before December 31, 2018, were used for model development, and those performed on or after January 1, 2019, were used for internal validation. To evaluate the generalizability of the model, we also retrieved echocardiography data from CGMH between January 1, 2019, and June 30, 2020, for external validation. The study flowchart and data summary are presented in Figure 1.
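The date-based split described above can be sketched as follows. This is a minimal illustration, not the authors' code: the `(exam_id, exam_date)` record layout and the function name `temporal_split` are hypothetical, and only the cut-off dates come from the text. Using inclusive boundaries on both sides ensures that examinations dated December 31, 2018 and January 1, 2019 each land in exactly one set.

```python
from datetime import date

# Date boundaries as stated in the text.
STUDY_START = date(2010, 1, 1)
DEV_END = date(2018, 12, 31)     # last day used for model development
VAL_START = date(2019, 1, 1)     # first day used for internal validation
STUDY_END = date(2020, 6, 30)


def temporal_split(exams):
    """Split (exam_id, exam_date) pairs into development and internal-validation IDs.

    The (exam_id, exam_date) record layout is a hypothetical stand-in for the
    examination list retrieved from the report system.
    """
    development, internal_validation = [], []
    for exam_id, exam_date in exams:
        if STUDY_START <= exam_date <= DEV_END:
            development.append(exam_id)
        elif VAL_START <= exam_date <= STUDY_END:
            internal_validation.append(exam_id)
        # Examinations outside the study window are not assigned to either set.
    return development, internal_validation


exams = [
    ("A", date(2015, 3, 2)),
    ("B", date(2018, 12, 31)),
    ("C", date(2019, 1, 1)),
    ("D", date(2021, 1, 1)),
]
development, internal_validation = temporal_split(exams)
print(development, internal_validation)  # ['A', 'B'] ['C']
```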

Images were acquired in the standard manner, with patients lying in the left lateral decubitus position. Echocardiographic examinations at EDH were performed using iE33 (Philips Healthcare), S70 (GE Healthcare), or SC2000 (Siemens Healthineers) ultrasound systems. Data from CGMH for external validation were acquired using EPIQ 7 (Philips Healthcare), Vivid E9 (GE Healthcare), or SC2000 (Siemens Healthineers) systems. All examinations were stored in picture archiving and communication systems in the Digital Imaging and Communications in Medicine (DICOM) format.

After extracting the raw DICOM files, we processed the images from each patient to select the appropriate echocardiographic views for developing the deep learning pipeline. The selected views were the parasternal long-axis (PLAX), parasternal short-axis (PSAX), apical four-chamber (A4C), and subcostal (SC) views. Two cardiologists manually measured the width of the pericardial effusion at its thickest point during each cardiac cycle, and these measurements served as the ground truth for PE width. We used ImageJ, an open-source software platform designed for scientific image analysis and processing, to annotate the segmentation masks corresponding to the echocardiography images. After labeling, the data were stored as CSV files on a secure, encrypted hard drive to ensure data integrity and confidentiality.

In this study, we developed an end-to-end pipeline for the automated measurement of PE, following the steps outlined below (Figure 2). The training subset of videos from EDH was used for the three main tasks of the pipeline: view selection, PE segmentation, and PE width calculation.

We proposed a pipeline for managing echocardiography files directly from the workstation, similar to the work of Zhang et al. and Huang et al., with some adjustments (Figure 2A) (10, 12). To distinguish the four primary views (PLAX, PSAX, A4C, and SC) from other views in each examination, we developed the first deep neural network model: a two-dimensional ResNet-50-based model that classified each frame of the extracted echocardiography DICOM files into one of the selected view types (13). To train this model, we randomly selected 6,434 images from the EDH training dataset and labeled each as one of the four primary views or as another view, including low-quality views. The model was trained with 80% of these images as the training set and 20% as the validation set, and the weights with the best detection performance on the validation set during training were retained. Detection accuracy was assessed for each view class and as a weighted average across classes.
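As a sketch of how per-class and weighted-average accuracy can be computed for the view classifier, assuming the five labels PLAX, PSAX, A4C, SC, and "other" and toy predictions (the function names are hypothetical, not taken from the study):

```python
from collections import Counter

VIEWS = ("PLAX", "PSAX", "A4C", "SC", "other")


def per_class_accuracy(y_true, y_pred):
    """Fraction of frames of each true view that the classifier labeled correctly."""
    support = Counter(y_true)
    correct = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {v: correct[v] / support[v] for v in VIEWS if support[v] > 0}


def weighted_average_accuracy(y_true, y_pred):
    """Per-class accuracies averaged with weights proportional to class frequency."""
    support = Counter(y_true)
    per_class = per_class_accuracy(y_true, y_pred)
    total = sum(support[v] for v in per_class)
    return sum(acc * support[v] for v, acc in per_class.items()) / total


y_true = ["PLAX", "PLAX", "A4C", "A4C", "A4C", "SC"]
y_pred = ["PLAX", "PSAX", "A4C", "A4C", "other", "SC"]
print(per_class_accuracy(y_true, y_pred))
print(weighted_average_accuracy(y_true, y_pred))
```

Weighting each class accuracy by its number of frames makes the weighted average equal to the overall frame-level accuracy (4 of 6 frames here); an unweighted (macro) average would instead give rare views such as SC the same influence as common ones.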

To manage the input videos effectively during data collection, a 48-frame moving window was applied to every video. For each video, the best 48 frames with regard to view type and image quality were selected using a majority voting method (Figure 3). This process, which we term moving window view selection (MWVS), served not only as a view classifier but also as a quality control measure. Videos that did not contain at least 48 consecutive frames meeting the image quality criterion of 50% or higher for one of the four primary views were excluded from further analysis. In addition, the average view-classification confidence across all frames of the selected 48-frame clip was used to evaluate overall image quality and its correlation with performance. Videos with an average confidence below 0.8 were also excluded from automated segmentation.
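The MWVS step can be illustrated with the following minimal sketch. It assumes that the frame classifier returns one probability per view for each frame, reads the "50% or higher" criterion as "the winning view receives at least half of the votes in the window", and chooses among qualifying windows by mean confidence; these are our assumptions, not details confirmed by the text. Only the 48-frame window and the 0.8 confidence cut-off come from the description above, and `select_window` and the toy-input helper `frame` are hypothetical.

```python
from collections import Counter

PRIMARY_VIEWS = ("PLAX", "PSAX", "A4C", "SC")
WINDOW = 48            # frames per moving window (from the text)
MIN_VOTE_SHARE = 0.5   # assumed reading of the "50% or higher" criterion
MIN_MEAN_CONF = 0.8    # clips below this average confidence are excluded


def select_window(frame_probs, window=WINDOW):
    """Return (start_frame, view, mean_confidence) for the best window, or None.

    frame_probs holds one dict per frame mapping each view name (including
    "other") to the frame classifier's probability; this format is hypothetical.
    """
    best = None
    for start in range(len(frame_probs) - window + 1):
        clip = frame_probs[start:start + window]
        # Majority vote over the per-frame top-1 view labels.
        votes = Counter(max(p, key=p.get) for p in clip)
        view, count = votes.most_common(1)[0]
        if view not in PRIMARY_VIEWS or count / window < MIN_VOTE_SHARE:
            continue
        # Average confidence for the winning view across the whole window.
        mean_conf = sum(p[view] for p in clip) / window
        if best is None or mean_conf > best[2]:
            best = (start, view, mean_conf)
    if best is None or best[2] < MIN_MEAN_CONF:
        return None  # video excluded from automated segmentation
    return best


def frame(view, conf):
    """Toy frame: `conf` on one view, the remainder spread over the other four."""
    probs = {v: (1 - conf) / 4 for v in PRIMARY_VIEWS + ("other",)}
    probs[view] = conf
    return probs


video = [frame("other", 0.9)] * 10 + [frame("A4C", 0.9)] * 50
print(select_window(video))  # window starting at frame 10, view "A4C"
```

In this toy video the first ten frames belong to a non-target view, so the sketch skips past them and returns the first window made entirely of A4C frames; a video whose windows never reach a mean confidence of 0.8, or that is shorter than 48 frames, returns `None` and would be excluded.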

We randomly selected and annotated 2,548 frames from the EDH training dataset, evenly distributed across the four primary views. The PE area was manually segmented at three phases of the cardiac cycle: end-systole, end-diastole, and the midpoint between the two. To distinguish pericardial fluid from epicardial adipose tissue, experienced clinicians delineated the PE area based on its characteristic ultrasound appearance and explicitly excluded epicardial adipose tissue. We also labeled the four cardiac chambers to help the model separate these fluid-containing areas from the effusion.

To train an instance segmentation model on the labeled ground truth, we used a Mask Region-based Convolutional Neural Network (Mask R-CNN) framework (Figure 2B). The model was trained with an 80%/20% split into training and validation sets. Mask R-CNN is commonly used for instance segmentation in medical applications because it can simultaneously perform pixel-level segmentation and classification of multiple target lesions (14). The model generates a bounding box and a segmentation mask for each object instance in an image. The input consisted of consecutive ultrasound frames, and the output was a set of segmented masks for the four cardiac chambers and the PE. Segmentation accuracy was assessed using the Dice coefficient.
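The Dice coefficient used to score the segmentation model is 2|A ∩ B| / (|A| + |B|) for a predicted mask A and a manual mask B. A dependency-free sketch on toy masks follows; returning 1.0 for two empty masks is our convention, not something the text specifies.

```python
def dice_coefficient(mask_a, mask_b):
    """Dice = 2|A ∩ B| / (|A| + |B|) for binary masks given as equal-sized lists of rows."""
    intersection = size_a = size_b = 0
    for row_a, row_b in zip(mask_a, mask_b):
        for a, b in zip(row_a, row_b):
            a, b = bool(a), bool(b)
            intersection += int(a and b)
            size_a += int(a)
            size_b += int(b)
    if size_a + size_b == 0:
        return 1.0  # both masks empty; scoring this as 1.0 is a convention
    return 2 * intersection / (size_a + size_b)


predicted = [[1, 1, 0],
             [0, 1, 0]]
manual = [[1, 0, 0],
          [0, 1, 1]]
print(dice_coefficient(predicted, manual))  # 2 * 2 / (3 + 3), about 0.667
```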

After the PE mask was generated, we applied a computer vision technique, which we term the maximal width calculator, to find the largest PE width in each ultrasound frame (Figure 2C). We iterated along the vertical axis of each frame and, at each position, drew a virtual horizontal line to check whether it intersected the segmented mask. Where an intersection existed, we drew the line normal to the mask edge at the intersection point, and the length of this normal line within the segmented mask was taken as the PE width at that point. The largest width found over the vertical axis was taken as the PE width for the frame. This technique was applied to all 48 frames of the selected clip to obtain the optimal PE width. A detailed explanation of this process is provided in Supplementary Appendix 1.
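The maximal width calculator can be approximated as in the sketch below. This is our simplified reading of the description, not the authors' implementation: for each row, the horizontal line's first (left-most) intersection with the mask is taken as the edge point, the inward normal there is estimated with a Prewitt-style 3 × 3 gradient of the binary mask, and the normal is followed in half-pixel steps until it leaves the mask. The gradient estimate, step size, and function names are assumptions. Widths are in pixels and would need a per-pixel physical spacing (for example, from the DICOM ultrasound region calibration) to be converted to centimeters.

```python
import math


def _inside(mask, row, col):
    """True if (row, col) is within the image and on a mask pixel."""
    return 0 <= row < len(mask) and 0 <= col < len(mask[0]) and bool(mask[row][col])


def width_at_row(mask, y, step=0.5):
    """Mask width (pixels) along the inward normal at the left-most mask pixel of row y.

    Returns 0.0 when the horizontal line at row y does not meet the mask.
    """
    cols = [x for x, v in enumerate(mask[y]) if v]
    if not cols:
        return 0.0
    x = cols[0]  # first intersection of the horizontal line with the mask edge
    # Prewitt-style 3x3 gradient of the binary mask; it points toward the interior.
    gx = sum(_inside(mask, y + dy, x + 1) - _inside(mask, y + dy, x - 1) for dy in (-1, 0, 1))
    gy = sum(_inside(mask, y + 1, x + dx) - _inside(mask, y - 1, x + dx) for dx in (-1, 0, 1))
    norm = math.hypot(gx, gy)
    if norm == 0:
        return 1.0  # no usable normal (e.g. an isolated pixel): report one pixel
    nx, ny = gx / norm, gy / norm
    # Walk along the normal from the edge pixel's center until the ray leaves the mask.
    k = 1
    while _inside(mask, math.floor(y + k * step * ny + 0.5), math.floor(x + k * step * nx + 0.5)):
        k += 1
    # k * step is the exit distance from the start pixel's center; add the half
    # pixel lying behind that center to get the full crossing length.
    return k * step + 0.5


def max_width(mask):
    """Largest per-row normal width in one frame (pixels)."""
    return max((width_at_row(mask, y) for y in range(len(mask))), default=0.0)


# Toy frame: a vertical band 4 pixels wide (columns 5-8) spanning rows 2-17.
mask = [[1 if 2 <= r <= 17 and 5 <= c <= 8 else 0 for c in range(20)] for r in range(20)]
print(width_at_row(mask, 10))  # 4.0
print(max_width(mask))         # 5.5 (corner rows, diagonal normal)
```

On this toy rectangle the interior rows give the true band width of 4 pixels, but the two corner rows return 5.5 pixels because the estimated normal at a corner is diagonal. Real PE masks are smoother, but a practical version would likely need a more robust normal estimate or contour smoothing.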

In this study, continuous variables are presented as either the mean and standard deviation if they are normally distributed or as the median and interquartile range if they are not. Dichotomous data are presented as numbers and percentages. The chi-squared test was used to analyze categorical variables, while continuous variables were analyzed using either the independent-sample t-test if they were normally distributed or the Mann-Whitney U test if they were not.

The performance of the proposed pipeline for PE width measurement was evaluated by comparing the model output with the ground truth using the mean absolute error, intraclass correlation coefficient, and R-square value. The detection of PE and of moderate PE was evaluated using sensitivity, specificity, and the area under the receiver operating characteristic curve. The deep learning models in the pipeline were developed using the TensorFlow Python package, image manipulation was performed using OpenCV 3.0 and scikit-image, and all statistical analyses were conducted using SPSS for Mac, version 26.
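The three width-agreement metrics can be sketched in plain Python. The text does not state which ICC form was used, so the sketch assumes ICC(2,1) (two-way random effects, absolute agreement, single measurement, as defined by Shrout and Fleiss) and computes R-square as the squared Pearson correlation; both choices are assumptions, and the toy data are invented for illustration.

```python
def mean_absolute_error(truth, pred):
    """Average absolute difference between paired measurements."""
    return sum(abs(t - p) for t, p in zip(truth, pred)) / len(truth)


def r_squared(truth, pred):
    """Squared Pearson correlation, i.e. R^2 of a simple linear fit of pred on truth."""
    n = len(truth)
    mt, mp = sum(truth) / n, sum(pred) / n
    cov = sum((t - mt) * (p - mp) for t, p in zip(truth, pred))
    var_t = sum((t - mt) ** 2 for t in truth)
    var_p = sum((p - mp) ** 2 for p in pred)
    return cov * cov / (var_t * var_p)


def icc_2_1(truth, pred):
    """ICC(2,1): two-way random effects, absolute agreement, single measurement."""
    rows = list(zip(truth, pred))          # one row per examination, one column per rater
    n, k = len(rows), 2
    grand = sum(sum(r) for r in rows) / (n * k)
    row_means = [sum(r) / k for r in rows]
    col_means = [sum(r[j] for r in rows) / n for j in range(k)]
    msr = k * sum((m - grand) ** 2 for m in row_means) / (n - 1)   # between examinations
    msc = n * sum((m - grand) ** 2 for m in col_means) / (k - 1)   # between raters
    sse = sum((rows[i][j] - row_means[i] - col_means[j] + grand) ** 2
              for i in range(n) for j in range(k))
    mse = sse / ((n - 1) * (k - 1))                                  # residual
    return (msr - mse) / (msr + (k - 1) * mse + k * (msc - mse) / n)


truth = [1.0, 2.0, 3.0]  # toy manual widths (cm)
pred = [2.0, 3.0, 4.0]   # toy model widths with a constant +1 cm bias
print(mean_absolute_error(truth, pred), r_squared(truth, pred), icc_2_1(truth, pred))
```

The toy predictions carry a constant 1 cm bias: R-square stays at 1 because it only measures linear association, whereas the absolute-agreement ICC drops to about 0.67 because it also penalizes systematic offsets.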

In this study, a total of 995 echocardiographic examinations were analyzed. Of these, 737 examinations were from the EDH dataset, with 582 being utilized for training and 155 for internal validation. Additionally, 258 examinations from the CGMH dataset were included for external validation. However, due to the limited number of SC views present in the CGMH dataset, this view was not included in the external validation analysis.

The demographic and clinical characteristics of the patients who underwent echocardiography are presented in Table 1. The mean age of patients in the training, internal validation, and external validation sets was 67.4 ± 15.4, 59.8 ± 19.2, and 66.4 ± 16.1 years, respectively. PE was present in 46.5% of patients in the internal validation group and 63.2% of patients in the external validation group. The average ejection fraction was 64.3 ± 7.1% in the internal validation group and 61.0 ± 13.9% in the external validation group.

The view classifier achieved an average accuracy of 0.91 in the training set and 0.87 in the validation set. In the validation set, the per-class accuracy was 0.90, 0.87, 0.93, 0.76, and 0.88 for PLAX, PSAX, A4C, SC, and other views, respectively.

MWVS was used to select the correct views from all DICOM files in an examination and to improve video quality. In the EDH dataset, 80%–100% of the videos from the four selected views passed MWVS and proceeded to the segmentation model. In the external validation (CGMH) dataset, 686 ultrasound videos from the four selected views were obtained; after MWVS scanned all DICOM files, 365 (53.2%) of these videos were retained for segmentation inference. A cardiologist further checked the videos selected by the pipeline, and none had been misclassified as another cardiac view.

We used a Mask R-CNN-based model for image segmentation to localize the cardiac chambers and the PE area in the four views (Figure 4). On the validation set of 510 images, the average Dice coefficient ranged from 0.67 to 0.82 across the four views, with the subcostal (SC) view achieving the lowest result. The best Dice coefficient was observed in the PLAX view at 0.72, while the SC view had the poorest result of 0.56 (Table 2). Using the segmented PE area, we calculated the maximal PE width in each frame.

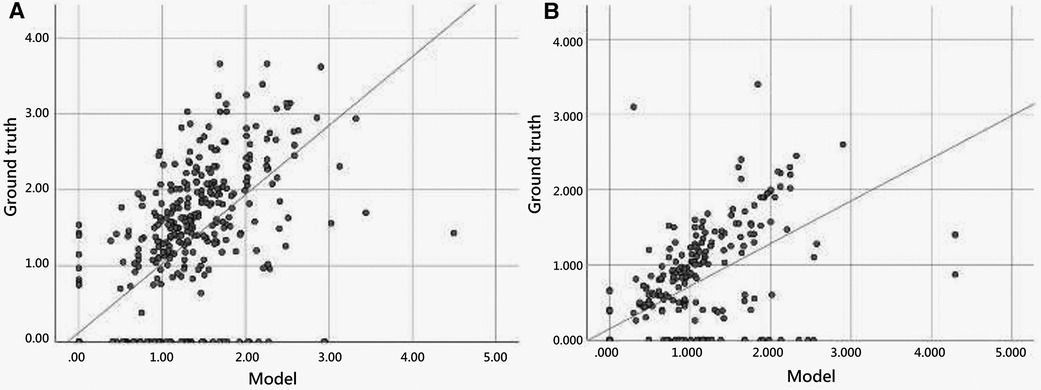

Figure 5 shows a scatter plot of PE width as measured manually (ground truth) and by the model, which identifies the longest normal line passing through the segmented mask in each frame. We compared automated and manual measurements of PE width in both the internal (EDH) and external (CGMH) validation datasets, reporting the mean absolute error and the correlation between the two. The mean absolute error was 0.33 cm in the internal dataset and 0.35 cm in the external dataset. Agreement between the model and human experts in measuring PE width was high (ICC = 0.867, p < 0.001, EDH; ICC = 0.801, p < 0.001, CGMH). The R-square value was 0.594 for the EDH dataset and 0.488 for the CGMH validation dataset.

Figure 5. Scatter plot of pericardial effusion width measurement between model and manual annotation.

Our model accurately detected the existence of PE in the internal validation [AUC = 0.926 (0.902–0.951)] and external validation [AUC = 0.842 (0.794–0.889)]. With regard to recognizing moderate PE or worse, the AUC values improved to 0.941 (0.923–0.960) and 0.907 (0.876–0.943) in the internal and external validation groups, respectively.
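A minimal sketch of how the detection AUC, sensitivity, and specificity can be obtained from predicted widths is shown below. It assumes that the predicted maximal width serves as the continuous score and that "moderate PE or worse" corresponds to a width of at least 1 cm (following the conventional grading cut-offs); the widths are invented toy values, and the 95% confidence intervals would additionally require, for example, bootstrapping, which the sketch omits.

```python
def roc_auc(labels, scores):
    """Rank-based AUC: probability that a random positive case scores higher than
    a random negative case, counting ties as one half (Mann-Whitney U / (n_pos * n_neg))."""
    pos = [s for y, s in zip(labels, scores) if y]
    neg = [s for y, s in zip(labels, scores) if not y]
    wins = 0.0
    for p in pos:
        for q in neg:
            wins += 1.0 if p > q else (0.5 if p == q else 0.0)
    return wins / (len(pos) * len(neg))


def sensitivity_specificity(labels, predicted):
    """Sensitivity and specificity of binary predictions against binary labels."""
    tp = sum(1 for y, p in zip(labels, predicted) if y and p)
    tn = sum(1 for y, p in zip(labels, predicted) if not y and not p)
    n_pos = sum(1 for y in labels if y)
    return tp / n_pos, tn / (len(labels) - n_pos)


MODERATE_CM = 1.0  # assumed cut-off for "moderate PE or worse"
manual_cm = [0.0, 0.4, 0.8, 1.2, 1.5, 2.3]   # toy ground-truth widths
model_cm = [0.1, 0.6, 1.1, 1.0, 1.7, 2.0]    # toy model widths
labels = [w >= MODERATE_CM for w in manual_cm]
print(roc_auc(labels, model_cm))  # 8 of 9 positive/negative pairs ranked correctly
print(sensitivity_specificity(labels, [w >= MODERATE_CM for w in model_cm]))
```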

We further performed a stratified analysis of model performance across echocardiographic views. In the internal validation, the model's PE width measurements were highly correlated with the ground truth in all four views, with ICCs ranging from 0.802 to 0.910. The PLAX and A4C views appeared to yield the best results, with ICCs of 0.910 (0.876–0.935) and 0.907 (0.871–0.932), respectively. In the external validation, as in the internal validation, the model performed better in the PLAX and A4C views, with ICCs of 0.807 (0.726–0.864) and 0.897 (0.846–0.931), respectively. Results for the other views are listed in Table 3.

In recent years, computer vision and deep learning techniques have been used to aid the interpretation of echocardiography, estimate cardiac function, and identify local cardiac structures. Deep learning algorithms have also been applied to facilitate the diagnosis of PE (15). Nayak et al. developed a CNN that detected PE in the apical four-chamber (A4C) and subcostal (SC) views with accuracies of 91% and 87%, respectively (11). In this study, we propose a deep learning pipeline that can process raw DICOM files from ultrasound and predict PE width in a clinical setting. The pipeline combines two deep learning models and one computer vision algorithm to predict PE width. Few efforts have been made to predict the presence of PE, and some of these were based on computed tomography scans (16, 17). To the best of our knowledge, this is the first video-based machine learning model to measure PE width using echocardiography. The correlation between our model's measurements and those of human experts was high in both the internal and external validation datasets, with the best performance observed in the parasternal long-axis (PLAX) view. Using one graphics processing unit (NVIDIA RTX 3090), inference took approximately 30–40 s per examination, which is usually faster than human assessment.

The present study introduces two novel concepts for echocardiography analysis: MWVS and the maximal width calculator for the segmented mask. These methods are particularly important for real-world applications, especially when working with relatively small datasets. Previous studies often relied on datasets manually selected by human experts during data cleaning and used only “textbook-quality” images for training (18–21). In contrast, the present study proposes an analytical pipeline that can automatically analyze echocardiograms and can be easily applied to personal devices or web applications, eliminating the need for expert sonographers or cardiologists to select images manually. Madani et al. developed a CNN that simultaneously classified 15 standard echocardiogram views acquired under a range of real-world clinical variations, and the model achieved high view-classification accuracy (21). Similarly, this study used echocardiogram video clips obtained in real-world practice for a variety of clinical purposes, including ejection fraction calculation and the detection of PE, valve disease, regional wall motion abnormality, cardiomyopathy, and pulmonary arterial hypertension. An initial screening model for view classification and quality control was developed: all raw images from the medical image database were input into the screening model, which retained only specific views of sufficient quality for diagnosis. Additionally, the “moving window” concept was used to retrieve only clips with 48 consecutive frames that fulfilled the image quality criteria. Because the model was not trained on limited or idealized datasets, we expect it to be broadly applicable in clinical practice.

MWVS is a novel concept that, to our knowledge, has not previously been proposed in machine learning-assisted echocardiography. MWVS serves as an image quality filter and plays a crucial role in ensuring that images are of sufficient quality for the next step in the pipeline. At EDH, echocardiography is performed by well-trained technicians who follow a protocol established by the echocardiologist consensus committee. As such, the original images from EDH were of relatively homogeneous quality, and MWVS filtered out fewer patients. Conversely, at CGMH, echocardiography is performed by individual echocardiologists whose techniques may vary. As a result, the original images from CGMH were less homogeneous, and MWVS filtered out more patients. This finding underscores the role of MWVS in maintaining image quality and the importance of image homogeneity for the applicability of machine learning models.

After segmenting the PE area, we developed a novel computer vision-based technique to calculate the largest PE width in an ultrasound video. PE size is currently categorized using a linear measurement of the largest width of the effusion at end-diastole and graded as small (<1 cm), moderate (1–2 cm), or large (>2 cm) (22). This semiquantitative method is prone to error because effusions can be asymmetric or loculated and fluid can shift location during the cardiac cycle (23). An automated calculation system could therefore consistently identify the largest PE width in every ultrasound frame. Compared with AI-based models, this computer vision technique more closely resembles the method used by human experts; AI-based models not only consume more computing resources but also require large datasets for training and validation. To the best of our knowledge, our study is the first to not only detect but also grade PE.
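The conventional size categories quoted above translate directly into a grading rule applied to the measured maximal width. In this sketch, treating a width of exactly 1 cm or 2 cm as moderate follows the "1–2 cm" wording, and the extra "none" category for a zero width is our addition; the function name is hypothetical.

```python
def grade_pe(width_cm):
    """Map a maximal PE width (cm) to the conventional size categories:
    small (<1 cm), moderate (1-2 cm), large (>2 cm)."""
    if width_cm <= 0:
        return "none"  # no measurable effusion; this category is an added assumption
    if width_cm < 1.0:
        return "small"
    if width_cm <= 2.0:
        return "moderate"
    return "large"


for w in (0.0, 0.6, 1.0, 2.0, 2.4):
    print(w, grade_pe(w))
```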

Our model's ability to automate the classification of PE severity, traditionally categorized as mild, moderate, or severe, represents a meaningful advance in this field. Although grading may appear straightforward for experienced clinicians, automated segmentation and measurement can be invaluable, especially where echocardiography expertise is limited or entirely absent. Importantly, although our model does not currently predict hemodynamic instability such as cardiac tamponade, its ability to differentiate moderate to severe PE from none or mild PE is crucial. This feature allows early risk stratification, prompting clinicians to initiate appropriate assessments and interventions as early as possible. Moreover, our work lays the groundwork for future models designed to predict complex clinical scenarios, such as early hemodynamic instability. Integrating our model's segmentation and classification capabilities with other clinically relevant parameters may, in time, lead to major advances in the prediction and management of such conditions.

This study had certain limitations that should be considered when interpreting the results. Firstly, the study was conducted retrospectively and the model was trained using data from only one hospital. This resulted in a limited sample size and a lack of ethnic diversity, which may impact the generalizability of the findings to other populations. To address this, future studies should utilize a multicenter design with greater heterogeneity in the dataset. However, it is important to note that the images used in this study were obtained using different ultrasonography machines and were interpreted by multiple echocardiographers, and the model achieved similar results during external validation. Secondly, while the proposed pipeline did grade the amount of PE, there was no information on whether there were signs of cardiac tamponade, as PE volume does not necessarily correlate with clinical symptoms (24). Additionally, due to the small sample size, we were unable to conduct subgroup analysis to distinguish the algorithm's performance on transudative vs. exudative effusions. To increase the clinical applicability of the findings, larger studies and validation cohorts are needed to reproduce the results of this study. Further research should also evaluate the collapsibility of the cardiac chambers and the presence of tamponade signs.

In this study, we developed a deep-learning pipeline that automatically calculates PE width from raw ultrasound clips. The model demonstrated high accuracy in detecting PE and classifying PE width in both internal and external validation. The use of MWVS for image quality filtering and of computer vision techniques for calculating the maximal PE width represents a novel application in the field of echocardiography.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

This study was authorized by the Institutional Review Board of E-Da Hospital (EDH; no: EMRP24110N) and the Institutional Review Board of Kaohsiung Chang Gung Memorial Hospital (CGMH; no: 20211889B0 and 202101662B0). Written informed consent for participation was not required for this study in accordance with the national legislation and the institutional requirements.

C-YC and C-CW drafted the manuscript and the figures. H-CC and T-YC collected and labeled the data. C-HH built and validated the model. I-MC designed the study flowchart and supervised the study. C-HRL revised the manuscript. All authors contributed to the article and approved the submitted version.

The study was supported by grants EDCHM109001 and EDCHP110001 from E-Da Cancer Hospital and grant CMRPG8M0181 from the Chang Gung Medical Foundation.

We thank the Biostatistics Center, Kaohsiung Chang Gung Memorial Hospital for statistics work.

C-HH was employed by Skysource Technologies Co., Ltd.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at https://www.frontiersin.org/articles/10.3389/fcvm.2023.1195235/full#supplementary-material

1. Jung HO. Pericardial effusion and pericardiocentesis: role of echocardiography. Korean Circ J. (2012) 42:725–34. doi: 10.4070/kcj.2012.42.11.725

2. Ceriani E, Cogliati C. Update on bedside ultrasound diagnosis of pericardial effusion. Intern Emerg Med. (2016) 11:477–80. doi: 10.1007/s11739-015-1372-8

3. Vakamudi S, Ho N, Cremer PC. Pericardial effusions: causes, diagnosis, and management. Prog Cardiovasc Dis. (2017) 59:380–8. doi: 10.1016/j.pcad.2016.12.009

4. Malik SB, Chen N, Parker RA III, Hsu JY. Transthoracic echocardiography: pitfalls and limitations as delineated at cardiac CT and MR imaging. Radiographics. (2017) 37:383–406. doi: 10.1148/rg.2017160105

5. Sampaio F, Ribeiras R, Galrinho A, Teixeira R, João I, Trabulo M, et al. Consensus document on transthoracic echocardiography in Portugal. Rev Port Cardiol (Engl Ed). (2018) 37:637–44. doi: 10.1016/j.repc.2018.05.009

6. Sagristà-Sauleda J, Mercé AS, Soler-Soler J. Diagnosis and management of pericardial effusion. World J Cardiol. (2011) 3(5):135. doi: 10.4330/wjc.v3.i5.135

7. de Siqueira VS, Borges MM, Furtado RG, Dourado CN, da Costa RM. Artificial intelligence applied to support medical decisions for the automatic analysis of echocardiogram images: a systematic review. Artif Intell Med. (2021) 120:102165. doi: 10.1016/j.artmed.2021.102165

8. Zhou J, Du M, Chang S, Chen Z. Artificial intelligence in echocardiography: detection, functional evaluation, and disease diagnosis. Cardiovasc Ultrasound. (2021) 19:1–11. doi: 10.1136/heartjnl-2021-319725

9. Ouyang D, He B, Ghorbani A, Yuan N, Ebinger J, Langlotz CP, et al. Video-based AI for beat-to-beat assessment of cardiac function. Nature. (2020) 580:252–6. doi: 10.1038/s41586-020-2145-8

10. Huang M-S, Wang C-S, Chiang J-H, Liu P-Y, Tsai W-C. Automated recognition of regional wall motion abnormalities through deep neural network interpretation of transthoracic echocardiography. Circulation. (2020) 142:1510–20. doi: 10.1161/CIRCULATIONAHA.120.047530

11. Nayak A, Ouyang D, Ashley EA. A deep learning algorithm accurately detects pericardial effusion on echocardiography. J Am Coll Cardiol. (2020) 75(11_Supplement_1):1563–1563. doi: 10.1016/S0735-1097(20)32190-2

12. Zhang J, Gajjala S, Agrawal P, Tison GH, Hallock LA, Beussink-Nelson L, et al. Fully automated echocardiogram interpretation in clinical practice: feasibility and diagnostic accuracy. Circulation. (2018) 138:1623–35. doi: 10.1161/CIRCULATIONAHA.118.034338

13. He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. Paper presented at: proceedings of the IEEE conference on computer vision and pattern recognition (2016). doi: 10.48550/arXiv.1512.03385

14. He K, Gkioxari G, Dollár P, Girshick R. Mask R-CNN. Paper presented at: proceedings of the IEEE international conference on computer vision (2017).

15. Ghorbani A, Ouyang D, Abid A, He B, Chen JH, Harrington RA, et al. Deep learning interpretation of echocardiograms. NPJ Digit Med. (2020) 3:1–10. doi: 10.1038/s41746-019-0216-8

16. Ebert LC, Heimer J, Schweitzer W, Sieberth T, Leipner A, Thali M, et al. Automatic detection of hemorrhagic pericardial effusion on PMCT using deep learning-a feasibility study. Forensic Sci Med Pathol. (2017) 13:426–31. doi: 10.1007/s12024-017-9906-1

17. Draelos RL, Dov D, Mazurowski MA, Lo JY, Henao R, Rubin GD, et al. Machine-learning-based multiple abnormality prediction with large-scale chest computed tomography volumes. Med Image Anal. (2021) 67:101857. doi: 10.1016/j.media.2020.101857

18. Khamis H, Zurakhov G, Azar V, Raz A, Friedman Z, Adam D. Automatic apical view classification of echocardiograms using a discriminative learning dictionary. Med Image Anal. (2017) 36:15–21. doi: 10.1016/j.media.2016.10.007

19. Sengupta PP, Huang YM, Bansal M, Ashrafi A, Fisher M, Shameer K, et al. Cognitive machine-learning algorithm for cardiac imaging: a pilot study for differentiating constrictive pericarditis from restrictive cardiomyopathy. Circ Cardiovasc Imaging. (2016) 9:e004330. doi: 10.1161/CIRCIMAGING.115.004330

20. Gao X, Li W, Loomes M, Wang L. A fused deep learning architecture for viewpoint classification of echocardiography. Info Fusion. (2017) 36:103–13. doi: 10.1016/j.inffus.2016.11.007

21. Madani A, Arnaout R, Mofrad M, Arnaout R. Fast and accurate view classification of echocardiograms using deep learning. NPJ Digit Med. (2018) 1:1–8. doi: 10.1038/s41746-017-0013-1

22. Karia DH, Xing Y-Q, Kuvin JT, Nesser HJ, Pandian NG. Recent role of imaging in the diagnosis of pericardial disease. Curr Cardiol Rep. (2002) 4:33–40. doi: 10.1007/s11886-002-0124-3

23. Prakash AM, Sun Y, Chiaramida SA, Wu J, Lucariello RJ. Quantitative assessment of pericardial effusion volume by two-dimensional echocardiography. J Am Soc Echocardiogr. (2003) 16:147–53. doi: 10.1067/mje.2003.35

Keywords: echocardiography, deep learning—artificial intelligence, pericardial effusion (PE), width measurements, automated segmentation, moving window (MW)

Citation: Cheng C-Y, Wu C-C, Chen H-C, Hung C-H, Chen T-Y, Lin C-HR and Chiu I-M (2023) Development and validation of a deep learning pipeline to measure pericardial effusion in echocardiography. Front. Cardiovasc. Med. 10:1195235. doi: 10.3389/fcvm.2023.1195235

Received: 28 March 2023; Accepted: 13 July 2023;

Published: 4 August 2023.

Edited by:

Francesca Innocenti, Careggi University Hospital, Italy

Reviewed by:

Chun Ka Wong, The University of Hong Kong, Hong Kong SAR, China

© 2023 Cheng, Wu, Chen, Hung, Chen, Lin and Chiu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: I-Min Chiu ray1985@cgmh.org.tw; outofray@hotmail.com

†These authors have contributed equally to this work

Abbreviations A4C, apical four-chamber; AI, artificial intelligence; AUC, area under the receiver operating characteristic curve; CGMH, Chang Gung Memorial Hospital; CNN, convolutional neural network; DICOM, Digital Imaging and Communications in Medicine; EDH, E-Da Hospital; GPU, graphics processing unit; ICC, intraclass correlation coefficient; MWVS, moving window view selection; PE, pericardial effusion; PLAX, parasternal long-axis; PSAX, parasternal short-axis; SC, subcostal.

