
REVIEW article

Front. Cardiovasc. Med. , 08 November 2022

Sec. Cardiovascular Imaging

Volume 9 - 2022 | https://doi.org/10.3389/fcvm.2022.1016032

This article is part of the Research Topic: Current and Future Role of Artificial Intelligence in Cardiac Imaging, Volume II

Liliana Szabo1,2,3*, Zahra Raisi-Estabragh1,2, Ahmed Salih1,2, Celeste McCracken4, Esmeralda Ruiz Pujadas5, Polyxeni Gkontra5, Mate Kiss6, Pal Maurovich-Horvath7, Hajnalka Vago3, Bela Merkely3, Aaron M. Lee1,2, Karim Lekadir5, Steffen E. Petersen1,2,8,9

A growing number of artificial intelligence (AI)-based systems are being proposed and developed in cardiology, driven by the increasing need to deal with the vast amount of clinical and imaging data with the ultimate aim of advancing patient care, diagnosis and prognostication. However, there is a critical gap between the development and clinical deployment of AI tools. A key consideration for implementing AI tools into real-life clinical practice is their “trustworthiness” by end-users. Namely, we must ensure that AI systems can be trusted and adopted by all parties involved, including clinicians and patients. Here we provide a summary of the concepts involved in developing a “trustworthy AI system.” We describe the main risks of AI applications and potential mitigation techniques for the wider application of these promising techniques in the context of cardiovascular imaging. Finally, we show why trustworthy AI concepts are important governing forces of AI development.

In recent years, several artificial intelligence (AI) based systems have been developed in cardiology. This trend is driven by the increasing need to deal with the vast amount of clinical and imaging data produced in the field and with the ultimate aim to advance patient care, diagnosis and prognostication (1, 2). It is not a question anymore whether AI will transform healthcare but rather how it will do so (3). Transformative measures have already impacted many areas of cardiovascular medicine, from smart devices promising to diagnose arrhythmias based on single-lead ECG (4) to automatic image segmentation tools shortening manual image analysis (5, 6). However, there is a critical gap between the development and deployment of AI tools. To date only 24 AI-driven cardiovascular imaging products have received FDA approval (7), suggesting there remain critical challenges in building and implementing these models into everyday practice.

It is easy to scare away busy clinicians with endless legal documentation and specialized terms from philosophy, law and data science. On the other hand, expecting the data science community to stay up to date with their own field, understand complex medical concepts and consider the ethical ramifications of AI is a recipe for serious unintended consequences (8). Indeed, the discussion around the ethical issues of AI should be inclusive of all participants, from funding agencies to patients.

The promise of AI revolutionizing cardiovascular imaging cannot be delivered without achieving the trust of end-users and patients. Currently, there are several ethical frameworks for AI applications. One of the most universal guidelines was proposed by the European Commission in 2019 (9). This document provides a detailed technical summary and general guidance for dealing with the ethical questions of AI. However, it was written by senior data scientists and consequently does not focus on issues of healthcare applications (10). Indeed, to date, little material on the ethical questions embedded in AI applications is accessible to healthcare professionals who lack an in-depth understanding of the technical terms. Notably, the document written by the European and North American Societies in Radiology detailing potential AI ethics issues can work as a primer for other societies in medicine (11). More recently, the first comprehensive guideline for assessing the trustworthiness of AI-based systems in medical imaging was developed, named FUTURE-AI (12). This technical framework promises to transform AI development in medical imaging and will help create an environment for safe clinical implementation of novel methods (https://future-ai.eu/).

In this narrative review, we aim to summarize the main risks of AI application and potential mitigation techniques in plain language. We provide an overview of ongoing efforts to improve the “trustworthiness” of AI in cardiovascular imaging. Finally, we aim to provide key questions to help initiate dialogue within research groups.

Several dedicated publications describe AI's definitions and main applications within cardiology in great detail (13–16). Here we restrict ourselves to those basic concepts essential for further discussion of AI trustworthiness.

AI is an umbrella term within data science, incorporating a wealth of models, use cases and aiding methodologies to mimic human thought processes and learning patterns (8). In medical research, the most commonly used AI models are based on machine learning (ML); therefore, several important source documents handle AI and ML almost synonymously (1). An overly simplified definition of ML is computer algorithms that “learn” from data. ML methods use both pre-processed data (e.g., anthropometric data derived from patients) and raw data (e.g., raw imaging files). Deep learning (DL) is a subset of ML that deals with algorithms inspired by the structure and function of the human brain. DL algorithms use neural networks to transform the raw data into an abstract level, refine accuracy and adjust when encountering new data (17).

We can differentiate between supervised and unsupervised learning based on the type of data fed into an AI algorithm. In supervised learning, humans curate and label data before training, and the model is optimized for accuracy with known inputs and outputs. Supervised models are used for classification (putting data into categories) and regression (predicting continuous variables). On the other hand, unsupervised learning deals mainly with unlabelled data, with the ultimate goal of identifying novel patterns in a dataset, for example through clustering (14).

A critically important prerequisite for ML model development is a large and consistently labeled dataset; variable data quality and inconsistent labeling can reduce the accuracy of an AI model. Another important step is data splitting: datasets are generally split into training, validation and test sets. The training and validation sets are used to train and fit the model. More specifically, the validation set provides an estimate of model fit for model selection or parameter tuning, whilst the test set is reserved to evaluate the final model (18). Given the degradation in performance reported for deep-learning algorithms for medical imaging, it is of paramount importance that the test set consists of independent cohorts to allow for external validation, a key requirement for ensuring the trustworthiness of AI systems (19, 20). Moreover, the external validation should be performed by independent parties to ensure objectivity. Note that validation in the original dataset is not synonymous with external validation, which is performed on a separate dataset.
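The splitting scheme described above can be sketched in a few lines of Python. This is a minimal illustration rather than a method taken from the cited work: the record layout (`patient_id`, `center`), the function name `split_by_patient` and the 15% validation and test fractions are all assumptions. The sketch splits at the patient level, so that images from one patient never appear in more than one set, and it reserves one center entirely as an external test cohort.

```python
import random

def split_by_patient(records, external_center, val_frac=0.15, test_frac=0.15, seed=0):
    """Split image records into train / val / internal test / external test sets.

    records: list of dicts with the hypothetical keys 'patient_id' and 'center'.
    Every record from `external_center` is held out for external validation.
    """
    external = [r for r in records if r["center"] == external_center]
    internal = [r for r in records if r["center"] != external_center]

    # Shuffle unique patients (not images) so one patient never spans two sets.
    patients = sorted({r["patient_id"] for r in internal})
    random.Random(seed).shuffle(patients)

    n_val = int(len(patients) * val_frac)
    n_test = int(len(patients) * test_frac)
    val_ids = set(patients[:n_val])
    test_ids = set(patients[n_val:n_val + n_test])

    split = {"train": [], "val": [], "test": [], "external": external}
    for r in internal:
        if r["patient_id"] in val_ids:
            split["val"].append(r)
        elif r["patient_id"] in test_ids:
            split["test"].append(r)
        else:
            split["train"].append(r)
    return split

# Toy data: 40 patients with 2 images each, spread over 4 hypothetical centers.
records = [
    {"patient_id": p, "center": f"center_{p % 4}", "image": f"p{p}_img{i}"}
    for p in range(40) for i in range(2)
]
split = split_by_patient(records, external_center="center_3")
for name, subset in split.items():
    print(name, len(subset))
```

Holding out a whole center is only one possible way to approximate external validation; a cohort collected and evaluated by an independent party, as the text recommends, is a stronger test.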

It is easy to get lost in a philosophical discussion about how to define trust or whether it is even possible outside the human realm (21, 22). From a practical standpoint, these questions are confusing rather than helpful. For decades AI was part of the scientific discussion, existing in research environments and science fiction. Therefore, the question of whether to trust AI tools in healthcare was merely a discussion for scholars in the ethical and data science fields. However, with novel tools emerging daily, we are forced to reconsider the potential ramifications embedded in AI.

The questions we face today are highly practical and directly affect the field's development. Can we trust the CMR segmentation provided by the AI tool? Are we confident that the new artifact-removing algorithm does not mask any important clinical clue? Should we rely on the novel diagnosis support toolkit? What does it mean to trust the judgement of an automatic tool? How do we communicate the uncertainties embedded in a novel prediction score? Are we holding AI to a higher standard than clinical judgement based on intuition and experience?

As an example, left ventricular ejection fraction (LVEF) measured using echocardiography is a long-standing “trusted” parameter in cardiovascular medicine. Through years of development, validation, and experience, we have learned to comprehend the signals that link it to disease and outcome and to communicate the findings to patients, who in turn trust their practitioners to understand echocardiography (23, 24). Although the information it provides is far from complete and prone to errors, the usefulness of knowing the EF of a patient in a clinical situation is beyond question, even when clinicians use eyeballing (25). On the other hand, an AI application based on the idea that the human eye and brain can learn with experience how to estimate EF without measuring ventricular volumes and making calculations is more controversial, as this approach does not allow the revision of the ventricular contours in case of seemingly disparate results (26).

Wynants et al. reviewed multivariable COVID-19-related prediction models at the beginning of the pandemic. They found that the 232 models identified in the study all reported moderate to excellent predictive performance, but all were appraised to have a high or uncertain risk of bias owing to a combination of poor reporting and poor methodological conduct for participant selection, predictor description, and statistical methods used (27). The most sobering conclusion was that none of the proposed models proved to be of much help in clinical practice. The same conclusion was drawn from the investigation by the Alan Turing Institute (28) and others (29).

Within cardiovascular imaging, the main areas of AI application are: (1) image acquisition and reconstruction, which helps to reduce the scan time, (2) improving the imaging workflow and efficiency of time-expensive tasks such as segmentation, (3) improving the diagnosis-making process, (4) evaluation of disease progression and prognosis, (5) assessment of treatment effectiveness, and (6) generation of new knowledge. Examples illustrating key areas of AI applications from non-invasive cardiovascular imaging are summarized in Table 1; further examples can be found in dedicated publications (15, 16, 18, 49, 50).

There has been a steep increase in publications using ML in cardiovascular imaging in the past 5 years. This trend was driven by the increasing availability of high computational power, large datasets (16), and the discovery of the computational effectiveness of convolutional neural network architecture (AlexNet) (51).

It has been envisioned that AI tools will take over or at least substitute the work of radiologists and cardiovascular imagers to a great extent and consequently necessitate fewer human resources, creating cheaper and more accurate care in the future (52). Roughly a decade into the era of accessible AI innovation, we can see that changes are less rapid, and the results are beneath our expectations (53). No segmentation is used unchecked, no diagnosis is made without human supervision and approval, and the need for well-trained imagers has increased (54). Notably, only a small proportion of the proposed methods, models and tools gain approval from the appropriate authorities (FDA or European Medicines Agency) and reach the clinical application stage. Should we then just conclude that AI is pointless and we must not use it? On the contrary, these experiences and setbacks should motivate research into more robust AI models and rigorous validation standards. Only by learning from the critical issues raised by researchers and end-users can we move forward in the field of AI.

To understand why AI applications are not approved and used at the rate predicted during the height of the ML hype in 2016, we have to look into the potential limitations of these tools. Here we provide an introduction to the main risks of AI applications within cardiovascular imaging: (1) lack of robustness and reliability causing patient harm, (2) issues of AI usability and the misuse of tools, (3) bias and lack of fairness within the AI application which can perpetuate existing inequities, (4) privacy and security issues, (5) lack of transparency, (6) gaps in explainability, (7) gaps in accountability, and (8) obstacles in implementation (Table 2). In each section, we describe the main attributes of each risk, provide relevant examples within cardiovascular imaging and illustrate potential mitigation strategies.

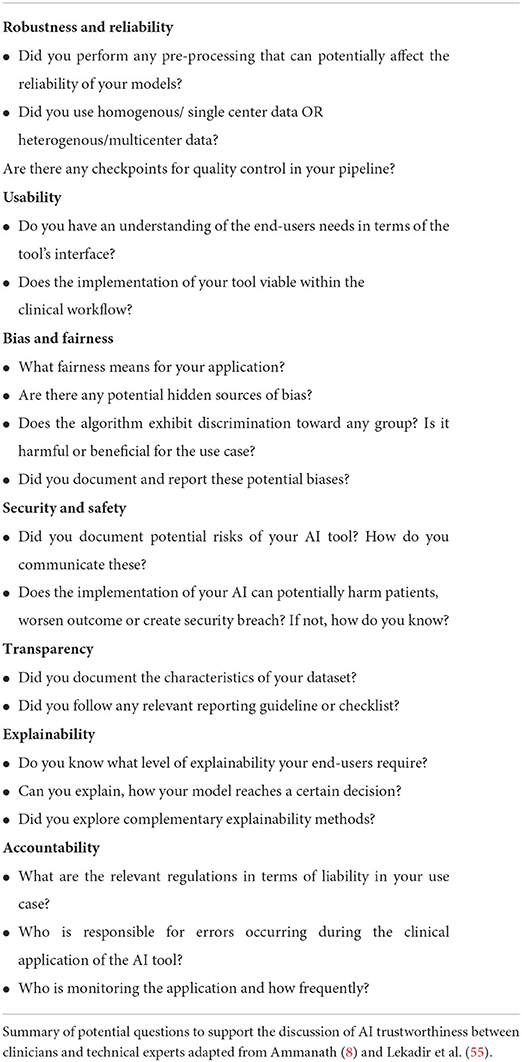

Table 2. Questions to promote discussion of AI trustworthiness between clinicians and technical experts.

AI robustness is defined as the ability of a system to maintain its performance under changing conditions (56). The promise of a robust AI tool is that it can consistently deliver accurate outputs, even when it encounters unexpected or subquality data. When a model's functionality and accuracy change easily, it is considered “brittle” (8).

Medical imaging encapsulates a wealth of potential sources for AI brittleness (12):

(1) Heterogeneity within imaging types of equipment and vendors.

(2) Image acquisition heterogeneity within imaging centers and operators.

(3) Patient-related heterogeneity (including clinical status and anthropometric peculiarities).

(4) Data labeling and segmentation heterogeneity between annotators.

As mentioned above, ML algorithms play an increasingly important role in the image acquisition of all cardiovascular imaging modalities. However, these applications are not without limitations. For example, Antun et al. (57) highlighted possible sources of instability of deep learning algorithms in CMR reconstruction. The instabilities occur in several forms: for example, undetectable perturbations may result in artifacts in the reconstruction, or small structures such as tumors may not be captured in the reconstruction phase.

The potential brittleness of AI tools is also very well illustrated by the recent developments in CMR image segmentation (58). Critically, DL-based segmentation tools are often trained and tested on images from single clinical centers, using one vendor with a well-defined protocol, resulting in homogeneous datasets (59, 60). Furthermore, CMR protocols across prominent multi-center cohort studies are also standardized, prohibiting wider generalizability (5, 61, 62). A notable effort to develop segmentation tools on more heterogeneous datasets to promote robust AI tool development is the Multi-Center, Multi-Vendor and Multi-Disease Cardiac Segmentation (M&Ms) Challenge (63). Investigators of the euCanSHare international project established an open-access CMR dataset (six centers, four vendors, and more than nine phenotype groups) to enable generalizable DL models in cardiac image segmentation. The Society for Cardiovascular Magnetic Resonance (SCMR) registry (64) and the Cardiac Atlas Project (65) also aim to provide diverse databases for similar research ambitions. These efforts are still ongoing, and Campello et al. (63) noted that further research is necessary to improve generalizability toward different scanners or protocols.

Automated coronary computed tomography angiography (CCTA) segmentation faced similar challenges in the past decade. Although the accuracy of the CCTA plaque segmentation tools has been validated against the gold standard invasive methods, the interplatform reproducibility remains disputed (66, 67). Indeed, the time-consuming and labor-intensive nature of quantitative plaque assessment is still responsible for the frequent visual evaluation of coronary artery disease in clinical practice, despite some emerging solutions (68).

Apart from well-curated diverse datasets for benchmarking of segmentation algorithms and the development of novel segmentation tools, the reliability of the output is also critical to the clinical implementation of these tools. Recently, automated quality control tools have been suggested for high-volume datasets where manual expert inspection is not achievable. Automated quality control tools utilizing different methods, such as the Dice similarity coefficient, the reverse classification accuracy (RCA) framework, and the quality control-driven (QCD) framework, have been implemented for ventricular (69), T1 mapping (70), aortic (71), coronary, and pericardial fat segmentation (72).
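To make the first of these metrics concrete, the Dice similarity coefficient between two binary masks A and B is 2|A ∩ B| / (|A| + |B|), ranging from 0 (no overlap) to 1 (identical masks). The following minimal Python sketch computes it for small masks stored as nested lists. The function names, the toy masks and the review threshold of 0.8 are illustrative assumptions, and the comparison presumes that a reference mask exists, which is often not the case in large-scale quality control.

```python
def dice_coefficient(mask_a, mask_b):
    """Dice similarity coefficient between two binary masks (nested lists of 0/1)."""
    flat_a = [v for row in mask_a for v in row]
    flat_b = [v for row in mask_b for v in row]
    if len(flat_a) != len(flat_b):
        raise ValueError("masks must have the same shape")
    overlap = sum(1 for a, b in zip(flat_a, flat_b) if a and b)
    total = sum(1 for a in flat_a if a) + sum(1 for b in flat_b if b)
    # Convention assumed here: two empty masks count as perfect agreement.
    return 1.0 if total == 0 else 2.0 * overlap / total

def needs_review(mask_a, mask_b, threshold=0.8):
    """Flag a segmentation for manual inspection (the threshold is hypothetical)."""
    return dice_coefficient(mask_a, mask_b) < threshold

# Toy 3x3 masks: the automated mask labels one pixel more than the reference.
automated = [[0, 1, 1],
             [0, 1, 1],
             [0, 0, 0]]
reference = [[0, 1, 1],
             [0, 1, 0],
             [0, 0, 0]]
dice = dice_coefficient(automated, reference)
print(round(dice, 3), needs_review(automated, reference))
```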

AI robustness largely relies on the adaptability of a given model to changing circumstances. A segmentation tool might perform well in a given dataset of healthy hearts, but it might not directly translate into a heterogeneous dataset. The following concepts help promote robustness and reliability in medical imaging applications of AI:

(1) Heterogeneous training data (multi-center, multi-vendor, multiple diseases).

(2) Checking intra- and interobserver variability and whether the difference between the automated AI tool and human observers lies within the observer variability.

(3) Applying well-established annotation with powerful annotation software.

(4) Image quality control (to identify artifacts within the data, applying algorithms which help to reduce artifacts).

(5) Applying image harmonization techniques (including the use of phantoms and dedicated harmonization tools such as histogram normalization).

(6) Applying feature harmonization techniques (using test-retest studies and feature selection methods to select stable, robust features for the models).

(7) Data augmentation.

(8) Uncertainty estimation [a variety of uncertainty quantification methods exist, including prediction intervals, Monte Carlo dropout, and ensembling; they are designed to capture the distance of a new observation from the observations the algorithm has already seen; Kompa et al. (73)].
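As an illustration of the last item, the sketch below estimates uncertainty by ensembling. It is a toy example under stated assumptions: a one-dimensional least-squares line stands in for an imaging model, the ensemble is built by bootstrap resampling (one simple form of ensembling), and all names and data are hypothetical. The spread of the ensemble's predictions grows for an input far from the training data, which is the behavior uncertainty estimates are meant to expose.

```python
import random
import statistics

def fit_line(xs, ys):
    """Ordinary least-squares fit y = a + b * x; returns (a, b)."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    return my - b * mx, b

def bootstrap_ensemble(xs, ys, n_models=50, seed=0):
    """Fit one model per bootstrap resample (sampling with replacement)."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_models):
        idx = [rng.randrange(n) for _ in range(n)]
        models.append(fit_line([xs[i] for i in idx], [ys[i] for i in idx]))
    return models

def predict_with_uncertainty(models, x):
    """Ensemble mean and standard deviation of the predictions at x."""
    preds = [a + b * x for a, b in models]
    return statistics.fmean(preds), statistics.stdev(preds)

# Toy data: hypothetical inputs between 0 and 29 with noisy linear outputs.
rng = random.Random(1)
xs = [float(i) for i in range(30)]
ys = [2.0 * x + rng.gauss(0.0, 1.0) for x in xs]

models = bootstrap_ensemble(xs, ys)
mean_near, sd_near = predict_with_uncertainty(models, 15.0)   # inside the training range
mean_far, sd_far = predict_with_uncertainty(models, 100.0)    # far outside it
print(f"x=15:  {mean_near:.1f} +/- {sd_near:.2f}")
print(f"x=100: {mean_far:.1f} +/- {sd_far:.2f}")
```

In practice the ensemble members would be full segmentation or classification networks, and a large spread could be used to route a case to a human reader.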

Potential issues that can arise during the assessment of robustness and clinical usability are well illustrated by the adaptation of radiomics in cardiovascular imaging (74). Radiomics enables the extraction of voxel-level information from digital images, promising the quantitative description of tissue shape and texture. The utility of CT radiomics has been demonstrated in identifying vulnerable coronary atherosclerotic plaques (75–77) and linking pericoronary adipose tissue patterns to local inflammation (78, 79). CMR radiomics has also been shown to improve the discrimination of cardiomyopathies (80–82) and improve risk prediction among ST-elevation myocardial infarction patients (83, 84). Despite these advances, the clinical implementation of radiomics is in its infancy. The general critique of the technique lies in the poor repeatability of radiomics features. To improve radiomics usability in CMR, Raisi-Estabragh et al. (85) conducted a multi-center and multi-vendor test-retest study to evaluate the repeatability and reproducibility of CMR radiomics features using cine imaging. The authors reported variable levels of repeatability of the features, which are likely to be clinically relevant. To reduce the radiomics variability introduced by the acquisition center, Campello et al. (86) evaluated several image- and feature-based normalization techniques. The authors demonstrated that ComBat, a feature-based harmonization technique, can remove center information, but this does not translate to better algorithmic generalization for classification. The best performing approach in this respect was piecewise linear histogram matching normalization.

Usability is defined as the extent to which an AI application can be utilized to achieve specific goals by specified users with effectiveness, efficiency and satisfaction (87). As the interaction between healthcare professionals and technology is increasingly important, more and more research effort is aimed at testing clinical usability. However, AI tools are barely tested regarding how they interact with clinicians, and most applications are still in “proof-of-concept” status (88). Key issues of usability include lack of a human-centered approach for the development of the AI technologies, e.g., lack of involvement of the end-user for the definition of the clinical requirements and of multi-stakeholder engagement throughout the production lifecycle.

In AI, defining bias and fairness is challenging due to the ever-changing applications we put AI to [ISO/IEC TR 24027:2021 (89)]. Within the healthcare domain, fairness means that AI algorithms should be impartial and maintain the same performance when applied to similarly situated individuals (individual fairness) or different groups of individuals, including under-represented groups (group fairness) (12).

Until now, little data is available regarding the bias and fairness of algorithms in cardiovascular imaging, even though the phenomenon is well-known. As Rajkomar et al. summarized: any type of bias depicted within the dataset is learned and adapted into model performance (90). Overrepresentation of a certain group leads to data collection bias (18), as exemplified by Larrazabal et al. (91). They demonstrated in a large-scale analysis of chest X-ray images that gender imbalance in the training dataset led to incorrect classification of important conditions such as atelectasis, cardiomegaly or effusion. Puyol-Antón et al. performed the first analysis of DL fairness in cardiovascular segmentation using the UKB dataset (92). They found that the segmentation algorithm trained on a dataset balanced regarding participant sex but imbalanced concerning ethnicity resulted in less reliable outcomes for minority groups. It is easy to see how data biases might lead to a less inclusive distribution of resources. Lack of fairness might not only lead to loss of opportunities and worse health outcomes among minority groups but may also reduce public trust in AI applications.

Lekadir et al. identified the main guiding principles for fairness in medical imaging AI (12). Actions to promote AI fairness are not one step but should be implemented throughout the AI lifecycle. Here we summarize the main recommendations from a clinical perspective:

(1) Multi-disciplinarity, which stands for the inclusion of all important stakeholders (AI developers, imaging specialists, patients, and social scientists) in the AI design and implementation.

(2) Context-specific definition of fairness with regards to potential hidden biases in the dataset and data annotators.

(3) Standardization of key variables (e.g., sex, and ethnicity should be collected in a standardized way, because these descriptors of the groups can help test, and verify AI fairness).

(4) The data should be probed for (im)balances, particularly participant age, sex, ethnicity, and social background.

Once we know the potential biases, we have several options to deal with them. There are tools to promote AI fairness on a data collection and curation level, as well as in the model training and testing process.

(1) Data collection process in itself should be transparent and well-documented.

(2) Collecting multi-center data.

(3) Application of specialized statistical methods to evaluate fairness (e.g., true positive rate disparity, statistical parity group fairness, equalized odds, predictive/equality) (93, 94).

(4) Application of specialized statistical methods to mitigate bias (e.g., re-sampling, data augmentation, development of stratified models by sex, or ethnicity).
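Two of the group fairness measures named above can be written down directly. The following Python sketch, using made-up labels and hypothetical group names, computes the true positive rate per group (the basis of true positive rate disparity) and the rate of positive predictions per group (the basis of statistical parity). It is an illustration of the definitions, not an implementation taken from the cited references.

```python
def rates_by_group(y_true, y_pred, groups):
    """Return {group: (true_positive_rate, positive_prediction_rate)}."""
    rates = {}
    for g in sorted(set(groups)):
        idx = [i for i, gi in enumerate(groups) if gi == g]
        positives = [i for i in idx if y_true[i] == 1]
        # TPR is undefined for a group without any true positive case.
        tpr = sum(y_pred[i] for i in positives) / len(positives) if positives else float("nan")
        ppr = sum(y_pred[i] for i in idx) / len(idx)
        rates[g] = (tpr, ppr)
    return rates

def disparities(y_true, y_pred, groups, group_a, group_b):
    """TPR gap and statistical parity gap between two groups (a minus b)."""
    rates = rates_by_group(y_true, y_pred, groups)
    tpr_gap = rates[group_a][0] - rates[group_b][0]
    parity_gap = rates[group_a][1] - rates[group_b][1]
    return tpr_gap, parity_gap

# Toy example: the classifier finds every case in group A but misses two of three in group B.
groups = ["A"] * 5 + ["B"] * 5
y_true = [1, 1, 1, 0, 0] + [1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 0] + [1, 0, 0, 0, 0]

tpr_gap, parity_gap = disparities(y_true, y_pred, groups, "A", "B")
print(f"TPR gap: {tpr_gap:.2f}, statistical parity gap: {parity_gap:.2f}")
```

A gap of zero on both measures does not by itself establish fairness; which measure matters depends on the clinical context, as noted in the recommendations above.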

Exploratory data analysis is also a great tool to probe the dataset for hidden biases (8) and should not be a solitary task for the data scientist. Researchers with a medical background are more adept at picking up chance associations and odd correlations within the dataset. Among other things, data scientists can produce synthetic data to compensate for missing values to create a more balanced dataset. At the same time, we must always stay vigilant to the potential biological meaning of missing data before deciding to make up for it. Therein lies another strong argument for inclusive AI research.

Any potential breach in healthcare AI systems can seriously undermine the trust of end-users. Therefore, developers should cooperate with cybersecurity experts to protect personal information against bad actors before clinical implementation. In some critical areas, such as data protection, there are firmly outlined rules in place: e.g., EU General Data Protection Regulation (GDPR) or the California Privacy Rights Act (CPRA). However, these regulations can never keep up with the speed of innovation.

Key issues surfacing with the use of clinical data for AI development (95):

(1) Sensitive data being shared without informed consent.

(2) Inappropriate informed consent forms (e.g., information within the consent form is detailed beyond the processing capability of the patient/user, no dedicated time allocated for consent review, and opaque use cases preventing patients from understanding how their data might be used).

(3) Data re-purposing without the patient's knowledge and consent.

(4) Personal data being exposed.

(5) Attacks on AI applications (e.g., data poisoning, adversarial attacks).

For example, the South Denver Cardiology Associates recently confirmed a data breach affecting 287,000 patients. The stolen dataset contained dates of birth, Social Security numbers, driver's license numbers, patient account numbers, health insurance information, and clinical information (96). This leakage might result in identity theft, insurance fraud or other inappropriate use of sensitive data. Moreover, in the field of medical imaging, particular attention is necessary toward dealing with potential adversarial attacks (97), including “one-pixel” attacks (98). These attacks involve slight changes to the input images intending to fool the AI and produce a false result. In other cases details of large scale data sharing agreements remain gray for the public (99), which might lead to data privacy controversies in the future.

Fortunately, several steps can be taken to mitigate these risks on an individual and institutional level:

(1) Increasing the awareness of privacy and security risks, informed consent and cybersecurity through (self)education.

(2) Transparent regulations of data privacy, data re-purposing.

(3) De-centralized approaches such as federated learning. Federated learning is an ML setting where many de-centralized clients collaboratively train a model under the arrangement of a central server, keeping the data in several individual locations (100). Some researchers might be hesitant to use federated learning because of the potential disclosure of the model. However, the data is never exposed to third parties, not even to the data scientist.

(4) Ongoing cybersecurity research into novel, more secure algorithms.
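The federated learning setting in item (3) can be sketched as follows. This is a deliberately small illustration under stated assumptions: the "model" is a single weight w in y ≈ w · x, each site runs a few steps of gradient descent on its own data, and the server combines the results by sample-size-weighted averaging (the scheme usually called federated averaging). The site data, learning rate and function names are hypothetical. The key property is visible in the code: only the weight travels between the sites and the server, never the data.

```python
def local_update(w, data, lr=0.01, epochs=5):
    """Gradient descent on mean squared error for y ~ w * x, using one site's data only."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

def federated_average(site_data, rounds=20, w0=0.0):
    """Server loop: broadcast w, collect locally updated weights, average by sample count."""
    w = w0
    total = sum(len(d) for d in site_data)
    for _ in range(rounds):
        local_ws = [local_update(w, d) for d in site_data]  # runs at each site
        w = sum(lw * len(d) for lw, d in zip(local_ws, site_data)) / total
    return w

# Three hypothetical sites whose data follow y = 3 * x over different input ranges.
site_data = [
    [(x, 3.0 * x) for x in (1.0, 2.0, 3.0, 4.0)],
    [(x, 3.0 * x) for x in (2.0, 3.0, 4.0, 5.0, 6.0)],
    [(x, 3.0 * x) for x in (0.5, 1.5)],
]
w = federated_average(site_data)
print(round(w, 6))
```

Real deployments add secure communication and, often, further privacy protections on the shared weights; none of that is shown here.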

Transparency in AI is a broad term; it refers to the information about the dataset, processes, uses, and outputs that is a prerequisite for accountability. AI transparency within medicine aims to provide all stakeholders with enough information to join in the discussion in a meaningful way. Two universal requirements guide and promote AI transparency:

(1) Data transparency includes transparent methods and guidelines for data collection, utilization, storage, sharing, and documentation.

(2) Model transparency means we have enough knowledge/information about a model's internal properties to apprehend its output meaningfully.

The goal of traceability is to document the entire development process and to monitor the behaviour and functioning of an AI model or system over time. This approach allows tracking any drift from the original training settings. Because clinical practice constantly evolves (images provide greater granularity, or novel guidelines emerge), keeping track of the model performance and adapting it to the new circumstances is critical (101). Two main concepts driving a decrease in model performance over time are “concept drift” and “data drift”. Concept drift means that some underlying characteristics of variables change (for example, a novel type of cardiomyopathy is distinguished, creating a new class for a classification algorithm), which decreases the accuracy of the model. Data or dataset drift refers to a change in the data, meaning that a difference in the scanning device or image acquisition may directly affect the deployed prediction model (12).
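One simple way to monitor for data drift is to compare the distribution of an input summary statistic at deployment with its distribution in the training data. The sketch below does this with the two-sample Kolmogorov-Smirnov statistic, the largest gap between two empirical cumulative distribution functions. The choice of this statistic, the feature (mean image intensity), the numbers and the alert threshold of 0.3 are all assumptions made for illustration.

```python
import bisect

def ks_statistic(sample_a, sample_b):
    """Two-sample Kolmogorov-Smirnov statistic: maximum gap between empirical CDFs."""
    a, b = sorted(sample_a), sorted(sample_b)
    d = 0.0
    for v in sorted(set(a) | set(b)):
        cdf_a = bisect.bisect_right(a, v) / len(a)
        cdf_b = bisect.bisect_right(b, v) / len(b)
        d = max(d, abs(cdf_a - cdf_b))
    return d

def drift_alert(reference, incoming, threshold=0.3):
    """Flag drift when the distributions differ by more than a hypothetical threshold."""
    return ks_statistic(reference, incoming) > threshold

# Hypothetical mean image intensities per scan.
training_scans = [100.0 + i for i in range(20)]      # scanner used for training
same_scanner = [100.5 + i for i in range(20)]        # new scans, same scanner
new_scanner = [110.0 + i for i in range(20)]         # new scans, different scanner

print(ks_statistic(training_scans, same_scanner), drift_alert(training_scans, same_scanner))
print(ks_statistic(training_scans, new_scanner), drift_alert(training_scans, new_scanner))
```

A check of this kind detects changes in the inputs only; concept drift additionally requires monitoring model performance against newly labeled cases.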

Standardized dataset documentation methods can facilitate ML results' transparency, accountability and repeatability. Recently, Gebru et al. posed a list of questions on how and why data was collected, what is the composition of the data, and how it was curated and labeled in their document entitled “Datasheets for Datasets” (102). Sendak et al. proposed the use of “Model facts cards” for each ML model to ensure that clinicians have a thorough understanding of “how, when, how not, and when not to” incorporate the output into their decisions (103).

In terms of an AI system, explainability means that it is possible to comprehend how the output was reached. The greater the explainability of a model, the better we can understand the internal mechanisms of a decision-making tool. However, as Arbelaez Ossa et al. (104) point out, the key issue in AI explainability is the lack of consensus among data scientists, regulators, and healthcare professionals regarding the definition and requirements.

Notably, explainability is not necessary for all ML models. Simple rule-based models applying linear regression or decision trees are inherently explainable. If we can calculate how a given parameter is weighted within the model, it is unnecessary to push the limits of explainability further.

From a strictly clinical end-user perspective, it is also not necessary to understand all steps involved in a complex DL network if the output is readily accessible and visually verifiable by a physician, such as segmentation. On the other hand, if the algorithm promises to deliver a clinical diagnosis or prognostic information based on imaging data or a combination of imaging features, the clinical application needs to reach high levels of intelligibility. A clinician who does not understand how the algorithm reached its conclusion will likely rely on their own expertise rather than an opaque output.

To deal with the “black box” nature of DL methods in particular, several post-hoc explainability algorithms were defined to create more interpretable models. The so-called saliency maps or heat maps are the most widely adopted explainability tools in medical imaging. These color-coded maps show the contribution of each image region to a given model prediction (105, 106). Several distinct approaches can be utilized, such as Gradient-weighted Class Activation Mapping (Grad-CAM) (105) or Dense Captioning (DenseCap) (107), to capture the most crucial image areas. Saliency maps have long been applied in image analysis to better understand the key areas supporting the model's decision. As an example, Candemir et al. (108) trained a 3-dimensional convolutional neural network (CNN) to differentiate between coronary arteries with and without atherosclerosis and showed the essential features learned by the system on color-coded maps. Saliency maps can also suggest whether an algorithm picks up temporal data: Howard et al. (109) applied time-distributed CNN models with saliency maps for disease classification based on echocardiography images. The authors found that these new architectures more than halve the error rate of traditional CNNs, possibly because of the networks' ability to track the movement of specific structures such as heart valves throughout the cardiac cycle.
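The idea behind a saliency map can be shown with occlusion sensitivity, a technique swapped in here because it is simpler than Grad-CAM and needs no access to network gradients: each image patch is masked in turn, and the resulting drop in the model output is recorded as that patch's importance. In the sketch below the "model" is a hypothetical stand-in function that only looks at the top-left quadrant of a 4 x 4 image; all names and values are illustrative assumptions.

```python
def occlusion_saliency(predict, image, patch=2, fill=0.0):
    """Map of the drop in model output when each patch is masked (larger = more important)."""
    h, w = len(image), len(image[0])
    base = predict(image)
    saliency = [[0.0] * w for _ in range(h)]
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            occluded = [row[:] for row in image]  # copy so the input stays untouched
            rows = range(top, min(top + patch, h))
            cols = range(left, min(left + patch, w))
            for r in rows:
                for c in cols:
                    occluded[r][c] = fill
            drop = base - predict(occluded)
            for r in rows:
                for c in cols:
                    saliency[r][c] = drop
    return saliency

def toy_model(image):
    """Hypothetical stand-in for a trained network: sums the top-left 2x2 region."""
    return sum(image[r][c] for r in range(2) for c in range(2))

image = [[1.0] * 4 for _ in range(4)]
saliency = occlusion_saliency(toy_model, image)
for row in saliency:
    print(row)
```

The resulting map is non-zero only over the top-left quadrant, which is exactly the region the toy model uses; for a real network the same map would be rendered as a color-coded overlay.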

Local Interpretable Model-Agnostic Explanations (LIME) is used to explain the model locally for a single subject (110). LIME estimates each variable's contribution to that individual prediction by fitting a simple, interpretable surrogate model around it. SHapley Additive exPlanations (SHAP) is a model-agnostic explainability method, meaning it can be used to interpret any model (111). SHAP is based on game theory and can reveal each predictor's effect on the outcome. It calculates a score for each feature in the model, showing the size and direction of the feature's effect on the outcome. Al'Aref et al. (112) applied a boosted ensemble algorithm (XGBoost) to the participants of the CONFIRM registry and showed that incorporating clinical features (e.g., age, sex, cardiovascular risk factors, laboratory values, and symptoms) in addition to coronary artery calcium score can accurately estimate the pretest likelihood of obstructive coronary artery disease on CCTA. Using the SHAP method, they could supply the 20 most crucial features supporting the model's prediction. Similarly, Fahmy et al. (113) applied SHAP to support the interpretation of their model examining the association between CMR metrics and adverse outcomes (cardiovascular hospitalization and all-cause death) in patients with dilated cardiomyopathy. Many other explainability techniques are also available, and new tools are likely to become more sophisticated and model-specific.
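The game-theoretic score underlying SHAP can be sketched by computing exact Shapley values for a tiny model. This is a brute-force illustration under stated assumptions, not the SHAP library's implementation, which uses faster approximations and model-specific algorithms. The `toy_risk` model, the patient, and the reference (baseline) values are hypothetical.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, instance, baseline):
    """Exact Shapley values; features outside a coalition take their baseline value."""
    names = list(instance)
    n = len(names)

    def value(coalition):
        mixed = {k: (instance[k] if k in coalition else baseline[k]) for k in names}
        return model(mixed)

    phi = {}
    for name in names:
        others = [k for k in names if k != name]
        total = 0.0
        for size in range(n):
            # Weight of a coalition of this size in the Shapley average.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                members = set(subset)
                total += weight * (value(members | {name}) - value(members))
        phi[name] = total
    return phi


def toy_risk(x):
    """Hypothetical risk score with an age-by-diabetes interaction term."""
    return 0.02 * x["age"] + 0.5 * x["diabetes"] + 0.01 * x["age"] * x["diabetes"]


patient = {"age": 70, "diabetes": 1}
reference = {"age": 50, "diabetes": 0}
phi = shapley_values(toy_risk, patient, reference)

for name, score in phi.items():
    print(f"{name}: {score:+.2f}")
```

The scores sum to the difference between the patient's prediction and the reference prediction, so each value gives both the size and the direction of a feature's effect. Exact enumeration grows exponentially with the number of features, which is why practical tools rely on approximations.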

Although these methods can improve model interpretation, understanding them requires additional effort from physicians. We are yet to see whether their outputs can become acceptable to the community and whether they can overcome current limitations. Critics of current explainability methods warn that the performance of the explanations is not routinely quantified, and we can rarely elucidate whether a given decision was sensible or not (114). Moreover, explanations might reduce the complexity of a model to a level that is not representative and promote a false sense of security among users. Ghassemi et al. note that with the currently available methods, our best hope is to go through rigorous internal and external validation and use explainability models for troubleshooting and system audits (114).

Accountability refers to the state of being responsible. However, in the context of AI, where algorithms are based on both ML and human ingenuity, the mistakes or errors of the application come from humans developing or using machines (95, 115, 116). Moreover, it is not yet clearly defined or regulated with whom the responsibility for AI-powered medical tools lies. Does liability fall on developers, chief executive officers of the developing company, leaders of the healthcare institution buying and authorizing clinical utilization, or the doctors using them? Notably, it is sometimes also hard to pinpoint why an AI-related medical error happened (95); therefore, responsibility issues can lead to daunting detective work, diverting attention from actual patient care. The main proposed measures to mitigate accountability issues within AI are: (1) defining the roles and responsibilities of developers and users, (2) putting a regulatory framework for accountability in place, and (3) establishing dedicated regulatory agencies to monitor AI use.

Even if an AI tool complies with all of the criteria mentioned earlier, integrating a new tool into clinical practice involves several expected and unexpected difficulties. The main obstacles to clinical implementation stem from three primary sources: (1) differences among healthcare institutions regarding equipment, staffing, location, financial possibilities, and internal structures, (2) changes in the physician-patient relationship, and (3) difficulties of clinical and technical integration into existing workflows (95, 117).

Medical data, in general, is very noisy and requires human oversight before integration. Cardiovascular imaging data is slightly more structured than clinical records but still lacks interoperability to a great extent (118). Several initiatives already aim at increasing interoperability among healthcare providers [e.g., European Commission (119), Health Data Research UK (120)]. However, it seems fairly evident that medical AI tools will have to adapt to a certain level of data heterogeneity. The physician-patient relationship has been transformed by technical advances and the maturity of social sciences, but it is yet uncertain how AI tools will impact this relationship. Some argue that it will help by easing clinician workload and providing more personalized data for shared decision-making, while others question doctors' role once critical tasks are delegated to sophisticated algorithms (121). Clinical guidelines will need to be updated to consider the potential role of AI tools between healthcare workers and patients (95, 122). Moreover, these guidelines will also need to be updated to integrate novel tools into the clinical workflow without severe disruption of care (123).

Trustworthy AI is not an obscure concept reserved for technical specialists and scholars of ethical realms (124), but rather a practical set of steps and questions, which, when implemented, can provide us with reliable tools for a new era in healthcare. In an effort to improve the overall quality of AI prediction models, van Smeden et al. presented 12 critical questions for cardiovascular health professionals to ask (125). Moreover, Sengupta et al. (126) suggested the use of the Proposed Requirements for Cardiovascular Imaging-Related Machine Learning Evaluation (PRIME) checklist, a framework that contains a comprehensive list of crucial responsibilities that need to be completed when developing ML models. Here we report key questions to promote discussion of AI trustworthiness between clinicians and technical experts (Table 2). We also summarize how these principles fit into the ML lifecycle (Figure 1).

We have to acknowledge that in some instances medical research, and consequently medical AI research, is plainly inaccurate, but we can rectify these mistakes over time. AI competitions provide an excellent platform for robust validation or rebuttal of results. One example is a recent competition to predict O(6)-Methylguanine-DNA-methyltransferase (MGMT) promoter methylation from brain magnetic resonance imaging (MRI) scans (127). Overall, 1,555 teams comprising many thousands of researchers worked on a large dataset of MRI scans, and the results clearly demonstrate that this task is not possible with current approaches, even though several groups had previously claimed to have achieved ROC scores of up to 0.85 (128–130). This suggests that well-designed competitions provide an excellent opportunity to improve the quality of AI research.
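For readers less familiar with the ROC scores mentioned above, the area under the ROC curve (AUC) equals the probability that a randomly chosen positive case receives a higher model score than a randomly chosen negative case, so 0.5 corresponds to chance. A minimal sketch of that pairwise definition follows; the labels and scores are made-up values for illustration and are unrelated to the competition data.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of positive/negative pairs ranked correctly (ties count half)."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in positives:
        for q in negatives:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


labels = [1, 1, 1, 0, 0, 0]
informative = [0.9, 0.8, 0.4, 0.5, 0.2, 0.1]    # mostly separates the two classes
uninformative = [0.5, 0.5, 0.5, 0.5, 0.5, 0.5]  # same score for every case

print(round(roc_auc(labels, informative), 3))
print(roc_auc(labels, uninformative))
```

An apparently strong score on a small or non-independent test set can shrink toward 0.5 when the same model is evaluated on held-out data, which is one reason independent validation in a competition setting is informative.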

In order to promote the safe adoption of AI-powered tools in cardiovascular imaging, practicing doctors and future medical professionals need to be properly trained in the technical aspects, potential risks and limitations of the technology (131). McCoy et al. (132) and Grunhut et al. (133) proposed crucial points to improve AI literacy in medical education programs. Furthermore, the involvement and education of the general public are also essential for the broader adoption of these emerging tools.

Embracing the human-in-the-loop principle may offer further benefits where both imagers and ML algorithms fall short (134). It means that we can benefit from the advantages of AI models (e.g., automated segmentation or diagnosis) while having a human at various stages or checkpoints to correct potential errors or apply critical thinking where algorithms are not confident in their results. The human can validate or correct the results where the algorithm delivers lower-confidence outputs, creating a combined and better decision.
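One simple way to picture such a checkpoint is confidence-based triage: outputs above a chosen confidence threshold are accepted automatically, and the rest are queued for a clinician. The sketch below is illustrative only; the `Prediction` structure, the case identifiers, and the 0.9 threshold are assumptions, and in practice a threshold would need to be calibrated and validated for the specific tool.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    case_id: str
    label: str
    confidence: float  # model-reported confidence in [0, 1]


def triage(predictions, threshold=0.9):
    """Split predictions into an auto-accepted list and a human-review list."""
    if not 0.0 <= threshold <= 1.0:
        raise ValueError("threshold must lie in [0, 1]")
    accepted, review = [], []
    for prediction in predictions:
        if prediction.confidence >= threshold:
            accepted.append(prediction)
        else:
            review.append(prediction)
    return accepted, review


cases = [
    Prediction("scan-001", "normal", 0.97),
    Prediction("scan-002", "dilated cardiomyopathy", 0.62),
    Prediction("scan-003", "normal", 0.91),
]
accepted, review = triage(cases, threshold=0.9)

print("auto-accepted:", [p.case_id for p in accepted])
print("needs human review:", [p.case_id for p in review])
```

A lower threshold sends fewer cases to the clinician at the cost of accepting less certain outputs, so the choice reflects a trade-off between workload and safety.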

In essence, it does not matter if we call it trustworthy AI, reliable AI or responsible AI—the driving idea is to create an inclusive, collaborative effort in healthcare between all stakeholders. Our task is to consider the possible impact and test our AI tool and all elements of the AI development by posing the right questions relevant to our desired aims.

ZR-E, KL, SEP, and LS contributed to the conceptualization of this review. LS wrote the original draft and produced the table and figure. ZR-E, SEP, PM-H, HV, BM, and AML reviewed the manuscript from a clinical perspective. AS, CM, ER, PG, MK, AML, KL, and SEP reviewed the manuscript from a technical perspective. All authors contributed to the article and approved the submitted version.

LS received funding from the European Association of Cardiovascular Imaging (EACVI Research Grant App000076437). ZR-E recognizes the National Institute for Health Research (NIHR) Integrated Academic Training Programme which supports her Academic Clinical Lectureship post and was also supported by British Heart Foundation Clinical Research Training Fellowship Grant No. FS/17/81/33318. AS was supported by British Heart Foundation project grant (PG/21/10619). CM was supported by the Oxford NIHR Biomedical Research Center. SEP acknowledges support from the SmartHeart EPSRC programme grant (www.nihr.ac.uk; EP/P001009/1) and also from the CAP-AI programme. CAP-AI is led by Capital Enterprise in partnership with Barts Health NHS Trust and Digital Catapult and was funded by the European Regional Development Fund and Barts Charity. SEP, PG, and KL have received funding from the European Union's Horizon 2020 research and innovation programme under grant agreement no. 825903 (euCanSHare project). HV, LS, and BM acknowledge funding from the Technology of Hungary from the National Research, Development, and Innovation Fund, financed under the TKP2021-NKTA funding scheme and Project No. RRF-2.3.1-21-2022-00004 (MILAB) which has been implemented with the support provided by the European Union. AML acknowledges support from the SmartHeart EPSRC programme grant (www.nihr.ac.uk; EP/P001009/1) and from the UKRI London Medical Imaging and Artificial Intelligence Centre for Value Based Healthcare. This article is supported by the London Medical Imaging and Artificial Intelligence Centre for Value Based Healthcare (AI4VBH), which is funded from the Data to Early Diagnosis and Precision Medicine strand of the government's Industrial Strategy Challenge Fund, managed and delivered by Innovate UK on behalf of UK Research and Innovation (UKRI). ER was partly funded from the programme under grant agreement no. 825903 (euCanSHare project) and grant agreement no. 965345 (HealthyCloud project). The funders provided support in the form of salaries for authors as detailed above but did not have any additional role in the study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Views expressed are those of the authors and not necessarily those of the AI4VBH Consortium members, the NHS, Innovate UK, or UKRI.

Author SEP provides consultancy to Cardiovascular Imaging Inc, Calgary, Alberta, Canada. Author MK was employed by Siemens Healthcare Hungary.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

1. Quer G, Arnaout R, Henne M, Arnaout R. Machine learning and the future of cardiovascular care. J Am Coll Cardiol. (2021) 77:300–13. doi: 10.1016/j.jacc.2020.11.030

2. Davenport T, Kalakota R. The potential for artificial intelligence in healthcare. Future Healthc J. (2019) 6:94–8. doi: 10.7861/futurehosp.6-2-94

3. Floridi L, Cowls J, Beltrametti M, Chatila R, Chazerand P, Dignum V, et al. AI4People—an ethical framework for a good AI society: opportunities, risks, principles, and recommendations. Minds Mach. (2018) 28:689–707. doi: 10.1007/s11023-018-9482-5

4. Rajakariar K, Koshy AN, Sajeev JK, Nair S, Roberts L, Teh AW. Accuracy of a smartwatch based single-lead electrocardiogram device in detection of atrial fibrillation. Heart. (2020) 106:665–70. doi: 10.1136/heartjnl-2019-316004

5. Bai W, Sinclair M, Tarroni G, Oktay O, Rajchl M, Vaillant G, et al. Automated cardiovascular magnetic resonance image analysis with fully convolutional networks. J Cardiovasc Magn Reson. (2018) 20:65. doi: 10.1186/s12968-018-0471-x

6. Davies RH, Augusto JB, Bhuva A, Xue H, Treibel TA, Ye Y, et al. Precision measurement of cardiac structure and function in cardiovascular magnetic resonance using machine learning. J Cardiovasc Magn Reson. (2022) 24:16. doi: 10.1186/s12968-022-00846-4

7. AI Central. ACR Data Science Institution AI Central. AI Central (2022). Available online at: https://aicentral.acrdsi.org/ (accessed July 3, 2022).

8. Ammanath B. Trustworthy AI: A Business Guide for Navigating Trust and Ethics in AI. Hoboken, NJ: John Wiley and Sons, Incorporated (2022).

9. High-Level Expert Group on Artificial Intelligence. Ethics Guidelines for Trustworthy AI. High-Level Expert Group on Artificial Intelligence (2019). Available online at: https://ec.europa.eu/futurium/en/ai-alliance-consultation.1.html (accessed July 4, 2022).

10. Buruk B, Ekmekci PE, Arda B. A critical perspective on guidelines for responsible and trustworthy artificial intelligence. Med Health Care Philos. (2020) 23:387–99. doi: 10.1007/s11019-020-09948-1

11. Geis JR, Brady AP, Wu CC, Spencer J, Ranschaert E, Jaremko JL, et al. Ethics of artificial intelligence in radiology: summary of the joint European and north American multisociety statement. Radiology. (2019) 293:436–40. doi: 10.1148/radiol.2019191586

12. Lekadir K, Osuala R, Gallin C, Lazrak N, Kushibar K, Tsakou G, et al. FUTURE-AI: guiding principles and consensus recommendations for trustworthy artificial intelligence in medical imaging. arXiv. (2021). Available online at: http://arxiv.org/abs/2109.09658 (accessed June 21, 2022).

13. Krittanawong C, Zhang H, Wang Z, Aydar M, Kitai T. Artificial intelligence in precision cardiovascular medicine. J Am Coll Cardiol. (2017) 69:2657–64. doi: 10.1016/j.jacc.2017.03.571

14. Dey D, Slomka PJ, Leeson P, Comaniciu D, Shrestha S, Sengupta PP, et al. Artificial intelligence in cardiovascular imaging. J Am Coll Cardiol. (2019) 73:1317–35. doi: 10.1016/j.jacc.2018.12.054

15. Leiner T, Rueckert D, Suinesiaputra A, Baeßler B, Nezafat R, Išgum I, et al. Machine learning in cardiovascular magnetic resonance: basic concepts and applications. J Cardiovasc Magn Reson. (2019) 21:61. doi: 10.1186/s12968-019-0575-y

16. Martin-Isla C, Campello VM, Izquierdo C, Raisi-Estabragh Z, Baeßler B, Petersen SE, et al. Image-Based cardiac diagnosis with machine learning: a review. Front Cardiovasc Med. (2020) 7:1. doi: 10.3389/fcvm.2020.00001

18. Al'Aref SJ, Singh G, Baskaran L, Metaxas DN, editors. Machine Learning in Cardiovascular Medicine. London: Academic Press (2021). 424 p.

19. Alice CY, Mohajer B, Eng J. External validation of deep learning algorithms for radiologic diagnosis: a systematic review. Radiol Artif Intell. (2022) 4:e210064. doi: 10.1148/ryai.210064

20. Park SH, Han K. Methodologic guide for evaluating clinical performance and effect of artificial intelligence technology for medical diagnosis and prediction. Radiology. (2018) 286:800–9. doi: 10.1148/radiol.2017171920

21. Ryan M. In AI we trust: ethics, artificial intelligence, and reliability. Sci Eng Ethics. (2020) 26:2749–67. doi: 10.1007/s11948-020-00228-y

22. Lewis PR, Marsh S. What is it like to trust a rock? A functionalist perspective on trust and trustworthiness in artificial intelligence. Cogn Syst Res. (2022) 72:33–49. doi: 10.1016/j.cogsys.2021.11.001

23. Feigenbaum H. Evolution of echocardiography. Circulation. (1996) 93:1321–7. doi: 10.1161/01.CIR.93.7.1321

24. Lang RM, Badano LP, Mor-Avi V, Afilalo J, Armstrong A, Ernande L, et al. Recommendations for cardiac chamber quantification by echocardiography in adults: an update from the American society of echocardiography and the european association of cardiovascular imaging. J Am Soc Echocardiogr. (2015) 28:1–39.e14. doi: 10.1016/j.echo.2014.10.003

25. Gudmundsson P, Rydberg E, Winter R, Willenheimer R. Visually estimated left ventricular ejection fraction by echocardiography is closely correlated with formal quantitative methods. Int J Cardiol. (2005) 101:209–12. doi: 10.1016/j.ijcard.2004.03.027

26. Asch FM, Poilvert N, Abraham T, Jankowski M, Cleve J, Adams M, et al. Automated echocardiographic quantification of left ventricular ejection fraction without volume measurements using a machine learning algorithm mimicking a human expert. Circ Cardiovasc Imaging. (2019) 12:e009303. doi: 10.1161/CIRCIMAGING.119.009303

27. Wynants L, Van Calster B, Collins GS, Riley RD, Heinze G, Schuit E, et al. Prediction models for diagnosis and prognosis of covid-19: systematic review and critical appraisal. BMJ. (2020) 369:m1328. doi: 10.1136/bmj.m1328

28. von Borzyskowski I, Mazumder A, Mateen B, Wooldridge M, editors. Data Science and AI in the Age of COVID-19. (2021). Available online at: https://www.turing.ac.uk/sites/default/files/2021-06/data-science-and-ai-in-the-age-of-covid_full-report_2.pdf (accessed July 4, 2022).

29. Leslie D, Mazumder A, Peppin A, Wolters MK, Hagerty A. Does “AI” stand for augmenting inequality in the era of covid-19 healthcare? BMJ. (2021) 372:n304. doi: 10.1136/bmj.n304

30. Wolterink JM, Leiner T, Viergever MA, Išgum I. Generative adversarial networks for noise reduction in low-dose CT. IEEE Trans Med Imaging. (2017) 36:2536–45. doi: 10.1109/TMI.2017.2708987

31. Oksuz I, Ruijsink B, Puyol-Antón E, Clough JR, Cruz G, Bustin A, et al. Automatic CNN-based detection of cardiac MR motion artefacts using k-space data augmentation and curriculum learning. Med Image Anal. (2019) 55:136–47. doi: 10.1016/j.media.2019.04.009

32. Benz DC, Ersözlü S, Mojon FLA, Messerli M, Mitulla AK, Ciancone D, et al. Radiation dose reduction with deep-learning image reconstruction for coronary computed tomography angiography. Eur Radiol. (2022) 32:2620–8. doi: 10.1007/s00330-021-08367-x

33. Caballero J, Price AN, Rueckert D, Hajnal JV. Dictionary learning and time sparsity for dynamic MR data reconstruction. IEEE Trans Med Imaging. (2014) 33:979–94. doi: 10.1109/TMI.2014.2301271

34. Zhang J, Gajjala S, Agrawal P, Tison GH, Hallock LA, Beussink-Nelson L, et al. Fully automated echocardiogram interpretation in clinical practice: feasibility and diagnostic accuracy. Circulation. (2018) 138:1623–35. doi: 10.1161/CIRCULATIONAHA.118.034338

35. Edalati M, Zheng Y, Watkins MP, Chen J, Liu L, Zhang S, et al. Implementation and prospective clinical validation of AI-based planning and shimming techniques in cardiac MRI. Med Phys. (2022) 49:129–43. doi: 10.1002/mp.15327

36. Leclerc S, Smistad E, Pedrosa J, Østvik A, Cervenansky F, Espinosa F, Espeland T, et al. Deep learning for segmentation using an open large-scale dataset in 2D echocardiography. IEEE Trans Med Imaging. (2019) 38:2198–210. doi: 10.1109/TMI.2019.2900516

37. Huang W, Huang L, Lin Z, Huang S, Chi Y, Zhou J, et al. Coronary artery segmentation by deep learning neural networks on computed tomographic coronary angiographic images. In: 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC). Honolulu, HI: IEEE (2018). p. 608–11. doi: 10.1109/EMBC.2018.8512328

38. Sengupta PP, Huang Y-M, Bansal M, Ashrafi A, Fisher M, Shameer K, et al. Cognitive machine-learning algorithm for cardiac imaging: a pilot study for differentiating constrictive pericarditis from restrictive cardiomyopathy. Circ Cardiovasc Imaging. (2016) 9:e004330. doi: 10.1161/CIRCIMAGING.115.004330

39. de Vos BD, Wolterink JM, Leiner T, de Jong PA, Lessmann N, Išgum I. Direct automatic coronary calcium scoring in cardiac and chest CT. IEEE Trans Med Imaging. (2019) 38:2127–38. doi: 10.1109/TMI.2019.2899534

40. Zhang N, Yang G, Gao Z, Xu C, Zhang Y, Shi R, et al. Deep learning for diagnosis of chronic myocardial infarction on nonenhanced cardiac cine MRI. Radiology. (2019) 291:606–17. doi: 10.1148/radiol.2019182304

41. Samad MD, Ulloa A, Wehner GJ, Jing L, Hartzel D, Good CW, et al. Predicting survival from large echocardiography and electronic health record datasets: optimization with machine learning. JACC Cardiovasc Imaging. (2019) 12:681–9. doi: 10.1016/j.jcmg.2018.04.026

42. Patel MR, Nørgaard BL, Fairbairn TA, Nieman K, Akasaka T, Berman DS, et al. 1-Year impact on medical practice and clinical outcomes of FFRCT. JACC Cardiovasc Imaging. (2020) 13:97–105. doi: 10.1016/j.jcmg.2019.03.003

43. Cheng S, Fang M, Cui C, Chen X, Yin G, Prasad SK, et al. LGE-CMR-derived texture features reflect poor prognosis in hypertrophic cardiomyopathy patients with systolic dysfunction: preliminary results. Eur Radiol. (2018) 28:4615–24. doi: 10.1007/s00330-018-5391-5

44. Tokodi M, Schwertner WR, Kovács A, Tosér Z, Staub L, Sárkány A, et al. Machine learning-based mortality prediction of patients undergoing cardiac resynchronization therapy: the SEMMELWEIS-CRT score. Eur Heart J. (2020) 41:1747–56. doi: 10.1093/eurheartj/ehz902

45. Queirós S, Dubois C, Morais P, Adriaenssens T, Fonseca JC, Vilaça JL, et al. Automatic 3D aortic annulus sizing by computed tomography in the planning of transcatheter aortic valve implantation. J Cardiovasc Comput Tomogr. (2017) 11:25–32. doi: 10.1016/j.jcct.2016.12.004

46. Casaclang-Verzosa G, Shrestha S, Khalil MJ, Cho JS, Tokodi M, Balla S, et al. Network tomography for understanding phenotypic presentations in aortic stenosis. JACC Cardiovasc Imaging. (2019) 12:236–48. doi: 10.1016/j.jcmg.2018.11.025

47. Hoshino M, Zhang J, Sugiyama T, Yang S, Kanaji Y, Hamaya R, et al. Prognostic value of pericoronary inflammation and unsupervised machine-learning-defined phenotypic clustering of CT angiographic findings. Int J Cardiol. (2021) 333:226–32. doi: 10.1016/j.ijcard.2021.03.019

48. Zheng Q, Delingette H, Fung K, Petersen SE, Ayache N. Pathological cluster identification by unsupervised analysis in 3,822 UK biobank cardiac MRIs. Front Cardiovasc Med. (2020) 7:539788. doi: 10.3389/fcvm.2020.539788

49. Liao J, Huang L, Qu M, Chen B, Wang G. Artificial intelligence in coronary CT angiography: current status and future prospects. Front Cardiovasc Med. (2022) 9:896366. doi: 10.3389/fcvm.2022.896366

50. Achenbach S, Fuchs F, Goncalves A, Kaiser-Albers C, Ali ZA, Bengel FM, et al. Non-invasive imaging as the cornerstone of cardiovascular precision medicine. Eur Heart J Cardiovasc Imaging. (2022) 23:465–75. doi: 10.1093/ehjci/jeab287

51. Krizhevsky A, Sutskever I, Hinton GE. ImageNet classification with deep convolutional neural networks. Commun ACM. (2017) 60:84–90. doi: 10.1145/3065386

52. Obermeyer Z, Emanuel EJ. Predicting the future—big data, machine learning, and clinical medicine. N Engl J Med. (2016) 375:1216. doi: 10.1056/NEJMp1606181

53. Langlotz CP. Will artificial intelligence replace radiologists? Radiol Artif Intell. (2019) 1:e190058. doi: 10.1148/ryai.2019190058

54. The Royal College of Radiologists. Clinical Radiology Census Report 2021. The Royal College of Radiologists (2021). Available online at: https://www.rcr.ac.uk/clinical-radiology/rcr-clinical-radiology-census-report-2021 (accessed July 31, 2022).

55. Lekadir K, Osuala R, Gallin C, Lazrak N, Kushibar K, Tsakou G, et al. FUTURE-AI: guiding principles and consensus recommendations for trustworthy artificial intelligence in medical imaging. arXiv [Preprint]. (2021). arXiv: 2109.09658. Available online at: http://arxiv.org/abs/2109.09658 (accessed June 21, 2022).

56. ISO/IEC TR 24029-1:2021. Artificial Intelligence (AI)—Assessment of the Robustness of Neural Networks. ISO/IEC TR 24029-1:2021 (2021). Available online at: https://www.iso.org/standard/77609.html (accessed June 12, 2022).

57. Antun V, Renna F, Poon C, Adcock B, Hansen AC. On instabilities of deep learning in image reconstruction - does AI come at a cost? Proc Natl Acad Sci USA. (2020) 117:30088–95. doi: 10.1073/pnas.1907377117

58. Galati F, Ourselin S, Zuluaga MA. From accuracy to reliability and robustness in cardiac magnetic resonance image segmentation: a review. Appl Sci. (2022) 12:3936. doi: 10.3390/app12083936

59. Petersen SE, Matthews PM, Francis JM, Robson MD, Zemrak F, Boubertakh R, et al. UK biobank's cardiovascular magnetic resonance protocol. J Cardiovasc Magn Reson. (2015) 18:8. doi: 10.1186/s12968-016-0227-4

60. Bamberg F, Kauczor H-U, Weckbach S, Schlett CL, Forsting M, Ladd SC, et al. Whole-Body MR imaging in the German national cohort: rationale, design, and technical background. Radiology. (2015) 277:206–20. doi: 10.1148/radiol.2015142272

61. Isensee F, Jaeger P, Full PM, Wolf I, Engelhardt S, Maier-Hein KH. Automatic Cardiac Disease Assessment on Cine-MRI via Time-Series Segmentation and Domain Specific Features. Cham: Springer (2018). doi: 10.1007/978-3-319-75541-0_13

62. Budai A, Suhai FI, Csorba K, Toth A, Szabo L, Vago H, et al. Fully automatic segmentation of right and left ventricle on short-axis cardiac MRI images. Comput Med Imaging Graph. (2020) 85:101786. doi: 10.1016/j.compmedimag.2020.101786

63. Campello VM, Gkontra P, Izquierdo C, Martin-Isla C, Sojoudi A, Full PM, et al. Multi-Centre, multi-vendor and multi-disease cardiac segmentation: the M&Ms challenge. IEEE Trans Med Imaging. (2021) 40:3543–54. doi: 10.1109/TMI.2021.3090082

64. Society for Cardiovascular Magnetic Resonance. About the SCMR Registry. Society for Cardiovascular Magnetic Resonance. Available online at: https://scmr.org/page/Registry (accessed July 29, 2022).

65. Cardiac Atlas Project. Available online at: http://www.cardiacatlas.org/ (accessed July 29, 2022).

66. Maurovich-Horvat P, Ferencik M, Voros S, Merkely B, Hoffmann U. Comprehensive plaque assessment by coronary CT angiography. Nat Rev Cardiol. (2014) 11:390–402. doi: 10.1038/nrcardio.2014.60

67. Lin A, Kolossváry M, Motwani M, Išgum I, Maurovich-Horvat P, Slomka PJ, et al. Artificial intelligence in cardiovascular imaging for risk stratification in coronary artery disease. Radiol Cardiothorac Imaging. (2021) 3:e200512. doi: 10.1148/ryct.2021200512

68. Lin A, Manral N, McElhinney P, Killekar A, Matsumoto H, Kwiecinski J, et al. Deep learning-enabled coronary CT angiography for plaque and stenosis quantification and cardiac risk prediction: an international multicentre study. Lancet Digit Health. (2022) 4:e256–65. doi: 10.1016/S2589-7500(22)00022-X

69. Robinson R, Valindria VV, Bai W, Oktay O, Kainz B, Suzuki H, et al. Automated quality control in image segmentation: application to the UK biobank cardiovascular magnetic resonance imaging study. J Cardiovasc Magn Reson. (2019) 21:18. doi: 10.1186/s12968-019-0523-x

70. Hann E, Popescu IA, Zhang Q, Gonzales RA, Barutçu A, Neubauer S, et al. Deep neural network ensemble for on-the-fly quality control-driven segmentation of cardiac MRI T1 mapping. Med Image Anal. (2021) 71:102029. doi: 10.1016/j.media.2021.102029

71. Biasiolli L, Hann E, Lukaschuk E, Carapella V, Paiva JM, Aung N, et al. Automated localization and quality control of the aorta in cine CMR can significantly accelerate processing of the UK Biobank population data. PLoS ONE. (2019) 14:e0212272. doi: 10.1371/journal.pone.0212272

72. Bard A, Raisi-Estabragh Z, Ardissino M, Lee AM, Pugliese F, Dey D, et al. Automated quality-controlled cardiovascular magnetic resonance pericardial fat quantification using a convolutional neural network in the UK biobank. Front Cardiovasc Med. (2021) 8:677574. doi: 10.3389/fcvm.2021.677574

73. Kompa B, Snoek J, Beam AL. Second opinion needed: communicating uncertainty in medical machine learning. Npj Digit Med. (2021) 4:4. doi: 10.1038/s41746-020-00367-3

74. Chang S, Han K, Suh YJ, Choi BW. Quality of science and reporting for radiomics in cardiac magnetic resonance imaging studies: a systematic review. Eur Radiol. (2022) 32:4361–73. doi: 10.1007/s00330-022-08587-9

75. Kolossváry M, Karády J, Szilveszter B, Kitslaar P, Hoffmann U, Merkely B, et al. Radiomic features are superior to conventional quantitative computed tomographic metrics to identify coronary plaques with napkin-ring sign. Circ Cardiovasc Imaging. (2017) 10:e006843. doi: 10.1161/CIRCIMAGING.117.006843

76. Zeleznik R, Foldyna B, Eslami P, Weiss J, Alexander I, Taron J, et al. Deep convolutional neural networks to predict cardiovascular risk from computed tomography. Nat Commun. (2021) 12:715. doi: 10.1038/s41467-021-20966-2

77. Lin A, Kolossváry M, Cadet S, McElhinney P, Goeller M, Han D, et al. Radiomics-Based precision phenotyping identifies unstable coronary plaques from computed tomography angiography. JACC Cardiovasc Imaging. (2022) 15:859–71. doi: 10.1016/j.jcmg.2021.11.016

78. Oikonomou EK, Williams MC, Kotanidis CP, Desai MY, Marwan M, Antonopoulos AS, et al. A novel machine learning-derived radiotranscriptomic signature of perivascular fat improves cardiac risk prediction using coronary CT angiography. Eur Heart J. (2019) 40:3529–43. doi: 10.1093/eurheartj/ehz592

79. Lin A, Kolossváry M, Yuvaraj J, Cadet S, McElhinney PA, Jiang C, et al. Myocardial infarction associates with a distinct pericoronary adipose tissue radiomic phenotype. JACC Cardiovasc Imaging. (2020) 13:2371–83. doi: 10.1016/j.jcmg.2020.06.033

80. Izquierdo C, Casas G, Martin-Isla C, Campello VM, Guala A, Gkontra P, et al. Radiomics-based classification of left ventricular non-compaction, hypertrophic cardiomyopathy, and dilated cardiomyopathy in cardiovascular magnetic resonance. Front Cardiovasc Med. (2021) 8:764312. doi: 10.3389/fcvm.2021.764312

81. Antonopoulos AS, Boutsikou M, Simantiris S, Angelopoulos A, Lazaros G, Panagiotopoulos I, et al. Machine learning of native T1 mapping radiomics for classification of hypertrophic cardiomyopathy phenotypes. Sci Rep. (2021) 11:23596. doi: 10.1038/s41598-021-02971-z

82. Baeßler B, Mannil M, Maintz D, Alkadhi H, Manka R. Texture analysis and machine learning of non-contrast T1-weighted MR images in patients with hypertrophic cardiomyopathy—preliminary results. Eur J Radiol. (2018) 102:61–7. doi: 10.1016/j.ejrad.2018.03.013

83. Baessler B, Mannil M, Oebel S, Maintz D, Alkadhi H, Manka R. Subacute and chronic left ventricular myocardial scar: accuracy of texture analysis on nonenhanced cine MR images. Radiology. (2018) 286:103–12. doi: 10.1148/radiol.2017170213

84. Rauseo E, Izquierdo Morcillo C, Raisi-Estabragh Z, Gkontra P, Aung N, Lekadir K, et al. New imaging signatures of cardiac alterations in ischaemic heart disease and cerebrovascular disease using CMR radiomics. Front Cardiovasc Med. (2021) 8:716577. doi: 10.3389/fcvm.2021.716577

85. Raisi-Estabragh Z, Gkontra P, Jaggi A, Cooper J, Augusto J, Bhuva AN, et al. Repeatability of cardiac magnetic resonance radiomics: a multi-centre multi-vendor test-retest study. Front Cardiovasc Med. (2020) 7:586236. doi: 10.3389/fcvm.2020.586236

86. Campello VM, Martín-Isla C, Izquierdo C, Guala A, Palomares JFR, Viladés D, et al. Minimising multi-centre radiomics variability through image normalisation: a pilot study. Sci Rep. (2022) 12:12532. doi: 10.1038/s41598-022-16375-0

87. ISO 9241-11:2018. Ergonomics of Human-System Interaction—Part 11: Usability: Definitions and Concept. Geneva: ISO (2018)

88. Lara Hernandez KA, Rienmüller T, Baumgartner D, Baumgartner C. Deep learning in spatiotemporal cardiac imaging: a review of methodologies and clinical usability. Comput Biol Med. (2021) 130:104200. doi: 10.1016/j.compbiomed.2020.104200

89. ISO/IEC TR 24027:2021. Artificial Intelligence (AI) — Bias in AI Systems and AI Aided Decision Making. ISO/IEC TR 24027:2021 (2021). Available online at: https://www.iso.org/standard/77607.html (accessed June 16, 2022).

90. Rajkomar A, Oren E, Chen K, Dai AM, Hajaj N, Hardt M, et al. Scalable and accurate deep learning with electronic health records. NPJ Digit Med. (2018) 1:18. doi: 10.1038/s41746-018-0029-1

91. Larrazabal AJ, Nieto N, Peterson V, Milone DH, Ferrante E. Gender imbalance in medical imaging datasets produces biased classifiers for computer-aided diagnosis. Proc Natl Acad Sci USA. (2020) 117:12592–4. doi: 10.1073/pnas.1919012117

92. Puyol-Antón E, Ruijsink B, Mariscal Harana J, Piechnik SK, Neubauer S, Petersen SE, et al. Fairness in cardiac magnetic resonance imaging: assessing sex and racial bias in deep learning-based segmentation. Front Cardiovasc Med. (2022) 9:859310. doi: 10.3389/fcvm.2022.859310

93. Barocas S, Hardt M, Narayanan A. Fairness in Machine Learning. NIPS Tutorial. fairmlbook.org (2017). Available online at: http://www.fairmlbook.org

94. Seyyed-Kalantari L, Liu G, McDermott M, Chen IY, Ghassemi M. CheXclusion: Fairness gaps in deep chest X-ray classifiers. In: Pacific Symposium on Biocomputing, Vol. 26. (2020). p. 232–43. doi: 10.1142/9789811232701_0022

95. European Parliament. Directorate General for Parliamentary Research Services. Artificial Intelligence in Healthcare: Applications, Risks, Ethical Societal Impacts. LU: Publications Office (2022). Available online at: https://data.europa.eu/doi/10.2861/568473 (accessed July 29, 2022).

96. HIPAA Journal. South Denver Cardiology Associates Confirms Data Breach Affecting 287,000 Patients. HIPAA Journal. Available online at: https://www.hipaajournal.com/south-denver-cardiology-associates-confirms-data-breach-affecting-287000-patients/ (accessed August 1, 2022).

97. Finlayson SG, Bowers JD, Ito J, Zittrain JL, Beam AL, Kohane IS. Adversarial attacks on medical machine learning. Science. (2019) 363:1287–9. doi: 10.1126/science.aaw4399

98. Sipola T, Kokkonen T. One-pixel attacks against medical imaging: A conceptual framework. In: World Conference on Information Systems and Technologies. Terceira Island: Springer (2021) p. 197–203. doi: 10.1007/978-3-030-72657-7_19

99. HeartFlow. NHS England and NHS Improvement Mandate Adoption of AI-Powered HeartFlow Analysis to Fight Coronary Heart Disease. HeartFlow. Available online at: https://www.heartflow.com/newsroom/nhs-england-and-nhs-improvement-mandate-adoption-of-ai-powered-heartflow-analysis-to-fight-coronary-heart-disease/ (accessed September 22, 2022).

100. Kairouz P, McMahan HB, Avent B, Bellet A, Bennis M, Bhagoji AN, et al. Advances and open problems in federated learning. Found Trends® Mach Learn. (2021) 14:1–210. doi: 10.1561/2200000083

101. Mora-Cantallops M, Sanchez-Alonso S, García-Barriocanal E, Sicilia M-A. Traceability for trustworthy AI: a review of models and tools. Big Data Cogn Comput. (2021) 5:20. doi: 10.3390/bdcc5020020

102. Gebru T, Morgenstern J, Vecchione B, Vaughan JW, Wallach H, Daumé III H, et al. Datasheets for datasets. Commun ACM. (2021) 64:86–92. doi: 10.1145/3458723

103. Sendak MP, Gao M, Brajer N, Balu S. Presenting machine learning model information to clinical end users with model facts labels. NPJ Digit Med. (2020) 3:41. doi: 10.1038/s41746-020-0253-3

104. Arbelaez Ossa L, Starke G, Lorenzini G, Vogt JE, Shaw DM, Elger BS. Re-focusing explainability in medicine. Digit Health. (2022) 8:205520762210744. doi: 10.1177/20552076221074488

105. Selvaraju RR, Cogswell M, Das A, Vedantam R, Parikh D, Batra D. Grad-CAM: visual explanations from deep networks via gradient-based localization. Int J Comput Vis. (2020) 128:336–59. doi: 10.1007/s11263-019-01228-7

106. Tjoa E, Khok HJ, Chouhan T, Cuntai G. Improving deep neural network classification confidence using heatmap-based eXplainable AI. arXiv. (2022). Available online at: http://arxiv.org/abs/2201.00009 (accessed July 3, 2022).

107. Johnson J, Karpathy A, Fei-Fei L. DenseCap: fully convolutional localization networks for dense captioning. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Las Vegas, NV: IEEE (2016). p. 4565–74. doi: 10.1109/CVPR.2016.494

108. Candemir S, White RD, Demirer M, Gupta V, Bigelow MT, Prevedello LM, et al. Automated coronary artery atherosclerosis detection and weakly supervised localization on coronary CT angiography with a deep 3-dimensional convolutional neural network. Comput Med Imaging Graph. (2020) 83:101721. doi: 10.1016/j.compmedimag.2020.101721

109. Howard JP, Tan J, Shun-Shin MJ, Mahdi D, Nowbar AN, Arnold AD, et al. Improving ultrasound video classification: an evaluation of novel deep learning methods in echocardiography. J Med Artif Intell. (2020) 3:4. doi: 10.21037/jmai.2019.10.03

110. Ribeiro MT, Singh S, Guestrin C. “Why should I trust you?”: explaining the predictions of any classifier. In: Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. San Francisco, CA: ACM (2016). p. 1135–44. doi: 10.1145/2939672.2939778

111. Lundberg SM, Lee S-I. A unified approach to interpreting model predictions. arXiv [Preprint]. arXiv: 1705.07874. Available online at: https://arxiv.org/pdf/1705.07874.pdf

112. Al'Aref SJ, Maliakal G, Singh G, van Rosendael AR, Ma X, Xu Z, et al. Machine learning of clinical variables and coronary artery calcium scoring for the prediction of obstructive coronary artery disease on coronary computed tomography angiography: analysis from the CONFIRM registry. Eur Heart J. (2020) 41:359–67. doi: 10.1093/eurheartj/ehz565

113. Fahmy AS, Csecs I, Arafati A, Assana S, Yankama TT, Al-Otaibi T, et al. An explainable machine learning approach reveals prognostic significance of right ventricular dysfunction in nonischemic cardiomyopathy. JACC Cardiovasc Imaging. (2022) 15:766–79. doi: 10.1016/j.jcmg.2021.11.029

114. Ghassemi M, Oakden-Rayner L, Beam AL. The false hope of current approaches to explainable artificial intelligence in health care. Lancet Digit Health. (2021) 3:e745–50. doi: 10.1016/S2589-7500(21)00208-9

115. Kaplan B. How should health data be used?: privacy, secondary use, and big data sales. Camb Q Healthc Ethics. (2016) 25:312–29. doi: 10.1017/S0963180115000614

116. Raji ID, Smart A, White RN, Mitchell M, Gebru T, Hutchinson B, et al. Closing the AI accountability gap: defining an end-to-end framework for internal algorithmic auditing. arXiv. (2020). Available online at: http://arxiv.org/abs/2001.00973 (accessed August 1, 2022).

117. Arora A. Conceptualising artificial intelligence as a digital healthcare innovation: an introductory review. Med Devices Evid Res. (2020) 13:223–30. doi: 10.2147/MDER.S262590

118. Lehne M, Sass J, Essenwanger A, Schepers J, Thun S. Why digital medicine depends on interoperability. NPJ Digit Med. (2019) 2:79. doi: 10.1038/s41746-019-0158-1

119. European Commission. European Health Data Space. European Commission. Available online at: https://health.ec.europa.eu/ehealth-digital-health-and-care/european-health-data-space_en (accessed August 1, 2022).

120. Health Data Research UK. BHF Data Science Centre. Health Data Research UK. Available online at: https://www.hdruk.org/helping-with-health-data/bhf-data-science-centre/ (accessed August 1, 2022).

121. Nagy M, Sisk B. How will artificial intelligence affect patient-clinician relationships? AMA J Ethics. (2020) 22:E395–400. doi: 10.1001/amajethics.2020.395

122. Cohen IG. Informed consent and medical artificial intelligence: what to tell the patient? Georgetown Law J. (2020) 108:1425–69. doi: 10.2139/ssrn.3529576

123. Meskó B, Görög M. A short guide for medical professionals in the era of artificial intelligence. NPJ Digit Med. (2020) 3:126. doi: 10.1038/s41746-020-00333-z

124. Hatherley JJ. Limits of trust in medical AI. J Med Ethics. (2020) 46:478–81. doi: 10.1136/medethics-2019-105935

125. van Smeden M, Heinze G, Van Calster B, Asselbergs FW, Vardas PE, Bruining N, et al. Critical appraisal of artificial intelligence-based prediction models for cardiovascular disease. Eur Heart J. (2022) 43:2921–30. doi: 10.1093/eurheartj/ehac238

126. Sengupta PP, Shrestha S, Berthon B, Messas E, Donal E, Tison GH, et al. Proposed requirements for cardiovascular imaging-related machine learning evaluation (PRIME): a checklist. JACC Cardiovasc Imaging. (2020) 13:2017–35. doi: 10.1016/j.jcmg.2020.07.015

127. Kaggle. RSNA-MICCAI Brain Tumor Radiogenomic Classification. Kaggle. Available online at: https://www.kaggle.com/competitions/rsna-miccai-brain-tumor-radiogenomic-classification (accessed September 26, 2022).

128. Sasaki T, Kinoshita M, Fujita K, Fukai J, Hayashi N, Uematsu Y, et al. Radiomics and MGMT promoter methylation for prognostication of newly diagnosed glioblastoma. Sci Rep. (2019) 9:14435. doi: 10.1038/s41598-019-50849-y

129. Korfiatis P, Kline TL, Coufalova L, Lachance DH, Parney IF, Carter RE, et al. MRI texture features as biomarkers to predict MGMT methylation status in glioblastomas. Med Phys. (2016) 43:2835–44. doi: 10.1118/1.4948668

130. Han L, Kamdar MR. MRI to MGMT: predicting methylation status in glioblastoma patients using convolutional recurrent neural networks. In: Biocomputing 2018. World Scientific (2018). p. 331–42. doi: 10.1142/9789813235533_0031

131. Keane PA, Topol EJ. AI-facilitated health care requires education of clinicians. Lancet. (2021) 397:1254. doi: 10.1016/S0140-6736(21)00722-4

132. McCoy LG, Nagaraj S, Morgado F, Harish V, Das S, Celi LA. What do medical students actually need to know about artificial intelligence? NPJ Digit Med. (2020) 3:86. doi: 10.1038/s41746-020-0294-7

133. Grunhut J, Wyatt AT, Marques O. Educating future physicians in artificial intelligence (AI): an integrative review and proposed changes. J Med Educ Curric Dev. (2021) 8:238212052110368. doi: 10.1177/23821205211036836

Keywords: artificial intelligence, cardiovascular imaging, machine learning (ML), trustworthiness, AI risk

Citation: Szabo L, Raisi-Estabragh Z, Salih A, McCracken C, Ruiz Pujadas E, Gkontra P, Kiss M, Maurovich-Horvath P, Vago H, Merkely B, Lee AM, Lekadir K and Petersen SE (2022) Clinician's guide to trustworthy and responsible artificial intelligence in cardiovascular imaging. Front. Cardiovasc. Med. 9:1016032. doi: 10.3389/fcvm.2022.1016032

Received: 10 August 2022; Accepted: 11 October 2022; Published: 08 November 2022.

Edited by:

Hui Xue, National Heart, Lung, and Blood Institute (NIH), United States

Reviewed by:

James Howard, Imperial College London, United Kingdom

Copyright © 2022 Szabo, Raisi-Estabragh, Salih, McCracken, Ruiz Pujadas, Gkontra, Kiss, Maurovich-Horvath, Vago, Merkely, Lee, Lekadir and Petersen. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Liliana Szabo

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
