- 1 Science and Technology on Thermal Energy and Power Laboratory, Wuhan, China

- 2 School of Automation Science and Electrical Engineering, Beihang University, Beijing, China

- 3 Department of Control Science and Engineering, Harbin Institute of Technology, Harbin, China

This paper presents a data-driven predictive controller based on the broad learning algorithm that requires no prior knowledge of the system model. The predictive model is regressed from online process data using the incremental broad learning algorithm. The proposed model predictive control (MPC) approach requires less online computation than other neural network based MPC approaches. More importantly, an appropriate approximation of the predictive model enhances its precision while keeping the computational load low. The approximation is proved to have no influence on the convergence of the predictive control algorithm. The control performance of the proposed predictive controller is illustrated on the continuous stirred tank heater (CSTH) benchmark and compared with that of the partial form dynamic linearization aided model free control (PFDL-MFC).

1 Introduction

Tracking control plays an important role in modern industrial processes and has drawn much attention in both academia and industry in recent years (Yin et al., 2017). As an efficient optimization-based control strategy, model predictive control (MPC) has shown its effectiveness in the tracking control of multivariable systems with various constraints. Unlike control strategies in which the control law is calculated offline, the core idea of MPC is to calculate an optimal control sequence by solving a finite-horizon optimal control problem online (Mayne and Rawlings, 2001). MPC has proved easy to implement and maintain in industrial practice (Hidalgo and Brosilow, 1990; Yin and Xiao, 2017). Owing to these advantages, MPC has been successfully used in the tracking control of hybrid energy storage systems, modular multilevel converters, wind generation systems, and many other applications in the recent literature (Qin and Badgwell, 2003; Garcia-Torres et al., 2019; Guo et al., 2019; Kou et al., 2019).

Despite the successful applications of MPC, most predictive controllers rely heavily on the precision of the predictive model. For model-based MPC, the predictive model is usually generated from first principles, e.g., the controlled auto-regressive integrated moving average (CARIMA) model (Zhang et al., 2018). However, it is arduous to set up a precise first-principles model for a modern industrial process because of its complicated mechanism. Some alternative predictive models, such as the time-domain model, are also hard to obtain in practice (Patwardhan et al., 1998). Fortunately, the development of measurement and storage technology has left a huge amount of underutilized process data. Motivated by this, data-driven predictive controller design has attracted the interest of researchers in recent years.

To develop a data-driven predictive control strategy, multivariate statistical analysis (MSA) techniques are used to identify the predictive model from the available process data. Partial least squares (PLS) and its modified forms have received particular attention owing to their low computational complexity (Gao et al., 2018). To extract the dynamic characteristics of the process, a dynamic PLS based predictive control was proposed based on an assumed autoregressive exogenous (ARX) model (Kaspar and Ray, 1993). Subspace identification techniques are also used to design the predictive controller, where the state space model can be directly identified from the process data (Yi, 2016; Garg and Mhaskar, 2017; Meidanshahi et al., 2017; Zhou et al., 2017). However, most investigations on subspace identification focus on linear systems (Wu et al., 2013; Li et al., 2020). To cope with nonlinear systems, the neural network (NN) is often applied in predictive controller design owing to its strong learning ability in nonlinear model regression.

Over the past several years, fruitful achievements have been reported regarding the combination of NN and MPC. A dynamic neural network based MPC (DNN-MPC) was proposed and shown to give control performance superior to that of the mathematical model based MPC for the industrial baker's yeast drying process (Yuzgec et al., 2008). A self-organizing recurrent neural network (RNN) aided nonlinear MPC was proposed (Han et al., 2016), where the control performance was evaluated on a wastewater treatment process. To improve the control performance for nonlinear processes, the radial basis function neural network (RBF-NN) has been applied to design the model predictive controller (Yu et al., 2011). In particular, an adaptive splitting and merging RBF-NN based MPC has recently been successfully implemented in a zinc hydrometallurgy plant. However, these NN based MPC strategies often require a heavy computational load, which limits their online implementation for real-time control.

In this paper, the broad learning system (Chen and Liu, 2018), which is built on the random vector functional-link neural network (RVFLNN), is incorporated into the predictive controller design. By using the incremental broad learning algorithm, the predictive model can be identified adaptively from the online process data. In addition, a Jacobian matrix is used to approximate the predictive model, which enhances its precision. The main contributions can be summarized as follows:

1) A data-driven predictive control strategy is proposed based on the broad learning algorithm with no prior knowledge of the system model;

2) The proposed data-driven predictive controller can be updated online based on the incremental broad learning algorithm while requiring little computation load;

3) The proposed broad learning aided MPC strategy is verified on the continuous stirred tank heater (CSTH) benchmark for temperature adjustment.

The rest of this paper is organized as follows. Section 2 introduces the basic knowledge of the model predictive control and the fundamental structure of the broad learning system. Section 3 discusses the incremental broad learning algorithm for new training data and proposes the incremental broad learning based MPC. Section 4 verifies the control performance of the proposed broad learning based MPC through the CSTH benchmark. Section 5 concludes this paper.

2 Preliminaries

2.1 Basic Knowledge of Model Predictive Control

Given a nonlinear process described as follows:

where

The core idea of MPC is to optimize the following quadratic problem online

or equivalently,

where Ysp is the setpoint of the process,

2.2 Basic Knowledge of Broad Learning

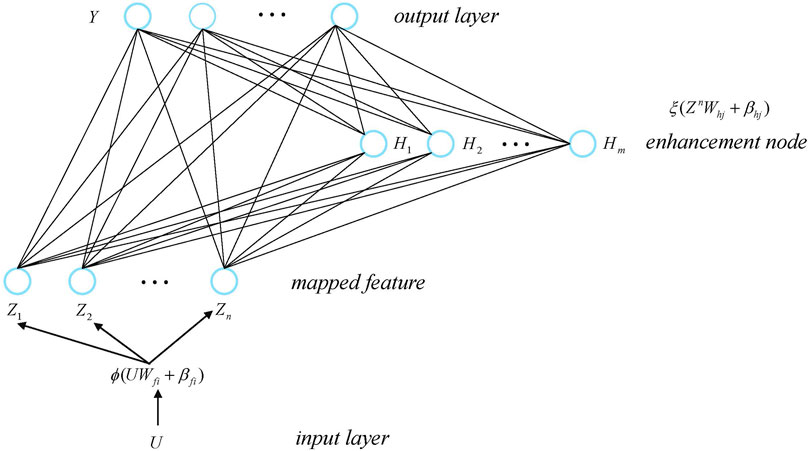

As an improved neural network derived from the RVFLNN, broad learning has been proven to be an efficient algorithm for regression and classification, where it is important to extract features from the training data. Random initialization of the features is popular because it is easy to compute. However, features extracted by random projection may be unpredictable and need guidance. To avoid this, a set of sparse features can be extracted from the training data using the sparse autoencoder (SAE) discussed in Gong et al. (2015). In this way, the alternating direction method of multipliers (ADMM) can be used to solve the lasso problem in the broad learning algorithm (Chen and Liu, 2018) to fine-tune the weight matrices. The details of broad learning are given below.

Assume that

where ϕ(⋅) denotes the projection function,

Denote Zn ≡ [Z1, Z2, …, Zn] as the concatenation of the mapped features. Zn can then be used to generate the jth enhancement node

where ζ(⋅) denotes the projection function,

Similarly, denote Hm ≡ [H1, H2, …, Hm] as the collection of the enhancement nodes. In this way, the global model can be represented as

where

It is straightforward that Wm can be calculated as

where (⋅)+ represents the Moore-Penrose pseudoinverse. To reduce the computational complexity, the broad learning algorithm makes use of the ridge regression theory proposed in Hoerl and Kennard (2000).

Assume that

and consequently,

The functional-link broad learning model is shown in Figure 1. More details on broad learning can be found in Chen and Liu (2018).
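The structure described in this subsection (mapped feature nodes, enhancement nodes, and output weights obtained by ridge regression) can be sketched in a few lines. The following is only a minimal illustration under stated assumptions: the projection functions are both taken as tanh, the feature weights are purely random rather than fine-tuned by an SAE, and the function names, node counts, and ridge parameter are hypothetical choices that do not come from the paper.

```python
import numpy as np

def hidden_layer(params, X):
    """Build the input matrix A = [Z | H] from feature and enhancement nodes."""
    # Mapped feature groups Z_1..Z_n (tanh is an assumed projection function).
    Z = np.hstack([np.tanh(X @ W + b) for W, b in params["feature"]])
    Wh, bh = params["enhance"]
    # Enhancement nodes are generated from the concatenated features.
    H = np.tanh(Z @ Wh + bh)
    return np.hstack([Z, H])

def fit_broad_learning(X, Y, n_groups=3, nodes_per_group=4,
                       n_enhance=10, ridge=1e-3, seed=0):
    """Fit output weights by ridge regression on randomly generated nodes."""
    rng = np.random.default_rng(seed)
    n_in = X.shape[1]
    feature = [(rng.standard_normal((n_in, nodes_per_group)),
                rng.standard_normal(nodes_per_group))
               for _ in range(n_groups)]
    enhance = (rng.standard_normal((n_groups * nodes_per_group, n_enhance)),
               rng.standard_normal(n_enhance))
    params = {"feature": feature, "enhance": enhance}
    A = hidden_layer(params, X)
    # Ridge solution W = (A^T A + ridge * I)^{-1} A^T Y.
    params["W"] = np.linalg.solve(A.T @ A + ridge * np.eye(A.shape[1]), A.T @ Y)
    return params

def predict_broad_learning(params, X):
    """Evaluate the fitted model on new inputs."""
    return hidden_layer(params, X) @ params["W"]
```

Only the output weights are trained here, which is why fitting reduces to a single linear solve. The random feature and enhancement weights stay fixed after they are drawn.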

3 Broad Learning Based Predictive Control

3.1 Incremental Broad Learning Algorithm

Though broad learning has been proven to be efficient for classification and regression, it is not easy to regress the process model based on the online data due to the unpredictable noise, model uncertainty and dynamic characteristics. To implement the predictive control in the framework of the broad learning, it is essential to develop the equivalent incremental broad learning algorithm. By updating the parameters of the broad learning model, the precision of the regression model can be further enhanced. The incremental broad learning algorithm for new training data was briefly discussed in Chen and Liu (2018). The details of the incremental broad learning for new training data will be presented in this section.

According to Chen and Wan (1999), the stepwise updating algorithm of a functional-link neural network for a new enhancement node can be equivalently represented as appending columns to the input matrix. Denote the initial input matrix as An. The final input matrix is then given as An+1 = [An|a] when a new enhancement node a is added to the neural network.

Inspired by the Moore-Penrose pseudoinverse of a partitioned matrix mentioned in Díaz-García and Gutiérrez-Jáimez (2006), the pseudoinverse of An+1 can be calculated as

where

In this way, the new weights Wn+1 can be calculated as

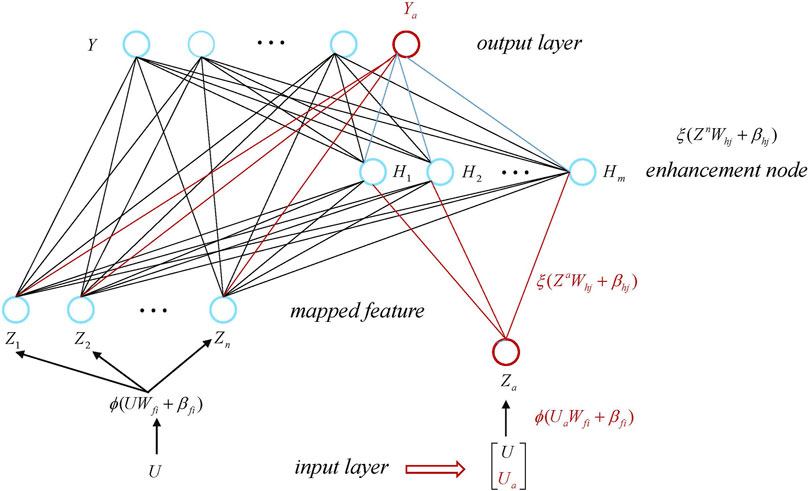
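The column-append update for a new enhancement node can be illustrated with the classical Greville recursion for the pseudoinverse of a partitioned matrix. The sketch below handles a single appended column a and is an assumption about the form of the update, not a transcription of the paper's equations. The function name `append_column` and the tolerance `tol` are hypothetical.

```python
import numpy as np

def append_column(A, A_pinv, a, W, Y, tol=1e-10):
    """Update pinv(A) and the weights W = pinv(A) @ Y when column a is appended."""
    a = a.reshape(-1, 1)
    d = A_pinv @ a                 # coordinates of a in the column space of A
    c = a - A @ d                  # component of a outside range(A)
    if np.linalg.norm(c) > tol:
        # a adds a new direction: b^T is the pseudoinverse of the column c.
        b_t = c.T / (c.T @ c)
    else:
        # a is linearly dependent on the existing columns.
        b_t = (d.T @ A_pinv) / (1.0 + d.T @ d)
    new_pinv = np.vstack([A_pinv - d @ b_t, b_t])
    # Weights follow from the same partition, with no retraining.
    new_W = np.vstack([W - d @ (b_t @ Y), b_t @ Y])
    return new_pinv, new_W
```

The update costs a few matrix-vector products, whereas recomputing the pseudoinverse of the enlarged matrix from scratch would repeat the full decomposition.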

Denote Xa and Ya as a group of new training input data and the corresponding output data. The incremental broad learning algorithm for new training data is illustrated in Figure 2.

The original combination of feature nodes and enhancement nodes forms the following input matrix

The input matrix

where

It is obvious that

Denote the input matrix generated by the new training data Ua as Au,

where

The pseudoinverse of

where

The weight matrix of the new broad learning model can be calculated as

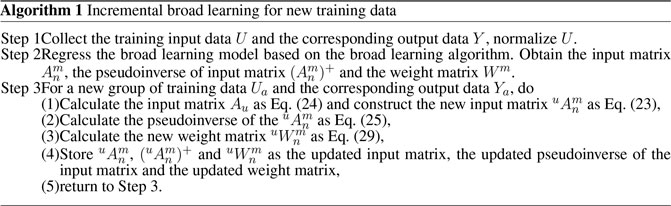

The incremental broad learning algorithm for new training data can be summarized in Algorithm 1.
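The idea of updating the weights when new training rows arrive, without refitting on the whole data set, can be sketched as follows. Note that this sketch deliberately swaps in a closely related technique: instead of the partitioned pseudoinverse, it keeps the ridge-regularized inverse P = (AᵀA + ridge·I)⁻¹ and updates it with the Woodbury identity (recursive least squares). The function names and the ridge value are hypothetical.

```python
import numpy as np

def init_ridge_state(A, Y, ridge=1e-3):
    """Batch initialization: P = (A^T A + ridge * I)^{-1} and W = P A^T Y."""
    P = np.linalg.inv(A.T @ A + ridge * np.eye(A.shape[1]))
    return P, P @ A.T @ Y

def update_with_new_rows(P, W, A_a, Y_a):
    """Fold new rows (A_a, Y_a) into the ridge solution without refitting."""
    q = A_a.shape[0]
    # Gain B = P A_a^T (I + A_a P A_a^T)^{-1}; only a q x q system is solved.
    S = np.eye(q) + A_a @ P @ A_a.T
    B = np.linalg.solve(S, A_a @ P).T   # valid because S and P are symmetric
    # Correct the old weights by the prediction error on the new rows.
    W_new = W + B @ (Y_a - A_a @ W)
    P_new = P - B @ A_a @ P
    return P_new, W_new
```

Here A_a denotes the input matrix generated from the new training data by the existing feature and enhancement nodes. The cost of each update depends on the number of new rows and nodes, not on the amount of data already processed.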

Remark 1. The broad learning algorithm is used here to establish the predictive model for control purposes. Note that the predictive model does not need to be retrained when new data arrive if the incremental broad learning algorithm is applied. The main computation is spent on the update of the new weights (

3.2 Incremental Broad Learning Based Predictive Control

For predictive control purpose, the cost function J(k) to be minimized at the kth instant can be given as follows,

where

and ny, nu, np, and nc are the dimension of the output vector, the dimension of the input vector, the prediction horizon, and the control horizon, respectively.

Remark 2. It is worth mentioning that np should be set longer than the settling time in order to satisfy the convergence condition of the predictive control (Rossiter, 2003). Once np exceeds the settling time, it has no further influence on the convergence of the predictive control. However, np is often determined from practical experience since the settling time is unknown. In this paper, the control horizon nc and the prediction horizon np are both set to 50.

In the broad learning algorithm, the relationship between input training data U and output training data Y can be represented as

where

Therefore, regardless of the activation function, the correlation between u(k) and y(k) can be presented as

However, the activation function F(⋅) is necessary to extract the nonlinear characteristics. In the broad learning, the prediction of y(k) is calculated as

where

Note that the nonlinearity brings challenges to the receding horizon optimization of the predictive control since the cost function to be minimized is nonlinear. Therefore, an appropriate approximation of the predictive model is necessary.

According to Eq. 41, the prediction at the instant k can be rewritten as

Assume that u(k) is known. G(u(k)) can then be calculated easily via the generalized inverse. However, the increment ΔU is to be calculated by solving the quadratic problem in Eq. 30. Take the prediction of y(k + 1) at the instant k as an example,

where Δu(k + 1) is unknown.

Since G(u) is continuously differentiable at any u, define the Jacobian matrix of G(u) as

In this way, the approximation of G(u(k + 1)) can be given as

According to the definitions of G(⋅) and f(net) presented in Eqs 41, 42, it holds that f′(net) = 1 − f(net)² ≤ 1 and G(u) is continuously differentiable for any

Theorem 1. Let Eqs 41, 42, 47 hold, replacing G(u(k)) with

Proof. Define the deviation EG between

According to the mean value theorem, we have

Considering the equivalence of the Riemann sum and the integral, it yields

Since λ1 and λ2 are two weight constants with λ2 ≫ λ1, it yields

Therefore, the convergence of

Remark 3. The Lipschitz constant γ is related to the spectral radius of W. In practice, Δu is often constrained by the mechanical structure of the actuators. The deviation EG is acceptable with an appropriate W.

Denote W as

The Jacobian matrix JG(u(k)) can be constructed as

where ∘ denotes the Hadamard product and

In addition, the prediction of y(k + i) at the kth instant y(k + i|k) can be given as

which leads to

where

Substituting Eqs 57, 59 into Eq. 30, the cost function can be rewritten as

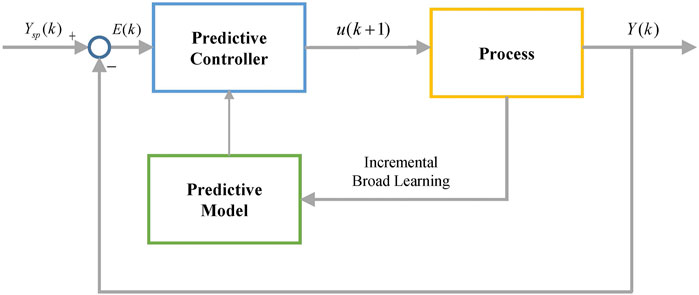

subject to the same constraint as Eq. 5, where E(k) = Ysp(k) − Y(k). The proposed predictive control is illustrated in Figure 3.
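The Jacobian-based approximation can be illustrated on a small tanh network. The sketch below is a deliberately simplified, one-step-ahead version under stated assumptions: G(u) is taken as a single tanh hidden layer with hypothetical weights W1, b, and W2; the cost weights `w_track` and `w_move` and the bound `du_max` are illustrative names and values; and the input constraint is imposed by simple clipping rather than by solving the constrained quadratic problem.

```python
import numpy as np

def G(u, W1, b, W2):
    """Nonlinear predictor: one tanh hidden layer followed by linear weights."""
    return W2.T @ np.tanh(W1.T @ u + b)

def jacobian_G(u, W1, b, W2):
    """Jacobian dG/du, using f'(net) = 1 - f(net)^2 for f = tanh."""
    s = 1.0 - np.tanh(W1.T @ u + b) ** 2
    # Row scaling by s plays the role of the Hadamard product with f'(net).
    return (W2 * s[:, None]).T @ W1.T          # shape (ny, nu)

def mpc_increment(u, y_sp, W1, b, W2, w_track=100.0, w_move=1.0, du_max=0.5):
    """One-step input increment from the linearized model G(u) + J du."""
    J = jacobian_G(u, W1, b, W2)
    e = y_sp - G(u, W1, b, W2)                 # current tracking error
    # Minimize w_track * ||e - J du||^2 + w_move * ||du||^2 in closed form.
    H = w_track * J.T @ J + w_move * np.eye(u.size)
    du = np.linalg.solve(H, w_track * J.T @ e)
    # Crude stand-in for the actuator constraint on the increment.
    return np.clip(du, -du_max, du_max)
```

Because the linearized prediction is affine in the increment, the cost becomes quadratic and the unconstrained minimizer is obtained from one linear solve. A multi-step horizon would stack the same Jacobian blocks over the prediction horizon.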

4 Benchmark Study on Continuous Stirred Tank Heater

In this section, the proposed predictive control is applied to tune the temperature in the continuous stirred tank heater (CSTH) benchmark. The performance of the proposed control strategy is compared with the partial form dynamic linearization aided model free control (PFDL-MFC) strategy proposed in Hou and Jin (2013) and Hou and Jin (2011). The PFDL-MFC algorithm is chosen for comparison because both algorithms need little prior knowledge of the system model and calculate the optimal control input sequence based on Eq. 4.

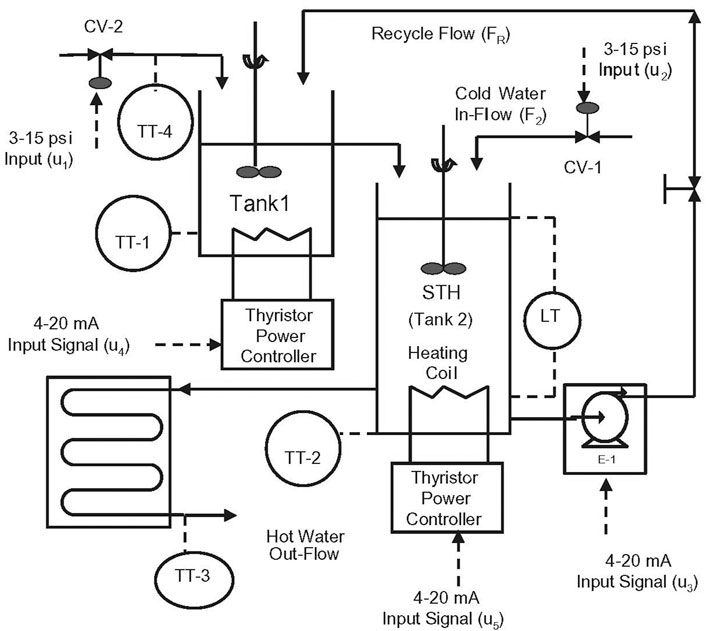

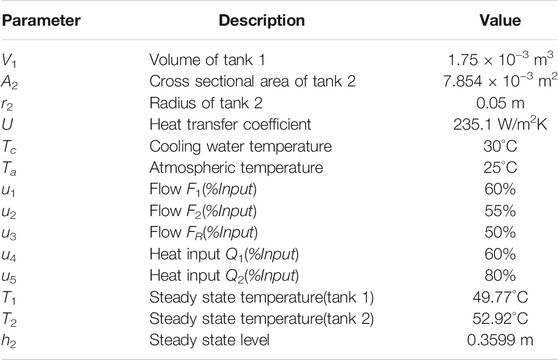

The continuous stirred tank heater (CSTH) is a typical pilot plant for control performance evaluation and process monitoring. The experimental CSTH system was developed in the Automation Laboratory at the Department of Chemical Engineering, IIT Bombay, as shown in Figure 4 (Thornhill et al., 2008). It mainly consists of two separate water storage tanks. The water is heated separately and recycled between the two tanks. The CSTH benchmark can be regarded as a 5-input-3-output system. u1, u2, and u3 represent the inputs controlled by separate valves. u4 and u5 represent the outputs of the two heaters. The three outputs are the water temperatures T1 (Tank 1) and T2 (Tank 2) and the water level h2 (Tank 2), respectively. Table 1 gives the parameters of the CSTH benchmark in steady state.

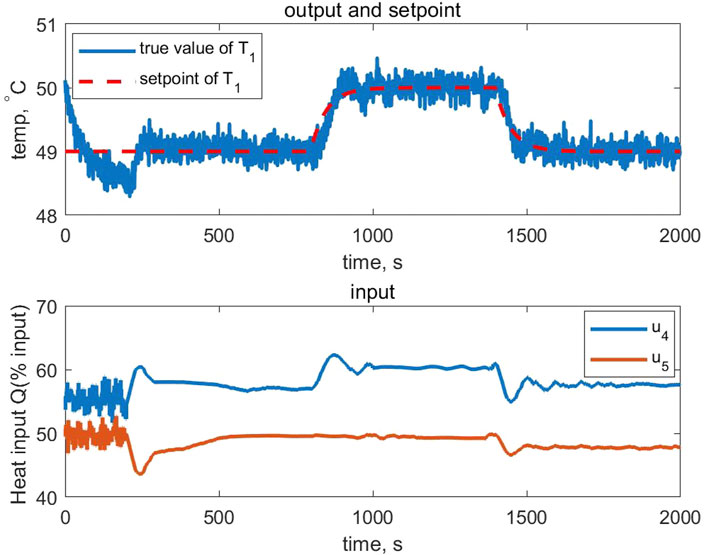

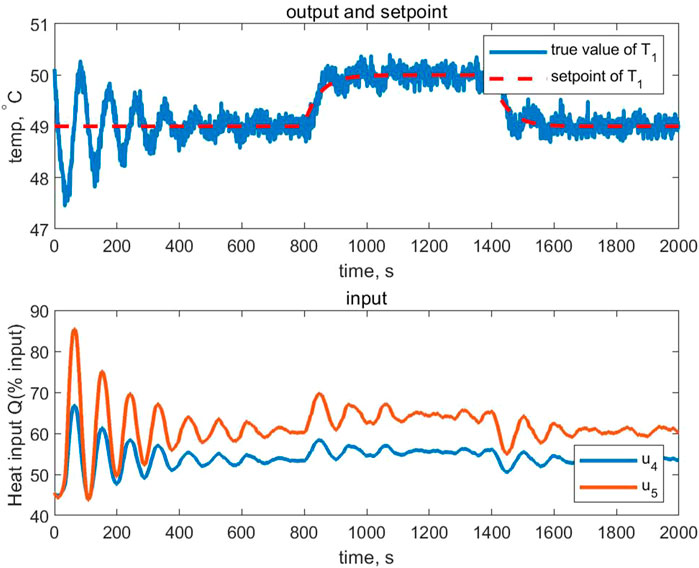

Since the heat input enters the state variables in the form of polynomial functions (Thornhill et al., 2008), the controlled system is nonlinear even if the flow rate is steady. For simplicity, only u4 and u5 are used as the controller outputs to be calculated. The designed predictive controller aims to make T1 track its setpoint. Unless otherwise specified, the other variables are kept at the steady-state values in Table 1. The practical constraints in the CSTH benchmark are set to be

Smooth approximation is a popular method to enhance the tracking performance (Chi and Liang, 2015). By smooth approximation, the setpoint can be changed smoothly from one desired state to the other. In the simulation, the setpoint is given as

where ys1 = 49, ys2 = 50 and the smoothing coefficient λsp is set to be 0.98.
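A common way to realize such a smooth setpoint transition is a first-order filter, sketched below. The recursion used here, y_sp(k + 1) = λsp · y_sp(k) + (1 − λsp) · y_target, is an assumption about the form of the smoothing, and the function name `smooth_setpoint` is hypothetical. Only the values ys1 = 49, ys2 = 50, and λsp = 0.98 are taken from the text.

```python
import numpy as np

def smooth_setpoint(y_start, y_target, lam, n_steps):
    """First-order smoothing of a setpoint step from y_start to y_target."""
    trajectory = np.empty(n_steps)
    y_prev = y_start
    for k in range(n_steps):
        # Each step closes a fraction (1 - lam) of the remaining gap.
        y_prev = lam * y_prev + (1.0 - lam) * y_target
        trajectory[k] = y_prev
    return trajectory

# Setpoint change used in the simulation: 49 -> 50 with lam = 0.98.
reference = smooth_setpoint(49.0, 50.0, 0.98, 300)
```

With this recursion the remaining gap shrinks geometrically by the factor λsp at every sample, so a coefficient closer to 1 gives a slower and smoother transition.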

The proposed predictive control requires its predictive model to be initialized from available process data. In this simulation, the process inputs are set to random square waves with white noise during the first 200 s, and the initial predictive model is calculated from the process data collected in that period. After the 200th instant, the predictive model is updated based on the online process data.
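An excitation signal of the kind described above can be generated as follows. This is only a sketch: the hold time, noise level, and input bounds are hypothetical placeholders, since the values used in the simulation are not stated in the text, and `random_square_wave` is an illustrative name.

```python
import numpy as np

def random_square_wave(n_samples, low, high, hold=20, noise_std=0.01, seed=0):
    """Random piecewise-constant input with additive white noise."""
    rng = np.random.default_rng(seed)
    n_levels = -(-n_samples // hold)            # ceiling division
    levels = rng.uniform(low, high, n_levels)   # one random level per segment
    signal = np.repeat(levels, hold)[:n_samples]
    signal = signal + noise_std * rng.standard_normal(n_samples)
    # Keep the excitation inside the admissible input range.
    return np.clip(signal, low, high)

# 200 samples for the initialization phase (bounds are placeholders).
u_init = random_square_wave(200, low=0.0, high=1.0)
```

Holding each level for several samples lets the slower dynamics of the process show up in the data, while the added noise keeps the regression matrix well conditioned.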

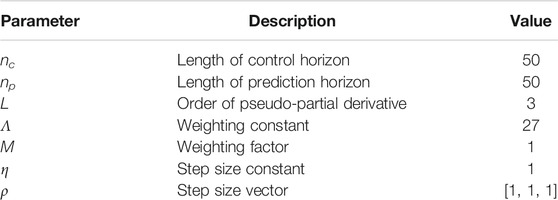

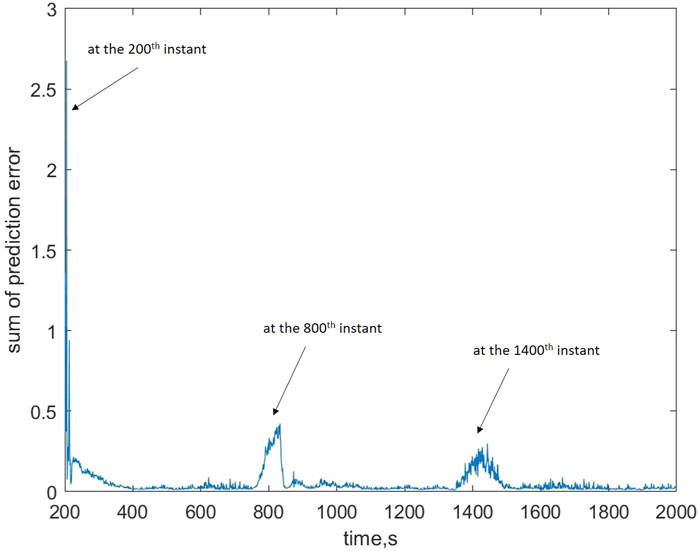

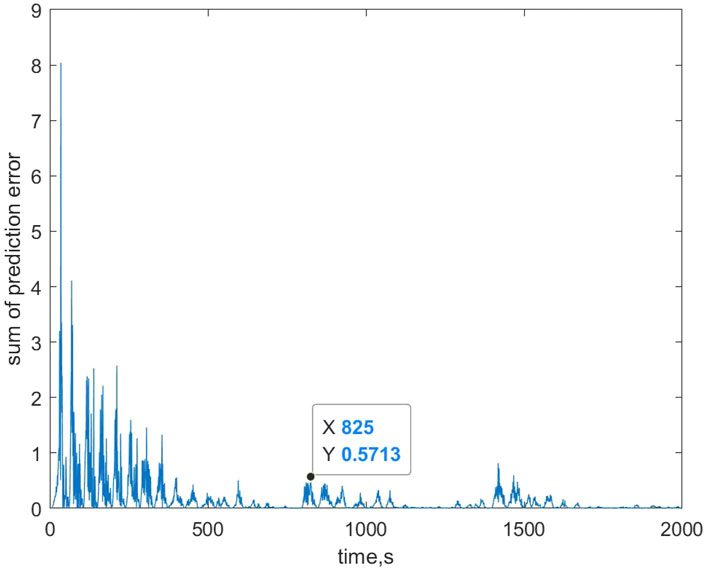

Define the prediction error Ep at the instant k as

where nc and np are both set to be 50.

Other parameters in the PFDL-MFC algorithm are set to λ = 27, μ = 1, ρ = [1, 1, 1], η = 1, and L = 3. The designed parameters are explained in Table 2. The prediction errors are compared in Figures 5, 6, which show fluctuations at the 200th, 800th, and 1400th instants. The fluctuations are caused by the setpoint changes. Therefore, both algorithms need to update the model dynamics based on the online process data. The comparison shows that the prediction of the proposed control strategy converges much faster than that of the PFDL-MFC algorithm. In addition, the fluctuation peaks of the proposed broad learning aided MPC are much lower than those of the PFDL-MFC algorithm.

It is worth mentioning that the fluctuation of Ep occurs before the setpoint changes at the 800th and 1400th instants, since the proposed predictive controller is based on multi-step prediction. In this simulation, nc = np = 50, which implies that the predicted outputs and the input increments are computed for the next 50 sample instants.

The control performance of the proposed broad learning aided MPC and of the PFDL-MFC algorithm is demonstrated in Figures 7, 8, respectively. As the setpoint changes, the input signal converges after a few steps of adjustment. Both algorithms are effective in the tracking control of nonlinear systems. However, the proposed algorithm converges much faster than the PFDL-MFC algorithm, although it requires data collection during the first several instants. In addition, the parameters of the PFDL-MFC algorithm have a great influence on its control performance. In contrast, only the weights λ1 and λ2 and the prediction horizon np are adjustable in the proposed predictive control strategy, and they have only a minor influence on the control performance.

5 Conclusion

This paper proposes a broad learning based data-driven predictive control strategy without any prior knowledge of the system model. The online computation load of the proposed predictive controller is reduced by using the incremental broad learning algorithm. In addition, the prediction precision is enhanced by approximating the predictive model using a Jacobian matrix. The convergence of the predictive control strategy is discussed theoretically, which demonstrates that the approximation has no influence on the convergence of the predictive control algorithm. The effectiveness of the proposed broad learning based MPC is demonstrated for temperature adjustment through the continuous stirred tank heater benchmark.

Compared with other NN based predictive control strategies, the proposed strategy can update the predictive model online with less online computation burden. Meanwhile, unlike the dynamic linearization based model free control strategy, the proposed predictive controller captures the local nonlinearity between the input and output data, which enhances the prediction precision and control performance. The simulation results show that the proposed predictive control strategy converges faster than the PFDL-MFC algorithm in both control performance and prediction. In the future, more work can be done to further reduce the online computation burden.

Data Availability Statement

Publicly available datasets were analyzed in this study. The data can be found here: http://www.ps.ic.ac.uk/~nina/CSTHSimulation/index.htm.

Author Contributions

MT and TG: conceptualization, methodology, writing. XL: supervision. KL: writing. All authors contributed to the article and approved the submitted version.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

This work is funded by Open Foundation of Science and Technology on Thermal Energy and Power Laboratory under Grant TPL2018C02.

References

Chen, C. L. P., and Liu, Z. (2018). Broad Learning System: An Effective and Efficient Incremental Learning System without the Need for Deep Architecture. IEEE Trans. Neural Netw. Learn. Syst. 29, 10–24. doi:10.1109/tnnls.2017.2716952

Chen, C. L. P., and Wan, J. Z. (1999). A Rapid Learning and Dynamic Stepwise Updating Algorithm for Flat Neural Networks and the Application to Time-Series Prediction. IEEE Trans. Syst. Man. Cybern. B 29, 62–72. doi:10.1109/3477.740166

Chi, Q., and Liang, J. (2015). A Multiple Model Predictive Control Strategy in the PLS Framework. J. Process Control. 25, 129–141. doi:10.1016/j.jprocont.2014.12.002

Díaz-García, J. A., and Gutiérrez-Jáimez, R. (2006). Distribution of the Generalised Inverse of a Random Matrix and its Applications. J. Stat. Plann. Inference 136, 183–192. doi:10.1016/j.jspi.2004.06.032

Gao, T., Yin, S., Qiu, J., Gao, H., and Kaynak, O. (2018). A Partial Least Squares Aided Intelligent Model Predictive Control Approach. IEEE Trans. Syst. Man. Cybern. Syst. 48, 2013–2021. doi:10.1109/tsmc.2017.2723017

Garcia-Torres, F., Bordons, C., and Ridao, M. A. (2019). Optimal Economic Schedule for a Network of Microgrids with Hybrid Energy Storage System Using Distributed Model Predictive Control. IEEE Trans. Ind. Electron. 66, 1919–1929. doi:10.1109/tie.2018.2826476

Garg, A., and Mhaskar, P. (2017). “Subspace Identification and Predictive Control of Batch Particulate Processes,” in American Control Conference (ACC). doi:10.23919/acc.2017.7963003

Gong, M., Liu, J., Li, H., Cai, Q., and Su, L. (2015). A Multiobjective Sparse Feature Learning Model for Deep Neural Networks. IEEE Trans. Neural Netw. Learn. Syst. 26, 3263–3277. doi:10.1109/tnnls.2015.2469673

Guo, P., He, Z., Yue, Y., Xu, Q., Huang, X., Chen, Y., et al. (2019). A Novel Two-Stage Model Predictive Control for Modular Multilevel Converter with Reduced Computation. IEEE Trans. Ind. Electron. 66, 2410–2422. doi:10.1109/tie.2018.2868312

Han, H.-G., Zhang, L., Hou, Y., and Qiao, J.-F. (2016). Nonlinear Model Predictive Control Based on a Self-Organizing Recurrent Neural Network. IEEE Trans. Neural Netw. Learn. Syst. 27, 402–415. doi:10.1109/tnnls.2015.2465174

Hidalgo, P. M., and Brosilow, C. B. (1990). Nonlinear Model Predictive Control of Styrene Polymerization at Unstable Operating Points. Comput. Chem. Eng. 14, 481–494. doi:10.1016/0098-1354(90)87022-h

Hoerl, A. E., and Kennard, R. W. (2000). Ridge Regression: Biased Estimation for Nonorthogonal Problems. Technometrics 42, 80–86. doi:10.1080/00401706.2000.10485983

Hou, Z., and Jin, S. (2011). A Novel Data-Driven Control Approach for a Class of Discrete-Time Nonlinear Systems. IEEE Trans. Contr. Syst. Technol. 19, 1549–1558. doi:10.1109/tcst.2010.2093136

Hou, Z., and Jin, S. (2013). Model Free Adaptive Control: Theory and Applications. CRC Press, Taylor & Francis Group.

Kaspar, M. H., and Harmon Ray, W. (1993). Dynamic PLS Modelling for Process Control. Chem. Eng. Sci. 48, 3447–3461. doi:10.1016/0009-2509(93)85001-6

Kou, P., Feng, Y., Liang, D., and Gao, L. (2019). A Model Predictive Control Approach for Matching Uncertain Wind Generation with PEV Charging Demand in a Microgrid. Int. J. Electr. Power Energ. Syst. 105, 488–499. doi:10.1016/j.ijepes.2018.08.026

Li, K., Luo, H., Yang, C., and Yin, S. (2020). Subspace-aided Closed-Loop System Identification with Application to DC Motor System. IEEE Trans. Ind. Electron. 67, 2304–2313. doi:10.1109/tie.2019.2907447

Mayne, D. Q., and Rawlings, J. B. (2001). Correction to "Constrained Model Predictive Control: Stability and Optimality". Automatica 37, 483. doi:10.1016/s0005-1098(00)00173-4

Meidanshahi, V., Corbett, B., Adams, T. A., and Mhaskar, P. (2017). Subspace Model Identification and Model Predictive Control Based Cost Analysis of a Semicontinuous Distillation Process. Comput. Chem. Eng. 103, 39–57. doi:10.1016/j.compchemeng.2017.03.011

Patwardhan, R. S., Lakshminarayanan, S., and Shah, S. L. (1998). Constrained Nonlinear MPC Using Hammerstein and Wiener Models: PLS Framework. AIChE J. 44, 1611–1622. doi:10.1002/aic.690440713

Qin, S. J., and Badgwell, T. A. (2003). A Survey of Industrial Model Predictive Control Technology. Control. Eng. Pract. 11, 733–764. doi:10.1016/s0967-0661(02)00186-7

Rossiter, J. (2003). Model-based Predictive Control: A Practical Approach. Boca Raton, Florida: CRC Press.

Thornhill, N. F., Patwardhan, S. C., and Shah, S. L. (2008). A Continuous Stirred Tank Heater Simulation Model with Applications. J. Process Control. 18, 347–360. doi:10.1016/j.jprocont.2007.07.006

Wu, X., Shen, J., Li, Y., and Lee, K. Y. (2013). Data-Driven Modeling and Predictive Control for Boiler-Turbine Unit. IEEE Trans. Energ. Convers. 28, 470–481. doi:10.1109/tec.2013.2260341

Yi, L. (2016). “System Modeling and Identification of Unmanned Quad Rotor Air Vehicle Based on Subspace and PEM,” in IEEE Systems and Technologies for Remote Sensing Applications through Unmanned Aerial Systems (STRATUS). doi:10.1109/stratus.2016.7811137

Yin, S., and Xiao, B. (2017). Tracking Control of Surface Ships with Disturbance and Uncertainties Rejection Capability. IEEE/ASME Trans. Mechatron. 22, 1154–1162. doi:10.1109/tmech.2016.2618901

Yin, S., Yu, H., Shahnazi, R., and Haghani, A. (2017). Fuzzy Adaptive Tracking Control of Constrained Nonlinear Switched Stochastic Pure-Feedback Systems. IEEE Trans. Cybern. 47, 579–588. doi:10.1109/tcyb.2016.2521179

Yu, H., Xie, T., Paszczynski, S., and Wilamowski, B. M. (2011). Advantages of Radial Basis Function Networks for Dynamic System Design. IEEE Trans. Ind. Electron. 58, 5438–5450. doi:10.1109/tie.2011.2164773

Yuzgec, U., Becerikli, Y., and Turker, M. (2008). Dynamic Neural-Network-Based Model-Predictive Control of an Industrial Baker's Yeast Drying Process. IEEE Trans. Neural Netw. 19, 1231–1242. doi:10.1109/tnn.2008.2000205

Zhang, Y., Xu, D., and Huang, L. (2018). Generalized Multiple-Vector-Based Model Predictive Control for PMSM Drives. IEEE Trans. Ind. Electron. 65, 9356–9366. doi:10.1109/tie.2018.2813994

Keywords: data driven, broad learning, tracking control, model predictive control, continuous stirred tank heater

Citation: Tao M, Gao T, Li X and Li K (2021) Broad Learning Aided Model Predictive Control With Application to Continuous Stirred Tank Heater. Front. Control. Eng. 2:788492. doi: 10.3389/fcteg.2021.788492

Received: 02 October 2021; Accepted: 20 October 2021;

Published: 30 November 2021.

Edited by:

Zehui Mao, Nanjing University of Aeronautics and Astronautics, China

Reviewed by:

Guang Wang, North China Electric Power University, China

Yunsong Xu, National University of Defense Technology, China

Copyright © 2021 Tao, Gao, Li and Li. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Xianling Li
