- 1 Kresge Library, Oakland University, Rochester, MI, United States

- 2 Fine and Performing Arts Library, University of New Mexico, Albuquerque, NM, United States

Introduction: This article explores two QAnon subgroups that were not active during the initial phase of the movement but now epitomize how QAnon has capitalized on social media to reach more people. We examine these smaller communities through the lens of information literacy and other literacies to identify opportunities for librarians and educators.

Results: The communities of conspiracy theorists explored here exhibit information behaviors distinct from the initial QAnon community, presenting opportunities for information professionals to employ new models of information literacy, metaliteracy, and other literacies to combat conspiracy ideation. Notable themes evidenced in both samples include an increasing religiosity affiliated with QAnon, affective states that promote conspiracy ideation, faulty hermeneutics and epistemologies, and specific literacy gaps.

Methods and discussion: We must update our understanding of QAnon and its adherents' shifting priorities and behaviors. Through investigating these smaller subgroups, researchers and educators can address the evolution of the QAnon movement by teaching to literacy gaps and logical fallacies, and acknowledging the troubling emotions that undergird broader belief systems.

1 Introduction

Librarian educators have spent the past two decades educating learners in information literacy; however, the current methods and frameworks falter when confronting the epistemologies that have given rise to QAnon and other conspiracy theories in American culture. Educators and librarians often feel overwhelmed by individuals or groups acting on their conspiracy interests, including the rise in book bannings and similar challenges nationwide (Beene and Greer, 2023; PEN America, 2023). These conspiracies threaten democratic norms, as illustrated by the January 6, 2021, breach of the U.S. Capitol building (McKay and Tenove, 2021; Fisher, 2022). Thus, to counteract conspiracy theories, information professionals and educators must understand the current challenges facing internet users who may be drawn into them. As the superconspiracy QAnon has shifted to absorb other conspiracy theories and proliferated via social media, the movement has fractured into smaller groups, often led by influencers who have their own agendas and interests.

To better understand these smaller social media communities and the opportunities and challenges they present for librarians and educators, the authors explored two discrete examples: one influencer on Facebook with a small following of ~4,000 people, and another influencer on Telegram with a much larger following. Telegram has gained scholarly attention for its unique platform affordances in spreading conspiracy theories (Garry et al., 2021; Walther and McCoy, 2021; Peeters and Willaert, 2022), while Facebook has been explored as a social media platform ideally situated to wield outsized influence on the spread of hoaxes, conspiracy theories, misinformation and disinformation (Yasmin and Spencer, 2020; Innes and Innes, 2021; Oremus and Merrill, 2021; Marko, 2022; Mont'Alverne et al., 2023). Following these scholars, we sought to explore the evolution of the QAnon movement. Our previous research enabled us to more readily identify original QAnon themes (Beene and Greer, 2021, 2023) and trace emerging trends. In addition, we were interested in observing the information behaviors, affective states or emotional content, and cognitive patterns evident in these newer communities. As information professionals, we were especially interested in notable literacy gaps (e.g., information, media, digital, visual, and metaliteracy) and the lessons librarians and educators could glean.

In order to expand our praxis, information professionals must reckon with networked conspiracy theories, as well as with the role of emerging cognitive and parasocial authorities in creating and disseminating information. Investigating these communities will allow librarians and educators to account holistically for online information's cognitive, affective, and social dimensions. While research has been conducted on the original QAnon movement and its challenges for information professionals (Beene and Greer, 2021, 2023), this article provides insight into the evolving concerns and interests of splinter QAnon communities.

In addition to some original themes from the QAnon movement, we observed religiosity, white supremacy, and alternative health and wellness philosophies integrated with QAnon. We also noted a myriad of faulty epistemologies and hermeneutics that leveraged strong emotions to bypass critical literacies. This research fills a gap in examining information practices and discourse within the Q subcommunities. The themes that surfaced have implications for future pedagogical interventions or inoculations against conspiracism.

2 Literature review

2.1 The QAnon superconspiracy

In an earlier article (Beene and Greer, 2021), we examined the rise of QAnon and its implications for information professionals. In its most basic premise, QAnon posits that a global cabal of Satanic pedophiles traffics children worldwide. In the QAnon mythology, Donald Trump plays a pivotal role, working behind the scenes to fight and expose the cabal in an event known to followers as “the Storm” or the “Great Awakening.” The initial QAnon movement was constructed through members of anonymous image posting boards known as “chans,” where an anonymous user named “Q” would post daily cryptic messages, which were then scrupulously analyzed by followers. Soon after its inception, the movement jumped over to social media platforms like YouTube, where influencers interpreted Q drops, or posts, for the masses. Over the last seven years, QAnon has evolved from a single conspiracy theory into a “superconspiracy,” which Barkun (2003) defined as complex, evolving, and amorphous, “reflect[ing] conspiratorial constructs in which multiple conspiracies are believed to be linked together” and are “joined in complex ways so that conspiracies come to be nested within one another” (Barkun, 2003, p. 6). These nested conspiracies became the focus of online and social media communities.

This superconspiracy has infected popular social media, our political landscape, and our relationships. Exploring how, exactly, this happened is nearly impossible; in his analysis of how one falls down the rabbit hole, Pierre (2023) noted that, due to the amorphous and complex nature of the conspiracy worldview, “the process of becoming a QAnon follower can be understood as an expression of individual psychological vulnerability to conspiracy belief, cult affiliation, and ARG (alternate-reality game) engagement” (p. 21). To mitigate this conspiratorial mindset, researchers and educators must address “myriad subcategories of (the QAnon) identity and pathways of individuation” (p. 28). The multiple pathways to QAnon result in a spectrum of conspiracy ideation, as delineated by Pierre; there can be those who are “fence-sitters,” or more open to the ideas and questions promulgated by the Q community, “true believers” who have accepted the tenets and identify as committed followers, or “activists” who act as evangelists for the movement. Importantly, Pierre concludes his typology with “apostates” who have worked their way through the spectrum and come out on the other side, renouncing their belief in and affiliation with the community (p. 25–27).

2.2 Information behaviors and discourses in the QAnon community

Several scholars have analyzed discourses within the QAnon community, with most focusing on the nascent QAnon communities that evolved on the 4chan, 8chan, and 8kun message boards. For example, Packer and Stoneman (2021) noted QAnon's unique discursive affordances that “rely heavily on a communicative strategy of encoding and decoding that bears a strong resemblance to an esoteric hermeneutic, but one played out across social media” (p. 256). Q drops, or messages, required active participation from followers, which set up “an opportunity for social media celebrity and monetization” (Packer and Stoneman, 2021, p. 263).

Chandler's 2020 thematic analysis of original Q drops and its three significant subcategories has been used to frame our analyses: “(1) anti-elitism and discrediting the establishment; (2) claims of group marginalization and oppression, and (3) QAnon's role in unifying ‘patriotic’ Americans, through covert and, ultimately, overt actions” (Chandler, 2020, p. 20). We also utilized Hodges' 2023 taxonomy of the QAnon worldview, which reflects “particular (if problematic) forms of engagement with authentic sources rather than the result of wholesale fabrication” (p. 129). His taxonomy of users' information practices included “close reading, exaggeration, accusation of ignorance, and deployment of esoteric yet unclear arguments” (p. 130). Hodges cautions against casually dismissing or deriding conspiracy theorists, concluding that

Understanding the relationship between QAnon and consensus reality more deeply and with greater nuance can assist in enabling more effective interactions and, potentially, even interventions between participants and third parties such as information, library, and media workers (2023, p. 140).

Hodges' conclusions turn on the nuance of interpretation as the driving force in the QAnon movement. Indeed, based upon the nascent QAnon community, some have suggested that the movement functions as a participatory game (Berkowitz, 2021; Fitzgerald, 2022; Gatehouse, 2022) and that QAnon adherents demonstrate relatively sophisticated information literacy behaviors (Hartman-Caverly, 2019). We seek to update these earlier assessments to account for QAnon's current form on the social web.

2.3 Conspiracy adherents' epistemologies and hermeneutics

This debate between hermeneutics and epistemology is not new and has been explored by scholars in psychology, sociology, and philosophy, among other fields. For example, Ellis (2015) explored moral hermeneutics, coherence epistemology, and affect, while Westphal (2017) discussed the collapse of epistemologies into a hermeneutical framework. Both scholars complicated the notion that epistemology is opposed to hermeneutics: that is, the way we construct knowledge is inherently tied up with how we interpret events – and both of these are inextricable from affective states. Building on this debate, Dentith (2018) and Butters (2022) examined these concepts in relation to conspiracy ideation. While Dentith examined the types of experts and other cognitive authorities deployed in conspiracy theory arguments, Butters interrogated how individuals, mainstream news media, and politicians amplified particular worldviews and ideologies. Both scholars found that QAnon followers use cherry-picked and misinterpreted evidence to prop up their worldviews. Thus, it could be assumed that helping users better understand the underlying modes of information production and discourse could assist in dismantling conspiracy interpretation.

QAnon is only one of a spectrum of conspiratorial worldviews, and recent research provides a greater understanding of conspiracy ideation in general. Conspiracy theorists, for example, find it easier to believe in additional conspiracies when they have already subscribed to one (Brotherton and French, 2015; Brashier, 2022). Additionally, those who fall prey to conspiracy theories are often motivated by reasoning and biases that lead them to search for information confirming their preconceptions, beliefs, and values; thus, conspiracy theories may seem more trustworthy because they confirm a particular worldview (Brotherton and French, 2015). As adherents fall deeper into the rabbit hole, they use motivated reasoning and cherry-picking of evidence, accepting only information that confirms a pre-existing philosophy and over-correlating innocuous and disparate events to confirm their beliefs (Sullivan et al., 2010). Finding like-minded users online essentially creates an echo chamber – a term that describes how users within an online community echo, confirm, and reiterate each other's beliefs and opinions. Douglas et al. (2019) argue that conspiracy beliefs are motivated by epistemic, existential, and social needs and not an innate tendency to simple credulity.

Moreover, there is evidence that the concept of authority operates differently on social media platforms, including within QAnon subgroups. Robertson and Amarasingam (2022) discovered that QAnon adherents often rely upon experiential knowledge rather than expertise acquired through research (2022, p. 2013). Their mixed-methods analysis of Telegram examined epistemic models of authority in right-leaning conspiratorial narratives, discovering heavy reliance on institutions such as the U.S. and its Constitution (p. 198–201). These narratives center on epistemic modes like scientific reasoning because they are “most prevalent in society and ones they need to most vociferously argue against and dismantle” (p. 198). Most importantly, they found that reiterations of QAnon's original mantras, such as “The Storm,” “The Great Awakening,” and “Trust the Plan,” proliferate in arguments, commenting that the ambiguity of these terms allows for broad interpretations, including theological and millennialist ones (p. 200). They concluded that evidence and authority predominate in these communities but only to prop up faulty epistemologies and hermeneutics.

These findings correlate with scholars' investigations into how social media users assess online information, where authority is conferred through parasocial and cognitive authorities (Rieh, 2010; Badke, 2015; Liebers and Holger, 2019), and second-hand knowledge (Wilson, 1983). When we do not possess enough knowledge on a topic, we decide whether or not to trust someone or something based on their perceived competence and expertise. For example, most of us have not empirically studied gravity, so we trust the expertise of those who have (Russo et al., 2019). Rather than characterizing our Information Age as “Post-Truth,” then, we might instead think of it as a plethora of competing truth claims. Because we cannot be experts on each claim, we trust others to connect the dots for us, increasingly in online spaces. The notion of authority is thus dynamic, shifting over time and space. It has shifted from the source itself to individual belief systems.

2.4 Information literacy and metaliteracy in an evolving information landscape

Academic librarians utilize as their primary guiding document the Framework for Information Literacy for Higher Education, codified after years of revising the concept of information literacy (Association of College and Research Libraries, 2016). The document posits an expanded understanding of information literacy as a “set of integrated abilities encompassing the reflective discovery of information,” with attention to how information is produced and disseminated through social, economic, and ethical lenses. The information landscape has evolved tremendously in the past decade, however. The way we think of and teach information literacy has shifted to encompass the new ways in which facts, truth, and trust are challenged by a heightened level of relativism, in which information is assessed using highly personal heuristics (Swanson, 2023).

Compounding the challenges accompanying the evaluation and use of new types of information, librarians have observed a surge in conspiracy theories. In a 2021 nationwide survey, we discovered that American library workers encountered conspiracy ideation in academic, public, special, and K-12 libraries (Beene and Greer, 2023). Importantly, affective states were evident in both patrons and library workers, often compounded by the political and social turmoil of another pandemic year just months after the January 6, 2021, Capitol insurrection. Even though the existing literacy frameworks account for some affective components, we are among a growing body of researchers seeking to expand them to include a more holistic accounting of affective states and how they relate to information assessment, especially that which proliferates online.

Metaliteracy, as a separate framework from those authored by academic librarians, could serve as a valuable antidote to misinformation on the social web. Metaliteracy is a unified understanding of discrete literacies (e.g., information, visual, digital, media) and employs metacognitive reflective practices for information evaluation in collaborative online communities (Mackey and Jacobson, 2011). It includes explicit connections to the cognitive, behavioral, metacognitive, and affective domains of learning. As a framework, it addresses some of the shortcomings in discrete literacy models, seeking to form “an empowered and self-directed learner who actively creates meaningful content and effectively participates in a connected world” (Mackey and Jacobson, 2018, p. 1). This socially constructed online information ecosystem encourages users to actively create and produce knowledge.

In many ways, metaliteracy complements the work of other information literacy theorists who conceive of it as a socio-cultural practice (Lloyd, 2010). Lloyd reframes information literacy as a messy, social, participatory, and affective process through which meaning is made and shared (2010, p. 14–24). Her conception of an “information landscape,” which she defines as “the communicative space through which people develop identities and form relationships based on shared practices and ways of doing and saying things” (p. 9), is especially salient to this paper, providing a useful descriptor for how information and meaning are enacted and interacted within the social web.

Likewise, Swanson (2023) argues that the cognitive and affective dimensions inform and build upon each other. Indeed, the mind-gut connection is genuine regarding feelings of knowing. The profession needs an “affective turn,” as has taken place in other disciplines (Zembylas, 2021), which would provide a better understanding of how the mind parcels content, sifts it for essential bits, and connects information to feelings, emotions, and other forces that work upon an individual's experience of the world. This understanding would better prepare information professionals to teach library patrons and students to slow down and evaluate their feelings and thinking before assessing or using a source.

3 Materials and methods

The authors used opportunistic sampling to observe two current QAnon-focused social media communities. We settled on Pastor Chris and IET after following various conspiracy theory influencer trails. Pastor Chris provided engagement, a mid-size number of followers, and a salient example of the ongoing intersections of evangelicalism and QAnon via Facebook, which is one of the first mainstream platforms on which Q followers congregated after the chan boards (Kaplan, 2023). Although Facebook banned overt QAnon groups in 2019, QAnon still has a very active presence there through communities like Pastor Chris'. We selected 112 of Pastor Chris' posts from the beginning of their account in May 2021, with additional posts selected from late spring of 2022 and 2023 to illustrate any continuity of themes through time. In addition, ad hoc posts that offered illustrative material for specific literacy gaps or identified themes were chosen.

IET provided a more sizable following with his public Telegram account, whose posts regularly reached over 50,000 views in 2022 and demonstrated regular interactions with his followers. The use of Telegram was important; the online social movement of QAnon migrated from platforms like Facebook to alternative social media platforms such as Telegram, Parler, and Truth Social once QAnon was banned on the mainstream platforms (Mac and Lytvynenko, 2020). He has received more attention than Pastor Chris, as he has been profiled by Vice magazine and the Anti-Defamation League; however, he is still mostly unknown to the mainstream public (Gilbert, 2021b; Anti-Defamation League, n.d.). As a Christian wellness influencer and QAnon adherent, he provided a snapshot of the coalescence of communities, including health and wellness and anti-vaccination communities, QAnon and MAGA, and evangelical Christianity. Telegram does not allow screen capture or scraping and requires that one sign up via their phone number; because of these limitations, posts were transcribed verbatim into a spreadsheet for analysis. IET began posting on Telegram in 2021 but gathered momentum with more views and followers in 2022. For this study, we selected posts from August 1–5 in 2021 and 2022. Sampling for 2023 proved more challenging because he decreased his activity on Telegram, with no posts for August 2023; therefore, 5 days in late April 2023 were selected for comparison to his earlier activity. Posts were coded deductively using the taxonomies described below and inductively for QAnon behaviors and themes, affiliated conspiracy theories, affective behaviors, and any literacy gaps.

Each of us took responsibility for one sample group and coded the posts individually, although both worked from the same initial codebook. Both deductive and inductive coding were used. Deductive coding was derived from the Chandler (2020) and Hodges (2023) QAnon taxonomies to determine if themes from the early movement were still applicable within these smaller splinter groups. Inductive coding explored themes that emerged in each sample:

• QAnon as a superconspiracy: What other conspiracy theories are being mentioned?

• Affective states: over-confidence, confirmation bias, encouragement, and emotions.

• Literacy gaps: How are news items or other content being interpreted (or misinterpreted)?

• Evangelicalism, exegesis, and Andre's (2023) five rhetorical strategies

Along with coding, discourse analysis was used to explore communication within the communities. Crossley (2021) explained that discourse “looks at the overall meanings conveyed by language in context,” with context indicating the “social, cultural, political, and historical background (which is) important to take into account to understand underlying meanings expressed through language” (Crossley, 2021, “What is discourse analysis?”). The numbers of reactions to and views of each post were thus recorded, in addition to whether and how emojis were used in those reactions. The authors also recorded whether the influencers or users shared stories from mainstream or dubious news media outlets or other social media platforms, in addition to noting any cross-posting between groups, individuals, or other influencers. This practice helped the authors understand the discursive ecosystem of conspiracy theories on the social web.

3.1 The pilled pastor

Self-proclaimed Pastor Chris started a small evangelical church in the southeastern United States in 2009. In their early 60s, they are most active through a public, personal Facebook profile directly linked to a church's website. They currently have around 4,000 followers. A Facebook profile was chosen because Facebook, until the advent of right-wing focused social media such as Truth Social and Telegram, served as the main conduit for the early organization of QAnon followers (Argentino, 2020b). Facebook has also served as one of the primary news sources for Americans, with roughly half of Americans getting their news from social media (Pew Research Center, 2020). Numerous QAnon influencers have sprung up in this space, developing communities of highly engaged followers and connecting with other influencers.

Like many conspiracy theorists active on Facebook, Pastor Chris initially maintained two accounts in case one was put on a temporary hold due to content violations; this is a common practice to avoid disengagement during what are termed “Facebook jail” periods. Only one account remains active, and it has been the sole active account since 2021. They also have accounts on other platforms, but those are not as active and do not have as many followers.

The discourse of Pastor Chris' community illustrates how a charismatic leader functions in a new religious movement. Although QAnon and Trump are seen as the ultimate authorities, Pastor Chris' posts exhort, encourage, and evoke the affective states of followers. A common refrain is that the benefits of events like the jubilee or the Storm will be greatest for followers who believe. This caveat shifts responsibility away from the influencer when things do not happen as promised. Many posts include long response threads consisting entirely of “hands praying” emojis, “I believe,” or the comment “Amen” in response to the pastor's exhortations about how God's plan (enacted by Trump and QAnon) is coming together. Skeptical posts are often met with statements that negate the skepticism because the pastor's belief that something is true overrules any fact-checking.

What is striking about Pastor Chris' Facebook activity is the repetition. The same themes, including that the long-awaited events of the Storm “could happen soon,” repeat throughout the years. New dates are added to the timeline and new secret communications are revealed with each repetition. Those who start to doubt are excoriated for questioning, despite the QAnon mantra to “Question Everything.” Pastor Chris once commented, “If you decide you want to walk away and quit, do not comment it on [sic] this post, they will be deleted! Go find a post that's negative doom and gloom and stick around for that [sic] but you are not gonna do it on my post!”

Unlike the original QAnon community, Pastor Chris does not perform close reading; instead, they share content from others doing the work. The influencer is a secondary source, a node on a vast misinformation network that proselytizes the gospel of Q farther and farther from its locus. They instead contribute personal prophecies and affirmations of belief to spur others on. Posts that describe sensing a “major shift” or dream visions that indicate the conspiracy is coming to light are frequent themes throughout the months and years.

3.2 The chiropractor conspiracy theorist

IET, a chiropractor and influencer in the health and wellness industry, is affiliated with the pseudoscience and CrossFit communities as well as QAnon. IET was “outed” by journalist David Gilbert of Vice magazine (Gilbert, 2021a,b), who investigated other major QAnon influencers and their online presence (Gilbert, 2023). The story was quickly picked up by other outlets (Flomberg, 2021; LightWarrior, 2021), including Reddit communities (Cuicksilver, 2021), various activist sites (Unknown, 2021b; Garber, 2022), and the Anti-Defamation League (Anti-Defamation League, n.d.). This spread across alternative and mainstream networks demonstrates the speed and power of the social web, features that can be exploited for legitimate or illegitimate purposes. IET is a good example of influencer culture and its role in spreading faulty (and harmful) information across platforms.

IET's use of Telegram illustrates the role that more niche social media spaces are playing in American information consumption. This fact correlates with findings from a 2022 Pew Research Center survey on the role alternative social media plays in Americans' news and information environment; the survey found that a very small percentage (only 6%) of Americans regularly get their news from such sites, but of that 6%, 65% feel they have found their community, which is often more pro-Trump, pro-America, and religious (Stocking et al., 2022). IET's rhetoric certainly aligns with a pro-Trump and religious worldview, albeit a conspiratorial one. These social media platforms have earned a reputation for their role in organizing followers for extremist events (Garry et al., 2021; Walther and McCoy, 2021; Myers and Frenkel, 2022). Users like IET exploit the secretive nature of Telegram, sometimes opting to use the disappearing posts feature, whereby posts vanish after an allotted period of time. In addition, Telegram challenges hackers to try to crack it, with no one succeeding yet. IET posts his own content in addition to other creators' content. In particular, he favors sharing TikTok videos, which the Pew Research Center has recently shown to be a dominant information practice for about a third of Americans over 30 years old (Matsa, 2023). As with Telegram, Gab, and Parler, scholars have also studied the rapid proliferation of misinformation via TikTok (Michel et al., 2022). This practice serves as a meditation on the power of multimodal information in spreading conspiracist ideologies. While IET shuns “fakebook,” he does have a public account on X (formerly Twitter), where he has been especially active since Elon Musk acquired the platform and reinstated banned accounts (The Associated Press, 2022; Thorbecke and Catherine, 2022). In this way, he is complementary to Facebook influencers like Pastor Chris.

IET attracts those users already consuming alternative news and social media sources; in many cases, his readers follow the same demagogues. Indeed, his followers are enthusiastically engaged, and he regularly encourages interaction. The discourse within this Telegram community demonstrates the construction of knowledge between and among followers and their influencers, a practice found in other online communities that capitalize on users' affective states (Ziegler et al., 2014).

IET often posts as a wellness influencer, providing a gateway for followers to learn about Christianity, QAnon, and other worldviews. For example, IET was interviewed on Rumble, where he discussed alternative health solutions, including parasite cleanses, Ivermectin, and venoms, with another influencer who posts under the headline “The Kate Awakening” (a play on QAnon's mantra “The Great Awakening”) (The Kate Awakening - Parasite Cleanse Part 2 with IET and SSGQ, 2023).

Like Pastor Chris, IET began using Telegram in 2021 to delve into exegetical readings of the Bible to interpret current and future events, often linked to QAnon and white supremacist themes. This practice continued to a lesser extent into 2023. He seemed to grow his following through a bait-and-switch, where he acted as a layman's pastor, interpreting Biblical books for his followers. In almost every post, he implores his followers to pay attention to the groundwork he is laying for them and to abide by his interpretations. The use of capitalization, not unlike former president Trump's tweets, serves to highlight his main points; strategic use of emojis appears in later posts. These close biblical readings are commonly linked to white supremacist themes, such as when he exhorts his readers to “keep bloodlines pure.” As he posts, he becomes emboldened and begins to refer to Kekistan, Pepe, and anti-Semitic tropes. Through using these memes (Kek and Pepe), he signals his “insider knowledge” to his followers, as these memes originated on the chan boards and have been used to mobilize extremist movements globally (Niewert, 2017; McFall-Johnsen and Morgan, 2021; Topor, 2022; Pepe the Frog, 2023). For his influence in this area, he earned a listing in the Anti-Defamation League's glossary and other activist sites working to curb fascist ideologies (Unknown, 2021a).

After being a featured speaker at the QAnon conference, “For God And Country Patriots' Roundup,” in Dallas, Texas (Unknown, 2021a), IET skyrocketed in popularity. His posting about QAnon increased, especially in 2022, which coincides with the QAnon community's celebration of Q's return (Kaplan, 2022). As evidenced by interactions with and views of his Telegram posts, many of which garnered up to 50,000 views and hundreds of reactions and comments, he began to use more explicit conspiracist rhetoric, pertaining not only to QAnon but also to myriad political conspiracy theories, anti-vaccination themes, and COVID-19. He used his newfound popularity to proselytize and peddle various political conspiracy theories that have become associated with QAnon, including those surrounding “groomers” and saving the children, conspiracies involving the Bidens, Obama, and Trump, and those proliferating about the Ukraine/Russia conflict.

4 Findings

In exploring these two communities, numerous themes became apparent. Not only were the QAnon themes derived from the original community still overarching in the discourse, but additional concerns and information practices have developed. Partly due to the affordances of the particular social media platforms we chose, and partly due to the removal in time and digital space from the original chan boards, these themes highlight potential new areas for research and intervention.

4.1 Original QAnon themes

Some themes still predominated from original QAnon communities – for example, conspiracy theories surrounding the Illuminati and the political elite, the global cabal's exploitation of children, and Trump's role in fighting them. The authors observed original QAnon motifs such as “white hats” vs. “black hats” and the QAnon theme of prominent people being fake doubles (because the real person is locked up already or has been executed).

The taxonomies observed by Chandler (2020) and Hodges (2023) have been watered down in these spaces. A thread of anti-elitism and anti-establishmentarianism runs through many of Pastor Chris' and IET's posts, but the theme of “QAnon unif[ying] ‘patriotic' Americans through action” has been supplanted in favor of passive watching and waiting. Similarly, although Hodges' categories of “exaggerated interpretation of correlation” and “esoteric but unclear meaning” occur in some posts, the close reading of the early QAnon communities is largely done by others and passed along by Pastor Chris and IET.

4.1.1 QAnon and religion

The QAnon movement has always had Christian overtones; as early as 2020, several who had been keeping an eye on the movement began describing it as a religion (LaFrance, 2020). Amarasingam et al. (2023) analyzed the QAnon movement in terms of the literature defining new, emerging religious movements, and “hyperreal religions,” movements that borrow heavily from popular culture to shape meaning and belief. They concluded that there is significant overlap between these movements and QAnon, and that viewing QAnon through these lenses allows for deeper insights into the movement. Andre (2023) noted with concern that “QAnon is reshaping the topography of many churches” (p. 191), analyzing five overarching rhetorical strategies used by Q in their posts. According to Andre, these rhetorical strategies align with Christianity: civil religion, spiritual language, spiritual warfare, explicitly Christian content, and Christian nationalism (pp. 194–200).

Journalist Jeff Sharlet delved deeper into the conflation of Trump, prosperity gospel teachings, and QAnon (2023). He attended multiple Trump rallies and spoke with attendees, some of whom were ordained pastors and ministers. Because of his interactions with devoted Trump followers, many of them professed Christians, Sharlet began to investigate the overlaps between the so-called “prosperity gospel” and the Trump campaign:

The Christian Right that has so long dominated the political theology of the United States emphasizes a heavenly reward for righteousness in faith and behavior; the prosperity gospel is about… “amazing results” you can measure and count. The old political theology was about the salvation to come; the Trump religion was about deliverance, here and now (Sharlet, 2023, pp. 49–50).

For example, an attendee named Pastor Sean is quoted as saying, “‘Secret murders everywhere,' … ‘Pedophiles, and evil'”; in his conversations with Sharlet, he professed belief that “God had chosen Trump” (Sharlet, 2023, p. 116). Another attendee, Pastor Dave, told Sharlet that he had felt a “spiritual drawing” to devote his life to Trump (Sharlet, 2023, p. 117). Indeed, at the beginning of every Trump rally, a pastor would lead the crowd in prayer (Sharlet, 2023; Hassan and Johnson, 2024).

Lundsko and MacMillen (2023) explored the notion of QAnon as a religion or “replacement reality” extensively. Their assertion that “Psuedo-religion-seeking-certainty fuels conspiratorial thinking and is imbued in a certain type of propagandistic rendering of religion” (p. 59) reflects the rhetoric of Pastor Chris' and IET's communities. Pastor Chris, for example, exhorts followers to “innerstand,” a play on “understand” that emphasizes one's internal and affective epistemological constructs. Their posts frequently reference spiritual warfare and Christian nationalism, echoing the rhetorical strategies of Q. The battle between good and evil is Biblical; God is an American. Posts shared by Pastor Chris from cognitive authorities such as “Prophet Jeff Johnson” state things such as “The season of dancing in the streets is upon us as the TRUMP [sic] is sounding and the time of Presidential occupation is here.” Here, the Biblical trumpet of Revelation has been conflated with former president Trump, who, in other posts and comments, has been declared “a decedent [sic] of King David's line of Judah.” Frequently posted in these communities are generative-AI-produced images of Trump with Jesus, including iconography that communicates his divine appointment.

In this evolving iteration of QAnon, interpretive practices honed in Bible study are interwoven with a conspiracist lens. Most of IET's 2021 Telegram activity was devoted to an asynchronous, online Bible study, in which he would transcribe Biblical verses, interpreting them for his followers. After one such post, he bracketed his Bible reading with:

The same evil tactics then are the same evil tactics now - their playbook hasn't changed in millennia. These satanic POS's are so evil and disgusting!! Be grateful that God woke you up for such a HISTORIC time as this…Pray for those still asleep… It's going to be rough for them as all comes out.

While evangelizing, he alludes to QAnon by referring to Satanic actors, linking them inextricably with Biblical truth. In addition, his rhetoric around being “awakened” refers to being “red-pilled” (a reference to the movie The Matrix, in which choosing the red pill awakens characters to the reality of their world) as well as “The Great Awakening,” when undeniable truth will “be rough” on those “still asleep.” In this way, he exemplifies Argentino's (2020a) finding that “QAnon conspiracy theories are reinterpreted through the Bible. In turn, QAnon conspiracy theories serve as a lens to interpret the Bible itself” (para. 4).

Both Pastor Chris and IET function in these spaces as QAnon evangelists; they spread the word of QAnon authorities, provide their own literary glosses on the revelations of the day, and function as what Pierre (2023) termed “Activists” on his typology of conspiracy belief: they have adopted the conspiracy worldview in its entirety and “have decided that they must act on beliefs in order to defend them or to fight for the cause” (p. 26).

4.1.2 QAnon and white supremacy

Although the overlap between right-wing politics, conspiracism, and white supremacy may seem new, it is as old as the founding of America (Barkun, 2003; Walker, 2013; Uscinski and Parent, 2014; Kendi, 2016; Cooke, 2021; Hirschbein and Asfari, 2023). What is new is how the superconspiracy QAnon subsumes and manifests these long-standing conspiracy theories serving to justify white power structures and systemic oppression. Sharlet (2023) examined the emerging overlap between Trump followers and conspiracy theorists, including those who adhere to the “great replacement theory,” driven by white anxiety and consisting of an ideology of scarcity, as BIPOC peoples and immigrants outnumber whites. For example, in his chapter “Whole Bottle of Red Pills,” Sharlet visited with men's rights activists; he observed how their ideology dovetails with racism, Trumpism, and QAnon (Sharlet, 2023). The people he interviewed espoused components of the “great replacement theory,” expanding racist claims to include men being ruled by feminism and women. Their rhetoric is palpably violent; such conspiracy ideation is linked to extremist violence, xenophobia, misogynoir, and neo-Nazism (Rousis et al., 2020; Bailey, 2021; Gonzalez, 2022; Lovelace, 2022; Topor, 2022; Hernandez Aguilar, 2023). Indeed, the overlap between QAnon conspiracism and fascism cannot be ignored; for instance, Conner ties QAnon to “fascist movements dating back to the Nazis,” which “have a long history of co-opting popular cultural movements in order to gain membership, draw widespread attention, and to normalize talking about ideas couched in racist ideology (i.e., questions and debates over who really controls society are little more than anti-Semitic dog whistling)” (Conner, 2023, para. 6).

Although Pastor Chris' and IET's posts are not overtly white supremacist or anti-Semitic, dog whistles about “bankers,” “the Rothschilds,” and Holocaust denialism all point to a worldview that tolerates, if not actively encourages, darker suppositions. For example, in a post from May 4, 2021, since deleted, Pastor Chris shared a post from “Mr. Patriot” referencing the “hiding of God” through denial of the Flat Earth and commented, “Wow what I am learning about God Creator, earth, holocaust [sic].”

In an assessment of how the Australian manifestation of QAnon coalesced with nationalism and conspiracy theories targeting marginalized communities, Jones (2023) asserted that QAnon promoted violent activism and support for right-wing politicians to bring about “The Storm” (a moral and legal reckoning) and “The Great Awakening” (the revelation of an unavoidable reality to the masses). Similarly, scholars who research how white supremacy has shifted through time have traced how the proliferation of “The Big Lie” is linked to past propaganda such as Mein Kampf, with devastating consequences (Neely and Montañez, 2022, p. 13, n. 6). Meanwhile, Indigenous scholar and activist Julian Brave NoiseCat has drawn parallels between QAnon rhetoric and white colonizers' historical and ongoing oppression of Indigenous peoples (Remski and Brave NoiseCat, 2023). Brave NoiseCat posited that the fear of ritualized child kidnapping and abuse in the QAnon worldview reflects the historical reality of Indigenous children being forcibly removed from their homes to be indoctrinated in colonial boarding schools. In yet another example, the sublimation of the term “groomer” in QAnon ideology is tied to the historical and contemporary threats to LGBTQIA+ affiliated communities (Mogul et al., 2011; Martiny and Lawrence, 2023). IET perpetuates such threats through his promotion of the “groomer” rhetoric and affiliated conspiracy theories, even challenging his followers to argue with him by posting an unattributed definition of “groomer” and stating, “If your official and legally documented actions match this description then being called a groomer isn't libel or slander: it's a fact.” Unsurprisingly, given QAnon's mantras and its use of terms like “The Great Awakening” and “The Storm,” it has attracted and absorbed adherents whose views align with white supremacy, extrajudicial violence, and policies that target marginalized communities.

In our research that surveyed library workers encountering conspiracy theorists over the fall of 2021, participants described potent examples of conspiracy theories, often tied to QAnon, targeting ethnic, religious, and racial categories of people (Beene and Greer, 2023). Most common in the data was conspiracism surrounding Holocaust denial or other anti-Semitic conspiracies, a finding tied to scholarly discussions of conspiracy theories targeting Jews (Barkun, 2003; Hirschbein and Asfari, 2023). There were also instances of patrons adhering to theories of conspiratorial plots supposedly perpetrated by the Chinese and Chinese-Americans, usually involving the COVID-19 pandemic. Participants described conspiracy ideation that targeted Black people; for instance, those conspiracy theories surrounding the murders of George Floyd and other unarmed Black men, the Black Lives Matter movement, and the NAACP. A subset of respondents noted conspiracy theories involving immigrants or Indigenous peoples linked rhetorically to the “great replacement theory.” These and affiliated conspiracies are now more enmeshed with QAnon and mainstream right-wing rhetoric (McFall-Johnsen and Morgan, 2021; Zihiri et al., 2022).

Of the two case studies, IET exemplifies the intermingling of anti-Semitism and white supremacy. For example, he references “Kek” while disseminating a conspiracy theory about former President Obama and his finances:

These people are evil.

They're also stupid.

We see everything

When you see it, post it in the comments

Also, what [sic] are they broke? Kek... Where did their money go?

But who, or what, is Kek? As Roose (2020) explains, Kek “is bandied about on social media… where alt-right activists and avid Donald Trump supporters lurk” and is “a ‘deity' of a semi-ironic ‘religion' the white nationalist movement has created for itself online” (para. 1-2). Originating in gaming culture and spreading to the chan boards, Kek came to symbolize chaos and darkness and is invoked when signaling to others online that one is “awakened” and that destroying the world order is necessary to bring about an enlightened race. Kekistan is thus the mythical country that asserts a satirical ethnicity, most often affiliated with white supremacy; indeed, the flag of Kekistan is based on the Nazi war flag (Pepe the Frog, 2023). When IET reiterates to his followers to “keep bloodlines pure,” or when he refers to “Kek” or “Kekistan” and employs Pepe memes, he is signaling his bona fides in an explicitly racist, destructionist ideology.

4.1.3 QAnon, wellness culture, and COVID-19

The overlap between yoga and fitness industries, alternative health philosophies, and conspiracy theories has been well-documented in both news media and scholarly literature. For example, Conner (2023) analyzed the overlap between QAnon and wellness, fitness, and yoga instructors, finding that a “‘soft[er]' version of QAnon helped draw in those…wanting to get more physically fit, those seeking alternative forms of therapy when modern medicine has failed them, and still for others a search for both a community of likeminded individuals and a spirituality that resonated with them” (para. 34). Monroe (2017) discussed how essential oils became a cure-all for our “Age of Anxiety,” while Asprem and Dyrendal (2015) chronicled the confluence between conspirituality and conspiracy ideation, arguing that this confluence can be traced “deep into the history of Western esotericism” (abstract). So, while this coalescence may seem new, it is more likely that QAnon infiltrated an area already ripe with conspiracism (CBS News, 2021).

Social media has provided the gateway for QAnon ideology to seep into otherwise domestic spaces on platforms such as Instagram. Influencers promoting yoga, CrossFit, and alternative health have interwoven posts about child trafficking, for example, or used a hashtag associated with QAnon (e.g., #greatawakening). Indeed, the idea of an “awakening” can be deployed for New Age spirituality just as readily as it can for QAnon or Christianity (Meltzer, 2021; Conner, 2023). A casual user of social media, dabbling in New Ageism, yoga, or alternative health may receive targeted advertising or see pretty Instagram posts promoting QAnon and other conspiracy theories, thereby drawing them in (Chang, 2021; Baker, 2022; Hermanová, 2022). Such infiltration has not gone unnoticed in fitness circles. For instance, CrossFit distanced itself from the avid practitioner and congressional representative Marjorie Taylor Greene, who is also a QAnon evangelist (Brooks and Jamieson, 2021). Meanwhile, yoga instructors began speaking out about QAnon and other conspiracy theories infiltrating their communities (Roose, 2020; CBS News, 2021).

While anti-vaccination sentiment is not new, QAnon influencers capitalized on the outbreak of the COVID-19 pandemic, with online misinformation spreading to offline decisions and actions. Pastor Chris has been particularly vituperative about the COVID-19 vaccine, linking it with other conspiracies related to Big Pharma. Commenting on their own post from May 3, 2021, they appear to have copied content from the “TheDonaldUS” Telegram account, entitled “10 Reasons why President Trump had to approve these deaths” (excerpted below), that served to position pandemic deaths and COVID-19 vaccines (“the shot”) as part of a much larger plan:

With the approval of the shots (but placing realistic information in the sights of alternative news), the deep state shot was to remain Experimental and NOT MANDATORY (which was NOT part of their plan). In war, you sometimes have to choose between two bad options.

Considering the strategy of the globalists, the DJT and the military have chosen the path with the fewest casualties, namely to continue the facade of public approval of the toxic (but NOT OBLIGATORY) shot.

The alternative FAR would have been more deadly to all of us in many ways. If the Deep State had created an approved BINDING shot, it would have killed millions more.

Is this virus fictitious? If it cannot be separated and there is no scientific trace, does it exist? Only in the minds of the masses!!!

These two small communities, spearheaded by IET and Pastor Chris, exemplify what much of the recent scholarly attention has focused on: how the QAnon worldview has unified suspicions against vaccinations or the capitalist inclinations of the pharmaceutical industry with a broader conspiracist mindset.

For IET, a certified chiropractor and CrossFit enthusiast, blending alternative health philosophies, New Ageism, Christianity, QAnon, and anti-vaccination rhetoric (especially as it pertains to the COVID-19 pandemic) seems to come easily. Whether this practice serves to promote his own businesses or beliefs or to share posts from other social media influencers and platforms, IET peddles conspiracist worldviews. Throughout his Telegram, one can find references to a global cabal pushing Big Pharma policies, valorization of resisting vaccinations, and promotion of alternative health solutions. He interacts with and encourages his followers through comments and posts, reinforcing the concept of a community fighting the mainstream. Threaded between various political and scientific conspiracy theories are posts directly linking followers to QAnon. For instance, earlier this year, he shared an interview he conducted in July 2019 on Conspyre.tv, dubiously titled “The 2nd American Revolution,” in which he outlines “WHY [he] supports Donald Trump and WHY [he] believes in the Q Operation,” encouraging followers to watch and comment. This post received over 42,000 views and 600 interactions and comments. In this way, he provides a gateway for those followers who may not have heard of QAnon, or those who harbor doubts, while reinforcing a particular conspiracist worldview. Indeed, the comments from this post praise his research, referring to him and other community members as “true Patriots.”

Public health scholarship has long devoted research to combatting vaccine hesitancy, including how misinformation and conspiracy theories can hinder public health outcomes (Larson, 2020; Sobo, 2021; Enders et al., 2022; Farhart et al., 2022; Seddig et al., 2022). A few sources have explicitly discussed the commingling of the QAnon and anti-vaccination movements, highlighting that the ideologies behind both movements emphasize fighting big institutions and mainstream experts by doing one's own research to inform personal decisions (Dickinson, 2021; Inform, 2023). Such anti-science belief can “act as an affective epistemological hinge for other forms of conspiratorial thought” (Fitzgerald, 2022, p. 8); once one accepts that a public health campaign is hiding something and working to subvert the truth, it is easier to then accept other scientific conspiracies (e.g., flat earth). Lewandowsky has devoted his research to this overlap, finding that conspiracist ideation and worldviews predict a broader rejection of science (Lewandowsky et al., 2013a).

4.1.4 Observed information practices, hermeneutics, and epistemologies

As creators on new platforms such as TikTok spin ever-more-fantastical suppositions, both IET and Pastor Chris share them with excitement, and their followers accept these new conspiracies as gospel. The phenomena of parasociality and cognitive authority function in these spaces to propel and reaffirm conspiracy beliefs. Logical fallacies abound, but specific examples stand out: the conjunction fallacy, the non sequitur fallacy, the divine fallacy, and false attribution. The conjunction fallacy assumes that two or more claims taken together are likelier than any one of them on its own, defying the laws of probability, while the non sequitur fallacy is an illogical conclusion based on preceding statements or tangents in conversations. The divine fallacy was apparent whenever followers assumed causality from divine intervention or supernatural force, either because they did not know how to explain the phenomenon or because they were driven by faith to assume so. False attributions or falsely exaggerated correlations were constructed from the close reading of signs and communications, leading followers to see collusion where there was none and results that did not follow a straight causal path.

The information practices observed in these communities reaffirm the religiosity of these spaces, most notably, complete credulity and deference to prophecy or authority. Indeed, scholars have noted how hard-right Christian groups expect their followers to accept everything, with no questions asked (e.g., the literal truth of the Bible) – this same ideology has been applied to the Trumpist MAGA movement (D'Angelo, 2024; Hassan and Johnson, 2024). In Pastor Chris' feed, the most outlandish assertions are accepted without hesitation, such as planes running on air, cars running on water, and MedBeds that will make us all young and perfect. Followers are bullied for any sign of skepticism; they must accept everything Pastor Chris posts or they are removed and blocked from participating in the community. In one post from May 2023, Pastor Chris summarizes “intel” received from “Bruce,” one of the cognitive authorities they often reference, about the coming Nesara/Gesara wealth transfers, a financial conspiracy theory that has been integrated into QAnon. After reporting on Bruce's call, Pastor Chris exhorts followers to continue praying and believing to enact the coming “Heavenly blessing… It might also be at the time of… those days of disclosure and lockdowns,” referring to the original QAnon Storm. In this and other posts, logical fallacies abound alongside a cognitive authority (Bruce) acting as a substitute for truth.

In addition to logical fallacies, these communities exemplify echo chambers of emotions and confirmation biases. A post from IET in April 2023 shared one of his tweets that coalesces COVID-19 conspiracy theories, political conspiracy theories, and QAnon. In this post, he exhibited an exaggerated correlation of actual and imagined events, drumming up fervor among his readers:

“Look at the list of current events now front and center habbening [sic]: 1. COVID jabs being removed from market 2. Hunter Biden investigation 3. Bio weapons [sic] in Ukraine 4. Biden financial dealings busted open 5. J6 fedsurrection [sic] 6. Fauci files 7. [clown emoji] IC agents being exposed. All at once.” Underneath this screenshot, he shared a meme of Pepe the Frog with glasses, reading a book entitled “1001 I Told You So's.”

This post was viewed 45,000 times, with over 750 reactions and 80 comments from followers. Comments ranged from disheartened resignation over the fact that “normies” are not “awake” and following the signs to those who added conspiracy theories to the list (to much acclaim from other followers). In a seamless blending of fact (Hunter Biden and January 6th investigations) with conspiracy theories (Biden financial dealings, Fauci files, COVID-19 vaccines, Ukraine bioweapons, and IC agents), IET exemplifies a faulty hermeneutics, mingling established record with supposition to weave a conspiratorial narrative.

In both communication groups, belief is prioritized over empirical evidence – it surpasses reality. Truth is relational, and belief is the only metric of import. Teleologism is evident as disparate theories are linked in a web of conspiracy. Followers prop up each other's comments and reactions, reinforcing the notion of a community fighting against a normative, mainstream, and false understanding of events.

4.1.5 Emotions in the echo chamber

These communities define authority differently than mainstream societal definitions. Those who follow QAnon and its more outlandish offshoots strongly reject “elitist” knowledge (i.e., academic information) as part of their worldview, so traditional epistemologies and pedagogies cannot function alone to dislodge the conspiracy worldview. In-group trust is central to QAnon's mantra, encouraging those who doubt or falter to hold steady: “Trust the plan.” For example, whenever a follower questioned a comment by a fellow follower, or worse, a post by the influencer, they were vehemently attacked or removed from the community. Those allowed to remain were treated with disdain, accused of being outsider trolls, or told they did not believe enough. Tribalism is thus reinforced: Are you with us or against us? Such shaming reinforces the behavior and belief in conspiracy ideation, as those who are there to fulfill psychological and affective needs will ensure that their behavior submits to group norms. Thus, various emotions promote conspiracy theorizing within these online communities, including the subgroups of QAnon that have splintered and spread across social media platforms.

Moreover, followers of conspiracy influencers like Pastor Chris and IET recognize them as cognitive authorities with whom they have developed a parasocial relationship, that is, a psychological relationship between an influencer and their audience; often driven by strong emotions, followers come to consider these personalities as friends. Emotions are powerful motivators for engagement with conspiracy theories, including anxiety, hopelessness, isolation, anger, and shock (Douglas et al., 2019; Green et al., 2023). In addition to negative emotions, positive affect has also been shown to subvert critical cognitive processing and influence credulity; when we are calm and happy, we are more trusting (Forgas, 2019). Such affective states drive users' information-seeking behaviors and their ability to effectively evaluate faulty information, providing epistemic closure. Since followers consider IET and Pastor Chris to be parasocial cognitive authorities, these influencers appear as savvy manipulators of their followers' emotions, using emojis and capital letters to call attention to potent ideas and themes. They encourage their followers and prolong their engagement, supporting positive affective states in their followers, and increasing followers' trust and confidence in the information IET and Pastor Chris provide. Some of this work is the work of a competent influencer. IET, for example, could also seek to prolong engagement with his followers in order to promote his businesses and sell products; while it is unclear how much he believes of what he posts, his work as an influencer includes a careful calculation for monetizing his worldview. Influencers such as IET have been researched by others who have surveyed QAnon, alternative health, and celebrity influencers (Bloom and Moskalenko, 2021; Yallop, 2021; Petersen, 2023).

Prolonging engagement serves not only the influencer but the community as well, reinforcing a sense of solidarity. Meanwhile, arousing rhetoric or inflammatory posts keep their followers engaged and enraged. This is typified in a post from May 2, 2023:

The real crash is the Kilbal system, they lose everything, but WE GAIN EVERYTHING! Never underestimate ur GOD!

This post from Pastor Chris received 31 comments, with most posting affirmative GIFs or “Amen/Hallelujah.” To one who expressed a loss of hope due to the extended timeline, Pastor Chris exhorted them to

think how tired the children who [sic] been trafficking and those who have been in hiding to help bring freedom to those children and to humanity! When u realize this then our wait is nothing compared to that! This is the real tribulation that is happening and we are about to come out into real Freedom from God the Source God the Creator!

This comment illustrated the closed-off trust system developed within these communities, which can make it extremely hard to break through. Any questioning or nudges from skeptics are met with disdain, counterpoints from the in-group, or, as in the comment above, appeals to emotion.

As additional conspiracies are subsumed into the QAnon movement and promulgated via the social web, QAnon could be considered an overarching superconspiracy (Beene and Greer, 2023). For instance, one of Pastor Chris's followers commented on the absolute trust that the community has in former president Trump to enact the financial blessings wrought by the Nesara/Gesara conspiracy:

This is the way I look at it, [sic] I don't think for 1 min that DJT would let us all lose our money while he rides around on his golf cart living large, [sic] there must be a plan. Do you honestly think that anyone would support him or the plan, if he let us all lose our money in the process of doing so? NO, all he has gained from the MAGA movement would be gone, poof, if we all went broke and lost our life savings.

This comment illustrates the vulnerability of the community members and their absolute trust in Trump, an affective state that the influencers exploit. Through actively encouraging the continued belief in things like all debt being wiped out, Pastor Chris and their ilk engender a hope that will only lead to greater disappointment or financial ruin as some choose to forgo payments because their loans are seen as illegal, connected again to the global cabal, under the gospel of the conspiracy.

The conspiracy phenomenon of “MedBeds” serves as perhaps the most heartbreaking example of this exploitation. Often included as part of the supposed Nesara/Gesara reveals, MedBeds present a combination of conspiracy (hidden technologies), scientific illiteracy, and magical thinking. These beds, sometimes attributed to Elon Musk and Tesla, sometimes rumored to be military technology, purportedly have numerous benefits, as elucidated in a lengthy post widely shared among conspiracy communities throughout 2023. Illustrative of the magical thinking and misplaced hope of this particular conspiracy, enumerated among the purported abilities of the MedBeds were the regrowth of organs and teeth, the cure of all physical and mental ailments, the ability to change hair and eye color at will, and “downloading languages” and other knowledge to one's brain.

Through constant encouragement and repeated assurances of the MedBeds' soon-to-be-announced availability, the conspiracy influencers prime the vulnerable for exploitation. Followers echo the influencers' overconfidence, enthusiasm, and excitement. Comments abound with people announcing that they have stopped their medications or quit going to doctors, or lamenting that the MedBeds did not come soon enough for their loved ones.

4.1.6 (Il)literacies

The following examples illustrate information literacy and other literacy gaps linked to information behaviors relevant to these groups. Educators and librarians can close these gaps by first recognizing them. Through an exploration of these communities' behaviors, we believe there are many opportunities for better education.

4.1.6.1 Visual and media literacy

Furthering visual and media literacies is a crucial endeavor for these communities, since much of the content community members share consists of unattributed and decontextualized images, viral memes, and questionable videos. Hodges (2023) summarized recent conspiracy theory literature findings that “present-day conspiracy narratives spread in large part through the networked circulation of decontextualized digital images, which accrue new narrative significance as they enter new contexts and arrangements, traveling further from their authentic sources and meanings over time” (p. 132). This networked circulation of decontextualized imagery does not just happen in conspiracy communities. Palmer (2019) analyzed the common practice in online media of reusing images, videos, or screenshots outside of their original contexts to reshape a narrative, a phenomenon he termed the intersemiotic contextual misrepresentation of photojournalism (p. 104). Sharing bits from mainstream media sources or dubious news outlets helps spread misinformation. This practice was evident in both samples. In 2022, IET posted a YouTube video from “We The Media (William Scott)” - “The Unvaccinated will be Vindicated,” from The Highwire, a dubious news outlet profiting from misinformation. This post, which paints the unvaccinated as heroes working to expose and vanquish the Deep State, was viewed over 46,000 times and garnered over 1,000 reactions and 100 comments. One commenter said, “Very powerful. And some people says [sic] Trump pushed this vaccine. Trump tricked the Deep state epic move,” while another commented that posting this video “got me 30 days in fakebook jail.” For that commenter, the “time out” forced upon them did not result in a critical analysis of the video and why it may have sparked the ban but rather reinforced the idea that the Deep State is out to get the truth-tellers. With each new edit and share of news media clips, the visual material takes on additional meanings and wilder interpretations.

The human mind processes and creates meaning from images far quicker than text (Palmer, 2019, p. 107); underscoring this reality, Palmer describes the image as “the juggernaut in the perceptual process” (p. 123). This cognitive reality shaped the Framework for Visual Literacy for Higher Education's (Association of College and Research Libraries, 2016) knowledge practices and dispositions, with knowledge practices including:

• Interpret visuals within their disseminated context by considering related information such as captions, credits, and other types of metadata.

• Question whether a visual could be considered authoritative or credible in a particular context, which can include comparing it to similar visuals, tracking it to its original source, analyzing its embedded metadata, and engaging in similar evaluative methods. (p. 8)

Multimodal media can present an even greater challenge for educators looking to debunk conspiracy theories, as the multiple channels of information can lend credibility to what otherwise could be doubted if the information were shared purely through one mode (e.g., image, text, or audio) (Serafini, 2013, 2022; Campbell and Olteanu, 2023; Neylan et al., 2023). Neylan et al. (2023) examined techniques to inoculate online users against multimodal misinformation, including games and gamification; however, they found that multimodal misinformation is uniquely resilient to inoculation.

Both Pastor Chris and IET populate their feeds with multimodal imagery. For IET, sharing YouTube and TikTok videos is a powerful rhetorical tool that instantly communicates to followers. For example, in a Telegram post dated 2 August 2022, IET shared a TikTok video that quickly asserts that Satan is driving illnesses and the pharmaceutical companies that profit from them, including through vaccines; near the end of the video, hospital logos are visually compared to Satanic symbols. This post garnered 163 comments, ranging from “I knew it!” to “I never noticed this, but it makes sense.” By sharing a popular form of online video, IET only needs to bracket it with his own rhetoric, which he does through a headline that says, “This world isn't what it seems, Let me explain,” and his one comment underneath: “Preach!” For Pastor Chris, images are often posted from the White House webcam, with additional textual glosses praising any movement or change in scenery as a clear sign that conspiracies are being revealed.

Thus, the power of the shared videos and images in these communities is enhanced through the influencers' commentary. Because of the challenges that occur with reading and evaluating multimodal imagery, Campbell and Olteanu (2023) advocate for a new post-digital literacy to combat mis- and disinformation spread through multimodal means; they argue that a new, embodied form of meaning-making is deeply embedded within the digital information ecosystem. A new framework for literacy is both necessary and compelling.

4.1.6.2 Digital literacy

Another area ripe for further investigation is the lack of digital literacy evident within these communities. While there is not one definition of digital literacy, strides are being made by educators and researchers (Tinmaz et al., 2022; Marín and Castaneda, 2023; Palmer, 2023). At its most basic, digital literacy entails understanding the online information environment – how information is created, shared, and repurposed through systems and platforms. In conspiracist communities, there is often a lack of understanding of how information travels and changes online. This is another departure from the early QAnon community, which comprised coders and gamers; newer conspiracist communities, such as the groups explored here, display more misunderstanding of how digital information is created and changed online.

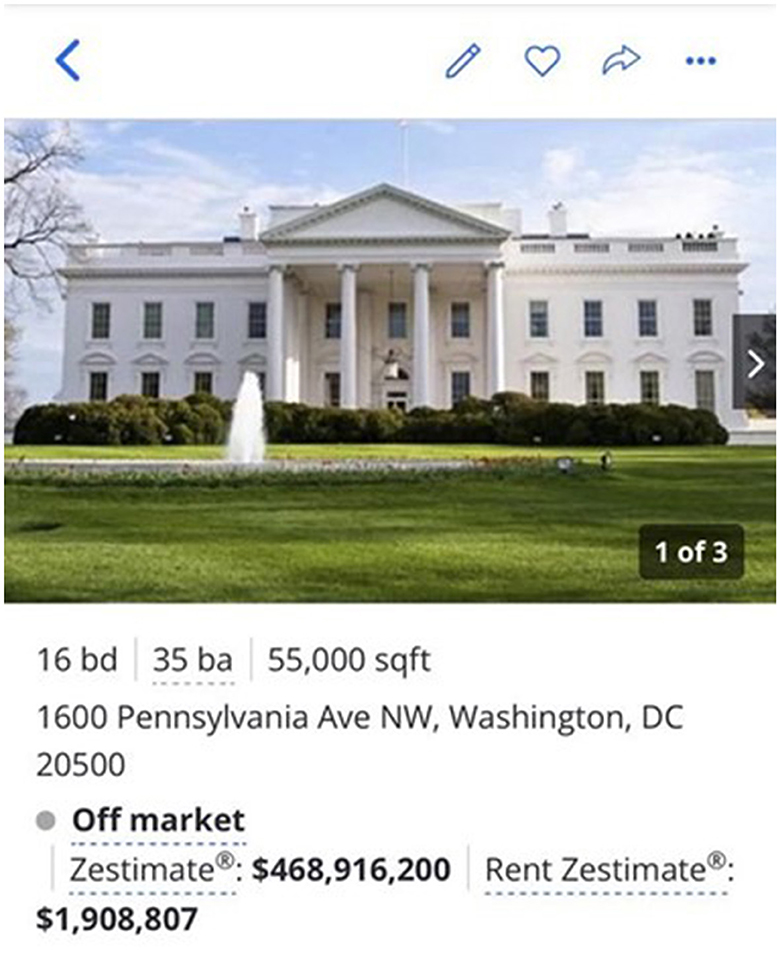

A clear example of this misunderstanding is Pastor Chris's post from November 2023, in which they announced excitedly that the White House was up for sale. They followed up this post with another that included a screenshot from the real estate website Zillow that purportedly showed the White House up for sale (Figure 1).

Although clearly labeled “Off-market,” a standard stub placeholder listing on Zillow was enough proof for Pastor Chris and many of their followers. In comments, Pastor Chris elaborated: “I sense the white hat will sell it, get the money, put it into the treasury to get back to the people [sic] and then the rods of God will come along and destroy it. Not sure how it all will play out [sic] but you did see a Q drop about the white house for sale.” Commenters pointing out that it was off-market were met with rumors of it already being sold or teleological reasoning such as one follower's note that “yes, I seen [sic] it was off market. However, which third party placed it there? There appears to always be a reason for such events that reveal something… just what... Who knows?”

This example illustrates an all-too-common problem within conspiracy communities: users often do not understand the ever-growing and increasingly sophisticated systems built to populate the internet with content. The conspiracy theory surrounding Wayfair, an online furniture company, provides another example: out-of-stock products like storage cabinets were assigned random names by the company, sometimes resembling human names. The QAnon community circulated rumors that the products were actually codes for children being trafficked and that the products were being used to store the children (Spring, 2020); the theory spread across social media, leading to digital warriors interfering with and subverting actual anti-trafficking work (Contrera, 2021). Literacy training that includes basic knowledge about the purposes for online information and how it flows through systems would be beneficial.

4.1.6.3 Science literacy

Scientific illiteracy and conspiracism have been well-studied, especially among climate change scholars (Lewandowsky et al., 2013a,b; Scheufele and Krause, 2019; McIntyre, 2021). Scientific disinformation is rampant in these communities and reflects a lack of knowledge and a willingness to set aside one's knowledge for the more desirable “hidden truths.” For example, Pastor Chris shared a post in May 2023 from the group “PLANET X FOR REAL”:

I can't believe people are so blind they don't even see their freaking moon is doing a cartwheel all over the sky and rising and setting all over the place….Yet the dumb trolls/ NCPs in here will say yeah [sic] that's normal. Don't listen to people who don't even look up while they are too busy looking down [sic] sitting on their couch [sic] buying into all other distractions. Those are the same people who took the [emoji of a syringe]

The group's reference to “Planet X” refers to an actual hypothesized additional planet in the solar system (Kauffman, 2016); the group's posts, however, promote the conspiracy theory that Planet X and other celestial bodies have been hidden from the public. The post shared by Pastor Chris seems to reference a flat earth theory that states that the moon is hung in the sky above the earth in a sealed dome (Modern Flat Earth Beliefs, n.d.). At the end of the post, a jab at those vaccinated against COVID-19 illustrates how adherence to one scientific conspiracy theory quickly begets adherence to another.

The motivations for such beliefs are complicated, but like all others, they stem from affective states and psychological needs. Centralized, government-backed scientific institutions, researchers, and the academic elite are prime targets for mistrust. The scientific method is misunderstood, with changing scientific recommendations portrayed in these communities not as evidence of a growing body of knowledge but as evidence of malfeasance, fraud, or stupidity: “These idiots have no idea what they are doing.” This mindset was common during the COVID-19 pandemic. These communities promote their own scientific experts and cognitive authorities to counteract those that mainstream “normies” accept. For example, IET has promoted creators on alternative news podcasts, others on Telegram, and even creators on TikTok to draw attention to “what's really happening” and “the truth,” each instance of which is applauded and cheered by his followers, who sometimes comment that they, too, have been following these influencers for some time – alongside IET, of course.

Evident in IET's Telegram posts and Rumble interviews are many anti-pharmaceutical, anti-vaccination, and anti-science stances. Alongside a libertarian, individualist stance in making and facilitating one's own medical decisions is the promotion of debunked cures such as “parasite cleanses,” Ivermectin and hydroxychloroquine use, and assorted alternative health theories. Concurrent with these views is a hardened stance against scientific experts, especially those who mandate or recommend COVID-19 vaccinations. These stances have been well-documented in the scholarly literature (Larson, 2020; Voinea et al., 2023). And while many of the fears and associated beliefs around vaccination are warranted, especially in marginalized communities with a distrust of authorities (Stein et al., 2021; Bilewicz, 2022; Cortina and Rottinghaus, 2022; Fredericks et al., 2022), conspiracy ideation also plays an outsized role (Mylan and Hardman, 2021; Enders et al., 2022; Farhart et al., 2022; Seddig et al., 2022). Distrust has many detrimental consequences, notably for public health. For example, vaccine hesitancy has led to a resurgence of measles, which had been nearly eradicated in the United States (Orenstein et al., 2004). A 2020 report on a recent outbreak noted that 9% of the infected required hospitalization, with one death (Feemster and Claire, 2020). Fear of the COVID-19 vaccines, due to either previous vaccine hesitancy or fear of the new mRNA technology used in the vaccines, led to a number of conspiracies and lower vaccine uptake, with one report estimating that the Delta variant was 11% more fatal for those who were unvaccinated (Dyer, 2021).

Therefore, we must strengthen a basic understanding of science and work toward a holistic scientific literacy – one that begins with understanding the scientific method and how scientific discourse evolves and ends with a fundamental insight into (and respect for) scientific authorities and authoritative bodies. The health of future generations depends on it.

5 Discussion: lessons for educators

Conspiracy adherents most likely lack the skills and knowledge to evaluate conspiracy claims critically, and many fall prey to logical fallacies (e.g., mistaking correlation for causation), teleological thinking, and other types of misinterpretation. As the well-known “Dunning-Kruger effect” (Kruger and Dunning, 1999) posits, those who do not have sufficient knowledge in a subject area will tend to overestimate their abilities and cannot recognize their cognitive and epistemic deficits until they have further training. The deficit model is not the only answer, although it does play a part in these communities. What seems to be happening in these influencer groups is a combination of literacy deficits, maladaptive heuristics, and, as Hodges (2023) phrased it, an “exaggerated interpretation of correlation.” Followers ignore facts that counter their belief systems, filling in the gaps in information with faulty interpretations to fit the desired outcome. The behavior observed in these two communities illustrates how a lack of information and affiliated literacies (e.g., visual, media, and digital) leads to greater gullibility when faced with bad information (Mercier, 2021). Ignorance of the various systems of information production and dissemination has led to naïveté when faced with information that has been manipulated. Information professionals can play a role in helping people assess and evaluate information, leveraging the short snippets of time they have with them and focusing on engendering transferable critical thinking dispositions (Weiner, 2013).

With its impetus in the social, participative environment, metaliteracy provides necessary metacognitive prompts that can be leveraged to address many of the logical and literacy gaps seen in these communities. While those entrenched in the groups may be harder to reach, educators can better prepare those in classrooms or other learning environments, inoculating them against information-disordered environments (Compton, 2019). For example, Brungard and Klucevsek (2019) argue that the metaliteracy domains of affect and metacognition provide essential skills that promote critical evaluation of evidence and whether to use that information to produce original content.