ORIGINAL RESEARCH article

Front. Commun., 13 March 2024

Sec. Multimodality of Communication

Volume 9 - 2024 | https://doi.org/10.3389/fcomm.2024.1288896

Kimberly Kowal Arcand1†, Jessica Sarah Schonhut-Stasik2,3*†, Sarah G. Kane4,5†, Gwynn Sturdevant6†, Matt Russo7, Megan Watzke1, Brian Hsu8, Lisa F. Smith9

Introduction: Historically, astronomy has prioritized visuals to present information, with scientists and communicators overlooking the critical need to communicate astrophysics with blind or low-vision audiences and provide novel channels for sighted audiences to process scientific information.

Methods: This study sonified NASA data of three astronomical objects presented as aural visualizations, then surveyed blind or low-vision and sighted individuals to elicit feedback on the experience of these pieces as it relates to enjoyment, education, and trust of the scientific data.

Results: Data analyses from 3,184 sighted or blind or low-vision survey participants yielded significant self-reported learning gains and positive experiential responses.

Discussion: Results showed that astrophysical data engaging multiple senses could establish additional avenues of trust, increase access, and promote awareness of accessibility in sighted and blind or low-vision communities.

Light is the dominant data source in the Universe; therefore, our sense of sight pervades historical astronomy. For millennia, humans explored the sky with the unaided eye until the invention of the telescope (1608) provided a deeper view of the cosmos. In 1851, the first daguerreotype of a Solar eclipse captured the Sun's light, the first astronomical image (Figure 1). In the twentieth century, the quality and quantity of astronomical data experienced massive growth; during World War II, the development of “false color” enhanced astronomical image interpretability (Mapasyst, 2019), and digital data capture with charge-coupled devices (CCDs; Rector et al., 2015) created the potential to collect tremendous amounts of data.

Figure 1. First image of the Sun, taken by Fizeau and Foucault (1845). Image from ©ESA at: https://www.esa.int/ESA_Multimedia/Images/2004/03/First_photo_of_the_Sun_1845.

Newly launched space-based observatories captured many of these large data sets, revolutionizing astronomy's cultural impact (Taterewicz, 1998) by recording the energy, time, and location of photons emitted from cosmic objects. Space telescope data, delivered through NASA's Deep Space Network,1 are vast and beyond typical human comprehension, from all-sky stellar mapping to imaging gargantuan galaxies (Marr, 2015; Arcand and Watzke, 2017). Images are central to understanding the scope and significance of such vast catalogs (Smith et al., 2011; Arcand et al., 2019), creating an information landscape where archival data have immense value and visualized data hold additional interpretive utility (Hurt et al., 2019).

In the late twentieth century, CCDs began to specialize, observing and transposing light outside visible wavelengths, extending the observable Universe once more. Here, data visualization became essential. NASA's “Great Observatories” [originally the Hubble Space Telescope, Compton Gamma Ray Observatory, Chandra X-ray Observatory, and Spitzer Space Telescope (National Aeronautics and Space Administration, 2009), and now the James Webb Space Telescope] rely on the conversion of their digital transmissions into images (Rector et al., 2015). These data can be combined into aesthetically beautiful images and shared with non-experts (English, 2016), relaying important scientific messages. With the internet, astronomers may couple these innovatively visualized data with worldwide public dissemination (Rector et al., 2015).

Image aesthetics is essential in aiding data comprehension (Arcand et al., 2013; Rector et al., 2015), and the nature of the visualization varies depending on the audience; for example, a plot communicating a scientific result in a journal or a scientifically accurate but aesthetically pleasing image to communicate publicly (DePasquale et al., 2015). Astronomers must alter images for light outside the visual spectrum regardless of the audience; therefore, it is feasible to use other methods of data vivification (Sturdevant et al., 2022) to elicit new understanding or meaning-making.

Studies on astronomical image processing (Rector et al., 2007, 2017; DePasquale et al., 2015), most notably the use of color (Smith et al., 2011, 2015, 2017; Arcand et al., 2013), have shown that image creators must explain the translation process for non-expert audiences and that lay explanations sustain the public's confidence in an image's scientific nature and authenticity, even when the image depicts components concealed from human eyes. This suggests that the same is possible when translating visual data to sound.

Sonification is often defined as mapping data to sound to represent information using non-speech audio, the sonic counterpart to visualization (Kramer et al., 2010; Sawe et al., 2020). For decades, image sonification has communicated spatial information to blind or low-vision (BLV) individuals (Meijer, 1992; Yeo and Berger, 2005; Zhang et al., 2014). It is also used for audio alerts (e.g., Geiger counters; Kramer et al., 2010), research (e.g., studying brain wave changes; Parvizi et al., 2018), communications and education (e.g., multimedia cosmology sonification; Ballora and Smoot, 2013), increasing accessibility (e.g., the sonification of visual graphs; Ali et al., 2020), and for art and entertainment (e.g., compositional works based upon neurological or cosmic particle data; Sinclair, 2012).

In recent years, sonification has become more present in the world of astronomy as communicators and researchers alike try to understand how best to engage the BLV community and allow astronomers to conduct their research using sound alone; see Harrison et al. (2022) and Noel-Storr and Willebrands (2022) for discussions on this topic. Generally, a primary goal of astronomy sonification relates to public engagement (Zanella et al., 2022); however, research investigating active listening by astronomers for data exploration and analysis exists (Alexander et al., 2010; Diaz-Merced, 2013). A notable project in this area is the Audible Universe Project by Misdariis et al. (2022), which aims to create a dialogue between sonification and astronomy. Sonified celestial objects include pulsars, the cosmic microwave background, and solar eclipses (McGee et al., 2011; Ballora, 2014; Eclipse Soundscapes, 2022). In the past decade, astronomy-related sonifications have increased dramatically (Zanella et al., 2022), with more than 98 projects globally representing a third of the Sonification Archive (Lenzi et al., 2021).

The most common approaches to sonification are audification and parameter mapping. With audification, data are translated and mapped directly to audio so that the relevant frequencies fall within hearing range. One example is the sonification of gravitational wave signals recorded by the Laser Interferometer Gravitational-Wave Observatory (LIGO) collaboration (Abbot, 2016), which interprets space-time fluctuations caused by passing gravitational waves as an audio signal, allowing us to hear black hole mergers in real time (Gravitational Wave Open Science Center, 2022).
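As a rough illustration of audification, the sketch below treats a data series as audio samples and changes only the playback rate, which scales every frequency in the data by the same factor. The signal, the 100 Hz data rate, and the 44,100 Hz playback rate are assumptions chosen for the example; this is not the LIGO processing pipeline.

```python
import math
import struct

def audify(samples, data_rate_hz, playback_rate_hz=44_100):
    """Scale samples to 16-bit PCM and report the frequency speed-up.

    Audification leaves the data values untouched (apart from volume
    scaling); only the rate at which they are played back changes.
    """
    peak = max(abs(s) for s in samples) or 1.0
    pcm = b"".join(
        struct.pack("<h", int(round(32767 * s / peak))) for s in samples
    )
    speedup = playback_rate_hz / data_rate_hz
    return pcm, speedup

# Hypothetical signal: a 2 Hz oscillation sampled 100 times per second
# for 10 s. At 2 Hz it is far below the range of human hearing.
data_rate_hz = 100
signal = [math.sin(2 * math.pi * 2.0 * n / data_rate_hz) for n in range(1000)]

pcm, speedup = audify(signal, data_rate_hz)
heard_hz = 2.0 * speedup  # frequency the listener hears on playback
```

Under these assumptions the 2 Hz oscillation is heard at 882 Hz, and the 10 s record plays back in a fraction of a second. The `pcm` bytes could be written to a file with Python's standard `wave` module.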

For parameter mapping, aspects of the data control specific audio parameters. For example, a star's brightness fluctuations or hues of color may control the frequency so that unique features can be identified (Astronify, 2022); it is common to map brightness to pitch as our ears are more sensitive to variations in frequency than volume. The inverse spectrogram is the most common image mapping technique (Sanz et al., 2014), mapping one axis to time and the other to frequency, allocating a corresponding brightness for each pixel to control volume. For example, we may scan an image from left to right, with different pitches indicating the vertical position of objects. Due to the wide variety of possible mappings, an explanation of the process is vital for the listener to extract meaningful information.
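The inverse-spectrogram mapping described above can be sketched as follows, scanning an image left to right with row position controlling pitch and pixel brightness controlling volume. The function names, the 220–880 Hz pitch range, the logarithmic spacing, and the tiny test image are all assumptions made for illustration; they are not the mappings used for the sonifications in this study.

```python
import math

def row_frequencies(n_rows, f_low=220.0, f_high=880.0):
    """Log-spaced pitches; row 0 (top of the image) gets the highest pitch."""
    if n_rows == 1:
        return [f_low]
    ratio = f_high / f_low
    return [
        f_low * ratio ** ((n_rows - 1 - r) / (n_rows - 1))
        for r in range(n_rows)
    ]

def sonify_image(image, seconds_per_column=0.25, sample_rate=8000):
    """Scan columns left to right; brightness (0..1) sets each row's volume."""
    n_rows, n_cols = len(image), len(image[0])
    freqs = row_frequencies(n_rows)
    per_col = int(seconds_per_column * sample_rate)
    samples = []
    for c in range(n_cols):
        for n in range(per_col):
            t = (c * per_col + n) / sample_rate
            value = sum(
                image[r][c] * math.sin(2 * math.pi * freqs[r] * t)
                for r in range(n_rows)
            )
            samples.append(value / n_rows)  # keep output within [-1, 1]
    return samples

# Hypothetical 3x4 image: a bright feature rising from bottom-left to
# top-right, followed by an empty (silent) final column.
image = [
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 0.5, 0.0, 0.0],
    [1.0, 0.0, 0.0, 0.0],
]
freqs = row_frequencies(len(image))
samples = sonify_image(image)
```

With this image the listener would hear a rising sequence of three tones and then silence, which mirrors the feature's vertical position as the scan moves across the image.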

Sonification provides astronomy communicators a new avenue to engage the public, particularly BLV communities, traditionally excluded from engagement. Furthermore, sonification is advantageous as a research tool (with or without visually presented information) because listening to data exploits the auditory system's exquisite sensitivity to pattern variation over time, whether perceived as discrete rhythms or changing pitch (Walker and Nees, 2011). In addition, because sounds are multidimensional, we may encode many parallel data streams by mapping each to a different audio dimension (pitch, volume, timbre) or control multiple simultaneous audio streams so our ears can either listen holistically or focus on one stream at a time (Fitch and Kramer, 1994). Finally, we can render each layer of a multi-wavelength image as a separate audio stream (a different note or instrument) to explore the relationship between wavelengths of data. As such, sonification has excellent potential for stimulating curiosity, increasing engagement, and creating an emotional connection with data.

In recent years, NASA has released several sonification projects, showcasing several decades of data (National Aeronautics and Space Administration, 2020, 2022; and others). In 2020, NASA's Chandra X-ray Observatory launched “The Universe of Sound,”2 providing bespoke audio representations of astronomical datasets for non-expert audiences and working with BLV representatives to create and test sonifications. In this work, we analyze survey data to investigate the effectiveness of sonifications, particularly for BLV communities. This paper represents the first study to explore responses to astronomical sonifications from the BLV community and compare these responses to the experiences of sighted participants.

Before we discuss the results of our work, it is essential to acknowledge our positionality within the context of this study. For some authors, our motivation to explore this topic is shaped by a personal connection to the disability community through lived experience (either in the BLV community or the broader disability community); for others, the motivation lies in the desire to explore alternate data vivification processes and understand how to communicate science to the public effectively. Our own experiences have led us to believe sonification is a positive tool for education and research, and we remain mindful of this bias throughout our analysis. Finally, although our team represents a range of perspectives within the disability and astronomy community, we acknowledge that we remain limited by our lived experiences as a group of majority white individuals living in North America and Canada.

The primary research questions for this study were:

1. How are data sonifications perceived by the general population and members of the BLV community?

2. How do data sonifications affect participant learning, enjoyment, and exploration of astronomy?

There were two secondary research questions:

1. Can translating scientific data into sound help enable trust or investment, emotionally or intellectually, in scientific data?

2. Can such sonifications help improve awareness of accessibility needs that others might have?

The research participants were a convenience sample of respondents (18 years and older) to an online survey. We solicited participants from websites including Chandra3 and Astronomy Picture of the Day (APOD),4 digital newsletters, social media sites such as Facebook and Twitter for Chandra5 and APOD,6 and the social media and contacts of the principal investigator (PI). Further distribution occurred through additional social media sharing. The survey was active on SurveyMonkey7 for 4 weeks beginning February 24, 2021. We note that SurveyMonkey surveys are compatible with assistive software typically used by the BLV community, particularly screen readers and screen magnification.

The Smithsonian Institutional Review Board8 determined that this survey was exempt research under Smithsonian Directive 606.9 The survey started with a participant consent form in which choosing to continue with the survey equaled consent. We provided no compensation to survey participants or dissemination partners.

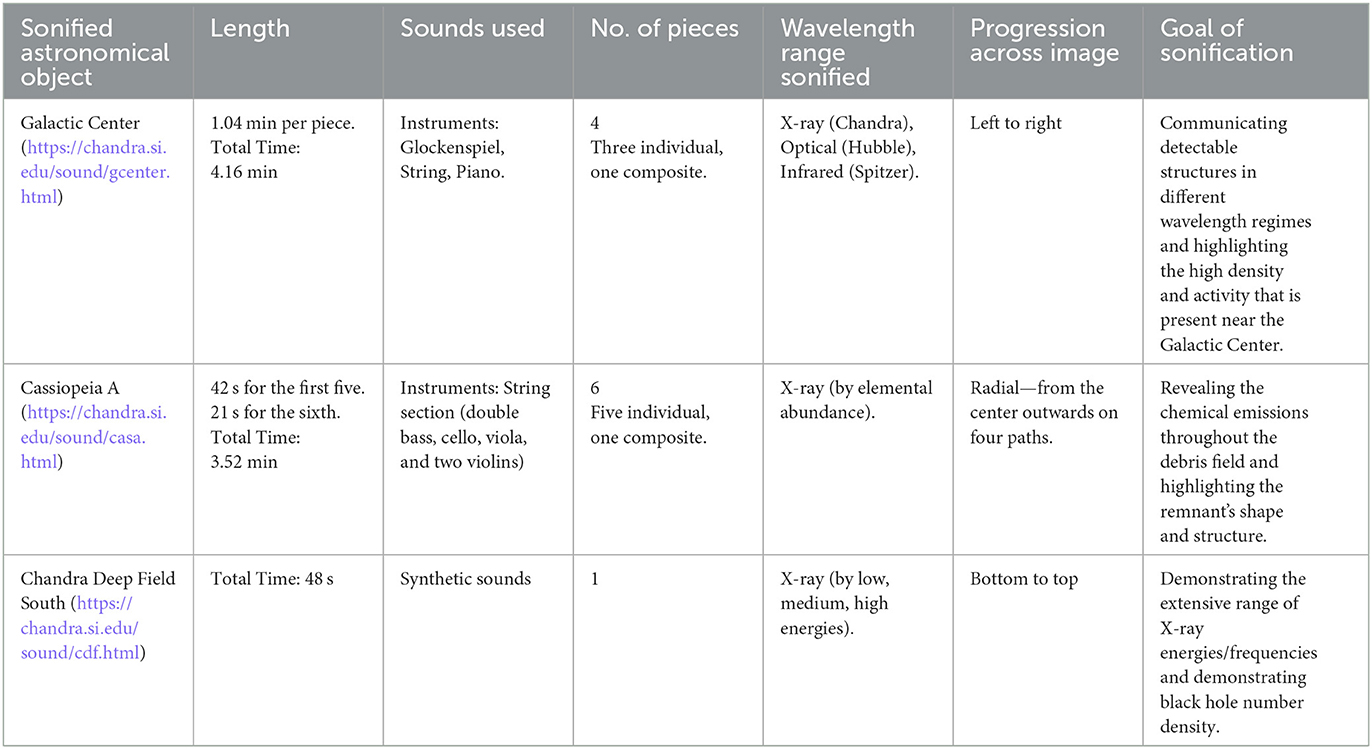

We chose three of the six sonifications available at the time of the study on NASA's Universe of Sound website, selecting those that best represent the collection in their variation of instrumental vs. synthetic sounds and in how they track the visual data: left to right, top to bottom, or radially. Survey participants were presented with the sonifications and their accompanying videos to experience as they were able, followed by short text descriptions (screen-reader adaptable) for each of the represented astronomical objects (the Galactic Center, Cassiopeia A, and the Chandra Deep Field South). The sonifications played in the same order, without counterbalancing, starting with the sonification that used non-synthesized sounds, followed by two more complex sonifications. We provide the details of each sonification at the companion GitHub10 and highlight the key points, along with links to the sonifications, in Table 1.

Table 1. Each row in the table describes the basic parameters for each sonification including a link to the data product, the runtime (in suitable units), the types of sounds used, the number of individual components in the sonification, the wavelength range sonified, and the communication goal of the sonification when it was created.

Our survey began with five demographic questions11 (age, gender, education level, self-rated knowledge of astronomy, and whether the participant identified as BLV).

Participants were then asked to experience the sonifications and, after each, to respond to a set of statements using a Likert scale. Each statement began with:

“Please respond to this item using a scale of 1 (Disagree Strongly) to 5 (Agree Strongly):”

The five statements were:

1. I enjoyed this experience.

2. I learned more about the [title of sonification, i.e., Galactic Center] through this experience.

3. Hearing the sounds enhanced my experience.

4. Watching the videos enhanced my experience (if applicable).

5. I trust that this representation is faithful to the science data.

The scale provided the following options: 1 (Disagree Strongly), 2 (Disagree), 3 (Neutral), 4 (Agree), 5 (Agree Strongly).

Following this section, the participants were asked about their overall experience, first:

“List up to three words to describe your emotional response to these data sonifications.”

Then, they were asked to rate the following three statements, using the same Likert scale from the first section:

1. After listening to these data sonifications, I am motivated to listen to more.

2. After listening to these data sonifications, I am interested in learning more about our Universe.

3. After listening to these data sonifications, I want to learn more about how others access information about the Universe.

Finally, they were asked two open-ended questions (which allowed for full sentences). These were:

1. What recommendations do you have to help the scientific community create better listening experiences?

2. If the person who created these data sonifications were here, what question would you ask them?

Once the survey closed, we exported the data and cleaned and analyzed the 4,346 responses using Python. We removed the entry of one participant who took from March until July 2021 to complete the survey and all responses in which participants did not indicate whether they were BLV or sighted or answered fewer than three non-demographic questions. This cleaning ensured we could compare the results of the BLV and sighted groups for those who engaged with the sonification questions. We removed identical entries by comparing Internet Protocol (IP) addresses and demographic questions. For repeat entries, we kept only the most recent response. Cleaning yielded 3,184 participant responses. See the Appendix for the demographic breakdown of the cleaned sample.
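The filtering and de-duplication rules described above could be expressed along the following lines. This is a minimal sketch, not the analysis code used in the study: the record layout, field names, and sample records are hypothetical, and the removal of the single participant with an unusually long completion time is not modeled.

```python
from datetime import datetime

# Hypothetical names for the five demographic fields.
DEMOGRAPHIC = ("age", "gender", "education", "astro_knowledge", "blv")

def clean(responses):
    """Apply the cleaning rules and keep the latest of any repeat entries."""
    latest = {}
    for r in responses:
        if r.get("blv") is None:
            continue  # BLV/sighted status not indicated
        answered = sum(1 for v in r["answers"].values() if v not in (None, ""))
        if answered < 3:
            continue  # fewer than three non-demographic answers
        # Repeat entries share an IP address and demographic answers.
        key = (r["ip"],) + tuple(r.get(k) for k in DEMOGRAPHIC)
        if key not in latest or r["submitted"] > latest[key]["submitted"]:
            latest[key] = r
    return sorted(latest.values(), key=lambda r: r["submitted"])

def make(ip, blv, n_answers, day):
    """Build one hypothetical record; field names are illustrative only."""
    return {
        "ip": ip, "age": "25-34", "gender": "F", "education": "BSc",
        "astro_knowledge": "novice", "blv": blv,
        "answers": {f"q{i}": 4 for i in range(n_answers)},
        "submitted": datetime(2021, 3, day),
    }

raw = [
    make("10.0.0.1", False, 5, 1),  # superseded by the repeat entry below
    make("10.0.0.1", False, 5, 9),  # repeat entry, most recent: kept
    make("10.0.0.2", None, 5, 2),   # BLV status missing: dropped
    make("10.0.0.3", True, 2, 3),   # fewer than three answers: dropped
    make("10.0.0.4", True, 4, 4),   # kept
]
cleaned = clean(raw)
```

Of the five hypothetical records, two survive: the later of the two repeat entries and the complete BLV response.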

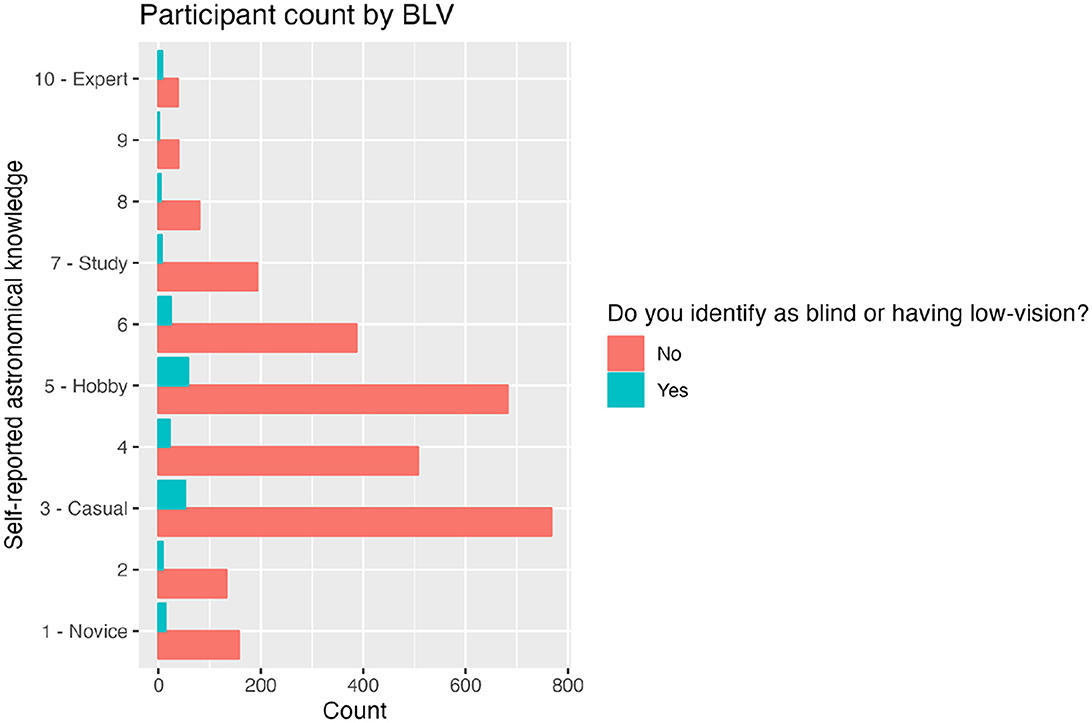

Figure 2 displays self-reported knowledge of astronomy, divided by the BLV (blue) and sighted (red) participants. The apparent contrast in size of the two demographic groups is discussed in Results (Section 3) and Future Work (Section 6.1).

Figure 2. Histogram showing the relative numbers of BLV and sighted participants. Red (blue) bars represent sighted (BLV) participants. The x-axis shows the participant counts and the y-axis shows the self-reported level of astronomy knowledge from novice to expert. Note the substantial difference in size between the BLV and sighted groups. Thirteen participants did not report their astronomical knowledge or BLV status and were omitted from this figure.

Regarding additional demographics, we note a slight majority of male-identifying participants (57.1%). Participant ages spanned from 18 to 24 years (21.6%) to 65 years and older (16.3%); there is a slight predominance of younger participants, but all age groups are represented at above 10% of the total. Likewise, the self-reported education level of participants ranges from those who completed some high school to those with advanced postgraduate degrees (e.g., doctorate, LLB, or MD); however, those who completed some high school were the least represented group (3.5%), with most participants (61.3%) having completed an undergraduate degree or higher. We refer the reader to the tables in the Appendix for a complete breakdown of participants' demographic data.

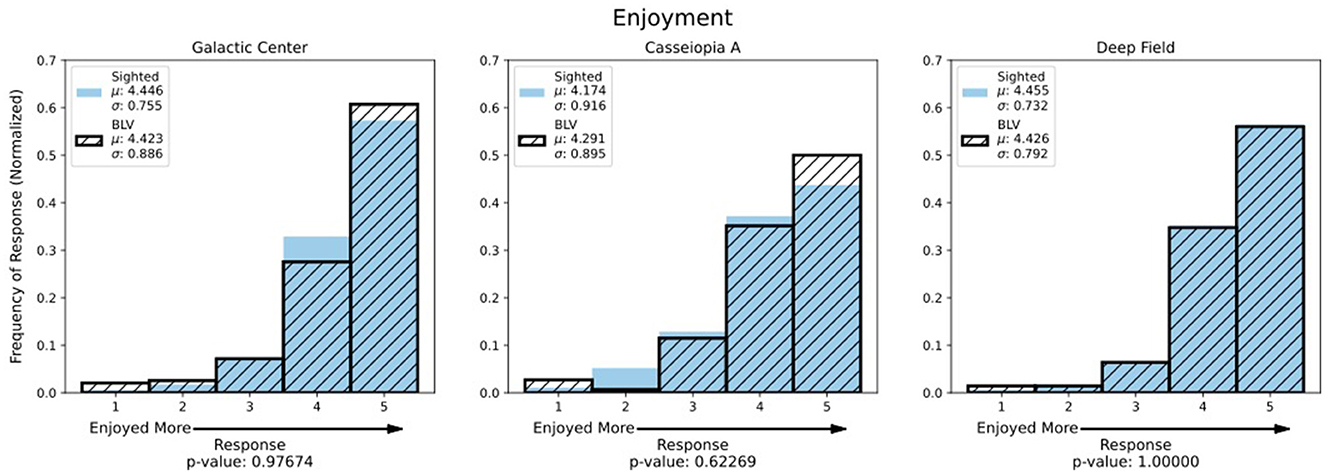

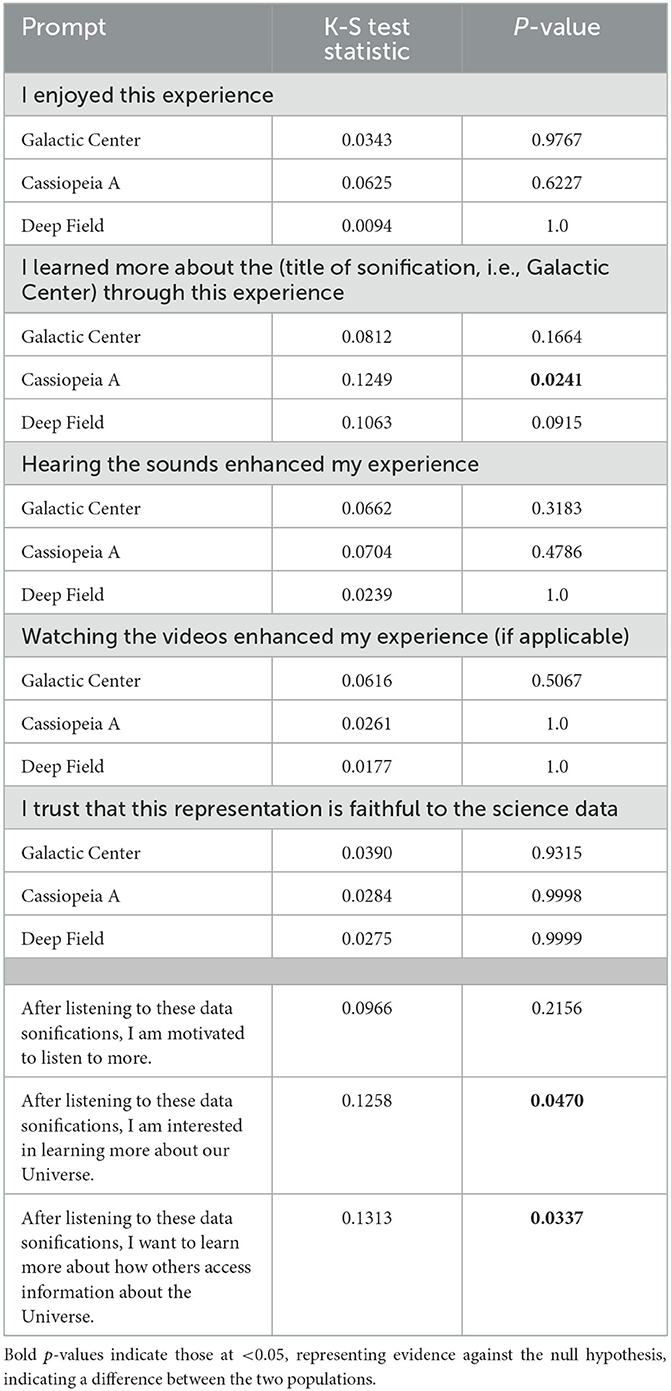

Figures 3–6 display responses to the sonification prompts, separated into BLV and sighted participants and displayed in order of sonification from left to right (Galactic Center, Cassiopeia A, and the Deep Field). We performed two-sided Kolmogorov–Smirnov (K-S) tests on each set of distributions using the ks_2samp function from the Python library SciPy.12 We elected to use the K-S test, a non-parametric test, because we did not expect the distribution of responses to our survey to be normal, which visual inspection of the data confirmed. We define a p-value < 0.05 as evidence against the null hypothesis and a p-value < 0.001 as strong evidence against the null hypothesis. Although our sample sizes differ between the BLV and sighted groups, the two-sided K-S test can accommodate these differences while maintaining validity, and the default “auto” setting we used can handle small sample sizes. However, results for the BLV group carry greater uncertainty because of the smaller number of BLV participants. The results of the K-S tests and p-values for all responses can be found in Table 2.
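To make the test concrete, the sketch below computes the two-sample K-S statistic, the largest vertical gap between the two groups' empirical cumulative distributions, and applies the p-value thresholds defined above. It is a dependency-free illustration only: the ratings are invented, the helper names are hypothetical, and the p-value itself is not computed here (SciPy's ks_2samp returns both the statistic and the p-value).

```python
from bisect import bisect_right

def ks_statistic(a, b):
    """Largest vertical gap between the two empirical CDFs."""
    a, b = sorted(a), sorted(b)
    points = sorted(set(a) | set(b))
    return max(
        abs(bisect_right(a, x) / len(a) - bisect_right(b, x) / len(b))
        for x in points
    )

def evidence_label(p_value):
    """Apply the thresholds stated in the text (0.05 and 0.001)."""
    if p_value < 0.001:
        return "strong evidence against the null"
    if p_value < 0.05:
        return "evidence against the null"
    return "no evidence against the null"

# Hypothetical Likert ratings (1-5) for unequal group sizes;
# these are NOT the survey data.
sighted = [1] * 5 + [2] * 10 + [3] * 30 + [4] * 80 + [5] * 75  # n = 200
blv = [3] * 3 + [4] * 10 + [5] * 17                            # n = 30

d = ks_statistic(sighted, blv)
labels = [evidence_label(p) for p in (0.620, 0.02407, 0.0005)]
```

The statistic depends only on proportions, so unequal group sizes pose no problem for computing it; the group sizes enter through the p-value, which is why the smaller BLV sample carries more uncertainty.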

Figure 3. These histograms depict the participants' ratings of their enjoyment of each sonification. A rating of one represents the least enjoyment (Disagree Strongly), and five represents the most enjoyment (Agree Strongly). We normalized the histograms for easier comparison between the sighted and BLV groups due to the large difference in sample size. Blue histograms represent the sighted group, and the black hatched histograms represent the BLV group. (Left) Enjoyment rating for the Galactic Center. (Middle) Enjoyment rating for Cassiopeia A. (Right) Enjoyment rating for the Deep Field.

Table 2. K-S test statistics and p-values for the sighted and BLV groups' responses to the survey prompts.

Figure 3 shows participant ratings for the prompt: “I enjoyed this experience.” Generally, all participants reported enjoying the sonifications, with the majority selecting 4 (Agree) and 5 (Agree Strongly). A higher number of BLV participants selected 5 for the Galactic Center and Cassiopeia A, whereas the enjoyment ratings for the Deep Field are almost identical for both groups. Cassiopeia A shows the most extensive range of ratings, and although more BLV participants selected the highest rating, the p-value (0.620) does not suggest a statistically significant difference between the two groups; however, it is notably lower than the p-values for the other sonifications (0.997 and 1.000, respectively).

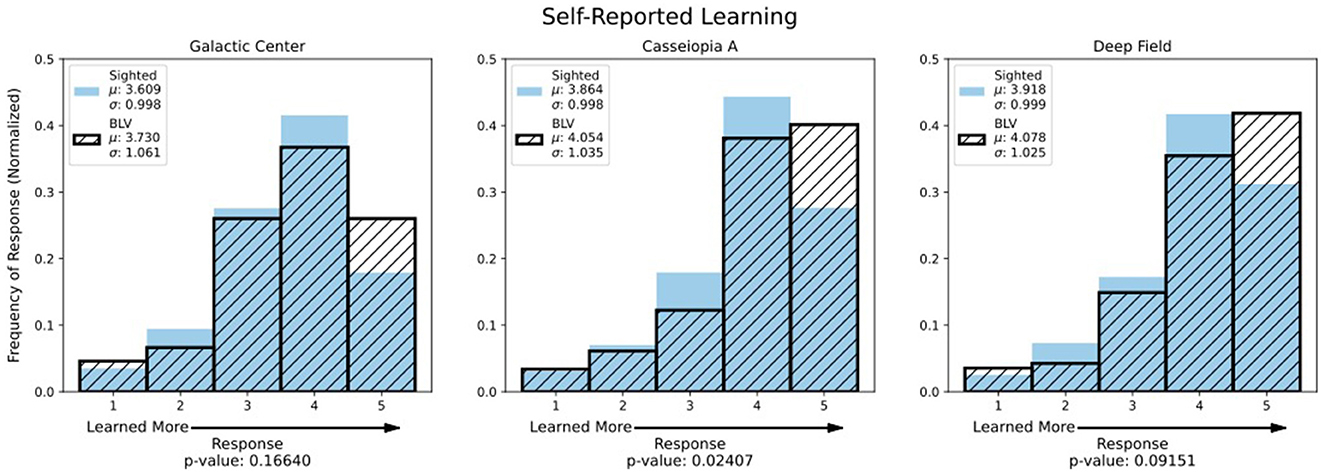

Ratings for the prompt: “I learned more about the [title of sonification, i.e., Galactic Center] through this experience,” are shown in Figure 4. In general, most participants felt they learned something about the cosmic sources. For each sonification, the BLV group rated learning more, particularly for Cassiopeia A, where the low p-value (p = 0.02407) suggests a statistically significant difference in the responses of the BLV and sighted groups. On average, both groups claimed to learn most about the Deep Field.

Figure 4. These histograms depict the participants' feelings on how much they learned from each sonification. Unless otherwise stated, these and all subsequent histograms follow the same conventions as Figure 3.

Figure 5 shows ratings for the prompt: “Hearing the sounds enhanced my experience.” Generally, participants felt that adding audio to the astronomical images enhanced their experience, particularly for the Deep Field. Interestingly, all the p-values are high, suggesting that both the sighted and BLV participants found their experience enhanced to the same extent.

Figure 5. These histograms depict the participants' agreement on whether their experience of the images was enhanced by adding sound.

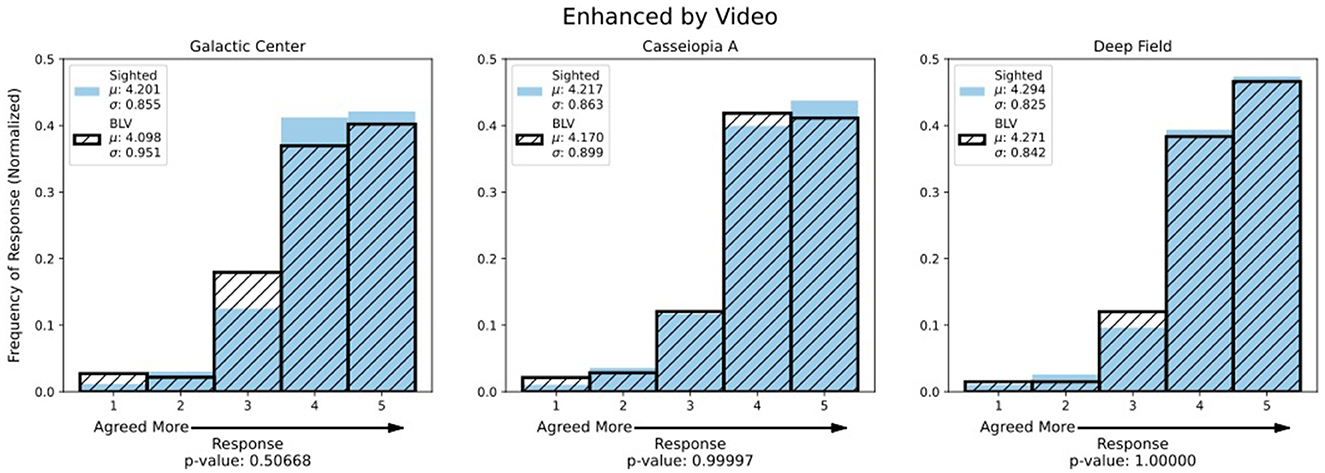

The responses to the prompt “Watching the video enhanced my experience” are depicted in Figure 6. The K-S test results for all three objects indicate that the responses from both groups are statistically similar, with the p-values for Cassiopeia A and the Deep Field suggesting the highest similarity. We note that across all three objects, both groups infrequently responded that they disagreed (1 or 2), with 4 and 5 being the most common responses, suggesting both groups generally found the video to be a beneficial addition to their experience.

Figure 6. These histograms depict the participants' rating of whether their experience was enhanced by watching the included video.

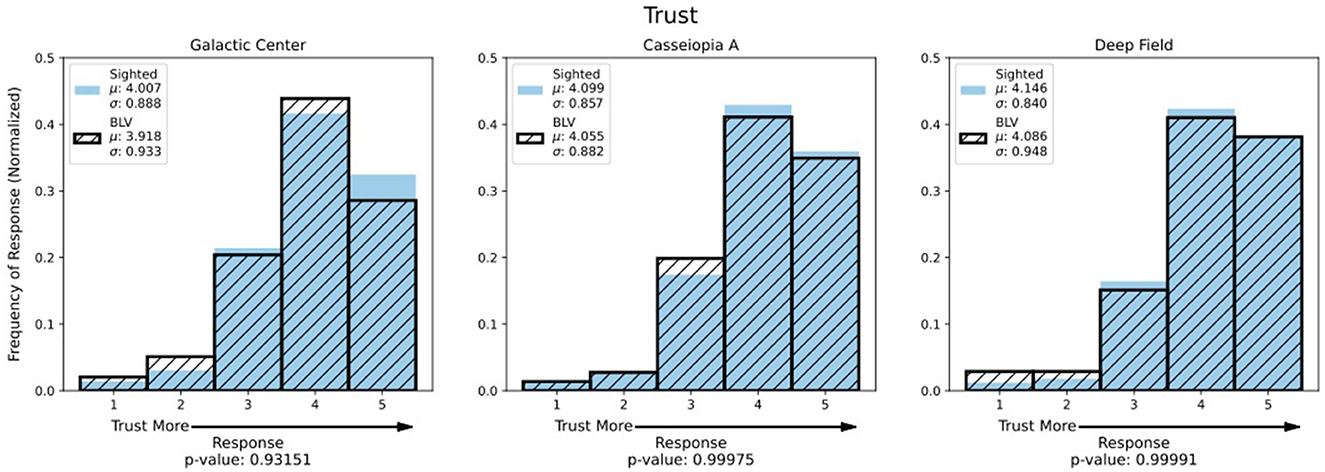

Responses to the prompt “I trust that this representation is faithful to the science data” are displayed in Figure 7. The frequency of 4 and 5 ratings indicating agreement suggests that participants believed the sonifications were scientifically accurate. The high p-values (all > 0.9) suggest no evidence that the trust levels differed between the groups.

Figure 7. These histograms depict the participants' agreement on whether they trusted the sonifications were scientifically accurate.

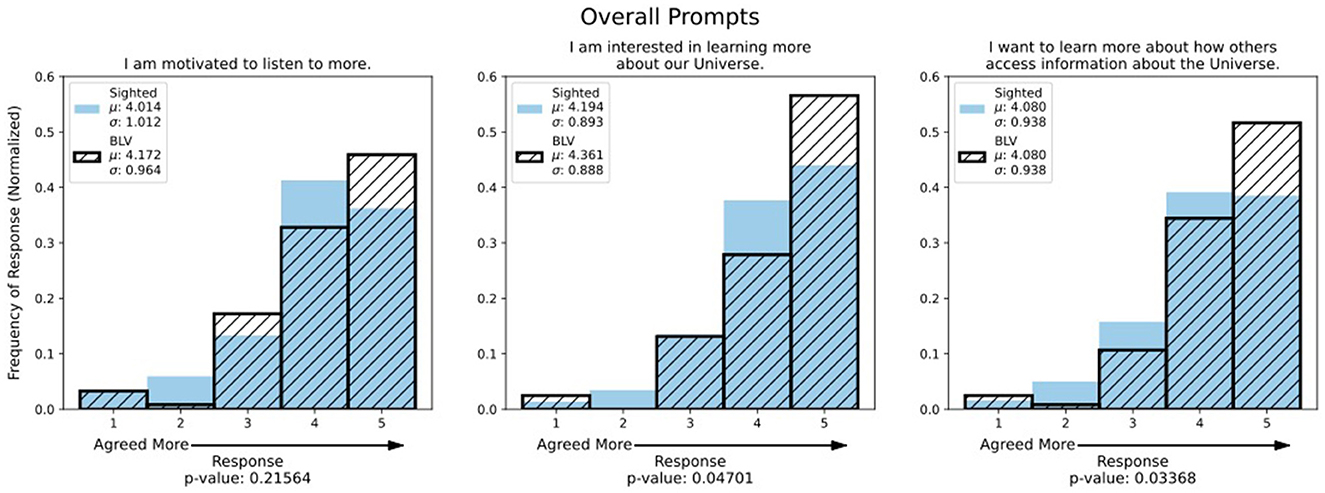

Figure 8 shows the ratings for three prompts given after listening to the sonifications: “I am motivated to listen to more [sonifications],” “I am interested in learning more about our Universe,” and “I want to learn more about how others access information about the Universe.” The p-value for the left-hand histogram implies no difference between the groups regarding whether they wanted to listen to more.

Figure 8. These histograms depict ratings for three prompts provided after participants had listened to all sonifications. (Left) Ratings for the prompt “After listening to these data sonifications, I am motivated to listen to more.” (Middle) Ratings for the prompt “After listening to these data sonifications, I am interested in learning more about our Universe.” (Right) Ratings for the prompt “After listening to these data sonifications, I want to learn more about how others access information about the Universe.” Each histogram here shows a different prompt rather than a different sonification; otherwise, the histogram conventions follow those of the previous figures.

The distribution of ratings regarding interest in continued learning about the Universe and how others access this information differed significantly between the groups (p-values < 0.05). In both cases, BLV participants responded 5 (Agree Strongly) more frequently than sighted users. Although sighted participants responded 5 with a lower frequency to these two prompts, the most common responses were still in agreement (4 and 5), indicating that sighted participants were also interested in learning more. Of the 2,203 sighted respondents to these questions, 1,708 responded in agreement to the prompt regarding being motivated to listen more, 1,798 responded in agreement to the prompt about being interested in learning more about the Universe, and 1,710 responded in agreement to the prompt regarding wanting to learn more about how others access information about the Universe. One sighted participant who answered the other two final prompts did not respond to the prompt about wanting to learn more about the Universe.

Figure 9 shows a word cloud13 displaying the terms participants used to respond to the prompt, “List up to three words to describe your emotional response to these data sonifications.” The size of each word corresponds to its frequency of use. For terms that pertain to a positive experience, the number of instances is as follows: The combined terms “curious” and “curiosity” totaled 329 instances, and “calm” showed the highest number of entries for a single term (326), followed by “interesting” (243). Additional terms included “relaxed/relaxing” (216), “amazed” (169), “wonder” (168), “beautiful” (133), “peaceful” (132), and “awe” (124). Negative terms also appeared, including (but not limited to) “boring/bored,” “confused/confusion,” “stress,” “disturbed,” “pointless,” “gimmicky,” and “scary,” but these appeared far less often.
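Tallies of this kind, including combined counts for related terms such as “curious” and “curiosity,” can be produced with a simple frequency count. The sketch below is illustrative only: the variant table, the tokenization rules, and the sample responses are assumptions, not the procedure or data behind Figure 9.

```python
from collections import Counter

# Hypothetical table that folds related word forms into one term.
VARIANTS = {
    "curiosity": "curious",
    "relaxing": "relaxed",
    "bored": "boring",
    "confusion": "confused",
}

def term_frequencies(responses):
    """Count up to three words per response, merging known variants."""
    counts = Counter()
    for response in responses:
        words = response.lower().replace(",", " ").split()[:3]
        for word in words:
            word = word.strip(".;:!?\"'")
            if word:
                counts[VARIANTS.get(word, word)] += 1
    return counts

# Invented free-text responses, not survey data.
example_responses = ["Curious, calm", "curiosity relaxing awe", "Calm; relaxed"]
counts = term_frequencies(example_responses)
```

A word-cloud renderer would then scale each term by its count, so that merged terms appear once at their combined size.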

Figure 9. This word cloud shows words provided by participants when asked to describe the sonifications. Larger words correspond to those used more frequently.

The first open-ended question asked, “What recommendations do you have to help the scientific community create better listening experiences?” There were no character limits imposed on the answers. Using manual inductive coding (Chandra and Shang, 2019) through sampling and re-coding, we collated responses into seven broad categories chosen based on the themes seen in the responses: general comments, technical, scientific, musical, educational, sensory, and accessibility-related. There were 1,417 responses after removing all non-descriptive responses, such as blanks or symbols (e.g., ///). A complete summary of the responses is available in the data repository.

Amongst the responses, we noted two frequent themes. The first was a common misunderstanding of sonification, both at its conceptual level (e.g., “Include actual sound from space”) and in the context of interpretation (e.g., “I don't fully understand the relationship between the sounds and what we are seeing”). These comments suggest an unfamiliarity with sonification as a form of data representation, and the audience may require more background to interpret this representation correctly. We teach students to read graphs and charts visually, so education in sonification might likewise be necessary, echoing the suggestions of Fleming (2023).

The second theme noted is the frequent suggestions regarding the assignment of pitches and other audio parameters to the data, ranging from musical suggestions (e.g., “Please don't stick to the equal temperament system in sound reproduction, so much scientific information is lost or misrepresented that way. Also, why link different things to different pitches suggesting differences in quality better represented by different timbre?”) to responses tagged as scientific (e.g., “If you're going to assign a sonification to individual elements, I think you're going to have to find a better way to differentiate between them than to just change the note on the scale. Maybe brainstorm a way to differentiate between them based on atomic weight or outer electron shell, assigning a sonification to the sounds the orbits might make.”). These responses reflect the question of standardization in sonification, much the same as the standards for visual data representation: how we represent different images or data types in a way that is both interpretable across different sonifications and auditorily pleasant. These standards could improve the feasibility of sonification education.

The second open-ended question asked, “If the person who created these data sonifications were here, what question would you ask them?” We coded these responses into the same seven categories. There were 1,656 responses after removing non-descriptive responses. Across the categories, many questions involved the purpose of these sonifications (e.g., “is the goal enchanting soundscapes or information transfer or enhancing information acquisition in non-visual.” and “By a glimpse to photo we can all have these information at once. So what is the use of this?”). Other questions inquired how the audio parameters were mapped to the image data (e.g., “Did you select the frequency distributions to try and make the sonifications tuneful, or are they evenly (linearly, logarithmically) spaced across the audio spectrum?”). The ubiquity of these questions indicates the relative novelty of and lack of familiarity with sonification as a data representation tool among general audiences.

Enjoyability

Participants across both groups rated their experience as enjoyable (Figure 3), with slightly higher ratings from the BLV group for the Galactic Center and Deep Field. The word cloud also demonstrates enjoyment (Figure 9), as the majority of words skew toward positive responses (e.g., “peaceful,” “wonder,” and “relaxing”). It is encouraging that many participants enjoyed the sonifications, demonstrating the benefit of accessible data even to sighted individuals. Figure 8 demonstrates participant interest in hearing more and suggests that the benefits of sonification extend beyond a learning tool for the BLV community to a general engagement tool. It also presents an opportunity to engage sighted groups regarding accessibility in astronomy.

The Deep Field showed the widest range of enjoyment, which was unexpected because we reduced the image resolution by a factor of four before sonifying it to produce more audible, consistent tones. We made this change to add musical regularity, designed to increase enjoyment. This sonification may have been the least popular because it was the shortest and contains synthetic sounds. Taken together with the responses to the first open-ended question, which show a participant preference for orchestral sounds, this result suggests a preference for instrumental sonifications. In addition, demographic information may be pertinent here; for example, do particular listeners prefer orchestral sounds?

Learning

The BLV participants reported learning more than the sighted participants (Figure 4), which we expected as some of this group may have lacked exposure to astronomical data due to the nature of this visual science. Generally, this result suggests that sound adds a layer to the experience (or creates an experience) that BLV participants rarely encounter. Furthermore, both groups reported learning more about these objects, suggesting that sound, when added to visual data, can improve self-reported learning regardless of sight, demonstrating the benefit of accessible learning models (see also Figure 3). Interestingly, participants reported learning the least about the Galactic Center, perhaps because it is a more generally known astronomical “object.” However, we should note that the Galactic Center was the first sonification heard, which may have affected self-reported learner ratings.

Enhanced experience

Figure 5 reinforces our finding that accessibility benefits all; the majority of participants found their experience enhanced with the addition of sound. Intuitively, we expected the BLV participants to find more significant enhancement from the audio; however, the similarity in responses between the two groups could reflect the spectrum of sight loss within the BLV community. Among the legally blind population, ~10–15% have no vision.14 The remaining 85–90% experience sight loss ranging from light perception to the inability to read text and see images without significant magnification. We did not request BLV participants report their degree of sight loss, so we do not know how many could see the images. Compounding this difficulty is that “visually impaired” among the BLV population has no widely agreed-upon definition, so our BLV sample could include individuals with sight better than the legal cutoff for blindness; however, it seems reasonable to assume that many of our BLV participants could see some of the visualizations included, accounting for the similarities in the groups' responses.

Trust

Many participants felt that the sonifications represented scientifically accurate data (Figure 7), and although encouraging, we must be mindful of potential bias. A level of trust may exist due to NASA's association with the project. Interestingly, the level of trust did not vary much between the groups, implying that an accompanying visual component did not increase trust. This result represents the only set of ratings for which the BLV group chose 4 (Agree) to a greater degree than 5 (Agree Strongly) across all sonifications, possibly indicating a critical area of future improvement; if enjoyment, self-reported learning and enhancement from multiple sensory components are high, perhaps trust is the essential aspect to improve. The BLV community chose a rating of 4 more for the Galactic Center image, a sonification with orchestral mappings. The relatively lower trust ratings for the sonifications from BLV participants might reflect the historical exclusion of this community from astronomy and the sciences more broadly.

Accessibility

The BLV participants wanted to listen to further sonifications, learn more about the process, and learn more about how others access information about the Universe (Figure 8). They stated agreement for these prompts more consistently than the sighted group. This difference in ratings indicates that our BLV group found their exposure to sonification rewarding, allowing them to learn more about the Universe through a novel method with which they may not have experience. This increased interest from BLV participants could represent a personal investment, supporting their community's requirement for accessible educational materials. Sighted participants rated these prompts with less enthusiasm. Still, they showed a positive trend toward interest, a promising sign that they felt motivated to learn more about information accessibility, potentially increasing awareness for the disabled community. The majority of participants indicated an ongoing interest in sonification following exposure to our study, aligning with our findings of enjoyment (Figure 3), self-reported learning (Figure 4), and feelings that sound enhanced the experience (Figure 5).

With the exception of the self-reported learning from the Cassiopeia A sonification (Figure 4), these overall prompts regarding motivation to learn more about the Universe and about how information about the Universe is accessed mark the only results in which the BLV and sighted responses differ to a statistically significant extent. This indicates that while BLV and sighted participants enjoy, trust, and report learning from individual sonifications to a similar degree, sonification as a whole appears to be more motivating to BLV participants than to sighted participants.
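Footnote 12 points to SciPy's `ks_2samp`, which suggests that the group comparisons rest on a two-sample Kolmogorov–Smirnov test. The sketch below shows only the core of that idea, the largest gap between the two groups' empirical cumulative distributions, in plain Python. The ratings are invented for illustration and are not survey data, and the helper names are hypothetical. It does not compute a p-value, so on its own it cannot establish significance.

```python
def ecdf(sample, x):
    """Fraction of values in `sample` that are less than or equal to x."""
    return sum(1 for value in sample if value <= x) / len(sample)


def ks_statistic(a, b):
    """Two-sample Kolmogorov-Smirnov statistic: the largest ECDF gap."""
    points = sorted(set(a) | set(b))
    return max(abs(ecdf(a, x) - ecdf(b, x)) for x in points)


# Hypothetical 1-5 agreement ratings for one prompt (not survey data).
blv_ratings = [5, 5, 4, 5, 4, 5, 3, 5]
sighted_ratings = [4, 3, 4, 5, 3, 4, 2, 4]

d = ks_statistic(blv_ratings, sighted_ratings)
print(f"KS statistic: {d:.3f}")
```

A statistic near 0 means the two rating distributions are nearly identical, while values closer to 1 indicate that one group's ratings sit consistently higher than the other's.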

Misconceptions

Responses to our first open-ended question regarding possible improvement (Section 6.1) revealed two potential misunderstandings. The first misconception concerns the source of the sound (i.e., the sounds are only representations of the data), which is rectifiable with better explanations of the sonification process. Similar misconceptions may also affect visually represented data, for example, the translation of X-ray data to visually accessible images, where the viewer might conclude that these celestial objects are visible to the human eye (Varano and Zanella, 2023). The second misconception concerns how sonification represents scientific data and requires more thought than the first. Misunderstanding how we represent the data echoes feelings of mistrust, perhaps due to sonification's novelty and the lack of exposure to this technique, which more exposure could remedy. It suggests that descriptions of the goals and the creation process should be central and involve careful and considerate communication.

This study represents a valuable contribution to accessibility in astronomy; however, it is not as rigorous as desired. We selected participants via a convenience sample, where they voluntarily chose to complete our survey after receiving the link from a newsletter (Chandra or APOD) or astronomy-related social media account. Due to the voluntary nature of participation, those involved may be more interested in astronomy and have a base of knowledge, possibly affecting their interpretation of the sonifications. By formulating a questionnaire that (in part) attempts to obtain opinions on sonification products produced by the authors, we may have introduced a social desirability bias, potentially causing participants to respond more favorably to the sonifications. A complete analysis of this effect is outside this work's scope, but we may consider it more thoroughly in future publications.

Our most significant limitation was the lack of BLV respondents, with the smaller sample size resulting in increased uncertainty in the distribution of their responses. Finally, our survey includes a United States-heavy participant distribution due to how we circulated the survey.
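The effect of sample size on uncertainty can be made concrete with a short calculation. The sketch below uses the normal approximation for the margin of error of a proportion; the group sizes (100 and 3,000) and the 80% agreement rate are hypothetical values chosen for illustration, not figures from the survey.

```python
import math


def proportion_margin(p, n, z=1.96):
    """Approximate 95% margin of error for a proportion p observed in n responses.

    Assumption: normal approximation to the binomial, which is reasonable
    when n is not too small and p is not close to 0 or 1.
    """
    return z * math.sqrt(p * (1 - p) / n)


# Hypothetical groups in which 80% of respondents agree with a prompt.
small_group = proportion_margin(0.8, 100)
large_group = proportion_margin(0.8, 3000)

print(f"n = 100:  +/- {small_group:.3f}")
print(f"n = 3000: +/- {large_group:.3f}")
```

Because the margin shrinks with the square root of the sample size, a group thirty times smaller carries a margin roughly five and a half times wider, which is why differences involving the smaller group are harder to establish.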

Scientists, data processors, and science communicators are failing to reach and communicate with BLV audiences. We should expand our priorities for processing and presenting information beyond images and present novel methods for those with and without sight loss to engage with science. The public availability of astronomy data does not necessarily equate to the true accessibility and equity of that data, much as a sidewalk in a high-traffic area improves pedestrian safety but remains inaccessible and inequitable without thoughtful design, such as curb cuts. This paper offers suggestions on potential means for universal design for learning (Bernacchio and Mullen, 2007) in astronomical data processing to improve access to scientific research.

Translating data into sonifications is similar to translating language; by considering cultural nuance, we can create sounds that retain astronomical information and impart an accessible mode for scientific communication. A key conclusion is that the sighted participants enjoyed, learned, and had their experience of astronomy enhanced by the sonifications to similar levels as the BLV participants. The responses from the BLV community reinforce the need for access, and the responses from the sighted community show the benefit to all. These results are typical when implementing accessible designs. For example, consider moving airport walkways, a requirement of the Americans with Disabilities Act15 often enjoyed by those without disabilities. Astronomy, at its core, is a visual science and provides a vital example of the necessity of sonified data for educational and outreach purposes; however, the lack of accessible materials for the BLV community is not specific to astronomy. A review of all potential avenues in which sonification could play an important role is outside the scope of this paper; suffice it to say that, at the very least, in all places where primary data representation is visual, there is a place for a sonified counterpart.

Furthermore, when considering our secondary research question, “Can translating scientific data into sound help enable trust or investment, emotionally or intellectually, in scientific data?” we urgently need accessible data to improve trust. Figure 7 (compared to Figures 3–5) and the first open-ended question demonstrate this. As referenced in the discussion (Section 4), both groups show some degree of mistrust that the sonifications accurately represent the scientific data. In some cases, there is a disconnect as to what the content is showing. We can only cultivate trust through consistent, considerate, and accurate communication. The BLV group generally trusted the data less than the sighted group. Without more detailed information on levels of sightedness, it is hard to determine whether this is due to the inability to compare the visual and audio elements or, perhaps, historical evidence for and societal expectation of astronomy as a purely visual endeavor.

The secondary research question, “Can such sonifications help improve awareness of accessibility needs that others might have?” was explored in Figure 8. The responses reflect that exposure to accessible science data enhances knowledge and accessibility for both groups. These results represent the accessibility needs of the BLV community and the willingness and engagement of the sighted community.

As we progress from this work, the potential long-term learning gains for respondents who engage with sonified data are an important consideration. A single exposure to our sonifications and related questions cannot quantify the long-term learning outcomes of the participants; however, such outcomes matter when implementing sonified materials in more formal educational settings, and it is essential to examine whether using multiple methods would reinforce learning outcomes and retention for students.

One more minor but no less critical conclusion is that participants prefer instrumental sonifications over synthesized sounds. This result is significant because the enjoyment and enrichment of the listener is predicated on the listenership, dictated by how many people listen or include sonifications in their communication efforts. Accessibility to astronomy and scientific data, generally, is still in its infancy. Astronomers need an accelerated effort with adequate resources to reach underserved populations. This project is an important step, but many more are needed.

Future work must focus on the active engagement of BLV participants while recognizing and accommodating the wide range of visual impairments within this non-homogenous group. Efforts could employ different sampling techniques to recruit a larger sample, particularly for a range of BLV individuals with a scope of astronomy familiarity. BLV participants without astronomy familiarity provide insight into how intuitive sonifications are, whereas participants with more familiarity can share how well sonifications match or enhance their understanding of the objects.

We acknowledge that the BLV category spans a broad range of sight loss that this study does not explore or quantify. Future research should ask participants to comment on the usefulness of the images accompanying the sonification as a proxy for measuring their functional vision. Researchers could also collect data on the accessibility software used while completing the survey (e.g., screen magnification, screen readers, Braille displays, and other methods) to understand whether BLV participants access the survey visually or often visually access their computers. Furthermore, one could ask for feedback regarding the visualizations to improve the accessibility of these data representations to those with low vision.

Astronomy communicators must continue to address and resolve misunderstandings of the sonification process by improving accompanying descriptions of the techniques used. These updates must consider the lens of trust in science and be mindful of creating minimal opportunities for miscommunication. To understand this better, we must capture data on the number of times a participant plays a sonification, providing a more objective measure of comprehensibility, intuitiveness, enjoyment, and a desire to understand.

Further studies could gauge the self-reported knowledge of music and technology. Many participants gave feedback on the musical quality, indicating an understanding of music theory, and many also gave technical feedback (bearing in mind that some technical proficiency is required to access the survey).

Although we collected participants' ages, we did so primarily to compare the representativeness of our sample to the overall U.S. population (see Table 3 in the Appendix) and provide thorough information regarding our participants. Future work could explore whether age correlates with enjoyment, self-reported learning, trust, and overall responses to the sonifications, although analysis of this is beyond the scope of our work. We could also explore the role of misconceptions with age. Future studies should be mindful that some participants may have hearing loss, which we do not report here, and could impact the response to sonifications. Hearing loss is more likely with increased age and could further impact the relationship between age and response to sonifications. Other demographic questions, in particular self-reported knowledge of astronomy, could also reveal interesting relationships with responses to sonification and can be explored in the future.
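One way such a follow-up analysis might begin is with a rank correlation between age and rating, which suits ordinal 1–5 responses. The sketch below implements Spearman's correlation in plain Python, with tied values sharing their average rank. The ages and ratings are invented for illustration, and the function names are hypothetical. A correlation of this kind would not separate age from hearing loss, which the paragraph above identifies as a possible confound.

```python
def average_ranks(values):
    """Rank values from 1 upward, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sum((a - mean_x) ** 2 for a in x) ** 0.5
    spread_y = sum((b - mean_y) ** 2 for b in y) ** 0.5
    return cov / (spread_x * spread_y)


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical ages and 1-5 enjoyment ratings (not survey data).
ages = [22, 35, 41, 58, 63, 70]
ratings = [5, 5, 4, 4, 3, 2]

rho = spearman(ages, ratings)
print(f"Spearman rho: {rho:.2f}")
```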

Finally, this work could extend to investigate actual learning outcomes, as opposed to self-reported learning (as in this study). However, this is outside this paper's scope and would involve a participant and control group learning with and without access to sonification.
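The controlled design described above could be sketched as follows. This is an illustration under stated assumptions, not a protocol from this study: participants are split at random into two equal groups, and learning is measured as the difference between a post-test and a pre-test score. The function names, group labels, and scores are hypothetical.

```python
import random


def assign_groups(participant_ids, seed=0):
    """Randomly split participants into a sonification group and a control group."""
    rng = random.Random(seed)  # fixed seed so the assignment is reproducible
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"sonification": shuffled[:half], "control": shuffled[half:]}


def mean_gain(pre_scores, post_scores):
    """Average improvement from pre-test to post-test across participants."""
    gains = [post - pre for pre, post in zip(pre_scores, post_scores)]
    return sum(gains) / len(gains)


# Hypothetical participant identifiers and quiz scores (not study data).
groups = assign_groups(range(1, 21), seed=42)
print(len(groups["sonification"]), len(groups["control"]))
print(mean_gain([2, 3], [4, 6]))
```

Comparing the mean gain of the two groups would then indicate whether access to sonification is associated with measured, rather than self-reported, learning.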

Input from the broader community is invaluable, and we are encouraged by the recommendations received and excited to implement them into new work. We look forward to collaborating with others throughout astronomy and related fields to make as much data available to as many people as possible. Additional resources are available for this paper on a companion GitHub (see text footnote 10) and a frozen Zenodo16 repository.

The original contributions presented in the study are included in the article/Supplementary material; further inquiries can be directed to the corresponding author.

The studies involving humans were approved by the Smithsonian Institutional Review Board. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study by way of a consent form at the beginning of the survey.

KA: Writing—original draft, Writing—review & editing. JS-S: Writing—original draft, Writing—review & editing. SK: Writing—original draft, Writing—review & editing. GS: Writing—original draft, Writing—review & editing. MR: Writing—original draft. MW: Writing—original draft. BH: Writing—original draft. LS: Writing—review & editing.

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This paper was written with funding from NASA under contract NAS8-03060 with the principal investigator working for the Chandra X-ray Observatory. NASA's Marshall Space Flight Center manages the Chandra program. The Smithsonian Astrophysical Observatory's Chandra X-ray Center controls science and flight operations from Cambridge and Burlington, Massachusetts. Additional support for the sonifications came from NASA's Universe of Learning (UoL). UoL materials are based upon work supported by NASA under award number NNX16AC65A to the Space Telescope Science Institute, with Caltech/IPAC, Jet Propulsion Laboratory, and the Smithsonian Astrophysical Observatory.

The authors gratefully acknowledge their colleagues at the Center for Astrophysics, NASA and particularly NASA's Astronomy Picture of the Day, for their gracious dissemination help with the study. JS-S acknowledges the Frist Center for Autism and Innovation in the School of Engineering at Vanderbilt University, who fund the Neurodiversity Inspired Science and Engineering Graduate Fellowship. SK acknowledges the Marshall Scholarship.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fcomm.2024.1288896/full#supplementary-material

1. https://www.nasa.gov/directorates/heo/scan/services/networks/deep_space_network/about

2. https://chandra.si.edu/sound/

5. @chandraxray

6. @apod

7. https://www.surveymonkey.com/

8. https://www.si.edu/osp/policies/human-subject-research

9. https://stri-sites.si.edu/permits/sd606/SD606.pdf

10. https://github.com/Jesstella/a_universe_of_sound

11. Breakdowns of demographic information collected for the survey can be found in the Appendix.

12. https://docs.scipy.org/doc/scipy/reference/generated/scipy.stats.ks_2samp.html

13. https://www.wordclouds.com/

14. https://dsb.wa.gov/dispelling-myths

15. Americans With Disabilities Act of 1990, Pub. L. No. 101-336, 104 Stat. 328 (1990).

Abbott, B. P. (2016). Observation of gravitational waves from a binary black hole merger. Phys. Rev. Lett. 116:061102. doi: 10.1103/PhysRevLett.116.061102

Alexander, R., Zurbuchen, T. H., Gilbert, J., Lepri, S., and Raines, J. (2010). “Sonification of ace level 2 solar wind data,” in The 16th International Conference on Auditory Display (ICAD 2010), ed. E. Brazil (Washington, DC: Georgia Institute of Technology), 39–40.

Ali, S., Muralidharan, L., Alfieri, F., Agrawal, M., and Jorgensen, J. (2020). “Sonify: making visual graphs accessible,” in Human Interaction and Emerging Technologies. IHIET 2019. Advances in Intelligent Systems and Computing, eds. T. Ahram, R. Taiar, S. Colson, and A. Choplin (Berlin: Springer), 454–459.

Arcand, K., and Watzke, M. (2017). Magnitude: The Scale of the Universe. New York, NY: Black Dog & Leventhal Publishers, Inc.

Arcand, K. K., Jubett, A., Watzke, M., Price, S., Williamson, K. T. S., and Edmonds, P. (2019). Touching the stars: improving NASA 3D printed data sets with blind and visually impaired audiences. J. Sci. Commun. 18:40201. doi: 10.22323/2.18040201

Arcand, K. K., Watzke, M., Rector, T., Levay, Z. G., DePasquale, J., and Smarr, O. (2013). Processing color in astronomical imagery. Stud. Media Commun. 1, 25–34. doi: 10.11114/smc.v1i2.198

Astronify (2022). A Python Package for Sonifying Astronomical Data—Turning Telescope Observations Into Sound! Astronify. Available online at: https://astronify.readthedocs.io/en/latest/ (accessed April 27, 2022).

Ballora, M. (2014). “Sonification strategies for the film Rhythms of the Universe,” in The 20th International Conference on Auditory Display (ICAD 2014) (New York, NY: Georgia Institute of Technology).

Ballora, M., and Smoot, G. S. (2013). Sound: The Music of the Universe. The Huffington Post. Available online at: http://www.huffingtonpost.com/mark-ballora/sound-the-music-universe_b_2745188.html (accessed March 3, 2023).

Bernacchio, C., and Mullen, M. (2007). Universal design for learning. Psychiatr. Rehabil. J. 31, 167–169. doi: 10.2975/31.2.2007.167.169

Chandra, Y., and Shang, L. (2019). Inductive Coding. Research Gate. Available online at: https://www.researchgate.net/publication/332599843_Inductive_Coding (accessed March 3, 2023).

DePasquale, J., Arcand, K. K., and Edmonds, P. (2015). High energy vision: processing X-rays. Stud. Media Commun. 3, 62–71. doi: 10.11114/smc.v3i2.913

Diaz-Merced, W. L. (2013). Sound for the Exploration of Space Physics Data, (Doctoral dissertation), University of Glasgow. Available online at: http://theses.gla.ac.uk/5804/ (accessed March 3, 2023).

Eclipse Soundscapes (2022). Eclipse. Available online at: http://www.eclipsesoundscapes.org/ (accessed April 27, 2022).

English, J. (2016). Canvas and cosmos: visual art techniques applied to astronomy data. Int. J. Modern Phys. D 26:105. doi: 10.1142/S0218271817300105

Fitch, W. T., and Kramer, G. (1994). “Sonifying the body electric: superiority of an auditory over a visual display in a complex, multivariate system,” in Auditory Display: Sonification, Audification, and Auditory Interfaces, ed. G. Kramer (Boston, MA: Addison-Wesley Publishing Company), 307–326.

Fizeau, H., and Foucault, L. (1845). Untersuchung über die Intensität des beim Davy'schen Versuche von der Kohle ausgesandten Lichts. Annalen der Physik 139, 463–476. doi: 10.1002/andp.18441391116

Fleming, S. (2023). “Hearing the light with astronomy data sonification,” in Presentation at the Space Telescope Science Institute. Day of Accessibility, Baltimore, MD. Available online at: https://iota-school.github.io/day_accessibility/ (accessed March 3, 2023).

Gravitational Wave Open Science Center (2022). Audio. Available online at: https://www.gw-openscience.org/audio/ (accessed April 27, 2022).

Harrison, C., Zanella, A., Bonne, N., Meredith, K., and Misdariis, N. (2022). Audible universe. Nat. Astron. 6, 22–23. doi: 10.1038/s41550-021-01582-y

Hurt, R., Wyatt, R., Subbarao, M., Arcand, K., Faherty, J. K., Lee, J., et al. (2019). Making the Case for Visualization [White Paper]. Available online at: https://arxiv.org/pdf/1907.10181.pdf (accessed March 3, 2023).

Kramer, G., Walker, B., Bonebright, T., Cook, P., and Flowers, J. H. (2010). Sonification Report: Status of the Field and Research Agenda (Faculty Publications, Department of Psychology), 444. Available online at: http://digitalcommons.unl.edu/psychfacpub/444 (accessed March 3, 2023).

Lenzi, S., Ciuccarelli, P., Liu, H., and Hua, Y. (2021). Data Sonification Archive. Available online at: https://sonification.design/ (accessed April 27, 2022).

Mapasyst (2019). The (False) Color World: There's More to the World Than Meets the Eye… Geospatial Technology. Available online at: https://mapasyst.extension.org/the-false-color-world-theres-more-to-the-world-than-meets-the-eye/ (accessed March 3, 2023).

Marr, B. (2015). Big Data: 20 Mind-Boggling Facts Everyone Must Read. Forbes. Available online at: https://www.forbes.com/sites/bernardmarr/2015/09/30/big-data-20-mind-boggling-facts-everyone-must-read/#1484f03d17b1 (accessed March 3, 2023).

McGee, R., van der Veen, J., Wright, M., Kuchera-Morin, J., Alper, B., and Lubin, P. (2011). “Sonifying the cosmic microwave background,” in The 17th International Conference on Auditory Display (ICAD 2011) (Budapest: Georgia Institute of Technology).

Meijer, P. (1992). An experimental system for auditory image representations. IEEE Trans. Biomed. Eng. 39, 112–121. doi: 10.1109/10.121642

Misdariis, N., Özcan, E., Grassi, M., Pauletto, S., Barrass, S., Bresin, R., et al. (2022). Sound experts' perspectives on astronomy sonification projects. Nat. Astron. 6, 1249–1255. doi: 10.1038/s41550-022-01821-w

National Aeronautics and Space Administration (2009). NASA's Great Observatories. Available online at: https://www.nasa.gov/audience/forstudents/postsecondary/features/F_NASA_Great_Observatories_PS.html (accessed March 3, 2023).

National Aeronautics and Space Administration (2020). Explore—From Space to Sound. NASA. Available online at: https://www.nasa.gov/content/explore-from-space-to-sound (accessed April 27, 2022).

National Aeronautics and Space Administration (2022). 5,000 Exoplanets: Listen to the Sounds of Discovery (NASA Data Sonification)—Exoplanet Exploration: Planets Beyond Our Solar System. NASA. Available online at: https://exoplanets.nasa.gov/resources/2322/5000-exoplanets-listen-to-the-sounds-of-discovery-nasa-data-sonification/ (accessed April 27, 2022).

Noel-Storr, J., and Willebrands, M. (2022). Accessibility in astronomy for the visually impaired. Nat. Astron. 6, 1216–1218. doi: 10.1038/s41550-022-01691-2

Parvizi, J., Gururangan, K., Razavi, B., and Chafe, C. (2018). Detecting silent seizures by their sound. Epilepsia 59, 877–884. doi: 10.1111/epi.14043

Rector, T., Arcand, K., and Watzke, M. (2015). Coloring the Universe, 1st Edn. Alaska: University of Alaska Press.

Rector, T., Levay, Z., Frattare, L., Arcand, K. K., and Watzke, M. (2017). The aesthetics of astrophysics: how to make appealing color-composite images that convey the science. Publ. Astron. Soc. Pacific 129:aa5457. doi: 10.1088/1538-3873/aa5457

Rector, T., Levay, Z., Frattare, L., English, J., and Pu'uohau-Pummill, K. (2007). Image-processing techniques for the creation of presentation-quality astronomical images. Astron. J. 133:510117. doi: 10.1086/510117

Sanz, P. R., Ruíz-Mezcua, B., Sánchez-Pena, J. M., and Walker, B. (2014). Scenes into sounds: a taxonomy of image sonification methods for mobility applications. J. Audio Eng. Soc. 62, 161–171. doi: 10.17743/jaes.2014.0009

Sawe, N., Chafe, C., and Treviño, J. (2020). Using data sonification to overcome science literacy, numeracy, and visualization barriers in science communication. Front. Commun. 5:46. doi: 10.3389/fcomm.2020.00046

Sinclair, P. (2012). Sonification: what where how why artistic practice relating sonification to environments. AI Soc 27, 173–175. doi: 10.1007/s00146-011-0346-2

Smith, L. F., Arcand, K. K., Smith, B. K., Smith, R. K., Smith, J. K., and Bookbinder, J. (2017). Capturing the many faces of an exploded star: communicating complex and evolving astronomical data. JCOM Sci. Commun. J. 16, 1–23. doi: 10.22323/2.16050202

Smith, L. F., Arcand, K. K., Smith, J. K., Smith, R. K., and Bookbinder, J. (2015). Is that real? Understanding astronomical images. J. Media Commun. Stud. 7, 88–100. doi: 10.5897/JMCS2015.0446

Smith, L. F., Smith, J. K., Arcand, K. K., Smith, R. K., Bookbinder, J., and Keach, K. (2011). Aesthetics and astronomy: studying the public's perception and understanding of non-traditional imagery from space. Sci. Commun. 33, 201–238. doi: 10.1177/1075547010379579

Sturdevant, G., Godfrey, A. J. R., and Gelman, A. (2022). Delivering Data Differently. Available online at: http://www.stat.columbia.edu/~gelman/research/unpublished/delivering_data_differently.pdf (accessed March 3, 2023).

Tatarewicz, J. N. (1998). The Hubble Space Telescope Servicing Mission. Available online at: https://history.nasa.gov/SP-4219/Chapter16.html (accessed March 3, 2023).

Varano, S., and Zanella, A. (2023). Design and evaluation of a multi-sensory representation of scientific data. Front. Educ. 8:1082249. doi: 10.3389/feduc.2023.1082249

Walker, B. N., and Nees, M. A. (2011). “Theory of sonification,” in The Sonification Handbook, eds. T. Hermann, A. Hunt, and J. G. Neuhoff (Logos Publishing House), 9–40.

Yeo, W. S., and Berger, J. (2005). Applications of Image Sonification Methods to Music. Available online at: https://www.researchgate.net/publication/239416537_Application_of_Image_Sonification_Methods_to_Music (accessed March 3, 2023).

Zanella, A., Harrison, C. M., Lenzi, S., Cooke, J., Damsma, P., and Fleming, S. W. (2022). Sonification and sound design for astronomy research, education and public engagement. Nat. Astron. 6, 1241–1248. doi: 10.1038/s41550-022-01721-z

Keywords: sonification, astronomy, accessibility, BLV, science outreach

Citation: Arcand KK, Schonhut-Stasik JS, Kane SG, Sturdevant G, Russo M, Watzke M, Hsu B and Smith LF (2024) A Universe of Sound: processing NASA data into sonifications to explore participant response. Front. Commun. 9:1288896. doi: 10.3389/fcomm.2024.1288896

Received: 18 September 2023; Accepted: 09 February 2024;

Published: 25 March 2024.

Edited by:

Elif Özcan, Delft University of Technology, Netherlands

Reviewed by:

Anita Zanella, National Institute of Astrophysics (INAF), Italy

Copyright © 2024 Arcand, Schonhut-Stasik, Kane, Sturdevant, Russo, Watzke, Hsu and Smith. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jessica Sarah Schonhut-Stasik, amVzc2ljYS5zLnN0YXNpa0B2YW5kZXJiaWx0LmVkdQ==

†These authors have contributed equally to this work
