- 1Department of Language, Literature, and Communication, Network Institute, Vrije Universiteit Amsterdam, Amsterdam, Netherlands

- 2Department of Modern Languages and Cultures, Centre for Language Studies, Radboud University Nijmegen, Nijmegen, Netherlands

- 3Faculty of Social Sciences, Donders Institute for Brain, Cognition and Behaviour, Radboud University Nijmegen, Nijmegen, Netherlands

Different levels of conceptual similarity in equivalent visual structures may determine the way meaning is attributed to images. The degree to which two depicted objects are of the same kind limits interpretive possibilities. In the current research, visual hyponyms (objects of the same kind) were contrasted with visual metaphors and unrelated object pairs. Hyponyms are conceptually more similar than a metaphor's source and target, or two unrelated objects. Metaphorically related objects share a ground for comparison that is lacking between unrelated objects. We expected viewers to interpret hyponyms more quickly than metaphors or unrelated objects. For liking, there were competing predictions: hyponyms are appreciated more because they are easier, or metaphors are liked more because successful cognitive effort is rewarded. In the first experiment, viewers were asked to identify relationships in 27 object pairs. Hyponyms were identified faster than metaphors, and metaphors faster than unrelated objects. In the second experiment, with the same materials, viewers were asked to rate appreciation for each object pair. This reduced viewing times substantially. Appreciation was higher for hyponyms than for visual metaphors. In a third experiment with the same materials, exposure duration was varied. Hyponyms were preferred to metaphors and unrelated objects irrespective of exposure duration.

1. Introduction

How easily do viewers attribute meaning to a pair of visual objects? And what type of object pairs do they prefer? The alignment of two things, as in metaphors, has been studied extensively, in cognitive science and psycholinguistics as well as in the field of advertising. In cognitive psychology and psycholinguistics, several frameworks describing the processing of verbal metaphors have been developed (Giora et al., 2004; Bowdle and Gentner, 2005; Gibbs, 2011; Brugman et al., 2019). Although these frameworks claim to be universal, they have rarely been applied to other domains, in this case, the visual domain.

In the field of advertising, on the other hand, visual metaphors have been the subject of study on many occasions, and the focus is on appreciation, not on understanding (McQuarrie and Mick, 1999; Phillips and McQuarrie, 2004; McQuarrie and Phillips, 2005; Lagerwerf et al., 2012; Van Mulken et al., 2014). In this domain, the predominant frameworks are of a different nature, namely of persuasive communication. On the basis of Relevance Theory (Sperber and Wilson, 1986), metaphors are considered ambiguous by nature: they have stopping power, incite reflection, and are better liked than straightforward communication (McQuarrie and Mick, 1999). Typical of advertising research is the emphasis on liking: messages are unlikely to be fully processed when the addressee is less inclined to do so. Another theory that focuses on the liking of visuals is Fluency Theory, which claims that the ease with which a visual message can be processed is indicative of how much it is liked (Reber et al., 2004). Surprisingly, appreciation plays no role at all in cognitive and psycholinguistic approaches to verbal metaphors. In this paper, we wish to bring these two approaches to metaphor together.

In a first experiment, we will investigate whether the findings in cognitive science and psycholinguistics also apply to the visual domain, and then, in a second experiment, we will examine the implications of the advertising take on visual metaphor. Finally, we will try to bridge the two approaches in a third experiment. We will do this by using three types of object pairs, for which we invite participants to make a connection between two elements. We will assess their interpretation (experiment 1), their appreciation (experiment 2), or both (experiment 3), using conceptual similarity as a discriminating factor (Van Weelden et al., 2014).

One of the most general and influential definitions of metaphor is the one formulated by Lakoff and Johnson, as “understanding and experiencing one kind of thing in terms of another” (Lakoff and Johnson, 1980, p. 5). This definition applies to all modalities, so both to verbal and visual kinds of things, since the authors intended to study metaphor as a conceptual rather than as a linguistic phenomenon. Still, most of cognitive scientific and psycholinguistic metaphor research is based on linguistic phenomena rather than on visual metaphors (Bowdle and Gentner, 2005; Gibbs, 2008; Brugman et al., 2019; Nacey et al., 2019).

There has been a lively debate around the way in which metaphors are processed. According to the Standard Pragmatic Model, people initiate metaphoric interpretation once they realize that a literal interpretation fails (Grice, 1975; Searle, 1979). Relevance Theory, although pointedly different from Gricean pragmatics, considers “speaking metaphorically” as a form of “loose talk,” because metaphors do not describe literally what is meant, but they invite listeners to infer the appropriate contextual meanings (Sperber and Wilson, 1986; Tendahl and Gibbs, 2008). Other researchers have shown that although the literal meaning does not have to be discarded before the metaphorical meaning is activated, the processing of verbal metaphors is costlier (Coulson and Van Petten, 2002). The costs rise because “creating a bridge between dissimilar semantic domains (…) should require considerable working memory capacity for both access and mapping processes” (Blasko, 1999, p. 1679). A number of studies argue that a literal meaning is not always processed first before a figurative one and acknowledge the role of context, salience, aptness or familiarity (Katz, 1989; Giora, 2003; Glucksberg and Haught, 2006; Utsumi, 2007).

Typical of metaphor is that a target is interpreted in terms of a source, based on a common ground between the two. The ease with which this common ground can be found may depend on how familiar receivers are with the combination of source and target. Bowdle and Gentner (2005) describe the “career of metaphor,” and show that once receivers have grown used to a combination of a verbal source and target, they no longer make a comparison, but interpret the source directly as a conventional category. Other scholars claim that it is not so much conventionality, but aptness or semantic neighborhood that predicts the ease with which a verbal metaphor is interpreted (Glucksberg and Haught, 2006; Utsumi, 2007). Katz (1989) prefers the term semantic distance, which determines the ease with which elements evoke similar elements, or analogous semantic content. In the same vein, Utsumi (2007) introduces the concept of interpretive diversity, where the number of features involved is indicative of the richness and complexity. The higher the interpretive diversity, the more processing is needed to arrive at a metaphorical interpretation. Likewise, Giora (2003) introduced salience (the prominence of semantic features) as an explaining factor. Conceptual similarity between source and target has been a topic in the study of visual metaphor, with several conceptualizations and varying results (Tourangeau and Sternberg, 1982; Katz, 1989; Gkiouzepas and Hogg, 2011; Van Weelden et al., 2011; Jia and Smith, 2013). Tourangeau and Sternberg even suggest that “[t]he best metaphors involve two diverse domains (more distance between domains making for better metaphors) and close correspondence between the terms within the two domains” (Tourangeau and Sternberg, 1982, p. 203). Since we aim to investigate the cognitive effort involved in processing visuals (in terms of viewing times), we decided to use this definition of conceptual similarity as a discriminating factor (Magliano et al., 2017).

Metaphor research in the visual domain generally focuses on manipulating the impact of the rhetorical structure in which a source element is depicted: an object is either presented in a metaphorical presentation (with a target object) or in a straightforward presentation (as a unique object), thus comparing a depiction of two objects (source and target) to the depiction of one object (target; Chang and Yen, 2013; Van Mulken et al., 2014). This way, content and meaning are always inherently and fundamentally different between variants. Other researchers typically tested unique types of metaphor, varying target, source and rhetorical figure in each variant (McQuarrie and Phillips, 2005; Gkiouzepas and Hogg, 2011). In the experiments described in the present article, we decided to focus on the type of relationship between two objects. Phillips and McQuarrie (2004) speak of “meaning operation” and distinguish connection (metonymy or association) and comparison (simile or metaphor). However, non-figurative meanings may also be visualized. An important aspect of a visualized relation between objects is the degree to which the objects are alike, or their conceptual similarity (Van Weelden et al., 2011). We distinguished three types of relationship as a function of conceptual similarity: hyponyms (visual representations of two of a kind, with a high conceptual similarity), comparisons (intermediate conceptual similarity) and unrelated objects (low conceptual similarity).

Phillips and McQuarrie (2004) also distinguish different visual structures: juxtaposition (source and target next to each other), fusion (merged presentation of source and target), and replacement (only one element visualized). We decided to use only juxtaposition in our studies, because it allows for more interpretations than fusion (Lagerwerf et al., 2012). Types of relationship are quite hard to manipulate with replacements.

We investigated these three types of relationships between two depicted objects in a context-free environment, in order to eliminate biasing cues of intended meaning. We illustrate this with the image of a bar of soap next to a bottle of glue (see Figure 1). First, a viewer might infer that the objects are two of a kind. In their comprehensive work on visual grammar, Kress and Van Leeuwen analyze this type of object presentation as a “classification” (Kress and Van Leeuwen, 2020); here, we refer to them as hyponyms. Apples and oranges belong to the class of fruit. Pipes and cigarettes are tobacco products. Bars of soap and bottles of glue do not seem to belong to the same overarching class. The conceptual similarity between hyponyms is high. However, a bottle of glue and a bar of soap are quite different.

If the presented objects are not two of a kind, viewers might experience more incongruity in processing the image. This would urge them to put more cognitive effort into finding an alternative interpretation. Viewers might then map a prototypical property of the one object onto the other. In a metaphorical type of relation, the objects are bound by the same ground. Cigarettes and bullets share the category of “potentially killing.” USB flash drives and sponges share the category of “storage and containers.” We consider object pairs in a metaphorical relation to be intermediately conceptually similar. This will be reflected in the term “visual comparison,” for objects that are not semantically similar, but may have a ground for comparison.

In the case of a bar of soap depicted next to a bottle of glue, viewers might unlock inferences like “this soap is sticky,” or “this glue has a nice scent.” They thus create an ad hoc category, a category that does not exist yet, for which a new ground for comparison is invented (Barsalou, 1983). When viewers do not succeed in finding a satisfactory interpretation of the relationship between the two objects, they may consider the juxtaposition of the two objects as fortuitous, or coincidental, and then the objects are considered as unrelated (the third type of relationship, with a low conceptual similarity).

By exposing viewers to juxtapositions of the three types of relationships mentioned above, it will be possible to test how meaning attribution differs between object pairs. The three types mentioned above differ from one another with regard to the type of semantic relationship. Conceptual similarity is high in the case of hyponyms, and low in the case of unrelated objects. Conceptual similarity is intermediate in the case of metaphors because the objects are not two of a kind but share a common ground. This way, the types of relationships could be ordered along an ordinal scale of conceptual similarity. Conceptual similarity predicts that viewers will take more time to interpret unrelated objects than metaphors and hyponyms. We also hypothesize that hyponyms are interpreted faster than metaphors and unrelated objects.

Note that some researchers believe that viewers may always succeed in finding a ground for comparison (see e.g., Koller, 2009). We hypothesize that it will at least take them more time to do so. When confronted with two unrelated objects, viewers need more time to create a ground for comparison than with more conventional metaphorical relations (based on salience, familiarity, or otherwise).

H1: Juxtaposed object images will be identified faster when they represent hyponyms, compared to visual metaphors and unrelated pairs; visual metaphors will be identified faster than unrelated object pairs.

In Experiment 1, we will test H1, and in the manipulation check we will also check how successful participants are in deriving a meaning relation between the objects. Our theorizing about conceptual similarity is based on cognitive approaches to verbal metaphors, which aim to understand how people attribute meaning to utterances. We apply it to images consisting of juxtaposed objects.

2. Experiment 1

In a first online experiment, we investigated the effect of different levels of conceptual similarity between juxtaposed object images on the cognitive effort invested in identifying the relation between the presented objects.

2.1. Materials and methods

We operationalized cognitive effort as ease of Identification (in terms of viewing time). Three groups of stimuli were developed: hyponyms (9), comparisons (9) and unrelated objects (9); in total, 27 juxtapositions (included in the Supplementary material). Three versions of the questionnaire were developed, so that each respondent saw nine juxtapositions (three of each type).

2.1.1. Respondents

Respondents were recruited through social network connections, including outside academia. No financial reward or credit points could be earned. Of the 96 respondents who started the experiment, 65 completed it (67.7%). Their age varied between 16 and 59 years (M = 30.9 years, SD = 11.9), and 23.4% were male. 73.4% of the respondents were following or had completed a higher education program. None of the demographic variables significantly influenced the findings in any of the three experiments reported here. All data in the three experiments were analyzed anonymously.

2.1.2. Materials

Development and pretest. Images were collected of daily-usage products, in order to create different combinations with the same objects, thus neutralizing particular object effects (Hodiamont et al., 2018). The researchers assembled an initial set of 36 object pairs, with 12 pairs of hyponyms, 12 visual comparisons and 12 unrelated objects. First, it was checked whether the semantic similarity of the hyponyms was higher than the similarity between the objects in the other types of pairs. Semantic similarity between the objects was calculated with online WordNet semantic agreement scores (Postma et al., 2016). Online WordNet systematically connects meaning properties of lexical units. Grounds for comparison are not a meaning property in WordNet, and thus metaphorical meanings are not expressed in terms of semantic similarity. We used a threshold to distinguish types: hyponyms had a similarity score higher than 1.3, while comparisons and unrelated objects had a score lower than 1.1 (semantic similarity scores are included in the materials overview in the Supplementary material). Hyponyms, comparisons and unrelated objects were further distinguished by analyzing the responses of 27 respondents to open questions that asked them to describe the relations they inferred from the images they were exposed to. These responses were coded for the number of strong implicatures (see Section 2.1.3), recognition of a ground for comparison (indicating metaphor), and mention of unexpectedness. This way, 27 stimuli were selected, distributed over three versions. Whenever possible, each object occurred in three combinations (in different relation types), one per version. The order of source and target (when applicable) was counterbalanced. We did not expect order effects, however: Hodiamont et al. (2018, p. 177) tested comparable stimuli specifically for source-target order effects and did not find them.

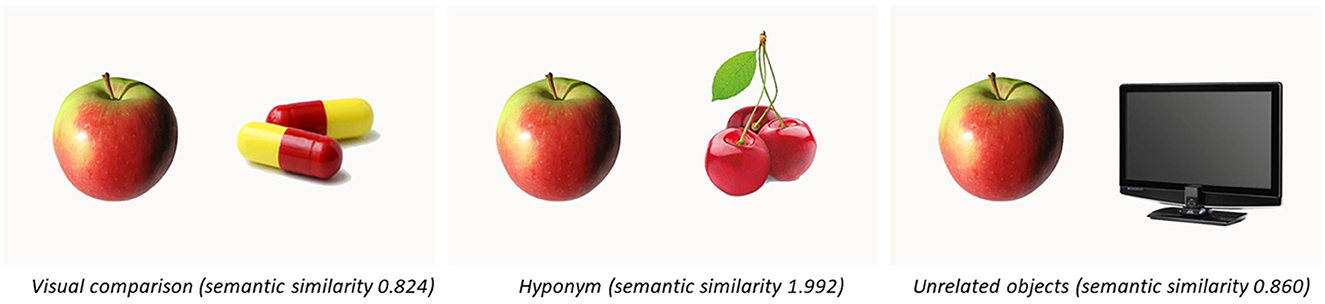

In Figure 2, examples are presented for each type of object pair containing an apple. One pair represents hyponyms (an apple and a cherry both belong to the class “fruit”), another represents visual comparisons (an apple and a pill share the ground for comparison “healthy”) and the third pair is an example of unrelated objects (no or new ground for comparison: an apple and a television apparently have nothing in common). The stimuli were distributed over three versions, with an object occurring only once per version.

Figure 2. Examples of juxtapositions in three types: visual comparison, hyponym, and unrelated objects, with their semantic similarity scores from online WordNet.

2.1.3. Instrumentation

Respondents were asked to press the button “next” after they had taken a close look at the image (with both objects). Their viewing time was measured (ease of Identification). In the online survey software Qualtrics, viewing times are collected by registering the respondent's local computer time between an initial and second click (Qualtrics, 2018). Because of the prior instruction and practice, respondents knew they had to describe the relation between the objects once they had pressed “next.” Hence, they used viewing time to determine the relation. Longer viewing times represent more cognitive effort in Identification.

With an open question, respondents were asked to describe in one to ten words what the objects had in common. It was made clear in the instruction that they also could enter “no relation.” This made it possible to recode the respondents' interpretation in the variables Strong Implicature and No Relationship. By “implicature,” we mean a viewer generated interpretation or inference, chosen as the most relevant association when seeing a combination of objects (Sperber and Wilson, 1986; Phillips, 1997). An implicature is strong if most respondents (>50%) derive the same interpretation of a stimulus. Hence we have operationalized conventionality (Bowdle and Gentner, 2005). An implicature is weak if it occurs in only a few responses (<50%). We may call weak implicatures idiosyncratic because they differ between individuals (McQuarrie and Phillips, 2005). The variables Strong Implicatures and No Relationship were used to check whether our manipulations were correct.
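The coding rule for strong implicatures can be illustrated with a minimal sketch. It assumes the open responses have already been hand-coded into interpretation labels; the function name, the labels, and the example data are hypothetical and not taken from the study. It also assumes that “no relation” answers count toward the total number of responses but cannot themselves be an implicature, which the text does not state explicitly. A share of exactly 50% is not covered by the definition above and is treated here as not strong.

```python
from collections import Counter

def strongest_implicature(coded_responses, no_relation_label="no relation"):
    """Return (label, share, is_strong) for the most frequent interpretation.

    An implicature counts as strong when more than 50% of all responses
    to a stimulus share the same coded interpretation.
    """
    total = len(coded_responses)
    # Assumption: "no relation" answers are tallied separately (No Relationship).
    counts = Counter(r for r in coded_responses if r != no_relation_label)
    if total == 0 or not counts:
        return None, 0.0, False
    label, n = counts.most_common(1)[0]
    share = n / total
    return label, share, share > 0.5

# Hypothetical coded responses for one stimulus (not study data)
responses = ["healthy", "healthy", "healthy", "round", "no relation"]
print(strongest_implicature(responses))  # ('healthy', 0.6, True)
```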

2.1.4. Procedure

Respondents were invited, via social media, to participate in a Qualtrics experiment on “human creativity” (Qualtrics, 2018). They read an instruction with three examples and sample questions. Respondents were shown a total of nine images (in random order). Before exposure to an object pair, respondents were asked to view the image and press “next” when they were done. Then, they were asked to describe the relation between the objects or respond with “no relation” if they had not seen any. After nine images and description questions the respondents reported their age, gender, and education, the device with which they had completed the questionnaire, and whether they were disturbed during the experiment. Participation lasted ~6 min.

2.1.5. Data analysis

Viewing times were measured in milliseconds. Since viewing times may have outliers in length of viewing (due to distracted respondents, or other reasons out of our control), all viewing times higher than the mean value plus twice the standard deviation were deleted.
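As an illustration of this trimming rule, the sketch below removes every viewing time above the mean plus twice the standard deviation. It assumes the cutoff is computed once over all pooled viewing times and uses the sample standard deviation; the text does not specify either choice, and the function name and data are hypothetical.

```python
from statistics import mean, stdev

def trim_slow_viewing_times(times_ms, n_sd=2.0):
    """Keep only viewing times at or below mean + n_sd * SD."""
    # Assumption: one pooled cutoff, sample SD (n - 1 denominator).
    cutoff = mean(times_ms) + n_sd * stdev(times_ms)
    return [t for t in times_ms if t <= cutoff]

# Hypothetical viewing times in milliseconds (not study data)
times = [3000, 3500, 4000, 4200, 3800, 3600, 4100, 3900, 3700, 60000]
kept = trim_slow_viewing_times(times)
print(len(times) - len(kept), "viewing time(s) removed")
```

Because the rule is one-sided, very short viewing times are retained; only unusually long ones are dropped.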

Data were analyzed using linear mixed-effects regression with respondents and stimuli as random effects, with identification as the dependent variable and type of relationship (hyponym, comparison and unrelated) as predictor variable, using the package GAMLj in Jamovi, an interface for R (Gallucci, 2019; R Core Team, 2021; The jamovi project, 2021). The omnibus F-test and the t-test use Satterthwaite approximations for degrees of freedom.

In all models, orthogonal sum-to-zero contrast coding was applied to our categorical fixed effects (i.e., type of relationship). For type of relationship, there were two contrasts: the first contrast compared hyponym (reference, coded as 0) with comparison (1), the second contrast compared hyponym to unrelated (2). We also applied alternative contrast coding to examine the third contrast (i.e., comparison vs. unrelated).

For each model, a stepwise variable selection procedure was conducted in which non-significant predictors were removed to obtain the most parsimonious model.

2.2. Results

We first checked whether respondents distinguished between Types of Relationship in terms of number of Strong Implicatures and of mentions of No Relationship.

2.2.1. Manipulation check

Some researchers claim that any two objects can always create a metaphor (Koller, 2009); others find that viewers do not always succeed in interpreting unrelated objects in juxtaposition (Van Weelden et al., 2011).

To check whether respondents could differentiate between types of relationship, we used the measures of strong implicatures and no relation. We expect the fewest strong implicatures for unrelated objects, because a ground for comparison has to be created by each respondent (Phillips, 1997). We also expect that hyponyms will get more strong implicatures, because of their higher semantic similarity (see Section 2.1.2). We expect the fewest mentions of no relation with hyponyms, and the most with unrelated objects.

None of the responses to the unrelated objects contained a strong implicature. Respondents struggled with finding a common denominator. Although many found no connection between the two objects (“none,” “no relationship,” “no connection”), others invented an ad hoc ground for comparison. In the case of the cigarette-cherry combination for instance, individual respondents invented categories like “both are natural products,” “cigarette with a cherry taste,” “both are luxury products,” “both are round,” “both are consumed via the mouth,” “both can be seen as sexy” etc. For hyponyms, strong implicatures were mentioned more often (146 times) than for comparisons [116 times, χ² = 11.68, p < 0.001, Cramér's V = 0.17, N = 386]. Note that respondents cannot be forced to all make the same inferences to visual hyponyms. It is especially the absolute lack of strong implicatures for unrelated objects that sustains this side of the manipulation check.

Responses to hyponyms never contained a mention of no relation. With regard to the other two types of relationship, more responses of no relation occurred for unrelated objects (71 times), compared to comparisons [21 times; χ² = 36.74, p < 0.001, Cramér's V = 0.31, N = 385]. Especially the lack of no relation mentions for hyponyms sustains this part of the manipulation check.
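The effect sizes reported for the manipulation check can be related to the test statistics with a short sketch. It reads the reported statistics (11.68 and 36.74) as chi-square values and assumes a 2 × 2 table in each case (two types of relationship by presence or absence of the coded response), so that Cramér's V reduces to the square root of chi-square divided by N. The table dimensions are an assumption; they are not stated in the text.

```python
from math import sqrt

def cramers_v(chi_square, n, n_rows=2, n_cols=2):
    """Cramér's V = sqrt(chi2 / (N * min(rows - 1, cols - 1)))."""
    # Assumption: a 2 x 2 contingency table unless other dimensions are given.
    return sqrt(chi_square / (n * min(n_rows - 1, n_cols - 1)))

print(round(cramers_v(11.68, 386), 2))  # 0.17 (strong implicatures)
print(round(cramers_v(36.74, 385), 2))  # 0.31 (no relation)
```

Under these assumptions, both values match the reported effect sizes.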

Since, for both measures, comparisons differed significantly from the type of relationship they were compared with, the manipulation check confirms a successful operationalization of the three types of relationship in object pair images: hyponyms, comparisons and unrelated objects.

2.2.2. Identification

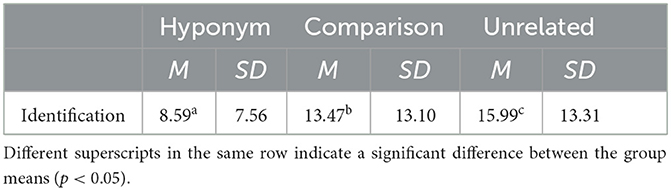

Table 1 shows the means and standard deviations of the dependent variable for the three types of relationships.

Table 1. Means and standard deviations of identification (viewing time in s), for three types of relationship between juxtaposed objects (N = 65).

The analysis showed a main effect of type of relationship on Identification [F(2, 6) = 19.88, p = 0.002]. Contrast analyses showed that a hyponym was identified faster than a comparison [β = 4.26, SE = 1.13, t(6) = 3.75, p = 0.009] and unrelated objects [β = 7.14, SE = 1.14, t(6) = 6.27, p < 0.001]. In addition, comparison differed significantly from unrelated objects [β = 2.89, SE = 1.12, t(6) = 2.57, p = 0.043]. This means that H1 can be accepted.

2.3. Discussion

In Experiment 1, we investigated how conceptual similarity between visual object pairs in juxtaposition determined the cognitive effort of respondents. The expectation was that hyponyms were identified fastest, comparisons more slowly, and that unrelated objects were slowest to be reported as understood. This proved to be the case. To our knowledge, visual metaphors have never been contrasted to other types of object relations, such as hyponyms or arbitrary combinations. For the first time, then, we managed to show that visual comparisons, where the objects are presented in juxtaposition, need more time to be interpreted than juxtapositions with higher semantic similarity (namely hyponyms), and less time than juxtapositions of objects without known grounds for comparison. Conceptual similarity proves to be a discriminating factor.

The differences in viewing times between metaphors and unrelated objects were rather small (although significant). This can be related to the manipulation check results. Some of the respondents might have learned (during the nine trials) that responding with “no relation” is the easy way out, so they stopped searching for a common ground sooner in cases where there was no immediate answer. Since more respondents answered with “no relation” to the unrelated objects, viewing time might have been reduced more for unrelated objects than for comparisons.

A limitation of the experiment is therefore that we did not observe the respondents while performing the experiment. This is a dilemma that is difficult to solve: if we were to observe the respondents, they would maybe try harder for reasons of social desirability or a participation reward.

We may wonder whether, in real life, respondents will actually take the time to try and understand the relationship between objects before they decide to look further. They probably construe an opinion very quickly, but rarely wonder why. So, the instruction to describe the relation was helpful to test H1 but might not reflect the spontaneous response to different visual object pairs. Asking respondents to rate how much they like object pairs is perhaps a better way of eliciting effects, because appreciation entails both getting an impression and evaluating that impression.

In the field of advertising and persuasion, the focus of visual metaphor research is on liking, not primarily on understanding. The prevailing idea is as follows: since visual metaphors contain a deviation from expectation in aligning two things that are dissimilar at first sight, viewers are incited to construct a meaningful relationship between the two objects, and if they succeed in doing so, they will experience a sense of pleasure and closure (Tanaka, 1994; McQuarrie and Phillips, 2005). They will opt out if they do not succeed in bridging the gap between the two objects, because viewers will stop processing if they no longer expect benefits (Berlyne, 1974; Phillips, 2000; Van Mulken, 2005). This should be reflected in less appreciation for pairs of unrelated objects. Metaphorical pairs, on the contrary, allow viewers to resolve the riddle, and therefore, appreciation will be higher. Based on this view, however, it follows that visual metaphors will also be appreciated more than hyponyms, since it is the cognitive pleasure, the pleasure that results from solving the riddle, that predicts the success of visual metaphors. Hyponyms pose less of a puzzle: they simply are two of a kind, and that would entail a lower appreciation.

On the basis of advertising approaches to visual metaphor, we can predict an inverted U-pattern between conceptual similarity and appreciation (Berlyne, 1970; Phillips, 2000; Van Mulken et al., 2014). Researchers consistently found that too easy to understand figures of speech were appreciated less than more complex figures. They also found that figures that were too difficult to understand were appreciated less.

There is, however, a competing theory that allows us to predict the appreciation of visual object pairs. The Processing Fluency Theory of aesthetic pleasure investigates how people experience beauty. Processing fluency is the ease with which information is processed in the human mind, and the theory mainly focuses on the experience of visual stimuli. It basically assumes that aesthetic experience is the result of automatic processing of visual information, without the intervention of reasoning (Reber et al., 2004). The theory allows researchers to test which visual cues (e.g., contrast, recognizability of objects) facilitate the processing of visual information. In general, the relation between exposure to fluent visuals and appreciation is positive and linear. Positive relations between fluency, understanding and affective feelings have been established by using psychophysiological measures (e.g., Winkielman et al., 2006).

According to Fluency Theory, we would expect hyponyms to be experienced more fluently than visual comparisons and unrelated objects. This would be reflected in processing pace (hyponyms being processed faster than comparisons or unrelated object pairs). Following Fluency Theory, cues of incongruity and deviation would be considered as triggering low fluency and therefore causing less appreciation.

We therefore decided to set up Experiment 2 to test these competing hypotheses, using viewing time as a measure of impression, as well as appreciation. Where advertising research would predict hyponyms to be viewed faster, but appreciated less than metaphors, Fluency Theory would predict hyponyms to be processed faster and appreciated more than metaphors. For both approaches, unrelated objects would be processed more slowly and be appreciated less than the other conditions. We formulate H2 about speed of impression (both approaches alike) and the competing hypotheses H3a (advertising research) and H3b (Fluency Theory).

H2: An impression of juxtaposed object images will be formed faster when they represent hyponyms, compared to visual comparisons and unrelated object pairs; visual comparisons will be identified faster than unrelated object pairs (i.e., a replication of the findings in experiment 1).

H3a: Visual comparisons will be appreciated more than hyponyms and unrelated object pairs.

H3b: Hyponyms will be appreciated more than comparisons, and comparisons will be appreciated more than unrelated object pairs.

As viewing times are a reflection of information processing, predictions can be made about the effects on recall, following the Limited Capacity Model of Motivated Media Message Processing (LC4MP, Lang, 2000). Building on H2 and H3a, more cognitive resources (longer viewing times) have been allocated to process the message successfully, resulting in better recall for comparisons than for hyponyms (H4a, Peterson et al., 2017); because resource allocation does not lead to successful processing for unrelated objects, recall will be lower compared to comparisons (H4a, Phillips and McQuarrie, 2004). On the other hand, Fluency Theory would predict a linear relation between understanding, appreciation and recall. Building on H2 and H3b, the cognitive resources (viewing times) were properly allocated (not too much) for hyponyms, but not for comparisons (less appreciation indicating unsuccessful processing) and unrelated objects (same problem but stronger). This results in better recall for hyponyms compared to comparisons, and better recall for comparisons than for unrelated objects (H4b).

H4a: Recall will be higher for visual comparisons than for hyponyms and for unrelated object pairs.

H4b: Recall will be higher for hyponyms than for visual comparisons, and higher for visual comparisons than for unrelated object pairs.

3. Experiment 2

3.1. Materials and method

In the second experiment we investigated to what extent the respondents appreciated the object pairs, using the same materials, the same design and a similar procedure, although the instruction was geared toward getting an impression instead of trying to understand. Besides viewing time, appreciation and recall were measured.

3.1.1. Respondents

A total of 73 respondents completed the experiment (64.6%), after accepting an invitation via email or social media. Of these, 61.6% were male. They were between 17 and 60 years old (M = 34.5, SD = 11.8). With regard to education, 91.8% had completed (or were following) a higher education program.

3.1.2. Instrumentation

As in Experiment 1, respondents were instructed to have a close look at the visual object pair before clicking “next.” Viewing times were measured. The difference from Experiment 1 was that respondents knew that the follow-up task was to rate their appreciation of the image, instead of describing the relation between the objects. We call this variable impression time.

Appreciation itself was measured using a seven-point Likert scale with three items: “good,” “attractive,” and “pleasant” (Cronbach's α = 0.85, MacKenzie et al., 1986).
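To make the reliability coefficient concrete, the following is a minimal sketch of how Cronbach's α can be computed for a multi-item scale. It is not the authors' analysis script: the function name `cronbach_alpha`, the data layout (one list of item ratings per respondent) and the example ratings are assumptions made for illustration only.

```python
from statistics import variance


def cronbach_alpha(ratings):
    """Cronbach's alpha for a list of respondents, each a list of k item ratings.

    Uses the standard formula
        alpha = k / (k - 1) * (1 - sum(item variances) / variance(sum scores)).
    Sample variances (n - 1 denominator) are used throughout.
    """
    k = len(ratings[0])
    # Variance of each item across respondents.
    item_vars = [variance([row[i] for row in ratings]) for i in range(k)]
    # Variance of the summed scale score across respondents.
    total_var = variance([sum(row) for row in ratings])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Invented 7-point ratings on three items ("good", "attractive", "pleasant").
example = [
    [6, 5, 6],
    [3, 4, 3],
    [7, 6, 7],
    [2, 2, 3],
    [5, 5, 4],
]
alpha = cronbach_alpha(example)
```

Values close to 1 indicate that the items vary together and can reasonably be averaged into one appreciation score.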

After viewing and appreciating nine images, the respondents were given three distraction tasks: a find-the-differences puzzle, a put-in-the-right-order puzzle and a word game. Subsequently, they were asked, in an open question, which object combinations they could remember (recall). Recall was scored per respondent and per stimulus: when both objects in a pair were named (2 points), one of the two objects (1) or no object (0).
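The scoring rule for recall can be sketched as a small function. This is an illustration under assumptions: the function name `score_recall`, the exact-match comparison after lowercasing, and the example object names are hypothetical and not taken from the study materials; in practice, open answers would likely need manual coding for synonyms and spelling variants.

```python
def score_recall(pair, recalled):
    """Score one stimulus for one respondent.

    pair     -- the two object names shown together (hypothetical labels)
    recalled -- the object names the respondent wrote down
    Returns 2 if both objects were named, 1 if one was named, 0 if none.
    """
    named = {word.strip().lower() for word in recalled}
    return sum(1 for obj in pair if obj.lower() in named)


# Hypothetical stimulus and answers.
both = score_recall(("violin", "guitar"), ["Guitar", "violin "])
one = score_recall(("violin", "guitar"), ["guitar", "lamp"])
none = score_recall(("violin", "guitar"), [])
```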

3.1.3. Data analysis

Viewing times for impression that exceeded the mean value plus twice the standard deviation were removed from the data set. As described in Experiment 1, we used linear mixed-effects regression (Gallucci, 2019) with stimuli and respondent as random effects, and type of relationship (hyponym, comparison and unrelated) as predictor variable. Dependent variables were impression time, appreciation and recall. Model optimization and contrast coding were the same as in Experiment 1.
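The outlier criterion can be illustrated with a short sketch. The text does not state whether the mean and standard deviation were computed over all trials, per respondent or per condition, nor whether the sample or population standard deviation was used; the sketch below assumes a single cutoff over all trials and the sample standard deviation. The function name `trim_slow_trials` and the viewing times are invented for illustration.

```python
from statistics import mean, stdev


def trim_slow_trials(times, n_sd=2.0):
    """Drop viewing times above mean + n_sd * SD (assumed: over all trials)."""
    cutoff = mean(times) + n_sd * stdev(times)
    return [t for t in times if t <= cutoff]


# Invented viewing times in seconds, with one very slow trial.
viewing_times = [2.0, 2.5, 3.0, 2.2, 2.8, 3.1, 2.4, 2.6, 2.9, 30.0]
kept = trim_slow_trials(viewing_times)
```

Note that only slow trials are removed under this rule; there is no lower cutoff.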

3.2. Results

We expected that the viewing times for impression would follow the same pattern as in Experiment 1 (H2: Hyponyms faster than comparisons and faster than unrelated objects, comparisons faster than unrelated objects), and that appreciation and recall either would be higher for comparisons compared to the other two conditions (following hypotheses 3a and 4a) or would follow the incremental linear slope of viewing times (following hypotheses 3b and 4b).

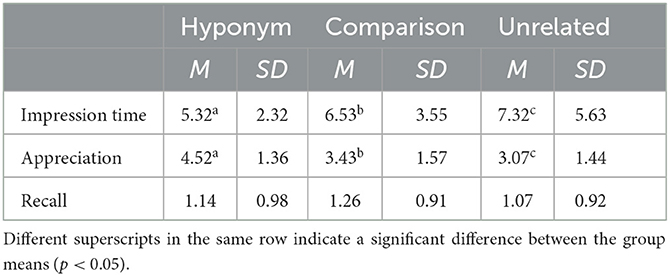

Table 2 shows the means and standard deviations of impression time, appreciation and recall for the three Types of Relationships.

Table 2. Means and standard deviations of impression (viewing time in s), appreciation (scale 1–7), and recall (score 0–2) for three types of relationship (N = 73).

Note that viewing times were overall much shorter than in experiment 1 (viewing times were more or less halved). There was a main effect of Type of relationship on Impression [F(2, 391) = 13.79, p < 0.001], showing that respondents looked shorter at hyponyms than at comparisons [β = 1.13, SE = 0.38, t(503) = 2.97, p = 0.003] and than at unrelated objects [β = 1.95, SE = 0.37, t(547) = 5.20, p < 0.001]. Comparisons were viewed shorter than unrelated objects [β = 0.81, SE = 0.39, t(300) = 2.10, p = 0.04]. H2 may be accepted.

There also was a main effect of type of relationship on appreciation [F(2, 578) = 75.56, p < 0.001] where hyponyms were appreciated more than comparisons [β = 1.11, SE = 0.13, t(581) = 8.65, p < 0.001] and than unrelated objects [β = 1.46, SE = 0.13, t(578) = 11.64, p < 0.001]. Comparisons were appreciated more than unrelated objects [β = 0.36, SE = 0.13, t(581) = 2.64, p = 0.009]. The expectation in H3a that comparisons would be appreciated more than hyponyms and unrelated objects is therefore rejected. H3b is accepted, since respondents appreciated hyponyms more than comparisons and unrelated objects.

There was no effect of Type of Relationship on Recall [F(2, 569) = 1.83, p = 0.161]. Therefore both H4a and H4b are rejected.

3.3. Discussion

H2 was confirmed: viewers looked shorter at hyponyms than at comparisons and unrelated objects. H3b was accepted: hyponyms were appreciated best overall, and comparisons were appreciated more than unrelated objects. As a consequence, the inverted U-curve of appreciation hypothesized in the competing H3a cannot be accepted. No evidence for either H4a or H4b was found: there were no effects of type of relation on recall.

An important observation in this second experiment was that respondents looked much shorter at object pairs than in the first experiment, in all conditions. In Experiment 2, they were asked to appreciate them instead of describe their relationships. Other research also showed quite consistently that liking visual objects requires less time than understanding them (Leder et al., 2006). This may explain why we found no evidence for H3a (inverted U-curve of appreciation): the hypothesis was based on the implied cognitive pleasure of “making an effort” and “finding a solution” (Phillips, 2000; Van Mulken et al., 2010, 2014; Lagerwerf et al., 2012). Most experimental advertising research on visual rhetoric is based on this assumption. However, the acceptance of H3b supports Fluency theory, which is based on a linear relation between understanding and appreciation.

The difference between the two hypotheses resides in the definition of pleasure: cognitive pleasure vs. aesthetic pleasure. Fluency theory research has been focused on art or contextless images: people are not directly instructed to solve a riddle. In advertisements, there is always a contextual cue involved, sometimes only in a brand name that motivates respondents to find a connection between the image and the advertiser's intention (Phillips, 1997). So, although conceptual similarity influences processing effort, cognitive pleasure needs to be triggered additionally.

Maybe the viewers in the second experiment did not grant themselves the time to cognitively appreciate metaphors? Would they appreciate metaphors more than hyponyms if they took the opportunity to solve the riddle? We decided to set up a third experiment with exposure time as a fixed variable. By forcing viewers to look at a juxtaposition for a longer time (5 s), we allowed them to find closure, and to succeed in finding a satisfying interpretation for metaphors (but not for unrelated objects). We compared the long exposure time with shorter ones (0.5 and 1 s).

There is no general agreement about the effects of exposure to images on understanding and liking. Respondents who were exposed to abstract paintings with either elaborative (i.e., more figurative) or descriptive titles showed better understanding of the paintings in a very long presentation condition (90 s), but this was not reflected in their appreciation (Leder et al., 2006). With shorter presentation times (1 vs. 10 s), there was more understanding in the 10 s condition for paintings with elaborative titles than for those with descriptive titles, whereas the opposite appeared for the 1 s condition. No differences were found for liking (Leder et al., 2006). Other research found that complex (combinations of) visual metaphors and metonymies in advertisements were viewed shorter than simple metaphors or metonymies, and they were liked more (Pérez-Sobrino et al., 2019).

Would appreciation for comparisons increase, and would we be able to find the inverted U-curve pattern for appreciation (H3a) and recall (H4a) if we forced our respondents to look longer at the object pairs than they normally would do? We could unify Fluency theory and the advertising approach in two interaction hypotheses, qualifying the effects of exposure time and type of relation on appreciation (H5) and recall (H6).

H5: If exposure time is short, hyponyms are appreciated more than the other types of relationship; if exposure time is long, comparisons are appreciated more than the other types of relationships.

H6: If exposure time is short, hyponyms are remembered better than the other types of relationship; if exposure time is long, comparisons are remembered better than the other types of relationships.

So, we expect Fluency theory to predict the effects of Type of relation when exposure time is short, and the inverted U-curve to appear when exposure time is longer. In the method section of Experiment 3, we will define what counts as shorter and longer exposure times.

4. Experiment 3

4.1. Materials and methods

4.1.1. Respondents

A total of 73 respondents completed the experiment (57.1%), after accepting an invitation via email or social media. There were 28 male (36.8%) and 48 female (63.2%) respondents. They were approached online but also in person. Age varied from 18 to 76 (M = 28.8, SD = 13.6), and 55% had a higher education.

4.1.2. Instrumentation and procedure

The instrumentation was similar to Experiment 2. The main difference was, however, that exposure was fixed to either 0.5, 1 or 5 s: respondents could not decide for themselves how long they looked at the stimuli. Exposure duration varied between respondents, but not within: each respondent watched all nine stimuli for the same amount of time. Viewers are able to identify visual objects already at 100 ms exposure time, or even 40 ms (Lee and Perrett, 1997; Johnson and Olshausen, 2003). Since we wanted respondents to be (minimally) able to identify the relation between two objects, we set minimal exposure duration to 0.5 s. EEG measurements show an N400 spike (around 400 milliseconds after exposure) that is indicative of understanding word meaning, so basic interpretation is included in the 0.5 s interval (Kutas and Hillyard, 1980; Pynte et al., 1996; Hagoort et al., 2004; Chwilla et al., 2011). The other two exposure times were chosen to allow a contrast to become apparent: 1 and 5 s.

To stimulate experiences of cognitive pleasure, respondents were asked to identify which type of relation the stimuli had with each other (Lagerwerf, 2002). Instead of words like metaphor and hyponym we used the more common terms “comparison relation” and “umbrella relation” respectively.

Appreciation was operationalized with the items positive, negative, good, and attractive. All questions were measured with a 7-point Likert scale. Once we removed “negative” from the construct, we found Cronbach's α = 0.91.

After exposure to and assessment of the nine stimuli, recall was measured after two distraction tasks, identical to Experiment 2.

4.1.3. Data analysis

Since the stability of the Internet varies per connection, actual exposure times sometimes deviated from the intended duration for individual respondents. Qualtrics registers the actual exposure time. Trials were therefore removed from the analyses when the actual exposure deviated more than 250 ms (shorter or longer) from the intended duration in the 0.5 s condition, or more than 500 ms in the two other conditions. This resulted in the removal of 31 trials.
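The exclusion rule can be sketched as a simple filter on the registered exposure times. This is an illustrative sketch, not the authors' script: the names `TOLERANCE_S` and `keep_trial` are hypothetical, and treating a deviation exactly equal to the tolerance as acceptable is an assumption, since the text does not specify the boundary case.

```python
# Allowed deviation (in seconds) per intended exposure duration,
# following the thresholds described in the text.
TOLERANCE_S = {0.5: 0.25, 1.0: 0.5, 5.0: 0.5}


def keep_trial(intended_s, actual_s):
    """Return True if the registered exposure is within tolerance.

    Assumption: a deviation exactly equal to the tolerance is kept.
    """
    return abs(actual_s - intended_s) <= TOLERANCE_S[intended_s]


# Invented (intended, registered) exposure times in seconds.
trials = [(0.5, 0.7), (0.5, 0.8), (1.0, 1.4), (5.0, 5.6), (5.0, 4.7)]
kept_trials = [trial for trial in trials if keep_trial(*trial)]
```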

To test the hypotheses, we conducted a mixed-effects regression analysis, but now with two factors, type of relationship and exposure duration. We applied contrast coding for both factors.

4.2. Results

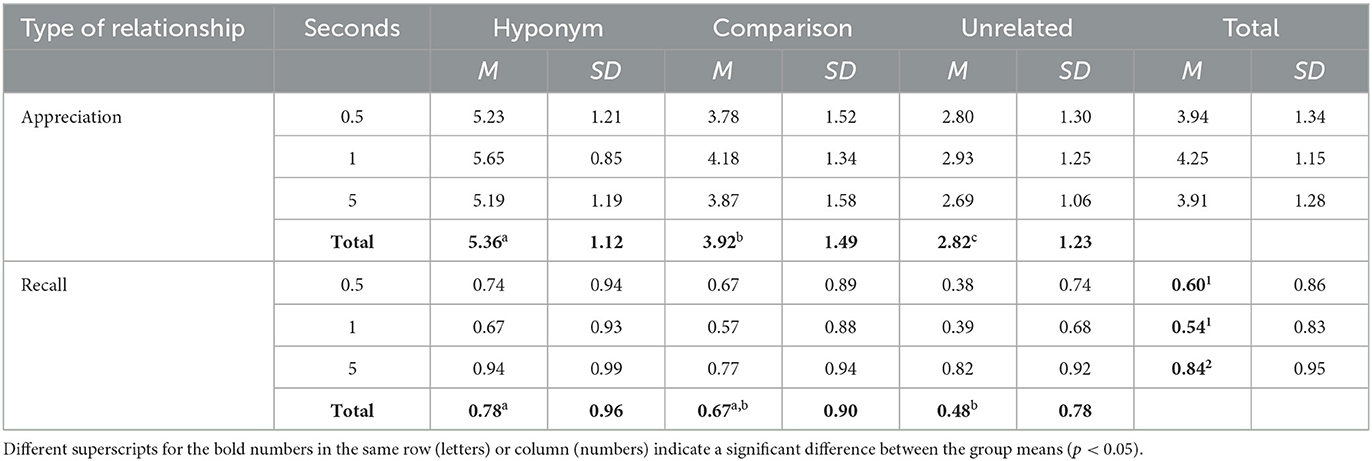

Hypotheses 5 and 6 predicted that hyponyms would be appreciated and remembered more with 0.5 s exposure duration compared to the two other types of relationship, whereas appreciation and recall would be higher for comparisons in the longer exposure duration (1 or 5 s). Table 3 presents the Ms and SDs for appreciation and recall.

Table 3. Means and standard deviations of appreciation (scale 1–7), and recall (score 0–2) for three types of relationship and exposure duration (0.5, 1, and 5 s).

There was a main effect of Type of Relationship on appreciation [F(2, 111) = 235.54, p < 0.001], showing that respondents appreciated hyponyms more than comparisons [β = 1.40, SE = 0.12, t(300) = 11.99, p < 0.001]. Hyponyms were also more appreciated than unrelated objects [β = 2.55, SE = 0.12, t(359) = 21.20, p < 0.001]. Comparisons, in turn, were more appreciated than unrelated object pairs [β = 1.15, SE = 0.13, t(45) = 3.76, p < 0.001]. There was no main effect for exposure duration on appreciation [F(2, 75) = 2.29, p = 0.11]. There was no significant interaction of type of relation and exposure duration (F < 1). The interaction hypothesis H5 cannot be accepted.

We found a main effect of Type of Relationship on recall [F(2, 309) = 5.77, p = 0.003]. Respondents remembered hyponyms better than unrelated objects [β = 0.29, SE = 0.09, t(504) = 3.36, p = 0.003], and also comparisons better than unrelated objects [β = 0.21, SE = 0.10, t(187) = 2.03, p = 0.044]. However, hyponyms and comparisons did not differ in recall (t < 1). There was a main effect of exposure duration on recall [F(2, 74) = 3.96, p = 0.02]. Respondents who viewed images for 0.5 or 1 s showed no difference in recall (t < 1). An exposure duration of 5 s resulted in more recall than 0.5 s [β = 0.24, SE = 0.11, t(77) = 2.23, p = 0.03], and also more recall than 1 s [β = 0.31, SE = 0.11, t(78) = 2.68, p = 0.009]. We found no interaction of type of relationship and exposure duration on recall (F < 1). Therefore, we cannot accept H6.

Contrary to our expectations we did not find an interaction of exposure duration and type of relationship on appreciation or recall. We do not have confirmation of hypotheses 5 and 6. We did find a main effect of type of relationship, with hyponyms being better liked than comparisons, which in turn are better liked than unrelated object pairs. Hence, we conclude that the findings of experiment 2 are replicated, independent of exposure duration. In addition, a main effect of type of relationship on recall showed that unrelated objects are remembered worse than hyponyms or comparisons.

Exposure duration did have an effect on recall. More recall was found in the 5 s exposure condition compared to the shorter conditions. Although there was no interaction with type of relationship, we now know that processing of visual object pairs is affected between 1 and 5 s exposure duration.

5. General discussion

We expected, in line with current approaches in advertising research (based on Relevance Theory and Pragmatics), that if respondents would elaborate longer upon juxtaposed visual objects their appreciation for (and recall of) metaphors would increase compared to hyponyms and unrelated objects, precisely because their cognitive effort would be rewarded by finding a resolution. This was not the case. In fact, it appeared that Fluency Theory—the ease of processing for visual objects predicts appreciation and recall linearly—explained our findings better. In self-paced exposure (experiment 2), hyponyms are preferred to comparisons, and even if exposure duration is enforced (experiment 3), longer viewing time does not result in preference of comparisons to hyponyms. Apparently, cognitive pleasure does not outweigh processing ease. Or, as we will discuss below: lower conceptual similarity in juxtaposed visual objects is in itself not enough to evoke cognitive pleasure.

Viewing times were considerably shorter when viewers had to judge the juxtapositions in terms of appreciation (experiment 2) instead of understanding (experiment 1). This suggests that it is quite easy to manipulate cognitive effort in viewing images. However, cognitive effort did not result in cognitive pleasure, as the results of experiment 3 suggest. What factors may possibly induce cognitive pleasure?

Conceptual similarity was supposed to distinguish hyponyms from metaphors and unrelated objects. The pretest in experiment 1, and the manipulation checks, showed quite convincingly that the three types of visual object pairs were also perceived as such by the respondents. If objects were not semantically close, respondents would look for a ground of comparison. The distinction between metaphors and unrelated objects depends on whether one finds closure in the resolution. Individuals may differ in when they experience closure for the meaning of juxtaposed object pairs, and it is the experience of closure that determines cognitive pleasure (Lagerwerf, 2002).

The manipulation of conceptual similarity did not allow us to replicate the findings that have been found in advertising contexts (Chang and Yen, 2013; Chakroun, 2020). In these publications, respondents generally preferred the less easy variant of a print advertisement. Here, we tried to abstract away from an advertising context, and we tried to keep the impact of the individual objects as small as possible by trying to compose counterbalanced stimuli. But at the same time, an advertising context will provide at least the basic intentions of the advertiser (“buy this product,” Tanaka, 1992). In hindsight, it might be the case that cognitive pleasure resides in trying to understand why conceptually less similar objects would promote the advertised product (or which product is advertised). Without the advertising context, participants may have lost the driving force in finding relevance for the inferred relation. We may therefore assume that more respondents recognized metaphors in the visual comparisons in Experiment 1, compared to the respondents in Experiment 2 and 3.

The online character of the experiment may have diminished respondents' motivation and inclination to put in cognitive effort. The laboratory setup (the fixed presentation times and the context-free coupling of two objects) did not invite our respondents to express their appreciation like they would do in a “normal” context. Our intention was to keep all other factors, such as context, text, and environments as constant as possible, to be able to test the influence of type of relationship. By doing so, we might have also excluded the possibility to test the influence of cognitive pleasure within the paradigm that produces the inverted U-curve (“optimal innovation,” Giora et al., 2004). More generally, elimination of potentially confounding variables may come at the cost of reduced ecological validity.

Unfortunately, there is little evidence in the literature for the processing and cognitive elaboration that is needed for juxtaposed objects. Most papers focus on single visual objects (however, see Van Weelden et al., 2011; Peterson et al., 2017). Visual metaphor literature traditionally distinguishes three types of visual structures for metaphor: juxtapositions, fusions, and replacements (Phillips and McQuarrie, 2004). Metaphors inherently require a source and a target, and therefore almost always two objects. With only the source or the target in the picture, replacements are rare in advertising, and difficult to manipulate in experimental research. Juxtapositions always allow viewers to look at two separate objects, and this may have influenced our findings: each object on its own may have led the viewer into thinking about the individual object and not about the relationship between the two. Contrary to juxtapositions, fusions represent only one visual object, a fusion of the target and source in one, new object that does not exist in real life. In future research, we may replicate our experiments with fusion stimuli and represent the source and target of a metaphor in one and the same object. It would mean that we also construct hyponyms and unrelated objects in the execution format of fusions. The visual structure of “fused objects” will then invite the viewers to think about the relation between the two objects (Lagerwerf et al., 2012). In this future research meaningful contexts will be added, providing better guidance in interpretation.

Interestingly, Fluency Theory has been extended by applying a dual process model to the original theory (Graf and Landwehr, 2015). Dual process models have been developed in various psychological domains; one renowned variant in advertising is the Elaboration Likelihood Model (ELM) (Petty and Cacioppo, 1986). According to the ELM, consumers process advertisements either automatically and superficially, or consciously and precisely. Their level of involvement, or ability and motivation to process the advertisements, determines on which level the message is processed. ELM assumes that an attitude toward the advertised product is more stable when it is processed consciously and precisely, but with a higher risk of a negative attitude, when the assessment is more critical. A variant of ELM that has been adapted to visual persuasion is the pleasure-interest model of aesthetic liking (PIA), which is specifically suited for visual perception and processing (Graf and Landwehr, 2015). Where consumer involvement is a moderating factor in selecting the one or the other processing route in ELM, Graf and Landwehr propose that “disrupted fluency” is a visual cue that may turn automatic processing into controlled processing. When fluency is disrupted, negative affective feelings may be accompanied by semantic distortions, making an individual more motivated to reassess the message (disfluency reduction) (Graf and Landwehr, 2015). Subsequent controlled processing of the message leads to an intellectual understanding of the disfluency, which may cause feelings of pleasure (because disfluency reduction succeeded) (Berlyne, 1970; Graf and Landwehr, 2015). On the other hand, failure of disfluency reduction will lead to feelings of frustration, resulting in a negative message appraisal. This account of Fluency Theory elegantly captures what the Standard Pragmatic View also tries to describe. In future research, we hope to put PIA to the test, and expect that automatic processing is disrupted in the case of metaphors, but not in the case of hyponyms.

In this paper, the effects of types of relations between juxtaposed pairs of objects on identification, appreciation and recall were tested for the first time. Type of relationship was a function of conceptual similarity: when respondents were instructed to identify the relation, they were able to tell hyponyms from metaphors and unrelated objects, and metaphors from unrelated objects. However, when the instruction was to appreciate the relation, Fluency Theory performed better in explaining the findings than the Standard Pragmatic View: hyponyms were preferred to metaphors, which in turn were preferred to unrelated objects. Manipulation of exposure duration did not change the preference pattern: hyponyms were always the preferred pair of objects.

Our respondents did not prefer metaphors to unrelated object pairs. Although many of the respondents generally agreed on the common ground (by finding a strong implicature, see experiment 1, manipulation check), other respondents treated visual comparisons as unrelated object pairs and vice versa. Apparently, the conventionality, familiarity, salience or neighborhood of the categories differ from person to person. In a future experimental design, we will treat finding the common ground as an individual characteristic, thus as a random subject factor, to verify whether metaphorical mapping leads to more appreciation.

Visual rhetoric is not just applied in advertising, public service announcements or political opinions (cartoons). In social media, so-called memes are ubiquitous and popular. They consist of visuals with incongruous anchoring or an incongruous combination of visuals. Research of the mechanisms and effects of memes in social media would be a very promising extension of the current research.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary material and the collected data are available at: https://dataverse.nl/dataset.xhtml?persistentId=doi:10.34894/YYSM6B. Further inquiries can be directed to the corresponding author.

Ethics statement

The studies involving humans were approved by Research Ethics Review Committee (RERC), Faculty of Social Sciences, Vrije Universiteit Amsterdam. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

LL: Conceptualization, Data curation, Methodology, Supervision, Writing—original draft, Writing—review and editing. MM: Conceptualization, Data curation, Methodology, Supervision, Writing—original draft, Writing—review and editing. JL: Data curation, Methodology, Writing—review and editing.

Funding

The authors declare that no financial support was received for the research, authorship, and/or publication of this article.

Acknowledgments

We wish to thank Susanne Brouwer (Centre for Language Studies, Radboud University) for her statistical support. Also, this research was conducted with the support of the Network Institute of Vrije Universiteit Amsterdam.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fcomm.2023.1266813/full#supplementary-material

References

Berlyne, D. E. (1970). Novelty, complexity and hedonic value. Percept. Psychophys. 8(5a), 279–286. doi: 10.3758/BF03212593

Berlyne, D. E. (1974). “Verbal and exploratory responses to visual patterns varying in uncertainty and in redundancy,” in Studies in the New Experimental Aesthetics. Steps Toward an Objective Psychology of Aesthetic Appreciation, ed. D. E. Berlyne (Washington DC: Hemisphere), 121–158.

Blasko, D. G. (1999). Only the tip of the iceberg: who understands what about metaphor? J. Pragmat. 31, 1675–1683. doi: 10.1016/S0378-2166(99)00009-0

Bowdle, B. F., and Gentner, D. (2005). The career of metaphor. Psychol. Rev. 112, 193–216. doi: 10.1037/0033-295X.112.1.193

Brugman, B. C., Burgers, C., and Vis, B. (2019). Metaphorical framing in political discourse through words vs. concepts: a meta-analysis. Lang. Cogn. 11, 41–65. doi: 10.1017/langcog.2019.5

Chakroun, W. (2020). The impact of visual metaphor complexity in print advertisement on the viewer's comprehension and attitude. Commun. Linguist. Stud. 6, 6. doi: 10.11648/j.cls.20200601.12

Chang, C.-T., and Yen, C.-T. (2013). Missing ingredients in metaphor advertising: the right formula of metaphor type, product type, and need for cognition. J. Advert. 42, 80–94. doi: 10.1080/00913367.2012.749090

Chwilla, D. J., Virgillito, D., and Vissers, C. T. W. M. (2011). The relationship of language and emotion: N400 support for an embodied view of language comprehension. J. Cogn. Neurosci. 23, 2400–2414. doi: 10.1162/jocn.2010.21578

Coulson, S., and Van Petten, C. (2002). Conceptual integration and metaphor: an event-related potential study. Mem. Cognit. 30, 958–968. doi: 10.3758/BF03195780

Gallucci, M. (2019). GAMLj: General Analyses for Linear Models (jamovi module). Available online at: https://gamlj.github.io/ (accessed July 25, 2022).

Gibbs, R. W. Jr. (2011). Evaluating conceptual metaphor theory. Discourse Process. 48, 529–562. doi: 10.1080/0163853X.2011.606103

Gibbs, R. W. Jr. (ed.). (2008). The Cambridge Handbook of Metaphor and Thought. Cambridge: Cambridge University Press. doi: 10.1017/CBO9780511816802

Giora, R. (2003). On our Mind: Salience, Context, and Figurative Language. Oxford: Oxford University Press. doi: 10.1093/acprof:oso/9780195136166.001.0001

Giora, R., Fein, O., Kronrod, A., Elnatan, I., Shuval, N., Zur, A., et al. (2004). Weapons of mass distraction: optimal innovation and pleasure readings. Metaphor Symb. 19, 115–141. doi: 10.1207/s15327868ms1902_2

Gkiouzepas, L., and Hogg, M. K. (2011). Articulating a new framework for visual metaphors in advertising. J. Advert. 40, 103–120. doi: 10.2753/JOA0091-3367400107

Glucksberg, S., and Haught, C. (2006). On the relation between metaphor and simile: when comparison fails. Mind Lang. 21, 360–378. doi: 10.1111/j.1468-0017.2006.00282.x

Graf, L. K. M., and Landwehr, J. R. (2015). A dual-process perspective on fluency-based aesthetics: the pleasure-interest model of aesthetic liking. Pers. Soc. Psychol. Rev. 19, 395–410. doi: 10.1177/1088868315574978

Grice, H. P. (1975). “Logic and conversation,” in Syntax and Semantics. Speech Acts, eds P. Cole, and J. L. Morgan (New York, NY: Academic Press), 41–58. doi: 10.1163/9789004368811_003

Hagoort, P., Hald, L., Bastiaansen, M., and Petersson, K. M. (2004). Integration of word meaning and world knowledge in language comprehension. Science 304, 438–441. doi: 10.1126/science.1095455

Hodiamont, D., Hoeken, H., and Van Mulken, M. (2018). “Conventionality in visual metaphor,” in Visual Metaphor. Structure and Process, ed. G. J. Steen (Amsterdam: John Benjamins Publishing Company), 163–184. doi: 10.1075/celcr.18.07hod

Jia, L., and Smith, E. R. (2013). Distance makes the metaphor grow stronger: a psychological distance model of metaphor use. J. Exp. Soc. Psychol. 49, 492–497. doi: 10.1016/j.jesp.2013.01.009

Johnson, J. S., and Olshausen, B. A. (2003). Timecourse of neural signatures of object recognition. J. Vis. 3, 4–4. doi: 10.1167/3.7.4

Katz, A. N. (1989). On choosing the vehicles of metaphors: referential concreteness, semantic distances, and individual differences. J. Mem. Lang. 28, 486–499. doi: 10.1016/0749-596X(89)90023-5

Koller, V. (2009). “Brand images: multimodal metaphor in corporate branding messages,” in Multimodal Metaphor, eds C. J. Forceville, and E. Urios-Aparisi (Berlin: Mouton de Gruyter), 45–72. doi: 10.1515/9783110215366.2.45

Kress, G. R., and Van Leeuwen, T. (2020). Reading Images: The Grammar of Visual Design, 3rd ed. New York, NY: Routledge. doi: 10.4324/9781003099857

Kutas, M., and Hillyard, S. A. (1980). Reading senseless sentences: brain potentials reflect semantic incongruity. Science 207, 203–205. doi: 10.1126/science.7350657

Lagerwerf, L. (2002). Deliberate ambiguity in slogans. Recognition and appreciation. Document Des. 3, 245–260. doi: 10.1075/dd.3.3.07lag

Lagerwerf, L., van Hooijdonk, C. M. J., and Korenberg, A. (2012). Processing visual rhetoric in advertisements: interpretations determined by verbal anchoring and visual structure. J. Pragmat. 44, 1836–1852. doi: 10.1016/j.pragma.2012.08.009

Lakoff, G., and Johnson, M. (1980). Metaphors we Live by. Chicago, IL: The University of Chicago Press.

Lang, A. (2000). The limited capacity model of mediated message processing. J. Commun. 50, 46–70. doi: 10.1111/j.1460-2466.2000.tb02833.x

Leder, H., Carbon, C.-C., and Ripsas, A.-L. (2006). Entitling art: influence of title information on understanding and appreciation of paintings. Acta Psychol. 121, 176–198. doi: 10.1016/j.actpsy.2005.08.005

Lee, K. J., and Perrett, D. (1997). Presentation-time measures of the effects of manipulations in colour space on discrimination of famous faces. Perception 26, 733–752. doi: 10.1068/p260733

MacKenzie, S. B., Lutz, R. J., and Belch, G. E. (1986). The role of attitude towards the ad as a mediator of advertising effectiveness: a test of competing explanations. J. Mark. Res. 23, 130–143. doi: 10.1177/002224378602300205

Magliano, J. P., Kopp, K., Higgs, K., and Rapp, D. N. (2017). Filling in the gaps: memory implications for inferring missing content in graphic narratives. Discourse Process 54, 569–582. doi: 10.1080/0163853X.2015.1136870

McQuarrie, E. F., and Mick, D. G. (1999). Visual rhetoric in advertising: text-interpretive, experimental, and reader-response analyses. J. Consum. Res. 26, 37–54. doi: 10.1086/209549

McQuarrie, E. F., and Phillips, B. J. (2005). Indirect persuasion in advertising. How consumers process metaphors presented in pictures and words. J. Advert. 34, 7–20. doi: 10.1080/00913367.2005.10639188

Nacey, S., Dorst, A. G., Krennmayr, T., and Reijnierse, W. G. (eds.) (2019). Metaphor Identification in Multiple Languages: MIPVU around the World. Vol. 22. Amsterdam: John Benjamins Publishing Company. doi: 10.1075/celcr.22

Pérez-Sobrino, P., Littlemore, J., and Houghton, D. (2019). The role of figurative complexity in the comprehension and appreciation of advertisements. Appl. Linguist. 40, 957–991. doi: 10.1093/applin/amy039

Peterson, M., Wise, K., Ren, Y., Wang, Z., and Yao, J. (2017). Memorable metaphor: how different elements of visual rhetoric affect resource allocation and memory for advertisements. J. Curr. Issues Res. Advert. 38, 65–74. doi: 10.1080/10641734.2016.1233155

Petty, R. E., and Cacioppo, J. T. (1986). Communication and Persuasion: Central and Peripheral Routes to Attitude Change. New York, NY: Springer. doi: 10.1007/978-1-4612-4964-1

Phillips, B. J. (1997). Thinking into it: consumer interpretation of complex advertising images. J. Advert. 26, 77–88. doi: 10.1080/00913367.1997.10673524

Phillips, B. J. (2000). The impact of verbal anchoring on consumer response to image ads. J. Advert. 29, 15–25. doi: 10.1080/00913367.2000.10673600

Phillips, B. J., and McQuarrie, E. F. (2004). Beyond visual metaphor: a new typology of visual rhetoric in advertising. Mark. Theory 4, 113–136. doi: 10.1177/1470593104044089

Postma, M., Van Miltenburg, E., Segers, R., Schoen, A., and Vossen, P. (2016). “Open Dutch WordNet,” in Proceedings of the Eighth Global Wordnet Conference, Bucharest, Romania, January (Global Wordnet Association), 27–30.

Pynte, J., Besson, M., Robichon, F.-H., and Poli, J. (1996). The time-course of metaphor comprehension: an event-related potential study. Brain Lang. 55, 293–316. doi: 10.1006/brln.1996.0107

Qualtrics (2018). Qualtrics. Retrieved from: http://www.qualtrics.com (accessed January 29, 2019).

R Core Team (2021). R: A Language and Environment for Statistical Computing (version 4.0). Vienna: R Foundation for Statistical Computing.

Reber, R., Schwarz, N., and Winkielman, P. (2004). Processing fluency and aesthetic pleasure: is beauty in the perceiver's processing experience? Pers. Soc. Psychol. Rev. 8, 364–382. doi: 10.1207/s15327957pspr0804_3

Searle, J. (1979). “Metaphor,” in Metaphor and Thought, ed. A. Ortony (New York, NY: Cambridge University Press), 92–123. doi: 10.1017/CBO9780511609213.006

Sperber, D., and Wilson, D. (1986). Relevance: Communication and Cognition. Cambridge, MA: Harvard University Press.

Tanaka, K. (1992). The pun in advertising: a pragmatic approach. Lingua 87, 91–102. doi: 10.1016/0024-3841(92)90027-G

Tanaka, K. (1994). Advertising Language: A Pragmatic Approach to Advertisements in Britain and Japan. London: Routledge.

Tendahl, M., and Gibbs, R. W. Jr. (2008). Complementary perspectives on metaphor: cognitive linguistics and relevance theory. J. Pragmat. 40, 1823–1864. doi: 10.1016/j.pragma.2008.02.001

The jamovi project (2021). jamovi (Version 2.3) [Computer Software]. Retrieved from: https://www.jamovi.org (accessed July 25, 2022).

Tourangeau, R., and Sternberg, R. J. (1982). Understanding and appreciating metaphors. Cognition 11, 203–244. doi: 10.1016/0010-0277(82)90016-6

Utsumi, A. (2007). Interpretive diversity explains metaphor–simile distinction. Metaphor Symb. 22, 291–312. doi: 10.1080/10926480701528071

Van Mulken, M. (2005). De verpakking van maandverband. De ontwikkeling van retoriek in tijdschriftadvertenties. Tijdschrift Genderstud. 8, 15–25.

Van Mulken, M., Le Pair, R., and Forceville, C. (2010). The impact of perceived complexity, deviation and comprehension on the appreciation of visual metaphor in advertising across three European countries. J. Pragmat. 42, 3418–3430. doi: 10.1016/j.pragma.2010.04.030

Van Mulken, M., Van Hooft, A., and Nederstigt, U. (2014). Finding the tipping point: visual metaphor and conceptual complexity in advertising. J. Advert. 43, 333–343. doi: 10.1080/00913367.2014.920283

Van Weelden, L., Maes, A., Schilperoord, J., and Cozijn, R. (2011). The role of shape in comparing objects: how perceptual similarity may affect visual metaphor processing. Metaphor Symb. 26, 272–298. doi: 10.1080/10926488.2011.609093

Van Weelden, L., Schilperoord, J., and Maes, A. (2014). Evidence for the role of shape in mental representations of similes. Cogn. Sci. 38, 303–321. doi: 10.1111/cogs.12056

Keywords: visual metaphor, hyponymy, pragmatics, experiment, cognitive processing, Fluency Theory, visual rhetoric, juxtaposition

Citation: Lagerwerf L, Van Mulken M and Lagerwerf JB (2023) Conceptual similarity and visual metaphor: effects on viewing times, appreciation, and recall. Front. Commun. 8:1266813. doi: 10.3389/fcomm.2023.1266813

Received: 25 July 2023; Accepted: 05 October 2023; Published: 26 October 2023.

Edited by:

Mirian Tavares, University of Algarve, Portugal

Reviewed by:

Pedro Alves da Veiga, Universidade Aberta, Portugal

Geoffrey Ventalon, Université de Tours, France

Copyright © 2023 Lagerwerf, Van Mulken and Lagerwerf. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Luuk Lagerwerf, l.lagerwerf@vu.nl; Margot Van Mulken, margot.van.mulken@ru.nl

†These authors have contributed equally to this work and share first authorship
