
ORIGINAL RESEARCH article

Front. Commun., 09 January 2023

Sec. Psychology of Language

Volume 7 - 2022 | https://doi.org/10.3389/fcomm.2022.896355

This article is part of the Research Topic Modality and Language Acquisition: How does the channel through which language is expressed affect how children and adults are able to learn?

This study investigated the acquisition of depicting signs (DS) among students learning a signed language as a second language in a second modality (M2L2). Broadly described, depicting signs illustrate actions and states. The sample included 75 M2L2 students recruited from college-level American Sign Language (ASL) courses, who watched three short clips from Canary Row and retold them in ASL as best they could. Four types of DS were coded in the students' video-recorded retellings: (1) entity depicting signs (EDS); (2) body part depicting signs (BPDS); (3) handling depicting signs (HDS); and (4) size-and-shape specifiers (SASS). Results revealed that the use of SASS and HDS increased as students advanced in their ASL learning and comprehension. However, EDS use was not related to ASL comprehension. ASL 2 students produced fewer DS than ASL 1 students but did not differ from ASL 3+ students. There were no differences in the number of BPDS among the three groups of L2 learners, although their ability to produce BPDS was correlated with their ASL comprehension. This study is the first to systematically elicit depicting signs from M2L2 learners in a narrative context, and the results have important implications for sign language pedagogy and instruction. Future research, particularly cross-sectional and/or longitudinal studies, is needed to explore the trajectory of DS acquisition and to identify evidence-based pedagogical approaches for teaching depicting signs to M2L2 students.

While the teaching of signed languages is increasing in popularity worldwide, very little research has examined how hearing individuals learn sign languages. Hearing second language (L2) learners are learning not only a new language but also a new, visual-gestural modality (M2) of communication (Chen Pichler and Koulidobrova, 2016). Little is known about how hearing students learn American Sign Language (ASL) as a second language in a second modality. Rosen (2020) noted that practitioners in the field “often revert to their own understanding of what language is, how to teach it, how learners learn, and how to assess learners' language knowledge and skills” (p. 17). This study builds on Clark's (2016) theoretical framework of depiction as a basic tool for relaying information about people, places, things, and events.

In this article, we describe how M2L2 learners acquire skills related to depicting signs in the visual-gestural modality, as observed in a cross-sectional study. In the literature, some sign language researchers use the term classifiers; in this paper, we refer to classifiers as depicting signs. The use of depicting signs by deaf signers has been well documented across a variety of signed languages and ages; deaf children as young as 2–3 years of age (Schick, 1987; Slobin et al., 2003) can produce the depicting handshapes that form part of depicting signs. The present study focused on four types of depicting signs: (1) entity depicting signs; (2) body part depicting signs; (3) handling depicting signs; and (4) size-and-shape specifiers (SASS). The study provides insight into the difficulties M2L2 learners encounter and their typical behaviors.

Participants who took ASL classes at the university were all exposed to the same curriculum, an ASL e-curriculum based on the national standards of the American Council on the Teaching of Foreign Languages (ACTFL). ACTFL has published national standards for ASL (Ashton et al., 2014). The philosophy behind ACTFL foreign language classes is based on a “spiral” concept: all content is introduced at the beginning level and continues to be taught in more advanced classes. For example, basic, static depicting handshapes that require little movement are introduced in beginning ASL classes, while more complex depicting handshapes and signs, including entity depicting signs that entail movement, are introduced in more advanced classes. The ASL program for foreign/modern language credit enrolls approximately 1,000 students every year, with up to 20 students per class. Approximately ten faculty members teach ASL classes for foreign/modern language credit; most are either lecturers (non-tenure-track faculty) or adjunct faculty members. Tenure-track and tenured faculty teach the interpreting classes for matriculated, degree-seeking students. We recognize that teaching experience and qualifications vary across these instructors.

Despite growing interest in sign second language acquisition (SSLA) and sign language pedagogy over the past 30 or so years, research in SSLA remains limited (see Chen Pichler and Koulidobrova, 2016; Boers-Visker and Pfau, 2020; Rosen, 2020; Schönström, 2021). While spoken languages are oral-aural, signed languages are visual-gestural. Here we discuss second language learners whose first modality (M1) is spoken (based on sound and the use of the mouth and tongue) and whose second modality (M2) is signed (based on vision and the use of the body, hands, arms, head, and facial expressions). Most foreign language students learn their second language in their first modality (M1L2), but here we focus on those learning a second language in their second modality (M2L2) (Hill et al., 2018). L2 acquisition involves many variables that L1 acquisition does not: learners acquiring a second language come from different native language backgrounds and are at different stages of life (e.g., childhood L2 and adult L2). In this study, we focus primarily on adult learners who take ASL classes to fulfill foreign/modern language credit requirements at a private university in the Northeastern United States. “Extending L2 investigations to sign language introduces yet another important variable, that of modality…In view of these potential modality effects, it is quite plausible that learning a second language in a different modality from one's first language may present possibilities, difficulties, and therefore development patterns that do not occur for L2 learning in the same modality as one's L1” (Chen Pichler and Koulidobrova, 2016, p. 219).

Literature on spoken L2 acquisition focuses a great deal on linguistic transfer. Common questions in M2L2 research include whether learners' linguistic features transfer from M1 to M2 and from L1 to L2 and, if so, which features tend to transfer. Do learners' patterns transfer to M2L2 because of their early mastery of a spoken language or because of their use of gestures? Language transfer has been documented for L2 learners at all grammatical levels. For example, L2 learners may carry the word order of their first language into their L2: an ASL student whose first language is English might attempt to sign ASL, or to gesture, using English word order and syntactic rules. Some studies show that for M2L2 learners, experience with gestures, including co-speech gestures, facial expressions, and other non-manual cues, could transfer into their attempts to express themselves in sign language (see Taub et al., 2008; Chen Pichler, 2009, 2011; Brentari et al., 2012; Ortega, 2013; Ortega and Morgan, 2015). More research is needed to explore the extent of potentially transferable features, especially in M2L2 learners.

The research methodology in this paper involves narrating a story. We focused on narratives to investigate how second language (L2) users use depicting signs. Research on narrative tasks is well established (see Robinson, 1995; Foster and Skehan, 1996; Skehan and Foster, 1997, 1999; Bygate, 1999). According to Albert and Kormos (2011), this methodology usually involves creating a story in response to a stimulus, such as a picture or a film. Language teaching methods also often employ tasks that entail narrating or retelling a story, allowing students to use their imagination to generate new ideas (Swain, 1985). L2 spoken language instruction employs communicative and task-based methods, including telling narratives (Albert and Kormos, 2011).

There are a number of studies on oral narrative performance tasks in spoken language research. These tasks generally involve storytelling and an opportunity to use one's imagination (Albert and Kormos, 2011). Narrating a story in a second language is an increasingly popular elicitation tool; however, researchers affirm that it is one of the most difficult and challenging aspects of language production (Ellis, 1987; Robinson, 1995). Challenges include remembering the sequence of events in the story and establishing a variety of viewpoints, as well as mastering lexical, syntactic, and pragmatic features (Norbury et al., 2014). Constructing a narrative is challenging because linguistic and cognitive tasks occur at the same time; producing cohesive, coherent, and structured narratives requires sophisticated language and cognitive skills (see Bamberg and Damrad-Frye, 1991). Gulamani et al. (2022) argued that narrative is an interesting area of study in L2 acquisition because it provides a rich source of data on linguistic and cognitive tasks, as well as data for comparing the language fluency of late M2L2 learners with that of early learners.

Though there has been a great deal of research on gesture in psychology and linguistics, researchers do not have an agreed-upon definition of gesture. Kendon (1986), who advocated studying gesture in a second language context, recognized that gestures may be considered gradient or gestural and are integral to the very working of the language system (Kendon, 2008). McNeill (2016) described a gesture as “an unwitting, non-goal-directed action orchestrated by speaker-created significances, having features of manifest expressiveness” (p. 28). Furthermore, McNeill (2016) emphasized that such expressive action is part of the process of speaking that enacts imagery. Silent gestures and co-speech gestures (those that co-occur with speech) (McNeill, 1992; Kendon, 2004) are commonly used by sign-naïve gesturers. Capirci et al. (2022) described how, throughout most of the twentieth century, “different models proposed to describe signed languages were based on a hierarchy: only the lexical units (i.e., standardized in form and meaning signs) were considered at the core of the language, while the productive signs (i.e., iconic constructions) were pushed to the linguistic borderline, closer to the level of gesticulation and mime” (p. 6,365).

One can distinguish gestures used by sign-naïve gesturers (1) to support or complement speech (co-speech gestures) or (2) as silent gestures. This might be a conscious process, but it may well be unconscious. M2L2 learners might recruit gestures to express themselves before they have mastered the language; for example, they might produce a gesture for an object when they lack the corresponding lexeme. In this case, gestures serve another role, as a deliberate strategy to get the information across. Some gestures resemble sign language lexemes. For example, Brentari et al. (2012) describe hand-as-object gestures, and Ortega et al. (2020) describe some iconic, transparent lexemes (e.g., drink, play-piano).

Gullberg (2006) explained how gestures are relevant to the study of Second Language Acquisition (SLA): “Gestures can therefore be studied as a developing system in their own right both in L2 production and comprehension” (p. 103). Gestures are particularly important in M2L2 studies because beginning sign language learners may resort to gestures in the absence of any knowledge of sign language. Beginning learners may also transfer gestures, just as learners of other language pairs might transfer lexemes or grammatical features of their L1 into their L2 (Chen Pichler and Koulidobrova, 2016). Some sign language learners might likewise depend on their gestural repertoire when they do not know how to produce a sign or cannot remember it.

When acquiring a second language, learners draw on any available semiotic resources, not only on their linguistic experience (Ortega et al., 2020). Research on sign languages has shown that signed languages may share some properties with gesture, especially the locative relationships between referents and participants involved in an action (Casey S., 2003; Casey S. K., 2003; Liddell, 2003a,b; Kendon, 2004; Schembri et al., 2005). Schembri et al. (2005) tested deaf native signers of Australian Sign Language (Auslan), deaf signers of Taiwan Sign Language (TSL), and hearing non-signers using the Verbs of Motion Production task from the Test Battery for ASL Morphology and Syntax. They found that the handshape, movement, and location units appeared to be very similar across the responses of non-signers, Auslan signers, and TSL signers. These findings support other work claiming that depicting handshape constructions are blends of linguistic and gestural elements (Casey S., 2003; Casey S. K., 2003; Liddell, 2003a,b; Kendon, 2004; Schembri et al., 2005). M2L2 learners do have access to a repertoire of gestures (Boers-Visker, 2021a,b), and a body of literature shows similarities between some gestures and signs. Ortega et al. (2019) referred to gestures that overlap in form with signs as “manual cognates.” Ortega and Özyürek (2020) found that gesturers produced similar, systematic gestures and that many signs involving acting, representing, drawing, and molding were considered cognates.

Depicting is a common part of everyday communication. Clark (2016) explained that people communicate through describing, pointing at things, and “depicting things with their hands, arms, head, face, eyes, voice, and body, with and without props” (p. 324). Examples Clark (2016) described include iconic gestures, facial gestures, quotations of many kinds, full-scale demonstrations, and make-believe play. Depiction is used by all language users, spoken and signed; in sign languages, it is accomplished through depicting handshapes and signs. Depiction can also include behaviors that are not actually happening but represent behaviors or events (Goffman, 1974). Gesturers have been shown to deploy depiction with expressions such as “like this,” where “like this” introduces the depiction, which can simply be a gesture (Fillmore, 1997; Streeck, 2008). Cuxac (1999, 2000) proposed that, with depiction, a signer driven by an illustrative goal can “tell by showing.”

Ferrara and Halvorsen (2017) and Ferrara and Hodge (2018) applied Clark's theory of depiction in spoken communication to signed languages. Clark's (2016) theory was based on Peirce's (1994) work, which set out the foundational categorization of semiotic signs into symbols, indices, and icons. Ferrara and Halvorsen (2017) and Ferrara and Hodge (2018) proposed that signers display signs in different ways depending on their intentions.

The main function of verbs is “to encode meaning related to action and states” (Valli et al., 2011, p. 133). In the sign language literature, Liddell (2003a) first coined the term “depicting verbs.” Thumann (2013) defines depiction as “the representation of aspects of an entity, event, or abstract concept by signers' use of their articulators, their body, and the signing space around them” (p. 318). Valli et al. (2011) explained, “like other verbs [depicting verbs] contain information related to action or state of being” (p. 138). An example of depiction in ASL is BIKE-GO-UP-THE-HILL (translation: “The bike is being ridden up a hill”). In this paper, we use “depicting signs” as an umbrella term for the categories we analyze.

Conceptual blending is the combination of words and ideas to create meaning. Liddell (2003a) extended Fauconnier's (2001) conceptual blending theory to ASL to explain the structure of depicting signs. Valli et al. (2011) offered an example of blending: two people are sitting in an office having a conversation, and one of them wants to describe the street where she lives. She could set up objects on the table as a visual representation of her street, using a book to represent her house, a folder to represent her neighbor's house, and a pen to represent the railroad tracks at the end of the street. Liddell (2003a) describes how these real items on the table represent part of the imagined “scene” of her street. Because ASL is a visual language, ASL users can create conceptual blends by using their fingers, hands, arms, body, and face as the “objects” that represent the scene in signing space. Dudis (2004) offered another example: to describe a rocket flying in space, a non-signing college professor might use a pen to represent the rocket. Similarly, in ASL, signers could represent the rocket with either a “1” or an “R” handshape instead of a pen. When signers represent an entity or event that is not actually present, they may use depicting handshapes, signs, and space to make unseen entities visible (Thumann, 2013).

In a study that examined an ASL educational video series, Thumann (2013) found that native ASL users produced depiction an average of 20.44 times per minute. In another study, ASL-English interpreters were asked to interpret the same two texts twice, 12 years apart (Rudser, 1986). Their interpretations 12 years later included more depicting handshapes, suggesting that increased use of depicting handshapes aligns with higher ASL fluency. Similarly, deaf children whose mothers were native signers used more depicting handshapes than deaf children whose mothers were non-native signers (Lindert, 2001). Halley (2020) suggested that non-native signers may struggle with comprehending and producing depiction, especially depicting handshapes and signs. Thumann (2010) agreed that second language learners of ASL find depiction challenging to comprehend and produce, and Wilcox and Wilcox (1997) and Quinto-Pozos (2005) likewise found that second language learners of ASL have difficulty producing depicting handshapes. These studies are consistent with the findings of the present study: not all depicting signs are easy for M2L2 learners to acquire. However, Boers-Visker (2021a,b) followed 14 novice learners of Sign Language of the Netherlands (NGT) over a period of 2 years. The learners were asked to produce sign language descriptions of prompts containing various objects that could be depicted using a depicting handshape, and Boers-Visker found that the practice of denoting an object with a meaningful handshape was not difficult to learn.

Frishberg (1975) conducted one of the earliest studies of depicting handshapes in sign language; she described depicting handshapes as “hand-shapes in particular orientations [used] to stand for certain semantic features of noun arguments” (p. 710). A subsequent body of work has contributed to the description and analysis of depicting handshapes in almost all known sign languages (Schembri, 2001; Zwitserlood, 2012). The choice of handshape signals that a referent has certain salient characteristics, such as size and shape, or that it belongs to a class of semantically related items (Cormier et al., 2012).

Depicting signs encode information about the location, movement, path, and/or manner of movement of an argument of the verb, as well as the locations of two referents relative to each other (Schembri, 2001). Whole entity depicting handshapes represent an item from a semantic class. For example, the Depicting Handshape (DH)-3 handshape represents a car, motorcycle, or bike; the DH-1 handshape represents upright beings (people and animals); and the bent DH-V handshape represents people sitting or small animals such as cats and birds. Handling depicting handshapes represent a hand holding an item. For example, the flat-DH-C handshape represents holding flat items with some thickness, such as a book, a pile of papers, or a cereal box, and the DH-S handshape represents holding cylindrical items. When a signer uses a handling depicting handshape, it denotes that an agent is manipulating an object in a particular way (e.g., holding a paint roller; hands on a steering wheel). As the name implies, body part depicting handshapes represent a part of the body of a human or animal. The body part depicting handshape DH-V (downward) represents the legs of a human or upright animal, and DH-C (spread) represents the claws of an animal. Finally, size-and-shape specifiers describe the size or shape of an object. For example, a one-handed DH-F handshape could represent a circular object such as a coin, whereas two DH-F handshapes moving apart from each other could represent a cylindrical object (e.g., a stick, pipe, or small pole). Depicting signs also include locations that are encoded in the signing space.

Depicting signs may involve one or two hands. For example, a signer might use one hand to represent a person standing up and the other hand to represent a small animal. Schembri (2003) described how one hand can produce a whole entity depicting handshape while the other hand simultaneously employs a handling depicting handshape. Schembri (2003) also explained that a depicting handshape could represent part of a static or moving referent and could be combined with verb stems that represent the motion or location of a referent.

Some studies have shown that non-signers use depicting handshape-like gestures to express motion events (Singleton et al., 1993; Schembri et al., 2005). However, in a study of Dutch signers, the depicting handshapes used by signers were found to be highly conventionalized compared to non-signers' gestures (Boers-Visker and Van Den Bogaerde, 2019). Boers-Visker and Van Den Bogaerde (2019) compared how two L2 subjects and three L1 subjects used depicting handshapes and found that both L2 subjects used depicting handshapes less frequently than the L1 subjects. The visual representation of depicting handshapes and depicting signs is new for many M2L2 students (Boers-Visker, 2021a,b). A few recent studies describe how M2L2 learners acquire depicting handshapes and signs. Marshall and Morgan (2015) studied British Sign Language (BSL) M2L2 learners who had been learning BSL for 1–3 years. They found that the learners were aware of the need to use depicting handshapes to represent objects but had difficulty choosing the correct depicting handshape, whereas location posed little difficulty. In another study, Ferrara and Nilsson (2017) examined how Norwegian Sign Language (NSL) learners used depicting handshapes and signs to describe an environment. They found that the learners often resorted to lexical signs instead of depicting handshapes and signs, and used lexical signs marked for location. In summary, these studies show that M2L2 students find depicting handshapes difficult to acquire and that they form a complex system to learn.

Depicting signs (Frishberg, 1975; Supalla, 1986) “are a productive semiotic resource for iconically representing entities, spatial relationships, handling actions and motion events in signed languages” (McKee et al., 2021, p. 95; for overviews of their properties, see Emmorey, 2003; Zwitserlood, 2012). Within a cognitive linguistics framework, analyses of depicting signs emphasize their analog character and their function in discourse (Liddell, 2003a; Dudis, 2008; Ferrara, 2012). The depicting signs identified in this study comprised the four main types described by McKee et al. (2021). We coded these four types in our data: (1) entity depicting signs; (2) body part depicting signs; (3) handling depicting signs; and (4) size-and-shape specifiers (SASS), as follows (the information and codes below were adapted from McKee et al., 2021, pp. 100–101).

(1) Entity depicting signs (EDS): The handshape represents a whole or part of an entity that belongs to a closed semantic category, for instance human beings or vehicles. The terms whole entity (Engberg-Pedersen, 1993) and semantic depicting handshapes (Supalla, 1986) have also been used in the literature. Unlike size-and-shape specifiers, the handshape can combine with a movement that indicates the motion path and/or manner of the entity in space.

(2) Body part depicting signs (BPDS): Body parts of an animate referent (e.g., legs, eyes, feet, head) are mapped onto the signer's fingers or hands.

(3) Handling depicting signs (HDS): The movement that combines with a depicting handshape imitates how an object is touched or handled. Padden et al. (2013) distinguished two strategies within this category, depending on which iconic feature the selected handshape depicts: the action of the hands or the shape properties of the object being handled.

a. Handling (HDS-h): the handshape depicts a hand manipulating an object, e.g., grasping the handle of a toothbrush and moving the handshape at the mouth as if brushing.

b. Instrument (HDS-i): the handshape depicts a salient feature of the object itself, e.g., the extended index finger represents a whole toothbrush, with lateral movement across the mouth that imitates the orientation of a toothbrush in use.

(4) Size-and-shape specifiers (SASS): These describe the visual-geometric structure of a referent (see Supalla, 1986). In this study, we distinguish three sub-categories:

a. Static SASS (SASS-st): The size and/or shape of an object or part of an object is either directly mapped onto the signer's hand (e.g., a flat B-handshape representing a sheet of paper), or the distance between the signer's hands or fingers shows the size of the referent (e.g., two flat handshapes, palms facing, to show the width of a box). Unlike entity depicting signs, these handshapes do not specify a particular semantic class of referents but categorize them more broadly into flat objects, round objects, thin objects, etc. Another difference from entity depicting signs is that static SASS involve no path movement. Repeated articulation of a static SASS can depict a quantity of objects (e.g., a stack of books).

b. Tracing SASS (SASS-tr): The signer uses the index fingers or whole hands to trace the outline of an object in the air, e.g., the triangular shape of a traffic sign. The hand movement involved in this category of depicting signs specifies the shape or extent of a referent, unlike in entity depicting signs where path movement describes the motion of a whole entity.

c. Element SASS (SASS-el): These are descriptions of non-solid elements such as water, light, or vapor. Although such elements do not have a clearly delineated size or shape, their depiction shares properties with SASS (see also Supalla, 1986, “texture and consistency morphemes”). Element depictions are rarely represented in experimental studies but need to be accounted for in an M2L2 acquisition study.
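To make the structure of this coding scheme concrete, the following minimal sketch shows one way the sub-category codes listed above could be represented and collapsed into the four main types when counting tokens in a retelling. It is an illustration under our own assumptions, not the coding procedure or software used in the study; the names `DSType`, `main_type`, and `tally_main_types` are hypothetical.

```python
from collections import Counter
from enum import Enum


class DSType(Enum):
    """Hypothetical codes for the depicting-sign categories described above."""
    EDS = "EDS"          # entity depicting sign
    BPDS = "BPDS"        # body part depicting sign
    HDS_H = "HDS-h"      # handling depicting sign: handling
    HDS_I = "HDS-i"      # handling depicting sign: instrument
    SASS_ST = "SASS-st"  # size-and-shape specifier: static
    SASS_TR = "SASS-tr"  # size-and-shape specifier: tracing
    SASS_EL = "SASS-el"  # size-and-shape specifier: element


MAIN_TYPES = ("EDS", "BPDS", "HDS", "SASS")


def main_type(code):
    """Collapse a sub-category code to its main type (e.g., 'SASS-tr' -> 'SASS')."""
    return code.value.split("-")[0]


def tally_main_types(codes):
    """Count coded tokens in one retelling per main type, reporting zeros explicitly."""
    counts = Counter({t: 0 for t in MAIN_TYPES})
    for code in codes:
        counts[main_type(code)] += 1
    return dict(counts)


# A hypothetical retelling coded with one EDS, one HDS-h, one HDS-i, and one SASS-tr.
retelling = [DSType("EDS"), DSType("HDS-h"), DSType("HDS-i"), DSType("SASS-tr")]
print(tally_main_types(retelling))  # {'EDS': 1, 'BPDS': 0, 'HDS': 2, 'SASS': 1}
```

Keeping the sub-category codes (HDS-h, HDS-i, SASS-st, SASS-tr, SASS-el) while tallying by main type preserves the finer distinctions for later analysis and still yields the four counts that correspond to the categories compared across groups.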

The first main type of depicting signs is entity depicting signs (EDS), which represent a whole or part of an entity such as a human being or vehicle. A signer retelling the Canary Row story could use an EDS to show Sylvester the cat walking upright, like a human being. Here, the handshape is an extended index finger (a “1” handshape) representing an upright figure moving along a path, so the signer could use it to depict the cat walking from point A to point B (see Figure 1).

Figure 1. First depicting sign of a native signer: Example of EDS: Sylvester the cat walking upright similar to a human being from point A to point B.

The second main type of depicting signs is body part depicting signs (BPDS), in which parts of an animate body (legs, eyes, feet, head, etc.) are mapped onto the signer's hands or fingers. In the Canary Row example, when Sylvester the cat is kicked out of a building and lands in the garbage, the signer could use an “S” handshape to depict the cat's head hitting the garbage (see Figure 2).

Figure 2. Second depicting sign, a BPDS: the “S” handshape depicts the head of Sylvester the cat when he is kicked out of a building and hits his head in the garbage area.

The third main type of depicting signs is handling depicting signs (HDS), which combine movement with depicting handshapes to imitate how an object is touched or handled. Following Padden et al. (2013), we distinguish two sub-categories: handling (HDS-h) and instrument (HDS-i). In HDS-h, the handshape depicts a hand manipulating an object, e.g., grasping the handle of a toothbrush and moving the handshape at the mouth as if brushing. In the Canary Row example, the grandmother is seen holding a closed umbrella while hitting the cat; this could be depicted through an HDS-h, as shown in Figure 3. In HDS-i, the handshape depicts a salient feature of the object itself, e.g., the extended index finger represents a whole toothbrush, with lateral movement across the mouth that imitates the orientation of a toothbrush in use. In the Canary Row example, the hotel concierge is talking on the phone, and the signer could use the sign for “telephone” as an HDS-i (see Figure 4). In another study, Padden et al. (2010) found generational differences in the use of handling, instrument, and SASS forms in two sign languages, Al-Sayyid Bedouin Sign Language (ABSL) and Israeli Sign Language (ISL). They also found that while both ASL and ISL make full use of size-and-shape specifiers and handling depicting handshapes, the depicting handshape system of ASL includes more abstract entity depicting handshapes, such as UPRIGHT-OBJECT and VEHICLE, than that of ISL, which relies more on size-and-shape specifiers and handling depicting handshapes.

Figure 3. Third depicting sign, an HDS-h, in which the grandmother is seen holding the closed umbrella while hitting the cat.

The fourth main type of depicting signs, size-and-shape specifiers (SASS), shows the size and shape of an object. SASS describe the visual-geometric structure of a referent (Supalla, 1986) and comprise three sub-categories: static SASS, tracing SASS, and element SASS. In static SASS (SASS-st), the size and/or shape of an object or part of an object is either directly mapped onto the signer's hand (e.g., a flat B-handshape representing a sheet of paper) or represented by the distance between the signer's hands or fingers. For example, in the Canary Row story, the signer might depict the size of the downspout attached to the building (see Figure 5). In tracing SASS (SASS-tr), the signer uses the index finger or whole hands to trace the outline of an object in the air, e.g., the triangular shape of a traffic sign. For example, in the Canary Row story, the signer outlines a poster/sign on the wall with his or her index finger (see Figure 6). Element SASS (SASS-el) depict non-solid elements such as water, light, or vapor. Not all SASS-el have a clearly delineated size or shape, but their depiction shares properties with SASS. For example, in the Canary Row story, the signer could depict water trickling down the downspout (see Figure 7).

Figure 5. Fifth depicting sign entails a static SASS where the signer depicts the size of the downspout that is attached to the building.

Figure 6. Sixth depicting sign entails a tracing SASS where the signer traces the poster on a building wall.

Given the paucity of research on second language acquisition among sign language students, this study examines these four main types of depicting signs in students who were learning ASL as a second language and in a second modality. The research hypotheses are that:

a. In a cross-sectional sample of M2L2 ASL students, students exhibit a common acquisition trajectory for the use of all types of depicting signs over time.

b. The advanced ASL groups would exhibit greater use of depicting signs compared to the beginning ASL groups.

The null hypotheses are that the students would not exhibit a common acquisition trajectory in the use of all types of depicting signs over time, and that there are no differences between the beginning and advanced ASL groups.

The study reported in this article is part of a larger cross-sectional research project to investigate cognitive and task-based learning in M2L2 hearing students. Some other tests that were part of the larger research project include the Kaufman Brief Intelligence Test; ASL-Comprehension Test; Image Generator; Spatial Stroop; ASL Spatial Perspective; and ASL Vocabulary. All tests were counterbalanced. Analyses related to these other cognitive tasks are ongoing and will be disseminated in separate papers. This research project was approved by the Institutional Review Board and was conducted in accordance with the ethical guidelines laid out by the university.

The sample included 75 hearing undergraduate students (Mage = 21.2 years, SDage = 1.7 years) who were taking 3-credit college ASL courses of different levels. Of 59 participants who reported their gender identity, 61% identified as female, 37.3% identified as male, and 1.7% identified as non-binary. The participants were divided into three subgroups based on their coursework. The ASL 1 Group represents those who were enrolled in a 3-credit ASL 1 course for one semester (15 weeks); the ASL 2 Group were enrolled in the second level ASL course (either because they took ASL 1 already or already had some ASL skills), and the ASL 3+ Group were in the third level ASL course or higher. There were no significant differences between the three ASL groups based on their age, F (2, 56) = 0.903, p = 0.411, or gender identity, F (2, 56) = 1.645, p = 0.202.

The material consisted of short stimulus clips from Canary Row, a series of Sylvester and Tweety cartoons (Freleng, 2004). These clips were used as an effective elicitation tool for narrative retellings (McNeill, 1992). Each of the clips is a few minutes long. All three clips were selected to elicit signed stories from M2L2 students.

The first clip shows Sylvester and Tweety across from each other in different buildings. Sylvester is on a lower level of a building and Tweety is on an upper level of a building across the street. Tweety is in his bird cage. Sylvester uses binoculars to look for Tweety. Tweety also has a set of binoculars. Tweety chirps and makes some noises. Sylvester becomes excited and runs across the street to enter the building Tweety is in. There is a sign on the building that says, “No dogs or cats allowed.” As soon as Sylvester enters the building, he gets kicked out and lands in the garbage with trash on his head.

In the second clip, Sylvester walks back and forth in an alley. Across the street, Sylvester sees a dancing monkey wearing a shirt and cap next to a man with a mustache who is playing a musical box. Sylvester calls to the monkey and entices it with a banana. The monkey follows Sylvester behind the bush/wall. Sylvester changes into the monkey's clothes and acts as the monkey, carrying a cup to collect coins. Tweety sees Sylvester and tweets. Next, Sylvester climbs up the drain pipe toward Tweety. As soon as Tweety sees Sylvester at the window, he escapes his cage and flies into the Grandmother's apartment. Sylvester begins to chase Tweety in the apartment. When Sylvester runs into the Grandmother, he stops and acts like a monkey in front of her. While the Grandmother is talking to Sylvester, he continues to look around for Tweety under the tablecloth, the chair, the Grandmother's long dress, and the rug. The Grandmother takes a coin out of her wallet and drops it in the cup that Sylvester is holding. Next, Sylvester grabs his hat and pulls it; then, suddenly, he is hit by the Grandmother with an umbrella. Eventually Sylvester becomes dizzy and leaves the room.

In the final clip, the desk clerk answers an old-fashioned telephone and can be seen talking affably on the phone. Next, Sylvester is shown sitting in a mailbox and eavesdropping on the clerk's phone conversation. Sylvester then sneakily appears at the Grandmother's apartment door disguised as a porter and knocks. The Grandmother talks to Sylvester through the small rectangular transom window above the door. Sylvester asks the Grandmother to open the door, and she agrees. Sylvester enters the apartment, looks around the room, and picks up a small suitcase and the bird cage, which is covered with a cloth. He leaves the apartment with the bird cage and suitcase, throws out the suitcase, and carries the bird cage down the stairs. Sylvester carries the covered bird cage into the alley and puts it on top of a box. Sylvester removes the cover and, to his surprise, finds the Grandmother in the bird cage instead of Tweety. The Grandmother hits Sylvester with an umbrella and chases him down the street.

An informed consent form and a video-release form were shared with the participants prior to the testing. By signing these forms, participants allowed researchers to record their signing, and share their video data for the purposes of presentation, publication, and teaching. Participants were allowed to continue with the study even if they did not wish to have their video data released but gave their informed consent. Participants who did not wish to have their videos shared gave us permission to collect their data and use it for analysis, but their videos were not used for the creation of still images, videos, or presentation of data in public. All participants were given language background questionnaires and asked to rate their ASL skills proficiency.

The ASL students watched the Canary Row cartoon clips and were asked to “retell the story as if you were telling it to a deaf friend” using gestures or sign language. Participants were tested individually and compensated $20 for their time. Two research assistants, who were hearing English-ASL interpretation majors, provided an informed consent form and explained the benefits and risks of the study in spoken English. These instructions were read to the participants: “For this part of the study, you will watch a short clip from a Sylvester and Tweety cartoon. You will sign in ASL what you saw in the cartoon clip. I will show you the clip two times before I ask you to sign the story.” Participants were also told that they could use gesture, mime, sign, or a combination. The intent of these instructions was to avoid making participants feel uncomfortable or limited by their expressive sign language skills. They were encouraged to use any semiotic device they deemed appropriate, especially if they did not feel confident in their ASL skills. Participants sat in front of a desktop computer in a private, distraction-free testing room to watch the cartoon clips, and a webcam built into the top of the computer ran in the background during testing to capture their signing. Participants were allowed to watch each clip up to two times before retelling the story using whichever semiotic devices they needed to complete the task. They were asked not to share the content of the test with other potential participants outside the testing sites. Participants were tested three times; each time they watched a different clip from Canary Row.

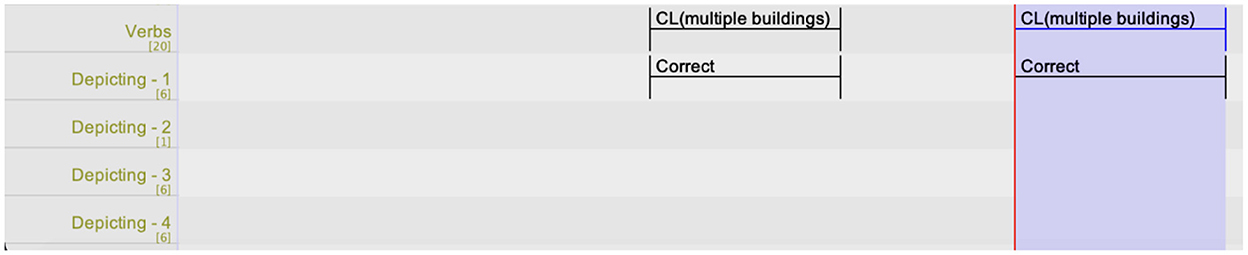

Videos of participants retelling the cartoon clips were coded and analyzed using ELAN, a video annotation software program developed by researchers at the Max Planck Institute for Psycholinguistics in Nijmegen, the Netherlands (Crasborn and Sloetjes, 2008; ELAN, 2021). In ELAN, coding tiers were created to capture each type of depicting sign, and ELAN was used to track how many times (tokens) each tier was marked. Researchers marked tokens to indicate whether a discourse stretch contained instances of the four main types of depicting signs: (1) entity depicting signs; (2) body part depicting signs; (3) handling depicting signs; and (4) size-and-shape specifiers (SASS). Annotations in ELAN were made on five tiers: one for verbs and one for each of the four types of depicting signs. Figure 8 shows an example of the annotations for a fragment in which the signer in the video (not shown) is producing depicting signs. Tokens were marked “correct” if the signer produced the depicting sign correctly; if a depicting sign was not produced at all or was produced incorrectly, the token was left blank. The annotation “Depicting-1” indicates the stationary sign or depicting handshape that was produced, for example, cars parked in a driveway.

Figure 8. Annotations in the data on a selection of tiers. The tier titles (first column) denote the sign(s) or verbs that were produced.

A total of four student research assistants (two hearing students who are children of deaf adults and native-like signers, one deaf native signer, and one hearing near-native signer) collaborated to perform the analyses and complete the coding for each tier. The codings were split into three ratings: (1) correct sign production; (2) a mix of correct and incorrect sign production; and (3) incorrect sign production. For instance, in the mixed category, some M2L2 signers would produce the correct form (handshape) but with incorrect movement or location in the signing space. Research shows that gestures sometimes have the same form as signs (Ortega et al., 2019), so some handshapes coded as “incorrect” might not be incorrect in this respect. However, these errors are part of the learning process and could be seen as an interlanguage phenomenon. Boers-Visker and Van Den Bogaerde (2019) showed that sign-naïve gesturers use handshapes with features that deviate from those of the lexeme, and learners might produce such incorrect handshapes during the first stages of their learning process (Janke and Marshall, 2017).

The total number of depicting signs across the three retellings was divided by three to give the average number of depicting sign tokens per video. However, one limitation of the method was that not all participants had three clear videos of their retellings. Approximately 30% of the videos (68 of 225 possible retellings) were unscoreable because they were choppy or frozen, or the participant was partially outside the video frame. For participants with one or more unscoreable videos, the total number of tokens was instead divided by the number of scoreable videos. This ensured that students could be compared across ASL levels.
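The per-video averaging described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' analysis script: the function name `mean_tokens_per_video` and the convention of marking an unscoreable retelling as `None` are assumptions, and the token counts in the usage lines are hypothetical.

```python
from typing import Optional, Sequence


def mean_tokens_per_video(tokens_per_retelling: Sequence[Optional[int]]) -> Optional[float]:
    """Average depicting-sign tokens over a participant's scoreable retellings.

    Hypothetical convention: each entry is the token count for one Canary Row
    retelling, or None if that video was unscoreable (choppy, frozen, or the
    signer was partly out of frame).
    """
    scoreable = [count for count in tokens_per_retelling if count is not None]
    if not scoreable:
        # No scoreable retellings: the participant cannot be given a score.
        return None
    # Divide by the number of scoreable videos, not by a fixed 3.
    return sum(scoreable) / len(scoreable)


# Hypothetical token counts for three participants.
print(mean_tokens_per_video([3, 6, 9]))           # 6.0 (all three videos scoreable)
print(mean_tokens_per_video([6, None, 9]))        # 7.5 (one unscoreable video)
print(mean_tokens_per_video([None, None, None]))  # None
```

Dividing by the number of scoreable videos rather than by three keeps a participant with a missing video from being scored as if they had produced zero tokens in it.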

A one-way analysis of variance (ANOVA) was performed to determine whether the ASL groups differed in their ASL Comprehension Test (ASL-CT) performance. All three ASL groups differed significantly from one another, F(2, 72) = 19.088, p < 0.001, partial η² = 0.347, 95% CI [0.165, 0.479]. Post-hoc analyses with Bonferroni corrections to the alpha revealed that the ASL 1 Group (M = 51% correct; SD = 10%) performed worse than both the ASL 2 Group (M = 59% correct; SD = 10%; p = 0.031) and the ASL 3+ Group (M = 69% correct; SD = 10%; p < 0.001), and that the ASL 2 Group performed worse than the ASL 3+ Group (p = 0.026).
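For readers who want to check the effect size, the sketch below computes a one-way ANOVA F statistic and eta squared from raw group scores, and recovers partial eta squared from a reported F and its degrees of freedom via F·df_effect / (F·df_effect + df_error). In a one-way design, partial eta squared equals eta squared. Plugging in F(2, 72) = 19.088 gives approximately 0.347, the effect size reported above for the ASL-CT comparison. The function names are illustrative and the toy scores are hypothetical; this is not the authors' statistical software, and it omits p-values and the Bonferroni-corrected post-hoc tests.

```python
from typing import List, Sequence, Tuple


def one_way_anova(groups: Sequence[Sequence[float]]) -> Tuple[float, int, int, float]:
    """Return (F, df_between, df_within, eta_squared) for independent groups."""
    all_scores: List[float] = [x for g in groups for x in g]
    k, n = len(groups), len(all_scores)
    grand_mean = sum(all_scores) / n
    group_means = [sum(g) / len(g) for g in groups]

    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Within-group sum of squares: spread of scores around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)

    df_between, df_within = k - 1, n - k
    if ss_within == 0:
        raise ValueError("F is undefined when all within-group variance is zero")
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    eta_squared = ss_between / (ss_between + ss_within)
    return f_stat, df_between, df_within, eta_squared


def partial_eta_squared_from_f(f_stat: float, df_effect: int, df_error: int) -> float:
    """Recover partial eta squared from a reported F and its degrees of freedom."""
    return (f_stat * df_effect) / (f_stat * df_effect + df_error)


# Hypothetical toy scores for three groups, just to exercise the function.
f, df_b, df_w, eta = one_way_anova([[1, 2, 3], [4, 5, 6], [7, 8, 9]])
print(f, df_b, df_w, eta)  # 27.0 2 6 0.9

# Reported ASL-CT result: F(2, 72) = 19.088.
print(round(partial_eta_squared_from_f(19.088, 2, 72), 3))  # 0.347
```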

Two raters who are non-deaf native ASL signers born to deaf, signing parents coded the Entity-Static and Entity-Movement tokens; their inter-rater reliability was r(67) = 0.811, p < 0.001. One deaf native signer and one non-deaf, non-native ASL signer coded the remaining dependent variables; their inter-rater reliability was r(42) = 0.918, p < 0.001. A significant positive correlation was found between ASL-CT performance and the total number of depicting sign tokens, r(69) = 0.387, p < 0.001, suggesting that students with greater ASL comprehension tended to produce more depicting signs. A multivariate ANOVA was computed with the number of depicting sign tokens as the dependent variable, depicting sign type (EDS, SASS, BPDS, HDS) as the within-subject variable, and ASL class level (ASL 1, ASL 2, ASL 3+) as the between-subject variable. The analysis revealed significant group main effects for EDS, SASS, and HDS (p < 0.05) but not for BPDS (see Table 1 and Figure 9).
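The inter-rater reliability values above appear to be Pearson correlations between the two raters' token counts, reported with n − 2 degrees of freedom, so r(67) would correspond to 69 paired observations and r(42) to 44. A minimal sketch of that computation follows; the function name `pearson_r` and the toy counts are hypothetical, and it is not the authors' code. Python 3.10+ also offers `statistics.correlation`, which returns the same coefficient.

```python
import math
from typing import Sequence, Tuple


def pearson_r(rater_a: Sequence[float], rater_b: Sequence[float]) -> Tuple[float, int]:
    """Return (r, df) for two raters' paired token counts, with df = n - 2."""
    if len(rater_a) != len(rater_b):
        raise ValueError("each observation needs a score from both raters")
    n = len(rater_a)
    if n < 3:
        raise ValueError("need at least three paired observations")
    mean_a = sum(rater_a) / n
    mean_b = sum(rater_b) / n
    # Co-deviation of the two raters and each rater's own spread.
    s_ab = sum((a - mean_a) * (b - mean_b) for a, b in zip(rater_a, rater_b))
    s_aa = sum((a - mean_a) ** 2 for a in rater_a)
    s_bb = sum((b - mean_b) ** 2 for b in rater_b)
    if s_aa == 0 or s_bb == 0:
        raise ValueError("r is undefined when a rater gives identical counts throughout")
    return s_ab / math.sqrt(s_aa * s_bb), n - 2


# Hypothetical token counts from two raters for four video segments.
r, df = pearson_r([1, 2, 3, 4], [2, 4, 6, 8])
print(f"r({df}) = {r:.3f}")  # r(2) = 1.000
```

Note that a Pearson correlation captures whether raters rank participants consistently, not whether they assign identical counts; a rater who systematically counts twice as many tokens, as in the toy example, still yields r = 1.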

Figure 9. Average frequency of depicting signs for each of the four depicting sign types and each ASL group. Error bars represent the standard error. EDS, entity depicting signs; SASS, size-and-shape specifiers; BPDS, body part depicting signs; HDS, handling depicting signs.

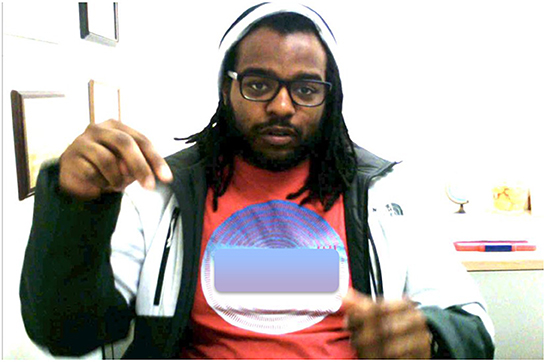

The following figures provide descriptive examples of students producing different types of depicting signs. Figure 10 shows an ASL student using a depicting sign, a stationary depicting handshape, to show where an entity is established in the signing space (e.g., CL-planes on a runway). In the last example, Figure 11 shows an ASL student using depicting signs that show the action and motion of an entity (e.g., CL-a car going uphill).

Figure 11. ASL student using type 3 depicting signs: a depicting handshape showing a cat walking down the stairs.

The three groups of ASL undergraduate college students were more heterogeneous than homogeneous in their ASL expressive skills, as evident in the groups' standard deviations. Regardless, the results revealed that the ability to produce SASS and HDS improves as students advance in their L2 training, and both have a positive relationship with an independent measure of ASL comprehension. In contrast, EDS did not correlate with ASL comprehension, and the ASL 2 students produced fewer EDS than the ASL 1 students but did not differ from the ASL 3+ students. The frequency of BPDS did not differ among the three groups of L2 learners, although their ability to produce BPDS was correlated with their ASL comprehension.

The authors postulate that ASL 1 signers may gesture concepts in ways that resemble depicting signs before they know the actual signs for those concepts, which may explain the lower number of EDS tokens among the ASL 2 students. It is possible that gesturing skills students bring to ASL 1 help them produce pidgin-like descriptions that mix signs with spontaneously produced gestures. Occhino and Wilcox (2017) discussed how interlocutors may categorize an articulation as a sign or as a gesture differently depending on their linguistic experience. A possible limitation is that students who learned ASL in their ASL classes became comfortable with using gestures and acting, as opposed to telling the story using depicting handshapes or tracing depicting handshapes to describe the size or shape of an object. As students progress through their ASL education, they are exposed to new vocabulary and learn more prescriptive rules of ASL. Over time, the ASL students may have learned more of the formal rules related to the use of depicting handshapes and to describing objects or people. As discussed earlier in this article, the “hand-as-object” gestures and entity depicting handshapes produced by non-native signers could be similar to each other. Previous research suggests that M2L2 learners could access their repertoire of gestures as a substrate upon which to build their knowledge (Marshall and Morgan, 2015; Janke and Marshall, 2017). It is very possible that learners used more gestures in ASL 1 and that, in the more advanced ASL classes, they used more depicting signs, including depicting handshapes. Boers-Visker (2021a,b) suggested “that the commonalities between gestures and signs facilitate the learning process, that is, we are dealing with an instance of positive transfer” (p. 23).

Future analyses should examine more specific linguistic features, namely eye gaze, mouth movement, depicting handshapes (including entity depicting handshapes), phonological features, manual and non-manual articulators, location, and use of space, and compare them between groups. Because the three groups represent increasing levels of proficiency sampled at a single point in time, this is a cross-sectional study. Future studies could follow the same participants longitudinally, filming them at ASL 1, ASL 2, and ASL 3+.

A potential limitation of this study is the utilization of videos that captured a two-dimensional model of language, instead of a three-dimensional model that would be found within a naturalistic setting. This may have impacted the raters' ability to see and read the gestures and signs in the videos. Another possible limitation is that it was difficult to disentangle signs from gestures. In several instances, it was a challenge to judge whether the signs were actually signs, and not gestures that resemble depicting signs.

Another possible limitation is that the subjects in this study could have had more socio-cultural exposure to signers and Deaf culture on campus. Given the visible presence of a large staff of sign language interpreters and the large number of deaf and hard of hearing individuals on campus, hearing study participants may have become accustomed to using gestures and/or signs and viewing how others use gestures and/or signs to communicate. The students were asked to imagine they were communicating with a deaf friend. The university has approximately 22,000 hearing students and 1,000 deaf and hard of hearing students. Although the participants were screened to make sure they did not have prior training in ASL and were not enrolled in other language courses, it is likely that they have had casual exposure and interaction with deaf and hard of hearing individuals in shared spaces such as classrooms, dormitories, dining halls, or other common spaces. Studies at other universities should also be conducted to see whether there are effects related to presence or absence of deaf and signing populations. Another limitation was that we did not observe how sign language teachers taught their ASL classes. There were more than 10 sections of ASL classes each semester and it is possible that, although each instructor followed the department's ASL curriculum, each likely had different teaching styles. Despite these limitations, we believe that the study provides valuable data on the acquisition of depicting signs, and that the findings presented offer a good starting point for further research.

Our study yielded some answers but stimulated more questions. One future research direction is whether new signers require explicit instruction in the use of each type of depicting sign. Further cross-sectional and/or longitudinal studies are needed to analyze the later stages of learning of all four types of depicting signs and to measure the amount of improvement at each level of sign language instruction. Furthermore, research is needed to compare the learning trajectories for all four types of depicting signs in M2L2 ASL users with the trajectories of learners of signed languages of other countries. The large sample size is a strength of this study and could inform these future research directions.

One suggestion for the future is to investigate how deaf ASL signers produce these depicting signs, in terms of the frequency and duration of each depicting sign, and then compare those data with data from M2L2 hearing ASL students at other universities.

We did not ask our subjects whether they took any acting classes, as that might influence their ability to produce more depicting handshapes and signs. This would be another interesting study to compare subjects who took acting classes with subjects who never took acting classes and to test whether their depicting signs change over time. Ortega et al. (2020) found that hearing signers create expectations related to the form of iconic signs that they have never seen before based on their implicit knowledge of gestures. More studies are needed to better understand the role of what is traditionally considered “transfer” from L1 to L2; i.e., the extension of articulatory gestures from multimodal use of spoken languages to sign languages. Another area for study would be to investigate whether and how ASL teachers rely on hearing students' knowledge of a gestural repertoire to teach them depicting signs. Future research could also ascertain best practices in teaching depicting signs to maximize ASL learners' skill development. These lines of inquiry may serve as a guide for future evaluations of ASL pedagogies.

Future research also should examine other variables and their effects on M2L2 learners of sign language. For example, Albert and Kormos (2011) examined whether creativity plays a role in second-language oral task performance. They tested the creativity of Hungarian secondary school learners of English using a standardized creativity test. Participants also performed two versions of a narrative task, which were analyzed for the number of words and narrative clauses, subordination ratio, lexical variety, and accuracy. They found that students who invented a high number of solutions on the creativity test talked more. It is very possible that in a foreign language setting, students who talk more create more opportunities for themselves to use the language in narrative tasks and benefit from producing more output than students who talk less or who score much lower on a creativity test. Albert and Kormos concluded that some aspects of creativity might affect the amount of output students produce, but not the quality of their narrative performance. Future studies should investigate whether students' personalities, including their talkativeness, affect their output.

M2L2 research is still in its infancy; we are still learning what a typical learning trajectory looks like in this population. Learning how to produce depicting signs in the visual-gestural modality is a challenging task, but this study demonstrated that M2L2 students can develop these skills. The ASL 3+ group appears to be able to produce a higher number of instances of depiction. The fact that depicting signs were not readily observed until after two semesters of college-level ASL instruction suggests that these four types of depicting signs may take more time for signers to learn; this finding has implications for ASL education. The task type in this study might have influenced the production of depicting signs.

There are few studies that consider the learner's interlanguage during development. Likewise, few studies have addressed acquisition of a signed language within the theoretical frameworks of second language acquisition. Research related to M2L2 from a language development perspective is still sparse; more research is needed to better identify the gaps in second language acquisition research findings and ascertain best teaching practices. Investigating the challenges in M2L2 development could contribute to the overall body of second language acquisition research. Future research, particularly cross-sectional and/or longitudinal studies, is needed to explore the trajectory of the acquisition of depicting signs, and to establish evidence-based approaches to teaching them to M2L2 students.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving human participants were reviewed and approved by Rochester Institute of Technology. The patients/participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

KK and PH: conceptualization, methodology, and formal analysis. KK: investigation, writing–original draft preparation, supervision, project administration, and funding acquisition. KK, GK, and PH: resources, writing–review and editing, and visualization. All authors contributed to the article and approved the submitted version.

The authors would like to thank the NTID Department of ASL and Interpreting Education for supporting this research effort. A special thank you to Corrine Occhino for her guidance in developing this project. A special thank you to Gerry Buckley and the NTID Office of the President for their generous support through the Scholarship Portfolio Development Initiative. We would also like to thank our research assistants (in alphabetical order) Carmen Bowman, Abigail Bush, Ella Doane, Rachel Doane, Andrew Dunkum, Barbara Essex, Yasmine Lee, Kellie Mullaney, Katherine Orlowski, Melissa Stillman, and Ian White, who contributed their ideas to the success of the M2L2 project and the ASL students whose participation made this research possible. We would also like to thank David Quinto-Pozos and Ryan Lepic for their review and consultation on this research project and the reviewers for their feedback on the manuscript.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Albert, A., and Kormos, J. (2011). Creativity and narrative task performance: an exploratory study. Lang. Learn. 61, 73–99. doi: 10.1111/j.1467-9922.2011.00643.x

Ashton, G., Cagle, K., Kurz, K. B., Newell, W., Peterson, R., and Zinza, J. (2014). Standards for learning American Sign Language. Am. Council Teach. Foreign Lang. 1–64.

Bamberg, M., and Damrad-Frye, R. (1991). On the ability to provide evaluative comments: further explanations of children's narrative competencies. J. Child Lang. 18, 689–710. doi: 10.1017/S0305000900011314

Boers-Visker, E. (2021a). Learning to use space. A study into the SL2 acquisition process of adult learners of sign language of the Netherlands. Sign Lang. Linguist. 24, 285–294. doi: 10.1075/sll.00062.boe

Boers-Visker, E. (2021b). On the acquisition of complex classifier constructions by L2 learners of a sign language. Lang. Teach. Res. 24, 285–294. doi: 10.1177/1362168821990968

Boers-Visker, E., and Pfau, R. (2020). Space oddities: the acquisition of agreement verbs by L2 learners of sign language of the Netherlands. Mod. Lang. J. 104, 757–780. doi: 10.1111/modl.12676

Boers-Visker, E., and Van Den Bogaerde, B. (2019). Learning to use space in the L2 acquisition of a signed language. Sign Lang. Stud. 19, 410–452. doi: 10.1353/sls.2019.0003

Brentari, D., Coppola, M., Mazzoni, L., and Goldin-Meadow, S. (2012). When does a system become phonological? Handshape production in gesturers, signers, and homesigners. Nat. Lang. Linguistic Theory 30, 1–31. doi: 10.1007/s11049-011-9145-1

Bygate, M. (1999). Quality of language and purpose of task: patterns of learners' language on two oral communication tasks. Lang. Teach. Res. 3, 185–214. doi: 10.1177/136216889900300302

Capirci, O., Bonsignori, C., and Di Renzo, A. (2022). Signed languages: a triangular semiotic dimension. Front. Psychol. 12, 802911. doi: 10.3389/fpsyg.2021.802911

Casey, S. (2003). “Relationships between gestures and signed languages: indicating participants in actions,” in Cross-Linguistic Perspectives in Sign Language Research: Selected Papers from TISLR 2000, eds. A. Baker, B. van den Bogaerde, and O. Crasborn (Florianopolis, Brazil: TISLR).

Casey, S. K. (2003). “Agreement,” in Gestures and Signed Languages: The Use of Directionality to Indicate Referents Involved in Actions. San Diego: University of California.

Chen Pichler, D. (2009). Sign production by first-time hearing signers: a closer look at handshape accuracy. Cadernos de Saude 2, 37–50. doi: 10.34632/cadernosdesaude.2009.2975

Chen Pichler, D. (2011). “Sources of handshape error in first-time signers of ASL,” in Deaf Around the World: The Impact of Language, eds. G. Mathur and D. Napoli (Oxford, UK: Oxford University Press), 96–121.

Chen Pichler, D., and Koulidobrova, H. (2016). “Acquisition of sign language as a second language,” in The Oxford Handbook of Deaf Studies in Language, eds. M. Marschark and P. Spencer (Oxford, UK: Oxford University Press), 218–230.

Clark, H. (2016). Depicting as a method of communication. Psychol. Rev. 123, 324–347. doi: 10.1037/rev0000026

Cormier, K., Quinto-Pozos, D., Sevcikova, Z., and Schembri, A. (2012). Lexicalisation and de-lexicalisation processes in sign languages: comparing depicting constructions and viewpoint gestures. Lang. Commun. 32, 329–348. doi: 10.1016/j.langcom.2012.09.004

Crasborn, O., and Sloetjes, H. (2008). “Enhanced ELAN functionality for sign language corpora,” in 3rd Workshop on the Representation and Processing of Sign Languages: Signs and Exploitation of Sign Language Corpora (Marrakech, Morocco), 39–43.

Cuxac, C. (1999). “French sign language: proposition of a structural explanation by iconicity,” in International Gesture Workshop (Springer: Heidelberg), 165–184.

Cuxac, C. (2000). La Langue des Signes Française (LSF). Les voies de l'iconicité. Faits de langues (Ophrys, Paris: Evry): 15–16.

Dudis, P. (2004). Body partitioning and real-space blends. Cog. Ling. 15, 223–238. doi: 10.1515/cogl.2004.009

Dudis, P. (2008). “Types of depiction in ASL,” in Sign Languages: Spinning and Unraveling the Past, Present and Future. Proceedings of the 9th Theoretical Issues in Sign Language Research Conference, ed R. de Quadros (Florianopolis, Brazil: Editora Arara Azul, Petropolis), 159–190.

ELAN (2021). (Version 6.2) [Computer software]. Nijmegen: Max Planck Institute for Psycholinguistics, The Language Archive. Available online at: https://archive.mpi.nl/tla/elan (accessed December 16, 2022).

Ellis, R. (1987). Interlanguage variability in narrative discourse: style shifting and use of the past tense. Stud. Second Lang. Acquis. 9, 1–19. doi: 10.1017/S0272263100006483

Emmorey, K. (2003). Perspectives on Classifier Constructions. Mahwah, NJ: Lawrence Erlbaum Associates.

Engberg-Pedersen, E. (1993). Space in Danish Sign Language: The Semantics and Morphosyntax of the Use of Space in a Visual Language. Washington, DC: Gallaudet University Press.

Fauconnier, G. (2001). “Conceptual blending and analogy,” in The Analogical Mind: Perspectives from Cognitive Science, eds. D. Gentner, K. Holyoak, and B. Kokinov (Cambridge, MA: The MIT Press), 255–285.

Ferrara, L. (2012). The Grammar of Depiction: Exploring Gesture and Language in Australian Sign Language (Auslan) [dissertation] (Sydney: Macquarie University).

Ferrara, L., and Halvorsen, R. (2017). Depicting and describing meanings with iconic signs in Norwegian Sign Language. Gesture 16, 371–395. doi: 10.1075/gest.00001.fer

Ferrara, L., and Hodge, G. (2018). Language as description, indication, and depiction. Front. Psychol. 9, 716. doi: 10.3389/fpsyg.2018.00716

Ferrara, L., and Nilsson, A.-L. (2017). Describing spatial layouts as an L2M2 signed language learner. Sign Lang. Linguist. 20, 1–26. doi: 10.1075/sll.20.1.01fer

Fillmore, C. (1997). Lectures on Deixis. Stanford, CA: Center for the Study of Language and Information.

Foster, P., and Skehan, P. (1996). The influence of planning and task type on second language performance. Stud. Second Lang. Acquis. 18, 299–323. doi: 10.1017/S0272263100015047

Freleng, F. (2004). Looney Tunes: 28 Cartoon Classics [Cartoon; 2-disc premier edition; Canary Row is on disc 2]. Burbank, CA: Warner Bros. Entertainment Inc.

Frishberg, N. (1975). Arbitrariness and iconicity: historical change in American Sign Language. Language 51, 676–710. doi: 10.2307/412894

Goffman, E. (1974). Frame Analysis: An Essay on the Organization of Experience. Cambridge, MA: Harvard University Press.

Gulamani, S., Marshall, C., and Morgan, G. (2022). The challenges of viewpoint-taking when learning a sign language: data from the “frog story” in British Sign Language. Second Lang. Res. 38, 55–87. doi: 10.1177/0267658320906855

Gullberg, M. (2006). Some reasons for studying gesture and second language acquisition (Hommage à Adam Kendon). Int. Rev. Appl. Ling. Lang. Teach. 44, 103–124. doi: 10.1515/IRAL.2006.004

Halley, M. (2020). Rendering depiction: a case study of an American Sign Language/English interpreter. J. Interp. 28, 3. Available online at: https://digitalcommons.unf.edu/joi/vol28/iss2/3

Hill, J., Lillo-Martin, D., and Wood, S. (2018). Sign Languages: Structures and Contexts. Oxfordshire, UK: Routledge.

Janke, V., and Marshall, C. (2017). Using the hands to represent objects in space: gesture as a substrate for signed language acquisition. Front. Psychol. 8, 2007. doi: 10.3389/fpsyg.2017.02007

Kendon, A. (1986). Some reasons for studying gesture. Semiotica 62, 3–28. doi: 10.1515/semi.1986.62.1-2.3

Kendon, A. (2008). Some reflections on the relationship between “gesture” and “sign.” Gesture 8, 348–366. doi: 10.1075/gest.8.3.05ken

Liddell, S. (2003a). Grammar, Gesture, and Meaning in American Sign Language. Cambridge, UK: Cambridge University Press.

Liddell, S. (2003b). “Sources of meaning in ASL classifier predicates,” in Perspectives on Classifier Constructions in Sign Languages, ed K. Emmorey (Mahwah, NJ: Erlbaum), 199–220.

Lindert, R. B. (2001). Hearing Families with Deaf Children: Linguistic and Communicative Aspects of American Sign Language Development [dissertation] (Berkeley, CA: University of California).

Marshall, C., and Morgan, G. (2015). From gesture to sign language: conventionalization of classifier constructions by adult hearing learners of British Sign Language. Top. Cogn. Sci. 7, 61–80. doi: 10.1111/tops.12118

McKee, R., Safar, J., and Pivac Alexander, S. (2021). Form, frequency and sociolinguistic variation in depicting signs in New Zealand Sign Language. Lang. Commun. 79, 95–117. doi: 10.1016/j.langcom.2021.04.003

McNeill, D. (1992). Hand and Mind: What Gestures Reveal About Thought. Chicago, IL: University of Chicago Press.

McNeill, D. (2016). “Gesture as a window onto mind and brain, and the relationship to linguistic relativity and ontogenesis,” in Handbook on Body, Language, and Communication, eds. C. Müller, A. Cienki, E. Fricke, S. Ladewig, D. McNeill, and S. Teßendorf (Berlin: Mouton de Gruyter), 28–54.

Norbury, C., Gemmell, T., and Paul, R. (2014). Pragmatic abilities in narrative production: a cross-disorder comparison. J. Child Lang. 41, 485–510. doi: 10.1017/S030500091300007X

Occhino, C., and Wilcox, S. (2017). Gesture or sign? A categorization problem. Behav. Brain Sci. 40, 36–37. doi: 10.1017/S0140525X15003015

Ortega, G. (2013). Acquisition of a Signed Phonological System by Hearing Adults: The Role of Sign Structure and Iconicity (dissertation). London: University College London.

Ortega, G., and Morgan, G. (2015). Input processing at first exposure to a sign language. Second Lang. Res. 31, 443–463. doi: 10.1177/0267658315576822

Ortega, G., and Özyürek, A. (2020). Systematic mappings between semantic categories and types of iconic representations in the manual modality: a normed database of silent gesture. Behav. Res. Methods 52, 51–67. doi: 10.3758/s13428-019-01204-6

Ortega, G., Özyürek, A., and Peeters, D. (2020). Iconic gestures serve as manual cognates in hearing second language learners of a sign language: an ERP study. J. Experiment. Psychol. Learn. Memory Cogn. 46, 403–415. doi: 10.1037/xlm0000729

Ortega, G., Schiefner, A., and Özyürek, A. (2019). Hearing non-signers use their gestures to predict iconic form-meaning mappings at first exposure to signs. Cognition 191, 103996. doi: 10.1016/j.cognition.2019.06.008

Padden, C., Meir, I., Aronoff, M., and Sandler, W. (2010). “The grammar of space in two new sign languages,” in Sign Languages, ed. D. Brentari (Cambridge, UK: Cambridge University Press), 570–592.

Padden, C., Meir, I., Hwang, S.-O., Lepic, R., Seegers, S., and Sampson, T. (2013). Patterned iconicity in sign language lexicons. Gesture 13, 287–308. doi: 10.1075/gest.13.3.03pad

Peirce, C. (1994). The Collected Papers of Charles Sanders Peirce I-VIII. Cambridge, MA: Harvard University Press.

Quinto-Pozos, D. (2005). “Factors that influence the acquisition of ASL for interpreting students,” in Sign Language Interpreting and Interpreter Education: Directions for Research and Practice, eds. M. Marschark, R. Peterson, E. Winston, P. Sapere, C. Convertino, R. Seewagen, and C. Monikowski (Oxford, UK: Oxford University Press), 159–187.

Robinson, P. (1995). Task complexity and second language narrative discourse. Lang. Learn. 45, 99–140. doi: 10.1111/j.1467-1770.1995.tb00964.x

Rosen, R. (2020). “Introduction - pedagogy in sign language as first, second, and additional language,” in The Routledge Handbook of Sign Language Pedagogy, ed. R. Rosen (Oxfordshire, UK: Routledge), 1–14.

Rudser, S. (1986). Linguistic analysis of changes in interpreting: 1973-1985. Sign Lang. Stud. 53, 332–330. doi: 10.1353/sls.1986.0009

Schembri, A. (2001). Issues in the Analysis of Polycomponential Verbs in Australian Sign Language (Auslan) (dissertation). University of Sydney.

Schembri, A. (2003). “Rethinking classifiers in signed languages,” in Perspectives on Classifier Constructions in Sign Languages, ed. K. Emmorey (East Sussex, UK: Psychology Press), 3–34.

Schembri, A., Jones, C., and Burnham, D. (2005). Comparing action gestures and classifier verbs of motion: evidence from Australian Sign Language, Taiwan Sign Language, and nonsigners' gestures without speech. J. Deaf Stud. Deaf Educ. 10, 272–290. doi: 10.1093/deafed/eni029

Schick, B. (1987). The Acquisition of Classifier Predicates in American Sign Language (dissertation). West Lafayette, IN: Purdue University.

Schönström, K. (2021). Sign languages and second language acquisition research: an introduction. J. Eur. Second Lang. Assoc. 5, 30–43. doi: 10.22599/jesla.73

Singleton, J., Morford, J., and Goldin-Meadow, S. (1993). Once is not enough: standards of well-formedness in manual communication created over three different timespans. Language 69, 683–715. doi: 10.2307/416883

Skehan, P., and Foster, P. (1997). Task type and task processing conditions as influences on foreign language performance. Lang. Teach. Res. 1, 185–211. doi: 10.1177/136216889700100302

Skehan, P., and Foster, P. (1999). The influence of task structure and processing conditions on narrative retellings. Lang. Learn. 49, 93–120. doi: 10.1111/1467-9922.00071

Slobin, D., Hoiting, N., Kuntze, M., Lindert, R., Weinberg, A., Pyers, J., et al. (2003). “A cognitive/functional perspective on the acquisition of classifiers,” in Perspectives on Classifier Constructions in Sign Languages, ed. K. Emmorey (Oxfordshire, UK: Taylor and Francis), 271–298.

Supalla, T. (1986). “The classifier system in American Sign Language,” in Noun Classes and Categorization 7, ed. C. Craig (Amsterdam/Philadelphia: John Benjamins), 181–214.

Swain, M. (1985). “Communicative competence: some roles of comprehensible input and comprehensible output in its development,” in Input in Second Language Acquisition, eds. S. Gass and C. Madden (New York, NY: Newbury House), 235–253.

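Taub, S., Galvan, D., Pinar, P., and Mather, S. (2008). “Gesture and ASL L2 acquisition,” in Sign Languages: Spinning and Unraveling the Past, Present and Future. Proceedings of the 9th Theoretical Issues in Sign Language Research Conference, ed. R. de Quadros (Petrópolis: Editora Arara Azul).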

Thumann, M. (2010). Identifying Depiction in American Sign Language Presentations (dissertation). Washington, DC: Gallaudet University.

Thumann, M. (2013). Identifying recurring depiction in ASL presentations. Sign Lang. Stud. 13, 316–349. doi: 10.1353/sls.2013.0010

Valli, C., Lucas, C., Mulrooney, K., and Villanueva, M. (2011). Linguistics of American Sign Language: An Introduction. Washington, DC: Gallaudet University Press.

Wilcox, S., and Wilcox, P. (1997). Learning to See: Teaching American Sign Language as a Second Language. Washington, DC: Gallaudet University Press.

Keywords: depiction, sign language, second language acquisition, second modality, language learning

Citation: Kurz KB, Kartheiser G and Hauser PC (2023) Second language learning of depiction in a different modality: The case of sign language acquisition. Front. Commun. 7:896355. doi: 10.3389/fcomm.2022.896355

Received: 15 March 2022; Accepted: 08 December 2022;

Published: 09 January 2023.

Edited by:

Christian Rathmann, Humboldt University of Berlin, Germany

Reviewed by:

Eveline Boers-Visker, HU University of Applied Sciences Utrecht, Netherlands

Copyright © 2023 Kurz, Kartheiser and Hauser. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Kim B. Kurz, kbknss@rit.edu


Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
