PERSPECTIVE article

Front. Commun., 08 February 2022

Sec. Science and Environmental Communication

Volume 7 - 2022 | https://doi.org/10.3389/fcomm.2022.824682

This article is part of the Research Topic Helping Scientists to Communicate Well for All Considered: Strategic Science Communication in an Age of Environmental and Health Crises

The study of the misinformation and disinformation epidemics includes the use of disease terminology as an analogy in some cases, and the formal application of epidemiological principles in others. While these approaches have been effective in reframing how to prevent the spread of misinformation, they have less to say about other, more indirect means through which misinformation can be addressed in marginalized communities. In this perspective, we develop a conceptual model based on an epidemiology analogy that offers a new lens on science-driven community engagement. Rather than simulate the particulars of a given misinformation outbreak, our framework suggests how activities might be engineered as interventions to fit the specific needs of marginalized audiences, towards undermining the invasion and spread of misinformation. We discuss several communication activities (in the context of the COVID-19 pandemic and others) and offer suggestions for how practices can be better orchestrated to fit certain contexts. We emphasize the utility of our model for engaging communities distrustful of scientific institutions.

Over several decades, the science of information has effloresced into a sophisticated technical field, comprising scholars of the humanities, psychologists, communication theorists, computer scientists, and others. Exemplars include the study of how misinformation and disinformation can spread faster than truths (Vosoughi et al., 2018) or how “hashtag activism” manifests in social justice movements online (Jackson et al., 2020). More recently, perspectives from epidemiology have been applied to the general science of information contagion. The “misinformation as disease” analogy has grown into its own subfield, with public health experts suggesting practical, disease-oriented interventions (Scales et al., 2021). Studies in this area have estimated the basic reproductive number of misinformation campaigns (Cinelli et al., 2020), and even discussed ways to “immunize” populations against misinformation through pre-emptive campaigns (Maertens et al., 2021). At the very least, the infectious disease analogy highlights the seriousness of the misinformation crisis, and how information has features that allow it to spread through digital spaces. Moreover, these analogies have provided the language and methods to describe how misinformation spreads over networks of interconnected individuals, and to highlight the centrality of social media spaces (e.g., Facebook) as hubs.

That a science surrounding the contagiousness of information was already developing prior to the 2010s was critical, as the decade would present two global events (a worldwide neo-fascist movement and the COVID-19 pandemic) where the spread of misinformation would be central and carry dire consequences. For example, believing in COVID-19 conspiracy theories is predictive of a number of troublesome outcomes, including a higher chance of testing positive for COVID-19, job loss, reduced income, social rejection and decreased overall well-being (van Prooijen et al., 2021).

During the COVID-19 era, evidence-based social science has provided important insights into what makes misinformation contagious and pernicious, especially on social media (Ferrara et al., 2020; Krittanawong et al., 2020). What is clear from such work is an acute need to translate the study of science communication into practical means of stymieing the propagation of mis- and disinformation, as recently exemplified by the COVID-19 pandemic. Here, we develop a qualitative, schematic model of misinformation by analogy with the compartmental infection models classically used in epidemiology. We use this schematic to deconstruct the many routes through which mis- and disinformation can propagate in an ecosystem of individuals, and to show how science-driven community engagement can be appropriately used as an intervention.

This is especially applicable to marginalized and low-resource communities that are affected by structural violence or poor health outcomes, which can justify ongoing mistrust of scientific or medical information. For example, in the COVID-19 pandemic, communities of color in the United States had infection and mortality rates higher than those of whites for much of the American wing of the pandemic (CDC, 2019; Chowkwanyun and Reed, 2020; APM Research Lab Color of Coronavirus, 2021; Tai et al., 2021). These communities are examples of settings where nonspecific approaches to addressing misinformation are ineffective. Instead, the context that surrounds marginalized communities calls for very specific interventions.

In sum, the many forces that foster the spread of misinformation have created a status quo where scientists, journalists and public health officials must regularly compete with anti-science messaging for the dominant narrative surrounding scientific and health-related information. The model we propose helps to identify specific groups of people and the communication efforts that may be most effective for each. Further, it addresses a critical challenge of science communication: how to ensure that the programming we’ve designed addresses the specific needs of its intended audience.

For many decades, mathematical modeling efforts have been crucial for organizing available information, transforming the unknowns into testable hypotheses, and providing projections of how disease may progress under a set of assumptions (Lofgren et al., 2014; Cobey, 2020). Epidemiological modeling has been a centerpiece for thinking about contagion from actual diseases to supernatural contexts (França et al., 2013; Adams, 2014), or even in purely fantastical digital worlds (Lofgren and Fefferman, 2007).

The most widely used of these modeling efforts is the compartmental Susceptible (S)-Infected (I)-Recovered (R) framework. While it is based on simple differential equations, the S-I-R approach has been so successful because the model building process is abetted by a visual instrument, whereby the modeler can build mathematical relationships between the actors in a model based on a structured notation and logic. This method is widely taught in introductory coursework in epidemiology, dynamical systems, and even calculus.
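For readers unfamiliar with the framework, the classical S-I-R model is commonly written as three coupled differential equations. The form below is the standard textbook version, included only as background; the symbols β (transmission rate), γ (recovery rate), and N (total population size) are assumptions introduced here for illustration and play no role in the qualitative model developed in this perspective.

```latex
\begin{aligned}
\frac{dS}{dt} &= -\beta \frac{S I}{N}, \\
\frac{dI}{dt} &= \beta \frac{S I}{N} - \gamma I, \\
\frac{dR}{dt} &= \gamma I.
\end{aligned}
```

Each term corresponds to an arrow in the compartmental diagram: the term β S I / N leaves S and enters I, and the term γ I leaves I and enters R. The three right-hand sides sum to zero, so the total population S + I + R = N stays constant.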

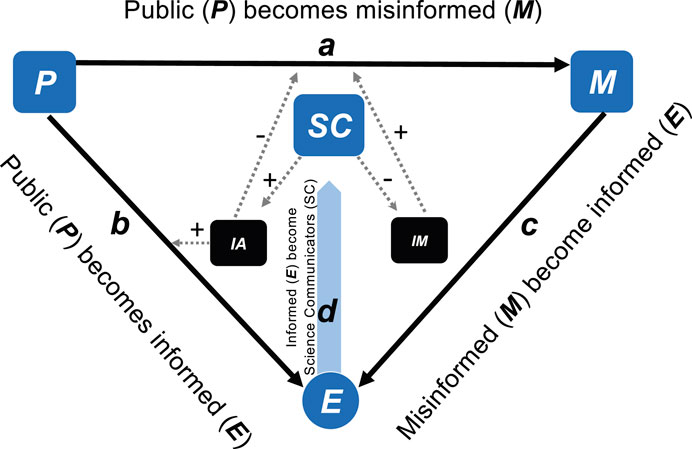

In this perspective, we use the structure of S-I-R models to build a non-mathematical analogy for a system where misinformation steers a population of individuals towards being misinformed. Our model, called the PME model (Figure 1; Public Misinformation and Education Model), uses original names for the individual compartments and uses the S-I-R framework as a guide to anchor a new qualitative model for considering how to build interventions based on the specific scope of a misinformation problem.

FIGURE 1. The PME Model. This is a simplified visual compartmental flow diagram of misinformation and disinformation. Solid arrows correspond to transitions between individuals. [P] corresponds to the public that is undecided with regards to scientific understanding surrounding a scientific phenomenon. [M] corresponds to those members who are misinformed. [E], those individuals who are informed and acting on accurate scientific information. Letters a – d correspond to different transition states. For more details, see Tables 1–3. Several dashed arrows describe places where a compartment influences another process or compartment. Positive (+) and negative (−) signs correspond to type of effect on the process.

Person-Compartments. Our model comprises two sets of populations, corresponding to people (Table 1) and information (Table 2). The people compartments include the broader population of individuals susceptible to conversion to a misinformed (M) or informed (E) status. The model also contains a population of science communicators (SC). These are individuals in society who are equipped with the scientific knowledge and tools to create accurate content and counteract misinformation. They can be professional scientists who, because of formal science communication training or related experience, regularly engage the public. Educators (at all levels) who teach members of the public in formal or informal settings also qualify.

Information-Compartments. The information compartments in our model correspond to the body of information that the individuals in the person-compartments interact with. The IM compartment is the body of misinformation that the public might become exposed to via social media and other sources. The IA compartment, by contrast, is the body of accurate information that comes from formal education (e.g., coursework), scientific engagement activities, and accurate social media memes, all of which are generated by science communicators (SC).

As demonstrated in Figure 1, the PME model coordinates these compartments into a dynamic system where information interacts with populations of individuals. Arrows correspond to different relationships and interactions between compartments. In Figure 1, [a] corresponds to the rate at which the public becomes misinformed. [b] corresponds to the rate at which the public becomes properly educated. [c] is the rate at which the misinformed become properly educated, and [d] is the rate at which the educated become science communicators (SC). Solid arrows (→) correspond to full transitions, that is, cases where members of one compartment move into another, as in the transition from the public to misinformed (M) or educated (E) at rate [a] or [b]. Dashed arrows, on the other hand, correspond to the relationship between a compartment and the rate at which one of the transitions (a-d) occurs. For example, science communicators (SC) produce accurate information (IA), remove misinformation (IM), increase the rate, [b], at which the public becomes educated (E), and decrease the rate, [a], at which the public becomes misinformed.
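The relationships just described can also be written down as a small data structure, which some readers may find easier to query than a diagram. The Python sketch below is illustrative only: the PME model is qualitative and no code accompanies it, so the names `Transition`, `Influence`, `outgoing`, and `influences_on` are hypothetical. It encodes the four solid-arrow transitions [a]-[d] and only the four science-communicator influences stated explicitly in the text; any other dashed arrows in Figure 1 are omitted.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Transition:
    """A solid arrow: people move from one compartment to another."""
    label: str
    source: str
    target: str


@dataclass(frozen=True)
class Influence:
    """A dashed arrow: a compartment raises (+) or lowers (-) a target.

    The target is either an information compartment ("IA", "IM")
    or the label of a transition ("a" to "d").
    """
    source: str
    target: str
    sign: str


# Solid arrows, as described in the text.
TRANSITIONS = [
    Transition("a", "P", "M"),   # public becomes misinformed
    Transition("b", "P", "E"),   # public becomes educated
    Transition("c", "M", "E"),   # misinformed become educated
    Transition("d", "E", "SC"),  # educated become science communicators
]

# Dashed arrows: only the SC influences named explicitly in the text.
INFLUENCES = [
    Influence("SC", "IA", "+"),  # SC produce accurate information
    Influence("SC", "IM", "-"),  # SC remove misinformation
    Influence("SC", "b", "+"),   # SC increase the rate of education
    Influence("SC", "a", "-"),   # SC decrease the rate of misinformation
]


def outgoing(compartment):
    """Labels of the transitions that leave a person-compartment."""
    return [t.label for t in TRANSITIONS if t.source == compartment]


def influences_on(target):
    """(source, sign) pairs for every dashed arrow pointing at target."""
    return [(i.source, i.sign) for i in INFLUENCES if i.target == target]


if __name__ == "__main__":
    print(outgoing("P"))
    print(influences_on("a"))
```

Calling `outgoing("P")` lists the two routes out of the undecided public, and `influences_on("a")` shows which compartments act on the rate of becoming misinformed. Keeping transitions and influences in separate lists mirrors the distinction between solid and dashed arrows in the figure.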

The PME model comes with several features that may foster a new perspective on the modern problem of misinformation in vulnerable and marginalized communities. As observed in Table 4, interventions can be engineered that address certain transition points of the model. Below we outline these transition stages, discuss the types of suitable intervention, and how they interact with the ecosystem outlined.

As has been measured and documented in many studies, social media has emerged as a major instrument in the propagation of misinformation, across various paradigms, for over a decade. Further, conspiracists have actively exploited COVID-19 science for manipulative purposes (Jamieson, 2021). This played a particular role in the spread of misinformation and disinformation, especially with regard to the COVID-19 vaccine (Loomba et al., 2021). For example, in the United Kingdom, 5G masts were set on fire based on misinformation linking 5G to COVID-19, a theory that was trending on Twitter under the #5GCoronavirus hashtag (Ahmed et al., 2020). This is but one of the many types of misinformation campaigns that have helped to undermine the effectiveness of good public health practices.

Tools for preparing the public to engage misinformation. Pre-bunking is based on the idea of “psychological inoculation,” whereby an individual learns how to identify misinformation tropes, which would decrease susceptibility to misinformation (Maertens et al., 2021). It is based on the idea that misinformation often has a fundamental structure, and knowledge of this structure may aid our quest to lower its contagiousness (Douglas et al., 2019). A number of tools and content have been created to help people identify their own vulnerabilities and the weaknesses of media and also improve individual evaluation of quality of information. These include courses aimed at spotting misinformation (Breakstone et al., 2021) and games (Basol et al., 2020).

Just as social media has been weaponized for misinformation, the powers of social media are also being put to use by educators, scientists, physicians and public health experts in innovative ways to educate people on science, thus helping the public become informed. For example, during the COVID-19 pandemic, multiple social media platforms, including Instagram and TikTok, were used to create and share content related to the biology and evolution of SARS-CoV-2 (the virus that causes COVID-19), the messaging behind non-pharmaceutical interventions, and pharmaceutical interventions like vaccines. This social media content increased the [IA] pool, corresponding to the availability of quality information. Ideally this information (and its sources) is reliable, trustworthy, factual, multilingual, targeted, clear, and science-based. In addition, there is a growing literature on the utility of podcasts as a mechanism for science outreach and education, an additional means through which the public can be properly informed (Birch and Weitkamp, 2010; Hu, 2016).

Targeted practice. In addition, live social media sessions can be effective, where scientists can engage with the public and help them navigate health decision-making processes in an empathetic manner by answering questions. For example, during the early stages of the pandemic, Black physicians and scientists gathered on Clubhouse (a voice-only social media application) to interact with the public and provide accurate information (Turton, 2021). That Black healthcare workers and scientists led the effort was critical, as they were answering a specific call to engage members of the Black community who were curious or distrustful. This demonstrates the type of targeted intervention, using context-specific tools, that is necessary to generate an educated public (E). In general, these efforts highlight the need for culturally-sensitive and inclusive science communication (Manzini, 2003; Canfield and Menezes, 2020), especially for neglected communities (Wilkinson, 2021).

STEM education activities to improve science literacy. As science literacy is largely the responsibility of public education systems, an individual’s or a community’s understanding of basic scientific facts and the scientific process more broadly are closely linked to the level of formal education received (Trapani and Hale, 2019; Besley and Hill, 2020). Thankfully, the STEM education paradigm has begun to develop original and provocative education curricula that tackle complex topics such as molecular evolution, using laboratory-based methods. These courses have had a demonstrated positive effect on how students perform on assessments targeted to Next Generation Science Standards (Cooper et al., 2019).

Any communicator who has firsthand experience addressing this transition (including the authors of this perspective) will testify to its difficulty. In general, it cannot occur until the misinformed individual is prepared to embrace new information. At the level of the community, this challenge is amplified. In this scenario, a vulnerable community is identified, and programming is engineered to fit the needs of that community. Grassroots efforts are often the best examples of this and involve the recognition that faith leaders are influential in some communities (Abara et al., 2015; Santibañez et al., 2017). In marginalized communities, science communication requires empathy and acknowledgment of why communities may distrust scientific institutions.

Practitioners of science communication collaborating with knowledgeable and trusted members in minoritized communities, who curate spaces for discussions, have several goals: to uncover how distrust pervades and impacts a community, while simultaneously addressing misinformation and defusing hesitation among members of the community. This creates an ideal ecosystem for the delivery of accurate information which aims to result in behavior change.

Relatedly, communication efforts with minoritized communities need to be carefully tailored to incorporate politics, history and how these factors interact to affect these communities’ engagement with science. Practitioners must embrace the complexity inherent in these spaces by expressing humility, asking respectful questions, and acknowledging the valid concerns of the community (i.e., openly recognizing the historical oppression, discrimination and inequities that contribute to mistrust in science and authorities). Note that many of these ideas are similar to the tasks associated with the P → E transition discussed above.

There are myriad examples of science communication where the cultural sensitivity of interventions has been critical to the effectiveness of messaging. For example, the 2014 Ebola virus epidemic, which affected several countries in West Africa, provided a scenario from which lessons can be learned about effective science communication that results in behavior change. In this scenario, practitioners tapped into the folklore and indigenous communication practices of the region’s communities, specifically their rich heritage of traditional modes of community engagement. One such mode was partnering with Griots (West African troubadours, storytellers, historians, poets, praise singers and musicians). These figures used story and music to communicate key scientific and public health messages to communities. This proved an effective platform through which science communication and public engagement could engender the trust and buy-in of local communities, which resulted in behavioral change that had a positive impact on the containment of Ebola (Deffor, 2019).

Such approaches illustrate the transformative power of language, culture, and Indigenous knowledge in attempts to communicate in settings that are potentially rife with misinformation. They also emphasize the benefit of culturally assertive approaches and practices which build on and harness the values of communities in question (Canfield and Menezes, 2020; Finlay et al., 2021; Wilkinson, 2021).

There is a dire need to increase the pool of scientists and healthcare professionals who are properly trained to communicate complex science matters in a simple format to the public. The challenge in training science communicators is in the fact that science communication (or “Sci-Comm”) is a multifaceted skill, involving:

• Ability to gain the public’s trust and be relatable

• Ability to explain complicated concepts using simple language

• Ability to build on current expertise, while not speaking on matters too far outside of one’s area of expertise

Impactful science communicators often make use of the power of combined visuals and storytelling to improve the effectiveness of their messaging, improving recall, enhancing understanding and increasing engagement with content. These attributes can be especially beneficial for communities with low health or scientific literacy. That is, marginalized communities, like those that have suffered disproportionately from the COVID-19 pandemic, need effective science communicators.

Because of the many skills necessary to be an effective science communicator, training them can be challenging. It is a skillset that is rarely taught at any level of education, nor directly emphasized in scientific training. Additionally, many science communicators attribute their growth in the craft to being self-taught or learning by practice. Thankfully, there are several new initiatives that are aimed at improving the ability of scientists to communicate with the public. For example, a newer curriculum aimed at teaching science graduate students to write across different genres has been effective in improving writing ability by strengthening students’ ability to gauge audience and other important dimensions of science communication (Harrington et al., 2021). This is just one example of the many initiatives that fall under the umbrella effort to train professionals in science communication (Silva and Bultitude, 2009; Besley and Tanner, 2011). Though this perspective hasn’t focused on journalists, they are critical actors in how information propagates, and many modern efforts in training professionals to communicate science with the public have focused on journalists specifically (Menezes, 2018; Smith et al., 2018).

More broadly, we argue that the amplification of refined education programs that transform science practitioners into communicators is an underappreciated means through which one can intervene in the spread of misinformation. The PME model highlights how the science communicator compartment affects the dynamics of the system in multiple ways. Science communicators produce accurate information that is digestible to the public [IA] and help to debunk inaccurate or misleading information [IM].

In this perspective, we offer a conceptual model that adds further depth to epidemiological analogies for the spread of misinformation. We offer that the existing models, while effective for a more general dialogue around preventing the spread of misinformation, have undervalued the context-specificity of the misinformation ecology. We offer a new qualitative model, based on epidemiological principles, but engineered around a nuanced understanding of the specific transitions in the spread of misinformation, which reveals the many indirect ways that one can intervene. Importantly, our model highlights the role of grassroots interventions, and the importance of programs that train science communicators. Furthermore, our model also reveals the specific place for “pre-bunking” and innovative STEM education approaches.

More important than any single intervention, we propose that “one size fits all” approaches are ineffective, and that interventions should be tailored to the individual needs of settings, with targeted goals in mind. This will require that the individual doing the communicating have intimate knowledge of the setting in which they operate. For example, approaching a group of individuals who are already deeply embedded in the misinformation ecosystem with classical STEM education tools will be a waste of effort. Similarly, an aggressive or persuasively pro-science message may not be necessary for those who simply want to understand the basics or have earnest questions about how complex phenomena work. It is our hope that our framework aids these efforts towards more nuanced and targeted messaging, that can undermine the process through which the public becomes misinformed.

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author.

Both authors conceived the idea, performed the analysis, and wrote the manuscript.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Abara, W., Coleman, J. D., Fairchild, A., Gaddist, B., and White, J. (2015). A faith-based Community Partnership to Address HIV/AIDS in the Southern United States: Implementation, Challenges, and Lessons Learned. J. religion Health 54, 122–133. doi:10.1007/s10943-013-9789-8

Ahmed, W., Vidal-Alaball, J., Downing, J., and López Seguí, F. (2020). COVID-19 and the 5G Conspiracy Theory: Social Network Analysis of Twitter Data. J. Med. Internet Res. 22, e19458. doi:10.2196/19458

APM Research Lab Color of Coronavirus (2021). COVID-19 Deaths Analyzed by Race and Ethnicity. Available at: https://www.apmresearchlab.org/covid/deaths-by-race (Accessed November 28, 2021).

Basol, M., Roozenbeek, J., and van der Linden, S. (2020). Good News about Bad News: Gamified Inoculation Boosts Confidence and Cognitive Immunity Against Fake News. J. Cogn. 3, 2. doi:10.5334/joc.91

Besley, J. C., and Hill, D. (2020). Science and Technology: Public Attitudes, Knowledge, and Interest. Science and Engineering Indicators 2020. NSB-2020-7. Alexandria: National Science Foundation.

Besley, J. C., and Tanner, A. H. (2011). What Science Communication Scholars Think about Training Scientists to Communicate. Sci. Commun. 33, 239–263. doi:10.1177/1075547010386972

Birch, H., and Weitkamp, E. (2010). Podologues: Conversations Created by Science Podcasts. New Media Soc. 12, 889–909. doi:10.1177/1461444809356333

Breakstone, J., Smith, M., Connors, P., Ortega, T., Kerr, D., and Wineburg, S. (2021). Lateral reading: College Students Learn to Critically Evaluate Internet Sources in an Online Course. Cambridge, MA: The Harvard Kennedy School.

Canfield, K., and Menezes, S. (2020). The State of Inclusive Science Communication: A Landscape Study. Kingston: Metcalf Institute, University of Rhode Island.

CDC (2019). COVID-19 Provisional Counts - Health Disparities. Available at: https://www.cdc.gov/nchs/nvss/vsrr/covid19/health_disparities.htm (Accessed November 28, 2021).

Chowkwanyun, M., and Reed, A. L. (2020). Racial Health Disparities and Covid-19 - Caution and Context. N. Engl. J. Med. 383, 201–203. doi:10.1056/nejmp2012910

Cinelli, M., Quattrociocchi, W., Galeazzi, A., Valensise, C. M., Brugnoli, E., Schmidt, A. L., et al. (2020). The COVID-19 Social Media Infodemic. Sci. Rep. 10, 16598. doi:10.1038/s41598-020-73510-5

Cobey, S. (2020). Modeling Infectious Disease Dynamics. Science 368, 713–714. doi:10.1126/science.abb5659

Cooper, V. S., Warren, T. M., Matela, A. M., Handwork, M., and Scarponi, S. (2019). EvolvingSTEM: a Microbial Evolution-In-Action Curriculum that Enhances Learning of Evolutionary Biology and Biotechnology. Evolution (N Y) 12, 12–10. doi:10.1186/s12052-019-0103-4

Deffor, S. (2019). “Ebola and the Reimagining of Health Communication in Liberia,” in Socio-cultural Dimensions of Emerging Infectious Diseases in Africa (Berlin: Springer), 109–121. doi:10.1007/978-3-030-17474-3_8

Douglas, K. M., Uscinski, J. E., Sutton, R. M., Cichocka, A., Nefes, T., Ang, C. S., et al. (2019). Understanding Conspiracy Theories. Polit. Psychol. 40, 3–35. doi:10.1111/pops.12568

Ferrara, E., Cresci, S., and Luceri, L. (2020). Misinformation, Manipulation, and Abuse on Social Media in the Era of COVID-19. J. Comput. Soc. Sc 3, 271–277. doi:10.1007/s42001-020-00094-5

Finlay, S. M., Raman, S., Rasekoala, E., Mignan, V., Dawson, E., Neeley, L., et al. (2021). From the Margins to the Mainstream: Deconstructing Science Communication as a white, Western Paradigm. J. Sci. Commun. 20, C02. doi:10.22323/2.20010302

França, U., Michel, G., Ogbunu, C. B., and Robinson, S. (2013). World War III or World War Z? the Complex Dynamics of Doom. Cambridge MA: New England Complex Systems Institute.

Harrington, E. R., Lofgren, I. E., Gottschalk Druschke, C., Karraker, N. E., Reynolds, N., and McWilliams, S. R. (2021). Training Graduate Students in Multiple Genres of Public and Academic Science Writing: An Assessment Using an Adaptable, Interdisciplinary Rubric. Front. Environ. Sci. 9, 715409. doi:10.3389/fenvs.2021.715409

Hu, J. C. (2016). Scientists Ride the Podcasting Wave. Science 11, 2016. doi:10.1126/science.caredit.a1600152

Jackson, S. J., Bailey, M., and Welles, B. F. (2020). #HashtagActivism: Networks of Race and Gender Justice. Cambridge: MIT Press.

Jamieson, K. H. (2021). How Conspiracists Exploited COVID-19 Science. Nat. Hum. Behav. 5, 1464–1465. doi:10.1038/s41562-021-01217-2

Krittanawong, C., Narasimhan, B., Virk, H. U. H., Narasimhan, H., Hahn, J., Wang, Z., et al. (2020). Misinformation Dissemination in Twitter in the COVID-19 Era. Am. J. Med. 133, 1367–1369. doi:10.1016/j.amjmed.2020.07.012

Lofgren, E. T., and Fefferman, N. H. (2007). The Untapped Potential of Virtual Game Worlds to Shed Light on Real World Epidemics. Lancet Infect. Dis. 7, 625–629. doi:10.1016/s1473-3099(07)70212-8

Lofgren, E. T., Halloran, M. E., Rivers, C. M., Drake, J. M., Porco, T. C., Lewis, B., et al. (2014). Opinion: Mathematical Models: A Key Tool for Outbreak Response. Proc. Natl. Acad. Sci. USA 111, 18095–18096. doi:10.1073/pnas.1421551111

Loomba, S., de Figueiredo, A., Piatek, S. J., de Graaf, K., and Larson, H. J. (2021). Measuring the Impact of COVID-19 Vaccine Misinformation on Vaccination Intent in the UK and USA. Nat. Hum. Behav. 5, 337–348. doi:10.1038/s41562-021-01056-1

Maertens, R., Roozenbeek, J., Basol, M., and van der Linden, S. (2021). Long-term Effectiveness of Inoculation Against Misinformation: Three Longitudinal Experiments. J. Exp. Psychol. Appl. 27, 1–16. doi:10.1037/xap0000315

Manzini, S. (2003). Effective Communication of Science in a Culturally Diverse Society. Sci. Commun. 25, 191–197. doi:10.1177/1075547003259432

Menezes, S. (2018). Science Training for Journalists: An Essential Tool in the post-specialist Era of Journalism. Front. Commun. 3, 4. doi:10.3389/fcomm.2018.00004

Norton, K. (2020). Inaugural “Black in X” Weeks foster Inclusivity and Empowerment in STEM. Available at: https://www.pbs.org/wgbh/nova/article/inaugural-black-x-weeks-foster-inclusivity-and-empowerment-stem/.

Santibañez, S., Lynch, J., Paye, Y. P., McCalla, H., Gaines, J., Konkel, K., et al. (2017). Engaging Community and Faith-based Organizations in the Zika Response, United States, 2016. Public Health Rep. 132, 436–442. doi:10.1177/0033354917710212

Scales, D., Gorman, J., and Jamieson, K. H. (2021). The Covid-19 Infodemic—Applying the Epidemiologic Model to Counter Misinformation. New Engl. J. Med. 385, 678–681. doi:10.1056/NEJMp2103798

Silva, J., and Bultitude, K. (2009). Best Practice in Communications Training for Public Engagement with Science, Technology, Engineering and Mathematics. Jcom 08, A03. doi:10.22323/2.08020203

Smith, H., Menezes, S., and Gilbert, C. (2018). Science Training and Environmental Journalism Today: Effects of Science Journalism Training for Midcareer Professionals. Appl. Environ. Edu. Commun. 17, 161–173. doi:10.1080/1533015x.2017.1388197

Tai, D. B. G., Shah, A., Doubeni, C. A., Sia, I. G., and Wieland, M. L. (2021). The Disproportionate Impact of COVID-19 on Racial and Ethnic Minorities in the United States. Clin. Infect. Dis. 72, 703–706. doi:10.1093/cid/ciaa815

Trapani, J., and Hale, K. (2019). Higher Education in Science and Engineering. Science & Engineering Indicators 2020. NSB-2019-7. Alexandria: National Science Foundation.

Turton, W. (2021). Black Doctors Work Overtime to Combat Clubhouse Covid Myths. Available at: https://www.bloomberg.com/news/articles/2021-02-11/black-doctors-work-overtime-to-combat-clubhouse-covid-19-myths (Accessed November 24, 2021).

van Prooijen, J.-W., Etienne, T. W., Kutiyski, Y., and Krouwel, A. P. M. (2021). Conspiracy Beliefs Prospectively Predict Health Behavior and Well-Being during a Pandemic. Psychol. Med., 1–25. doi:10.1017/S0033291721004438

Vosoughi, S., Roy, D., and Aral, S. (2018). The Spread of True and False News Online. Science 359, 1146–1151. doi:10.1126/science.aap9559

Keywords: misinformation, community engagement, epidemiology, COVID-19, science communication

Citation: Osman A and Ogbunugafor CB (2022) An Epidemic Analogy Highlights the Importance of Targeted Community Engagement in Spaces Susceptible to Misinformation. Front. Commun. 7:824682. doi: 10.3389/fcomm.2022.824682

Received: 29 November 2021; Accepted: 04 January 2022;

Published: 08 February 2022.

Edited by:

Ingrid Lofgren, University of Rhode Island, United States

Reviewed by:

Douglas Ashwell, Massey University Business School, New Zealand

Copyright © 2022 Osman and Ogbunugafor. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: C. Brandon Ogbunugafor, brandon.ogbunu@yale.edu
