- ¹LSFB-Lab, NaLTT, University of Namur, Namur, Belgium

- ²Department of Interpretation and Translation, Gallaudet University, Washington, DC, United States

Sign language linguistics has largely focused on the lexical, phonological, and morpho-syntactic structures of sign languages, leaving the facets of interaction overlooked. One reason for the focus on smaller units in the early stages of the field was a pressing concern to establish sign languages as fully linguistic systems. The interactive domain has been sidestepped in gesture studies, too, where one dominant approach has been rooted in psycholinguistic models arguing that gesture is tightly related to speech as part of language. While these approaches to analyzing sign and gesture have been fruitful, they can lead to a view of language abstracted from its natural habitat: face-to-face interaction. Such an understanding of how language manifests itself, one that takes the conversational exchange for granted, cannot account for the interactional practices deployed by deaf and hearing individuals within and across various ecological niches. This paper reviews linguistic research on spoken and sign languages and their approaches to gesture, which have tended to posit a divide between the linguistic and the non-linguistic and between sign and gesture. Rather than opposing the two, this paper argues that gesture and sign are intimately intertwined, both intra- and inter-personally. To ground this claim, we bring evidence from four languages across modalities (signed and spoken), namely American Sign Language (ASL) and American English, and French Belgian Sign Language (LSFB) and Belgian French, to offer a view of language as situated, dynamic and multimodal. Based on qualitative corpus analyses of signers’ and speakers’ face-to-face interactional discourse involving two communicative actions, viz. palm-up and index pointing, we show how deaf ASL and LSFB signers and hearing American English and Belgian French speakers mobilize their hands to contribute continuously both to linguistic meaning and to the management of their social actions with addressees.
Ultimately, exploring the interactional synergies arising within and across different communicative ecologies enables scholars in gesture and sign language research to gain a better understanding of the social, heterogeneous nature of language and of what it means for spoken and sign languages to be embodied languages.

1 Introduction

One of the essential questions in linguistics is how individuals, within and across diverse ecological niches, communicate with each other (Ferrara and Hodge, 2018). Historically, however, linguistics has addressed this question through a particular lens, derived primarily from the investigation of Indo-European (spoken) languages treated as speech or text alone (Vigliocco et al., 2014; Perniss, 2018). Furthermore, these traditional linguistic theories were “mostly occupied with aspects of language that denote[d] things arbitrarily and categorically” (Özyürek and Woll, 2019, p. 68), a view inherited from the duality of Saussure’s signe. In turn, this conception has strongly shaped the field of linguistics, where the empirical emphasis has been placed on certain structures of language, such as the lexicon and morphosyntax, while others, mainly connected with interactional-pragmatic aspects of language use, have been backgrounded.

Although these traditional linguistic views have been fruitful in producing knowledge about the building blocks of individual utterances, some scholars’ perspective on what constitutes language and how it works in human communication has expanded tremendously since these initial conceptions. Researchers in gesture studies (Kendon, 2008; Müller, 2018) and sign language (hereafter, SL) linguistics (e.g., Ferrara and Hodge, 2018; Perniss, 2018) have recognized that these theories tend to depict a narrow picture of language and its properties because they offer “a distilled abstraction of how language really manifests” (Murgiano et al., 2020, p. 3) in a given communicative context.

In fact, the arena where humans’ language ability manifests itself in the here and now is deeply rooted in human sociality (Holler and Levinson, 2019), which directly converges with what Schegloff (1996) once called the home habitat of language itself, that is, face-to-face interaction. After all, it is in this context that the human ability for language has emerged phylogenetically, is learnt by children, and is mostly used in everyday conversations (Perniss, 2018). When communicating face-to-face, people draw on a wide range of interwoven multi-semiotic practices to create and interpret meaningful composite utterances (Enfield, 2009).

Shifting the construal of language from a structured, symbolic system to an inherently multimodal and situated social practice (Ferrara and Hodge, 2018; Murgiano et al., 2020) requires the analyst to account for the semiotic moves of the entire body as it is situated in the physical environment (Streeck, 2009; Mondada, 2019). As such, the body is reconceived as a locus of meaning making, where all sorts of visible bodily actions (Kendon, 2004) are available for interpretation by speakers and signers. These embodied visible units of meaning, referred to as gestures, are, as we shall argue, deeply integrated with language, whether in its signed or spoken manifestation. In this paper, we follow Andrén’s (2014) framework of “the upper and lower limit of gesture” (p. 153), which considers any visible bodily action as potentially communicative in the context of its production, remaining a visibly recognizable resource available at all times for interpretation, even if it is not “necessarily intentional” (Bolly and Boutet, 2018, p. 2).

But how the body (specifically, the manual and non-manual articulators) of language users is implicated in spoken and signed interaction has not yet reached consensus in the SL linguistics and gesture communities. The perceptible differences between gestural phenomena and the signing stream have influenced how scholars treat visual imagery in SLs. As it stands, there are two competing views of gesture, one that affiliates gesture with sign and another that differentiates gesture from language, but these views, as we argue here, need not be mutually exclusive.

At the crossroads of gesture and sign, this paper offers unique insights into language in interaction across modalities, insights that we believe have far-reaching ramifications not only for how we describe gestural instantiations in signed and spoken discourse, but for language theory itself. This broader view of language pushes us to rethink the traditional dichotomy between what is linguistic and non-linguistic, what is part of language and what is viewed as outside of it (Kendon, 2008, 2014). In the same vein, it forces us to put into perspective what is considered a foundational property of language and what is considered marginal “or even a negligible attribute” (Murgiano et al., 2020, p. 4). Ultimately, such an approach offers a more thorough understanding of the rich heterogeneity of the human language ability.

In Section 2, we first review research on gesture from a cognitive and psycholinguistic perspective (2.1) and discuss its main limitations for analyzing gestural phenomena. Next, we consider the other side of the coin, language as a multimodal, situated social practice (2.2), by presenting an overview of the interactional meanings of gesture in signers’ and speakers’ conversations, to further establish that language does not only emerge inside people’s minds but also shapes and is shaped by people’s activities in interaction. After describing the data in Section 3, we bring together evidence from four languages across modalities, namely ASL/American English and LSFB/Belgian French (Section 4), to show how signers and speakers use gestural phenomena to create meaningful utterances and regulate the flow of their conversational exchanges. Lastly, Section 5 discusses the implications of applying this kind of approach to language.

2 Setting the Stage for Gestural Instantiations in Signing and in Speaking

2.1 Gesture in Cognitive-Psychological Accounts

The ways gestural aspects have received attention in SLs have been influenced by models inherited from the prevailing structuralist approach to language, primarily devoted to the linguistic analysis of spoken language (SpL, hereafter) structures (see Kendon, 2008 for a thorough discussion of the influence of Saussurean and Chomskyan approaches to language on (SL) linguistics). Other developments, however, have reinforced the barrier between gesture and sign, notably a strongly advocated model, emerging in the latter half of the 20th century, that situates gesture within a cognitive-psychological view of the term.

These accounts of gesture in signing are found in earlier works on the subject (Emmorey, 1999; Schembri et al., 2005; Sandler, 2009) as well as in more recent work (Goldin-Meadow and Brentari, 2017), where gesture is defined in terms of a psychologically driven model of language. Following McNeill (1992), they argue for a binary separation between what is encoded in the sign (for SLs) as discrete and categorical and the more gradient aspects that they consider paralinguistic.

McNeill’s (1992, 2005) ideas about gesture are primarily concerned with the microgenesis of utterance formation, the ‘Growth Point’, as it unfolds in a speaker’s mind; gestural production in situ, then, acts as a window into the mind. In particular, McNeill’s interest lies in gesticulation (McNeill, 1992), which includes the most idiosyncratic units depicting imagery, the deictic and the iconic forms of language. These forms are treated as unique to SpL, since their very definition requires co-expression with speech. SLs are conceived of as standing in direct opposition to gesticulation, leaving them outside the bounds of human communication. McNeill’s aim to account for gesture in a single theoretical system, one that sees “the whole person as a theoretical entity” (1992, p. 11), leaves out deaf signing people. While it is indisputable that (parts of) SLs bear resemblance to conventionalized gestures (i.e., emblems), we still cannot claim that SL users integrate gradient, imagistic expressions with conventionalized forms differently than SpL users do when the fundamental question is one of definition (i.e., which body movements are considered ‘gesture’ or ‘sign’). Rather than emphasizing continuities, as it was presumably intended to do, this continuum, which places gesticulation and SLs at opposite ends, has exacerbated categorical differences between the bodily actions of hearing speakers and deaf signers. If ‘gesture’ is a theoretical notion capturing the fact that humans combine imagistic, idiosyncratic aspects of thought with linguistic expressions, then it should follow that deaf signers, too, have similar mental constructs. Their outward expressions (viz. signing) should also be analyzable within the same framework; otherwise the framework is flawed (Shaw, 2019).
We see this shortcoming as an unintentional byproduct of approaching gesture solely from an “inside looking out” perspective (McNeill, 2018), deeply rooted in the inner cognitive and psychological functioning of the human mind (McNeill, 1992, 2005), in which gesture is distanced from the environment in which it ordinarily unfolds and from those to whom it is addressed, that is, from language as used by speakers and signers with co-present addressees.

McNeill’s approach to SLs influenced early treatments of gesture in SL. Emmorey’s (1999) study of ASL narratives asks whether signers also gesture. Applying the principle of co-expression with speech as the litmus test, she finds that signers do not produce spontaneous, idiosyncratic manual gestures simultaneously with signing in the way speakers produce gestures concurrently with speech. Rather, signers might interject manual gestures between conventionalized signs or gesture with one hand while signing with the other. Emmorey’s study thus reveals the shortcomings of applying the McNeillian sense of gesture to SLs: defining gesture as gesticulation implies that only spontaneous, idiosyncratic (manual) gestures capable of revealing the imagistic nature of thought count as gesture, and these are by definition unique to SpLs. As we examine further below, such a position impedes the full treatment of visible bodily actions in both SpLs and SLs.

Another example of a McNeillian view of gesture in sign is found in Sandler’s (2009) work on Israeli Sign Language (ISL). In ISL, signers use their mouth to express the gestural aspects of certain parts of discourse, and these mouth actions are analogous to the representational hand gestures found in speakers’ discourse. Sandler suggests that the gestural content expressed by the mouth co-occurs with the linguistic content conveyed by the hands, just as in speakers’ use of co-speech gestures, but in reverse. Gesture, here, is still situated outside of language, as reflected in Goldin-Meadow and Brentari’s description of Sandler’s (2009) study: these mouth gestures are seen “to embellish [emphasis added] the linguistic descriptions they g[i]ve with their hands” (2017, p. 12). What the mouth reveals here is the imagistic, instantaneous and idiosyncratic aspect of gesture usually attributed to the hands in SpLs. Moreover, Goldin-Meadow and Brentari’s (2017) wording portrays mouth gestures as purely paralinguistic elements that provide ancillary support, as if they were ornaments that merely assist the hands. Such a view reduces the scope of gesture’s role and position in SL discourse.

Some scholars have put forth alternative interpretations of other ways that signers ordinarily express themselves, shedding light on possible modality-independent characteristics, including the use of space in pronouns and indicating verbs (Liddell, 2003) and depicting constructions (Schembri et al., 2005). The latter raised questions about whether these constructions could be analyzed as mixed forms, incorporating both linguistic and gestural elements. Schembri et al. (2005) explored the use of classifier constructions for expressing motion events in three historically unrelated SLs, namely ASL, Taiwan Sign Language (TSL), and Australian Sign Language (Auslan), and compared them with the gestures produced by hearing English-speaking non-signers describing the same motion events. An important detail of their study design is that the speakers were constrained to using their hands without speaking, which affects the kinds of gestures produced.

Analyzing handshape, motion, and place of articulation, the authors found not only that signers of all three unrelated languages used motion and place of articulation in a similar fashion, but also that the silent gestures of the hearing non-signers patterned the same way. In contrast, handshapes were similar among signers of the same SL but differed across SLs and from the silent gestures. In other words, hearing speakers, when prevented from speaking, produced gestures that resembled signers’ signs in motion and location, but not in handshape. Thus, while motion and location displayed systematicity across groups in the description of events, handshape varied across SLs and between signers and non-signers. According to the authors, their findings add evidence for the status of classifier constructions as “blends of gestural and linguistic elements” (p. 287), which concurs with other results (e.g., Liddell’s (2003) study, reporting that classifier handshapes are categorical, i.e., linguistic, in nature).

Resulting from these methodological designs and theoretical paradigms, only a limited set of discourse features is considered eligible as gestural instantiations in signing, “mainly depicting constructions, constructed action, and referential use of space” (Shaw, 2019, p. 4). This view overlooks the range of other visible bodily actions that deaf people employ in their discourse, including interactive forms. The same holds true for the study of gesture in relation to speech, where only the most imagistic side of gesture is considered gesture proper.

The hybridity of embodied expressions is enticing as an analytic starting point. These studies nonetheless have a few shortcomings that we consider here. First, the studies conducted under these research paradigms mainly draw on data collected under experimental conditions, with a predominant focus on task-oriented narrations from a single speaker, and either neglect the face-to-face context or take it for granted. Second, some put forward the notion that gestural expressions might be channel-specific (i.e., that linguistic status follows the articulator through which the bodily action is expressed). SLs have already shown that the hands especially, but also the eyebrows, mouth and even torso, can be used conventionally. What we stand to gain by comparing signed and spoken discourse is a theory that does not presume a priori that a bodily action belongs to one category or another. Instead, we describe what bodily actions accomplish semiotically in interaction and consider separately whether the forms are conventionally shared. The benefit of this approach is that it gives us greater latitude to account for how people express themselves through their bodies in all sorts of modalities (be it speech, sign, tactile/pro-tactile sign, and so on). Should we find similar patterns cross-linguistically, we might advance the argument for a theory that embraces both “language” and “action” as an integrated system.

Lastly, although silent gestures (also called “spontaneous signs”, Goldin-Meadow and Brentari, 2017, p. 9) have been explored alongside conventional SLs and homesign systems with regard to their roles in language emergence and evolution (see Goldin-Meadow, 2015; Özçalışkan et al., 2016), we argue that these silent gestures do not reflect how bodies engage in real-life contexts. Put differently, silent gestures are not spontaneous, idiosyncratic gestures created on the spot; rather, they result from a kind of convention established within that specific, constrained usage, as discussed by Müller (2018). Hence, they do not resemble language as it materializes in natural contexts with various conversational partners. The meanings and forms of these silent gestures cannot be generalized to a larger audience of users, to more complex communicative purposes, or to various naturalistic contexts outside their restricted settings, which makes the claim that silent gestures provide evidence for a divide between gesture and sign relatively weak (Müller, 2018).

Yet, if we adopt Kendon’s (2004) definition of gesture as “visible action as utterance” and Andrén’s framework, where the upper and lower limits of gesture allow for the potential for gradience in any form, gesture becomes kaleidoscopic. In interaction, users of SLs and SpLs resort to a broad range of bodily behaviors to pragmatically manage their interaction while negotiating their moment-by-moment relationship with addressee(s). Hence, focusing only on the imagistic side of gestural expression in signing and speaking, as if gestures were merely a product of inner thoughts, yields an incomplete picture of multiple minds (and bodies) interacting. By shifting attention to language in situated interaction, intersubjectivity comes into play (Schiffrin, 1990), and it becomes immediately apparent that some bodily moves are responses to the moves of the other person. The growth of ideas, then, also occurs in concert with another mind.

While McNeill’s (1992, 2005) theory remains a strong influence on the examination of gesture in SpLs and SLs, several scholars have begun to conceive of language proper as inherently multimodal and primarily dialogic (e.g., Kendon, 2004; Goodwin, 2007; Enfield, 2009; Streeck, 2009) and to examine gesture as part of people’s social activities (e.g., Bavelas, 1994; Gerwing and Bavelas, 2013).

The present paper builds on previous and current work on the pragmatic and interactional meanings “ever present in human interaction” (Ferrara, 2020, p. 2). The next section surveys key studies demonstrating how language users, deaf and hearing, deploy their bodies to regulate their interaction rather than to express propositional meaning stricto sensu.

2.2 Gesture in Usage-Based, Interactional Accounts

When we take an interaction as the unit of analysis, certain bodily actions come to the fore. Gestures that seem meaningless in terms of propositional content play central roles for addressees assessing utterances as they unfold online. In face-to-face conversation, not all gestures align with the propositional content of spoken utterances; some instead play a role in managing the social context in which they take place. Like navigational markers, these gestural forms guide conversational partners, turn by turn, through the production and interpretation of informational and social meaning. These gestures have received many different labels, such as “speech handling” (Streeck, 2009), “pragmatic” (Kendon, 2004) and “interactive” (Bavelas et al., 1992). We use the latter term for this class of gesture because the present paper adopts the functional typology of Bavelas et al. (1995).

Bavelas and colleagues conducted several experiments (1992, 1994, 2008) from which they concluded that not only visibility (interacting with a visible addressee, 1992) but also the dialogue situation itself (both parties being able to express themselves spontaneously and freely, 1994) acted as independent influences on the emergence of interactive gestures (Bavelas et al., 2008). In addition, the degree of knowledge shared between interactants also influenced the use of interactive gestures (Holler and Bavelas, 2017). In other words, social context and information states were the two main drivers behind the emergence of these forms.

These findings shed light on a particular class of gesture that does not seem to serve the propositional content directly but rather points to diverse aspects of the interaction itself and to the interpersonal management of the speaker/signer-addressee relationship. The ways these gestures involve the addressee in the interaction are multifaceted. Bavelas et al. (1995) identified four major functions, namely, regulating turns in conversation and delivering, citing, and seeking information. These functions serve as the basis for the evidence provided in Section 4. Notably, this functional typology has recently gained traction and has been applied to spoken and signed language data (e.g., Holler, 2010; Shaw, 2019; Ferrara, 2020; Gabarró-López, 2020; Lepeut, In press). In what follows, each main interactive function is explained, with additional literature from SL and SpL studies supporting the claim that the body plays inter-personal roles in language.

First, regulating gestures maintain the flow of conversation with respect to turns-at-talk (Bavelas et al., 1992, p. 473). These include backchannels produced by addressees to show agreement, following and/or attention. In SL research, the turn-taking engine is the facet of signed discourse that has received the most attention, particularly regarding how signers take, maintain, or yield the turn (Baker, 1977; McIlvenny, 1995; Coates and Sutton-Spence, 2001; McCleary and de Arantes Leite, 2013; Girard-Groeber, 2015), how conversational repair (e.g., self-initiated repair, other-initiated repair, and so on) is undertaken (Dively, 1998; Dingemanse et al., 2015; Manrique, 2016; Skedsmo, 2020), and how overlap in signing occurs (Baker and van den Bogaerde, 2012; Coates and Sutton-Spence, 2001; de Vos et al., 2015; Groeber and Pochon-Berger, 2014; McCleary and de Arantes Leite, 2013). Studies on tactile SLs have also described turn-taking patterns, such as conversational repair practices and backchannels (e.g., Mesch, 2001). Turn-taking, and the role of gesture in managing turns, has likewise been examined from a multimodal perspective (e.g., Deppermann, 2013; Mondada, 2007; Van Herreweghe, 2002).

A second interactive category consists of delivery gestures, whose role is to mark the status of the information delivered to the addressee (as new or shared) and which also include digression and elliptical gestures. Shared delivery gestures correspond to the notion of common ground, defined as the “knowledge, beliefs, and assumptions that interlocutors” (Holler and Bavelas, 2017, p. 218) share prior to or develop during conversation. Common ground is another interactive accomplishment that gesture marks, and it has received considerable attention in both spoken (Holler, 2010; Gerwing and Bavelas, 2013) and signed conversation (Shaw, 2019; Ferrara, 2020). By contrast, gestures delivering new information to addressees (Kendon, 2004; Müller, 2004; Streeck, 2009; Ferré, 2012) have also been reported and seem more frequent in spoken than in signed discourse (Ferrara, 2020).

Seeking gestures aim to elicit responses from addressees. In conversation, participants monitor not only their own actions and states of mind but also those of their conversational partners (Clark and Krych, 2004). These interactive gestures are used to seek understanding, following, attention, and agreement from addressees regarding the ongoing conversation, and even to elicit help during word searches (Goodwin and Goodwin, 1986).

Finally, citing gestures refer to previous contributions made by the other interactant and acknowledge a point made by the addressee (Bavelas, 1994). Citing functions have been reported for index pointing, for instance, in ASL (Shaw, 2019), Norwegian Sign Language (NTS; Ferrara, 2020) and spoken conversation (Bavelas, 1994).

Interactive gestures have only recently begun to attract attention among SL researchers, but they appear to pattern in similar ways across modalities. As this brief survey shows, research on SL interaction remains sparse, and some of its properties are still largely overlooked, leaving a gap in our understanding of how SLs function. Yet, it remains fundamental to describe signers’ practices, just like those of speakers, to obtain a more comprehensive picture of how language works beyond speech. These studies point to crucial implications for integrating interactive gesture into accounts of language use. The scholars exploring their interactional meaning align with a view that considers gesture and sign hand in hand, as found in Müller’s (2018) and Kendon’s (2008, 2014) approaches, among others. Müller (2018) argues that the meanings of gesture are rooted in “embodied experiences that are dynamic and intersubjective, and not at all like images” (p. 12). Kendon (2008), for his part, stresses the importance of setting aside the gulf between gesture and sign in favor of viewing the two side by side through historical, functional, and material arguments:

it would be better if we undertook comparative studies of the different ways in which visible bodily action is used in the construction of utterances […]. Such an approach would reveal the diverse ways in which utterance contributing visible bodily actions can be fashioned and the diverse ways in which they can function from a semiotic point of view (Kendon, 2008, p. 358)

Many scholars have recently embraced this view in both gesture research and SL linguistics (e.g., Ferrara & Hodge, 2018; Müller, 2018; Puupponen, 2019; Ferrara, 2020). Yet, the fact remains that only a handful of studies have put theory into practice by adopting a direct comparative approach between different SpLs and SLs while relying on directly comparable interactional corpus data.

2.3 The Current Approach

This work aims to advance the comparative study of visible bodily action in sign and speech. More specifically, the current paper adopts Kendon’s (2008) framework and offers a unique perspective not only on language use across languages (ASL-AmEng/LSFB-BF) and modalities (signed/spoken) but also on the similarities that emerge when gesture and sign are explored side by side in different contexts (dyads/triads) and settings (at home/in the lab). To illustrate a potentially fruitful area for cross-linguistic analysis, we have selected palm-up (PU hereafter) and pointing actions as a point of departure. These manual forms are particularly well suited to cross-linguistic analysis because of their formal resemblance across signers and speakers and the frequency with which they emerge in face-to-face encounters. Accounting for their patterning in signed and spoken interaction should contribute to the discussion of how gesture is defined and how language is analyzed. This study demonstrates how ASL and LSFB signers, along with American English and Belgian French speakers, draw on similar methods of communication to express diverse interactional meanings through these two gestural practices. Both gestures are analyzed and discussed as conveying the interactional meanings reviewed in Section 2.2. The data used to discuss the interactional meanings of PU and index pointing are described next.

3 Method and Data

In this study, we adopt a comparative approach across languages (cross-linguistically and cross-modally) and move away from experimental settings and narrative tasks to demonstrate the synergies that arise in face-to-face interactions where signers and speakers are “free” to communicate about any topic.

The research draws on data collected in the United States and Belgium and includes four languages, namely ASL and American English (AmEng), and LSFB and Belgian French (BF). The data represent a novel approach in gesture studies and SL linguistics in that the recordings (ASL-AmEng on the one hand and LSFB-BF on the other) were collected under the same methodological conditions, so that signers’ and speakers’ communicative practices can be compared directly.

We focus on two manual forms that emerged throughout the interactions, applying a conversation-analytic framework informed by interactional sociolinguistics (an approach also used in Shaw, 2019 and Lepeut, In press). This sort of analysis starts from discourse that is as naturalistic as possible, so that the analyst can examine how interlocutors naturally draw on their communicative repertoires. Conversation Analysis (CA), originally conceived by Sacks, Schegloff and Jefferson (1974), is an approach that views discourse as structured in sequences of turns in which interlocutors design their talk (speech, sign) in situ. Utterances are analyzed as contingently linked, such that a contribution by one interlocutor can be seen as prompting an utterance by another. Within these sequences, people iteratively reveal their orientations to each other and to the content they talk about.

For the purposes of this paper, four films were selected and viewed multiple times in their entirety before the researchers identified four segments during which several PU and pointing actions emerged. Each excerpt, lasting one to five minutes, was then closely reviewed turn by turn in ELAN. Following the tradition of Streeck (2009) and Goodwin (2007), each PU and pointing token was analyzed for co-occurring nonmanual behaviors and for its relationship to the prior and subsequent turns, in order to situate it within the broader interactive context. After the forms were identified, Bavelas et al.’s (1995) functional typology was used to attribute interactive functions, an approach also applied quantitatively in previous work (see Ferrara, 2020 for index pointing in NTS; Gabarró-López, 2020 for PU in LSFB and LSC; and Lepeut, 2020 for PU and pointing in LSFB and BF). All in all, examining both form and function in this way led to conclusions about their interactional meanings in context.

3.1 LSFB and Belgian French

The data for LSFB and BF are drawn from two multimodal corpora: 1) the LSFB Corpus (Meurant 2015); and 2) the FRAPé Corpus (Meurant et al. subm.), its spoken counterpart. The LSFB Corpus is the open-access reference corpus for LSFB (https://www.corpus-lsfb.be/). It comprises data produced by a total of 100 signers (male and female, aged 18 to 83, from diverse regions of Belgium). Data collection for the FRAPé Corpus, which is ongoing, follows the same methodology as that of the LSFB Corpus. The FRAPé Corpus is comparable to the LSFB Corpus in terms of genres, participants and recording environment, so that direct comparisons between the two corpora can be made, with individuals recorded under the same conditions. Only minor adjustments were made (e.g., a task in which speakers discuss cultural differences between Walloon and Flemish people in Belgium instead of between hearing and deaf cultures).

Sessions were led by a deaf moderator for the LSFB Corpus and a hearing moderator for the FRAPé Corpus, who guided the conversational exchanges between the participants through different tasks. For this study, one pair of female LSFB signers and one pair of female BF speakers were selected. The LSFB signers talked about the differences between deaf and hearing cultural habits, while the BF speakers discussed the differences between Walloon and Flemish people. Although the discussions are semi-directed, participants are free to talk and jump into the conversation whenever they want, reflecting the dialogic character of spontaneous conversation (Bavelas et al., 2008).

The video samples were transcribed and annotated using the ELAN software. The ID-gloss technique based on Johnston’s (2015) annotation guidelines was used for LSFB. All speech transcriptions follow Valibel’s transcription conventions (see Bachy et al., 2007). The left and right hands were annotated on two separate tiers. The functions of Bavelas et al.’s (1995) typology of interactive gestures, as described in Section 2.2, were used to annotate the interactive PU and pointing in the data.

3.2 ASL and American English

This section of the paper draws on data collected as part of another comparative study of signed and spoken discourses in the U.S. (Shaw, 2019). The ASL and American English interactions were filmed following sociolinguistic techniques for collecting language data in naturalistic settings (Labov, 1972; Schiffrin, 1987, 1990). Two groups of four people were filmed for just over 1 hour each: one group consisted of four deaf friends using ASL, the other of four hearing friends using American English. The participants already knew each other, and the social gatherings were not unlike those they ordinarily had. To provide some structure to both groups, the participants were asked to play the game Guesstures and were given no other instructions about how to behave during their encounter. Each filming session took place in one of the participants’ homes.

Both films were imported into ELAN. Spoken utterances for each hearing participant were broadly transcribed with basic prosodic features (rising/falling pitch, marked emphatic stress, vowel lengthening, speed of production). Manual gestures for each participant were transcribed on separate tiers. For the ASL data, glosses for the manual components and descriptions of the non-manual markers of the signed utterances were transcribed on separate tiers.

3.3 Selection of Interactional Gestures in Signing and in Speaking

The selection of the PU and index pointing as relevant cases for this paper is motivated by two main factors. First, both forms are conducive to examining aspects of the human language ability involved in achieving specific interactional goals in social context, since they appear to operate pragmatically. As Cooperrider et al. (2018) highlight for the PU: “[i]f researchers agree on anything, it is that palm-ups are interactional in nature” (p. 5). Ferrara (2020) has also demonstrated that index pointing actions serve not only referential but also interactional purposes in Norwegian signed conversation, and the same holds true for SpLs (see Mondada, 2007; Jokinen, 2010). Yet corpus-based analyses of these two forms from an interactional point of view are lacking, and this area has remained overlooked in gesture and SL research. Second, to compare gesture in signing and in speaking systematically, it is necessary to investigate identical gestural phenomena occurring in both languages. These two forms therefore constitute a strong baseline for the current study.

In this paper, PU is defined as resulting from a rotation of the wrist(s) that brings the palm(s) into an upward position, displaying a flat hand with the fingers more or less extended. However, alongside this conventional representation, other, less canonical versions of the form may occur and are also considered. The wrist rotation, for example, may be absent if the preceding gesture or sign has already put the hand(s) in this orientation. The same holds true if the hands are already resting on the lap. As a result, the person only needs to bring the hand(s) into the conventional position in space and with the conventional configuration to produce the PU. Similarly, more reduced forms of PU can be performed, which do not complete the entire 180° rotation but display the intended movement of the wrist(s). These reduced forms have been reported in previous research (e.g., Mesch, 2016; Gabarró-López, 2020) and are defined by Mesch (2016) as a movement in which the participant “slightly rotate[s] the wrist so that the palm forms a smaller angle than 90° with the floor” (p. 177). The partial or full rotation of the wrist(s) is what matters here, and it also distinguishes the PU from a mere pointing action of the hand.

While the act of pointing may seem trivial at first sight, it is a multifarious tool (Cooperrider and Mesh, 2022). We follow Cooperrider and Mesh, who define pointing as a meaningful “bodily movement toward a target—someone, something, somewhere—with the intention of reorienting attention to it” (p. 22), and we focus on points produced with the extended index finger only.

In the following sections, evidence drawn from the four languages across modalities is provided. PU and index pointing cases are detailed qualitatively, first in LSFB/BF and then in ASL/AmEng. These instances demonstrate how language users from different ecological niches activate their whole body, using similar communicative practices, to regulate the dialogic flow and engage with their addressees in interaction. These examples show the importance and relevance of integrating these conversational moves into the broader context of language theory, regardless of the modality in which language manifests itself.

4 Evidence From Four Languages Across Modalities

4.1 Palm-Up and Index Pointing in LSFB and BF Conversations

4.1.1 LSFB

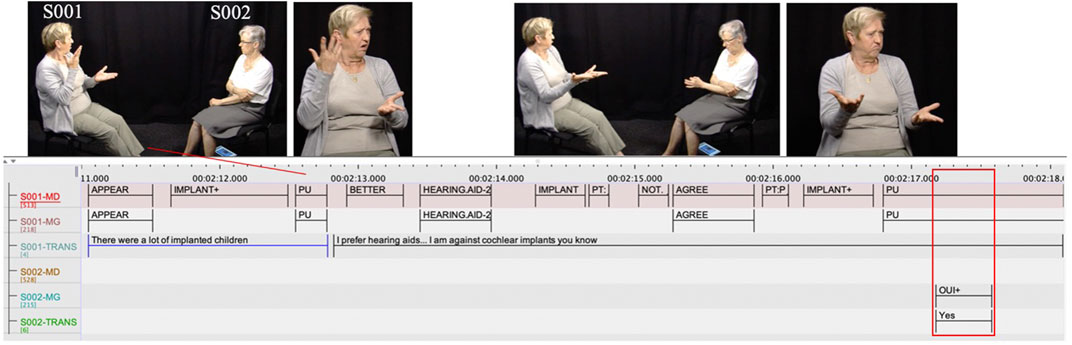

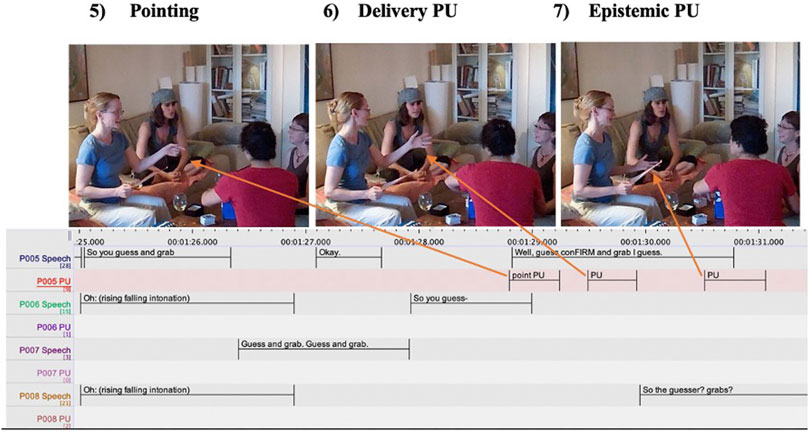

The sequence below illustrates a series of interactive PU and pointing actions in LSFB drawn from a conversation between two female deaf signers, S001 and S002, who discuss differences between hearing and deaf culture. The conversation drifts to the topic of cochlear implants and hearing aids. The example in Figure 1 begins with the primary signer (S001) explaining that when she visited a school for the Deaf, she noticed many children with implants.

When mentioning that there were many implanted children, S001 introduces this content by metaphorically handing over the new information to her addressee, S002, with a PU carrying a delivery function. This PU marks the information as new and/or relevant to the main point for S002. In other words, the new content is delivered on the palms of her hands for S002 to inspect. By signaling the status of the information to the addressee, the signer “helps coordinate the understanding of meaning between [signer and addressee]” (Bavelas et al., 1995, p. 395). This is corroborated by the fact that S002 promptly responds to the PU with non-manual feedback (a head nod together with an open mouth). By responding to this two-handed PU, S002 signals on a moment-by-moment basis that she has understood the new information. Upon receiving this finely tuned response, S001 immediately resumes signing to give her opinion on the matter (Figure 1: “I prefer hearing aids …”).

The next two-handed PU by S001 comes right after she expresses her opinion, signing “BETTER HEARING. AID IMPLANT NOT AGREE PT:PRO1 IMPLANT PALM-UP” (“I prefer hearing aids … I am against cochlear implants, you know”). PU can be produced to seek information from others in conversation, thereby serving a seeking function, whose aim is to seek agreement or following, or to check for understanding and/or attention from the addressee. Here, S001 is seeking evidence of agreement with what she has just uttered, and the meaning expressed in the PU is analogous to “don’t you agree with the point I just made?” (Bavelas et al., 1995). In response to the PU, S002 provides immediate feedback with the lexical sign “YES” (“I agree with you”). It is worth mentioning that S001’s PU is co-produced with a shoulder shrug, a repeated headshake and downturned lip corners, all of which participate in the meaning-making process. These non-manual features add an epistemic dimension to the PU, reflecting the signer’s stance on the event being talked about. The combination of manual and non-manual aspects illustrates how the signer activates her whole body to convey the intended meaning to her addressee in the interaction.

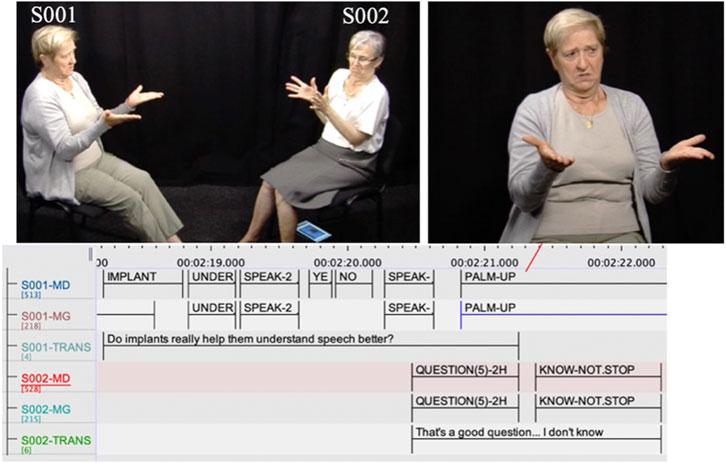

After producing the seeking-agreement PU in Figure 1, S001 asks S002 a follow-up question: whether implants help people understand speech better (Figure 2). She ends her question with a two-handed PU, and S002 replies that she has absolutely no idea (QUESTION KNOW-NOT STOP).

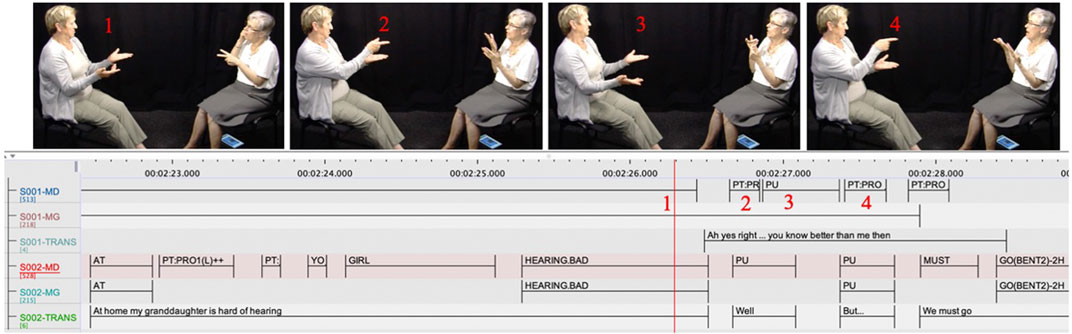

In the first part, S001’s PU acts as a turn-yielding signal. This token occurs in final position in a yes/no question. As shown in previous SL studies, PU can function as a question particle in yes/no and wh-questions (e.g., in NZSL; McKee and Wallingford, 2011). Yet the change in speakership does not occur instantly, as S002 does not have a straight answer to S001’s question: “that’s a good question. I don’t know”. As a result, S001 does not bring her hands back to rest. Instead, she keeps them in the exact location, handshape, and orientation characteristic of the PU for more than 5 s (5343 ms), along with a sustained gaze, overlapping with the utterance of S002, who introduces a related but new topic into the discussion: “at home my granddaughter is hard of hearing” (Figure 3). S002 then elaborates on this topic, which marks a turn transition between the two signers, during which other interactional cues emerge (viz., S001’s two interactional index points as S002 performs PUs in Figure 3).

The example in Figure 3 begins as S002 ends her utterance with a post-stroke hold on the sign “HEARING.BAD” for 407 ms to seek S001’s understanding/following. To show her acknowledgement, S001 produces a one-handed index point with her dominant hand (S001-RH) while her non-dominant hand (S001-LH) remains held in the shape of the previous PU. In this case, S001’s pointing does not merely carry referential meaning; it also expresses feedback, highlighting the active role of the addressee in the exchange (Ferrara, 2020). Upon receiving direct confirmation through S001’s first index point, S002 next produces a two-handed PU to punctuate her discourse (“Well”), directly followed by a short hesitation hold (368 ms), during which S001 reproduces exactly the same point, as if to reiterate her backchannel response in case S002 had not received it.

The two instances of interactional pointing contrast with the seeking function of the PU observed in Figure 1: here, they do not aim at monitoring the other participant in the conversation but instead show agreement and/or following. As Ferrara (2020) highlights for pointing in Norwegian SL (NTS), “these showing following/agreement finger points tell another signer ‘ah, I see’ or ‘yes, I agree with what you are saying,’ and thus are examples of conversational feedback or backchanneling” (p. 15).

It is also revealing to observe that the PUs in Figure 1 and in Figure 2 both occur at the end of S001’s utterance. However, the PU in Figure 1 aims at getting a feedback response from S002, while in Figure 2, S001 ends with her palms facing upwards at the end of her question, offering S002 the floor and leaving room for her reply on the implant question. This echoes previous results on the relevance of addressing specific interactional strategies in the management of SL discourse (Groeber and Pochon-Berger, 2014; Lepeut, In press). S001 releases her hold, with her hands going back to rest, only when the next turn has officially been taken by S002, signaled by S002’s PU mais (‘but’). Groeber and Pochon-Berger (2014) argue that such subtle actions are non-arbitrary, as “the timing of the release is based upon the current speaker’s meticulous on-line analysis of the co-participants conduct” (p. 9); as the authors further note, the “hold release is key to understanding the interactional job that the hold performs” (p. 10). In this instance, the hold release does not occur until S001 has visibly acknowledged and recognized S002’s actions, that is, S002’s start of a new turn, upon which S001 finally brings her hands back to rest.

4.1.2 Belgian French

The following cases describe the interactional meanings of PU and pointing in the spoken Belgian French dataset, the FRAPé Corpus.

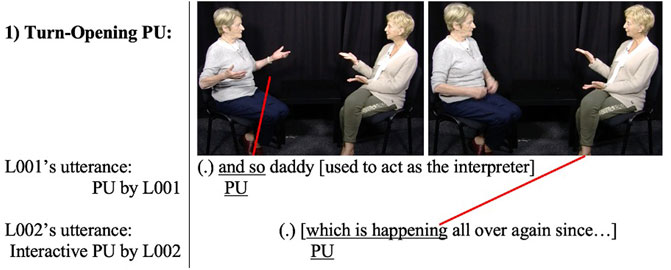

In Figures 4–6, L001 and L002 talk about the particular linguistic situation between Walloon and Flemish people that characterizes Belgium, and how far back in time this situation goes. Both sit in silence (.), and the example begins with L001 telling her addressee, L002, that Flemish people living in the French-speaking part used to be tried in French and that her dad used to act as their interpreter. As L001 utters the words “and so <PU> daddy used to …” (02:52.559), L002 simultaneously raises her hands from rest position in the shape of a PU (02:52.967) to take the turn, which results in speech and gestural overlap between L001’s and L002’s utterances (picture 1, on the left). L002’s intervention into the main line of L001’s action pushes the primary speaker to suspend her speakership. L001 leaves her hands up in midair, as she has not finished speaking, but since L002 continues with her utterance, she finally brings them back to rest and L002 takes over (Figure 4, picture 2, on the right).

The PU initially performed by L001 is held briefly in midair as L002 intervenes in the main line of action. L001 then brings her hands to rest position, which “leaves the floor to the next speaker and makes the speaker transition effective” (Groeber and Pochon-Berger, 2014, p. 14). This kind of finely tuned, moment-by-moment coordination of the co-participants’ actions, in turn, displays “turn boundaries as flexible, interactionally achieved and unfolding across a certain timespan rather than fixed points in time” in conversation (2014, p. 14). How this kind of activity is systematically achieved within and across signed and spoken language interaction awaits future research.

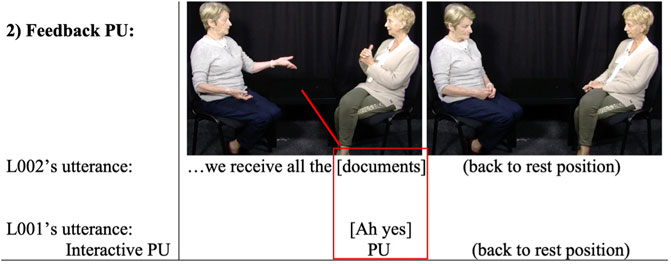

Then, in Figure 5, L002 elaborates on L001’s previous turn, mentioning that the same situation still occurs today: if Walloon people live on the Flemish side, even close to the French linguistic border, they receive all their paperwork in Flemish rather than in French. As she utters those words, L001 provides feedback to show her agreement with that statement in the form of another PU, concurrently reflected in her speech as she says “ah yes” (03:02.632). As both speakers acknowledge that this linguistic situation is not new, a brief silence takes place in the conversation, with both of their hands lying in rest position.

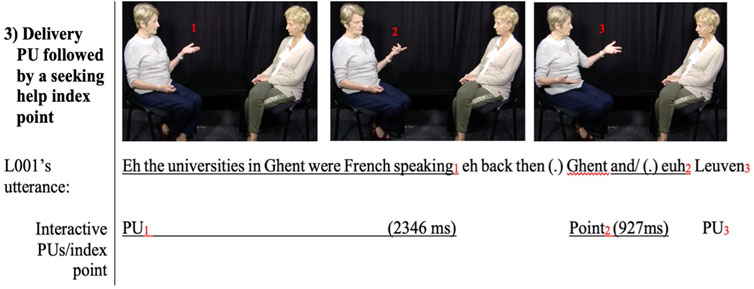

In Figure 6, L001 produces another PU (03:42.099) to deliver new, relevant information to L002, mentioning that the university in Ghent, a Flemish city, was French-speaking at the time; she holds that PU to obtain feedback from L002, which she receives in the form of a head nod.

As L001 mentions Ghent University, she remembers that the situation was similar at another Flemish university. Yet she cannot find its name and changes her PU into an index point (03:45.688) that she holds for 927 ms, combined with a floating gaze and furrowed eyebrows, to seek L002’s help during this word search. Through the pointing gesture and the hold, together with the hesitation in her speech (“and/”) and the non-manual activity, she makes direct reference to her addressee, asking her to help with the missing information. But L001 recovers the university name by herself and once more delivers the missing piece to L002 on the palm of her hand with a PU (03:48.189). The sequence resumes with L001 keeping the floor and elaborating on her claim that Flemish universities were French-speaking at the time.

4.2 Palm-Up in ASL and American English Conversations

4.2.1 ASL

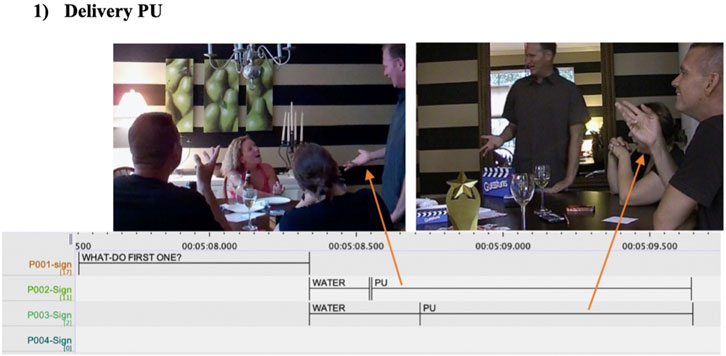

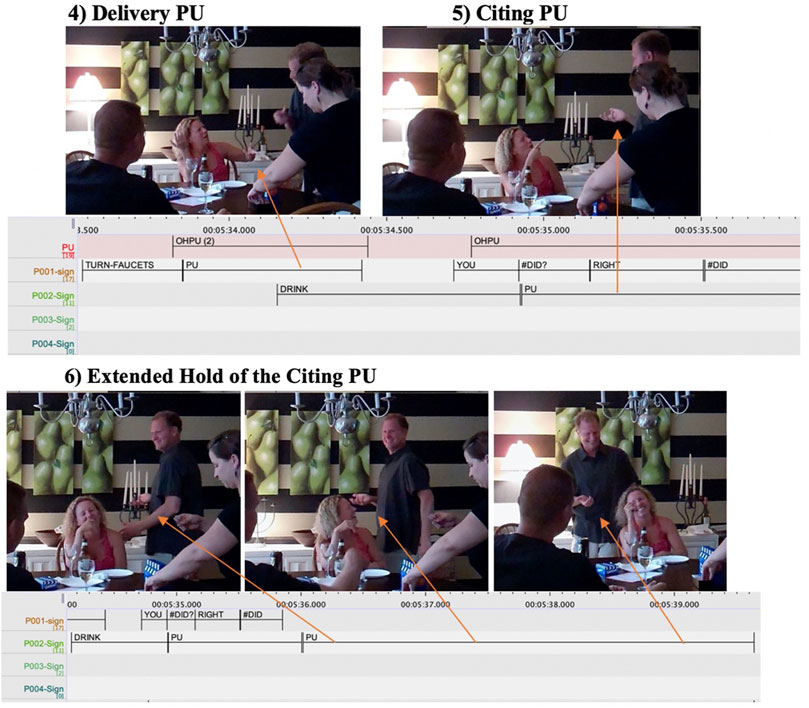

We now turn to the interactive PU in ASL during a 1-min exchange between a wife (P001), her husband (P002), and a friend (P003). No pointing actions emerged in this segment (for more details on pointing actions in ASL, see Shaw, 2019). Just prior to the moment of focus here, P002 (standing in Figure 7) gestured four clues to P001, two of which, “Water” and “Tissue”, she missed. They began their post-turn debrief when P001 asked “what was the first clue?”. The participants who knew the correct answer (P002 and P003) both replied “WATER! PU (hold)” (Figure 7, 1).

In this example, P002 holds the PU relatively low with respect to his torso, with the fingers pointing toward P001. P003’s reduced PU, in contrast, is positioned close to his own face at roughly a 90° angle, given that he is resting his elbow on the table. Holding the PU in these instances has the effect of delivering new information (i.e., the clue was “water”), but the move also implies a judgment of P001, especially by her teammate. The PU presents the clue as obvious, right in front of her, in the hand, and touches on two dimensions of the exchange: the interactive and the knowledge-sharing dimensions. Her response is mild disbelief: her mouth is agape, then she purses her lips, blinks, and looks down at the table bashfully for a moment.

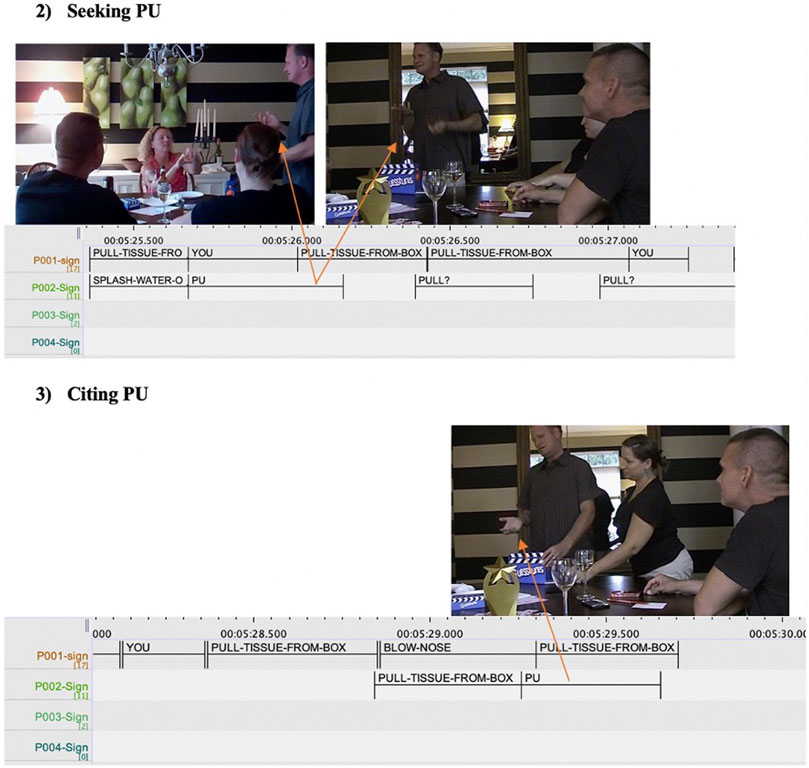

Her teammate then informs her of the other incorrect guess (“Tissue”) and re-enacts the moves he performed. He then quickly shifts to repeating those he performed for “Water”. In Figure 8, P002 has just acted as if splashing water on his face and then produces a PU form. This PU differs from the earlier one in a few important ways. First, the hand is held much closer to the signer’s torso; second, it comes just after a self-initiated turn. If we consider one interactive function of the PU to be a vehicle for passing judgment on something, the physical proximity of the form to the signer visibly signals personal evaluation. In this example, his performance of “Water” is the object of evaluation. His turn initiation disambiguates the PU as evaluating his own talk and not that of his interlocutors.

Notice that while P002 presents his performance of “Water”, P001 simultaneously enacts her ideas for “Tissue”. She begins by acting as if pulling a tissue out of a box. P002 does not understand what is meant by this action, given that his immediately prior utterance concerned his choices for gesturing the clue “Water”. We see evidence of his confusion when he repeats the pulling action with lowered brows. When P001 clarifies that she was gesturing “Tissue”, P002 repeats the pulling action, followed by a PU with his head tilted slightly (Figure 8, 3). Here, the PU is produced lower in space than the one in (2) but, importantly, not as low as in the first example (Figure 7). Also, P002’s gaze is now on his own hand. What can be made of these differences? Given that he has just repeated the action that P001 produced, we conclude that this PU cites the information his interlocutor has just contributed to the discourse, but it also marks his uptake of that information as well as an assessment of its content. He then asks P001 what he should have done to gesture “Water”. P001 replies by acting as if she is taking a long drink from a cup and then turning off faucets.

After P001 provides this suggestion, she produces two PUs with lowered brows, effectively delivering the information and also assessing it as common sense (Figure 9, 4 and 5).

In (4), P001’s right-hand PU is close to her head, which is unsurprising given that her elbow is on the table. Her left-hand PU, however, is situated between the interlocutors. This has the effect of both delivering the information and citing it, while her face expresses her evaluation of it. This move essentially ranks her suggestion as common sense (again, in the hand) and her teammate’s performance as unsatisfactory. The slight is taken up by P002 in his very next turn when he repeats the gestured actions (drinking dramatically from a cup), the very ones he had performed during the game. He then flips his wrist into a PU (Figure 9, 5) and holds it in place, sustaining gaze with his teammate. P001 immediately realizes her mistake, signing “YOU #DID? RIGHT #DID” (“You gestured that? That’s right, you did”). Her teammate does not let her get away with it that easily, though, and holds this PU for a full 4 seconds as he walks behind her, taps her shoulder and makes eye contact with their audience, P003 (Figure 9, 6).

This final PU differs from the other held PUs because the signer also physically moves away from where he was standing. Instead of dropping his hand, he makes this hold performative: a metaphorical carrying of the content of his prior turn, as if it were a prize marking that he was right. The semiotic transformation, then, from delivering information to citing it while also regulating turns by holding claim to the floor, is signaled by the movement of the wrist, eye gaze and non-manual markers, as well as by the physical positioning of his body. The interactive subtext is “I was right, you were wrong” in the playful context of healthy competition. The release of the hold (cf. Groeber and Pochon-Berger, 2014; Lepeut, In press) occurs right when he takes his seat and gives his teammate a high five.

4.2.2 American English

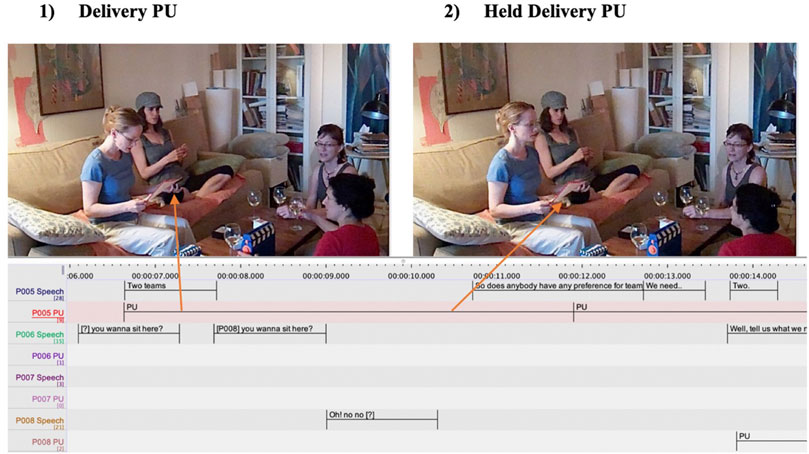

We now turn to a short sequence from the American English data: a series of PU and pointing forms that emerged among four friends (P005, P006, P007, and P008) while they figured out how to play the game. The primary speaker in this exchange (P005) is seated on the far left and holds the instructions as she shifts between reading them and informing the group of the rules. This 1-min exchange begins when she reads aloud “Two teams …” while producing a PU (Figure 10, 1).

The speaker rotates her left hand into a PU and holds it there for a full 5 seconds, even though her speech trails off after “Two teams”. Holding the PU serves multiple functions here. It begins as a classic delivery gesture in which the content, “two teams,” is metaphorically relayed to the group just as her hand rotates into supine position. But when coupled with her eye gaze, which is directed not at her interlocutors but at the instructions, the form takes on a turn-regulating function as well, signaling to the group that she is not done reading and still holds at least partial claim to the floor. The woman sitting next to her on the couch, P006, reinforces this by whispering to P008 (seated on the floor opposite her) “You wanna sit here?” This turn prompts P005 to look up at the addressee but not to relinquish the held PU (Figure 10, 2).

Just after this moment, the primary speaker returns her gaze to the instructions and briefly closes the fingers of her left hand, saying “So does anybody have any preference for teams?” Upon uttering “teams”, she extends her fingers into a fully opened PU (Figure 11, 3).

This repetition of “teams” is embedded in a request for teammate preferences. She also repeats the PU, but this time holds it fully open for 4 seconds. Her couch neighbor takes up the second position, replying “Well, tell us what we need to do first”, at which point P008 starts to wave an open PU toward P005 (Figure 11, 4). The movement catches P005’s eye, and she briefly looks up at P008 just as P008 retracts the PU (not pictured). What is worth noting in this instance is that when P008 moves the PU in P005’s direction, P008 has not said anything; P006 has. After directing the PU toward P005, P008 simply says “Yeah”, taking up P006’s suggestion while also reinforcing P005’s role as holder of the knowledge contained in the instructions. All this time, P005’s PU is held in space, retaining a visible trace of her prior turn and sustaining her claim to the floor. The question becomes how to interpret P008’s PU form: whose talk is it citing? Is she trying to initiate a turn? This is not entirely clear until she utters “Yeah”, agreeing with P006’s suggestion that P005 relay the instructions first.

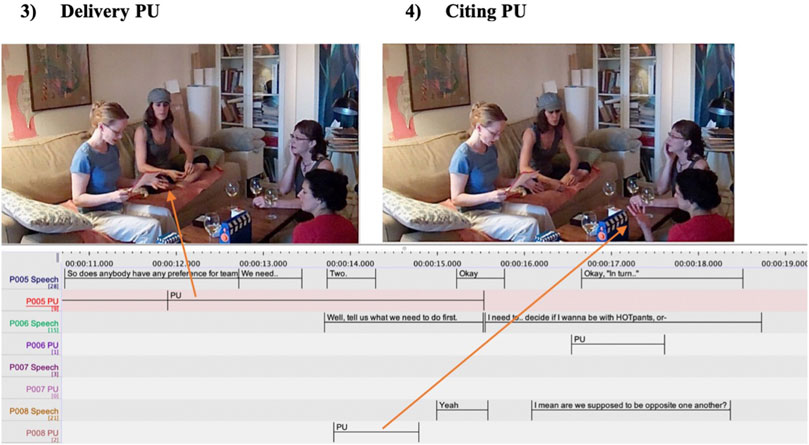

The next series of turns involves deciphering who is supposed to pull a card out of the game box when a clue is correctly guessed. P005 retracts her PU, and at the same time the other participants weigh in on possibilities: how do the cards drop into the box? Does the guesser or the gesturer pick out the card when a guess is correct? P005 continues to read silently and then finds out that it is the gesturer who is responsible for grabbing the card. She begins by saying “Ohhhh”, marking new information (Schiffrin, 1987), then reads aloud “you have a few seconds to get your team to guess the word and grab the card … before it drops out of sight and out of reach.” This information prompts P007 and P008 to echo “Ohhhh” with the same rising-falling intonation that P005 used. New information has been introduced, and P007 playfully adds “Guess and grab. Guess and grab.” It is this last contribution that prompts P005 to edit it, responding “Well, guess, confirm and grab.” During this utterance, she produces a quick sequence of gestured forms that cite prior talk while adding epistemic judgments about it.

First, she produces a pointing action directed toward P007, citing P007’s prior utterance (“Guess and grab”) (Figure 12, 5) but, in concert with the speech, also signaling that what she is about to say contrasts with the prior turn. P005 quickly flexes her wrist on the first syllable of “confirm”, which results in the point being oriented in the opposite direction (not pictured). Then she tosses a PU toward P007 on “grab” (Figure 12, 6), effectively delivering the edited utterance back to the primary author of the original turn.

The manual actions are produced quickly, but they accomplish several interactive and knowledge-sharing tasks. The initial point links P005’s contribution to P007’s prior talk while signaling that something unexpected is to come (cf. the discourse marker well, Schiffrin, 1987). The second point could be said to symbolically illustrate a contrast with P007’s talk (that the player does not just guess and grab the card but also has to confirm that the guess was correct) by pointing in the opposite direction. After staking this claim and delivering it back to P007, P005 ends up hedging by saying “I guess”. She opens a PU while shrugging slightly and drawing down the corners of her mouth (Figure 12, 7).

5 Discussion and Conclusion

This paper reported cases of PU and index pointing actions in different signing and speaking contexts from an interactional perspective. These forms accomplish an array of pragmatic moves: citing, seeking, delivering information and regulating turns. How do interlocutors discern the differences? It appears that several co-occurring bodily expressions are key to disambiguating the meanings. First, location in space and movement: the extension of the hand near to or far from the torso, as well as the movement of the hands toward an interlocutor, helps to distinguish the meaning of the form. Second, eye gaze: whether the participant looks at their interlocutor or elsewhere seems to make a difference in the function of the form. Third, facial expression: the PU, in its simple form, allows other articulators like the face, torso and head to take center stage, and indeed the co-participants respond in kind. And finally, prior utterances: whether the PU is produced inter- or intra-signer/speaker plays a part in the form’s interactive meaning.

While language conveys propositional information, it also conveys social meaning. This latter dimension of language use (including how interlocutors discern the meaning of the moves) has received short shrift in linguistic theories. These data show that when there is an open floor, interlocutors negotiate turns and knowledge-sharing all at once. The turn-taking mechanism triggers sensitivities to the social relationships between them. But when they are also negotiating common ground, they mark epistemicity too, which shifts dynamically as time unfolds. People are adept at expressing and interpreting these micro-moves on-line, but the coordination takes work, and that work is made evident through their bodies.

What do these forms tell us, then, about each of these aspects (relational and informational) of interaction? We can look at the moment-by-moment unfolding of the interaction to uncover what the form means (or, stated differently, what it accomplishes). Linguists interested in interaction often describe conversations as jointly constructed. This perspective calls into question who ‘owns’ the ideas expressed in the turns. When people meet face-to-face, the meeting of the minds can be seen through the participants’ bodies. The raised PU and pointing actions between two interlocutors activate the space between them: they do not contribute substantive content, and there are no images per se that could be abstracted from them. Rather, they signal attunement, a visible presentation of intersubjective intentions. And they emerge systematically across at least four distinct ecological niches.

The overlaying of social with linguistic moves allows the researcher to account for these seemingly impromptu forms that challenge theoretical boundaries between gesture and sign. It is high time to go beyond inner, cognitively driven models of gesture to include a more socially regulated conception of it. Gesture is not exclusively an intra-personal phenomenon revealing the imagistic side of language (as advocated by McNeill, 1992 and Goldin-Meadow and Brentari, 2017); it is also highly inter-personal, assisting the dialogic process of interaction by regulating the dynamics of the speaker-addressee relationship and managing aspects of interaction itself. We push forward the claim that signers’ and speakers’ bodily expressions are cut from the same cloth (Kendon, 2004, pp. 307–325). Therefore, more gradient-gestural expressions should not be seen as outside language in any modality. On the contrary, when communicating, all individuals draw on diverse resources from their available semiotic repertoires in the here-and-now (Ferrara and Hodge, 2018). These resources can be interpreted as linguistic, both in the sense of belonging to the realm of language as a system and in the sense of forming part of its multimodal components, including these more gradient-gestural phenomena. These two sides are not mutually exclusive; they need to be considered equal components of language, two sides of the same coin.

To account for this social, interactive nature of gesture in language, the diametric opposition between sign and gesture, and between the linguistic and the non-linguistic, needs to be left behind in favor of a more encompassing and integrative definition of language, both as a system and as a situated practice (Murgiano et al., 2020). Acknowledging the interactive mechanisms in signers’ discourse that are typically not considered part of the signing stream, and hence not part of language, but that resemble those deployed by speakers of SpLs allows scholars to contend that humans use their bodies in parallel and meaningful ways.

Data Availability Statement

Videoclips have been made available through OSF at: https://osf.io/dkr6w/. Further inquiries can be directed to the corresponding authors.

Ethics Statement

Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author Contributions

AL and ES jointly conceptualized the study. Each analyzed their own data (AL: LSFB/French; ES: ASL/American English), and each wrote 50% of the manuscript.

Funding

The research on LSFB and Belgian French was funded by a F.R.S.-FNRS Research Fellow Grant (1.A.156.17F, 2016-2020). This paper is published with the support of the University Foundation of Belgium (AS-0383).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

The authors acknowledge all deaf and hearing participants who took part in this study. We also thank the reviewers, LM and GR, for their very useful feedback.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fcomm.2022.780124/full#supplementary-material

References

Andrén, M. (2014). “On the Lower Limit of Gesture,” in From Gesture in Conversation to Visible Action as Utterance: Essays in Honor of Adam Kendon. Editors M. Seyfeddinipur, and M. Gullberg (Amsterdam: Benjamins), 153–174. doi:10.1075/z.188.08

Bachy, S., Dister, A., Francard, M., Geron, G., Giroul, V., Hambye, Ph., et al. (2007). Conventions de transcription régissant les corpus de la banque de données VALIBEL. Louvain-la-Neuve: Working paper, 1–18.

Baker, A., and Bogaerde, B. v. d. (2012). “Communicative Interaction,” in Sign Language. An International Handbook. Editors R. Pfau, M. Steinbach, and B. Woll (Berlin/Boston: De Gruyter Mouton), 489–512. doi:10.1515/9783110261325.489

Baker, Ch. (1977). “Regulators and Turn-Taking in American Sign Language Discourse,” in On the Other Hand. Editor L. A. Friedman (New York: Academic Press), 215–236.

Bavelas, J. B., Chovil, N., Coates, L., and Roe, L. (1995). Gestures Specialized for Dialogue. Pers Soc. Psychol. Bull. 21, 394–405. doi:10.1177/0146167295214010

Bavelas, J. B., Chovil, N., Lawrie, D. A., and Wade, A. (1992). Interactive Gestures. Discourse Process. 15, 469–489. doi:10.1080/01638539209544823

Bavelas, J. B. (1994). Gestures as Part of Speech: Methodological Implications. Res. Lang. Soc. Interaction 27, 201–221. doi:10.1207/s15327973rlsi2703_3

Bavelas, J., Gerwing, J., Sutton, C., and Prevost, D. (2008). Gesturing on the Telephone: Independent Effects of Dialogue and Visibility. J. Mem. Lang. 58, 495–520. doi:10.1016/j.jml.2007.02.004

Bolly, C. T., and Boutet, D. (2018). The Multimodal CorpAGEst Corpus: Keeping an Eye on Pragmatic Competence in Later Life. Corpora 13, 279–317. doi:10.3366/cor.2018.0151

Clark, H. H., and Krych, M. A. (2004). Speaking while Monitoring Addressees for Understanding. J. Mem. Lang. 50, 62–81. doi:10.1016/j.jml.2003.08.004

Coates, J., and Sutton‐Spence, R. (2001). Turn‐taking Patterns in Deaf Conversation. J. Sociolinguistics 5, 507–529. doi:10.1111/1467-9481.00162

Cooperrider, K., Abner, N., and Goldin-Meadow, S. (2018). The Palm-Up Puzzle: Meanings and Origins of a Widespread Form in Gesture and Sign. Front. Commun. 3. doi:10.3389/fcomm.2018.00023

Cooperrider, K., and Mesh, K. (2022). “Pointing in Gesture and Sign,” in Gesture in Language: Development across the Lifespan. Editors A. Morgenstern, and S. Goldin-Meadow (De Gruyter Mouton; American Psychological Association), 21–46. doi:10.1037/0000269-002

Deppermann, A. (2013). Multimodal Interaction from a Conversation Analytic Perspective. J. Pragmatics 46, 1–7. doi:10.1016/j.pragma.2012.11.014

de Vos, C., Torreira, F., and Levinson, S. C. (2015). Turn-timing in Signed Conversations: Coordinating Stroke-To-Stroke Turn Boundaries. Front. Psychol. 6. doi:10.3389/fpsyg.2015.00268

Dingemanse, M., Roberts, S. G., Baranova, J., Blythe, J., Drew, P., Floyd, S., et al. (2015). Universal Principles in the Repair of Communication Problems. PLoS One 10, e0136100. doi:10.1371/journal.pone.0136100

Dively, V. L. (1998). “Conversational Repairs in ASL,” in Pinky Extension and Eye Gaze. Language Use in Deaf Communities. Editor C. Lucas (Washington, DC: Gallaudet University Press), 137–169.

Duncan, S. (2005). Gesture in Signing: A Case Study from Taiwan Sign Language. Lang. Linguistics 6, 279–318.

Emmorey, K. (1999). “Do Signers Gesture?,” in Gesture, Speech, and Sign. Editors L. Messing, and R. Campbell (New York: Oxford University Press), 133–158. doi:10.1093/acprof:oso/9780198524519.003.0008

Enfield, N. J. (2009). The Anatomy of Meaning: Speech, Gesture, and Composite Utterances. Cambridge, UK: Cambridge University Press. doi:10.1017/CBO9780511576737

Ferrara, L., and Hodge, G. (2018). Language as Description, Indication, and Depiction. Front. Psychol. 9, 14–29. doi:10.3389/fpsyg.2018.00716

Ferrara, L. (2020). Some Interactional Functions of Finger Pointing in Signed Language Conversations. Glossa: A J. Gen. Linguistics 5, 1–26. doi:10.5334/gjgl.993

Ferré, G. (2012). Functions of Three Open-palm Hand Gestures. Multimodal Commun. 1, 5–20. doi:10.1515/mc-2012-0002

Gabarró-López, S. (2020). Are Discourse Markers Related to Age and Educational Background? A Comparative Account between Two Sign Languages. J. Pragmatics 156, 68–82. doi:10.1016/j.pragma.2018.12.019

Gerwing, J., and Bavelas, J. (2013). “51. The Social Interactive Nature of Gestures: Theory, Assumptions, Methods, and Findings,” in Body – Language – Communication. Editors C. Müller, A. Cienki, E. Fricke, S. H. Ladewig, D. McNeill, and S. Teßendorf (Berlin: Mouton De Gruyter), 821–836. doi:10.1515/9783110261318.821

Girard-Groeber, S. (2015). The Management of Turn Transition in Signed Interaction through the Lens of Overlaps. Front. Psychol. 6, 1–19. doi:10.3389/fpsyg.2015.00741

Goldin-Meadow, S. (2015). The Impact of Time on Predicate Forms in the Manual Modality: Signers, Homesigners, and Silent Gesturers. Top. Cogn. Sci. 7, 169–184. doi:10.1111/tops.12119

Goldin-Meadow, S., and Brentari, D. (2017). Gesture, Sign, and Language: The Coming of Age of Sign Language and Gesture Studies. Behav. Brain Sci. 40, 1–60. doi:10.1017/S0140525X15001247

Goodwin, C. (2007). Participation, Stance and Affect in the Organization of Activities. Discourse Soc. 18, 53–73. doi:10.1177/0957926507069457

Groeber, S., and Pochon-Berger, E. (2014). Turns and Turn-Taking in Sign Language Interaction: A Study of Turn-Final Holds. J. Pragmatics 65, 121–136. doi:10.1016/j.pragma.2013.08.012

Harness Goodwin, M., and Goodwin, C. (1986). Gesture and Coparticipation in the Activity of Searching for a Word. Semiotica 62, 51–75. doi:10.1515/semi.1986.62.1-2.51

Holler, J., and Bavelas, J. (2017). “Chapter 10. Multi-Modal Communication of Common Ground,” in Why Gesture? How the Hands Function in Speaking, Thinking, and Communicating. Editors R. B. Church, M. W. Alibali, and S. Kelly (Amsterdam: Benjamins), 213–240. doi:10.1075/gs.7.11hol

Holler, J., and Levinson, S. C. (2019). Multimodal Language Processing in Human Communication. Trends Cogn. Sci. 23, 639–652. doi:10.1016/j.tics.2019.05.006

Holler, J. (2010). “Speakers' Use of Interactive Gestures as Markers of Common Ground,” in Proceedings of the 8th International Gesture Workshop, Selected Revised Papers: Gesture in embodied communication and human–computer interaction. Editors S. Kopp, and I. Wachsmuth (Heidelberg: Springer-Verlag), 11–22. doi:10.1007/978-3-642-12553-9_2

Johnston, T. (2015). Auslan Corpus Annotation Guidelines. Technical Report. Sydney/Melbourne: Macquarie University/La Trobe University.

Jokinen, K. (2010). “Pointing Gestures and Synchronous Communication Management,” in Development of Multimodal Interfaces: Active Listening and Synchrony. Lecture Notes in Computer Science. Editors A. Esposito, N. Campbell, C. Vogel, A. Hussain, and A. Nijholt (Berlin/Heidelberg: Springer), 33–49. doi:10.1007/978-3-642-12397-9_3

Kendon, A. (2014). Semiotic Diversity in Utterance Production and the Concept of ‘language’. Phil. Trans. R. Soc. B 369, 20130293. doi:10.1098/rstb.2013.0293

Kendon, A. (2008). Some Reflections on the Relationship between ‘gesture’ and ‘sign’. Gest 8, 348–366. doi:10.1075/gest.8.3.05ken

Labov, W. (1972). Some Principles of Linguistic Methodology. Lang. Soc. 1, 97–120. doi:10.1017/s0047404500006576

Lepeut, A. (2020). Framing Language through Gesture: Palm-Up, Index Finger-Extended Gestures, and Holds in Spoken and Signed Interactions in French-speaking and Signing Belgium. PhD Dissertation. Namur: University of Namur.

Lepeut, A. (In press). “When the Hands Stop Moving, Interaction Keeps Going,” in Languages in Contrast 22. Editors S. Gabarró-López, and L. Meurant (Amsterdam: Benjamins).

Liddell, S. K. (2003). Grammar, Gesture, and Meaning in American Sign Language. Cambridge: Cambridge University Press.

Manrique, E. (2016). Other-initiated Repair in Argentine Sign Language. Open Linguistics 2, 1–34. doi:10.1515/opli-2016-0001

McCleary, L. E., and de Arantes Leite, T. (2013). Turn-taking in Brazilian Sign Language: Evidence from Overlap. Jircd 4, 123–154. doi:10.1558/jircd.v4i1.123

McIlvenny, P. (1995). “Seeing Conversations: Analyzing Sign Language Talk,” in Situated Order: Studies in the Social Organization of Talk and Embodied Activities. Editors P. ten Have, and G. Psathas (Washington, DC: University Press of America), 129–150.

McKee, R., and Wallingford, S. (2011). ‘So, Well, Whatever’: Discourse Functions of Palm-Up in New Zealand Sign Language. Sign Lang. Linguistics 14, 213–247. doi:10.1075/sll.14.2.01mck

McNeill, D. (1992). Hand and Mind: What Gestures Reveal about Thought. Chicago, IL: University of Chicago Press.

Mesch, J. (2016). Manual Backchannel Responses in Signers' Conversations in Swedish Sign Language. Lang. Commun. 50, 22–41. doi:10.1016/j.langcom.2016.08.011

Mesch, J. (2001). Tactile Sign Language: Turn Taking and Question in Signed Conversations of Deafblind People. Washington, DC: Gallaudet University Press.

Meurant, L. (2015). Corpus LSFB. First Digital Open Access Corpus of Movies and Annotations of French Belgian Sign Language (LSFB). Namur: LSFB-Lab, University of Namur. [Online]. URL: http://www.corpus-lsfb.be.

Meurant, L., Lepeut, A., Vandenitte, S., and Lombart, C. The Multimodal FRAPé Corpus: Towards Building a Comparable LSFB and Belgian French Corpus. Corpora.

Mondada, L. (2019). Contemporary Issues in Conversation Analysis: Embodiment and Materiality, Multimodality and Multisensoriality in Social Interaction. J. Pragmatics 145, 47–62. doi:10.1016/j.pragma.2019.01.016

Mondada, L. (2007). Multimodal Resources for Turn-Taking. Discourse Stud. 9, 194–225. doi:10.1177/1461445607075346

Müller, C. (2004). “Forms and Uses of the Palm Up Open Hand: A Case of a Gesture Family?,” in The Semantics and Pragmatics of Everyday Gestures. Editors C. Müller, and R. Posner (Berlin: Weidler), 233–256.

Müller, C. (2018). Gesture and Sign: Cataclysmic Break or Dynamic Relations?. Front. Psychol. 9. doi:10.3389/fpsyg.2018.01651

Murgiano, M., Motamedi, Y., and Vigliocco, G. (2020). Language Is Less Arbitrary Than One Thinks: Iconicity and Indexicality in Real-World Language Learning and Processing. J. Cogn. 3. doi:10.31234/osf.io/qzvxu

Özçalışkan, Ş., Lucero, C., and Goldin-Meadow, S. (2016). Does Language Shape Silent Gesture?. Cognition 148, 10–18. doi:10.1016/j.cognition.2015.12.001

Özyürek, A., and Woll, B. (2019). “Language in the Visual Modality: Co-Speech Gesture and Sign Language,” in Human Language: From Genes and Brain to Behavior. Editor P. Hagoort (Cambridge, MA: MIT Press), 67–83.

Perniss, P. (2018). Why We Should Study Multimodal Language. Front. Psychol. 9, 1109. doi:10.3389/fpsyg.2018.01109

Puupponen, A. (2019). Towards Understanding Nonmanuality: A Semiotic Treatment of Signers' Head Movements. Glossa 4, 1–39. doi:10.5334/gjgl.709

Sacks, H., Schegloff, E. A., and Jefferson, G. (1974). A Simplest Systematics for the Organization of Turn-Taking for Conversation. Language 50, 696–735. doi:10.1353/lan.1974.0010

Sandler, W. (2009). Symbiotic Symbolization by Hand and Mouth in Sign Language. Semiotica 2009, 241–275. doi:10.1515/semi.2009.035

Schegloff, E. A. (1996). “Turn Organization: One Intersection of Grammar and Interaction,” in Interaction and Grammar. Editors E. Ochs, E. A. Schegloff, and S. A. Thompson (Cambridge, UK: Cambridge University Press), 52–133. doi:10.1017/cbo9780511620874.002

Schembri, A., Jones, C., and Burnham, D. (2005). Comparing Action Gestures and Classifier Verbs of Motion: Evidence from Australian Sign Language, Taiwan Sign Language, and Nonsigners' Gestures without Speech. J. Deaf Stud. Deaf Edu. 10, 272–290. doi:10.1093/deafed/eni029

Schiffrin, D. (1987). Discourse Markers. Cambridge/New York: Cambridge University Press. doi:10.1017/CBO9780511611841

Schiffrin, D. (1990). The Principle of Intersubjectivity in Communication and Conversation. Semiotica 80 (1-2), 121–151. doi:10.1515/semi.1990.80.1-2.121

Shaw, E. (2019). “Gesture in Multiparty Interaction,” in Sociolinguistics in Deaf Communities 24. Editor J. Fenlon (Washington, DC: Gallaudet University Press).

Skedsmo, K. (2020). Multiple Other-Initiations of Repair in Norwegian Sign Language. Open Linguistics 6, 532–566. doi:10.1515/opli-2020-0030