- ¹Department of Psychology, Arizona State University, Tempe, AZ, United States

- ²School of Human Evolution and Social Change, Arizona State University, Tempe, AZ, United States

- ³School of Computing Informatics and Decision Systems Engineering, Arizona State University, Tempe, AZ, United States

- ⁴School of Earth and Space Exploration, Arizona State University, Tempe, AZ, United States

We explore how an augmented reality (AR) simulation created by a multidisciplinary team evolved into a more interactive, student-centered learning game. The CovidCampus experience was designed to help college students understand how their decisions can affect their probability of infection throughout a day on campus. There were eight decision points throughout the day. Within-group comparisons of immediate learning gains and self-reported behavioral changes were analyzed. Results revealed a significant increase in confidence in asking safety-related questions. Post-play, a significant majority of players listed new actions they would take to increase their safety; players were more agentic in their choices. The game allowed players to go back and replay with different choices, but only 7% chose to replay. Short, interactive desktop games may be an effective method for disseminating information about how to stay safer during a pandemic. The game appeared to positively change most players’ self-reported health behaviors related to mitigation of an infectious disease. Designers of interactive health games should strive to create multidisciplinary teams, include constructs that allow players to make decisions agentically, and compare outcomes over time.

Introduction

This article outlines the evolution of an interactive simulation-style game for public health. We represent a highly multidisciplinary team of students, researchers, and professors. The game was made by undergraduate students from three departments with guidance from an epidemiology subject matter expert, a learning scientist, a human factors engineer, a user interface design expert, and a biomedical engineer. This paper highlights choices made during the design process and ends with a within-group comparison of learning gains and self-reported behavioral changes. The lead author has been designing educational games for over a dozen years using multiple media, in 2D or Mixed Reality (Johnson-Glenberg et al., 2014a; Johnson-Glenberg et al., 2014b; Johnson-Glenberg and Megowan-Romanowicz, 2017) or in Virtual Reality (VR) (Johnson-Glenberg, 2018; Johnson-Glenberg et al., 2019; Johnson-Glenberg et al., 2020), with an emphasis on learning via embodiment. The spectrum of augmented to virtual reality is now referred to as XR (eXtended Realities).

The article serves as a snapshot of how one lab at one university reacted to the pandemic of 2020. The overall goal was to design educational multimedia that would get across a safety message. In general, the term design refers to the thoughtful “organization of resources to accomplish a goal” (Hevner et al., 2004). The design journey of the final CovidCampus game was filled with unexpected twists. It began with a university-sponsored XR Challenge. In 2018, five of the authors applied to the XR Challenge and chose the topic of modeling the Ebola outbreak in the Democratic Republic of Congo, a viral epidemic that was then beginning to reach its peak. With the help of an epidemiologist and a biomedical engineer, the mechanics of R0 (R-naught) and the SEIR equations were mastered and included in the backend of a mobile app. The goal was to release the augmented reality (AR) mobile app on GitHub so that decision makers could use it to predict the spread of Ebola and make informed decisions about which cities (with varying populations and states of infection) should receive vaccines. As we polished the algorithm and User Experience (UX) of that app, the Covid-19 pandemic became evident in the U.S. (around mid-February 2020), and the team decided to completely “retool” and focus all efforts on understanding the spread of Covid-19. We decided to create a new type of interactive Public Service Announcement that would both teach about transmission and advise players on how to stay healthy.
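For readers unfamiliar with the SEIR (Susceptible, Exposed, Infectious, Recovered) model, the following is a minimal textbook-style sketch of how R0 enters such a model. It is not the backend of the app described above, whose implementation is not reported here. The function names, the Euler time step, and the incubation and infectious periods are illustrative assumptions, and the sketch relies on the standard relation R0 = beta / gamma for a basic SEIR model without births or deaths.

```python
def seir_step(s, e, i, r, r0, incubation_days, infectious_days, dt=1.0):
    """Advance a basic SEIR model by one Euler step of dt days."""
    n = s + e + i + r
    sigma = 1.0 / incubation_days   # rate of leaving the exposed state
    gamma = 1.0 / infectious_days   # recovery rate
    beta = r0 * gamma               # transmission rate implied by R0

    new_exposed = beta * s * i / n * dt
    new_infectious = sigma * e * dt
    new_recovered = gamma * i * dt

    return (
        s - new_exposed,
        e + new_exposed - new_infectious,
        i + new_infectious - new_recovered,
        r + new_recovered,
    )


def simulate(population, initial_infected, r0, days,
             incubation_days=5.0, infectious_days=7.0):
    """Return the daily (S, E, I, R) states.

    The default periods are placeholders for illustration only,
    not epidemiological estimates taken from the article.
    """
    state = (float(population - initial_infected), 0.0,
             float(initial_infected), 0.0)
    history = [state]
    for _ in range(days):
        state = seir_step(*state, r0=r0,
                          incubation_days=incubation_days,
                          infectious_days=infectious_days)
        history.append(state)
    return history
```

Running `simulate` with a larger `r0` leaves fewer susceptible people at the end of the same period, which is the kind of difference a decision maker comparing cities would look at.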

The First AR Iteration

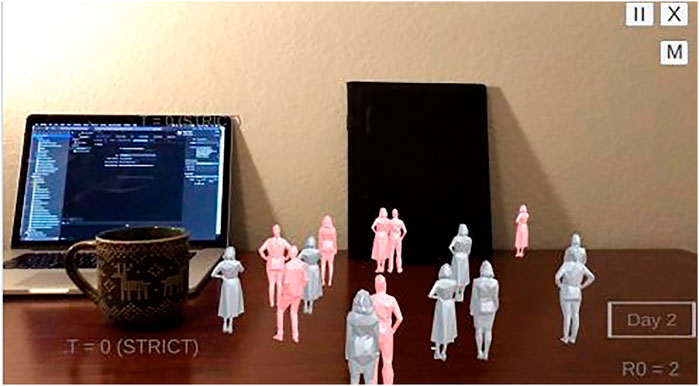

The first iteration was designed for mobile devices and used AR; it was built with Vuforia® and used a plane surface as the marker. The app included 3D avatar people of different colors (red = infected; gray = not infected) to designate health status. The people moved on top of a plane like statues or chess pieces, i.e., the legs did not move. When a gray person came within a certain distance of a red person, transmission could occur. Figure 1 shows an example.

FIGURE 1. The first augmented reality (AR) version of the CovidCampus game with interactive time and R-naught controls.
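The proximity rule of this first iteration can be sketched roughly as follows. This is an illustration only, not the Vuforia/AR code: the function name, the `radius` and `p_transmit` parameters, and the use of 2D coordinates are assumptions, since the article does not report the actual distance threshold or how transmission probability was computed.

```python
import math
import random


def spread_once(positions, infected, radius, p_transmit, rng):
    """Return the set of infected avatar indices after one time step.

    positions  : list of (x, y) avatar coordinates on the plane
    infected   : set of indices of currently infected ("red") avatars
    radius     : hypothetical distance within which transmission can occur
    p_transmit : hypothetical chance of transmission per close contact
    rng        : random.Random instance, passed in for reproducibility
    """
    newly_infected = set()
    for idx, pos in enumerate(positions):
        if idx in infected:
            continue  # already red
        for j in infected:
            close_enough = math.dist(pos, positions[j]) <= radius
            if close_enough and rng.random() < p_transmit:
                newly_infected.add(idx)  # gray avatar turns red
                break
    return infected | newly_infected
```

Calling `spread_once` repeatedly while the avatars move reproduces the behavior noted in playtesting: with no recovery state, every gray avatar eventually turns red.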

After a certain number of days, all the gray people would eventually turn red. The user could set the R0 from 1 to 4 in the bottom right corner to affect the speed of the spread. As we playtested this version, it became evident that actions (i.e., transmission and color change) happened either too slowly (users lost interest) or too quickly (users were overwhelmed), and educational nuances, like the difference between an R0 of 2 versus 3, were lost. Additionally, the decision was made not to model when people recovered and achieved immunity, because the team agreed that adding a third color would be even more confusing for users as the avatars moved around. But now it seemed we were no longer modeling realistically, and that made us question the validity of the entire design. What did we want the takeaway message to be? Primarily, we wanted to teach people how to be safe and make informed personal decisions.

Once we narrowed down who the user group would be (college students) and the primary goal (make better real-world decisions), it became easier to design. Over the last decade, a growing number of health education serious games have measured efficiency and efficacy using randomized trials of patients and clinicians (Papastergiou, 2009; Graafland et al., 2017). A recent scoping review by Sharifzadeh et al. (2020) found 2,313 articles that ranged from 1985 to 2018; after removing repetitions, exergames, and others, only 161 articles met the inclusion and exclusion criteria. Two classes of improvement were discerned in the games: knowledge improvement (58.4%) or skill improvement (41.6%). Our study falls into the knowledge improvement class, with the goal of then changing behavior. Note: we did not have the resources to do a follow-up study to assess whether behavior changed; however, we asked users to report whether they would change their behavior, and what they would change. The Sharifzadeh et al. (2020) study recommends follow-up studies and recommends that game developers use multidisciplinary teams to improve the design of serious games.

The Second Desktop Version: College Classroom

As with many universities in the U.S., students at ours were discouraged from returning to campus after spring break (end of March 2020). Over the summer of 2020, the team completely reconceptualized the game. One goal was to make a game/sim that would be more readily accessible to all, so it needed to be Web-based and not dependent on a downloaded app for a mobile AR experience. The decision was made to “build what we know”, which allowed the team to create an experience that was specific to students on a college campus. The team also wanted to allow users more agency over their choices and actions. Agency, in education, refers to the sense of “personal empowerment” involved with creating and achieving goals; it implies self-regulation (Shogren et al., 2017). The new version was built in Unity® and deployed as a desktop-based game. It took approximately four months to build the game from scratch, and it was deployable in mid-September. Figure 2 shows the splash screen. The game can be accessed at https://xr.asu.edu/CovidCampus (only on laptop/desktop for now) or at www.embodied-games.com.

There is a large literature on positive learning gains seen from well-designed games because games are engaging and motivating (Malone and Lepper, 1987). A meta-analysis revealed that the most frequent impacts associated with serious games were knowledge acquisition (also called content understanding), and motivational and affective outcomes (Connolly et al., 2012). In games, players are free to make “fail” choices (e.g., ones that could greatly increase their risk of exposure); they can then safely learn from those choices by failing “productively”. This team set out to create a game-like sim that would allow players to make a range of choices or decisions throughout a day. Some decisions are better than others; players receive immediate feedback. In the CovidCampus game there are eight major decisions to be made (e.g., how to get lunch, where to exercise). After each decision, players see how the choice affected the probability of infection bar at the bottom of the screen. This bar represents the potential for the player to become infected with Covid-19. At the end of the day, players can go back through the day as many times as they want and make different choices. We know that students can learn Covid-19 specific content from game-like simulations. A recent study by Hu et al. (2021) demonstrated that medical students assigned to a game-playing group learned as much as those in a lecture-based group by the end of the semester. Interestingly, at the 5-week follow-up assessment, the game-playing group retained significantly more knowledge.

CovidCampus: Game Design

Pick an avatar

We did not want players to spend much time creating an avatar; nonetheless, giving users a choice of avatars could affect engagement. Avatar personalization is known to have positive effects. Using scanned 3D avatars, Waltemate et al. (2018) found that personalized avatars significantly increased virtual body ownership and sense of presence compared to more generic avatars. The decision was made to give users a simple choice of male or female with either lighter or darker skin. See Figure 3.

The daily decisions

Eight decisions were made chronologically throughout the day: whether to wear a mask in the morning (this was before it was mandatory on campus), how to get to class, how to get upstairs to class, whether to enter a crowded class, how to get lunch, how to work out, whether to go to a party, and finally, what to do when your roommate asks for privacy (forcing the player out into the world).

Example of Classroom Choice

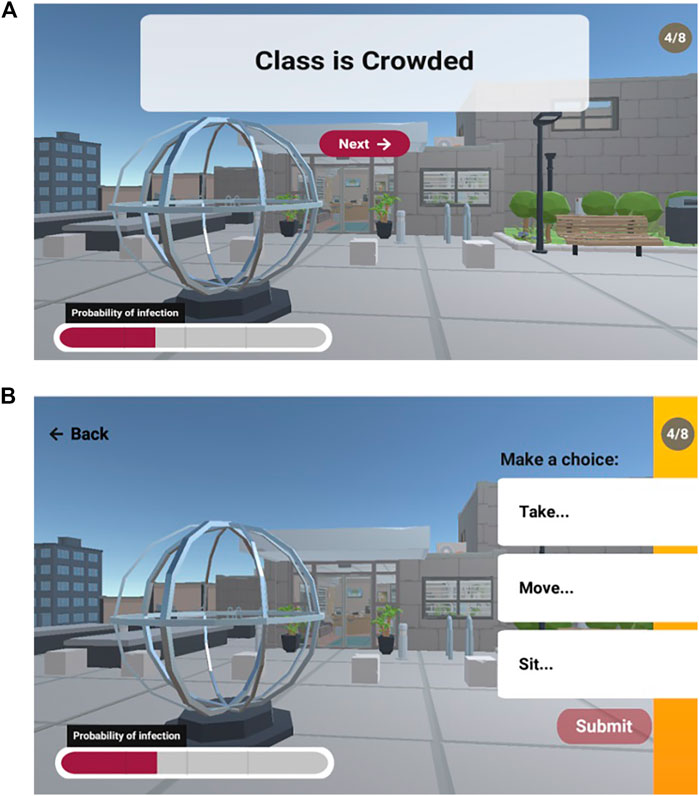

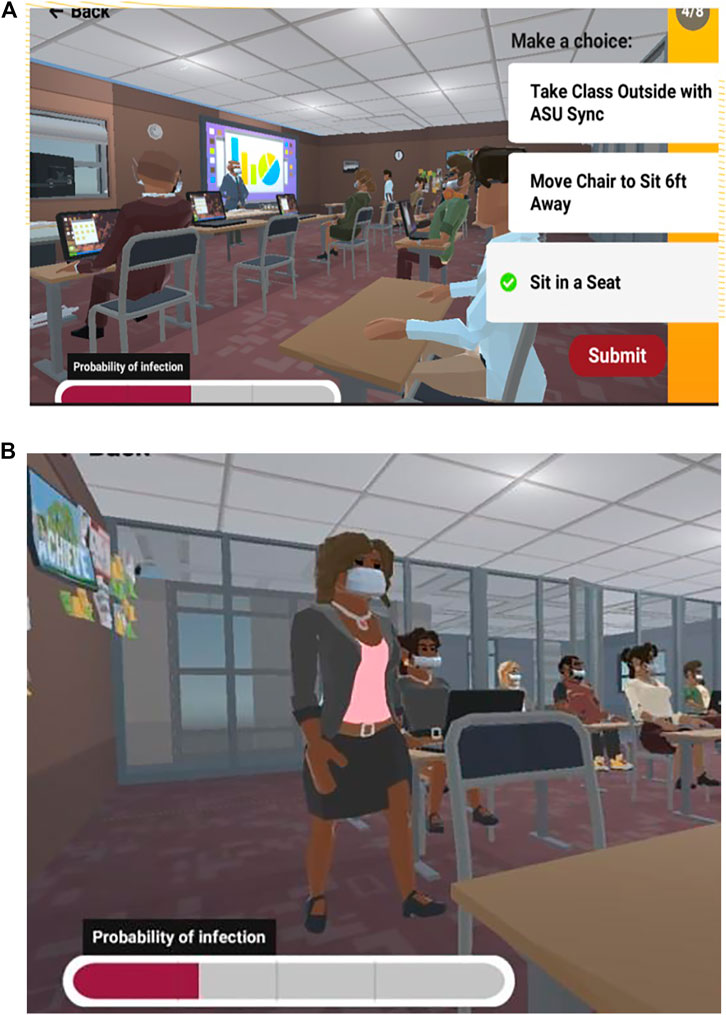

The eight decisions had either three or four choices associated with them. Figure 4 shows an example of the fourth decision which was “entering a crowded classroom”.

Panel B illustrates that only the first word or verb of the action phrase is shown. This forces the user to click on the tab and see what the full action looks like. When the white tab is clicked all text and a new full image are revealed. Playtesting revealed that if all the text were present in the white tabs, then players would simply read and not click on and open the images. Figure 5 shows a player who opted to “Sit in a Seat” in the crowded classroom. After the choice is submitted, the probability of infection bar will increase appropriately.

FIGURE 5. Panel A text for choice and full image, panel B shows the action taken with probability bar.

Probability bar

Initially the probability bar was set at 2% for every player; this was based on information we had about students in the college-based Tempe, AZ zip code in July of 2020. We purposefully did not include exact numbers on the fillable bar. Increases came in three sizes: small (1-2%), moderate (3-5%), or high (10-11%). The team agreed on the rates of increase. In the example above, taking the class outside on a laptop raised the bar 1%, moving the chair in the class raised it 5%, and sitting in the crowded classroom, without moving the chair, raised it 10%. The bar was constrained to not go above 87%. After a choice was made, a short message appeared with text-based feedback about whether the decision was optimal, and why.
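The bar logic described above can be sketched as a simple capped accumulator. This is a minimal illustration rather than the Unity implementation: the function and constant names are hypothetical, and it assumes each increase is added as percentage points to the running total.

```python
BAR_START = 2   # starting value for every player, in percent (from the text)
BAR_CAP = 87    # the bar was constrained not to exceed this value (from the text)


def apply_choice(bar, increase):
    """Add one decision's increase to the bar, respecting the cap."""
    return min(bar + increase, BAR_CAP)


def play_day(increases):
    """Return the final bar value after a sequence of decisions."""
    bar = BAR_START
    for increase in increases:
        bar = apply_choice(bar, increase)
    return bar


# Increases for the classroom decision, as reported in the text.
CLASSROOM = {"take class outside on laptop": 1,
             "move chair": 5,
             "sit in crowded classroom": 10}
```

Under these assumptions, a player who makes the highest-risk choice (11 points) at all eight decisions would reach 2 + 88 = 90 and be held at the 87% cap, while a player who always takes a 1-point option would end the day at 10%.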

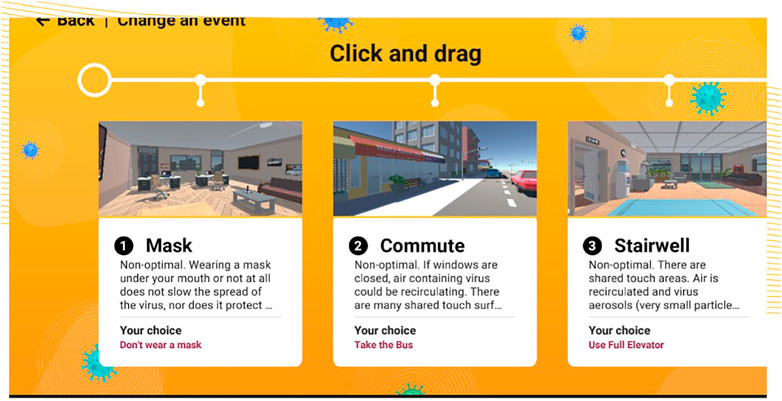

The intent of the fillable probability bar was to reify for players how important each individual decision was. That is, decisions affect their own health risk levels (individual), but these decisions can also have ramifications for the risk levels of those around them (group health). After the eighth and final choice, players saw the “Change an Event” page. Here, they had the choice of going back and changing either the entire day, or just one decision event, and seeing how that affected their probability bar. See Figure 6.

FIGURE 6. The “Change an Event” click and drag line that showed up after the eighth and final decision.

Methods

Recruitment

The semester at our large Southwestern university started on August 20, 2020. Students were instructed to take a mandatory Web-based Covid Safety Instruction Course during the semester; only a few modules needed to be completed. Within the course, there were multiple modules and our CovidCampus game was one of them. The game officially launched on September 21, 2020, so the first month of potential sign-ons was missed. Because sign-ons began to slow by early October, we pulled the data for analysis on October 6, 2020.

To incentivize players to engage and take the survey, a gift card was offered. Players were asked if they wanted to opt into a pre- and post-survey; if so, they would have a 1 in 20 chance of winning a $20 eGift card. (The survey was removed at the end of 2020; opt-ins had slowed to about one per week after mid-October.) All Human Subject and IRB protocols were followed.

Rollout

From September 21, 2020 to October 6, 2020, 113 people completed the game. Of those, 99 users took the survey; thus 88% of game-completers took the survey. Several students reported in the early days that they thought the game was “broken” because it did not load on their mobile phones. This is an important lesson to learn when rolling out games/sims at the college level. Students assume everything is mobile, but we wanted to avoid having them download an app and work with very small text. So, the first version was browser-based and optimized only for laptop/desktop viewing. We quickly added a large warning beside the game icon that noted it was not mobile. To keep players engaged, we wanted to insert only a minimum of pre-play survey questions. Thus, this study did not gather any substantial demographic data. We do know that 98% of the emails given to redeem the gift card were from the university (asu.edu).

Results

Participants

All participants reported they were 18 years old or older. While 99 participants took the survey, one user was excluded from the analyses for giving non-serious answers (e.g., asked what they would do if their class was crowded, the user answered, “I would cough on everyone.”). Two players spent less than one minute in the game and were also excluded, leaving 96 participants in the analyses.

Time on task

Time in the game ranged from 72 to 292 seconds (players who spent less than 60 seconds in the game were not included because they would not have had time to read and consider choices).

Repeat players

Of the survey users, seven went through the game more than one time. Of those, four went through the game four times; the system stopped tallying after four times. Thus, seven out of 99 (7%) players took advantage of the ability to change answers and observe different outcomes. The survey was administered only one time, and that was after the last playthrough.

The section below begins with the pre-game questions. The results for questions 1 and 2 regarding party comfort and confidence are reported with post-game results.

Pre-Game Survey Questions

1. Party Comfort

If you were invited to a birthday party, what degree of comfort would you feel about attending? Anchors: 1 = none to 5 = very high degree.

2. Party Confidence

What degree of confidence do you have asking the host of a birthday party questions that you feel are important to your safety? Anchors: 1 = none to 5 = very high degree.

3. Open-ended Question on Crowded Class

“If you entered a crowded classroom with students sitting too close together, what would you do?”

In the pre-survey, the most common response was to enter the class but move the chair, 56/96 = 58%. Other answers included [typos maintained]: “I would talk to the teacher in private and let them know that i felt uncomfortable”. Another said, “Stand akwardly in the back. Wonder why the school isn't succesfully implementing proper safety strategies. Send a tik tok to friends with a witty commentary. If my presence is optional I would leave. Attend from home in the future”.

Ten said they would “leave the room” immediately, 10/96 = 10%.

4. Daily Actions

“Click on the actions you generally do throughout the day to avoid the virus”:

•Stay at home (when an option)

•Wear mask inside

•Wear mask inside and outside

•Wear a garlic necklace

•Try to avoid public transportation

•Only distance with strangers

•Distance with everyone

•Wash hands/use sanitizer frequently

The fourth option, the garlic necklace, was included as an attention check to make certain users were paying attention. Only one person selected it at pretest. On average, users chose five (mode = 5) of the seven appropriate options.

5. Ranking

Question number 5 asked users to rank order safe eating choices. Because everyone answered this question correctly and scores were at ceiling, we realized it was not a “sensitive” item and we excluded the question from further analyses. However, it was good to know that users understood the ordering from safest to riskiest: 1) prepping food at home > 2) getting it delivered > 3) eating outside at a restaurant > 4) eating inside at a restaurant.

Post Survey Questions

Below are results on the post-play questions, with paired t tests against the pretest where appropriate. All tests are two-tailed with alpha set to .05. Analyses were performed in SPSS 26. Standard deviations are in parentheses.

6. Engage

“Did you find this simulation engaging?” The choices were:

The engage Mean was 3.82 (1.06), which is moderately high and was significantly different from the value of 1.0 (not at all), one sample t (95) = 16.91, p < .001.

7. Change Behavior

“Is this simulation likely to change your behavior?” The choices were:

The change behavior Mean was 3.25 (1.20). Only 31% said not at all or not really; the rest said a medium amount or higher. The self-reported Mean change lay between “a medium amount” and “yes somewhat”. This is significantly different from the value of 1.0, which signified “not at all”, one sample t (95) = 18.29, p < .001.
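As a rough check on how a one-sample t statistic of this kind is formed, the sketch below computes it from summary statistics alone. The function name is hypothetical, and because the reported Mean and standard deviation are rounded to two decimals, the result only approximates the value SPSS computed from the raw data.

```python
import math


def one_sample_t(mean, sd, n, mu0):
    """Return (t, degrees of freedom) for a one-sample t test.

    mean, sd : sample mean and sample standard deviation
    n        : number of participants
    mu0      : the comparison value (here 1.0, "not at all")
    """
    standard_error = sd / math.sqrt(n)
    t = (mean - mu0) / standard_error
    return t, n - 1


# Change-behavior item: Mean = 3.25, SD = 1.20, n = 96, tested against 1.0.
t, df = one_sample_t(3.25, 1.20, 96, 1.0)
```

With these rounded inputs, `t` comes out at about 18.4 with 95 degrees of freedom, close to the reported 18.29.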

8. Open-ended Behavior Example

“Give an example of a specific behavior that you might do differently after this simulation?” Below are some examples of responses [typos remain].

•Think different about ride sharing

•Hard to say, not a socail person myself at start. probably will decrease the time for shopping in Walmart later.

•do not do excerise at home

•Not walk into classrooms.

•Working out inside versus outside

•I will try my best to be outdoors more to exercise and make more food at home.

•I will not go to the gym

•wearing a mask around family

•I would think twice about using public transportation.

•Join parties virtually

•Something I would do differently is maybe consider not attending small parties unless family related.

•I will definitely rethink where I exercise. I would rather exercise alone, so it was interesting to see that working out outside was best.

•Commuting to class, I will probably walk now

Behavioral example, change in percentages:

- Percentage who answered they would stop an action (“I will not use rideshare”; “I get away from gym”): 24/96 = 25%

- Percentage who answered they would start an action (“Wear a mask around my family”): 60/96 = 63%

- Percentage who would not change their actions (“I already do all the right things”): 12/96 = 12%.

9. Pre and Post Party Comfort About Going

“Now, if you were invited to a birthday party, what degree of comfort would you feel about going?” Anchors: 1 = none, 5 = very high degree.

At pretest the comfort Mean was 2.38 (1.05); at posttest the Mean was 2.19 (1.06). The decrease in comfort post-play was statistically marginal and did not reach the .05 alpha level. Players felt marginally less comfortable about attending a party after seeing the transmission bar and going through the full game, paired t = 1.92, p < .057.

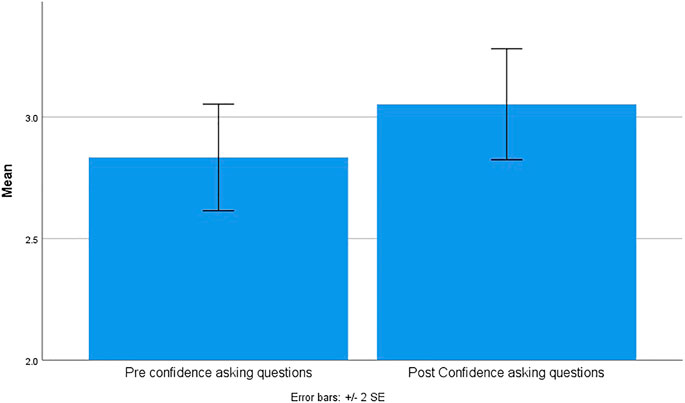

10. Pre and Post Party Confidence Asking Questions

“Now, what degree of confidence do you have asking the host of a birthday party questions that you feel are important to your safety?”

The pretest confidence Mean was 3.83 (1.07); at posttest, confidence was significantly higher, Mean = 4.05 (1.11), paired t (95) = 2.56, p < .012. Figure 7 shows how going through the game increased players’ confidence in asking safety-related questions.

FIGURE 7. Change in confidence about asking safety related questions, higher after going through the game.
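The paired t tests used for the pre/post comparisons can be sketched as follows. The analyses in this study were run in SPSS; this function is only an illustration of the computation, its name is hypothetical, and it expects each participant's pretest and posttest ratings in matching order.

```python
import math
from statistics import mean, stdev


def paired_t(pre, post):
    """Return (t, degrees of freedom) for a paired-samples t test.

    pre, post : equal-length sequences of ratings, one pair per participant
    """
    if len(pre) != len(post):
        raise ValueError("pre and post must contain the same participants")
    diffs = [after - before for before, after in zip(pre, post)]
    n = len(diffs)
    standard_error = stdev(diffs) / math.sqrt(n)
    if standard_error == 0:
        raise ValueError("all differences are identical; t is undefined")
    return mean(diffs) / standard_error, n - 1
```

A positive t indicates that ratings rose from pretest to posttest, as with the confidence item; the p value would then be read from a t distribution with n - 1 degrees of freedom.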

11. Open-ended Post Crowded Classroom

“Now, if you entered a crowded classroom with students sitting too close together, what would you do?”

After playing the game, the most common answer no longer centered on arranging chairs or spacing (recall, that was 58% in the pretest); the most common answer was now a variation on taking the course online or virtually, 64/96 = 67%. The next most common answer related to re-arranging seating, 25/96 = 26%, and the “other” category included seven who wrote responses like “wear a mask” or “report the instructor”, 7/96 = 7%.

12. Post Daily Actions

“Click on the actions you generally do throughout the day to avoid the virus”: Same options as in item 4 at pretest. The mode was still five items.

- Percentage who chose the same options to avoid the virus (remained with same number): 71/96 = 74%

- Percentage who chose more options to avoid the virus: 19/96 = 20%

- Percentage who chose fewer options to avoid the virus: 6/96 = 6%.
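The percentages above follow directly from the counts over the 96 analyzed participants. A small sketch of that arithmetic (the function name and category labels are illustrative):

```python
def percent_breakdown(counts):
    """Convert category counts into whole-number percentages of the total."""
    total = sum(counts.values())
    return {label: round(100 * count / total)
            for label, count in counts.items()}


# Counts reported for the post-play daily actions item.
daily_actions = {"same": 71, "more": 19, "fewer": 6}
breakdown = percent_breakdown(daily_actions)
```

Here `breakdown` gives 74, 20, and 6 percent for the same, more, and fewer categories respectively.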

13. Bar Change

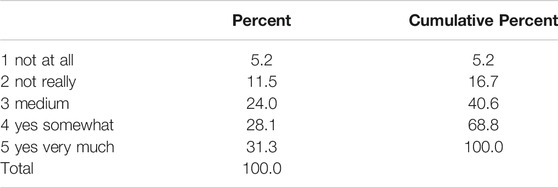

“Did seeing the probability of infection bar increase over time make you think about how you might also infect others?” The options were:

Table 1 reports the cumulative probabilities.

The post-play Mean was 3.69 (1.18), Median = 4, Mode = 5. Using the Mean, there was a significant difference from 1.0, which meant “not at all”, one sample t test, t (95) = 22.03, p < .001. Only 17% responded in the negative. The largest group, almost 1/3 of participants, responded with “yes, very much”.

14. Open-ended Comments on Game

“Any comments, or new decisions you think we should add to the game?”

Some of the more substantive ideas are listed in Appendix A. Below are several intriguing ones [typos maintained].

•As many of ASU's infected population live off-campus, I would put a few questions specifically targeted at this population. Perhaps something concerning going to work or the grocery store.

•working with people who refuse to wear a mask.

•I think you should have a statistics bar for how many people you can spread COVID-19 to and what risk you are if everyone followed the rules that you did.

•Nope. It was perfect!

•Maybe add something about how to shop safely (esp grocery shopping)

•try to make it funny

•add in character customization

•Shaking hands

Discussion

The comradery experienced while creating and iterating on the game design is almost intangible; it is difficult to capture and report in data, but we can state that the work of creating the game was crucial in helping all members of the team feel constructive and agentic during the unsteady summer months of 2020. We are also pleased that several significant changes were revealed in immediate post-play results. We observed several positive effects from playing CovidCampus, which takes place in an academic setting. These included an increase in confidence in asking relevant safety questions and multiple self-reported behavioral changes. A mini-game where players can make and change multiple decisions appears to be an effective method for disseminating information about how to stay safer during a pandemic. Key results are highlighted below.

Changing behavior

When asked if the game-like simulation might change the players’ behavior, the self-reported average was between “a medium amount” and “yes somewhat”. A full 69% reported that it was likely to change their behavior by a medium amount or more, and of those, 20% reported “a lot”. The post-play mean was statistically significantly different from the answer coded with a 1, “not at all”. But we cannot assume that the intervals are all equal in such a Likert scale, and this is why players were also asked to list some behaviors they would change. Several said “nothing”, but the majority listed multiple behaviors. These included an interesting mixture of riskier behaviors being stopped (“stop going to gym”, “not eat in restaurants”, “see friends less often”) and safer behaviors being started (“eating at home more”, “logging onto events virtually more often”).

Confidence

The confidence question was rewritten many times to make it more comprehensible. It may still read awkwardly, but the goal was to keep it the length of one sentence. For the posttest version, the word now was included, “Now, what degree of confidence do you have asking the host of a birthday party questions that you feel are important to your safety?”

At posttest, confidence was significantly higher than at pretest. The number “4” represented a “high” degree of confidence. On average, players’ confidence rose significantly post-play. This suggests that knowledge gained from the game helped them better appreciate and understand the types of safety-related questions they should be asking before committing to going to a gathering. Players may feel more agentic and knowledgeable after having read some of the game’s feedback, and after seeing how the transmissibility bar kept increasing throughout the day.

Changes in entering crowded classrooms

Before playing the game, the most common answer when confronted with a crowded, poorly spaced classroom centered on rearranging the chairs or distancing (58%). After playing the game, the most common answer was a variation on taking the course online/virtually (67%). The next most common answer related to re-arranging seating (26%, a decrease of 32 percentage points). This suggests that playing through the game may have altered players’ future behaviors. The simulation allowed them to understand that taking a course online (in these pandemic times) is the safer choice. Players may now feel more agentic/in control and not feel so forced, or compelled, to sit in a crowded room. It would be interesting to develop an agency assessment tool and include that in a next version (perhaps similar to the one created by Svihla et al. (2020) that measures framing agency in STEM students working in groups).

Feedback and the probability bar

When asked, “Did seeing the probability of infection bar increase over time make you think about how you might also infect others?”, the majority said yes, seeing the bar change made them think about infecting others. It would have been good to interview a subset of players. For now, we speculate that seeing the simple data visualization of the filling bar helped players to understand how many small decisions can add up in probability as the day wears on. It appears that receiving the real-time visual feedback had an effect. This is in line with the importance of feedback during encoding and learning (Shute, 2008), and the literature supporting that tailored, real-time feedback is key when behavior change is a game’s purpose (Gamberini et al., 2012). We acknowledge, however, that there may be a social desirability bias problem with this question, because answering in the negative implies you also may not think or care about others.

Replay

We had expected that a larger percentage would go through the game multiple times, comparing how each decision affected the probability bar, but only 7% opted to play again. Recall that of the 99 who took the survey, only four players played the game four or more times; we stopped tallying after four repeats. We agree with one player’s comment that we could have made the game more engaging by adding audio and more animations of the characters.

Future

In future versions, we plan to add ambient environmental sounds. A player’s suggestion to include a “dashboard” showing others’ decisions was also a good one. But, of course, that would need some monitoring because there will always be users who play to lose or try to break the system. It would be important to follow up with players weeks or months later, and assess whether they have maintained some of the safety behaviors they wrote they would implement.

There is interest in turning this game into an immersive VR experience. That is why the low polygon avatars were chosen, so that the game could be processed more quickly on an open VR platform (e.g., WebXR). While we suspect that may make the game more engaging, there will be less uptake when deployed to non-ubiquitous, and still expensive, platforms like VR headsets.

Conclusions

In sum, we have created an engaging and effective game-like simulation for disseminating information about how to stay safer during the Covid-19 pandemic. This game was also able to address two of the concerns from the recent Sharifzadeh et al. (2020) educational health games scoping study. The first was the inclusion of multidisciplinary teams. They strongly recommend this for health games, yet only 42% (68/161) of their reviewed games either explicitly mentioned the use of such teams or implicitly mentioned the involvement of experts, such as instructional, clinical, and User Experience (UX) designers, during game development. The second concern was the impact of health topics such as safety and nutrition on population-level outcomes. Sharifzadeh et al. state in the discussion section, “The future of educational health games may entail a larger coverage of the general healthy population rather than patients with specific diseases. An interesting trend…is the gradual move from developing disease-specific serious educational games (e.g., diabetes) to targeting broader public health topics (e.g., safety and nutrition)”. The CovidCampus experience was designed to address a broad public health topic and help college students understand how their individual decisions can affect their probability of infection throughout a day on campus. Playing the game led to increased confidence in asking safety questions, and it altered reported behaviors in the majority of players. Post-play, players listed new actions they would take for increased safety, and they were more agentic in their choices and descriptions. These sorts of interactive games and simulations can be effective in sharing health messages, and potentially in changing people’s health behaviors.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by the Arizona State University Institutional Review Board. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

MCJG was lead game designer, ran the study, analyzed results, and wrote the manuscript. MJ served as epidemiological expert and co-edited the manuscript. CC designed and coded the AR versions. DB co-designed the game. RNZ co-designed the game and created animations. XA co-designed the game and served as project manager and lead developer. JR co-created the AR versions. HHT co-designed play and graphics. AK co-created the survey and ran all of the backend. HB was graphics lead and co-edited the manuscript.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

Many thanks to Leopoldo Cortes, Dr. Tim Lant, Vanessa Ly, Dennis Bonilla, Dr. Sharon Hsiao, Andrew Carnes, Mehmet Kosa, Dr. Robert LiKamWa and MeteorStudio at ASU, The Learning Futures Collaboratory, and Auryan Ratliff.

Appendix

Further examples of some students’ ideas to make CovidCampus v.1 better:

•It would be interesting to see an outdoor activity like swimming or playing a game. I think people assume it's safe if it's outside.

•Maybe more scenarios

•It might be interesting to see a dashboard of the responses of others.

•It was hard to tell what the full situation was before making certain decisions in the game (for example "exercise in your dorm" didn't specify whether you had a roommate or not, which obviously affects the risk level).

•Something with sororities or frats

•going shopping in mall versus online

•The use of some auditory features may help the game to be more engaging.

•long lasting symptoms of covid

•Option for bikes

•Add elderly people

•Some people have to take public transit. Perhaps questions on how to use it more safely would be good.

References

Connolly, T. M., Boyle, E. A., MacArthur, E., Hainey, T., and Boyle, J. M. (2012). A Systematic Literature Review of Empirical Evidence on Computer Games and Serious Games. Comput. Educ. 59 (2), 661–686. doi:10.1016/j.compedu.2012.03.004

Gamberini, L., Spagnolli, A., Corradi, N., Jacucci, G., Tusa, G., Mikkola, T., et al. (2012). Tailoring Feedback to Users’ Actions in a Persuasive Game for Household Electricity Conservation. Berlin, Germany: Paper presented at the International Conference on Persuasive Technology.

Graafland, M., Bemelman, W. A., and Schijven, M. P. (2017). Game-based Training Improves the Surgeon's Situational Awareness in the Operation Room: a Randomized Controlled Trial. Surg. Endosc. 31 (10), 4093–4101. doi:10.1007/s00464-017-5456-6

Hevner, A. R., March, S. T., Park, J., and Ram, S. (2004). Design Science in Information Systems Research. MIS Q. 28 (1), 75–105. doi:10.2307/25148625

Hu, H., Xiao, Y., and Li, H. (2021). The Effectiveness of a Serious Game versus Online Lectures for Improving Medical Students' Coronavirus Disease 2019 Knowledge. Games Health J. 10, 139–144. doi:10.1089/g4h.2020.0140

Johnson-Glenberg, M. C., Birchfield, D. A., Tolentino, L., and Koziupa, T. (2014a). Collaborative Embodied Learning in Mixed Reality Motion-Capture Environments: Two Science Studies. J. Educ. Psychol. 106 (1), 86–104. doi:10.1037/a0034008

Johnson-Glenberg, M. C. (2018). Immersive VR and Education: Embodied Design Principles that Include Gesture and Hand Controls. Front. Robot. AI 5 (81), 5. doi:10.3389/frobt.2018.00081

Johnson-Glenberg, M. C., and Megowan-Romanowicz, C. (2017). Embodied Science and Mixed Reality: How Gesture and Motion Capture Affect Physics Education. Cogn. Res. 2 (24). doi:10.1186/s41235-017-0060-9

Johnson-Glenberg, M. C., Savio-Ramos, C., and Henry, H. (2014b). “Alien Health”: A Nutrition Instruction Exergame Using the Kinect Sensor. Games Health J. 3 (4), 241–251. doi:10.1089/g4h.2013.0094

Johnson-Glenberg, M. C., Su, M., Bartolomeo, H., Ly, V., Nieland Zavala, R., and Kalina, E. (2020). Embodied Agentic STEM Education: Effects of 3D VR Compared to 2D PC. Paper presented at the Immersive Learning Research Network 2020 Virtual Conference, Virbela Platform. doi:10.23919/ilrn47897.2020.9155155

Johnson-Glenberg, M. C. (2019). “The Necessary Nine: Design Principles for Embodied VR and Active Stem Education,” in Learning in a Digital World: Perspective on Interactive Technologies for Formal and Informal Education. Editors P. Díaz, A. Ioannou, K. K. Bhagat, and J. M. Spector (Singapore: Springer Singapore), 83–112. doi:10.1007/978-981-13-8265-9_5

Malone, T. W., and Lepper, M. R. (1987). Making Learning Fun: A Taxonomy of Intrinsic Motivations for Learning. Aptitude, Learn. instruction 3, 223–253.

Papastergiou, M. (2009). Exploring the Potential of Computer and Video Games for Health and Physical Education: A Literature Review. Comput. Educ. 53 (3), 603–622. doi:10.1016/j.compedu.2009.04.001

Sharifzadeh, N., Kharrazi, H., Nazari, E., Tabesh, H., Edalati Khodabandeh, M., Heidari, S., et al. (2020). Health Education Serious Games Targeting Health Care Providers, Patients, and Public Health Users: Scoping Review. JMIR Serious Games 8 (1), e13459. doi:10.2196/13459

Shogren, K. A., Little, T. D., and Wehmeyer, M. L. (2017). Human Agentic Theories and the Development of Self-Determination. Dordrecht, Netherlands: Springer.

Shute, V. J. (2008). Focus on Formative Feedback. Rev. Educ. Res. 78 (1), 153–189. doi:10.3102/0034654307313795

Svihla, V., Gallup, A., and Kang, S. P. (2020). Development and Insights from the Measure of Framing Agency. Paper presented at the ASEE Virtual Annual Conference, June 22, 2020. doi:10.18260/1-2--34442

Keywords: Covid-19 education, Simulations, serious games, Augmented Reality (AR), XR, interactive STEM education, public service games

Citation: Johnson-Glenberg MC, Jehn M, Chung C-Y, Balanzat D, Nieland Zavala R, Apostol X, Rayan J, Taylor H, Kapadia A and Bartolomea H (2021) Interactive CovidCampus Simulation Game: Genesis, Design, and Outcomes. Front. Commun. 6:657756. doi: 10.3389/fcomm.2021.657756

Received: 11 February 2021; Accepted: 23 April 2021;

Published: 13 May 2021.

Edited by:

Seow Ting Lee, University of Colorado Boulder, United States

Reviewed by:

Isaac Nahon-Serfaty, University of Ottawa, Canada

Douglas Ashwell, Massey University Business School, New Zealand

Copyright © 2021 Johnson-Glenberg, Jehn, Chung, Balanzat, Nieland Zavala, Apostol, Rayan, Taylor, Kapadia and Bartolomea. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Mina C. Johnson-Glenberg, mina.johnson@asu.edu
