ORIGINAL RESEARCH article

Front. Commun., 06 September 2019

Sec. Organizational Communication

Volume 4 - 2019 | https://doi.org/10.3389/fcomm.2019.00050

This article is part of the Research Topic The Evolution and Maturation of Teams in Organizations: Theories, Methodologies, Discoveries & Interventions

The focus of this research is 2-fold: (1) to understand how team interaction in human-autonomy teams (HATs) evolves in the Remotely Piloted Aircraft Systems (RPAS) task context, and (2) to understand how HATs respond to three types of failures (automation, autonomy, and cyber-attack) over time. We summarize the findings from three of our recent experiments regarding team interaction within HATs over time in the dynamic context of RPAS. For the first and second experiments, we summarize general findings related to team member interaction of a three-member team over time, by comparison of HATs with all-human teams. In the third experiment, which extends beyond the first two experiments, we investigate HAT evolution when HATs are faced with three types of failures during the task. For all three of these experiments, measures focus on team interactions and temporal dynamics consistent with the theory of interactive team cognition. We applied Joint Recurrence Quantification Analysis to communication flow in the three experiments. One of the most interesting and significant findings from our experiments regarding team evolution is the idea of entrainment: one team member (the pilot in our study, either agent or human) can change the communication behaviors of the other teammates over time, including coordination, and affect team performance. In the first and second studies, behavioral passiveness of the synthetic teams resulted in very stable and rigid coordination in comparison to the all-human teams, which were less stable. Experimenter teams demonstrated metastable coordination (neither rigid nor unstable) and performed better than rigid and unstable teams during the dynamic task. In the third experiment, metastable behavior helped teams overcome all three types of failures. These summarized findings address three potential future needs for ensuring effective HAT: (1) training of autonomous agents on the principles of teamwork, specifically understanding tasks and roles of teammates, (2) human-centered machine learning design of the synthetic agent so the agents can better understand human behavior and ultimately human needs, and (3) training of human members to communicate and coordinate with agents due to current limitations of Natural Language Processing of the agents.

In general, teamwork can be defined as the interaction of two or more heterogeneous and interdependent team members working on a common goal or task (Salas et al., 1992). When team members interact dynamically with each other and with their technological assets to complete a common goal, they act as a dynamical system. Therefore, an essential part of a successful team is the ability of its members to effectively coordinate their behaviors over time. In the past, teamwork has been investigated for all-human teams by considering team interactions (i.e., communication and coordination) to understand team cognition (Cooke et al., 2013) and team situation awareness (Gorman et al., 2005, 2006). Presently, advancements in machine learning in the development of autonomous agents are allowing agents to interact more effectively with humans (Dautenhahn, 2007), to make intelligent decisions, and to adapt to their task context over time (Cox, 2013). Therefore, autonomous agents are increasingly considered team members, rather than tools or assets (Fiore and Wiltshire, 2016; McNeese et al., 2018), and this has generated research in team science on Human-Autonomy Teams (HATs).

In this paper, we summarize findings from three of our recent experiments regarding team interaction within HATs over time in the dynamic context of a Remotely Piloted Aircraft System (RPAS). In the first and the second experiments, we summarize general findings related to the interaction of a three-member team over time, by comparison of HATs with all-human teams. In the third experiment, which extends beyond the first two experiments, we investigate HAT evolution when HATs are faced with a series of unexpected events (i.e., roadblocks) during the task: automation and autonomy failures and malicious cyber-attacks. For all three of these experiments, measures focus on team interactions (i.e., communication and coordination) and temporal dynamics consistent with the theory of interactive team cognition (Cooke et al., 2013). Therefore, the goal of the current paper is to understand how team interaction in HATs develops over time, across routine and novel conditions, and how this team interaction relates to team effectiveness.

We begin by describing HATs as sociotechnical systems and identify the challenges in capturing this dynamical complexity. Next, we introduce the RPAS synthetic task environment, and three RPAS studies conducted in this environment. Then, we summarize the findings from HATs and compare this evolution to that of all-human teams.

A HAT consists of a minimum of one person and one autonomous agent “coordinating and collaborating interdependently over time in order to successfully complete a task” (McNeese et al., 2018). In this case, an autonomous team member is considered to be capable of working alongside human team member(s) by interacting with other team members (Schooley et al., 1993; Krogmann, 1999; Endsley, 2015), making its own decision about its actions during the task, and carrying out taskwork and teamwork (McNeese et al., 2018). In team literature, it is clear that autonomous agents have grown more common in different contexts, e.g., software (Ball et al., 2010) and robotics (Cox, 2013; Goodrich and Yi, 2013; Chen and Barnes, 2014; Bartlett and Cooke, 2015; Zhang et al., 2015; Demir et al., 2018c). However, considering an autonomous agent as a teammate is challenging (Klein et al., 2004) and requires effective teamwork functions (McNeese et al., 2018): understanding its own task, being aware of others' tasks (Salas et al., 2005), and effective interaction (namely communication and coordination) with other teammates (Gorman et al., 2010; Cooke et al., 2013). Especially in dynamic task environments, team interaction plays an important role in teamwork and it requires some amount of pushing and pulling of information in a timely manner. However, the central issue to be addressed is more complex than just pushing and pulling information; time is also a factor. This behavioral complexity in dynamic task environments can be better understood from a dynamical systems perspective (Haken, 2003; Thelen and Smith, 2007).

Robotics science (Bristol, 2008) posits that complex behavior of an autonomous agent does not necessarily require complex internal mechanisms in order to interact in the environment over time (Barrett, 2015). That is, the behavioral flexibility of a simple autonomous agent is contingent on the mechanics and wiring of its sensors rather than its brain or other components (for an example see Braitenberg and Arbib, 1984). However, producing complex behaviors depends on more than hardware, specifically on the agent's interaction with the environment to which it is subject. The behavioral complexity of an autonomous agent is therefore greater than its individual parts would suggest. This complexity is a real challenge for robotics and cognitive scientists seeking to understand autonomous agents and their dynamic interactions with both humans and the agent's environment (Klein et al., 2004; Fiore and Wiltshire, 2016). Humans have a similar dynamical complexity, as summarized by Simon (1969), who stated, “viewed as behaving systems, [humans] are quite simple. The apparent complexity of our behavior over time is largely a reflection of the complexity of the environment in which we find ourselves” (p. 53).

In order to better understand the complexity of autonomous agents and their interactions with humans in their task environment, we can consider the interactions as happening within a dynamical system where an agent synchronizes with human team members in a dynamic task environment. In this case, a dynamical system is a system which demonstrates a continuous state-dependent change (i.e., hysteresis: future state causally depends on the current state of the system). Thus, interactions are considered a state of the system as a whole rather than of the individual components. A dynamical system can behave in many different ways over time, moving around within a multidimensional “state space.” Dynamical systems may favor a particular region of the state space (i.e., move into a reliable pattern of behavior) and, in such cases, the system is considered to have transitioned to an “attractor state.” When the system moves beyond this state, it generally reverts to it in the future. As it develops experience, the system becomes more resilient (i.e., the attractor states get stronger) and better able to adapt to dynamic, unexpected changes in the task environment. However, if given a strong enough perturbation from the environment's external forces, the system may move into new patterns of behavior (Kelso, 1997; Demir et al., 2018a).

With that in mind, HAT is a sociotechnical system in which behaviors emerge via interactions between interdependent autonomous and human team members over time. These emerging behaviors are an example of entrainment, the effect of time on team behavioral processes, and in turn team performance (McGrath, 1990). Replacing one human team role with an autonomous agent can change the behavior of other teammates and affect team performance over time. In the sociotechnical system, human and autonomous team members must synchronize and rhythmize their roles with the other team members to achieve a team task over time. In order to do so, it is necessary for the team to develop an emergent complexity which is resilient, adaptable, and includes fault-tolerant systems-level behavior in response to the dynamic task environment (Amazeen, 2018; Demir et al., 2018a).

Adaptive complex behavior of a team (as a sociotechnical system) is considered within the realm of dynamical systems (either linear or non-linear), and dynamical changes in the sociotechnical system's behavior can be measured via Non-linear Dynamical Systems (NDS) methods. One commonly used NDS method in team research is Recurrence Plots (RPs) and their extension, Recurrence Quantification Analysis (RQA; Eckmann et al., 1987). The bivariate extension of RQA is Cross RQA and the multivariate extension is Joint RQA (JRQA; Marwan et al., 2002; Coco and Dale, 2014; Webber and Marwan, 2014). In general, RPs visualize the behavior trajectories of dynamical systems in phase space, and RQA quantifies how often, and for how long, the phase space trajectory of a dynamical system revisits its previous states. The experimental design of the RPAS team is conceptually in line with JRQA and it is thus the method used for HAT research in this exploratory paper.

The synthetic teammate project (Ball et al., 2010) is a longitudinal project which aims to replace a ground station team member with a fully-fledged autonomous agent. From a methodological perspective, all three of the experiments were conducted in the context of CERTT RPAS-STE (Cognitive Engineering Research on Team Tasks RPAS-Synthetic Task Environment; Cooke and Shope, 2004, 2005). CERTT RPAS-STE has various features and provides new hardware infrastructure to support this study: (1) text chat capability for communications between the human and synthetic participants, and (2) new hardware consoles for three team members and two consoles for two experimenters who oversee the simulation, inject roadblocks, make observations, and code the observations.

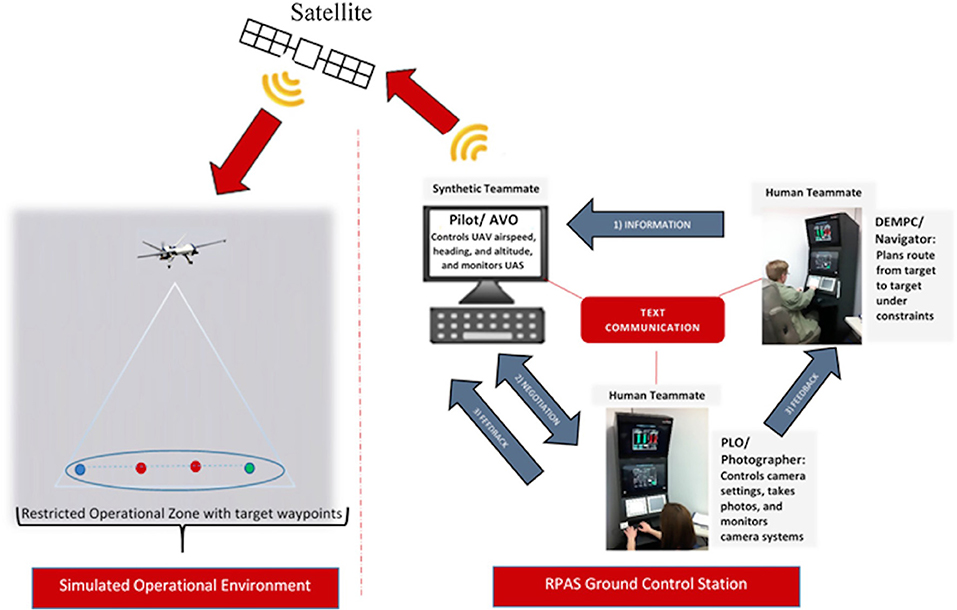

The RPAS-STE task requires three different, interdependent teammates working together to take good photos of the targets (see Figure 1): (1) the navigator provides the flight plan to the synthetic pilot (called Information) and navigates it to each waypoint, (2) the pilot controls the Remotely Piloted Aircraft (RPA) and adjusts altitude and airspeed based on the photographer's requests (called Negotiation), and (3) the photographer photographs the target waypoints, adjusts the camera settings, and also shares information relating to photo quality—i.e., whether or not the photo was “good”—to the other two team members (called Feedback). Taking good photographs of designated target waypoints is the main goal for all the teams, and it requires timely and effective information sharing among teammates. The photographer determines if a photo is good based on the photograph folder which shows examples of good photographs (in regard to camera settings, i.e., camera type, shutter speed, focus, aperture, and zoom). This timely effective coordination sequence for this task is called Information-Negotiation-Feedback (INF; Gorman et al., 2010). All interactions occur within a text-based communications system (Cooke et al., 2007).

Figure 1. Simulated RPAS task environment for each role, and task coordination (information-negotiation-feedback). The dashed line separates ground control station and simulated operational environment (from Demir et al., 2017; reprinted with permission).

In the simulated RPAS task environment, the target waypoints were within areas referred to as Restricted Operating Zones (ROZ boxes) which have entry and exit waypoints that teams must pass through to access the target waypoints. All studies had missions that could either be low workload (11–13 target waypoints within five ROZ) or high workload (20 target waypoints within seven ROZ). The number and length of missions varied as follows: In the first and the second experiments, all teams went through five 40 min missions with 15 min breaks in between missions. Missions 1–4 were low workload, but Mission 5 was high workload in order to determine the teams' performance strength. During the last study, teams went through ten 40 min missions which were divided into two sessions with 1 or 2 weeks in between. However, while in the first and second studies, the first four missions had identical workloads, in the third study, the first nine missions had identical workloads and the 10th mission was high workload.

In the RPAS STE, we collected performance and process measures and then analyzed them with statistical and non-linear dynamical methods. In this way, we could first understand the nature of all-human teams to prepare for the development of HATs. In general, we collected the following measures for the following three RPAS experiments (see Table 1; Cooke et al., 2007). Each of these measures was designed during a series of experiments which were part of the synthetic teammate project.

In the RPAS studies, we examined team communication flow to look at HAT patterns of interaction and their variation over time by using Joint Recurrence Plots (JRPs). JRPs mark instances when two or more individual dynamical components show a simultaneous recurrence (the pointwise product of the representative univariate RPs), and JRQA provides the quantity (and length) of recurrences in a dynamical system using its phase space trajectory (Marwan et al., 2007). From this perspective, JRQA can be used to examine variations between multiple teams, specifically in how frequently team members synchronize their activities while communicating by text message. That is, JRQA evaluates synchronization and influence by looking at system interactions (Demir et al., 2018b).
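The pointwise-product construction of a JRP can be illustrated with a minimal sketch. It assumes each team member's communication is already a binary series and that two time points count as recurrent when the member's state is identical at both (no embedding or distance threshold); the function names and the example data are hypothetical and are not taken from the studies.

```python
import numpy as np

def recurrence_plot(series):
    """Univariate RP for a categorical series: R[i, j] = 1 when state i equals state j."""
    x = np.asarray(series)
    return (x[:, None] == x[None, :]).astype(int)

def joint_recurrence_plot(*member_series):
    """JRP as the pointwise (Hadamard) product of each member's univariate RP."""
    jrp = recurrence_plot(member_series[0])
    for series in member_series[1:]:
        jrp = jrp * recurrence_plot(series)
    return jrp

# Hypothetical 4-minute message indicators (1 = sent a message in that minute).
navigator = [1, 0, 1, 0]
pilot = [1, 1, 1, 0]
photographer = [0, 0, 0, 0]

jrp = joint_recurrence_plot(navigator, pilot, photographer)
print(jrp)
```

A cell of the JRP is 1 only when every member's state recurs at the same pair of time points, which is why the plot reflects team-level rather than individual-level repetition.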

In the RPAS studies, the time stamp for each message (in seconds) is used to evaluate the flow of communication between team members, resulting in multivariate binary data. We chose the window size in two steps: (1) Determinism (DET) was estimated for each mission using windows that increased in size by 1 s, and (2) DET variance was evaluated for each window size, and a 1 min window was selected according to the average period at which DET no longer increased. This windowing made it possible to visually and quantitatively represent any repeating structural elements within the teams' communication.
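As a rough illustration of how message time stamps become binary data, the sketch below bins one team member's time stamps into 1 min windows over a 40 min mission. The function name, default arguments, and example time stamps are assumptions made for illustration; the studies' actual preprocessing code is not shown in this paper.

```python
import numpy as np

def to_binary_series(timestamps_s, mission_length_s=40 * 60, window_s=60):
    """Return a 0/1 vector with 1 where at least one message falls in the window."""
    n_windows = mission_length_s // window_s
    series = np.zeros(n_windows, dtype=int)
    idx = (np.asarray(timestamps_s, dtype=float) // window_s).astype(int)
    idx = idx[(idx >= 0) & (idx < n_windows)]  # drop stamps outside the mission
    series[idx] = 1
    return series

# Hypothetical message times (seconds since mission start) for one role.
navigator_times = [5.0, 70.2, 75.9, 200.0]
navigator_series = to_binary_series(navigator_times)
print(navigator_series[:5])
```

Repeating this for the navigator, pilot, and photographer gives the three parallel binary sequences per mission that the analysis operates on.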

For all three experiments, we extracted seven measures from JRQA: recurrence rate, percent determinism (DET), longest diagonal line, entropy, laminarity, trapping time, and longest vertical line. The measure of interest in all three RPAS studies was DET, represented by Formula (1) (Marwan et al., 2007) and defined as the “ratio of recurrence points forming diagonal lines to all recurrence points in the upper triangle” (Marwan et al., 2007). Time periods during which the system repeated a sequence of states are represented in the RP by diagonal lines. DET characterizes the level of organization present in the communications of a system by examining the dispersion of repeating points on the RP: highly deterministic systems repeat sequences of states many times (i.e., many diagonal lines on the RP), while mildly deterministic systems repeat a sequence of states only rarely (i.e., few diagonal lines). In Formula (1), l is the length of a diagonal line, lmin is the minimum length at which a diagonal counts as a line, and P(l) is the probability distribution of line lengths (Webber and Marwan, 2014). A 0% Determinism rate indicates that the time series never repeated, whereas a 100% Determinism rate indicates a perfectly repeating time series.
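Written out, the DET ratio described above takes the following standard form from the recurrence quantification literature (Marwan et al., 2007). The notation here is an assumption, since Formula (1) itself is not shown in this text:

$$
\mathrm{DET} = \frac{\sum_{l = l_{\min}}^{N} l \, P(l)}{\sum_{l = 1}^{N} l \, P(l)}
$$

Here N is the length of the time series, and multiplying the ratio by 100 gives the percentage reported in this paper. The value of l_min used in the studies is not stated in this text; l_min = 2 is a common default and should be treated as an assumption.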

In the first experiment, human team members collaborated with a “synthetic teammate” [a randomly selected human team member; Wizard of Oz (WoZ) paradigm (Riek, 2012)] that communicated in natural language. In the second experiment, a synthetic agent with limited communication behavior, built on the Adaptive Control of Thought-Rational architecture (ACT-R; Anderson, 2007), worked with human team members. In the last experiment, similar to the first experiment, human team members communicated and coordinated with a “synthetic teammate” (played this time by a highly trained experimenter who mimicked a synthetic agent with a limited vocabulary; WoZ) in order to overcome automation and autonomy failures and a malicious cyber-attack. Participants in all three experiments were undergraduate and graduate students recruited from Arizona State University and were compensated $10/hour. In order to participate, students were required to have normal or corrected-to-normal vision and be fluent in English. The following table indicates the experimental design and situation awareness index for each of the conditions (see Table 2). This study was carried out in accordance with the recommendations of The Cognitive Engineering Research Institute Institutional Review Board under The Cognitive Engineering Research Institute (CERI, 2007). The protocol was approved by The Cognitive Engineering Research Institute Institutional Review Board. All subjects gave written informed consent in accordance with the Declaration of Helsinki.

For the first experiment, the main question is whether the manipulation of team members' beliefs about their pilot can be associated with team interactions and, ultimately, team performance for overcoming the roadblocks (Demir and Cooke, 2014; Demir et al., 2018c). Thus, there are two conditions in this experiment: synthetic and control, with 10 teams in each condition (total 20 teams). Sixty randomly selected participants completed the experiment (Mage = 23, SDage = 6.39). In the synthetic condition, we simulated a “synthetic agent” using a WoZ paradigm: one participant was chosen to be the pilot, and therefore automatically and unknowingly became the synthetic agent. The other two team members were randomly assigned to navigator and photographer roles and were informed that there was a synthetic agent serving as the pilot. In this case, the navigator and photographer could not see the pilot when entering or leaving the room, nor during the breaks. In the control condition, the pilot was a randomly assigned participant and the other two team members knew this (all three roles signed the consent forms together, and they all saw each other during that time), so communication developed naturally among the team members (again, the navigator and photographer roles were randomly assigned).

In this study, we manipulated the beliefs of the navigator and the photographer in that they were led to believe that the third team member was not human, but a synthetic agent. This was done in order to answer the question of whether the manipulation of that belief could affect team interactions and ultimately team effectiveness (Demir and Cooke, 2014; Cooke et al., 2016; Demir et al., 2018c). The two articles reporting this study used several quantitative methods to understand team interaction and its relationship with team effectiveness across the conditions. In this specific experiment, the teams went through five 40 min missions (with a 15 min break after each) and we collected the measures described in Table 1. We comprehensively discussed the key findings in previous papers (Demir and Cooke, 2014; Demir et al., 2018c).

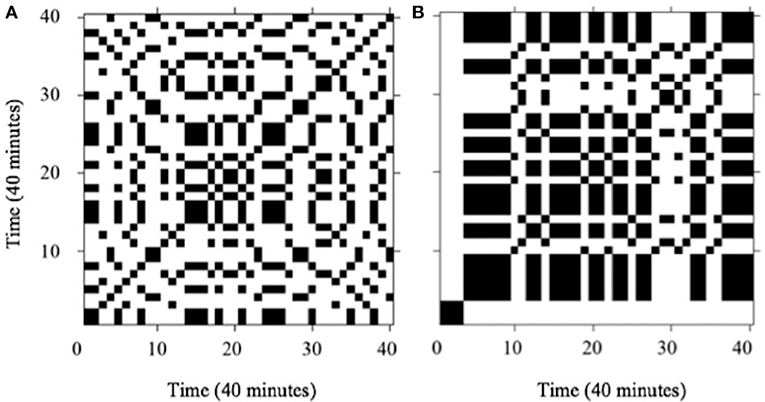

As a dynamical analysis, we applied JRQA to binary communication flow time series data for 40 min missions in order to visually and quantitatively represent any repeating structural elements within communication of the teams. In the following figure, we give two example JRPs (one control and one synthetic team) for two RPAS teams' interactions; these consist of three binary sequences (one for each team member) that are each 40 min in length. The three binary sequences were created based on whether the navigator, pilot, or photographer sent a message in any given minute. If a message was sent, the minute was coded as “1”; if no message was sent, it was coded as “0.” Based on the JRP and DET, the very short diagonals indicated that the control teams showed less predictable team communication (Determinism: 46%), while the longer diagonals mean that the synthetic teams demonstrated more predictable communication (Determinism: 77.6%; see Figure 2). Also, we found that predictability in synthetic teams had a more negative relationship with their performance on target processing (TPE), whereas this relationship was less negative in the control teams (Demir et al., 2018c).
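To make the link between diagonal lines and DET concrete, the following sketch computes percent determinism from a recurrence matrix by counting runs of recurrent points along the diagonals of the upper triangle. It is an illustrative implementation under stated assumptions (main diagonal excluded, minimum line length of 2, hypothetical function names and toy data), not the software used in the studies.

```python
import numpy as np

def diagonal_line_lengths(rp):
    """Lengths of all runs of 1s along the diagonals above the main diagonal."""
    n = rp.shape[0]
    lengths = []
    for k in range(1, n):  # offset 0 (main diagonal) is excluded
        run = 0
        for value in np.diagonal(rp, offset=k):
            if value:
                run += 1
            else:
                if run:
                    lengths.append(run)
                run = 0
        if run:
            lengths.append(run)
    return lengths

def percent_determinism(rp, l_min=2):
    """Share (in %) of upper-triangle recurrence points lying on lines of length >= l_min."""
    lengths = diagonal_line_lengths(np.asarray(rp))
    total_points = sum(lengths)
    if total_points == 0:
        return 0.0
    points_in_lines = sum(length for length in lengths if length >= l_min)
    return 100.0 * points_in_lines / total_points

# Toy example: a perfectly alternating series recurs along unbroken diagonals.
x = np.array([1, 0] * 4)
periodic_rp = (x[:, None] == x[None, :]).astype(int)
det_periodic = percent_determinism(periodic_rp)

# Toy example: a single isolated recurrence point forms no diagonal line.
isolated_rp = np.eye(4, dtype=int)
isolated_rp[0, 2] = isolated_rp[2, 0] = 1
det_isolated = percent_determinism(isolated_rp)

print(det_periodic, det_isolated)
```

The two toy cases correspond to the extremes described in the text: a perfectly repeating series gives the maximum DET, and a series whose recurrences never line up gives the minimum.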

Figure 2. Example JRP for two high performing UAV teams' interactions (length 40 min): (A) control (Determinism: 46%) and (B) synthetic teams (Determinism: 77.6%) (from Demir et al., 2018c; reprinted with permission).

Overall findings from this first experiment (see Table 3) indicate that the teams which had been informed that their pilot was a synthetic agent not only liked the pilot more, but also perceived lower workload, and assisted the pilot by giving it more suggestions (Demir and Cooke, 2014). With respect to the two goals of the current paper, our findings (Demir et al., 2018c) indicate that the control teams processed and coordinated more effectively at the targets to get good photographs (i.e., target processing efficiency) than the synthetic teams and displayed a higher level of interaction while planning the task. Team interaction was related to improved team effectiveness, suggesting that the synthetic teams did not demonstrate enough of the adaptive complex behaviors that were present in control teams, even though they could interact via natural language. The implication here is that merely believing that the pilot was not human resulted in more difficult planning for the synthetic teams, thus making it more difficult to effectively anticipate their teammates' needs.

In the second experiment, the focal manipulation was of the pilot position, resulting in three conditions: synthetic, control, and experimenter (10 teams for each condition). As indicated by the name, the synthetic condition had a synthetic team member in the role of pilot, which had been developed using the ACT-R cognitive modeling architecture (Anderson, 2007); participants in this condition had to communicate with the synthetic agent in a manner void of ambiguous or cryptic elements due to its limited language capability (Demir et al., 2015). In the control condition, since the pilot was human, communication among team members developed naturally. Finally, in the experimenter condition, the pilot was limited to using a coordination script specific to the role. Using the script, the experimenter pilot interacted with the other roles by asking questions at appropriate times in order to promote adaptive and timely sharing of information regarding critical waypoints. In all three conditions, the roles of navigator and photographer were randomly assigned. In total, 70 randomly selected participants completed the second experiment (Mage = 23.7, SDage = 3.3).

In the synthetic condition, the ACT-R based synthetic pilot was designed based on interaction with team members and interaction in the task environment, including adaptation of various English constructions, selection of apropos utterances, discernment of whether or not communication was necessary, and awareness of the current situation of the RPA, i.e., flying the RPA between waypoints in the simulated task environment (Ball et al., 2010). However, since the synthetic pilot still had limited interaction capability, it was crucial that the navigator and photographer made certain that their messages to the non-human teammate were void of ambiguous or cryptic elements. If not, their synthetic teammate was unable to understand and, in some cases, malfunctioned (Demir et al., 2015).

In the second experiment, we explore and discuss team interaction and effectiveness by comparing HATs with all-human teams (i.e., control and experimenter teams). Here, we give a conceptual summary of findings from previous papers that compared human-autonomy and all-human teams on dynamics (Demir et al., 2018a,b) and also their relationship with team situation awareness and team performance, via interaction (Demir et al., 2016, 2017; McNeese et al., 2018).

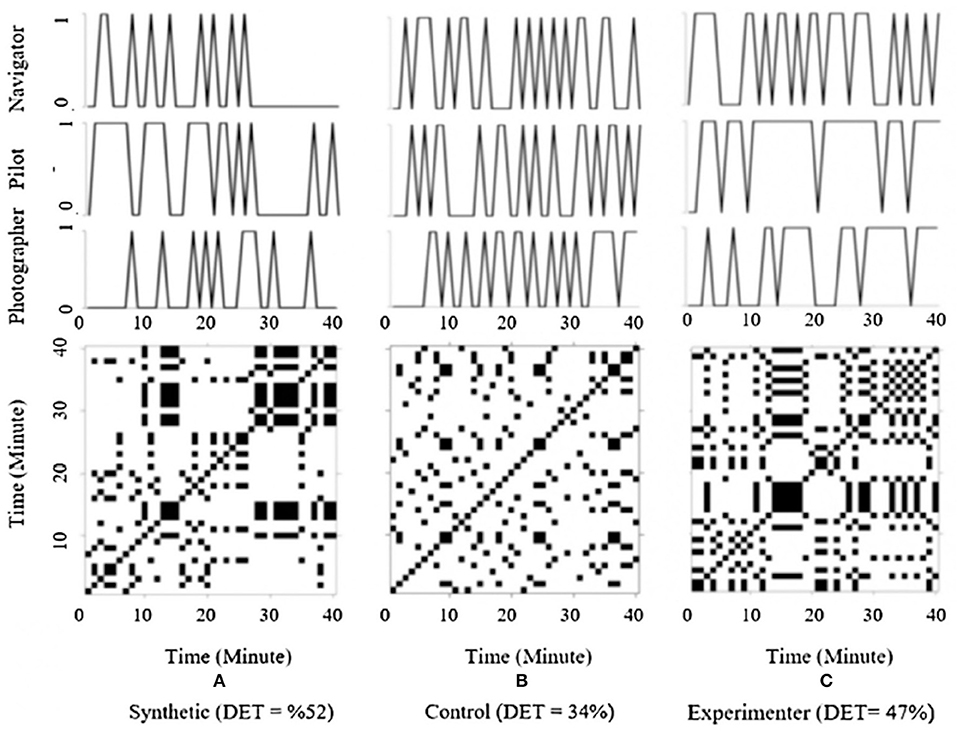

In Figure 3, three example JRPs from this study are depicted for three teams' communication for each condition (same as in the first RPAS study: three 40 min binary sequences) along with their calculated DET: Figure 3A—synthetic (DET = 52%), Figure 3B—control (DET = 34%), and Figure 3C—experimenter (DET = 47%). Visible on the y-axis, instances of any messages sent by any of the three roles (navigator, pilot, or photographer) in any minute were coded as “1,” and if no message was sent in any minute, it was coded as “0.” The synthetic team in this example exhibited rigid communication (higher determinism), whereas the control team demonstrated an unstable communication pattern compared to the other two teams. Taking into account the goals of this paper, in the synthetic team, higher determinism tended to correspond to instances when all three team members were silent (see Figure 3A between 30 and 35 min). For control teams, such varied communication patterns were not unanticipated since the pilot role was randomly assigned. On the other hand, coordination behaviors of control teams, experimenter teams, and synthetic teams were unstable, metastable, and rigid, respectively, as indexed by the percent DET from JRQA. Extreme team coordination dynamics (overly flexible or overly rigid) in the control and synthetic teams resulted in low team performance. Experimenter teams performed better in the simulated RPAS task environment due to metastability (Demir, 2017; Demir et al., 2018a,b). In addition to the dynamic findings, overall findings for this study showed positive correlations between pushing information and both team situation awareness and team performance. Additionally, the all-human teams had higher levels than the synthetic teams in regard to both pushing and pulling. 
By means of this study, we saw that anticipation of other team members' behaviors as well as information requirements are important for effective Team Situation Awareness (TSA) and team performance in HATs. Developing mechanisms to enhance the pushing of information with HATs is necessary in order to increase the efficacy of teamwork in such teams.

Figure 3. Example joint recurrence plots for three RPAS teams' interactions in three conditions—length 40 min: (A) synthetic, (B) control, and (C) experimenter teams (from Demir et al., 2018b; reprinted with permission).

In the third experiment, the “synthetic” pilot position was filled by a well-trained experimenter (in a separate room—WoZ paradigm) who mimicked the communication and coordination of a synthetic agent from the previous experiment (Demir et al., 2015). In the third experiment, 40 randomly selected participants (20 teams) completed the experiment (Mage = 23.3, SDage = 4.04). In order to facilitate their effective communication with the synthetic pilot, both the navigator and the photographer had a cheat sheet to use during the training and the task. The main manipulation and consideration of this study was team resilience, so at selected target waypoints teams faced one of three kinds of roadblocks—automation failure, autonomy failure, or malicious cyber-attack—and had to overcome it within a set time limit. Automation failures were implemented as loss of displayed information for one of the agents for a set period. Autonomy failures were implemented as comprehension or anticipation failures on the part of the synthetic pilot. The malicious cyber-attack was implemented near the end of the final mission as an attack on the synthetic pilot wherein it flew the RPA to a site known to be a threat but claimed otherwise (Cooke et al., 2018; Grimm et al., 2018a,b).

The teams encountered three types of automation failures, which appeared on either the pilot's or the photographer's shared information data display; e.g., there was an error in the current and next waypoint information or in the distance and time from the current target waypoint. In order to overcome each failure, team members were required to effectively communicate and coordinate with each other. Each of the automation failures was inserted individually at specific target waypoints from Missions 2 through 10 (Mission 1 was the baseline mission and did not include any failures). The malicious cyber-attack was applied only in Mission 10. Therefore, Mission 10 was the most challenging.

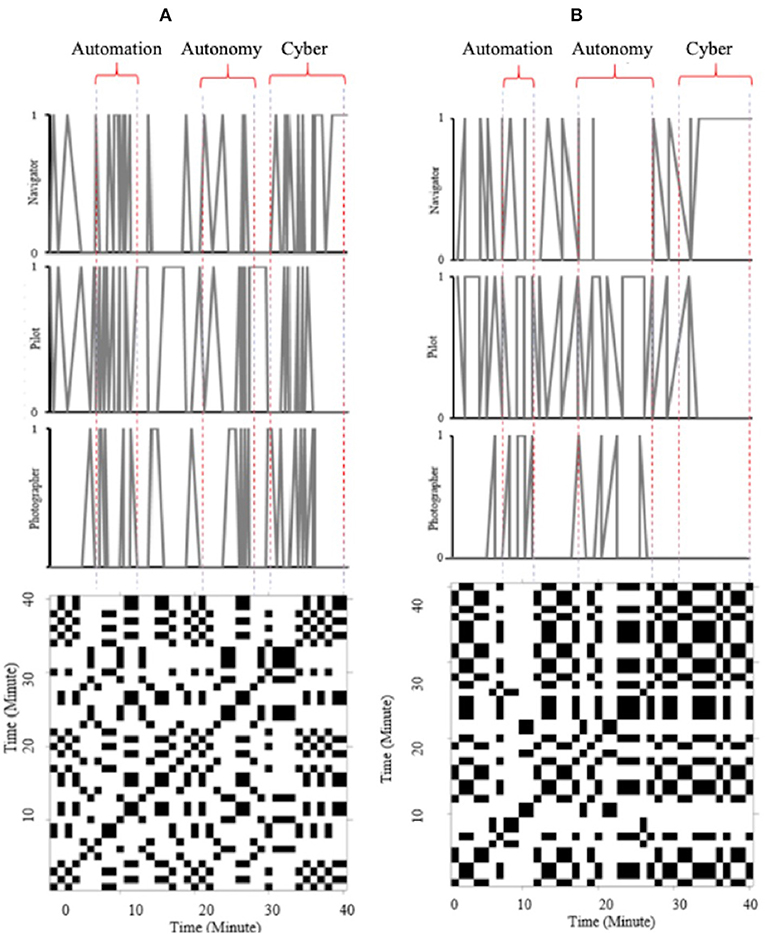

Within the concept of dynamical systems analysis, two sample JRPs are shown for the communication of a high and a low performing RPAS team, which were identified based on their target processing efficiency (TPE) scores during Mission 10 (three 40 min binary sequences). Additionally, the plots show the calculated DET for both teams; the first one performed well (DET = 48%) and the second performed poorly (DET = 54%). Accordingly, as shown in Figure 4A, although the DET scores were not far apart, the communication of the high performing team was less rigid (lower DET) than that of the low performing team. Interestingly, each of the team members in the high performing team communicated more frequently during each of the failures than those of the other teams, and they overcame all of the failures they encountered, including the malicious cyber-attack. As for the low performing team (see Figure 4B), the members communicated more during the automation failure and they successfully overcame that roadblock. Unfortunately, the same team did not communicate to the same degree or with the same efficacy during the remaining two roadblocks (autonomy failure and malicious cyber-attack). In fact, the navigator did not even participate during the autonomy failure, and during the malicious cyber-attack the photographer either failed to anticipate the needs of the other teammates or was simply unaware of the failure. This lack of team situation awareness resulted in poor TPE scores.

Figure 4. Example Joint Recurrence Plots for two RPAS teams' interactions in Mission 10 (length 40 min): (A) high performing team (DET: 48%) overcame all three failures (automation, autonomy, and malicious cyber-attack); and (B) low performing team (DET: 54%) overcame only the automation failure (from Grimm et al., 2018b; reprinted with permission).
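To make the DET measure concrete, the following minimal Python sketch builds a joint recurrence matrix from three binary communication sequences (1 = the team member communicated during that time unit, 0 = silent) and computes the percentage of recurrent points that fall on diagonal lines. It is an illustration only, not the analysis pipeline used in the experiments: the function names, the minimum line length of 2, the exclusion of the main diagonal, and the toy sequences are all assumptions.

```python
def joint_recurrence(series):
    """Return the joint recurrence matrix for equal-length categorical series.

    Cell (i, j) is 1 only when every series shows the same state at
    time i as it does at time j (hypothetical helper, not from the paper).
    """
    n = len(series[0])
    if any(len(s) != n for s in series):
        raise ValueError("all series must have the same length")
    return [
        [int(all(s[i] == s[j] for s in series)) for j in range(n)]
        for i in range(n)
    ]


def determinism(jrp, min_line=2):
    """Percent of off-diagonal recurrent points on diagonal lines >= min_line."""
    n = len(jrp)
    recurrent = 0
    on_lines = 0
    # The matrix is symmetric, so scanning the upper triangle is enough.
    for offset in range(1, n):
        run = 0
        for i in range(n - offset):
            if jrp[i][i + offset]:
                recurrent += 1
                run += 1
            else:
                if run >= min_line:
                    on_lines += run
                run = 0
        if run >= min_line:  # close a line that reaches the matrix edge
            on_lines += run
    return 100.0 * on_lines / recurrent if recurrent else 0.0


# Toy example (assumed data): a team that takes turns in a fixed order.
pilot        = [1, 0, 0, 1, 0, 0, 1, 0, 0, 1, 0, 0]
navigator    = [0, 1, 0, 0, 1, 0, 0, 1, 0, 0, 1, 0]
photographer = [0, 0, 1, 0, 0, 1, 0, 0, 1, 0, 0, 1]

det = determinism(joint_recurrence([pilot, navigator, photographer]))
print(f"DET = {det:.0f}%")
```

In this toy case the strictly periodic turn-taking yields a DET of 100%, i.e., fully rigid coordination. Less predictable communication patterns produce shorter diagonal lines and therefore lower DET values.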

In line with the goals of the current paper, when HATs interact effectively, their performance and process improve over time, and they tend to push information or anticipate the information needs of others more as they gain experience. In addition, HATs differ in the dynamics of how they respond to failures. When HATs demonstrated more flexible behavior, they became more adaptive to the chaotic environment and, in turn, overcame more failures in the RPAS task environment.

The goal of this current paper is 2-fold: first, to understand how team interaction in HATs evolves in the dynamic RPAS task context and second, to observe how HATs respond to a variety of failures (automation, autonomy, and malicious cyber-attack) over time. One of the most significant findings from our experiments regarding team evolution is the idea of entrainment, that one team member (the pilot in our study, either synthetic or human) can change the communication behaviors of the other teammates over time, including coordination, and affect team performance. In the communication context of this task, we know that pushing information between the team members is important and we know that, in general, the synthetic teammate was capable of communication and knew its own needs, but it did not know the needs of its counterparts in a timely manner, especially during novel conditions. In the first experiment, synthetic teams did not effectively plan during the task and, in turn, did not anticipate each other's needs. Similarly, in the second experiment, synthetic teams more often relied on pulling information instead of anticipating each other's needs in a timely manner. Behavioral passiveness of the synthetic teams relates to team coordination dynamics, which is a fundamental concept of ITC theory. Therefore, we applied one of the NDS methods, JRQA, to communication flow from the three experiments, and the dynamical systems findings contributed further insights into the dynamic, complex behavior of HATs.

In the first and second studies, behavioral passiveness of the synthetic teams resulted in very stable and rigid coordination in comparison to the all-human teams, which were less stable. We know that some degree of stability and instability is needed for team effectiveness, but teams with too much of either performed poorly. In the second experiment, this issue is clearly seen across three conditions: synthetic, control, and experimenter teams. Experimenter teams demonstrated metastable coordination (neither rigid nor unstable) and performed better, whereas the control and synthetic teams demonstrated unstable and rigid coordination, respectively, and performed worse. Metastable coordination behavior of the experimenter teams may have helped them adapt to the unexpected changes in the dynamic task environment. In addition to metastable coordination behavior, the experimenter teams also demonstrated effective team communication, pushing and pulling information in a timely and constructive way. This type of metastable pattern was also discovered in different contexts using entropy measures. For instance, a system functions better if there is a trade-off between its level of complexity and healthy functionality (Guastello, 2017). A sample entropy analysis of neurophysiological data shows that teams at the optimum level of organization exhibit metastable behavior in order to overcome unexpected changes in the task environment (Stevens et al., 2012). Sample entropy analysis also revealed that a moderate amount of stability resulted in high team performance. This finding resembles that of the third experiment, in which moderately stable behavior and timely anticipation of team members' needs helped teams overcome the three types of failures. However, one of the most important findings from these experiments is entrainment: one team member (in our case, the pilot) can change the communication behaviors of the other teammates over time, including coordination, and affect team performance.
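For readers unfamiliar with the sample entropy measure mentioned above, the sketch below shows one common way to compute it for a numeric time series. It is a simplified illustration under stated assumptions, not the procedure used in the cited studies: the function name, the defaults `m = 2` and `r = 0.2` (an absolute tolerance here, whereas many analyses scale it by the series' standard deviation), and the `<=` matching convention are all assumptions.

```python
import math


def sample_entropy(x, m=2, r=0.2):
    """Sample entropy: -ln(A / B).

    B counts pairs of length-m templates within tolerance r (Chebyshev
    distance, self-matches excluded); A counts the same for length m + 1.
    Lower values indicate a more regular (more stable) series.
    """
    n = len(x)

    def count_matches(length):
        # Use the same number of templates (n - m) for both lengths.
        templates = [x[i:i + length] for i in range(n - m)]
        count = 0
        for i in range(len(templates)):
            for j in range(i + 1, len(templates)):
                dist = max(abs(a - b) for a, b in zip(templates[i], templates[j]))
                if dist <= r:
                    count += 1
        return count

    b = count_matches(m)
    a = count_matches(m + 1)
    if a == 0 or b == 0:
        return float("inf")  # undefined when no template pairs match
    return -math.log(a / b)


# Assumed toy data: a perfectly regular alternating series.
regular = [0, 1] * 10
print(sample_entropy(regular))
```

A perfectly regular series such as the alternating one here has a sample entropy of zero. Irregular series give larger values, and a moderate value between the two extremes corresponds to the "moderate amount of stability" described above.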

Through these studies, it is clearly possible to have successful HATs, but a more important question moving forward is how to achieve high levels of HAT performance. How can we ensure effective levels of communication, coordination, and situation awareness between humans and agents? In response to this question, the authors propose three potential future needs for ensuring effective HATs: (1) training humans how to communicate and coordinate with agents, (2) training agents on the principles of teamwork, and (3) human-centered machine learning design of the synthetic agent. In other words, for humans and agents to interact with one another as team members, all participants must understand teamwork and be able to effectively communicate and coordinate with the others; it's not just one or the other.

First, before participating in HATs, humans should be specifically trained on how to interact with the agent. In the future this training will be fundamentally important as the types of available agents with which a person might team up vary greatly, with many variants in both cognitive modeling and machine learning. Understanding how to interact with these agents is step one in ensuring effective HATs, because without meaningful communication, effective teamwork is impossible. In our studies, we specifically trained participants in how to properly interact with the synthetic agents in their teams. If we had not trained them how to interact, the interaction would have been significantly hindered due to the participants not understanding the communication and coordination limitations of the synthetic agent. The training allowed them to successfully interact with the agent due to an understanding of the agent's capabilities. In the future, the need for training humans to interact with agents will hopefully decrease due to the increased availability and experience of interacting with agents and advancements in natural language processing. However, in the immediate future it will be necessary to develop appropriate training specific to this type of interaction.

Second, agents as team members must be programmed and trained with a fundamental conceptualization of what teamwork is and what the important principles of teamwork are. At a fundamental level, the synthetic agent in our studies did not understand the concept of teaming. Instead, it was capable of communication and understood its own task, with very little understanding of other team members' tasks, let alone the team task. Moving forward, computer scientists and cognitive scientists need to work together to harness the power of machine learning to train agents to know what teamwork is (communication, coordination, awareness, etc.). An agent will never be able to adapt and adjust to dynamical characteristics such as coordination if it is not first trained to conceptualize teamwork and taught how to apply that knowledge.

Finally, there is a significant need to have serious discussions on how the broader community should be developing these agents technically. Our agent was built on the ACT-R cognitive architecture, which has certain advantages, but as advancements in machine learning continue, it is valuable to debate the technical foundation of these agents. The major advantage and promise of using machine learning is that the agent can be trained and can learn many facets of teamwork. Reinforcement and deep learning provide promise that an agent will develop human-centered capabilities by recalibrating its technical infrastructure based on more and more interactions with a human team member. We are not arguing for one side or the other (cognitive architectures or machine learning), but rather that the community should carefully weigh the pros and cons of each and then choose the technical methodology that is most efficient and leads to developing an effective agent as a team member.

We are still in the early stages of the evolution of HAT. Our current work extends team coordination metrics to assess coordination quality and, ultimately, team effectiveness in terms of adaptation and resilience; it also explores the kinds of training, technological design, or team composition interventions that can improve HAT under degraded conditions. A great deal of ongoing work is needed in many areas. We strongly encourage the broader team science community to conduct interdisciplinary work to advance HAT.

MD helped with the specific decisions on the experimental design, applied dynamical systems methods, and led the writing of this manuscript. NM and NC contributed to crafting the general idea of the research, provided input to make the ideas of this study concrete, designed the experiment, the paradigm and the experimental protocol, and contributed to writing up this manuscript.

RPAS I and II research were partially supported by ONR Award N000141110844 (Program Managers: Marc Steinberg, Paul Bello) and ONR Award N000141712382 (Program Managers: Marc Steinberg, Micah Clark). RPAS III research was supported by ONR Award N000141712382 (Program Managers: Marc Steinberg, Micah Clark).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The authors acknowledge Steven M. Shope from Sandia Research Corporation, who updated the RPAS-STE testbed and integrated the synthetic teammate into it.

Amazeen, P. G. (2018). From physics to social interactions: scientific unification via dynamics. Cogn. Syst. Res. 52, 640–657. doi: 10.1016/j.cogsys.2018.07.033

Anderson, J. R. (2007). How Can the Human Mind Occur in the Physical Universe? Oxford; New York, NY: Oxford University Press.

Ball, J., Myers, C., Heiberg, A., Cooke, N. J., Matessa, M., Freiman, M., et al. (2010). The synthetic teammate project. Comput. Math. Org. Theory 16, 271–299. doi: 10.1007/s10588-010-9065-3

Barrett, L. (2015). Beyond the Brain: How Body and Environment Shape Animal and Human Minds, 1st Edn. Princeton, NJ; Oxford: Princeton University Press.

Bartlett, C. E., and Cooke, N. J. (2015). Human-robot teaming in urban search and rescue. Proc. Hum. Fact. Ergon. Soc. Ann. Meet. 59, 250–254. doi: 10.1177/1541931215591051

Braitenberg, V., and Arbib, M. A. (1984). Vehicles: Experiments in Synthetic Psychology, 1st Edn. Cambridge, MA: The MIT Press.

Bristol, U. (2008). Grey Walter and His Tortoises. University of Bristol News. Available online at: http://www.bristol.ac.uk/news/2008/212017945378.html (accessed October 24, 2018).

CERI (2007). Available online at: http://cerici.org/ceri_about.htm (accessed January 16, 2019).

Chen, J. Y. C., and Barnes, M. J. (2014). Human-agent teaming for multirobot control: a review of human factors issues. IEEE Trans. Hum. Mach. Syst. 44, 13–29. doi: 10.1109/THMS.2013.2293535

Coco, M. I., and Dale, R. (2014). Cross-recurrence quantification analysis of categorical and continuous time series: an R package. Front. Psychol. 5:510. doi: 10.3389/fpsyg.2014.00510

Cooke, N. J., Demir, M., and McNeese, N. J. (2016). Synthetic Teammates as Team Players: Coordination of Human and Synthetic Teammates (Technical Report No. N000141110844). Mesa, AZ: Cognitive Engineering Research Institute.

Cooke, N. J., Demir, M., McNeese, N. J., and Gorman, J. (2018). Human-Autonomy Teaming in Remotely Piloted Aircraft Systems Operations Under Degraded Conditions. Mesa, AZ: Arizona State University.

Cooke, N. J., Gorman, J., Pedersen, H., Winner, J., Duran, J., Taylor, A., et al. (2007). Acquisition and Retention of Team Coordination in Command-and-Control. Available online at: http://www.dtic.mil/docs/citations/ADA475567 (accessed November 10, 2018).

Cooke, N. J., Gorman, J. C., Myers, C. W., and Duran, J. L. (2013). Interactive team cognition. Cogn. Sci. 37, 255–285. doi: 10.1111/cogs.12009

Cooke, N. J., and Shope, S. M. (2004). “Designing a synthetic task environment,” in Scaled Worlds: Development, Validation, and Application, eds L. R. E. Schiflett, E. Salas, and M. D. Coovert, 263–278. Available online at: http://www.cerici.org/documents/Publications/scaled%20worlds%20paper3.pdf (accessed November 10, 2018).

Cooke, N. J., and Shope, S. M. (2005). “Synthetic task environments for teams: CERTT's UAV-STE,” in Handbook of Human Factors and Ergonomics Methods, eds N. Stanton, A. Hedge, K. Brookhuis, E. Salas, and H. Hendrick, 41–46. Available online at: http://cerici.org/documents/Publications/Synthetic%20Task%20Environments%20for%20Teams.pdf (accessed November 10, 2018).

Cox, M. T. (2013). “Goal-driven autonomy and question-based problem recognition,” in Poster Collection. Presented at the Second Annual Conference on Advances in Cognitive Systems (Palo Alto, CA). Available online at: http://mcox.org/

Dautenhahn, K. (2007). “A paradigm shift in artificial intelligence: why social intelligence matters in the design and development of robots with human-like intelligence,” in 50 Years of Artificial Intelligence, eds M. Lungarella, F. Iida, J. Bongard, and R. Pfeifer (Berlin; Heidelberg: Springer-Verlag), 288–302.

Demir, M. (2017). The impact of coordination quality on coordination dynamics and team performance: when humans team with autonomy (Unpublished Dissertation). Arizona State University. Available online at: http://hdl.handle.net/2286/R.I.44223 (accessed November 10, 2018).

Demir, M., and Cooke, N. J. (2014). Human teaming changes driven by expectations of a synthetic teammate. Proc. Hum. Fact. Ergonom. Soc. Ann. Meet. 58, 16–20. doi: 10.1177/1541931214581004

Demir, M., Cooke, N. J., and Amazeen, P. G. (2018a). A conceptual model of team dynamical behaviors and performance in human-autonomy teaming. Cogn. Syst. Res. 52, 497–507. doi: 10.1016/j.cogsys.2018.07.029

Demir, M., Likens, A. D., Cooke, N. J., Amazeen, P. G., and McNeese, N. J. (2018b). Team coordination and effectiveness in human-autonomy teaming. IEEE Trans. Hum. Mach. Syst. 49, 150–159. doi: 10.1109/THMS.2018.2877482

Demir, M., McNeese, N. J., and Cooke, N. J. (2016). “Team communication behaviors of the human-automation teaming,” 2016 IEEE International Multi-disciplinary Conference on Cognitive Methods in Situation Awareness and Decision Support (CogSIMA) (San Diego, CA), 28–34.

Demir, M., McNeese, N. J., and Cooke, N. J. (2017). Team situation awareness within the context of human-autonomy teaming. Cogn. Syst. Res. 46, 3–12. doi: 10.1016/j.cogsys.2016.11.003

Demir, M., McNeese, N. J., and Cooke, N. J. (2018c). The impact of a perceived autonomous agent on dynamic team behaviors. IEEE Transac. Emerg. Top. Comput. Intell. 2, 258–267. doi: 10.1109/TETCI.2018.2829985

Demir, M., McNeese, N. J., Cooke, N. J., Ball, J. T., Myers, C., and Freiman, M. (2015). Synthetic teammate communication and coordination with humans. Proc. Hum. Fact. Ergonom. Soc. Ann. Meet. 59, 951–955. doi: 10.1177/1541931215591275

Demir, M., McNeese, N. J., Cooke, N. J., Bradbury, A., Martinez, J., Nichel, M., et al. (2018d). “Dyadic team interaction and shared cognition to inform human-robot teaming,” in Proceedings of the Human Factors and Ergonomics Society. Presented at the Human Factors and Ergonomics Society Annual Meeting 62 (Philadelphia, PA).

Eckmann, J.-P., Kamphorst, S. O., and Ruelle, D. (1987). Recurrence plots of dynamical systems. Europhy. Lett. 4:973. doi: 10.1209/0295-5075/4/9/004

Endsley, M. R. (2015). Autonomous Horizons: System Autonomy in the Air Force - A Path to the Future (Autonomous Horizons No. AF/ST TR 15-01). Department of the Air Force Headquarters of the Air Force. Available online at: http://www.af.mil/Portals/1/documents/SECAF/AutonomousHorizons.pdf?timestamp=1435068339702

Fiore, S. M., and Wiltshire, T. J. (2016). Technology as teammate: examining the role of external cognition in support of team cognitive processes. Front. Psychol. 7:1531. doi: 10.3389/fpsyg.2016.01531

Goodrich, M. A., and Yi, D. (2013). “Toward task-based mental models of human-robot teaming: a Bayesian approach,” in Virtual Augmented and Mixed Reality. Designing and Developing Augmented and Virtual Environments, ed R. Shumaker (Las Vegas, NV: Springer), 267–276.

Gorman, J. C., Amazeen, P. G., and Cooke, N. J. (2010). Team coordination dynamics. Nonlin. Dyn. Psychol. Life Sci. 14, 265–289.

Gorman, J. C., Cooke, N. J., Pederson, H. K., Connor, O. O., and DeJoode, J. A. (2005). Coordinated Awareness of Situation by Teams (CAST): measuring team situation awareness of a communication glitch. Proc. Hum. Fact. Ergon. Soc. Ann. Meet. 49, 274–277. doi: 10.1177/154193120504900313

Gorman, J. C., Cooke, N. J., and Winner, J. L. (2006). Measuring team situation awareness in decentralized command and control environments. Ergonomics 49, 1312–1325. doi: 10.1080/00140130600612788

Grimm, D., Demir, M., Gorman, J. C., and Cooke, N. J. (2018a). “Systems level evaluation of resilience in human-autonomy teaming under degraded conditions,” in 2018 Resilience Week. Presented at the Resilience Week 2018 (Denver, CO).

Grimm, D., Demir, M., Gorman, J. C., and Cooke, N. J. (2018b). “The complex dynamics of team situation awareness in human-autonomy teaming,” in Cognitive and Computational Aspects of Situation Management (CogSIMA). Presented at the 2018 IEEE Conference on Cognitive and Computational Aspects of Situation Management (CogSIMA) (Boston, MA).

Guastello, S. J. (2017). Nonlinear dynamical systems for theory and research in ergonomics. Ergonomics 60, 167–193. doi: 10.1080/00140139.2016.1162851

Haken, H. (2003). “Intelligent behavior: a synergetic view,” in Studies of Nonlinear Phenomena in Life Science, Vol. 10. The Dynamical Systems Approach to Cognition, eds W. Tschacher and J.-P. Dauwalder (World Scientific Publisher Co. Inc.), 3–16.

Hart, S. G., and Staveland, L. E. (1988). “Development of NASA-TLX (Task Load Index): results of empirical and theoretical research,” in Human Mental Workload, eds P. A. Hancock and N. Mashkati (Amsterdam: North Holland Press), 139–183.

Kelso, J. A. S. (1997). Dynamic Patterns: The Self-organization of Brain and Behavior. Cambridge, MA; London: MIT Press.

Klein, G., Woods, D. D., Bradshaw, J. M., Hoffman, R. R., and Feltovich, P. J. (2004). Ten challenges for making automation a “team player” in joint human-agent activity. IEEE Intell. Syst. 19, 91–95. doi: 10.1109/MIS.2004.74

Krogmann, U. (1999). From Automation to Autonomy-Trends Towards Autonomous Combat Systems (Unclassified No. RTO MP-44). NATO Science and Technology Organization. Available online at: http://www.dtic.mil/dtic/tr/fulltext/u2/p010300.pdf

Marwan, N., Carmen Romano, M., Thiel, M., and Kurths, J. (2007). Recurrence plots for the analysis of complex systems. Phys. Rep. 438, 237–329. doi: 10.1016/j.physrep.2006.11.001

Marwan, N., Wessel, N., Meyerfeldt, U., Schirdewan, A., and Kurths, J. (2002). Recurrence plot based measures of complexity and its application to heart rate variability data. Phys. Rev. E 66:026702. doi: 10.1103/PhysRevE.66.026702

McGrath, J. E. (1990). “Time matters in groups,” in Intellectual Teamwork: Social and Technological Foundations of Cooperative Work, eds J. Galegher, R. E. Kraut, and C. Egido (Hillsdale, NJ: Lawrence Erlbaum Associates, Inc.), 23–61.

McNeese, N. J., Demir, M., Cooke, N. J., and Myers, C. (2018). Teaming with a synthetic teammate: insights into human-autonomy teaming. Hum. Fact. 60, 262–273. doi: 10.1177/0018720817743223

Riek, L. D. (2012). Wizard of Oz studies in HRI: a systematic review and new reporting guidelines. J. Hum. Robot Interact. 1, 119–136. doi: 10.5898/JHRI.1.1.Riek

Salas, E., Dickinson, T. L., Converse, S. A., and Tannenbaum, S. I. (1992). “Toward an understanding of team performance and training,” in Teams: Their Training and Performance, eds R. W. Swezey and E. Salas, 3–29. Available online at: http://www.amazon.com/Teams-Training-Performance-Robert-Swezey/dp/089391942X (accessed November 10, 2018).

Salas, E., Sims, D. E., and Burke, C. S. (2005). Is there a “Big Five” in teamwork? Small Group Res. 36, 555–599. doi: 10.1177/1046496405277134

Schooley, L. C., Zeigler, B. P., Cellier, F. E., and Wang, F. Y. (1993). High-autonomy control of space resource processing plants. IEEE Cont. Syst. 13, 29–39. doi: 10.1109/37.214942

Stevens, R. H., Galloway, T. L., Wang, P., and Berka, C. (2012). Cognitive neurophysiologic synchronies: what can they contribute to the study of teamwork? Hum. Fact. 54, 489–502. doi: 10.1177/0018720811427296

Thelen, E., and Smith, L. B. (2007). “Dynamic systems theories,” in Handbook of Child Psychology, Vol. 1: Theoretical Models of Human Development, 6th Edn., eds W. Damon and R. M. Lerner (Hoboken, NJ: Wiley), 258–312.

Webber, C. L., and Marwan, N. (eds.). (2014). Recurrence Quantification Analysis: Theory and Best Practices, 2015 Edn. New York, NY: Springer.

Keywords: human-autonomy teaming, synthetic agent, team cognition, team dynamics, remotely piloted aircraft systems, unmanned air vehicle, artificial intelligence, recurrence quantification analysis

Citation: Demir M, McNeese NJ and Cooke NJ (2019) The Evolution of Human-Autonomy Teams in Remotely Piloted Aircraft Systems Operations. Front. Commun. 4:50. doi: 10.3389/fcomm.2019.00050

Received: 15 February 2019; Accepted: 23 August 2019;

Published: 06 September 2019.

Edited by:

Eduardo Salas, Rice University, United States

Reviewed by:

Gilbert Ernest Franco, Beacon College, United States

Copyright © 2019 Demir, McNeese and Cooke. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Mustafa Demir, mdemir@asu.edu

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
