Abstract

Around the world, increasing numbers of people are attending informal science events, often ones that are part of multi-event festivals that cross cultural boundaries. For the researchers who take part, and the organizers, evaluating the events’ success, value, and effectiveness is hugely important. However, the use of traditional evaluation methods such as paper surveys and formal structured interviews poses problems in informal, dynamic contexts. In this article, we draw on our experience of evaluating events that literally took place in a field, and discuss evaluation methods we have found to be simple yet useful in such situations.

Introduction

Around the world, people come “in their droves” (Durant et al., 2016) (p. 1) to a panoply of informal science events. “Informal” encompasses a wide range of types of event (Bauer and Jensen, 2011), locations, and sizes of activity, from 30 people in a bar for a café scientifique (Grand, 2015), to families in a park (Bultitude and Sardo, 2012), to thousands packing a science stand-up show in a theater (Infinite Monkey Cage Live, 2016).

About two-thirds of British survey respondents (n = 1,749) (Castell et al., 2014) and two-fifths of Australian respondents (n = 1,020) (Searle, 2014) said they had attended a science-related leisure or cultural activity in 2014. The favored kind of activity depends on local culture; in the UK it often involves visiting a nature reserve, museum, or science center (Castell et al., 2014), whereas in Australia people are more likely to have attended a talk or lecture (Searle, 2014).

Attending science festivals remains a relatively niche activity; nevertheless, Bultitude et al. (2011) noted a marked acceleration in the growth of science festivals in the early 2000s. The scope of science festivals varies hugely, from week-long festivals that cover a city and draw on a multiplicity of performance modes, to small-scale, 1- or 2-day events (Wiehe, 2014), to the inclusion of science-themed activities in arts and cultural festivals (Venugopal and Featherstone, 2014; Sardo and Grand, 2016).

Clearly, the more events there are, of whatever size, the more scientists are likely to participate, and with participation comes the need to consider its effectiveness and value (HEFCE, 2014; ARC, 2016). The primary purpose of evaluation is to support this assessment: to consider the qualities of a program or project, and to derive lessons for one or more stakeholders (A CAISE, 2017).

That is not to say that meeting stakeholders’ requirements, although often fundamental, is the only benefit of evaluation, especially given that in recent years the burden on evaluation has grown to include demands that it demonstrate how scientists’ work is making a difference, and offer evidence of social and other impacts (Watermeyer, 2012; King et al., 2015). Stilgoe et al. (2014) deprecate the tendency to focus too much on the “how” of engagement and not enough on the “why”, suggesting that evaluations fail to consider the broad questions and implications of engagement activities. Wilkinson and Weitkamp (2016) frame the same concern more positively, suggesting that high-quality evaluation can do more than assess; it can promote innovation, change, and development and is vital if scientists wish to systematically and critically reflect on the process of their engagement (Neresini and Bucchi, 2011). In short, evaluation should enable scientists to understand “which aspects of an experience are working, in what ways, for which audiences and why” (Jensen, 2015) (p. 7).

Meeting such multi-faceted demands requires considerable expertise (King et al., 2015; Illingworth, 2017). Evaluation design can suffer from limited theorization and limited evidence on the effectiveness of different methods of engagement (Wortley et al., 2016). Jensen (2014, 2015) is one of the most formidable critics of the use of poor-quality and outmoded methods which, he argues, even when used by well-resourced organizations, can result in poor data and erroneous conclusions. Choosing appropriate methods that will enable an evaluation to meet its objectives is not always straightforward, given the subtle interplay of objectives, communication medium, and audiences (Cooke et al., 2017).

The best evaluation, especially if researchers intend to use it to demonstrate the impact of their research, calls for robust objectives, and a commitment to choosing appropriate methods. It goes beyond head counts, or using intuition and “visual impressions and informal chats” (Neresini and Bucchi, 2011) (p. 70) to decide whether audiences’ experiences are worthwhile (Jensen, 2015). Understanding audiences’ emotional and intellectual engagement (Illingworth, 2017) is part of the process that enables researchers to monitor actual against hoped-for outcomes, understand whether they have achieved their objectives and plot a route to improvement (Friedman, 2008; NCCPE, 2016; Burchell et al., 2017).

When planning evaluation, it is vital to consider the audiences and the most appropriate ways of engaging them (Wellcome Trust, 2017). The first step is often to consider when to evaluate. Evaluation is frequently “summative”; participants reflect afterward on their experience of the whole process. But “formative” evaluation can be equally useful; reflection before and during an event can identify if an event is unfolding as planned, what is affecting the progress of the event and what changes can be made in-action to increase the chances of success (Mathison, 2005).

How to evaluate—what methods to use—also needs careful consideration. Good online resources are readily available (Spicer, 2017). The evaluators’ aim should always be to gather robust and reliable data but there is no one right way; the choice depends on the event’s objectives. If, for example, one of the objectives is to reach overlooked audiences, then quantitative data on the numbers, age, sex, or ethnicity of participants are called for. If, however, the objective is to access participants’ observations, understanding, insights, and experiences (Tong et al., 2007) then qualitative data are needed. Although qualitative research methods are sometimes distrusted and regarded as being unreliable and anecdotal (Johnson and Waterfield, 2004), their interdisciplinary character and variety support the study of phenomena in natural settings and the capturing of subtleties, variation, and variety in people’s responses (Charmaz, 2006).

We suggest that where an event takes place matters equally: the setting has a profound influence on the way an event is evaluated. Methods must suit not only the audience but also the context. Formal methods (e.g., paper surveys or questionnaires) undoubtedly work well in formal settings such as lectures, or in schools, where the audience is seated, contained, and probably neatly arranged in rows, but such tools are hard to use in the push and pull of a crowd at a science festival (Wiehe, 2014). Jensen (2015) argues that technological tools such as apps or analysis of social media data are one answer to embedding evaluation in an institutional fabric. While we agree that such technologies are undoubtedly valuable for ongoing evaluation in extended events, or in permanent settings such as museums, they may not be feasible or financially viable for one-off events or those held in open spaces.

In this article, we offer our reflections on evaluation methodologies in informal settings, considering the question: “What works in the field?”

What Kind of “Field”?

The Events

The Latitude Festival (LF) is typical of summer festivals held in the UK and elsewhere. It is, literally, held in a field; a parkland in south-east England. Audiences and performers camp there for up to 4 days; venues range from full-scale theaters to pop-up, outdoor, and individual performances. A varied bill of musicians, bands, and artists features across four stages, and there are elements of theater, art, comedy, cabaret, poetry, politics, and literature (see footnote 1). In 2014 and 2015, the Wellcome Trust collaborated in a series of events at LF, including traditional single-person presentations, conversations between a presenter and a host, hosted panel discussions, theater and poetry performances, interactive events, and dance workshops.

Bristol Bright Night (BBN; see footnote 2) was funded by the Marie Skłodowska-Curie Actions, part of European Researchers’ Night (see footnote 3). The BBNs in 2014 and 2015 offered events including a Researchers’ Fair, long talks, “bite-sized” talks, interactive demonstrations, pop-up street theater, workshops, art installations, debates, film screenings, and free entry to At-Bristol science center and other informal venues (e.g., the Watershed and the Hippodrome) across the city of Bristol in south-west England.

It is important to note that although both festivals included some events for families, the majority, especially at LF, were aimed at adults. While some of the methods we describe can be adapted for use with children, our reflections are based on experiences of conducting evaluations among adults.

The Evaluation Methods

Our experience suggests that the informality of the events and venues in festivals should be reflected in the use of unobtrusive and minimally disruptive evaluation methods. In an informal event, the evaluator can be inconspicuous and immersed in the crowd, experiencing the event from the crowd’s perspective. Given that informal events are often “drop-in” or “pop-up,” with no defined beginning or end, evaluation should not disrupt their natural flow. Informal evaluation methods and observational tools can address and overcome these issues; here, we discuss some methods we have successfully used “in the field”.

“Snapshot” Interviews

Interviews allow the evaluator to closely examine the audience’s experiences of an event, offering direct access to people’s observations and insights (Tong et al., 2007). However, in the midst of a busy festival, people want to move quickly to the next event and few are willing to spend 20–30 min in an intensive interview. We find “snapshot” interviews to be a quick and focused method of capturing lively and immediate feedback (DeWitt, 2009; Bultitude and Sardo, 2012). They last no more than 2 min, asking a small number of consistent, clear, and structured questions. DeWitt (2009) argues that snapshots collect honest feedback, as their immediacy leads to “spur-of-the-moment answers.” On the other hand, their brevity can mean that the data, while broad, are shallow. This can, however, be mitigated by using simple and robust questions; e.g., “what attracted you to this activity?”

Snapshot interviews fit the informal and relaxed atmosphere of festivals, where people, in our experience, welcome the opportunity for conversations with new people. Participants have proved willing to be interviewed if they are assured it is going to be very short, which encourages high participation. Snapshots can be used with a variety of settings and audiences. They work best in events (e.g., presentations) that have a set finishing time, as the evaluator can easily catch audience members as they are leaving. They can be used in free-form events (e.g., interactive exhibitions), but this calls for more careful planning, as it is less clear when people are likely to be ready to share their thoughts.

Because snapshot interviews are so short, they are best audio-recorded (rather than captured in written notes) to maintain the relationship between interviewer and interviewee. At a festival, sampling specific demographic groups can be difficult, as people move from one activity to the next, depressing both representativeness and response rate. To overcome this, snapshot interviews can be combined with observation (below).

At multi-venue events, such as BBN, another problem is that people can be over-sampled, as evaluators in one location cannot easily know to whom evaluators at another have spoken. This can be mitigated by, for example, giving interviewees a small sticker to attach to their clothing; a simple signal that they have been interviewed. This also acts as a tally of the number of interviews. Another issue is when to approach audience members. It is crucial that this happens after people have spent a reasonable amount of time at the event, otherwise their feedback will be premature. Evaluators need to place themselves where they can sense the natural flow and approach people toward the end of their visit.

Online Questionnaires

Online questionnaires offer an alternative to snapshot interviews. If people have given permission, they can be sent a link to an online questionnaire shortly after the event. The disadvantage of online questionnaires is that people may ignore the invitation to participate. This can be mitigated by sending a carefully worded, friendly—and short—email alongside the link.

Like snapshots, online questionnaires do not disrupt people’s enjoyment and are a convenient way to gather data. Respondents are in their own space and so feel more comfortable and, with no human interviewer to please (Couper et al., 2002), are probably more honest in their answers. People attending informal science events often abandon paper questionnaires or leave them incomplete. In our experience, online questionnaires have better response rates than an equivalent paper questionnaire; the questionnaire sent out after BBN had a response rate of 33%.

We design questionnaires that are short and quick to complete, comprising mostly closed questions (e.g., would you come to a similar activity again?). Closed questions, which present the respondents with a list of options, do not discriminate against less responsive participants (De Vaus, 2002). Open-ended questions, which allow participants to provide answers in their own terms (Groves et al., 2004) can be included where more reflective answers are needed, but should ideally be kept to a minimum, as they tend to have a lower response rate and may be seen as tedious by the respondents (De Vaus, 2002).

Observations

The use of observation in evaluation draws on its rich history in ethnography, particularly because observation is considered well suited to natural situations. Observation permits an evaluator to become a “temporary member of the setting (and thus) more likely to get to the informal reality” (Gillham, 2010) (p. 28). Observations complement other evaluation methods, allowing the evaluator to contextualize other data, become aware of subtle or routine aspects of a process and gather more of a sense of an activity as a whole (Bryman, 2004). Observation is particularly useful when the goal is to find out how the audience interacts with and reacts to the activity or how they behave in a particular setting.

The self-effacement of evaluating as a participant–observer (to a greater or lesser degree) (Angrosino, 2007) is particularly important in small, intimate events, where a conspicuous evaluator could disturb the ambience. There are advantages to using one person to make all the observations, in that they will maintain a consistent outlook and behavior. However, this raises issues about the evaluator’s subjectivity; one way around this is to carry out comparative observations using a team of evaluators. If there are multiple evaluators, they will need to agree on how they will use a shared observation guide and on the method of conducting the observation.

Whether working singly or in a team, we devise a standard observation guide to allow evaluators to gather data as efficiently as possible. The guide includes space for observations on audience composition (age range, male/female ratio, etc.), outside problems (weather, noise, traffic, etc.), presenters (age, confidence, activity level, etc.), venue layout, audience engagement, and activity type and description. We suggest observers put themselves in an unobtrusive location, from where they can record data such as audience size, participation, interaction, and reactions. Using the guide, evaluators can take detailed, structured notes “live,” which can be supplemented by additional reflections immediately after the event.
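For evaluators who transcribe their notes afterwards, the observation guide described above could be mirrored in a simple record structure. The following is a minimal sketch in Python, not the guide we used: the class name `ObservationRecord`, its field names, and the example values are all hypothetical, chosen only to reflect the sections listed above.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ObservationRecord:
    """One evaluator's structured notes for one event (hypothetical layout)."""

    activity_type: str
    activity_description: str
    venue_layout: str = ""
    audience_size: int = 0
    audience_composition: str = ""  # e.g. age range, male/female ratio
    presenter_notes: str = ""  # e.g. age, confidence, activity level
    outside_problems: List[str] = field(default_factory=list)  # weather, noise, traffic
    engagement_notes: List[str] = field(default_factory=list)  # notes taken "live"
    reflections: List[str] = field(default_factory=list)  # added after the event

    def add_live_note(self, note: str) -> None:
        """Record an observation made during the event."""
        self.engagement_notes.append(note)

    def add_reflection(self, note: str) -> None:
        """Record a reflection written immediately after the event."""
        self.reflections.append(note)

    def unfilled_sections(self) -> List[str]:
        """Return the names of guide sections that are still empty."""
        sections = {
            "venue_layout": self.venue_layout,
            "audience_composition": self.audience_composition,
            "presenter_notes": self.presenter_notes,
            "engagement_notes": self.engagement_notes,
        }
        return [name for name, value in sections.items() if not value]


# Illustrative values only; they are not data from LF or BBN.
record = ObservationRecord(
    activity_type="interactive demonstration",
    activity_description="hypothetical drop-in stand",
)
record.audience_size = 25
record.outside_problems.append("noise from a neighbouring stage")
record.add_live_note("visitors queue to handle the exhibit")
print(record.unfilled_sections())
```

Using the same record for every observer gives a team a common structure to compare, and the `unfilled_sections` check is one way to prompt an evaluator to complete the guide before leaving the venue.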

Autonomous Methods

At festivals, multiple events occur concurrently and we have therefore found it useful to employ evaluation tools that allow us to collect data simultaneously from a variety of events and without an evaluator necessarily being present. A further bonus of autonomous methods is that because they do not involve interaction in person, audience members who dislike having to say “no” to an evaluator have an alternative route to contribute. Although an over-enthusiastic evaluator might not quite traumatize or alienate a potential participant (Allen, 2008), employing autonomous methods helps ensure the atmosphere of the event is unaffected and the disruption to participants’ enjoyment is minimized.

Two autonomous methods we have used are:

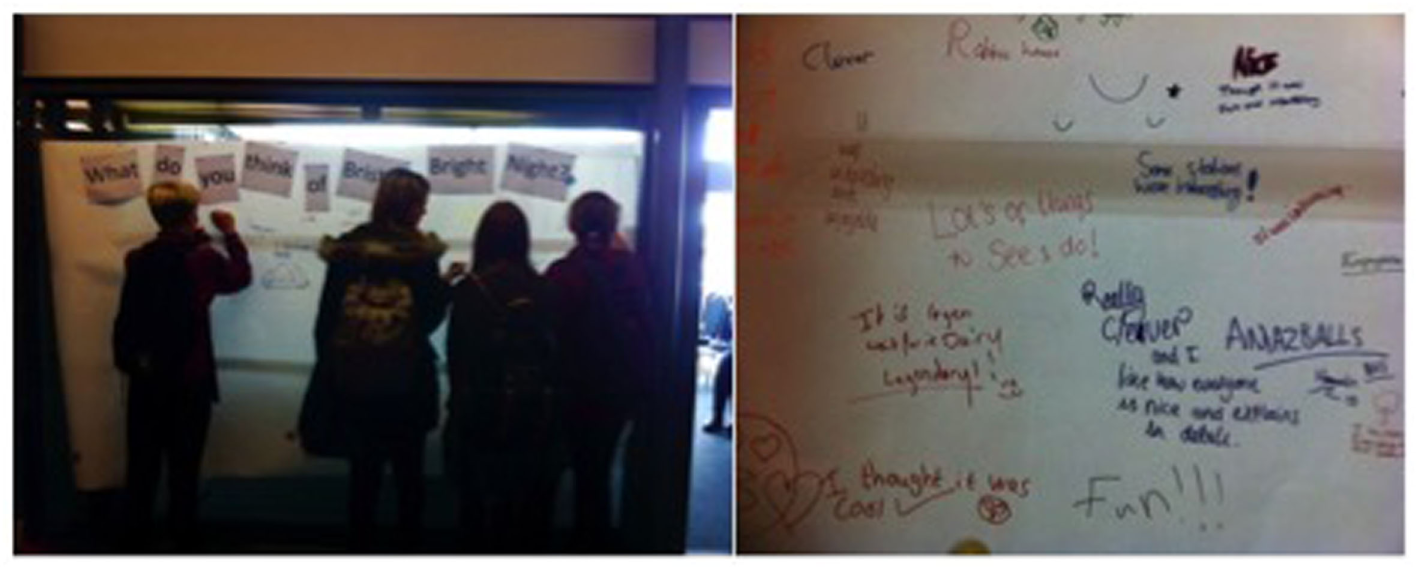

Graffiti Walls

A graffiti wall is simply a wall or huge piece of paper on which audience members can draw or write, giving evaluators an insight into respondents’ thoughts and comments about the event (Rennie and McClafferty, 2014). Graffiti walls can include simple questions or prompts to focus respondents’ thoughts (see Figure 1). This evaluation method encourages interaction among audiences and with evaluators; seeing others’ contributions encourages people to add their own. The walls can become very attractive, especially if evaluators promote drawing as a means of expression, and this encourages further feedback.

Figure 1. Graffiti wall at Bristol Bright Night (Sardo and Grand, 2016).

While photographs of the rich feedback on graffiti walls certainly make a graceful addition to any evaluation report, they can also be used to show changes in knowledge, behavior, or understanding through the use of before and after drawings (Wagoner and Jensen, 2010). For analysis, Spicer (2017) suggests using a simple form of category analysis to group comments or drawings by their commonalities, and thus allow the data to be used in the same way as other qualitative data. For example, if the wall asks the question “what was the best thing about xxx?” the categories might include named activities, specific presenters, general positive comments, knowledge gained, etc.
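Once comments have been transcribed from a wall, a first pass at this kind of category analysis could be sketched as below. This is an illustrative assumption, not the procedure Spicer (2017) describes: the category names follow the example above, but the trigger words, the function names `categorize` and `tally`, and the sample comments are hypothetical, and simple keyword matching is only a crude stand-in for an evaluator reading and coding each comment.

```python
from collections import Counter
from typing import Dict, List

# Hypothetical categories and trigger words; a real coding frame would be
# developed from the comments themselves.
CATEGORY_KEYWORDS: Dict[str, List[str]] = {
    "named activity": ["workshop", "talk", "demo"],
    "specific presenter": ["presenter", "speaker"],
    "knowledge gained": ["learned", "learnt", "never knew"],
    "general positive": ["great", "fun", "loved"],
}


def categorize(comment: str) -> List[str]:
    """Return every category whose trigger words appear in the comment."""
    text = comment.lower()
    matched = [
        category
        for category, words in CATEGORY_KEYWORDS.items()
        if any(word in text for word in words)
    ]
    # Comments that match nothing are kept for manual coding, not discarded.
    return matched or ["uncategorized"]


def tally(comments: List[str]) -> Counter:
    """Count how many comments fall into each category."""
    counts: Counter = Counter()
    for comment in comments:
        counts.update(categorize(comment))
    return counts


# Invented sample comments, for illustration only.
sample = [
    "Loved the dance workshop",
    "I never knew that about the brain",
    "???",
]
for category, count in tally(sample).items():
    print(f"{category}: {count}")
```

A comment can fall into more than one category, so the counts may sum to more than the number of comments. Substring matching will also produce false hits (for example, "fun" inside "funding"), which is one reason the "uncategorized" and borderline comments still need to be read by an evaluator.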

One drawback is that graffiti walls are not completely anonymous; although respondents will probably not include their name or details alongside their contribution, it would be possible for an evaluator to covertly observe characteristics such as age, ethnicity, and sex. Spicer (2012) recommends having a “suggestion box” for people who feel uncomfortable leaving private comments on a public wall.

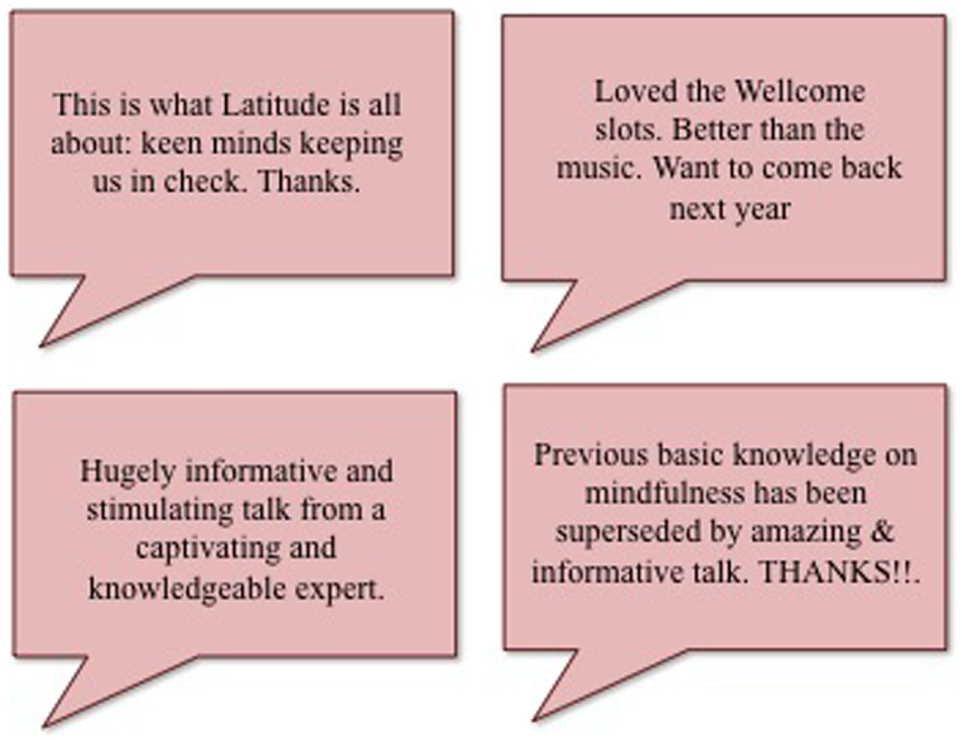

Feedback Cards

For LF and BBN, we designed cards with questions/prompts on one side and the other side left blank for responses (see Figure 2). Some questions were generic: “what do you think about this activity?” while others were specific: “how do you feel about neuroscience?” These are questions that might be asked in an online survey or snapshot interview (and indeed can allow the evaluator to triangulate data across an event), but the value of feedback cards lies not in any difference in the framing of the questions but in the possibility of reaching multiple or reluctant responders.

Figure 2. Examples of comments left on feedback cards, Latitude Festival (Grand, 2015).

Depending on the style of the event, cards can be placed on seats, displayed on exhibits, be available at the entrance/exit or handed out by evaluators. For anonymous collection, we ask people to post completed cards in highly visible, strategically located boxes.

Feedback cards are not wholly reliable. When visitors take the time to add their comments, we have gathered rich, insightful, meaningful, and interesting comments. However, cards can easily be ignored, mis-used, or left blank. The style of event also matters: at BBN, with predominantly drop-in or promenade events, feedback cards produced little data but they were extremely successful at LF, where events had fixed durations.

Finding an appropriate distribution method that is unobtrusive but nonetheless encourages feedback is pivotal. At BBN, some researchers were reluctant to have feedback cards placed in their display areas and where they did have them, did not encourage visitors to complete them. At the cost of autonomy, we have used evaluators to hand out cards and encourage responses. This can increase response rates but the style of the evaluator then plays its part. Evaluators must approach a large number of participants to get a decent number of responses, so they must be active in approaching and engaging. They must also [pace Allen (2008)] be unintimidating and unthreatening.

Reflection and Conclusion

As in all good horticulture, there is more to working in the field than simply counting the crop. Evaluation happens before, during and after an event; preparing the field properly and working in sympathy with the environment is crucial to success.

To support the development of engagement, evaluation can be both formative and summative, and go further than headcounts or anecdote. Traditional methods (e.g., in-depth interviews, focus groups, and paper questionnaires), designed for formal venues, do not work well in the informal settings where engagement increasingly happens. Evaluations should be tailored to the context, the venue, the audience, and the activity; for informal settings this means unobtrusive and non-disruptive methods that allow the evaluators to experience the event from the audience’s perspective.

Interviews gather rich data but in busy, dynamic contexts need to be kept short, to encourage participation by allowing people to quickly move on to the next activity; such “snapshot” interviews capture instant reactions and have high participation rates. An alternative is to use post-event online questionnaires; these remove potential interviewer bias and allow participants to feed back at a convenient time and consider their responses, but do mean that responses are delayed, rather than fresh. However, post-event online questionnaires are only usable if organizers have people’s permission to use their email addresses.

Detailed, structured observations can provide more than headcounts and activity logs. Observations permit contextualization of other data and reflect the whole process of an activity. Although they can suffer from subjectivity, this can be mitigated by the use of an observation guide.

Autonomous methods, such as graffiti walls and feedback cards, largely remove the evaluator as an active participant in the feedback process and so can be less irritating and intrusive. However, the completion rate relies heavily on evaluators subtly but positively encouraging participants to complete feedback.

Public engagement with science increasingly happens in informal spaces, as part of wider cultural activity, free-form, and flowing. Successful evaluation requires that we adapt our methods to suit that environment.

Statements

Ethics statement

The evaluation of the Wellcome Trust-sponsored science strand at the Latitude Festivals (LF) was approved by the Faculty Ethics Research Committee of the Faculty of Health & Applied Sciences, University of the West of England, Bristol, UK. The evaluation of the Bristol Bright Nights (BBN) was approved by the Faculty Ethics Research Committee of the Faculty of Environment & Technology, University of the West of England, Bristol, UK. All subjects gave informed consent for the collection of data. For snapshot interviews (used at LF) participants gave verbal consent, which was audio-recorded. Information sheets, giving details of how to withdraw data from the evaluation, were offered to participants. For online questionnaires (used at BBN), participants read an information text and were required to indicate their consent before they proceeded to the questionnaire. Information on how to withdraw data was included in the questionnaire. No personally identifying data were collected and all data were made anonymous before use. The data were securely stored on a password-protected computer.

Author contributions

Research, evaluations, and writing: AG and AS.

Funding

The evaluation of the Latitude festivals was commissioned by Latitude Festivals Ltd. (http://www.latitudefestival.com/). The evaluation of the Bristol Bright Nights (http://www.bnhc.org.uk/bristol-bright-night/) was funded as part of the Marie Skłodowska-Curie Actions, part of European Researchers Night (http://ec.europa.eu/research/researchersnight/index_en.htm).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Footnotes

1. http://www.latitudefestival.com/

2. http://www.bnhc.org.uk/bristol-bright-night/

3. http://ec.europa.eu/research/researchersnight/index_en.htm

References

A CAISE. (2017). Evaluation [Online]. Center for Advancement of Informal Science. Available at: http://informalscience.org/evaluation

Allen, S. (2008). “Tools, tips, and common issues in evaluation experimental design choices,” in Framework for Evaluating Impacts of Informal Science Education Projects: Report from a National Science Foundation Workshop, ed. A. Friedman (National Science Foundation), 31–43. Available at: http://www.aura-astronomy.org/news/EPO/eval_framework.pdf

Angrosino, M. (2007). Doing Ethnographic and Observational Research. Thousand Oaks, CA: SAGE.

ARC. (2016). Australian Research Council National Innovation and Science Agenda [Online]. Available at: http://www.arc.gov.au/sites/default/files/filedepot/Public/ARC/consultation_papers/ARC_Engagement_and_Impact_Consultation_Paper.pdf

Bauer, M., and Jensen, N. P. (2011). The mobilization of scientists for public engagement. Public Understand. Sci. 20, 3. doi: 10.1177/0963662510394457

Bryman, A. (2004). Social Research Methods. Oxford: Oxford University Press.

Bultitude, K., McDonald, D., and Custead, S. (2011). The rise and rise of science festivals: an international review of organised events to celebrate science. Int. J. Sci. Educ. Part B 1, 165–188. doi: 10.1080/21548455.2011.588851

Bultitude, K., and Sardo, A. M. (2012). Leisure and pleasure: science events in unusual locations. Int. J. Sci. Educ. 34, 2775–2795. doi: 10.1080/09500693.2012.664293

Burchell, K., Sheppard, C., and Chambers, J. (2017). A ‘work in progress’? UK researchers and participation in public engagement. Res. All 1, 198–224. doi: 10.18546/RFA.01.1.16

Castell, S., Charlton, A., Clemence, M., Pettigrew, N., Pope, S., Quigley, A., et al. (2014). Public Attitudes to Science 2014. London: IpsosMori Social Research Institute. Available at: https://www.ipsos.com/ipsos-mori/en-uk/public-attitudes-science-2014

Charmaz, K. (2006). Constructing Grounded Theory: A Practical Guide through Qualitative Analysis. London: SAGE.

Cooke, S., Gallagher, A., Sopinka, N., Nguyen, V., Skubel, R., Hammerschlag, N., et al. (2017). Considerations for effective science communication. FACETS 2, 233. doi: 10.1139/facets-2016-0055

Couper, M., Traugott, M., and Lamias, M. (2002). Web survey design and administration. Public Opin. Q. 65, 230–253.

De Vaus, D. (2002). Surveys in Social Research. Social Research Today, 5th Edn. New York: Routledge.

DeWitt, J. (2009). “Snapshot interviews,” in Poster Presentation at the ECSITE Annual Conference; 2009 June 4–6; Budapest, Hungary. Available at: http://www.raeng.org.uk/societygov/public_engagement/ingenious/pdf/JED_poster_ECSITE09.pdf

Durant, J., Buckley, N., Comerford, D., Fogg-Rogers, L., Fooshee, J., Lewenstein, B., et al. (2016). Science Live: Surveying the Landscape of Live Public Science Events [Online]. Boston & Cambridge, UK: ScienceLive. Available at: https://livescienceevents.org/portfolio/read-the-report/

Friedman, A. (2008). Framework for Evaluating Impacts of Informal Science Education Projects [Online]. Available at: http://www.aura-astronomy.org/news/EPO/eval_framework.pdf

Gillham, B. (2010). Case Study Research Methods. London/New York: Continuum.

Grand, A. (2015). “Café Scientifique,” in Encyclopedia of Science Education, ed. R. Gunstone (Heidelberg: Springer-Verlag). Available at: http://www.springerreference.com

Groves, R., Fowler, F., Couper, M., Lepkowski, J., Singer, E., and Tourangeau, R. (2004). Survey Methodology. Wiley Series in Survey Methodology, 1st Edn. Hoboken, NJ: Wiley-Interscience.

HEFCE. (2014). REF Impact [Online]. Available at: http://www.hefce.ac.uk/rsrch/REFimpact/

Illingworth, S. (2017). Delivering effective science communication: advice from a professional science communicator. Semin. Cell Dev. Biol. 70, 10–16. doi: 10.1016/j.semcdb.2017.04.002

Infinite Monkey Cage Live. (2016). About [Blog]. Available at: http://www.infinitemonkeycage.com/about/

Jensen, E. (2014). The problems with science communication evaluation. JCOM 13, C04.

Jensen, E. (2015). Highlighting the value of impact evaluation: enhancing informal science learning and public engagement theory and practice. JCOM 14, Y05.

Johnson, R., and Waterfield, J. (2004). Making words count: the value of qualitative research. Physiother. Res. Int. 9, 121–131. doi: 10.1002/pri.312

King, H., Steiner, K., Hobson, M., Robinson, A., and Clipson, H. (2015). Highlighting the value of evidence-based evaluation: pushing back on demands for ‘impact’. JCOM 14, A02.

Mathison, S. (2005). Encyclopaedia of Evaluation. Thousand Oaks, CA: SAGE.

NCCPE. (2016). Evaluating Public Engagement [Online]. Available at: https://www.publicengagement.ac.uk/plan-it/evaluating-public-engagement

Neresini, F., and Bucchi, M. (2011). Which indicators for the new public engagement activities? An exploratory study of European research institutions. Public Understand. Sci. 20, 64. doi: 10.1177/0963662510388363

Rennie, L., and McClafferty, T. (2014). ‘Great Day Out at Scitech’: Evaluating Scitech’s Impact on Family Learning and Scientific Literacy. Perth, WA: Scitech. Available at: https://www.scitech.org.au/images/Business_Centre/PDF/2014_Great_Day_Out_At_Scitech_visitor_impact_study.pdf

Sardo, A. M., and Grand, A. (2016). Science in culture: audiences’ perspectives on engaging with science at a summer festival. Sci. Commun. 38, 251. doi: 10.1177/1075547016632537

Searle, S. (2014). How do Australians Engage with Science? Preliminary Results from a National Survey. Australian National Centre for the Public Awareness of Science (CPAS), The Australian National University.

Spicer, S. (2012). Evaluating Your Engagement Activities. Developing an Evaluation Plan. The University of Manchester. Available at: http://www.engagement.manchester.ac.uk/resources/guides_toolkits/Writing-an-evaluation-plan-for-PE.pdf

Spicer, S. (2017). The nuts and bolts of evaluating science communication activities. Semin. Cell Dev. Biol. 70, 17–25. doi: 10.1016/j.semcdb.2017.08.026

Stilgoe, J., Lock, S. J., and Wilsdon, J. (2014). Why should we promote public engagement with science? Public Underst. Sci. 23, 4–15. doi: 10.1177/0963662513518154

Tong, A., Sainsbury, P., and Craig, J. (2007). Consolidated criteria for reporting qualitative research (COREQ), a 32-item checklist for interviews and focus groups. Int. J. Qual. Health Care 19, 349–357. doi: 10.1093/intqhc/mzm042

Venugopal, S., and Featherstone, H. (2014). “Einstein’s garden: an exploration of visitors’ cultural associations of a science event at an arts festival,” in UWE Science Communication Postgraduate Papers, ed. A. Grand (Bristol, UK: University of the West of England, Bristol). Available at: http://eprints.uwe.ac.uk/22753

Wagoner, B., and Jensen, E. (2010). Science learning at the zoo: evaluating children’s developing understanding of animals and their habitats. Psychol. Soc. 3, 65–76.

Watermeyer, R. (2012). From engagement to impact? Articulating the public value of academic research. Tertiary Educ. Manage. 18, 115–130. doi: 10.1080/13583883.2011.641578

Wellcome Trust. (2017). Public Engagement Resources for Researchers [Online]. Available at: https://wellcome.ac.uk/sites/default/files/planning-engagement-guide-wellcome-nov14.pdf

Wiehe, B. (2014). When science makes us who we are: known and speculative impacts of science festivals. JCOM 13, C02.

Wilkinson, C., and Weitkamp, E. (2016). Evidencing Impact: The Challenges of Mapping Impacts from Public Engagement and Communication [Blog]. Available at: blogs.lse.ac.uk/impactofsocialsciences/2016/06/17/evidencing-impact-the-challenges-of-mapping-impacts-from-public-engagement

Wortley, S., Street, J., Lipworth, W., and Howard, K. (2016). What factors determine the choice of public engagement undertaken by health technology assessment decision-making organizations? J. Health Organ. Manag. 30, 872. doi: 10.1108/JHOM-08-2015-0119

Summary

Keywords

evaluation, methodology, informal events, science communication, public engagement, non-traditional methods

Citation

Grand A and Sardo AM (2017) What Works in the Field? Evaluating Informal Science Events. Front. Commun. 2:22. doi: 10.3389/fcomm.2017.00022

Received

20 September 2017

Accepted

23 November 2017

Published

11 December 2017

Volume

2 - 2017

Edited by

Anabela Carvalho, University of Minho, Portugal

Reviewed by

Brian Trench, Dublin City University, Ireland; Karen M. Taylor, University of Alaska Fairbanks, United States

Copyright

© 2017 Grand and Sardo.

This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ann Grand, ann.grand@uwa.edu.au

Specialty section: This article was submitted to Science and Environmental Communication, a section of the journal Frontiers in Communication

Disclaimer

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.