REVIEW article

Front. Cell Dev. Biol., 17 February 2023

Sec. Molecular and Cellular Pathology

Volume 11 - 2023 | https://doi.org/10.3389/fcell.2023.1133680

This article is part of the Research Topic Artificial Intelligence Applications in Chronic Ocular Diseases.

Zuhui Zhang1,2†, Ying Wang1†, Hongzhen Zhang1, Arzigul Samusak1, Huimin Rao1, Chun Xiao1, Muhetaer Abula1, Qixin Cao3* and Qi Dai1,2*

With the rapid development of computer technology, the application of artificial intelligence (AI) in ophthalmology research has gained prominence in modern medicine. AI-related research in ophthalmology previously focused on the screening and diagnosis of fundus diseases, particularly diabetic retinopathy, age-related macular degeneration, and glaucoma. Because fundus images are relatively fixed in form, their standards are easy to unify. More recently, AI research on ocular surface diseases has also increased. The main challenge in this area is that the images involved are complex and span many modalities. This review therefore summarizes current AI research and technologies used to diagnose ocular surface diseases such as pterygium, keratoconus, infectious keratitis, and dry eye, with the aim of identifying mature AI models suitable for research on ocular surface diseases and potential algorithms that may be used in the future.

Artificial intelligence (AI) is a frontier field of computer science whose goal is to use computers to solve practical problems (Rahimy, 2018). The concept was introduced at a workshop at Dartmouth College in 1956 (Lawrence et al., 2016), where participants discussed the theories and principles of simulating intelligence with machines. Since then, the development of AI has been uneven, constrained by the technical conditions of each period. Nevertheless, with the rapid development of computer technology, the application of AI in medical research has become a hot topic. Recently, healthcare has become one of the frontiers of AI applications, particularly for image-centric specialties such as ophthalmology (Ting et al., 2019), cardiology (Dey et al., 2019), radiology (Saba et al., 2019), and oncology (Niazi et al., 2019). These applications use big data technology to collect massive amounts of clinical data and images, and then apply AI to these data to guide or assist doctors in clinical decision-making, drawing on the computing power and data-mining ability of cloud computing. An AI model learns disease characteristics from a training set and applies them to a validation or test set to diagnose the corresponding disease. AI can segment anatomical structures and abnormal regions in images, and it can classify images into different categories according to disease characteristics. AI algorithms include traditional machine learning (ML) algorithms and deep learning (DL) algorithms. Traditional ML algorithms mainly include linear regression, logistic regression, support vector machine (SVM), decision tree, and random forest (RF) algorithms, and usually do not involve large-scale neural networks. DL algorithms mainly use multimedia data sets (such as images, videos, and sounds) and usually involve large-scale neural networks, including artificial neural networks (ANN), convolutional neural networks (CNN), and recurrent neural networks (RNN).
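To make the train/test workflow described above concrete, the sketch below fits a logistic regression, one of the traditional ML algorithms listed, by plain batch gradient descent on a training set and then applies it to unseen cases. The two input features and all data values are hypothetical placeholders (for example, two normalized image-derived measurements), not data from any cited study; a production pipeline would more likely rely on a library such as scikit-learn.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function mapping a linear score to a probability."""
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic_regression(X, y, lr=0.1, epochs=1000):
    """Fit weights and bias by batch gradient descent on the mean log-loss."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            p = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b)
            err = p - yi  # gradient of the log-loss w.r.t. the linear score
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict(w, b, X, threshold=0.5):
    """Label each case 1 (disease) if its predicted probability reaches the threshold."""
    return [
        1 if sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) >= threshold else 0
        for xi in X
    ]


# Hypothetical toy data: two normalized features per eye; 1 = disease, 0 = normal.
X_train = [[0.1, 0.2], [0.2, 0.1], [0.9, 0.8], [0.8, 0.9]]
y_train = [0, 0, 1, 1]
X_test = [[0.15, 0.15], [0.85, 0.85]]

w, b = train_logistic_regression(X_train, y_train)
print(predict(w, b, X_test))  # [0, 1]
```

The model never sees the test cases during fitting; it only reuses the weights learned from the training set, which is the same separation of training and test data that the studies below rely on.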

Previously, most studies on the application of AI in ophthalmology focused on glaucoma (Devalla et al., 2018; Kucur et al., 2018; Asaoka et al., 2019; Wang M. et al., 2019), fundus diseases (Gulshan et al., 2016; Burlina et al., 2017; Ting et al., 2017; Venhuizen et al., 2018; Nagasato et al., 2019), and cataracts (Gao et al., 2015; Yang et al., 2016; Long et al., 2017; Wu et al., 2019; Xu et al., 2020). Whereas retinal diseases can largely be diagnosed from fundus images acquired with ophthalmoscopy or fundus photography, ocular surface diseases require multiple examinations because of the complexity of the ocular surface's structure and physiological function. In recent years, as AI has expanded in ophthalmology, a growing body of research has applied it to ocular surface diseases such as pterygium, keratoconus (KC), infectious keratitis, and dry eye. Herein, we review research on the application of AI to ocular surface diseases to guide clinical work. The remainder of this paper is organized as follows: Sections 2–7 present the performance of AI in diagnosing ocular surface diseases, covering pterygium, KC, infectious keratitis, dry eye, and other ocular surface diseases.

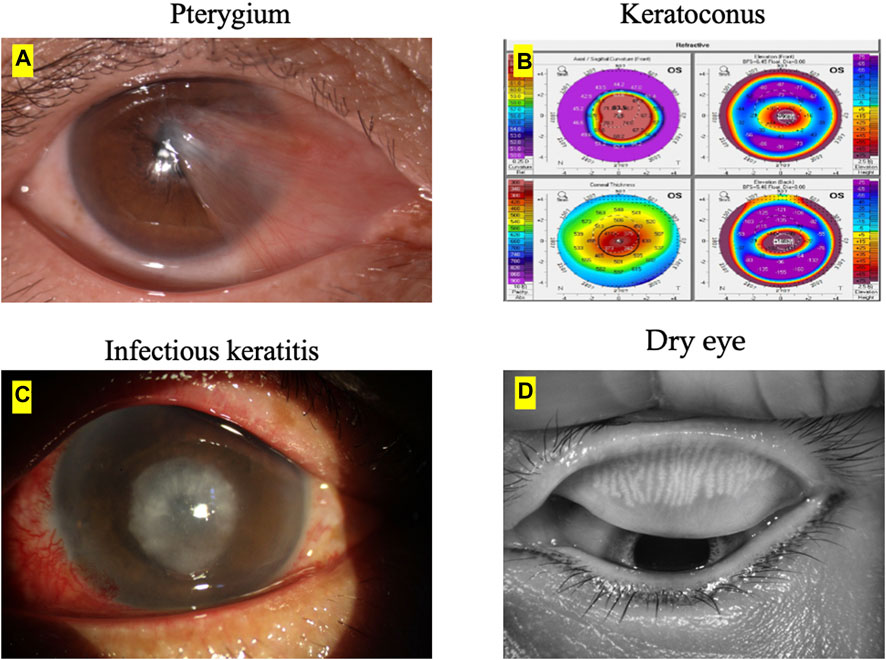

Example images of ocular surface diseases and the imaging modalities used to diagnose each are presented in Figure 1. The main imaging modalities for ocular surface diseases include anterior segment photography, Pentacam, slit-lamp imaging, and Keratograph 5M.

FIGURE 1. Ocular surface diseases and imaging modalities. (A) The main imaging modality for pterygium is anterior segment photography. (B) The main imaging modality for keratoconus is Pentacam. (C) The main imaging modality for infectious keratitis is slit-lamp imaging. (D) The main imaging modality for dry eye is Keratograph 5M.

A systematic literature search was performed in PubMed and Web of Science to retrieve as many studies as possible that applied ML to data related to ocular surface diseases. The following keywords were used: all combinations of “ocular surface,” “pterygium,” “keratoconus,” “keratitis,” “dry eye,” and “meibomian gland dysfunction (MGD)” with “artificial intelligence,” “machine learning,” “deep learning,” “convolutional neural network,” and “decision tree.” No date restrictions were applied to any of the searches.

Pterygium is a common eye disorder in which abnormal fibrovascular tissue grows from the inner side of the eye toward the cornea (Zulkifley et al., 2019). Because it is directly linked to excessive exposure to ultraviolet radiation, farmers and fishermen are two high-risk groups (Gazzard et al., 2002; Abdani et al., 2019). The condition can be better managed when it is detected early. Moreover, at later stages pterygium tissue can encroach on the pupil area, possibly causing visual impairment (Tomidokoro et al., 2000; Clearfield et al., 2016; Wang F. et al., 2021). Currently, pterygium grading is mainly based on doctors' subjective evaluation, so AI could be used to develop an efficient, objective automatic grading system (Hung et al., 2022). In the vast rural and remote areas that lack ophthalmic resources, AI diagnostic technology can provide local patients with a convenient pterygium screening method, spare them unnecessary trips to county or prefectural hospitals, and reduce their burden. Such a system can also suggest treatment options, clarify the indications for surgery, facilitate the timely referral of patients needing surgery from the grassroots level, and help allocate medical resources rationally. Table 1 summarizes AI applications for the diagnosis of pterygium.

In 2012, Gao et al. (2012) proposed a pterygium detection system based on color information; its pupil detection technique, which uses corneal images, achieved 85.38% accuracy. Similarly, Mesquita and Figueiredo (2012) applied a circular Hough transform to segment the iris and then applied a region-growing algorithm based on Otsu's thresholding method to the segmented iris area to segment the pterygium tissue. Wan Zaki et al. (2018) developed an image-processing method based on anterior segment photographs (ASP) that differentiates pterygium from normal eyes using four modules: preprocessing, corneal segmentation, feature extraction, and classification. Its performance was evaluated with an SVM and an ANN, yielding a sensitivity of 88.7%, a specificity of 88.3%, and an area under the curve (AUC) of 95.6%. However, the imperfect imaging setup is a limitation of that study. Abdani et al. (2020) and Abdani et al. (2021) proposed automatic pterygium tissue segmentation using CNN, which is useful for detecting pterygium from early to late stages. Both studies reported high overall accuracy [92.20% (Abdani et al., 2020) and 93.30% (Abdani et al., 2021)].
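Otsu's method, used above as the basis of the region-growing step, picks the gray-level threshold that maximizes the between-class variance of the two pixel groups it creates. The sketch below shows only that thresholding step on a flat list of 8-bit gray levels; the circular Hough transform and the region-growing logic of Mesquita and Figueiredo (2012) are not reproduced, and the pixel values are hypothetical.

```python
def otsu_threshold(pixels, levels=256):
    """Return t maximizing between-class variance; pixels <= t form the first class."""
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))

    best_t, best_score = 0, -1.0
    w0 = 0    # pixel count at or below the candidate threshold
    sum0 = 0  # intensity sum of those pixels
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue  # one class is empty, so the split is undefined
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        # w0 * w1 * (mu0 - mu1)^2 is the between-class variance scaled by total^2,
        # a constant factor that does not change which t is best.
        score = w0 * w1 * (mu0 - mu1) ** 2
        if score > best_score:
            best_t, best_score = t, score
    return best_t


# Hypothetical gray levels: darker background pixels and brighter tissue pixels.
pixels = [48, 50, 52, 55] * 25 + [190, 200, 205, 210] * 25
t = otsu_threshold(pixels)
mask = [1 if p > t else 0 for p in pixels]  # 1 = candidate lesion pixel
print(t, sum(mask))  # 55 100
```

Because the threshold is derived from each image's own histogram, it adapts to differences in illumination between photographs, which is one reason it is a common seed for region growing.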

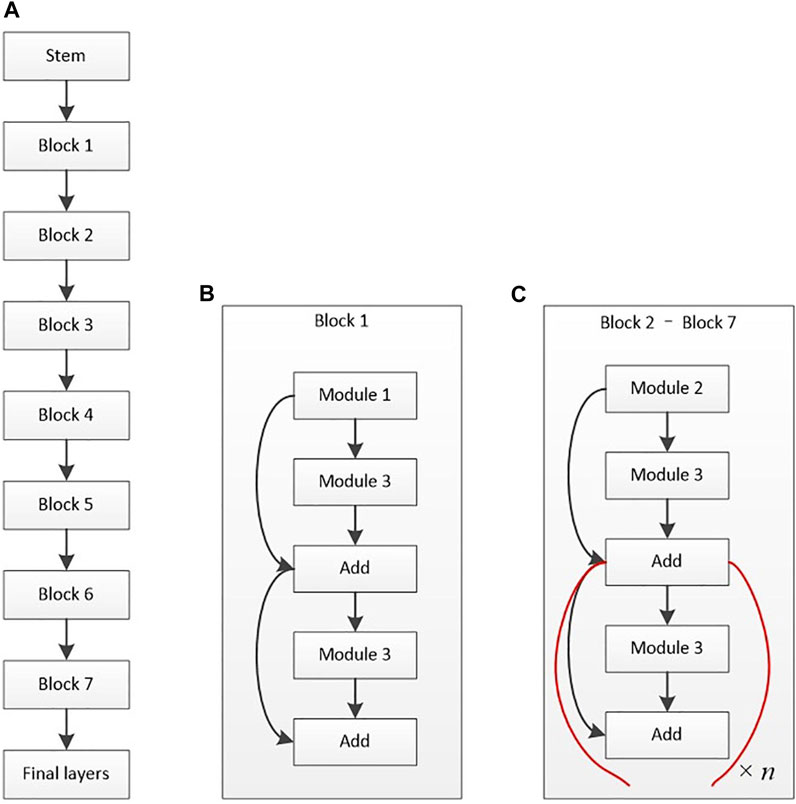

Zhang et al. (2018) also used a DL diagnosis system that can automatically diagnose various eye diseases from a patient's ASP and provide targeted, diagnosis-based treatment recommendations. Its final stage provides treatment advice based on medical experience and AI, with an accuracy of >95% for pterygium. Zulkifley et al. (2019) proposed a DL approach (Pterygium-Net) based on fully convolutional neural networks (FCNN) with transfer learning to detect and localize pterygium automatically. Pterygium-Net achieved a high average detection sensitivity and specificity of 0.95 and 0.983, respectively. For pterygium tissue localization, the algorithm achieved an accuracy of 0.811 with a low failure rate of 0.053. Xu W. et al. (2021) developed an intelligent DL-based system to diagnose pterygium (Figure 2 depicts the architectural diagram of EfficientNet-B6, created by Xu et al.). Experts and the AI diagnosis system categorized the images into three categories: normal, pterygium observation, and pterygium surgery. The AI system achieved an accuracy of 94.68% on the 470 test images, diagnostic consistency was high, and the kappa values of all three groups were above 0.85. The system can therefore not only detect the presence of pterygium but also grade its severity. Fang et al. (2022) evaluated the performance of a DL algorithm for detecting the presence and extent of pterygium from ASP taken with slit-lamp and handheld cameras. The algorithm detected referable pterygium with high sensitivity and specificity, suggesting that a handheld camera might serve as a simple screening tool for referable pterygium.
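The kappa values reported by Xu W. et al. (2021) measure agreement between the AI system and the experts beyond what chance alone would produce. A minimal sketch of Cohen's kappa for two raters over the three categories named above follows; the label sequences are hypothetical, and the original study may have computed kappa per group or with a different variant.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa: (observed agreement - chance agreement) / (1 - chance agreement)."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must label the same non-empty set of images")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: probability that both raters pick the same category independently.
    categories = count_a.keys() | count_b.keys()
    expected = sum(count_a[c] * count_b[c] for c in categories) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical category throughout
    return (observed - expected) / (1.0 - expected)


# Hypothetical labels for four images from an expert and from the AI system.
expert = ["normal", "normal", "observation", "surgery"]
ai = ["normal", "normal", "observation", "observation"]
print(round(cohens_kappa(expert, ai), 3))  # 0.6
```

Here raw agreement is 75%, but because 37.5% agreement would be expected by chance from the label frequencies alone, kappa is only 0.6; this is why kappa is a stricter consistency measure than percentage agreement.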

FIGURE 2. Architectural diagram of EfficientNet-B6 (Xu W. et al., 2021). (A) Basic architecture. (B) Structure of block 1. (C) Structure of blocks 2–7.

Hung et al. (2022) proposed a DL system to predict pterygium recurrence. The algorithm showed higher specificity (80.00%) than sensitivity (66.67%) in predicting recurrence. Wan et al. (2022) proposed a DL system for measuring the pathological progression of pterygium. Such systems support accurate diagnosis and can help ophthalmologists detect changes in pterygium status in a timely manner and plan surgical strategies. Beyond segmentation and diagnosis, Kim et al. (2022) developed AI software for quantitative analysis of immunochemical images of pterygium and concluded that it might improve the reliability and accuracy of evaluating histopathological specimens obtained after ophthalmic surgery. Overall, these studies show that AI models can achieve satisfactory results in the diagnosis, classification, and prediction of pterygium.
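Most results in this review are reported as sensitivity, specificity, and accuracy, all of which derive from the four cells of a binary confusion matrix. The helper below is a generic sketch rather than code from any cited study, and the counts in the usage line are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ConfusionMatrix:
    tp: int  # diseased eyes flagged as diseased
    fn: int  # diseased eyes missed
    tn: int  # healthy eyes correctly cleared
    fp: int  # healthy eyes wrongly flagged

    @property
    def sensitivity(self) -> float:
        """True-positive rate: share of diseased eyes that are detected."""
        return self.tp / (self.tp + self.fn)

    @property
    def specificity(self) -> float:
        """True-negative rate: share of healthy eyes correctly cleared."""
        return self.tn / (self.tn + self.fp)

    @property
    def accuracy(self) -> float:
        """Share of all eyes that are classified correctly."""
        return (self.tp + self.tn) / (self.tp + self.fn + self.tn + self.fp)


cm = ConfusionMatrix(tp=90, fn=10, tn=80, fp=20)  # hypothetical counts
print(cm.sensitivity, cm.specificity, cm.accuracy)  # 0.9 0.8 0.85
```

Because accuracy pools both classes, it can look high on imbalanced data even when sensitivity is low, which is one reason to report sensitivity and specificity separately, as the recurrence study above does.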

KC is a non-inflammatory, asymmetric, ectatic corneal disorder characterized by progressive corneal thinning and impaired vision (Henein and Nanavaty, 2017; Mas Tur et al., 2017). Because intermediate and advanced KC present typical signs, their clinical diagnosis is straightforward (Gomes et al., 2015). Atypical KC includes KC suspect (KCS), forme fruste KC (FFKC), and subclinical KC (SKC). Unfortunately, the symptoms and signs of atypical KC are not obvious and are difficult to identify from routine examination results. Most KC studies have analyzed corneal morphological metrics from Pentacam, and AI-based analysis of these metrics can enable early KC detection. Early AI research on KC relied on corneal topography data to train neural networks to distinguish KC from other corneal conditions such as astigmatism, corneal transplantation, and post-photorefractive keratectomy (PRK) corneas. Table 2 summarizes AI applications for the diagnosis of KC.

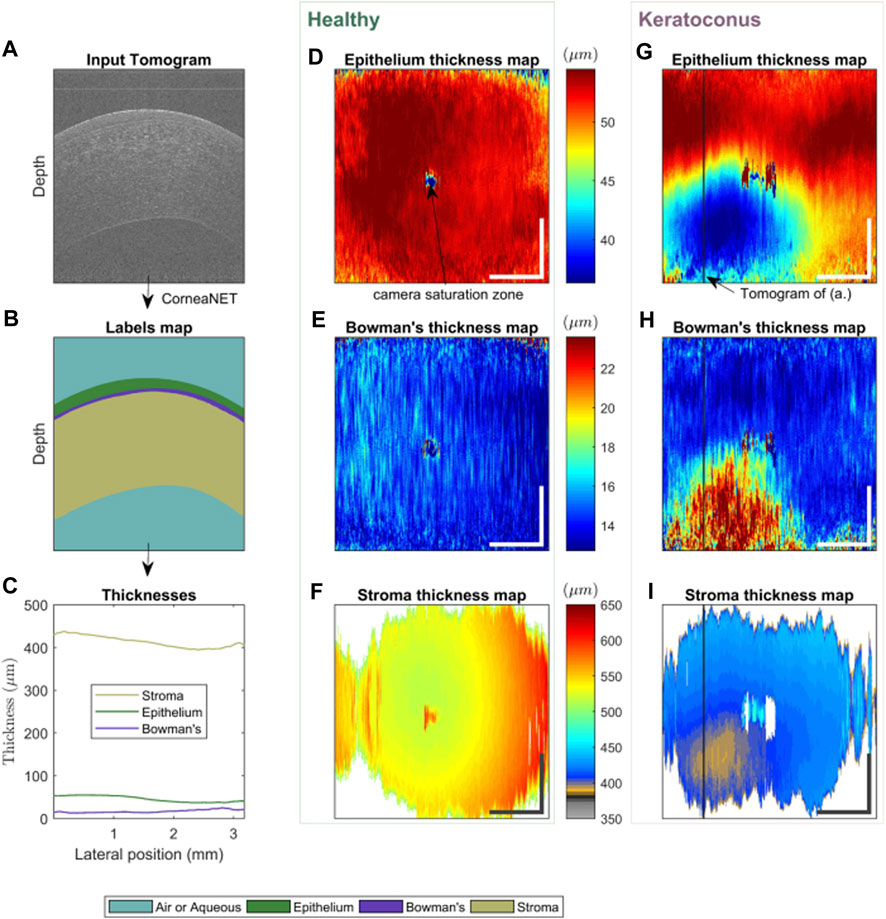

The advantage of these AI algorithms lies in their potential to help clinicians differentiate between KC and normal eyes. In 1997, Smolek and Klyce (1997) designed a classification neural network for KC screening to detect the presence of KC or KCS, using 10 topographic indices as network inputs. The model showed 100% accuracy, specificity, and sensitivity on the test set. Accardo and Pensiero (2002) proposed an ANN method to identify KC from corneal topographies. On the test set, the global sensitivity and specificity were 94.1% (100% for KC alone) and 97.6% (98.6% for KC alone), respectively. The study highlights the potential of AI for automatic screening of early KC and shows that using the topographic parameters of both eyes simultaneously improves the discriminative capability of the ANN. Twa et al. (2005) applied decision tree induction, an automated machine learning classification (MLC) approach, to objectively and quantitatively differentiate between normal and KC corneal shapes, achieving an accuracy of 92% and an area under the receiver operating characteristic (ROC) curve of 0.97. Arbelaez et al. (2012) used an SVM to integrate data from the corneal surfaces and pachymetry. Precision improved the most when posterior corneal surface data were included, particularly in SKC cases: sensitivity increased from 89.3% to 96.0% in abnormal eyes, from 92.8% to 95.0% in eyes with KC, from 75.2% to 92.0% in eyes with SKC, and from 93.1% to 97.2% in normal eyes. Including posterior corneal surface and corneal thickness data therefore further improved diagnostic accuracy. Smadja et al. (2013) applied an MLC to discriminate between normal and KC eyes with 100% sensitivity and 99.5% specificity, and between normal and FFKC eyes with 93.6% sensitivity and 97.2% specificity.
The MLC showed excellent performance in discriminating between normal and FFKC eyes, providing a tool closer to automated medical reasoning that may enable clinicians to detect FFKC before refractive surgery. However, its effect requires further validation, since only 372 eyes of 197 patients were included. Similarly, Ruiz Hidalgo et al. (2016) classified 860 eyes into five groups by combining 22 parameters obtained from Pentacam measurements and trained an MLC. The accuracy for the FFKC versus normal task was 93.1%, with 79.1% sensitivity and 97.9% specificity for FFKC discrimination. To account for differences between the two eyes, Kovács et al. (2016) included a “bilateral data” parameter and used a neural network for modeling. Using bilateral data on the index of height decentration, the system had higher accuracy than a single unilateral parameter in differentiating the eyes of all patients with KC from control eyes (area under the ROC curve, 0.96 versus 0.88). Yousefi et al. (2018) developed an unsupervised ML algorithm and applied it to identify and monitor KC stages, using 420 corneal topography, elevation, and pachymetry parameters. The specificity of this method for distinguishing normal eyes from those with KC was 94.1%, and its sensitivity for distinguishing KC from normal eyes was 97.7%. This technique could therefore be adopted in corneal clinics and research settings to diagnose and monitor KC and to improve our understanding of corneal changes in the disease. Zou et al. (2019) also investigated the diagnosis of healthy corneas, SKC, and KC through ML modeling of Pentacam data collected from participants in 2018. The diagnostic accuracy of this model for SKC and normal corneas was 95.53% and 96.67%, respectively, and the AUC on the validation set was 99.36%. For KC versus normal corneas, the diagnostic accuracy was 98.91%, and the AUC on the validation set was 99.82%.
The model's diagnostic accuracy of 95.53% was significantly better than that of residents (93.55%). Dos Santos et al. (2019) used a custom-built ultra-high-resolution OCT (UHR-OCT) system to scan 72 normal and 70 KC eyes. Overall, 20,160 images were labeled and used for training in a supervised learning approach. A custom neural network architecture, CorneaNet [Figure 3 depicts CorneaNet, created by Dos Santos et al. (2019)], was designed and trained. CorneaNet segmented both normal and KC images with high accuracy (validation accuracy, 99.56%), and it could potentially detect KC early and, more generally, help examine other diseases that change corneal morphology. Issarti et al. (2019) established a stable, low-cost computer-aided diagnosis (CAD) system for early KC detection. The CAD system combines a custom-made mathematical model, a feedforward neural network (FFN), and a Grossberg-Runge Kutta architecture to detect clinical and suspect KC. The final diagnostic accuracy was >95% for KCS, mild KC, and moderate KC. Compared with traditional ML techniques, the algorithm also reduced computation time by 70% while increasing stability and convergence.
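Several studies above summarize performance as the area under the ROC curve. That area equals the probability that a randomly chosen diseased eye receives a higher model score than a randomly chosen normal eye (the normalized Mann–Whitney U statistic), which the sketch below computes directly by pairwise comparison. The scores are hypothetical model outputs, not values from any cited study.

```python
def roc_auc(pos_scores, neg_scores):
    """AUC as P(score_pos > score_neg), counting ties as one half."""
    if not pos_scores or not neg_scores:
        raise ValueError("need at least one positive and one negative score")
    wins = 0.0
    for p in pos_scores:
        for q in neg_scores:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))


kc_scores = [0.9, 0.8, 0.4]      # hypothetical scores for KC eyes
normal_scores = [0.1, 0.3, 0.5]  # hypothetical scores for normal eyes
print(roc_auc(kc_scores, normal_scores))  # 8 of 9 pairs ranked correctly
```

The pairwise loop costs O(n·m) comparisons; for large data sets a rank-based formula or a library routine such as scikit-learn's `roc_auc_score` is preferable. Unlike accuracy, AUC does not depend on a single decision threshold.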

FIGURE 3. Using CorneaNet, the thicknesses of the epithelium, stroma, and Bowman’s layer were computed in a normal and a KC case (Dos Santos et al., 2019). The healthy case shows nearly uniform thicknesses for all three layers. In contrast, in the KC case, the epithelium and stroma are thinner in a specific region of the cornea, and Bowman’s layer is thicker. (A–C) Thickness calculation in one tomogram. (A) UHR-OCT tomogram of a keratoconus patient. (B) Corresponding label map computed using CorneaNet. (C) Thicknesses of the three corneal layers computed using the label maps. (D–F) Thickness maps in a healthy subject. (G–I) Thickness maps in a keratoconus case. The thickness scale bar is shared horizontally by the maps. Scale bar: 1 mm.
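Panel (C) of Figure 3 derives layer thicknesses from a segmentation label map. Under the simplifying assumptions that each image column is one A-scan running perpendicular to the corneal layers and that pixels have a known axial size, thickness reduces to counting labeled pixels per column, as sketched below. The integer label codes and the 2 µm pixel size are hypothetical, and the sketch ignores corneal curvature and refractive-index correction, which a real UHR-OCT pipeline would need to handle.

```python
from collections import Counter

# Hypothetical label codes; CorneaNet's actual encoding is not described here.
BACKGROUND, EPITHELIUM, BOWMAN, STROMA = 0, 1, 2, 3


def layer_thickness_profile(label_map, axial_um_per_pixel):
    """Return per-column thickness (µm) of each layer.

    label_map[row][col] holds a label code; rows are depth samples and
    columns are A-scans.
    """
    n_cols = len(label_map[0])
    profile = {EPITHELIUM: [], BOWMAN: [], STROMA: []}
    for col in range(n_cols):
        counts = Counter(row[col] for row in label_map)
        for layer, thicknesses in profile.items():
            thicknesses.append(counts[layer] * axial_um_per_pixel)
    return profile


# Toy 6 x 2 label map (two A-scans), 2 µm per axial pixel.
label_map = [
    [0, 0],
    [1, 1],
    [1, 2],
    [2, 3],
    [3, 3],
    [3, 3],
]
profile = layer_thickness_profile(label_map, axial_um_per_pixel=2.0)
print(profile[EPITHELIUM], profile[STROMA])  # [4.0, 2.0] [4.0, 6.0]
```

Repeating this for every tomogram in a volume yields the en-face thickness maps shown in panels (D–I), where localized epithelial and stromal thinning stands out in the KC case.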

Some studies have focused on staging KC severity. Kamiya et al. (2019) applied DL to color-coded maps measured with swept-source anterior segment OCT (AS-OCT) to evaluate its diagnostic accuracy for KC. They included 304 eyes graded according to the Amsler–Krumeich classification [grade 1 (108 eyes), 2 (75 eyes), 3 (42 eyes), and 4 (79 eyes)] and 239 age-matched healthy eyes. The system effectively discriminated KC from normal corneas (99.1% accuracy) and further classified disease grade (87.4% accuracy). Two studies used topography images to detect and stage KC (Kamiya et al., 2021a; Chen et al., 2021), reporting overall accuracies of 78.5% (Kamiya et al., 2021a) and 93% (Chen et al., 2021), with better performance on color-coded maps than on raw topographic indices. Malyugin et al. (2021) trained an ML model on topography images and visual acuity to classify KC stages based on the Amsler–Krumeich classification system. The model's overall classification accuracy was 97%, highest for stage 4 KC and lowest for FFKC. Another study trained an ensemble CNN on Pentacam measurements to differentiate normal eyes from early, moderate, and advanced KC, with a staging accuracy of 98.2% (Ghaderi et al., 2021). Other studies have focused on detecting KC progression, although they used different definitions of progression. One study trained a CNN on AS-OCT images and achieved 84.9% accuracy in discriminating KC with and without progression (Kamiya et al., 2021b). Another trained an AI model to predict KC progression and the need for corneal crosslinking from tomography maps and patient age, with an AUC of 0.814 (Kato et al., 2021).

Lavric and Valentin (2019) proposed a CNN-based detection algorithm to analyze and detect KC, obtaining an accuracy of 99.33%. Kuo B. I et al. (2020) developed a DL algorithm for detecting KC based on a computer-assisted videokeratoscope (TMS-4), Pentacam, and Corvis ST, which showed high sensitivity and specificity. Abdelmotaal et al. (2020) implemented DL with a domain-specific CNN, whose performance was assessed with standard metrics and detailed error analyses, including network activation maps. The CNN classified images from four selectable map displays with average accuracies of 0.983 and 0.958 on the training and test sets, respectively. Shi et al. (2020) created an automated classification system that used MLC to distinguish clinically unaffected eyes of patients with KC from a normal population by combining Scheimpflug camera images and UHR-OCT imaging data. This model markedly improved the power to discriminate between normal eyes and eyes with SKC (AUC = 0.93), and the epithelial features extracted from the OCT images were the most valuable for discrimination. Cao et al. (2021) developed a new ML-based clinical decision-making system that automatically detects SKC with high accuracy, specificity, and sensitivity. Mohammadpour et al. (2022) developed an AI-based classifier that can help detect early keratoconus. Al-Timemy et al. (2021) trained a hybrid CNN to extract features and then used these features to train an SVM to detect KC. On the merged development and independent validation subsets, the final model had an accuracy of 97.70% in differentiating normal from KC eyes and 84.40% in differentiating normal, KCS, and KC eyes. Kundu et al. (2021) established a universal architecture combining AS-OCT and AI that achieved excellent classification of normal and KC eyes.
The model also effectively classified eyes with very asymmetric ectasia (VAE) as SKC and FFKC. Tan et al. (2022) developed a method based on biomechanical parameters calculated from raw corneal dynamic deformation videos to diagnose KC quickly and accurately using ML (99.6% accuracy). Ahn et al. (2022) developed and validated an AI model that diagnoses KC from basic ophthalmic examinations, including visual impairment, best-corrected visual acuity, intraocular pressure (IOP), and autokeratometry. Xu et al. (2022) developed a deep learning-derived classifier (KerNet) that helps distinguish clinically unaffected eyes of patients with asymmetric keratoconus (AKC) from normal eyes.

Other studies have compared AI algorithms for detecting KC. Souza et al. (2010) compared three classifiers (SVM, multilayer perceptron, and radial basis function neural networks); all performed well, with no significant differences between them. Cao et al. (2020) found that an RF model outperformed other ML algorithms using tomographic and demographic data. Herber et al. (2021) found that an RF model predicted healthy eyes and various stages of KC with good accuracy, superior to that of a linear discriminant analysis model. Castro-Luna et al. (2021) also found that RF outperformed a decision tree model (89% vs. 71% accuracy), while Aatila et al. (2021) found that RF had the highest accuracy among the ML models compared in detecting all classes of KC.

Besides detecting KC, AI has been used to screen candidates for refractive surgery. For example, Xie et al. (2020) established a system centered on an AI model, the Pentacam InceptionResNetV2 Screening System (PIRSS), to classify normal corneas, suspected irregular corneas, early-stage KC, KC, and post-PRK corneas. PIRSS achieved an overall detection accuracy of 95%, comparable to that of refractive surgery specialists (92.8%). Hosoda et al. (2020) identified KC susceptibility loci by integrating a genome-wide association study (GWAS) with AI, demonstrating that computational techniques combined with GWAS can help uncover hidden relationships between disease susceptibility genes and potential susceptibility genes. Overall, these studies show that AI models approach experienced ophthalmologists in classifying and grading KC.

Infectious keratitis is one of the most common corneal diseases and a significant cause of visual impairment (Papaioannou et al., 2016; Austin et al., 2017; Flaxman et al., 2017; Ung et al., 2019). It can be categorized into types such as bacterial keratitis (BK) (Tuft et al., 2022), fungal keratitis (FK) (Sharma et al., 2022), herpes simplex virus stromal keratitis (HSK) (Banerjee et al., 2020), and Acanthamoeba keratitis (AK) (de Lacerda and Lira, 2021). Early detection and timely medical intervention can prevent disease progression and lead to a better prognosis (Austin et al., 2017; Lin et al., 2019), whereas keratitis that is not diagnosed and treated promptly may cause significant vision loss and corneal perforation (Watson et al., 2018). The diagnosis of infectious keratitis depends mostly on a skilled ophthalmologist recognizing the distinguishing visual features of the infectious lesion in the cornea. AI analysis has been introduced into keratitis diagnosis to identify abnormal components in corneal images automatically and in real time, thereby helping ophthalmologists diagnose infectious keratitis rapidly. Table 3 summarizes AI applications for the diagnosis of infectious keratitis.

In 2003, Saini et al. (2003) assessed the usefulness of ANN for classifying infective keratitis. The trained ANN correctly classified all 63 corneal ulcers in the training set and 39 of 43 in the test set. Its specificity for the bacterial and fungal categories was 76.47% and 100%, respectively. The accuracy of the ANN (90.7%) was significantly better than that of the ophthalmologists' predictions (62.8%). These preliminary results suggest that using neural networks to interpret corneal ulcers merits further development. In 2017, Sun et al. (2017) developed a technique to automatically identify corneal ulcer sites in fluorescein staining images using a CNN that labels each pixel as ulcer or non-ulcer. The method achieved a mean Dice overlap of 0.86 with the manually delineated gold standard. In 2018, Patel et al. (2018) evaluated the variability of corneal ulcer measurements between cornea specialists and reduced clinician-dependent variability by semi-automatically segmenting ulcers in photographs. Wu et al. (2018) classified normal and FK images using a newly proposed texture analysis method, adaptive robust binary pattern (ARBP), combined with an SVM. They also preprocessed abnormal images to enhance targets and used the line segment detector algorithm to detect hyphae. The method separated abnormal from normal corneal images almost perfectly, with an accuracy of 99.74%. Liu et al. (2020) proposed a new CNN framework for automatically diagnosing FK using data augmentation and image fusion. The accuracies of conventional AlexNet and VGGNet were 99.35% and 99.14%, those of AlexNet and VGGNet with mean fusion were 99.80% and 99.83%, and those with histogram matching fusion (HMF) were 99.95% and 99.89%, respectively. This framework balances diagnostic performance and computational complexity well and can improve real-time performance in diagnosing FK.
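The Dice overlap reported by Sun et al. (2017) compares a predicted segmentation with a reference mask as twice the shared area divided by the sum of the two areas (1.0 is perfect agreement, 0.0 is none). A minimal sketch on flattened binary masks follows; the masks are hypothetical, and treating two empty masks as perfect agreement is a convention assumed here.

```python
def dice_coefficient(pred_mask, true_mask):
    """Dice = 2|A ∩ B| / (|A| + |B|) for equal-length 0/1 masks."""
    if len(pred_mask) != len(true_mask):
        raise ValueError("masks must have the same number of pixels")
    intersection = sum(1 for a, b in zip(pred_mask, true_mask) if a and b)
    total = sum(1 for a in pred_mask if a) + sum(1 for b in true_mask if b)
    if total == 0:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return 2.0 * intersection / total


predicted = [1, 1, 1, 0, 0]  # hypothetical pixel labels: 1 = ulcer
reference = [1, 1, 0, 1, 0]  # hypothetical manual delineation
print(round(dice_coefficient(predicted, reference), 3))  # 0.667
```

Unlike pixel accuracy, Dice ignores the (usually large) background that both masks agree on, so a mean Dice of 0.86 reflects agreement on the ulcer region itself.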

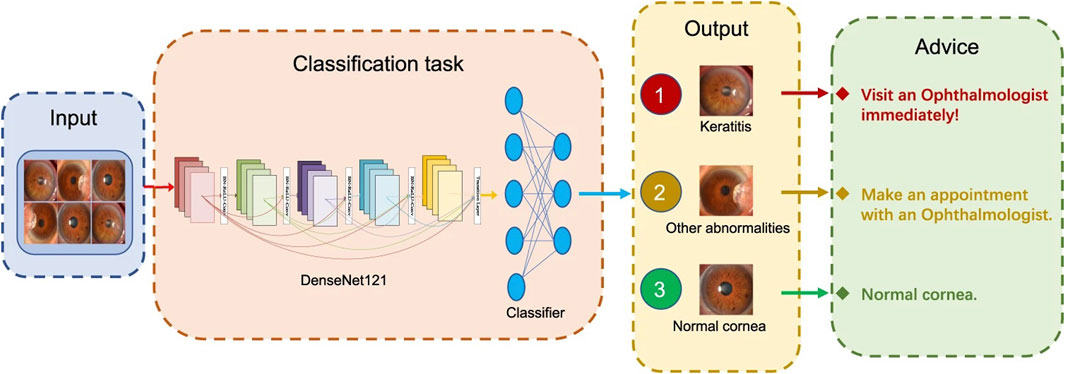

Lv et al. (2020) developed a DL-based AI system for the automated diagnosis of FK in in vivo confocal microscopy (IVCM) images. The system exhibited satisfactory diagnostic performance (93.64% accuracy) and effectively classified FK in various IVCM images. Xu F. et al. (2021) established an explainable AI (XAI) system based on Gradient-weighted Class Activation Mapping (Grad-CAM) and Guided Grad-CAM for FK detection in IVCM images. Owing to its better interpretability and explainability, XAI assistance significantly increased the accuracy (94.2%) and sensitivity (92.7%) of competent and novice ophthalmologists without reducing specificity (95.5%). Two studies used slit-lamp images (SLI) to detect FK (Kuo M. T et al., 2020; Mayya et al., 2021), with diagnostic rates for FK of 69.40% (Kuo M. T et al., 2020) and 88.96% (Mayya et al., 2021). Xu Y. et al. (2021) designed a sequential-level deep model that discriminates infectious corneal diseases effectively by classifying more than 110,000 SLI. The model achieved a diagnostic accuracy of 80%, much higher than the 49.27% accuracy of 421 ophthalmologists. Li Z. et al. (2021) developed an AI system for the automated classification of keratitis, other corneal abnormalities, and normal corneas based on 6,567 SLI (Figure 4 depicts the workflow of the DL system in clinics, created by Li et al.). The system performed well on cornea images captured by different digital slit-lamp cameras and by a smartphone in super macro mode (all AUCs >0.96), and it performed similarly to ophthalmology specialists in classifying keratitis, corneas with other abnormalities, and normal corneas.
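For reference, Grad-CAM, as defined in the original Grad-CAM paper, builds its heat map from the feature maps $A^k$ of a chosen convolutional layer and the gradient of the pre-softmax score $y^c$ for class $c$. Each feature map is weighted by its spatially averaged gradient, and the weighted sum is passed through a ReLU so that only regions that increase the class score remain ($Z$ is the number of spatial positions $(i, j)$ in the feature map):

$$
\alpha_k^c = \frac{1}{Z}\sum_{i}\sum_{j}\frac{\partial y^c}{\partial A_{ij}^k},
\qquad
L_{\text{Grad-CAM}}^c = \mathrm{ReLU}\!\left(\sum_k \alpha_k^c A^k\right)
$$

Guided Grad-CAM multiplies this map, upsampled to the input resolution, element-wise with a guided backpropagation map to obtain a finer-grained visualization. In an IVCM application, such a map would highlight the image regions that most raise the FK score; the layer choice and other settings used by Xu F. et al. (2021) are not described in this review.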

FIGURE 4. Workflow of the DL system in clinics for detecting abnormal cornea findings (Li Z. et al., 2021).

Hung et al. (2021) applied several CNNs to differentiate between BK and FK using SLI. The DL algorithms achieved an average accuracy of 80.0%, with diagnostic accuracy ranging from 79.6% to 95.9% for BK and from 26.3% to 65.8% for FK. Koyama et al. (2021) adopted a DL architecture for facial recognition and applied it to determine probability scores for the specific pathogens causing keratitis. A total of 4,306 SLI were studied, including 312 images from internet publications on keratitis caused by bacteria, fungi, Acanthamoeba, and HSV. The algorithm had high overall accuracy; the accuracy/AUC for AK, BK, FK, and HSK was 97.9%/0.995, 90.7%/0.963, 95.0%/0.975, and 92.3%/0.946, respectively. Zhang et al. (2022a) constructed a DL-based model (KeratitisNet) to aid the early diagnosis of infectious keratitis (IK). The accuracy of KeratitisNet for diagnosing BK, FK, AK, and HSK was 70.27%, 77.71%, 83.81%, and 79.31%, and the AUC was 0.86, 0.91, 0.96, and 0.98, respectively. Ghosh et al. (2022) found that, compared with single-architecture models, a CNN with ensemble learning performed best in distinguishing FK from BK.
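Ensemble learning combines the outputs of several networks. One common scheme, soft voting, averages each model's class probabilities and picks the class with the highest mean; the sketch below assumes that scheme and uses hypothetical probabilities for the two classes BK and FK. The ensembling strategy of Ghosh et al. (2022) is not described in this review and may differ.

```python
def soft_vote(model_probs, weights=None):
    """Average per-class probabilities across models; return (best class, averages).

    model_probs[m][c] is model m's probability for class c.
    """
    n_models = len(model_probs)
    if weights is None:
        weights = [1.0 / n_models] * n_models  # equal weighting by default
    n_classes = len(model_probs[0])
    averaged = [
        sum(w * probs[c] for w, probs in zip(weights, model_probs))
        for c in range(n_classes)
    ]
    best = max(range(n_classes), key=lambda c: averaged[c])
    return best, averaged


CLASSES = ["BK", "FK"]
# Hypothetical outputs of three CNNs for one slit-lamp image.
outputs = [[0.6, 0.4], [0.3, 0.7], [0.2, 0.8]]
best, averaged = soft_vote(outputs)
print(CLASSES[best], [round(p, 3) for p in averaged])  # FK [0.367, 0.633]
```

In this toy case the first model alone would choose BK, but the averaged probabilities favor FK; pooling models in this way tends to dampen the errors of any single architecture.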

In addition to discriminating between keratitis types, Loo et al. (2021) developed a fully automatic DL-based algorithm for segmenting ocular structures and microbial keratitis biomarkers on SLI. Tiwari et al. (2022) trained a CNN to differentiate active corneal ulcers from healed scars on SLI; tested on internal (India) and external (United States) data sets, the model achieved high performance (AUCs >0.94). Koo et al. (2021) reported a DL model that detects hyphae in confocal microscopy (CM) images more quickly, conveniently, and consistently in real-world practice, with high sensitivity and specificity. Overall, AI models perform differently across the diagnosis and classification of different types of keratitis, but their accuracy has been steadily improving.

Dry eye is one of the most common ocular surface diseases in clinical practice. It is characterized by a loss of tear film homeostasis and accompanied by ocular abnormalities such as tear film instability and hyperosmolarity, ocular surface inflammation and damage, and neurosensory abnormalities (Craig et al., 2017a; Craig et al., 2017b; Stapleton et al., 2017). As the most common cause of dry eye (Craig et al., 2017b), meibomian gland dysfunction (MGD) is associated with many other ocular diseases (Sullivan et al., 2018; Lekhanont et al., 2019; Llorens-Quintana et al., 2020) and systemic factors (Arita et al., 2019; Sandra Johanna et al., 2019; Wang et al., 2020), and it affects patients’ quality of life, causing ocular irritation, ocular surface inflammation, and visual impairment (Sabeti et al., 2020). Therefore, evaluating the function of meibomian glands (MGs) in patients with dry eye is essential. Furthermore, MG morphology is closely associated with the severity of MGD, and MG image indices reflect gland health (Giannaccare et al., 2018). Researchers have recently begun using image processing and analysis software such as ImageJ to analyze MG morphology. However, such semi-quantitative analysis requires manual labeling of each image, which is labor-intensive and inefficient. AI-based image recognition is far more efficient than manual analysis and can substantially reduce its cost. Table 4 summarizes AI applications for the diagnosis of dry eye.

In 2019, Wang J. et al. (2019) established a DL approach to digitally segment the MG atrophy area and compute the percentage of atrophy in meibography images. In total, 497 meibography images were used to train and tune the DL model, and the remaining 209 images were used for evaluation. The algorithm achieved an average meiboscore grading accuracy of 95.6%, significantly outperforming the specialist by 16.0% and the clinical team by 40.6%. This study presented an accurate and consistent method for evaluating gland atrophy in meibography images based on deep neural networks and may contribute to an improved understanding of MGD. However, the system could only predict the MG atrophy region rather than individual MG morphology. In 2020, Maruoka et al. (2020) evaluated the ability of DL models to detect obstructive MGD using in vivo confocal microscopy (IVCM) images. For the single DL model, the AUC, sensitivity, and specificity for diagnosing obstructive MGD were 0.966, 94.2%, and 82.1%, respectively; for the ensemble DL model, they were 0.981, 92.1%, and 98.8%, respectively. Zhang et al. (2021) developed a DL algorithm to automatically detect and classify IVCM images of MGD. After optimization, the classifier displayed excellent accuracy: the sensitivity and specificity were 88.8% and 95.4% for obstructive MGD and 89.4% and 98.4% for atrophic MGD, respectively. Furthermore, Zhou et al. (2020) built a model using Mask R-CNN with transfer learning. The model evaluated each image in 0.499 s, whereas clinicians took more than 10 s on average. The study also used 2,304 MG images to construct an MG image database. The proportion of MGs marked by the model was 53.24% ± 11.09%, compared with 52.13% ± 13.38% for manual marking. Therefore, this model may improve the accuracy of examinations, save time, and support the clinical auxiliary diagnosis and screening of MGD-related diseases. Prabhu et al. (2020) proposed an automated DL-based algorithm to segment MGs and evaluated various features for quantifying these glands. The study also analyzed five clinically relevant metrics in detail and found that they reflected changes associated with MGD.
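Wang J. et al. (2019) computed the percentage of atrophy from segmented regions before grading. The sketch below shows only that post-processing step, assuming a binary eyelid mask and a binary atrophy mask are already available from a segmentation model. The meiboscore cut points (no loss, less than one third, one third to two thirds, and more than two thirds of the area lost) follow a commonly used four-grade scale and are an assumption here; the cited studies may use a different grading scheme.

```python
from typing import List

Mask = List[List[int]]  # binary mask: 1 = pixel inside the region

def area(mask: Mask) -> int:
    """Number of foreground pixels in a binary mask."""
    return sum(sum(row) for row in mask)

def percent_atrophy(eyelid: Mask, atrophy: Mask) -> float:
    """Atrophic area as a percentage of the eyelid (tarsal) area.

    Atrophy pixels that fall outside the eyelid mask are ignored.
    """
    lid_px = area(eyelid)
    if lid_px == 0:
        raise ValueError("eyelid mask is empty")
    overlap = sum(
        1
        for a_row, e_row in zip(atrophy, eyelid)
        for a, e in zip(a_row, e_row)
        if a and e
    )
    return 100.0 * overlap / lid_px

def meiboscore(pct: float) -> int:
    """Assumed 4-grade scale: 0 = no loss, 1 = <1/3, 2 = 1/3 to 2/3, 3 = >2/3 of the area lost."""
    if pct <= 0:
        return 0
    if pct < 100 / 3:
        return 1
    if pct <= 200 / 3:
        return 2
    return 3

eyelid = [[1] * 4 for _ in range(3)]         # 12 eyelid pixels
atrophy = [[1, 1, 0, 0] for _ in range(3)]   # 6 atrophic pixels
pct = percent_atrophy(eyelid, atrophy)
print(pct, meiboscore(pct))                  # 50.0 2
```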

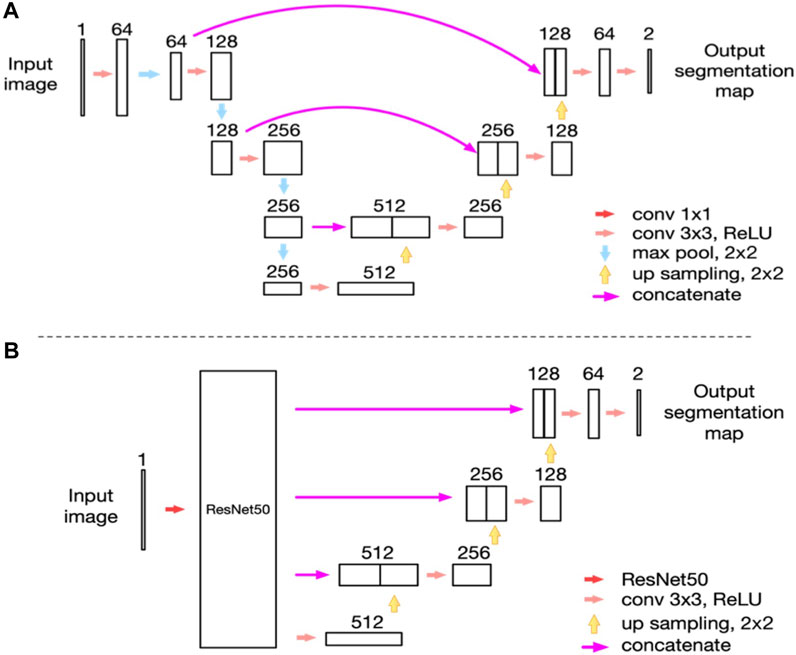

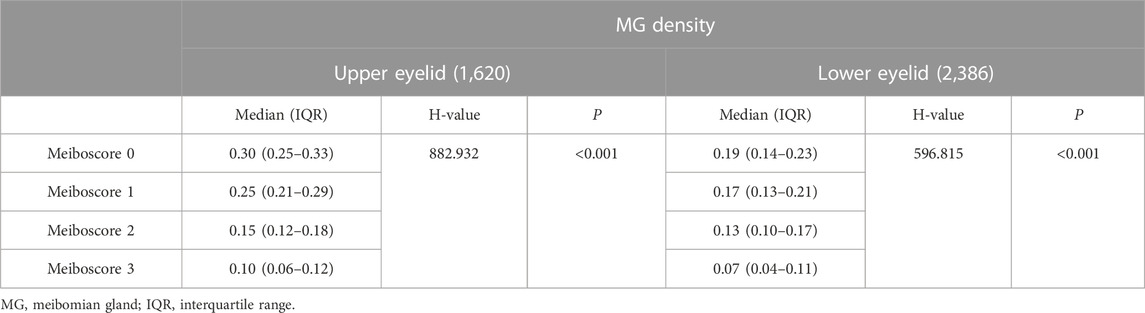

In 2021, we proposed a novel CNN-based MG extraction method using an enhanced mini U-Net (Dai et al., 2021). The method achieved an IoU of 0.9077 and a repeatability of 100%, with a processing time of 100 ms per image. Using this method, we identified a significant linear correlation between MG morphology and clinical parameters. This study provided a new method for quantifying the morphological features of MGs obtained by meibography. Furthermore, we used an advanced AI system based on ResNet_U-net (Figure 5 depicts the network structure created by Zhang et al.) to assess the diagnostic value of MG density for MGD (Zhang et al., 2022b). The updated system achieved 92% accuracy (IoU) and 100% repeatability in MG segmentation. The AUC of MG density across all eyelids was 0.900, with a sensitivity of 88% and a specificity of 81% at a cutoff value of 0.275. The correspondence between MG density and meiboscore is shown in Table 5. Thus, MG density, particularly when measured with the AI system, is an effective index for MGD and could potentially replace the meiboscore.
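The IoU figures above compare a predicted gland mask with a reference mask, and the density result is a threshold test. The sketch below illustrates both calculations on toy binary masks and hypothetical per-eyelid densities. How MG density itself is computed is not specified here, so the densities are placeholders, and treating a density below the 0.275 cutoff as indicating MGD is an assumption about the direction of the test.

```python
from typing import List, Sequence, Tuple

Mask = List[List[int]]

def iou(pred: Mask, truth: Mask) -> float:
    """Intersection over union of two binary masks (1.0 if both are empty)."""
    inter = union = 0
    for p_row, t_row in zip(pred, truth):
        for p, t in zip(p_row, t_row):
            if p and t:
                inter += 1
            if p or t:
                union += 1
    return inter / union if union else 1.0

def sens_spec_at_cutoff(densities: Sequence[float], has_mgd: Sequence[bool],
                        cutoff: float = 0.275) -> Tuple[float, float]:
    """Sensitivity and specificity when eyelids with density below `cutoff` are flagged as MGD.

    Flagging *low* density as MGD is an assumed direction; both classes must be present.
    """
    tp = fn = fp = tn = 0
    for density, mgd in zip(densities, has_mgd):
        flagged = density < cutoff
        if mgd and flagged:
            tp += 1
        elif mgd:
            fn += 1
        elif flagged:
            fp += 1
        else:
            tn += 1
    return tp / (tp + fn), tn / (tn + fp)

pred = [[1, 1, 0], [0, 1, 0]]
truth = [[1, 0, 0], [0, 1, 1]]
print(iou(pred, truth))  # 2 shared pixels / 4 pixels in the union = 0.5

densities = [0.20, 0.25, 0.30, 0.35, 0.26]   # hypothetical MG densities
has_mgd = [True, True, True, False, False]   # hypothetical diagnoses
print(sens_spec_at_cutoff(densities, has_mgd))
```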

FIGURE 5. Network structure (Zhang et al., 2022b). (A) The network structure of the modified U-net model as we reported previously; (B) The network structure of the ResNet50_U-net model in this study.

TABLE 5. Comparison table of MG density and meiboscore (Zhang et al., 2022b).

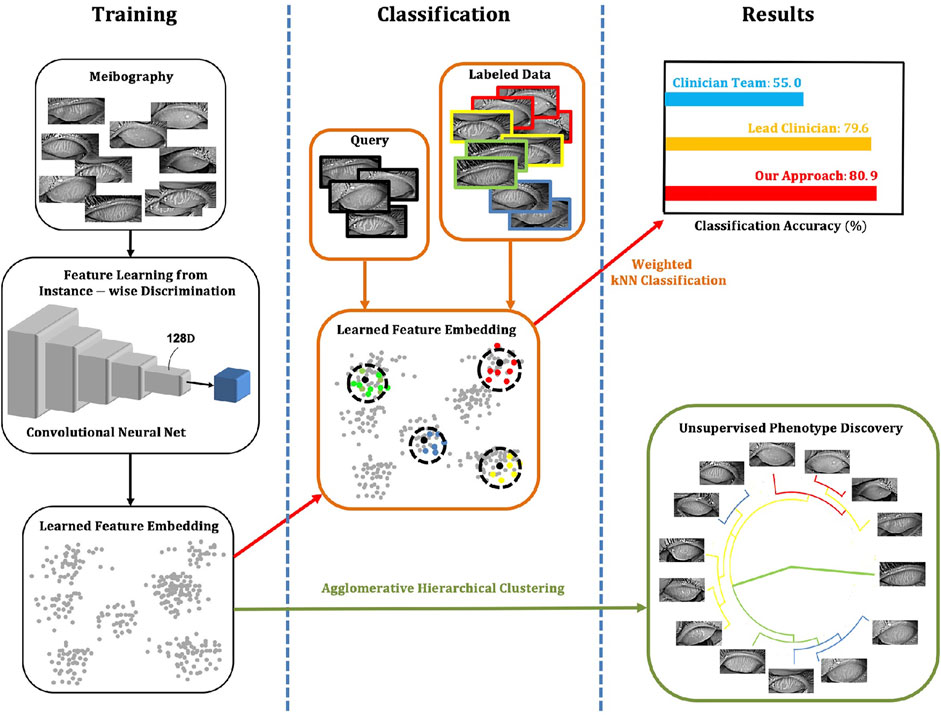

In 2021, Khan et al. (2021) established a model based on adversarial learning, a conditional generative adversarial network (C-GAN), to accurately detect, segment, and analyze MGs. This technique addressed the inability of existing methods to quantify irregularities in infrared images of the MG region and outperformed state-of-the-art methods in detecting and analyzing MG dropout areas. Setu et al. (2021) proposed an automatic DL-based (U-Net) method for segmenting MGs in infrared images. The model was trained and evaluated using 728 anonymized clinical meibography images. On the test set, the average precision, recall, and F1 score were 83%, 81%, and 84%, respectively, with an ROC AUC of 0.96 and a Dice coefficient of 84%. Segmentation and morphometric evaluation took an average of 1.33 s per image. Wang J. et al. (2021) developed an automated AI method to segment individual MG regions in infrared meibography images and analyzed their morphological features. On average, the algorithm achieved a mean IoU of 63% in segmenting glands and a sensitivity of 84.4% and specificity of 71.7% in identifying ghost glands. Yeh et al. (2021) established an unsupervised feature learning method based on non-parametric instance discrimination (NPID) to automatically measure MG atrophy (Figure 6 illustrates an overview of the approach created by Yeh et al.). A total of 497 meibography images were used for network learning and tuning, and the remaining 209 images were used for evaluation. NPID achieved an average meiboscore grading accuracy of 80.9%, outperforming the clinical team by 25.9%. Therefore, this method may aid in diagnosing and managing MGD without the prior image annotations that would otherwise require time and resources.
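Setu et al. (2021) report precision, recall, F1, and Dice together. A minimal pixel-wise sketch of these metrics on toy binary masks follows. For a single image, pixel-wise F1 and Dice are algebraically identical; when scores are averaged over a test set, however, the mean F1 need not equal the harmonic mean of the mean precision and mean recall, so reported averages are not always mutually consistent. How the cited study averaged its scores is not stated here.

```python
from typing import Dict, List, Tuple

Mask = List[List[int]]

def confusion(pred: Mask, truth: Mask) -> Tuple[int, int, int]:
    """Pixel-wise true positives, false positives, and false negatives."""
    tp = fp = fn = 0
    for p_row, t_row in zip(pred, truth):
        for p, t in zip(p_row, t_row):
            if p and t:
                tp += 1
            elif p:
                fp += 1
            elif t:
                fn += 1
    return tp, fp, fn

def segmentation_scores(pred: Mask, truth: Mask) -> Dict[str, float]:
    """Precision, recall, F1, and Dice for one predicted mask against its reference."""
    tp, fp, fn = confusion(pred, truth)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    dice = 2 * tp / (2 * tp + fp + fn) if tp + fp + fn else 1.0
    return {"precision": precision, "recall": recall, "f1": f1, "dice": dice}

pred = [[1, 1, 1, 0], [0, 1, 0, 0]]
truth = [[1, 1, 0, 0], [0, 1, 1, 1]]
print(segmentation_scores(pred, truth))
# precision 0.75, recall 0.6, and F1 equal to Dice (both 2/3) for this single image
```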

FIGURE 6. Overview of the approach (Yeh et al., 2021). NPID learns a metric from unlabeled meibography images and then discriminates them according to their visual similarity. This approach measures atrophy severity and discovers subtle relationships between meibography images. No image labels are required to serve as ground truth for training.

Dry eye is difficult to diagnose because there is no single characteristic symptom or diagnostic measure. Besides assessing MG morphology, other studies have used AI to evaluate the tear film, tear meniscus height (TMH), corneal morphology, and blinking to diagnose dry eye. Peteiro-Barral et al. (2017) proposed a method for automatic tear film classification and demonstrated its effectiveness; the method used class binarization and feature selection for optimization. Su et al. (2018) proposed an automatic method that uses a CNN model to detect the fluorescent tear film break-up area and defined its appearance as the CNN-based break-up time (CNN-BUT). The sensitivity and specificity of CNN-BUT for screening patients with dry eye were 0.83 and 0.95, respectively. Vyas et al. (2022) proposed a tear film break-up time (TBUT)-based method that detects the presence or absence of dry eye from TBUT videos. The system performed well in classifying TBUT frames, detecting dry eye, and grading the severity of TBUT videos, with an accuracy of 83%.
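Su et al. (2018) and Vyas et al. (2022) derive break-up information from frame-level detections. The sketch below shows one plausible way to turn per-frame break-up probabilities into a break-up time and a dry eye flag. The classifier outputs, the requirement for two consecutive positive frames (to suppress single-frame noise), and the 5 s cutoff are all assumptions for illustration, not parameters taken from the cited studies.

```python
from typing import Optional, Sequence

def break_up_time(frame_probs: Sequence[float], fps: float,
                  threshold: float = 0.5, min_consecutive: int = 2) -> Optional[float]:
    """Seconds from the first frame (assumed to be eye opening) to the start of the first run
    of `min_consecutive` frames classified as break-up; None if no break-up is detected."""
    if min_consecutive < 1 or fps <= 0:
        raise ValueError("min_consecutive must be >= 1 and fps must be positive")
    run = 0
    for i, p in enumerate(frame_probs):
        run = run + 1 if p >= threshold else 0
        if run == min_consecutive:
            return (i - min_consecutive + 1) / fps
    return None

def is_dry_eye(but_s: Optional[float], cutoff_s: float = 5.0) -> bool:
    """Flag dry eye when break-up occurs before the (assumed) cutoff.

    No detected break-up means the BUT exceeds the recording, so it is not flagged.
    """
    return but_s is not None and but_s < cutoff_s

probs = [0.1] * 30 + [0.6, 0.2] + [0.8] * 5   # hypothetical per-frame classifier outputs
but = break_up_time(probs, fps=10)
print(but, is_dry_eye(but))                   # 3.2 True
```

The isolated positive frame at 3.0 s is ignored because it is not followed by a second positive frame; the run starting at 3.2 s is reported instead.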

Further, Stegmann et al. (2020) measured lower TMH on OCT by automatically segmenting the image data with AI algorithms; AI segmentation was approximately two orders of magnitude faster than previous algorithms. Chase et al. (2021) developed a CNN algorithm that detected dry eye from AS-OCT images with good performance (accuracy = 84.62%, sensitivity = 86.36%, specificity = 82.35%). The epithelial layer and tear film were the regions of the AS-OCT images that the model learned to use in differentiating dry eye from normal eyes. The AI model detected dry eye with significantly higher accuracy than corneal staining, conjunctival staining, and Schirmer’s testing. Deng et al. (2021) established a method for automatically quantifying lower TMH with an FCNN. The network performed well owing to a modified encoder with residual blocks, which extracts features better than the original U-Net, and the overall average IoU for tear meniscus segmentation was 82.5%. As a result, the TMH values obtained by the algorithm correlated more strongly with the ground truth than manually obtained values. Su et al. (2020) trained a deep CNN model to detect superficial punctate keratitis (SPK) automatically; the method graded SPK severity reliably (97% accuracy) and may improve the efficiency of dry eye diagnosis. Using AI analysis, Jing et al. (2022) found a significant correlation between corneal nerve morphological changes in patients with dry eye and intrinsic corneal aberrations, particularly higher-order aberrations. Zheng et al. (2022) established an AI-based blink analysis model that generates a blink profile, providing a new method for evaluating incomplete blinking and diagnosing dry eye. These studies show that AI models have achieved remarkable results in dry eye assessment, most notably in segmenting MG morphology but also in analyzing the tear film, tear meniscus, corneal staining, and blinking.
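Once a model such as the FCNN of Deng et al. (2021) has segmented the lower tear meniscus, TMH still has to be read off the mask. The sketch below uses a deliberately simplified definition (the largest top-to-bottom extent of the mask in any pixel column, multiplied by a pixel spacing); both the definition and the 0.05 mm-per-pixel calibration are assumptions, and clinical TMH protocols specify where along the lid margin the height is measured.

```python
from typing import List

def tear_meniscus_height(mask: List[List[int]], mm_per_pixel: float) -> float:
    """Simplified TMH: largest top-to-bottom extent of meniscus pixels in any column, in mm.

    Rows are assumed to run from top to bottom; `mm_per_pixel` is an assumed calibration.
    """
    best = 0
    n_cols = len(mask[0]) if mask else 0
    for c in range(n_cols):
        rows = [r for r in range(len(mask)) if mask[r][c]]
        if rows:
            best = max(best, rows[-1] - rows[0] + 1)
    return best * mm_per_pixel

# Hypothetical segmentation output: 1 = tear meniscus pixel
mask = [
    [0, 0, 0],
    [0, 1, 0],
    [1, 1, 0],
    [1, 1, 0],
    [0, 0, 0],
]
print(round(tear_meniscus_height(mask, mm_per_pixel=0.05), 3))  # 3 pixels tall -> 0.15
```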

AI has also contributed to the auxiliary diagnosis and treatment of corneal edema, corneal endothelial dystrophy, corneal nerve abnormalities, corneal epithelial defects, posterior elastic layer detachment, corneal perforation, corneal foreign bodies, and other ocular surface diseases. Veli and Ozcan (2018) established a low-cost, portable, contact lens-based platform for the non-invasive detection of Staphylococcus aureus using three-dimensional (3D) holographic reconstruction combined with an SVM-based ML algorithm, although the study did not include human participants. Eleiwa et al. (2020) created and validated a DL model based on VGG19 and transfer learning to diagnose Fuchs endothelial corneal dystrophy. Wei et al. (2020) proposed a DL model for automated sub-basal corneal nerve fiber segmentation and evaluation using IVCM. The model achieved an AUC, sensitivity, and specificity of 0.96, 96%, and 75%, respectively. However, the model was not externally validated and could not account for all parameters in the IVCM images. Zéboulon et al. (2021) developed and validated a novel automated tool for detecting and visualizing corneal edema on OCT. The study trained a CNN to classify each pixel in corneal OCT images as “normal” or “edema” and to generate colored heat maps of the result. The optimal threshold for differentiating normal from edematous corneas was 6.8%, with an accuracy, sensitivity, and specificity of 98.7%, 96.4%, and 100%, respectively. However, the model could not quantify the severity of edema, and how it arrived at its outputs remained opaque. Li D. F. et al. (2021) developed a DL-based image analysis system for AS-OCT examinations and evaluated its performance in identifying various corneal pathologies and quantifying related indices. Labeled AS-OCT images were used to train deep CNN models for corneal pathology detection and corneal layer stratification. Compared with manual labeling, the pathology detection model achieved an average sensitivity and specificity of 96.5% and 96.1%, and the stratification model achieved average Dice coefficients of 0.985 for the corneal epithelium and 0.917 for the stroma. Deshmukh et al. (2021) developed an automated DL segmentation algorithm for corneal stromal deposits in patients with corneal stromal dystrophy. The algorithm segmented corneal deposits accurately on a well-controlled dataset and performed reasonably in a real-world setting. Yoo et al. (2021) developed an AI model to detect conjunctival melanoma using digital imaging devices such as a smartphone camera; it achieved an accuracy of 94.0% on 3D melanoma phantom images captured with a smartphone camera.
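Zéboulon et al. (2021) turn a per-pixel CNN output into a whole-cornea decision. The sketch below assumes, as the description suggests, that the 6.8% threshold applies to the proportion of corneal pixels labeled as edema; the per-pixel probabilities, the cornea mask, and the 0.5 pixel-level threshold are hypothetical.

```python
from typing import List

def edema_fraction(pixel_probs: List[List[float]], cornea: List[List[int]],
                   pixel_threshold: float = 0.5) -> float:
    """Fraction of corneal pixels that a (hypothetical) per-pixel classifier labels as edema."""
    total = edema = 0
    for p_row, c_row in zip(pixel_probs, cornea):
        for p, c in zip(p_row, c_row):
            if c:
                total += 1
                if p >= pixel_threshold:
                    edema += 1
    if total == 0:
        raise ValueError("cornea mask is empty")
    return edema / total

def is_edematous(fraction: float, cutoff: float = 0.068) -> bool:
    """Classify the whole cornea using the 6.8% cutoff reported in the text."""
    return fraction > cutoff

cornea = [[1] * 5 for _ in range(4)]        # 20 corneal pixels
probs = [[0.1] * 5 for _ in range(4)]
probs[0][0] = probs[0][1] = 0.9             # 2 edema pixels -> 10% of the cornea
frac = edema_fraction(probs, cornea)
print(frac, is_edematous(frac))             # 0.1 True
```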

With socioeconomic development, people’s health awareness is gradually improving, and the diagnostic and treatment burden on ophthalmologists will increase. Although there are over 200,000 ophthalmologists worldwide, there is currently a severe shortfall in developing countries (Resnikoff et al., 2012). Furthermore, the number of ophthalmologists is declining in 12% of low-income countries with the lowest ophthalmologist densities and highest population growth rates (Resnikoff et al., 2020). The timely emergence of AI has given rise to optimism in ophthalmology, particularly in areas involving big data and image-based analysis. DL is a branch of ML that uses multiple layers of neurons with non-linear transformations to abstract high-dimensional data and extract hidden features (Lecun et al., 2015). With DL, many images can be supplied to the computer as samples, allowing it to automatically learn high-dimensional image features and determine the intrinsic relationship between the images and the outcomes. DL establishes this relationship between input and output through multi-layer mappings such as those in CNNs, in a way loosely analogous to human learning. Various AI models have been developed, such as CNNs, deep neural networks, deep belief networks, and RNNs, and they have been applied with excellent results in computer vision, speech recognition, natural language processing, audio recognition, and bioinformatics (Lecun et al., 1998; Taigman et al., 2014; He et al., 2016). Using DL to process and analyze images of ocular surface diseases can significantly improve accuracy and efficiency, reduce the cost of manual analysis, and reduce variability between annotators with different levels of experience. Currently, different AI models are used for different ocular surface diseases.
CNN models account for the majority of AI applications for pterygium, keratitis, and dry eye, whereas RF models have shown good accuracy in distinguishing healthy eyes from KC at all stages.

DL enables computers to learn hidden image features automatically and integrates feature learning into model building, reducing the incompleteness of manually designed features. It can therefore pick out patterns that are invisible to the naked eye. For example, Kermany et al. (2018) trained a DL system to identify retinal OCT images of patients. Surprisingly, the system also accurately identified several other characteristics, including risk factors for heart disease, age, and sex; sex-related differences in the human retina had not previously been noticed. However, because DL neural networks are highly complex and poorly interpretable, their feature extraction logic cannot be fully understood, which leads to the AI “black box” problem (Ahuja and Halperin, 2019). To probe the logic of AI diagnosis, Kermany et al. (2018) used “occlusion testing” in their study of AI recognition of retinopathy on OCT images. This involved occluding different parts of OCT images of the fundus of patients with retinopathy; when a specific region was occluded, the AI erroneously categorized the lesion image as normal, implying that the features in that region were the basis of its judgment. Similarly, when analyzing ocular surface diseases with DL models, occlusion testing can reveal the basis of the AI’s judgment and help identify new morphological indicators for evaluating ocular surface diseases. Ophthalmic multimodal diagnostic platforms that use multiple modules for targeted examination of specific tissues have been established and applied clinically. With advances in technology, it may become possible to acquire three-dimensional data of the entire eye simultaneously. Correctly reading, analyzing, and diagnosing from such data will require a more comprehensive and in-depth knowledge base. Compared with humans, AI has clear advantages in integrating information, processing data, and diagnostic speed.
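The occlusion testing described above can be sketched in a few lines: slide a blank patch across the image, re-score each occluded copy, and record how much the model's output drops. In the sketch below, `toy_lesion_score` is a hypothetical stand-in for a trained classifier's lesion probability, and the patch size, stride, and fill value are illustrative choices, not those used by Kermany et al. (2018).

```python
from typing import Callable, List

Image = List[List[float]]

def occlusion_map(image: Image, score_fn: Callable[[Image], float],
                  patch: int = 2, stride: int = 2, fill: float = 0.0) -> List[List[float]]:
    """Drop in `score_fn` when each patch x patch window is replaced by `fill`.

    Larger drops mark regions the model relies on for its decision.
    """
    h, w = len(image), len(image[0])
    base = score_fn(image)
    heat = []
    for top in range(0, h - patch + 1, stride):
        row = []
        for left in range(0, w - patch + 1, stride):
            occluded = [r[:] for r in image]          # copy so the original is untouched
            for i in range(top, top + patch):
                for j in range(left, left + patch):
                    occluded[i][j] = fill
            row.append(base - score_fn(occluded))
        heat.append(row)
    return heat

def toy_lesion_score(img: Image) -> float:
    """Hypothetical 'model': mean brightness of the top-left 2x2 corner."""
    return sum(img[i][j] for i in range(2) for j in range(2)) / 4

image = [[1.0] * 4 for _ in range(4)]
print(occlusion_map(image, toy_lesion_score))  # [[1.0, 0.0], [0.0, 0.0]]
```

Only occluding the top-left corner changes the toy score, so that cell of the heat map is the only non-zero entry; with a real classifier, the same map would highlight the image regions driving its diagnosis.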

At present, AI still has certain limitations. 1) Most ML methods have insufficient training and validation sets; more training images are needed to further improve accuracy, sensitivity, and specificity. 2) Inspection equipment differs between countries, regions, and medical institutions, and images obtained with different equipment differ in color and resolution, which inevitably affects image acquisition and diagnostic accuracy. 3) Current ML methods cannot explain their diagnoses; their outputs are learned solely from the training set. 4) AI cannot learn effectively for difficult and rare ocular surface diseases with insufficient data, so reliable diagnostic accuracy for these conditions is difficult to achieve. These issues must be adequately addressed before AI can be translated into clinical applications in ophthalmology.

In conclusion, AI has great potential to improve the diagnostic efficiency of ocular surface diseases. This review contributes to the existing literature by providing information on research hotspots and trends in the application of AI to the diagnosis of ocular surface diseases. Although AI still faces certain challenges in model building, it can assist doctors in making objective clinical decisions and lay the foundation for the accurate treatment of patients. Ultimately, AI algorithms and tools under development for ocular surface disease may help us understand disease pathogenesis, identify disease biomarkers, and develop novel treatments for ocular surface disease.

Writing—original draft preparation, ZZ; Formal analysis, YW and HZ; Writing—review, AS and HR; Editing, CX and MA; Supervision, QC; Conceptualization, QD. All authors have read and agreed to the published version of the manuscript.

This research was funded by the Zhejiang Provincial Medical and Health Science Technology Program of Health and Family Planning Commission (grant number: 2022PY074; grant number: 2022KY217), and by the Scientific Research Fund of Zhejiang Provincial Education Department (Y202147994).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Aatila, M., Lachgar, M., Hamid, H., and Kartit, A. (2021). Keratoconus severity classification using features selection and machine learning algorithms. Comput. Math. Methods Med. 2021, 9979560. doi:10.1155/2021/9979560

Abdani, S. R., Zulkifley, M. A., and Hussain, A. (2019). “Compact convolutional neural networks for pterygium classification using transfer learning,” in IEEE International Conference on Signal and Image Processing Applications, Kuala Lumpur, Malaysia, 17-19 September 2019, 140–143. doi:10.1109/ICSIPA45851.2019.8977757

Abdani, S. R., Zulkifley, M. A., and Moubark, A. M. (2020). “Pterygium tissues segmentation using densely connected DeepLab,” in Proceedings of the 2020 IEEE 10th Symposium on Computer Applications & Industrial Electronics (ISCAIE), Malaysia, 18–19 April 2020, 229–232. doi:10.1109/ISCAIE47305.2020.9108822

Abdani, S. R., Zulkifley, M. A., and Zulkifley, N. H. (2021). Group and shuffle convolutional neural networks with pyramid pooling module for automated pterygium segmentation. Diagn. (Basel) 11 (6), 1104. doi:10.3390/diagnostics11061104

Abdelmotaal, H., Mostafa, M. M., Mostafa, A. N. R., Mohamed, A. A., and Abdelazeem, K. (2020). Classification of color-coded Scheimpflug camera corneal tomography images using deep learning. Transl. Vis. Sci. Technol. 9 (13), 30. doi:10.1167/tvst.9.13.30

Accardo, P. A., and Pensiero, S. (2002). Neural network-based system for early keratoconus detection from corneal topography. J. Biomed. Inf. 35 (3), 151–159. doi:10.1016/s1532-0464(02)00513-0

Ahn, H., Kim, N. E., Chung, J. L., Kim, Y. J., Jun, I., Kim, T. I., et al. (2022). Patient selection for corneal topographic evaluation of keratoconus: A screening approach using artificial intelligence. Front. Med. (Lausanne) 9, 934865. doi:10.3389/fmed.2022.934865

Ahuja, A. S., and Halperin, L. S. (2019). Understanding the advent of artificial intelligence in ophthalmology. J. Curr. Ophthalmol. 31 (2), 115–117. doi:10.1016/j.joco.2019.05.001

Al-Timemy, A. H., Mosa, Z. M., Alyasseri, Z., Lavric, A., Lui, M. M., Hazarbassanov, R. M., et al. (2021). A hybrid deep learning construct for detecting keratoconus from corneal maps. Transl. Vis. Sci. Technol. 10 (14), 16. doi:10.1167/tvst.10.14.16

Arbelaez, M. C., Versaci, F., Vestri, G., Barboni, P., and Savini, G. (2012). Use of a support vector machine for keratoconus and subclinical keratoconus detection by topographic and tomographic data. Ophthalmology 119 (11), 2231–2238. doi:10.1016/j.ophtha.2012.06.005

Arita, R., Mizoguchi, T., Kawashima, M., Fukuoka, S., Koh, S., Shirakawa, R., et al. (2019). Meibomian gland dysfunction and dry eye are similar but different based on a population-based study: The Hirado-Takushima study in Japan. Am. J. Ophthalmol. 207, 410–418. doi:10.1016/j.ajo.2019.02.024

Asaoka, R., Murata, H., Hirasawa, K., Fujino, Y., Matsuura, M., Miki, A., et al. (2019). Using deep learning and transfer learning to accurately diagnose early-onset glaucoma from macular optical coherence tomography images. Am. J. Ophthalmol. 198, 136–145. doi:10.1016/j.ajo.2018.10.007

Austin, A., Lietman, T., and Rose-Nussbaumer, J. (2017). Update on the management of infectious keratitis. Ophthalmology 124 (11), 1678–1689. doi:10.1016/j.ophtha.2017.05.012

Banerjee, A., Kulkarni, S., and Mukherjee, A. (2020). Herpes simplex virus: The hostile guest that takes over your home. Front. Microbiol. 11, 733. doi:10.3389/fmicb.2020.00733

Burlina, P. M., Joshi, N., Pekala, M., Pacheco, K. D., Freund, D. E., and Bressler, N. M. (2017). Automated grading of age-related macular degeneration from color fundus images using deep convolutional neural networks. JAMA Ophthalmol. 135 (11), 1170–1176. doi:10.1001/jamaophthalmol.2017.3782

Cao, K., Verspoor, K., Chan, E., Daniell, M., Sahebjada, S., and Baird, P. N. (2021). Machine learning with a reduced dimensionality representation of comprehensive Pentacam tomography parameters to identify subclinical keratoconus. Comput. Biol. Med. 138, 104884. doi:10.1016/j.compbiomed.2021.104884

Cao, K., Verspoor, K., Sahebjada, S., and Baird, P. N. (2020). Evaluating the performance of various machine learning algorithms to detect subclinical keratoconus. Transl. Vis. Sci. Technol. 9 (2), 24. doi:10.1167/tvst.9.2.24

Castro-Luna, G., Jiménez-Rodríguez, D., Castaño-Fernández, A. B., and Pérez-Rueda, A. (2021). Diagnosis of subclinical keratoconus based on machine learning techniques. J. Clin. Med. 10 (18), 4281. doi:10.3390/jcm10184281

Chase, C., Elsawy, A., Eleiwa, T., Ozcan, E., Tolba, M., and Abou Shousha, M. (2021). Comparison of autonomous AS-OCT deep learning algorithm and clinical dry eye tests in diagnosis of dry eye disease. Clin. Ophthalmol. 15, 4281–4289. doi:10.2147/OPTH.S321764

Chen, X., Zhao, J., Iselin, K. C., Borroni, D., Romano, D., Gokul, A., et al. (2021). Keratoconus detection of changes using deep learning of colour-coded maps. BMJ Open Ophthalmol. 6 (1), e000824. doi:10.1136/bmjophth-2021-000824

Clearfield, E., Muthappan, V., Wang, X., and Kuo, I. C. (2016). Conjunctival autograft for pterygium. Cochrane Database Syst. Rev. 2 (2), CD011349. doi:10.1002/14651858.CD011349.pub2

Craig, J. P., Nelson, J. D., Azar, D. T., Belmonte, C., Bron, A. J., Chauhan, S. K., et al. (2017). TFOS DEWS II report executive summary. Ocul. Surf. 15 (4), 802–812. doi:10.1016/j.jtos.2017.08.003

Craig, J. P., Nichols, K. K., Akpek, E. K., Caffery, B., Dua, H. S., Joo, C. K., et al. (2017). TFOS DEWS II definition and classification report. Ocul. Surf. 15 (3), 276–283. doi:10.1016/j.jtos.2017.05.008

Dai, Q., Liu, X., Lin, X., Fu, Y., Chen, C., Yu, X., et al. (2021). A novel meibomian gland morphology analytic system based on a convolutional neural network. IEEE Access 9, 23083–23094. doi:10.1109/ACCESS.2021.3056234

de Lacerda, A. G., and Lira, M. (2021). Acanthamoeba keratitis: A review of biology, pathophysiology and epidemiology. Ophthalmic Physiol. Opt. 41 (1), 116–135. doi:10.1111/opo.12752

Deng, X., Tian, L., Liu, Z., Zhou, Y., and Jie, Y. A. (2021). A deep learning approach for the quantification of lower tear meniscus height. Biomed. Signal Process. Control 68, 102655. doi:10.1016/j.bspc.2021.102655

Deshmukh, M., Liu, Y. C., Rim, T. H., Venkatraman, A., Davidson, M., Yu, M., et al. (2021). Automatic segmentation of corneal deposits from corneal stromal dystrophy images via deep learning. Comput. Biol. Med. 137, 104675. doi:10.1016/j.compbiomed.2021.104675

Devalla, S. K., Chin, K. S., Mari, J. M., Tun, T. A., Strouthidis, N. G., Aung, T., et al. (2018). A deep learning approach to digitally stain optical coherence tomography images of the optic nerve head. Invest. Ophthalmol. Vis. Sci. 59 (1), 63–74. doi:10.1167/iovs.17-22617

Dey, D., Slomka, P. J., Leeson, P., Comaniciu, D., Shrestha, S., SenGupta, P. P., et al. (2019). Artificial intelligence in cardiovascular imaging: JACC state-of-the-art review. J. Am. Coll. Cardiol. 73 (11), 1317–1335. doi:10.1016/j.jacc.2018.12.054

Dos Santos, V. A., Schmetterer, L., Stegmann, H., Pfister, M., Messner, A., Schmidinger, G., et al. (2019). CorneaNet: Fast segmentation of cornea OCT scans of healthy and keratoconic eyes using deep learning. Biomed. Opt. Express 10 (2), 622–641. doi:10.1364/BOE.10.000622

Eleiwa, T., Elsawy, A., Özcan, E., and Abou Shousha, M. (2020). Automated diagnosis and staging of Fuchs’ endothelial cell corneal dystrophy using deep learning. Eye Vis. (Lond) 7, 44. doi:10.1186/s40662-020-00209-z

Fang, X., Deshmukh, M., Chee, M. L., Soh, Z. D., Teo, Z. L., Thakur, S., et al. (2022). Deep learning algorithms for automatic detection of pterygium using anterior segment photographs from slit-lamp and hand-held cameras. Br. J. Ophthalmol. 106 (12), 1642–1647. doi:10.1136/bjophthalmol-2021-318866

Flaxman, S. R., Bourne, R. R. A., Resnikoff, S., Ackland, P., Braithwaite, T., Cicinelli, M. V., et al. (2017). Global causes of blindness and distance vision impairment 1990–2020: A systematic review and meta-analysis. Lancet Glob. Health 5 (12), e1221–e1234. doi:10.1016/S2214-109X(17)30393-5

Gao, X., Lin, S., and Wong, T. Y. (2015). Automatic feature learning to grade nuclear cataracts based on deep learning. IEEE Trans. Biomed. Eng. 62 (11), 2693–2701. doi:10.1109/TBME.2015.2444389

Gao, X., Wong, D. W., Aryaputera, A. W., Sun, Y., Cheng, C. Y., Cheung, C., et al. (2012). “Automatic pterygium detection on cornea images to enhance computer-aided cortical cataract grading system,” in 2012 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, San Diego, CA, USA, 28 August 2012 - 01 September 2012, 4434–4437. doi:10.1109/EMBC.2012.6346950

Gazzard, G., Saw, S. M., Farook, M., Koh, D., Widjaja, D., Chia, S. E., et al. (2002). Pterygium in Indonesia: Prevalence, severity and risk factors. Br. J. Ophthalmol. 86 (12), 1341–1346. doi:10.1136/bjo.86.12.1341

Ghaderi, M., Sharifi, A., and Jafarzadeh Pour, E. (2021). Proposing an ensemble learning model based on neural network and fuzzy system for keratoconus diagnosis based on Pentacam measurements. Int. Ophthalmol. 41 (12), 3935–3948. doi:10.1007/s10792-021-01963-2

Ghosh, A. K., Thammasudjarit, R., Jongkhajornpong, P., Attia, J., and Thakkinstian, A. (2022). Deep learning for discrimination between fungal keratitis and bacterial keratitis: DeepKeratitis. Cornea 41 (5), 616–622. doi:10.1097/ICO.0000000000002830

Giannaccare, G., Vigo, L., Pellegrini, M., Sebastiani, S., and Carones, F. (2018). Ocular surface workup with automated noninvasive measurements for the diagnosis of meibomian gland dysfunction. Cornea 37 (6), 740–745. doi:10.1097/ICO.0000000000001500

Gomes, J. A., Tan, D., Rapuano, C. J., Belin, M. W., Ambrósio, R., Guell, J. L., et al. (2015). Global consensus on keratoconus and ectatic diseases. Cornea 34 (4), 359–369. doi:10.1097/ICO.0000000000000408

Gulshan, V., Peng, L., Coram, M., Stumpe, M. C., Wu, D., Narayanaswamy, A., et al. (2016). Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA 316 (22), 2402–2410. doi:10.1001/jama.2016.17216

He, K., Zhang, X., Ren, S., and Sun, J. (2016). “Deep residual learning for image recognition,” in IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27-30 June 2016, 770–778. doi:10.1109/CVPR.2016.90

Henein, C., and Nanavaty, M. A. (2017). Systematic review comparing penetrating keratoplasty and deep anterior lamellar keratoplasty for management of keratoconus. Cont. Lens Anterior Eye 40 (1), 3–14. doi:10.1016/j.clae.2016.10.001

Herber, R., Pillunat, L. E., and Raiskup, F. (2021). Development of a classification system based on corneal biomechanical properties using artificial intelligence predicting keratoconus severity. Eye Vis. (Lond) 8 (1), 21. doi:10.1186/s40662-021-00244-4

Hosoda, Y., Miyake, M., Meguro, A., Tabara, Y., Iwai, S., Ueda-Arakawa, N., et al. (2020). Keratoconus-susceptibility gene identification by corneal thickness genome-wide association study and artificial intelligence IBM Watson. Commun. Biol. 3 (1), 410. doi:10.1038/s42003-020-01137-3

Hung, K. H., Lin, C., Roan, J., Kuo, C. F., Hsiao, C. H., Tan, H. Y., et al. (2022). Application of a deep learning system in pterygium grading and further prediction of recurrence with slit lamp photographs. Diagn. (Basel) 12 (4), 888. doi:10.3390/diagnostics12040888

Hung, N., Shih, A. K., Lin, C., Kuo, M. T., Hwang, Y. S., Wu, W. C., et al. (2021). Using slit-lamp images for deep learning-based identification of bacterial and fungal keratitis: Model development and validation with different convolutional neural networks. Diagn. (Basel) 11 (7), 1246. doi:10.3390/diagnostics11071246

Issarti, I., Consejo, A., Jiménez-García, M., Hershko, S., Koppen, C., and Rozema, J. J. (2019). Computer aided diagnosis for suspect keratoconus detection. Comput. Biol. Med. 109, 33–42. doi:10.1016/j.compbiomed.2019.04.024

Jing, D., Liu, Y., Chou, Y., Jiang, X., Ren, X., Yang, L., et al. (2022). Change patterns in the corneal sub-basal nerve and corneal aberrations in patients with dry eye disease: An artificial intelligence analysis. Exp. Eye Res. 215, 108851. doi:10.1016/j.exer.2021.108851

Kamiya, K., Ayatsuka, Y., Kato, Y., Fujimura, F., Takahashi, M., Shoji, N., et al. (2019). Keratoconus detection using deep learning of colour-coded maps with anterior segment optical coherence tomography: A diagnostic accuracy study. BMJ Open 9 (9), e031313. doi:10.1136/bmjopen-2019-031313

Kamiya, K., Ayatsuka, Y., Kato, Y., Shoji, N., Mori, Y., and Miyata, K. (2021a). Diagnosability of keratoconus using deep learning with Placido disk-based corneal topography. Front. Med. (Lausanne) 8, 724902. doi:10.3389/fmed.2021.724902

Kamiya, K., Ayatsuka, Y., Kato, Y., Shoji, N., Miyai, T., Ishii, H., et al. (2021b). Prediction of keratoconus progression using deep learning of anterior segment optical coherence tomography maps. Ann. Transl. Med. 9 (16), 1287. doi:10.21037/atm-21-1772

Kato, N., Masumoto, H., Tanabe, M., Sakai, C., Negishi, K., Torii, H., et al. (2021). Predicting keratoconus progression and need for corneal crosslinking using deep learning. J. Clin. Med. 10 (4), 844. doi:10.3390/jcm10040844

Kermany, D. S., Goldbaum, M., Cai, W., Valentim, C. C. S., Liang, H., Baxter, S. L., et al. (2018). Identifying medical diagnoses and treatable diseases by image-based deep learning. Cell 172 (5), 1122–1131.e9. doi:10.1016/j.cell.2018.02.010

Khan, Z. K., Umar, A. I., Shirazi, S. H., Rasheed, A., Qadir, A., and Gul, S. (2021). Image based analysis of meibomian gland dysfunction using conditional generative adversarial neural network. BMJ Open Ophthalmol. 6 (1), e000436. doi:10.1136/bmjophth-2020-000436

Kim, J. H., Kim, Y. J., Lee, Y. J., Hyon, J. Y., Han, S. B., and Kim, K. G. (2022). Automated histopathological evaluation of pterygium using artificial intelligence. Br. J. Ophthalmol., bjophthalmol-2021-320141. doi:10.1136/bjophthalmol-2021-320141

Koo, T., Kim, M. H., and Jue, M. S. (2021). Automated detection of superficial fungal infections from microscopic images through a regional convolutional neural network. PLOS ONE 16 (8), e0256290. doi:10.1371/journal.pone.0256290

Kovács, I., Miháltz, K., Kránitz, K., Juhász, É., Takács, Á., Dienes, L., et al. (2016). Accuracy of machine learning classifiers using bilateral data from a Scheimpflug camera for identifying eyes with preclinical signs of keratoconus. J. Cataract. Refract. Surg. 42 (2), 275–283. doi:10.1016/j.jcrs.2015.09.020

Koyama, A., Miyazaki, D., Nakagawa, Y., Ayatsuka, Y., Miyake, H., Ehara, F., et al. (2021). Determination of probability of causative pathogen in infectious keratitis using deep learning algorithm of slit-lamp images. Sci. Rep. 11 (1), 22642. doi:10.1038/s41598-021-02138-w

Kucur, Ş. S., Holló, G., and Sznitman, R. A. (2018). A deep learning approach to automatic detection of early glaucoma from visual fields. PLOS ONE 13 (11), e0206081. doi:10.1371/journal.pone.0206081

Kundu, G., Shetty, R., Khamar, P., Mullick, R., Gupta, S., Nuijts, R., et al. (2021). Universal architecture of corneal segmental tomography biomarkers for artificial intelligence-driven diagnosis of early keratoconus. Br. J. Ophthalmol., bjophthalmol-2021-319309. doi:10.1136/bjophthalmol-2021-319309

Kuo, B. I., Chang, W. Y., Liao, T. S., Liu, F. Y., Liu, H. Y., Chu, H. S., et al. (2020). Keratoconus screening based on deep learning approach of corneal topography. Transl. Vis. Sci. Technol. 9 (2), 53. doi:10.1167/tvst.9.2.53

Kuo, M. T., Hsu, B. W., Yin, Y. K., Fang, P. C., Lai, H. Y., Chen, A., et al. (2020). A deep learning approach in diagnosing fungal keratitis based on corneal photographs. Sci. Rep. 10 (1), 14424. doi:10.1038/s41598-020-71425-9

Lavric, A., and Valentin, P. (2019). KeratoDetect: Keratoconus detection algorithm using convolutional neural networks. Comput. Intell. Neurosci. 2019, 8162567. doi:10.1155/2019/8162567

Lawrence, D. R., Palacios-González, C., and Harris, J. (2016). Artificial intelligence. Camb. Q. Healthc. Ethics 25 (2), 250–261. doi:10.1017/S0963180115000559

LeCun, Y., Bengio, Y., and Hinton, G. (2015). Deep learning. Nature 521 (7553), 436–444. doi:10.1038/nature14539

LeCun, Y., Bottou, L., Bengio, Y., and Haffner, P. (1998). Gradient-based learning applied to document recognition. Proc. IEEE 86 (11), 2278–2324. doi:10.1109/5.726791

Lekhanont, K., Jongkhajornpong, P., Sontichai, V., Anothaisintawee, T., and Nijvipakul, S. (2019). Evaluating dry eye and meibomian gland dysfunction with meibography in patients with Stevens-Johnson syndrome. Cornea 38 (12), 1489–1494. doi:10.1097/ICO.0000000000002025

Li, D. F., Dong, Y. L., Xie, S., Guo, Z., Li, S. X., Guo, Y., et al. (2021). Deep learning based lesion detection from anterior segment optical coherence tomography images and its application in the diagnosis of keratoconus. Zhonghua Yan Ke Za Zhi 57 (6), 447–453. doi:10.3760/cma.j.cn112142-20200818-00540

Li, Z., Jiang, J., Chen, K., Chen, Q., Zheng, Q., Liu, X., et al. (2021). Preventing corneal blindness caused by keratitis using artificial intelligence. Nat. Commun. 12 (1), 3738. doi:10.1038/s41467-021-24116-6

Lin, A., Rhee, M. K., Akpek, E. K., Amescua, G., Farid, M., Garcia-Ferrer, F. J., et al. (2019). Bacterial keratitis preferred practice Pattern®. Ophthalmology 126 (1), P1–P55. doi:10.1016/j.ophtha.2018.10.018

Liu, Z., Cao, Y., Li, Y., Xiao, X., Qiu, Q., Yang, M., et al. (2020). Automatic diagnosis of fungal keratitis using data augmentation and image fusion with deep convolutional neural network. Comput. Methods Programs Biomed. 187, 105019. doi:10.1016/j.cmpb.2019.105019

Llorens-Quintana, C., Garaszczuk, I. K., and Szczesna-Iskander, D. H. (2020). Meibomian glands structure in daily disposable soft contact lens wearers: A one-year follow-up study. Ophthalmic Physiol. Opt. 40 (5), 607–616. doi:10.1111/opo.12720

Long, E., Lin, H., Liu, Z., Wu, X., Wang, L., Jiang, J., et al. (2017). An artificial intelligence platform for the multihospital collaborative management of congenital cataracts. Nat. Biomed. Eng. 1 (2), 0024. doi:10.1038/s41551-016-0024

Loo, J., Kriegel, M. F., Tuohy, M. M., Kim, K. H., Prajna, V., Woodward, M. A., et al. (2021). Open-source automatic segmentation of ocular structures and biomarkers of microbial keratitis on slit-lamp photography images using deep learning. IEEE J. Biomed. Health Inf. 25 (1), 88–99. doi:10.1109/JBHI.2020.2983549

Lv, J., Zhang, K., Chen, Q., Chen, Q., Huang, W., Cui, L., et al. (2020). Deep learning-based automated diagnosis of fungal keratitis with in vivo confocal microscopy images. Ann. Transl. Med. 8 (11), 706. doi:10.21037/atm.2020.03.134

Malyugin, B., Sakhnov, S., Izmailova, S., Boiko, E., Pozdeyeva, N., Axenova, L., et al. (2021). Keratoconus diagnostic and treatment algorithms based on machine-learning methods. Diagn. (Basel) 11 (10), 1933. doi:10.3390/diagnostics11101933

Maruoka, S., Tabuchi, H., Nagasato, D., Masumoto, H., Chikama, T., Kawai, A., et al. (2020). Deep neural network-based method for detecting obstructive meibomian gland dysfunction with in vivo laser confocal microscopy. Cornea 39 (6), 720–725. doi:10.1097/ICO.0000000000002279

Mas Tur, V., MacGregor, C., Jayaswal, R., O’Brart, D., and Maycock, N. A. (2017). A review of keratoconus: Diagnosis, pathophysiology, and genetics. Surv. Ophthalmol. 62 (6), 770–783. doi:10.1016/j.survophthal.2017.06.009

Mayya, V., Kamath Shevgoor, S., Kulkarni, U., Hazarika, M., Barua, P. D., and Acharya, U. R. (2021). Multi-scale convolutional neural network for accurate corneal segmentation in early detection of fungal keratitis. J. Fungi (Basel) 7 (10), 850. doi:10.3390/jof7100850

Mesquita, R. G., and Figueiredo, E. M. N. (2012). “An algorithm for measuring pterygium’s progress in already diagnosed eyes,” in 2012 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Kyoto, Japan, 25-30 March 2012, 733–736. doi:10.1109/ICASSP.2012.6287988

Mohammadpour, M., Heidari, Z., Hashemi, H., Yaseri, M., and Fotouhi, A. (2022). Comparison of artificial intelligence-based machine learning classifiers for early detection of keratoconus. Eur. J. Ophthalmol. 32 (3), 1352–1360. doi:10.1177/11206721211073442

Nagasato, D., Tabuchi, H., Ohsugi, H., Masumoto, H., Enno, H., Ishitobi, N., et al. (2019). Deep-learning classifier with ultrawide-field fundus ophthalmoscopy for detecting branch retinal vein occlusion. Int. J. Ophthalmol. 12 (1), 94–99. doi:10.18240/ijo.2019.01.15

Niazi, M. K. K., Parwani, A. V., and Gurcan, M. N. (2019). Digital pathology and artificial intelligence. Lancet Oncol. 20 (5), e253–e261. doi:10.1016/S1470-2045(19)30154-8

Papaioannou, L., Miligkos, M., and Papathanassiou, M. (2016). Corneal collagen cross-linking for infectious keratitis: A systematic review and meta-analysis. Cornea 35 (1), 62–71. doi:10.1097/ICO.0000000000000644

Patel, T. P., Prajna, N. V., Farsiu, S., Valikodath, N. G., Niziol, L. M., Dudeja, L., et al. (2018). Novel image-based analysis for reduction of clinician-dependent variability in measurement of the corneal ulcer size. Cornea 37 (3), 331–339. doi:10.1097/ICO.0000000000001488

Peteiro-Barral, D., Remeseiro, B., Méndez, R., and Penedo, M. G. (2017). Evaluation of an automatic dry eye test using MCDM methods and rank correlation. Med. Biol. Eng. Comput. 55 (4), 527–536. doi:10.1007/s11517-016-1534-5

Prabhu, S. M., Chakiat, A., S, S., Vunnava, K. P., and Shetty, R. (2020). Deep learning segmentation and quantification of meibomian glands. Biomed. Signal Process. Control 57 (3), 101776. doi:10.1016/j.bspc.2019.101776

Rahimy, E. (2018). Deep learning applications in ophthalmology. Curr. Opin. Ophthalmol. 29 (3), 254–260. doi:10.1097/ICU.0000000000000470

Resnikoff, S., Felch, W., Gauthier, T. M., and Spivey, B. (2012). The number of ophthalmologists in practice and training worldwide: A growing gap despite more than 200,000 practitioners. Br. J. Ophthalmol. 96 (6), 783–787. doi:10.1136/bjophthalmol-2011-301378

Resnikoff, S., Lansingh, V. C., Washburn, L., Felch, W., Gauthier, T. M., Taylor, H. R., et al. (2020). Estimated number of ophthalmologists worldwide (international council of ophthalmology update): Will we meet the needs? Br. J. Ophthalmol. 104 (4), 588–592. doi:10.1136/bjophthalmol-2019-314336

Ruiz Hidalgo, I., Rodriguez, P., Rozema, J. J., Ní Dhubhghaill, S., Zakaria, N., Tassignon, M. J., et al. (2016). Evaluation of a machine-learning classifier for keratoconus detection based on Scheimpflug tomography. Cornea 35 (6), 827–832. doi:10.1097/ICO.0000000000000834

Saba, L., Biswas, M., Kuppili, V., Cuadrado Godia, E., Suri, H. S., Edla, D. R., et al. (2019). The present and future of deep learning in radiology. Eur. J. Radiol. 114, 14–24. doi:10.1016/j.ejrad.2019.02.038

Sabeti, S., Kheirkhah, A., Yin, J., and Dana, R. (2020). Management of meibomian gland dysfunction: A review. Surv. Ophthalmol. 65 (2), 205–217. doi:10.1016/j.survophthal.2019.08.007

Saini, J. S., Jain, A. K., Kumar, S., Vikal, S., Pankaj, S., and Singh, S. (2003). Neural network approach to classify infective keratitis. Curr. Eye Res. 27 (2), 111–116. doi:10.1076/ceyr.27.2.111.15949

Sandra Johanna, G. P. S., Antonio, L. A., and Andrés, G. S. (2019). Correlation between type 2 diabetes, dry eye and meibomian glands dysfunction. J. Optom. 12 (4), 256–262. doi:10.1016/j.optom.2019.02.003

Setu, M. A. K., Horstmann, J., Schmidt, S., Stern, M. E., and Steven, P. (2021). Deep learning-based automatic meibomian gland segmentation and morphology assessment in infrared meibography. Sci. Rep. 11 (1), 7649. doi:10.1038/s41598-021-87314-8

Sharma, N., Bagga, B., Singhal, D., Nagpal, R., Kate, A., Saluja, G., et al. (2022). Fungal keratitis: A review of clinical presentations, treatment strategies and outcomes. Ocul. Surf. 24, 22–30. doi:10.1016/j.jtos.2021.12.001

Shi, C., Wang, M., Zhu, T., Zhang, Y., Ye, Y., Jiang, J., et al. (2020). Machine learning helps improve diagnostic ability of subclinical keratoconus using Scheimpflug and OCT imaging modalities. Eye Vis. (Lond) 7, 48. doi:10.1186/s40662-020-00213-3

Smadja, D., Touboul, D., Cohen, A., Doveh, E., Santhiago, M. R., Mello, G. R., et al. (2013). Detection of subclinical keratoconus using an automated decision tree classification. Am. J. Ophthalmol. 156 (2), 237–246.e1. doi:10.1016/j.ajo.2013.03.034

Smolek, M. K., and Klyce, S. D. (1997). Current keratoconus detection methods compared with a neural network approach. Invest. Ophthalmol. Vis. Sci. 38 (11), 2290–2299.

Souza, M. B., Medeiros, F. W., Souza, D. B., Garcia, R., and Alves, M. R. (2010). Evaluation of machine learning classifiers in keratoconus detection from Orbscan II examinations. Clin. (Sao Paulo) 65 (12), 1223–1228. doi:10.1590/s1807-59322010001200002

Stapleton, F., Alves, M., Bunya, V. Y., Jalbert, I., Lekhanont, K., Malet, F., et al. (2017). TFOS DEWS II epidemiology report. Ocul. Surf. 15 (3), 334–365. doi:10.1016/j.jtos.2017.05.003

Stegmann, H., Werkmeister, R. M., Pfister, M., Garhöfer, G., Schmetterer, L., and Dos Santos, V. A. (2020). Deep learning segmentation for optical coherence tomography measurements of the lower tear meniscus. Biomed. Opt. Express 11 (3), 1539–1554. doi:10.1364/BOE.386228

Su, T.-Y., Ting, P.-J., Chang, S.-W., and Chen, D.-Y. (2020). Superficial punctate keratitis grading for dry eye screening using deep convolutional neural networks. IEEE Sens. J. 20 (3), 1672–1678. doi:10.1109/JSEN.2019.2948576

Su, T. Y., Liu, Z. Y., and Chen, D. Y. (2018). Tear film break-up time measurement using deep convolutional neural networks for screening dry eye disease. IEEE Sens. J. 18, 6857–6862. doi:10.1109/JSEN.2018.2850940

Sullivan, D. A., Dana, R., Sullivan, R. M., Krenzer, K. L., Sahin, A., Arica, B., et al. (2018). Meibomian gland dysfunction in primary and secondary Sjögren syndrome. Ophthal. Res. 59 (4), 193–205. doi:10.1159/000487487

Sun, Q., Deng, L., Liu, J., Huang, H., Yuan, J., Tang, X., et al. (2017). “Convolutional neural network for corneal ulcer area segmentation,” in Fetal, infant and ophthalmic medical image analysis. OMIA FIFI. Lect. Notes Comput. Sci. (Berlin: Springer), 10554.

Taigman, Y., Yang, M., Ranzato, M. A., and Wolf, L. (2014). “DeepFace: Closing the gap to human-level performance in face verification,” in IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23-28 June 2014, 1701–1708. doi:10.1109/CVPR.2014.220

Tan, Z., Chen, X., Li, K., Liu, Y., Cao, H., Li, J., et al. (2022). Artificial intelligence-based diagnostic model for detecting keratoconus using videos of corneal force deformation. Transl. Vis. Sci. Technol. 11 (9), 32. doi:10.1167/tvst.11.9.32