- 1 Ningbo Cixi Institute of Biomedical Engineering, Ningbo Institute of Materials Technology and Engineering, Chinese Academy of Sciences, Ningbo, China

- 2 University of Chinese Academy of Sciences, Beijing, China

- 3 The Affiliated Ningbo Eye Hospital of Wenzhou Medical University, Ningbo, China

- 4 School of Control and Computer Engineering, North China Electric Power University, Baoding, China

- 5 Zhejiang Engineering Research Center for Biomedical Materials, Ningbo Cixi Institute of Biomedical Engineering, Ningbo Institute of Materials Technology and Engineering, Chinese Academy of Sciences, Ningbo, China

Morphological changes of the choroid have been shown to be associated with the occurrence and pathological mechanisms of many ophthalmic diseases. Optical Coherence Tomography (OCT) is a non-invasive technique for imaging ocular biological tissues, which can reveal the structure of the retinal and choroidal layers at micron-scale resolution. However, unlike the retinal layers, the interface between the choroidal layer and the sclera is ambiguous in OCT, which makes it difficult for ophthalmologists to identify with certainty. In this paper, we propose a novel boundary-enhanced encoder-decoder architecture for choroid segmentation in retinal OCT images, in which a Boundary Enhancement Module (BEM) forms the backbone of each encoder-decoder layer. The BEM consists of three parallel branches: 1) a Feature Extraction Branch (FEB) to obtain feature maps with different receptive fields; 2) a Channel Enhancement Branch (CEB) to extract the boundary information of different channels; and 3) a Boundary Activation Branch (BAB) to enhance the boundary information via a novel activation function. In addition, to incorporate expert knowledge into the segmentation network, soft key point maps are generated on the choroidal boundary and combined with the predicted images to facilitate precise choroidal boundary segmentation. To validate the effectiveness and superiority of the proposed method, both qualitative and quantitative evaluations are performed on three retinal OCT datasets for choroid segmentation. The experimental results demonstrate that the proposed method yields better choroid segmentation performance than other deep learning approaches. Moreover, both 2D and 3D features are extracted from normal and highly myopic subjects based on the choroid segmentation results for statistical analysis, which may help reveal the pathology of high myopia. Code is available at https://github.com/iMED-Lab/Choroid-segmentation.

1 Introduction

The choroid is a dense vascular layer forming the posterior portion of the uvea, the middle coat of the eye. It plays a critical role in thermoregulation, adjustment of retinal position, and secretion of growth factors (Nickla and Wallman, 2010). The high blood flow in the choroid makes it resilient to extreme environmental temperatures. Choroidal thickness has become one of the diagnostic indicators of many ophthalmic diseases, such as high myopia, glaucoma, age-related macular degeneration, and diabetic retinopathy (Regatieri et al., 2012; Chen et al., 2014; Wang et al., 2015; Yiu et al., 2015). Taking high myopia as an example, the percentage of young people in Asia with high myopia increased by 6.8%–21.6% over the period 2010 to 2014 (Wong and Saw, 2016). Individuals with high myopia are highly susceptible to developing pathological myopia, which is one of the leading causes of low vision and blindness (Oduntan, 2005; Cedrone et al., 2006). Therefore, choroid segmentation and choroidal thickness analysis are crucial for determining the pathogenesis of ophthalmic diseases and their treatment strategies.

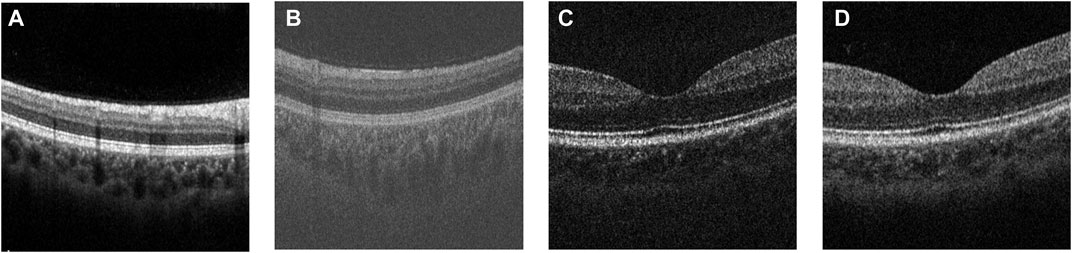

The development of Optical Coherence Tomography (OCT) (Huang et al., 1991) has made the analysis of retinal and choroidal morphology convenient and accurate for clinical research and application. With the emergence of new OCT techniques such as spectral-domain OCT (SD-OCT) (Yaqoob et al., 2005), enhanced depth imaging OCT (EDI-OCT) (Wong et al., 2011) and swept-source OCT (SS-OCT) (Choma et al., 2003), the choroid can be clearly visualized. Because they provide non-invasive 3D imaging, these OCT techniques have become the primary choice for clinicians diagnosing ophthalmic diseases. Figure 1 shows an OCT volume acquired from a healthy eye, which can be divided into three parts, from top to bottom: retina, choroid and sclera. In addition, 2D B-scans can be extracted from the 3D volume for further study of choroidal morphology.

FIGURE 1. Examples of a 3D OCT volume and 2D OCT B-scan image. The choroid-sclera interface (indicated by the red dashed line) is ambiguous and difficult to extract compared to the other boundaries.

Based on the B-scans, various methods have been proposed for choroidal layer segmentation. Early methods were mainly based on graph theory (Zhang et al., 2012; Hu et al., 2013; Mazzaferri et al., 2017). These methods rely on manually set parameters and are usually inefficient, which limits their segmentation accuracy and makes them difficult to apply in clinical practice. With the emergence and development of deep learning, several Convolutional Neural Network (CNN) models have been applied to choroidal layer segmentation (Chen et al., 2015; Sui et al., 2017; He et al., 2021; Yan et al., 2022). The powerful feature learning capability of CNNs has significantly improved the accuracy and efficiency of choroid segmentation over the last decade. In addition, end-to-end networks take the original images directly as input and output segmentation results without handcrafted operations (Mao et al., 2020; Zhang et al., 2020).

Many recent studies have explored ways to improve segmentation efficiency and optimize models, but only a few have focused on the structural characteristics of the choroidal layer. Due to the low contrast of OCT images (as shown in Figure 1), the boundary between the choroid and the sclera is ambiguous, which leads many algorithms to localize it inaccurately. However, the ambiguity of the Choroidal Scleral Interface (CSI) in OCT imaging has been little investigated. Moreover, choroidal thickness, an important biological indicator strongly associated with several ocular diseases, has been quantified only on 2D B-scans in much recent work (Regatieri et al., 2012; Kim et al., 2013; Agrawal et al., 2020). In contrast, the 3D morphological characteristics of the choroid and its thickness in different regions, such as the nasal side and the foveal region, may provide more indicative and accurate information for the diagnosis of ocular diseases. However, few studies have investigated choroid characteristics derived from 3D morphology.

In this paper, we focus on tackling two issues in choroid segmentation and morphological analysis. First, because the boundary between the choroid and the sclera is difficult to extract from low-contrast OCT images, existing segmentation methods often perform poorly and delineate the choroidal boundary imprecisely. Second, choroid-related biomarkers for highly myopic subjects are lacking, especially three-dimensional biomarkers, which may be more conducive to the diagnosis and treatment of diseases.

To this end, we propose a fully automated choroid segmentation framework with boundary feature enhancement. First, to extract accurate boundary information, we design a new Boundary Enhancement Module (BEM) consisting of three parallel branches. The first is a Feature Extraction Branch (FEB), which uses dilated convolution (Yu and Koltun, 2015) with different dilation rates to acquire image features under different receptive fields, so that the boundary features are fully retained. The second is a Channel Enhancement Branch (CEB), which exploits and enhances the boundary characteristics of different channels through global average pooling and convolution operations. The third is a Boundary Activation Branch (BAB), which further strengthens the boundary information from the spatial perspective via a 1 × 1 convolution and a specific activation function. The BEM can be integrated with different encoder-decoder networks, such as U-Net and FCN. In addition, for each B-scan, a soft point map is generated from points extracted on the choroidal boundary by a boundary enhancement point selection algorithm. Based on these boundary soft point maps, we introduce the Boundary Perceptual Loss (BP-Loss) to provide feedback on the boundary enhancement effect of the output segmentation result. Finally, based on the segmentation results, we extract and analyze both 2D and 3D morphological features of the choroid in the highly myopic population.

In brief, our main contributions are listed as follows:

• We propose a novel BEM that reinforces choroidal boundary information from three perspectives (feature, channel and space) and can be integrated with major encoder-decoder architectures such as U-Net and FCN.

• A boundary perceptual loss is introduced to incorporate expert knowledge into our segmentation network. This new loss provides the flexibility to learn prior boundary information from a soft point map.

• We extract 3D edge point cloud features and reconstruct the 3D structure of the choroid based on the 2D segmentation results of all B-scans. In addition, we statistically analyze choroidal thickness and 3D characteristics in different subfields to further determine the correlation between choroidal morphological changes and high myopia.

2 Related work

2.1 Choroid segmentation

Existing methods for choroid segmentation in OCT can be divided into two main categories: traditional methods and machine learning methods. Zhang et al. (2012) first attempted to extract the choroidal layer in 3D SD-OCT by adopting a graph-based method, which produced a relatively accurate choroidal surface. However, this method was tested on normal subjects only, and it struggles to achieve the expected segmentation performance for some patients, especially those with large changes in choroidal morphology. To overcome this limitation, Hu et al. (2013) improved the graph-based multi-layer segmentation method by applying various smoothness and interaction constraints to different choroidal layer structures. This method was validated on OCT images collected from both healthy subjects and subjects with non-neovascular AMD, showing close agreement with manual segmentation.

With the emergence of Enhanced Depth Imaging OCT (EDI-OCT), high-resolution imaging displayed the choroidal layer structure more clearly in OCT B-scans, which is more conducive to choroid segmentation. Tian et al. (2013) adopted Dijkstra's algorithm to find the shortest path, allowing the choroidal surface to be detected quickly and accurately. Danesh et al. (2014) proposed a segmentation method based on a Gaussian mixture model to obtain the choroidal structure in EDI-OCT images. However, this method still requires handcrafted features and is sensitive to the noise artifacts present in EDI-OCT images. In addition, Chen et al. (2015) introduced a new pipeline composed of a progressive intensity distance image generation algorithm and a graph search method to address noise and boundary ambiguity. Wang et al. (2017) used a Markov Random Field (MRF) method to connect adjacent pixels, and a level set method to regularize the distance of uneven textures.

Since graph search techniques are greatly affected by manual parameter settings, deep learning-based methods have been developed to obtain the choroidal structure. Sui et al. (2017) proposed a convolutional neural network (CNN)-based method that learns graph-edge weights directly from raw OCT pixels. The network can be divided into two parts: one detects the CSI boundary, and the other detects the Bruch's membrane (BM) boundary. This method showed good adaptability to 3D EDI-OCT images collected from both healthy subjects and patients with macular edema. He et al. (2021) combined a CNN with an l2-lq (0 < q < 1) fitter to segment the outer choroidal surface, in which the CNN generates predicted values and the l2-lq fitter maintains the stability of the fitting function. The OCT image is partitioned into small patches that form the input of the CNN, and post-processing is required to discard irrelevant information, which makes the method less efficient than end-to-end architectures. Similarly, Masood et al. (2019) used deep learning to establish a new segmentation framework for obtaining the outer choroidal surface. Before being fed into the CNN, the OCT image must be divided into patches for data sampling and conversion. This method reduces the average segmentation error, but it is still less efficient than end-to-end architectures.

As a result, several end-to-end deep learning approaches have been proposed more recently. Zhang et al. (2020) proposed an end-to-end method consisting of a global multi-layer segmentation block, a choroidal layer segmentation block, and a regularization block. It first segments all the inner retinal layers, and then uses global information to detect the choroidal layer. For 3D OCT images collected from healthy subjects, the thickness difference obtained by this method (4.30 ± 0.02 pixels) is smaller than those obtained by other state-of-the-art methods. Chai et al. (2020) proposed a method that effectively segments the choroidal boundary by minimizing the discrepancy between different domains, taking into account the differences between OCT acquisition devices. It feeds OCT images from different domains into a U-Net-based network, and uses both adversarial and perceptual losses for domain adaptation.

2.2 Choroidal thickness analysis

By examining the choroidal layer extracted from OCT images, ophthalmologists can analyze choroidal variations from different perspectives. Choroidal thickness is of particular interest, as it often indicates the presence or even the severity of some ophthalmic diseases. Yiu et al. (2015) extracted choroidal thickness from EDI-OCT images collected from subjects with Age-related Macular Degeneration (AMD). Using a semi-automatic segmentation method, they analyzed the similarities and differences in choroidal thickness between normal individuals and patients with AMD. Wang et al. (2015) compared choroidal thickness between patients with high myopia and healthy people, and found that the choroid of healthy individuals is significantly thicker than that of individuals with high myopia. Regatieri et al. (2012) examined choroidal thickness in diabetic patients and found that changes in choroidal thickness were related to the severity of diabetes. More recently, several studies have shown that choroidal thickness measured in retinal OCT images is associated with certain neurodegenerative diseases. Moschos and Chatziralli (2018) measured the retinal and choroidal thickness of patients with Parkinson's Disease (PD) using spectral-domain OCT, and compared the results with those of healthy individuals. The differences between people with and without PD were statistically significant. Similarly, Satue et al. (2018) used swept-source OCT to measure the retinal and choroidal thickness of patients with PD. They found that the retina of patients with PD became thinner, while choroidal thickness might increase. Similar to our work, Chen et al. (2022) segmented the choroidal layer of highly myopic and non-highly myopic subjects and compared their thickness, but their study lacked an analysis of three-dimensional features, and its segmentation performance leaves room for improvement.

Automatic and accurate quantification of choroidal thickness is therefore potentially crucial to the diagnosis of these diseases. However, most approaches to quantifying choroidal thickness provide only two-dimensional measurements at fixed locations, which limits their practical value. We therefore propose to use 3D edge point cloud features to produce a three-dimensional reconstruction of the choroidal layer.

2.3 Boundary segmentation

Boundary segmentation remains a research hotspot, not only in medical image analysis but also in other computer vision fields such as remote sensing. Mainstream boundary segmentation approaches can be divided into two categories: filtering-based methods and learning-based methods. Wang et al. (2018) introduced a CNN-based interactive geodesic method into medical image segmentation, in which manual correction of boundary information is required to improve the accuracy of boundary segmentation. Lee et al. (2020) proposed a novel network with boundary preserving blocks that retains boundary information by learning proper weights for boundary features. Wei et al. (2021) proposed a concentric loop CNN with a boundary detector and a refinement block to improve boundary segmentation in remote sensing images. Dang and Lee (2021) improved boundary segmentation in document images by sharing weights between boundary features and global features, and by using an adversarial loss to strengthen the learning of boundary information. Wang et al. (2021) combined a transformer with a CNN to enhance the segmentation of skin lesions, using an attention mechanism to boost boundary segmentation performance. Recently, Yu et al. (2022) proposed FBCU-Net, which uses boundary semantic features to segment medical images; however, it is mainly designed for region segmentation, and its performance on layer structure segmentation still needs improvement.

3 Methods

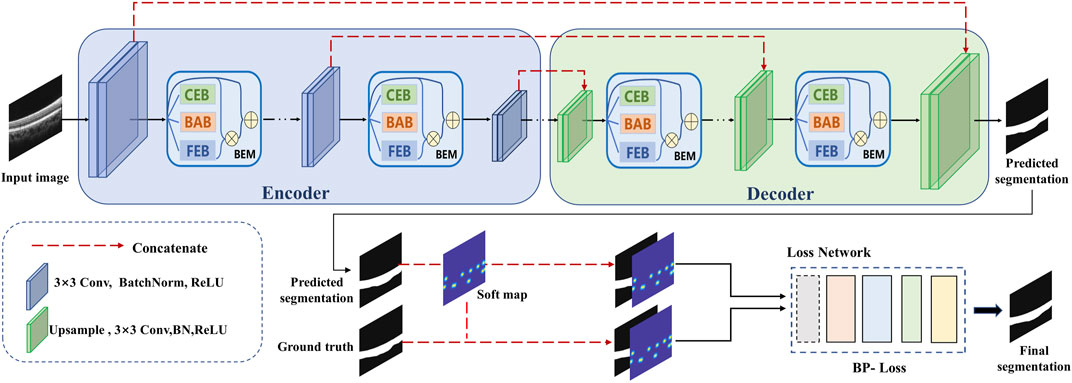

In this paper, we propose a novel encoder-decoder network with boundary feature enhancement for choroid segmentation. The proposed framework, presented in Figure 2, adopts U-Net (Ronneberger et al., 2015) as the baseline encoder-decoder network and incorporates a novel module, the BEM, into each encoder/decoder layer. The BEM consists of three parallel branches: FEB, CEB and BAB. In addition, a pre-trained VGG-19 network is used to calculate the boundary perceptual loss, which guides the framework to reduce the gap between the predicted segmentation map and the ground truth at the boundary-feature level.

FIGURE 2. An overview of the proposed boundary enhancement framework for choroid segmentation. A novel BEM is incorporated into each encoder/decoder layer of the proposed framework. In addition, a pre-trained VGG network is utilized to calculate the specific boundary perceptual loss to improve the choroidal boundary segmentation with the guidance of the soft point map generated from ground truth.

To train the proposed framework, a soft point map is constructed for each B-scan as an additional ground truth for extra supervision. Boundary enhancement points are first extracted using the boundary enhancement point selection algorithm. To tolerate small deviations in key point positions during training, we generate a Gaussian-distributed disk around every extracted point in a B-scan to construct the corresponding soft point map. The details are given in the following subsections.

3.1 Soft point map construction

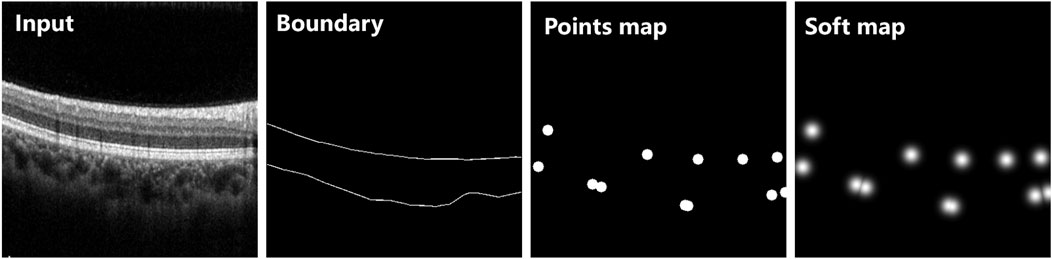

Inspired by Lee et al. (2020), we employed the boundary enhancement point selection algorithm to select several key points, and then generated a point map for each B-scan as an additional ground truth for training. In contrast to the binary disks generated on the selected points in Lee et al. (2020), we adopted a two-dimensional Gaussian function to generate a point map with soft boundaries, which provides more effective boundary guidance for segmentation. The rationale is that a binary disk assigns equal attention to all pixels in the neighborhood of the corresponding selected point, which makes the supervision vulnerable to deviations in boundary localization. The soft point map can be expressed as follows:

where (i, j) denotes the coordinates of a pixel in the generated soft point map matrix S; (x_k, y_k) denotes the coordinates of the kth selected key point (K key points in total); and σ is the standard deviation of the Gaussian function. The differences between the original point map and the proposed soft point map are illustrated in Figure 3.
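Because the equation for S did not survive extraction, the sketch below shows one plausible construction from the definitions above: each key point contributes exp(−((i − x_k)² + (j − y_k)²) / (2σ²)), and overlapping contributions are merged by taking the maximum. The max-merge, the convention that x_k indexes rows, and the function names are our assumptions, not the authors' code; summing the Gaussians and clipping to 1 would be an equally plausible reading. A binary-disk map in the style of Lee et al. (2020) is included for contrast.

```python
import math


def soft_point_map(height, width, points, sigma=3.0):
    """Soft point map S (height x width) from key points [(x_k, y_k), ...].

    Assumptions (hypothetical, for illustration): x_k is a row index and
    y_k a column index; overlapping Gaussians are merged with max().
    """
    two_sigma_sq = 2.0 * sigma ** 2
    S = [[0.0] * width for _ in range(height)]
    for i in range(height):
        for j in range(width):
            for xk, yk in points:
                d2 = (i - xk) ** 2 + (j - yk) ** 2
                S[i][j] = max(S[i][j], math.exp(-d2 / two_sigma_sq))
    return S


def binary_disk_map(height, width, points, radius=3):
    """Binary point map: 1 inside any disk of the given radius, else 0."""
    return [[1.0 if any((i - xk) ** 2 + (j - yk) ** 2 <= radius ** 2
                        for xk, yk in points) else 0.0
             for j in range(width)]
            for i in range(height)]


# A 5 x 5 map with a single key point at the centre.
S = soft_point_map(5, 5, [(2, 2)], sigma=1.0)
B = binary_disk_map(5, 5, [(2, 2)], radius=1)
print(round(S[2][3], 4), B[2][3])  # one pixel right of the point: 0.6065 1.0
```

Unlike the binary disk, the soft map decays smoothly with distance, so a prediction that is off by a pixel or two is still partially supported rather than treated exactly like the key point itself.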

FIGURE 3. Two different types of point maps extracted from the same OCT B-scan. The original point map was generated using binary disks as in Lee et al. (2020), while the soft point map was generated using a two-dimensional Gaussian function. All points were extracted from the same ground-truth boundary.

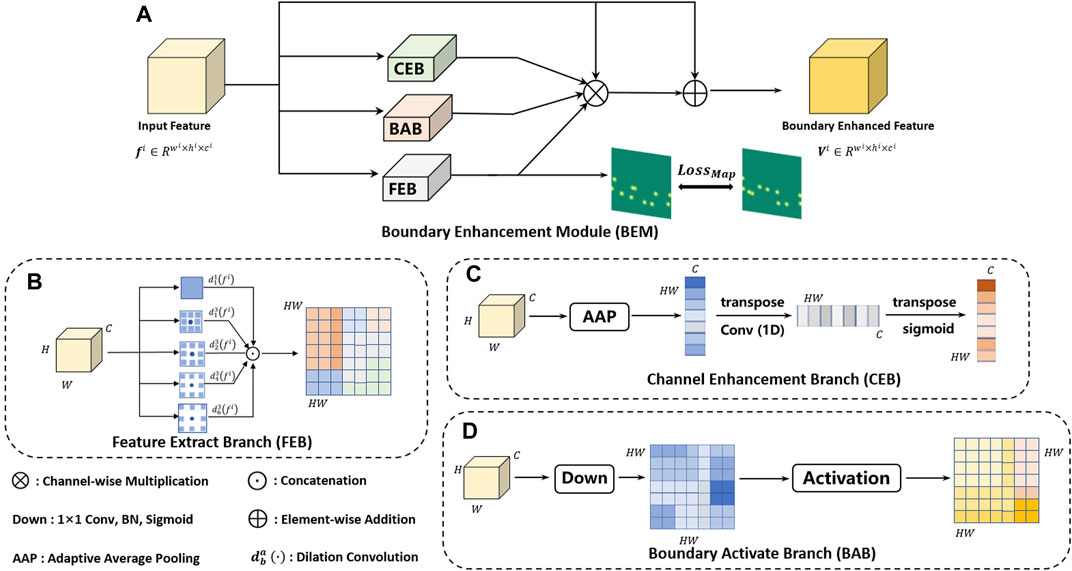

3.2 Boundary enhancement module

The proposed choroid segmentation architecture incorporates our novel BEMs into its encoder/decoder layers, as shown in Figure 2. Figure 4 presents the architecture of the BEM, which consists of three branches: FEB, CEB and BAB. The BEM can be embedded in various layers of the segmentation network. The BEM embedded in the ith layer takes the feature maps

where ⊕ represents element-wise addition; ⊗ represents multiplication (pixel-wise multiplication for single-channel maps Mi and Qi, and channel-wise multiplication for the weighting vector Ni).

FIGURE 4. The architecture of the proposed BEM (A). The BEM consists of three parallel branches including FEB (B), CEB (C) and BAB (D), which achieve enhancement of boundary information from the feature, channel and spatial perspective, respectively.
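The BEM fusion equation itself is missing from this text, but the two kinds of ⊗ defined above, together with ⊕, can be illustrated directly. The pure-Python sketch below (nested lists in C × H × W order, hypothetical function names) shows pixel-wise multiplication by a single-channel map such as Mi or Qi, channel-wise multiplication by a weighting vector such as Ni, and element-wise addition. How the BEM combines these terms is not reconstructed here.

```python
def pixelwise_mul(features, spatial_map):
    """features: C x H x W; spatial_map: H x W (e.g. M_i or Q_i).

    The same single-channel map multiplies every channel (broadcast over C).
    """
    return [[[f * m for f, m in zip(f_row, m_row)]
             for f_row, m_row in zip(channel, spatial_map)]
            for channel in features]


def channelwise_mul(features, weights):
    """features: C x H x W; weights: length-C vector (e.g. N_i).

    Every pixel of channel c is scaled by weights[c].
    """
    return [[[f * w for f in row] for row in channel]
            for channel, w in zip(features, weights)]


def elementwise_add(a, b):
    """The ⊕ operator: element-wise sum of two C x H x W tensors."""
    return [[[x + y for x, y in zip(row_a, row_b)]
             for row_a, row_b in zip(ch_a, ch_b)]
            for ch_a, ch_b in zip(a, b)]


f = [[[1, 2], [3, 4]],   # channel 0
     [[5, 6], [7, 8]]]   # channel 1
print(pixelwise_mul(f, [[0, 1], [1, 0]]))
print(channelwise_mul(f, [2, 0.5]))
```

In a framework such as PyTorch the same effects come from broadcasting: a 1 × H × W map or a C × 1 × 1 vector multiplied with a C × H × W tensor.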

3.2.1 Feature extraction branch

The gray section in Figure 4 shows the architecture of the FEB, which is designed for extracting a boundary-enhanced point map with different receptive fields. Multiple receptive fields integrate global context information and local detailed information, which is beneficial to accurate localization of boundary key points. We adopted convolution with different dilation rates to obtain the features with different receptive fields. Finally, the features with different receptive fields are concatenated and then fed into a single 1 × 1 convolutional layer with a Sigmoid function. Let

where ⊙ and
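How dilation enlarges the FEB's receptive field can be made concrete: a kernel of size k with dilation rate d spans k + (k − 1)(d − 1) pixels along each axis without adding parameters. The dilation rates used by the authors are not stated in this text; the rates 1, 2 and 4 below are hypothetical, and the zero-padded 1D convolution is a pure-Python illustration of the idea rather than the FEB itself.

```python
def effective_kernel_size(k, d):
    """Span (in pixels) of a size-k kernel with dilation rate d."""
    return k + (k - 1) * (d - 1)


def dilated_conv1d_same(signal, kernel, d):
    """Zero-padded ('same') 1D dilated cross-correlation, as computed by deep
    learning frameworks; the output length equals the input length
    (for odd kernel sizes)."""
    span = effective_kernel_size(len(kernel), d)
    pad = (span - 1) // 2
    padded = [0] * pad + list(signal) + [0] * pad
    return [sum(w * padded[t + m * d] for m, w in enumerate(kernel))
            for t in range(len(signal))]


# Hypothetical dilation rates for three parallel size-3 branches.
for d in (1, 2, 4):
    print(f"dilation {d}: size-3 kernel spans {effective_kernel_size(3, d)} pixels")

x = [0, 1, 2, 3, 4, 5, 6, 7, 8]
# The same 3-tap kernel sees wider context at larger dilation; because the
# outputs have equal length, they can be stacked ("concatenated") as channels.
stacked = [dilated_conv1d_same(x, [1, 1, 1], d) for d in (1, 2, 4)]
```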

3.2.2 Channel enhancement branch

Each channel of a feature map may be regarded as a class-specific response. However, different feature classes differ in their importance to a specific task (e.g., choroid segmentation). To selectively enhance the features useful for choroid segmentation, the CEB is designed to calculate channel-wise weighting vectors for the extracted feature maps. The detailed structure of the CEB is shown in the green section of Figure 4. The branch first applies global average pooling (gap) to generate channel-wise statistics of the input feature maps fi, and then applies a one-dimensional convolution of kernel size 3 to obtain the final channel-wise weighting vector Ni, which can be denoted as follows:

where fgap denotes global average pooling; C1D3 denotes the one-dimensional convolution of kernel size 3;
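A minimal pure-Python sketch of the CEB pipeline just described: global average pooling over each channel, then a kernel-size-3 one-dimensional convolution across the channel axis. The zero padding, the final sigmoid (borrowed from ECA-style channel attention) and the example kernel weights are our assumptions; the text above does not specify them, and in the network the kernel weights are learned.

```python
import math


def global_average_pool(features):
    """C x H x W nested lists -> length-C vector of channel means."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0]))
            for ch in features]


def conv1d_k3_same(values, kernel):
    """Kernel-size-3 1D cross-correlation across channels, zero-padded so
    that the output has the same length C as the input."""
    assert len(kernel) == 3
    padded = [0.0] + list(values) + [0.0]
    return [sum(w * padded[c + m] for m, w in enumerate(kernel))
            for c in range(len(values))]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def channel_weights(features, kernel):
    """Hypothetical CEB forward pass: GAP -> 1D conv (k=3) -> sigmoid."""
    pooled = global_average_pool(features)
    return [sigmoid(v) for v in conv1d_k3_same(pooled, kernel)]


f = [[[1, 1], [1, 1]], [[2, 2], [2, 2]], [[3, 3], [3, 3]]]  # C = 3 channels
N = channel_weights(f, kernel=[0.25, 0.5, 0.25])  # illustrative weights only
print(N)
```

The 1D convolution lets each channel weight depend on its neighbouring channels while adding only three parameters, which is the usual motivation for this design over a fully connected layer.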

3.2.3 Boundary activation branch

To further improve detection of the choroidal boundary, we introduced an extra branch, the BAB, detailed in the red section of Figure 4. The channel number of the feature maps is reduced to 1 via a 1 × 1 convolutional layer followed by a Sigmoid function. A specific activation function is then applied to the resulting single-channel feature map, which can be formulated as follows:

where fi represents the input feature maps; C2D1 represents the 1 × 1 convolutional layer;

It is worth noting that the specific activation function was designed based on the observation that choroidal boundary pixels generally have values around 0.5 in the obtained feature maps, while pixels in the interior of the choroid and in the background have values near 1 and 0, respectively. The activation map therefore adjusts each feature map spatially for the choroid segmentation task, assigning higher weights to choroidal boundary pixels (close to 2 − e^(−0.25) ≈ 1.22) and lower weights to pixels in the choroid interior and the background (close to 1) (Simonyan and Zisserman, 2014). In this way, the boundary information is highlighted after activation.
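The activation formula did not survive extraction. One function that reproduces every value quoted above is Q = 2 − exp(−x(1 − x)), where x is the Sigmoid output of the 1 × 1 convolution: it equals 1 at x = 0 and x = 1 and peaks at 2 − e^(−0.25) ≈ 1.22 at x = 0.5. This is our reconstruction from the stated values, not necessarily the authors' exact formula; the sketch applies it to a single-channel map of pre-activation values, and the function names are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def boundary_activation(x):
    """Assumed activation: 1 at x = 0 or x = 1, 2 - e^-0.25 at x = 0.5."""
    return 2.0 - math.exp(-x * (1.0 - x))


def bab_weight_map(logits):
    """Single-channel H x W map of 1x1-conv outputs -> spatial weight map.

    Pixels whose sigmoid response is near 0.5 (likely boundary) receive the
    largest weights; confident interior/background pixels stay near 1.
    """
    return [[boundary_activation(sigmoid(z)) for z in row] for row in logits]


Q = bab_weight_map([[-8.0, 0.0, 8.0]])  # background, boundary, interior
print([round(q, 3) for q in Q[0]])      # [1.0, 1.221, 1.0]
```

Because the minimum weight is 1 rather than 0, multiplying a feature map by this map never suppresses interior or background features; it only amplifies uncertain, boundary-like regions.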

3.3 Loss function

To train the proposed choroid segmentation network effectively, we introduced a novel loss, the boundary perceptual loss (BP-Loss), which embeds the soft point map into the segmentation network. The complete joint loss function combines the BP-Loss with the segmentation loss.

3.3.1 Segmentation loss

First, we adopted Binary Cross Entropy Loss as the segmentation loss in order to reduce the difference between the ground-truth segmentation map and the predicted segmentation map, which is defined as:

where
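In its standard form, averaged over the N pixels of a B-scan, the binary cross-entropy is L_Seg = −(1/N) Σ [g log p + (1 − g) log(1 − p)], where g ∈ {0, 1} is a pixel's ground-truth label and p its predicted choroid probability. A pure-Python sketch follows; the clipping constant eps is our addition to avoid log(0), and in PyTorch, which the authors used, torch.nn.BCELoss computes the same quantity.

```python
import math


def bce_loss(ground_truth, predicted, eps=1e-7):
    """Mean binary cross-entropy over flattened pixels.

    ground_truth: 0/1 labels; predicted: probabilities in [0, 1].
    """
    total = 0.0
    for g, p in zip(ground_truth, predicted):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total -= g * math.log(p) + (1 - g) * math.log(1.0 - p)
    return total / len(ground_truth)


gt = [1, 1, 0, 0]
print(bce_loss(gt, [0.9, 0.8, 0.2, 0.1]))  # confident and correct: small loss
print(bce_loss(gt, [0.5, 0.5, 0.5, 0.5]))  # uninformative prediction: ln 2
```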

3.3.2 Boundary perceptual loss

Unlike general semantic segmentation tasks, medical image segmentation requires strong expert knowledge to achieve good performance. We therefore concatenated the generated soft point map with the predicted result and with the ground truth to form the input of the VGG network, in order to constrain their geometrical relationship. To maintain consistency between the output of the FEB and the ground truth, we adopted the mean squared error (MSE) loss and defined the boundary point loss as:

where Mi and

where ϕi(⋅) denotes the feature maps from the ith layer of the VGG-19 network pre-trained on ImageNet; SGT denotes the ground-truth segmentation map; MGT denotes the ground-truth soft point map;

The Boundary Perceptual Loss is defined as:

where n indicates the number of BEMs in the proposed choroid segmentation network.

Finally, the total loss function is defined as:

where λSeg and λBP are both set to 0.5 in our task.
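The BP-Loss and total-loss equations are also missing from the extracted text. The sketch below covers only the parts implied by the surrounding definitions: an MSE between each BEM's FEB output Mi and the ground-truth soft point map, aggregated over the n BEMs, and an assumed weighted sum with λSeg = λBP = 0.5. Averaging over BEMs, pre-resizing the ground-truth map to each layer's resolution, and omitting the VGG-19 perceptual term are our simplifications; all names are hypothetical.

```python
def mse(a, b):
    """Mean squared error between two flattened maps of equal length."""
    assert len(a) == len(b)
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def boundary_point_loss(feb_outputs, gt_point_maps):
    """Hypothetical aggregation over n BEMs: mean of per-BEM MSE terms.

    feb_outputs[i] and gt_point_maps[i] are flattened maps at the
    resolution of the i-th BEM (the GT map is assumed to be pre-resized).
    """
    n = len(feb_outputs)
    return sum(mse(m, g) for m, g in zip(feb_outputs, gt_point_maps)) / n


def total_loss(l_seg, l_bp, lambda_seg=0.5, lambda_bp=0.5):
    """Assumed weighted sum of the segmentation and boundary perceptual losses."""
    return lambda_seg * l_seg + lambda_bp * l_bp


l_point = boundary_point_loss([[0.0, 1.0], [1.0, 1.0]],
                              [[0.0, 0.0], [1.0, 1.0]])
print(l_point, total_loss(l_seg=0.2, l_bp=l_point))
```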

4 Experiment settings

4.1 Dataset

In this work, we constructed a new Choroidal OCT image dataset for SegmenTAtion (COSTA), which consists of three subsets named COSTA-H, COSTA-T and COSTA-B. The subsets were acquired with different devices or at different bit depths, as illustrated in Figure 5.

FIGURE 5. Examples of the original OCT images from (A) COSTA-H dataset, (B) COSTA-T dataset, (C) COSTA-B dataset (6 bit-depth), and (D) COSTA-B dataset (12 bit-depth).

• COSTA-H consists of 10 OCT volumes from 10 healthy subjects. Each volume was captured by the Heidelberg Spectralis system, contains 384 non-overlapping B-scans, and covers a 3 × 3 × 2 mm³ region. Two groups of ophthalmologists were invited to independently annotate the upper and lower boundaries of the choroidal layer (BM and CSI) manually, and their consensus after discussion was used as the ground truth. Because adjacent B-scans in an OCT volume are highly similar, we asked the ophthalmologists to annotate one B-scan in every six consecutive B-scans to reduce the annotation workload. In total, we obtained 384/6 × 10 = 640 B-scans with manually annotated choroid boundaries.

• COSTA-T was captured by the Topcon DRI-OCT-1 system and contains 20 OCT volumes from 20 healthy human eyes. Each volume contains 256 B-scans with a resolution of 512 × 992 pixels and covers a 6 × 6 × 2 mm³ region. This dataset was annotated following the same protocol as COSTA-H (here with one B-scan annotated in every four), yielding 256/4 × 20 = 1280 B-scans with annotated choroid boundaries.

Both COSTA-H and COSTA-T were used for training and testing, with a 3:1 ratio between the training and testing sets. To evaluate the proposed network more accurately and credibly, we adopted a 4-fold cross-validation strategy: all samples were randomly divided into 4 equal parts, and each part was used in turn as the validation set while the others formed the training set. Four models were thus obtained, each evaluated on a different validation fold, and the average of their results was taken as the final result.

• COSTA-B was captured by a homemade 70-kHz SD-OCT system with different bit depths, and all of its data were selected from Hao et al. (2020). It contains 199 annotated B-scans with a resolution of 270 × 450 pixels from one normal subject. Unlike COSTA-H and COSTA-T, COSTA-B was used only to test the robustness of the proposed method with respect to image quality. For each B-scan, we also compared the segmentation results obtained at different bit depths. A higher bit depth corresponds to better image quality, which makes choroid segmentation easier.
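The 4-fold cross-validation protocol described above for COSTA-H and COSTA-T can be sketched as follows; the shuffling seed and helper names are hypothetical. The same function works on B-scan indices or on volume identifiers. The text does not say which level the authors split at; a volume-level split (shown) keeps highly similar neighbouring B-scans of one eye out of both the training and validation sets.

```python
import random


def k_fold_splits(items, k=4, seed=0):
    """Yield (train, val) pairs: shuffle once, cut into k folds, and use each
    fold in turn as the validation set with the remaining folds for training."""
    items = list(items)
    random.Random(seed).shuffle(items)
    folds = [items[f::k] for f in range(k)]  # near-equal fold sizes
    for f in range(k):
        train = [x for g, fold in enumerate(folds) if g != f for x in fold]
        yield train, folds[f]


def cross_validated_mean(scores):
    """Final result = average of the k per-fold scores."""
    return sum(scores) / len(scores)


volume_ids = [f"vol{v:02d}" for v in range(20)]  # e.g. the 20 COSTA-T volumes
splits = list(k_fold_splits(volume_ids, k=4))
for train, val in splits:
    print(len(train), len(val))  # 15 training and 5 validation volumes per fold
```

With the 10 COSTA-H volumes a volume-level split cannot produce exactly equal folds (the striding above gives 3, 3, 2 and 2), whereas the 640 annotated B-scans divide into four folds of 160.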

4.2 Implementation

All deep learning approaches in the experiments were implemented with PyTorch (Paszke et al., 2019) and run on a single NVIDIA GeForce RTX 3090 GPU with 24 GB of memory under Ubuntu 16.04. The proposed network was trained for 400 epochs with the following hyper-parameters: Adam optimizer, an initial learning rate of 0.0005, and a batch size of 8. For the comparison methods, we adopted the training strategies described in their original papers.

4.3 Quantitative evaluation metrics

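To compare the performance of the proposed method with other state-of-the-art deep learning networks, the following routine image segmentation metrics were calculated, where TP, TN, FP and FN denote the numbers of true-positive, true-negative, false-positive and false-negative pixels, respectively:

• Dice Coefficient (Dice) = 2TP / (2TP + FP + FN)

• Intersection over Union (IoU) = TP / (TP + FP + FN)

• Accuracy (Acc) = (TP + TN) / (TP + TN + FP + FN)

• Sensitivity (Sen) = TP / (TP + FN)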
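These four metrics can be computed from the pixel-level confusion counts of a predicted binary mask against the ground truth. A minimal pure-Python sketch, assuming flattened 0/1 masks with 1 marking choroid pixels (helper names hypothetical); it does not guard against empty masks, for which Dice, IoU and Sen are undefined.

```python
def confusion_counts(gt, pred):
    """Pixel counts (TP, TN, FP, FN) for flattened binary masks."""
    tp = sum(1 for g, p in zip(gt, pred) if g == 1 and p == 1)
    tn = sum(1 for g, p in zip(gt, pred) if g == 0 and p == 0)
    fp = sum(1 for g, p in zip(gt, pred) if g == 0 and p == 1)
    fn = sum(1 for g, p in zip(gt, pred) if g == 1 and p == 0)
    return tp, tn, fp, fn


def segmentation_metrics(gt, pred):
    tp, tn, fp, fn = confusion_counts(gt, pred)
    return {
        "dice": 2 * tp / (2 * tp + fp + fn),
        "iou": tp / (tp + fp + fn),
        "acc": (tp + tn) / (tp + tn + fp + fn),
        "sen": tp / (tp + fn),
    }


gt = [1, 1, 1, 0, 0, 0]
pred = [1, 1, 0, 1, 0, 0]  # one missed and one spurious choroid pixel
print(segmentation_metrics(gt, pred))
```

For a single image, Dice and IoU are linked by IoU = Dice / (2 − Dice), so they rank predictions identically on that image; values averaged over many images need not satisfy this relation exactly.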

In addition, we adopted Average Unsigned Surface Detection Error (AUSDE) (Xiang et al., 2018) based on BM and CSI:

where m represents the width of the B-scan, y(i) and
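Since the AUSDE formula is missing above, the sketch assumes the usual definition consistent with the listed symbols: the mean, over the m columns (A-scans) of a B-scan, of the absolute difference between the ground-truth and predicted boundary row positions, computed separately for the BM and the CSI. Taking the BM as the first and the CSI as the last choroid row in each column of a binary mask, and all function names, are our assumptions.

```python
def boundary_rows(mask, which):
    """Per-column boundary position from a binary H x W choroid mask.

    which="upper" returns the first choroid row (BM side);
    which="lower" returns the last choroid row (CSI side).
    """
    height, width = len(mask), len(mask[0])
    rows = []
    for j in range(width):
        fg = [i for i in range(height) if mask[i][j]]
        if not fg:
            raise ValueError(f"column {j} contains no choroid pixels")
        rows.append(fg[0] if which == "upper" else fg[-1])
    return rows


def ausde(y_true, y_pred):
    """Average unsigned surface detection error over m columns (in pixels)."""
    assert len(y_true) == len(y_pred)
    m = len(y_true)
    return sum(abs(a - b) for a, b in zip(y_true, y_pred)) / m


gt_mask = [[0, 0, 0],
           [1, 1, 1],
           [1, 1, 1],
           [0, 0, 0]]
pred_mask = [[0, 0, 0],
             [1, 1, 0],
             [1, 1, 1],
             [0, 1, 1]]
for name in ("upper", "lower"):
    print(name, ausde(boundary_rows(gt_mask, name), boundary_rows(pred_mask, name)))
```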

5 Results

In this section, we performed training, validation and testing on the COSTA-H and COSTA-T datasets, and compared the proposed method with state-of-the-art choroid segmentation methods from both qualitative and quantitative perspectives. In addition, we applied the model trained on COSTA-H to COSTA-B to validate the robustness of the proposed method. Furthermore, we applied the proposed method to highly myopic subjects. The segmentation results of all B-scans were then used for 3D reconstruction, from which 3D features were extracted for clinical correlation analysis.

5.1 Qualitative results

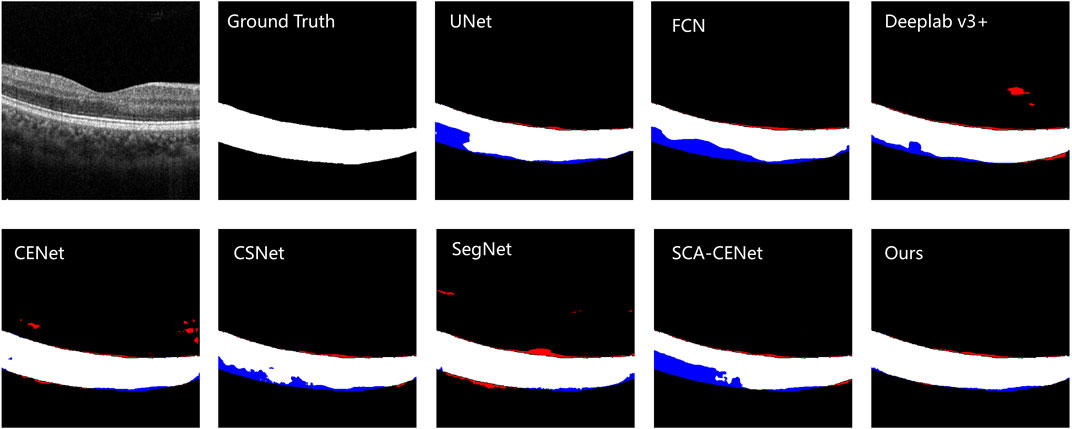

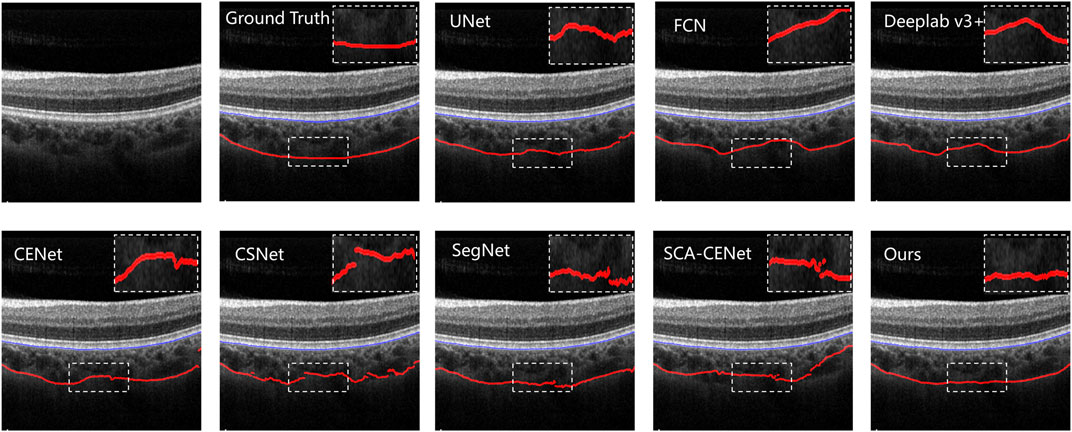

Figure 6 visualizes the results of training and testing on the COSTA-H (Heidelberg) dataset, compared with other popular deep learning methods for choroid segmentation, including U-Net (Ronneberger et al., 2015), FCN (Long et al., 2015), DeepLab v3+ (Chen et al., 2018), SegNet (Badrinarayanan et al., 2017), CE-Net (Gu et al., 2019), CS-Net (Mou et al., 2019), and SCA-CENet (Mao et al., 2020). In Figure 7, the BM and CSI are marked by blue and red lines, respectively. In both the overall segmentation and the boundary extraction (shown in the zoomed-in regions of Figure 7), the proposed method with the BEM performed better than its counterparts, which indicates that reinforcing boundary characteristics is effective.

FIGURE 6. Visualization of an example choroid segmentation result on the COSTA-H dataset. The first image is the original image, the second is the ground truth, and the remaining images are the segmentation results of different methods, each marked in the upper left corner of the image. White denotes correctly segmented choroidal area, red denotes over-segmentation, and blue denotes under-segmentation.

FIGURE 7. Results of different choroid segmentation methods in boundary detection. The name of the method is shown at the upper left corner of each image.

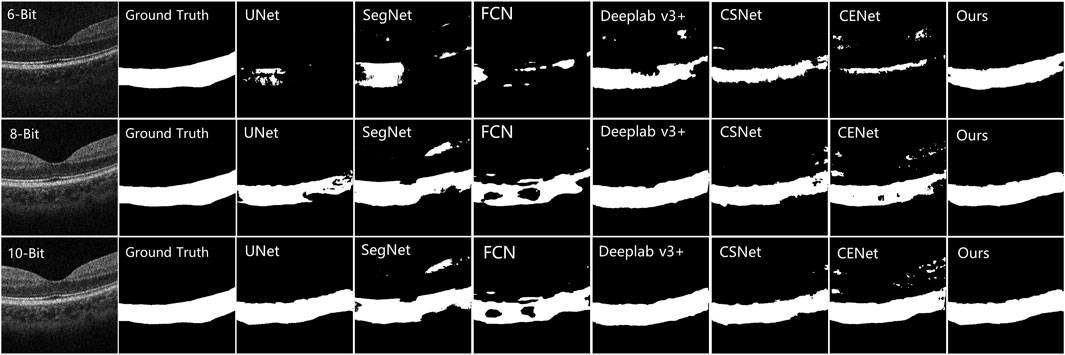

The test results on the COSTA-B dataset are presented in Figure 8, which shows three OCT B-scans with bit depths of 6, 8, and 12 bits (the last having the best image quality). The model that achieved the best segmentation results on the COSTA-H dataset was used to segment these images. The results demonstrate the robustness of the proposed model. Common segmentation methods, which focus on global information and lack detailed features, have difficulty segmenting the full extent of the choroidal layer. In contrast, the proposed method, which enhances boundary features and extracts features from different receptive fields and channels, remains robust across images of differing quality.

FIGURE 8. Comparison results of other choroid segmentation methods in boundary detection. The specific methods are marked in the upper left corner of the image.

5.2 Quantitative results

To verify the advantages of our method quantitatively, we used Dice, IoU, AUSDE, and TD as evaluation metrics. For the COSTA-B test, only Dice and IoU were used, because the segmentation results of many methods did not form clear boundary lines (as shown in Figure 8).
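For reference, Dice and IoU on binary choroid masks can be computed as in the minimal NumPy sketch below. The function name `dice_iou` and the smoothing constant `eps` are illustrative choices of ours, not taken from the authors' released code.

```python
import numpy as np

def dice_iou(pred: np.ndarray, gt: np.ndarray, eps: float = 1e-8) -> tuple[float, float]:
    """Dice coefficient and IoU between two binary masks of the same shape.

    eps (hypothetical smoothing term) makes two empty masks score 1.0
    instead of dividing by zero.
    """
    pred = pred.astype(bool)
    gt = gt.astype(bool)
    inter = np.logical_and(pred, gt).sum()   # |P ∩ G|
    total = pred.sum() + gt.sum()            # |P| + |G|
    union = np.logical_or(pred, gt).sum()    # |P ∪ G|
    dice = (2.0 * inter + eps) / (total + eps)
    iou = (inter + eps) / (union + eps)
    return float(dice), float(iou)
```

The two metrics are monotonically related (Dice = 2·IoU / (1 + IoU)), so they rank methods identically on a single image but can differ when averaged over a test set.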

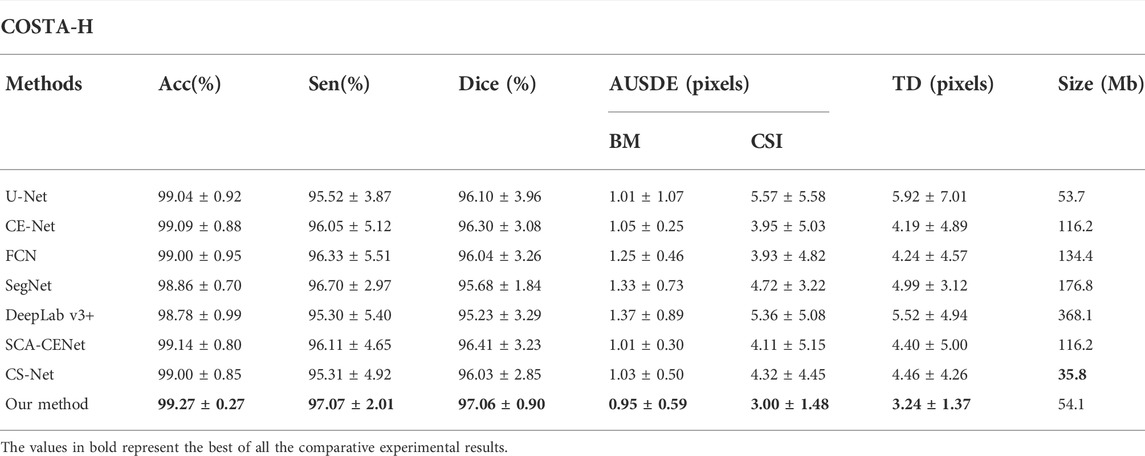

Table 1 compares the quantitative performance of various deep learning methods on the COSTA-H dataset. Our method achieved a Dice coefficient of 97.06% and an IoU of 94.31%, with an AUSDE of 0.9496 for the BM and 3.0029 for the lower boundary (CSI), outperforming the other methods. Adding the BEM and BP-Loss to U-Net reduced the AUSDE of the CSI by 2.57 pixels and the TD by 2.68 pixels, demonstrating the value of boundary extraction.

TABLE 1. Quantitative segmentation results of different deep learning methods on the COSTA-H dataset.
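AUSDE and TD are not defined in this section. Assuming AUSDE is the average unsigned surface detection error, i.e. the mean absolute row (pixel) distance, taken per A-scan column, between the predicted and ground-truth boundary, it could be computed from binary masks as in the sketch below. The helper names `boundaries_from_mask` and `ausde` are hypothetical, and TD is not implemented because its definition is not given here.

```python
import numpy as np

def boundaries_from_mask(mask):
    """Per-column upper (BM) and lower (CSI) boundary rows of a binary choroid mask.

    Columns without any foreground pixel get NaN.
    """
    mask = np.asarray(mask, dtype=bool)
    h = mask.shape[0]
    rows = np.arange(h)[:, None]                 # column vector of row indices
    has = mask.any(axis=0)
    upper = np.where(has, np.where(mask, rows, h).min(axis=0), np.nan)
    lower = np.where(has, np.where(mask, rows, -1).max(axis=0), np.nan)
    return upper, lower

def ausde(pred_rows, gt_rows, um_per_px=None):
    """Mean absolute boundary-row difference over columns where both boundaries exist.

    Returns pixels, or micrometres if um_per_px (axial pixel size) is given.
    """
    pred_rows = np.asarray(pred_rows, dtype=float)
    gt_rows = np.asarray(gt_rows, dtype=float)
    valid = np.isfinite(pred_rows) & np.isfinite(gt_rows)
    if not valid.any():
        return float("nan")
    err = float(np.abs(pred_rows[valid] - gt_rows[valid]).mean())
    return err if um_per_px is None else err * um_per_px
```

Usage: `ausde(boundaries_from_mask(pred)[1], boundaries_from_mask(gt)[1])` gives the CSI error of one B-scan; averaging over B-scans is left to the caller.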

To further evaluate the proposed method, we conducted additional tests on the COSTA-T dataset; the results are shown in Table 2. Our method achieves a Dice coefficient of 92.87% and an IoU of 86.91%; adding the BEM and BP-Loss improves the Dice and IoU by 2.02% and 2.39%, respectively.

TABLE 2. Quantitative segmentation results of different deep learning methods on the COSTA-T dataset.

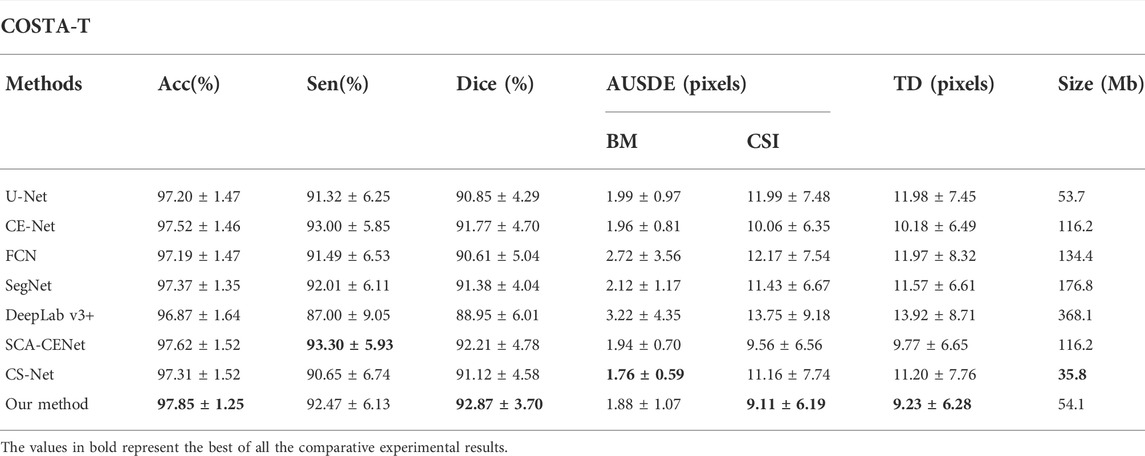

We also selected images with bit depths of 6, 8, 10, and 12 bits from the COSTA-B dataset for evaluation with the Dice and IoU metrics. For each network, the best model trained and validated on the COSTA-H dataset was tested. As Figure 9 shows, the segmentation performance of the other networks degrades markedly as the bit depth of the same image decreases, whereas our method is much less affected. Although all of the tested algorithms obtain better results on 12-bit images than on 6- and 8-bit images, this comparison further validates the robustness of the proposed method.

FIGURE 9. Trend of Dice and IoU results of different segmentation methods on images of different bit depths. (A) and (C) show the results on the COSTA-H dataset, and (B) and (D) show the results on the COSTA-T dataset.
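How the lower-bit-depth COSTA-B images were produced is not described in this section. As an illustrative assumption only, a lower-bit-depth version of a 12-bit B-scan can be simulated by uniform requantization, i.e. discarding the least-significant bits. The function `requantize` below is hypothetical and is not claimed to reproduce the dataset.

```python
import numpy as np

def requantize(img12: np.ndarray, bits: int, src_bits: int = 12) -> np.ndarray:
    """Simulate a `bits`-deep image from a `src_bits`-deep integer image.

    The low (src_bits - bits) bits are dropped, leaving 2**bits grey levels,
    and the levels are shifted back onto the source scale so that images of
    different depths can be displayed and compared on the same range.
    """
    if not 1 <= bits <= src_bits:
        raise ValueError("bits must be in [1, src_bits]")
    shift = src_bits - bits
    img = np.asarray(img12, dtype=np.uint16)
    levels = img >> shift                          # integer levels in [0, 2**bits - 1]
    return (levels << shift).astype(np.uint16)     # multiples of 2**shift on the 12-bit scale
```

For example, `requantize(bscan, 6)` keeps 64 grey levels, which is the kind of quality loss the bit-depth experiment probes.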

5.3 Ablation study

To verify the effectiveness of each branch in the BEM and of the BP-Loss, we conducted ablation experiments on the same datasets, adding the components one at a time starting from the U-Net baseline; the results are shown in Figure 10. On both the COSTA-T and COSTA-H datasets, the Dice and IoU metrics increase gradually as branches are added, indicating that the segmentation benefits from every branch of the BEM.

FIGURE 10. Ablation results on COSTA-H and COSTA-T datasets. The blue bars denote the quantitative results of the baseline network U-Net. The orange bars denote the segmentation results of the network with FEB. The gray bars denote the segmentation results of the network with FEB and CEB. The yellow bars denote the segmentation results of the network with FEB, CEB, and BAB. The red bars denote the segmentation results of the network with FEB, CEB, BAB, and BP-Loss.

After adding the BEM, the IoU on the COSTA-T dataset reaches 86.53%, 3.01% higher than the baseline. With the BP-Loss, it further improves to 86.91%. Similarly, each module and branch contributes to the performance on the COSTA-H dataset.

6 Clinical applications

High myopia is a common visual impairment worldwide. In high myopia, mechanical stretching caused by axial elongation of the eye leads to retinal and choroidal thinning, as well as a variety of pathological changes in the fundus, which can readily progress to pathological myopia (Read et al., 2019; Scherm et al., 2019; Singh et al., 2019). Previous studies have shown that choroidal thickness is significantly lower in highly myopic patients than in healthy subjects, but correlations with other features, such as volume, surface area, the curvature of the BM and CSI, and other 3D features, have not been established. Encouraged by the good performance of the proposed method in the experiments, we applied it to a prospective clinical study in which the choroidal thickness in different regions was extracted and the 3D morphology of the choroid was reconstructed using point clouds.
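The point-cloud reconstruction is not detailed in this section. A minimal sketch, assuming a regular raster scan, is to place every detected boundary pixel at physical coordinates (A-scan position, B-scan position, depth). The function name and the uniform spacings `dx_mm`, `dy_mm`, `dz_mm` are assumptions, not the authors' implementation.

```python
import numpy as np

def boundary_point_cloud(boundary_rows, dx_mm, dy_mm, dz_mm):
    """Convert per-B-scan boundary rows into an (N, 3) point cloud in millimetres.

    boundary_rows: array of shape (n_bscans, n_ascans) with the boundary row
                   index in each A-scan (NaN where no boundary was detected).
    dx_mm: spacing between A-scans within a B-scan (assumed uniform).
    dy_mm: spacing between consecutive B-scans (assumed uniform).
    dz_mm: axial size of one pixel.
    """
    rows = np.asarray(boundary_rows, dtype=float)
    b_idx, a_idx = np.indices(rows.shape)        # B-scan index, A-scan index
    valid = np.isfinite(rows)
    return np.stack(
        [a_idx[valid] * dx_mm, b_idx[valid] * dy_mm, rows[valid] * dz_mm], axis=1
    )
```

For 4.5 mm volumes with 512 B-scans, and assuming the first and last B-scans lie on the edges of the scan area, `dy_mm = 4.5 / 511`, roughly 0.0088 mm. Building one cloud for the BM and one for the CSI gives the two surfaces that bound the choroid.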

1) Dataset: We recruited 20 volunteers with high myopia aged between 20 and 30 years. Both eyes of each volunteer were scanned with a Heidelberg Spectralis device, and volume data were acquired within a 4.5 × 4.5 × 2 mm³ region centered on the macula. Each volume contained 512 B-scans.

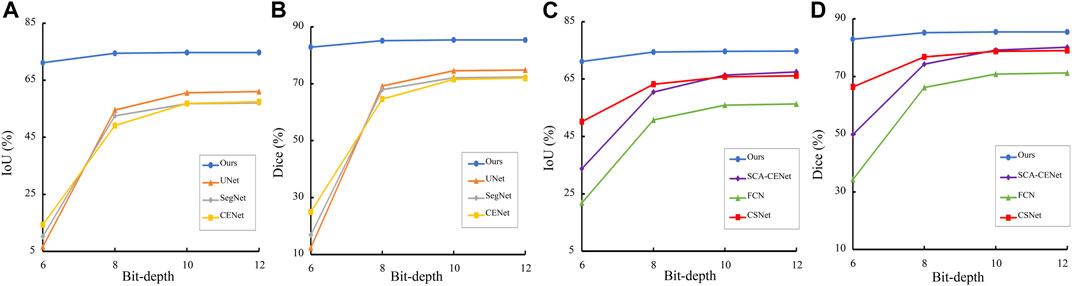

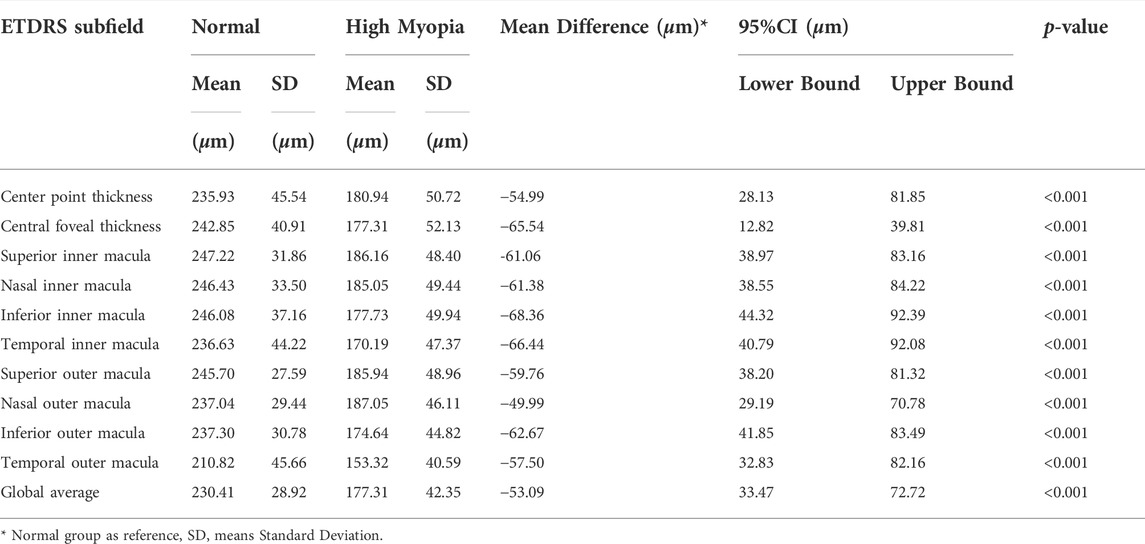

2) Results: Figure 11 shows the distribution of choroidal thickness in different areas of the volume for normal and highly myopic subjects, and Table 3 lists the average choroidal thickness in each macular subfield. The choroid was significantly thinner in the highly myopic subjects than in the normal subjects in all regions (t-test, p < 0.001), with an average choroidal thickness of 230.41 ± 28.92 μm in normal subjects and 177.31 ± 42.35 μm in highly myopic subjects; the thickness in each subfield is given in Table 3. These findings are consistent with the results reported by Wang et al. (2015).

FIGURE 11. Choroidal layer thickness maps in normal and highly myopic subjects using Early Treatment Diabetic Retinopathy Study (ETDRS) circles of 1 mm, 3 mm, and 6 mm. The standard ETDRS grid divides the macula into 9 subfields. CFT: Central foveal thickness; TIM: Temporal inner macula; NIM: Nasal inner macula; SIM: Superior inner macula; IIM: Inferior inner macula; TOM: Temporal outer macula; NOM: Nasal outer macula; SOM: Superior outer macula; IOM: Inferior outer macula. (A) Thickness map of a normal subject; (B) the 9 macular subfields; (C,D) average choroidal thickness [μm] of each subfield in normal and highly myopic subjects, respectively.
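Subfield averages like those in Figure 11 can be obtained by masking an en-face thickness map with the ETDRS grid (circles of 1, 3 and 6 mm diameter, split into four quadrants). The sketch below is our own illustration: it assumes the map's row index increases inferiorly and exposes the nasal side as a parameter, since orientation depends on the eye and on how the volume was exported. Pixels that are NaN or outside the map are excluded, so outer subfields extending beyond a 4.5 mm scan are averaged over their covered part only.

```python
import numpy as np

def etdrs_subfield_means(thickness_um, spacing_mm, center, nasal_on_right=True):
    """Mean thickness in the 9 ETDRS subfields of a 2D en-face thickness map.

    thickness_um: 2D map in micrometres (NaN where undefined).
    spacing_mm:   (row_spacing, col_spacing) in millimetres.
    center:       (row, col) of the foveal centre.
    """
    t = np.asarray(thickness_um, dtype=float)
    rows, cols = np.indices(t.shape)
    dy = (rows - center[0]) * spacing_mm[0]          # positive = inferior (assumed)
    dx = (cols - center[1]) * spacing_mm[1]          # positive = right side of the map
    if not nasal_on_right:
        dx = -dx                                     # make positive dx point nasally
    r = np.hypot(dx, dy)
    ang = np.degrees(np.arctan2(-dy, dx)) % 360      # 0 nasal, 90 superior, 180 temporal, 270 inferior
    quadrants = {
        "N": (ang >= 315) | (ang < 45),
        "S": (ang >= 45) & (ang < 135),
        "T": (ang >= 135) & (ang < 225),
        "I": (ang >= 225) & (ang < 315),
    }
    rings = {"I": (r >= 0.5) & (r < 1.5), "O": (r >= 1.5) & (r <= 3.0)}
    valid = np.isfinite(t)

    def mean_in(mask):
        vals = t[mask & valid]
        return float(vals.mean()) if vals.size else float("nan")

    out = {"CFT": mean_in(r < 0.5)}                  # central 1 mm disc
    for ring, ring_mask in rings.items():
        for quad, quad_mask in quadrants.items():
            out[quad + ring + "M"] = mean_in(ring_mask & quad_mask)   # e.g. "TIM", "SOM"
    return out
```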

TABLE 3. Average choroidal thickness and 95% CI of different Early Treatment Diabetic Retinopathy Study (ETDRS) subfields in normal and highly myopic subjects.
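The text reports t-test p-values and Table 3 lists 95% CIs, but the exact test variant is not specified. The SciPy sketch below assumes an independent two-sample Student's t-test and a t-based confidence interval for the mean; pass `equal_var=False` for Welch's test. It treats every value as independent, which may not hold when both eyes of the same subject are included. Function names are our own.

```python
import numpy as np
from scipy import stats

def mean_ci95(values):
    """Sample mean and two-sided 95% confidence interval based on the t distribution."""
    x = np.asarray(values, dtype=float)
    m = x.mean()
    se = x.std(ddof=1) / np.sqrt(x.size)             # standard error of the mean
    half = stats.t.ppf(0.975, df=x.size - 1) * se
    return float(m), float(m - half), float(m + half)

def compare_groups(normal, myopic, equal_var=True):
    """Independent two-sample t-test; returns (t statistic, two-sided p-value)."""
    res = stats.ttest_ind(normal, myopic, equal_var=equal_var)
    return float(res.statistic), float(res.pvalue)
```

Applied per subfield (for example to the per-eye CFT values of each group), this yields the kind of mean, CI and p-value entries summarized in Table 3.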

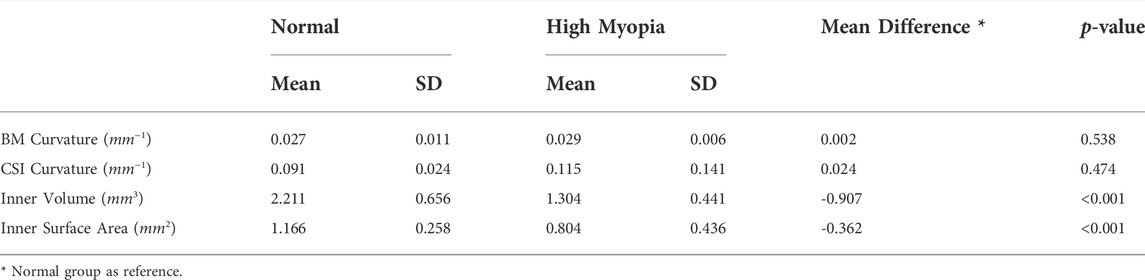

Current studies on the correlation between choroidal morphology and disease rarely consider the 3D features of the choroid. To fill this gap, we reconstructed the 3D morphology of the choroid and extracted its volume, surface area, and surface curvature using 3D point clouds. The average choroidal volume within the central 3 × 3 × 2 mm³ macular region was 2.211 ± 0.656 mm³ in the normal subjects and 1.304 ± 0.441 mm³ in the highly myopic subjects (p < 0.001). This is consistent with the findings of Barteselli et al. (2012).
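The authors compute volume from point clouds; a simpler grid-based alternative, shown here only as an illustration, integrates an en-face thickness map over the region of interest: volume equals the sum of thickness times pixel area. Both helpers below are hypothetical, and the square window is assumed to fit inside the map.

```python
import numpy as np

def crop_centered(arr, spacing_mm, center, side_mm=3.0):
    """Crop an approximately side_mm x side_mm window centred on (row, col)."""
    nr = int(round(side_mm / spacing_mm[0]))
    nc = int(round(side_mm / spacing_mm[1]))
    r0 = center[0] - nr // 2
    c0 = center[1] - nc // 2
    if r0 < 0 or c0 < 0 or r0 + nr > arr.shape[0] or c0 + nc > arr.shape[1]:
        raise ValueError("window extends beyond the map")
    return arr[r0:r0 + nr, c0:c0 + nc]

def choroid_volume_mm3(thickness_um, spacing_mm):
    """Volume (mm³) under a thickness map: sum of thickness (mm) times pixel area (mm²).

    NaN pixels (undefined thickness) contribute nothing.
    """
    t = np.asarray(thickness_um, dtype=float)
    pixel_area_mm2 = spacing_mm[0] * spacing_mm[1]
    return float(np.nansum(t) / 1000.0 * pixel_area_mm2)
```

As a sanity check, a uniform 200 μm choroid over a 3 × 3 mm window gives 0.2 mm × 9 mm² = 1.8 mm³.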

We also calculated the surface area and curvature of the upper (BM) and lower (CSI) choroidal boundaries; the results are shown in Table 4. The choroidal surface areas differ significantly between highly myopic and normal subjects, whereas the differences in curvature are less significant.
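One common way to measure the area of a boundary surface, offered here as an illustration rather than the authors' point-cloud procedure, is to treat the boundary depth as a height field z(x, y) on the scan grid and sum the areas of two triangles per grid cell. Heights must be in the same unit as the lateral spacing (e.g. boundary row × axial pixel size, in mm). The function name is hypothetical.

```python
import numpy as np

def height_map_area(z_mm, dx_mm, dy_mm):
    """Surface area of z(x, y) sampled on a regular grid, via two triangles per cell.

    Triangles touching NaN heights are skipped.
    """
    z = np.asarray(z_mm, dtype=float)
    ny, nx = z.shape
    y, x = np.mgrid[0:ny, 0:nx]
    p = np.stack([x * dx_mm, y * dy_mm, z], axis=-1)   # (ny, nx, 3) vertex coordinates
    p00, p10 = p[:-1, :-1], p[:-1, 1:]                  # cell corners
    p01, p11 = p[1:, :-1], p[1:, 1:]
    a1 = 0.5 * np.linalg.norm(np.cross(p10 - p00, p01 - p00), axis=-1)
    a2 = 0.5 * np.linalg.norm(np.cross(p10 - p11, p01 - p11), axis=-1)
    return float(np.nansum(a1) + np.nansum(a2))
```

A flat surface returns its planar area, and a plane tilted at 45° returns √2 times that, which is a quick check that the spacing and units are consistent.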

7 Discussion and conclusion

With the emergence and growing popularity of deep learning, several retinal layer segmentation methods have been developed during the last decade, and some have been applied to choroidal segmentation. However, because early OCT techniques had limited imaging depth and low contrast, the application of deep learning methods to choroidal segmentation was limited. As continuous innovation in OCT equipment now allows the whole choroid to be rendered in a B-scan, it is natural to apply methods developed for retinal layer segmentation to choroidal layer segmentation. However, even with the most recent improvements in OCT imaging, the CSI is still not as clear as the retinal boundaries. Therefore, models developed for retinal layer segmentation tend to produce ambiguous results at the choroidal boundary.

Recognizing the limitations of existing models, we aim to develop a method that automatically segments the choroidal layer while handling its ambiguous boundary. We make the segmentation network focus on boundary features by adding a boundary enhancement module (BEM) to the backbone segmentation network. The module has three branches that enhance boundary features from different perspectives: expanding the receptive field using dilated convolution, activating boundary features using the boundary activation function, and extracting the boundary features of different channels using channel convolution.

To embed expert knowledge into the proposed automatic choroid segmentation model, we extract boundary enhancement points from the ground-truth boundary, generate a soft point map, and introduce a boundary perceptual loss (BP-Loss), so that boundary-region information derived from the ground truth is fed back to the segmentation network, enabling accurate segmentation of the choroidal layer.
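The point-selection rule, kernel shape and width, and the way the map enters the BP-Loss are not specified in this section. As a sketch under those assumptions, a soft point map can be built by sampling points on the ground-truth CSI at a fixed column step and placing a Gaussian at each, combined by a pixel-wise maximum so every key point has a peak value of 1. The names `csi_key_points` and `soft_point_map` and the parameters `step` and `sigma` are hypothetical.

```python
import numpy as np

def csi_key_points(mask, step=16):
    """Hypothetical sampling: lowest foreground row (the CSI) in every `step`-th column."""
    mask = np.asarray(mask, dtype=bool)
    pts = []
    for c in range(0, mask.shape[1], step):
        rows = np.flatnonzero(mask[:, c])
        if rows.size:                                 # skip columns without choroid
            pts.append((int(rows[-1]), c))
    return pts

def soft_point_map(shape, points, sigma=3.0):
    """Gaussian heatmap centred on (row, col) key points, merged by pixel-wise maximum."""
    h, w = shape
    rows, cols = np.indices((h, w))
    heat = np.zeros((h, w), dtype=float)
    for r, c in points:
        g = np.exp(-((rows - r) ** 2 + (cols - c) ** 2) / (2.0 * sigma ** 2))
        heat = np.maximum(heat, g)
    return heat
```

Using the maximum rather than a sum keeps overlapping Gaussians from exceeding 1, so the map can be read as a per-pixel boundary weight; points on the BM could be added in the same way.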

In addition, to further validate the clinical applicability of the method, we went beyond previous studies by analyzing the choroid not only from the two-dimensional perspective of thickness but also from a three-dimensional perspective, comparing the 3D morphology of the choroid between highly myopic and normal subjects. These results demonstrate the effectiveness of the proposed method, which has the potential to improve understanding of the pathogenesis of eye diseases related to morphological changes of the choroid (e.g., high myopia) and thereby support early screening and intervention.

However, this work has limitations. First, some of the normal volunteers may have mild myopia that does not meet the definition of high myopia, which may affect the statistical analysis. Second, the validation dataset could be extended, both in data volume and in disease types, such as glaucoma and pathological myopia. Third, our method is less suitable for multi-layer (multi-class) segmentation tasks: because the selected boundary enhancement points are not classified by layer, the soft point map construction and the boundary enhancement module might not be suitable for multi-layer segmentation of retinal OCT images. In future work, the model may be improved by assigning different weights to the boundary points, so that the type and number of points change adaptively. The model might then be applied to both binary and multi-class segmentation tasks.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Ethics statement

The studies involving human participants were reviewed and approved by Ningbo Institute of Materials Technology and Engineering, Chinese Academy of Sciences. The patients/participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author contributions

QY and YM designed this study, analyzed the data, and revised the manuscript; both are corresponding authors. WW and YG carried out this study, performed the research, and wrote the manuscript. HH and YZ helped analyze part of the data. JZ and PS developed the computational pipeline and revised the manuscript. All authors read, edited, and approved the final manuscript.

Funding

This work was supported in part by the Zhejiang Postdoctoral Scientific Research Project (ZJ2022118), in part by the General Research Program of the Zhejiang Provincial Department of Health (2021PY073), in part by the Traditional Chinese Medicine Project of Zhejiang Province (2021ZB268), in part by the National Science Foundation Program of China (61906181, 62272444, and 62103398), in part by the Zhejiang Provincial Natural Science Foundation of China (LR22F020008), in part by the Ningbo Natural Science Foundation (2022Z127), and in part by the Key Research and Development Program of Zhejiang Province (2020C03036).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Agrawal, R., Ding, J., Sen, P., Rousselot, A., Chan, A., Nivison-Smith, L., et al. (2020). Exploring choroidal angioarchitecture in health and disease using choroidal vascularity index. Prog. Retin. Eye Res. 77, 100829. doi:10.1016/j.preteyeres.2020.100829

Badrinarayanan, V., Kendall, A., and Cipolla, R. (2017). SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 39, 2481–2495. doi:10.1109/TPAMI.2016.2644615

Barteselli, G., Chhablani, J., El-Emam, S., Wang, H., Chuang, J., Kozak, I., et al. (2012). Choroidal volume variations with age, axial length, and sex in healthy subjects: A three-dimensional analysis. Ophthalmology 119, 2572–2578. doi:10.1016/j.ophtha.2012.06.065

Cedrone, C., Nucci, C., Scuderi, G., Ricci, F., Cerulli, A., and Culasso, F. (2006). Prevalence of blindness and low vision in an Italian population: A comparison with other European studies. Eye 20, 661–667. doi:10.1038/sj.eye.6701934

Chai, Z., Zhou, K., Yang, J., Ma, Y., Chen, Z., Gao, S., et al. (2020). “Perceptual-assisted adversarial adaptation for choroid segmentation in optical coherence tomography,” in 2020 IEEE 17th International Symposium on Biomedical Imaging (ISBI), Iowa City, IA, United States, April 3–7, 2020 (IEEE), 1966.

Chen, H.-J., Huang, Y.-L., Tse, S.-L., Hsia, W.-P., Hsiao, C.-H., Wang, Y., et al. (2022). Application of artificial intelligence and deep learning for choroid segmentation in myopia. Transl. Vis. Sci. Technol. 11, 38. doi:10.1167/tvst.11.2.38

Chen, L.-C., Zhu, Y., Papandreou, G., Schroff, F., and Adam, H. (2018). “Encoder-decoder with atrous separable convolution for semantic image segmentation,” in Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, September 8–14, 2018, 801.

Chen, Q., Fan, W., Niu, S., Shi, J., Shen, H., and Yuan, S. (2015). Automated choroid segmentation based on gradual intensity distance in HD-OCT images. Opt. Express 23, 8974–8994. doi:10.1364/OE.23.008974

Chen, S., Wang, W., Gao, X., Li, Z., Huang, W., Li, X., et al. (2014). Changes in choroidal thickness after trabeculectomy in primary angle closure glaucoma. Investig. Ophthalmol. Vis. Sci. 55, 2608–2613. doi:10.1167/iovs.13-13595

Choma, M. A., Sarunic, M. V., Yang, C., and Izatt, J. A. (2003). Sensitivity advantage of swept source and Fourier domain optical coherence tomography. Opt. Express 11, 2183–2189. doi:10.1364/oe.11.002183

Danesh, H., Kafieh, R., Rabbani, H., and Hajizadeh, F. (2014). Segmentation of choroidal boundary in enhanced depth imaging OCTs using a multiresolution texture based modeling in graph cuts. Comput. Math. Methods Med. 2014, 479268. doi:10.1155/2014/479268

Dang, Q.-V., and Lee, G.-S. (2021). Document image binarization with stroke boundary feature guided network. IEEE Access 9, 36924–36936. doi:10.1109/access.2021.3062904

Gu, Z., Cheng, J., Fu, H., Zhou, K., Hao, H., Zhao, Y., et al. (2019). CE-Net: Context encoder network for 2D medical image segmentation. IEEE Trans. Med. Imaging 38, 2281–2292. doi:10.1109/TMI.2019.2903562

Hao, Q., Zhou, K., Yang, J., Hu, Y., Chai, Z., Ma, Y., et al. (2020). High signal-to-noise ratio reconstruction of low bit-depth optical coherence tomography using deep learning. J. Biomed. Opt. 25, 123702. doi:10.1117/1.JBO.25.12.123702

He, F., Chun, R. K. M., Qiu, Z., Yu, S., Shi, Y., To, C. H., et al. (2021). Choroid segmentation of retinal OCT images based on CNN classifier and l2-lq fitter. Comput. Math. Methods Med. 2021, 8882801. doi:10.1155/2021/8882801

Hu, Z., Wu, X., Ouyang, Y., Ouyang, Y., and Sadda, S. R. (2013). Semiautomated segmentation of the choroid in spectral-domain optical coherence tomography volume scans. Investig. Ophthalmol. Vis. Sci. 54, 1722–1729. doi:10.1167/iovs.12-10578

Huang, D., Swanson, E. A., Lin, C. P., Schuman, J. S., Stinson, W. G., Chang, W., et al. (1991). Optical coherence tomography. Science 254, 1178–1181. doi:10.1126/science.1957169

Kim, J. T., Lee, D. H., Joe, S. G., Kim, J.-G., and Yoon, Y. H. (2013). Changes in choroidal thickness in relation to the severity of retinopathy and macular edema in type 2 diabetic patients. Investig. Ophthalmol. Vis. Sci. 54, 3378–3384. doi:10.1167/iovs.12-11503

Lee, H. J., Kim, J. U., Lee, S., Kim, H. G., and Ro, Y. M. (2020). “Structure boundary preserving segmentation for medical image with ambiguous boundary,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, Online, United States, June 14–19, 2020, 4817–4826.

Long, J., Shelhamer, E., and Darrell, T. (2015). “Fully convolutional networks for semantic segmentation,” in Proceedings of the IEEE conference on computer vision and pattern recognition, Boston, MA, United States, June 7–12, 2015, 3431

Mao, X., Zhao, Y., Chen, B., Ma, Y., Gu, Z., Gu, S., et al. (2020). “Deep learning with skip connection attention for choroid layer segmentation in OCT images,” in 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Montreal, QC, Canada, July 20–24, 2020 (IEEE), 1641.

Masood, S., Fang, R., Li, P., Li, H., Sheng, B., Mathavan, A., et al. (2019). Automatic choroid layer segmentation from optical coherence tomography images using deep learning. Sci. Rep. 9, 3058–3118. doi:10.1038/s41598-019-39795-x

Mazzaferri, J., Beaton, L., Hounye, G., Sayah, D. N., and Costantino, S. (2017). Open-source algorithm for automatic choroid segmentation of OCT volume reconstructions. Sci. Rep. 7, 42112–42210. doi:10.1038/srep42112

Moschos, M. M., and Chatziralli, I. P. (2018). Evaluation of choroidal and retinal thickness changes in Parkinson’s disease using spectral domain optical coherence tomography. Seminars Ophthalmol. 33, 494. doi:10.1080/08820538.2017.1307423

Mou, L., Zhao, Y., Chen, L., Cheng, J., Gu, Z., Hao, H., et al. (2019). “CS-Net: Channel and spatial attention network for curvilinear structure segmentation,” in International Conference on Medical Image Computing and Computer-Assisted Intervention, Shenzhen, China, October 13–17, 2019 (Springer), 721.

Nickla, D. L., and Wallman, J. (2010). The multifunctional choroid. Prog. Retin. Eye Res. 29, 144–168. doi:10.1016/j.preteyeres.2009.12.002

Oduntan, A. (2005). Prevalence and causes of low vision and blindness worldwide. Afr. Vis. eye health 64, 44–57. doi:10.4102/aveh.v64i2.214

Paszke, A., Gross, S., Massa, F., Lerer, A., Bradbury, J., Chanan, G., et al. (2019). “Pytorch: An imperative style, high-performance deep learning library,” in The Conference in NeurIPS 2019, Vancouver Convention Center, Canada, November 8–14, 2019 32, 8026. Available at: http://papers.neurips.cc/paper/9015-pytorch-an-imperative-style-high-performance-deep-learning-library.pdf.

Read, S. A., Fuss, J. A., Vincent, S. J., Collins, M. J., and Alonso-Caneiro, D. (2019). Choroidal changes in human myopia: Insights from optical coherence tomography imaging. Clin. Exp. Optom. 102, 270–285. doi:10.1111/cxo.12862

Regatieri, C. V., Branchini, L., Carmody, J., Fujimoto, J. G., and Duker, J. S. (2012). Choroidal thickness in patients with diabetic retinopathy analyzed by spectral-domain optical coherence tomography. Retina 32, 563.

Ronneberger, O., Fischer, P., and Brox, T. (2015). “U-net: Convolutional networks for biomedical image segmentation,” in International Conference on Medical image computing and computer-assisted intervention, Munich, Germany, October 5–9, 2015 (Springer), 234

Satue, M., Obis, J., Alarcia, R., Orduna, E., Rodrigo, M. J., Vilades, E., et al. (2018). Retinal and choroidal changes in patients with Parkinson’s disease detected by swept-source optical coherence tomography. Curr. Eye Res. 43, 109–115. doi:10.1080/02713683.2017.1370116

Scherm, P., Pettenkofer, M., Maier, M., Lohmann, C. P., and Feucht, N. (2019). Choriocapillary blood flow in myopic subjects measured with OCT angiography. Ophthalmic Surg. Lasers Imaging Retina 50, e133–e139. doi:10.3928/23258160-20190503-13

Simonyan, K., and Zisserman, A. (2014). Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556.

Singh, S. R., Vupparaboina, K. K., Goud, A., Dansingani, K. K., and Chhablani, J. (2019). Choroidal imaging biomarkers. Surv. Ophthalmol. 64, 312–333. doi:10.1016/j.survophthal.2018.11.002

Sui, X., Zheng, Y., Wei, B., Bi, H., Wu, J., Pan, X., et al. (2017). Choroid segmentation from optical coherence tomography with graph-edge weights learned from deep convolutional neural networks. Neurocomputing 237, 332–341. doi:10.1016/j.neucom.2017.01.023

Tian, J., Marziliano, P., Baskaran, M., Tun, T. A., and Aung, T. (2013). Automatic segmentation of the choroid in enhanced depth imaging optical coherence tomography images. Biomed. Opt. Express 4, 397–411. doi:10.1364/BOE.4.000397

Wang, C., Wang, Y. X., and Li, Y. (2017). Automatic choroidal layer segmentation using Markov random field and level set method. IEEE J. Biomed. Health Inf. 21, 1694–1702. doi:10.1109/JBHI.2017.2675382

Wang, G., Zuluaga, M. A., Li, W., Pratt, R., Patel, P. A., Aertsen, M., et al. (2018). DeepIGeoS: A deep interactive geodesic framework for medical image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 41, 1559–1572. doi:10.1109/TPAMI.2018.2840695

Wang, J., Wei, L., Wang, L., Zhou, Q., Zhu, L., and Qin, J. (2021). “Boundary-aware transformers for skin lesion segmentation,” in International Conference on Medical Image Computing and Computer-Assisted Intervention, Strasbourg, France, September 27–October 1, 2021 (Springer), 206.

Wang, S., Wang, Y., Gao, X., Qian, N., and Zhuo, Y. (2015). Choroidal thickness and high myopia: A cross-sectional study and meta-analysis. BMC Ophthalmol. 15, 70–10. doi:10.1186/s12886-015-0059-2

Wei, S., Zhang, T., and Ji, S. (2021). A concentric loop convolutional neural network for manual delineation level building boundary segmentation from remote sensing images. IEEE Trans. Geosci. Remote Sens. 60, 1–11. doi:10.1109/tgrs.2021.3126704

Wong, I. Y., Koizumi, H., and Lai, W. W. (2011). Enhanced depth imaging optical coherence tomography. Ophthalmic Surg. Lasers Imaging 42, S75–S84. doi:10.3928/15428877-20110627-07

Wong, Y.-L., and Saw, S.-M. (2016). Epidemiology of pathologic myopia in Asia and worldwide. Asia. Pac. J. Ophthalmol. 5, 394–402. doi:10.1097/APO.0000000000000234

Xiang, D., Tian, H., Yang, X., Shi, F., Zhu, W., Chen, H., et al. (2018). Automatic segmentation of retinal layer in OCT images with choroidal neovascularization. IEEE Trans. Image Process. 27, 5880–5891. doi:10.1109/TIP.2018.2860255

Yan, Q., Gu, Y., Zhao, J., Wu, W., Ma, Y., Liu, J., et al. (2022). Automatic choroid layer segmentation in OCT images via context efficient adaptive network. Appl. Intell. (Dordr). 1–13. doi:10.1007/s10489-022-03723-w

Yaqoob, Z., Wu, J., and Yang, C. (2005). Spectral domain optical coherence tomography: A better oct imaging strategy. Biotechniques 39, S6–S13. doi:10.2144/000112090

Yiu, G., Chiu, S. J., Petrou, P. A., Stinnett, S., Sarin, N., Farsiu, S., et al. (2015). Relationship of central choroidal thickness with age-related macular degeneration status. Am. J. Ophthalmol. 159, 617–626. doi:10.1016/j.ajo.2014.12.010

Yu, F., and Koltun, V. (2015). Multi-scale context aggregation by dilated convolutions. arXiv preprint arXiv:1511.07122.

Yu, M., Pei, K., Li, X., Wei, X., Wang, C., and Gao, J. (2022). FBCU-Net: A fine-grained context modeling network using boundary semantic features for medical image segmentation. Comput. Biol. Med. 150, 106161. doi:10.1016/j.compbiomed.2022.106161

Zhang, H., Yang, J., Zhou, K., Li, F., Hu, Y., Zhao, Y., et al. (2020). Automatic segmentation and visualization of choroid in oct with knowledge infused deep learning. IEEE J. Biomed. Health Inf. 24, 3408–3420. doi:10.1109/JBHI.2020.3023144

Keywords: choroidal layer, optical coherence tomography, boundary segmentation, deep learning, high myopia

Citation: Wu W, Gong Y, Hao H, Zhang J, Su P, Yan Q, Ma Y and Zhao Y (2022) Choroidal layer segmentation in OCT images by a boundary enhancement network. Front. Cell Dev. Biol. 10:1060241. doi: 10.3389/fcell.2022.1060241

Received: 03 October 2022; Accepted: 25 October 2022;

Published: 10 November 2022.

Edited by:

Weihua Yang, Jinan University, China

Reviewed by:

Guang Yang, Imperial College London, United Kingdom

Lei Wang, Wenzhou Medical University, China

Zhili Chen, Shenyang Jianzhu University, China

Copyright © 2022 Wu, Gong, Hao, Zhang, Su, Yan, Ma and Zhao. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Qifeng Yan, yanqifeng@nimte.ac.cn; Yuhui Ma, mayuhui@nimte.ac.cn

†These authors have contributed equally to this work

Wenjun Wu, Yan Gong3†, Jiong Zhang, Pan Su, Yuhui Ma, Yitian Zhao