94% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

REVIEW article

Front. Cell Dev. Biol., 28 August 2020

Sec. Molecular and Cellular Pathology

Volume 8 - 2020 | https://doi.org/10.3389/fcell.2020.00673

The number of scientific publications in the literature is steadily growing, containing our knowledge in the biomedical, health, and clinical sciences. Since there is currently no automatic archiving of the obtained results, much of this information remains buried in textual details not readily available for further usage or analysis. For this reason, natural language processing (NLP) and text mining methods are used for information extraction from such publications. In this paper, we review practices for Named Entity Recognition (NER) and Relation Detection (RD), allowing, e.g., to identify interactions between proteins and drugs or genes and diseases. This information can be integrated into networks to summarize large-scale details on a particular biomedical or clinical problem, which is then amenable for easy data management and further analysis. Furthermore, we survey novel deep learning methods that have recently been introduced for such tasks.

With the exploding volume of data that has become available in the form of unstructured text articles, Biomedical Named Entity Recognition (BioNER) and Biomedical Relation Detection (BioRD) are becoming increasingly important for biomedical research (Leser and Hakenberg, 2005). Currently, there are over 30 million publications in PubMed (Bethesda, 2005) and over 25 million references in Medline (Bethesda, 2019). This amount makes it difficult to keep up with the literature even in more specific specialized fields. For this reason, the usage of BioNER and BioRD for tagging entities and extracting associations is indispensable for biomedical text mining and knowledge extraction.

Named-entity recognition (NER), in general, (also known as entity identification or entity extraction) is a subtask of information extraction (text analytics) that aims at finding and categorizing specific entities in text, e.g., nouns. The phrase “Named Entity” was coined in 1996 at the 6th Message Understanding Conference (MUC) when the extraction of information from unstructured text became an important problem (Nadeau and Sekine, 2007). In the linguistic domain, Named Entity Recognition involves the automatic scanning through unstructured text to locate “entities,” for term normalization and classification into categories, e.g., as person names, organizations (such as companies, government organizations, committees.), locations (such as cities, countries, rivers) or date and time expressions (Mansouri et al., 2008). In contrast, in the biomedical domain, entities are grouped into classes such as genes/proteins, drugs, adverse effects, metabolites, diseases, tissues, SNPs, organs, toxins, food, or pathways. Since the identification of named entities is usually followed by their classification into standard or normalized terms, it is also referred to as “Named Entity Recognition and Classification” (NERC). Hence, both terms, i.e., NER and NERC, are frequently used interchangeably. One reason why BioNER is challenging is the non-standard usage of abbreviations, synonymous, homonyms, ambiguities, and the frequent use of phrases describing “entities” (Leser and Hakenberg, 2005). An example of the latter is the neuropsychological condition Alice in wonderland syndrome, which requires the detection of a chain of words. For all these reasons, BioNER has undoubtedly become an invaluable tool in research where one has to scan through millions of unstructured text corpora for finding selective information.

In biomedical context, Named Entities Recognition is often followed Relation Detection (RD) (also known as relation extraction or entity association) (Bach and Badaskar, 2007), i.e., connecting various biomedical entities with each other to find meaningful interactions that can be further explored. Due to a large number of different named entity classes in the biomedical field, there is a combinatorial explosion between those entities. Hence, using biological experiments to determine which of these relationships are the most significant ones would be too costly and time-consuming. However, by parsing millions of biomedical research articles using computational approaches, it is possible to identify millions of such associations for creating networks. For instance, identifying the interactions of proteins allows the construction of protein-protein interaction networks. Similarly, one can locate gene-disease relations allowing to bridge molecular information and phenotype information. As such, relation networks provide the possibility to narrow down previously-unknown and intriguing connections to explore further with the help of previously established associations. Moreover, they also provide a global view on different biological entities and their interactions, such as disease, genes, food, drugs, side effects, pathways, and toxins, opening new routes of research.

Despite the importance of NER and RD being a prerequisite for many text mining-based machine learning tasks, survey articles that provide dedicated discussions of how Named Entity Recognition and Relations Detection work, are scarce. Specifically, most review articles (e.g., Nadeau and Sekine, 2007; Goyal et al., 2018; Song, 2018), focus on general approaches for NER that are not specific to the biomedical field or entity relation detection. In contrast, the articles by Leser and Hakenberg (2005) and Eltyeb and Salim (2014) focus only on biomedical and chemical NER, whereas (Li et al., 2013; Vilar et al., 2017) only focus on RD. To address this shortcoming, in this paper, we review both NER and RD methods, since efficient RD depends heavily on NER. Furthermore, we also cover novel approaches based on deep learning (LeCun et al., 2015), which have only recently been applied in this context.

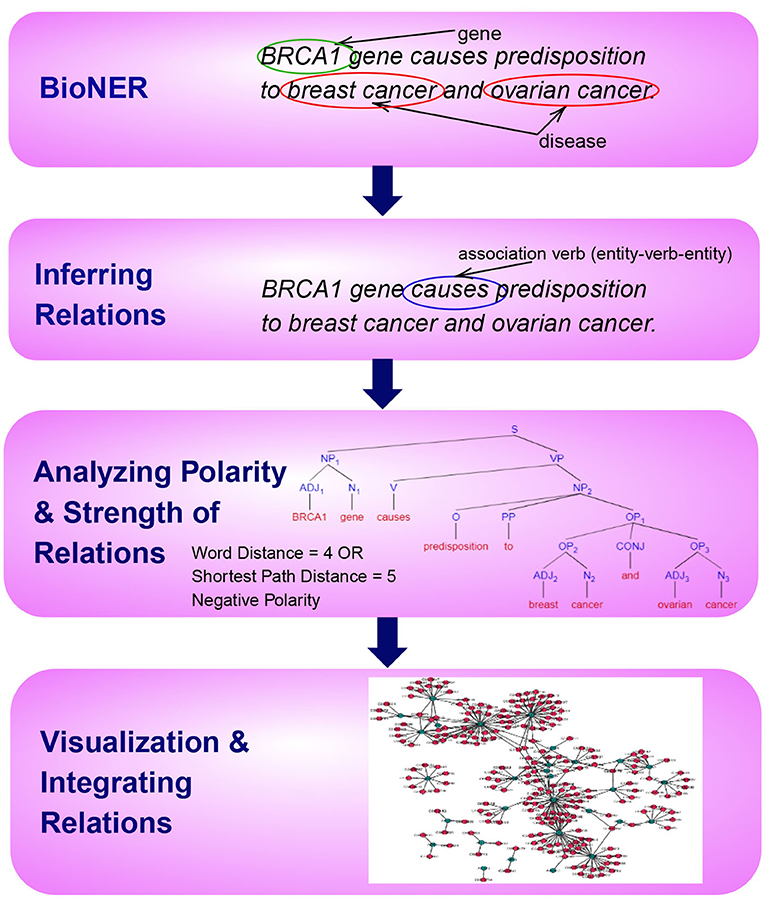

This paper is organized according to the principle steps involved in named entity recognition and relation extraction, shown in Figure 1. Specifically, the first step involves the tagging of entities of biomedical interest, as shown in the figure for the example sentence “BRCA1 gene causes predisposition to breast cancer and ovarian cancer.” Here the tagged entities are BRCA1, Breast Cancer, and Ovarian Cancer. In the next step, relationships between these entities are inferred using several techniques, such as association indicating verbs as illustrated in the example. Here the verb causes is identified as pointing to a possible association. In the subsequent step, we aim to distinguish sentence polarity and strength of an inferred relationship. For instance, in the above sentence, the polarity is negative, i.e., indicating an unfavorable relation between the BRCA1 gene and the tagged disease and the strength of relationship could be extracted by either shortest path in the sentence dependency tree or by a simple word distance as shown in the example. Finally, it is favorable to visualize these extracted relations with their responding strengths in a graph, facilitating the exploration and discovery of both direct associations and indirect interactions, as depicted in Figure 1.

Figure 1. An overview of the principle steps for BioNER and Relation Detection and Analysis. As an example, the sentence “BRCA1 gene causes predisposition to breast cancer and ovarian cancer” is used to visualize each step.

As such, in section 2, we survey biomedical Named Entity Recognition by categorizing different analysis approaches according to the data they require. Then we review relation inferring methods in section 3, strength, and polarity analysis in section 4 and Data Integration and Visualization in section 5. We will also discuss applications, tools, and future outlook in NER and RD in the sections that follow.

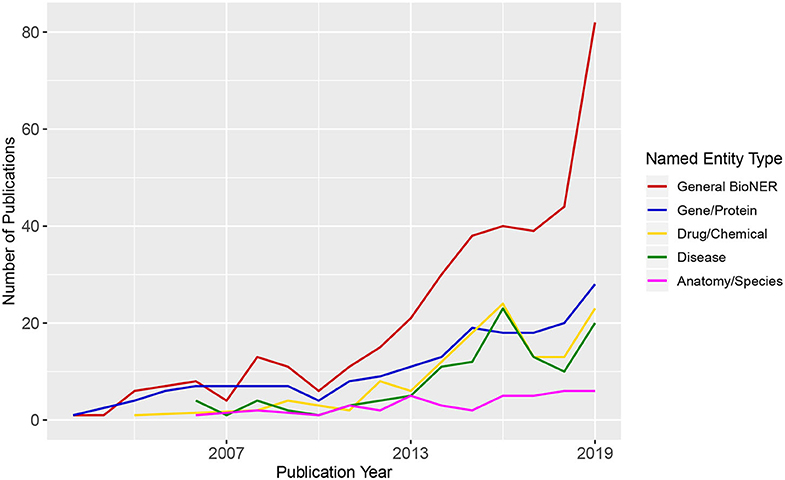

BioNER is the first step in relation extraction between biological entities that are of particular interest for medical research (e.g., gene/disease or disease/drug). In Figure 2, we show an overview of trends in BioNER research in the form of scientific publication counts. We extracted the details of the publications that correspond to several combinations of terms related to “Biomedical Named Entity Recognition” from the Web of Science (WoS) between 2001 and 2019 and categorize them by general BioNER keywords, i.e., gene/protein, drugs/chemicals, diseases, and anatomy/species. As a result, the counts of articles in each category were plotted chronologically. One can see that there is a steadily increasing amount of publications in general BioNER and a positive growth in nearly every sub-category since the early 2000s. By looking at Figure 2, one can predict that this trend will presumably continue into in the near future.

Figure 2. Publication trends in biomedical Named Entity Recognition. The numbers of the published articles were obtained from Web of Science (WoS). The legend shows different queries used for the search of WoS.

Accordingly, in the following sections, we discuss challenges in BioNER, the steps in a generic NER pipeline, feature extraction techniques, and modeling methods.

Developing a comprehensive system to capture named entities, requires defining the types on NEs, specific class guidelines for types of NEs, to resolve semantic issues such as metonymy and multi-class entities, and capturing valid boundaries of a NE (Marrero et al., 2013). However, for developing a BioNER system, there are a few more additional problems to overcome than those for general NER (Nayel et al., 2019). Most of these issues are domain-specific syntactic and semantic challenges, hence extending to feature extraction as well as system evaluation. In this section, we will address some of these problems.

Text preprocessing and feature extraction for BioNER requires the isolation of entities. However, as for any natural language, many articles contain ambiguities stemming from the equivocal use of synonyms, homonyms, multi-word/nested NEs, and other ambiguities in naming in biomedical domain (Nayel et al., 2019). For instance, the same entity names can be written differently in different articles, e.g., “Lymphocytic Leukemia” and “Lymphoblastic Leukemia” (synonyms/British and American spelling differences). Some names may share the same head noun in an article such as in “91 and 84 kDa proteins” (nested) corresponding to “91 kDa protein” and “84 kDa protein”, in which case the categorization needs to take the context into account. There are various ways for resolving these ambiguities, using different techniques, e.g., name normalization and noun head resolving (D'Souza and Ng, 2012; Li et al., 2017b).

In addition, there are two distinct semantic-related issues resulted from homonyms, metonymy, polysemy, and abbreviations usage. While most terms in the biomedical field have a specific meaning, there are still terms, e.g., for genes and proteins that can be used interchangeably, such as GLP1R that may refer to either the gene or protein. Such complications may need ontologies and UMLA concepts to help resolve the class of the entity (Jovanović and Bagheri, 2017). There are also those terms that have been used to describe a disease in layman's terms or drugs that have ambiguous brand names. For example, diseases like Alice in Wonderland syndrome, Laughing Death, Foreign Accent Syndrome and drug names such as Sonata, Yasmin, Lithium are easy culprits in confusing a bioNER system if there is no semantic analysis involved. For this reason, recent research work (e.g., Duque et al., 2018; Wang et al., 2018d; Pesaranghader et al., 2019; Zhang et al., 2019a) discussed techniques for word sense disambiguation in biomedical text mining.

Another critical issue is the excessive usage of abbreviations with ambiguous meanings, such as “CLD”, which could either refer to “Cholesterol-lowering Drug,” “Chronic Liver Disease,” “Congenital Lung Disease,” or “Chronic Lung Disease.” Given the differences in the meaning and BioNE class, it is crucial to identify the correct one. Despite being a subtask of word sense disambiguation, authors like (Schwartz and Hearst, 2002; Gaudan et al., 2005) have focused explicitly on abbreviation resolving due to its importance.

Whereas most of the above issues are a result of the lack of standard nomenclature in some biomedical domains, even the most standardized biological entity names can contain long chains of words, numbers and control characters (for example “2,4,4,6-Tetramethylcyclohexa-2,5-dien-1-one,” “epidemic transient diaphragmatic spasm”). Such long named-entities make the BioNER task complex, causing issues in defining boundaries for sequences of words referring to a biological entity. However, correct boundary definitions are essential in evaluation and training systems, especially in those where penalizing is required for missing to capture the complete entity (long NE capture) (Campos et al., 2012). One of the most commonly used solutions for multi-word capturing challenge is to use a multi-segment representation (SR) model to tag words in a text as combination of Inside, Outside, Beginning, Ending, Single, Rear or Front, using standards like IOB, IOBES, IOE, IOE, or FROBES (Keretna et al., 2015; Nayel et al., 2019).

In order to assess and compare NER systems using gold-standard corpora, it is required to use standardized evaluation scores. A frequently used error measures for evaluating NER is the F-Score, which is a combination of Precision and Recall (Mansouri et al., 2008; Emmert-Streib et al., 2019).

Precision, recall, and F-Score are defined as follows (Campos et al., 2012):

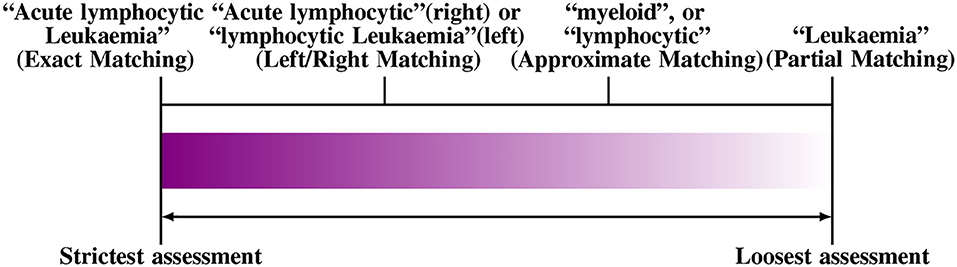

A problem with scoring a NER system in this way is it requires to define the degree of correctness of the tagged entities for calculating precision and recall. The degree of correctness, in turn, depends on the pre-defined boundaries of the captured phrases. To illustrate this, consider the following example phrase “Acute Lymphocytic leukemia.” If the system tags “lymphocytic leukemia”, but misses “Acute”, we need to decide if it is still a “true positive,” or not. The decision depends on the accuracy requirement of the BioNER; for a system that collects information on patients with Leukemia in general, it may be possible to accept the above tag as a “true positive.” In contrast, if we are looking for rapid progressing Leukemia types, it may be necessary to capture the whole term, including acute. Hence, the above would be considered “false positive.”

One possible solution is to relax the matching criteria to a certain degree, since an exact match criterion tends to reduce the performance of a BioNER system. The effects of such approaches have been evaluated, e.g., using left or right matching, partial or approximate matching, name fragment matching, co-term matching, and multiple-tagging matching. Furthermore, some approaches apply semantic relaxation such as “categorical relaxation,” which merges several entity types to reduce the ambiguity, e.g., by joining DNA, RNA, and protein categories or by combining cell lines and type entities into one class. In Figure 3, we show an example of the different ways to evaluate “Acute Lymphocytic leukemia.” For a thorough discussion of this topic, the reader is referred to Tsai et al. (2006).

Figure 3. An example for different matching criteria to evaluate Named Entity Recognition. From left to right the criteria become more relaxed (Tsai et al., 2006).

Until recently, there was also an evaluation-related problem stemming from the scarcity of comprehensively labeled data to test the systems (which also affected the training of the machine learning methods). This scarcity was a significant problem for BioNER until the mid-2000s, since human experts annotated most of the gold standard corpora, and thus were of small size and prone to annotator dependency (Leser and Hakenberg, 2005). However, with growing biological databases and as the technologies behind NER evolved, the availability of labeled data for training and testing have increased drastically in recent years. Presently, there is not only a considerable amount of labeled data sets available, but there are also problem-specific text corpora, and entity-specific databases and thesauri accessible to researchers.

The most frequently used general-purpose biomedical corpora for training and testing are GENETAG (Tanabe et al., 2005), and JNLPBA (Huang et al., 2019), various BioCreative corpora, GENIA (Kim et al., 2003) (which also includes several levels of linguistic/semantic features) and CRAFT (Bada et al., 2012). In Table 1, we show an overview of 10 text corpora often used for benchmarking a BioNER system.

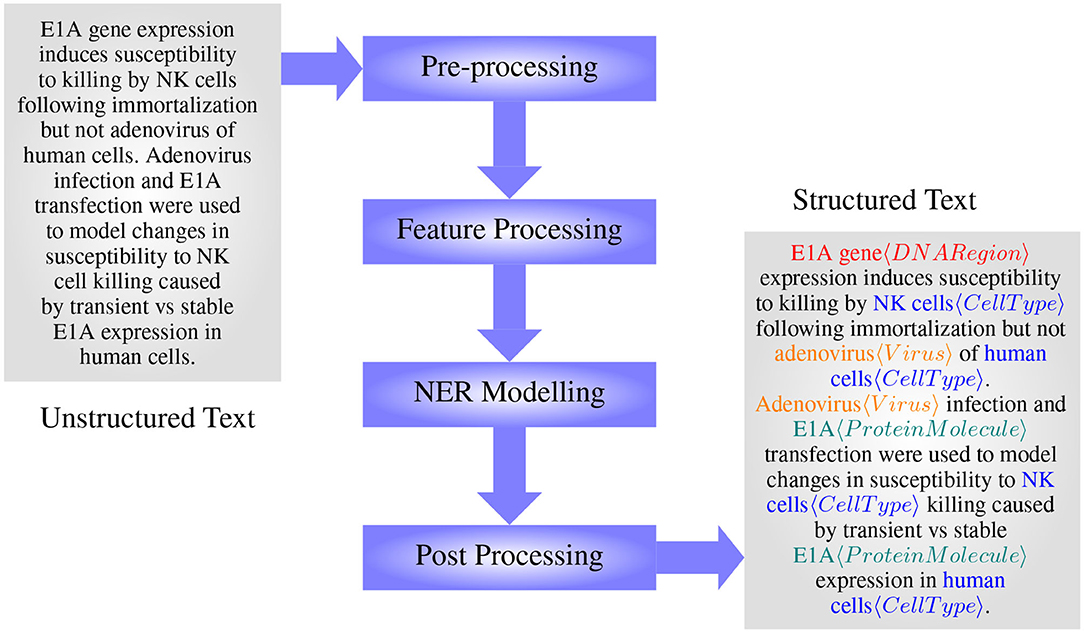

The main steps in BioNER include preprocessing, feature processing, model formulating/training, and post-processing, see Figure 4. In the preprocessing stage, data are cleaned, tokenized, and in some cases, normalized to reduce ambiguity at the feature processing step. Feature processing includes different methods that are used to extract features that will represent the classes in question the most, and then convert them into an appropriate representation as necessary to apply for modeling. Importantly, while dictionary and rule-based methods can take features in their textual format, machine learning methods require the tokens to be represented as real-valued numbers. Selected features are then used to train or develop models capable of capturing entities, which then may go through a post-processing step to increase the accuracy further.

Figure 4. The main steps in designing a BioNER system (with an example from manually annotated GENIA Corpus article MEDLINE:95343554— Routes and Cook, 1995).

While for general NLP tasks, preprocessing includes steps such as data cleaning, tokenization, stopping, stemming or lemmatization, sentence boundary detection, spelling, and case normalization (Miner et al., 2012), based on the application, the usage of these steps can vary. Preprocessing in BioNER, however, comprises of data cleaning, tokenization, name normalization, abbreviation, and head noun resolving measures to lessen complications in the features processing step. Some studies follow the TTL model (Tokenization, Tagging, and Lemmatization) suggested by Ion (2007) as a standard preprocessing framework for biomedical text mining applications (Mitrofan and Ion, 2017). In this approach, the main steps include sentence splitting and segmenting words into meaningful chunks (tokens), i.e., tokenization, part-of-speech (POS) tagging, and grouping tokens based on similar meanings, i.e., lemmatization using linguistic rules.

In systems that use rules and dictionaries, orthographic and morphological feature extraction focusing on word formations are the principle choice. Hence, they heavily depend on techniques based on word formation and language syntax. Examples of such include, regular expressions to identify the presence of words beginning with capital letters and entity-type specific characters, suffixes, and prefixes, counting the number of characters, and part-of-speech (POS) analysis to extract nouns/noun-phrases (Campos et al., 2012).

For using machine learning approaches, feature processing is mostly concerned with real-valued word representations (WR) since most machine learning methods require a real-valued input (Levy and Goldberg, 2014). While the simplest of these use bag-of-words or POS tags with term frequencies or a binary representation (one-hot encoding), the more advanced formulations also perform a dimensional reduction, e.g., using clustering-based or distributional representations (Turian et al., 2010).

However, the current state-of-the-art method for feature extraction in biomedical text mining is word embedding due to their sensitivity to even hidden semantic/syntactic details (Pennington et al., 2014). For word embedding, a real-valued vector representing a word is learned in an unsupervised or semi-supervised way from a text corpus. While the groundwork for word embedding was laid by Collobert and Weston (2008), Collobert et al. (2011), over the last few years, much progress has been made in neural network based text embedding taking into account the context, semantics and syntax for NLP applications (Wang et al., 2020). Below we discuss some of the most significant approaches for word representation and word embedding applicable to biomedical field.

The most commonly used rich text features in BioNER are Linguistic, Orthographic, Morphological, Contextual, and Lexicon (Campos et al., 2012), all of which are used extensively, when it comes to rule-based and dictionary-based NER modeling. Still, word representation methods may use selected rich text features like char n-grams and contextual information to improve the representation of feature space as well. For instance, char n-grams are used for training vector spaces to recognize rare words effectively in fastText (Joulin et al., 2016), and CBOW in word2vec model uses windowing to capture local features, i.e., the context of a selected token.

To further elaborate, linguistic features, generally focus on the grammatical syntax of a given text, by extracting information such as sentence structures or POS tagging. This allows us to obtain tags that are most probable to be a NE since most named entities occur as noun phrases in a text. The orthographic features, however, emphasize the word-formation, and as such, attempt to capture indicative characteristics of named entities. For example, the presence of uppercase letters, specific symbols, or the number of occurrences of a particular digit might suggest the presence of a named entity and, therefore, can be considered a feature-token. Comparatively, morphological features prioritize the common characteristics that can quickly identify a named entity, for instance, a suffix or prefix. It also uses char n-grams to predict subsequent characters, and regular expression to capture the essence of an entity. Contextual features use preceding and succeeding token characteristics of a word by windowing to enhance the representation of the word in question. Finally, Lexicon features provides additional domain specificity to named entities. For example, systems that maintain extensive dictionaries with tokens, synonyms, and trigger words that belong to each field are considered to use lexicon features in their feature extraction (Campos et al., 2012).

One-hot vector word representation: The one-hot-encoded vector is the most basic word embedding method. For a vocabulary of size N, each word is assigned a binary vector of length N, whereas all components are zero except one corresponding to the index of the word (Braud and Denis, 2015). Usually, this index is obtained from a ranking of all words, whereas the rank corresponds to the index. The biggest issue of this representation is the size of the word vector; since for a larger corpus, word vectors are very high-dimensional and very sparse. Besides, frequency and contextual information of each word are lost in this representation but can be vital in specific applications.

Cluster-based word representation: In clustering-based word representation, the basic idea is that each cluster of words should contain words with contextually similar information. An algorithm that is most frequently used for this approach is Brown clustering (Brown et al., 1992). Specifically, Brown clustering is a hierarchical agglomerative clustering which represents contextual relationships of words by a binary tree. Importantly, the structure of the binary tree is learned from word probabilities, and the clusters of words are obtained by maximizing their mutual information. The leaves of the binary tree represent the words, and paths from the root to each leaf can be used to encode each word as a binary vector. Furthermore, similar paths and similar parents/grandparents among words indicate a close semantic/syntactic relationship among words. This approach, while similar to a one-hot vector word representation, reduces the dimension of the representation vector, reduces its sparsity, and includes contextual information (Tang et al., 2014).

Distributional word representation: The distributional word representation uses co-occurrence matrices with statistical approximations to extract latent semantic information. The first step involves obtaining a co-occurrence matrix, F with dimensions V × C, whereas V is the vocabulary size and C the context, and each Fij gives the frequency of a word i ∈ V co-occurring with context j ∈ C. Hence, in this approach, it is necessary for the preprocessing to perform stop-word filtering since high frequencies of unrelated words can affect the results negatively. In the second step, a statistical approximation or unsupervised learning function g() is applied to the matrix F to reduce its dimensionality such that f = g(F), where the resulting f is a matrix of dimensions V × d with d ≪ C. The rows of this matrix represent the words in the vocabulary, and the columns give the counts of each word vector (Turian et al., 2010).

Some of the most common methods used include clustering (Turian et al., 2010), self-organizing semantic maps (Turian et al., 2010), Latent Dirichlet Allocation (LDA) (Turian et al., 2010), Latent Semantic Analysis (LSA) (Sahlgren, 2006), Random Indexing (Sahlgren, 2006), Hyperspace Analog to Language (HAL) (Sahlgren, 2006). The main disadvantage of these models is that they become computationally expensive for large data sets.

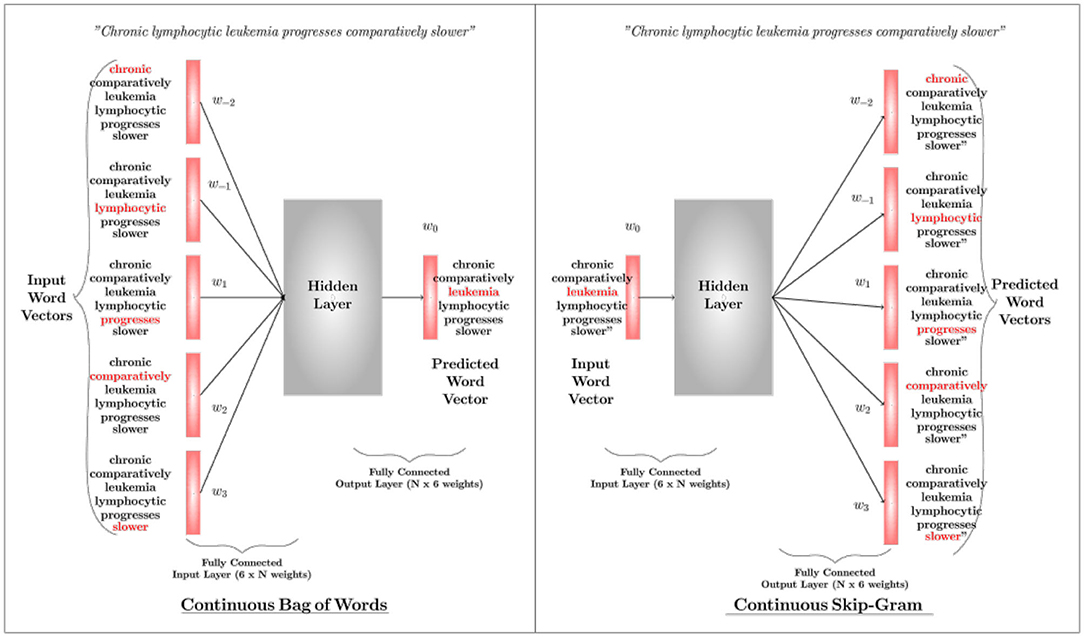

Word2Vec: Word2Vec is the state-of-the-art word representation model using a two-layer shallow neural network. It takes a textual corpus as the input, creates a vocabulary out of it, and produces a multidimensional vector representation for each word as output. The word vectors position themselves in the vector space, such that words with a common contextual meaning are closer to each other. There are two algorithms in the Word2Vec architecture, i.e., Continuous Bag-of-Words (CBoW) and Continuous Skip-Gram. Either can be used based on the application requirement. While the former predicts the current word by windowing its close contextual words in the space (with no consideration to the order of those words), the latter uses the current word to predict the words that surround it. The network ultimately outputs either a vector that represents a word (in CBoW) or a vector that represents a set of words (in skip-gram). Figure 5 illustrates the basic mechanisms of the two architectures of word2vec; CBOW and Skip-Gram. Details about these algorithms can be found in Mikolov et al. (2013a,b) (parameter learning of the Word2Vec is explained in Rong, 2014).

Figure 5. An overview of the Continuous Bag-of-Words Algorithm and the Skip-Gram Algorithm. A CBOW predicts the current word based on surrounding words, whereas Skip-Gram predicts surrounding words based on the current word. Here w(t) represents a word sequence.

GloVe: GloVe (Global Vectors) is another word representation method. Its name emphasizes that global corpus-wide statistics are captured by the method, as opposed to word2vec, where local statistics of words are assessed (Pennington et al., 2014).

GloVe uses an unsupervised learning algorithm to derive vector representations for words. The contextual distance among words creates a linear sub-structural pattern in the vector space, as defined by logarithmic probability. The method bases itself on how word-word co-occurrence probabilities evaluated on a given corpus, can interpret the semantic dependence between the words. As such, training uses log-bi-linear modeling with a weighted least-square error objective, where GloVe learns word vectors so that the logarithmic probability of word-word co-occurrence equals the dot product of the words. For example, if we consider two words i and j, a simplified version of an equation for GloVe is given by

Here is the word vector for word i, is the contextual word vector, which we use to build the word-word co-occurrence. is the probability of co-occurrence between the words i and j and Xij and Xi are the counts of occurrence of word i with j and occurrence of word i alone in the corpus. An in-depth description of GloVe can be found in Pennington et al. (2014).

fastText: fastText, introduced by researchers at Facebook, is an extension of Word2Vec. Instead of directly learning the vector representation of a word, it first learns the word as a representation of N-gram characters. For example, if we are embedding the word collagen using a 3-gram character representation, the representation would be < co, col, oll, lla, lag, age, gen, en>, whereas < and >, indicate the boundaries of the word. These n-grams are then used to train a model to learn word-embedding using the skip-gram method with a sliding window over the word. FastText is very effective in representing suffixes/prefixes, the meanings of short words, and the embedding of rare words, even when those are not present in a training corpus since the training uses characters rather than words (Joulin et al., 2016). This embedding method has also been applied to the biomedical domain due to its ability to generalize over morphological features of biomedical terminology (Pylieva et al., 2018) and detecting biomedical event triggers using fastText semantic space (Wang et al., 2018b).

BERT/BioBERT: Bidirectional Encoder Representations for Transformers (BERT) (Devlin et al., 2018), is a more recent approach of text embedding that has been successfully applied to several biomedical text mining tasks (Peng et al., 2019). BERT uses the transformer learning model to learn contextual token embeddings of a given sentence bidirectionally (from both left and right and averaged over a sentence). This is done by using encoders and decoders of the transformer model in combination with Masked Language Modeling to train the network to predict the original text. In the original work targeted for general purpose NLP, BERT was pre-trained with unlabeled data from standard English corpora, and then fine-tuned with task-specific labeled data.

For domain-specific versions of BioBERT (Peng et al., 2019; Lee et al., 2020), one uses the pre-trained BERT model, and by using its learned weights as initial weights, pre-trains the BERT model again with PubMed abstracts and PubMed Central full-text articles. Thereafter, the models are fine-tuned using benchmark corpora, e.g., mentioned in Tables 1, 3. The authors of BioBERT states that for the benchmark corpora, the system achieves state-of-the-art (or near) precision, recall, and F1 scores in NER and RE tasks.

We would like to highlight that a key difference between BERT, ELMo, or GPT-2 (Peters et al., 2018; Radford et al., 2019) and word2vec or GloVec is that the latter perform a context-independent word embedding whereas the former ones are context-dependent. The difference is that context-independent methods provide only one word vector in an unconditional way but context-dependent methods result in a context-specific word embedding providing more than one word vector representation for one word.

Modeling methods in BioNER can be divided into four categories: Rule-based, Dictionary-based, Machine Learning based, and Hybrid models (Eltyeb and Salim, 2014). However, in recent years, the focus shifted to either pure machine learning approaches or hybrid techniques combining rules and dictionaries with machine learning methods.

While supervised learning methods heavily dominate machine learning approaches in the literature, some semi-supervised and even unsupervised learning approaches are also used. Examples of such work will be discussed briefly later in the section below. The earliest approaches for BioNER focused on Support Vector Machines (SVM), Hidden Markov Models (HMM), and Decision Trees. However, currently, most NER research utilizes deep learning with sequential data and Conditional Random Fields (CRF).

Rule-based approaches, unlike decision trees or statistical methods, use handcrafted rules to capture named-entities and classify them based on their orthographic and morphological features. For instance, it is conventional in the English language to start proper names, i.e., named-entities, with a capital letter. Hence entities with features like upper-case letters, symbols, digits, suffixes, prefixes can be captured, for example, using regex expressions. Additionally, part-of-speech taggers can be used to fragment sentences and capture noun phrases. It is common practice, in this case, to include the complete token as an entity, if at least one part of the token identifies as a named-entity.

An example of the earliest rule-based BioNER system is PASTA (Protein Active Site Template Acquisition, Gaizauskas et al., 2003), in which entity tagging was performed by heuristically defining 12 classes of technical terms, including scope guidelines. Each document is first analyzed for sections with technical text, split into tokens, analyzed for semantic and syntactic features, before extracting morphological and lexical features. The system then uses handcrafted rules to tag and classify terms into 12 categories of technical terms. The terms are tagged with respective classes using the SGML (Standard Generalized Markup Language) format. Recently, however, there is not much literature on pure handcrafted rule-based BioNER systems, and instead, papers such as Wei et al. (2012) and Eftimov et al. (2017) present how combining heuristic rules with dictionaries may result in higher state-of-the-art f-scores. The two techniques complement each other by rules compensating for exact dictionary matches, and dictionaries refining results extracted through rules.

The main drawbacks of rule-based systems are the time-consuming processes involved with handcrafting rules to cover all possible patterns of interest and the ineffectiveness of such rules toward unseen terms. However, in an instance where an entity class is well-defined, it is possible to formulate thorough rule-based systems that can achieve both high precision and recall. For example, most species entity tagging systems rely on binomial nomenclature (two-term naming system of species), which provides clearly defined entity boundaries, qualifying as an ideal candidate for a rule-based NER system.

Dictionary-based methods use large databases of named-entities and possibly trigger terms of different categories as a reference to locate and tag entities in a given text. While scanning texts for exactly matching terms included in the dictionaries is a straightforward and precise way of named entity recognition, recall of these systems tends to be lower. Such is the result of increasingly expanding biomedical jargon, their synonyms, spelling, and word order differences. Some systems have been using an inexact or fuzzy matching, by automatically generating extended dictionaries to account for spelling variations and partial matches.

One prominent example of a dictionary-based BioNER model is in the association mining tool Polysearch (Cheng et al., 2008), where the system keeps several comprehensive dictionary thesauri, to make tagging and normalization of entities rather trivial. Another example is Whatizit (Rebholz-Schuhmann, 2013), a class-specific text annotator tool available online, with separate modules for different NE types. This BioNER is built using controlled vocabularies (CV) extracted from standard online databases. For instance, WhatizitChemical uses a CV from ChEBI and OSCAR3, WhatizitDisease uses disease terms CV extracted from MedlinePlus, whatizitDrugs uses a CV extracted from DrugBank, WhatizitGO uses gene ontology terms and whatizitOrganism uses a CV extracted from the NCBI taxonomy. The tool also includes options to extract terms using UniProt databases when using a combined pipeline to tag entities. LINNAEUS, Gerner et al. (2010) is also a dictionary-based NER package designed explicitly to recognize and normalize species name entities in text and includes regex heuristics to resolve any ambiguities. The system has a significant recall of 94% at the mention-level and 98% at the document level, despite being dictionary-based.

More latest state-of-the-art tools have shown preference in using dictionary-based hybrid NER as well, attributing to its high accuracy of performance with previously known data. Moreover, since it involves exact/inexact matching, the main requirement for high accuracy is only a thoroughly composed dictionary of all possible related jargon.

Currently, the most frequently used methods for named entity recognition are machine learning approaches. While some studies focus on purely machine learning-based models, others utilize hybrid systems that combine machine learning with rule-based or dictionary-based approaches. Overall these present state-of-the-art methods.

In this section, we discuss three principal machine learning methodologies utilizing supervised, semi-supervised, and unsupervised learning. These also include Deep Neural Networks (DNN) and Conditional Random Fields (CRF), because newer studies focused on using LSTM/Bi-LSTM coupled with Conditional Random Fields (CRF). Furthermore, in section 2.2.3.4, we will discuss hybrid approaches.

Supervised methods: The first supervised machine learning methods used were Support Vector Machines (Kazama et al., 2002), Hidden Markov models (Shen et al., 2003), Decision trees, and Naive Bayesian methods (Nobata et al., 1999). However, the milestone publication by Lafferty et al. (2001) about Conditional Random Fields (CRF) taking the probability of contextual dependency of words into account shifted the focus away from independence assumptions made in Bayesian inference and directed graphical models.

CRFs are a special case of conditionally-trained finite-state machines, in which the final result is a statistical-graphical model that performs well with sequential data, therefore making it ideal for language modeling tasks such as NER (Settles, 2004). In Lafferty et al. (2001), the authors stated that given a text sequence X = {x1, x2, ..., xn} and its corresponding state label S = {s1, s2, ...., sn}, the conditional probability of state S for given X can be expressed as:

Here, si can be an entity class label (l ∈ L) for each text xi (such as a gene or protein), fj(si−1, si, x, i) is the feature function and λj is the weight vector of fj. Ideally, the learned λj for fj must be positive for features that correlate to a target label, negative for anti-correlation and zero for irrelevant features. Overall, the learning process for a given training set D = {〈x, l〉1, 〈x, l〉2, ....., 〈x, l〉n} can be expressed as a log likelihood maximization problem given by:

Modified Viterbi algorithm assigns respective labels for the new data, after the training process (Lafferty et al., 2001).

Deep learning: In the last 5 years, there is a shift in the literature toward general deep neural network models (LeCun et al., 2015; Emmert-Streib et al., 2020). For instance, feed-forward neural networks (FFNN) (Furrer et al., 2019), recurrent neural networks (RNN), or convolution neural networks (CNN) (Zhu et al., 2017) have been used for BioNER systems. Among these, frequent variations of RNNs are, e.g., Elman-type, Jordan-type, unidirectional, or bidirectional models (Li et al., 2015c).

The Neural Network (NN) language models are essential since they excel at dimension reduction of word representations and thus help improve performances in NLP applications immensely (Jing et al., 2019). Consequently, Bengio et al. (2003) introduced the earliest NN language model as a feed-forward neural network architecture focusing on “fighting the curse of dimensionality.” This FFNN that first learns a distributed continuous space of word vectors is also the inspiration behind CBOW and Skip-gram models of feature space modeling. The generated distributed word vectors are then fed into a neural network, that estimates the conditional probability of each word occurring in context to the others. However, this model has several drawbacks, first being that it is limited to pre-specifiable contextual information. Secondly, it is not possible to use timing and sequential information in FFNNs, which would facilitate language to be represented in its natural state, as a sequence of words instead of probable word space (Jing et al., 2019).

In contrast, convolutional neural networks (CNN) are used in literature as a way of extracting contextual information from embedded word and character spaces. In Kim et al. (2016), such a CNN has been applied to a general English language model. In this setup, each word is represented as character embeddings and fed into a CNN network. Then the CNN filters the embeddings and creates a feature vector to represent the word. Extending this approach to Biomedical text processing, Zhu et al. (2017), generates embeddings for characters, words, and POS tagging, which are then combined to represent words and fed to a CNN level with several filters. The CNN outputs a vector representing the local feature of each term, which can then be tagged by a CRF layer.

To facilitate language to be represented as a collection of sequential tokens, researchers have later started exploring recurrent neural networks for language modeling. Elman-type and Jordan-type networks are such simple recurrent neural networks, where contextual information is fed into the system as weights either in the hidden layers in the former type or the output layer in the latter-type. The main issue with these simple RNNs is that they face the problem of vanishing gradient, which makes it difficult for the network to retain temporal information long-term, as benefited by in a recurrent language model.

Long Short-Term Memory (LSTM) neural networks compensate for both of the weaknesses mentioned in previous DNN models and hence are most commonly used for language modeling. LSTMs can learn long-term dependencies through a special unit called a memory cell, which not only can retain information long time but has gates to control which input, output, and data in the memory to preserve and which to forget. Extensions of this are bi-directional LSTMs, where instead of only learning based on past data, as in unidirectional LSTM, learning is based on past and future information, allowing more freedom to build a contextual language model (Li et al., 2016b).

For achieving the best results, Bi-LSTM and CRFs models are combined with a word-level and character-level embedding in a structure, as illustrated in Figure 6 (Habibi et al., 2017; Wang et al., 2018a; Giorgi and Bader, 2019; Ling et al., 2019; Weber et al., 2019; Yoon et al., 2019). Here a pre-trained look-up table produces word embeddings, and a separate Bi-LSTM for each word sequence renders a character-level embedding, both of which are then combined to acquire x1, x2, ...., xn as word representation (Habibi et al., 2017). These vectors then become the input to a bi-directional LSTM, and the output of both forward and backward paths, hb, hf, are then combined through an activation function and inserted into a CRF layer. This layer is ordinarily configured to predict the class of each word using an IBO-format (Inside-Beginning-Outside).

If we consider the hidden layer hn in Figure 6, first, the embedding layer embeds the word gene into a vector Xn. Next, this vector is simultaneously used as input for the forward LSTM and the backward LSTM , of which the former depends on the past value hn−1 and the latter on the future value hn+1. The combined output resulting from the backward and the forward LSTMs is then passed through an activation function (tanh) that results in the output Yn. The CRF layer on the top uses Yn and tags it as either I-inside, B-Beginning, or O-Outside of a NE (named entity). Consequently, in this example, Yn is tagged as I-gene, i.e., a word inside of the named entity of a gene.

Semi-supervised methods: Semi-supervised learning is usually used when a small amount of labeled data and a larger amount of unlabeled data are available, which is often the case when it comes to Biomedical collections. If labeled data is expressed as X(x1, x2, ...., xn)−>L(l1, l2, ..., ln) where X is the set of data and L is the set of labels, the task is to develop a model that accurately maps Y(y1, y2, ..., ym)−>L(l1, l2, ..., lm) where m>n and Y is the set of unlabeled data that needs mapping to labels.

Whereas literature using a semi-supervised approach is lesser in BioNER, Munkhdalai et al. (2015) describes how domain knowledge has been incorporated into chemical and biomedical NER using semi-supervised learning by extending the existing BioNER system BANNER. The pipeline runs the labeled and unlabeled data in two parallel lines wherein one line labeled data is processed through NLP techniques to extract rich features such as word and character n-grams, lemma, and orthographic information as in BANNER. In the second line, the unlabeled data corpus is cleaned, tokenized, and run through brown hierarchical clustering and word2vec algorithms to extract word representation vectors, and clustered using k-means. All of the extracted features from labeled and unlabeled data are then used to train a BioNER model using conditional random fields. The authors of this system emphasize that the system does not use lexical features or dictionaries. Interestingly, BANNER-CHEMDNER has shown an 85.68% and an 86.47% F-score on the testing sets of CHEMDNER Chemical Entity Mention (CEM) and Chemical Document Indexing (CDI) sub-tasks and shown a remarkable 87.04% F-score in the test set of the BioCreative II gene-mention task.

Unsupervised methods: While unsupervised machine learning has potent in organizing new high throughput data without previous processing and improving the ability of the existing system to process previously unseen information, it is not very often the first choice for developing BioNER systems. However, Zhang and Elhadad (2013) introduced a system, which uses an unsupervised approach to BioNER with the concepts of seed knowledge and signature similarities between entities.

First, for the seed concepts, semantic types and semantic groups are collected from UMLS (Unified Medical Language System) for each entity type, e.g., protein, DNA, RNA, Cell type, and cell line, to represent the domain knowledge. Second, the candidate corpora are processed using a noun phrase chunker and an inverse document frequency filter, which formulates word sense disambiguation vectors for a given named entity using a clustering approach. The next step generates the signature vectors for each entity class with the intuition that the same class tends to have contextually similar words. The final step compares the candidate named entity signatures and entity class signatures by calculating similarities. As a result, they found the highest F-score of 67.2 for proteins and the lowest at 19.9 for cell-line. Sabbir et al. (2017) used a similar approach, where they implement a word sense disambiguation with an existing knowledge base of concepts extracted through UMLS to develop an unsupervised BioNER model with over 90% accuracy. These unsupervised methods tend to work well when dealing with ambiguous Biomedical entities.

Currently, there are several state-of-the-art applications of BioNER, that combine the best aspects of all the above three methods. Most of these methods combine machine learning with either dictionaries or sets of rules (heuristic/derived), but other approaches exist which combine dictionaries and rule sets as well. Since machine learning approaches have shown to result in better recall values, whereas both dictionary-based and rule-based approaches tend to have better precision values, the former method shows improved F-scores.

For instance, OrganismTagger (Naderi et al., 2011) uses binomial nomenclature rules of naming species to tag organism names in text and combines this with an SVM to assure that it captures organism names that do not follow the binomial rules. In contrast, SR4GN (Wei et al., 2012), which is also a species tagger, utilizes rules to capture species names and a dictionary lookup to reevaluate the accuracy of the tagged entities.

Furthermore, state of the art tools such as Gimli (Campos et al., 2013), Chemspot (Rocktäschel et al., 2012), and DNorm (Leaman et al., 2013) use Conditional Random fields with a thesaurus of own field-specific taxonomy to improve recall. In contrast, OGER++ (Furrer et al., 2019), which performs multi-class BioNER, utilizes a feed-forward neural network structure followed by a dictionary lookup to improve precision.

On the other hand, some systems have been able to combine statistical machine-learning approaches with rule-based models to achieve higher results, as described in this more recent work (Soomro et al., 2017). This study uses the probability analysis of orthographic, POS, n-gram, affixes, and contextual features with Bayesian, Naive-Bayesian, and partial decision tree models to formulate rules of classification.

While not all systems require or use post-processing, it can improve the quality and accuracy of the output by resolving abbreviation ambiguities, disambiguation of classes and terms, as well as parenthesis mismatching instances (Bhasuran et al., 2016). For example, if a certain BioNE is only tagged in one place of the text, yet the same or a co-referring term exist elsewhere in the text, untagged, then the post-processing would make sure these missed NEs are tagged with their respective class. Also, in the case of a partial entity being tagged in a multi-word BioNE, this step would enable the complete NE to be annotated. In the case where some of the abbreviations are wrongly classified or failed to be tagged, some systems use tools such as the BioC abbreviation resolver (Intxaurrondo et al., 2017) at this step to improve the annotation of abbreviated NEs. Furthermore, failure to tag NE also stems from unbalanced parenthesis in isolated entities, which also can be addressed during pre-processing. Interestingly, Wei et al. (2016) describes using a complete rule-based BioNER model for post-processing in disease mention tagging to improve the F-score.

Another important sub-task that is essential at this point, is to resolve coreferences. This may be also important for extracting stronger associations between entities, discussed in the next section. Coreferences are those terms that refer to a named entity without using its proper name, but by using some form of anaphora, cataphora, split-reference or compound noun-phrase (Sukthanker et al., 2020). For example in the sentence “BRCA1 and BRCA2 are proteins expressed in breast tissue where they are responsible for either restoring or, if irreparable, destroying damaged DNA,” the anaphora they refers to the proteins BRCA1 and BRCA2, and resolving this helps to associate the proteins with their purpose. When it comes to biomedical coreference resolution, it is important to note that generalized methods may not be very effective, given that there are fewer usages of common personal pronouns. Some approaches that have been used in the biomedical text mining literature are heuristic rule sets, statistical approaches and machine learning-based methods. Most of the earlier systems commonly used mention-pair based binary classification and rule-sets to filter coreferences such that only domain significant ones are tagged Zheng et al. (2011a). While the rule set methods have provided state-of-the-art precision they often do not have a high recall. Hence, a sieve-based architecture Bell et al. (2016) has been introduced, which arranges rules starting from high-precision-low-recall to low-precision-high-recall. Recently, deep learning methods have been used for coreference resolution in general domain successfully without using syntactic parsers, for example in Lee et al. (2017). The same system has been applied to biomedical coreference resolution in Trieu et al. (2018) with some domain-specific feature enhancements. Here, it is worth mentioning that the CRAFT corpus, earlier mentioned in Table 1, has an improved version that can be used for coreference resolution for biomedical texts (Cohen et al., 2017).

In the biomedical literature coreference resolution is sometimes conducted (e.g., Zheng et al., 2011b, 2012; Uzuner et al., 2012), but in general underrepresented. A reason for this could be that biomedical articles are differently written in the sense that, e.g., protagonistic gene or protein names are more clearly used and referred to due to their exposed role. However, if this is indeed the reason or if there is an omission in the biomedical NER pipeline requires further investigations.

After BioNER, the identification of associations between the named entities follows. For establishing such associations, the majority of studies use one of the following techniques (Yang et al., 2011): Co-occurrence based approaches, rule-set based approaches, or machine learning-based approaches.

The simplest of these methods, co-occurrence based approaches, consider entities to be associated if they occur together in target sentences. The hypothesis is that the more frequent two entities occur together, the higher the probability that they are associated with each other. In an extension of this approach, a relationship is deemed to exist between two (or more) entities if they share an association with a third entity acting as a reciprocal link (Percha et al., 2012).

In a rule-based approach, the relationship extraction depends highly on the syntactic and semantic analysis of sentences. As such, these methods rely on part-of-speech (POS) tagging tools to identify associations, e.g., by scanning for verbs and prepositions that correlate two or more nouns or phrases serving as named entities. For instance, in Fundel et al. (2006), the authors explain how syntactic parse trees can be used to break sentences into the form NounPhase1−AssociationVerb−NounPhrase2, where the noun phrases are biomedical entities associated through an association verb, and therefore indicates a relationship. In this approach, many systems additionally incorporate a list of verbs that are considered to show implications between nouns, i.e., for example, verbs such as elevates, catalyzes, influences, mutates.

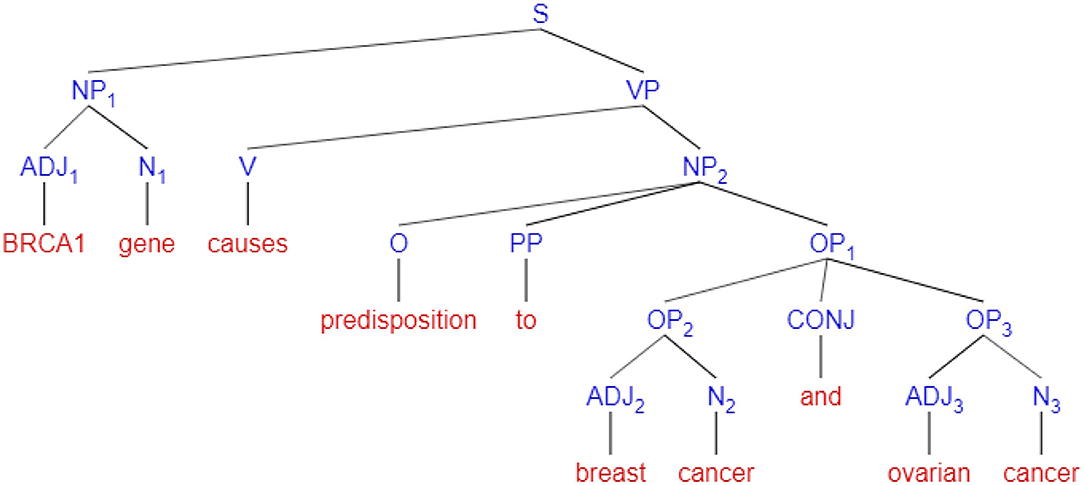

In Figure 7, an example of a syntactic sentence parse tree created by POS tagging, is shown. In this figure, nodes signify syntax abbreviations, i.e., S = sentence, NP = Noun Phrase, VP = Verb Phrase, PP = Preposition Phrase, OP = Object of Preposition, CONJ = conjunction ADJ = Adjective, N = Noun, V = Verb, and O = Object. The method first fragments a sentence into noun phrases and verb phrases, and each of these phrases is further segmented to adjectives, nouns, prepositions, and conjunctions for clarity of analysis. More details of the strength of associations will include in section 4.2

Figure 7. An example of a syntax parse tree for the sentence “BRCA1 gene causes predisposition to breast cancer and ovarian cancer”.

The most commonly used machine learning approaches use an annotated corpus with pre-identified relations as training data to learn a model (supervised learning). Previously, the biggest obstacle for using such machine learning approaches for relation detection was acquiring the labeled training and testing data. However, data sets generated through biomedical text mining competitions such as BioCreative and BioNLP have moderated this problem significantly. Specifically, in Table 2, we list a few of the main gold-standard corpora available in the literature for this task.

Historically, SVMs have been the first choice for this task due to their excellent performance in text data classification with a low tendency for overfitting. Furthermore, they have also proven to be good with sentence polarity analyzing for extracting positive, negative, and neutral relationships as described by Yang et al. (2011). Of course, in SVM based approaches, feature selection acts as the strength-indicator for accuracy and, therefore, is considered a crucial step in relationship mining using this approach.

One of the earliest studies using an SVM was (Özgür et al., 2008). This study used a combination of methods for evaluating an appropriate kernel function for predicting gene-disease associations. Specifically, the kernel function used a similarity measure incorporating a normalized edit-distances between the paths of two genes, as extracted from a dependency parse tree. In contrast to this, the study by Yang et al. (2011) used a similar SVM model, however, for identifying the polarity of food-disease associations. For this reason, their SVM was trained with positive, negative, neutral, and irrelevant relations, which allowed assigning the polarity in the form of “risk.” For instance, particular food can either increase risk, reduce risk, be neutral, or be irrelevant for a disease. Recently, Bhasuran and Natarajan (2018) extended the study by Özgür et al. (2008) using an ensemble of SVMs trained with small samples of stratified and bootstrapped data. This method also included a word2vec representation in combination with rich semantic and syntactic features. As a result, they improved F-scores for identifying disease-gene associations.

Although SVMs appear to take predominance in this task, other machine learning methods have been used as well. For instance, in Jensen et al. (2014), a Naive-Bayes classifier has been used for identifying food-phytochemical and food-disease associations based on TF-IDF (term frequency-inverse document frequency) features. Whereas, in Quan and Ren (2014), a Max-entropy based classifier with Latent Dirichlet Allocation (LDA) was used for inferring gene-disease associations, and in Bundschus et al. (2008) a CRF was used for both NER and relation detection, for identifying disease-treatment and gene-disease associations.

Due to the state of the art performance and less need for complicated feature processing, deep learning (DL) methods are becoming increasingly popular for relation extraction in the last five years. The most commonly used DL approaches include convolutional neural networks (CNNs), recurrent neural networks (RNNs), and hybrids of CNN and RNN (Jettakul et al., 2019; Zhang et al., 2019b), most of which are also able to classify relation-type as well.

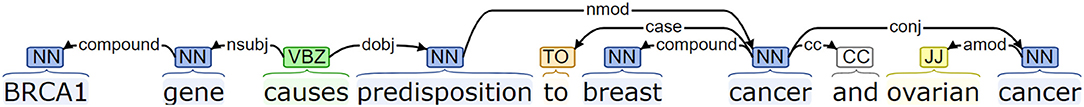

The feature inputs to DL models may include sentence-level, word-level, and lexical-level features represented as vectors (Zeng et al., 2014), positions of the related entities, and the class label of the relation type. The vectors are looked up from pre-trained word and positional vector space on either a single corpus or multiple corpora (Quan et al., 2016). Significantly, the majority of deep learning methods use sentence dependency graphs mentioned in the rule-based approach (Figure 8) to extract the shortest path between entities and relations as features for training (Hua and Quan, 2016a,b; Zhang et al., 2018c; Li et al., 2019). Other studies have used POS tagging, and chunk tagging features in combination with position and dependency paths to improve performance (Peng and Lu, 2017). The models are trained to either distinguish between sentences with relations or to output the type of relation.

Figure 8. Dependency graph for the sentence “BRCA1 gene causes predisposition to breast cancer and ovarian cancer” generated using Standford coreNLP parser (Manning et al., 2014) (nsubj-nominal subject, dobj-direct object, nmod-nominal modifier, amod-adjectival modifier, conj-conjunction, CC-coordinating conjunction, JJ-adjective, NN-noun).

The earliest approaches use Convolutional Neural Networks (CNN), where the extracted features e.g., dependency paths/sentences, are represented using the word vector space. Since CNN's require every training example to be of similar size, instances are padded with zeros as required (Liu et al., 2016). After several layers of convolutional operations and pooling, these methods are followed by a fully connected feed-forward neural layer with soft-max activation function (Hua and Quan, 2016b).

Subsequently, LSTM networks, including bi-LSTM, have been used in Sahu and Anand (2018) and Wang et al. (2018e), to learn latent features of sentences. These RNN based models perform well with relating entities that lie far apart from each other in sentences. Whereas, CNNs requires restrictive sized inputs, the RNNs have no such restrains and are useful when long sentences are available, since the input is sequentially processed. These models have been used to extract drug-drug and protein-protein interactions (Hsieh et al., 2017). Extending this further, Zhang et al. (2018c) experiments with bidirectional RNN models using two hierarchical layers, one with two simple RNNs, one with two GRUs, and last with two LSTMs. Here the hierarchical bi-LSTM has shown a better performance.

In recent years, there have also been studies that use a novel approach, i.e., graph convolutional networks (GCN) (Kipf and Welling, 2016) for relation extraction using dependency graphs (Zhang et al., 2018b; Zhao et al., 2019). Graph convolutional networks use the same concept of CNN, but with the advantage of using graphs as inputs and outputs. By using dependency paths to represent text as graphs, GCNs can be applied to relation extraction tasks. In Zhao et al. (2019), the authors use a hybrid model that combines GCNs preceded by bidirectional gated recurrent units (bi-GRU) layer to achieve significant F-measures. Furthermore, for identifying drug-drug interactions, a syntax convolutional neural network has been evaluated for the DDIExtraction 2013 corpus (Herrero-Zazo et al., 2013) and found to outperform other methods (Zhao et al., 2016). Conceptually similar approaches have been used in Suárez-Paniagua et al. (2019); Wei et al. (2019).

In extension, Zheng et al. (2018) uses a hierarchical hybrid model that resembles a reverse CRNN (convolutional recurrent neural network), where a CNN and a soft-max layer follow two bi-LSTM layers. The method has been used to extract chemical-disease relations, and have been trained and evaluated on CDR corpus (Li et al., 2016a). Whereas, authors of Zhang et al. (2018a) uses two CNNs and a bi-LSTM simultaneously to learn from word/relation dependency and sentence sequences, to extract disease-disease and protein-protein relations. These hybrid methods aim to combine the CNN's efficiency in learning local lexical and syntactic features (short sentences) with RNN's ability to learn dependency features over long and complicated sequences of words (long sentences). Both of the above models have been found to perform well with their respective corpora.

Graph-based representation preserves the sentence structure by converting the text directly into a graph, where biomedical named entities are vertices and other syntactic/semantic structures connecting them are edges. While complex sentence structures may lead to nested relations, this method facilitates identifying common syntactic patterns indicating significant associations (Luo et al., 2016).

Once the named entities are tagged, the next steps involve splitting sentences, annotating them with POS, and processing other feature extractions as required. Graph extraction is usually performed at this point as a part of the feature extracting process. Once the graphs including concepts and their syntactic/semantic relations are mined, these can be used as kernels, training data for deep learning approaches, or for generating rule sets with the help of graph search algorithms (Kilicoglu and Bergler, 2009; Ravikumar et al., 2012; Panyam et al., 2018a; Björne and Salakoski, 2018). For example, in Liu et al. (2013), approximate subgraph matching has been used to extract biomolecular relations from key contextual dependencies and input sentence graphs. A similar approach has been used in MacKinlay et al. (2013). The paper by Luo et al. (2016) provides a good review including a wide array of examples for which graph-based approaches are used in biomedical text mining.

Also, the combination of machine learning and graph-based approaches have been studied with great success. For instance, in Kim et al. (2015), a linear graph kernel based on dependency graphs for sentences has been used in combination with an SVM to detect drug-drug interactions. In order to enrich the information captured by kernels, Peng et al. (2015) uses an extended dependency graph that has also been defined to include information beyond syntax. Furthermore, in Panyam et al. (2018b), chemical-induced disease relations have been studied by comparing tree kernels (subset-tree kernel and partial-tree kernel) and graph kernels (all-path-graph and approximate-subgraph-matching). As a result, they found that the all-path-graph kernel performs significantly better in this task.

In this section, we discuss methods that do not fit in either of the above categories but provide interesting approaches. In Zhou and Fu (2018), an extended variant of the frequency approach is studied, which combines co-occurrence frequency and Inverse Document Frequency (IDF) for relations extraction. The study sets the first precedence to entity co-occurrence in MeSH terms and second to those in the article title, and third to the ones in the article abstract by assigning weights to each precedence level. A vector representation for each document sample is created using these weights for calculating the score of each key-term-association by multiplying IDF with PWK (penalty weight for the keyword, depending on the distance from MeSH root). Next, by comparing with the dictionary entries for relevance, each gene and disease is converted into vectors (Vg, Vd), and the strength of a relation is calculated through the cosine similarity given by . The authors then evaluate the system by comparing precision, recall, and cosine similarity.

In contrast, the study by Percha and Altman (2015) introduces an entirely novel algorithm to mine relations between entities called Ensemble Clustering for Classification (EBC). This algorithm extract drug-gene associations by combining an unsupervised learning step and a lightly supervised step that uses a small seed data set. In the unsupervised step, all co-occurrences of gene-drugs pairs (n) and all dependency path between the pairs (m) are mined to create a matrix of n × m which is then clustered using Information-Theoretic Co-Clustering. The supervised step follows by comparing how often the seed set pairs and test set pairs co-cluster together using a scoring function, and relationships are ranked accordingly. The same authors have extended this method further in Percha and Altman (2018), by applying hierarchical clustering after EBC to extract four types of association between gene-gene, chemical-gene, gene-disease, and chemical-disease. Incidentally, this hierarchical step has enabled additional classification of these relationships into themes such as ten different types of chemical-gene relations or seven distinct types of chemical-disease associations.

A further refinement following a relation detection is an analysis of the polarity and the strength of the identified associations, providing additional information about the relations and, hence, enhances extracted domain-specific knowledge.

A polarity analysis of relations is similar to a sentiment analysis (Swaminathan et al., 2010; Denecke and Deng, 2015). For inferring the polarity of relations, similar machine learning approaches can be used, as discussed in section 3.3. However, a crucial difference is that for the supervised methods, appropriate training data need to be available, providing information about the different polarity classes. For instance, one could have three polarity classes, namely, positive associations (e.g., decreases risk, promotes health), neutral associations (e.g., does not influence, causes no change), and negative associations (e.g., increases risk, mutates cell). In general, a polarity analysis opens new ways to study research questions of how entities interact with each other in a network. For example, the influence of a given food metabolite on certain diseases can be identified, which may open new courses of food-based treatment regiments (Miao et al., 2012a,b).

A strength analysis comes after identifying associations between entities in a text since all extracted events might not be considered significant associations. Especially in simple co-occurrences based method to identify relationships, strength analysis can be vital, since just a simple mention of two entities in a sentence with no explicit reciprocity, may result in them wrongly defined as associations. Some of the most common methods employed in the literature include distance analysis and dependency path analysis, or an extension of those methods.

An example of a method that implements a word distance analysis is Polysearch (Liu et al., 2015). Polysearch is essentially a biomedical web crawler focusing on entity associations. This tool first estimates co-occurrence frequencies and the association verbs to locate content that is predicted to have entity associations. Next, using the word-distances between entity-pairs in the selected text, content relevancy (i.e., the strength of association) score is calculated. Incidentally, this system is currently able to search in several text corpora and databases, using the above method, to find relevant content for over 300 associative combinations of named entity classes.

In Coulet et al. (2010), the authors created syntactic parse trees, as shown in Figure 7, by analyzing sentences selected by the entity co-occurrences approach. Each tree then converts into a directed and labeled dependency graph, whereas nodes are words, and edges are dependency labels. Next, by extracting shortest paths between node pairs in the graph, they transform associations into the form Verb(Entity1, Entity2), such that Entity1 and Entity2 are connected by Verb. This approach, which is an extension of the association-identifying method described in section 3.1, hypothesizes that the shortest dependency paths indicate the strongest associations. Other studies that use a dependency analysis of sentences to determine the strength of the associations include (Quan and Ren, 2014; Kuhn et al., 2015; Mallory et al., 2015; Percha and Altman, 2015). Many systems using machine learning approaches, also tend to define syntactic and dependency paths analysis of sentences as a feature selection method before training relation mining models, as discussed in Özgür et al. (2008); Yang et al. (2011), and Bhasuran and Natarajan (2018).

After individual relations between biomedical entities have been inferred, it is convenient to assemble these in the form of networks (Skusa et al., 2005; Li et al., 2014; Kolchinsky et al., 2015). In such networks, nodes (also called vertices) correspond to entities and edges (also called links) to relations between entities. The resulting networks can be either weighted or unweighted. If polarity or strength of relations has been obtained, one can use this information to define the weights of edges as the strength of the relations, leading to weighted networks. Polarity information and relation type classifications can further be used to label edges. For example, these labels could be positive regulation, negative regulation, or transcription. In this case, edges tend to be directed indicating which entity is influenced by which. Such labeled and/or weighted networks are usually more informative than unweighted ones because they carry more relevant domain-specific information.

The visualization of interaction networks often provides a useful first summary of the results extracted from the relation extraction task. The networks are either built from scratch or automatically by using software tools. Two such commonly used tools for the network visualization are Cytoscape (Franz et al., 2015) and Gephi (Bastian et al., 2009), both providing open-source java libraries. Cytoscape can also be used interactively via a web-interface, while Gephi can be used for 3D rendering of graphs and networks. There are also several libraries specifically developed for network visualization in different languages. For instance, NetbioV (Tripathi et al., 2014) provides an R package and Graph-tool (Peixoto, 2014) a package for Python.

The networks generated in the above way can be further analyzed to reconfirm known associations, and further explore new ones (Özgür et al., 2008; Quan and Ren, 2014). Measures frequently used for biomedical network analysis include node centrality measures, shortest paths, network clustering, and network density (Sarangdhar et al., 2016). The measures selected to analyze a graph predominantly depend on the task at hand; for example, shortest path analysis is vital for discovering signaling pathways, while clustering analysis helps identify functional subnetwork units. Further commonly used metrics are centrality measures and network density methods, e.g., for identifying the most influential nodes in the network. Whereas graph density compares the number of existing relations between the nodes vs. all possible connections that can be formed in the network, centrality measures are commonly used to identifying the importance of an entity within the entire network (Emmert-Streib and Dehmer, 2011).

There are four main centrality measures, namely, degree, betweenness, closeness, and eigenvector centrality (Emmert-Streib et al., 2018). Degree centrality, the simplest of the above measures, corresponds just to the number of connections of a node. Closeness centrality is given by the reciprocal of the sum of all shortest path lengths between a node and all other nodes in the network, as such it measures the spread of information. Also betweenness centrality utilizes shortest paths by taking into account the information flow of the network. This is realized by counting shortest paths through pairs of nodes. Finally, eigenvector centrality is a measure of influence where each node is assigned a score based on how many other influential nodes are connected to it.

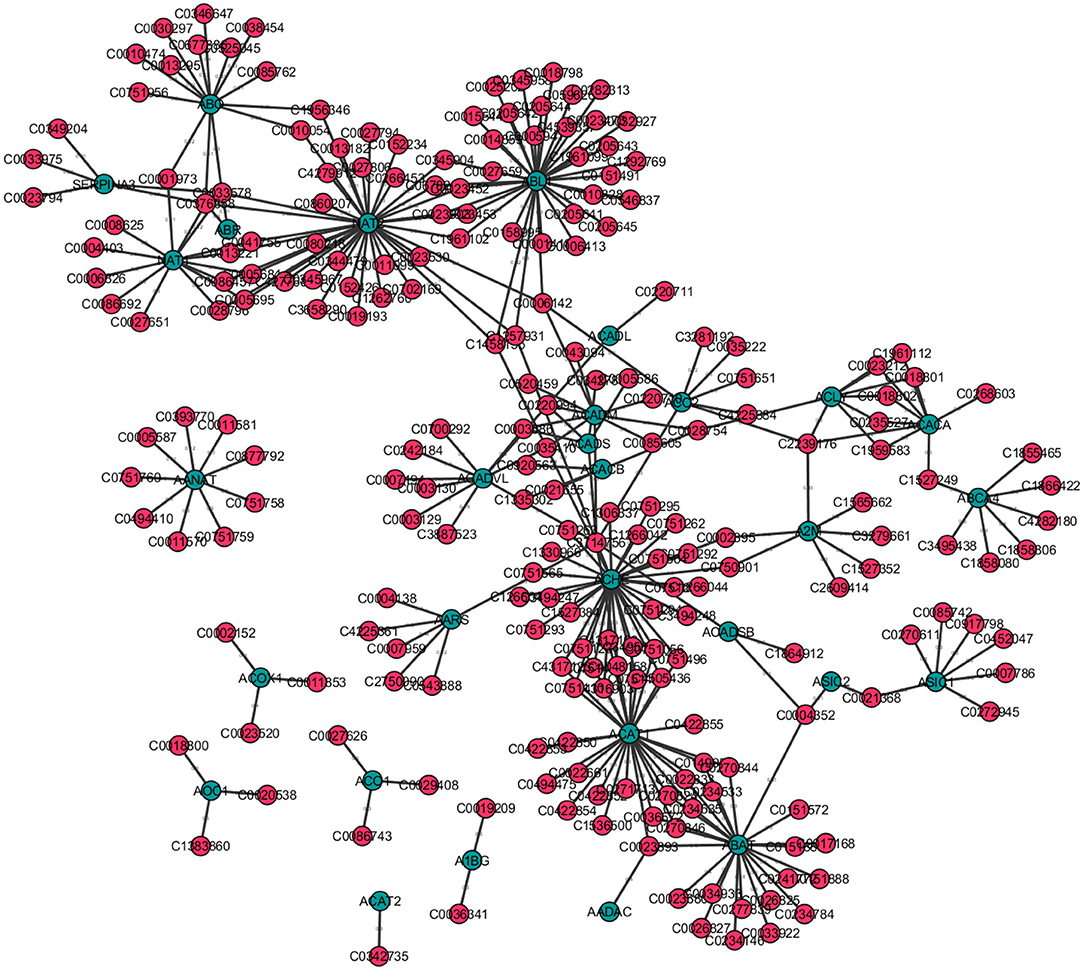

For instance, consider Figure 9, a disease-gene network. Here blue nodes correspond to genes and pink nodes represent diseases. For instance, blue nodes with a higher degree centrality correspond to those genes associated with a higher number of diseases. Similarly, pink nodes with a high degree centrality correspond to diseases that are associated with more genes. Furthermore, the genes with a high closeness centrality are important because they have a direct or indirect association to the largest number of other genes and diseases. Further, if a gene X that is connected to a large number of diseases, and is furthermore connected to gene Y with a high eigenvector centrality, it may be worth exploring if there are diseases in the neighborhood of gene X, that are possibly also associated to gene Y and vice versa. Hence, based on centrality measures, one may be able to find previously undiscovered relations between certain diseases and genes.

Figure 9. Disease-gene network created for selected 300 entries from the DisGeNet Database (Bauer-Mehren et al., 2010) with Cytoscape v3.7.2. Genes are shown as blue nodes and diseases as pink nodes.

In this section, we will discuss some of the main benchmark tools and resources available for Named-Entity Recognition and Relation Extraction used in the biomedical domain.

While the training corpora for machine learning methods in BioNER and BioRD both have been discussed extensively in the sections above, here we mention some of the databases with entities and relation mappings. These are crucial for dictionary-based methods and in post-processing, and as such, are often used for biomedical text mining research.

Some of the Named-Entity specific databases that have comprehensive collections of jargon include Gene Ontology (Consortium, 2004), Chemical Entities of Biological Interest (Shardlow et al., 2018), DrugBank (Wishart et al., 2017), Human Protein Reference Database (Keshava Prasad et al., 2008), Online Mendelian Inheritance in Man (Amberger et al., 2018), FooDB (Wishart, 2014), Toxins and Toxin-Targets Database (Wishart et al., 2014), International Classifications of Disease (ICD-11) by WHO (World Health Organization, 2018), Metabolic Pathways and Enzymes Database (Caspi et al., 2017), Human Metaboleme Database (Jewell et al., 2007), and USDA food and nutrients database (Haytowitz and Pehrsson, 2018). The majority of these has been used by Liu et al. (2015) to compile their thesauri and databases.

Databases for known Entity-Relations in Biomedical research include DISEASES (Pletscher-Frankild et al., 2015) and DisGeNet (Bauer-Mehren et al., 2010) providing gene-disease relations, CTD (Davis et al., 2012) with relations between chemicals, genes, phenotypes, diseases, exposures and pathways, SIDER (Kuhn et al., 2015) providing drug-side effect relations, STRING (Szklarczyk et al., 2014) with protein-protein interactions, ChemProt (Kringelum et al., 2016) with chemical-protein interactions and PharmGKB (Hewett et al., 2002) providing drug-gene relations. These databases have been used by various authors to evaluate relation extraction systems.

In Table 3, we provide an overview of BioNER tools that are available for different programming languages. While there are several other tools, our selection criterion was to cover the earliest successful implementations, benchmark tools as well as the most recent tools using novel approaches.

The improvement of resources and techniques for biomedical annotation has also brought about an abundance of open source tools that have simplified the information extraction for relation mining in biomedical texts. Many of these are general-purpose text mining tools that can be easily configured to process biomedical texts. For instance, Xing et al. (2018) used the open information extraction tool OLLIE (Schmitz et al., 2012) to identify relations between genes and phenotypes, whereas (Kim et al., 2017) identified gene-disease relations utilizing DigSee (Kim et al., 2013). Other useful tools that can be application adaptable and have higher F-score measures are; DeepDive (Niu et al., 2012): an information extraction system developed for structuring unstructured text documents, RelEx (Fundel et al., 2006): a dependency parsing based relations extractor applicable to biomedical free text, and PKDE4J (Song et al., 2015): an extractor that combines rule-based and dictionary-based approaches for multiple-entity relation extraction.