
METHODS article

Front. Blockchain, 05 November 2019

Sec. Blockchain for Good

Volume 2 - 2019 | https://doi.org/10.3389/fbloc.2019.00017

This article is part of the Research Topic “Identity and Privacy Governance”.

Current architectures to validate, certify, and manage identity are based on centralized, top-down approaches that rely on trusted authorities and third-party operators. We approach the problem of digital identity starting from a human rights perspective, with a primary focus on identity systems in the developed world. We assert that individual persons must be allowed to manage their personal information in a multitude of different ways in different contexts and that to do so, each individual must be able to create multiple unrelated identities. Therefore, we first define a set of fundamental constraints that digital identity systems must satisfy to preserve and promote privacy as required for individual autonomy. With these constraints in mind, we then propose a decentralized, standards-based approach, using a combination of distributed ledger technology and thoughtful regulation, to facilitate many-to-many relationships among providers of key services. Our proposal for digital identity differs from others in its approach to trust in that we do not seek to bind credentials to each other or to a mutually trusted authority to achieve strong non-transferability. Because the system does not implicitly encourage its users to maintain a single aggregated identity that can potentially be constrained or reconstructed against their interests, individuals and organizations are free to embrace the system and share in its benefits.

The past decade has seen a proliferation of new initiatives to create digital identities for natural persons. Some of these initiatives, such as the ID4D project sponsored by The World Bank (2019) and the Rohingya Project (2019), focus particularly on the humanitarian context, while others, such as Evernym (2019) and ID2020 (2019), have a more general scope that includes identity solutions for the developed world. Some projects are specifically concerned with the rights of children (5rights Foundation, 2019). Some projects use biometrics, which raise certain ethical concerns (Pandya, 2019). Some projects seek strong non-transferability, either by linking all credentials related to a particular natural person to a specific identifier, to biometric data, or to each other, as is the case for the anonymous credentials proposed by Camenisch and Lysyanskaya (2001). Some projects have design objectives that include exceptional access (“backdoors”) for authorities, which are widely considered to be problematic (Abelson et al., 1997, 2015; Benaloh et al., 2018).

Although this article shall focus on challenges related to identity systems for adult persons in the developed world, we argue that the considerations around data protection and personal data that are applicable in the humanitarian context, such as those elaborated by the International Committee of the Red Cross (Kuner and Marelli, 2017; Stevens et al., 2018), also apply to the general case. We specifically consider the increasingly commonplace application of identity systems “to facilitate targeting, profiling and surveillance” by “binding us to our recorded characteristics and behaviors” (Privacy International, 2019). Although we focus primarily upon the application of systems for digital credentials to citizens of relatively wealthy societies, we hope that our proposed architecture might contribute to the identity zeitgeist in contexts such as humanitarian aid, disaster relief, refugee migration, and the special interests of children as well.

We argue that while requiring strong non-transferability might be appropriate for some applications, it is inappropriate and dangerous in others. Specifically, we consider the threat posed by mass surveillance of ordinary persons based on their habits, attributes, and transactions in the world. Although the governments of Western democracies might be responsible for some forms of mass surveillance, for example via the recommendations of the Financial Action Task Force (2018) or various efforts to monitor Internet activity (Parliament of the United Kingdom, 2016; Parliament of Australia, 2018), the siren song of surveillance capitalism (Zuboff, 2015), including the practice of “entity resolution” through aggregation and data analysis (Waldman et al., 2018), presents a particular risk to human autonomy.

We suggest that many “everyday” activities such as the use of library resources, public transportation services, and mobile data services are included in a category of activities for which strong non-transferability is not necessary and for which there is a genuine need for technology that explicitly protects the legitimate privacy interests of individual persons. We argue that systems that encourage individual persons to establish a single, unitary¹ avatar (or “master key”) for use in many contexts can ultimately influence and constrain how such persons behave, and we suggest that if a link between two attributes or transactions can be proven, then it can be forcibly discovered. We therefore argue that support for multiple, unlinkable identities is an essential right and a necessity for the development of a future digital society for humans.

The rest of this article is organized as follows. In section 2, we offer some background on identity systems; we frame the problem space and provide examples of existing solutions. In section 3, we introduce a set of constraints that serve as properties that a digital identity infrastructure must have to support human rights. In section 4, we describe how a digital identity system with a fixed set of actors might operate and how it might be improved. In section 5, we introduce distributed ledger technology to promote a competitive marketplace for issuers and verifiers of credentials and to constrain the interaction between participants in a way that protects the privacy of individual users. In section 6, we consider how the system should be operated and maintained if it is to satisfy the human rights requirements. In section 7, we suggest some potential use cases, and in section 8 we conclude.

Establishing meaningful credentials for individuals and organizations in an environment in which the various authorities are not uniformly trustworthy presents a problem for currently deployed services, which are often based on hierarchical trust networks, all-purpose identity cards, and other artifacts of the surveillance economy. In the context of interactions between natural persons, identities are neither universal nor hierarchical, and a top-down approach to identity generally assumes that it is possible to impose a universal hierarchy. Consider “Zooko's triangle,” which states that names can be distributed, secure, or human-readable, but not all three (Wilcox-O'Hearn, 2018). The stage names of artists may be distributed and human-readable but are not really secure since they rely upon trusted authorities to resolve conflicts. The names that an individual assigns to friends or that a small community assigns to its members (“petnames,” Stiegler, 2005) are secure and human-readable but not distributed. We extend the reasoning behind the paradox to the problem of identity itself and assert that the search for unitary identities for individual persons is problematic. It is technically problematic because there is no endogenous way to ensure that an individual has only one self-certifying name (Douceur, 2002), there is no way to be sure about the trustworthiness or universality of an assigned name, and there is no way to ensure that an individual exists only within one specific community. More importantly, we assert that the ability to manage one's identities in a multitude of different contexts, including the creation of multiple unrelated identities, is an essential human right.
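The point about self-certifying names can be made concrete. In the minimal sketch below, a name is a truncated SHA-256 hash of a public key, so anyone can check the binding without consulting an authority (secure and distributed), yet the name is unreadable to humans, and nothing prevents one person from generating as many such names as they like. Only a local petname table makes the names readable. Random bytes stand in for real public keys, and the function names are hypothetical.

```python
import hashlib
import secrets

def self_certifying_name(public_key: bytes) -> str:
    """Derive a name from the key itself: checkable by anyone, unreadable to humans."""
    # Truncated to 16 hex characters for readability; a real name would keep the full hash.
    return hashlib.sha256(public_key).hexdigest()[:16]

def name_matches(public_key: bytes, name: str) -> bool:
    """Anyone can verify the key-to-name binding without a registry."""
    return self_certifying_name(public_key) == name

# Hypothetical stand-ins for two public keys generated by the same person.
key_1 = secrets.token_bytes(32)
key_2 = secrets.token_bytes(32)
name_1 = self_certifying_name(key_1)
name_2 = self_certifying_name(key_2)

# Both names verify, and nothing in them reveals a common owner.
print(name_matches(key_1, name_1), name_matches(key_2, name_2))  # True True
print(name_1 == name_2)                                          # False

# Petnames: secure and human-readable, but meaningful only to their owner.
my_petnames = {name_1: "colleague", name_2: "choir friend"}
```

Because key generation is free and endogenous, a system built on such names cannot by itself ensure that each person holds only one of them.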

The current state-of-the-art identity systems, from technology platforms to bank cards, impose asymmetric trust relationships and contracts of adhesion on their users, including both the ultimate users as well as local authorities, businesses, cooperatives, and community groups. Such trust relationships often take the form of a hierarchical trust infrastructure, requiring that users accept either a particular set of trusted certification authorities (“trust anchors”) or identity cards with private keys generated by a trusted third party. In such cases, the systems are susceptible to socially destructive business practices, corrupt or unscrupulous operators, poor security practices, or control points that risk coercion by politically or economically powerful actors. Ultimately, the problem lies in the dubious assumption that some particular party or set of parties is universally considered trustworthy.

Often, asymmetric trust relationships set the stage for security breaches. Rogue certification authorities constitute a well-known risk, even to sophisticated government actors (Charette, 2016; Vanderburg, 2018), and forged signatures have been responsible for a range of cyber-attacks including the Stuxnet worm, an alleged cyber-weapon believed to have caused damage to Iran's nuclear programme (Kushner, 2013), as well as a potential response to Stuxnet by the government of Iran (Eckersley, 2011). Corporations that operate the largest trust anchors have proven to be vulnerable. Forged credentials were responsible for the Symantec data breach (Goodin, 2017a), and other popular trust anchors such as Equifax are not immune to security breaches (Equifax Inc, 2018). Google has published a list of certification authorities that it thinks are untrustworthy (Chirgwin, 2016), and IT administrators have at times undermined the trust model that relies upon root certification authorities (Slashdot, 2014). Finally, even if their systems are secure and their operators are upstanding, trust anchors are only as secure as their ability to resist coercion, and they are sometimes misappropriated by governments (Bright, 2010).

Such problems are global, affecting the developed world and emerging economies alike. Identity systems that rely upon a single technology, a single implementation, or a single set of operators have proven unreliable (Goodin, 2017b,c; Moon, 2017). Widely-acclaimed national identity systems, including but not limited to the Estonian identity card system based on X-Road (Thevoz, 2016) and Aadhaar in India (Tully, 2017), are characterized by centralized control points, security risks, and surveillance.

Recent trends in technology and consumer services suggest that concerns about mobility and scalability will lead to the deployment of systems for identity management that identify consumers across a variety of different services, with a new marketplace for providers of identification services (Wagner, 2014). In general, the reuse of credentials has important privacy implications, as a consumer's activities may be tracked across multiple services or multiple uses of the same service. For this reason, the potential for a system to collect and aggregate transaction data must be considered when evaluating its impact on the privacy of its users.

As data analytics become increasingly effective in identifying and linking the digital trails of individual persons, it has become correspondingly necessary to defend the privacy of individual users and to implement instruments that allow and facilitate anonymous access to services. This reality was recognized by the government of the United Kingdom in the design of its GOV.UK Verify programme (Government Digital Service, 2018), a federated network of identity providers and services. However, the system as deployed has significant technical shortcomings with the potential to jeopardize the privacy of its users (Brandao et al., 2015; Whitley, 2018), including a central hub and vulnerabilities that can be exploited to link individuals with the services they use (O'Hara et al., 2011).

Unfortunately, not only do many recently designed systems furnish or reveal data about their users against their interests, but they have been explicitly designed to do so. For example, consider digital rights management systems that force users to identify themselves ex ante and then use digital watermarks to reveal their identities (Thomas, 2009). In some cases, demonstrable privacy has been considered an undesirable feature, and designs that intrinsically protect the user's identity are explicitly excluded, for example in the case of vehicular ad-hoc networks (Shuhaimi and Juhana, 2012), with the implication that systems without exceptional access features are dangerous. Finally, systems that rely upon biometrics for identification are of particular concern. By binding identification to a characteristic that users (and in most cases even governments) cannot change, biometrics implicitly prevent a user from transacting within a system without linking each transaction to every other and potentially to a permanent record. In recent years, a variety of US patents have been filed and granted for general-purpose identity systems that rely upon biometric data to create a “root” identity linking all transactions in this manner (Liu et al., 2008; Thackston, 2018).

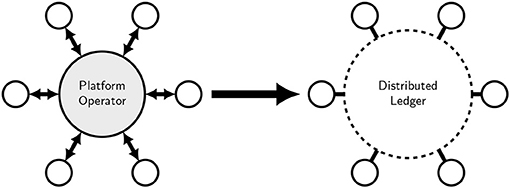

The prevailing identity systems commonly require users to accept third parties as trustworthy. The alternative to imposing new trust relationships is to work with existing trust relationships by allowing users, businesses, and communities to deploy technology on their own terms, independently of external service providers. In this section we identify various groups that have adopted a system-level approach to allow existing institutions and service providers to retain their relative authority and decision-making power without forcibly requiring them to cooperate with central authorities (such as governments and institutions), service providers (such as system operators), or the implementors of core technology. We suggest that, ideally, a solution would allow existing institutions and service providers to operate their own infrastructure without relying upon a platform operator, while at the same time allowing groups such as governments and consultants to act as advisors, regulators, and auditors, not operators. A distributed ledger can serve this purpose by acting as a neutral conduit among its participants, subject to governance limitations that ensure its neutrality and to design limitations on the services, beyond the operation of the ledger itself, that participants require. Figure 1 offers an illustration.

Figure 1. Many network services are centralized in the sense that participants rely upon a specific platform operator to make use of the service (Left), whereas distributed ledgers rely upon network consensus among participants instead of platform operators (Right).

Modern identity systems are used to coordinate three activities: identification, authentication, and authorization. The central problem to address is how to manage those functions in a decentralized context with no universally trusted authorities. Rather than trying to force all participants to use a specific new technology or platform, we suggest using a multi-stakeholder process to develop common standards that define a set of rules for interaction. Any organization would be able to develop and use its own systems that would interoperate with those developed by any other organization without seeking permission from any particular authority or agreeing to deploy any particular technology.

A variety of practitioners have recently proposed using a distributed ledger to decentralize the administration of an identity system (Dunphy and Petitcolas, 2018), and we agree that the properties of distributed ledger technologies are appropriate for the task. In particular, distributed ledgers allow their participants to share control of the system. They also provide a common view of transactions, ensuring that everyone sees the same transaction history.
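The “common view of transactions” rests in part on hash chaining. The sketch below shows only that ingredient: each entry commits to its predecessor, so two participants can confirm that they hold an identical history, in the same order, by comparing a single head hash. The consensus protocol that decides which entries are appended is not modelled, and the transaction fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # hypothetical starting value for an empty ledger

def entry_hash(prev_hash: str, transaction: dict) -> str:
    # Canonical JSON (sorted keys) so every replica hashes identical bytes.
    payload = prev_hash + json.dumps(transaction, sort_keys=True)
    return hashlib.sha256(payload.encode()).hexdigest()

def head_of(transactions: list) -> str:
    # Each entry commits to everything before it, so the final hash
    # summarizes the entire history, including its order.
    h = GENESIS
    for tx in transactions:
        h = entry_hash(h, tx)
    return h

history = [
    {"type": "register_issuer", "issuer": "bank-A"},
    {"type": "revoke", "credential": "c-17"},
]
replica_1 = list(history)
replica_2 = list(history)
altered = [history[0], {"type": "revoke", "credential": "c-18"}]
reordered = [history[1], history[0]]

print(head_of(replica_1) == head_of(replica_2))  # True
print(head_of(replica_1) == head_of(altered))    # False
print(head_of(replica_1) == head_of(reordered))  # False
```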

Various groups have argued that distributed ledgers might be used to mitigate the risk that one powerful, central actor might seize control under the mantle of operational efficiency. However, it is less clear that this lofty goal is achieved in practice. Existing examples of DLT-enabled identity management systems backed by organizations include the following, among others:

• ShoCard (SITA, 2016) is operated by a commercial entity that serves as a trusted intermediary (Dunphy and Petitcolas, 2018).

• Everest (Everest, 2019) is designed as a payment solution backed by biometric identity for its users. The firm behind Everest manages the biometric data and implicitly requires natural persons to have at most one identity within the system (Graglia et al., 2018).

• Evernym (2019) relies on a foundation (Tobin and Reed, 2016) to manage the set of approved certification authorities (Aitken, 2018), and whether the foundation could manage the authorities with equanimity remains to be tested.

• ID2020 (2019) offers portable identity using biometrics to achieve strong non-transferability and persistence (ID2020 Alliance, 2019).

• uPort (Lundkvist et al., 2016) does not rely upon a central authority, instead allowing for mechanisms such as social recovery. However, its design features an optional central registry that might introduce a means of linking together transactions that users would prefer to keep separate (Dunphy and Petitcolas, 2018). The uPort architecture is linked to phone numbers and implicitly discourages individuals from having multiple identities within the system (Graglia et al., 2018).

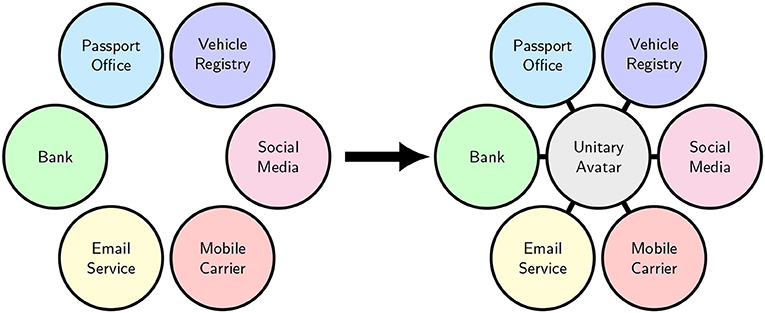

Researchers have proposed alternative designs to address some of the concerns. A design suggested by Kaaniche and Laurent does not require a central authority for its blockchain infrastructure but does require a trusted central entity for its key infrastructure (Kaaniche and Laurent, 2017). Coconut, the selective disclosure credential scheme used by Chainspace (2019), is designed to be robust against malicious authorities and may be deployed in a way that addresses such concerns (Sonnino et al., 2018)². We find that many systems such as these require users to bind together their credentials ex ante³ to achieve non-transferability, essentially following a design proposed by Camenisch and Lysyanskaya (2001) that establishes a single “master key” that allows each user to prove that all of her credentials are related to each other. Figure 2 offers an illustration. Even if users were to have the option to establish multiple independent master keys, service providers or others could undermine that option by requiring proof of the links among their credentials.

Figure 2. Consider that individual persons possess credentials representing a variety of attributes (Left), and schemes that attempt to achieve strong non-transferability seek to bind these attributes together into a single, unitary “avatar” or “master” identity (Right).
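The claim that a provable link can be forcibly discovered can be illustrated with a deliberately simplified model. Camenisch and Lysyanskaya's scheme uses zero-knowledge proofs so that the master key is never revealed; the sketch below instead derives per-context pseudonyms with HMAC-SHA256 and proves a link by revealing the secret. That is a much weaker substitute, but it shows the structural point: when every credential is derived from one secret, whoever obtains that secret can confirm that all of the credentials belong together, whereas independently generated secrets offer nothing to surrender. All names and contexts here are hypothetical.

```python
import hashlib
import hmac
import secrets

def pseudonym(secret: bytes, context: str) -> str:
    # Per-context identifier derived from a secret with HMAC-SHA256.
    return hmac.new(secret, context.encode(), hashlib.sha256).hexdigest()

def proves_link(revealed_secret: bytes, contexts_and_names: list) -> bool:
    # Anyone who obtains the secret (voluntarily or by compulsion) can check
    # that every pseudonym was derived from it, i.e. that one holder owns all.
    return all(hmac.compare_digest(pseudonym(revealed_secret, ctx), name)
               for ctx, name in contexts_and_names)

# Early binding: one master secret behind every credential.
master = secrets.token_bytes(32)
bound = [("library", pseudonym(master, "library")),
         ("transit", pseudonym(master, "transit"))]

# Unlinked identities: an independent secret for each context.
lib_secret, transit_secret = secrets.token_bytes(32), secrets.token_bytes(32)
unbound = [("library", pseudonym(lib_secret, "library")),
           ("transit", pseudonym(transit_secret, "transit"))]

print(proves_link(master, bound))        # True: one secret links both
print(proves_link(lib_secret, unbound))  # False: no single secret covers both
```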

The concept of an individual having “multiple identities” is potentially confusing, so let us be clear. In the context of physical documents in the developed world, natural persons generally possess multiple identity documents already, including but not limited to passports, driving licenses, birth certificates, bank cards, insurance cards, and so on. Although individuals might not think of these documents and the attributes they represent as constituting multiple identities, the identity documents generally stand alone for their individual, limited purposes and need not be presented as part of a bundled set with explicit links between the attributes. Service providers might legitimately consider two different identity documents as pertaining to two different individuals, even whilst they might have been issued to the same person. A system that links together multiple attributes via early-binding eliminates this possibility. When we refer to “multiple identities” we refer to records of attributes or transactions that are not linked to each other. Users of identity documents might be willing to sacrifice this aspect of control in favor of convenience, but the potential for blacklisting and surveillance that early-binding introduces is significant. It is for this reason that we take issue with the requirement, advised by various groups including the International Telecommunications Union (2018), that individuals must not possess more than one identity. Such a requirement is neither innocuous nor neutral.

Table 1 summarizes the landscape of prevailing digital identity solutions. We imagine that the core technology underpinning these and similar approaches might be adapted to implement a protocol that is broadly compatible with what we describe in this article. However, we suspect that in practice they would need to be modified to encourage users to establish multiple, completely independent identities. In particular, service providers would not be able to assume that users have bound their credentials to each other ex ante, and if non-transferability is required, then the system would need to achieve it in a different way.

We shall use the following notation to represent the various parties that interact with a typical identity system:

• (1) A “certification provider” (CP). This would be an entity or organization responsible for establishing a credential based upon foundational data. The credential can be used as a form of identity and generally represents that the organization has checked the personal identity of the user in some way. In the context of digital payments, this might be a bank.

• (2) An “authentication provider” (AP). This would be any entity or organization that might be trusted to verify that a credential is valid and has not been revoked. In current systems, this function is typically performed by a platform or network, for example a payment network such as those associated with credit cards.

• (3) An “end-user service provider” (Service). This would be a service that requires a user to provide credentials. It might be a merchant selling a product, a government service, or some other kind of gatekeeper, for example a club or online forum.

• (4) A user (user). This would be a human operator, in most cases aided by a device or a machine, whether acting independently or representing an organization or business.

As an example of how this might work, suppose that a user wants to make an appointment with a local consular office. The consular office wants to know that a user is domiciled in a particular region. The user has a bank account with a bank that is willing to certify that the user is domiciled in that region. In addition, a well-known authentication provider is willing to accept certifications from the bank, and the consular office accepts signed statements from that authentication provider. Thus, the user can first ask the bank to sign a statement certifying that he is domiciled in the region in question. When the consular office asks for proof of domicile, the user can present the signed statement from the bank to the authentication provider and ask the authentication provider to sign a new statement vouching for the user's region of domicile, using information from the bank as a basis for the statement, without providing any information related to the bank to the consular office.
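The consular-office exchange can be sketched end to end. To stay within the Python standard library, the sketch uses Lamport one-time signatures built from SHA-256, chosen purely for illustration: each key pair may safely sign only one message, and a real deployment would use a standard scheme such as Ed25519. The statement format, field names, and function names are hypothetical, and the user's role as the courier between the parties is left implicit.

```python
import hashlib
import secrets

def H(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()

def keygen():
    # Lamport one-time key pair: 256 pairs of random secrets; the public
    # key is their hashes. Each key pair must sign at most ONE message.
    sk = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    pk = [(H(a), H(b)) for a, b in sk]
    return sk, pk

def digest_bits(message: bytes) -> list:
    d = H(message)
    return [(d[i // 8] >> (7 - i % 8)) & 1 for i in range(256)]

def sign(sk, message: bytes) -> list:
    # Reveal one secret from each pair, selected by the message-hash bit.
    return [sk[i][bit] for i, bit in enumerate(digest_bits(message))]

def verify(pk, message: bytes, signature) -> bool:
    if len(signature) != 256:
        return False
    return all(H(s) == pk[i][bit]
               for i, (s, bit) in enumerate(zip(signature, digest_bits(message))))

bank_sk, bank_pk = keygen()  # certification provider (CP)
ap_sk, ap_pk = keygen()      # authentication provider (AP)

# The bank certifies domicile; its statement also identifies the bank.
bank_stmt = b"issuer=ExampleBank;account=1234;domicile=Region-X"
bank_sig = sign(bank_sk, bank_stmt)

def ap_vouch(stmt: bytes, sig) -> tuple:
    # The AP checks the bank's signature, then signs a new statement that
    # carries only the attribute the consular office asked for.
    if not verify(bank_pk, stmt, sig):
        raise ValueError("certification provider signature is invalid")
    fields = dict(kv.split("=", 1) for kv in stmt.decode().split(";"))
    new_stmt = f"domicile={fields['domicile']}".encode()
    return new_stmt, sign(ap_sk, new_stmt)

ap_stmt, ap_sig = ap_vouch(bank_stmt, bank_sig)

# The consular office needs only the AP's public key.
print(verify(ap_pk, ap_stmt, ap_sig))  # True
print(ap_stmt)                         # b'domicile=Region-X'
```

The consular office learns the region of domicile and that a provider it trusts vouched for it, but nothing about which bank, or which account, stood behind the claim.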

Reflecting on the various identity systems used today, including but not limited to residence permits, bank accounts, payment cards, transit passes, and online platform logins, we observed a plethora of features with weaknesses and vulnerabilities concerning privacy (and in some cases security) that could potentially infringe upon human rights. Although the 1948 Universal Declaration of Human Rights explicitly recognizes privacy as a human right (United Nations, 1948), the declaration was drafted well before the advent of a broad recognition of the specific dangers posed by the widespread use of computers for data aggregation and analysis (Armer, 1975), to say nothing of surveillance capitalism (Zuboff, 2015). Our argument that privacy in the context of digital identity is a human right, therefore, rests upon a more recent consideration of the human rights impact of the abuse of economic information (European Parliament, 1999). With this in mind, we identified the following eight fundamental constraints to frame our design requirements for technology infrastructure (Goodell and Aste, 2018):

Structural requirements:

1. Minimize control points that can be used to co-opt the system. A single point of trust is a single point of failure, and both state actors and technology firms have historically abused such trust.

2. Resist establishing potentially abusive processes and practices, including legal processes, that rely upon control points. Infrastructure that can be used to abuse and control individual persons is problematic even if those who oversee its establishment are genuinely benign. Once infrastructure is created, it may in the future be used for other purposes that benefit its operators.

Human requirements:

3. Mitigate architectural characteristics that lead to mass surveillance of individual persons. Mass surveillance is about control as much as it is about discovery: people behave differently when they believe that their activities are being monitored or evaluated (Mayo, 1945). Powerful actors sometimes employ monitoring to create incentives for individual persons, for example to conduct marketing promotions or credit scoring operations. Such incentives may prevent individuals from acting autonomously, and the chance to discover irregularities, patterns, or even misbehavior often does not justify such mechanisms of control.

4. Do not impose non-consensual trust relationships upon beneficiaries. It is an act of coercion for a service provider to require a client to maintain a direct trust relationship with a specific third-party platform provider or certification authority. Infrastructure providers must not explicitly or implicitly engage in such coercion, which should be recognized for what it is and not tolerated in the name of convenience.

5. Empower individual users to manage the linkages among their activities. To be truly free and autonomous, individuals must be able to manage the cross sections of their activities, attributes, and transactions that are seen or might be discovered by various institutions, businesses, and state actors.

Economic requirements:

6. Prevent solution providers from establishing a monopoly position. Some business models are justified by the opportunity to achieve status as monopoly infrastructure. Monopoly infrastructure is problematic not only because it deprives its users of consumer surplus but also because it empowers the operator to dictate the terms by which the infrastructure can be used.

7. Empower local businesses and cooperatives to establish their own trust relationships. The opportunity to establish trust relationships on their own terms is important both for businesses to compete in a free marketplace and for businesses to act in a manner that reflects the interests of their communities.

8. Empower service providers to establish their own business practices and methods. Providers of key services must adopt practices that work within the values and context of their communities.

These constraints constitute a set of system-level requirements, involving human actors, technology, and their interaction, not to be confused with the technical requirements that have been characterized as essential to self-sovereign identity (SSI) (Stevens et al., 2018). Although our design objectives may overlap with the design objectives for SSI systems, we seek to focus on system-level outcomes. While policy changes at the government level might be needed to fully achieve the vision suggested by some of the requirements, we would hope that a digital identity system would not contain features that intrinsically facilitate their violation.

Experience shows that control points will eventually be co-opted by powerful parties, irrespective of the intentions of those who build, own, or operate the control points. Consider, for example, how Cambridge Analytica allegedly abused the data assets of Facebook Inc to manipulate voters in Britain and the US (Koslowska et al., 2018) and how the Russian government asserted its influence on global businesses that engaged in domain-fronting (Lunden, 2018; Savov, 2018). The inherent risk that centrally aggregated datasets may be abused, not only by the parties doing the aggregating but also by third parties, implies value in system design that avoids control points and trusted infrastructure operators, particularly when personal data and livelihoods are involved.

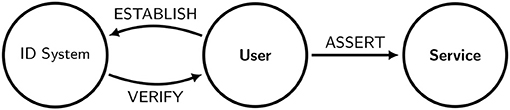

Various digital identity architectures and deployments exist today to perform the three distinct functions we mentioned earlier: identification, authentication, and authorization (Riley, 2006). We introduce a fourth function, auditing, by which the basis for judgements made by the system can be explained and evaluated. We characterize the four functions as follows:

• IDENTIFICATION. A user first establishes some kind of credential or identifier. The credential might be a simple registration, for example with an authority, institution, or other organization. In other cases, it might imply a particular attribute. The implication might be implicit, as a passport might imply citizenship of a particular country or a credential issued by a bank might imply a banking relationship, or it might be explicit, as in the style of attribute-backed credentials (Camenisch and Lysyanskaya, 2003; IBM Research Zurich, 2018)⁴.

• AUTHENTICATION. Next, when the provider of a service seeks to authenticate a user, the user must be able to verify that the credential in question is valid.

• AUTHORIZATION. Finally, the user can use the authenticated credential to assert to the service provider that she is entitled to a particular service.

• AUDITING. The identity system would maintain a record of the establishment, expiration, and revocation of credentials such that the success or failure of any given authentication request can be explained.
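The auditing function can be illustrated with a small record of credential lifecycle events. The sketch below assumes integer timestamps and opaque credential identifiers (both hypothetical choices; the article does not fix a record format) and returns, for any authentication attempt, both the outcome and the reason for it.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

@dataclass
class CredentialRecord:
    issued_at: int
    expires_at: int
    revoked_at: Optional[int] = None

@dataclass
class AuditLog:
    # Opaque credential identifiers only; no user or service data is stored.
    records: Dict[str, CredentialRecord] = field(default_factory=dict)

    def issue(self, cred_id: str, issued_at: int, expires_at: int) -> None:
        self.records[cred_id] = CredentialRecord(issued_at, expires_at)

    def revoke(self, cred_id: str, at: int) -> None:
        self.records[cred_id].revoked_at = at

    def explain(self, cred_id: str, at: int) -> Tuple[bool, str]:
        """Return whether authentication at time `at` succeeds, and why."""
        rec = self.records.get(cred_id)
        if rec is None:
            return False, "unknown credential"
        if at < rec.issued_at:
            return False, f"not yet issued (issued at t={rec.issued_at})"
        if rec.revoked_at is not None and at >= rec.revoked_at:
            return False, f"revoked at t={rec.revoked_at}"
        if at >= rec.expires_at:
            return False, f"expired at t={rec.expires_at}"
        return True, f"valid (issued t={rec.issued_at}, expires t={rec.expires_at})"

log = AuditLog()
log.issue("cred-1", issued_at=0, expires_at=100)
log.revoke("cred-1", at=50)
print(log.explain("cred-1", at=10))  # (True, 'valid (issued t=0, expires t=100)')
print(log.explain("cred-1", at=60))  # (False, 'revoked at t=50')
print(log.explain("cred-2", at=10))  # (False, 'unknown credential')
```

Because the log stores only opaque identifiers and lifecycle events, it can explain a decision without recording who presented the credential or to which service.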

Ultimately, it is the governance of a digital identity system, including its intrinsic policies and mechanisms as well as the accountability of the individuals and groups who control its operation, that determines whether it empowers or enslaves its users. We suggest that proper governance, specifically including a unified approach to the technologies and policies that the system comprises, is essential to avoiding unintended consequences of its implementation. We address some of these issues further in section 6.

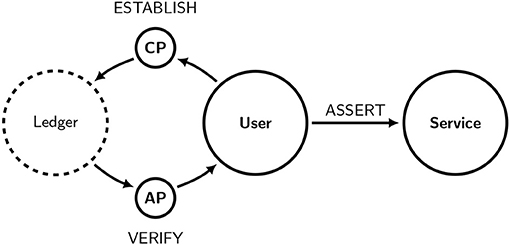

Figure 3 gives a pictorial representation of the functions. Table 2 defines the notation that we shall use in our figures. With the constraints enumerated in section 3 taken as design requirements, we propose a generalized architecture that achieves our objectives for an identity system. The candidate systems identified in section 2 can be evaluated by comparing their features to our architecture. Since we intend to argue for a practical solution, we start with a system currently enjoying widespread deployment.

Figure 3. A schematic representation of a generalized identity system. Users first establish a credential with the system, then use the system to verify the credential, and then use the verified identity to assert that they are authorized to receive a service.

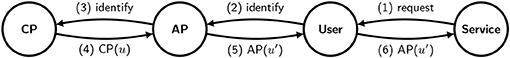

As a baseline example of an identity framework, we consider a system that uses banks as certification providers whilst circumventing the global payment networks. SecureKey Concierge (SKC) (SecureKey Technologies, Inc, 2015) is a solution used by the government of Canada to provide users with access to its various systems in a standard way. The SKC architecture seeks the following benefits:

1. Leverage existing “certification providers” such as banks and other financial institutions with well-established, institutional procedures for ascertaining the identities of their customers. Often such procedures are buttressed by legal frameworks such as Anti-Money Laundering (AML) and “Know Your Customer” (KYC) regulations that broadly deputize banks and substantially all financial institutions (GOV.UK, 2014) to collect identifying information on the various parties that make use of their services, establish the expected pattern for the transactions that will take place over time, and monitor the transactions for anomalous activity inconsistent with the expectations (Better Business Finance, 2017).

2. Isolate service providers from personally identifying bank details and eliminate the need to share specific service-related details with the certification provider, whilst avoiding traditional authentication service providers such as payment networks.

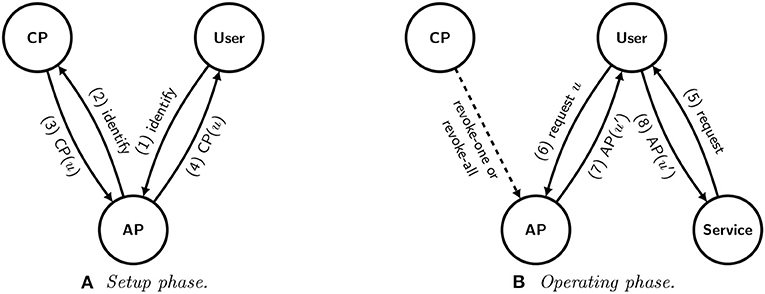

Figure 4 offers a stylized representation of the SKC architecture, as interpreted from its online documentation (SecureKey Technologies, Inc, 2015). When a user wants to access a service, the service provider sends a request to the user (1)5 for credentials. The user then sends encrypted identifying information (for example, bank account login details) to the authentication provider, in this case SKC (2), which forwards it to the certification provider (3). Next, the certification provider responds affirmatively with a “meaningless but unique” identifier u representing the user and sends it to the authentication provider (4). The authentication provider then signs its own identifier u′ representing the user and sends the message to the user (5), who in turn passes it along to the service provider (6). At this point the service provider can accept the user's credentials as valid. The SKC documentation indicates that SKC uses different, unlinked values of u′ for each service provider.

Figure 4. A stylized schematic representation of the SecureKey Concierge (SKC) system. The parties are represented by the symbols “CP,” “AP,” “User,” and “Service,” and the arrows represent messages between the parties. The numbers associated with each arrow show the sequence, and the symbol following the number represents the contents of a message. First, the service provider (Service) requests authorization from the user, who in turn sends identifying information to the authentication provider (AP) to share with the certification provider (CP). If the CP accepts the identifying information, it sends a signed credential u to the AP, which in turn issues a new credential u′ for consumption by the Service, which can now authorize the user.
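The SKC documentation states only that u′ differs, unlinkably, across service providers; it does not say how those values are derived. As a minimal sketch of one way an authentication provider could achieve that property, the Python below assumes, hypothetically, that u′ is computed as HMAC-SHA256 over u and a service identifier under a secret key held only by the authentication provider. `AP_SECRET` and `derive_service_pseudonym` are illustrative names, not part of SKC.

```python
import hashlib
import hmac
import secrets

# Hypothetical secret known only to the authentication provider (AP).
AP_SECRET = secrets.token_bytes(32)

def derive_service_pseudonym(u: str, service_id: str, key: bytes = AP_SECRET) -> str:
    """Derive a per-service identifier u' from the CP's identifier u.

    The same (u, service) pair always yields the same u', so a service can
    recognise a returning user, but without the AP's key two services cannot
    tell whether their u' values belong to the same person.
    """
    u_bytes = u.encode()
    # Length-prefix u so that different (u, service_id) splits cannot collide.
    message = len(u_bytes).to_bytes(4, "big") + u_bytes + service_id.encode()
    return hmac.new(key, message, hashlib.sha256).hexdigest()

u = "cp-7f3a"  # stands in for the CP's "meaningless but unique" identifier
tax = derive_service_pseudonym(u, "tax-service")
health = derive_service_pseudonym(u, "health-service")
print(tax != health)                                      # True
print(tax == derive_service_pseudonym(u, "tax-service"))  # True
```

The same derivation also shows why the authentication provider remains a trusted party: anyone holding `AP_SECRET` can recompute every u′ for a given u and thereby link a user's activity across services.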

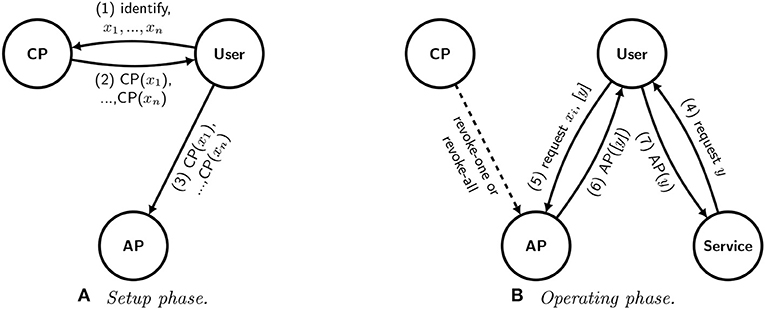

We might consider modifying the SKC architecture so that the user does not need to log in to the CP each time she requests a service. To achieve this, we divide the protocol into two phases, as shown in Figure 5: a setup phase (Figure 5A), in which a user establishes credentials with a “certification provider” (CP) for use with the service, and an operating phase (Figure 5B), in which a user uses the credentials in an authentication process with a service provider. The setup phase is done once, and the operating phase is done once per service request. In the setup phase, the user first sends authentication credentials, such as those used to withdraw money from a bank account, to an authentication provider (1). The authentication provider then uses the credentials to authenticate to the certification provider (2), which generates a unique identifier u that can be used for subsequent interactions with service providers and sends it to the authentication provider (3), which forwards it to the user (4). Then, in the operating phase, a service provider requests credentials from the user (5), who in turn uses the previously established unique identifier u to request credentials from the authentication provider (6). This means that the user would implicitly maintain a relationship with the authentication provider, including a way to log in. The authentication provider then verifies that the credentials have not been revoked by the certification provider. The process for verifying that the CP credential is still valid may be offline, via a periodic check, or online, either requiring the CP to reach out to the AP when it intends to revoke a credential or requiring the AP to send a request to the CP in real time. In the latter case, the AP is looking only for updates on the set of users who have been through the setup phase, and it does not need to identify which user has made a request. Once the AP is satisfied, it sends a signed certification of its identifier u′ to the user (7), who forwards it to the service provider as before (8).

Figure 5. A schematic representation of a modified version of the SKC system with a stateful authentication provider and one-time identifiers for services. The user first establishes credentials in the setup phase (A). Then, when a service provider requests credentials from the user in the operating phase (B), the user reaches out to the authentication provider for verification, which assigns a different identifier u′ each time.
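The two phases of Figure 5 can be sketched in Python as follows. The sketch assumes the online, real-time revocation variant, in which the AP consults the CP's revocation list on every request; the classes and methods are hypothetical, and the user's login to the AP is omitted.

```python
import secrets
from dataclasses import dataclass, field

@dataclass
class CertificationProvider:
    """Issues a unique identifier u at setup time and may later revoke it."""
    revoked: set = field(default_factory=set)

    def enroll(self) -> str:
        return secrets.token_hex(16)  # u: meaningless but unique

    def revoke(self, u: str) -> None:
        self.revoked.add(u)

@dataclass
class AuthenticationProvider:
    """Stateful AP from the modified SKC flow (hypothetical sketch)."""
    cp: CertificationProvider
    enrolled: set = field(default_factory=set)

    def setup(self) -> str:
        # Messages (1)-(4): the AP authenticates to the CP and obtains u.
        u = self.cp.enroll()
        self.enrolled.add(u)
        return u

    def issue(self, u: str) -> str:
        # Messages (6)-(7): real-time revocation check, then a fresh one-time u'.
        if u not in self.enrolled or u in self.cp.revoked:
            raise PermissionError("credential unknown or revoked")
        return secrets.token_hex(16)

cp = CertificationProvider()
ap = AuthenticationProvider(cp)
u = ap.setup()                            # setup phase, done once
first, second = ap.issue(u), ap.issue(u)  # operating phase, once per request
print(first != second)                    # True
cp.revoke(u)
try:
    ap.issue(u)
except PermissionError as err:
    print(err)                            # credential unknown or revoked
```

Even though each u′ is fresh, the AP still receives the long-lived u with every request, which is the visibility that motivates the objections to this design.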

Unfortunately, even if we can avoid the need for users to log in to the CP every time they want to use a service, the authentication provider itself serves as a trusted third party. Although the SKC architecture may eliminate the need to trust the existing payment networks, the authentication provider maintains the mapping between service providers and the certification providers used by individuals to request services. It is also implicitly trusted to manage all of the certification tokens, and there is no way to ensure that it does not choose them in a way that discloses information to service providers or certification providers. In particular, users need to trust the authentication provider to use identifiers that do not allow service providers to correlate their activities, and users may also want to use different identifiers from time to time to communicate with the same service provider. As a monopoly platform, it also has the ability to tax or deny service to certification providers, users, or service providers according to its own interests, and it serves as a single point of control vulnerable to exploitation. For all of these reasons, we maintain that the SKC architecture remains problematic from a public interest perspective.

In the architecture presented in section 4.2, the authentication provider occupies a position of control. In networked systems, control points confer economic advantages on those who occupy them (Value Chain Dynamics Working Group (VCDWG), 2005), and the business incentives associated with the opportunity to build platform businesses around control points have been used to justify their continued proliferation (Ramakrishnan and Selvarajan, 2017).

However, control points also expose consumers to risk, not only because the occupier of the control point may abuse its position but also because the control point itself creates a vector for attack by third parties. For both of these reasons, we seek to prevent an authentication provider from holding too much information about users. In particular, we do not want an authentication provider to maintain a mapping between a user and the particular services that a user requests, and we do not want a single authentication provider to establish a monopoly position in which it can dictate the terms by which users and service providers interact. For this reason, we put the user, and not the authentication provider, in the center of the architecture.

For a user to be certain that she is not providing a channel by which authentication providers can leak her identity or by which service providers can trace her activity, she must isolate the different participants in the system. The constraints allow us to define three isolation objectives as follows:

1. Have users generate unlinked identifiers on devices that they own and trust. Unless they generate the identifiers themselves, users have no way of knowing for sure whether identifiers assigned to them contain personally identifying information. For users to verify that the identifiers will not disclose information that might identify them later, they would need to generate random identifiers using devices and software that they control and trust. We suggest that for a user to trust a device, its hardware and software must be of an open-source, auditable design with auditable provenance. Although we would not expect most users to be able to judge the security properties of the devices they use, open-source communities routinely provide mechanisms by which users without specialized knowledge can legitimately conclude, before using new hardware or software, that a diverse community of experts has considered and approved the security aspects of the technology. Examples of such communities include Debian (Software in the Public Interest, Inc, 2019) for software and Arduino (Arduino, 2019) for hardware, and trustworthy access to these communities might be offered by local organizations such as libraries or universities.

2. Ensure that authentication providers do not learn about the user's identity or use of services. Authentication providers that require foundational information about a user, or are able to associate different requests over time with the same user, are in a position to collect information beyond what is strictly needed for the purpose of the operation. The role of the authentication provider is to act as a neutral channel that confers authority on certification providers, time-shifts requests for credentials, and separates the certification providers from providers of services. Performing this function does not require it to collect information about individual users at any point.

3. Ensure that information given to service providers is not shared with authentication providers. The user must be able to trust credibly that his or her interaction with service providers will remain private.
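Objective 1 requires nothing more exotic than a good source of randomness on the user's own device. The Python sketch below, which relies only on the standard `secrets` module, shows the kind of identifier generation such a device might perform; the function name and the 32-byte length are illustrative assumptions.

```python
from __future__ import annotations

import secrets

def generate_identifiers(n: int, nbytes: int = 32) -> list[str]:
    """Generate n unlinked identifiers on the user's own device.

    Each identifier is drawn independently from the operating system's
    cryptographically secure random source, so it encodes nothing about the
    user and no two identifiers are related to each other.
    """
    return [secrets.token_hex(nbytes) for _ in range(n)]

ids = generate_identifiers(5)
print(len(ids), len(set(ids)))  # 5 5
print(len(ids[0]))              # 64
```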

The communication among the four parties that we propose can be done via simple, synchronous (e.g., HTTP) protocols that are easily performed by smartphones and other mobile devices. The cryptography handling public keys can be done using standard public-key-based technologies.

Figure 6 shows how we can modify the system shown in Figure 5 to achieve the three isolation objectives defined above. Here, we introduce blind signatures (Chaum, 1983) to allow the user to present a verified signature without allowing the signer and the relying party to link the identity of the subject to the subject's legitimate use of a service.

Figure 6. A schematic representation of a digital identity system with a user-oriented approach. The new protocol uses user-generated identifiers and blind signatures to isolate the authentication provider. The authentication provider cannot inject identifying information into the identifiers, nor can it associate the user with the services that she requests. (A) Setup phase. (B) Operating phase.

Figure 6A depicts the new setup phase. First, on her own trusted hardware (see section 4.4), the user generates her own set of identifiers x1, …, xn that she intends to use, at most once each, in future correspondence with the authentication provider. Generating the identifiers is not computationally demanding and can be done with an ordinary smartphone. By generating her own identifiers, the user can better ensure that nothing is encoded in the identifiers that might reduce her anonymity. The user then sends both her identifying information and the identifiers x1, …, xn to the certification provider (1). The certification provider then responds with a set of signatures corresponding to each of the identifiers (2). The user then sends the set of signatures to the authentication provider for future use (3).
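The setup phase of Figure 6A can be sketched in Python with a textbook RSA signature over a SHA-256 hash. This is a stand-in chosen for brevity, not a scheme specified by the proposal: the key below is built from published primes and is deliberately insecure, and the names `cp_sign`, `ap_store`, and `ap_accept` are hypothetical.

```python
from __future__ import annotations

import hashlib
import secrets

# Toy RSA key for the certification provider (CP). Its factors are published
# Mersenne primes, so the key is INSECURE and serves only as an illustration.
P, Q = 2**61 - 1, 2**89 - 1
N, E = P * Q, 65537
D = pow(E, -1, (P - 1) * (Q - 1))

def h(message: str) -> int:
    """Hash a message to an integer modulo N before signing."""
    return int.from_bytes(hashlib.sha256(message.encode()).digest(), "big") % N

def cp_sign(x: str) -> int:
    return pow(h(x), D, N)

def verify(x: str, sig: int) -> bool:
    return pow(sig, E, N) == h(x)

# User: generate identifiers x1, ..., xn on her own trusted device.
xs = [secrets.token_hex(16) for _ in range(3)]
# (1)-(2): the CP checks her identifying information (elided) and signs each xi.
sigs = {x: cp_sign(x) for x in xs}
# (3): the user deposits the signatures with the AP, which keeps the valid ones.
ap_store = {x: s for x, s in sigs.items() if verify(x, s)}
ap_revoked: set[str] = set()  # identifiers whose CP signature was revoked
ap_used: set[str] = set()     # identifiers already spent

def ap_accept(x: str) -> bool:
    """AP accepts each signed, unrevoked identifier at most once."""
    if x not in ap_store or x in ap_revoked or x in ap_used:
        return False
    ap_used.add(x)
    return True

print(ap_accept(xs[0]), ap_accept(xs[0]))  # True False
```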

Figure 6B depicts the new operating phase. First, the service sends a request to the user along with a new nonce (one-time identifier) y corresponding to the request (4). The user then applies a blinding function to the nonce y, creating a blinded nonce [y]. The user chooses one of the identifiers xi that she had generated during the setup phase and sends that identifier along with the blinded nonce [y] to the authentication provider (5). Provided that the signature on xi has not been revoked, the authentication provider confirms that it is valid by signing [y] and sending the signature to the user (6). The user in turn “unblinds” the signature on y and sends the unblinded signature to the service provider (7). The use of blind signatures ensures that the authentication provider cannot link what it sees to specific interactions between the user and the service provider.
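Chaum-style RSA blind signatures are one concrete way to realise messages (5) to (7). In the minimal Python sketch below, which assumes textbook RSA with a toy, insecure key, the user multiplies h(y) by r^e for a random r, the AP signs the blinded value, and the user divides out r to obtain an ordinary signature on h(y). Function names are illustrative.

```python
from __future__ import annotations

import hashlib
import math
import secrets

# Toy RSA key for the authentication provider (AP): INSECURE, illustration only.
P, Q = 2**61 - 1, 2**89 - 1
N, E = P * Q, 65537
D = pow(E, -1, (P - 1) * (Q - 1))

def h(message: bytes) -> int:
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N

def blind(m: int) -> tuple[int, int]:
    """User: blind m with a random factor r, returning ([m], r)."""
    while True:
        r = secrets.randbelow(N - 2) + 2
        if math.gcd(r, N) == 1:
            return (m * pow(r, E, N)) % N, r

def ap_sign_blinded(blinded: int) -> int:
    """AP: sign the blinded value without learning m."""
    return pow(blinded, D, N)

def unblind(blind_sig: int, r: int) -> int:
    """User: strip the blinding factor, leaving an ordinary signature on m."""
    return (blind_sig * pow(r, -1, N)) % N

def verify(m: int, sig: int) -> bool:
    return pow(sig, E, N) == m

y = secrets.token_bytes(16)           # (4) nonce from the service provider
blinded, r = blind(h(y))              # user computes [y]
blind_sig = ap_sign_blinded(blinded)  # (6) AP signs after checking xi (elided)
sig = unblind(blind_sig, r)           # user unblinds and forwards (7)
print(verify(h(y), sig))              # True
print(sig == pow(h(y), D, N))         # True
```

Because textbook RSA signatures are deterministic, the unblinded value equals the signature the AP would have produced on h(y) directly, yet the AP only ever saw [y], which the random factor r makes independent of y.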

To satisfy the constraints listed in section 3, all three process steps (identification, authentication, and authorization) must be isolated from each other. Although our proposed architecture introduces additional interaction and computation, we assert that the complexity of the proposed architecture is both parsimonious and justified:

1. If the certification provider were the same as the service provider, then the user would be subject to direct control and surveillance by that organization, violating Constraints 1, 3, and 5.

2. If the authentication provider were the same as the certification provider, then the user would have no choice but to return to the same organization each time it requests a service, violating Constraints 1 and 4. That organization would then be positioned to discern patterns in the user's activity, violating Constraints 3 and 5. There would be no separate authentication provider to face competition for its services as distinct from the certification services, violating Constraint 6.

3. If the authentication provider were the same as the service provider, then the service provider would be positioned to compel the user to use a particular certification provider, violating Constraints 1 and 4. The service provider could also impose constraints upon what a certification provider might reveal about an individual, violating Constraint 3, or how the certification provider establishes the identity of individuals, violating Constraint 8.

4. If the user could not generate her own identifiers, then the certification provider could generate identifiers that reveal information about the user, violating Constraint 3.

5. If the user were not to use blind signatures to protect the requests from service providers, then service providers and authentication providers could compare notes to discern patterns of a user's activity, violating Constraint 5.

The proposed architecture does not achieve its objectives if either the certification provider or the service provider colludes with the authentication provider; we assume that effective institutional policy will complement appropriate technology to ensure that sensitive data are not shared in a manner that would compromise the interests of the user.

A significant problem remains with the design described in section 4.5 in that, to be truly decentralized, it requires O(n²) relationships among authentication providers and certification providers (i.e., with each authentication provider connected directly to each certification provider that it considers valid). Recall that the system relies critically upon the ability of a certification provider to revoke credentials issued to users, and authentication providers need a way to learn from the certification provider whether a credential in question has been revoked. Online registries such as OCSP (Juniper Networks, 2018), which are operated by a certification provider or trusted authority, are a common way to address this problem, although the need for third-party trust violates Constraint 1. The issue associated with requiring each authentication provider to establish its own judgment of each candidate certification provider is a business problem rather than a technical one. Hierarchical trust relationships emerge because relationships are expensive to maintain and introduce risks; all else being equal, business owners prefer to have fewer of them. Considered in this context, concentration and lack of competition among authentication providers make sense. If one or a small number of authentication providers have already established relationships with a broad set of certification providers, just as payment networks such as Visa and Mastercard have done with a broad set of banks, then the cost to a certification provider of a relationship with a new authentication provider would become a barrier to entry for new authentication providers. The market for authentication could fall under the control of a monopoly or cartel.
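The scaling problem is easiest to see with some arithmetic. Assuming, for illustration, n authentication providers and n certification providers in which every AP accepts every CP, direct bilateral relationships number n × n, whereas a shared ledger requires each party to connect only once, for n + n connections in total.

```python
def bilateral_links(num_aps: int, num_cps: int) -> int:
    """Every AP maintains a direct relationship with every CP it accepts."""
    return num_aps * num_cps

def ledger_links(num_aps: int, num_cps: int) -> int:
    """Every participant connects once, to the shared ledger."""
    return num_aps + num_cps

for n in (10, 100, 1000):
    print(n, bilateral_links(n, n), ledger_links(n, n))
# 10 100 20
# 100 10000 200
# 1000 1000000 2000
```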

We propose using distributed ledger technology (DLT) to allow both certification providers and authentication providers to proliferate whilst avoiding industry concentration. The distributed ledger would serve as a standard way for certification providers to establish relationships with any or all of the authentication providers at once, or vice-versa. The ledger itself would be a mechanism for distributing signatures and revocations; it would be shared by participants and not controlled by any single party. Figure 7 shows that users would not interact with the distributed ledger directly but via their chosen certification providers and authentication providers. Additionally, users would not be bound to use any particular authentication provider when verifying a particular credential and could even use a different authentication provider each time. Provided that the community of participants in the distributed ledger remains sufficiently diverse, the locus of control would not be concentrated within any particular group or context, and the market for authentication can remain competitive.

Figure 7. A schematic representation of a decentralized identity system with a distributed ledger. The user is not required to interact directly with the distributed ledger (represented by a dashed circle) and can rely upon the services offered by certification providers and authentication providers.

Because the distributed ledger architecture inherently does not require each new certification provider to establish relationships with all relevant authentication providers, or vice-versa, it facilitates the entry of new authentication providers and certification providers, thus allowing the possibility of decentralization.

We argue that a distributed ledger is an appropriate technology to maintain the authoritative record of which credentials have been issued (or revoked) and which transactions have taken place. We do not trust any specific third party to manage the list of official records, and we would need the system to be robust in the event that a substantial fraction of its constituent parts are compromised. The distributed ledger can potentially take many forms, including but not limited to blockchain, and, although a variety of fault-tolerant consensus algorithms may be appropriate, we assume that the set of node operators is well-known, a characteristic that we believe might be needed to ensure appropriate governance.

If implemented correctly at the system level, the use of a distributed ledger can ensure that the communication between the certification provider and the authentication provider is limited to that which is written on the ledger. If all blind signatures are done without including any accompanying metadata, and as long as the individual user does not reveal which blind signature on the ledger corresponds to the unblinded signature that he or she is presenting to the authentication provider for approval, then nothing on the ledger will reveal any information about the individual persons who are the subjects of the certificates. We assume that the certification authorities would have a limited and well-known set of public keys that they would use to sign credentials, with each key corresponding to the category of individual persons who have a specific attribute. The size of the anonymity set for a credential, and therefore the effectiveness of the system in protecting the privacy of an individual user with that credential, depends upon the generality of the category. We would encourage certification authorities to assign categories that are as large as possible. We also assume that the official set of signing keys used by certification providers and authentication providers is maintained on the ledger, as doing so would ensure that all users of the system have the same view of which keys the various certification providers and authentication providers are using.
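The following Python sketch models only the data that ledger participants would agree on, under several assumptions: the record is a hash-chained, append-only list; consensus among the well-known node operators is not modelled; and the field names are hypothetical. It illustrates two points from the paragraph above: certificate entries carry only a public key and a bare signature, and the anonymity set for an attribute key is the number of certificates issued under it.

```python
from __future__ import annotations

import hashlib
import json
from dataclasses import dataclass, field

def _digest(prev: str, entry: dict) -> str:
    return hashlib.sha256((prev + json.dumps(entry, sort_keys=True)).encode()).hexdigest()

@dataclass
class Ledger:
    """Toy append-only, hash-chained log standing in for the distributed ledger."""
    entries: list[dict] = field(default_factory=list)

    def append(self, entry: dict) -> None:
        prev = self.entries[-1]["hash"] if self.entries else "0" * 64
        self.entries.append({**entry, "prev": prev, "hash": _digest(prev, entry)})

    def register_key(self, owner: str, key_id: str, attribute: str) -> None:
        # Official signing keys are themselves recorded on the ledger.
        self.append({"type": "key", "owner": owner, "key_id": key_id, "attr": attribute})

    def post_certificate(self, key_id: str, public_key: str, signature: str) -> None:
        # Only the user's public key and a bare signature: no names, dates, or notes.
        self.append({"type": "cert", "key_id": key_id, "pk": public_key, "sig": signature})

    def anonymity_set_size(self, key_id: str) -> int:
        """Number of certificates issued under one attribute key."""
        return sum(1 for e in self.entries if e["type"] == "cert" and e["key_id"] == key_id)

    def verify_chain(self) -> bool:
        """Detect any modification of an earlier entry."""
        prev = "0" * 64
        for e in self.entries:
            body = {k: v for k, v in e.items() if k not in ("prev", "hash")}
            if e["prev"] != prev or e["hash"] != _digest(prev, body):
                return False
            prev = e["hash"]
        return True

ledger = Ledger()
ledger.register_key("cp-bank", "cp-bank/adult", "over-18")
for i in range(1000):
    ledger.post_certificate("cp-bank/adult", f"pk{i}", f"sig{i}")
print(ledger.anonymity_set_size("cp-bank/adult"))  # 1000
print(ledger.verify_chain())                       # True
```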

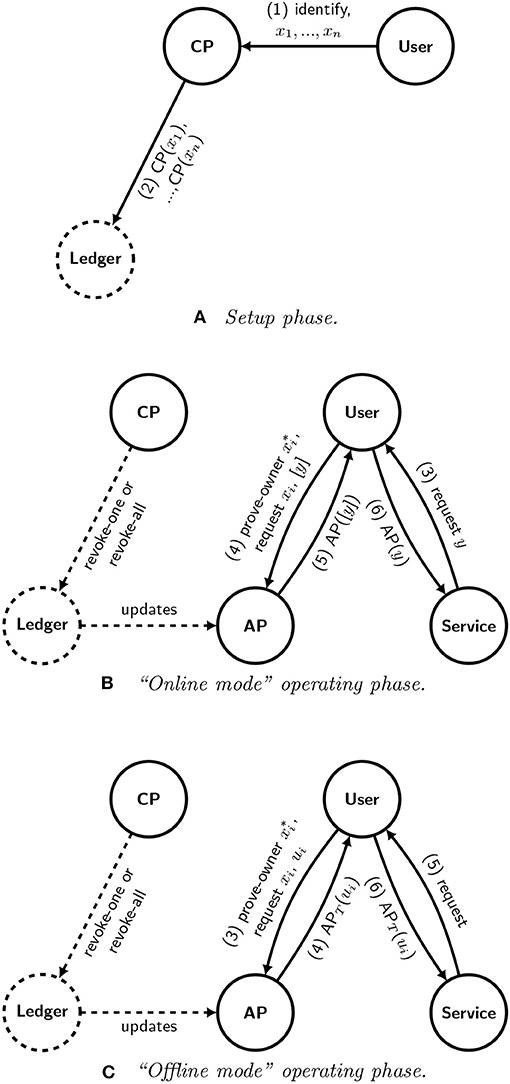

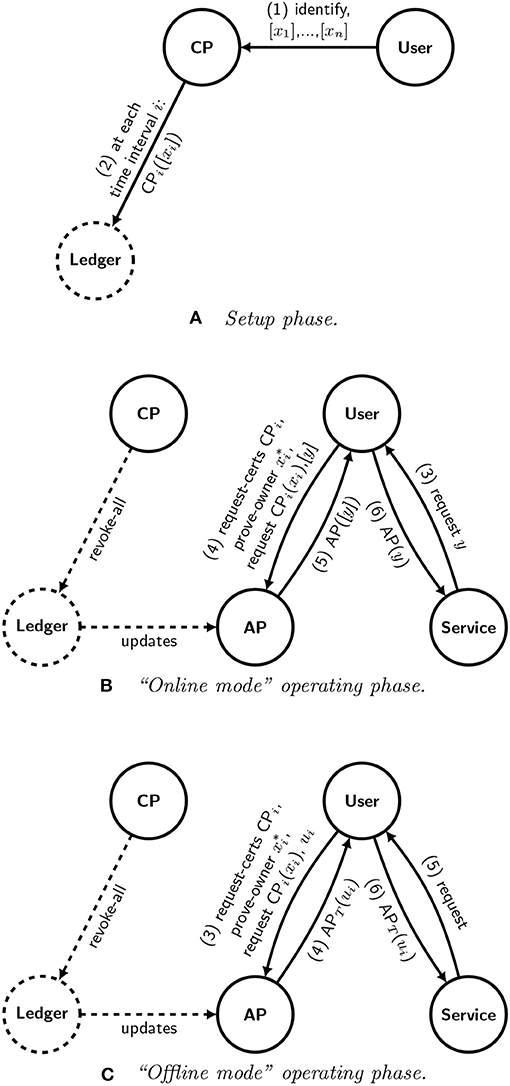

Figure 8 shows how the modified architecture with the distributed ledger technology would work. Figure 8A shows the setup phase. The first two messages from the user to the certification provider are similar to their counterparts in the protocol shown in Figure 6A. However, now the user also generates n asymmetric key pairs, where xi is the public key of pair i and the corresponding private key remains with the user, and she sends each public key x1, …, xn to the certification provider (1). Then, rather than sending the signed messages to the authentication provider via the user, the certification provider instead writes the signed certificates directly to the distributed ledger (2). Importantly, the certificates would not contain any metadata but only the public key xi and its bare signature; eliminating metadata is necessary to ensure that there is no channel by which a certification provider might inject information that might be later used to identify a user. Figure 8B shows the operating phase, which begins when a service provider asks a user to authenticate and provides some nonce y as part of the request.

Figure 8. A schematic representation of one possible decentralized digital identity system using a distributed ledger. Diagrams (A,B) show the setup and operating phases for an initial sketch of our design, which uses a distributed ledger to promote a scalable marketplace that allows users to choose certification providers and authentication providers that suit their needs. Diagram (C) shows a variation of the operating phase that can be used in an offline context, in which the user might not be able to communicate with an up-to-date authentication provider and the service provider at the same time.

The certification provider can revoke certificates simply by transacting on the distributed ledger and without interacting with the authentication provider at all. Because the user and the authentication provider are no longer assumed to mutually trust one another, the user must now prove to the authentication provider that the user holds the private key when the user asks the authentication provider to sign the blinded nonce [y] (4). At this point we assume that the authentication provider maintains its own copy of the distributed ledger and has been receiving updates. The authentication provider then refers to its copy of the distributed ledger to determine whether a credential has been revoked, either because the certification provider revoked a single credential or because the certification provider revoked its own signing key. Provided that the credential has not been revoked, the authentication provider signs the blinded nonce [y] (5), which the user then unblinds and sends to the service provider (6). The following messages are carried out as they are done in Figure 6B.
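The AP's revocation check in the operating phase can be sketched as a replay of its local copy of the ledger. The Python below is a hypothetical sketch: the entry kinds are invented for illustration, revocations are treated as permanent, and the user's proof of possession of the private key is omitted.

```python
from __future__ import annotations

from dataclasses import dataclass

@dataclass(frozen=True)
class Entry:
    kind: str     # "cert", "revoke-cert", or "revoke-key"
    key_id: str   # the CP signing key involved
    pk: str = ""  # the user's public key xi (empty for key revocations)

def credential_valid(ledger: list[Entry], pk: str, key_id: str) -> bool:
    """AP's check against its local copy of the ledger.

    A credential is valid only if it was certified under key_id and neither
    the credential itself nor the CP's signing key has been revoked.
    """
    certified = revoked = key_revoked = False
    for e in ledger:
        if e.kind == "cert" and e.key_id == key_id and e.pk == pk:
            certified = True
        elif e.kind == "revoke-cert" and e.key_id == key_id and e.pk == pk:
            revoked = True
        elif e.kind == "revoke-key" and e.key_id == key_id:
            key_revoked = True
    return certified and not revoked and not key_revoked

ledger = [Entry("cert", "CP1", "x1"), Entry("cert", "CP1", "x2"), Entry("cert", "CP2", "x3")]
print(credential_valid(ledger, "x1", "CP1"))  # True
ledger.append(Entry("revoke-cert", "CP1", "x1"))
print(credential_valid(ledger, "x1", "CP1"))  # False: single credential revoked
ledger.append(Entry("revoke-key", "CP1"))
print(credential_valid(ledger, "x2", "CP1"))  # False: CP1's signing key revoked
print(credential_valid(ledger, "x3", "CP2"))  # True
```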

We assume that each certification provider and authentication provider has a distinct signing key for credentials representing each possible policy attribute, and we further assume that each possible policy attribute admits an anonymity set large enough not to identify the user, as described in section 5.1. A policy might consist of the union of a set of attributes, and because users could present arbitrary subsets of the attributes to authentication providers and service providers, we believe that in most cases it would not be practical to structure policy attributes in such a manner that one attribute represents a qualification or restriction of another. Additionally, at a system level, authentication providers and service providers must not require a set of attributes, either from the same issuer or from different issuers, whose combination would restrict the anonymity set to an extent that would potentially reveal the identity of the user.

The proposed approach can also be adapted to work offline, specifically when a user does not have access to an Internet-connected authentication provider at the time that it requests a service from a service provider. This situation applies to two cases: first, in which the authentication provider has only intermittent access to its distributed ledger peers (perhaps because the authentication provider has only intermittent access to the Internet), and second, in which the user does not have access to the authentication provider (perhaps because the user does not have access to the Internet) at the time that it requests a service.

In the first case, note that the use of a distributed ledger allows the authentication provider to avoid the need to send a query in real-time6. If the authentication provider is disconnected from the network, then it can use its most recent version of the distributed ledger to check for revocation. If the authentication provider is satisfied that the record is sufficiently recent, then it can sign the record with a key that is frequently rotated to indicate its timeliness, which we shall denote by APT. We presume that APT is irrevocable but valid for a limited time only. If the authentication provider is disconnected from its distributed ledger peers but still connected to the network with the service provider, then it can still sign a nonce from the service provider as usual.

In the second case, however, although the user is disconnected from the network, the service provider still requires an indication of the timeliness of the authentication provider's signature. The generalized solution is to adapt the operating phase of the protocol as illustrated by Figure 8C. Here, we assume that the user knows in advance that she intends to request a service at some point in the near future, so she sends the request to the authentication provider pre-emptively, along with a one-time identifier ui (3). Then, the authentication provider verifies the identifier via the ledger and signs the one-time identifier ui with the time-specific key APT (4). Later, when the service provider requests authorization (5), the user responds with the signed one-time identifier that it had obtained from the authentication provider (6). In this protocol, the service provider also has a new responsibility, which is to keep track of one-time identifiers to ensure that there is no duplication.
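A minimal Python sketch of the offline variant in Figure 8C follows. It assumes two hard-coded toy RSA keys standing in for successive rotations of APT (both insecure, built from published primes), represents the validity window as an integer epoch, and gives the service provider a set of already-redeemed one-time identifiers to enforce the no-duplication rule. All names are hypothetical.

```python
from __future__ import annotations

import hashlib
import secrets

def make_key(p: int, q: int, e: int = 65537) -> tuple[int, int, int]:
    """Toy RSA key (n, e, d) from known primes: INSECURE, illustration only."""
    return p * q, e, pow(e, -1, (p - 1) * (q - 1))

# Hypothetical rotating AP_T keys, one per validity epoch.
AP_KEYS = {0: make_key(2**61 - 1, 2**89 - 1), 1: make_key(2**107 - 1, 2**127 - 1)}

def h(data: bytes, n: int) -> int:
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % n

def ap_presign(u: bytes, epoch: int) -> tuple[int, int]:
    """(3)-(4): AP checks the ledger (elided) and signs ui with the epoch key."""
    n, _, d = AP_KEYS[epoch]
    return epoch, pow(h(u, n), d, n)

class ServiceProvider:
    def __init__(self, current_epoch: int) -> None:
        self.current_epoch = current_epoch
        self.seen: set[bytes] = set()  # one-time identifiers already redeemed

    def accept(self, u: bytes, token: tuple[int, int]) -> bool:
        epoch, sig = token
        if epoch != self.current_epoch or u in self.seen:
            return False  # expired AP_T or duplicated identifier
        n, e, _ = AP_KEYS[epoch]  # only the public part (n, e) is used here
        if pow(sig, e, n) != h(u, n):
            return False
        self.seen.add(u)
        return True

u = secrets.token_bytes(16)                 # user's one-time identifier ui
token = ap_presign(u, epoch=0)              # obtained while the user is online
sp = ServiceProvider(current_epoch=0)       # later, with the user offline
print(sp.accept(u, token))                  # True
print(sp.accept(u, token))                  # False: duplicate
print(ServiceProvider(1).accept(u, token))  # False: AP_T has expired
```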

Unfortunately, the architecture described in sections 5.2 and 5.3 has an important weakness as a result of its reliance on the revocation of user credentials. Because the credential that a certification provider posts to the ledger is specifically identified by the user at the time that the user asks the authentication provider to verify the credential, the certification provider may collude with individual authentication providers to determine when a user makes such requests. Even within the context of the protocol, an unscrupulous (or compromised, or coerced) certification provider may post revocation messages for all of the credentials associated with a particular user, hence linking them to each other.

For this reason, we recommend modifying the protocol to prevent this attack by using blinded credentials to improve its metadata-resistance. Figure 9 shows how this would work. Rather than sending the public keys xi directly to the certification provider, the user sends blinded public keys [xi], one for each of a series of specific, agreed-upon time intervals (1), which the certification provider signs using a blind signature scheme that does not allow revocation. The certification provider would not sign all of the public keys and publish the certificates to the ledger immediately; instead, it would sign them and post the certificates to the ledger at the start of each time interval i, in each instance signing the user keys with a key of its own specific to that time interval, CPi (2). If a user expects to make multiple transactions per time interval and desires those transactions to remain unlinked from each other, the user may send multiple keys for each interval.

Figure 9. A schematic representation of a metadata-resistant decentralized identity architecture. This version of the design represents our recommendation for a generalized identity architecture. By writing only blinded credentials to the ledger, this version extends the design shown in Figure 8 to resist an attack in which the certification provider can expose linkages between different credentials associated with the same user. Diagrams (A,B) show the setup and operating phases, analogously to the online example shown in Figure 8; Diagram (C) shows the corresponding offline variant.

When the time comes for the user to request a service, the user must demonstrate that it is the owner of the private key corresponding to a (blinded) public key that had been signed by the certification provider. So the user must first obtain the set of all certificates signed by CPi, which it can obtain from the authentication provider via a specific request, request-certs. Then it can find the blind signature on [xi] in the list and unblind the signature to reveal CPi(xi). It can then send this signature to the authentication provider along with its proof of ownership of the corresponding private key, as before.
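The request-certs step can be sketched in Python under stated assumptions: CPi is a textbook RSA key with toy, insecure parameters; each certificate on the ledger is assumed to be the pair of a blinded key [xi] and the CP's blind signature on it; and 99 random values stand in for other users' submissions.

```python
from __future__ import annotations

import hashlib
import math
import secrets

# Toy RSA key CP_i for one time interval i: INSECURE, illustration only.
P, Q = 2**61 - 1, 2**89 - 1
N, E = P * Q, 65537
D = pow(E, -1, (P - 1) * (Q - 1))

def h(pk: bytes) -> int:
    return int.from_bytes(hashlib.sha256(pk).digest(), "big") % N

def blind(m: int) -> tuple[int, int]:
    while True:
        r = secrets.randbelow(N - 2) + 2
        if math.gcd(r, N) == 1:
            return (m * pow(r, E, N)) % N, r

# Setup (1): the user, among many others, submits a blinded public key.
my_pk = secrets.token_bytes(32)  # stands in for the user's public key xi
my_blinded, my_r = blind(h(my_pk))
others = [blind(h(secrets.token_bytes(32)))[0] for _ in range(99)]

# (2): at the start of interval i the CP signs every blinded key with CP_i and
# posts (blinded key, blind signature) pairs to the ledger in shuffled order.
posted = [(b, pow(b, D, N)) for b in others + [my_blinded]]
secrets.SystemRandom().shuffle(posted)

# request-certs: the user downloads every certificate signed by CP_i,
# finds the one on her own blinded key, and unblinds it to obtain CP_i(xi).
certs = dict(posted)
cp_sig = (certs[my_blinded] * pow(my_r, -1, N)) % N
print(pow(cp_sig, E, N) == h(my_pk))  # True
```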

This version of the protocol is the one that we recommend for most purposes. Although the request-certs exchange might require the user to download a potentially large number of certificates, such a requirement would hopefully indicate a large anonymity set. In addition, there may be ways to mitigate the burden associated with the volume of certificates loaded by the client. For example, we might assume that the service provider offers a high-bandwidth internet connection that allows the user to request the certificates anonymously from an authentication provider. Alternatively, we might consider having the certification provider subdivide the anonymity set into smaller sets using multiple well-known public keys rather than a single CPi, or we might consider allowing an interactive protocol between the user and the authentication provider in which the user voluntarily opts to reduce her anonymity set, for example by specifying a small fraction of the bits in [xi] as a way to request only a subset of the certificates.
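The last mitigation, revealing a few bits of [xi], trades privacy for bandwidth in a predictable way: if the blinded keys are close to uniformly distributed, revealing k bits shrinks both the download and the anonymity set by a factor of about 2^k. The Python sketch below illustrates this with random 256-bit integers standing in for blinded keys; `matching_subset` is a hypothetical helper.

```python
from __future__ import annotations

import secrets

def matching_subset(posted: list[int], prefix: int, k: int, bits: int) -> list[int]:
    """Return the blinded keys whose top k bits (of a bits-wide value) equal prefix."""
    return [b for b in posted if b >> (bits - k) == prefix]

BITS = 256
posted = [secrets.randbits(BITS) for _ in range(4096)]  # stand-ins for [xi] values
mine = posted[0]
for k in (0, 4, 8):
    subset = matching_subset(posted, mine >> (BITS - k), k, BITS)
    # Roughly 4096 / 2**k keys remain; the user's own key is always among them.
    print(k, len(subset), mine in subset)
```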

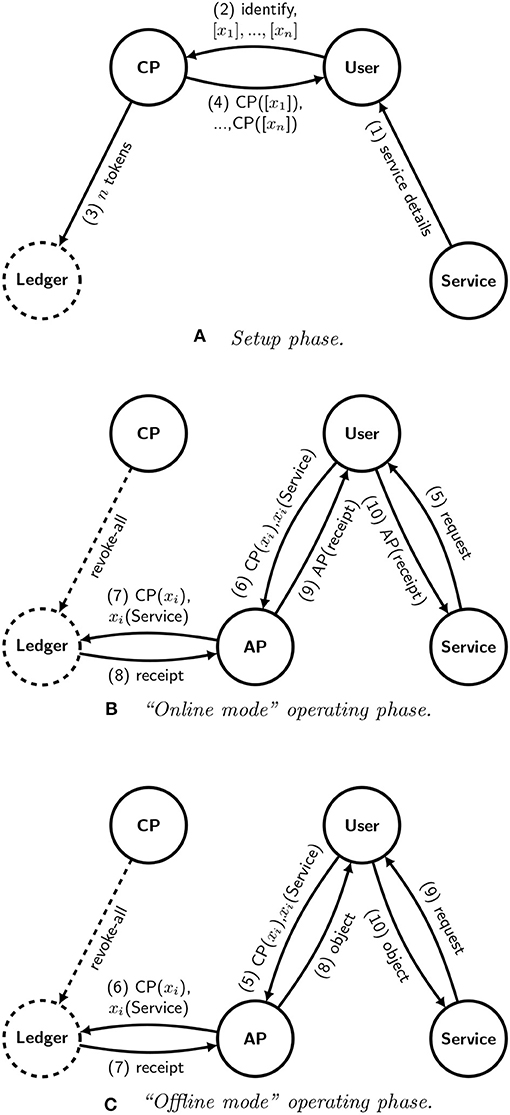

The architecture defined in section 5.4 can also be adapted to allow users to spend tokens on a one-time basis. This option may be of particular interest for social and humanitarian services, in which tokens to be used to purchase food, medicine, or essential services may be issued by a government authority or aid organization to a community at large. In such cases, the human rights constraints are particularly important. Figure 10 shows how a certification provider might work with an issuer of tokens to issue spendable tokens to users, who may in turn represent themselves or organizations.

Figure 10. A schematic representation of a decentralized identity architecture for exchanging tokens. The protocol represented in this figure can be used to allow users to pay for services privately. Diagrams (A,B) show the setup and operating phases, analogous to the online example shown in Figures 8 and 9; Diagram (C) shows the corresponding offline variant.

Figure 10A shows the setup phase. We assume that the service provider first tells the user that it will accept tokens issued by the certification provider (1). The user then sends a set of n newly generated, blinded public keys, along with any required identity or credential information, to the certification provider (2). We assume that the tokens are intended to be fungible, so the certification provider issues n new, fungible tokens on the ledger (3). We need not specify the details of how message (3) works in practice; depending upon the trust model for the ledger, it may be as simple as signing a statement incrementing the value associated with the certification provider's account, or it may be a request to move tokens explicitly from one account to another. Then, the certification provider signs a set of n messages, each containing one of the blinded public keys, and sends them to the user (4). These messages function as promissory notes for control over the tokens, redeemable by the user that generated the keys.
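The setup phase of Figure 10A can be sketched as follows, again as a minimal illustration under stated assumptions: RSA blind signatures with toy parameters stand in for the certification provider's signature scheme, a dictionary of balances stands in for the ledger, and random bytes stand in for the user's newly generated public keys. The class and function names are hypothetical.

```python
import hashlib
import math
import secrets

# Toy RSA key for the certification provider (assumption: RSA blind
# signatures; parameters far too small for real use).
P, Q, E = 2**61 - 1, 2**89 - 1, 65537
N = P * Q
D = pow(E, -1, (P - 1) * (Q - 1))


class Ledger:
    """Hypothetical ledger holding fungible token balances per account."""

    def __init__(self) -> None:
        self.balances = {}  # account name -> number of tokens

    def issue(self, account: str, amount: int) -> None:
        # (3) e.g. a signed statement incrementing the issuer's own balance
        self.balances[account] = self.balances.get(account, 0) + amount


def to_int(pubkey: bytes) -> int:
    """Map an encoded public key to an integer modulo N."""
    return int.from_bytes(hashlib.sha256(pubkey).digest(), "big") % N


def blind(m: int) -> tuple[int, int]:
    """Return (m * r^E mod N, r) for a random r coprime to N."""
    while True:
        r = secrets.randbelow(N - 2) + 2
        if math.gcd(r, N) == 1:
            return (m * pow(r, E, N)) % N, r


def setup_phase(ledger: Ledger, n: int) -> list[tuple[bytes, int]]:
    """Run steps (2)-(4) for n tokens; return the user's (key, note) pairs."""
    # (2) the user generates n fresh public keys (random bytes stand in for
    # real keys) and sends only their blinded forms to the CP
    keys = [secrets.token_bytes(32) for _ in range(n)]
    blinded = [blind(to_int(k)) for k in keys]
    # (3) the CP issues n fungible tokens into its own ledger account
    ledger.issue("CP", n)
    # (4) the CP signs each blinded key; these are the promissory notes
    blind_notes = [pow(b, D, N) for b, _ in blinded]
    # the user unblinds each note so it can later be redeemed for one token
    return [(k, (s * pow(r, -1, N)) % N) for k, s, (_, r) in zip(keys, blind_notes, blinded)]


ledger = Ledger()
notes = setup_phase(ledger, 3)
```

The certification provider sees only blinded values, so the notes it later encounters on the ledger cannot be matched to the issuance request that produced them.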

Figure 10B shows the operating phase. When a service provider requests a token (5), the user sends a message to an authentication provider demonstrating both that it has the right to control the token issued by the certification provider and that it wishes to sign the token over to the service provider (6). The authentication provider, who never learns identifying information about the user, lodges a transaction on the ledger that assigns the rights associated with the token to the service provider (7), generating a receipt (8). Once the transaction is complete, the authentication provider shares the receipt with the user (9), who may then share it with the service provider (10); the service provider may then accept the payment as complete.
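The operating phase of Figure 10B can be sketched similarly. In this hypothetical version, the ledger records redeemed notes so that each promissory note moves exactly one token, and the receipt is, by assumption, a SHA-256 digest of the recorded transaction. For brevity the sketch signs the user's key directly, which yields the same signature the user would obtain by unblinding, and it omits the user's proof of control over the corresponding private key; all names are illustrative.

```python
import hashlib
import json

# Toy RSA key for the certification provider (assumption; far too small
# for real use). Blinding is omitted: it yields the same final signature.
P, Q, E = 2**61 - 1, 2**89 - 1, 65537
N = P * Q
D = pow(E, -1, (P - 1) * (Q - 1))


def to_int(pubkey: bytes) -> int:
    """Map an encoded public key to an integer modulo N."""
    return int.from_bytes(hashlib.sha256(pubkey).digest(), "big") % N


class Ledger:
    """Hypothetical ledger: token balances plus a set of redeemed notes."""

    def __init__(self, balances: dict[str, int]) -> None:
        self.balances = dict(balances)
        self.redeemed = set()  # notes that have already moved a token
        self.log = []          # recorded transactions, in order

    def transfer(self, src: str, dst: str, note: int) -> str:
        """(7) Move one token from src to dst; (8) return a receipt digest."""
        if note in self.redeemed:
            raise ValueError("note already redeemed")
        if self.balances.get(src, 0) < 1:
            raise ValueError("insufficient tokens")
        self.balances[src] -= 1
        self.balances[dst] = self.balances.get(dst, 0) + 1
        self.redeemed.add(note)
        tx = {"seq": len(self.log), "from": src, "to": dst, "note": note}
        self.log.append(tx)
        return hashlib.sha256(json.dumps(tx, sort_keys=True).encode()).hexdigest()


def authentication_provider(ledger: Ledger, pubkey: bytes, note: int, payee: str) -> str:
    """(6) Check the CP's signature on the user's key, then lodge the transfer.

    The user's proof of control over the private key is omitted here.
    """
    if pow(note, E, N) != to_int(pubkey):
        raise ValueError("invalid promissory note")
    return ledger.transfer("CP", payee, note)


ledger = Ledger({"CP": 2})
user_key = b"hypothetical public key generated by the user"
note = pow(to_int(user_key), D, N)  # equals the unblinded CP signature
receipt = authentication_provider(ledger, user_key, note, "service-provider")
print("receipt:", receipt)
```

The authentication provider handles only the key and the note, never identifying information, which matches the role described in step (6).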

Like the “main” architecture described in section 5.4, the “token” architecture can also be configured to work in an offline context by modifying the operating phase. Figure 10C shows how this would work. The user requests from the authentication provider one or more “objects” that can be redeemed for services from the service provider (5); these may take the form of non-transferable electronic receipts or physical tokens. The authentication provider sends the objects to the user (8), who then redeems them with the service provider in a future interaction (9, 10).

An important challenge that remains with the distributed ledger system described in section 5 is the management of the organizations that participate in the consensus mechanism of the distributed ledger. We believe that this will require the careful coordination of local businesses and cooperatives to ensure that the system itself does not impose any non-consensual trust relationships (Constraint 4), that no single market participant would gain dominance (Constraint 6), and that participating businesses and cooperatives will be able to continue to establish their own business practices and trust relationships on their own terms (Constraint 7), even while consenting to the decisions of the community of organizations participating in the shared ledger. We believe that our approach will be enhanced by the establishment of a multi-stakeholder process to develop the protocols by which the various parties can interact, including but not limited to those needed to participate in the distributed ledger, and that such a process would ultimately facilitate a multiplicity of different implementations of the technology needed to participate. Industry groups and regulators will still need to address the important questions of determining the rules and the participants. We surmise that various organizations, ranging from consulting firms to aid organizations, would be positioned to offer guidance to community participants in the network without imposing new constraints.

The case for a common, industry-wide platform for exchanging critical data via a distributed ledger is strong. Analogous mechanisms have been successfully deployed in the financial industry. Some established mechanisms take the form of centrally-managed cooperative platforms such as SWIFT (Society for Worldwide Interbank Financial Telecommunication, 2018), which securely carries messages on behalf of financial market participants, while others take the form of consensus-based industry standards, such as the Electronic Data Interchange (EDI) standards promulgated by X12 (The Accredited Standards Committee, 2018) and EDIFACT (United Nations Economic Commission for Europe, 2018). Distributed ledgers such as Ripple (2019) and Hyperledger (IBM, 2019) have been proposed to complement or replace the existing mechanisms.

For the digital identity infrastructure, we suggest that the most appropriate application for the distributed ledger system described in section 5 would be a technical standard for business transactions promulgated by a self-regulatory organization working in concert with government regulators. A prime example from the financial industry is best execution, exemplified by Regulation NMS (Securities and Exchange Commission, 2005), which led to the dismantling of a structural monopoly in electronic equities trading in the United States⁷. Although the US Securities and Exchange Commission had held the authority to compel exchanges to participate in a national market system since 1975, it was not until 30 years later that the SEC moved to explicitly address the monopoly enjoyed by the New York Stock Exchange (NYSE). The Order Protection Rule imposed by the 2005 regulation (Rule 611) “requir[ed] market participants to honor the best prices displayed in the national market system by automated trading centers, thus establishing a critical linkage framework” (Securities and Exchange Commission Historical Society, 2018). The monopoly was broken, NYSE member firms became less profitable, and NYSE was ultimately bought by Intercontinental Exchange in 2013 (Reuters, 2018).

We believe that distributed ledgers offer a useful mechanism by which self-regulatory organizations can satisfy regulations precisely intended to prevent the emergence of control points, market concentration, or systems whose design reflects a conflict of interest between their operators and their users.

An important expectation implicit in the design of our system is that users can establish and use as many identities as they want, without restriction. This means not only that a user can choose which credentials to show to relying parties, but also that a user would not be expected to bind the credentials to each other in any way prior to their use. Such a binding would violate Constraint 5 from section 3. In particular, given two credentials, there should be no way to know or prove that they were issued to the same individual or device. This property is not shared by some schemes for non-transferable anonymous credentials that encourage users to bind their credentials to each other via a master key or similar mechanism (Camenisch and Lysyanskaya, 2001), as described in section 2.2.

If it were possible to prove that two or more credentials were associated with the same identity, then an individual could be forced to bind a set of credentials together inextricably. Even if the individual were given the option to reveal only a subset of his or her credentials to a service provider at any given time, the individual might still be compelled to reveal the linkages between two or more credentials. For example, the device that an individual uses might be compromised, revealing the master key directly, which would be problematic if the same master key were used for many or all of the individual's credentials. Alternatively, the individual might be coerced to prove that the same master key had been associated with two or more credentials.

The system we describe explicitly does not seek to rely upon the ex ante binding together of credentials to achieve non-transferability or for any other purpose. We suggest that the specific desiderata and requirements for non-transferability might vary across use cases and can be addressed accordingly. Exogenous approaches to achieve non-transferability might have authentication providers require users to store credentials using trusted escrow services or physical hardware with strong counterfeiting resistance such as two-factor authentication devices. Endogenous approaches might have authentication providers record the unblinded signatures on the ledger once they are presented for inspection, such that multiple uses of the same credential become explicitly bound to each other ex post or are disallowed entirely. Recall that the system assumes that credentials are used once only and that certification providers would generate new credentials for individuals in limited batches, for example at a certain rate over time.
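The endogenous approach in the paragraph above can be sketched as a log of presented signatures. The `PresentationLog` class below is hypothetical and stands in for records on the shared ledger: depending on the chosen policy, it either rejects a second presentation of the same unblinded signature or accepts it and records the linkage ex post.

```python
from collections import defaultdict


class PresentationLog:
    """Hypothetical ledger record of unblinded signatures shown to
    authentication providers, keyed by signature value."""

    def __init__(self, policy: str = "disallow") -> None:
        if policy not in ("disallow", "link"):
            raise ValueError("policy must be 'disallow' or 'link'")
        self.policy = policy
        self.uses = defaultdict(list)  # signature -> contexts where it was shown

    def present(self, signature: int, context: str) -> bool:
        """Record one presentation; return False if the policy rejects it."""
        if self.policy == "disallow" and self.uses[signature]:
            return False  # a second use of a one-time credential
        self.uses[signature].append(context)
        return True

    def linked_contexts(self, signature: int) -> list[str]:
        """Contexts bound together ex post because they share one signature."""
        return list(self.uses.get(signature, []))


strict = PresentationLog("disallow")
print(strict.present(1234, "library"), strict.present(1234, "pharmacy"))
```

Under either policy, credentials that were never presented together remain unrelated; only reuse of the same credential creates a link, and only after the fact.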

We anticipate that there might be many potential use cases for a decentralized digital identity infrastructure that affords users the ability to manage the linkages among their credentials. Table 3 offers one view of how the use cases might be divided into four categories according to whether the purpose is to assert entitlements or to spend tokens, and whether the services in question are operated by the public sector or the private sector. Use cases that involve asserting entitlements might include asserting membership in a club for the purpose of gaining access to facilities, accessing restricted resources, or demonstrating eligibility for a discount at the point of sale, perhaps on the basis of age, disability, or financial hardship. Use cases that involve spending tokens can potentially be disruptive, particularly in areas that generate personally identifiable information. We imagine that a decentralized digital identity infrastructure that achieves the privacy requirements, whether general-purpose or not, would be deployed incrementally. We suggest that the following three use cases might be particularly appropriate places to start because of their everyday nature:

1. Access to libraries. Public libraries are particularly sensitive to risks associated with surveillance (Zimmer and Tijerina, 2018). The resources of a public library are the property of its constituency, and its users have entitlements with specific limits on time and quantity. Entitlement levels could be managed by having the issuer use a different signing key for each entitlement. User limits could be enforced in several ways. One method involves requiring a user to make a security deposit that is released once a resource has been returned and determined to be suitable for recirculation. Another method involves requiring the library to check the ledger, as a precondition for providing the resource, to verify that a one-time credential has not already been used, and requiring the user to purchase the right to a one-time credential that can be re-issued only upon return of the resource.

2. Public transportation. It is possible to learn the habits, activities, and relationships of individuals by monitoring their trips in an urban transportation system, and the need for a system-level solution has been recognized (Heydt-Benjamin, 2006). Tokens for public transportation (for example, pay-as-you-go or monthly bus tickets) could be purchased with cash in a single transaction and then spent over time, with each trip unlinkable to the others. This can be achieved by having an issuer produce a set of one-time-use blinded tokens in exchange for cash and having the user present one token for each subsequent trip. Time-limited services such as monthly travel passes could be issued in bulk, each carrying a signature with a fixed expiration date shared by the whole batch, so that the batch provides a sufficiently large anonymity set. An issuer could also create tokens that might be used multiple times, subject to the proviso that trips for which the same token is used could be linked.

3. Wireless data service plans. Currently, many mobile devices such as phones contain unique identifiers that are linked to accounts by service providers and can be identified when devices connect to cellular towers (GSM Association, 2019). However, it is not technically necessary for service providers to know the particular towers to which a specific customer is connecting. For the data service business to be tenable, we suggest that what service providers really need is a way to know that their customers have paid. Mobile phone service subscribers could have their devices present blinded tokens, obtained from issuers following purchases at sales offices or via subscription plans, to cellular towers without revealing their specific identities, thus allowing them to avoid tracking over extended periods of time. Tokens might be valid for a limited period, such as an hour, and a customer would present a token to receive service for that period. System design considerations would presumably include tradeoffs between the degree of privacy and the efficiency of mobile handoff between towers or time periods.
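The two library mechanisms in item 1 above, per-entitlement signing keys and a ledger check for one-time credentials that are re-issued on return, can be sketched as follows. This is a hypothetical illustration: HMAC-SHA256 stands in for the issuer's publicly verifiable blind signature purely to keep the sketch short (with HMAC, the verifier would need the issuer's key), a Python set stands in for the ledger, and the entitlement levels are invented.

```python
import hashlib
import hmac
import secrets

# One signing key per entitlement level (hypothetical levels). HMAC-SHA256 is
# a stand-in for the issuer's publicly verifiable (blind) signature.
ENTITLEMENT_KEYS = {level: secrets.token_bytes(32) for level in ("standard", "extended")}


def _tag(level: str, serial: bytes) -> bytes:
    return hmac.new(ENTITLEMENT_KEYS[level], serial, hashlib.sha256).digest()


def issue_credential(level: str) -> tuple[str, bytes, bytes]:
    """Issuer: a one-time credential is (level, random serial, tag)."""
    serial = secrets.token_bytes(16)
    return level, serial, _tag(level, serial)


class Library:
    def __init__(self) -> None:
        self.used = set()    # stands in for the ledger of spent serials
        self.on_loan = {}    # item -> entitlement level used to borrow it

    def lend(self, item: str, credential: tuple[str, bytes, bytes]) -> bool:
        """Lend only against a valid, not-yet-used one-time credential."""
        level, serial, tag = credential
        if level not in ENTITLEMENT_KEYS or serial in self.used:
            return False
        if not hmac.compare_digest(tag, _tag(level, serial)):
            return False
        self.used.add(serial)
        self.on_loan[item] = level
        return True

    def return_item(self, item: str) -> tuple[str, bytes, bytes]:
        """On return, re-issue a fresh one-time credential of the same level."""
        return issue_credential(self.on_loan.pop(item))
```

Because the re-issued credential carries a fresh random serial, the library can enforce the limit without linking the next loan to the previous one.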
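For the public transportation case in item 2 above, the following sketch shows the trade-off for multi-use passes. It assumes, hypothetically, that the issuer signs every pass in a batch under a key tied to the batch's expiry date, so a gate learns only that date, which the whole batch shares. Repeated use of one pass, however, leaves repeated identifiers in the gate's log. HMAC-SHA256 again stands in for a publicly verifiable signature, and all names are illustrative.

```python
import datetime as dt
import hashlib
import hmac
import secrets

# Hypothetical: one issuer key per expiry date, so every pass in a monthly
# batch is signed under the same key and a gate learns only that date.
# HMAC-SHA256 stands in for a publicly verifiable blind signature.
EXPIRY_KEYS: dict[dt.date, bytes] = {}


def issue_passes(expiry: dt.date, count: int) -> list[tuple[dt.date, bytes, bytes]]:
    """Issue a batch of passes that all share one expiry date."""
    key = EXPIRY_KEYS.setdefault(expiry, secrets.token_bytes(32))
    passes = []
    for _ in range(count):
        pass_id = secrets.token_bytes(16)
        passes.append((expiry, pass_id, hmac.new(key, pass_id, hashlib.sha256).digest()))
    return passes


def gate_accepts(travel_pass: tuple[dt.date, bytes, bytes], today: dt.date,
                 trip_log: list[bytes]) -> bool:
    """Accept an unexpired, validly signed pass and log its identifier."""
    expiry, pass_id, tag = travel_pass
    key = EXPIRY_KEYS.get(expiry)
    if key is None or today > expiry:
        return False
    expected = hmac.new(key, pass_id, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        return False
    trip_log.append(pass_id)  # repeated entries link trips made with one pass
    return True
```

Issuing one-time tokens instead of a reusable pass would remove the repeated `pass_id` entries, at the cost of issuing one token per trip.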
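For the wireless data case in item 3 above, a sketch of hour-long tokens illustrates the trade-off between privacy and handoff. In this hypothetical design, each token is bound to one hour-long epoch. Handing off between towers within the hour reuses the token, so the network can link those towers, while tokens for different hours carry independent random identifiers (although timing and location may still correlate them). HMAC-SHA256 stands in for the issuer's blind signature, and the epoch numbering is an assumption.

```python
import hashlib
import hmac
import secrets
from collections import defaultdict

# HMAC-SHA256 stands in for the issuer's blind signature (assumption).
ISSUER_KEY = secrets.token_bytes(32)


def _tag(epoch: int, nonce: bytes) -> bytes:
    return hmac.new(ISSUER_KEY, epoch.to_bytes(8, "big") + nonce, hashlib.sha256).digest()


def buy_tokens(first_epoch: int, hours: int) -> list[tuple[int, bytes, bytes]]:
    """One token per hour-long epoch, each with an independent random nonce."""
    tokens = []
    for epoch in range(first_epoch, first_epoch + hours):
        nonce = secrets.token_bytes(16)
        tokens.append((epoch, nonce, _tag(epoch, nonce)))
    return tokens


class Network:
    """Hypothetical operator view: which towers saw which token nonce."""

    def __init__(self) -> None:
        self.sightings = defaultdict(list)  # nonce -> towers, in order

    def attach(self, tower: str, token: tuple[int, bytes, bytes], current_epoch: int) -> bool:
        """Serve a device whose token is valid for the current epoch."""
        epoch, nonce, tag = token
        if epoch != current_epoch or not hmac.compare_digest(tag, _tag(epoch, nonce)):
            return False
        self.sightings[nonce].append(tower)  # handoffs within an epoch are linkable
        return True
```

Shorter epochs would shrink the window in which handoffs are linkable but would require the device to present tokens more often, which is the efficiency cost the text anticipates.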

We do not anticipate or claim that our system will be suitable for all purposes for which an individual might be required to present electronic credentials. We imagine that obtaining security clearances or performing certain duties associated with public office might explicitly require unitary identity. Certain activities related to national security undertaken by ordinary persons, such as crossing international borders, might also fall into this category, although we argue that such use cases must be narrowly circumscribed so that they offer limited surveillance value through record linkage. In particular, linking any strongly non-transferable identifiers or credentials to the identities that individuals use for routine activities (such as social media, for example, or the use cases described above) would specifically compromise the privacy rights of their subjects. Other application domains, such as those involving public health or access to medical records, present specific complications that might require a different design. Certain financial activities would require interacting with regulated financial intermediaries who are subject to AML and KYC regulations, as mentioned in section 4.1. For this reason, achieving privacy for financial transactions might require a different approach that operates with existing financial regulations (Goodell and Aste, 2019).

We argue that the ability of individuals to create and maintain multiple unrelated identities is a fundamental, inalienable human right. For the digital economy to function while supporting this human right, individuals must be able to control and limit what others can learn about them over the course of their many interactions with services, including government and institutional services.