
ORIGINAL RESEARCH article

Front. Bioeng. Biotechnol., 04 May 2023

Sec. Biomechanics

Volume 11 - 2023 | https://doi.org/10.3389/fbioe.2023.1139405

Alexandra A. Portnova-Fahreeva1*, Fabio Rizzoglio2, Maura Casadio3, Ferdinando A. Mussa-Ivaldi1,2, Eric Rombokas4,5

Dimensionality reduction techniques have proven useful in simplifying complex hand kinematics. They may allow a low-dimensional kinematic or myoelectric interface to be used to control a high-dimensional hand. Controlling a high-dimensional hand, however, is difficult to learn, since the relationship between the low-dimensional controls and the high-dimensional system can be hard to perceive. In this manuscript, we explore how training practices that make this relationship more explicit can aid learning. We outline three studies that explore different factors that affect learning of an autoencoder-based controller, in which a user operates a high-dimensional virtual hand via a low-dimensional control space. We compare computer mouse and myoelectric control as one factor contributing to learning difficulty. We also compare training paradigms in which the dimensionality of the training task matched or did not match the true dimensionality of the low-dimensional controller (both 2D). The training paradigms were a) a full-dimensional task, in which the user was unaware of the underlying controller dimensionality, b) an implicit 2D training, which allowed the user to practice on a simple 2D reaching task before attempting the full-dimensional one, without establishing an explicit connection between the two, and c) an explicit 2D training, during which the user was able to observe the relationship between their 2D movements and the higher-dimensional hand. We found that operating a myoelectric interface did not pose a major challenge to learning the low-dimensional controller and was not the main reason for the poor performance. Implicit 2D training was found to be as good as, but not better than, training directly on the high-dimensional hand. What truly aided the users' ability to learn the controller was the 2D training that established an explicit connection between the low-dimensional control space and the high-dimensional hand movements.

With 27 degrees of freedom (DOFs) operated by 34 muscles, human hands are complex in both their structure and control. As a result, replacing the hand in cases of congenital or acquired amputation can be a challenging task, especially when the number of controlled joints is high (e.g., in very dexterous prostheses) and the number of available control signals (e.g., muscles) is low (Iqbal and Subramaniam, 2018). Many research groups have attempted to account for such dimensionality mismatch with existing dimensionality-reduction (DR) methods.

The most commonly used DR technique in the field of prosthetic control has been principal component analysis (PCA), which creates a low-dimensional (latent) representation of the data by finding the directions in the input space that explain the most variance in the data (Pearson, 1901). In the past, several groups have explored the efficacy of PCA in reducing dimensionality of complex hand kinematics during object grasping and manipulation (Santello et al., 1998; Todorov and Ghahramani, 2004; Ingram et al., 2008; Rombokas et al., 2012). These studies have inspired several research teams to develop a PCA-based controller for operating a prosthetic hand with multiple DOFs via a 2D (latent) space (Magenes et al., 2008; Ciocarlie and Allen, 2009; Matrone et al., 2010; Matrone et al., 2012; Segil, 2013; Segil and Controzzi, 2014; Segil, 2015).
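To make the PCA idea concrete, here is a minimal NumPy sketch that projects 17-dimensional joint-angle vectors onto their first two principal components and reconstructs them. The data are synthetic (two hidden "synergies" mixed into 17 joints plus noise) and the function names are illustrative; this is not the pipeline used in the cited studies.

```python
import numpy as np

def pca_reduce(X, n_components=2):
    """Project rows of X (samples x joints) onto the top principal components."""
    mean = X.mean(axis=0)
    Xc = X - mean                          # center each joint angle
    # Rows of Vt are orthonormal directions, ordered by explained variance.
    _, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    components = Vt[:n_components]         # (n_components, n_joints)
    Z = Xc @ components.T                  # latent (low-dimensional) coordinates
    explained = S**2 / np.sum(S**2)        # fraction of variance per component
    return Z, components, mean, explained[:n_components]

def pca_reconstruct(Z, components, mean):
    """Map latent coordinates back to joint space (a purely linear decoder)."""
    return Z @ components + mean

rng = np.random.default_rng(0)
# Synthetic stand-in for 17-DOF joint angles driven by two hidden synergies.
latent_true = rng.normal(size=(500, 2))
mixing = rng.normal(size=(2, 17))
X = latent_true @ mixing + 0.05 * rng.normal(size=(500, 17))

Z, comps, mu, ev = pca_reduce(X, 2)
X_hat = pca_reconstruct(Z, comps, mu)
print(Z.shape, round(float(ev.sum()), 3))
```

Because the decoder is just `Z @ components + mean`, every latent point maps linearly to a posture; that linearity is exactly the limitation raised in the next paragraph.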

However, PCA is purely linear and therefore captures only linear relationships in the input data; the nonlinear relationships that exist in hand kinematics data are disregarded. A variety of nonlinear DR methods can account for these relationships. In our prior work, we explored the use of a nonlinear autoencoder (AE) to reduce the dimensionality of hand kinematics during American Sign Language (ASL) gesturing, object grasping, and Activities of Daily Living (ADLs) (Portnova-Fahreeva et al., 2020). There, we found that two latent AE dimensions can reconstruct over

With such superior reconstruction performance, nonlinear AEs may serve as a platform for more accurate and dexterous lower-dimensional control of prosthetic hands. As a result of this work, our team has developed a myoelectric interface, in which users were able to control a virtual hand with 17 DOFs with only four electromyographic (EMG) signals (Portnova-Fahreeva et al., 2023 [manuscript in review]). Our preliminary work has shown the potential of AEs to be used to alleviate the issue of dimensionality mismatch in the control of dexterous prosthetic hands.

But is the dimensionality mismatch between device DOFs and control signals the only issue when it comes to myoelectric prosthetic control? Or is the problem at hand (figuratively and actually) more complex?

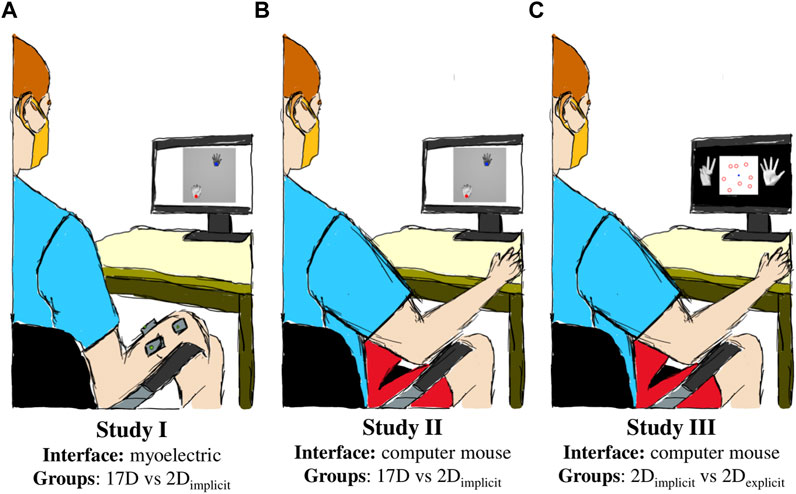

We attempted to answer these questions with three studies in which we trained the participants to perform hand gestures on a virtual hand via the AE-based controller (Figure 1). The studies assessed:

a) to what degree is the difficulty of operating the AE-based controller due to the complexity of operating myoelectric interfaces,

b) whether initial training on a 2D plane that matches the underlying dimensionality of the AE-based controller, without explicitly establishing the connection between 2D reaches and hand kinematics, enhances learning, and

c) whether initial training on a 2D plane, in which the user is explicitly told about the connection between the 2D reaches and full hand gestures, enhances learning.

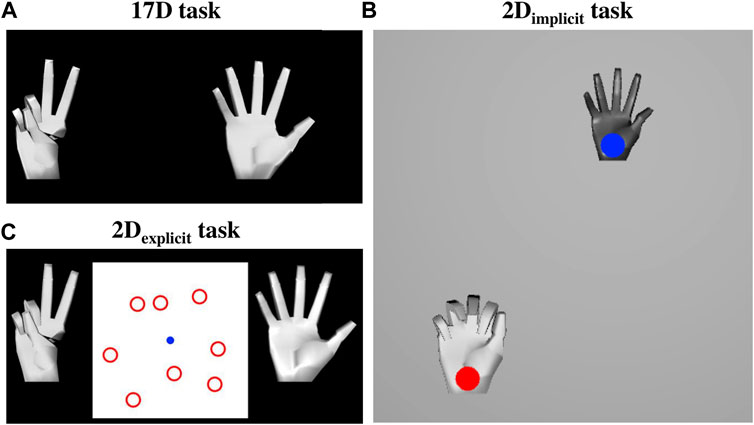

FIGURE 1. Experimental setup. (A) Study I used a myoelectric interface and split the participants into two groups based on training paradigms: 17D and 2Dimplicit. (B) Study II employed a computer mouse interface and compared two groups: 17D and 2Dimplicit. (C) Study III incorporated explicit target-gesture training (2Dexplicit) and a mouse-based interface.

Study I employed a myoelectric interface in which the participants controlled a virtual hand via the AE-based controller by flexing/extending and abducting/adducting their wrist (Figure 1A). It explored how additional implicit training in the form of 2D target reaching affected performance in controlling the 17D virtual hand.

Study II employed a computer mouse interface to control the virtual hand and assessed how much of the difficulty of learning the controller stemmed from the challenge of operating an EMG-based interface (Figure 1B). Like Study I, it also assessed the potential of implicit 2D training to improve performance in operating a 17D virtual hand.

Lastly, Study III explored how much of the difficulty in the learning arose from the user’s inability to establish the connection between the underlying 2D control and the actual 17D virtual hand (Figure 1C). Like Study II, Study III employed a mouse-based interface, but included a modified target-reaching session in 2D to establish an explicit connection for the participants between the dimensionalities of the underlying control and the presented task.

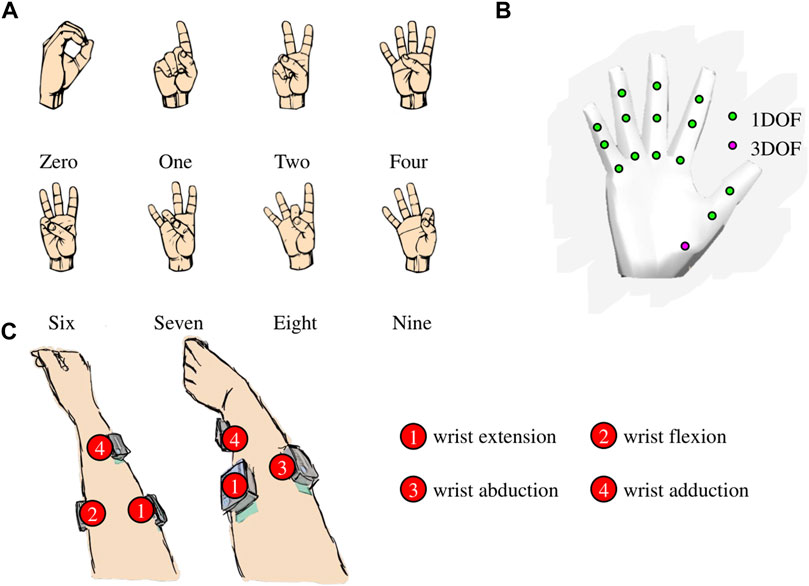

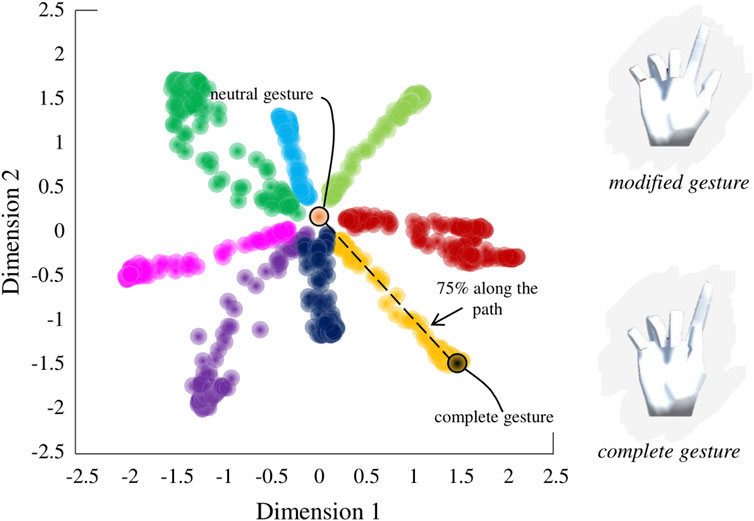

Using the findings of our original study, in which a nonlinear AE was determined to be superior to PCA in compressing and reconstructing complex hand kinematics, we built an AE-based myoelectric controller (Portnova-Fahreeva et al., 2023 [manuscript in review]). A variational AE model was used to first encode, or compress, high-dimensional (17D) kinematic signals recorded during ASL gestures (Figure 2A) into a low-dimensional (2D) space, and then to decode, or reconstruct, back into the original 17D, which corresponded to the joint angles of a virtual hand (Figure 2B).
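The encode/decode cycle of a variational AE can be sketched in a few lines of NumPy. This is a toy forward pass under stated assumptions: a single tanh hidden layer of hypothetical width 32, random untrained weights, and a squared-error reconstruction term. The actual architecture and training details of the controller are described in the cited work, not here.

```python
import numpy as np

rng = np.random.default_rng(1)
D_IN, D_HID, D_LAT = 17, 32, 2   # 17 joint angles -> hidden layer -> 2D latent space

def init_layer(n_in, n_out):
    """Random weights scaled by 1/sqrt(n_in); a trained model would learn these."""
    return rng.normal(scale=1 / np.sqrt(n_in), size=(n_in, n_out)), np.zeros(n_out)

# Hypothetical, untrained parameters.
W1, b1 = init_layer(D_IN, D_HID)
W_mu, b_mu = init_layer(D_HID, D_LAT)
W_lv, b_lv = init_layer(D_HID, D_LAT)
W2, b2 = init_layer(D_LAT, D_HID)
W3, b3 = init_layer(D_HID, D_IN)

def encode(x):
    """Map 17D postures to the mean and log-variance of q(z | x)."""
    h = np.tanh(x @ W1 + b1)
    return h @ W_mu + b_mu, h @ W_lv + b_lv

def reparameterize(mu, logvar):
    """Reparameterization trick: z = mu + sigma * eps with eps ~ N(0, I)."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * logvar) * eps

def decode(z):
    """Map 2D latent points back to 17 joint angles (nonlinear decoder)."""
    h = np.tanh(z @ W2 + b2)
    return h @ W3 + b3

def vae_forward(x):
    """One forward pass: mean loss, latent samples, reconstructions, per-sample KL."""
    mu, logvar = encode(x)
    z = reparameterize(mu, logvar)
    x_hat = decode(z)
    recon = np.sum((x - x_hat) ** 2, axis=1)                          # squared-error reconstruction
    kl = -0.5 * np.sum(1 + logvar - mu**2 - np.exp(logvar), axis=1)  # KL(q(z|x) || N(0, I))
    return np.mean(recon + kl), z, x_hat, kl

x = rng.normal(size=(8, D_IN))        # eight placeholder postures, not real ASL data
loss, z, x_hat, kl = vae_forward(x)

# At control time no sampling is needed: a user-selected 2D point is decoded directly.
posture = decode(np.array([[0.5, -1.0]]))
```

In a controller of this kind, only `decode` runs online: the user's position in the 2D plane is the latent input, and the encoder is needed only during training.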

FIGURE 2. (A) Eight American Sign Language (ASL) gestures that the participants were trained to reproduce during the studies. (B) 17 degrees of freedom (DOFs) of the virtual hand. (C) Surface electrode placement on the participant’s forearm for the myoelectric interface.

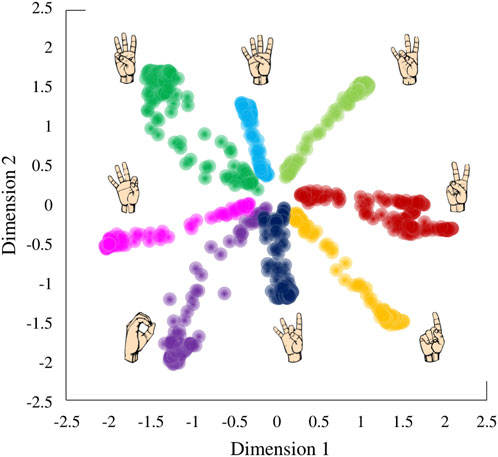

This AE-based controller effectively allowed the user to recreate ASL gestures in a high-dimensional hand simply by navigating a low-dimensional 2D plane (Figure 3). Each point in the control space can be reconstructed into a 17D kinematic signal in the virtual hand.

FIGURE 3. Latent space derived by applying a variational autoencoder to hand kinematics data of an individual performing American Sign Language (ASL) gestures.

The 17 controlled DOFs of the virtual hand were the flexion/extension of the three joints (metacarpophalangeal, proximal interphalangeal, distal interphalangeal) of each of the four fingers (pinky, ring, middle, and index), the flexion/extension of two joints of the thumb (metacarpophalangeal and interphalangeal), and the 3D rotation of the thumb's carpometacarpal joint.

Due to the nature of the AE-based control plane, not all reconstructed hand kinematics were within the natural ranges of motion of a biological hand. To prevent the hand from generating biologically unnatural gestures during control, we limited the ranges of motion of the virtual hand joints to those of an actual hand. If the reconstruction output yielded a value outside the natural range of motion of a hand joint, that joint did not change its angle in the virtual hand. For the purposes of the studies, the hand was defined to be in a neutral gesture when all of its fingers were fully extended (i.e., open hand).
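The DOF count can be checked by enumerating the joints listed above: four fingers with three flexion joints each, two thumb flexion joints, and three rotational DOFs at the thumb's carpometacarpal (CMC) joint. The labels below (MCP for metacarpophalangeal, and the x/y/z names for the CMC rotation axes) are hypothetical identifiers, not the names used in the authors' software.

```python
# Hypothetical labels for the 17 controlled DOFs of the virtual hand.
FINGERS = ["index", "middle", "ring", "pinky"]
FINGER_JOINTS = ["MCP", "PIP", "DIP"]   # flexion/extension only

dofs = [f"{finger}_{joint}_flex" for finger in FINGERS for joint in FINGER_JOINTS]
dofs += ["thumb_MCP_flex", "thumb_IP_flex"]                  # thumb flexion/extension
dofs += [f"thumb_CMC_rot_{axis}" for axis in ("x", "y", "z")]  # 3D rotation of the CMC joint

# 4 fingers x 3 joints + 2 thumb flexions + 3 CMC rotations = 17
print(len(dofs))
```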
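The range-of-motion rule amounts to a per-joint accept-or-hold update: each decoded angle is accepted only if it falls inside that joint's limits, otherwise the joint keeps its previous angle. A minimal NumPy sketch, with made-up limits for three joints; real limits would come from anatomical ranges of motion.

```python
import numpy as np

def apply_joint_limits(prev_angles, decoded_angles, lower, upper):
    """Accept each decoded joint angle only if it lies within [lower, upper];
    out-of-range joints keep their previous angle."""
    decoded = np.asarray(decoded_angles, dtype=float)
    in_range = (decoded >= lower) & (decoded <= upper)
    return np.where(in_range, decoded, prev_angles)

# Illustrative 3-joint example in degrees; the limits are placeholders.
lower = np.array([0.0, 0.0, -20.0])
upper = np.array([90.0, 110.0, 20.0])
prev = np.array([10.0, 20.0, 0.0])
new = np.array([45.0, 130.0, -5.0])     # the second joint exceeds its limit
out = apply_joint_limits(prev, new, lower, upper)   # second joint stays at 20.0
```

This differs from clamping with `np.clip`: a clipped joint would saturate at its limit, whereas under the rule described here an out-of-range joint simply keeps its last valid angle.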

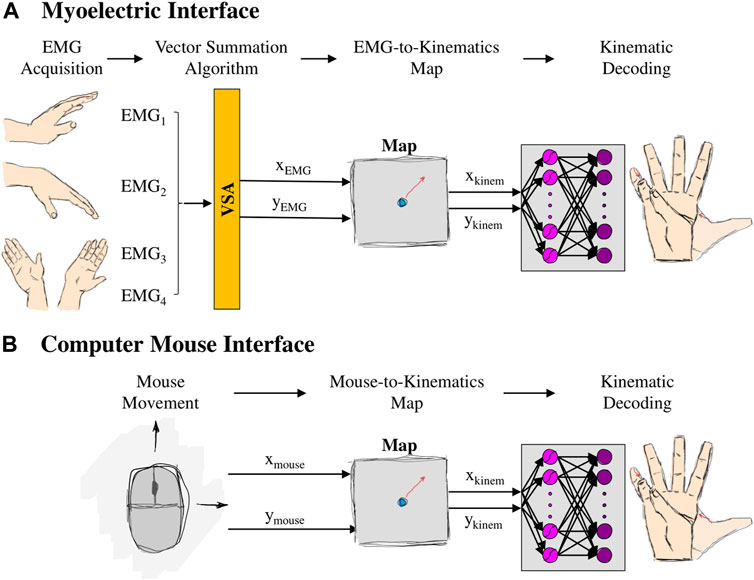

With the myoelectric AE-based controller, the user was able to operate a 17-DOF virtual hand using only four muscle signals (Figure 4A). Here is a general overview of the controller steps:

FIGURE 4. Setup of (A) myoelectric interface and (B) mouse interface. For the myoelectric interface, wrist movements generated EMG signals, which, in turn, were combined using a Vector Summation Algorithm into a 2D vector (

Step 1: four muscle signals were acquired from the user’s forearm (placement shown in Figure 2C), using surface EMG electrodes (Delsys Inc., MA, United States). Electrode 1 was placed approximately 1/3 of the distance between the lateral epicondyle of the elbow and the ulnar styloid process on the anterior side of the forearm. Electrode 2 was placed on the posterior side of the forearm, approximately 1/3 of the distance between the lateral epicondyle of the elbow and the ulnar styloid process, slightly more towards the ulnar side. Electrode 3 was placed on the ulnar side of the forearm, approximately 3 cm to the right of Electrode 1. Electrode 4 was placed on the anterior side of the forearm, approximately ¼ of the way between the radial styloid process and the cubital fossa. Signals from electrodes 1, 2, 3, and 4 were mainly associated with muscle activations during wrist extension, flexion, abduction, and adduction, respectively. A series of standard pre-processing techniques were applied to the raw recordings to extract the EMG envelope (EMG Acquisition).

To calibrate the acquired EMG signals, we used EMG recorded during

Step 2: four calibrated EMG signals were compressed into a 2D control signal such that wrist extension/flexion controlled the vertical position (

Mapping the resting EMG state to the center of the latent space ensured that every trial started from the neutral gesture and that every movement was performed in a center-out reaching manner. In the resting position, the virtual hand had all five fingers fully open.

Step 3: the compressed 2D control signal was mapped to the 2D control space—

Step 4: the point on the control space was reconstructed into 17D kinematics of the virtual hand (Kinematic Decoding).

More on each of these components can be found in our other work (Portnova-Fahreeva et al., 2023 [manuscript in review]).

Relaxing the forearm muscles returned the control position back to the center of the 2D plane, which, in turn, corresponded to the neutral gesture in the virtual hand.
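Steps 1 to 3 of the myoelectric pipeline can be sketched as follows. Several parts are assumptions rather than the authors' implementation: the envelope uses mean removal, full-wave rectification, and a moving-average filter as a generic stand-in for the unspecified "standard pre-processing"; calibration is taken as already done (inputs are zero at rest); the abduction/adduction signs and the window length are guesses; and `gain` is a hypothetical stand-in for the individualized control gain.

```python
import numpy as np

def emg_envelope(raw, fs, window_s=0.1):
    """Rough linear envelope: remove offset, full-wave rectify, moving-average smooth."""
    x = np.asarray(raw, dtype=float) - np.mean(raw)
    rectified = np.abs(x)
    n = max(1, int(window_s * fs))
    kernel = np.ones(n) / n
    return np.convolve(rectified, kernel, mode="same")

# Assumed unit directions for electrodes 1-4, in the order given in Step 1.
DIRECTIONS = np.array([
    [0.0, 1.0],    # electrode 1: wrist extension -> up
    [0.0, -1.0],   # electrode 2: wrist flexion   -> down
    [1.0, 0.0],    # electrode 3: abduction       -> right (assumed sign)
    [-1.0, 0.0],   # electrode 4: adduction       -> left  (assumed sign)
])

def vector_sum(calibrated):
    """Combine four calibrated envelope values (0 at rest) into one 2D vector."""
    return np.asarray(calibrated, dtype=float) @ DIRECTIONS

def to_latent(v, gain, latent_center=(0.0, 0.0)):
    """Scale the 2D vector by a per-participant gain and offset it to the latent center."""
    return np.asarray(latent_center) + gain * np.asarray(v)

# Usage with synthetic data (hypothetical values).
fs = 1000                                    # samples per second
t = np.arange(0, 1, 1 / fs)
raw = 0.5 * np.sin(2 * np.pi * 80 * t)       # stand-in for a raw EMG burst
env = emg_envelope(raw, fs)
v = vector_sum([0.6, 0.1, 0.0, 0.0])         # mostly wrist extension
z = to_latent(v, gain=2.0)                   # moves upward from the latent center
z_rest = to_latent(vector_sum([0, 0, 0, 0]), gain=2.0)   # relaxed muscles -> center
```

Because opposing muscles pull in opposite directions, co-contraction partly cancels, and a fully relaxed forearm lands exactly on the latent center, which is what returns the hand to the neutral gesture.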

In the computer mouse interface, the user was able to operate the virtual hand by clicking and holding the left button and dragging their computer mouse (Figure 4B). Dragging the mouse across the screen, in turn, controlled the position of the controller. Here are the steps of the mouse interface:

Step 1. movement from the computer mouse on a 2D plane was obtained in the following format:

Step 2. the 2D mouse cursor position was directly mapped to the point on the control space –

Step 3. the point on the control space was reconstructed into a 17D gesture of the virtual hand (Kinematic Decoding).

White Gaussian noise (
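A minimal sketch of the mouse-to-control-space mapping, assuming a linear mapping with a fixed gain. The reference pixel that maps to the latent center (`origin_px`) and the pixels-per-latent-unit gain are hypothetical parameters, and the white-noise settings are left out of the sketch.

```python
import numpy as np

def mouse_to_latent(px, py, origin_px, px_per_unit, latent_center=(0.0, 0.0)):
    """Map a dragged cursor position (pixels) to a point in the 2D control space.

    origin_px: hypothetical reference pixel that maps to the latent center
    (i.e., the neutral gesture). px_per_unit: fixed gain in pixels per latent unit.
    Screen y grows downward, so it is negated to make 'up' positive.
    """
    dx = (px - origin_px[0]) / px_per_unit
    dy = -(py - origin_px[1]) / px_per_unit
    return np.array([latent_center[0] + dx, latent_center[1] + dy])

# Dragging 100 px right and 100 px up from the reference point (gain = 100 px/unit).
point = mouse_to_latent(500, 300, origin_px=(400, 400), px_per_unit=100.0)
```

Unlike the myoelectric case, where gains were tuned per participant, a single fixed `px_per_unit` would apply to everyone.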

For the three studies, right-handed unimpaired participants were recruited to learn to control a virtual hand on the screen via the AE-based controller. All participants were naïve to the controller. Participant recruitment and data collection conformed to the requirements of the University of Washington’s Institutional Review Board (IRB). Informed written consent was obtained from each participant prior to the experiment.

No physical constraints were imposed on the participants; they were free to move their right arm while performing the experimental tasks.

During each study, the participants were seated in an upright position in front of a computer screen, at approximately

The participants learned to recreate eight ASL gestures (Figure 2A). Each study was divided into Training and Test phases.

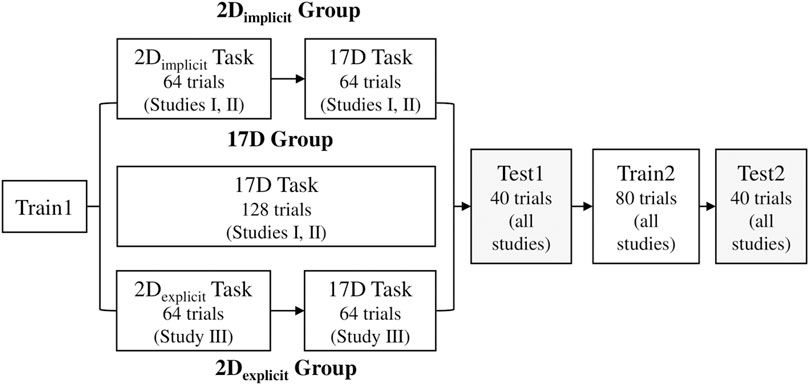

The training phase was divided into two sessions, referred to as Train1 and Train2. The only difference between the learning groups occurred during Train1 session of the studies.

During the 17D task, the participants were presented with only two virtual hands (Figure 5A) and had no visual feedback about the location of the controller on the 2D latent space. The hand on the left was the hand the participants needed to match. The hand on the right was the hand controlled either via a myoelectric or a mouse interface.

FIGURE 5. (A) 17D task setup. The hand on the left was the target hand that the participants needed to match. The hand on the right was the controlled hand that the participants controlled either via the myoelectric or mouse interface. (B) 2Dimplicit task setup. The participants performed simple 2D reaches by controlling a hand with a blue cursor over it. The hand with the red target over it was the hand they needed to match. (C) 2Dexplicit task setup. The participants controlled the blue cursor on the 2D plane, which in turn controlled the 17-DOF hand on the right. The hand on the left was the hand whose gesture they needed to match. The participants were required to learn which of the eight red targets represented the 2D location of the matching gesture.

Each trial always started from a neutral gesture. At the beginning of a new trial, the matching hand would form a new gesture and the participants had

The participants were required to maintain the gesture for

At the end of each trial, successful or not, the participants heard a sound cue asking them to either relax their muscles (myoelectric interface) or release the button (mouse interface), which, in turn, returned the controlled hand back to the neutral gesture. Once the participant was completely relaxed for

During the 2Dimplicit task, the participants engaged in a center-out target-reaching task. They were presented with visual feedback of the cursor that they controlled (via either the myoelectric or the mouse interface) and of the targets that they needed to reach on a 2D plane (Figure 5B). The targets and the cursor were represented by circles of the same size (approximately 0.25″ radius). The targets were placed at various distances from the cursor's central starting position, at the locations of the eight ASL gestures in the latent space.

Grey and white hands were placed over the control cursor and the target, respectively. Both hands showed the reconstructed gestures corresponding to the current positions of the cursor and the target in the latent space. The grey hand was slightly smaller than the white one for ease of differentiation once the two hands overlapped.

Each trial always started from a neutral gesture and the controlled cursor in the center of the plane. The participants were given

The target was successfully reached if the cursor stayed within the acceptable range for

At the end of each trial, successful or not, the participants heard a sound cue asking them to either relax their muscles (myoelectric interface) or release the button (mouse interface), which, in turn, returned the controlled hand back to the neutral gesture. Once the participant was completely relaxed for
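The "stay within the acceptable range for a set time" criterion used in these trials can be expressed as a consecutive-sample hold check. The sketch below assumes a Euclidean distance threshold on the 2D cursor position and uniform sampling; the radius, hold time, and timeout are hypothetical placeholders, since their values are not given in this excerpt. For the 17D task, the same logic would apply with a joint-space tolerance in place of the 2D radius.

```python
import numpy as np

def first_success_time(positions, target, radius, dt, hold_s, timeout_s):
    """Return the time at which the cursor has stayed within `radius` of `target`
    for `hold_s` seconds without leaving, or None if that does not happen
    before `timeout_s`."""
    positions = np.asarray(positions, dtype=float)
    inside = np.linalg.norm(positions - np.asarray(target, dtype=float), axis=1) <= radius
    need = max(1, int(round(hold_s / dt)))   # consecutive in-range samples required
    run = 0
    for i, ok in enumerate(inside):
        t = (i + 1) * dt
        if t > timeout_s:
            break
        run = run + 1 if ok else 0           # leaving the range resets the hold
        if run >= need:
            return t
    return None

# Usage: three samples outside the target, then seven inside (dt = 0.1 s).
traj = [[1.0, 1.0]] * 3 + [[0.02, -0.01]] * 7
t_success = first_success_time(traj, target=[0.0, 0.0], radius=0.1,
                               dt=0.1, hold_s=0.5, timeout_s=10.0)
```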

During the 2Dexplicit task, the participants were always presented with both hands (the matching and the controlled ones). In addition, there was a 2D plane placed between the two virtual hands. The plane was a visual representation of the underlying control space. On the plane, eight red targets were presented at all times, corresponding to the 2D positions of the eight gestures the participants were required to learn to recreate during training. A blue cursor corresponded to the 2D location of their controller, which, in turn, mapped into the 17D hand that the participants observed on the right (Figure 5C).

Each trial always started from a neutral gesture in the controlled hand and the blue cursor in the center of the plane. After hearing a sound cue, the hand on the left showed a new gesture, and the participants had

Neither the blue cursor nor the red targets provided any visual feedback on the correctness of the gesture matching. Only the target and the controlled hands provided visual feedback, by turning yellow (within the acceptable range) or green (successful) throughout the session. This forced the participants to pay attention to the hand gestures as well as to the cursor and target locations on the 2D plane, thus creating a more explicit connection between the 2D planar task and the 17D virtual hand gesture.

At the end of each trial, successful or not, the participants heard a sound cue asking them to either relax their muscles (myoelectric interface) or release the button (mouse interface), which, in turn, returned the controlled hand back to the neutral gesture. Once the participant was completely relaxed for

As with training, the test phase was divided into two sessions, Test1 and Test2. The goal of the test sessions was to determine whether the participants could transfer the skills acquired during training to conditions where they needed to recreate slightly different gestures. In these sessions, the participants were asked to match hand gestures, as in the 17D training task, via either the myoelectric or the mouse interface. No visual feedback about the location of the controller or the target gesture on the 2D plane was given during testing.

After hearing a sound cue, the participants had

FIGURE 6. Sampling of modified gestures from the latent space. Modified gestures were sampled from 75% of the nominal path between the neutral position and the complete gesture on the latent space.
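Assuming the "nominal path" is the straight line in latent space from the neutral point to the full gesture (an assumption; the caption does not define it further), the modified target is a linear interpolation 75% of the way along that path. The resulting 2D point would then be passed through the decoder to obtain the 17D modified gesture.

```python
import numpy as np

def modified_gesture_point(z_neutral, z_gesture, fraction=0.75):
    """Point `fraction` of the way along the straight latent-space segment
    from the neutral gesture to the complete gesture:
    z_mod = z_neutral + fraction * (z_gesture - z_neutral)."""
    z_neutral = np.asarray(z_neutral, dtype=float)
    z_gesture = np.asarray(z_gesture, dtype=float)
    return z_neutral + fraction * (z_gesture - z_neutral)

# Hypothetical latent coordinates: neutral gesture at the center, a target gesture at (2, -1).
z_mod = modified_gesture_point([0.0, 0.0], [2.0, -1.0])
```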

As during training, both hands would turn yellow if the participant was within the acceptable range and green if they successfully matched the gesture. Each trial ended with the same cue to either relax the muscles for

Across the three studies, the participants were assigned to three groups, which differed in the tasks they performed during Train1.

In the 17D group, the dimensionality of the visual feedback during training did not match that of the underlying control interface: the participants performed only the 17D task during Train1.

For the 2Dimplicit group, Train1 was split into the 2Dimplicit task and the 17D task. During the 2Dimplicit portion of training, the dimensionality of the visual feedback matched that of the underlying control interface. We call it implicit 2D training because no explicit explanation was given to the participants on how the target-reaching task related to the 17D task they were later presented with.

Similar to the 2Dimplicit group, the 2Dexplicit group had an initial training on the 2D control space prior to the 17D task. However, the nature of the training task (2Dexplicit task) as well as the instructions given to the participants in this group were such that they could observe the relationship between the cursor movements on the 2D plane and the kinematics of the presented hand. In other words, this group was explicitly instructed on the connection between the underlying dimensionality of the controller and the generated hand gestures.

For this study, we recruited 14 unimpaired right-handed individuals (four males, ten females,

The main difference between the two participant groups was in Train1. The 2Dimplicit group practiced the 2Dimplicit task for the first half of Train1 (64 trials, i.e., eight gestures presented eight times in a pseudo-random order) and switched to the 17D task for the second half of the session (64 trials) (Figure 7). The 17D group performed the 17D task for the entirety of the session (128 trials, i.e., eight gestures repeated

FIGURE 7. Sequence of training and test sessions in each study. The only difference between the groups is in the Train1 session, where the 17D group experiences only the 17D task, while the 2Dimplicit/explicit groups have the session split in half: the 2Dimplicit/explicit task and the 17D task.

During Train2, both groups performed the 17D task, in which the eight ASL gestures were repeated 10 times in a pseudorandom order, for a total of 80 trials per session. A one-minute break was given to the participants after 40 trials.
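The trial counts follow from presenting each of the eight gestures a fixed number of times. The sketch below builds such schedules with block randomization, where every block of eight trials contains each gesture once; this is one common way to produce a pseudo-random order and is an assumption, as are the placeholder gesture labels and seeds.

```python
import random

# Hypothetical labels; the gestures themselves are shown only in Figure 2A.
GESTURES = [f"gesture_{i}" for i in range(1, 9)]

def block_randomized_schedule(gestures, repetitions, seed=None):
    """Concatenate `repetitions` blocks, each presenting every gesture once
    in a freshly shuffled order."""
    rng = random.Random(seed)
    schedule = []
    for _ in range(repetitions):
        block = list(gestures)
        rng.shuffle(block)
        schedule.extend(block)
    return schedule

train1_first_half = block_randomized_schedule(GESTURES, 8, seed=0)   # 8 x 8 = 64 trials
train2 = block_randomized_schedule(GESTURES, 10, seed=1)             # 8 x 10 = 80 trials
train2_before_break, train2_after_break = train2[:40], train2[40:]   # break after 40 trials
```

With this design, 40 trials is exactly five complete blocks, so each half of Train2 contains every gesture five times.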

During Test1 and Test2, the participants were tested for a total of

A new group of 22 unimpaired right-handed participants was recruited (12 males, 10 females,

We recruited a total of seven participants (5 females, 2 males,

Performance between and within the three groups in each study was assessed with the following metrics:

Adjusted reach time (ART) was defined as the time taken to complete a hand gesture match (in the 17D task) or a target reach (in the 2D task). For every missed trial, the ART of the trial was set to the timeout value (

Adjusted path efficiency (APE) was a measure of the straightness of the path taken to either reach the 2D target or match the 17D gesture. It was calculated using Eq. 6, where

Similar to ART, for every missed trial, the APE of the trial was set to the lowest possible value of

Success rate measured the percentage of successful trials performed in a single session. This was calculated only for the test sessions.
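The three metrics can be sketched as below. ART and success rate follow directly from the definitions above. For path efficiency, the sketch uses a common definition (straight-line distance divided by travelled path length) as an assumption; it is not a reproduction of Eq. 6. The floor assigned to missed trials is passed in as a hypothetical `missed_value` parameter.

```python
import numpy as np

def adjusted_reach_time(reach_time, success, timeout):
    """ART: measured completion time for a successful trial, the timeout for a missed one."""
    return reach_time if success else timeout

def path_efficiency(path):
    """Straight-line start-to-end distance divided by the length actually travelled.

    Works for 2D cursor paths or higher-dimensional trajectories.
    """
    path = np.asarray(path, dtype=float)
    travelled = np.sum(np.linalg.norm(np.diff(path, axis=0), axis=1))
    direct = np.linalg.norm(path[-1] - path[0])
    return float(direct / travelled) if travelled > 0 else 0.0

def adjusted_path_efficiency(path, success, missed_value):
    """APE: path efficiency for a success, `missed_value` (hypothetical floor) for a miss."""
    return path_efficiency(path) if success else missed_value

def success_rate(successes):
    """Percentage of successful trials in a session."""
    return 100.0 * float(np.mean(np.asarray(successes, dtype=float)))

# An L-shaped path is less efficient than a straight one.
pe_l = path_efficiency([[0, 0], [1, 0], [1, 1]])
pe_straight = path_efficiency([[0, 0], [1, 1], [2, 2]])
```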

For statistical analysis, we used MATLAB Statistics Toolbox functions (MathWorks, Natick, MA, United States). The Anderson-Darling test was used to assess the normality of the data (Anderson and Darling, 1954). Since all data were found to be non-Gaussian, we used non-parametric tests for statistical analysis.

We evaluated differences within and across groups in the average ART and APE for the first and last repetitions of the 2Dimplicit/explicit or 17D tasks. We also calculated differences within and across the compared groups in the success rate between Test1 and Test2 in each study. Differences across groups were tested with the Wilcoxon rank-sum test, while differences within groups were tested with the Wilcoxon signed-rank test (Wilcoxon, 1945). In all our analyses, the threshold for significance was set to
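For readers without MATLAB, the two Wilcoxon tests can be sketched with the large-sample normal approximation. This is a swapped-in illustration, not the Statistics Toolbox implementation: it omits tie corrections, and MATLAB's `ranksum` and `signrank` may compute exact p-values for small samples, so results for groups of this size can differ.

```python
import math
import numpy as np

def average_ranks(values):
    """1-based ranks, with tied values assigned their average rank."""
    values = np.asarray(values, dtype=float)
    order = np.argsort(values, kind="mergesort")
    sorted_vals = values[order]
    ranks = np.empty(len(values))
    i = 0
    while i < len(values):
        j = i
        while j + 1 < len(values) and sorted_vals[j + 1] == sorted_vals[i]:
            j += 1
        ranks[order[i:j + 1]] = (i + j) / 2 + 1   # mean of 1-based positions i+1..j+1
        i = j + 1
    return ranks

def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank-sum test (between groups). Returns (z, p)."""
    n1, n2 = len(x), len(y)
    ranks = average_ranks(np.concatenate([x, y]))
    w = ranks[:n1].sum()                          # rank sum of the first group
    mean = n1 * (n1 + n2 + 1) / 2
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean) / sd
    return z, math.erfc(abs(z) / math.sqrt(2))    # two-sided normal p-value

def signed_rank_test(before, after):
    """Two-sided Wilcoxon signed-rank test (paired, within group); zero
    differences are dropped. Returns (z, p)."""
    d = np.asarray(after, dtype=float) - np.asarray(before, dtype=float)
    d = d[d != 0]
    n = len(d)
    if n == 0:
        return 0.0, 1.0
    ranks = average_ranks(np.abs(d))
    w_plus = ranks[d > 0].sum()                   # sum of ranks of positive differences
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w_plus - mean) / sd
    return z, math.erfc(abs(z) / math.sqrt(2))
```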

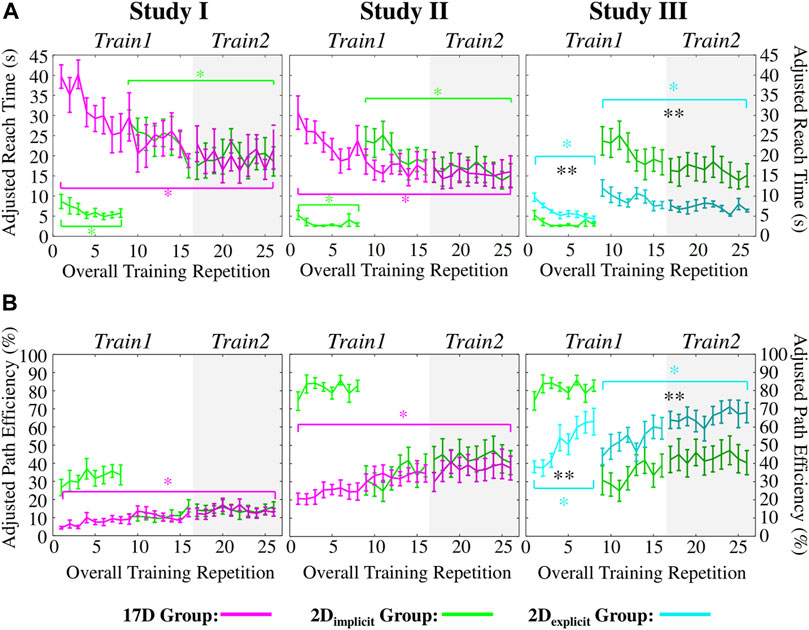

Participants across both groups were able to significantly improve their performance by the end of training (lower adjusted reach time), but no significant differences were observed across the groups in terms of final performance.

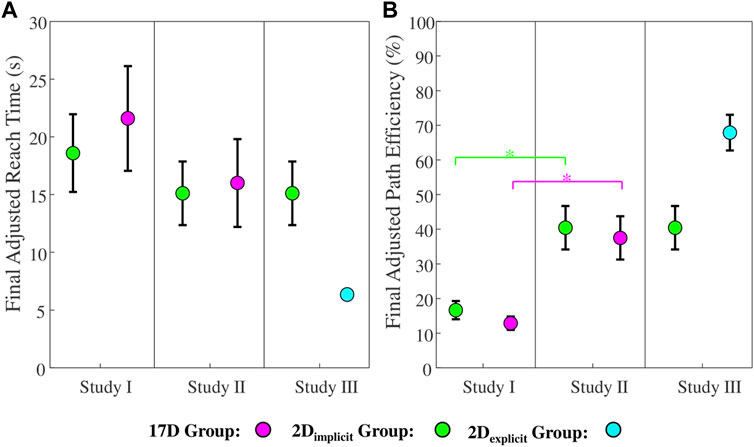

The 2Dimplicit group significantly improved the ART during the 2D task—from an average of

FIGURE 8. (A) Adjusted reach times (ARTs) and (B) adjusted path efficiencies (APEs) of Studies I, II, and III. Green colors represent the 2Dimplicit group. Magenta colors represent the 17D group. Teal colors represent the 2Dexplicit group. Error bars represent standard errors. Magenta asterisks (*) represent statistical differences within the 17D group. Green asterisks (*) represent statistical differences within the 2Dimplicit group. Teal asterisks (*) represent statistical differences within the 2Dexplicit group. Black asterisks (*) signify statistical differences between groups. The shaded area of each plot represents the Train2 session. Note that for the third column (Study III), we show the result of the green group obtained in Study II for ease of comparison.

The 17D group also significantly decreased the ART from an average of

The difference between the two groups at the end of the training was not statistically significant (

The 2Dimplicit group increased the APE from

In contrast, the 17D group significantly increased its APE over the course of the 17D task training, from an average of

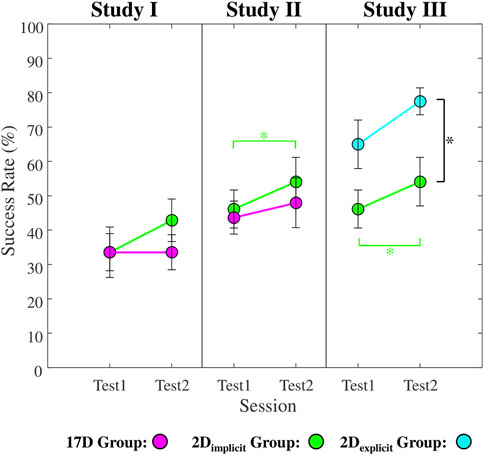

The success rates of the 2Dimplicit group during Test1 and Test2 were an average of

FIGURE 9. Average success rates for Test1 and Test2 sessions across all participants in each group in Studies I, II, and III. Green color represents the 2Dimplicit group. Magenta color represents the 17D group. Teal color represents the 2Dexplicit group. Error bars represent standard errors. Black asterisks (*) signify statistical differences between the groups in each study. Green asterisks (*) represent statistical differences within the 2Dimplicit group.

Both groups were able to significantly improve their performance by the end of training (lower adjusted reach time), but no significant differences were observed across the groups in terms of final performance.

The participants in the 2Dimplicit group were able to significantly improve their average ART from the first to the last repetition in the 2Dimplicit task (from

Similarly, the 17D group had a significant improvement in its average ART value—from 30

The difference between the two groups at the end of the training was not statistically significant (

The increase of the APE values for the 2Dimplicit group during the 2Dimplicit task was not statistically significant (

As in Study I, only the 17D group significantly increased its average APE value over the course of the training repetitions—from

During test sessions, the 2Dimplicit group successfully completed

In the sections below, we compare the performance of the 2Dimplicit group from Study II with that of the 2Dexplicit group, whose data were collected during Study III. In addition to significantly improving their performance over the entire training, participants in the 2Dexplicit group significantly outperformed the 2Dimplicit group in terms of reach time and path efficiency.

The participants in the 2Dexplicit group significantly decreased their average ART during the 2Dexplicit task—from

During the 2Dimplicit/explicit tasks alone, the 2Dimplicit group reached targets significantly faster than the 2Dexplicit group at the beginning (

However, the 2Dexplicit group began the 17D task at a significantly lower ART value (

The 2Dexplicit group significantly improved its APE during both the 2Dexplicit and the 17D tasks (Figure 8B, third column, teal). During the 2Dexplicit task, the improvement was from an average of

When considering the 2Dexplicit/implicit tasks, the 2Dexplicit group had consistently lower APE values than the 2Dimplicit group. When switching to the 17D task, the difference of average APE values between the 2Dexplicit (

The success rate of the 2Dexplicit group between the test sessions improved from an average of

The set of studies presented in this paper allowed us to answer the three questions posed at the beginning of the paper:

a) To what degree is the difficulty of operating the AE-based controller due to the complexity of operating myoelectric interfaces?

The complexity of the myoelectric interface did not have an effect on the final performance in terms of reach times achieved by either of the tested groups—the participants were able to perform gesture matches as fast as their counterparts who used the mouse interface. The only difference was observed in terms of path efficiency—the participants using the mouse interface were able to perform straighter reaches than the participants using the myoelectric interface.

b) Does initial training on a 2D plane that matches the underlying dimensionality of the AE-based controller, without explicitly establishing the connection between 2D reaches and hand kinematics, enhance learning?

Without an explicit connection between the 2D reaches and virtual hand kinematics, the participants practicing the 2Dimplicit task did as well as, but not better than, their counterparts who practiced only the 17D task.

c) Does initial training on a 2D plane, in which the user is explicitly told about the connection between the 2D reaches and full hand gestures, enhance learning?

Providing an explicit connection between the underlying low-dimensional control space and the presented high-dimensional task significantly improved the participants’ performance in terms of reach times and path efficiencies.

The findings of Studies I and II suggest that the myoelectric interface itself was not the main reason for the poor performance of Study I participants, and they highlight the need to look for better ways of teaching users about the controller itself to improve their overall performance.

The notion that users can learn arbitrary mappings for human-computer interfaces, intuitive or not, has been supported by a multitude of studies (Liu and Scheidt, 2008; Radhakrishnan et al., 2008; Ison and Artemiadis, 2015; Zhou et al., 2019; Dyson et al., 2020). However, we hypothesized that learning the control map may be more difficult when a controller is operated via input signals in an unnatural way. For example, in the case of our myoelectric interface, the forearm muscle contractions (i.e., wrist movements) did not produce the physiologically corresponding kinematics in the virtual hand. As a result, there was a clear mismatch between the natural way of creating the gestures the users saw on the screen (i.e., finger flexions and extensions) and the alternative way, using their wrist, that they had to learn in order to recreate these gestures. For comparison, we used a computer mouse interface, which, we hypothesized, would allow the users to learn the mapping significantly faster, given that it is familiar and used every day.

Despite our hypothesis, we found that by the end of the training, there was no significant difference in the reach times between the participants in Studies I and II (

FIGURE 10. (A) Adjusted reach time and (B) adjusted path efficiency for the final training repetition across the 2Dimplicit (green), 17D (magenta), and 2Dexplicit (teal) groups in Studies I, II, and III. Green asterisks (*) represent statistical differences between the 2Dimplicit groups in Studies I and II. Magenta asterisks (*) represent statistical differences between the 17D groups in Studies I and II.

It is also important to note that control gains may not have been the same across the two interfaces, which makes a direct comparison of reach times between Studies I and II difficult. In the myoelectric interface, control gains were individualized for each participant to ensure that they could access every point on the 2D control plane with comfortable muscle contraction levels (i.e., without over-contracting). In the mouse interface, by contrast, the participants had fixed control gains. One possible explanation for the difference in initial reach times during Train1 in the 17D group between the myoelectric and mouse interfaces is that the control gains for the mouse controller were larger. However, by the end of Train2, participants in both Study I and Study II plateaued at comparable reach times for both interface types, suggesting that the control gains were potentially comparable.

In Study III, we found that guiding the user to learn the explicit connection between the underlying dimensionality of the controller and the high-dimensional hand postures was critical for learning.

In the 2Dimplicit training of Studies I and II, by contrast, the relationship between the underlying dimensionality of the controller and the gesture-matching task that followed the target reaching was not made explicit. Presenting a single target at a time, together with smaller avatars of the controlled and matching hands, most likely encouraged the participants to focus on the 2D target location rather than on the generated 17D gesture. This interpretation is supported by the fact that the 2Dimplicit group did not outperform the 17D group in gesture matching at the end of Train2 (Studies I and II).

It is also important to note that after the first eight repetitions of either the 17D or the 2Dimplicit task, both groups completed gesture matching with comparable adjusted reach times (Figure 8A). This suggests that initial training in 2D worked as well as, but no better than, initial training on the 17D task. The same pattern appears in path efficiency during Studies I and II: participants in both groups performed similarly (Figure 8B).
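Path efficiency is not defined in this section. A common definition, assumed here, is the straight-line distance from the start to the end of a movement divided by the length of the path actually traveled, so that 1 indicates a perfectly straight reach and lower values indicate detours. The following minimal sketch computes that ratio for a sampled trajectory. The function name is hypothetical, and the paper's adjusted metric may differ (for example, it may be normalized or computed in a different space).

```python
import numpy as np

def path_efficiency(trajectory):
    """Straight-line start-to-end distance divided by the traveled path length.

    trajectory: (T, D) array with T >= 2 sampled positions (e.g., the 2D cursor).
    Returns 1.0 for a perfectly straight path and smaller values for detours.
    """
    traj = np.asarray(trajectory, dtype=float)
    if traj.ndim != 2 or traj.shape[0] < 2:
        raise ValueError("trajectory must be a (T, D) array with T >= 2")
    straight = np.linalg.norm(traj[-1] - traj[0])
    traveled = np.sum(np.linalg.norm(np.diff(traj, axis=0), axis=1))
    if traveled == 0:
        return float("nan")  # no movement: efficiency is undefined
    return float(straight / traveled)

print(path_efficiency([[0, 0], [1, 0], [2, 0]]))  # 1.0
print(path_efficiency([[0, 0], [1, 0], [1, 1]]))  # about 0.707 (detour around a corner)
```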

When designing Study III, we hypothesized that the learning that took place during the 2D task did not give the 2Dimplicit group a full understanding of the underlying dimensionality of the control space. Instead, it only trained them to perform movements on a 2D plane that appeared abstract to them. Accordingly, in Study III we explicitly informed the participants about the relationship between the cursor position in 2D and the corresponding (i.e., reconstructed) hand gesture in 17D. We hypothesized that once this relationship was understood, the task would reduce to memorizing the gesture locations on the 2D plane.

Presenting all eight targets without error feedback on the targets themselves forced the participants to attend not only to the target locations but also to the desired hand gestures. In addition, the participants could clearly observe how their movements on the 2D plane correlated with the generated gestures, as the hands were visually accentuated and considerably larger than those presented in Studies I and II.

We hypothesize that an explicit understanding of the controller-task relationship is essential when teaching users to operate controllers developed with nonlinear methods, but may be less relevant when the controller is based on PCA (a linear method). The reason is that the latent space created by PCA obeys the superposition principle: new gestures can be obtained by superimposing other gestures on the 2D plane. For example, if one latent dimension solely controlled the opening and closing of the thumb and the other flexed and extended the remaining four fingers, every gesture generated within the space would be a linear combination of these two basic movements.
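The superposition argument can be checked numerically. A PCA decoder maps a 2D latent point z to a posture as the mean posture plus W z, where the columns of W are principal directions, so deviations from the mean add linearly in latent space. The sketch below assumes random orthonormal columns as stand-ins for components learned from real hand data; the "thumb" and "four fingers" labels are illustrative only.

```python
import numpy as np

rng = np.random.default_rng(0)
N_JOINTS, N_LATENT = 17, 2

# Hypothetical PCA-style decoder: mean posture plus two orthonormal components.
mean_posture = rng.normal(size=N_JOINTS)
components, _ = np.linalg.qr(rng.normal(size=(N_JOINTS, N_LATENT)))  # (17, 2)

def decode_linear(z):
    """Map a 2D latent point to a 17D posture: mean + W z."""
    return mean_posture + components @ np.asarray(z, dtype=float)

z_thumb = np.array([1.0, 0.0])    # illustrative 'thumb' direction
z_fingers = np.array([0.0, 1.0])  # illustrative 'four fingers' direction

# Superposition: deviations from the mean posture add linearly in latent space.
combined = decode_linear(z_thumb + z_fingers) - mean_posture
summed = (decode_linear(z_thumb) - mean_posture) + (decode_linear(z_fingers) - mean_posture)
print(np.allclose(combined, summed))  # True
```

Because the decoder is affine, a user who has learned what each latent direction does can, in principle, predict any combined gesture from its parts.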

The superposition principle does not hold in latent spaces generated by nonlinear models such as nonlinear AEs. Although kinematically similar gestures appear close to each other in the latent space encoded by a nonlinear AE (Portnova-Fahreeva et al., 2020), users may find it harder to interpolate between gestures in a nonlinear map. The differences in learning linear and nonlinear maps were not explored in this paper, but they could be an interesting direction for future experiments.
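For contrast with the linear case, the sketch below uses a toy AE-style decoder with one tanh hidden layer. Its random weights are hypothetical stand-ins for a trained decoder and do not reproduce the architecture used in these studies. Decoding the sum of two latent directions no longer equals the sum of their individual effects, so a user cannot simply compose gestures from latent "parts".

```python
import numpy as np

rng = np.random.default_rng(1)
N_JOINTS, N_LATENT, N_HIDDEN = 17, 2, 32

# Hypothetical decoder weights; a trained AE decoder would have learned these.
W1 = rng.normal(size=(N_HIDDEN, N_LATENT))
b1 = rng.normal(size=N_HIDDEN)
W2 = rng.normal(size=(N_JOINTS, N_HIDDEN)) / np.sqrt(N_HIDDEN)
b2 = rng.normal(size=N_JOINTS)

def decode_nonlinear(z):
    """Toy AE-style decoder: 2D latent -> tanh hidden layer -> 17D posture."""
    h = np.tanh(W1 @ np.asarray(z, dtype=float) + b1)
    return W2 @ h + b2

origin = decode_nonlinear([0.0, 0.0])
za, zb = np.array([1.0, 0.0]), np.array([0.0, 1.0])
combined = decode_nonlinear(za + zb) - origin
summed = (decode_nonlinear(za) - origin) + (decode_nonlinear(zb) - origin)
print(np.allclose(combined, summed))        # False
print(np.linalg.norm(combined - summed))    # size of the superposition error
```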

One of the major outcomes of the studies described in this paper was the application of nonlinear DR methods, such as AEs, to the development of a nonlinear postural controller, in which the complex kinematics of a virtual hand with 17 DOFs were decoded from a position on the 2D plane. Previously, linear controllers have been developed in which the dimensionality of grasping hand kinematics was reduced using PCA (Magenes et al., 2008; Matrone et al., 2010; Matrone et al., 2012; Belter et al., 2013; Segil, 2013; Segil and Controzzi, 2014; Segil, 2015).

In the studies where a linear postural controller was validated via a myoelectric interface (Matrone et al., 2012; Segil and Controzzi, 2014; Segil, 2015), the average movement times (the time to reach the correct grasp, without having to hold it) were between

These discrepancies could be explained by major differences in the controller schemes and protocols. First of all, the output system with a PCA-based controller had

Despite these differences between linear and nonlinear controllers, the nonlinear controller has a major advantage: it reconstructs a larger share of the variance of the input signal with fewer latent dimensions (Portnova-Fahreeva et al., 2020). In practice, a nonlinear controller with just two latent dimensions yields a reconstructed hand whose kinematics are closer to the original input postures, whereas a PCA-based controller with the same number of dimensions may be unable to reconstruct some of the gestures.
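Reconstruction quality of this kind is often reported as variance accounted for (VAF), that is, one minus the residual variance divided by the total variance. The sketch below computes VAF for a rank-k PCA reconstruction via the SVD of centered data. The posture data are synthetic (a hypothetical nonlinear function of two latent variables mixed into 17 channels), not real hand kinematics. Training an AE for comparison is beyond a short sketch. The point is that two linear components miss the curvature even though the data were generated from only two underlying variables, which a 2D nonlinear model could in principle capture.

```python
import numpy as np

def pca_vaf(postures, n_components):
    """Variance accounted for by a rank-k PCA reconstruction.

    postures: (n_samples, n_joints) array of joint angles.
    """
    X = np.asarray(postures, dtype=float)
    Xc = X - X.mean(axis=0)
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    W = Vt[:n_components].T            # (n_joints, k) principal directions
    recon = Xc @ W @ W.T               # encode to k dims, then decode back
    return 1.0 - np.sum((Xc - recon) ** 2) / np.sum(Xc ** 2)

rng = np.random.default_rng(0)
z = rng.uniform(-1, 1, size=(500, 2))  # two hypothetical latent variables
features = np.column_stack([z, z ** 2, np.sin(3 * z), z[:, :1] * z[:, 1:]])  # 7 nonlinear features
postures = features @ rng.normal(size=(7, 17))  # synthetic 17D "postures"

vaf2 = pca_vaf(postures, 2)
vaf7 = pca_vaf(postures, 7)
print(vaf2)  # below 1: two linear components miss part of the variance
print(vaf7)  # about 1: the synthetic data span 7 linear dimensions
```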

Given the results of Study III, in which we found a more effective way to train users on the AE-based controller with the mouse interface, we hypothesize that higher performance than that observed in Study I could be achieved with a nonlinear postural controller operated via a myoelectric interface. We therefore suggest that nonlinear postural controllers remain a viable option for complex prosthetic control, allowing a more precise dimensionality reduction of intricate hand kinematics than PCA can achieve.

One of the major limitations of our studies was the design of the test sessions, which allowed only a very short time window for each reach. Because the average ART during the training sessions of Studies I and II was significantly longer than the time allowed for a successful reach during test, the participants were effectively set up to fail, which explains the low success rates during test. In Study III, the average ART at the end of training was similar to the time allowed for a successful gesture match during test, which explains the significantly higher success rates of the 2Dexplicit group during the test sessions.
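The link between the test window and the success rate is simple arithmetic: a reach counts as successful only if it finishes within the window, so when the window is shorter than a typical training reach time, most attempts fail. The reach times below are hypothetical values chosen for illustration, not data from the studies.

```python
import numpy as np

def success_rate(reach_times, window):
    """Fraction of reaches completed within the allowed test window (same units as reach_times)."""
    t = np.asarray(reach_times, dtype=float)
    return float(np.mean(t <= window))

reach_times = [2.1, 3.4, 1.2, 5.0, 2.8]  # hypothetical reach times in seconds (mean 2.9 s)
print(success_rate(reach_times, window=2.0))  # 0.2
print(success_rate(reach_times, window=3.0))  # 0.6
```

With a mean reach time of 2.9 s, a 2 s window admits only one of five reaches, while a window close to the mean admits most of them.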

As discussed in Section 4.1, given the experimental design, the control gains of the mouse and myoelectric interfaces are not directly comparable across studies. To allow participants in Study I to reach every point on the 2D control plane without over-contracting their muscles, we tuned the controller gains for each individual. In the mouse interface studies (Studies II and III), by contrast, the control gains were kept constant for all participants. This design decision might have affected the comparability of the results between the myoelectric and mouse-controlled studies. It also makes it difficult to assume that the results of the 2Dexplicit group in Study III (in terms of faster reach times) would fully translate to a setup with the myoelectric interface. However, given that adjusted reach times plateaued at similar values for the groups training with the mouse interface in Study II and the groups training with the myoelectric interface in Study I (Figure 8A), a 2Dexplicit group training with the myoelectric interface might reach similar reach times by the end of training as the 2Dexplicit group using the mouse interface did in Study III.

Surface myoelectric noise can be modeled as Gaussian noise with both additive and multiplicative components (Clancy et al., 2001). Because the level of muscle activation required to reach the entire workspace in Study I was kept at comfortable (non-over-contracting) levels by design, we assumed that the multiplicative component would be similar in size to the additive one, and the noise model in our design therefore incorporated only the additive component. If this assumption was false, the mouse interface may have been less noisy than the myoelectric interface, which would make a direct comparison of path efficiency between Studies I and II more difficult.
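The two noise components can be written as y = s + σ_a·e1 + σ_m·s·e2, where s is the clean control signal and e1, e2 are independent standard normal draws. For a constant level s, the noise standard deviation is then sqrt(σ_a² + (σ_m·s)²). The sketch below assumes this simple form; the function names and parameter values are hypothetical. With the chosen values, σ_m·s equals σ_a at s = 0.5, so both components contribute equally, and an additive-only model with the same σ_a would underestimate the noise by a factor of about sqrt(2).

```python
import numpy as np

def add_emg_like_noise(clean, sigma_add, sigma_mult, rng):
    """Corrupt a control signal with additive and multiplicative Gaussian noise.

    y = s + sigma_add * e1 + sigma_mult * s * e2, with e1, e2 ~ N(0, 1) independent.
    Setting sigma_mult = 0 gives the additive-only model.
    """
    s = np.asarray(clean, dtype=float)
    e1 = rng.standard_normal(s.shape)
    e2 = rng.standard_normal(s.shape)
    return s + sigma_add * e1 + sigma_mult * s * e2

def predicted_noise_std(s, sigma_add, sigma_mult):
    """Standard deviation of y - s for a constant signal level s."""
    return np.sqrt(sigma_add ** 2 + (sigma_mult * s) ** 2)

rng = np.random.default_rng(0)
level = np.full(200_000, 0.5)  # a constant activation level (hypothetical units)
noisy = add_emg_like_noise(level, sigma_add=0.05, sigma_mult=0.1, rng=rng)
additive_only = add_emg_like_noise(level, sigma_add=0.05, sigma_mult=0.0, rng=rng)
print(np.std(noisy - level), predicted_noise_std(0.5, 0.05, 0.1))  # both close to 0.0707
print(np.std(additive_only - level))                               # close to 0.05
```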

Lastly, the fastest reach times presented by the 2Dexplicit group by the end of training in Study III (an average of

When designing these studies, we considered upper-limb amputees who use prosthetic hands in their daily lives as the end-user group. Although the studies were performed with unimpaired individuals, they highlight the possibility of using nonlinear controllers to operate a myoelectric hand prosthesis. The myoelectric interface we designed for Study I used wrist muscle signals to operate on the 2D latent space. Although an upper-limb amputee might not have these wrist muscles, other, more proximal locations could be chosen to obtain clean signals for controlling the position of the 2D cursor, which in turn would operate the hand. The main advantage of our controller is that it does not require a large number of signals (only enough to move the cursor on the 2D plane) to control a hand with many DOFs. Nor is the approach limited to EMG. For example, a 2D control signal could be obtained from a simpler interface based on inertial measurement units (IMUs) placed on the user's shoulders, so that shoulder movements control the posture of the prosthetic hand. IMUs have previously been widely used to operate low-dimensional controllers (Thorp et al., 2015; Seáñez-González et al., 2016; Abdollahi et al., 2017; Pierella et al., 2017; Rizzoglio et al., 2020). Thus, nonlinear AE-based controllers such as the one we developed for our studies could be a versatile and modular solution for controlling complex upper-limb prosthetic devices via low-dimensional interfaces.
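One way to obtain the 2D cursor from body signals, assumed here, is a linear projection fitted to calibration data: project the signals onto their first two principal components and scale each axis so the calibration range spans [-1, 1]. The resulting point would then be passed to the AE decoder, which is not shown. The channel count (two shoulder IMUs with two angles each), the scaling rule, the random calibration data, and the function names are hypothetical choices for illustration, not the procedure of the cited body-machine interface studies.

```python
import numpy as np

def fit_body_to_cursor(calibration_signals):
    """Fit a linear map from body signals (e.g., IMU angles) to a 2D cursor.

    Uses the first two principal components of calibration data and scales each
    axis so the calibration range spans [-1, 1].
    """
    X = np.asarray(calibration_signals, dtype=float)
    offset = X.mean(axis=0)
    _, _, Vt = np.linalg.svd(X - offset, full_matrices=False)
    A = Vt[:2]                                     # (2, n_channels) projection
    scale = np.abs((X - offset) @ A.T).max(axis=0)

    def to_cursor(signals):
        scores = (np.asarray(signals, dtype=float) - offset) @ A.T
        return np.clip(scores / scale, -1.0, 1.0)

    return to_cursor

# Hypothetical calibration: 2 shoulder IMUs x (roll, pitch) = 4 channels.
rng = np.random.default_rng(0)
calibration = rng.normal(size=(300, 4)) @ rng.normal(size=(4, 4))
to_cursor = fit_body_to_cursor(calibration)
cursor = to_cursor(calibration[0])  # 2D point that would feed the AE decoder
print(cursor.shape)  # (2,)
```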

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving human participants were reviewed and approved by University of Washington Human Subjects Division. The patients/participants provided their written informed consent to participate in this study.

AP-F contributed to the design and implementation of the study as well as the data analysis performed for the experiment. AP-F also wrote the manuscript with the support of FR, ER, MC, and FM-I. FR contributed to the design of the neural network architecture described in the paper, provided code for parts of the data analysis, and advised on EMG pre-processing. ER, MC, and FM-I contributed to the conception and design of the study as well as the interpretation of the results. All authors contributed to manuscript revision, read, and approved the submitted version.

This project was supported by NSF-1632259, by DHHS NIDILRR Grant 90REGE0005-01-00 (COMET), by NIH/NIBIB grant 1R01EB024058-01A1, and NSF-2054406.

The authors would like to thank all the participants who took part in this study in the midst of the pandemic and all the lab mates who served as pilot participants for these studies.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Abdollahi, F., Farshchiansadegh, A., Pierella, C., Seáñez-González, I., Thorp, E., Lee, M.-H., et al. (2017). Body-machine interface enables people with cervical spinal cord injury to control devices with available body movements: Proof of concept. Neurorehabil. Neural Repair 31, 487–493. doi:10.1177/1545968317693111

Anderson, T. W., and Darling, D. A. (1954). A test of goodness of fit. J. Am. Stat. Assoc. 49, 765–769. doi:10.1080/01621459.1954.10501232

Belter, J. T., Segil, J. L., Dollar, A. M., and Weir, R. F. (2013). Mechanical design and performance specifications of anthropomorphic prosthetic hands: A review. J. rehabilitation Res. Dev. 50, 599. doi:10.1682/jrrd.2011.10.0188

Ciocarlie, M. T., and Allen, P. K. (2009). Hand posture subspaces for dexterous robotic grasping. Int. J. Robotics Res. 28, 851–867. doi:10.1177/0278364909105606

Clancy, E. A., Bouchard, S., and Rancourt, D. (2001). Estimation and application of EMG amplitude during dynamic contractions. IEEE Eng. Med. Biol. Mag. 20, 47–54. doi:10.1109/51.982275

Dyson, M., Dupan, S., Jones, H., and Nazarpour, K. (2020). Learning, generalization, and scalability of abstract myoelectric Control. IEEE Trans. Neural Syst. Rehabilitation Eng. 28, 1539–1547. doi:10.1109/tnsre.2020.3000310

Ingram, J. N., Körding, K. P., Howard, I. S., and Wolpert, D. M. (2008). The statistics of natural hand movements. Exp. Brain Res. 188, 223–236. doi:10.1007/s00221-008-1355-3

Iqbal, N. V., Subramaniam, K., and P., S. A. (2018). A review on upper-limb myoelectric prosthetic control. IETE J. Res. 64, 740–752. doi:10.1080/03772063.2017.1381047

Ison, M., and Artemiadis, P. (2015). Proportional myoelectric control of robots: Muscle synergy development drives performance enhancement, retainment, and generalization. IEEE Trans. Robotics 31, 259–268. doi:10.1109/tro.2015.2395731

Liu, X., and Scheidt, R. A. (2008). Contributions of online visual feedback to the learning and generalization of novel finger coordination patterns. J. neurophysiology 99, 2546–2557. doi:10.1152/jn.01044.2007

Magenes, G., Passaglia, F., and Secco, E. L. (2008). “A new approach of multi-dof prosthetic control,” in 30th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (IEEE), 3443–3446.

Matrone, G. C., Cipriani, C., Carrozza, M. C., and Magenes, G. (2012). Real-time myoelectric control of a multi-fingered hand prosthesis using principal components analysis. J. neuroengineering rehabilitation 9, 40–13. doi:10.1186/1743-0003-9-40

Matrone, G. C., Cipriani, C., Secco, E. L., Magenes, G., and Carrozza, M. C. (2010). Principal components analysis based control of a multi-dof underactuated prosthetic hand. J. neuroengineering rehabilitation 7, 16–13. doi:10.1186/1743-0003-7-16

Pearson, K. (1901). LIII. On lines and planes of closest fit to systems of points in space. Lond. Edinb. Dublin philosophical Mag. J. Sci. 2, 559–572. doi:10.1080/14786440109462720

Pierella, C., De Luca, A., Tasso, E., Cervetto, F., Gamba, S., Losio, L., et al. (2017). “Changes in neuromuscular activity during motor training with a body-machine interface after spinal cord injury,” in International Conference on Rehabilitation Robotics (ICORR) (IEEE), 1100–1105.

Portnova-Fahreeva, A. A., Rizzoglio, F., Nisky, I., Casadio, M., Mussa-Ivaldi, F. A., and Rombokas, E. (2020). Linear and non-linear dimensionality-reduction techniques on full hand kinematics. Front. Bioeng. Biotechnol. 8, 429. doi:10.3389/fbioe.2020.00429

Radhakrishnan, S. M., Baker, S. N., and Jackson, A. (2008). Learning a novel myoelectric-controlled interface task. J. neurophysiology 100, 2397–2408. doi:10.1152/jn.90614.2008

Rizzoglio, F., Pierella, C., De Santis, D., Mussa-Ivaldi, F., and Casadio, M. (2020). A hybrid Body-Machine Interface integrating signals from muscles and motions. J. Neural Eng. 17, 046004. doi:10.1088/1741-2552/ab9b6c

Rombokas, E., Malhotra, M., Theodorou, E. A., Todorov, E., and Matsuoka, Y. (2012). Reinforcement learning and synergistic control of the act hand. IEEE/ASME Trans. Mechatronics 18, 569–577. doi:10.1109/tmech.2012.2219880

Santello, M., Flanders, M., and Soechting, J. F. (1998). Postural hand synergies for tool use. J. Neurosci. 18, 10105–10115. doi:10.1523/jneurosci.18-23-10105.1998

Seáñez-González, I., Pierella, C., Farshchiansadegh, A., Thorp, E. B., Wang, X., Parrish, T., et al. (2016). Body-machine interfaces after spinal cord injury: Rehabilitation and brain plasticity. Brain Sci. 6, 61. doi:10.3390/brainsci6040061

Segil, J. L., Controzzi, M., Weir, R. F. f., and Cipriani, C. (2014). Comparative study of state-of-the-art myoelectric controllers for multigrasp prosthetic hands. J. rehabilitation Res. Dev. 51, 1439–1454. doi:10.1682/jrrd.2014.01.0014

Segil, J. L., and Weir, R. F. f. (2015). Novel postural control algorithm for control of multifunctional myoelectric prosthetic hands. J. rehabilitation Res. Dev. 52, 449–466. doi:10.1682/jrrd.2014.05.0134

Segil, J. L., and Weir, R. F. f. (2013). Design and validation of a morphing myoelectric hand posture controller based on principal component analysis of human grasping. IEEE Trans. Neural Syst. Rehabilitation Eng. 22, 249–257. doi:10.1109/tnsre.2013.2260172

Thorp, E. B., Abdollahi, F., Chen, D., Farshchiansadegh, A., Lee, M.-H., Pedersen, J. P., et al. (2015). Upper body-based power wheelchair control interface for individuals with tetraplegia. IEEE Trans. neural Syst. rehabilitation Eng. 24, 249–260. doi:10.1109/tnsre.2015.2439240

Todorov, E., and Ghahramani, Z. (2004). “Analysis of the synergies underlying complex hand manipulation,” in The 26th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (IEEE), 4637–4640.

Wilcoxon, F. (1945). Individual comparisons by ranking methods. Biom. Bull. 1, 80–83. doi:10.2307/3001968

Keywords: dimensionality reduction, autoencoders, prosthetics, hand, learning, myoelectric, kinematics, EMG

Citation: Portnova-Fahreeva AA, Rizzoglio F, Casadio M, Mussa-Ivaldi FA and Rombokas E (2023) Learning to operate a high-dimensional hand via a low-dimensional controller. Front. Bioeng. Biotechnol. 11:1139405. doi: 10.3389/fbioe.2023.1139405

Received: 07 January 2023; Accepted: 21 April 2023;

Published: 04 May 2023.

Edited by:

Ramana Vinjamuri, University of Maryland, Baltimore County, United States

Reviewed by:

Samit Chakrabarty, University of Leeds, United Kingdom

Copyright © 2023 Portnova-Fahreeva, Rizzoglio, Casadio, Mussa-Ivaldi and Rombokas. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Alexandra A. Portnova-Fahreeva, YWxleGFuZHJhLnBvcnRub3ZhQGdtYWlsLmNvbQ==
