- 1Department of Mechanical Engineering, Northwestern University, Evanston, IL, United States

- 2Department of Neuroscience, Northwestern University, Chicago, IL, United States

- 3Department of Mechanical Engineering, University of Washington, Seattle, WA, United States

- 4Department of Electrical Engineering, University of Washington, Seattle, WA, United States

In the past, linear dimensionality-reduction techniques, such as Principal Component Analysis, have been used to simplify the myoelectric control of high-dimensional prosthetic hands. However, their nonlinear counterparts, such as Autoencoders, have been shown to be more effective at compressing and reconstructing complex hand kinematics data. As a result, they have the potential to be a more accurate tool for prosthetic hand control. Here, we present a novel Autoencoder-based controller, in which the user controls a high-dimensional (17D) virtual hand via a low-dimensional (2D) space. We assess the efficacy of the controller via a validation experiment with four unimpaired participants. All the participants were able to significantly decrease the time it took them to match a target gesture with a virtual hand to an average of

1 Introduction

The complexity of the human hand has been the topic of abundant research aimed at understanding its underlying control strategies. With 27 degrees of freedom (DOFs) controlled by 34 muscles, the hand is difficult to replace in cases of congenital or acquired amputation, and prosthetic solutions are often either oversimplified (e.g., one-dimensional hooks) or overcomplicated (e.g., high-dimensional prosthetic hands). While the intricacy of prosthetic hands available on the market has grown over the last five decades (Belter et al., 2013), their control methods have fallen behind (Castellini, 2020).

The conventional method of controlling dexterous prosthetic hands is through myoelectric interfaces, in which electromyographic (EMG) signals from the remaining muscles in the amputee’s residual limb are used to operate the device. However, the number of available muscle signals varies with the amputation level, which often limits the controllers themselves (O'Neill et al., 1994). The issue arises because a device may have many DOFs that allow for individuated movements, while only a limited number of EMG signals may be available on the residual limb to control them (Iqbal and Subramaniam, 2018). To bridge this gap between the control and output dimensions, several groups have investigated dimensionality-reduction (DR) methods.

A seminal study applying a DR technique to complex hand kinematics during object grasping was conducted by Santello et al. (1998). The group used principal component analysis (PCA), a linear DR technique that creates a low-dimensional (latent) representation of the data by finding the directions in the original space that explain the most variance in the input data. They found that a 2D latent space could account for approximately 80% of the variability of hand kinematics across various types of grasps. Building on this finding, several groups developed what they called a postural controller, in which a prosthetic hand with multiple DOFs could be operated via a 2D space (Magenes et al., 2008; Ciocarlie and Allen, 2009; Matrone et al., 2010; Matrone et al., 2012; Segil and Weir, 2013; Segil et al., 2014; Segil and Weir, 2015).
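To make the idea of "variance explained by a 2D latent space" concrete, the following NumPy sketch computes the fraction of variance captured by the first principal components of a joint-angle matrix, along with the corresponding latent coordinates. The data are synthetic (17 joint angles generated from two hypothetical "synergies" plus small noise), so the resulting percentage is illustrative only and has no bearing on the roughly 80% reported by Santello et al. (1998).

```python
import numpy as np

def pca_variance_explained(joint_angles: np.ndarray, n_components: int = 2):
    """Fraction of total variance captured by the first n principal components.

    joint_angles: array of shape (n_samples, n_joints), e.g. recorded hand postures.
    Returns (ratio, latent), where latent holds the n_components-D coordinates.
    """
    centered = joint_angles - joint_angles.mean(axis=0)
    # Squared singular values of the centered data are proportional to the
    # variance along each principal direction (rows of vt).
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    var = s**2
    ratio = var[:n_components].sum() / var.sum()
    latent = centered @ vt[:n_components].T  # projection onto the top components
    return ratio, latent

# Synthetic example: 17 joint angles driven by 2 hidden synergies plus small noise.
rng = np.random.default_rng(0)
synergies = rng.normal(size=(2, 17))
activations = rng.normal(size=(500, 2))
angles = activations @ synergies + 0.05 * rng.normal(size=(500, 17))
ratio, latent = pca_variance_explained(angles, 2)
print(f"2 PCs explain {ratio:.1%} of the variance")
```

Because PCA only finds linear directions, data generated by nonlinear relationships would leave more variance unexplained at the same latent dimensionality, which is the limitation discussed next.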

One of the main limitations of PCA is its linearity: it can only capture linear relationships in the input data. In our recent study, we explored the use of a nonlinear autoencoder (AE) to account for nonlinear relationships in hand kinematics data (Portnova-Fahreeva et al., 2020). We found that an AE with two latent dimensions outperformed PCA, reconstructing over 90% of the hand kinematics data. In addition, the nonlinear AE spread the variance more uniformly across its latent dimensions, allowing for a more even distribution of control across each DOF. As a result, AEs may serve as a platform for more accurate low-dimensional prosthetic control, owing to their reconstruction power and the more even spread of variance across their latent dimensions.

Leveraging these advantages of nonlinear AEs over their linear counterpart (i.e., PCA), we developed and implemented a novel myoelectric controller that allowed for the control of a high-dimensional virtual hand with 17 DOFs via a low-dimensional (2D) control space using only four muscle signals. We refer to it as an AE-based controller. This paper presents an in-depth description of the development and implementation of the controller, as well as the motivation behind certain design choices, such as the type of AE network used for dimensionality reduction of hand kinematics. In addition, we ran a simple validation experiment to assess the ability of four unimpaired, naïve users to learn to control a multi-DOF virtual hand with four EMG signals without being aware of the underlying dimensionality of the controller. The least and most effective ways of training users on this AE-based controller were assessed in a separate study by our group (Portnova-Fahreeva et al., 2022).

2 Methods

2.1 Autoencoder-based controller

2.1.1 Standard autoencoders

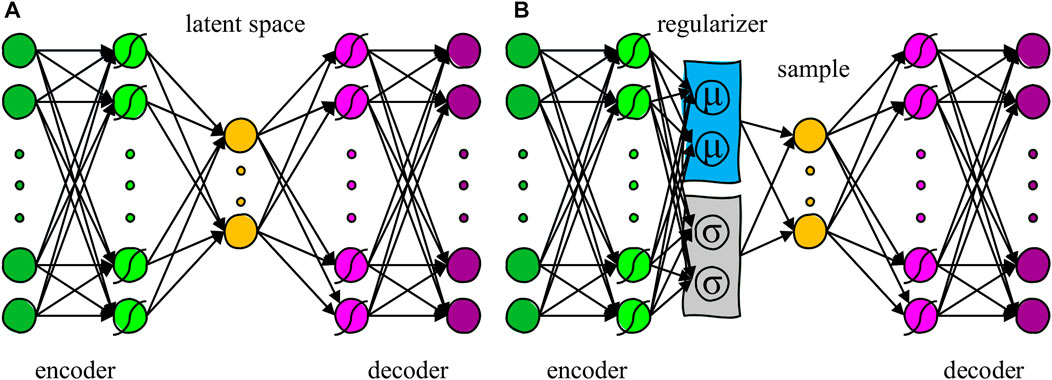

In our previous study (Portnova-Fahreeva et al., 2020), we found that a standard AE structure (Figure 1A) outperformed the conventional linear PCA method when reducing the dimensionality of complex hand kinematics to two latent dimensions.

FIGURE 1. (A) Structure of a standard autoencoder (AE) with three hidden layers. The middle layer represents the latent space. Curves over the units represent a nonlinear activation function. (B) Structure of a variational autoencoder (VAE) with three hidden layers and a regularizer term before the latent space.

AEs are artificial neural networks consisting of two components: an encoder that converts the inputs (

2.1.2 Variational autoencoders

Despite their strong ability to reconstruct biological data with minimal information loss, standard AEs produce latent spaces whose topological characteristics do not allow for intuitive interpolation. In other words, points that do not correspond to encoded data often reconstruct to unrealistic data. For the hand kinematics data from our previous study (Portnova-Fahreeva et al., 2020), this would result in the reconstruction of unnatural hand gestures with joint angles outside of their possible ranges of motion.

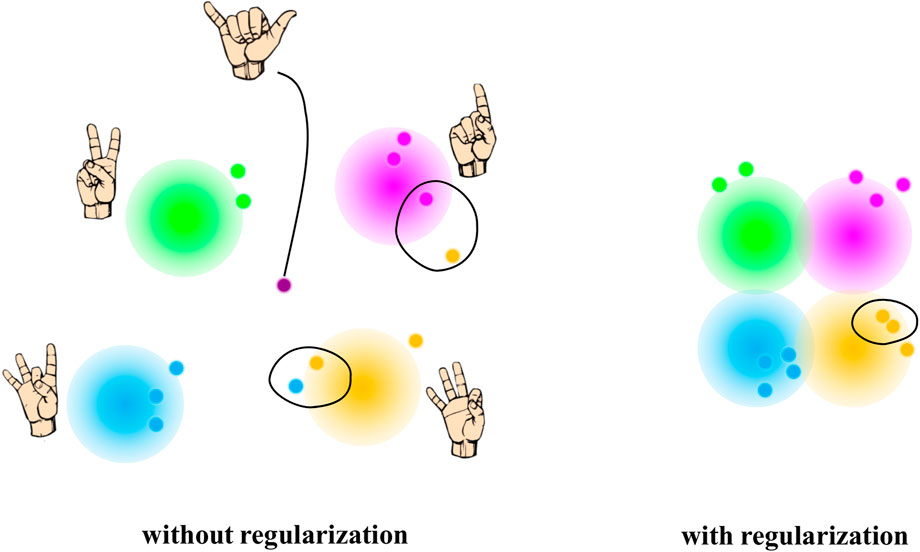

In addition, standard AEs often yield inconsistencies in the latent space, leaving large gaps between encoded clusters of different data types (e.g., different gestures) (Portnova-Fahreeva et al., 2020). This means that moving from one gesture to another would require crossing regions of unrealistic datapoints. As a result, such a latent space may not be well suited for myoelectric prosthetic control (Figure 2, left).

FIGURE 2. Examples of two hypothetical latent spaces without (left) and with (right) regularization as part of the neural network. In the absence of the regularizer, the latent space yields close points that are not actually similar once decoded (note the points that are close to each other but of different colors). In addition, without regularization, some points on the latent space can reconstruct to something unexpected (note the purple point). Regularization, in contrast, yields a more uniformly distributed latent space, in which two close points project into similar representations once decoded. Points on a regularized latent space also give “meaningful” content when reconstructed.

To counteract these fundamental problems of standard AEs, we proposed using a Variational Autoencoder (VAE) (Kingma and Welling, 2013) in the development of our controller. Unlike in a standard AE, the VAE cost function adds a regularizer term to the reconstruction error, which aims to match the distribution of the latent space to a prior (or source) distribution (Figure 1B). VAEs typically use the Kullback-Leibler Divergence (KLD) (Kullback and Leibler, 1951) to minimize the distance between the latent and the source distributions. When the source distribution is a Gaussian, the cost function that a VAE optimizes consists of these two terms: the reconstruction error and the KLD between the latent distribution and the Gaussian prior.
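For reference, one common form of this objective is shown below. It assumes a squared-error reconstruction term, a diagonal Gaussian encoder with mean $\mu_j$ and variance $\sigma_j^2$ for each of the $d$ latent dimensions, a standard normal prior, and a weight $\beta$ on the regularizer (the hyperparameter tuned later in this section). The authors' exact formulation may differ; with $\beta = 1$ it corresponds, up to the scaling of the reconstruction term, to the standard VAE objective of Kingma and Welling (2013).

```latex
\mathcal{L}(\mathbf{x}) =
  \underbrace{\lVert \mathbf{x} - \hat{\mathbf{x}} \rVert^{2}}_{\text{reconstruction}}
  + \beta \,
  \underbrace{D_{\mathrm{KL}}\!\left( q(\mathbf{z} \mid \mathbf{x}) \,\Vert\, \mathcal{N}(\mathbf{0}, \mathbf{I}) \right)}_{\text{regularizer}},
\qquad
D_{\mathrm{KL}} = -\frac{1}{2} \sum_{j=1}^{d} \left( 1 + \log \sigma_j^{2} - \mu_j^{2} - \sigma_j^{2} \right)
```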

By optimizing the two terms of the cost function, the resulting VAE latent space can locally maintain the similarity of nearby encodings yet be globally densely packed near the latent space origin (Figure 2, right). In our study, the VAE was trained to regularize the latent space distribution

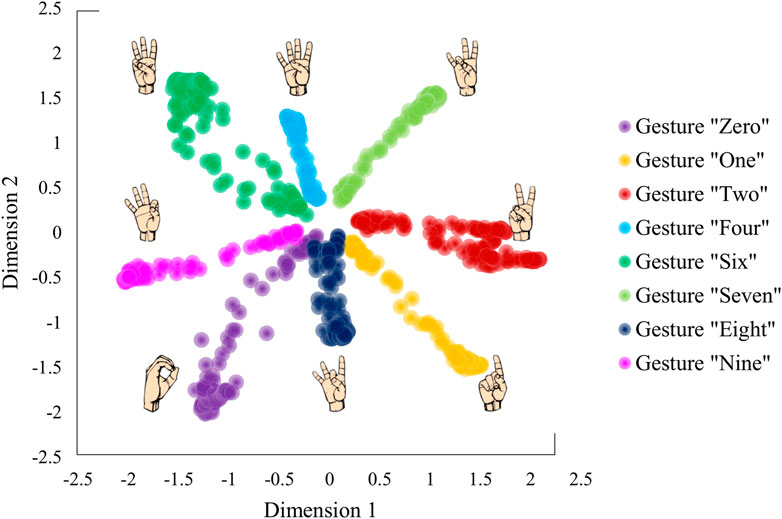

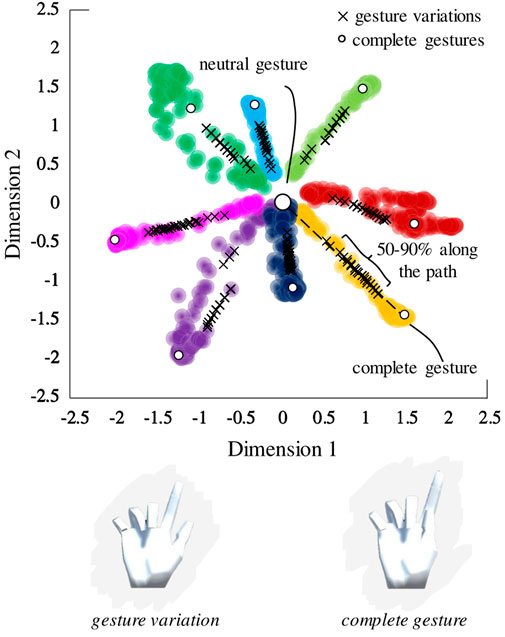

We applied the VAE network to the data recorded from one of the participants (P1) in Portnova-Fahreeva et al. (2020), which resulted in a 2D latent space with separable encoded gesture classes (Figure 3).

FIGURE 3. Latent space derived by applying a variational autoencoder to hand kinematics data of an individual performing American Sign Language gestures.
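The two terms of the VAE cost can be sketched in NumPy as follows. This is a minimal illustration, not the authors' implementation: it assumes a diagonal Gaussian encoder that outputs a mean and a log-variance per latent dimension, a sum-of-squared-errors reconstruction term, and a standard normal prior. The encoder and decoder networks themselves are replaced by stand-in arrays in the usage lines.

```python
import numpy as np

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, I) (the reparameterization trick)."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps

def kl_to_standard_normal(mu, log_var):
    """Closed-form KL(N(mu, diag(sigma^2)) || N(0, I)), summed over latent dims, per sample."""
    return -0.5 * np.sum(1.0 + log_var - mu**2 - np.exp(log_var), axis=1)

def beta_vae_loss(x, x_hat, mu, log_var, beta=1.0):
    """Batch-averaged reconstruction error (sum of squares) plus beta-weighted KL term."""
    recon = np.sum((x - x_hat) ** 2, axis=1)
    return float(np.mean(recon + beta * kl_to_standard_normal(mu, log_var)))

# Usage with stand-in arrays: 8 hand postures (17 joint angles), 2D latent space.
rng = np.random.default_rng(0)
x = rng.normal(size=(8, 17))
mu, log_var = rng.normal(size=(8, 2)), np.zeros((8, 2))  # stand-in encoder outputs
z = reparameterize(mu, log_var, rng)                      # would be fed to the decoder
x_hat = x + 0.1 * rng.normal(size=x.shape)                # stand-in decoder output
print(beta_vae_loss(x, x_hat, mu, log_var, beta=0.5))
```

Raising `beta` pulls the encoded points more strongly toward the origin-centered prior at the cost of reconstruction accuracy, which is why it is one of the tuned hyperparameters.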

We performed hyperparameter tuning on the VAE network to determine the optimal model for the given data. We used a separate validation dataset, not included in the analysis of the original study (Portnova-Fahreeva et al., 2020), in which the participant performed American Sign Language (ASL) gestures. The hyperparameters under assessment were the type of nonlinear activation function between neural network layers, the learning rate, and the weight on the regularizer term in the cost function, indicated with β (Eq. 2). The performance of each hyperparameter combination was evaluated in terms of reconstruction, assessed with the Variance Accounted For (VAF) between the input and the output of the network, and in terms of the similarity between the empirical VAE latent space and the target distribution (i.e., a normal Gaussian), calculated via KLD. VAF was calculated using Eq. 3.
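A sketch of the two evaluation criteria is given below. The VAF formula used here (one minus the ratio of the residual sum of squares to the total sum of squares about the mean, in percent) is one common definition and is an assumption, since Eq. 3 is not reproduced in this text. Likewise, the KLD is approximated by fitting a Gaussian to the encoded latent points and using the closed-form divergence to N(0, I); the authors may have estimated it differently (e.g., from histograms).

```python
import numpy as np

def vaf(x, x_hat):
    """Variance accounted for, in percent: 100 * (1 - SSE / total sum of squares about the mean)."""
    sse = np.sum((x - x_hat) ** 2)
    sst = np.sum((x - x.mean(axis=0)) ** 2)
    return 100.0 * (1.0 - sse / sst)

def gaussian_fit_kl(z):
    """KL(N(m, S) || N(0, I)), where m and S are the sample mean and covariance
    of the encoded latent points z (shape (n, k))."""
    m = z.mean(axis=0)
    S = np.cov(z, rowvar=False)
    k = z.shape[1]
    _, logdet = np.linalg.slogdet(S)
    return 0.5 * (np.trace(S) + m @ m - k - logdet)

# Usage with stand-in data: accurate reconstruction and a near-standard-normal latent space.
rng = np.random.default_rng(0)
x = rng.normal(size=(200, 17))
x_hat = x + 0.1 * rng.normal(size=x.shape)
z = rng.standard_normal((1000, 2))
print(f"VAF = {vaf(x, x_hat):.1f}%, KLD = {gaussian_fit_kl(z):.3f}")
```

Each hyperparameter combination would then be scored by a high VAF on the validation data together with a low KLD of its latent codes.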

2.2 Virtual hand

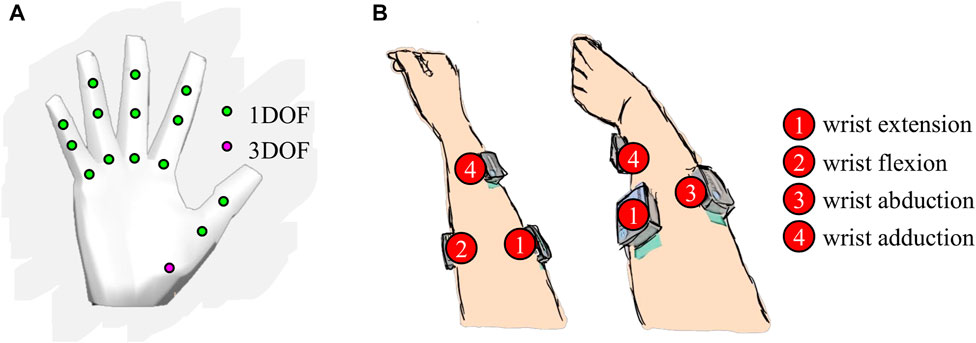

The controller was validated using a virtual environment with a 3D computer model of a hand with 17 DOFs (Figure 4A). The 17 DOFs operated with the controller were the flexion/extension of the three joints (metacarpophalangeal, proximal interphalangeal, and distal interphalangeal) of each of the four fingers (pinky, ring, middle, and index), the flexion/extension of two joints of the thumb (metacarpophalangeal and interphalangeal), and the 3D rotation of the thumb’s carpometacarpal joint.

FIGURE 4. (A) 17 degrees of freedom (DOFs) of the virtual hand. (B) Surface electrode placement on the participant’s forearm for the myoelectric interface. Each electrode location is specific to one of the four wrist movements.

To prevent the hand from generating biologically unnatural gestures during control, we limited the ranges of motion of the virtual hand joints to those of an actual hand. If the reconstruction output yielded a value outside of the natural range of motion of a hand joint, that joint did not change its angle in the virtual hand. The neutral gesture was defined as a completely open hand, with all fingers fully extended.
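The joint-limit rule can be sketched as a per-joint check. The DOF names below are hypothetical labels that simply enumerate the 17 DOFs listed above (4 fingers × 3 joints, plus 2 thumb flexion joints and 3 thumb carpometacarpal rotations), and the limit values in any usage are placeholders; the rule itself (keep a joint at its previous angle when the decoded value falls outside its range) follows the description in the text.

```python
import numpy as np

# Hypothetical DOF labels: 4 fingers x 3 flexion joints = 12,
# plus thumb MCP and IP flexion (2) and 3 rotations of the thumb CMC joint (3) = 17.
FINGERS = ["index", "middle", "ring", "pinky"]
DOF_NAMES = [f"{finger}_{joint}" for finger in FINGERS for joint in ("mcp", "pip", "dip")]
DOF_NAMES += ["thumb_mcp", "thumb_ip", "thumb_cmc_rx", "thumb_cmc_ry", "thumb_cmc_rz"]
assert len(DOF_NAMES) == 17

def apply_joint_limits(previous, proposed, lower, upper):
    """Accept each decoded joint angle only if it lies within [lower, upper];
    otherwise keep that joint at its previous angle."""
    in_range = (proposed >= lower) & (proposed <= upper)
    return np.where(in_range, proposed, previous)

# Usage with placeholder limits (degrees) for every joint.
lower, upper = np.full(17, -10.0), np.full(17, 90.0)
previous = np.zeros(17)
proposed = np.full(17, 45.0)
proposed[0] = 120.0  # out of range: index MCP keeps its previous angle
print(apply_joint_limits(previous, proposed, lower, upper)[:3])
```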

2.3 Controller components

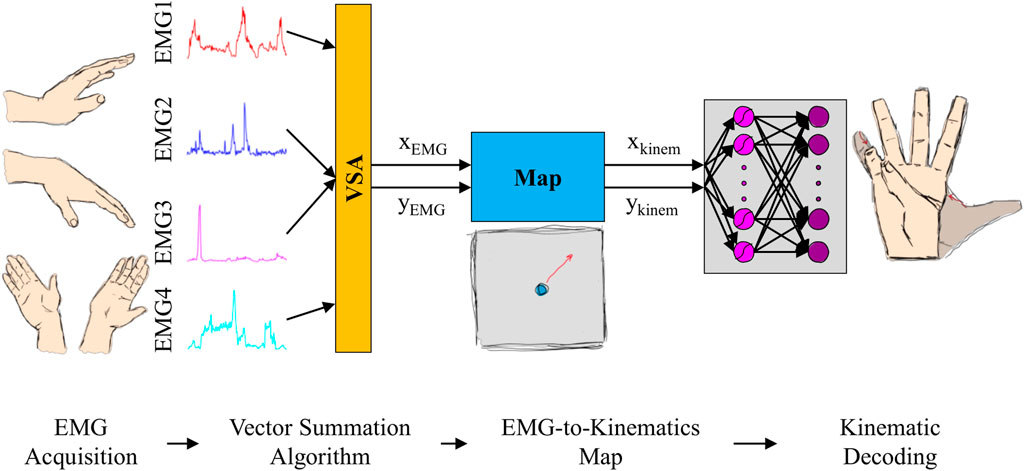

The developed AE-based controller, which converted four muscle signals into 17 joint kinematics of a virtual hand, contained four components: EMG acquisition, Vector Summation Algorithm (VSA), EMG-to-kinematics map, and kinematic decoding (Figure 5). Each component of the controller is described at length in the sections below.

FIGURE 5. Setup of the AE-based myoelectric controller. Four electromyographic (EMG) signals, generated during wrist movements, are acquired with surface electrodes (EMG acquisition) and combined using a Vector Summation Algorithm (VSA) into a 2D vector (

2.3.1 EMG acquisition

The control of the virtual hand was performed with muscle signals acquired from four surface EMG electrodes (Delsys Inc., MA, United States), placed on the user’s right forearm (Figure 4B). Each electrode recorded one of the four wrist movements: flexion, extension, abduction, and adduction. Raw EMG signals were recorded at a sampling frequency of

A calibration procedure was performed on the recorded EMG signals to verify proper electrode placement. This step was done by visual inspection: the user was asked to perform a single wrist movement, and the study coordinator confirmed that only one of the four recorded EMG signals was active.

After that, each participant was asked to perform

In addition, we recorded

For each muscle

2.3.2 Vector Summation Algorithm

The calibrated EMG signals of the four wrist muscles were combined using a VSA to obtain a 2D control signal. The muscles that controlled wrist extension/flexion were mapped to move the 2D controller in the up/down direction (i.e.,

Matching the resting EMG position with the center of the latent space ensured that every trial started from the neutral gesture and that every movement was performed in a center-out reaching manner. In the resting position, the corresponding virtual hand gesture had all five fingers completely open.
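A minimal sketch of the vector summation step follows, assuming that each wrist muscle contributes its normalized activation along a fixed unit direction on the 2D plane. The up/down mapping for extension/flexion follows the text; mapping abduction/adduction to right/left, the normalization of activations to [0, 1], and the `gain` parameter are assumptions made for illustration. With all muscles relaxed the output is the origin, matching the resting position at the center of the latent space.

```python
import numpy as np

# Unit direction assigned to each wrist muscle on the 2D control plane.
# Up/down for extension/flexion follows the text; right/left for abduction/adduction is assumed.
DIRECTIONS = {
    "extension": np.array([0.0, 1.0]),
    "flexion": np.array([0.0, -1.0]),
    "abduction": np.array([1.0, 0.0]),
    "adduction": np.array([-1.0, 0.0]),
}

def vector_summation(activations, gain=1.0):
    """Sum each muscle's normalized activation (assumed in [0, 1]) along its direction.

    All muscles relaxed -> origin (latent-space center, i.e. the neutral gesture).
    `gain` is a hypothetical scale factor matching the extent of the latent space.
    """
    point = np.zeros(2)
    for muscle, direction in DIRECTIONS.items():
        point += activations[muscle] * direction
    return gain * point

# Usage: moderate extension plus slight abduction moves the point up and to the right.
print(vector_summation({"extension": 0.6, "flexion": 0.0, "abduction": 0.2, "adduction": 0.0}))
```

Opposing muscles cancel along their shared axis, so co-contraction of an antagonist pair leaves the point near the center rather than driving it to an extreme.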

An additional 3rd order low-pass Butterworth filter of

2.3.3 EMG-to-kinematics map

After mapping calibrated EMG signals to a 2D cursor position on the screen (

2.3.4 Kinematic decoding

The decoder sub-network of the VAE model was finally utilized to reconstruct a point on a 2D control space (

With this controller, a user could therefore control a high-dimensional virtual hand by moving a point on a 2D plane (see Supplementary Video S1).
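The decoding step can be sketched as a small feed-forward network that maps a 2D latent point to 17 joint angles. The hidden width, the tanh activation, and the random weights are hypothetical stand-ins for the trained VAE decoder, whose activation function was selected during hyperparameter tuning. The single hidden layer mirrors the three-hidden-layer structure in Figure 1, where the latent layer sits in the middle.

```python
import numpy as np

# Hypothetical decoder sizes: 2 latent units -> 32 hidden units -> 17 joint angles.
LATENT_DIM, HIDDEN_DIM, N_JOINTS = 2, 32, 17

rng = np.random.default_rng(0)
# Random weights stand in for the trained VAE decoder parameters.
W1 = rng.normal(scale=0.5, size=(LATENT_DIM, HIDDEN_DIM))
b1 = np.zeros(HIDDEN_DIM)
W2 = rng.normal(scale=0.5, size=(HIDDEN_DIM, N_JOINTS))
b2 = np.zeros(N_JOINTS)

def decode(z):
    """Map latent point(s) of shape (2,) or (n, 2) to joint angles of shape (17,) or (n, 17)."""
    h = np.tanh(np.asarray(z, dtype=float) @ W1 + b1)  # nonlinear hidden layer (tanh assumed)
    return h @ W2 + b2                                  # linear output layer

# Usage: the 2D control point from the vector summation step drives all 17 joints.
angles = decode([0.3, -0.8])
print(angles.shape)  # (17,)
```

In a full loop, the decoded angles would then pass through the joint-limit check before being applied to the virtual hand.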

2.4 Controller validation

To determine the effectiveness of the AE-based controller, we designed a validation experiment. We recruited four unimpaired right-handed individuals, entirely naïve to the myoelectric controller, to participate in a 2-h experiment. Participant recruitment and data collection conformed to the requirements of the University of Washington’s Institutional Review Board (IRB). Informed written consent was obtained from each participant prior to the experiment.

In the experiment, the participants completed a series of trials to learn to use the AE-based controller to operate the 17D virtual hand on the screen. The experiment consisted of two training sessions (Train1 and Train2) with a break in between.

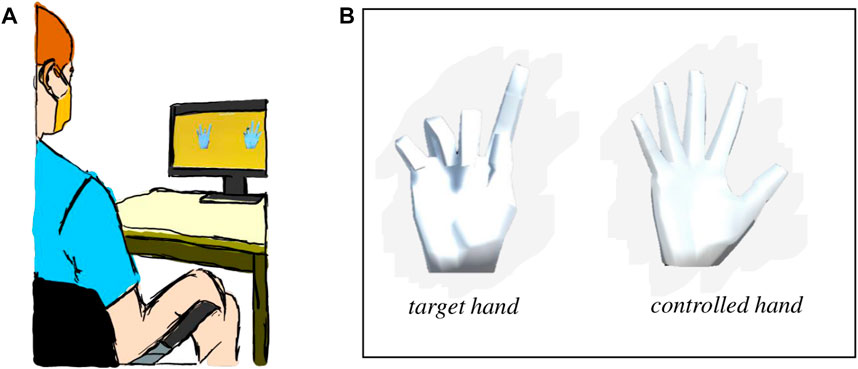

No physical constraints were imposed on the participants throughout the experiment; they were free to move their right arm while performing the experimental tasks. The participants were seated in an upright position in front of a computer screen, at approximately

FIGURE 6. (A) Experimental setup with the participant sitting approximately

2.4.1 Training sessions

During the training sessions, the participants were presented with two virtual hands (Figure 6B). The hand on the left was the target hand the participants needed to match. The hand on the right was the hand controlled with the wrist muscles through the myoelectric interface. No visual feedback was given about the location of the controller or the target gesture on the 2D latent space. Hence, the participants were unaware of the underlying dimensionality of the controller. The target hand gestures were derived from the VAE latent space shown in Figure 3.

The participants started a new trial with their muscles completely relaxed. This resulted in the controlled hand starting from the neutral gesture. After a sound cue indicating a new trial, the target hand formed a new gesture that the participants were required to match. Contracting their forearm muscles, they had

After each trial, successful or not, the participants heard a sound cue that asked them to relax their muscles, which returned their controlled hand back into the neutral gesture. Once completely relaxed for

The gestures that the participants were required to match during Train1 and Train2 were slight variations of the original eight ASL gestures, which were created by selecting a set of equidistant points between

FIGURE 7. Sampling of gesture variations from the latent space. Variations were sampled from 50% to 90% of the nominal path between the neutral gesture and the complete gesture on the latent space.
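The sampling of gesture variations in Figure 7 can be sketched as linear interpolation in the latent space. This sketch assumes that the neutral gesture sits at the latent-space origin (consistent with the resting EMG position being mapped to the center of the latent space), that the "nominal path" is a straight line, that ten variations are drawn per gesture (as in Figure 8), and that a match is declared when the controlled point lies within the 0.5-unit acceptable range of the target, measured as a Euclidean distance. Any hold-time requirement is omitted.

```python
import numpy as np

def sample_variations(neutral, gesture, n=10, start=0.5, stop=0.9):
    """Equidistant latent targets from start*100% to stop*100% of the straight
    path from the neutral gesture to the complete gesture."""
    fractions = np.linspace(start, stop, n)
    return neutral + fractions[:, None] * (gesture - neutral)

def is_match(cursor, target, tolerance=0.5):
    """Match when the controlled point is within `tolerance` latent units of the target."""
    return bool(np.linalg.norm(np.asarray(cursor) - np.asarray(target)) <= tolerance)

# Usage: a hypothetical latent location for one complete ASL gesture.
gesture = np.array([1.5, -2.0])
targets = sample_variations(np.zeros(2), gesture)
print(targets.round(2))
print(is_match([1.0, -1.2], targets[0]))
```

Because the variations lie on a short segment while the tolerance is fixed, neighboring targets can fall within each other's acceptance radius, which is the point raised in the next paragraph.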

It is important to note that given the acceptable range of 0.5 units on the latent space and many variations being sampled on a small latent space segment (i.e., the

Train1 session contained a total of

2.5 Outcome measures

The performance of each participant was assessed with the following metrics.

2.5.1 Adjusted match time (AMT)

AMT was defined as the time taken to complete a hand gesture match,

For every missed trial, the AMT of the trial was set to the timeout value (

2.5.2 Adjusted path efficiency (APE)

APE was defined as a measure of straightness of the path taken to match the gesture, calculated on the 2D control plane. It was calculated using Eq. 12, where

Similar to AMT, for every missed trial, the APE of the trial was set to the lowest possible value of 0%.
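Since Eq. 12 is not reproduced here, the sketch below uses a common definition of path efficiency as an assumption: the straight-line distance between the first and last points of the 2D path divided by the length of the path actually traveled, in percent. It also applies the missed-trial rules described above (AMT set to the timeout, APE set to 0%). `timeout` is a parameter because its value is not given in this text.

```python
import numpy as np

def path_efficiency(path):
    """100 * straight-line distance (start to end) / traveled path length, for 2D points of shape (T, 2)."""
    path = np.asarray(path, dtype=float)
    traveled = np.sum(np.linalg.norm(np.diff(path, axis=0), axis=1))
    direct = np.linalg.norm(path[-1] - path[0])
    return 100.0 * direct / traveled if traveled > 0 else 0.0

def adjusted_metrics(path, match_time, matched, timeout):
    """Return (AMT, APE); a missed trial gets the timeout value and 0%, respectively."""
    if not matched:
        return timeout, 0.0
    return match_time, path_efficiency(path)

# Usage: an L-shaped path to the target versus a missed trial.
print(adjusted_metrics([[0, 0], [1, 0], [1, 1]], match_time=4.2, matched=True, timeout=10.0))
print(adjusted_metrics([[0, 0], [0.3, 0.1]], match_time=10.0, matched=False, timeout=10.0))
```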

2.6 Statistical analysis

For statistical analysis, we used MATLAB Statistics Toolbox functions (MathWorks, Natick, MA, United States). The Anderson-Darling test was used to assess the normality of the data (Anderson and Darling, 1954). Since the data were found to be non-Gaussian, we used non-parametric tests for statistical analysis.
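The analysis itself was performed in MATLAB. As an illustration only, an equivalent workflow in Python with SciPy (a swapped-in tool, not the authors') might look like the following. The AMT values are synthetic and serve only to show the calls: `scipy.stats.anderson` for the normality check and `scipy.stats.wilcoxon` for the paired, non-parametric comparison between the beginning and the end of training.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(1)
# Synthetic per-trial AMT values (seconds) for one participant, early vs. late in training.
amt_begin = rng.uniform(5.0, 10.0, size=16)
amt_end = amt_begin - rng.uniform(0.5, 3.0, size=16)

# Anderson-Darling normality check: for dist="norm", critical_values[2] is the 5% level.
ad = stats.anderson(amt_begin, dist="norm")
reject_normality_5pct = ad.statistic > ad.critical_values[2]

# Paired, non-parametric comparison of beginning vs. end of training.
res = stats.wilcoxon(amt_begin, amt_end)
print(f"A2={ad.statistic:.3f}, Wilcoxon W={res.statistic}, p={res.pvalue:.2e}")
```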

We evaluated the differences in the average AMT and APE values for each participant between the beginning and the end of training using the Wilcoxon signed-rank test (Wilcoxon, 1945). The threshold for significance was set to

3 Results

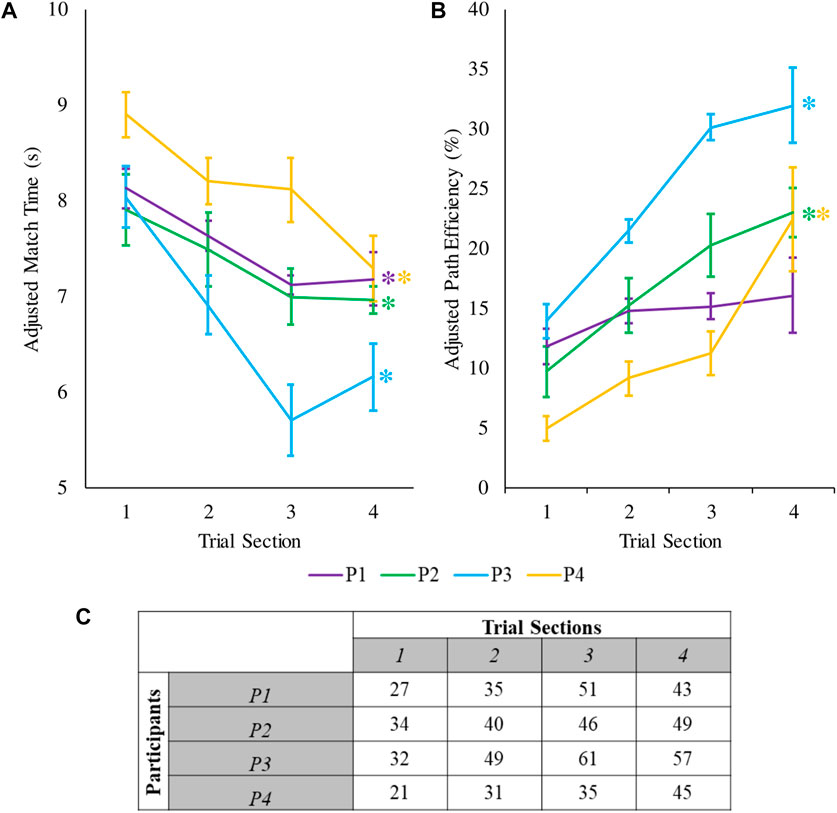

Here we present the results of the validation experiment. By the end of training, all four participants were able to significantly decrease their adjusted match times, and three out of four participants significantly increased their adjusted path efficiencies (

FIGURE 8. (A) Mean and standard error of the adjusted match time for the four participants during the validation experiment. The average values are calculated over ten repetitions, each of which consisted of eight distinct ASL gestures averaged for each participant. (B) Mean and standard error of the adjusted path efficiency during the validation experiment. Asterisks indicate statistical significance between the beginning and the end of the experiment. (C) Number of successfully matched gestures for every trial section. Every trial section consisted of 10 variations of the eight ASL gestures (i.e., 80 trials per trial section).

4 Discussion

In this paper, we explored the use of nonlinear AEs to control a complex high-dimensional hand system via a myoelectric interface. In addition, we validated the controller’s usability via a simple experiment. All four participants improved their performance by the end of training (all four significantly for AMT and three out of four significantly for APE), suggesting that the AE-based controller has strong potential for performing high-dimensional control via a low-dimensional space in upper-limb prosthetics.

4.1 Comparing to PCA-based controllers

One of the major outcomes of this study was the application of a nonlinear AE to the development of a controller in which the complex kinematics of a virtual hand with 17 DOFs were operated via a 2D plane. In the past, several groups have developed similar hand controllers using a linear method such as PCA (Magenes et al., 2008; Matrone et al., 2010; Matrone et al., 2012; Belter et al., 2013; Segil and Weir, 2013; Segil et al., 2014; Segil and Weir, 2015).

In the studies where a linear postural controller was validated with a myoelectric interface (Matrone et al., 2012; Segil et al., 2014; Segil and Weir, 2015), the average movement times (time to successfully reach but not hold the hand in a correct grasp) were between

Despite potential differences between the linear- and nonlinear-based controllers, the nonlinear approach has a major advantage: it reconstructs a larger share of the variance of the input signal with fewer latent dimensions, as found in Portnova-Fahreeva et al. (2020). This means that a nonlinear-based controller with just two latent dimensions would produce a reconstructed hand that is closer in appearance (i.e., kinematically) to the original input, whereas a PCA-based controller would reconstruct the gestures less accurately. Consequently, such a controller may yield more natural movements of a prosthetic hand than its PCA-based counterpart.

4.2 Limitations

One of the main limitations of the study presented in this work is the small sample size. This was mainly because the study was run for validation purposes only and participant recruitment took place during the pandemic, when in-person interactions at universities were limited.

The long match times, even during successful trials, were another limitation, especially in the context of myoelectric control. They point specifically to the ineffectiveness of the training paradigm used in this paper to train the participants on the AE-based controller. The effect of different training paradigms on learning the novel controller is discussed in our other work (Portnova-Fahreeva et al., 2022).

4.3 Applicability for prosthetic users

When designing this study, the end-user group that we considered was upper-limb amputees who use prosthetic hands in their daily lives. Although our validation experiment was performed with unimpaired individuals, it highlights the possibility of using nonlinear controllers to operate a myoelectric hand prosthesis. Our myoelectric interface used muscle signals generated by wrist movements to move within the 2D latent space. Although an upper-limb amputee might not be able to generate these signals, other, more proximal locations can be chosen to obtain clean signals for controlling the position of a 2D cursor and thus operating a high-dimensional hand. The main advantage of our controller is that it does not require a large number of signals to control a hand with many DOFs; it needs only enough signals to move the cursor on a 2D plane. The interface is not even limited to EMG: a 2D control signal can be obtained from a simpler interface based on Inertial Measurement Units (IMUs). For example, IMUs placed on the user’s shoulders could control the posture of the prosthetic hand. IMUs have been widely used in the past to operate low-dimensional controllers (Thorp et al., 2015; Seáñez-González et al., 2016; Abdollahi et al., 2017; Pierella et al., 2017; Rizzoglio et al., 2020). Thus, nonlinear AE-based controllers, such as the one proposed here, have the potential to serve as a versatile and modular solution for controlling complex upper-limb prosthetic devices.

Lastly, it is important to note that most currently available prosthetic hands do not provide continuous control of individual fingers. However, we believe that such designs result from the limitations of existing prosthetic control strategies (e.g., pattern recognition), which are discrete and allow for only a limited number of predefined gestures. By developing controllers, such as the AE-based controller, that allow the user to operate the device in a continuous manner, we aim to push past these limits and jumpstart the development of prosthetic hands that support continuous control.

Data availability statement

The raw data supporting the conclusion of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving human participants were reviewed and approved by the University of Washington Human Subjects Division. The patients/participants provided their written informed consent to participate in this study.

Author contributions

AP-F contributed to the design and implementation of the study as well as the data analysis performed for the experiment. AP-F also wrote the manuscript with the support of FR, ER, and FM-I. FR contributed to the design of the neural network architecture described in the paper, provided code for parts of the data analysis, and advised on preprocessing steps. ER and FM-I contributed to the conception and design of the study as well as the interpretation of the results. All authors contributed to the article and approved the submitted version.

Funding

This project was supported by NSF-1632259, by DHHS NIDILRR Grant 90REGE0005-01-00 (COMET), by NIH/NIBIB Grant 1R01EB024058-01A1, and NSF grant 2054406.

Acknowledgments

The authors of this paper would like to thank all the participants who took part in this study as well as Dr. Dalia De Santis, who has always been an incredible mentor on conducting thorough research and performing valuable analysis.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fbioe.2023.1134135/full#supplementary-material

References

Abdollahi, F., Farshchiansadegh, A., Pierella, C., Seáñez-González, I., Thorp, E., Lee, M.-H., et al. (2017). Body-machine interface enables people with cervical spinal cord injury to control devices with available body movements: Proof of concept. Neurorehabilitation neural repair 31, 487–493. doi:10.1177/1545968317693111

Anderson, T. W., and Darling, D. A. (1954). A test of goodness of fit. J. Am. Stat. Assoc. 49, 765–769. doi:10.1080/01621459.1954.10501232

Belter, J. T., Segil, J. L., Dollar, A. M., and Weir, R. F. (2013). Mechanical design and performance specifications of anthropomorphic prosthetic hands: A review. J. rehabilitation Res. Dev. 50, 599. doi:10.1682/jrrd.2011.10.0188

Castellini, C. (2020). “Upper limb active prosthetic systems—Overview,” in Wearable robotics (Amsterdam, Netherlands: Elsevier), 365–376.

Ciocarlie, M. T., and Allen, P. K. (2009). Hand posture subspaces for dexterous robotic grasping. Int. J. Robotics Res. 28, 851–867. doi:10.1177/0278364909105606

Iqbal, N. V., Subramaniam, K., and P., S. A. (2018). A review on upper-limb myoelectric prosthetic control. IETE J. Res. 64, 740–752. doi:10.1080/03772063.2017.1381047

Kingma, D. P., and Welling, M. (2013). Auto-encoding variational bayes. arXiv preprint arXiv:1312.6114.

Kullback, S., and Leibler, R. A. (1951). On information and sufficiency. Ann. Math. statistics 22, 79–86. doi:10.1214/aoms/1177729694

Magenes, G., Passaglia, F., and Secco, E. L. (2008). “A new approach of multi-dof prosthetic control,” in 2008 30th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Vancouver, BC, Canada, 20-25 August 2008, 3443–3446.

Matrone, G. C., Cipriani, C., Carrozza, M. C., and Magenes, G. (2012). Real-time myoelectric control of a multi-fingered hand prosthesis using principal components analysis. J. neuroengineering rehabilitation 9, 40–13. doi:10.1186/1743-0003-9-40

Matrone, G. C., Cipriani, C., Secco, E. L., Magenes, G., and Carrozza, M. C. (2010). Principal components analysis based control of a multi-dof underactuated prosthetic hand. J. neuroengineering rehabilitation 7, 16–13. doi:10.1186/1743-0003-7-16

O'Neill, P., Morin, E. L., and Scott, R. N. (1994). Myoelectric signal characteristics from muscles in residual upper limbs. IEEE Trans. Rehabilitation Eng. 2, 266–270. doi:10.1109/86.340871

Pierella, C., De Luca, A., Tasso, E., Cervetto, F., Gamba, S., Losio, L., et al. (2017). “Changes in neuromuscular activity during motor training with a body-machine interface after spinal cord injury,” in 2017 International Conference on Rehabilitation Robotics (ICORR), London, UK, 17-20 July 2017, 1100–1105.

Pistohl, T., Cipriani, C., Jackson, A., and Nazarpour, K. (2013). Abstract and proportional myoelectric control for multi-fingered hand prostheses. Ann. Biomed. Eng. 41, 2687–2698. doi:10.1007/s10439-013-0876-5

Portnova-Fahreeva, A. A., Rizzoglio, F., Casadio, M., Mussa-Ivaldi, F. A., and Rombokas, E. (2022). Learning to operate a high-dimensional hand via a low-dimensional controller. Front. Bioeng. Biotechnol. 11, 647. doi:10.3389/fbioe.2023.1139405

Portnova-Fahreeva, A. A., Rizzoglio, F., Nisky, I., Casadio, M., Mussa-Ivaldi, F. A., and Rombokas, E. (2020). Linear and non-linear dimensionality-reduction techniques on full hand kinematics. Front. Bioeng. Biotechnol. 8, 429. doi:10.3389/fbioe.2020.00429

Rizzoglio, F., Pierella, C., De Santis, D., Mussa-Ivaldi, F., and Casadio, M. (2020). A hybrid Body-Machine Interface integrating signals from muscles and motions. J. Neural Eng. 17, 046004. doi:10.1088/1741-2552/ab9b6c

Santello, M., Flanders, M., and Soechting, J. F. (1998). Postural hand synergies for tool use. J. Neurosci. 18, 10105–10115. doi:10.1523/jneurosci.18-23-10105.1998

Seáñez-González, I., Pierella, C., Farshchiansadegh, A., Thorp, E. B., Wang, X., Parrish, T., et al. (2016). Body-machine interfaces after spinal cord injury: Rehabilitation and brain plasticity. Brain Sci. 6, 61. doi:10.3390/brainsci6040061

Segil, J. L., Controzzi, M., Weir, R. F., and Cipriani, C. (2014). Comparative study of state-of-the-art myoelectric controllers for multigrasp prosthetic hands. J. rehabilitation Res. Dev. 51, 1439–1454. doi:10.1682/jrrd.2014.01.0014

Segil, J. L., and Weir, R. F. (2013). Design and validation of a morphing myoelectric hand posture controller based on principal component analysis of human grasping. IEEE Trans. Neural Syst. Rehabilitation Eng. 22, 249–257. doi:10.1109/tnsre.2013.2260172

Segil, J. L., and Weir, R. F. (2015). Novel postural control algorithm for control of multifunctional myoelectric prosthetic hands. J. rehabilitation Res. Dev. 52, 449–466. doi:10.1682/jrrd.2014.05.0134

Thorp, E. B., Abdollahi, F., Chen, D., Farshchiansadegh, A., Lee, M.-H., Pedersen, J. P., et al. (2015). Upper body-based power wheelchair control interface for individuals with tetraplegia. IEEE Trans. neural Syst. rehabilitation Eng. 24, 249–260. doi:10.1109/tnsre.2015.2439240

Keywords: dimensionality reduction, autoencoders, prosthetics, hand, myoelectric, controller

Citation: Portnova-Fahreeva AA, Rizzoglio F, Mussa-Ivaldi FA and Rombokas E (2023) Autoencoder-based myoelectric controller for prosthetic hands. Front. Bioeng. Biotechnol. 11:1134135. doi: 10.3389/fbioe.2023.1134135

Received: 30 December 2022; Accepted: 15 June 2023;

Published: 26 June 2023.

Edited by:

Yang Liu, Hong Kong Polytechnic University, Hong Kong SAR, China

Reviewed by:

Andrea d’Avella, University of Messina, Italy

Matthew Dyson, Newcastle University, United Kingdom

Copyright © 2023 Portnova-Fahreeva, Rizzoglio, Mussa-Ivaldi and Rombokas. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Alexandra A. Portnova-Fahreeva, alexandra.portnova@gmail.com
