- 1Faculty of Science, Hong Kong Baptist University, Hong Kong, China

- 2College of Information and Computer, Taiyuan University of Technology, Taiyuan, China

To characterize more accurately and comprehensively how lesion features in pulmonary medical images change and develop over time, this study predicts the evolution of pulmonary nodules along the longitudinal time dimension and constructs a model, based on multiscale three-dimensional (3D) feature fusion, that predicts whether pulmonary lesions at different periods are benign or malignant. From the sequence of computed tomography (CT) images of a patient at different stages, 3D interpolation was used to generate 3D lung CT images. The 3D features of lung lesions of different sizes were extracted using 3D convolutional neural networks and fused. A time-modulated long short-term memory network was constructed to learn the feature vectors of lung lesions, which carry the temporal and spatial characteristics of different periods, and to predict whether the lesions are benign or malignant. Experiments show that the area under the curve of the proposed method is 92.71%, which is higher than that of the traditional method.

1 Introduction

Because of factors such as smoking, air pollution, and occupational environment, lung cancer has become one of the malignant tumors that most threaten human health and life and is the leading cause of death among all cancers (Taillant et al., 2004; Zhang et al., 2018). Global cancer data show that the numbers of new cases and deaths of lung cancer worldwide in 2018 were 2.1 million and 1.8 million, respectively, the highest morbidity and mortality among all cancers. The 5-year survival rate of patients with advanced lung cancer is approximately 16%, but with effective treatment of early-stage disease, the 5-year survival rate can be approximately four to five times higher (Nagaratnam et al., 2018 Cheuk). Pulmonary nodules are an early manifestation of lung cancer, and predicting whether they are benign or malignant is very important for radiologists carrying out cancer staging assessment and individualized clinical treatment planning. With the development of medical imaging technology, the number of computed tomography (CT) images of the lungs continues to increase, but the number of experienced physicians is limited, so image data grow explosively while the capacity for manual diagnosis falls seriously short. Therefore, computer-aided diagnosis technology is urgently needed (Zhang et al., 2020a) to assist physicians in feature extraction and in the benign and malignant prediction of lung nodules.

In clinical diagnosis, lung medical image processing is often limited to data from a single examination period of the patient: the feature vectors of a slice at a certain period are considered in isolation, and the global features that carry spatial information along the time axis are ignored. In addition, existing prediction methods, such as medical decision-making systems (Christo et al., 2020) combined with intelligent optimization (Deng et al., 2020; Zhang et al., 2021), are divided into multiobjective (Cui et al., 2020; Cai et al., 2021a) and single-objective optimization (Boudjemaa et al., 2020; Yang et al., 2020). Although they can consider a more comprehensive set of factors, they mostly rely on handcrafted features. Because of the limited expressive power of handcrafted features, the prediction results of existing methods are often unsatisfactory. At the same time, because of the complexity of the growth and evolution of lung nodules in the lung cancer lesion area (Duffy and Field, 2020), the same lesion often has different imaging manifestations at different periods. The medical imaging data of lesions at different periods contain a large amount of information related to their evolution (development, death). Lung CT images have blurred edges, low gray values, and texture information that is difficult to express, so it is difficult to characterize lung lesions accurately and comprehensively. In recent years, longitudinal prediction methods have been proposed (Santeramo et al., 2018; Oh et al., 2019), but they are rarely applied in the field of pulmonary medicine. Existing intelligent diagnosis mostly uses isolated image fragments, which cannot present the entire cycle of the lesion, so the characteristics of lung cancer at different periods cannot be linked.

Figure 1 shows the evolution trend of the sequence of long-course lung lesions examined every 3 months in the same patient.

We propose a scheme that uses recent deep learning techniques (Cui et al., 2021) to extract the deep features of long-term lung CT lesion sequence images for the early prediction of benign and malignant lung lesions. From the sequence images of the lesions in each period, the scheme makes full use of the temporal and spatial information of the images to extract the deep features of the lesions at different periods. Given the characteristics of lung medical images at different periods, a long short-term memory recurrent neural network (RNN) architecture is well suited to the longitudinal prediction of malignancy in lung lesions, which provides reliable help for physicians.

The major contributions of this article are as follows:

1) On the lung lesion image data set, a region proposal network (RPN) was used to extract the candidate regions (Ren et al., 2017), and linear interpolation was used to obtain the three-dimensional (3D) structure of each candidate region.

2) We propose a novel method that uses a deep 3D convolutional neural network (CNN) to extract the deep hidden features of long-duration lung lesions; compared with their 2D counterparts, 3D CNNs can encode richer spatial information and extract more discriminative representations via the hierarchical architecture trained with 3D samples.

3) We propose a novel long short-term memory (LSTM) network with time modulation information that propagates spatial–temporal information between adjacent slices of pulmonary lesions over a long period and captures the corresponding long-term dependencies. It removes the requirement that the input images of lung lesions be acquired at equal intervals and thereby predicts the next stage of the pulmonary lesion.

2 Related Work

2.1 Methods of Extracting Medical Image Feature Information

The large amount of information contained in the lesions in each period of medical imaging has important guiding significance for obtaining accurate prediction results, and accurate prediction results also play an important guiding role for doctors’ diagnosis (Hu et al., 2016). For extracting a large amount of information from the lesions, Zhao and Du (2016) used dimensionality reduction technology and deep learning technology, respectively, to extract spectral features and spatial features and used CNN to find space-related features. Bodla et al. (2017) proposed a face recognition method based on deep heterogeneous feature fusion, which uses different deep CNNs (DCNNs) to concatenate the generated features and merge the feature information. Khusnuliawati et al. (2017) proposed that the scale invariant feature transform and local extensive binary pattern should be used for multifeature extraction, and the extracted features should be concatenated and fused in the form of histogram. Xiao et al. (2015) proposed a feature fusion method based on SoftMax regression to perform effective feature fusions by estimating the similarity measure from object to class and the probabilities that each object belongs to a different kind. Shi et al. (2017) put forward a new nonlinear measurement learning method, which uses deep sparse autoencoder feature fusion strategy based on deep network.

2.2 Application of Traditional Methods to Time-Series Data

In recent years, scholars have also studied time-series data in medicine. Onisko et al. (2016) analyzed medical time series through the Kaplan–Meier estimator, the Cox proportional hazards regression model, and dynamic Bayesian network modeling. Li and Feng (2015) predicted the number of future medical appointments by analyzing the appointment capacity of emergency patients every day and every hour. Cheng et al. (2020) applied a Bayesian nonparametric model based on Gaussian process regression to hospital patient monitoring, using clinical covariables and all information provided by laboratory tests, and successfully conducted medical intervention. Because deep learning is well suited to time-series learning, many scholars have applied it in many fields. Chandra (2015) proposed using an RNN to predict collaborative evolution by analyzing time series. Fragkiadaki et al. (2016) proposed an improved RNN model that captures moving body gestures in video for recognition and prediction. Koutník et al. (2014) introduced a modified clockwork RNN architecture, which divides its hidden layer into separate modules so that each module processes its input at its own temporal granularity, which improves task performance and speeds up the network.

2.3 Application of CNN and LSTM to Time-Series Data

In recent years, CNNs have been successfully used to detect radiological anomalies in medical images, such as ordinary X-rays. LSTM is a special type of RNN that can classify, process, and predict time series (Graves, 2012; Zhang, 2020). The internal state of the LSTM (also known as the cell state or memory) enables the architecture to retain information from earlier time steps. The standard LSTM contains memory blocks, which contain memory cells. A typical memory block consists of three main components: an input gate controlling the flow of input activations into the memory cell, an output gate controlling the flow of output activations, and a forget gate regulating the internal state of the cell. The forget gate adjusts how much of the internal state from the previous time step is used. Santeramo et al. (2018) attempted to automate the analysis of longitudinal medical image data by using an LSTM network to analyze the temporal context of a series of chest radiographs. In the field of breast pathological images, Kooi and Karssemeijer (2017) proposed a region of interest (ROI)–based method to compare plaques aligned at different time points. Although the latter method is slightly better than single-examination detection, it depends on the detection of specific lesions and requires local data.
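The memory block described above can be illustrated with a minimal scalar sketch of one standard LSTM step. This is not the network used in this article: the function name `lstm_step`, the parameter layout, and all weight values are hypothetical and chosen only to make the roles of the three gates visible.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, p):
    """One step of a scalar LSTM memory block.

    p maps each gate name to (w_x, w_h, b); all weights are hypothetical.
    """
    def pre(name):
        w_x, w_h, b = p[name]
        return w_x * x + w_h * h_prev + b

    i = sigmoid(pre("input"))    # input gate: how much new content enters the cell
    f = sigmoid(pre("forget"))   # forget gate: how much of the previous cell state is kept
    o = sigmoid(pre("output"))   # output gate: how much of the cell state is exposed
    g = math.tanh(pre("cand"))   # candidate content for the cell
    c = f * c_prev + i * g       # updated internal (cell) state
    h = o * math.tanh(c)         # updated hidden state
    return h, c


# Illustrative weights and a short input sequence (both made up).
params = {name: (0.5, 0.5, 0.0) for name in ("input", "forget", "output", "cand")}
h, c = 0.0, 0.0
for x in [1.0, 0.5, -0.3]:
    h, c = lstm_step(x, h, c, params)
```

Setting the forget-gate bias to a large negative value makes the cell discard its previous state almost entirely, which is one way to see what that gate regulates.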

These algorithms are very effective but are rarely applied to long-term lung CT image prediction. So far, most studies have applied CNNs to individual examinations and discarded previously available clinical information. One limitation of traditional LSTMs is that they implicitly assume equally spaced observations, whereas medical examinations are event based, so the sampling is irregular.

LSTMs and, more generally, RNNs do not perform well on time series with irregularly sampled or missing data (Che et al., 2018; Zhang, 2021). Previous attempts to apply LSTMs to irregularly sampled data points focused on accelerating algorithm convergence or reducing short-term memory in settings with high-resolution sampled data (Baytas et al., 2017). This project set out to explore the performance of the LSTM network, which has become one of the methods of choice for sequence modeling, especially when combined with CNNs for medical image feature extraction (Donahue et al., 2015; Grano and Zhang, 2019). The main advantages of combining CNNs with LSTMs are flexibility and scalability: multiple prior sequences of variable length can be classified using the same network. Longitudinal analysis of images can potentially improve the ability of machine learning algorithms to interpret imaging studies accurately and reliably, thus providing value for medical image processing (Gao et al., 2018).

3 Methods

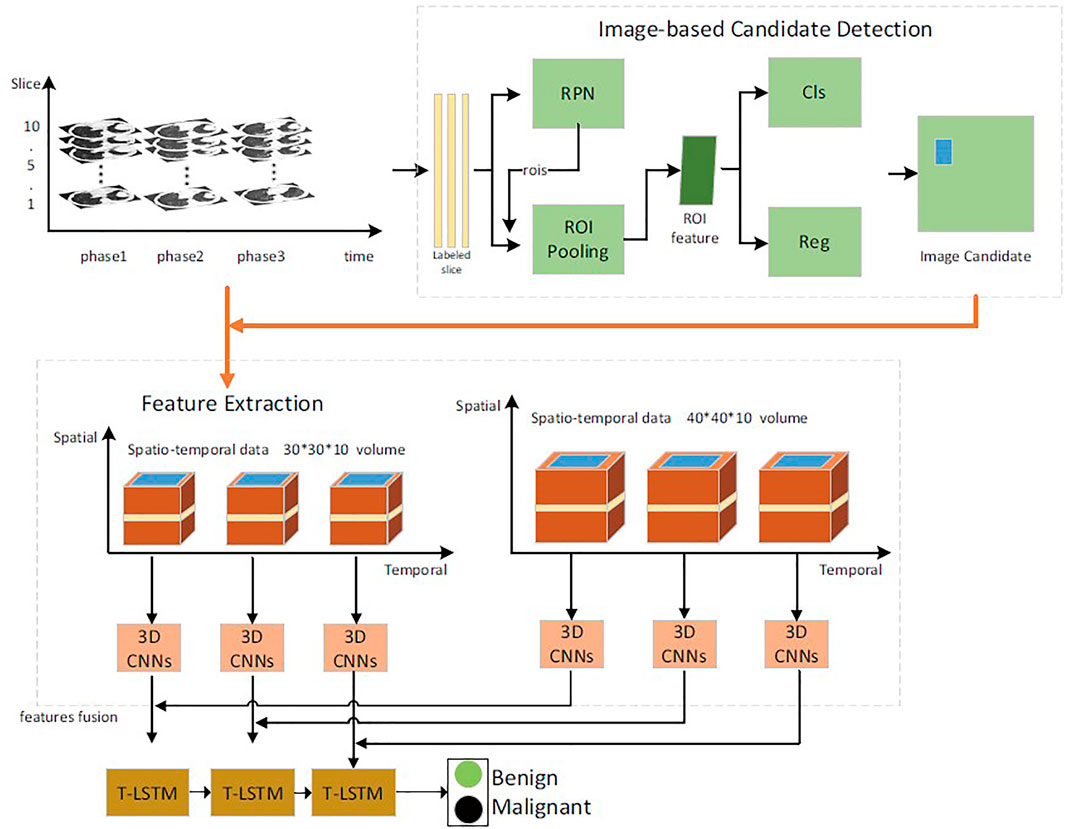

In this article, benign and malignant lung lesions are predicted by spatiotemporal feature fusion. For the CT sequence images of the same patient from the early stage to diagnosis, a Faster Region-CNN (Faster R-CNN) (Shinde et al., 2019) detector was used to generate ROIs (Qiang et al., 2015), from which the temporal and spatial features of the multilayer context information around pulmonary nodules were extracted, and a 3D CNN (Cai et al., 2021b) was used for fusion. Then, the fused temporal and spatial feature vectors of the pulmonary nodules in each period were used to study the variation trend and relationship of the feature vectors across periods with a time-modulated long short-term memory network. Finally, the time-modulated LSTM (T-LSTM) model was used to predict the evolution trend of lung lesions over a long period and to determine their malignancy. The overall process is shown in Figure 2.

AlexNet was selected as the baseline 2D CNN. CNN architectures for medical imaging usually contain fewer convolutional layers because the data sets and input sizes are small. The CNN architecture consisted of three convolutional layers and two fully connected layers, with each convolutional layer followed by a max-pooling layer. In a 2D CNN, the kernel moves in two directions, and the input and output data are 3D; it is mainly used for single images. In a 3D CNN, the kernel moves in three directions, and the input and output data are 4D; it is mainly used for 3D image data (magnetic resonance imaging, CT scans).
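The difference between the two kernel motions can be made concrete by computing the spatial output size of an unpadded convolution. The sketch below is only illustrative: the helper `conv_output_shape`, the 3 × 3 and 3 × 3 × 3 kernel sizes, and the stride of 1 are assumptions and are not taken from the network configuration of this article.

```python
def conv_output_shape(input_shape, kernel_shape, stride=1):
    """Spatial output size of an unpadded ("valid") convolution.

    The kernel slides along every axis listed in input_shape, so a 2D
    kernel moves in two directions and a 3D kernel in three.
    """
    if len(input_shape) != len(kernel_shape):
        raise ValueError("input and kernel must have the same number of axes")
    return tuple((n - k) // stride + 1 for n, k in zip(input_shape, kernel_shape))


# A single 30 x 30 slice with a 3 x 3 kernel (kernel size is an assumption).
slice_2d = conv_output_shape((30, 30), (3, 3))

# A 10 x 30 x 30 volume (depth, height, width) with a 3 x 3 x 3 kernel.
volume_3d = conv_output_shape((10, 30, 30), (3, 3, 3))

print(slice_2d, volume_3d)
```

Adding the channel axis to these spatial sizes gives the 3D tensors (channels, height, width) of a 2D CNN and the 4D tensors (channels, depth, height, width) of a 3D CNN mentioned above.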

3.1 Lung CT Sequence Image Preprocessing

During diagnosis, physicians focus on pulmonary nodules, which appear as opacities with a maximum diameter of no more than 30 mm in the pulmonary parenchyma and occupy only a small part of the chest CT area. To reduce the interference of other organs and tissues with the diagnosis and to reduce the complexity of the algorithm, the lung CT images obtained from the National Lung Screening Trial (NLST) and the cooperative hospital were preprocessed. Because the locations of pulmonary nodules were not marked in detail in the data set, we adopted a Faster R-CNN detector to detect the target nodules and cropped the rectangular area centered on each ROI to construct the pulmonary nodule data set.

We screened lung CT images of patients followed up for 3 years or more in the NLST data set to construct a long-term data set. The NLST data set marks the slice number and approximate location of the most prominent pulmonary nodule in each phase sequence. The pulmonary CT image corresponding to the slice number was examined for nodules. ResNet-101 (He et al., 2016) was selected as the backbone network of Faster R-CNN. Bounding boxes were defined with five aspect ratios of 1:3, 1:2, 1:1, 2:1, and 3:1 and four scales of 8 × 8, 16 × 16, 32 × 32, and 64 × 64 to cover blocks of different shapes. It is worth noting that the 1:3 and 3:1 aspect ratio settings are due to the presence of pulmonary vascularized lesions, which are critical for the diagnosis of lung cancer.
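The five aspect ratios and four scales give 20 box shapes per location. The following sketch enumerates them under two assumptions that the text does not state: each ratio is read as width:height, and each box keeps the area of its scale (for example, 64 pixels for the 8 × 8 scale), as is common for Faster R-CNN anchors. The function name `make_anchors` is hypothetical.

```python
import math

ASPECT_RATIOS = [(1, 3), (1, 2), (1, 1), (2, 1), (3, 1)]  # assumed width:height
SCALES = [8, 16, 32, 64]                                   # side length of the square box


def make_anchors(ratios=ASPECT_RATIOS, scales=SCALES):
    """Return (width, height) for every scale/ratio pair.

    Assumption: the box area stays equal to scale * scale while the
    aspect ratio changes.
    """
    anchors = []
    for s in scales:
        area = float(s * s)
        for rw, rh in ratios:
            w = math.sqrt(area * rw / rh)
            h = area / w
            anchors.append((w, h))
    return anchors


anchors = make_anchors()
print(len(anchors))
```

The elongated 1:3 and 3:1 shapes are the ones intended to cover vessel-like lesions.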

Based on the detection results, a rectangular region of 30 × 30 or 40 × 40 pixels was defined by the upper-left and lower-right corner coordinates of the annotated rectangle. Taking the slice in which the pulmonary nodule is most prominent as the center, the same rectangle was cropped from the five preceding and the five following slices to construct a 3D block. The same processing was applied to the CT images of every examination in a patient's sequence to establish the long-term pulmonary nodule sequence image data set.
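The construction of a 3D block can be sketched as a crop followed by linear interpolation along the slice axis. This is a minimal illustration on nested Python lists, not the preprocessing code of this article: the function names, the synthetic volume, the crop position, and the choice to resample the cropped slices to a depth of 10 are all assumptions.

```python
def crop_block(volume, z_center, top, left, size=30, half_depth=5):
    """Crop a size x size patch from the slices around z_center.

    volume is indexed as volume[z][y][x]; slices outside the volume are skipped.
    """
    z0 = max(0, z_center - half_depth)
    z1 = min(len(volume), z_center + half_depth + 1)
    return [[row[left:left + size] for row in volume[z][top:top + size]]
            for z in range(z0, z1)]


def resample_depth(block, new_depth):
    """Linearly interpolate between neighbouring slices to obtain new_depth slices."""
    old_depth = len(block)
    if old_depth == 1 or new_depth == 1:
        return [[row[:] for row in block[0]] for _ in range(new_depth)]
    out = []
    for k in range(new_depth):
        pos = k * (old_depth - 1) / (new_depth - 1)  # position in the old slice grid
        lo = int(pos)
        hi = min(lo + 1, old_depth - 1)
        t = pos - lo
        out.append([[(1 - t) * a + t * b for a, b in zip(ra, rb)]
                    for ra, rb in zip(block[lo], block[hi])])
    return out


# Synthetic 20 x 64 x 64 volume whose voxel value equals the slice index.
volume = [[[float(z)] * 64 for _ in range(64)] for z in range(20)]
block = crop_block(volume, z_center=10, top=17, left=17)  # 11 slices of 30 x 30
cube = resample_depth(block, 10)                           # 10 slices of 30 x 30
```

Because the synthetic voxel values grow linearly with the slice index, the interpolated slices also grow linearly, which makes the behaviour easy to check by eye.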

3.2 Spatiotemporal Feature Extraction

The feature extraction methods for pulmonary lesions can be generally divided into traditional feature extraction methods and deep learning feature extraction methods. Generally speaking, a traditional feature extraction method can describe only a specific type of information. Deep learning, such as 2D CNN, has achieved good results in image feature extraction and can express high-level semantic information of lesions. However, a solution based on 2D CNN still cannot make full use of the 3D spatial context of pulmonary nodules to extract benign and malignant information with temporal and spatial characteristics. Therefore, this article proposes a new method to extract the benign and malignant features of pulmonary nodules from CT sequences using 3D CNNs. Compared with a 2D CNN, a 3D CNN can encode more spatial information and extract more spatially discriminative information through a hierarchical structure trained on 3D samples.

Features extracted by DCNN can represent the inherent semantic information of images (Kamnitsas et al., 2016). With the emergence of deep neural networks in computer vision, 3D CNN has developed rapidly in the past few years. Although 3D medical data are very common and popular in clinical practice, 3D CNN is still in its infancy in medical application. Furthermore, the hyperparameter adjustment of thousands of filters on large data sets is still an important challenge. To alleviate this problem, migrating pretrained 3D CNN to specific application scenarios is a very efficient and simple solution (Aaron et al., 2018).

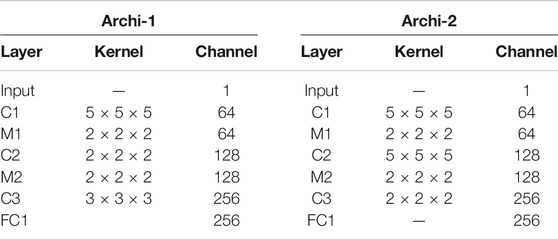

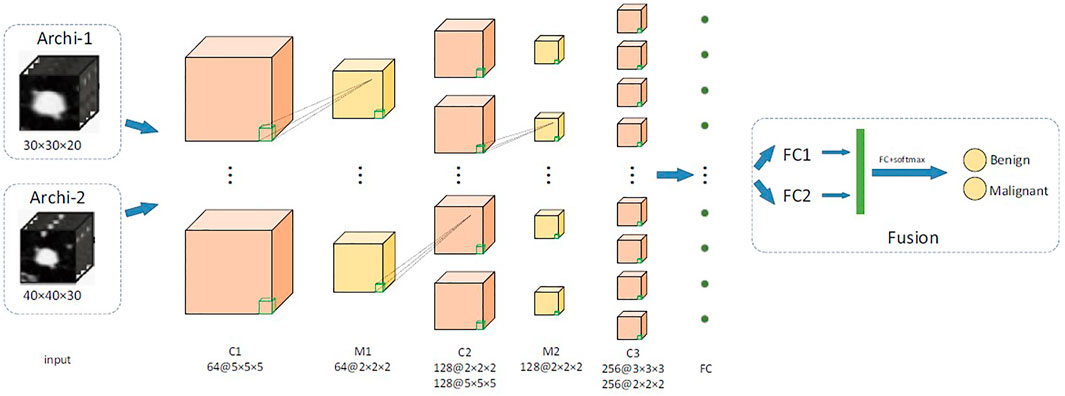

We propose a two-channel network that accepts inputs of different sizes. The main structure of our multilevel 3D CNN framework is shown in Figure 3. Each network has four convolutional layers. Both cnn-30 and cnn-40 contain a fully connected layer. After each hidden layer, a batch normalization layer is inserted to allow a higher learning rate and reduce overfitting, and a dropout layer is added to reduce overfitting further.

FIGURE 3. The main network structure of multiscale 3D CNN framework. C is the 3D convolutional layer; MP represents the 3D maximum pooling layer, whereas FC is the full connection layer.

Each of the two architectures outputs a two-class prediction (nodule or nonnodule) from the SoftMax layer at the top and a 256-D feature vector from the last hidden layer. Their outputs are then combined into a single classification result for a given original 3D volume. The feature vector is used for feature fusion and for predicting the classification of pulmonary nodules. We used a data fusion technique, namely, late fusion: the two feature vectors from the last hidden layers of the CNNs are concatenated into a complete feature vector and sent to the prediction module. Table 1 details the network configuration.
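Late fusion as described here amounts to concatenating the two 256-D vectors into one 512-D vector. The sketch below shows only that step; the function name `late_fusion` and the constant placeholder features are hypothetical and stand in for the outputs of the two CNN channels.

```python
FEATURE_DIM = 256  # size of the last hidden layer in each channel


def late_fusion(feat_30, feat_40):
    """Concatenate the last-hidden-layer features of the two channels."""
    if len(feat_30) != FEATURE_DIM or len(feat_40) != FEATURE_DIM:
        raise ValueError("each channel must provide a 256-D feature vector")
    return list(feat_30) + list(feat_40)


# Placeholder features standing in for the outputs of the two channels.
feat_30 = [0.1] * FEATURE_DIM
feat_40 = [0.2] * FEATURE_DIM
fused = late_fusion(feat_30, feat_40)
print(len(fused))
```

The fused vector is what the prediction module would receive for one examination of one nodule.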

A batch of 3D training samples is expressed as

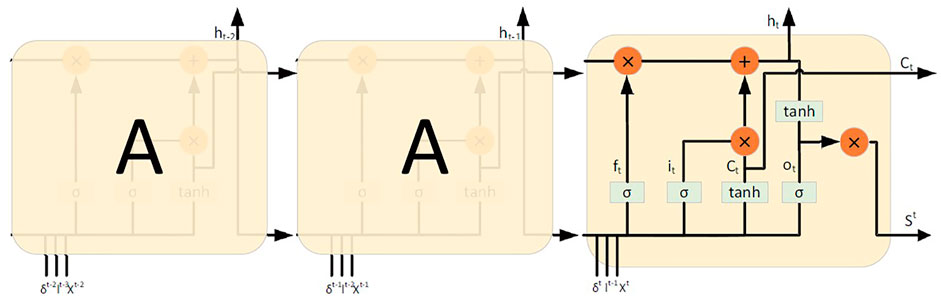

3.3 Long-Term Lung Lesion Prediction Based on the T-LSTM Model

In this article, the long-term pulmonary nodule sequence image data set prepared in Section 3.1 was used to construct a long-term model for predicting whether pulmonary nodules are benign or malignant. LSTM and RNN are deep network architectures in which the connections between hidden units form a directed cycle. This feedback loop enables the network to keep previous hidden state information as internal memory. Therefore, RNNs are preferred for problems where the system needs to store and update context information (Li et al., 2020). The hidden Markov model (HMM) and other methods are also used for similar purposes. However, RNNs have unique characteristics that distinguish them from traditional methods such as HMMs. For example, an RNN can handle variable-length sequences without assuming the Markov property. In addition, in principle, information from past inputs can be kept in memory regardless of how long ago it was entered. In practice, however, optimizing long-term dependencies is not always possible, because gradients vanish when they become too small and explode when they become too large. To incorporate long-term dependencies without disrupting the optimization process, variants of the RNN have been proposed. One popular variant is the LSTM, which can handle long-term dependencies using gated structures (Huimei et al., 2020).

However, traditional LSTMs are not suitable for our task because the time between consecutive follow-up examinations of a patient is variable (Zhang et al., 2020b), and they have no mechanism to explicitly model the arrival time of each observation (Baytas et al., 2017). In fact, LSTMs and, more generally, RNNs have been shown to perform poorly on time series with irregularly sampled data or missing values (Che et al., 2018). Previous attempts to use LSTMs for irregularly sampled data points mainly focused on accelerating the convergence of the algorithm or reducing short-term memory in settings with high-resolution sampled data.

For the first time, we propose a temporal-information-enhanced LSTM neural network (T-LSTM) that combines recurrent time labels with RNNs and makes the best use of temporal features to improve the accuracy of short-term prediction. The long-term lung lesion prediction algorithm based on the T-LSTM is shown as Algorithm 1.

To solve these problems, we introduce two simple modifications to the standard LSTM architecture, called T-LSTM, both of which explicitly use the input-related time index. In the architecture proposed in this article, all images of a given patient are first processed by CNN architecture, which extracts a set of image features, denoted by Xˆt, at each time step. The LSTM takes as inputs

For the last image in the sequence, the LSTM predicts the image labels

Algorithm 1 Long-term lung lesion prediction algorithm for T-LSTM.

Input: fusions of pulmonary nodules at different periods of the same patient Xˆt, t = 1, 2, 3;

Output: The results of classification {0,1}.

Step 1: Calculating C0, the first hidden state, requires Ct−1, which does not exist, so it is set to 0.

Step 2: Calculate the input gate as in Eq. 3. Its inputs are the benign or malignant label of the lesion sequence image at time t, the feature vector of the lesion sequence image at time t, and the time interval between t−1 and t. The activation function is applied after summation.

Step 3: Calculate the forget gate as in Eq. 2. Its inputs are the benign or malignant label of the lesion sequence image at time t, the feature vector of the lesion sequence image at time t, and the time interval between t−1 and t. The activation function is applied after summation.

Step 4: Calculate the output gate as in Eq. 4. Its inputs are the benign or malignant label of the lesion sequence image at time t, the feature vector of the lesion sequence image at time t, and the cumulative sum of the time intervals of t−1 and t. The activation function is then applied.

Step 5: Calculate the memory unit (not calculated for the first layer) as in Eq. 5. Its inputs are the benign or malignant label of the lesion sequence image at time t, the feature vector of the lesion sequence image at time t, and the time interval between t−1 and t. The activation function is applied after summation.

Step 6: Calculate the hidden units as in Eq. 6.

Step 7: Repeat Steps 2–6 (Eqs 2–6) to calculate the input and output layer by layer.
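The steps of Algorithm 1 can be sketched as follows. This is a hedged illustration and not the article's implementation: the exact forms of Eqs 2–6 are not reproduced here, so the sketch assumes that each gate is an activation applied to a weighted sum of the feature vector, the known label, the previous hidden state, and a time term. The scalar hidden state, every weight value, the use of 0 in place of the unknown label of the last examination, and the names `t_lstm_step`, `predict`, and `make_params` are assumptions made for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def gate_sum(p, x, label, h_prev, gap):
    """Weighted sum of features, known label, previous hidden state, and a time term."""
    features = sum(w * v for w, v in zip(p["w_x"], x))
    return features + p["w_l"] * label + p["w_h"] * h_prev + p["w_t"] * gap + p["b"]


def t_lstm_step(params, x, label, dt, elapsed, h_prev, c_prev):
    f = sigmoid(gate_sum(params["forget"], x, label, h_prev, dt))       # Step 3
    i = sigmoid(gate_sum(params["input"], x, label, h_prev, dt))        # Step 2
    o = sigmoid(gate_sum(params["output"], x, label, h_prev, elapsed))  # Step 4: cumulative time
    g = math.tanh(gate_sum(params["cell"], x, label, h_prev, dt))
    c = f * c_prev + i * g                                              # Step 5
    h = o * math.tanh(c)                                                # Step 6
    return h, c


def predict(params, exams):
    """exams: list of (features, label or None, months since previous examination)."""
    h, c, elapsed = 0.0, 0.0, 0.0            # Step 1: no earlier state exists
    for x, label, dt in exams:               # Step 7: repeat for every examination
        elapsed += dt
        # Assumption: an unknown label (last examination) is fed in as 0.
        known = 0.0 if label is None else float(label)
        h, c = t_lstm_step(params, x, known, dt, elapsed, h, c)
    prob = sigmoid(params["w_out"] * h + params["b_out"])
    return (1 if prob >= 0.5 else 0), prob


def make_params(n_features):
    """Hypothetical fixed weights; a trained model would learn these."""
    def gate():
        return {"w_x": [0.1] * n_features, "w_l": 0.2, "w_h": 0.3, "w_t": 0.05, "b": 0.0}
    params = {name: gate() for name in ("forget", "input", "output", "cell")}
    params["w_out"], params["b_out"] = 1.0, 0.0
    return params


# Three examinations at irregular intervals (features and intervals are made up).
exams = [([0.2, 0.1], 0, 0.0), ([0.4, 0.3], 0, 3.0), ([0.9, 0.7], None, 7.0)]
label, prob = predict(make_params(2), exams)
```

Because the time terms enter every gate, changing the interval before the last examination changes the predicted probability, which is the property that lets the model accept unequally spaced follow-ups.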

4 Experiments and Results

4.1 Data Sets

To train and evaluate the CNN, we used two labeled lung data sets: the NLST data set and a data set provided by a cooperative hospital.

NLST (The Landmark National Lung Screening Trial) data set. The NLST is a randomized, multisite trial that examined lung cancer–specific mortality among participants in an asymptomatic high-risk cohort. Subjects underwent screening with the use of low-dose CT or chest X-ray. More than 53,000 participants each underwent three annual screenings from 2002 to 2007 (approximately 25,500 in the LDCT study arm), with follow-up postscreening through 2009. Lung cancers identified as pulmonary nodules were confirmed by diagnostic procedures (e.g., biopsy, cytology); participants with confirmed lung cancer were subsequently removed from the trial for treatment through 2009. NLST contains 421 CT scans annotated by four radiological experts voxel-wise.

The cooperative hospital provided lung CT images of 267 patients, a total of 1,837 cases. These images were acquired at the positron emission tomography (PET)/CT center of a hospital in Shanxi Province between January 2011 and January 2017. The equipment used was a GE Discovery ST16 PET/CT scanner. The CT image acquisition parameters were as follows: 150 mA, 140 kV, layer thickness 3.75 mm, and image resolution 512 × 512. Two professional radiologists marked the nodule locations, and each case was labeled 1 or 0 according to their diagnosis.

4.2 Input Description

We determined the size of the receptive field used in our framework by analyzing the size distribution of pulmonary nodules. First, we observed that the diameter density of small nodules peaked at about 9 voxels in the X and Y dimensions and about 4 voxels in the Z dimension. We set the first network, Archi-1, with a receptive field of 30 × 30 × 10 (voxels). This receptive field can contain small pulmonary nodules with appropriate context, and it covers 85% of all nodules in the data set. It performs well in the typical cases seen most often in patients. The purpose of this window size is to provide rich background information for small nodules and appropriate background information for medium-sized lesions. For some large nodules, it usually includes their main parts and excludes some marginal areas. Finally, we constructed an overall receptive field of 40 × 40 × 10. According to our statistical analysis, this field encloses more than 99% of nodules, apart from several outliers.
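The coverage figures quoted above come from comparing nodule extents with a candidate window. A minimal sketch of that comparison is given below; the nodule sizes are invented for illustration and do not reproduce the 85% and 99% figures, and the criterion that a nodule is covered when its extent fits inside the window on every axis is an assumption.

```python
def fits(nodule, field):
    """True if the nodule extent fits inside the window along every axis."""
    return all(n <= f for n, f in zip(nodule, field))


def coverage(nodules, field):
    """Fraction of nodules whose (x, y, z) extent fits inside the window."""
    return sum(fits(n, field) for n in nodules) / len(nodules)


# Invented nodule extents in voxels (x, y, z); not data from this study.
nodules = [(9, 9, 4), (12, 11, 5), (28, 26, 9), (35, 33, 10), (45, 41, 12)]

small_field = coverage(nodules, (30, 30, 10))
large_field = coverage(nodules, (40, 40, 10))
print(small_field, large_field)
```

Running the same function over the measured nodule extents of a data set would give the coverage of any candidate window size.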

4.3 Classification Accuracy Comparison of 3D CNN Feature Extraction Methods With Different Parameters

Using uniform random sampling, the NLST data set was divided into a training set, a validation set, and a test set. One tenth of the NLST data set was held out as the test set, and the remaining data were divided into a training set and a validation set. Because a model used in clinical practice must handle data that differ significantly from its training data, we used both the data set provided by the cooperative hospital and the NLST test set as test sets when selecting the training scheme.
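A uniform random split of this kind can be sketched with the standard library. The one-tenth test fraction follows the text; the validation fraction, the random seed, the function name `split_dataset`, and the use of integer case identifiers are assumptions made for illustration.

```python
import random


def split_dataset(case_ids, test_fraction=0.1, val_fraction=0.1, seed=0):
    """Shuffle the cases uniformly at random and split them into three sets.

    val_fraction is an assumption; the text specifies only the test fraction.
    """
    ids = list(case_ids)
    random.Random(seed).shuffle(ids)
    n_test = round(len(ids) * test_fraction)
    n_val = round(len(ids) * val_fraction)
    test = ids[:n_test]
    val = ids[n_test:n_test + n_val]
    train = ids[n_test + n_val:]
    return train, val, test


# 421 hypothetical case identifiers, matching the number of annotated NLST scans.
train, val, test = split_dataset(range(421))
print(len(train), len(val), len(test))
```

Splitting by case identifier rather than by image keeps all examinations of one patient in the same set, provided each identifier stands for one patient.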

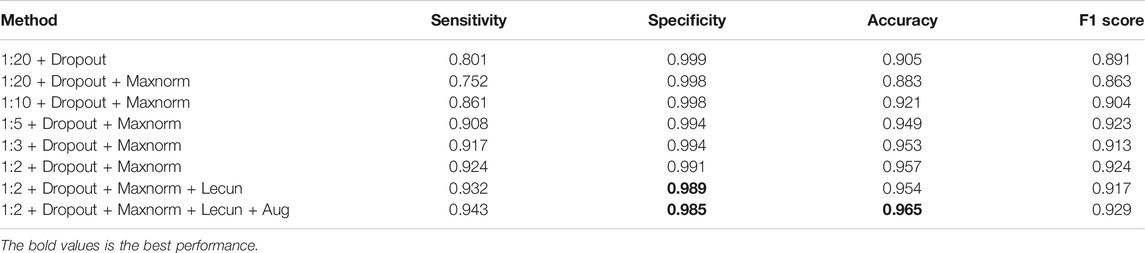

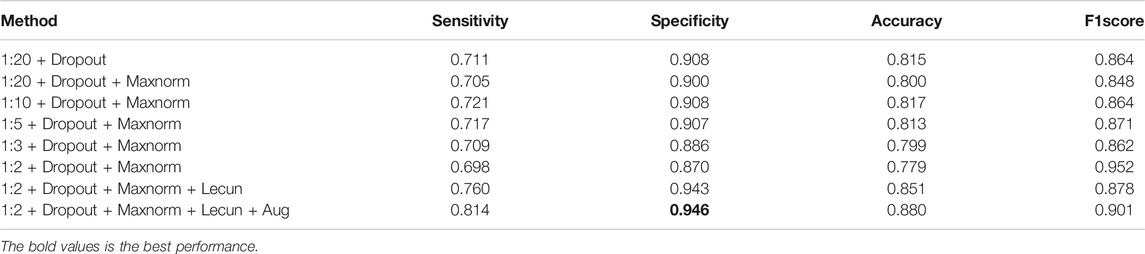

During training, we experimented with four aspects: the ratio of positive to negative samples, the dropout layer with max-norm regularization, weight initialization, and data augmentation. We explored how different combinations of these four aspects affect the lung nodule prediction results of the model on the NLST test set and the cooperative hospital test set. The network parameters are shown in Tables 2, 3, and four metrics (sensitivity, specificity, accuracy, and F score) are used to evaluate the classification of nodules. The dropout rates are 1:20, 1:10, 1:5, 1:3, and 1:2.

First, it can be seen from Tables 2, 3 that when positive samples are rare, accuracy can remain high even if every test sample is assigned to the majority class. Thus, the balance of positive and negative samples during training is very important in this article. The main purpose of this model is to prescreen, from hundreds of thousands of chest CT sequence images, the lesion areas suggestive of benign or malignant disease for the physician. Bold values indicate the best performance.
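The four evaluation metrics, and the way class imbalance can inflate accuracy, can be illustrated from confusion-matrix counts. The counts below are invented, and the F score is assumed to be the F1 score (the harmonic mean of precision and sensitivity), which the text does not specify.

```python
def safe_div(a, b):
    return a / b if b else 0.0


def classification_metrics(tp, fp, tn, fn):
    """Sensitivity, specificity, accuracy, and F1 from confusion-matrix counts."""
    sensitivity = safe_div(tp, tp + fn)
    specificity = safe_div(tn, tn + fp)
    accuracy = safe_div(tp + tn, tp + fp + tn + fn)
    precision = safe_div(tp, tp + fp)
    f_score = safe_div(2 * precision * sensitivity, precision + sensitivity)
    return {"sensitivity": sensitivity, "specificity": specificity,
            "accuracy": accuracy, "f_score": f_score}


# Invented counts for a reasonably balanced test set.
balanced = classification_metrics(tp=87, fp=22, tn=78, fn=13)

# Invented 1:20 imbalance where every sample is predicted negative.
all_negative = classification_metrics(tp=0, fp=0, tn=200, fn=10)

print(balanced)
print(all_negative)
```

In the second case the accuracy stays above 0.95 although no positive sample is detected, which is why sensitivity, specificity, and the F score are reported alongside accuracy.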

It can be seen from Tables 4 and 5 that the accuracy of the basic RNN (tanh-RNN) can reach 87.1%, which verifies that the RNN can learn and discriminate features. The support vector machine (SVM) is a traditional feature extraction and classification method. Because it is unable to learn deep hidden features and the relationships among them, its accuracy is relatively low. The accuracy of the T-LSTM network proposed in this article is higher than that of the RNN, which shows that taking the relevant continuous changes over time into account helps to further improve prediction accuracy. Bold values indicate the best performance.

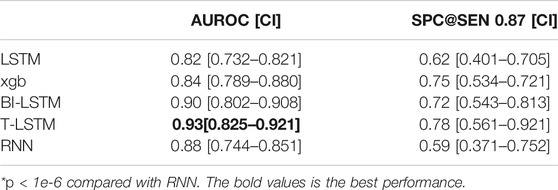

TABLE 5. Results for all models, AUROC, and specificity at sensitivity (SPC@SEN) of 0.87, with 95% confidence interval (CI) displayed in brackets.

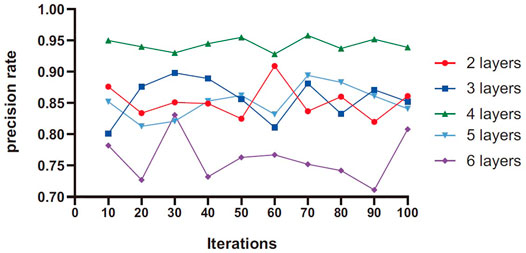

4.4 Discussion of the Number of LSTM Layers

The number of network layers directly affects the ability of the network to extract the characteristics of lung nodules. Theoretically, more hidden layers give a more complex network structure with stronger feature extraction ability and higher accuracy. However, blindly increasing the number of layers makes the network harder to train, greatly prolongs learning time, and lowers accuracy. In this article, network structures with different numbers of hidden layers are studied while the other parameters of the network are kept unchanged, and the average value is calculated 10 times per iteration. Generally speaking, the more LSTM layers there are, the stronger the ability to learn higher-level temporal representations. In addition, an ordinary neural network layer is added to reduce the dimension of the output.

As can be seen from Figure 5, the prediction accuracy first increases and then decreases as the number of network layers increases. When the number of network layers is 4, the overall accuracy is higher than for other values. When the number of layers is 6, the network is too deep and difficult to converge, and the high-level abstract feature information weakens the distinction between benign and malignant nodules; as a result, training falls into a local extremum and the accuracy decreases.

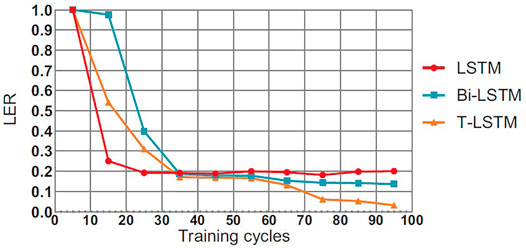

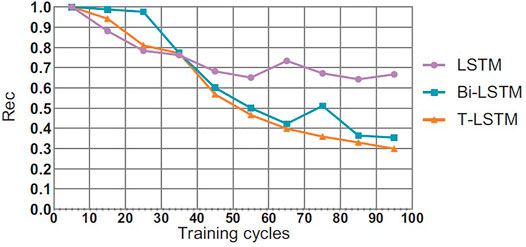

4.5 Comparison of Convergence Effect of T-LSTM

This section compares the performance of the T-LSTM, the bidirectional long short-term memory (BI-LSTM), and the LSTM during training. In theory, the BI-LSTM model takes about twice as much time as the LSTM because of its bidirectional structure, and the single-cycle time of the T-LSTM is approximately 1.4 times that of the LSTM because the T-LSTM takes more input data than the LSTM. As shown in Figures 6–8, during training the LSTM converged faster than the T-LSTM and BI-LSTM at the beginning; in practice, the time of the BI-LSTM was only 1.5 times that of the LSTM and that of the T-LSTM was only 1.2 times that of the LSTM, owing to data reading speed and other factors. After some training cycles, when the LSTM and BI-LSTM gradually approach a constant value, the T-LSTM can continue to converge. In terms of recognition performance, the T-LSTM is better than the other two. In terms of both model convergence and recognition performance, the validity of the time-modulated recurrent neural network structure is demonstrated.

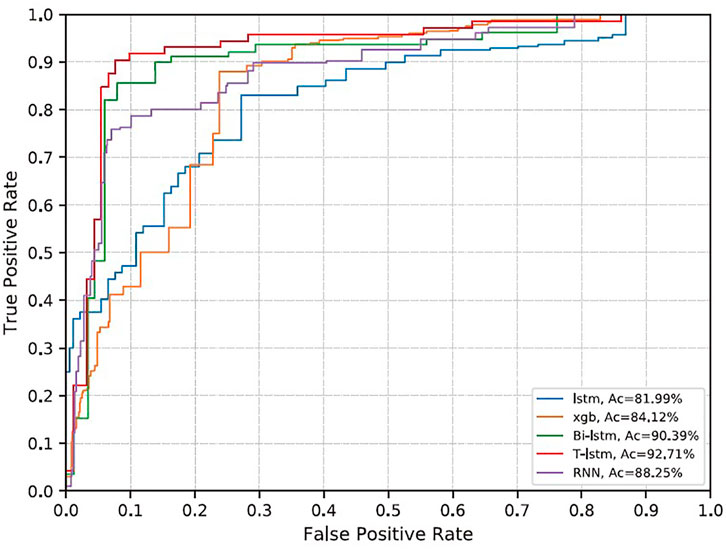

FIGURE 8. ROC curve of each model. Blue is LSTM; orange is gradient boost (xgb); green is BiLSTM; red is T-LSTM; and purple is RNN.

4.6 Comparison of Prediction Rates Among Different Classifiers

The RNN classifier does not add a priori knowledge. The area under the receiver operating characteristic (ROC) curve (AUROC) obtained on the evaluation set is 0.88, the sensitivity is 0.87, and the specificity is 0.59. LSTM did not improve the accuracy; it decreased slightly compared with RNN (Table 4). BI-LSTM increased AUROC to 0.90 and specificity to 0.64, an improvement that was not statistically significant. The improvement obtained by gradient boosting was more significant (AUROC 0.84, specificity 0.75, p < 1e−6). The T-LSTM network further improved the performance, with AUROC of 0.93, specificity of 0.78, and sensitivity of 0.87. The ROC curves of the five classifiers are given in Figure 8.
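The two summary measures used in this comparison, AUROC and specificity at a fixed sensitivity, can be computed directly from classifier scores. The sketch below uses invented scores and labels; the function names are hypothetical, and the rule of taking the highest specificity among thresholds that reach the target sensitivity is an assumption about how SPC@SEN is defined.

```python
def auroc(scores, labels):
    """Probability that a random positive scores higher than a random negative.

    Ties count as one half (the rank-based definition of the ROC area).
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def specificity_at_sensitivity(scores, labels, target=0.87):
    """Highest specificity among thresholds whose sensitivity reaches the target."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    best = 0.0
    for thr in sorted(set(scores)):
        sensitivity = sum(p >= thr for p in pos) / len(pos)
        specificity = sum(n < thr for n in neg) / len(neg)
        if sensitivity >= target:
            best = max(best, specificity)
    return best


# Invented scores for four malignant (1) and four benign (0) cases.
labels = [1, 1, 1, 1, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.7, 0.4, 0.6, 0.3, 0.2, 0.1]

area = auroc(scores, labels)
spc = specificity_at_sensitivity(scores, labels, target=0.87)
print(area, spc)
```

Fixing the sensitivity before comparing specificity puts all classifiers at the same operating point, which is how the values in Table 5 are reported.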

Because the ability of a network to learn from image sequences is limited by its depth, RNN does not perform as well as BI-LSTM. In future work, we intend to validate our results on a larger evaluation set. A further improvement is to train the 3D CNN and T-LSTM networks at the same time so that the whole classification architecture is jointly optimized. In addition, we will consider the role of clinical information in guiding classification. Finally, we can also evaluate the effect of using multiple sources of a priori knowledge or neighborhood knowledge in the training set. In conclusion, incorporating long-time sequence images into the deep learning analysis framework can improve classification performance and enhance radiologists’ confidence in the reliability of decision support technology.

5 Discussion

It has been reported that deep learning algorithms can achieve high performance in medical image classification tasks (Kooi and Karssemeijer, 2017; Ribli et al., 2018). However, current algorithms still perform below the average level of human radiologists on real-world data. One explanation for this gap is that radiologists add additional information to their diagnostic analysis, such as nonimage clinical information and patient-specific information. We address the latter by allowing our algorithm to analyze current and previous studies. Most of the literature in this field does not take into account the time-series characteristics and information of patients, so it is difficult to compare performance accurately. On different data sets, AUROC values for cancer classification ranged from 0.79 to 0.95. The AUROCs without and with time information are 0.82 and 0.93, respectively, which differs from the related work (Kooi and Karssemeijer, 2017) and reflects the significant benefit of using previous studies. The advantage of our method is that it only needs an overall label for each pulmonary nodule, without expensive local lesion description. The experimental results show that classifying the images separately is not enough; classification improves only when the algorithm is trained on the long-time image sequence.

As can be seen in Table 4, the RNN suffers from two serious problems, exploding gradients and vanishing gradients, so its subsequent training results are not very good. LSTM improves the gradient updating process: the gradient is mainly generated by accumulating the outputs of the gates, which avoids the gradient explosion and gradient disappearance caused by repeated multiplication, as in RNN. A bidirectional LSTM is the integration of two LSTMs (forward and backward), which enables it to extract information from the preceding and following context at the same time. The main integration methods are direct concatenation and weighted summation. Adding nonlinear characteristics can also fit the data better. The system that provides the highest performance is T-LSTM, which is trained on the features extracted by the 3D CNN. The T-LSTM solution is also scalable to the analysis of multiple a priori sequences, and we will further study how to increase its scalability and robustness in the future. In Table 2, the specificity after data enhancement is lower than that without data enhancement, which may be related to the setting of the data enhancement parameters. Data enhancement can reduce overfitting, but its benefit also depends on the enhancement effects and methods. Although the specificity of the proposed method is somewhat reduced, the reduction is within a reasonable range. In Figures 5 and 6, the proposed method converges more slowly than LSTM at the beginning, but it achieves the best convergence effect after 35 iterations. This shows that our method can effectively process and analyze data with more time information.
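The two ways of integrating the forward and backward hidden states of a bidirectional LSTM can be sketched as follows. This is only an illustration under stated assumptions: the function names, the mixing weight `alpha`, and the example vectors are hypothetical and are not taken from the study.

```python
def merge_concat(h_forward, h_backward):
    """Direct concatenation: the merged state has twice the hidden size."""
    return list(h_forward) + list(h_backward)


def merge_weighted_sum(h_forward, h_backward, alpha=0.5):
    """Weighted summation: the merged state keeps the hidden size.

    alpha is a hypothetical mixing weight for the forward direction.
    """
    if len(h_forward) != len(h_backward):
        raise ValueError("forward and backward states must have the same size")
    return [alpha * f + (1.0 - alpha) * b for f, b in zip(h_forward, h_backward)]


# Hypothetical hidden states at one time step.
h_fwd = [1.0, 2.0]
h_bwd = [3.0, 4.0]
concat_state = merge_concat(h_fwd, h_bwd)
summed_state = merge_weighted_sum(h_fwd, h_bwd, alpha=0.5)
print(concat_state, summed_state)
```

Concatenation preserves both directions separately at the cost of a larger downstream layer, while weighted summation keeps the dimensionality fixed but blends the two directions into one vector.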

However, there are still some limitations. If the time span of the LSTM is very large and the network is very deep, the computation is very time-consuming, so the network structure is limited in efficiency and scalability. In addition, there is the issue of data size: an LSTM, like any neural network, requires a large amount of data to be trained properly. Information in a time series must traverse all the preceding cells before entering the current processing unit, which gives rise to vanishing gradients, and LSTM does not completely solve this problem. The methods proposed in this article tend to do better on unstable time series with more fixed components because of their inherent ability to adapt quickly to sharp changes in trends. However, the method can only make short-term predictions, and long-range predictions may be invalid, which is another limitation of the proposed method. In future work, we will consider how to learn better on small medical data sets, and we will try to improve the robustness and generalization of the algorithm so that the model can be used in more scenarios and environments.
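The vanishing-gradient limitation can be illustrated with a simplified scalar model, in which the gradient that reaches an early time step is the product of one factor per step. The per-step factors below (0.5 for a plain RNN, 0.95 for an LSTM cell-state path with a forget gate close to 1) are assumed values chosen for illustration, not quantities measured in the study.

```python
def backprop_factor(per_step_factor, steps):
    """Scale applied to a gradient after passing back through `steps` time steps."""
    factor = 1.0
    for _ in range(steps):
        factor *= per_step_factor
    return factor


# Assumed per-step factors (illustrative only).
rnn_20 = backprop_factor(0.5, 20)      # plain RNN: shrinks very quickly
lstm_20 = backprop_factor(0.95, 20)    # LSTM cell path: decays much more slowly
lstm_200 = backprop_factor(0.95, 200)  # but still decays over long sequences
print(f"RNN, 20 steps:   {rnn_20:.2e}")
print(f"LSTM, 20 steps:  {lstm_20:.2e}")
print(f"LSTM, 200 steps: {lstm_200:.2e}")
```

Under these assumptions the LSTM path retains a usable gradient over tens of steps, yet the signal still fades over hundreds of steps, which is consistent with the statement that LSTM mitigates rather than eliminates the problem.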

6 Conclusion

In this article, we have used and substantially extended LSTM in the 3D spatial–temporal domain for the task of modeling 3D longitudinal pulmonary nodule data. The novel 3D CNNs and T-LSTM network jointly learn the intraslice structures, the interslice 3D contexts, and the temporal dynamics. Quantitative results of notably higher accuracies than the original RNN are reported, using several metrics on predicting the future tumor volumes. Compared with the most recent 2D + time deep learning–based tumor growth prediction models (Missrie et al., 2018; Audrey et al., 2019), our new approach works directly in 3D imaging space and incorporates clinical factors in an end-to-end trainable manner. This method can also distinguish benign from malignant pulmonary nodules. Our experiments are conducted on the largest longitudinal lung data set (421 patients) to date and demonstrate the validity of our proposed method. This method enables efficient and effective 3D medical image segmentation with only sparse manual image annotations required. The presented prediction model can potentially enable other applications in medical sequence imaging. Vanishing gradients can be mitigated with the LSTM module, which can be regarded as a multi-gate switching mechanism, somewhat like ResNet. Because LSTM can bypass some cells and memorize long steps, it can solve the gradient disappearance problem to some extent. This method can provide technical support for processing medical image data or bioinformatics data with time information in the future.

Data Availability Statement

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author.

Author Contributions

MW contributed to conception and design of the study. XL performed the statistical analysis and wrote the first draft of the manuscript. RA wrote sections of the manuscript. All authors contributed to manuscript revision, read, and approved the submitted version.

Funding

This work was supported by the National Natural Science Foundation of China (grant numbers 61872261, 61972274).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Aaron, R., Miller, J. B., Christin, N., and Jeffrey, C. (2018). Neuroscience Learning from Longitudinal Cohort Studies of Alzheimer’s Disease: Lessons for Disease-Modifying Drug Programs and an Introduction to the Center for Neurodegeneration and Translational Neuroscience. New York, NY: Alzheimers & Dementia Translational Research & Clinical Interventions.

Audrey, W., Aberle, D., and Hsu, W. (2019). External Validation and Recalibration of the Brock Model to Predict Probability of Cancer in Pulmonary Nodules Using Nlst Data. Thorax 74. doi:10.1136/thoraxjnl-2018-212413

Baytas, I. M., Xiao, C., Zhang, X., Wang, F., and Zhou, J. (2017). “Patient Subtyping via Time-Aware Lstm Networks,” in The 23rd ACM SIGKDD International Conference. doi:10.1145/3097983.3097997

Bodla, N., Zheng, J., Xu, H., Chen, J. C., and Chellappa, R. (2017). “Deep Heterogeneous Feature Fusion for Template-Based Face Recognition,” in 2017 IEEE Winter Conference on Applications of Computer Vision (WACV). doi:10.1109/WACV.2017.71

Boudjemaa, R., Ouaar, F., and Oliva, D. (2020). Fractional Lévy Flight Bat Algorithm for Global Optimisation. Ijbic 15 (2), 100–112. doi:10.1504/ijbic.2020.10028011

Cai, X., Geng, S., Zhang, J., Wu, D., Cui, Z., Zhang, W., et al. (2021). A Sharding Scheme Based Many-objective Optimization Algorithm for Enhancing Security in Blockchain-Enabled Industrial Internet of Things. IEEE Trans. Ind. Inform. 17 (1), 7650–7658. doi:10.1109/tii.2021.3051607

Cai, X., Cao, Y., Ren, Y., Cui, Z., and Zhang, W. (2021). Multi-objective Evolutionary 3D Face Reconstruction Based on Improved Encoder-Decoder Network. Inf. Sci. 581, 233–248. doi:10.1016/j.ins.2021.09.024

Chandra, R. (2015). Competition and Collaboration in Cooperative Coevolution of Elman Recurrent Neural Networks for Time-Series Prediction. IEEE Trans. Neural Networks Learn. Syst. 26 (12), 1. doi:10.1109/tnnls.2015.2404823

Che, Z., Purushotham, S., Cho, K., Sontag, D., and Liu, Y. (2018). Recurrent Neural Networks for Multivariate Time Series with Missing Values. Sci. Rep. 8 (1), 6085. doi:10.1038/s41598-018-24271-9

Cheng, L. F., Darnell, G., Dumitrascu, B., Chivers, C., Draugelis, M. E., Li, K., et al. (2020). Sparse Multi-Output Gaussian Processes for Medical Time Series Prediction. BMC Med. Inform. Decis. Making 20. doi:10.1186/s12911-020-1069-4

Christo, V. R. E., Nehemiah, H. K., Nahato, K. B., Brighty, J., and Kannan, A. (2020). Computer Assisted Medical Decision-Making System Using Genetic Algorithm and Extreme Learning Machine for Diagnosing Allergic Rhinitis. Ijbic 16 (3), 148–157. doi:10.1504/ijbic.2020.111279

Cui, Z., Zhang, M., Wang, H., Cai, X., Zhang, W., and Chen, J. (2020). Hybrid many-objective Cuckoo Search Algorithm with Lévy and Exponential Distributions. Memetic Comp. 12 (3), 251–265. doi:10.1007/s12293-020-00308-3

Cui, Z., Zhao, Y., Cao, Y., Cai, X., Zhang, W., and Chen, J. (2021). Malicious Code Detection under 5G HetNets Based on a Multi-Objective RBM Model. IEEE Netw. 35 (2), 82–87. doi:10.1109/mnet.011.2000331

Deng, W., Zhao, H., Song, Y., and Xu, J. (2020). An Effective Improved Co-evolution Ant colony Optimisation Algorithm with Multi-Strategies and its Application. Ijbic 16 (3), 158–170. doi:10.1504/ijbic.2020.10033314

Donahue, J., Hendricks, L. A., Guadarrama, S., Rohrbach, M., Venugopalan, S., Saenko, K., et al. (2015). Long-term Recurrent Convolutional Networks for Visual Recognition and Description. Elsevier.

Duffy, S. W., and Field, J. K. (2020). Mortality Reduction with Low-Dose Ct Screening for Lung Cancer. New Engl. J. Med. 382 (6).

Fragkiadaki, K., Levine, S., Felsen, P., and Malik, J. (2016). Recurrent Network Models for Human Dynamics. Santiago, Chile: IEEE 2, 18.

Gao, L., Pan, H., Liu, F., Xie, X., and Han, J. (2018). “Brain Disease Diagnosis Using Deep Learning Features from Longitudinal MR Images,” in Web and Big Data: Second International Joint Conference, APWeb-WAIM 2018, Macau, China, July 23–25, 2018, Proceedings, Part I, 327–339. doi:10.1007/978-3-319-96890-2_27

Grano, T., and Zhang, Y. (2019). “Getting Aspectual-Guo under Control in Mandarin Chinese: An Experimental Investigation,” in Proceedings of the 30th North American Conference on Chinese Linguistics (NACCL-30), Vol. 1, 208–215.

He, K., Zhang, X., Ren, S., and Sun, J. (2016). “Deep Residual Learning for Image Recognition,” in Proceeding of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27-30 June 2016 (IEEE), 770–778. doi:10.1109/CVPR.2016.90

Hu, B., Chen, Y., and Keogh, E. (2016). Classification of Streaming Time Series under More Realistic Assumptions. Data Min Knowl Disc 30 (2), 403–437. doi:10.1007/s10618-015-0415-0

Huimei, H., Xingquan, Z., and Ying, L. (2020). Generalizing Long Short-Term Memory Network for Deep Learning from Generic Data. ACM Trans. Knowledge Discov. Data (Tkdd) 14 (2), 1–28. doi:10.1145/3366022

Kamnitsas, K., Ledig, C., Newcombe, V. F. J., Simpson, J. P., Kane, A. D., Menon, D. K., et al. (2016). Efficient Multi-Scale 3D CNN with Fully Connected CRF for Accurate Brain Lesion Segmentation. Med. Image Anal. 36, 61–78. doi:10.1016/j.media.2016.10.004

Khusnuliawati, H., Fatichah, C., and Soelaiman, R. (2017). Multi-feature Fusion Using Sift and Lebp for finger Vein Recognition. TELKOMNIKA (Telecommunication Computing Electronics and Control) 15, 478. doi:10.12928/telkomnika.v15i1.4443

Kooi, T., and Karssemeijer, N. (2017). Classifying Symmetrical Differences and Temporal Change in Mammography Using Deep Neural Networks. J. Med. Imaging (Bellingham) 4 (4), 044501. doi:10.1117/1.JMI.4.4.044501

Koutník, J., Greff, K., Gomez, F., and Schmidhuber, J. (2014). A Clockwork Rnn. Computer Sci., 1863–1871.

Li, L., and Feng, Y. (2015). “Using Time Series Analysis to Forecast Emergency Patient Arrivals in Ct Department,” in Proceeding of the 2015 12th International Conference on Service Systems & Service Management, Guangzhou, China, 22-24 June 2015. IEEE. doi:10.1109/icsssm.2015.7170134

Li, Y., Yu, Z., Chen, Y., Yang, C., Li, Y., Li, X. A., et al. (2020). Automatic Seizure Detection Using Fully Convolutional Nested Lstm. Int. J. Neural Syst. 30 (04), 1250034–1253520. doi:10.1142/S0129065720500197

Missrie, I., Hochhegger, B., Zanon, M., Capobianco, J., César, d. M. N. A., Pereira, M. R., et al. (2018). Small Low-Risk Pulmonary Nodules on Chest Digital Radiography: Can We Predict whether the Nodule Is Benign? Clin. Radiol. 73 (10), 902–906. doi:10.1016/j.crad.2018.06.002

Nagaratnam, N., Nagaratnam, K., and Cheuk, G. (2018). Lung Cancer in the Elderly. Cham, Switzerland: Springer.

Oh, D. Y., Kim, J., and Lee, K. J. (2019). “Longitudinal Change Detection on Chest X-Rays Using Geometric Correlation Maps,” in Medical Image Computing and Computer Assisted Intervention – MICCAI 2019. Cham, Switzerland: Springer Nature, 748–756. doi:10.1007/978-3-030-32226-7_83

Onisko, A., Druzdzel, M., and Austin, R. (2016). How to Interpret the Results of Medical Time Series Data Analysis: Classical Statistical Approaches versus Dynamic Bayesian Network Modeling. J. Pathol. Inform. 7 (1), 50. doi:10.4103/2153-3539.197191

Qiang, Y., Zhang, X., Ji, G., and Zhao, J. (2015). Automated Lung Nodule Segmentation Using an Active Contour Model Based on Pet/ct Images. J. Comput. Theor. Nanoscience 12 (8), 1972–1976. doi:10.1166/jctn.2015.4216

Ren, S., He, K., Girshick, R., and Sun, J. (2017). Faster R-Cnn: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach Intell. 39 (6), 1137–1149. doi:10.1109/TPAMI.2016.2577031

Ribli, D., Horváth, A., Unger, Z., Pollner, P., and Csabai, I. (2018). Detecting and Classifying Lesions in Mammograms with Deep Learning. Sci. Rep. 8 (1), 4165. doi:10.1038/s41598-018-22437-z

Santeramo, R., Withey, S., and Montana, G. (2018). “Longitudinal Detection of Radiological Abnormalities with Time-Modulated Lstm,” in Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support, 326–333. doi:10.1007/978-3-030-00889-5_37

Shi, B., Chen, Y., Zhang, P., Smith, C. D., and Liu, J. (2017). Nonlinear Feature Transformation and Deep Fusion for Alzheimer’s Disease Staging Analysis. Pattern Recognition 63, 487. doi:10.1016/j.patcog.2016.09.032

Shinde, S., Prasad, S., Saboo, Y., Kaushick, R., Saini, J., Pal, P. K., et al. (2019). Predictive Markers for Parkinson's Disease Using Deep Neural Nets on Neuromelanin Sensitive MRI. Neuroimage Clin. 22, 101748. doi:10.1016/j.nicl.2019.101748

Taillant, E., Avila-Vilchis, J. C., Allegrini, C., Bricault, I., and Cinquin, P. (2004). “Ct and Mr Compatible Light Puncture Robot: Architectural Design and First Experiments,” in Medical Image Computing & Computer-Assisted Intervention-Miccai, International Conference Saint-Malo (France: Springer). doi:10.1007/978-3-540-30136-3_19

Xiao, B., Chuntian, L., Peng, R., Jun, Z., Huijie, Z., and Su, Y. (2015). Object Classification via Feature Fusion Based Marginalized Kernels. Geosci. Remote Sensing Lett. IEEE 12, 8–12. doi:10.1109/LGRS.2014.2322953

Yang, S., Huang, Q., Cui, L., Xu, K., Ming, Z., and Wen, Z. (2020). Variable-grouping-based Exponential Crossover for Differential Evolution Algorithm. Ijbic 15 (3), 147–158. doi:10.1504/ijbic.2020.107486

Zhang, J., Chen, L., Ye, Y., Guo, G., Chen, R., Vanasse, A., et al. (2020). Survival Neural Networks for Time-To-Event Prediction in Longitudinal Study. Knowledge Inf. Syst. 62 (10). doi:10.1007/s10115-020-01472-1

Zhang, J., Xia, Y., Cui, H., and Zhang, Y. (2018). Pulmonary Nodule Detection in Medical Images: A Survey. Biomed. signal Process. Control 43 (MAY), 138–147. doi:10.1016/j.bspc.2018.01.011

Zhang, X., Onieva, E., Perallos, A., and Osaba, E. (2020). Genetic Optimised Serial Hierarchical Fuzzy Classifier for Breast Cancer Diagnosis. Ijbic 15 (3), 194–205. doi:10.1504/ijbic.2020.107490

Zhang, Y. (2020). Nominal Property Concepts and Substance Possession in Mandarin Chinese. J. East. Asian Linguist 29, 393–434. doi:10.1007/s10831-020-09214-8

Zhang, Y. (2021). Subjectivity and Nominal Property Concepts in Mandarin Chinese. ProQuest Dissertation Publishing: New Jersey, USA. [Doctoral dissertation, Indiana University].

Zhang, Z., Cao, Y., Cui, Z., Zhang, W., and Chen, J. (2021). A Many-objective Optimization Based Intelligent Intrusion Detection Algorithm for Enhancing Security of Vehicular Networks in 6G. IEEE Trans. Veh. Technol. 70 (6), 5234–5243. doi:10.1109/tvt.2021.3057074

Keywords: 3D CNNs, time-modulated LSTM, multiscale three-dimensional feature, prediction, characteristics of the fusion, pulmonary lesions

Citation: Liu X, Wang M and Aftab R (2022) Study on the Prediction Method of Long-term Benign and Malignant Pulmonary Lesions Based on LSTM. Front. Bioeng. Biotechnol. 10:791424. doi: 10.3389/fbioe.2022.791424

Received: 08 October 2021; Accepted: 06 January 2022;

Published: 02 March 2022.

Edited by:

Tinggui Chen, Zhejiang Gongshang University, China

Reviewed by:

Zhen Li, The University of Hong Kong, Hong Kong SAR, China

Xin Yang, Huazhong University of Science and Technology, China

Minghui Sun, Jilin University, China

Copyright © 2022 Liu, Wang and Aftab. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Rukhma Aftab, 18234132492@163.com
