- 1 Rehabilitation Engineering Laboratory, D-HEST, ETH Zürich, Zurich, Switzerland

- 2 Department of Physiotherapy, Zürcher RehaZentrum Wald, Wald, Switzerland

- 3 Department of Neurology, University of Zurich and University Hospital Zurich, Zurich, Switzerland

Background: Robot-assisted therapy can increase therapy dose after stroke, which is often considered insufficient in clinical practice and after discharge, especially with respect to hand function. Thus far, there has been a focus on rather complex systems that require therapist supervision. To better exploit the potential of robot-assisted therapy, we propose a platform designed for minimal therapist supervision, and present the preliminary evaluation of its immediate usability, one of the main and frequently neglected challenges for real-world application. Such an approach could help increase therapy dose by allowing the training of multiple patients in parallel by a single therapist, as well as independent training in the clinic or at home.

Methods: We implemented design changes on a hand rehabilitation robot, considering aspects relevant to enabling minimally-supervised therapy, such as new physical/graphical user interfaces and two functional therapy exercises to train hand motor coordination, somatosensation and memory. Ten participants with chronic stroke assessed the usability of the platform and reported the perceived workload during a single therapy session with minimal supervision. The ability to independently use the platform was evaluated with a checklist.

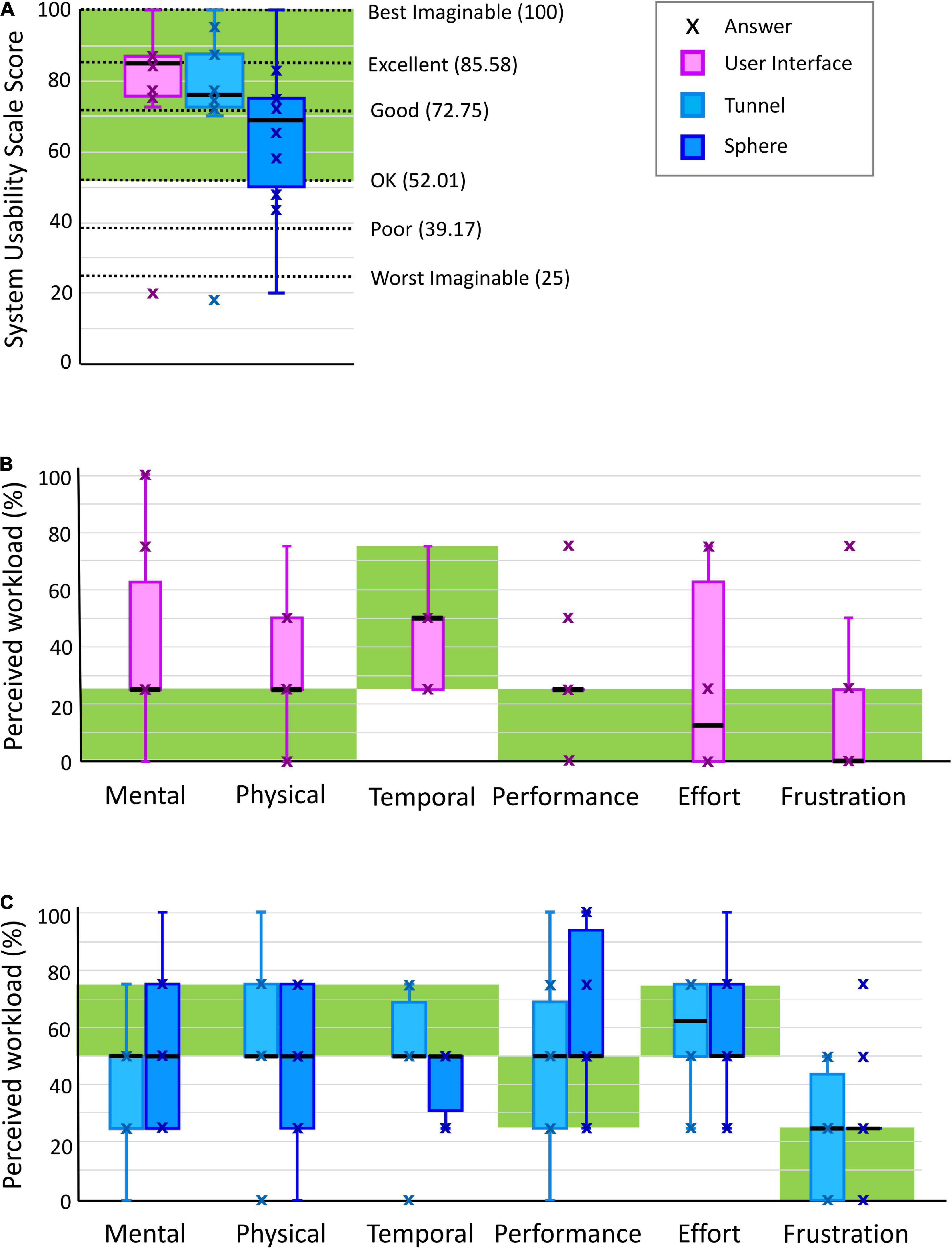

Results: Participants were able to independently perform the therapy session after a short familiarization period, requiring assistance in only 13.46 (7.69–19.23)% of the tasks. They assigned good-to-excellent scores on the System Usability Scale to the user interface and the exercises [85.00 (75.63–86.88) and 73.75 (63.13–83.75) out of 100, respectively]. Nine participants stated that they would use the platform frequently. Perceived workloads lay within the desired workload bands. The most difficult tasks were grasping objects while simultaneously controlling forearm pronosupination, and discriminating stiffness.

Discussion: Our findings demonstrate that, by complying with usability and perceived-workload requirements, a robot-assisted therapy device can be made safe and intuitive to use with minimal supervision upon first exposure. The preliminary usability evaluation also identified challenges that should be solved to allow real-world minimally-supervised use. Such a platform could complement conventional therapy, providing an increased dose with the available resources, and establish a continuum of care in which patients progressively take the lead of their therapy, from the clinic to the home.

Introduction

Despite progress in the field of neurorehabilitation over the last decades, around one third of stroke survivors suffer from chronic arm and hand impairments (Raghavan, 2007; Morris et al., 2013), which limit their ability to perform basic activities of daily living (Jönsson et al., 2014; Franck et al., 2017; Katan and Luft, 2018). Growing evidence suggests that intensive rehabilitation programs that maximize and maintain therapy dose (i.e., number of exercise task repetitions and total therapy time) may promote further recovery and maintenance of upper limb function (Lohse et al., 2014; Veerbeek et al., 2014; Lang et al., 2015; Schneider et al., 2016; Vloothuis et al., 2016; Ward et al., 2019). Robot-assisted therapy may offer a promising approach to increase therapy dose and intensity (McCabe et al., 2015; Veerbeek et al., 2017; Daly et al., 2019). A variety of robotic devices have been proposed to train hand and wrist movements (Lambercy et al., 2007; Gupta et al., 2008; Takahashi et al., 2008; Aggogeri et al., 2019), as well as to provide sensitive and objective evaluation or therapy of motor and sensory (e.g., proprioceptive) function (Casadio et al., 2009; Kenzie et al., 2017; Mochizuki et al., 2019). However, robot-assisted therapy has so far typically been applied under constant supervision by trained personnel, who prepare and manage the complex equipment, set up the patient on or in the device, and configure the appropriate therapy plan. As a result, most robotic devices are used in short (frequently outpatient) supervised therapy sessions in clinical settings (Lum et al., 2012; Page et al., 2013; Klamroth-Marganska et al., 2014). This generates organizational and economic constraints that restrict the use of the technology (Wagner et al., 2011; Schneider et al., 2016; Rodgers et al., 2019; Ward et al., 2019). Consequently, despite claims of high intensity (Lo et al., 2010; Rodgers et al., 2019), the therapy dose achieved with robots remains limited compared to guidelines (Bernhardt et al., 2019) and pre-clinical evidence (Nudo and Milliken, 1996).

Minimally-supervised therapy, defined here as any form of therapy performed by a patient independently with minimal external intervention or supervision, is a promising approach to better harness the potential of rehabilitation technologies such as robot-assisted therapy (Ranzani et al., 2020). It could allow multiple subjects to train simultaneously in the clinic (Büsching et al., 2018), or subjects to receive robot-assisted training at home (Chi et al., 2020). Several technology-supported upper limb therapies have been proposed for home use (Wittmann et al., 2016; Ates et al., 2017; Nijenhuis et al., 2017; Chen et al., 2019; Cramer et al., 2019; Laver et al., 2020), allowing subjects to benefit from additional rehabilitative services that increase dose (Laver et al., 2020; Skirven et al., 2020). However, only a few minimally-supervised robotic devices capable of actively supporting or resisting subjects during interactive therapy exercises have been proposed (Lemmens et al., 2014; Sivan et al., 2014; Wolf et al., 2015; Hyakutake et al., 2019; McCabe et al., 2019), and, as is typical in conventional care (Qiuyang et al., 2019), most of them did not focus on the hand. Moreover, these devices only partially fulfilled the complex set of constraints imposed by minimally-supervised use.

To be effective, motivating and feasible, minimally-supervised robot-assisted therapy platforms (i.e., sets of hardware and software technologies used to perform therapy exercises) should meet a wide range of usability, human factors and hardware requirements, which are difficult to satisfy simultaneously. Besides the need to provide motivating and physiologically relevant task-oriented exercises that maximize subject engagement (Veerbeek et al., 2014; Laut et al., 2015; Johnson et al., 2020) and to monitor subjects’ ability level to continuously adapt the therapy (Metzger et al., 2014a; Hocine et al., 2015; Wittmann et al., 2016; Aminov et al., 2018), ensuring ease of use is critical (Zajc and Russold, 2019). When a robot-assisted platform is to be used in a minimally-supervised way (in clinical and home settings), specific hardware and software changes should be considered to allow a positive user experience and compliance with the therapy program and targets, as well as to ensure safe interaction. In this regard, integrating usability evaluation into the development of rehabilitation technologies has been shown to contribute to device design improvements, user satisfaction and device usability (Shah and Robinson, 2007; Power et al., 2018; Meyer et al., 2019). Unfortunately, the usability of robotic devices for upper-limb rehabilitation is only rarely evaluated and documented in the target user population before clinical tests (Pei et al., 2017; Catalan et al., 2018; Guneysu Ozgur et al., 2018; Nam et al., 2019; Tsai et al., 2019).

In this paper, we present the design of a platform for minimally-supervised robot-assisted therapy of hand function after stroke and the evaluation of its short-term usability in a single experimental session with 10 potential users in the chronic stage after stroke. The proposed platform builds on an existing high-fidelity 2-degrees-of-freedom end-effector haptic device [ReHapticKnob (RHK) (Metzger et al., 2014b)], whose concept was inspired by the HapticKnob proposed by Lambercy et al. (2007). This device was successfully applied in a clinical trial on subjects with subacute stroke, showing therapy outcomes equivalent to those of dose-matched conventional therapy in a supervised clinical setting, without any related adverse event (Ranzani et al., 2020). This was a prerequisite for exploring strategies to better exploit the robot’s unique features, such as its potential to provide minimally-supervised therapy. To make the device usable in a minimally-supervised setting, we developed an intuitive user interface (i.e., physical/hardware and graphical/software) that can be used independently by subjects in the chronic stage after stroke to perform therapy exercises with minimal supervision, either in clinical settings or at home. Additionally, two new task-oriented therapy exercises were developed for a minimally-supervised scenario; they complement the existing exercises by simultaneously training hand grasping and forearm pronosupination, which are functionally relevant movements. The goal of this paper is to present the hardware and software modifications to the platform (i.e., robotic device with new physical and virtual user interfaces and therapy exercises), as well as the results of a preliminary usability evaluation in which participants in the chronic stage after stroke used the device independently in a single session after a short explanation/familiarization period.
This work is an important step to demonstrate that subjects with chronic stroke can independently and safely use a powered robotic device for upper-limb therapy upon first exposure, highlight key design aspects that should be taken into account for maximizing usability in real-world minimally-supervised scenarios, and thereby provide a methodological basis that could be generalized to other platforms and applications.

Materials and Methods

ReHapticKnob and User Interface

The therapy platform (hardware and software) proposed in this work consists of an existing robotic device, the RHK (Metzger et al., 2014b), with a new user interface and two novel therapy exercises. The user interface comprises physical components (i.e., hardware interfaces that come into contact with the user, such as the finger pads and buttons of the RHK) and a graphical (software) component, the graphical user interface (GUI).

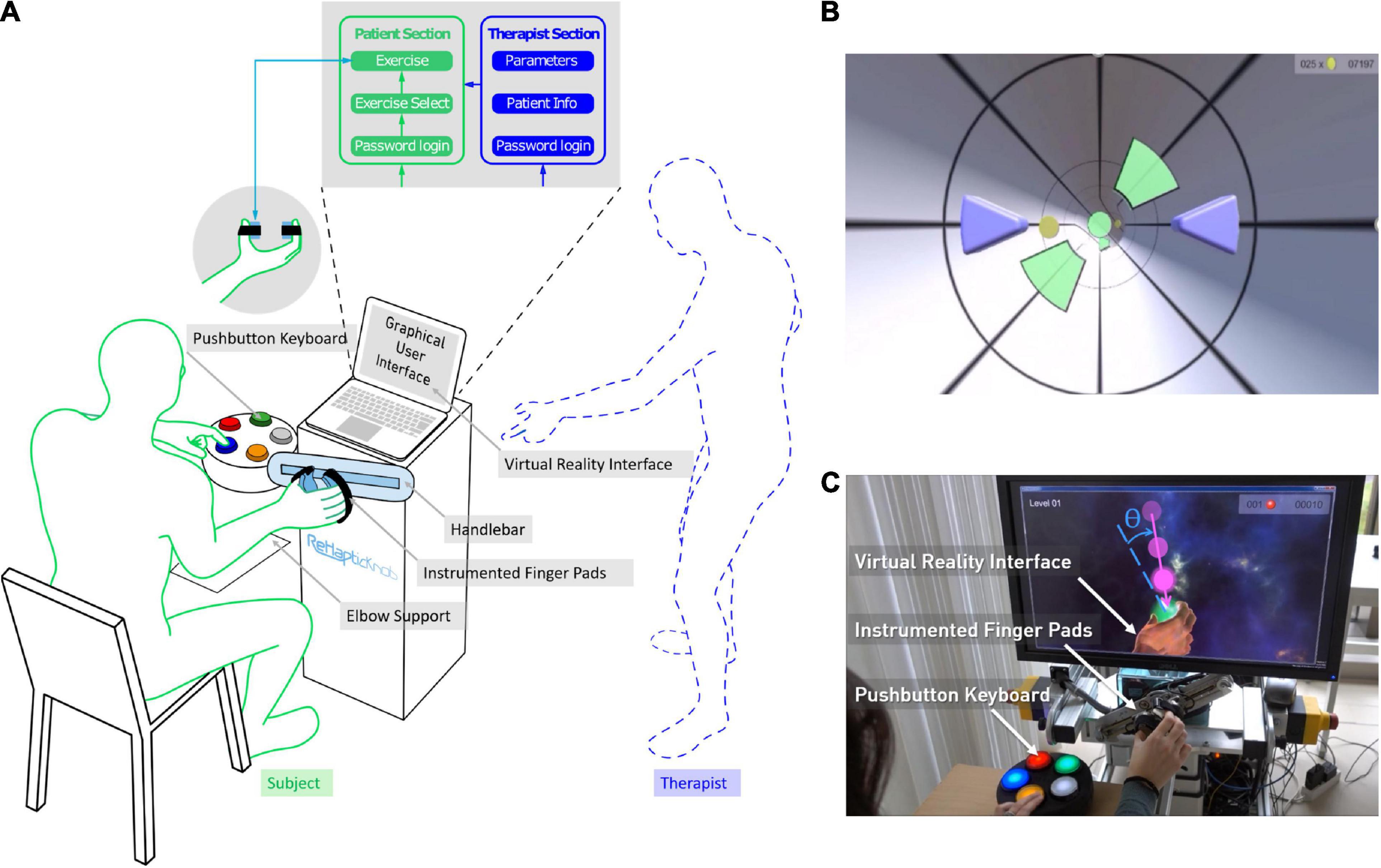

The RHK is a 2-degrees-of-freedom haptic device for assessment and therapy of hand function after stroke. It incorporates a set of automated assessments to determine the baseline difficulty of the therapy exercises (Metzger et al., 2014b), and allows training of hand opening-closing (i.e., grasping) and forearm pronosupination by rendering functionally relevant rehabilitative tasks (e.g., interaction with virtual objects) with high haptic fidelity (Metzger et al., 2014a). The user sits in front of the robot and places his/her hand inside two instrumented finger pads, which slide symmetrically on a handlebar, as shown in Figure 1. Contact between the user’s fingers and the finger pads is ensured with VELCRO straps. The simple end-effector design of the robot (compared to the typically more complex donning and doffing of exoskeletons, where joint alignment is critical) makes it an ideal candidate for independent use. To achieve and maintain appropriate limb positioning, patients are first instructed by the therapist on how to place their arm on the forearm support. In our previous clinical trials (Metzger et al., 2014b; Ranzani et al., 2020), we found this to be sufficient to avoid misalignments and compensatory movements when using the robot.

Figure 1. The ReHapticKnob therapy platform. (A) The platform consists of a haptic rehabilitation device, the ReHapticKnob, with physical (i.e., instrumented finger pads, colored pushbutton keyboard) and graphical user interfaces (GUIs) and a set of therapy exercises that can be used with minimal supervision. The GUI includes a section for the therapist to initially customize the therapy plan and a patient section through which the user can autonomously perform predefined therapy exercises. (B) Virtual reality interface of the tunnel exercise. The subject has to steer two purple avatars by opening-closing and pronosupinating the finger pads. The goal is to avoid the green obstacles and collect as many coins as possible. (C) A subject performing the sphere exercise on the ReHapticKnob. During the testing phase shown, the subject has to catch a falling sphere halo by rotating the finger pads (pronosupination). The object is caught if the hand orientation (dotted line) is aligned with the falling direction (continuous line) within a certain angular range θ. Once the object is caught, the subject squeezes it and indicates the perceived sphere stiffness by pressing the corresponding color on the pushbutton keyboard.

Considering the feedback collected from subjects and therapists in a previous clinical study under therapist supervision (Ranzani et al., 2020), we embedded the RHK into a novel therapy platform that is more user-friendly and suitable for minimally-supervised use. For this purpose, a novel GUI directly controls the execution of the therapy program and includes two sections: one for the user, and one for the therapist to customize the therapy, for example, before the first therapy session (Figure 1A). To configure the therapy for a subject, the therapist can log into a password-protected “Therapist Section,” create/update a subject profile (i.e., selected demographic data, impaired side, identification code, and a password consisting of a sequence of four colors to access the therapy plan) and select the relevant exercise parameters (i.e., derived from preliminary automated assessments) that are needed to adapt the therapy exercises to the subject’s ability level (Metzger et al., 2014b). To perform a therapy session, the subject can autonomously navigate into the “Patient Section” using an intuitive colored pushbutton keyboard (Figure 1A). The subject logs into his/her therapy plan by selecting his/her identification code and typing the assigned colored password on the pushbutton interface. The personal therapy plan displays a graphical list of all available therapy exercises, through which the user can manually navigate to select the preferred exercise.
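The colored-password login described above can be sketched as follows. This is a minimal illustration, not the platform’s implementation: the class `SubjectProfile`, the function `check_login` and the button colors in `COLORS` are hypothetical, chosen only to show how a four-color sequence entered on the pushbutton keyboard could be validated against a stored profile.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple

# Hypothetical set of keyboard button colors (the actual layout is not specified here).
COLORS = ("green", "blue", "yellow", "white")


@dataclass(frozen=True)
class SubjectProfile:
    """Hypothetical subject profile created by the therapist in the Therapist Section."""
    subject_id: str
    impaired_side: str               # e.g., "left" or "right"
    color_password: Tuple[str, ...]  # exactly four colors

    def __post_init__(self) -> None:
        # Reject passwords that are not four known colors.
        if len(self.color_password) != 4:
            raise ValueError("the password must be a sequence of four colors")
        unknown = [c for c in self.color_password if c not in COLORS]
        if unknown:
            raise ValueError(f"unknown colors in password: {unknown}")


def check_login(profile: SubjectProfile, pressed: Sequence[str]) -> bool:
    """Return True if the sequence of pressed color buttons matches the stored password."""
    return tuple(pressed) == profile.color_password


if __name__ == "__main__":
    profile = SubjectProfile("S01", "left", ("green", "blue", "green", "yellow"))
    print(check_login(profile, ["green", "blue", "green", "yellow"]))  # True
    print(check_login(profile, ["blue", "blue", "green", "yellow"]))   # False
```

Comparing the whole sequence at once means a wrong or incomplete entry simply fails, so the GUI only needs to offer a retry.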

To maximize usability and, consequently, the likelihood of therapists and subjects using the device, attention was devoted to optimizing esthetics and simplicity in all virtual displays and hardware components. The design was guided by a set of usability heuristics (Nielsen, 1995), which included visibility of feedback (e.g., showing performance feedback and unique identifiers on each user interface window), a match between the virtual and haptic displays of the platform and the corresponding real-world tasks, user control and freedom (e.g., exit or stop buttons always available), consistency and standardization of the displays’ appearance, visibility and intelligibility of the instructions for use of the system, fast system response, pleasant and minimalistic design, as well as simple error detection/warnings. Particular care was given to the placement (e.g., easily visible/retrievable), size (e.g., large enough to be easily selectable), logical ordering, appearance and color coding (e.g., red for quitting/exiting) of the buttons on both the colored pushbutton keyboard and the virtual displays (Norman, 2013; de Leon et al., 2020), and their number was limited to five, the number needed to execute all exercises. Finally, to guarantee platform modularity, a state machine performs the low-level control of the robot (i.e., position, velocity and force control implemented in LabVIEW 2016), while the GUI (Unity 5.6) handles the high-level control of the therapy session and allows different exercise types to be easily inserted or removed.
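The split between low-level robot control and high-level session control can be illustrated with a small transition table for the session flow a subject goes through (login, exercise list, exercise, logout). This is a hypothetical Python sketch: the state names, the event strings and the `Session` class are assumptions and do not reproduce the LabVIEW state machine or the Unity GUI. Ignoring unknown events is a design choice made here so that an unexpected button press never leaves the session in an undefined state.

```python
from enum import Enum, auto


class State(Enum):
    LOGIN = auto()
    EXERCISE_LIST = auto()
    EXERCISE = auto()
    LOGGED_OUT = auto()


# (current state, event) -> next state. Event names are hypothetical.
TRANSITIONS = {
    (State.LOGIN, "login_ok"): State.EXERCISE_LIST,
    (State.EXERCISE_LIST, "select_exercise"): State.EXERCISE,
    (State.EXERCISE, "exercise_done"): State.EXERCISE_LIST,
    (State.EXERCISE, "exit"): State.EXERCISE_LIST,   # exit is available during an exercise
    (State.EXERCISE_LIST, "exit"): State.LOGGED_OUT,
}


class Session:
    """High-level session flow; low-level robot control would run separately."""

    def __init__(self) -> None:
        self.state = State.LOGIN

    def handle(self, event: str) -> State:
        # Unknown (state, event) pairs leave the state unchanged.
        self.state = TRANSITIONS.get((self.state, event), self.state)
        return self.state


if __name__ == "__main__":
    s = Session()
    for ev in ["select_exercise", "login_ok", "select_exercise", "exit", "exit"]:
        print(ev, "->", s.handle(ev).name)
```

Adding a new exercise type in this scheme only adds entries to the exercise list, not new states, which is one way to keep the high-level layer modular.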

Exercises for Robot-Assisted Minimally-Supervised Therapy

The RHK includes a set of seven assessment-driven therapy exercises (Metzger et al., 2014b), which were developed following the neurocognitive therapy approach formulated by Perfetti (i.e., combining motor training with somatosensory and cognitive tasks) (Perfetti and Grimaldi, 1979). Until now, these exercises focused only on training isolated movements (i.e., grasping or pronosupination) and were administered under therapist supervision [see (Metzger et al., 2014b; Ranzani et al., 2020) for more details on the existing exercises].

To complement this set of exercises, we implemented two new exercises optimized for use in a minimally-supervised scenario and focusing on tasks that should facilitate the transition to activities of daily living. For this purpose, the exercise tasks train synchronous movements and require complex processing of combined sensory, cognitive and motor cues.

The tunnel exercise is a functional exercise focusing on synchronous coordination of grasping and pronosupination and on the sensory perception of haptic cues. The user has to move two symmetric avatars (virtual representations of the robot’s finger pads, shown as two purple triangles, see Figure 1B) through a virtual tunnel, avoiding obstacles and trying to collect as many rewards/points (e.g., coins) as possible. The exercise includes sensory cues, namely hand vibrations indicating the correct position to avoid an obstacle, stiff virtual walls that constrain the movement of the avatars inside the virtual tunnel, and changes in viscosity (i.e., velocity-dependent resistance) within the tunnel environment on both degrees of freedom to challenge the stabilization of the hand movement during navigation. Increasing difficulty levels linearly increase (i) the avatars’ speed within the tunnel (while consecutive obstacles remain at a constant distance from one another), (ii) the maximum pronation and supination locations of the apertures between obstacles, to promote an increase in the subject’s pronosupination range of motion (ROM) (based on an initial robotic assessment), and (iii) the changes in environment viscosity, while the space to pass through the obstacles and the intensity of the haptic vibration decrease linearly. One exercise block consists of a 1-min progression within the virtual tunnel, in which up to 30 obstacles have to be avoided. One exercise session consists of a series of ten 1-min blocks.
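The linear difficulty scaling of the tunnel exercise can be sketched as a linear interpolation between parameter sets for the easiest and hardest levels. The number of levels (`N_LEVELS`), the parameter names and all numeric ranges below are hypothetical placeholders in normalized units; only the direction of change (speed, pronosupination excursion and viscosity changes increase, gap width and vibration intensity decrease) follows the description above.

```python
from dataclasses import dataclass, fields

N_LEVELS = 10  # hypothetical number of difficulty levels


@dataclass(frozen=True)
class TunnelParams:
    speed: float         # avatar speed along the tunnel (normalized)
    pronosup_max: float  # max aperture location, as a fraction of the subject's pronosupination AROM
    viscosity: float     # magnitude of viscosity changes (normalized)
    gap: float           # space to pass between obstacles (normalized)
    vibration: float     # intensity of the vibration cue (0..1)


# Hypothetical values at the easiest and hardest levels.
EASIEST = TunnelParams(speed=1.0, pronosup_max=0.5, viscosity=0.0, gap=1.0, vibration=1.0)
HARDEST = TunnelParams(speed=2.0, pronosup_max=1.0, viscosity=1.0, gap=0.4, vibration=0.2)


def tunnel_params(level: int) -> TunnelParams:
    """Linearly interpolate every parameter between EASIEST (level 1) and HARDEST (level N_LEVELS)."""
    if not 1 <= level <= N_LEVELS:
        raise ValueError(f"level must be in 1..{N_LEVELS}")
    t = (level - 1) / (N_LEVELS - 1)
    return TunnelParams(**{
        f.name: getattr(EASIEST, f.name) + t * (getattr(HARDEST, f.name) - getattr(EASIEST, f.name))
        for f in fields(TunnelParams)
    })


if __name__ == "__main__":
    for lvl in (1, 5, 10):
        print(lvl, tunnel_params(lvl))
```

Because decreasing quantities simply have a smaller value in `HARDEST`, one interpolation rule covers both increasing and decreasing parameters.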

The sphere exercise is a functional exercise focusing on hand coordination during grasping and pronosupination, with a strong focus on somatosensation and memory to identify the objects that are caught. One exercise block consists of a training phase and a testing phase. In the training phase, the user moves a virtual hand and squeezes a set of virtual spheres (i.e., three to five) to memorize the color attributed to each stiffness rendered by the robot [for more details refer to Ranzani et al. (2019)]. The user can manually switch the sphere to try/squeeze by pressing a predefined button on the colored pushbutton keyboard. In the testing phase, semi-transparent spheres (halos) fall radially from a random initial position, one at a time, toward the hand. By actively rotating the robot and adjusting the hand opening, the user has to catch the falling halo. A halo is only caught if the hand aperture matches the sphere diameter within an error band of ±10 mm, and the hand pronosupination angle is aligned with the falling direction within an error band θ (see Figure 1C) that ranges from ±40° to ±15° depending on the difficulty level. When a halo is caught, the participant has to squeeze it, identify its stiffness, and indicate it using the colored pushbutton keyboard. Each testing phase lasts 3 min. At increasing difficulty levels, the number of spheres and the speed of the falling halos increase, the positioning tolerance for grasping the falling halos is reduced in the pronosupination degree of freedom, and the relative change in object stiffness decreases as a function of the subject’s stiffness discrimination ability (based on initial robotic psychophysical assessments). One exercise session consists of three blocks (i.e., three training phases, each followed by a testing phase) and lasts between 10 and 15 min.
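The catch condition for a falling halo combines the two tolerances stated above: an aperture error of at most ±10 mm and an orientation error within ±θ. The sketch below assumes, as hypotheses not given in the text, that θ shrinks linearly from 40° to 15° over a hypothetical number of levels (`N_LEVELS`) and that hand and falling-direction angles are expressed in degrees in the same reference frame.

```python
N_LEVELS = 10            # hypothetical number of difficulty levels
APERTURE_TOL_MM = 10.0   # ±10 mm aperture error band (from the text)
THETA_EASY_DEG = 40.0    # ±40° orientation band at the easiest level (from the text)
THETA_HARD_DEG = 15.0    # ±15° orientation band at the hardest level (from the text)


def theta_deg(level: int) -> float:
    """Orientation tolerance θ; linear interpolation between levels is an assumption."""
    if not 1 <= level <= N_LEVELS:
        raise ValueError(f"level must be in 1..{N_LEVELS}")
    t = (level - 1) / (N_LEVELS - 1)
    return THETA_EASY_DEG + t * (THETA_HARD_DEG - THETA_EASY_DEG)


def halo_caught(aperture_mm: float, diameter_mm: float,
                hand_angle_deg: float, fall_angle_deg: float, level: int) -> bool:
    """A halo is caught only if both the aperture and the orientation are within tolerance."""
    aperture_ok = abs(aperture_mm - diameter_mm) <= APERTURE_TOL_MM
    angle_ok = abs(hand_angle_deg - fall_angle_deg) <= theta_deg(level)
    return aperture_ok and angle_ok


if __name__ == "__main__":
    print(halo_caught(55.0, 50.0, 30.0, 0.0, level=1))   # True: 5 mm and 30° are within tolerance
    print(halo_caught(55.0, 50.0, 30.0, 0.0, level=10))  # False: 30° exceeds ±15°
```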

In both exercises, an assessment-driven tailoring regulates the level of difficulty throughout the sessions [similar to the approach described in detail in Metzger et al. (2014b)]. In short, the initial difficulty level at the beginning of the first therapy session is adapted to the subject’s ability based on two robotic assessments, which determine the subject’s active range of motion (AROM) in grasping and pronosupination and the ability to discriminate stiffnesses on the grasping degree of freedom (expressed as a “Weber fraction”). In the tunnel exercise, “AROM” scales the position and size of the virtual walls that determine the size of the tunnel, while in the sphere exercise, it scales the workspace within which the halos fall. Additionally, the “Weber fraction” scales the initial stiffness difference between spheres in the sphere exercise. At the end of an exercise block, the achieved performance (i.e., the percentage of obstacles avoided over total obstacles for the tunnel exercise, and the percentage of halos correctly caught and identified over the total number of halos for the sphere exercise) is summarized to the subject through a score displayed before the next block begins. The performance in the previous block can be used to further adapt the exercise difficulty over blocks, similarly to other exercises presented in Metzger et al. (2014b). This aims to maintain a performance of around 70%, which maximizes engagement and avoids the frustration that could arise when performance is too low or too high (Adamovich et al., 2009; Cameirão et al., 2010; Choi et al., 2011; Lambercy et al., 2011; Metzger et al., 2014b; Wittmann et al., 2016). However, since the work presented in this paper tested only a single session, which provides little data for evaluating performance-based difficulty adaptation, these aspects are not discussed in the present paper.
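The block score and a performance-driven adjustment toward the ~70% target can be sketched as below. The scores follow the definitions above; the adaptation rule (one level up when the score exceeds the target by more than a band, one level down when it falls below by more than the band) is a hypothetical staircase chosen for illustration, not the rule of Metzger et al. (2014b), and `band`, `min_level` and `max_level` are assumed values.

```python
def block_score(successes: int, total: int) -> float:
    """Percentage of successful trials in a block (obstacles avoided, or halos caught and identified)."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * successes / total


def next_level(level: int, score: float, target: float = 70.0, band: float = 10.0,
               min_level: int = 1, max_level: int = 10) -> int:
    """Hypothetical staircase: step the difficulty to keep the score near the target."""
    if score > target + band:
        level += 1  # too easy: make it harder
    elif score < target - band:
        level -= 1  # too hard: make it easier
    return max(min_level, min(max_level, level))


if __name__ == "__main__":
    score = block_score(successes=27, total=30)
    print(score, next_level(4, score))  # 90.0 5
```

The dead band around the target avoids changing the level after every small fluctuation in performance.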

Participants

A pilot study to evaluate the usability of the proposed minimally-supervised therapy platform was conducted with ten subjects with chronic stroke (>6 months post-stroke), representative of potential future users of the platform. Subjects were enrolled if they were over 18 years old, able to lift the arm against gravity, had residual ability to flex and extend the fingers, and were capable of giving informed consent and understanding two-stage commands. Subjects with clinically significant non-related pathologies (i.e., severe aphasia, severe cognitive deficits, severe pain), contraindications on ethical grounds, or known or suspected non-compliance (e.g., drug or alcohol abuse) were excluded from the study.

Pilot Study Design

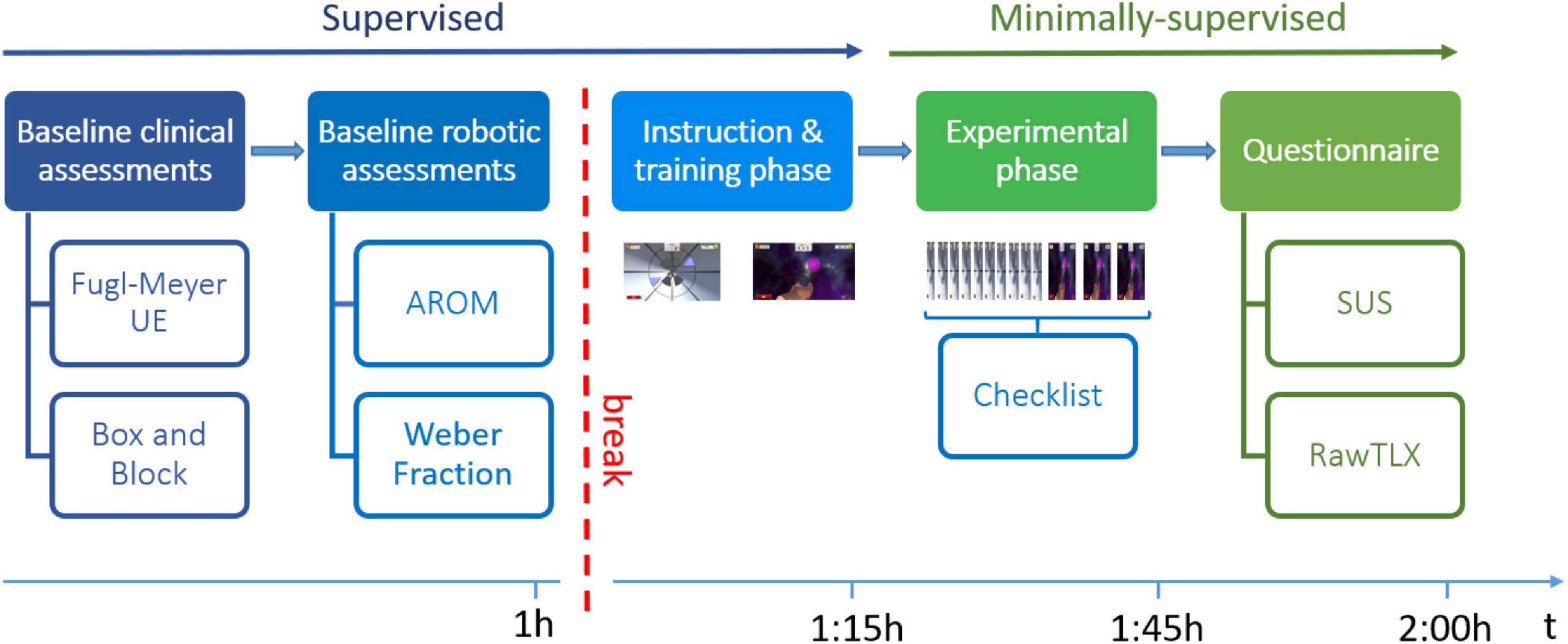

The pilot study was conducted at ETH Zurich, Switzerland, over a period of 2 weeks. Participants took part in a single test session, as illustrated in Figure 2. The session consisted of a supervised and a minimally-supervised part. In the supervised part, a professional therapist assessed the subject’s baseline ability level through a set of standard clinical and robotic assessments, which were used to customize the difficulty levels of the therapy exercises. The therapist then instructed the subject on how to perform the exercises and actively guided the subject through one block of each exercise. In this part of the experiment, subjects were encouraged to ask any questions they had about the use of the device. In the subsequent minimally-supervised part, the subject had to use the therapy platform independently to perform the tunnel exercise (10 blocks) and the sphere exercise (3 blocks). During that time, the therapist sat at the back of the room and silently observed the subject’s actions, recording in a checklist any error or action that the subject could not perform, and intervening only in case of risk or at the subject’s explicit request. The subject had to independently place his/her hand inside the finger pads, log into the therapy plan (i.e., find his/her identification code among those of other subjects and enter the personal colored password), find and start the appropriate therapy exercises from a list of all available RHK exercises, perform both exercises, and log out of the therapy plan. At the end of the experimental phase, subjects answered a set of usability questionnaires.

Figure 2. Study protocol. Abbreviations: UE, Upper Extremity; AROM, Active Range of Motion; SUS, System Usability Scale; RawTLX, Raw Task Load Index.

The usability evaluation was performed on the new, more complex exercises, which train coordinated movements as well as sensory, cognitive and motor functions, for several reasons. These exercises present cognitive challenges (e.g., understanding the exercise structure, robot commands/instructions and feedback), which directly influence the usability of the device and thus allow usability to be tested under the most demanding conditions. To avoid bias in the usability evaluation with a population in the chronic stage after stroke, who had already achieved a good amount of recovery, we avoided simpler exercises (e.g., purely sensory or purely motor), which are in most cases better suited to earlier stages of rehabilitation that would take place in a more closely supervised context. Our exercises are instead best suited for patients with mild to moderate impairments, the population that, based on our previous clinical studies (Ranzani et al., 2020), seems most suitable for training with the robot in a minimally-supervised scenario. Moreover, compared to simpler exercises, these should allow more subject engagement during minimally-supervised therapy, where there is no additional encouragement from a supervising therapist.

The study was approved by the Cantonal Ethics Committee in Zurich, Switzerland (Req-2017-00642).

Baseline Assessments

Subjects’ upper limb impairment was measured at baseline with the Fugl-Meyer Assessment of the Upper Extremity (FMA-UE) (Fugl-Meyer et al., 1975) and its wrist and hand subscore (FMA-WH). Gross manual dexterity was assessed using the Box and Block Test (BBT) (Mathiowetz et al., 1985). In addition to the clinical assessments, robotic assessments (i.e., “AROM” and stiffness discrimination ability expressed as a “Weber fraction”) were performed and used to adapt the initial difficulty level of the therapy exercises to the subject’s ability from the first block of the exercise. “AROM” assesses the subject’s ability to actively open and close the hand and pronosupinate the forearm. The “Weber fraction” describes the smallest distinguishable difference between two object stiffnesses, relative to the reference stiffness. For more details on the robotic assessments, please refer to Metzger et al. (2014b).
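For reference, the Weber fraction for stiffness discrimination is conventionally written as the just-noticeable difference in stiffness divided by the reference stiffness; the symbols below are introduced here and are not taken from the original text.

$$
W = \frac{\Delta K_{\mathrm{JND}}}{K_{\mathrm{ref}}}
$$

For example, a subject who can just distinguish a reference stiffness $K_{\mathrm{ref}}$ from a stiffness 20% larger has $W = 0.2$; a smaller $W$ indicates finer discrimination.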

Outcome Measures and Statistics

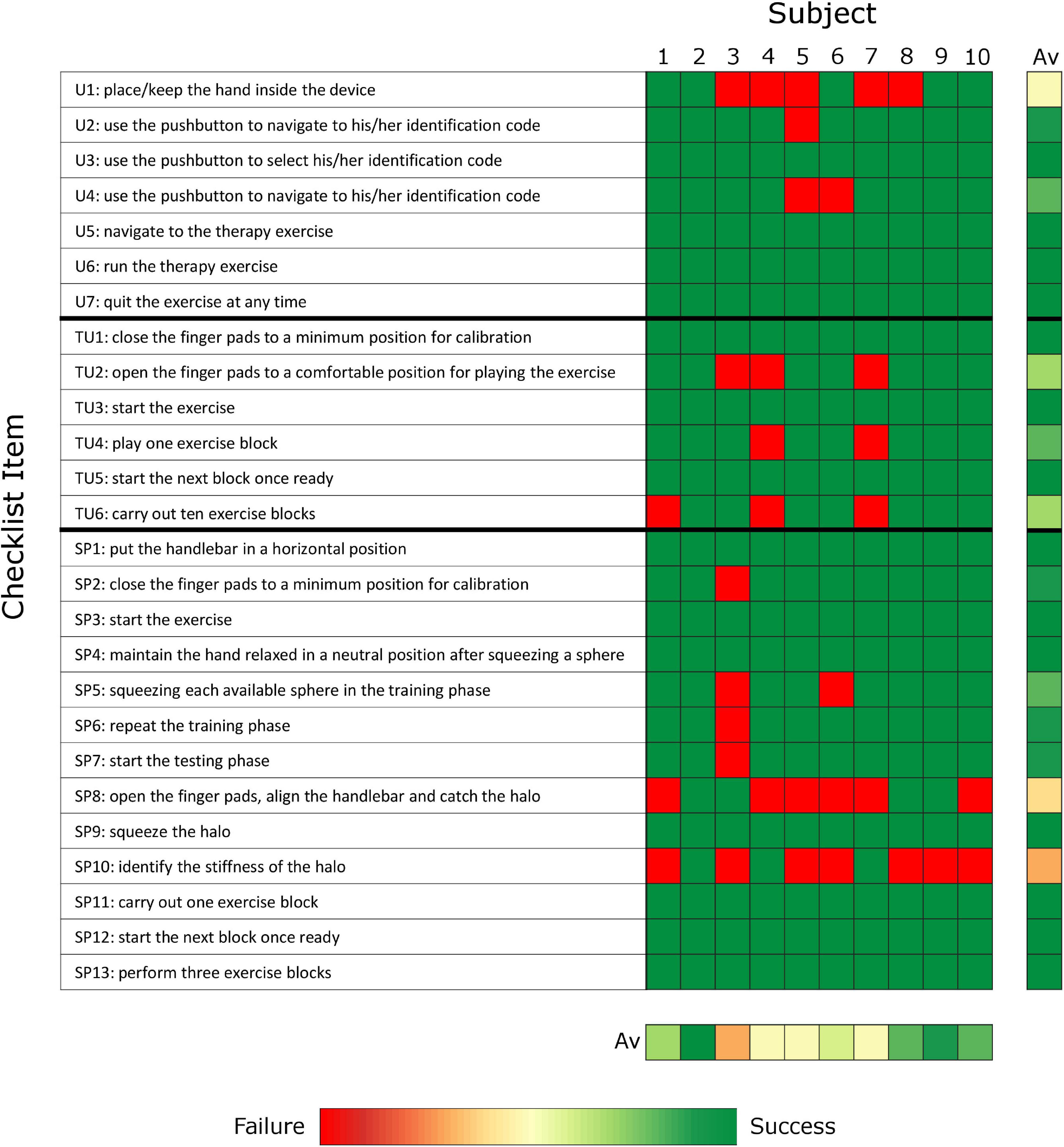

To evaluate the ability of chronic stroke subjects to use the therapy platform independently and to identify remaining usability challenges, the main outcome measures were the percentage of items that could not be performed without external intervention and the results of two questionnaires evaluating the usability and perceived workload of the user interface and exercises. A performance checklist was used to record the tasks/actions that the subject could or could not perform without supervision, or for which therapist help was required. To evaluate each component of the therapy platform separately, the performance checklist and the two questionnaires were applied to the user interfaces (i.e., GUI and hardware interfaces such as the finger pads and pushbutton keyboard) and to each of the two exercises. The performance checklist includes 26 items, described in Figure 3 (i.e., seven related to the use of the user interface, six related to the tunnel exercise, and thirteen related to the sphere exercise). For each subject, the checklist result is calculated as the percentage of items that required intervention with respect to the total number of performed items.

Figure 3. Checklist results represented as heatmap. The results averaged over subjects and items are presented on the right and on the bottom of the heat map, respectively. (Green: no problem/issue in item completion without external intervention; Red: Failure and/or external intervention required to solve the item; Av: average; U: user interface; TU: tunnel exercise, SP: sphere exercise).
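The per-subject checklist result defined above can be computed as follows. Encoding each item as `True` (intervention required), `False` (completed independently) or `None` (not performed) is an assumption made for this sketch, and the function name is hypothetical. Assuming all 26 items are performed, 2, 3.5 and 5 interventions correspond to 7.69, 13.46 and 19.23%, which is how the intervention counts reported in Results relate to the percentages in the Abstract.

```python
from typing import Optional, Sequence

N_ITEMS = 7 + 6 + 13  # user interface + tunnel + sphere items = 26


def intervention_percentage(outcomes: Sequence[Optional[bool]]) -> float:
    """Percentage of performed checklist items that required external intervention.

    True  -> intervention required
    False -> completed without intervention
    None  -> item not performed (excluded from the denominator)
    """
    performed = [o for o in outcomes if o is not None]
    if not performed:
        raise ValueError("no checklist item was performed")
    return 100.0 * sum(performed) / len(performed)


if __name__ == "__main__":
    subject = [True] * 2 + [False] * (N_ITEMS - 2)     # 2 interventions out of 26 items
    print(round(intervention_percentage(subject), 2))  # 7.69
```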

The standardized usability questionnaires were:

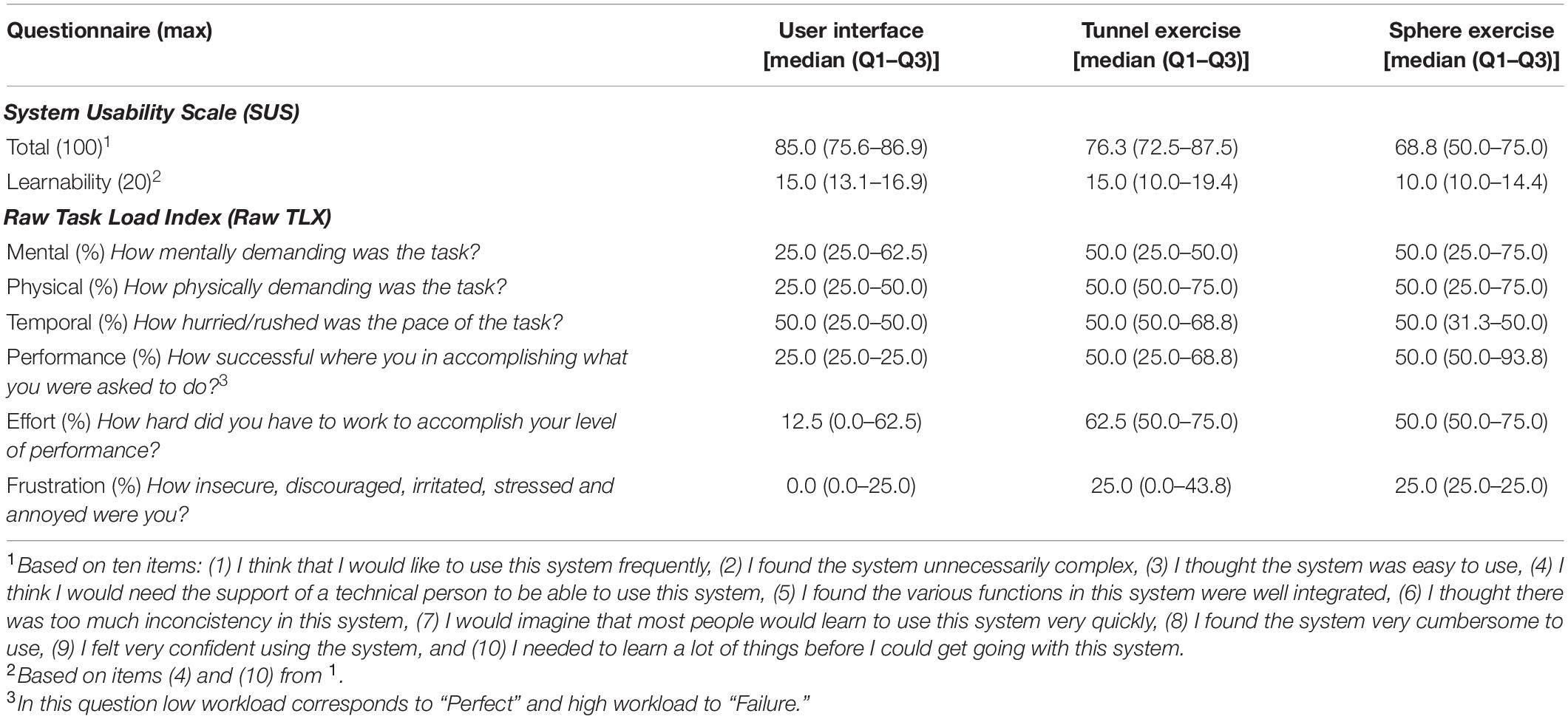

System Usability Scale (SUS) (Brooke, 1996), a ten-item questionnaire that assesses the overall usability (i.e., effectiveness, efficiency, satisfaction) of the system under investigation (i.e., the user interface and each of the two exercises separately). Two SUS items refer specifically to the “learnability” of a system (i.e., “I think that I would need the support of a technical person to be able to use this system,” “I needed to learn a lot of things before I could get going with this system”) and were considered highly important for evaluating the minimally-supervised usage scenario (Lewis and Sauro, 2009). Ideally, the total SUS score calculated from its ten items should be greater than 50 out of 100, indicating an overall usability between “OK” and “best imaginable” (Bangor et al., 2009). To evaluate whether SUS scores correlated with the subjects’ baseline characteristics, we calculated Pearson’s correlation coefficients between the SUS scores (of the user interface, tunnel exercise, and sphere exercise) of all subjects and their age, FMA-UE, FMA-WH, and BBT score, resulting in a total of 12 comparisons. For these analyses, the statistical significance threshold (initially α = 0.05) was adjusted using the Bonferroni correction for multiple comparisons, leading to a corrected α = 0.05/12 ≈ 0.0042.

Raw Task Load Index (RawTLX) (Hart, 2006), a six-item questionnaire that assesses the workload while using the system under investigation. The RawTLX is a widely used simplified form of the original NASA TLX, in which the six workload domains are evaluated individually, without calculating a total workload score through domain weighting. The workload domains assessed are: (i) mental demand (i.e., amount of mental or perceptual activity required), (ii) physical demand (i.e., amount of physical activity required), (iii) temporal demand (i.e., amount of time pressure perceived during use), (iv) overall performance (i.e., perceived level of unsuccessful performance), (v) effort (i.e., total amount of effort perceived to execute the task), and (vi) frustration level (i.e., amount of stress/irritation/discouragement perceived). Since target workload levels depend on the application, they were defined by the investigator and therapist. For the user interface, minimal workload (i.e., ≤25%) was targeted in all domains except temporal demand, for which an intermediate workload level (i.e., between 25 and 75%, inclusive) was tolerated. The exercises should be challenging but not too difficult (Adamovich et al., 2009; Choi et al., 2011), allowing the subjects to maintain actual and perceived performance around 70%. For this reason, a target workload between 50 and 75% (inclusive) was desired for the mental, physical, temporal and effort domains, and a corresponding workload between 25 and 50% was desired in the performance domain, whose workload axis is inversely proportional to the perceived performance. Finally, frustration should be avoided, so a workload ≤25% was required.
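The target workload bands above can be encoded as intervals and checked per domain, as in the following sketch. The dictionaries transcribe the bands stated in the text, with two labeled assumptions: all bounds are treated as inclusive (the text does not say so for the 25–50% performance band), and for the user-interface temporal domain, where an intermediate level was tolerated, any rating up to 75% is accepted. The function name `out_of_band` is hypothetical.

```python
from typing import Dict, List, Tuple

Band = Tuple[float, float]  # (lower, upper) in %, both treated as inclusive (assumption)

# Target bands for the user interface.
UI_BANDS: Dict[str, Band] = {
    "mental": (0, 25), "physical": (0, 25),
    "temporal": (0, 75),  # assumption: intermediate (25-75%) tolerated, lower also accepted
    "performance": (0, 25), "effort": (0, 25), "frustration": (0, 25),
}

# Target bands for the two exercises.
EXERCISE_BANDS: Dict[str, Band] = {
    "mental": (50, 75), "physical": (50, 75), "temporal": (50, 75),
    "effort": (50, 75), "performance": (25, 50), "frustration": (0, 25),
}


def out_of_band(ratings: Dict[str, float], bands: Dict[str, Band]) -> List[str]:
    """Return the workload domains whose rating (0-100%) lies outside its target band."""
    return [d for d, (lo, hi) in bands.items() if not lo <= ratings[d] <= hi]


if __name__ == "__main__":
    exercise_ratings = {"mental": 75, "physical": 50, "temporal": 50,
                        "effort": 75, "performance": 25, "frustration": 0}
    print(out_of_band(exercise_ratings, EXERCISE_BANDS))  # []
```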

The SUS and RawTLX questionnaires were translated into German by a native speaker, and each item was rated on a five-point Likert scale whose points were associated with scores of 0, 25, 50, 75, and 100%.
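Suppose each Likert position r_i ∈ {1, 2, 3, 4, 5} is mapped linearly to p_i = 25(r_i − 1), and the negatively worded even SUS items are reversed as in standard SUS scoring. Under these assumptions, the percentage mapping gives the same total as the standard SUS formula: the SUS score equals the mean of the orientation-corrected item percentages. Here c_i denotes the standard 0-4 item contribution and \tilde p_i the orientation-corrected percentage, both introduced for this derivation.

```latex
\begin{aligned}
p_i &= 25\,(r_i - 1), \qquad r_i \in \{1,2,3,4,5\},\\
c_i &= \begin{cases} r_i - 1, & i \text{ odd},\\ 5 - r_i, & i \text{ even},\end{cases}
\qquad
\tilde p_i = \begin{cases} p_i, & i \text{ odd},\\ 100 - p_i, & i \text{ even},\end{cases}\\
\tilde p_i &= 25\,c_i \quad \text{(for even } i\text{: } 100 - 25(r_i - 1) = 25(5 - r_i)\text{)},\\
\mathrm{SUS} &= 2.5\sum_{i=1}^{10} c_i = \frac{1}{10}\sum_{i=1}^{10} 25\,c_i = \frac{1}{10}\sum_{i=1}^{10} \tilde p_i .
\end{aligned}
```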

To monitor the safety of the platform during the minimally-supervised part of the experiment, adverse events and situations that could put the user’s safety at risk (e.g., triggering of the robot’s safety routines due to excessive forces or movements, or hardware/software errors) were recorded.

The baseline assessments, the answers to the SUS and TLX questionnaires, and the population results of the checklist were analyzed with descriptive statistics and are reported as median with inclusive first and third quartiles (i.e., median (Q1–Q3)) to represent central tendency and dispersion, respectively. These statistics were chosen because the relatively small sample size does not allow normality of the data to be safely assumed, and because the SUS and TLX results are ordinal, being based on five-point Likert scales (Sullivan and Artino, 2013).
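Python’s standard library computes inclusive quartiles directly through `statistics.quantiles(..., method="inclusive")`, which interpolates between order statistics so that the minimum and maximum of the data act as the 0th and 100th percentiles. The sketch below shows one way to produce the median (Q1–Q3) summaries. The helper names are hypothetical, and the input values are illustrative, not study data.

```python
import statistics
from typing import Sequence, Tuple


def median_with_quartiles(values: Sequence[float]) -> Tuple[float, float, float]:
    """Return (median, Q1, Q3) using inclusive (interpolated) quartiles.

    The middle cut point of the inclusive method coincides with the median.
    """
    if len(values) < 2:
        raise ValueError("at least two values are needed for quartiles")
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return median, q1, q3


def format_median_q1_q3(values: Sequence[float], decimals: int = 1) -> str:
    """Format as 'median (Q1–Q3)', following the reporting style of the text."""
    median, q1, q3 = median_with_quartiles(values)
    return f"{median:.{decimals}f} ({q1:.{decimals}f}–{q3:.{decimals}f})"


if __name__ == "__main__":
    # Ten illustrative SUS scores (hypothetical).
    scores = [50, 70, 75, 77.5, 82.5, 85, 85, 87.5, 90, 92.5]
    print(median_with_quartiles(scores))  # (83.75, 75.625, 86.875)
    print(format_median_q1_q3(scores))
```

With only ten values, the quartiles are interpolated between neighboring observations, so they can take values that no subject actually reported.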

Results

Experiment Characteristics

Ten subjects (four female, six male) in the chronic stage after an ischemic stroke (39.5 (27.0–60.5) months post-event) were eligible and agreed to participate in the study. The participants’ age was 60.5 (56.3–67.5) years; four had right and six had left hemisphere lesions, and all subjects were right-handed. Most subjects showed mild to moderate (Woytowicz et al., 2017) upper-limb impairment at baseline, with an FMA-UE of 41.5 (39.3–50.0) out of 66 points and an FMA-WH of 17.0 (14.0–19.5) out of 24 points. In the BBT, subjects transported 39.5 (30.0–48.8) blocks in 1 min with their impaired limb. Before enrollment, all participants were informed about the study and gave written consent.

The experiment lasted 111.5 (104.0–135.0) minutes, including 79.1 (67.0–86.0) minutes of robot use and 34.8 (24.0–48.0) minutes for baseline clinical assessments, breaks, and questionnaires. Of the robot use, the subjects spent 16.0 (14.0–20.0) minutes on baseline robotic assessments and 59.9 (53.0–67.0) minutes learning how to use the user interface and exercises (i.e., instruction and training phase, 27.6 (22.0–38.0) minutes) and testing them with minimal supervision (i.e., experimental phase, 30.6 (28.0–32.0) minutes). During the experimental phase, the therapist’s physical intervention (e.g., to assist hand movements or position the hand) or suggestions and further explanations (e.g., to repeat the login password or refresh the exercise rules) were required 3.5 (2.0–5.0) times per subject out of the 26 checklist items (see Figure 3), with the highest number of interventions required by the oldest subject (subject 3, 87 years old, seven interventions).
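Converting counts to percentages (x ↦ 100x/26) is linear and increasing, so the median and interpolated quartiles of the percentages are simply the converted median and quartiles of the counts. The sketch below shows that the 3.5 (2.0–5.0) interventions out of 26 checklist items correspond to the 13.46 (7.69–19.23)% of tasks requiring assistance reported in the Abstract. The helper name is hypothetical.

```python
TOTAL_CHECKLIST_ITEMS = 26  # number of checklist items stated in the text


def as_percent_of_items(count: float, total: int = TOTAL_CHECKLIST_ITEMS) -> float:
    """Express a number of therapist interventions as a percentage of checklist items."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * count / total


# Median and quartiles of interventions per subject, as reported in the Results.
summary = {"median": 3.5, "Q1": 2.0, "Q3": 5.0}
percentages = {name: round(as_percent_of_items(value), 2) for name, value in summary.items()}
print(percentages)  # {'median': 13.46, 'Q1': 7.69, 'Q3': 19.23}
```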

Over the duration of the study, no serious adverse events related to the robot-assisted intervention and no events that put the user’s safety at risk were observed. However, the software had to be restarted twice because safety routines were triggered (e.g., by excessive forces, positions, or velocities generated by the user). Two subjects reported a mild, temporary increase in hand muscle tone (e.g., finger flexors and/or extensors) during the therapy exercises.

User Interface

The user interface received a SUS score between good and excellent (85.0 (75.6–86.9) out of 100), as shown in Table 1 and Figure 4A. Nine subjects gave an excellent rating and reported that they would use the RHK frequently. Most subjects reported that the user interface is intuitive and that the colored button interfaces are easy to use. The oldest subject (age 87) gave a score in the “worst imaginable” range for the user interface (as well as for the sphere and tunnel exercises). The SUS scores showed an inverse relationship with the subjects’ age that was not significant after Bonferroni correction (correlation −0.737, p-value 0.015), and no linear relationship with their ability level as measured with the FMA-UE (0.170, 0.639), FMA-WH (−0.044, 0.904), and BBT (0.207, 0.566) scales.

Table 1. System Usability Scale and Raw Task Load Index results for user interface, tunnel exercise, and sphere exercise.

Figure 4. (A) System Usability Scale box-plot results for user interface (i.e., GUI, finger pads, and pushbutton keyboard), tunnel exercise and sphere exercise. (B) Raw TLX box-plot results showing perceived workload levels for user interface and (C) for tunnel and sphere exercise. black line: median; green area: target usability/workload level.

The Raw TLX results are shown in Table 1 and Figure 4B. The median perceived workload lies within the target band in all workload categories. However, the third quartile lies above the target band in mental demand, physical demand, and effort, i.e., at least a quarter of the ratings in these domains exceeded the target.

The subjects required external supervision or assistance for 14.3 (0.0–14.3)% of the checklist items related to the user interface (Figure 3). Five subjects needed help to insert or reinsert the hand into the finger pads, as the thumb can easily slip out while moving the finger pads, particularly during the execution of active tasks within the exercises. Two subjects could not remember the colored password.

Tunnel Exercise

The usability of the tunnel exercise was rated between good and excellent on the SUS (76.3 (72.5–87.5) out of 100; Table 1 and Figure 4A). Three subjects reported that the exercise is entertaining and motivating. As for the user interface, the SUS scores showed an inverse relationship with the subjects’ age that was not significant after Bonferroni correction (correlation −0.681, p-value 0.030), and no linear relationship with FMA-UE (0.342, 0.333), FMA-WH (0.019, 0.958), and BBT (0.335, 0.344) scores.

The Raw TLX results are shown in Table 1 and Figure 4C. The median perceived workload lies within the target band in all workload categories. However, the first quartile of mental demand lies below the target band, i.e., at least a quarter of the ratings in this domain fell below the target.

The subjects required external supervision or assistance in only 0.0 (0.0–16.7)% of the checklist items related to the tunnel exercise (Figure 3). Six out of ten subjects could perform the entire exercise independently without any therapist intervention. One subject could not independently perform the calibration at the beginning of the exercise because she did not understand the instructions provided by the robot (i.e., the robot asked her to open the hand to a comfortable position, and she tried to open it as much as possible). Two subjects could perform neither the calibration nor the hand opening/closing tasks of the exercise independently, as they could not actively open the hand beyond the robot’s minimum position (i.e., approximately 4 cm between thumb and index fingertip) due to their level of motor impairment (i.e., FMA-UE below 38 out of 66 points, FMA-WH below 15 out of 24 points). One subject completed only 8 out of 10 blocks, as the robot went into a safety stop (i.e., excessive force, position, or velocity). Two subjects required further explanations of the scope and rules of the exercise (e.g., they tried to hit the obstacles instead of avoiding them). Additionally, one subject reported that the tunnel speed was too fast for her, and another reported that the depth perception of the virtual reality should be improved. The median performance (i.e., number of obstacles avoided versus total number of obstacles) of the subjects within the ten blocks was 71.7 (59.1–79.9)%, which is very close to the desired 70%.
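The performance metric used here (obstacles avoided versus total obstacles) and its comparison with the 70% target can be sketched as follows. The text does not state how blocks were aggregated per subject, so pooling obstacles across blocks is an assumption, as are the function names and the block counts in the example. The same ratio would apply to the sphere exercise, with halos caught and identified in place of obstacles avoided.

```python
from typing import Sequence, Tuple

TARGET_PERFORMANCE = 70.0  # desired performance level stated in the Methods, in %


def block_performance(avoided: int, total: int) -> float:
    """Percentage of obstacles avoided in one block."""
    if total <= 0:
        raise ValueError("a block must contain at least one obstacle")
    if not 0 <= avoided <= total:
        raise ValueError("avoided must lie between 0 and total")
    return 100.0 * avoided / total


def subject_performance(blocks: Sequence[Tuple[int, int]]) -> float:
    """Pooled performance over all (avoided, total) blocks of one subject.

    Assumption: obstacles are pooled across blocks. Averaging per-block
    percentages would give a different value when block sizes differ.
    """
    avoided = sum(a for a, _ in blocks)
    total = sum(t for _, t in blocks)
    return block_performance(avoided, total)


def distance_from_target(performance: float, target: float = TARGET_PERFORMANCE) -> float:
    """Signed deviation from the target in percentage points (positive = above)."""
    return performance - target


if __name__ == "__main__":
    # Hypothetical subject with three blocks of ten obstacles each.
    perf = subject_performance([(7, 10), (8, 10), (6, 10)])
    print(perf, distance_from_target(perf))  # 70.0 0.0
```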

Sphere Exercise

The usability of the sphere exercise was rated between OK and good on the SUS [68.8 (50.0–75.0) out of 100] (Table 1 and Figure 4A). Two subjects reported that the game was too challenging and more boring than the tunnel exercise, and would recommend it for a mildly impaired population. As for the user interface and tunnel exercise, the SUS scores showed an inverse relationship with the subjects’ age that was not significant after Bonferroni correction (correlation −0.739, p-value 0.015), and no linear relationship with FMA-UE (0.092, 0.800), FMA-WH (0.014, 0.970), and BBT (0.081, 0.825).
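Applying the Bonferroni-corrected threshold from the Methods to the three age correlations reported for the user interface, tunnel exercise, and sphere exercise shows why none of them is considered significant, even though each would pass the uncorrected α = 0.05. Only the reported r and p values are used; the names in the sketch are illustrative.

```python
from typing import Dict, Tuple

ALPHA = 0.05
N_COMPARISONS = 12  # 3 SUS scores x 4 baseline measures (age, FMA-UE, FMA-WH, BBT)


def bonferroni_alpha(alpha: float, n_comparisons: int) -> float:
    """Per-comparison significance threshold under Bonferroni correction."""
    if n_comparisons < 1:
        raise ValueError("n_comparisons must be at least 1")
    return alpha / n_comparisons


# (r, p) for SUS score versus age, as reported in the Results.
age_correlations: Dict[str, Tuple[float, float]] = {
    "user interface": (-0.737, 0.015),
    "tunnel exercise": (-0.681, 0.030),
    "sphere exercise": (-0.739, 0.015),
}

threshold = bonferroni_alpha(ALPHA, N_COMPARISONS)
for name, (r, p) in age_correlations.items():
    verdict = "significant" if p < threshold else "not significant"
    print(f"{name}: r = {r:+.3f}, p = {p:.3f} -> {verdict} (alpha = {threshold:.4f})")
```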

The Raw TLX results are shown in Table 1 and, in dark blue, in Figure 4C. The median perceived workload lies within the target band for mental demand (50.0 (25.0–75.0)%), physical demand (50.0 (25.0–75.0)%), temporal demand (50.0 (31.3–50.0)%), performance (50.0 (50.0–93.8)%), effort (50.0 (50.0–75.0)%), and frustration (25.0 (25.0–25.0)%). However, the first quartile lies below the target band in mental, physical, and temporal demand, and the third quartile lies above the target band in performance.

The subjects required external supervision or assistance in 11.5 (7.7–15.4)% of the checklist items related to the sphere exercise (Figure 3). Only one subject could perform the entire exercise without any therapist intervention. During the training phase, the subjects pressed a “next” button to move on to the next sphere presented in the exercise. However, two subjects found this button confusing because its color represented both the action of moving on to the next sphere and one of the spheres that could be selected as an answer. This conflict could not be avoided with the current button interface, which has only five buttons for five possible objects/spheres. The subjects suggested avoiding this issue by always keeping a unique mapping between button color and function/object, in both the training and the test phase of the exercises. One subject did not understand how to repeat the training phase. During the test phase, only four subjects learned how to catch and identify the falling halos; the difficulties concerned understanding the catching strategy (six out of ten subjects), controlling and maintaining the grip aperture during catching or squeezing (three out of ten), and perceiving the stiffness differences (five out of ten). The median performance (i.e., number of halos caught and identified versus total number of halos) of the subjects within the three blocks was 27.0 (16.4–31.5)%.
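The section-level percentages can be cross-checked against the 26 checklist items. The text does not give the number of items per section. The split below (7 user-interface, 6 tunnel, and 13 sphere items) is our inference, chosen because it reproduces every reported median and quartile and sums to 26; it should be verified against Figure 3. A non-integer count such as 1.5 arises because the median of ten subjects averages two values.

```python
from typing import Dict, Tuple

# Inferred (not stated) number of checklist items per section.
ASSUMED_ITEMS: Dict[str, int] = {"user interface": 7, "tunnel exercise": 6, "sphere exercise": 13}

# Reported median, Q1, Q3 percentages of items requiring assistance.
REPORTED: Dict[str, Tuple[float, float, float]] = {
    "user interface": (14.3, 0.0, 14.3),
    "tunnel exercise": (0.0, 0.0, 16.7),
    "sphere exercise": (11.5, 7.7, 15.4),
}

# Item counts (median, Q1, Q3) that would produce each reported value.
CANDIDATE_COUNTS: Dict[str, Tuple[float, float, float]] = {
    "user interface": (1, 0, 1),
    "tunnel exercise": (0, 0, 1),
    "sphere exercise": (1.5, 1, 2),
}


def percent(count: float, n_items: int) -> float:
    """Percentage of items, rounded to one decimal as in the text."""
    return round(100.0 * count / n_items, 1)


for section, n_items in ASSUMED_ITEMS.items():
    recomputed = tuple(percent(c, n_items) for c in CANDIDATE_COUNTS[section])
    print(section, recomputed, recomputed == REPORTED[section])

print(sum(ASSUMED_ITEMS.values()))  # 26
```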

Additional Spontaneous Feedback

During the trial, the subjects provided additional spontaneous feedback. Three subjects recommended modifying the elbow support of the RHK: they asked to simplify the adjustment of the elbow support height relative to the finger pads, which is currently done manually with two levers, and to strap the forearm to the elbow support to avoid large elbow movements during active pronosupination tasks (e.g., in the tunnel exercise). Two subjects reported mild/moderate finger pain caused by the finger straps, which were tightened to prevent the fingers from slipping off the thin handle surface. It was also reported that such finger pads might not allow subjects with severe motor impairment to accurately control and perceive finger forces, since the fingers contact the finger pads only at the fingertips. Finally, the supervising therapist recommended that, to increase the safety of the device, all mechanical parts of the robot that could come into contact with the user (e.g., mechanical transmissions) be covered to avoid snag hazards (e.g., of the fingers).

Discussion

This paper presented the design and a rigorous preliminary usability evaluation of a therapy platform (i.e., an end-effector haptic device with new physical and graphical user interfaces and two novel therapy exercises) that aims to enable minimally-supervised robot-assisted therapy of hand function after stroke. This approach could increase the therapy dose offered to subjects after stroke, either in the clinic (e.g., by allowing the training of multiple subjects in parallel, or additional training during the subject’s spare time in an unsupervised robotic gym) or at home after discharge, with the potential to maximize and maintain long-term therapy outcomes. A careful, quantitative pilot usability evaluation makes it possible to assess early on whether the platform could be applicable in minimally-supervised conditions and which modifications are necessary to increase the feasibility of this therapy approach in a real-world minimally-supervised scenario (e.g., in the clinic).

Minimally-Supervised Therapy Is Possible Upon Short-Term Exposure

Through the development of a modular GUI and novel therapy exercises, we proposed a subject-tailored functional therapy platform that could be used upon first exposure by subjects after stroke in a single session with minimal therapist supervision. The platform was designed to balance several requirements: providing active task-oriented exercises similar to conventional exercises (Ranzani et al., 2020), guaranteeing ease of use and subject adherence to the therapy program (motivation), and requiring minimal supervision from both a clinical (therapist) and a technical (device operator) point of view (Zajc and Russold, 2019). Particular attention was dedicated to optimizing the virtual reality interfaces, in both the GUI and the exercises, to make them easy to use and learn, efficient (in terms of workload), and motivating, since it has been shown that enriched virtual reality feedback might facilitate an increase in therapy dose and consequent improvements in arm function (Laut et al., 2015; Laver et al., 2015; Johnson et al., 2020).

In clinical settings, minimally-supervised therapy has rarely been investigated and documented (Büsching et al., 2018; McCabe et al., 2019). Since the work of Cordo et al. (2009), only a few robotic devices have been proposed for minimally-supervised upper-limb therapy at home (Cordo et al., 2009; Zhang et al., 2011; Lemmens et al., 2014; Sivan et al., 2014; Wolf et al., 2015; Hyakutake et al., 2019; McCabe et al., 2019). Only one of these devices includes a virtual reality interface to increase subject motivation, and most of them provide only basic adaptive algorithms to tailor the therapy plan to the subject’s needs. The device settings (e.g., lengths, sizes, finger pads) and exercise parameters are mostly tuned manually by the therapist at the beginning of the therapy protocol, while the subject’s performance is either ignored, telemonitored, or minimally supervised by the therapist. Typically, these devices were evaluated in research settings in terms of clinical efficacy but lacked usability evaluations, which would have been more informative about, and better correlated with, their real-world adoption in a minimally-supervised scenario (Turchetti et al., 2014), as well as their safety and feasibility. To obtain meaningful usability results that transfer to real-world use, the therapy goals and the use environment should be precisely defined.

The Platform Was Attributed High Usability, With Suggestions for Minor Improvements

We achieved very positive usability results with our therapy platform, with SUS scores between good and excellent (i.e., between 70 and 90 out of 100) for the user interface and tunnel exercise and between OK and good (i.e., between 50 and 80 out of 100) for the sphere exercise, as well as TLX scores within the target workload boundaries. This study showed that, even after a brief instruction, it is easy to learn how to use the platform, operate the colored pushbutton keyboard, navigate through the GUI, insert the hand into the finger pads, and perform the exercises (particularly the tunnel exercise). Overall, the time needed to learn how to use the platform (instruction and training phase) and to perform the experiment with minimal supervision (experimental phase) seems adequate for a first use (i.e., less than 60 min). The time required for testing under minimal supervision matched our expectation (i.e., approximately 10 min for the test phase of each exercise, plus ten minutes for training phases and setup). The instruction and learning phases were relatively short for a first exposure (i.e., approx. 30 min). These results indirectly support the feasibility of minimally-supervised use of the platform and suggest that future users could be introduced to the platform within one or two therapy sessions. The usability scores showed an inverse trend with age, but not with the subjects’ impairment level (global, distal, or related to manual dexterity), with the worst scores given by the oldest subject (age 87). However, this trend was not significant after Bonferroni correction and should be interpreted with caution given our small sample size.

Usability evaluations of rehabilitation robots reported in the literature yielded scores between 36 and 90 out of 100, using different usability instruments and methods, including the SUS (Pei et al., 2017), the “Cognitive Walkthrough” and “Think Aloud” methods (Valdés et al., 2014), and custom-made questionnaires or checklists (Chen et al., 2015; Smith, 2015), which can be based on the Technology Acceptance Model (Davis, 1985). Only Sivan et al. (2014) proposed a basic usability evaluation of a minimally-supervised robotic platform, considering the time needed to learn to use the device independently and the total time of device use, and, based on the experiments and user feedback, identified design aspects that could be improved. Human-centered design based on usability evaluations has become best practice in the medical field in recent years (Wiklund and Wilcox, 2005; Oviatt, 2006; Shah and Robinson, 2006; Blanco et al., 2016; International Organization for Standardization, 2019), but eliciting standardized usability requirements and evaluations remains particularly challenging due to the heterogeneity of user groups, needs, and environments (e.g., clinic or home) (Shah and Robinson, 2006), and the small sample sizes typically considered in this type of study (van Ommeren et al., 2018). Therefore, it is generally difficult to compare usability evaluations across different platforms.

The usability evaluation proposed in this work makes it possible to quantify different aspects of usability, such as platform usability and learnability, perceived workload, and the ability to independently perform the tasks required during minimally-supervised use of the platform. Our usability evaluation methods and results are not limited to the specific therapy platform proposed in this work, but can be generalized to most robotic therapy platforms. Identifying usability issues early, with a small sample during platform development, makes it possible to address design aspects that could compromise the clinical applicability and testing of the platform with patients, and ultimately helps reduce pilot-testing duration and associated costs. These issues might otherwise only be noticed in longer, resource-demanding clinical studies and would then require corrections and additional retesting. For instance, through our detailed usability analysis, we highlighted key aspects of the physical and graphical user interfaces (e.g., handle size and shape, as well as button shape and color coding) and of the exercise architecture (e.g., catching/grasping strategies closer to activities of daily living). These aspects would not affect the feasibility of using the platform in clinical settings but would certainly affect the adoption of this device, as well as of other similar upper-limb robotic devices, in minimally-supervised settings. Finally, the GUI and pushbutton interface proposed in this work achieved very good usability at first exposure, suggesting that they are a valid approach to achieving minimally-supervised therapy with most unilateral upper-limb robotic devices and therapy exercises.

Therapy Exercises Are Functional, Motivating and Respect Target Workload Levels

The mental, physical, and effort workloads in the exercises were rather high, while the frustration and performance workloads were rather low. The overall usability was high for both exercises. This is a promising result, indicating that the two new functional/synchronous exercises are engaging without being overly frustrating. Improvements are needed to slightly increase the mental/cognitive workload in the tunnel exercise and the physical workload in the sphere exercise. In the sphere exercise, however, the task complexity should be slightly reduced, since the performance achieved is still too low with respect to the target level (i.e., 70%) and the performance workload reaches or exceeds the upper limit of its target band. Detailed descriptions of minimally-supervised task-oriented exercises (e.g., requiring the functional training of multiple degrees of freedom) are rarely presented in the literature (Lemmens et al., 2014; Sivan et al., 2014; Wolf et al., 2015). Such exercises often lack engaging interfaces to enhance subject motivation (Zhang et al., 2011; McCabe et al., 2019) and typically focus on pure motor training, neglecting sensory and cognitive abilities. Based on the level of task complexity and on the usability results, our therapy exercises could be recommended for late stages of rehabilitation in a mildly/moderately impaired population. They could well complement the exercises previously implemented on the rehabilitation robot, which train either grasping or forearm pronosupination during passive proprioceptive tasks or active manipulation tasks (Metzger et al., 2014a).

The Platform Is Safe and Could Be Exploited for a Continuum of Robot-Assisted Care

After a guided instruction phase, our test aimed to emulate a minimally-supervised environment in which the therapist intervened only when the subject required help, as in other studies performed in real-life minimally-supervised conditions (Lemmens et al., 2014; Sivan et al., 2014; Hyakutake et al., 2019). Throughout the test, therapist intervention was needed a median of 3.5 times per subject out of the 26 checklist items, mostly due to misunderstanding of the instructions or minor software inconsistencies (e.g., unclear feedback, unclear color-function relations), without critical safety-related problems that would affect the applicability of the system with minimal supervision. These errors would likely not occur if the subjects were given more time for instruction and training. A continuum of use of our platform (over a longer time span) from supervised to minimally-supervised conditions would allow users to familiarize themselves with the system during supervised sessions in the clinic and then continue the therapy seamlessly once the therapist is confident that they can train safely on their own. Minimizing interventions is useful in the clinic, where a single therapist could supervise multiple subjects, and is essential in home environments, where external supervision is not always available or would require additional communication channels [e.g., telerehabilitation (Wolf et al., 2015)]. Safety and customization could be further increased through additional integrated robotic assessments. For example, two subjects reported a mild temporary increase in muscle tone, which can be physiologically induced by the active nature of the robotic assessments and exercises (Veerbeek et al., 2017). An increase in hand muscle tone may cause pain and negatively affect recovery, but it could be monitored online throughout the therapy using robotic assessments incorporated into the therapy exercises (Ranzani et al., 2019).

Limitations

The results of this pilot study should be interpreted in light of the relatively small sample size, which is, however, considered sufficient to identify the majority of usability challenges (Virzi, 1992). The results reflect the usability of the platform for a mildly/moderately impaired population, which arguably is the target population for such a minimally-supervised therapy platform, but they should also be validated at different stages after stroke (e.g., subacute). The reported usability results apply to a population without color blindness, since most of the user interfaces rely on color perception; numbers or symbols could be added for people with color blindness. The experiment lasted only one session, so it was not possible to evaluate how the subjects would learn to use the system over the longer term and in real-world minimally-supervised conditions, e.g., whether their motivation would eventually drop after a few sessions. For the same reason, the performance-based difficulty adaptation algorithms will be investigated further in multi-session experiments. Moreover, the scales used to evaluate the usability and workload of our platform only partly capture the overall user experience, which should also account for user emotions, preferences, beliefs, and physical and psychological responses before and after longer use of the platform (Petrie and Bevan, 2009; International Organization for Standardization, 2019; Meyer et al., 2019). Additionally, within this pilot study, it was not possible to implement the identified usability adjustments and re-test the platform after the modifications; this should be assessed in the future. Finally, the presence of the technology developers during parts of the study (e.g., instruction and training phase) might have indirectly biased the subjects’ usability evaluation.
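The small-sample argument can be made concrete with the cumulative binomial problem-discovery model commonly used in this context: if each user independently encounters a given problem with probability p, the problem is seen by at least one of n users with probability 1 − (1 − p)^n. The values of p below are illustrative assumptions, since the per-problem detection rates of this study are unknown, and the function name is hypothetical.

```python
def expected_discovery(p: float, n_users: int) -> float:
    """Expected share of usability problems seen by at least one of n_users.

    Cumulative binomial model: each problem is encountered independently by
    each user with probability p, so it is missed by everyone with
    probability (1 - p) ** n_users.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability")
    if n_users < 0:
        raise ValueError("n_users must be non-negative")
    return 1.0 - (1.0 - p) ** n_users


if __name__ == "__main__":
    # p values are illustrative assumptions, not estimates from this study.
    for p in (0.1, 0.3):
        print(p, round(expected_discovery(p, 10), 3))
```

With ten users, a problem met by 30% of users would be found with a probability of about 0.97, whereas one met by only 10% of users would be found with a probability of about 0.65. Rare problems can therefore still be missed in a sample of this size.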

Future Directions

Future research should investigate how to equip rehabilitation robots with further intelligence to automatically propose therapy plans and settings based on objective measures, and to provide comprehensive digital reports that allow remote therapists to monitor and document subjects’ progress. To be usable in a real-world minimally-supervised scenario, the therapy platform would require the minor adjustments identified in this study. Regarding the hardware, the finger pads should be wider to avoid finger slippage, and the pushbutton keyboard should include more buttons to allow consistent color-function and color-object mapping in the GUI and exercises (e.g., one or two buttons dedicated to exercise control or quitting, to avoid color overlaps at difficulty levels requiring five objects/colors, such as in the sphere exercise). All mechanical parts of the robot should be covered to avoid snag hazards, and an optical fingerprint reader could be added to simplify access of multiple users to their therapy programs without the need to remember colored passwords. Regarding the software, based on the successful proof of concept with our new minimally-supervised exercises, the existing assessment-driven supervised therapy exercises proposed by Metzger et al. (2014b) will be redesigned to be usable with minimal supervision. As for the sphere exercise, attention should be devoted to the clarity of instructions and feedback, and to task complexity and its correspondence to real-world actions. The GUI should provide feedback to the therapist (e.g., subject performance and statistics) and options to further customize the exercises (e.g., simplified graphical content for subjects with attention or cognitive deficits). Finally, a long-term study is required to evaluate the feasibility and usability of a continuum of robot-assisted care from supervised to minimally-supervised conditions, and a mobile/portable device should be developed to allow the application of this approach in the home environment as well.

Implications and Conclusions

The goal of this work was to develop a minimally-supervised therapy platform that allows functional, personalized, and motivating task-oriented exercises at the level of the hand, and to evaluate its usability in a single-session pilot study. Our findings suggest that a powered robot-assisted therapy device that meets usability and perceived-workload requirements can be used safely and intuitively by chronic stroke patients in a single session with minimal supervision. This pilot evaluation allowed us to identify further design improvements needed to increase the platform’s usability and acceptance among users. Our results open the possibility of using active robotic devices with minimal supervision to complement conventional therapies in real-world settings, offer an increased dose with existing resources, and create a continuum of care that progressively increases subject involvement and autonomy from the clinic to home.

Data Availability Statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author/s.

Ethics Statement

The studies involving human participants were reviewed and approved by Cantonal Ethics Committee Zurich, Switzerland. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

RR performed the data analysis and wrote the manuscript together with OL, JH, and RG. LE and RR implemented the graphical user interface and the tunnel exercise. FV and RR implemented the sphere exercise. RR, OL, JH, and RG defined the study protocol. JH was responsible for patient recruitment and screening. BE performed the experimental sessions with JH and RR. All authors contributed to the article and approved the submitted version.

Funding

This work was supported by the European Project SoftPro (No. 688857) and the Swiss State Secretariat for Education, Research and Innovation SERI under contract number 15.0283-1.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors would like to thank all the volunteers that participated in the pilot study, and Dominik G. Wyser and Jan T. Meyer for their valuable insights and discussions during the writing of this work.

References

Adamovich, S. V., Fluet, G. G., Merians, A. S., Mathai, A., and Qiu, Q. (2009). Incorporating haptic effects into three-dimensional virtual environments to train the hemiparetic upper extremity. IEEE Trans. Neural Syst. Rehabil. Eng. 17, 512–520. doi: 10.1109/tnsre.2009.2028830

Aggogeri, F., Mikolajczyk, T., and O’Kane, J. (2019). Robotics for rehabilitation of hand movement in stroke survivors. Adv. Mech. Eng. 11:1687814019841921.

Aminov, A., Rogers, J. M., Middleton, S., Caeyenberghs, K., and Wilson, P. H. (2018). What do randomized controlled trials say about virtual rehabilitation in stroke? A systematic literature review and meta-analysis of upper-limb and cognitive outcomes. J. Neuroeng. Rehabil. 15:29.

Ates, S., Haarman, C. J., and Stienen, A. H. (2017). SCRIPT passive orthosis: design of interactive hand and wrist exoskeleton for rehabilitation at home after stroke. Autonomous Robots 41, 711–723. doi: 10.1007/s10514-016-9589-6

Bangor, A., Kortum, P., and Miller, J. (2009). Determining what individual SUS scores mean: adding an adjective rating scale. J. Usability Stud. 4, 114–123.

Bernhardt, J., Hayward, K. S., Dancause, N., Lannin, N. A., Ward, N. S., Nudo, R. J., et al. (2019). A stroke recovery trial development framework: consensus-based core recommendations from the second stroke recovery and rehabilitation roundtable. Int. J. Stroke 14, 792–802. doi: 10.1177/1747493019879657

Blanco, T., Berbegal, A., Blasco, R., and Casas, R. (2016). Xassess: crossdisciplinary framework in user-centred design of assistive products. J. Eng. Design 27, 636–664. doi: 10.1080/09544828.2016.1200717

Büsching, I., Sehle, A., Stürner, J., and Liepert, J. (2018). Using an upper extremity exoskeleton for semi-autonomous exercise during inpatient neurological rehabilitation-a pilot study. J. Neuroeng. Rehabil. 15:72.

Cameirão, M. S., Badia, S. B., Oller, E. D., and Verschure, P. F. (2010). Neurorehabilitation using the virtual reality based Rehabilitation Gaming System: methodology, design, psychometrics, usability and validation. J. Neuroeng. Rehabil. 7:48. doi: 10.1186/1743-0003-7-48

Casadio, M., Morasso, P., Sanguineti, V., and Giannoni, P. (2009). Minimally assistive robot training for proprioception enhancement. Exp. Brain Res. 194, 219–231. doi: 10.1007/s00221-008-1680-6

Catalan, J. M., Garcia, J., Lopez, D., Diez, J., Blanco, A., Lledo, L. D., et al. (2018). “Patient evaluation of an upper-limb rehabilitation robotic device for home use,” in Proceedings of the 2018 7th IEEE International Conference on Biomedical Robotics and Biomechatronics (Biorob), (Enschede: IEEE), 450–455.

Chen, M.-H., Huang, L.-L., and Wang, C.-H. (2015). Developing a digital game for stroke patients’ upper extremity rehabilitation–design, usability and effectiveness assessment. Proc. Manuf. 3, 6–12. doi: 10.1016/j.promfg.2015.07.101

Chen, Y., Abel, K. T., Janecek, J. T., Chen, Y., Zheng, K., and Cramer, S. C. (2019). Home-based technologies for stroke rehabilitation: a systematic review. Int. J. Med. Inform. 123, 11–22.

Chi, N.-F., Huang, Y.-C., Chiu, H.-Y., Chang, H.-J., and Huang, H.-C. (2020). Systematic review and meta-analysis of home-based rehabilitation on improving physical function among home-dwelling patients with a stroke. Arch. Phys. Med. Rehabil. 101, 359–373. doi: 10.1016/j.apmr.2019.10.181

Choi, Y., Gordon, J., Park, H., and Schweighofer, N. (2011). Feasibility of the adaptive and automatic presentation of tasks (ADAPT) system for rehabilitation of upper extremity function post-stroke. J. Neuroeng. Rehabil. 8:42. doi: 10.1186/1743-0003-8-42

Cordo, P., Lutsep, H., Cordo, L., Wright, W. G., Cacciatore, T., and Skoss, R. (2009). Assisted movement with enhanced sensation (AMES): coupling motor and sensory to remediate motor deficits in chronic stroke patients. Neurorehabil. Neural Repair 23, 67–77. doi: 10.1177/1545968308317437

Cramer, S. C., Dodakian, L., Le, V., See, J., Augsburger, R., McKenzie, A., et al. (2019). Efficacy of home-based telerehabilitation vs in-clinic therapy for adults after stroke: a randomized clinical trial. JAMA Neurol. 76, 1079–1087. doi: 10.1001/jamaneurol.2019.1604

Daly, J. J., McCabe, J. P., Holcomb, J., Monkiewicz, M., Gansen, J., Pundik, S., et al. (2019). Long-Dose intensive therapy is necessary for strong, clinically significant, upper limb functional gains and retained gains in Severe/Moderate chronic stroke. Neurorehabil. Neural Repair 33, 523–537. doi: 10.1177/1545968319846120

Davis, F. D. (1985). A Technology Acceptance Model for Empirically Testing New End-user Information Systems: Theory and Results. Cambridge, MA: Massachusetts Institute of Technology.

de Leon, G. J. L., Gratuito, J. P. M., and Polancos, R. V. (2020). “The impact of command buttons, entry fields, and graphics on single usability metric (SUM),” in Proceedings of the International Conference on Industrial Engineering and Operations Management Dubai, Dubai.

Franck, J. A., Smeets, R. J. E. M., and Seelen, H. A. M. (2017). Changes in arm-hand function and arm-hand skill performance in patients after stroke during and after rehabilitation. PLoS One 12:e0179453. doi: 10.1371/journal.pone.0179453

Fugl-Meyer, A. R., Jääskö, L., Leyman, I., Olsson, S., and Steglind, S. (1975). The post-stroke hemiplegic patient. 1. a method for evaluation of physical performance. Scand. J. Rehabil. Med. 7, 13–31.

Guneysu Ozgur, A., Wessel, M. J., Johal, W., Sharma, K., Özgür, A., Vuadens, P., et al. (2018). “Iterative design of an upper limb rehabilitation game with tangible robots,” in Proceedings of the 2018 ACM/IEEE International Conference on Human-Robot Interaction, Chicago, IL, 241–250.

Gupta, A., O’Malley, M. K., Patoglu, V., and Burgar, C. (2008). Design, control and performance of RiceWrist: a force feedback wrist exoskeleton for rehabilitation and training. Int. J. Robot. Res. 27, 233–251. doi: 10.1177/0278364907084261

Hart, S. G. (2006). “NASA-task load index (NASA-TLX); 20 years later,” in Proceedings of the Human Factors and Ergonomics Society Annual Meeting, (Los Angeles, CA: SAGE Publications), 904–908. doi: 10.1177/154193120605000909

Hocine, N., Gouaïch, A., Cerri, S. A., Mottet, D., Froger, J., and Laffont, I. (2015). Adaptation in serious games for upper-limb rehabilitation: an approach to improve training outcomes. User Model. User Adapted Interact. 25, 65–98. doi: 10.1007/s11257-015-9154-6

Hyakutake, K., Morishita, T., Saita, K., Fukuda, H., Shiota, E., Higaki, Y., et al. (2019). Effects of home-based robotic therapy involving the single-joint hybrid assistive limb robotic suit in the chronic phase of stroke: a pilot study. BioMed Res. Int. 2019:5462694.

International Organization for Standardization (2019). ISO 9241: Ergonomics of Human-System Interaction. Part 210: Human-Centred Design for Interactive Systems. Geneva: International Organization for Standardization.

Johnson, L., Bird, M.-L., Muthalib, M., and Teo, W.-P. (2020). An innovative STRoke Interactive Virtual thErapy (STRIVE) online platform for community-dwelling stroke survivors: a randomised controlled trial. Arch. Phys. Med. Rehabil. 101, 1131–1137. doi: 10.1016/j.apmr.2020.03.011

Jönsson, A.-C., Delavaran, H., Iwarsson, S., Ståhl, A., Norrving, B., and Lindgren, A. (2014). Functional status and patient-reported outcome 10 years after stroke: the Lund Stroke Register. Stroke 45, 1784–1790. doi: 10.1161/strokeaha.114.005164

Kenzie, J. M., Semrau, J. A., Hill, M. D., Scott, S. H., and Dukelow, S. P. (2017). A composite robotic-based measure of upper limb proprioception. J. Neuroeng. Rehabil. 14, 1–12.

Klamroth-Marganska, V., Blanco, J., Campen, K., Curt, A., Dietz, V., Ettlin, T., et al. (2014). Three-dimensional, task-specific robot therapy of the arm after stroke: a multicentre, parallel-group randomised trial. Lancet Neurol. 13, 159–166.

Lambercy, O., Dovat, L., Gassert, R., Burdet, E., Teo, C. L., and Milner, T. (2007). A haptic knob for rehabilitation of hand function. IEEE Trans. Neural Syst. Rehabil. Eng. 15, 356–366. doi: 10.1109/tnsre.2007.903913

Lambercy, O., Dovat, L., Yun, H., Wee, S. K., Kuah, C. W., Chua, K. S., et al. (2011). Effects of a robot-assisted training of grasp and pronation/supination in chronic stroke: a pilot study. J. Neuroeng. Rehabil. 8:63.

Lang, C. E., Lohse, K. R., and Birkenmeier, R. L. (2015). Dose and timing in neurorehabilitation: prescribing motor therapy after stroke. Curr. Opin. Neurol. 28:549. doi: 10.1097/wco.0000000000000256

Laut, J., Cappa, F., Nov, O., and Porfiri, M. (2015). Increasing patient engagement in rehabilitation exercises using computer-based citizen science. PLoS One 10:e0117013. doi: 10.1371/journal.pone.0117013

Laver, K., George, S., Thomas, S., Deutsch, J., and Crotty, M. (2015). Virtual reality for stroke rehabilitation: an abridged version of a Cochrane review. Eur. J. Phys. Rehabil. Med. 51, 497–506.

Laver, K. E., Adey-Wakeling, Z., Crotty, M., Lannin, N. A., George, S., and Sherrington, C. (2020). Telerehabilitation services for stroke. Cochrane Database Syst. Rev. 2020:CD010255.

Lemmens, R. J., Timmermans, A. A., Janssen-Potten, Y. J., Pulles, S. A., Geers, R. P., Bakx, W. G., et al. (2014). Accelerometry measuring the outcome of robot-supported upper limb training in chronic stroke: a randomized controlled trial. PLoS One 9:e96414. doi: 10.1371/journal.pone.0096414

Lewis, J. R., and Sauro, J. (2009). “The factor structure of the system usability scale,” in Proceedings of the International Conference on Human Centered Design, (Berlin: Springer), 94–103.

Lo, A. C., Guarino, P. D., Richards, L. G., Haselkorn, J. K., Wittenberg, G. F., Federman, D. G., et al. (2010). Robot-assisted therapy for long-term upper-limb impairment after stroke. N. Engl. J. Med. 362, 1772–1783.

Lohse, K. R., Lang, C. E., and Boyd, L. A. (2014). Is more better? Using metadata to explore dose–response relationships in stroke rehabilitation. Stroke 45, 2053–2058. doi: 10.1161/strokeaha.114.004695

Lum, P. S., Godfrey, S. B., Brokaw, E. B., Holley, R. J., and Nichols, D. (2012). Robotic approaches for rehabilitation of hand function after stroke. Am. J. Phys. Med. Rehabil. 91, S242–S254.

Mathiowetz, V., Volland, G., Kashman, N., and Weber, K. (1985). Adult norms for the Box and Block Test of manual dexterity. Am. J. Occup. Ther. 39, 386–391. doi: 10.5014/ajot.39.6.386

McCabe, J., Monkiewicz, M., Holcomb, J., Pundik, S., and Daly, J. J. (2015). Comparison of robotics, functional electrical stimulation, and motor learning methods for treatment of persistent upper extremity dysfunction after stroke: a randomized controlled trial. Arch. Phys. Med. Rehabil. 96, 981–990. doi: 10.1016/j.apmr.2014.10.022

McCabe, J. P., Henniger, D., Perkins, J., Skelly, M., Tatsuoka, C., and Pundik, S. (2019). Feasibility and clinical experience of implementing a myoelectric upper limb orthosis in the rehabilitation of chronic stroke patients: a clinical case series report. PLoS One 14:e0215311. doi: 10.1371/journal.pone.0215311

Metzger, J.-C., Lambercy, O., Califfi, A., Conti, F. M., and Gassert, R. (2014a). Neurocognitive robot-assisted therapy of hand function. IEEE Trans. Haptics 7, 140–149. doi: 10.1109/toh.2013.72

Metzger, J.-C., Lambercy, O., Califfi, A., Dinacci, D., Petrillo, C., Rossi, P., et al. (2014b). Assessment-driven selection and adaptation of exercise difficulty in robot-assisted therapy: a pilot study with a hand rehabilitation robot. J. Neuroeng. Rehabil. 11:154. doi: 10.1186/1743-0003-11-154

Meyer, J. T., Schrade, S. O., Lambercy, O., and Gassert, R. (2019). “User-centered design and evaluation of physical interfaces for an exoskeleton for paraplegic users,” in Proceedings of the 2019 IEEE 16th International Conference on Rehabilitation Robotics (ICORR), (Toronto, ON: IEEE), 1159–1166.

Mochizuki, G., Centen, A., Resnick, M., Lowrey, C., Dukelow, S. P., and Scott, S. H. (2019). Movement kinematics and proprioception in post-stroke spasticity: assessment using the Kinarm robotic exoskeleton. J. Neuroeng. Rehabil. 16, 1–13.

Morris, J. H., Van Wijck, F., Joice, S., and Donaghy, M. (2013). Predicting health related quality of life 6 months after stroke: the role of anxiety and upper limb dysfunction. Disability Rehabil. 35, 291–299. doi: 10.3109/09638288.2012.691942

Nam, H. S., Hong, N., Cho, M., Lee, C., Seo, H. G., and Kim, S. (2019). Vision-assisted interactive human-in-the-loop distal upper limb rehabilitation robot and its clinical usability test. Appl. Sci. 9:3106. doi: 10.3390/app9153106

Nielsen, J. (1995). 10 Usability Heuristics for User Interface Design, Vol. 1. California: Nielsen Norman Group.

Nijenhuis, S. M., Prange-Lasonder, G. B., Stienen, A. H., Rietman, J. S., and Buurke, J. H. (2017). Effects of training with a passive hand orthosis and games at home in chronic stroke: a pilot randomised controlled trial. Clin. Rehabil. 31, 207–216. doi: 10.1177/0269215516629722

Norman, D. (2013). The Design of Everyday Things: Revised and Expanded Edition. New York, NY: Basic Books.

Nudo, R. J., and Milliken, G. W. (1996). Reorganization of movement representations in primary motor cortex following focal ischemic infarcts in adult squirrel monkeys. J. Neurophysiol. 75, 2144–2149. doi: 10.1152/jn.1996.75.5.2144

Oviatt, S. (2006). “Human-centered design meets cognitive load theory: designing interfaces that help people think,” in Proceedings of the 14th ACM international conference on Multimedia, Santa Barbara, CA, 871–880.

Page, S. J., Hill, V., and White, S. (2013). Portable upper extremity robotics is as efficacious as upper extremity rehabilitative therapy: a randomized controlled pilot trial. Clin. Rehabil. 27, 494–503. doi: 10.1177/0269215512464795

Pei, Y.-C., Chen, J.-L., Wong, A. M., and Tseng, K. C. (2017). An evaluation of the design and usability of a novel robotic bilateral arm rehabilitation device for patients with stroke. Front. Neurorobot. 11:36. doi: 10.3389/fnbot.2017.00036

Petrie, H., and Bevan, N. (2009). “The evaluation of accessibility, usability, and user experience,” in The Universal Access Handbook, Vol. 1, ed. C. Stephanidis (Boca Raton, FL: CRC Press), 1–16. doi: 10.1201/9781420064995-c20

Power, V., de Eyto, A., Hartigan, B., Ortiz, J., and O’Sullivan, L. W. (2018). “Application of a user-centered design approach to the development of xosoft–a lower body soft exoskeleton,” in International Symposium on Wearable Robotics, (Cham: Springer), 44–48. doi: 10.1007/978-3-030-01887-0_9

Qiuyang, Q., Nam, C., Guo, Z., Huang, Y., Hu, X., Ng, S. C., et al. (2019). Distal versus proximal-an investigation on different supportive strategies by robots for upper limb rehabilitation after stroke: a randomized controlled trial. J. Neuroeng. Rehabil. 16:64.

Raghavan, P. (2007). The nature of hand motor impairment after stroke and its treatment. Curr. Treat. Options Cardiovasc. Med. 9, 221–228. doi: 10.1007/s11936-007-0016-3

Ranzani, R., Lambercy, O., Metzger, J.-C., Califfi, A., Regazzi, S., Dinacci, D., et al. (2020). Neurocognitive robot-assisted rehabilitation of hand function: a randomized control trial on motor recovery in subacute stroke. J. Neuroeng. Rehabil. 17:115.

Ranzani, R., Viggiano, F., Engelbrecht, B., Held, J. P., Lambercy, O., and Gassert, R. (2019). “Method for muscle tone monitoring during robot-assisted therapy of hand function: a proof of concept,” in Proceedings of the IEEE 16th International Conference on Rehabilitation Robotics (ICORR), (Toronto, ON: IEEE), 957–962.

Rodgers, H., Bosomworth, H., Krebs, H. I., van Wijck, F., Howel, D., Wilson, N., et al. (2019). Robot assisted training for the upper limb after stroke (RATULS): a multicentre randomised controlled trial. Lancet 394, 51–62. doi: 10.1016/s0140-6736(19)31055-4

Schneider, E. J., Lannin, N. A., Ada, L., and Schmidt, J. (2016). Increasing the amount of usual rehabilitation improves activity after stroke: a systematic review. J. Physiother. 62, 182–187. doi: 10.1016/j.jphys.2016.08.006

Shah, S. G. S., and Robinson, I. (2006). User involvement in healthcare technology development and assessment. Int. J. Health Care Qual. Assur. 19, 500–515. doi: 10.1108/09526860610687619

Shah, S. G. S., and Robinson, I. (2007). Benefits of and barriers to involving users in medical device technology development and evaluation. Int. J. Technol. Assess. Health Care 23, 131–137. doi: 10.1017/s0266462307051677

Sivan, M., Gallagher, J., Makower, S., Keeling, D., Bhakta, B., O’Connor, R. J., et al. (2014). Home-based computer assisted arm rehabilitation (hCAAR) robotic device for upper limb exercise after stroke: results of a feasibility study in home setting. J. Neuroeng. Rehabil. 11:163. doi: 10.1186/1743-0003-11-163