- 1Electrical and Computer Engineering Department, University of California, Los Angeles, CA, United States

- 2Bioengineering Department, University of California, Los Angeles, CA, United States

- 3California NanoSystems Institute (CNSI), University of California, Los Angeles, CA, United States

- 4Department of Surgery, David Geffen School of Medicine, University of California, Los Angeles, CA, United States

Traditional staining of biological specimens for microscopic imaging entails time-consuming, laborious, and costly procedures, in addition to producing inconsistent labeling and causing irreversible sample damage. In recent years, computational “virtual” staining using deep learning techniques has evolved into a robust and comprehensive application for streamlining the staining process without typical histochemical staining-related drawbacks. Such virtual staining techniques can also be combined with neural networks designed to correct various microscopy aberrations, such as out-of-focus or motion blur artifacts, and improve upon diffraction-limited resolution. Here, we highlight how such methods lead to a host of new opportunities that can significantly improve both sample preparation and imaging in biomedical microscopy.

Introduction

Histochemical staining is an integral part of well-established pathology clinical workflows. Since thin tissue sections are mostly transparent, their features cannot be adequately observed through a standard brightfield microscope without exogenous chromatic staining. Fluorescent probes are another commonly used class of exogenous labels for studying biological specimens; they enable highly specific tracking of sample components (Lichtman and Conchello, 2005) and can be used to monitor, e.g., nuclear dynamics (Kandel et al., 2020) and cellular viability (Hu et al., 2022). However, these labeling processes are time-consuming and laborious, comprising sample fixation, embedding, sectioning, and staining (Alturkistani et al., 2016). Furthermore, staining is not a perfectly repeatable procedure given the variations among human operators/technicians, and therefore the exact distribution and intensity of stains may differ from one staining operation to the next. Exogenous labels are also generally destructive and are associated with phototoxicity and photobleaching (Ounkomol et al., 2018; Jo et al., 2021; He et al., 2022), limiting imaging durations and compromising the integrity of the samples and their labels over time. Moreover, these staining procedures introduce distortions to the tissue that prevent further labeling or molecular analysis on the same regions, which presents a significant limitation in cases where multiple stains are required (Pillar and Ozcan, 2022).

An alternative approach to measuring the features of transparent biological samples is to exploit their inherent optical properties, such as autofluorescence or optical path length, in order to generate a contrast of their constituents. Autofluorescence (Monici, 2005), phase-contrast (Burch and Stock, 1942) and differential interference contrast (DIC) (Lang, 1982) microscopy offer such label-free information. Quantitative phase imaging (QPI) (Majeed et al., 2017; Park et al., 2018; Majeed et al., 2019) techniques, which provide precise phase data at the pixel level, have proven especially useful in biological applications.

Two general obstacles stand in the way of using these modalities for a wider range of biomedical applications: 1) most pathologists or medical experts have no familiarity with these kinds of images and cannot effectively interpret them, and 2) the images lack the subcellular and molecular specificity that exogenous labels provide.

In recent years, largely due to the extraordinary progress in machine learning capabilities, computational staining techniques have emerged as an elegant solution to overcome these issues. Deep learning networks have been built to derive the stain of interest synthetically—whether chromatic or fluorescent—from label-free images (Rivenson et al., 2019a; Rivenson et al., 2019b; Kandel et al., 2020; Bai et al., 2022; Bai et al., 2023).

We believe the rapid expansion of such virtual staining applications and their integration with other microscopy-enhancing network models will invariably lead to transformative opportunities in biomedical imaging.

Virtual staining

Several virtual staining models have already been successfully designed and deployed, encompassing a variety of organ and staining types (Rivenson et al., 2019a; Rivenson et al., 2019b; Rivenson et al., 2020; Zhang et al., 2020; Li et al., 2021; Bai et al., 2022; Pillar and Ozcan, 2022). It has been shown that tissue biopsy images obtained with holographic microscopy or autofluorescence can be used to virtually generate the equivalents of standard histochemical stains using deep learning algorithms. In many cases, the networks involve a supervised form of the conditional generative adversarial network “GAN” (Goodfellow et al., 2020) (Figures 1A,B), which consists of a generator and a discriminator competing in a zero-sum setting. Such virtually stained slides have shown very good fidelity with their histologically stained counterparts when evaluated by pathologists (Pillar and Ozcan, 2022). This virtual staining technique greatly reduces manual labor and the costs associated with customary laboratory preparations of chemically stained tissue.
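The generator/discriminator competition described above can be illustrated with a minimal sketch of the two loss terms. This is not the training code of any cited study. The function names, the non-saturating form of the generator term (a common practical substitute for the strict zero-sum objective), and the pixel-wise L1 term with its weight are all assumptions chosen for illustration.

```python
import math

def bce(prob, target, eps=1e-7):
    """Binary cross-entropy for a single probability in [0, 1]."""
    p = min(max(prob, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))

def mean(values):
    values = list(values)
    return sum(values) / len(values)

def discriminator_loss(d_real, d_fake):
    """Low when real stained patches score near 1 and generated ones near 0."""
    return mean(bce(p, 1.0) for p in d_real) + mean(bce(p, 0.0) for p in d_fake)

def generator_loss(d_fake, fake_pixels, target_pixels, l1_weight=100.0):
    """Adversarial term plus an assumed pixel-wise L1 term for paired data.

    d_fake: discriminator scores on generated (virtually stained) patches.
    fake_pixels / target_pixels: flattened generated and ground-truth images.
    l1_weight: hypothetical weighting, not taken from the source.
    """
    adversarial = mean(bce(p, 1.0) for p in d_fake)  # non-saturating form
    l1 = mean(abs(f - t) for f, t in zip(fake_pixels, target_pixels))
    return adversarial + l1_weight * l1
```

In this sketch the supervised character of the approach shows up in the L1 term, which can only be computed when each label-free input has a registered, chemically stained counterpart.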

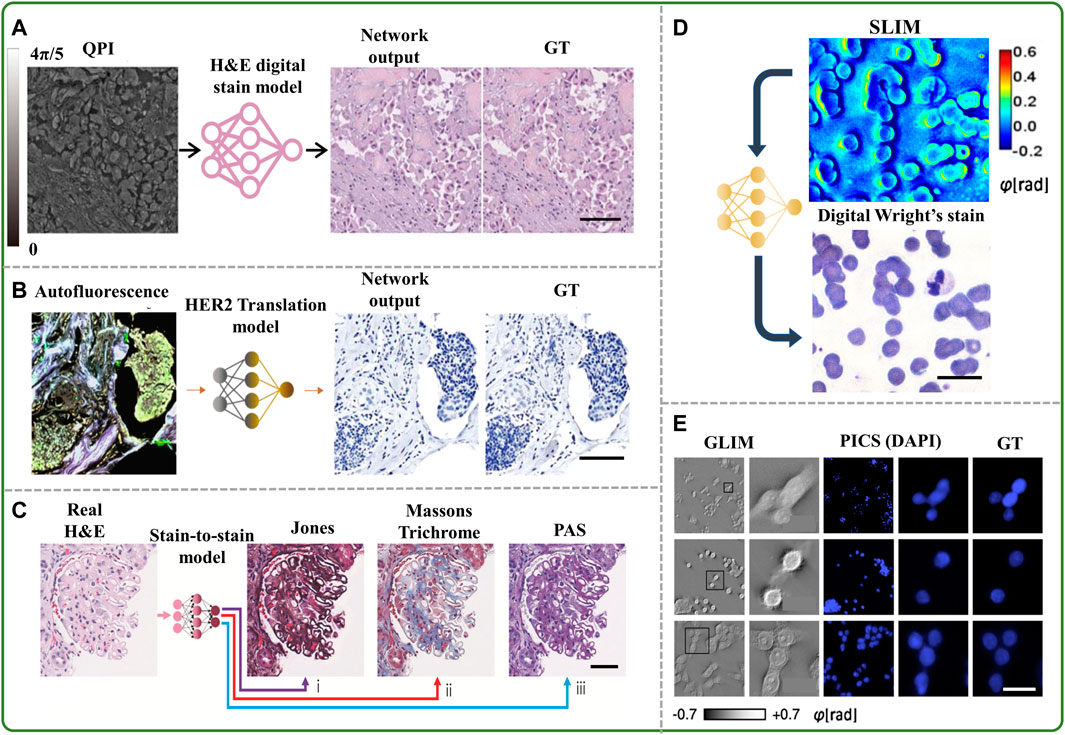

FIGURE 1. (A) Example of virtual staining of a QPI image to digitally generate an H&E brightfield image (Rivenson et al., 2019a), scale bar 50 μm, (B) example of virtual staining of an autofluorescence image to generate a HER2 brightfield image (Bai et al., 2022), scale bar 100 µm, (C) example of inferring special stains from an existing H&E stain (de Haan et al., 2021), scale bar 50 μm, (D) example of virtual staining of a blood smear quantitative phase image to digitally generate Wright’s stain (Fanous et al., 2022), scale bar 25 μm, (E) example of virtual staining of QPI cell images with a DAPI nuclear stain (Kandel et al., 2020), scale bar 25 µm.

Recent advances in this emerging field also include virtual staining of label-free images obtained in vivo (Li et al., 2021) and stain-to-stain transformations, e.g., generating Masson’s trichrome stain from the image of hematoxylin and eosin (H&E) (de Haan et al., 2021) stained tissue, as shown in Figure 1C, and even blending various stains into an intelligent amalgam for diagnostic optimization (Zhang et al., 2020).

Virtual staining has also been used on label-free images of blood smears to artificially generate the Giemsa (Kaza et al., 2022) or Wright’s stain (Fanous et al., 2022) (Figure 1D), which are commonly used to diagnose leukocyte and erythrocyte disorders. Extension of virtual staining to various fluorescent probes has also been developed to specifically detect subcellular structures of interest without the need for fluorescent tags (Ounkomol et al., 2018; Kandel et al., 2020; Jo et al., 2021; He et al., 2022; Hu et al., 2022), with deep neural networks involving mostly U-Net architectures (Kandel et al., 2020). In one such experiment, the growth of the nucleus and cytoplasm of SW480 cells was assessed over many days by applying the computed fluorescence maps back to the corresponding QPI data (Kandel et al., 2020) (Figure 1E).

Another study used virtual staining to generate semantic segmentation maps from computationally inferred fluorescence images in live, unlabeled brain cells that were subsequently utilized to decipher cellular compartments (Kandel et al., 2021). The time-lapse development of hippocampal neurons was further studied using these synthetic fluorescence signals, emphasizing the connections between cellular dry mass generation and the movements of biomolecules inside the nucleus and neurites. This technique allowed for continuous recordings of live samples without deleterious fluorescent elements.
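As a rough illustration of how a computed segmentation or fluorescence map can be applied back to the QPI data, the sketch below integrates dry mass inside a binary mask using the standard QPI relation between phase and dry-mass surface density, σ = λφ / (2πγ). The function name and the default values (wavelength, pixel area, and a refractive increment of about 0.2 µm³/pg) are illustrative assumptions, not parameters reported by the cited studies, and the phase map is assumed to be background-subtracted.

```python
import math

def dry_mass_pg(phase_rad, mask, wavelength_um=0.55,
                pixel_area_um2=0.01, gamma_um3_per_pg=0.2):
    """Dry mass (picograms) of the masked region of a phase image.

    phase_rad: 2D list of phase values in radians (the QPI measurement).
    mask: 2D list of 0/1 values, e.g. a thresholded virtual nuclear stain.
    All keyword defaults are hypothetical example values.
    """
    # Surface density per radian of phase, in pg/µm^2.
    scale = wavelength_um / (2.0 * math.pi * gamma_um3_per_pg)
    total_phase = sum(
        phi
        for phase_row, mask_row in zip(phase_rad, mask)
        for phi, inside in zip(phase_row, mask_row)
        if inside
    )
    return scale * total_phase * pixel_area_um2

phase = [[1.0, 2.0], [0.5, 0.0]]
nucleus_mask = [[1, 0], [1, 0]]
nuclear_mass = dry_mass_pg(phase, nucleus_mask)
```

Repeating this calculation on each frame of a time-lapse, with one mask per compartment, would give per-compartment growth curves of the kind described above.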

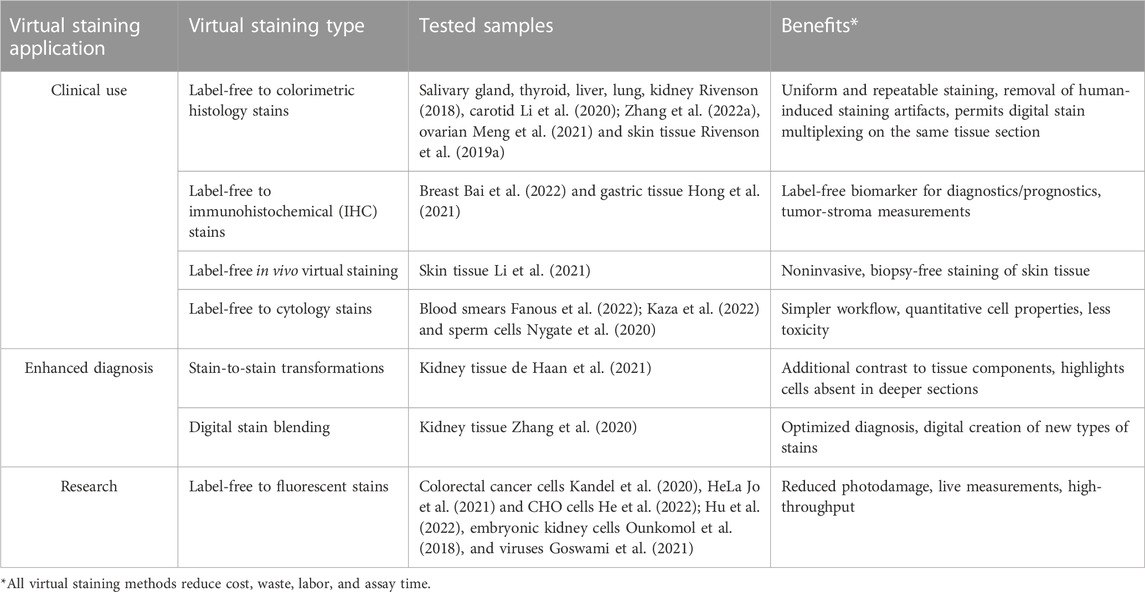

Table 1 provides an overview of some of these virtual staining approaches, including the tested sample types and the specific advantages they offer in addition to the cost, labor, and time savings compared with traditional chemical staining methods.

TABLE 1. Different virtual staining methods with their applications, tested samples and specific added benefits.

Discussion

Over the past century, light microscopy has undergone a remarkable and profound transformation. It has transitioned from being predominantly descriptive and qualitative to becoming a potent tool capable of uncovering novel phenomena and elucidating intricate molecular mechanisms through a synergistic visual and quantitative approach. One key driving factor behind these advancements has been the development of numerous immunohistochemical (IHC) stains that effectively highlight specific epitopes within cells. These IHC stains have significantly enhanced diagnostic capabilities in research and clinical pathology. However, in challenging cases, several IHC stains are often employed, necessitating the use of multiple tissue slides for analysis. This becomes a bottleneck as tissue biopsies are becoming smaller in size, and there is a growing need to harness new technologies that can extract more information from limited tissue samples. Because they are non-destructive, label-free optical modalities, when combined with virtual staining, hold the potential to revolutionize the histology field by enabling multiple stains from a single tissue section. This advancement opens doors for more accurate diagnosis, even when working with relatively small tissue fragments. Furthermore, a notable decrease in required reagents and chemicals, including multiple specific antibodies, can prove highly advantageous for small laboratories that lack the financial means to maintain an ever-expanding inventory of diagnostic antibodies.

The overall processing time for an IHC stain typically spans a couple of days. Nevertheless, certain clinical situations, such as transplanted organs with suspected rejection or rapidly growing tumors, necessitate a significantly expedited pathological report. As for the virtual staining of whole slide images (WSI), the latest cutting-edge techniques can accomplish this process within minutes. Customizing the image acquisition system and digital processing hardware could further accelerate this operation and simplify the whole measurement process. For instance, it has been shown that GANs can be constructed and trained to deblur out-of-focus images with high reliability in frames with axial offsets of up to ±5 µm from the image plane (Luo et al., 2021). To accelerate the tissue imaging process, which often consists of frequent focus adjustments during the scanning of a WSI, cascaded networks have been assembled to first restore the sharpness of defocused images that randomly appear during the slide scanning process, and then digitally perform virtual staining on these autofocused images (Zhang Y. et al., 2022). This two-step tactic enabled by a cascade of autofocusing and virtual staining neural networks is an example of how deep learning can be used to enhance not only the sample preparation and staining processes, but also the measurement, i.e., the image acquisition step.
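The cascade described above amounts to function composition over image tiles. The following is a minimal sketch under stated assumptions: `cascade`, `autofocus_stub`, and `virtual_stain_stub` are hypothetical names, and the two stubs are trivial placeholders standing in for trained networks, not real models.

```python
from typing import Callable, List

Tile = List[List[float]]          # one grayscale image tile
Model = Callable[[Tile], Tile]    # any image-to-image network

def cascade(*stages: Model) -> Model:
    """Compose image-to-image models so that each stage feeds the next."""
    def run(tile: Tile) -> Tile:
        for stage in stages:
            tile = stage(tile)
        return tile
    return run

# Hypothetical stand-ins for trained networks.
def autofocus_stub(tile: Tile) -> Tile:
    """Placeholder for a deblurring network; returns a copy of the tile."""
    return [row[:] for row in tile]

def virtual_stain_stub(tile: Tile) -> Tile:
    """Placeholder for a staining network; inverts intensities in [0, 1]."""
    return [[1.0 - value for value in row] for row in tile]

# Autofocus first, then stain, applied tile by tile across a slide.
pipeline = cascade(autofocus_stub, virtual_stain_stub)
stained_tiles = [pipeline(tile) for tile in [[[0.0, 0.25]], [[1.0, 0.5]]]]
```

Keeping the stages as separate callables means a different first stage, for example a motion-deblurring model, could be swapped in without touching the staining stage.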

Similarly, digital staining could potentially be coupled with the recently devised motion-blur reconstruction method named GANscan (Fanous and Popescu, 2022; Rivenson and Ozcan, 2022). This technique scans tissue slides in a continuous manner at 30 times the speed of traditional microscopy scanning, and subsequently corrects for the speed-induced motion blur using a network trained as a GAN. If the inputs are images generated by a label-free contrast mechanism such as QPI or autofluorescence, the outputs of the model could thereafter be digitally stained. This, again, could constitute a cascaded neural network architecture, first removing the blur caused by rapid scanning of the tissue sample, and then virtually staining the deblurred images, transforming label-free endogenous contrast into the desired stain.

Another deep learning operation that can be advantageously paired with virtual staining is the enhancement of spatial resolution. It has been shown that deep learning models can be trained to convert diffraction-limited confocal microscopy images into super-resolved stimulated emission depletion (STED) microscopy equivalent images (Wang et al., 2019). To our knowledge, a concept that has not yet been realized is achieving super-resolved quantitative phase imaging through the supervised learning of fluorescent-to-phase modalities, flipping the typical direction of transformation using labeled samples. Coupling such a virtual super-resolution network with digital staining could, in principle, allow one to obtain super-resolution brightfield H&E images from ordinary label-free QPI acquisitions.

Overall, virtually transforming one imaging modality into another, along with advances in deep learning tools, has been a boon to many meaningful microscopy innovations in recent years. There remain multiple circumstances in which such cross-modality image transformations are still unexplored or may benefit from further research.

Models may be designed to fix the various imperfections of a sample, whether optical or physical, and the corrected images could thereafter be virtually stained. In such a cascade, a first GAN would handle artifact reconstructions/corrections, and then the stain of choice would be digitally rendered. It is also worth noting that implementing a system that enables rapid and consistent imaging, correction, and virtual staining of tissue samples would significantly enhance stain uniformity/repeatability. This is particularly crucial considering the lab-based biases present in extensive and reputable databases, such as the digital image collection of The Cancer Genome Atlas (TCGA) (Dehkharghanian et al., 2023).

Such strategies face two cardinal challenges. First, a copious amount of data is required for acceptable results: enough instances need to be included to handle the various anomalies and differences of each case. Second, as this is primarily a supervised learning approach, the image pairs need to be very well registered, which might be tedious and require manual inspection and quality assurance during the training data preparation (a one-time effort).
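To make the registration requirement concrete, the sketch below estimates a coarse integer translation between a label-free image and its stained counterpart by exhaustive search over small shifts. It is only a toy illustration: the function names and the `max_shift` parameter are assumptions, both images are assumed to be single-channel with comparable intensities, and accurate training pairs may also need sub-pixel or elastic alignment that this sketch does not attempt.

```python
def shift_score(ref, mov, dy, dx):
    """Mean squared difference over the overlap when mov is shifted by (dy, dx)."""
    height, width = len(ref), len(ref[0])
    total, count = 0.0, 0
    for y in range(max(0, dy), min(height, height + dy)):
        for x in range(max(0, dx), min(width, width + dx)):
            diff = ref[y][x] - mov[y - dy][x - dx]
            total += diff * diff
            count += 1
    return total / count if count else float("inf")

def estimate_shift(ref, mov, max_shift=3):
    """Return the (dy, dx) for which ref[y][x] best matches mov[y - dy][x - dx]."""
    candidates = [
        (dy, dx)
        for dy in range(-max_shift, max_shift + 1)
        for dx in range(-max_shift, max_shift + 1)
    ]
    return min(candidates, key=lambda shift: shift_score(ref, mov, *shift))
```

A residual score that stays high at the best shift is one simple signal that a pair needs manual inspection before it enters the training set.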

Conclusion

Virtual staining has demonstrated powerful capabilities using various modes of microscopy and will likely be adopted in a growing number of bioimaging scenarios, steadily modernizing the field. The ability of virtual staining to accurately highlight tissue morphology while conserving tissue, reducing costs, and expediting turnaround time has the potential to revolutionize traditional histopathology workflows. However, for a truly disruptive virtual staining-based digitization of the well-established branches and subspecialties of pathology to occur, the technologies spanning both ends of the histological process (from sample acquisition to physician examination) need to be not only highly ergonomic, comprehensive and consistent, but also affordable and compatible with different forms of microscopy and slide scanner devices that are commercially available.

Data availability statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Author contributions

All authors listed have made a substantial, direct, and intellectual contribution to the work and approved it for publication.

Acknowledgments

AO Lab acknowledges the support of NIH P41, The National Center for Interventional Biophotonic Technologies.

Conflict of interest

AO is the co-founder of a company (Pictor Labs) that commercializes virtual staining technologies.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Alturkistani, H. A., Tashkandi, F. M., and Mohammedsaleh, Z. M. (2016). Histological stains: A literature review and case study. Glob. J. health Sci. 8, 72. doi:10.5539/gjhs.v8n3p72

Bai, B., Wang, H., Li, Y., de Haan, K., Colonnese, F., Wan, Y., et al. (2022). Label-free virtual HER2 immunohistochemical staining of breast tissue using deep learning. BME Front. 2022. doi:10.34133/2022/9786242

Bai, B., Yang, X., Li, Y., Zhang, Y., Pillar, N., and Ozcan, A. (2023). Deep learning-enabled virtual histological staining of biological samples. Light Sci. Appl. 12, 57. doi:10.1038/s41377-023-01104-7

Burch, C., and Stock, (1942). Phase-contrast microscopy. J. Sci. Instrum. 19, 71–75. doi:10.1088/0950-7671/19/5/302

de Haan, K., Zhang, Y., Zuckerman, J. E., Liu, T., Sisk, A. E., Diaz, M. F. P., et al. (2021). Deep learning-based transformation of H&E stained tissues into special stains. Nat. Commun. 12, 4884–4913. doi:10.1038/s41467-021-25221-2

Dehkharghanian, T., Bidgoli, A. A., Riasatian, A., Mazaheri, P., Campbell, C. J. V., Pantanowitz, L., et al. (2023). Biased data, biased AI: Deep networks predict the acquisition site of TCGA images. Diagn. Pathol. 18, 67–12. doi:10.1186/s13000-023-01355-3

Fanous, M. J., He, S., Sengupta, S., Tangella, K., Sobh, N., Anastasio, M. A., et al. (2022). White blood cell detection, classification and analysis using phase imaging with computational specificity (PICS). Sci. Rep. 12, 20043–20110. doi:10.1038/s41598-022-21250-z

Fanous, M. J., and Popescu, G. (2022). GANscan: Continuous scanning microscopy using deep learning deblurring. Light Sci. Appl. 11, 265. doi:10.1038/s41377-022-00952-z

Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., et al. (2020). Generative adversarial networks. Commun. ACM 63, 139–144. doi:10.1145/3422622

Goswami, N., He, Y. R., Deng, Y. H., Oh, C., Sobh, N., Valera, E., et al. (2021). Label-free SARS-CoV-2 detection and classification using phase imaging with computational specificity. Light Sci. Appl. 10, 176. doi:10.1038/s41377-021-00620-8

He, Y. R., He, S., Kandel, M. E., Lee, Y. J., Hu, C., Sobh, N., et al. (2022). Cell cycle stage classification using phase imaging with computational specificity. ACS photonics 9, 1264–1273. doi:10.1021/acsphotonics.1c01779

Hong, Y., Heo, Y. J., Kim, B., Lee, D., Ahn, S., Ha, S. Y., et al. (2021). Deep learning-based virtual cytokeratin staining of gastric carcinomas to measure tumor–stroma ratio. Sci. Rep. 11, 19255. doi:10.1038/s41598-021-98857-1

Hu, C., He, S., Lee, Y. J., He, Y., Kong, E. M., Li, H., et al. (2022). Live-dead assay on unlabeled cells using phase imaging with computational specificity. Nat. Commun. 13, 713. doi:10.1038/s41467-022-28214-x

Jo, Y., Cho, H., Park, W. S., Kim, G., Ryu, D., Kim, Y. S., et al. (2021). Label-free multiplexed microtomography of endogenous subcellular dynamics using generalizable deep learning. Nat. Cell Biol. 23, 1329–1337. doi:10.1038/s41556-021-00802-x

Kandel, M. E., He, Y. R., Lee, Y. J., Chen, T. H. Y., Sullivan, K. M., Aydin, O., et al. (2020). Phase Imaging with Computational Specificity (PICS) for measuring dry mass changes in sub-cellular compartments. Nat. Commun. 11, 6256–6310. doi:10.1038/s41467-020-20062-x

Kandel, M. E., Kim, E., Lee, Y. J., Tracy, G., Chung, H. J., and Popescu, G. (2021). Multiscale assay of unlabeled neurite dynamics using phase imaging with computational specificity. ACS sensors 6, 1864–1874. doi:10.1021/acssensors.1c00100

Kaza, N., Ojaghi, A., and Robles, F. E. (2022). Virtual staining, segmentation, and classification of blood smears for label-free hematology analysis. BME Front. 2022. doi:10.34133/2022/9853606

Li, D., Hui, H., Zhang, Y., Tong, W., Tian, F., Yang, X., et al. (2020). Deep learning for virtual histological staining of bright-field microscopic images of unlabeled carotid artery tissue. Mol. imaging Biol. 22, 1301–1309. doi:10.1007/s11307-020-01508-6

Li, J., Garfinkel, J., Zhang, X., Wu, D., Zhang, Y., de Haan, K., et al. (2021). Biopsy-free in vivo virtual histology of skin using deep learning. Light Sci. Appl. 10, 233–322. doi:10.1038/s41377-021-00674-8

Lichtman, J. W., and Conchello, J.-A. (2005). Fluorescence microscopy. Nat. methods 2, 910–919. doi:10.1038/nmeth817

Luo, Y., Huang, L., Rivenson, Y., and Ozcan, A. (2021). Single-shot autofocusing of microscopy images using deep learning. ACS Photonics 8, 625–638. doi:10.1021/acsphotonics.0c01774

Majeed, H., Keikhosravi, A., Kandel, M. E., Nguyen, T. H., Liu, Y., Kajdacsy-Balla, A., et al. (2019). Quantitative histopathology of stained tissues using color spatial light interference microscopy (cSLIM). Sci. Rep. 9, 14679–14714. doi:10.1038/s41598-019-50143-x

Majeed, H., Sridharan, S., Mir, M., Ma, L., Min, E., Jung, W., et al. (2017). Quantitative phase imaging for medical diagnosis. J. Biophot. 10, 177–205. doi:10.1002/jbio.201600113

Meng, X., Li, X., and Wang, X. (2021). A computationally virtual histological staining method to ovarian cancer tissue by deep generative adversarial networks. Comput. Math. Methods Med. 2021, 1–12. doi:10.1155/2021/4244157

Monici, M. (2005). Cell and tissue autofluorescence research and diagnostic applications. Biotechnol. Annu. Rev. 11, 227–256. doi:10.1016/S1387-2656(05)11007-2

Nygate, Y. N., Levi, M., Mirsky, S. K., Turko, N. A., Rubin, M., Barnea, I., et al. (2020). Holographic virtual staining of individual biological cells. Proc. Natl. Acad. Sci. 117, 9223–9231. doi:10.1073/pnas.1919569117

Ounkomol, C., Seshamani, S., Maleckar, M. M., Collman, F., and Johnson, G. R. (2018). Label-free prediction of three-dimensional fluorescence images from transmitted-light microscopy. Nat. methods 15, 917–920. doi:10.1038/s41592-018-0111-2

Park, Y., Depeursinge, C., and Popescu, G. (2018). Quantitative phase imaging in biomedicine. Nat. Photonics 12, 578–589. doi:10.1038/s41566-018-0253-x

Pillar, N., and Ozcan, A. (2022). Virtual tissue staining in pathology using machine learning. Expert Rev. Mol. Diagnostics 22, 987–989. doi:10.1080/14737159.2022.2153040

Rivenson, Y., de Haan, K., Wallace, W. D., and Ozcan, A. (2020). Emerging advances to transform histopathology using virtual staining. BME Front. 2020. doi:10.34133/2020/9647163

Rivenson, Y. (2018). Deep learning-based virtual histology staining using auto-fluorescence of label-free tissue. arXiv preprint arXiv:1803.11293.

Rivenson, Y., Liu, T., Wei, Z., Zhang, Y., de Haan, K., and Ozcan, A. (2019a). PhaseStain: The digital staining of label-free quantitative phase microscopy images using deep learning. Light Sci. Appl. 8, 23–11. doi:10.1038/s41377-019-0129-y

Rivenson, Y., and Ozcan, A. (2022). Deep learning accelerates whole slide imaging for next-generation digital pathology applications. Light Sci. Appl. 11, 300–303. doi:10.1038/s41377-022-00999-y

Rivenson, Y., Wang, H., Wei, Z., de Haan, K., Zhang, Y., Wu, Y., et al. (2019b). Virtual histological staining of unlabelled tissue-autofluorescence images via deep learning. Nat. Biomed. Eng. 3, 466–477. doi:10.1038/s41551-019-0362-y

Wang, H., Rivenson, Y., Jin, Y., Wei, Z., Gao, R., Günaydın, H., et al. (2019). Deep learning enables cross-modality super-resolution in fluorescence microscopy. Nat. methods 16, 103–110. doi:10.1038/s41592-018-0239-0

Zhang, G., Ning, B., Hui, H., Yu, T., Yang, X., Zhang, H., et al. (2022a). Image-to-images translation for multiple virtual histological staining of unlabeled human carotid atherosclerotic tissue. Mol. Imaging Biol. 24, 31–41. doi:10.1007/s11307-021-01641-w

Zhang, Y., de Haan, K., Rivenson, Y., Li, J., Delis, A., and Ozcan, A. (2020). Digital synthesis of histological stains using micro-structured and multiplexed virtual staining of label-free tissue. Light Sci. Appl. 9, 78–13. doi:10.1038/s41377-020-0315-y

Keywords: biomedical microscopy, computational imaging, computational staining, digital staining, virtual staining, quantitative phase imaging, intelligent microscopy, digital pathology

Citation: Fanous MJ, Pillar N and Ozcan A (2023) Digital staining facilitates biomedical microscopy. Front. Bioinform. 3:1243663. doi: 10.3389/fbinf.2023.1243663

Received: 21 June 2023; Accepted: 17 July 2023;

Published: 26 July 2023.

Edited by:

Daniel Sage, Ecole Polytechnique Fédérale de Lausanne (EPFL), Switzerland

Reviewed by:

Martin Weigert, Swiss Federal Institute of Technology Lausanne, Switzerland

Copyright © 2023 Fanous, Pillar and Ozcan. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Aydogan Ozcan, ozcan@ucla.edu

†ORCID: Aydogan Ozcan, orcid.org/0000-0002-0717-683X

Michael John Fanous, Nir Pillar and Aydogan Ozcan1,2,3,4*†