- ¹Hannover Medical School, ENT Clinic, Hanover, Germany

- ²Cluster of Excellence “Hearing4all”, Medical School of Hannover, Hanover, Germany

- ³Advanced Bionics GmbH, European Research Center, Hanover, Germany

Objectives: This clinical study investigated the impact of the Naída M hearing system, a novel cochlear implant sound processor with a corresponding hearing aid, on speech perception in various acoustic scenarios. The system features automatic scene classification that combines directional microphones and noise reduction algorithms.

Methods: Speech perception was assessed in 20 cochlear implant (CI) recipients, comprising both bilaterally implanted and bimodal listeners. Participants completed the adaptive matrix sentence test in quiet and in noise. On the Naída M hearing system, the automatic scene classifier (ASC, AutoSense OS 3.0), which involves different microphone settings, was evaluated against the omni-directional microphone. The predecessor hearing system, Naída Q, served as the reference. Furthermore, the automatic focus steering feature (FSF, Speech in 360°) of the Naída M hearing system was compared with the manual FSF of the Naída Q hearing system in a multi-loudspeaker setup.

Results: While both sound processor models yielded comparable outcomes with the omni-directional microphone, the automatic programs of the latest sound processor improved speech perception in noise relative to its predecessor by up to 5 dB (speech reception threshold) or 40% (words correct). Subjective feedback further underscored the positive experience with the newer-generation system in everyday listening scenarios.

Conclusion: The Naída M hearing system features advanced classification systems combined with superior processing capabilities, significantly enhancing speech perception in noisy environments compared to its predecessor, the Naída Q hearing system.

Introduction

Cochlear implants (CI) have proven to be a successful treatment for severe to profound sensorineural hearing loss (Wilson and Dorman, 2008; Rak et al., 2017). While speech intelligibility in quiet can reach up to 100%, understanding speech in noisy surroundings remains difficult (Nelson et al., 2003). In such situations, directional microphones can improve the signal-to-noise ratio (SNR) when speech and noise sources are spatially separated, particularly when speech comes from the front and noise from behind. This technology is well established in hearing aids (HA) (Cord et al., 2002) as well as in CIs (Chung et al., 2006), where it has been found to significantly improve speech perception. The Naída CI Q (Quest) sound processor (Advanced Bionics, Valencia, CA) and the matching Naída Link Q HA (Phonak, Stäfa, Switzerland) offer a monaural adaptive directional microphone, called UltraZoom (Advanced Bionics, 2015), as well as a binaural fixed directional microphone, called StereoZoom (Advanced Bionics, 2016b). Research has consistently shown the advantages of directional microphones over omni-directional (omni) microphones for speech perception. These benefits have been reported across various user groups, including HA-only users (unilateral or bilateral) (Bentler, 2005), CI-only users (Buechner et al., 2014; Advanced Bionics, 2015; Geißler et al., 2015; Ernst et al., 2019; Weissgerber et al., 2019), and bimodal (CI and HA) users (Devocht et al., 2016; Ernst et al., 2019).

Combined with advanced microphone technologies, noise reduction or signal enhancement algorithms have been shown to further improve speech perception (Buechner et al., 2010) and sound quality and to reduce listening effort (Dingemanse et al., 2018). Yet a significant challenge remains: for individuals with hearing impairments, choosing the most suitable program for a given environment can be difficult. Furthermore, during fitting, selecting the optimal combination of microphone type and signal processing algorithm for an individual user is even more intricate, because each acoustic situation has its own demands and requires specific settings.

To address these challenges, automatic scene classification (ASC) systems were integrated into HAs. Prior clinical studies have found improvements in both speech perception and ease of use with these systems (Appleton-Huber, 2015; Wolfe et al., 2017; Rodrigues and Liebe, 2018; Searchfield et al., 2018), including in CI users (Mauger et al., 2014; Eichenauer et al., 2021). These studies typically compared the performance of ASCs with manual program switching in different listening scenarios.

The latest-generation sound processor from Advanced Bionics, the Naída CI M (Marvel), and its complementary HA from Phonak, the Naída Link M, use the same ASC system, known as AutoSense OS. While the previous generation's ASC, AutoSound OS (Advanced Bionics, 2015), differentiated mainly between two listening environments, “calm situation” and “speech in noise,” AutoSense OS introduces five more: speech in loud noise, speech in car, comfort in noise, comfort in echo, and music (Advanced Bionics, 2021a). The system continuously assesses the listening environment and, when it detects a new scenario, blends the parameters gradually toward the new settings, avoiding abrupt changes that might startle users.
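The gradual transition described above can be pictured as a crossfade between the parameter sets of the old and the new class. The sketch below is hypothetical: the parameter names (`beam_strength`, `noise_reduction_db`), the linear ramp, and the number of steps are illustrative assumptions, not the AutoSense OS implementation.

```python
from typing import Dict, Iterator

Params = Dict[str, float]

def blend_parameters(old: Params, new: Params, n_steps: int) -> Iterator[Params]:
    """Yield intermediate parameter sets moving linearly from `old` to `new`.

    Hypothetical sketch: a linear ramp over `n_steps` processing frames is an
    assumption, not the documented AutoSense OS transition rule.
    """
    if n_steps < 1:
        raise ValueError("n_steps must be at least 1")
    for step in range(1, n_steps + 1):
        w = step / n_steps  # blending weight rises from 1/n_steps to 1
        yield {key: (1 - w) * old[key] + w * new[key] for key in old}

# Hypothetical parameter sets for a "calm situation" and a "speech in noise" class
calm = {"beam_strength": 0.0, "noise_reduction_db": 0.0}
speech_in_noise = {"beam_strength": 1.0, "noise_reduction_db": 10.0}

for frame in blend_parameters(calm, speech_in_noise, n_steps=4):
    print(frame)
```

A linear ramp is the simplest choice; a real system might use a different transition curve or duration for each parameter.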

The ASC's seven classes encompass diverse microphone settings, such as T-Mic (Frohne-Büchner et al., 2004; Gifford and Revit, 2010; Kolberg et al., 2015; Advanced Bionics, 2021b), real-ear-sound (RES) (Chen et al., 2015; Advanced Bionics, 2021b), and directional microphones (Advanced Bionics, 2015, 2016b), as well as algorithms targeting wind noise, reverberation (Eichenauer et al., 2021), or transient noise (Dyballa et al., 2015; Stronks et al., 2021). In more specific noisy environments where speech originates from non-frontal directions, the focus steering feature (FSF) can optimize the SNR to improve speech perception. While the Naída Q system requires manual direction switching, the Naída M system automates this process. More specifically, the Naída Q system introduced an FSF named “ZoomControl” that enables users of two hearing devices to actively choose the direction of auditory focus (Advanced Bionics, 2014). For a front or back focus, both devices apply a fixed cardioid pattern oriented toward the specified direction, a form of beamforming. For a left or right focus, however, the audio signal with the favorable SNR from the intended side is relayed to the contralateral hearing device, while the contralateral device's own microphone signal is attenuated. Transmitting the clear signal from the desired side while suppressing input from the other side effectively mitigates the head-shadow effect, resulting in improved speech perception (Advanced Bionics, 2016a; Holtmann et al., 2020).
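The two ZoomControl modes described above can be sketched as follows, assuming an ideal first-order cardioid for the front/back focus and a simple mix for the left/right focus. The function names and the 20 dB attenuation of the contralateral microphone are hypothetical illustrations, not documented ZoomControl parameters.

```python
import math
from typing import List, Tuple

def cardioid_gain(source_deg: float, focus_deg: float) -> float:
    """Ideal first-order cardioid: gain 1 toward focus_deg, 0 from the opposite side."""
    return 0.5 * (1.0 + math.cos(math.radians(source_deg - focus_deg)))

def lateral_focus(left_mic: List[float], right_mic: List[float], focus: str,
                  contra_atten_db: float = 20.0) -> Tuple[List[float], List[float]]:
    """Hypothetical left/right focus.

    The device on the target side keeps its own microphone signal; that signal is
    also streamed to the other device, whose own microphone is attenuated by
    contra_atten_db (an assumed value). Returns (left_output, right_output).
    """
    g = 10 ** (-contra_atten_db / 20)  # convert dB attenuation to a linear gain
    if focus == "left":
        right_out = [t + g * own for t, own in zip(left_mic, right_mic)]
        return list(left_mic), right_out
    if focus == "right":
        left_out = [t + g * own for t, own in zip(right_mic, left_mic)]
        return left_out, list(right_mic)
    raise ValueError("focus must be 'left' or 'right'")

# A front focus passes frontal sound fully and rejects sound from directly behind
print(cardioid_gain(0, 0), cardioid_gain(180, 0))
```

The lateral mode does not shape a beam at all; it relies on the target-side microphone already having the better SNR because of the head shadow.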

In contrast, the Naída M system features a fully automatic FSF, termed “AutoZoomControl,” which switches to the target direction based on an analysis of speech modulations from all four primary directions (Phonak, 2011).
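As a rough illustration of direction selection by speech modulation, the sketch below scores a signal envelope for each of the four directions by its modulation index (envelope standard deviation divided by its mean) and picks the most strongly modulated one. This measure, the function names, and the envelope inputs are assumptions; the actual AutoZoomControl analysis is not described in the text.

```python
import statistics
from typing import Dict, Sequence

def modulation_index(envelope: Sequence[float]) -> float:
    """Envelope standard deviation divided by its mean (0 for a flat envelope)."""
    mean = statistics.fmean(envelope)
    return statistics.pstdev(envelope) / mean if mean > 0 else 0.0

def choose_focus(envelopes: Dict[str, Sequence[float]]) -> str:
    """Return the direction whose envelope is most strongly modulated."""
    return max(envelopes, key=lambda direction: modulation_index(envelopes[direction]))

# Hypothetical envelopes: steady noise everywhere, speech-like fluctuation on the left
envelopes = {
    "front": [1.0, 1.0, 1.0, 1.0],
    "back": [1.0, 1.0, 1.0, 1.0],
    "left": [0.2, 1.8, 0.2, 1.8],
    "right": [0.9, 1.1, 0.9, 1.1],
}
print(choose_focus(envelopes))  # left
```

The intuition is that speech envelopes fluctuate more strongly than steady noise; a practical detector would likely also need temporal smoothing so that the focus does not jump between directions.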

In this study, speech perception with the ASC and FSF algorithms was compared with the omni microphone setting in both the Naída Q and the Naída M systems. In addition, subjective feedback on speech perception, sound quality, and ease of use was collected via questionnaires.

Materials and methods

Ethics

The study was approved by the local Medical Ethical Committee (Medical University of Hannover) and the German competent authority (BfArM), and it was conducted in accordance with the Declaration of Helsinki and the German Medical Devices Act (Medizinproduktegesetz, MPG). All participants provided written informed consent before taking part in the study and received compensation for travel expenses.

Investigational devices

The new Naída M hearing system (sound processor and hearing aid) was compared with the previous-generation Naída Q hearing system. In both hearing systems, the ASC analyzes the user's local acoustic environment and automatically switches to the most appropriate microphone settings and sound cleaning algorithms. The Naída Q's ASC switches between the omni-directional microphone and the UltraZoom adaptive directional microphone, depending on whether the listening situation is classified as “calm situation” or “speech in noise,” respectively. The Naída M's ASC includes five additional classes: “speech in loud noise,” “speech in car,” “comfort in noise,” “comfort in echo,” and “music.” Switching between these seven classes is based on a machine learning system. For each hearing system, a fixed program with an omni-directional microphone setting and the ASC program were investigated in a calm and in a noisy situation.

The FSF focuses on the speech signal and increases the SNR by amplifying the signal of interest while attenuating the signal arriving from the opposite side. This works in either the front/back or the left/right direction. The Naída Q offers the FSF as a manual switch, with which the user chooses the direction using the processor controls or a remote control, whereas the Naída M steers the focus automatically by analyzing the incoming microphone signals and switching to the appropriate direction. The omni and FSF conditions on the Naída Q were compared with the FSF on the Naída M.

Study design

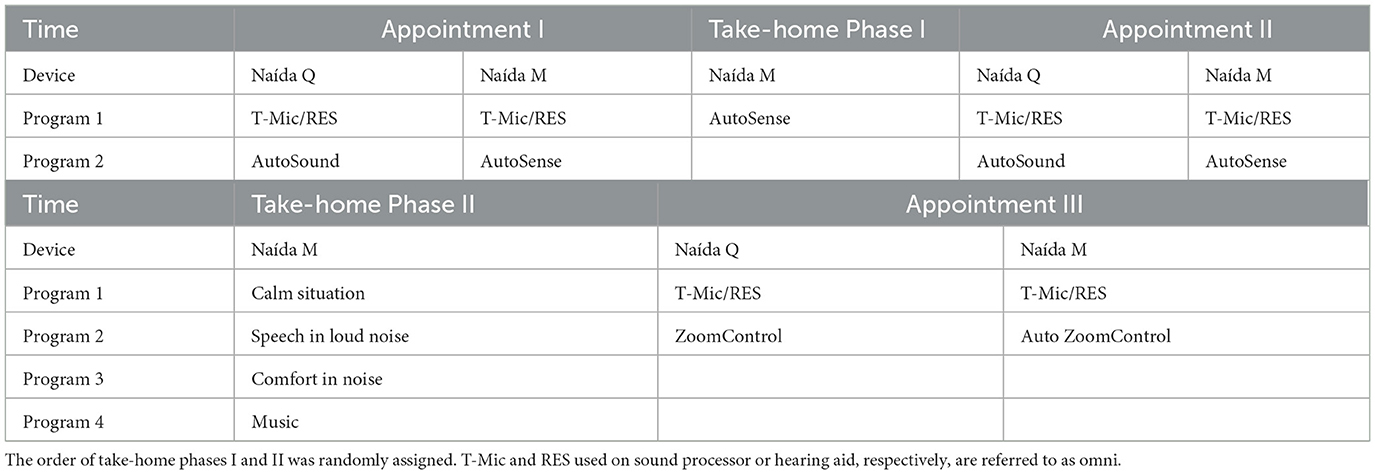

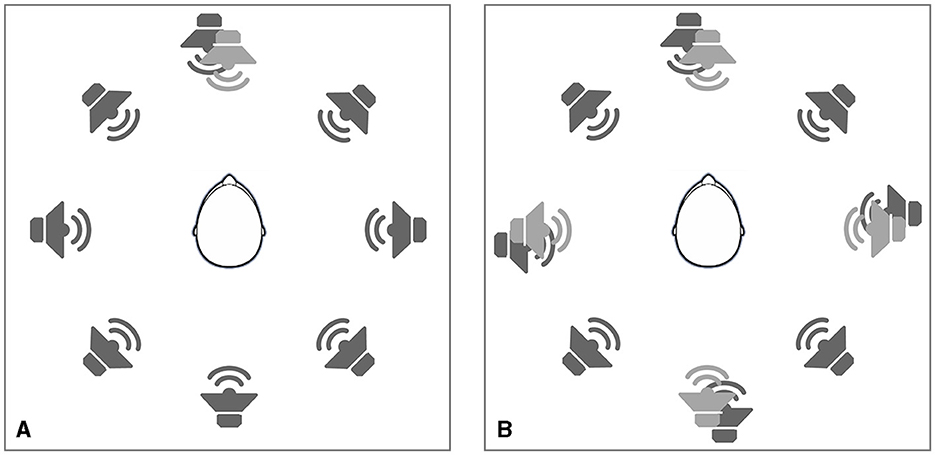

An uncontrolled open study design with within-subject comparisons was used. The participants were invited to take part in three study appointments with two take-home phases of at least 4 weeks between appointments (Figure 1).

Figure 1. Study design showing the timelines, hearing system, program, and test methods. The order of take-home phases I and II was randomly assigned; dotted lines indicate which combinations of sound processor and microphone setting were measured with which method.

During the first appointment, both hearing systems (Naída Q and Naída M) were evaluated with speech perception tests in quiet and in noise, using both the omni settings and the respective ASCs. One group (five bilateral and five bimodal participants, randomly assigned) used only the ASC during the first take-home phase, while the other group used four manual programs. During the second appointment, the speech perception tests in noise were repeated, and participants were switched to the other program configuration (manual or automatic) for their second take-home phase. During the third appointment, speech perception in noise was measured only with the omni setting of the Naída Q hearing system and with the FSF of both hearing systems.

Within any appointment, the order of measurements and the different settings under investigation were randomized.

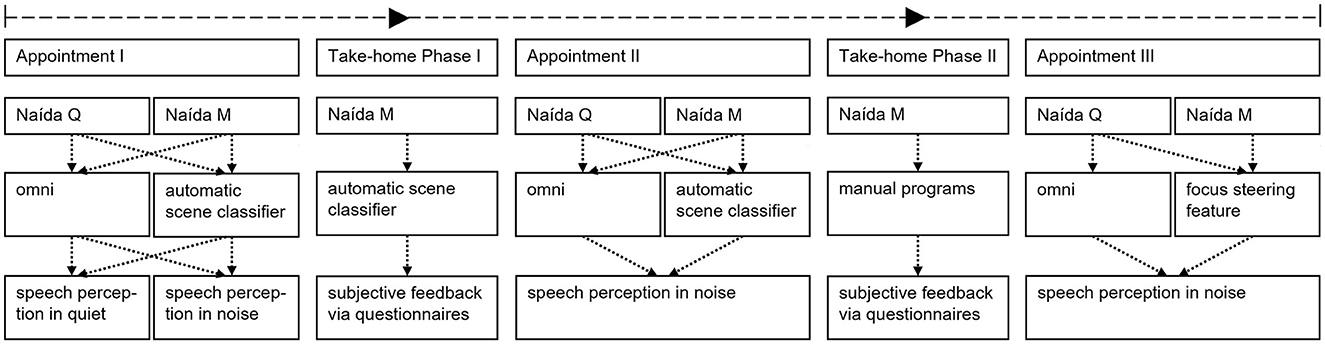

Study setup

In a sound-treated room, participants were positioned at the center of a circle of eight loudspeakers spaced 45° apart, each at a distance of 1.1 m from the center of the participant's head. For the ASC evaluation, the speech material was presented from the front (Figure 2A). For the FSF evaluation, speech was presented from 0°, 90°, 180°, or 270° in random order (Figure 2B). Uncorrelated interfering noise was presented simultaneously from all eight loudspeakers. In Figure 2, light and dark gray loudspeaker symbols indicate the target speech and the interfering noise signals, respectively.

Figure 2. Study setup to evaluate the influence of the automatic scene classifiers (A) and the focus steering features (B) on speech perception. Dark gray indicates loudspeakers presenting noise, light gray indicates loudspeakers presenting the target speech signal. In setup (A), the speech signal was presented from the front. In setup (B), the speech signal was randomly presented from 0°, 90°, 180°, and 270°. All eight loudspeakers presented the interfering noise signal.
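Because the noise signals from the eight loudspeakers are uncorrelated, their levels add in power rather than in amplitude. The text does not state whether the 70 dB SPL (ASC) or 65 dB SPL (FSF) noise level refers to the overall level at the listening position or to each loudspeaker; the sketch below assumes the overall level. It also computes the loudspeaker coordinates for the 1.1 m radius, using an assumed angle convention.

```python
import math
from typing import Iterable, List, Tuple

def loudspeaker_layout(n: int = 8, radius_m: float = 1.1) -> List[Tuple[float, float, float]]:
    """Return (azimuth_deg, x_m, y_m) for n equally spaced loudspeakers.

    Azimuth 0 deg is straight ahead (+x); angles increase counterclockwise
    (an assumed convention, not stated in the study).
    """
    layout = []
    for k in range(n):
        az = k * 360.0 / n
        rad = math.radians(az)
        layout.append((az, radius_m * math.cos(rad), radius_m * math.sin(rad)))
    return layout

def combined_level_db(levels_db: Iterable[float]) -> float:
    """Overall level of mutually uncorrelated sources: their powers add."""
    return 10 * math.log10(sum(10 ** (level / 10) for level in levels_db))

def per_loudspeaker_level_db(total_db: float, n: int) -> float:
    """Level per loudspeaker so that n equal uncorrelated sources sum to total_db."""
    return total_db - 10 * math.log10(n)

layout = loudspeaker_layout()
each = per_loudspeaker_level_db(70.0, 8)  # about 61 dB SPL per loudspeaker
print(round(each, 2), round(combined_level_db([each] * 8), 2))
```

Under the opposite assumption (70 dB SPL from each loudspeaker), the overall noise level would be about 9 dB higher.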

Study group

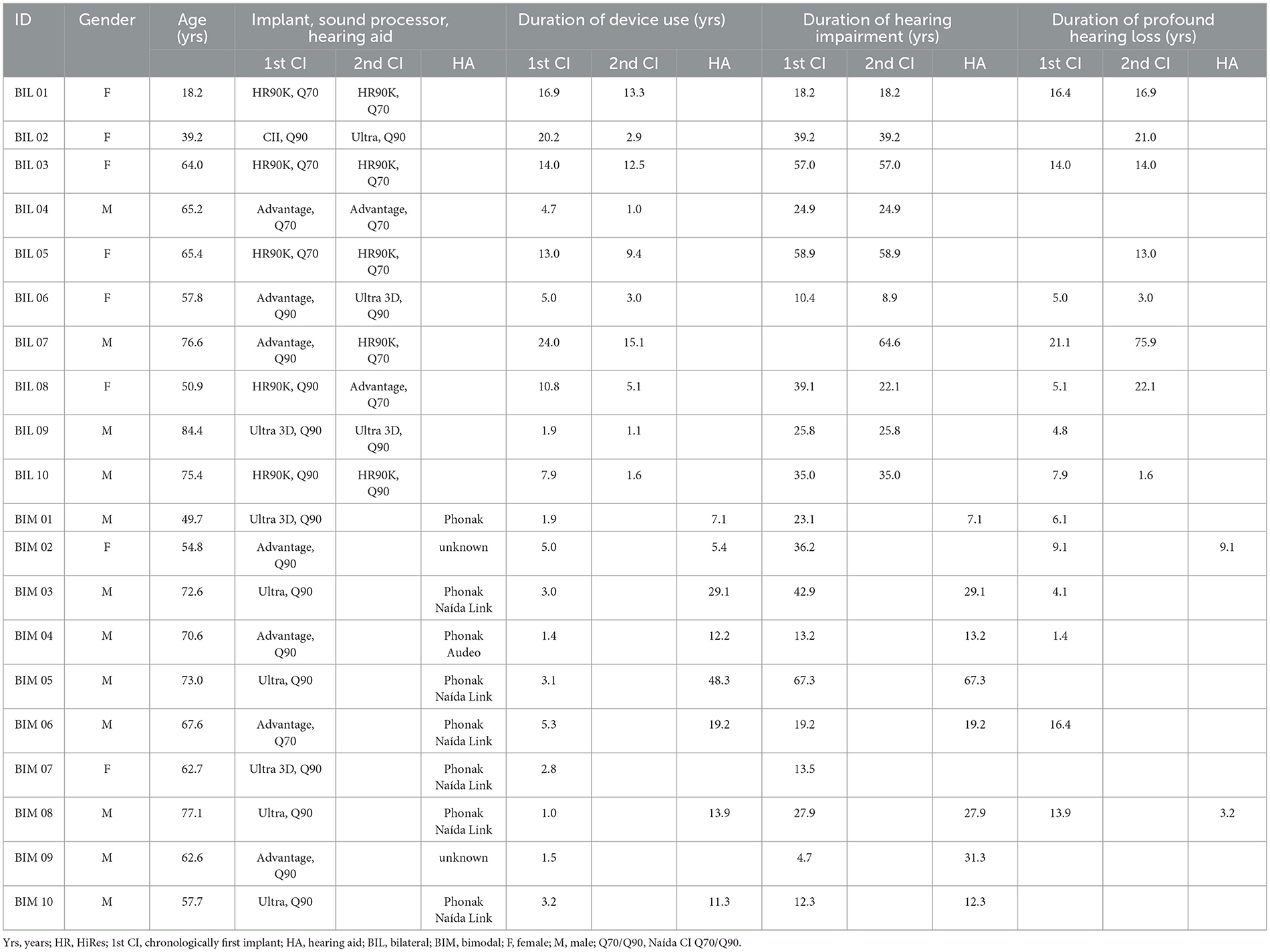

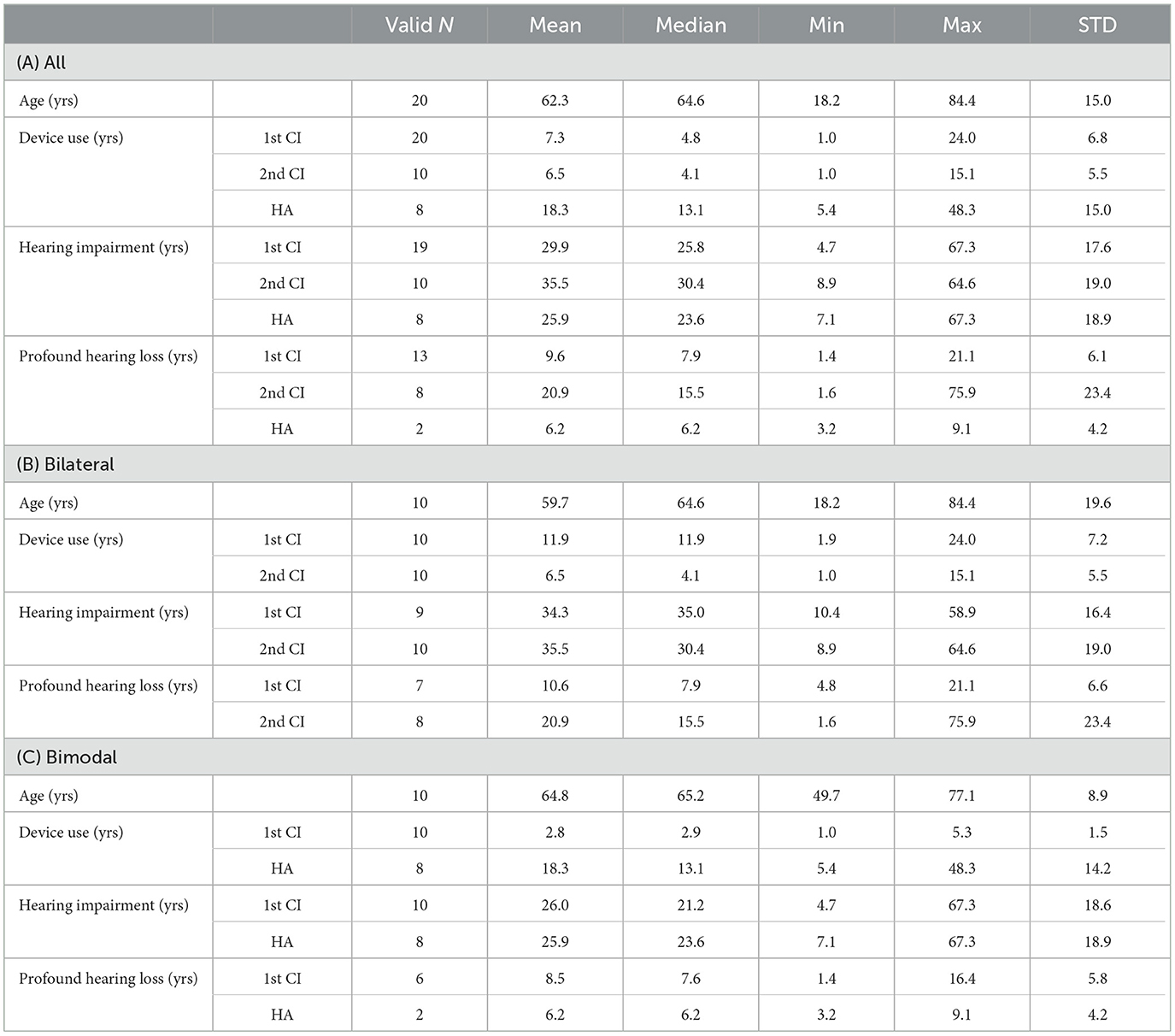

Twenty postlingually deafened CI users (ten bilateral and ten bimodal) participated in this clinical study. One participant dropped out before the last appointment, and two others did not complete the speech perception tests at the last appointment. All participants used an Advanced Bionics implant system (various generations) and a Naída CI Q-Series sound processor (Q70 or Q90) with the HiRes Optima sound coding strategy (Advanced Bionics, 2011). Bimodal listeners used different types of hearing aids. The mean age at enrollment was 62.3 years (range: 18.2–84.4 years), and the mean duration of use of the first CI was 7.3 years (range: 1.0–24.0 years). Detailed demographic data are shown in Table 1.

The bilateral and bimodal subgroups did not differ significantly in age (p = 0.850), duration of hearing impairment (p = 0.307), or duration of profound hearing loss (p = 0.830), but they did differ significantly in duration of first implant use (p = 0.002). Eight participants (three bilateral and five bimodal) reported never switching between programs, one (bimodal) reported switching monthly, four (two bilateral and two bimodal) weekly, and seven (five bilateral and two bimodal) daily. Before the study, 15 participants (seven bilateral and eight bimodal) preferred to use an automatic program, three (two bilateral and one bimodal) preferred not to, and two (one bilateral and one bimodal) had no preference. Group data are shown in Table 2.

Table 2. Group values of demographic data for the entire study group (A) and for the bilateral (B) and bimodal (C) subgroups.

Programming of hearing devices

Each participant's clinical Naída CI Q-Series sound processor program was transferred to the Naída CI Q90 study processor using the SoundWave fitting software. If requested by the participant, minor modifications of the global volume setting were applied. Study programs were then created based on these settings. The omni-directional microphone program was migrated to the Target CI fitting software to create the study programs for the Naída M sound processor. The ClearVoice setting (off, low, medium, or high; Buechner et al., 2010; Ernst et al., 2019) was transferred from the clinical program. SoftVoice (Marcrum et al., 2021) was enabled with the software's default parameter settings.

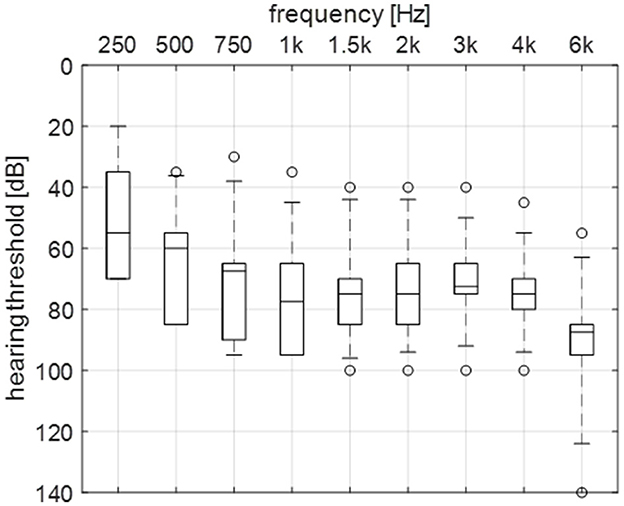

For the bimodal study group, unaided thresholds were measured with the AudiogramDirect function of the Naída Link Q90 hearing aid dedicated to this study at 250 Hz, 500 Hz, 750 Hz, 1 kHz, 1.5 kHz, 2 kHz, 3 kHz, 4 kHz, and 6 kHz (Figure 3).

Figure 3. Unaided hearing thresholds of the non-implanted ear of the bimodal CI user group (N = 10). Line, median; box, quartiles 25 and 75%; circle, outlier; whisker, minimum and maximum.

Based on the audiogram, the hearing aids were fitted with the Adaptive Phonak Digital Bimodal (APDB) fitting formula (Veugen et al., 2016a,b) in the Phonak Target fitting software. The settings were transferred to the Target CI software to program the Naída Link M HA. Table 3 shows the programming of the hearing devices during the appointments for the speech perception measurements and during the take-home phases.

Before the study started, technical measurements in the actual test room confirmed that both automatic features switched correctly to the intended classes or directions.

Speech perception

Speech perception in quiet and in noise was measured using the Oldenburg sentence test (OLSA) (Wagener et al., 1999). Two OLSA lists (20 sentences each) were used for each processing condition, and the results of both lists were averaged to obtain the overall score for each test condition. For the ASC evaluation, the adaptive OLSA was used to determine the speech reception threshold (SRT), that is, the speech level (in quiet) or SNR (in noise) required for 50% correct word recognition. For the measurements in noise, multi-talker babble noise was presented at 70 dB SPL. For the FSF evaluation, a fixed individual SNR was used that yielded 40–60% correct word recognition with the omni setting, and speech-shaped noise (OLnoise) was presented at 65 dB SPL. An introductory sentence spoken by a female voice and lasting around 20 s was presented at a slightly higher level than the target speech signal to allow the focus direction to be set, manually (Naída Q) or automatically (Naída M).
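The adaptive procedure can be illustrated with a simplified simulation. The sketch below lowers the SNR after sentences scored above 50% and raises it after sentences scored below, with a step proportional to the deviation from the target and a step factor that shrinks over the list; it estimates the SRT as the mean SNR of the last ten sentences. The update rule, the assumed psychometric slope of 15%/dB, and the simulated listener (which returns the expected proportion of words correct rather than a random score) are assumptions for illustration, not the published OLSA procedure.

```python
import math
from typing import Callable, List

def logistic_listener(true_srt_db: float, slope: float = 0.15) -> Callable[[float], float]:
    """Simulated listener: expected proportion of words correct at a given SNR.

    Logistic function that passes 50% at true_srt_db with slope `slope`
    (proportion per dB) at that point; no random scoring noise.
    """
    k = 4.0 * slope  # logistic steepness that gives the requested midpoint slope
    return lambda snr_db: 1.0 / (1.0 + math.exp(-k * (snr_db - true_srt_db)))

def adaptive_srt(score: Callable[[float], float], n_sentences: int = 20,
                 start_snr_db: float = 0.0, target: float = 0.5,
                 slope: float = 0.15) -> float:
    """Simplified adaptive track converging on the SNR for `target` proportion correct."""
    snr = start_snr_db
    track: List[float] = []
    for i in range(n_sentences):
        track.append(snr)
        p = score(snr)  # proportion of words correct for this sentence
        step_factor = max(0.5, 1.5 * 0.8 ** i)  # assumed: larger steps early, smaller later
        snr -= step_factor * (p - target) / slope  # above target -> harder (lower SNR)
    last = track[-10:]
    return sum(last) / len(last)  # SRT estimate: mean SNR of the last ten sentences

print(round(adaptive_srt(logistic_listener(-5.0)), 2))
```

With a real listener the word score per sentence is noisy, so the estimate scatters around the true threshold; averaging two lists, as in the study, reduces that scatter.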

Subjective feedback

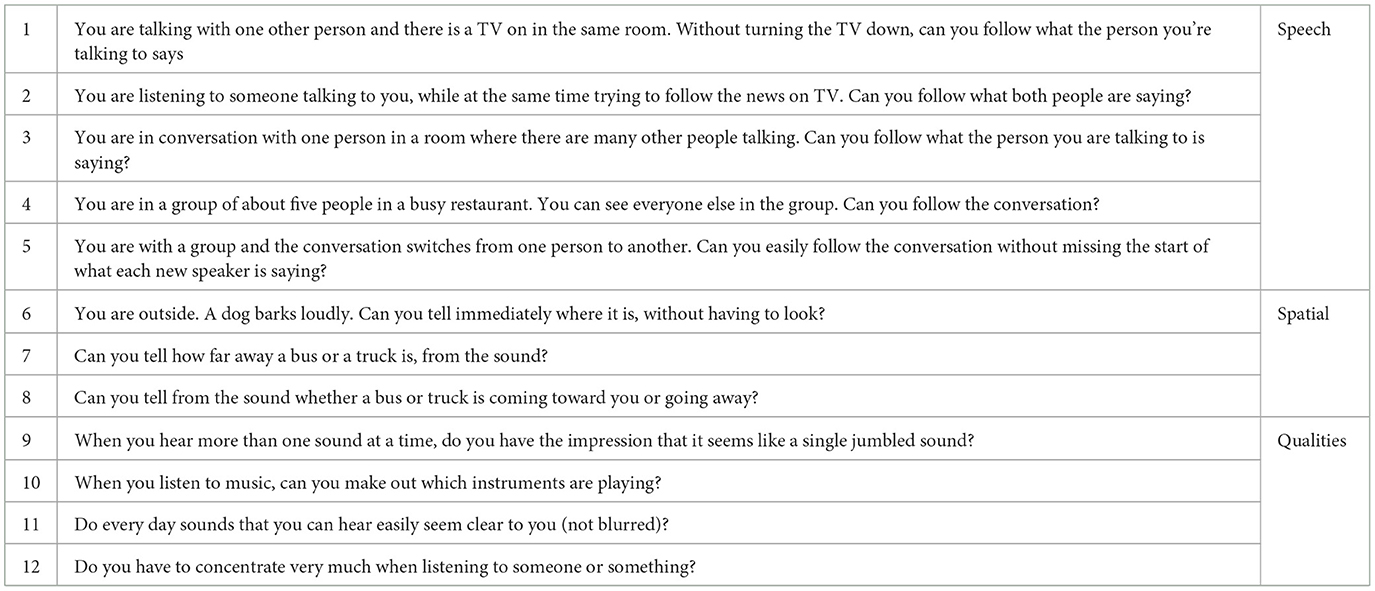

To evaluate the subjective hearing impression with the ASC vs. the manual programs, the SSQ-12 (Noble et al., 2013), a 12-item short version of the Speech, Spatial and Qualities of Hearing Scale (Gatehouse and Noble, 2004), was administered. The individual questions are listed in Table 4.

Table 4. The twelve questions of the SSQ-12 questionnaire and their assignment to the three sections: speech, spatial, and qualities.

Participants were asked to rate their hearing abilities on an 11-point Likert scale from 0 (very poor/strong/mixed) to 10 (very good/weak/not mixed). Ratings were averaged across the respective sections: speech (questions 1–5), spatial (questions 6–8) and quality (questions 9–12). Questionnaires were completed during the first appointment for the Naída Q hearing system and during the take-home phase for the Naída M hearing system.
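The section scoring described above amounts to averaging item ratings within each section. A minimal sketch, assuming complete sets of twelve ratings with no missing or not-applicable items (the function and dictionary names are hypothetical):

```python
from statistics import mean
from typing import Dict, Sequence

# Section assignment described in the text (1-based question numbers)
SSQ12_SECTIONS = {
    "speech": range(1, 6),    # questions 1-5
    "spatial": range(6, 9),   # questions 6-8
    "quality": range(9, 13),  # questions 9-12
}

def ssq12_scores(ratings: Sequence[float]) -> Dict[str, float]:
    """Average 0-10 ratings per SSQ-12 section plus the mean of all twelve items."""
    if len(ratings) != 12:
        raise ValueError("expected exactly 12 ratings")
    if any(not 0 <= r <= 10 for r in ratings):
        raise ValueError("ratings must lie on the 0-10 scale")
    scores = {name: mean(ratings[q - 1] for q in items)
              for name, items in SSQ12_SECTIONS.items()}
    scores["total"] = mean(ratings)
    return scores

print(ssq12_scores([5] * 5 + [8] * 3 + [2] * 4))
```

Note that the total is the mean of all twelve items, not the mean of the three section means, so sections with more questions weigh more.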

At the end of the study, participants were asked to complete a customized device comparison questionnaire. After gaining experience with the Naída M hearing system, they indicated whether it was much better, slightly better, similar, slightly worse, or much worse than the Naída Q hearing system in the following areas:

• Sound quality

• Speech understanding in quiet

• Speech understanding in noise

• Robustness

• Handling

• Battery runtime

• Wearing comfort

• Reliability

• Design/aesthetics

• Telephone connection

• Overall

Statistical analysis

Because the data were not normally distributed and comparisons were made within subjects, the Wilcoxon signed-rank test for paired samples was used, with a Bonferroni correction for multiple comparisons. Differences between groups were assessed using the Mann-Whitney U test. The significance level was set at α = 0.05. All analyses were conducted with STATISTICA version 12 (Dell Inc., 2015).

Because one participant withdrew before the third appointment and two data points were missing from one of the conditions at that appointment in the bimodal group, the statistical analysis was conducted for the entire group rather than separately for the bilateral and bimodal groups, to increase statistical power.

Results

Automatic scene classifiers

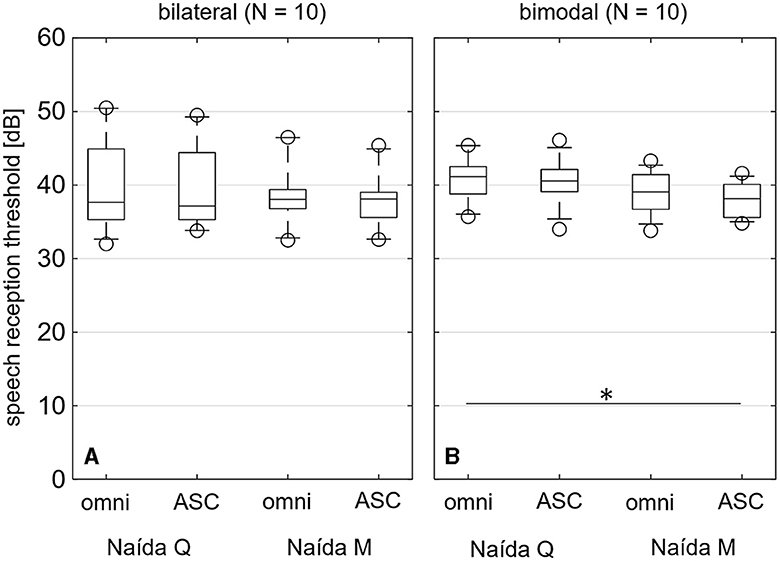

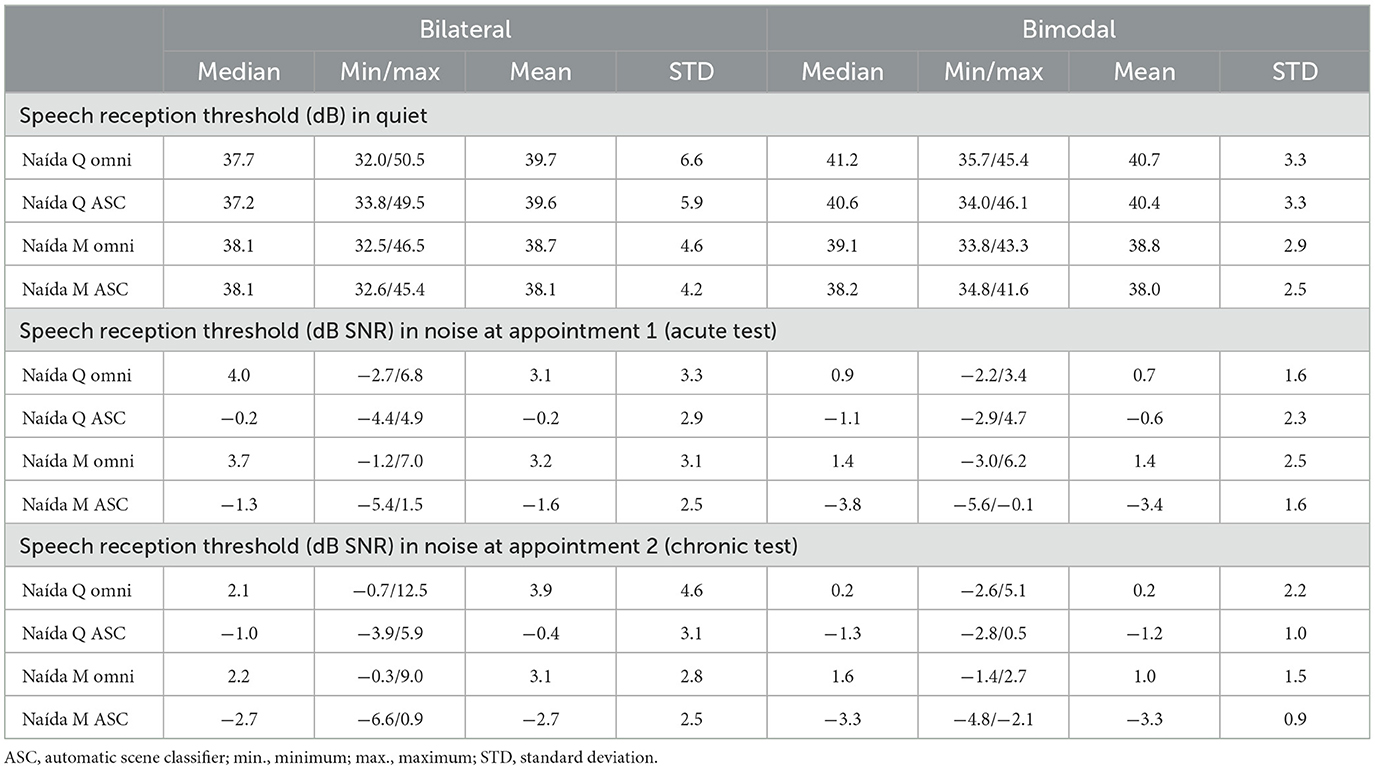

Figure 4 shows the results of the speech perception test in quiet. In bimodal users, the Naída M ASC yielded a significant improvement over the Naída Q omni condition (p = 0.030; Figure 4B). All other comparisons among the four conditions, (i) Naída Q omni, (ii) Naída Q ASC, (iii) Naída M omni, and (iv) Naída M ASC, showed no significant differences for bilateral (Figure 4A) or bimodal (Figure 4B) participants. Median values for the data shown in Figure 4 are listed in Table 5.

Figure 4. Speech perception in quiet measured acutely for the bilateral (A) and bimodal (B) CI user groups. Line, median; box, quartiles 25 and 75%; circle, outlier; whisker, minimum and maximum; * indicates a significant difference with p < 0.05; ASC, automatic scene classifier.

Table 5. Speech perception outcomes in quiet and in noise (acute and chronic) with the four conditions Naída Q omni and ASC and Naída M omni and ASC for the bilateral and bimodal CI user groups.

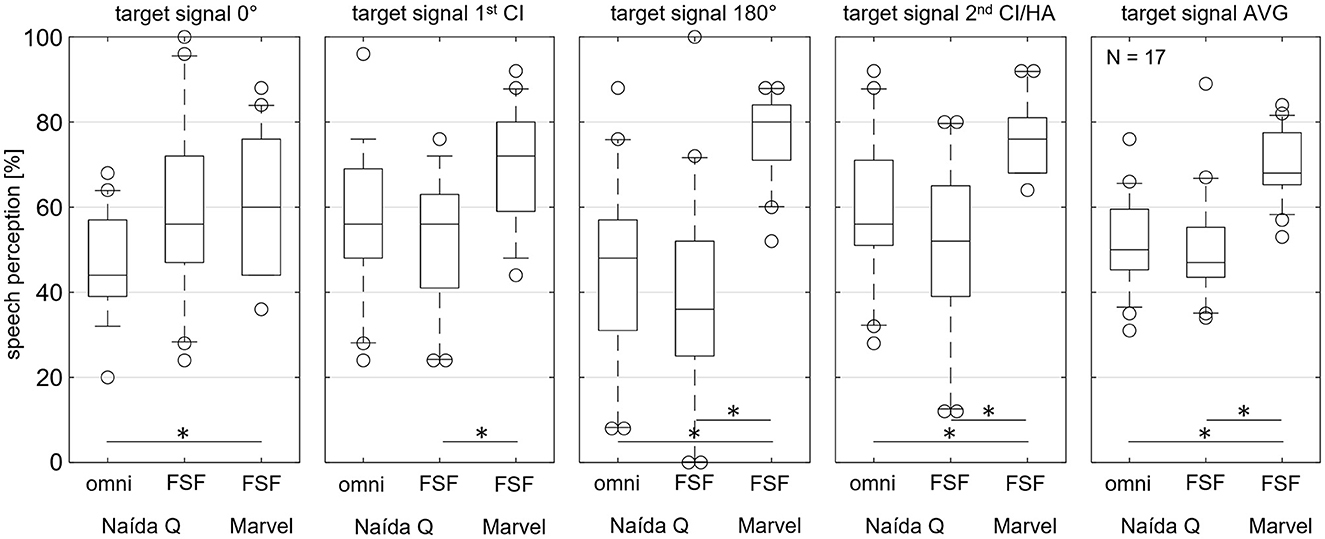

Outcomes for acutely measured speech perception in noise are shown in Figure 5A for the bilateral group and in Figure 5B for the bimodal group. For the bilateral group, the ASC conditions yielded significantly better results than the respective omni conditions, with improvements of 4.2 dB for the Naída Q (p = 0.030) and 5.0 dB for the Naída M (p = 0.030). The Naída M ASC condition also showed a significant improvement of 5.3 dB over the Naída Q omni condition (p = 0.041), and the Naída Q ASC condition provided a significant 3.9 dB improvement over the Naída M omni condition (p = 0.030). For the bimodal group, the Naída M ASC condition outperformed all other conditions, by 4.1 dB (Naída Q omni, p = 0.03), 2.8 dB (Naída Q ASC, p = 0.03), and 4.8 dB (Naída M omni, p = 0.03). No significant differences were found between the Naída Q and Naída M omni conditions in either group. Absolute SRT values are shown in Table 5.

Figure 5. Speech perception in noise measured acutely for the bilateral (A) and bimodal (B) CI user groups. Line, median; box, quartiles 25 and 75%; circle, outlier; whisker, minimum and maximum; * indicates a significant difference with p < 0.05; ASC, automatic scene classifier.

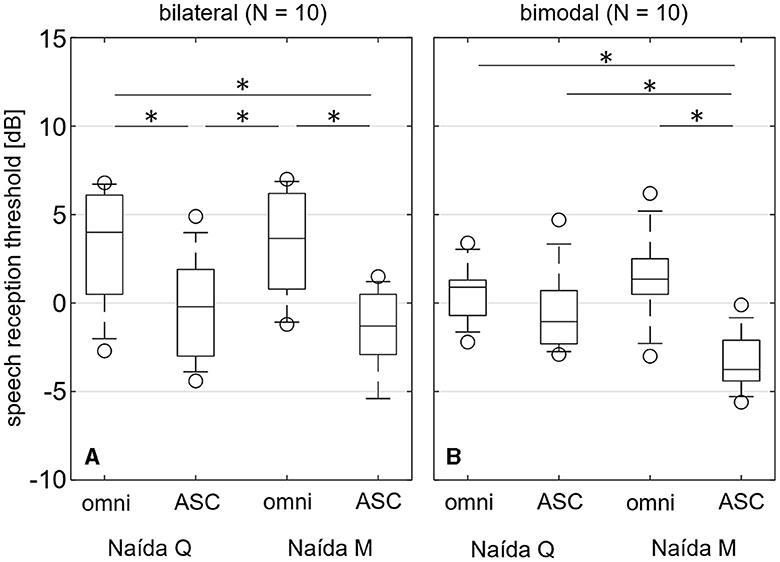

Outcomes of speech perception in noise measured after chronic use of the Naída M hearing system are shown in Figure 6A for the bilateral group and in Figure 6B for the bimodal group. For the bilateral group, the ASC conditions yielded significantly better results than the respective omni conditions, with differences of 3.1 dB for the Naída Q (p = 0.030) and 4.9 dB for the Naída M (p = 0.030). Additionally, the Naída M ASC condition provided a significant 4.8 dB improvement over the Naída Q omni condition (p = 0.030), and the Naída Q ASC condition provided a significant 3.2 dB improvement over the Naída M omni condition (p = 0.030). For the bimodal group, the Naída M ASC condition outperformed the omni conditions by 3.5 dB (Naída Q omni, p = 0.046) and 4.9 dB (Naída M omni, p = 0.046). No significant differences were found between the Naída Q and Naída M omni conditions in either group. Absolute SRT values are shown in Table 5.

Figure 6. Speech perception in noise measured after chronic use at the second appointment for the bilateral (A) and bimodal (B) CI user groups. Line, median; box, quartiles 25 and 75%; circle, outlier; whisker, minimum and maximum; * indicates a significant difference with p < 0.05; ASC, automatic scene classifier.

During the take-home phase between appointments one and two, participants became accustomed to the hearing impression of the new sound processor. For the Naída M ASC condition in the bilateral group, the acute and chronic measurements differed significantly by 1.4 dB (p = 0.007).

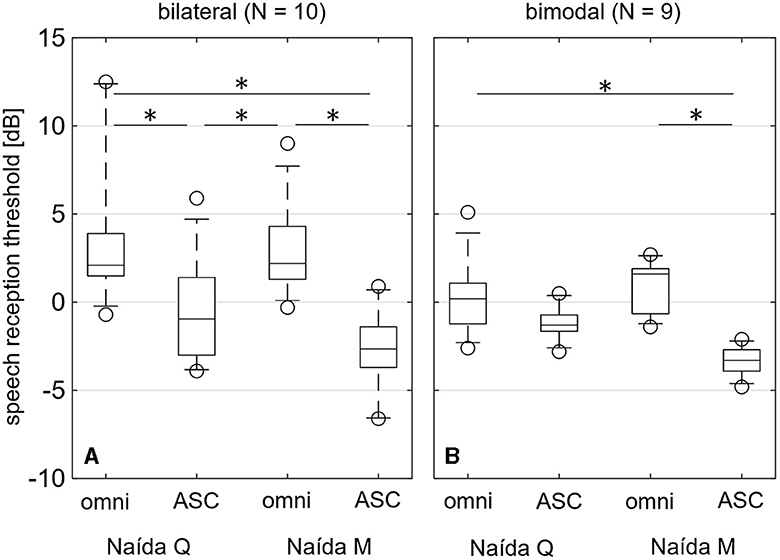

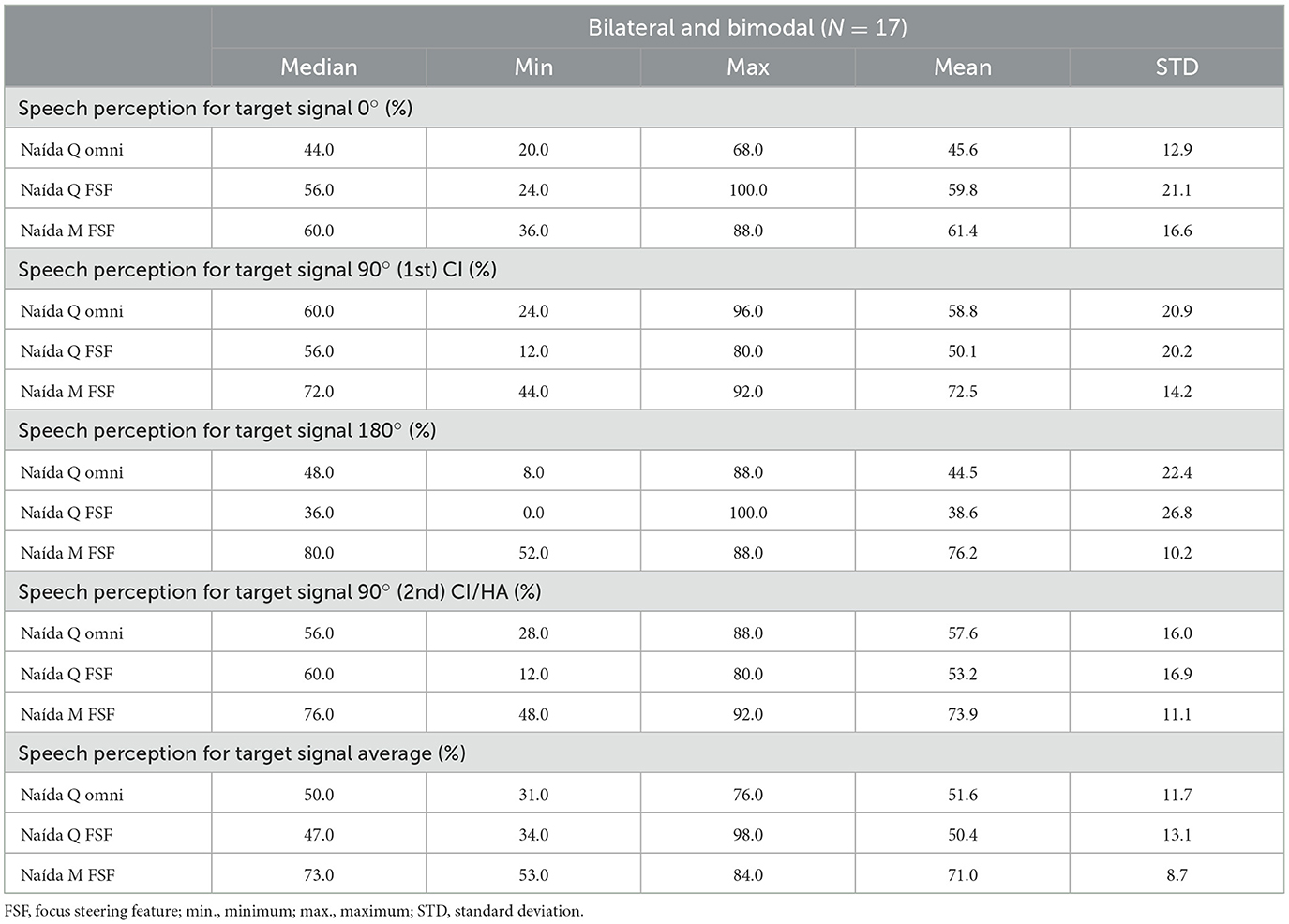

Focus steering features

Outcomes for speech perception in noise measured at appointment three, after chronic use of the Naída M hearing system, are shown in Figure 7 for the entire group (N = 17; ten bilateral and seven bimodal participants). The Naída M FSF condition provided significantly better results than the Naída Q omni condition (target signal 0°: 16%, p = 0.018; target signal 180°: 32%, p = 0.001; target signal 2nd CI: 20%, p = 0.006; average: 23%, p = 0.001) and than the Naída Q FSF condition (target signal 1st CI: 16%, p = 0.009; target signal 180°: 44%, p = 0.002; target signal 2nd CI: 16%, p = 0.004; average: 26%, p = 0.001). No significant differences were found between the Naída Q omni and FSF conditions for any target signal direction. Absolute percent correct values are shown in Table 6.

Figure 7. Speech perception in noise measured after chronic use for the entire CI user group. Line, median; box, quartiles 25 and 75%; circle, outlier; whisker, minimum and maximum; *indicate significant differences with p < 0.05; FSF, focus steering feature.

Table 6. Speech perception outcomes for the four target signal directions (0°, ±90°, 180°) as well as the average for the three conditions (Naída Q omni, Naída Q FSF and Naída M FSF), for the entire group (N = 17).

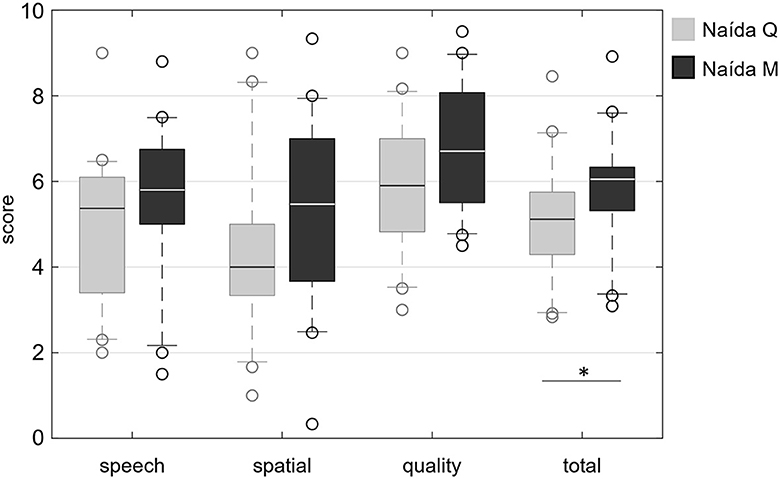

Subjective feedback

Median SSQ-12 scores, obtained at the start of the study for the Naída Q and during the take-home phase for the Naída M hearing system, are shown in Figure 8 for the entire group of 18 participants. Averaged across all twelve questions, scores were significantly better for the Naída M (6.1) than for the Naída Q (5.1) (p = 0.022). There were no significant differences for the three sections at the overall group level.

Figure 8. Rating scores for the SSQ-12 questionnaire, split into the categories speech, spatial, quality, and total, for the entire CI user group (N = 18). Line, median; box, quartiles 25 and 75%; circle, outlier; whisker, minimum and maximum; * indicates a significant difference with p < 0.05.

Examining the scores for individual questions, significantly better ratings were obtained with the Naída M compared to Naída Q for questions 5 (p = 0.011), 7 (p = 0.039), 10 (p = 0.024), and 11 (p = 0.017). The remaining questions did not show significant differences.

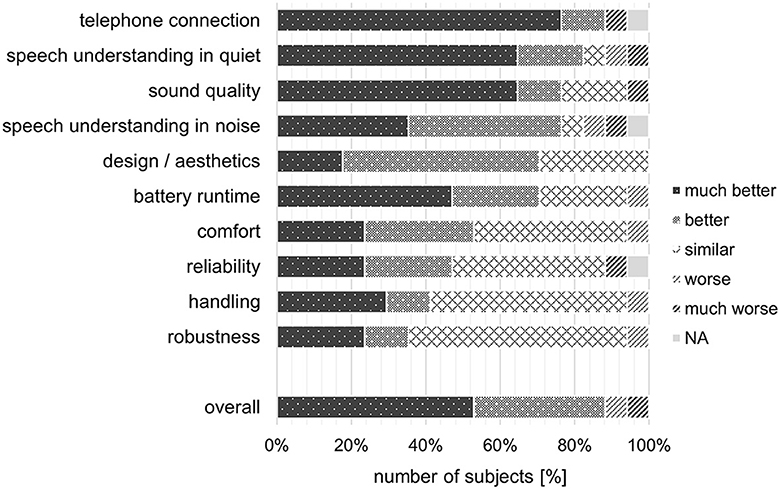

Ratings obtained via the custom questionnaire at the end of the study and compiled for the entire CI user group (N = 18; some answers not applicable; Figure 9) showed that, for the “overall” hearing impression, the large majority of participants (88.2%) rated the Naída M hearing system as “better” or “much better” than the Naída Q hearing system, while only two participants (11.8%) rated it as “worse” or “much worse.” For specific aspects of the processor, the Naída M was rated “better” or “much better” than the Naída Q for “telephone connection” (93.8%), “speech understanding in quiet” (82.4%), “sound quality” (76.5%), “speech understanding in noise” (81.3%), “design/aesthetics” and “battery runtime” (70.6% each), “comfort” (52.9%), “reliability” (50.0%), “handling” (41.2%), and “robustness” (35.3%).
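The percentages above are shares of applicable answers, which is why they do not all refer to 18 participants. A minimal sketch of this computation, assuming that “not applicable” answers are excluded from the denominator (the category labels and example counts are hypothetical):

```python
from typing import Dict

FAVORABLE = ("much better", "better")

def percent_favorable(counts: Dict[str, int]) -> float:
    """Percentage of applicable answers rated 'much better' or 'better', one decimal."""
    applicable = {k: v for k, v in counts.items() if k != "not applicable"}
    total = sum(applicable.values())
    if total == 0:
        raise ValueError("no applicable answers")
    favorable = sum(applicable.get(k, 0) for k in FAVORABLE)
    return round(100 * favorable / total, 1)

# Hypothetical counts for 18 participants, one of whom answered "not applicable"
example = {"much better": 10, "better": 5, "similar": 0, "worse": 1,
           "much worse": 1, "not applicable": 1}
print(percent_favorable(example))  # 88.2
```

For instance, 15 favorable answers out of 17 applicable ones gives 88.2%, the value reported for the overall rating; the split between categories in the example is invented.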

Figure 9. The percentage of participants scoring the Naída M hearing system as much better, better, similar, worse, or much worse than the Naída Q hearing system for the different aspects, such as sound quality, speech understanding, comfort and handling as well as the overall rating. NA, not applicable

Discussion

In this clinical study, we investigated the impact of automatic scene classifiers and focus steering features on hearing in challenging acoustic scenarios, focusing on both bilateral and bimodal cochlear implant users.

Automatic scene classifiers

The automatic scene classifiers of the two hearing systems were compared in this investigation. The target speech signal was presented from the front, with noise emanating from all eight surrounding loudspeakers, a setup adapted from prior research on directional microphones (Geißler et al., 2015). When tested in quiet, the ASCs of both systems defaulted, as expected, to the “calm situation” setting, using their omni-directional microphones. Under these conditions, both systems' ASCs produced speech perception results comparable to those obtained when they were manually set to omni mode. However, in the bimodal group, the Naída M's ASC yielded significantly better results than the Naída Q's omni setting. This difference might stem from the Naída M's enhanced microphone features and sound-cleaning algorithms, notably the default activation of the SoftVoice feature, which was not commonly enabled in this group's clinical Naída Q programs. When exposed to a noisy environment simulating a restaurant (background noise at 70 dB SPL), the systems activated their “speech in (loud) noise” modes, using directional microphones to counteract the ambient noise. Previous research has shown SRT benefits ranging from 1.4 to 6.9 dB for the UltraZoom directional microphone compared with an omni-directional setting (Buechner et al., 2014; Geißler et al., 2015; Devocht et al., 2016; Mosnier et al., 2017; Ernst et al., 2019). Other studies reported benefits of 2.6 to 4.7 dB for the StereoZoom directional microphone compared with the omni-directional microphone (Vroegop et al., 2018; Ernst et al., 2019) and of 0.9 to 1.4 dB compared with UltraZoom (Ernst et al., 2019). It is important to note that these studies used different loudspeaker arrangements, which affect the measured benefits of beamforming. For example, Ernst et al. (2019) showed that merely changing the angles of the loudspeakers presenting background noise affected beamformer performance. They also found different group results for bimodal (2 dB for UltraZoom and StereoZoom) and bilateral (2.5 dB for UltraZoom and 1.8 dB for StereoZoom) CI users. Differences in noise type can also lead to different speech perception results, likely because of different masking effects (Devocht et al., 2016).

Findings of this investigation revealed SRT benefits of 1.5 dB for the bimodal group and 3.1 dB for the bilateral group using the Naída Q's ASC (UltraZoom) compared to its omni-directional mode. Meanwhile, the Naída M's ASC (StereoZoom) showed even more pronounced benefits, with an improvement of 4.9 dB for both groups when compared to its omni-directional setting. Overall, these results align with the outcomes of prior studies.

Focus steering features

The focus steering feature (FSF) was assessed using a different setup: target speech signals were played in randomized order from one of four loudspeakers (front, back, right, or left), while noise was presented from eight evenly spaced loudspeakers surrounding the participant. This methodology contrasts with previous studies in which ZoomControl was assessed in bimodal CI users in a right/left configuration. There, a fixed loudspeaker setup delivered the target signal to the hearing aid side, with noise directed to the dominant CI side, which resulted in notably improved speech perception.

Holtmann et al. (2020) reported a 3.9 dB SRT advantage for ZoomControl compared with the omni-directional microphone setting, using the adaptive OLSA sentence test. Additionally, in-house evaluations at Advanced Bionics (2016a) found a 28% improvement in speech perception with the AzBio sentence test. However, these studies fixed the focus direction of ZoomControl to one side. In this investigation, the scope was expanded to all four directions.

When participants manually steered their focus toward the target signal with the Naída Q system, no FSF advantage over the omni-directional microphone mode was observed. Conversely, the Naída M system's automatic FSF yielded significantly better speech perception scores. Efficient FSF switching, which is crucial for optimal performance, demands rapid reactions and good localization skills, demands that the Naída M's automated system takes over from the user. Significant differences between the Naída Q's and Naída M's FSF were found for all directions except the front. This exception can likely be attributed to users' tendency to default to the front direction when uncertain during manual selection. However, the relatively small sample size (17 participants completed the FSF measurements) may limit the generalizability of these findings.

Subjective feedback

Ratings of the Naída M and Naída Q hearing systems were comparable in areas such as speech understanding, sound quality, and spatial hearing. However, when scores were averaged across all rating categories, the Naída M was rated significantly higher than the Naída Q (p < 0.05). This result was unexpected. The Naída Q was evaluated after users had already become accustomed to its sound and had received fittings optimized during clinical routine, so the newly launched Naída M was not anticipated to score notably higher. Nevertheless, a substantial majority of participants either preferred the Naída M or rated it as equivalent in all rating domains. Ideally, participants would have been blinded to the system under evaluation to eliminate any novelty bias associated with new devices and features. This was not feasible, however, because the two systems have visibly different designs.

Clinical relevance of automatic features

Several studies have demonstrated improvements in speech perception with features such as directional microphones and sound cleaning algorithms. Programs that combine these features can help in certain everyday listening scenarios, yet many hearing device users are reluctant to switch programs. According to Gifford and Revit (2010), this reluctance stems from uncertainty about which program is most effective or from time constraints. Furthermore, Searchfield et al. (2018) showed that the preferred manual program in a specific listening situation can differ between individuals.

A classification system trained to recognize a range of typical listening scenarios offers a solution to these uncertainties. Automatic switching, executed within a suitable time frame after a situation is detected, has yielded better speech reception thresholds than manual switching (Searchfield et al., 2018; Eichenauer et al., 2021). However, because outcomes vary between individuals, Potts et al. (2021) emphasize the need for personalized fitting of device parameters to maximize their efficacy.
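Switching "within a suitable time frame after the situation is detected" can be illustrated with a simple dwell-time rule. Under this rule, the active program changes only after the classifier has reported the same new scene for several consecutive analysis frames, which prevents rapid toggling when the scene is ambiguous. The Python sketch below shows this generic debouncing technique. The class name `ProgramSwitcher`, the scene labels, and the dwell length of three frames are hypothetical, and the sketch does not describe how AutoSense OS 3.0 actually makes its decisions.

```python
from dataclasses import dataclass, field
from typing import Iterable, List


@dataclass
class ProgramSwitcher:
    """Hypothetical classifier-driven program switcher with a dwell time.

    The active program changes only after the classifier has reported the
    same new scene for `dwell_frames` consecutive frames.
    """

    active: str = "calm"   # hypothetical starting scene/program
    dwell_frames: int = 3  # hypothetical number of confirming frames
    _candidate: str = field(default="", init=False)
    _count: int = field(default=0, init=False)

    def update(self, detected: str) -> str:
        """Feed one classifier decision and return the active program."""
        if detected == self.active:
            # The scene matches the current program: cancel any pending switch.
            self._candidate, self._count = "", 0
            return self.active
        if detected == self._candidate:
            # The same new scene was detected again: count towards the switch.
            self._count += 1
        else:
            # A different new scene appeared: restart counting at one frame.
            self._candidate, self._count = detected, 1
        if self._count >= self.dwell_frames:
            # Enough consecutive confirmations: switch programs.
            self.active = detected
            self._candidate, self._count = "", 0
        return self.active


def run(labels: Iterable[str], dwell_frames: int = 3) -> List[str]:
    """Return the active program after each classifier frame."""
    switcher = ProgramSwitcher(dwell_frames=dwell_frames)
    return [switcher.update(label) for label in labels]
```

Consider the frame sequence `calm, noise, calm, noise, noise, noise, calm` with a dwell time of three frames. The calm program stays active until the third consecutive noise frame. The noise program then remains active despite the single trailing calm frame. A longer dwell time makes switching more stable but slower to react, and a "suitable time frame" has to balance these two properties.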

The automatic features examined in this study provide a broad default setting that suits most participants in most situations. At the same time, features such as the directional microphone or sound cleaning algorithms can be adjusted to individual requirements. With this dual approach, users benefit from automatic switching even if they prefer a custom set of features. The approach also simplifies the work of health care professionals. Starting the fitting with the automatic features reduces the need to program each feature manually, which lowers the overall fitting effort.
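The dual approach of automatic defaults combined with individual adjustments can be sketched as a per-scene settings table into which a clinician's overrides are merged. Scenes without an override keep their defaults, so the classifier can still switch among all scenes. The scene names, feature names, values, and the helper `fitted_programs` below are hypothetical placeholders, not parameters of the actual fitting software.

```python
from typing import Any, Dict, Mapping

# Hypothetical per-scene defaults of an automatic program. Scene names,
# feature names and values are illustrative placeholders only.
AUTO_DEFAULTS: Dict[str, Dict[str, Any]] = {
    "calm": {"directional_microphone": "omni", "sound_cleaning": "off"},
    "speech_in_noise": {"directional_microphone": "adaptive", "sound_cleaning": "moderate"},
}


def fitted_programs(overrides: Mapping[str, Mapping[str, Any]]) -> Dict[str, Dict[str, Any]]:
    """Merge clinician overrides into the defaults, scene by scene.

    Scenes and features without an override keep their default values, so
    automatic switching between all scenes remains available.
    """
    # Copy the defaults so that AUTO_DEFAULTS itself is never modified.
    programs = {scene: dict(features) for scene, features in AUTO_DEFAULTS.items()}
    for scene, changes in overrides.items():
        if scene not in programs:
            raise KeyError(f"unknown scene: {scene}")
        unknown = set(changes) - set(programs[scene])
        if unknown:
            raise KeyError(f"unknown feature(s) in {scene}: {sorted(unknown)}")
        programs[scene].update(changes)
    return programs
```

For example, if the clinician overrides only `sound_cleaning` in the speech-in-noise scene, the calm scene and the directional microphone setting stay unchanged. Unknown scene or feature names raise a `KeyError` rather than being silently ignored.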

Conclusion

The latest Naída M hearing system incorporates an enhanced classifier capable of distinguishing various sound scenarios. Combined with superior processing capabilities, this advancement improves cochlear implant users' speech perception in noise with the Naída M compared with its predecessor, the Naída Q hearing system. The system autonomously adapts to diverse challenging listening situations or steers directionality toward the target speech. This spares users the often difficult choice of the optimal program. The innovation not only simplifies use but also improves real-world speech comprehension with the Naída M system.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding authors.

Ethics statement

The studies involving humans were approved by the Ethics Committee of the Medizinische Hochschule Hannover. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

AB: Writing – review & editing, Conceptualization, Formal analysis, Funding acquisition, Investigation, Methodology, Project administration, Supervision, Writing – original draft. MBa: Project administration, Writing – review & editing, Data curation, Investigation. SK: Data curation, Investigation, Project administration, Writing – review & editing. TL: Funding acquisition, Supervision, Writing – review & editing. MBr: Conceptualization, Formal analysis, Methodology, Project administration, Supervision, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This research was supported by Advanced Bionics GmbH, Hannover, Germany.

Conflict of interest

MBr was employed by the manufacturer (Advanced Bionics GmbH) of the devices under investigation.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Advanced Bionics (2014). Binaural VoiceStream Technology™: Improving Speech Understanding in Challenging Listening Environments. White Paper.

Advanced Bionics (2015). Auto UltraZoom and StereoZoom Features: Unique Naída CI Q90 Solutions for Hearing in Challenging Listening Environments. White Paper.

Advanced Bionics (2016a). Improving Speech Understanding Without Facing the Speaker for Unilateral AB Implant Recipients: Bimodal ZoomControl Feature. White Paper.

Advanced Bionics (2016b). Enhancing Conversations in Extreme Noise For Unilateral AB Cochlear Implant Recipients: Bimodal StereoZoom Feature. White Paper.

Advanced Bionics (2021a). AutoSense OS™ 3.0 Operating System: Allowing Marvel CI Users to Seamlessly Connect With the Moments They Love. White Paper.

Advanced Bionics (2021b). Marvel CI Technology: Leveraging Natural Ear Acoustics to Optimize Hearing Performance. White Paper.

Appleton-Huber, J. (2015). AutoSense OS: Benefit of the Next Generation of Technology Automation. Field Study News. Available at: https://www.phonakpro.com/content/dam/phonakpro/gc_hq/en/resources/evidence/field_studies/documents/fsn_autosense_os_speech_understanding.pdf (accessed June 10, 2024).

Bentler, R. A. (2005). Effectiveness of directional microphones and noise reduction schemes in hearing aids: a systematic review of the evidence. J. Am. Acad. Audiol. 16, 473–484. doi: 10.3766/jaaa.16.7.7

Buechner, A., Brendel, M., Saalfeld, H., Litvak, L., Frohne-Buechner, C., and Lenarz, T. (2010). Results of a pilot study with a signal enhancement algorithm for HiRes 120 cochlear implant users. Otol. Neurotol. 31, 1386–1390. doi: 10.1097/MAO.0b013e3181f1cdc6

Buechner, A., Dyballa, K.-H., Hehrmann, P., Fredelake, S., and Lenarz, T. (2014). Advanced beamformers for cochlear implant users: acute measurement of speech perception in challenging listening conditions. PLoS ONE 9:e95542. doi: 10.1371/journal.pone.0095542

Chen, C., Stein, A. L., Milczynski, M., Litvak, L. M., and Reich, A. (2015). “Simulating pinna effect by use of the Real Ear Sound algorithm in Advanced Bionics CI recipients,” in Conference on Implantable Auditory Prostheses (Lake Tahoe, CA).

Chung, K., Zeng, F.-G., and Acker, K. N. (2006). Effects of directional microphone and adaptive multichannel noise reduction algorithm on cochlear implant performance. J. Acoust. Soc. Am. 120, 2216–2227. doi: 10.1121/1.2258500

Cord, M. T., Surr, R. K., Walden, B. E., and Olson, L. (2002). Performance of directional microphone hearing aids in everyday life. J. Am. Acad. Audiol. 13, 295–307. doi: 10.1055/s-0040-1715973

Devocht, E. M. J., Janssen, A. M. L., Chalupper, J., Stokroos, R. J., and George, E. L. J. (2016). Monaural beamforming in bimodal cochlear implant users: effect of (A)symmetric directivity and noise type. PLoS ONE 11:e0160829. doi: 10.1371/journal.pone.0160829

Dingemanse, J. G., Vroegop, J. L., and Goedegebure, A. (2018). Effects of a transient noise reduction algorithm on speech intelligibility in noise, noise tolerance and perceived annoyance in cochlear implant users. Int. J. Audiol. 57, 360–369. doi: 10.1080/14992027.2018.1425004

Dyballa, K.-H., Hehrmann, P., Hamacher, V., Nogueira, W., Lenarz, T., and Büchner, A. (2015). Evaluation of a transient noise reduction algorithm in cochlear implant users. Audiol. Res. 5:116. doi: 10.4081/audiores.2015.116

Eichenauer, A., Baumann, U., Stöver, T., and Weissgerber, T. (2021). Interleaved acoustic environments: impact of an auditory scene classification procedure on speech perception in cochlear implant users. Trends Hear. 25:233121652110141. doi: 10.1177/23312165211014118

Ernst, A., Anton, K., Brendel, M., and Battmer, R.-D. (2019). Benefit of directional microphones for unilateral, bilateral and bimodal cochlear implant users. Coch. Implants Int. 20, 147–157. doi: 10.1080/14670100.2019.1578911

Frohne-Büchner, C., Büchner, A., Gärtner, L., Battmer, R. D., and Lenarz, T. (2004). Experience of uni- and bilateral cochlear implant users with a microphone positioned in the pinna. Int. Congr. Ser. 1273, 93–96. doi: 10.1016/j.ics.2004.08.047

Gatehouse, S., and Noble, W. (2004). The speech, spatial and qualities of hearing scale (SSQ). Int. J. Audiol. 43, 85–99. doi: 10.1080/14992020400050014

Geißler, G., Arweiler, I., Hehrmann, P., Lenarz, T., Hamacher, V., and Büchner, A. (2015). Speech reception threshold benefits in cochlear implant users with an adaptive beamformer in real life situations. Coch. Implants Int. 16, 69–76. doi: 10.1179/1754762814Y.0000000088

Gifford, R. H., and Revit, L. J. (2010). Speech perception for adult cochlear implant recipients in a realistic background noise: effectiveness of preprocessing strategies and external options for improving speech recognition in noise. J. Am. Acad. Audiol. 21, 441–451. doi: 10.3766/jaaa.21.7.3

Holtmann, L. C., Janosi, A., Bagus, H., Scholz, T., Lang, S., Arweiler-Harbeck, D., et al. (2020). Aligning hearing aid and cochlear implant improves hearing outcome in bimodal cochlear implant users. Otol. Neurotol. 41, 1350–1356. doi: 10.1097/MAO.0000000000002796

Kolberg, E. R., Sheffield, S. W., Davis, T. J., Sunderhaus, L. W., and Gifford, R. H. (2015). Cochlear implant microphone location affects speech recognition in diffuse noise. J. Am. Acad. Audiol. 26, 51–58. doi: 10.3766/jaaa.26.1.6

Marcrum, S. C., Picou, E. M., Bohr, C., and Steffens, T. (2021). Activating a noise-gating algorithm and personalizing electrode threshold levels improve recognition of soft speech for adults with CIs. Ear Hear. 42, 1208–1217. doi: 10.1097/AUD.0000000000001003

Mauger, S., Warren, C., Knight, M., Goorevich, M., and Nel, E. (2014). Clinical evaluation of the Nucleus 6 cochlear implant system: performance improvements with SmartSound iQ. Int. J. Audiol. 53, 564–576. doi: 10.3109/14992027.2014.895431

Mosnier, I., Mathias, N., Flament, J., Amar, D., Liagre-Callies, A., Borel, S., et al. (2017). Benefit of the UltraZoom beamforming technology in noise in cochlear implant users. Eur. Arch. Otorhinolaryngol. 274, 3335–3342. doi: 10.1007/S00405-017-4651-3

Nelson, P. B., Jin, S.-H., Carney, A. E., and Nelson, D. A. (2003). Understanding speech in modulated interference: cochlear implant users and normal-hearing listeners. J. Acoust. Soc. Am. 113, 961–968. doi: 10.1121/1.1531983

Noble, W., Jensen, N. S., Naylor, G., Bhullar, N., and Akeroyd, M. A. (2013). A short form of the speech, spatial and qualities of hearing scale suitable for clinical use: the SSQ12. Int. J. Audiol. 52, 409–412. doi: 10.3109/14992027.2013.781278

Phonak (2011). Auto ZoomControl: Objective and Subjective Benefits With Auto ZoomControl. Field Study News.

Potts, L. G., Jang, S., and Hillis, C. L. (2021). Evaluation of automatic directional processing with cochlear implant recipients. J. Am. Acad. Audiol. 32, 478–486. doi: 10.1055/s-0041-1733967

Rak, K., Schraven, S. P., Schendzielorz, P., Kurz, A., Shehata-Dieler, W., Hagen, R., et al. (2017). Stable longitudinal performance of adult cochlear implant users for more than 10 years. Otol. Neurotol. 38, e315–e319. doi: 10.1097/MAO.0000000000001516

Rodrigues, T., and Liebe, S. (2018). Phonak AutoSense OS™ 3.0: The New and Enhanced Automatic Operating System. Phonak Insight. Available at: https://www.phonak.com/content/dam/phonak/en/documents/evidence/phonak_insight_marvel_autosense_os_3_0.pdf.coredownload.pdf (accessed June 10, 2024).

Searchfield, G. D., Linford, T., Kobayashi, K., Crowhen, D., and Latzel, M. (2018). The performance of an automatic acoustic-based program classifier compared to hearing aid users' manual selection of listening programs. Int. J. Audiol. 57, 201–212. doi: 10.1080/14992027.2017.1392048

Stronks, H. C., Tops, A. L., Hehrmann, P., Briaire, J. J., and Frijns, J. H. M. (2021). Personalizing transient noise reduction algorithm settings for cochlear implant users. Ear Hear. 42, 1602–1614. doi: 10.1097/AUD.0000000000001048

Veugen, L. C. E., Chalupper, J., Snik, A. F. M., van Opstal, A. J., and Mens, L. H. M. (2016a). Matching automatic gain control across devices in bimodal cochlear implant users. Ear Hear. 37, 260–270. doi: 10.1097/AUD.0000000000000260

Veugen, L. C. E., Chalupper, J., Snik, A. F. M., van Opstal, A. J., and Mens, L. H. M. (2016b). Frequency-dependent loudness balancing in bimodal cochlear implant users. Acta Otolaryngol. 136, 775–781. doi: 10.3109/00016489.2016.1155233

Vroegop, J. L., Homans, N. C., Goedegebure, A., Dingemanse, J. G., van Immerzeel, T., and van der Schroeff, M. P. (2018). The effect of binaural beamforming technology on speech intelligibility in bimodal cochlear implant recipients. Audiol. Neurotol. 23, 32–38. doi: 10.1159/000487749

Wagener, K., Kuhnel, K., and Kollmeier, B. (1999). Development and evaluation of a German sentence test I: design of the Oldenburg sentence test. Audiol. Acoust. 38, 4–15.

Weissgerber, T., Bandeira, M., Brendel, M., Stöver, T., and Baumann, U. (2019). Impact of microphone configuration on speech perception of cochlear implant users in traffic noise. Otol. Neurotol. 40, e198–e205. doi: 10.1097/MAO.0000000000002135

Wilson, B. S., and Dorman, M. F. (2008). Cochlear implants: a remarkable past and a brilliant future. Hear. Res. 242, 3–21. doi: 10.1016/j.heares.2008.06.005

Keywords: cochlear implant, automatic scene classification, speech perception, Naída M hearing system, directional microphones

Citation: Buechner A, Bardt M, Kliesch S, Lenarz T and Brendel M (2024) Enhancing speech perception in challenging acoustic scenarios for cochlear implant users through automatic signal processing. Front. Audiol. Otol. 2:1456413. doi: 10.3389/fauot.2024.1456413

Received: 28 June 2024; Accepted: 19 August 2024;

Published: 06 September 2024.

Edited by:

Mehdi Abouzari, University of California, Irvine, United States

Reviewed by:

Sasan Dabiri, Northern Ontario School of Medicine University, Canada

Brian Richard Earl, University of Cincinnati, United States

Copyright © 2024 Buechner, Bardt, Kliesch, Lenarz and Brendel. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Andreas Buechner, buechner.andreas@mh-hannover.de; Martina Brendel, martina.brendel@advancedbionics.com
