- Max-Planck-Institute for Mathematics in the Sciences, Leipzig, Germany

Tensor numerical methods, based on the rank-structured tensor representation of d-variate functions and operators discretized on large $n^{\otimes d}$ grids, are designed to provide $O(dn)$ complexity of numerical calculations, in contrast to the $O(n^d)$ scaling of conventional grid-based methods. However, multiple tensor operations may lead to an enormous increase in the tensor ranks (the curse of ranks) of the target data, making calculations intractable. Therefore, one of the most important steps in tensor calculations is a robust and efficient rank reduction procedure, which must be performed many times in the course of various tensor transforms in multi-dimensional operator and function calculus. The rank reduction scheme based on the Reduced Higher Order SVD (RHOSVD), introduced by the authors, has played a significant role in the development of tensor numerical methods. Here, we briefly survey the essentials of the RHOSVD method, then focus on some new theoretical and computational aspects of the RHOSVD, and demonstrate that this rank reduction technique constitutes a basic ingredient of tensor computations for real-life problems. In particular, a stability analysis of the RHOSVD is presented. We introduce the multi-linear algebra of tensors represented in the range-separated (RS) tensor format. This allows one to apply the RHOSVD rank-reduction techniques to non-regular functional data with many singularities, for example, to the rank-structured computation of the collective multi-particle interaction potentials in bio-molecular modeling, as well as to complicated composite radial functions. New theoretical and numerical results on the application of the RHOSVD to scattered data modeling are presented. We underline that the RHOSVD has proved to be an efficient rank reduction technique in numerous applications, ranging from the numerical treatment of multi-particle systems in material sciences to the numerical solution of PDE-constrained control problems in $\mathbb{R}^d$.

1. Introduction

The mathematical models in large-scale scientific computing are often described by steady-state or dynamical PDEs. The underlying physical, chemical or biological systems usually live in the 3D physical space ℝ³ and may depend on many structural parameters. The solution of the arising discrete systems of equations and the optimization of the model parameters lead to challenging numerical problems. Indeed, the accurate grid-based approximation of the operators and functions involved requires large spatial grids in $\mathbb{R}^d$, resulting in considerable storage requirements and in algebraic operations on huge vectors and matrices. For further discussion we assume that all functional entities are discretized on $n^{\otimes d}$ spatial grids, where the univariate grid size n may reach several thousands. Linear algebra on N-vectors and N × N matrices with $N = n^d$ quickly becomes intractable as n and d increase.

Tensor numerical methods [1, 2] provide means to overcome the exponential increase of numerical complexity in the problem dimension d, owing to their intrinsic feature of reducing the computational cost of multi-linear algebra on rank-structured data to merely linear scaling in both the grid size n and the dimension d. They emerged as a bridge between the algebraic tensor decompositions initiated in chemometrics [3–10] and the nonlinear approximation theory of separable low-rank representations of multi-variate functions and operators [11–13]. The canonical [14, 15], Tucker [16], tensor train (TT) [17, 18], and hierarchical Tucker (HT) [19] formats are the most commonly used rank-structured parametrizations in modern tensor numerical methods. Further data compression to the logarithmic scale can be achieved by the quantized-TT (QTT) [20, 21] tensor approximation. At present, there is active research toward further progress of tensor numerical methods in scientific computing [1, 2, 22–26]. In particular, tensor-based approaches have achieved considerable success in computational chemistry [27–31], in bio-molecular modeling [32–35], in optimal control problems (including the case of fractional control) [36–39], and in many other fields [6, 40–44].

Here, we note that tensor numerical methods are efficient when all input data and all intermediate quantities within the chosen computational scheme are represented in a certain low-rank tensor format with controllable rank parameters, i.e., on low-rank tensor manifolds. In turn, tensor decomposition of full-format data arrays is, in general, an NP-hard problem. For example, the truncated HOSVD [7] of an $n^{\otimes d}$ tensor in the Tucker format requires $O(n^{d+1})$ arithmetic operations, while the cost of the TT and HT higher-order SVD [18, 45] is likewise exponential in d. This indicates that the rank decomposition of full-format tensors still suffers from the "curse of dimensionality" and can hardly be applied in large-scale computations.

On the other hand, the initial data for complicated numerical algorithms can often be chosen in the canonical/Tucker tensor formats, say, as a result of discretizing a short sum of Gaussians or multi-variate polynomials, or as a result of analytical approximation using the Laplace transform representation and sinc quadratures [1]. However, the ranks of tensors are multiplied in the course of various tensor operations, leading to a dramatic increase in the rank parameter (the "curse of ranks") of the resulting tensor and thus making tensor-structured calculations intractable. Therefore, fast and stable rank reduction schemes are the main prerequisite for the success of rank-structured tensor techniques.
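To make the "curse of ranks" concrete, the NumPy sketch below (an illustration under an assumed data layout, not code from the cited works) stores a 3D canonical tensor as a list of n × R side matrices plus a weight vector and forms a Hadamard product directly in factor form. Each side matrix of the product collects all pairwise products of columns, so input ranks 3 and 4 already yield rank 12. The helper names `cp_full` and `cp_hadamard` are hypothetical.

```python
import numpy as np

def cp_full(factors, weights):
    """Full d-way tensor from canonical data (small sizes only, d <= 8)."""
    letters = "abcdefgh"[: len(factors)]
    spec = ",".join(f"{c}r" for c in letters) + ",r->" + letters
    return np.einsum(spec, *factors, weights)

def cp_hadamard(fa, wa, fb, wb):
    """Hadamard product of two canonical tensors; the ranks multiply: R = Ra * Rb."""
    # mode-wise face-splitting product: column (i, j) is fa[l][:, i] * fb[l][:, j]
    fc = [np.einsum("ir,is->irs", a, b).reshape(a.shape[0], -1) for a, b in zip(fa, fb)]
    wc = np.outer(wa, wb).ravel()  # weight of column (i, j) is wa[i] * wb[j]
    return fc, wc

rng = np.random.default_rng(0)
fa = [rng.standard_normal((5, 3)) for _ in range(3)]
fb = [rng.standard_normal((5, 4)) for _ in range(3)]
wa, wb = rng.random(3), rng.random(4)
fc, wc = cp_hadamard(fa, wa, fb, wb)
print(fc[0].shape)  # (5, 12): ranks 3 and 4 give rank 12
```

Repeating such products (or convolutions, or matrix-vector products in iterative solvers) multiplies ranks at every step, which is why a rank reduction step is needed after each operation.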

The invention of the Reduced Higher Order SVD (RHOSVD) in [46], together with the corresponding rank reduction procedure based on the canonical-to-Tucker transform followed by the canonical approximation of the small Tucker core (Tucker-to-canonical transform), was a decisive step in the development of tensor numerical methods in scientific computing. In contrast to the conventional HOSVD, the RHOSVD does not need to construct the full-size tensor in order to find the orthogonal subspaces of the Tucker representation. Instead, the RHOSVD applies to data in the canonical tensor format (with a possibly large initial rank R) and exhibits $O(dnR\min\{n, R\})$ complexity, which is only linear in the dimension d.

In particular, this rank reduction scheme was applied to the calculation of 3D and 6D convolution integrals in the tensor-based solution of the Hartree-Fock equation [27, 46]. Combined with the Tucker-to-canonical transform, this algorithm provides a stable procedure for reducing possibly huge ranks in tensor-structured calculations of the Hartree potential. The RHOSVD-based rank reduction scheme for canonical tensors is particularly useful for 3D problems, which are the most common in real-life applications. However, the RHOSVD-type procedure can also be applied efficiently to construct the TT tensor format from canonical tensor input, which often appears in tensor calculations.

The RHOSVD is the basic tool for the construction of the range-separated (RS) tensor format introduced in [32] for the low-rank tensor representation of bio-molecular long-range electrostatic potentials. A recent example, the RS representation of the multi-centered Dirac delta function [34], paves the way for the efficient solution decomposition scheme introduced for the Poisson-Boltzmann equation [33, 35].

In some applications the data can be presented as a sum of highly localized and rank-structured components, so that their further numerical treatment again requires a rank reduction procedure (see Section 4.5 concerning the long-range potential calculation for many-particle systems). Here, we present a constructive description of multi-linear operations on tensors in the RS format, which allows one to compute the short- and long-range parts of the resulting combined tensors. In particular, this applies to the commonly used addition of tensors, Hadamard and contracted products, as well as to composite functions of RS tensors. We then introduce tensor-based modeling of scattered data by a sum of Slater kernels and show the existence of a low-rank representation for such data in the RS tensor format. Numerical examples demonstrate the practical efficiency of this kind of tensor interpolation. This approach may be used in many applications in data science and in stochastic data modeling.

Rank reduction by the RHOSVD is a mandatory part of solving three-dimensional elliptic and pseudo-differential equations in the rank-structured tensor format. In the course of preconditioned iterations, the tensor ranks of the governing operator, the preconditioner, and the current iterate are multiplied at each iterative step; therefore, a fast and robust rank reduction technique is a prerequisite for this methodology in iterative elliptic problem solvers. In particular, this approach was applied to PDE-constrained optimal control problems (including the case of fractional operators) [36, 39]. As a result, the computational complexity can be reduced to almost linear scale, $O(nR)$, in contrast to the conventional $O(n^3)$ complexity, as demonstrated numerically in [36, 39].

Tensor-based algorithms and methods are now widely used and further developed in the scientific computing and data science communities. Tensor techniques continue to evolve in traditional tensor decompositions for data processing [5, 42, 47], and they are actively promoted for the tensor-based solution of multi-dimensional problems in numerical analysis and quantum chemistry [1, 24, 29, 38, 39, 48, 49]. Notice that in higher dimensions the rank reduction in the canonical format can be performed directly (i.e., without the intermediate Tucker approximation) by the cascading ALS iteration in the CP format (see [50] concerning the tensor-structured solution of stochastic/parametric PDEs).

The rest of this article is organized as follows. In Section 2, we sketch the construction of the RHOSVD and present some old and new results on its error bounds and stability. In Section 2.2, we recall the mixed canonical-Tucker tensor format and the Tucker-to-canonical transform. Section 3 recalls the results from Khoromskaia [27] on the calculation of multi-dimensional convolution integrals with the Newton kernel arising in computational quantum chemistry. Section 4 addresses the application of the RHOSVD to RS-parametrized tensors. In Section 4.2, we discuss the application of the RHOSVD in multi-linear operations on data in the RS tensor format. Scattered data modeling is considered in Section 4.5 from both the theoretical and computational points of view. The application of the RHOSVD to the tensor-based representation of Green's kernels is discussed in Section 5. Section 6 gives a short sketch of the RHOSVD in application to tensor-structured elliptic problem solvers.

2. Reduced HOSVD and CP-to-Tucker Transform

2.1. Reduced HOSVD: Error Bounds

In computational schemes involving bilinear tensor-tensor or matrix-tensor operations, the increase of tensor ranks leads to a critical loss of efficiency. Moreover, in many applications, for example in electronic structure calculations, canonical tensors with large rank parameters arise as the result of polynomial-type or convolution transforms of function-related tensors (say, the electron density, the Hartree potential, etc.). In what follows, we present a new look at the direct method of rank reduction for canonical tensors with large initial rank, the reduced HOSVD, first introduced and analyzed in [46].

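In what follows, we consider the vector space of d-fold real-valued data arrays endowed with the Euclidean scalar product 〈·, ·〉 and the related norm $\|u\| = \langle u, u\rangle^{1/2}$. We denote by $\mathcal{T}_{\mathbf r}$ the class of tensors $A \in \mathbb{R}^{n^{\otimes d}}$ parametrized in the rank-$\mathbf r$, $\mathbf r = (r_1, \dots, r_d)$, orthogonal Tucker format,

$$A = \beta \times_1 V^{(1)} \times_2 V^{(2)} \cdots \times_d V^{(d)},$$

with the orthogonal side matrices $V^{(\ell)} = [v^{(\ell)}_1, \dots, v^{(\ell)}_{r_\ell}] \in \mathbb{R}^{n\times r_\ell}$ and the core coefficient tensor $\beta \in \mathbb{R}^{r_1\times\cdots\times r_d}$. Here and thereafter $\times_\ell$ denotes the contracted tensor-matrix product in dimension ℓ, and $\mathbb{R}^{n^{\otimes d}}$ denotes the Euclidean vector space of $n_1 \times \cdots \times n_d$ tensors with equal mode sizes $n_\ell = n$, ℓ = 1, …, d.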

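Likewise, $\mathcal{C}_R$ denotes the class of rank-R canonical tensors. For a given $A \in \mathcal{C}_R$ in the rank-R canonical format,

$$A = \sum_{\nu=1}^{R} \xi_\nu\, u^{(1)}_\nu \otimes \cdots \otimes u^{(d)}_\nu, \qquad \xi_\nu \in \mathbb{R}, \tag{1}$$

with normalized canonical vectors, i.e., $\|u^{(\ell)}_\nu\| = 1$ for ℓ = 1, …, d, ν = 1, …, R.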

The standard algorithm for the Tucker tensor decomposition [7] is based on the HOSVD applied to full tensors of size $n^d$, which exhibits $O(n^{d+1})$ computational complexity. The question is how to simplify the HOSVD Tucker approximation for a canonical input tensor of the form (1) without using the full-format representation of A, in the situation where both the CP rank R and the mode size n of the input may be large.

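First, let us use the equivalent (nonorthogonal) rank-$\mathbf r = (R, \dots, R)$ Tucker representation of the tensor (1),

$$A = \xi \times_1 U^{(1)} \times_2 U^{(2)} \cdots \times_d U^{(d)}, \qquad U^{(\ell)} = [u^{(\ell)}_1, \dots, u^{(\ell)}_R] \in \mathbb{R}^{n\times R}, \tag{2}$$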

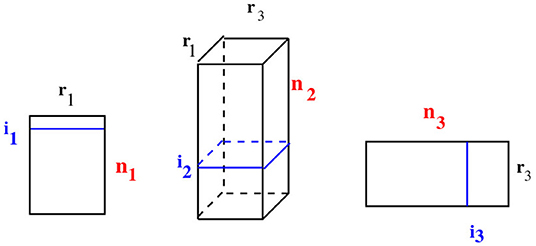

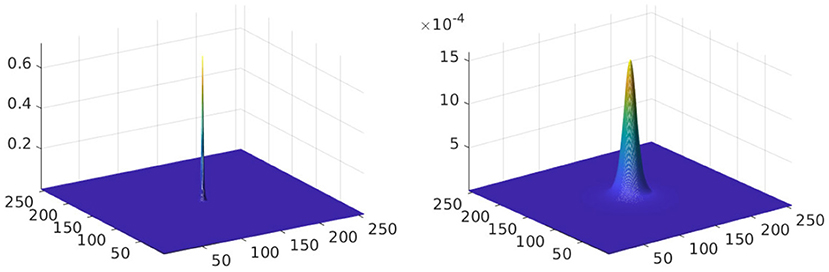

via contraction of the diagonal tensor $\xi = \operatorname{diag}\{\xi_1, \dots, \xi_R\} \in \mathbb{R}^{R^{\otimes d}}$ with the ℓ-mode side matrices $U^{(\ell)}$ (see Figure 1). By definition, a tensor is called diagonal if all its entries are zero except the diagonal elements, $\xi(i, \dots, i) = \xi_i$, i = 1, …, R. Then the problem of canonical-to-Tucker approximation can be solved by the reduced HOSVD (RHOSVD) introduced in [46]. The basic idea of the reduced HOSVD is that, for large (function-related) tensors given in the canonical format, the HOSVD requires neither the construction of the tensor in full format nor the SVD-based computation of its matrix unfoldings. Instead, it suffices to compute the SVD of the directional matrices $U^{(\ell)}$ in (2), composed only of the canonical vectors in each dimension separately, as shown in Figure 1. This provides the initial guess for the orthogonal Tucker basis in the given dimension. For practical applicability, the results of approximation theory on the low-rank approximation of multi-variate functions, exhibiting exponential error decay in the Tucker rank, are of principal significance [51].

Figure 1. Illustration to the contracted product representation Equation (2) of the rank-R canonical tensor. The first factor corresponds to the diagonal coefficient tensor ξ.

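In the following, we suppose that n ≤ R and denote the SVD of the side matrix $U^{(\ell)}$ by

$$U^{(\ell)} = Z^{(\ell)} D_\ell V^{(\ell)T}, \qquad D_\ell = \operatorname{diag}\{\sigma_{\ell,1}, \dots, \sigma_{\ell,n}\}, \tag{3}$$

with the orthogonal matrices $Z^{(\ell)} = [z^{(\ell)}_1, \dots, z^{(\ell)}_n] \in \mathbb{R}^{n\times n}$ and $V^{(\ell)} = [v^{(\ell)}_1, \dots, v^{(\ell)}_n] \in \mathbb{R}^{R\times n}$, ℓ = 1, …, d. For the vector entries we use the notation $u^{(\ell)}_\nu = \{u^{(\ell)}_\nu(k)\}_{k=1}^{n}$ (ν = 1, …, R).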

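To fix the idea, we introduce the vector of rank parameters, $\mathbf r = (r_1, \dots, r_d)$, and let

$$U^{(\ell)} \approx W^{(\ell)} := Z^{(\ell)}_0 D_{\ell,0} V^{(\ell)T}_0 \tag{4}$$

be the rank-$r_\ell$ truncated SVD of the side matrix $U^{(\ell)}$ (ℓ = 1, …, d). Here, the matrix $D_{\ell,0} = \operatorname{diag}\{\sigma_{\ell,1}, \sigma_{\ell,2}, \dots, \sigma_{\ell,r_\ell}\}$ is the submatrix of $D_\ell$ in (3), and

$$Z^{(\ell)}_0 = [z^{(\ell)}_1, \dots, z^{(\ell)}_{r_\ell}] \in \mathbb{R}^{n\times r_\ell}, \qquad V^{(\ell)}_0 = [v^{(\ell)}_1, \dots, v^{(\ell)}_{r_\ell}] \in \mathbb{R}^{R\times r_\ell}$$

represent the respective dominating $n \times r_\ell$ and $R \times r_\ell$ submatrices of the left and right factors in the complete SVD (3).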

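Definition 2.1. (Reduced HOSVD, [46]). Given the canonical tensor $A \in \mathcal{C}_R$, the truncation rank parameter $\mathbf r$ ($r_\ell \le R$), and the rank-$r_\ell$ truncated SVD of $U^{(\ell)}$, see (4), the RHOSVD approximation of A is defined by the rank-$\mathbf r$ orthogonal Tucker tensor

$$A^0_{(\mathbf r)} = \xi \times_1 \big[Z^{(1)}_0 Z^{(1)T}_0 U^{(1)}\big] \times_2 \cdots \times_d \big[Z^{(d)}_0 Z^{(d)T}_0 U^{(d)}\big] \in \mathcal{T}_{\mathbf r}, \tag{5}$$

obtained by the projection of the canonical side matrices $U^{(\ell)}$ onto the left orthogonal singular matrices $Z^{(\ell)}_0$ defined in (4).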
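A minimal NumPy sketch of Definition 2.1 for d = 3 follows. The function name `rhosvd` and the test data (normalized Gaussians with random widths, sampled on a uniform grid with n < R) are assumptions for illustration. Only the n × R side matrices are decomposed, at O(nR min{n, R}) cost per mode; the full n³ tensor is assembled solely to measure the error on a small grid.

```python
import numpy as np

def rhosvd(factors, ranks):
    """RHOSVD of a canonical tensor from its side matrices U^(l) (n x R each).

    Returns the orthogonal Tucker factors Z0^(l) (n x r_l) and the side matrices
    Z0^(l)^T U^(l) (r_l x R) of the core, which is kept in canonical form.
    No n^d tensor is ever built: one thin SVD per mode suffices.
    """
    Z0s, core_factors = [], []
    for U, r in zip(factors, ranks):
        Z, _, _ = np.linalg.svd(U, full_matrices=False)  # U = Z D V^T, Eq. (3)
        Z0 = Z[:, :r]                                     # dominant left singular vectors, Eq. (4)
        Z0s.append(Z0)
        core_factors.append(Z0.T @ U)
    return Z0s, core_factors

def cp_full(factors, weights):
    """Full 3D tensor from canonical data (only for checking on small grids)."""
    return np.einsum("ar,br,cr,r->abc", *factors, weights)

rng = np.random.default_rng(1)
n, R = 40, 60
x = np.linspace(0.0, 1.0, n)
factors = []
for _ in range(3):
    # skeleton vectors: Gaussians with random widths, normalized to unit length
    U = np.exp(-rng.uniform(5.0, 50.0, R)[None, :] * (x[:, None] - 0.5) ** 2)
    factors.append(U / np.linalg.norm(U, axis=0))
xi = rng.uniform(0.1, 1.0, R)

Z0s, core_factors = rhosvd(factors, ranks=(12, 12, 12))
A = cp_full(factors, xi)
A0 = cp_full([Z0 @ C for Z0, C in zip(Z0s, core_factors)], xi)  # Eq. (5)
rel_err = np.linalg.norm(A - A0) / np.linalg.norm(A)
print(rel_err)
```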

Notice that the general error bound for the RHOSVD approximation is given by Theorem 2.3 (see also the discussion afterwards), while Corollary 2.4 provides conditions which guarantee the stability of the RHOSVD.

The sub-optimal Tucker approximand (5) is simple to compute, and it provides an accurate approximation to the initial canonical tensor even with rather small Tucker ranks. Moreover, it provides a good initial guess for computing the best rank-$\mathbf r$ Tucker approximation by ALS iteration. In our numerical practice, usually only one or two ALS iterations are required for convergence. For example, in the case d = 3, one step of the canonical-to-Tucker ALS algorithm reduces algorithmically to the following operations. Substituting the orthogonal matrices $Z^{(1)}_0$ and $Z^{(3)}_0$ from (5) into (2), we perform the initial step of the first ALS iteration,

$$A \times_1 Z^{(1)T}_0 \times_3 Z^{(3)T}_0 = A_2 \in \mathbb{R}^{r_1\times n\times r_3},$$

where $A_2$ is given by the contraction

$$A_2 = \xi \times_1 \big[Z^{(1)T}_0 U^{(1)}\big] \times_2 U^{(2)} \times_3 \big[Z^{(3)T}_0 U^{(3)}\big],$$

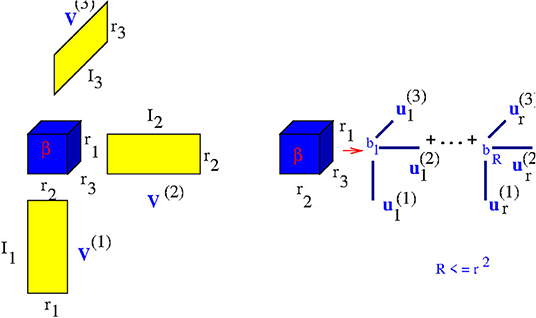

as illustrated in Figure 2. Then we optimize the orthogonal subspace in the second variable by computing the best rank-$r_2$ approximation to the $(r_1 r_3) \times n$ matrix unfolding of the tensor $A_2$. A similar contracted product representation can be used for d > 3, as well as for the construction of the TT representation from canonical input.
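The ALS step just described can be sketched for d = 3 as follows (a hedged illustration; `als_update_mode2` and `tucker_error` are hypothetical helpers, and the test data are random Gaussians). The partially projected tensor $A_2$ of size $r_1 \times n \times r_3$ is contracted directly from the canonical factors; the update replaces the second Tucker factor by the dominant left singular vectors of its mode-2 unfolding. With the other two factors fixed, this choice maximizes the norm of the projected tensor, so it cannot increase the Tucker projection error.

```python
import numpy as np

def als_update_mode2(factors, weights, Z1, Z3, r2):
    """One ALS update of the mode-2 Tucker factor for a 3D canonical tensor."""
    U1, U2, U3 = factors
    # A2 = xi x_1 (Z1^T U1) x_2 U2 x_3 (Z3^T U3), an r1 x n x r3 tensor
    A2 = np.einsum("ar,br,cr,r->abc", Z1.T @ U1, U2, Z3.T @ U3, weights)
    unfolding = A2.transpose(1, 0, 2).reshape(U2.shape[0], -1)  # n x (r1 r3)
    Z, _, _ = np.linalg.svd(unfolding, full_matrices=False)
    return Z[:, :r2]

def tucker_error(factors, weights, Zs):
    """||A - A x_1 P1 x_2 P2 x_3 P3|| with P_l = Z_l Z_l^T (full tensors, small n only)."""
    A = np.einsum("ar,br,cr,r->abc", *factors, weights)
    proj = [Z @ (Z.T @ U) for Z, U in zip(Zs, factors)]
    return np.linalg.norm(A - np.einsum("ar,br,cr,r->abc", *proj, weights))

rng = np.random.default_rng(2)
n, R, r = 30, 50, 6
x = np.linspace(-1.0, 1.0, n)
factors = []
for _ in range(3):
    centers = rng.uniform(-0.5, 0.5, R)
    U = np.exp(-rng.uniform(1.0, 20.0, R)[None, :] * (x[:, None] - centers[None, :]) ** 2)
    factors.append(U / np.linalg.norm(U, axis=0))
xi = rng.uniform(0.1, 1.0, R)

Zs = [np.linalg.svd(U, full_matrices=False)[0][:, :r] for U in factors]  # RHOSVD guess
Z2_new = als_update_mode2(factors, xi, Zs[0], Zs[2], r)
err_rhosvd = tucker_error(factors, xi, Zs)
err_als = tucker_error(factors, xi, [Zs[0], Z2_new, Zs[2]])
print(err_rhosvd, err_als)  # the ALS-updated error is not larger
```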

Here, we notice that the core tensor in the RHOSVD decomposition can be represented in the CP data-sparse format.

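Proposition 2.2. The core tensor

$$\beta_0 = \xi \times_1 \big[Z^{(1)T}_0 U^{(1)}\big] \times_2 \cdots \times_d \big[Z^{(d)T}_0 U^{(d)}\big] \in \mathbb{R}^{r_1\times\cdots\times r_d}$$

in the orthogonal Tucker representation (5), $A^0_{(\mathbf r)} = \beta_0 \times_1 Z^{(1)}_0 \times_2 \cdots \times_d Z^{(d)}_0$, can be recognized as a rank-R canonical tensor of size $r_1 \times \cdots \times r_d$ with storage requirement $R\,(r_1 + \cdots + r_d)$, which can be calculated entry-wise in $O(R\, r_1 \cdots r_d)$ operations.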

Indeed, introducing the matrices $U^{(\ell)}_0 = Z^{(\ell)T}_0 U^{(\ell)} \in \mathbb{R}^{r_\ell\times R}$, ℓ = 1, …, d, we conclude that the canonical core tensor $\beta_0$ is determined by the ℓ-mode side matrices $U^{(\ell)}_0$. In other words, the tensor $A^0_{(\mathbf r)}$ is represented in the mixed Tucker-canonical format, which gets rid of the "curse of dimensionality" (see also Section 2.2 below).

The accuracy of the RHOSVD approximation can be controlled by a given ε-threshold in the truncated SVD of the side matrices $U^{(\ell)}$. The following theorem provides an absolute error bound for the RHOSVD approximation.

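Theorem 2.3. (RHOSVD error bound, [46]). For $A \in \mathcal{C}_R$ given in (1), let $\sigma_{\ell,1} \ge \sigma_{\ell,2} \ge \dots \ge \sigma_{\ell,\min(n,R)}$ be the singular values of the ℓ-mode side matrices $U^{(\ell)} \in \mathbb{R}^{n\times R}$ (ℓ = 1, …, d) with normalized skeleton vectors. Then the error of the RHOSVD approximation $A^0_{(\mathbf r)}$ is bounded by

$$\|A - A^0_{(\mathbf r)}\| \le \|\xi\| \sum_{\ell=1}^{d} \Big(\sum_{k=r_\ell+1}^{\min(n,R)} \sigma_{\ell,k}^2\Big)^{1/2}. \tag{7}$$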

The complete proof can be found in Section 8 (see Appendix).

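The accuracy of the RHOSVD can be controlled in terms of the ε-criterion. To that end, given ε > 0, choose the Tucker ranks such that $\big(\sum_{k=r_\ell+1}^{\min(n,R)} \sigma_{\ell,k}^2\big)^{1/2} \le \varepsilon$ for ℓ = 1, …, d; then Theorem 2.3 provides the error bound $\|A - A^0_{(\mathbf r)}\| \le d\,\varepsilon\,\|\xi\|$, adapted to the ε-threshold.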
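The ε-based rank choice and the bound of Theorem 2.3 can be checked numerically with the following sketch. Assumptions: d = 3, normalized Gaussian skeleton vectors with random centres and widths, signed random weights, and a hypothetical helper `choose_rank`. For each mode it selects the smallest $r_\ell$ whose singular value tail does not exceed ε and compares the actual RHOSVD error with the right-hand side of (7), which by construction is at most $d\varepsilon\|\xi\|$.

```python
import numpy as np

def choose_rank(sigma, eps):
    """Smallest r with (sum_{k > r} sigma_k^2)^(1/2) <= eps; sigma sorted decreasingly."""
    tails = np.sqrt(np.cumsum(sigma[::-1] ** 2))[::-1]   # tails[k] = ||sigma[k:]||
    admissible = np.nonzero(tails <= eps)[0]
    return int(admissible[0]) if admissible.size else len(sigma)

rng = np.random.default_rng(3)
n, R, eps = 40, 80, 1e-6
x = np.linspace(-1.0, 1.0, n)
factors = []
for _ in range(3):
    centers = rng.uniform(-0.3, 0.3, R)
    U = np.exp(-rng.uniform(2.0, 30.0, R)[None, :] * (x[:, None] - centers[None, :]) ** 2)
    factors.append(U / np.linalg.norm(U, axis=0))   # normalized skeleton vectors
xi = rng.standard_normal(R)                          # signed weights are allowed

ranks, projected, tail_norms = [], [], []
for U in factors:
    Z, s, _ = np.linalg.svd(U, full_matrices=False)
    r = choose_rank(s, eps)
    ranks.append(r)
    projected.append(Z[:, :r] @ (Z[:, :r].T @ U))    # Z0 Z0^T U, as in Eq. (5)
    tail_norms.append(np.sqrt(np.sum(s[r:] ** 2)))

A = np.einsum("ar,br,cr,r->abc", *factors, xi)
A0 = np.einsum("ar,br,cr,r->abc", *projected, xi)
err = np.linalg.norm(A - A0)
bound = np.linalg.norm(xi) * sum(tail_norms)         # right-hand side of Eq. (7)
print(ranks, err, bound)
```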

The error estimate in Theorem 2.3 differs from the case of the complete HOSVD by the extra factor ||ξ||, which is the price paid for the lack of orthogonality in the canonical input tensor. Hence, Theorem 2.3 does not, in general, provide stable control of the relative error, since for general canonical tensors there is no uniform upper bound on the constant C in the estimate

$$\|\xi\| \le C\, \|A\|. \tag{8}$$

The difficulty is that no such uniform constant exists for general non-orthogonal canonical decompositions.

The stable RHOSVD approximation can be proven in the case of so-called partially orthogonal or monotone decompositions. By a partially orthogonal decomposition we mean that for each pair of indices ν ≠ μ in (1) there is at least one ℓ ∈ {1, …, d} such that $\langle u^{(\ell)}_\nu, u^{(\ell)}_\mu \rangle = 0$. For monotone decompositions we assume that all coefficients and skeleton vectors in (1) have non-negative entries.

Corollary 2.4. (Stability of RHOSVD) Assume that the conditions of Theorem 2.3 are satisfied. (A) Suppose that at least one of the side matrices $U^{(\ell)}$, ℓ = 1, …, d, in (2) is orthogonal or that the decomposition (1) is partially orthogonal. Then the RHOSVD error can be bounded by

$$\|A - A^0_{(\mathbf r)}\| \le \|A\| \sum_{\ell=1}^{d} \Big(\sum_{k=r_\ell+1}^{\min(n,R)} \sigma_{\ell,k}^2\Big)^{1/2}. \tag{9}$$

(B) Let the decomposition (1) be monotone. Then (9) holds.

Proof. (A) The partial orthogonality assumption combined with the normalization constraints for the canonical skeleton vectors implies

$$\|A\|^2 = \sum_{\nu=1}^{R} \xi_\nu^2 = \|\xi\|^2.$$

The above relation also holds if the side matrix $U^{(\ell)}$ is orthogonal for some fixed ℓ. Then the result follows from (7).

(B) In the case of a monotone decomposition, the pairwise scalar products of all summands in (1) are non-negative, while the norm of each ν-th term equals $\xi_\nu$. Then the estimate

$$\Big\|\sum_{\nu=1}^{R} a_\nu\Big\|^2 \ge \sum_{\nu=1}^{R} \|a_\nu\|^2$$

holds for the summands $a_\nu = \xi_\nu\, u^{(1)}_\nu \otimes \cdots \otimes u^{(d)}_\nu$, ν = 1, …, R, which have non-negative entries, thus implying $\|\xi\|^2 \le \|A\|^2$. Now the result follows.

Clearly, the orthogonality assumption may lead to a slightly higher separation rank; however, such a constructive decomposition stabilizes the RHOSVD approximation of canonical tensors (i.e., it allows stable control of the relative error). Monotone canonical sums typically arise in the sinc-based canonical approximation of radially symmetric Green's kernels by sums of Gaussians. On the other hand, in long-term computational practice, numerical instability of the RHOSVD approximation has not been observed for physically relevant data.
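The small experiment below (illustrative only; all data are random) contrasts the two situations discussed above. For a monotone decomposition the inequality ||ξ|| ≤ ||A|| always holds, whereas two nearly cancelling rank-1 terms with weights ξ = (1, −1) make ||ξ|| / ||A|| large, so the constant C in (8) admits no uniform bound.

```python
import numpy as np

rng = np.random.default_rng(4)
n, R = 20, 15

# monotone case: non-negative weights and non-negative normalized skeleton vectors
factors = [rng.random((n, R)) for _ in range(3)]
factors = [U / np.linalg.norm(U, axis=0) for U in factors]
xi = rng.random(R)
A = np.einsum("ar,br,cr,r->abc", *factors, xi)
print(np.linalg.norm(xi) <= np.linalg.norm(A))   # True for monotone sums

# signed case: xi = (1, -1) and two almost identical rank-1 terms
u = np.ones(n) / np.sqrt(n)
w = u + 1e-3 * rng.standard_normal(n)
w /= np.linalg.norm(w)
B = np.einsum("a,b,c->abc", u, u, u) - np.einsum("a,b,c->abc", u, u, w)
ratio = np.sqrt(2.0) / np.linalg.norm(B)          # ||xi|| / ||B||
print(ratio)                                      # large: C in (8) can blow up
```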

2.2. Mixed Tucker Tensor Format and Tucker-to-CP Transform

In the procedure for canonical tensor rank reduction, the goal is to obtain a result in the canonical tensor format with a smaller rank. By converting the core tensor to the CP format, one can use either the mixed two-level Tucker data format [12, 27] or the canonical CP format. Figure 3 illustrates the computational scheme of the two-level Tucker approximation.

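We denote by $r_{\hat\ell}$ the single-hole product of dimension-modes,

$$r_{\hat\ell} = r_1 \cdots r_{\ell-1}\, r_{\ell+1} \cdots r_d. \tag{10}$$

The same definition applies to the quantity $n_{\hat\ell}$.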

The next lemma describes the approximation of a Tucker tensor by using the canonical representation [12, 27].

Lemma 2.5. (Mixed Tucker-to-canonical approximation, [27]).

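(A) Let the target tensor A have the form $A = \beta \times_1 V^{(1)} \times_2 \cdots \times_d V^{(d)} \in \mathcal{T}_{\mathbf r}$ with the orthogonal side matrices $V^{(\ell)} = [v^{(\ell)}_1, \dots, v^{(\ell)}_{r_\ell}] \in \mathbb{R}^{n\times r_\ell}$ and $\beta \in \mathbb{R}^{r_1\times\cdots\times r_d}$. Then, for a given $R \le \min_{1\le\ell\le d} r_{\hat\ell}$,

$$\min_{Z \in \mathcal{C}_{R,\mathbf n}} \|A - Z\| = \min_{\mu \in \mathcal{C}_{R,\mathbf r}} \|\beta - \mu\|,$$

where $\mathcal{C}_{R,\mathbf n}$ and $\mathcal{C}_{R,\mathbf r}$ denote the rank-R canonical tensors of size $n \times \cdots \times n$ and $r_1 \times \cdots \times r_d$, respectively.

(B) Assume that there exists a best rank-R approximation $A_{(R)} \in \mathcal{C}_{R,\mathbf n}$ of A; then there is a best rank-R approximation $\beta_{(R)} \in \mathcal{C}_{R,\mathbf r}$ of β such that

$$A_{(R)} = \beta_{(R)} \times_1 V^{(1)} \times_2 \cdots \times_d V^{(d)}.$$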

The complete proof can be found in Section 8 (see Appendix). Notice that the condition $R \le \min_{1\le\ell\le d} r_{\hat\ell}$ simply means that the canonical rank does not exceed the maximal CP rank of the Tucker core tensor.
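Part (A) of Lemma 2.5 rests on the orthogonal invariance of the Euclidean norm: any rank-R canonical tensor μ in the small core space, lifted with the orthogonal side matrices, is a rank-R tensor in the large space at exactly the same distance from A. The sketch below (random data, d = 3, variable names chosen for illustration) checks this identity numerically.

```python
import numpy as np

rng = np.random.default_rng(8)
n, r, R = 30, 5, 3
# Tucker tensor A = beta x_1 V1 x_2 V2 x_3 V3 with orthonormal side matrices
V = [np.linalg.qr(rng.standard_normal((n, r)))[0] for _ in range(3)]
beta = rng.standard_normal((r, r, r))
A = np.einsum("abc,ia,jb,kc->ijk", beta, *V)

# an arbitrary rank-R canonical tensor mu in the small r x r x r space
M = [rng.standard_normal((r, R)) for _ in range(3)]
mu = np.einsum("ar,br,cr->abc", *M)
# lifting mu with V_l gives a rank-R canonical tensor in R^{n x n x n}
lifted = np.einsum("ar,br,cr->abc", *[Vl @ Ml for Vl, Ml in zip(V, M)])

dist_full = np.linalg.norm(A - lifted)
dist_core = np.linalg.norm(beta - mu)
print(dist_full, dist_core)  # equal up to round-off
```

Hence minimizing over canonical tensors of the small core is equivalent to minimizing over lifted canonical tensors of the full size, which is why the Tucker-to-canonical step only needs to work with β.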

The combination of Theorem 2.3 and Lemma 2.5 paves the way to the rank optimization of canonical tensors with large mode size arising, for example, in grid-based numerical methods for multi-dimensional PDEs with non-regular (singular) solutions. In such applications the univariate grid size (i.e., the mode size) may be about n = 10⁴ or even larger.

Notice that the Tucker (for moderate d) and canonical formats allow one to perform basic multi-linear algebra using one-dimensional operations, thus avoiding the exponential scaling in d. Rank-truncated transforms between different formats can be applied in multi-linear algebra on mixed tensor representations as well, see Lemma 2.5. The particular application to tensor convolution in many dimensions was discussed, for example, in [1, 2].
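As an example of such one-dimensional operations, the scalar product of two canonical tensors reduces to d Gram matrices of their side matrices, at $O(d\,n\,R_a R_b)$ cost instead of $O(n^d)$. The sketch below assumes canonical data stored as a list of n × R side matrices plus a weight vector (a hypothetical layout; `cp_inner` is an illustrative name). It works for any d and is checked against the full-format product for d = 3.

```python
import numpy as np

def cp_inner(fa, wa, fb, wb):
    """<A, B> for canonical tensors given by side matrices and weights (any d)."""
    G = np.outer(wa, wb)                 # Ra x Rb matrix of weight products
    for Ua, Ub in zip(fa, fb):
        G = G * (Ua.T @ Ub)              # Hadamard product with the 1D Gram matrix
    return float(G.sum())

rng = np.random.default_rng(6)
n = 25
fa = [rng.standard_normal((n, 4)) for _ in range(3)]
fb = [rng.standard_normal((n, 7)) for _ in range(3)]
wa, wb = rng.standard_normal(4), rng.standard_normal(7)
A = np.einsum("ar,br,cr,r->abc", *fa, wa)
B = np.einsum("ar,br,cr,r->abc", *fb, wb)
print(cp_inner(fa, wa, fb, wb), np.sum(A * B))   # the two values agree
norm_A = np.sqrt(cp_inner(fa, wa, fa, wa))        # norms follow the same way
```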

We summarize that the direct methods of tensor approximation can be classified by:

(1) Analytic Tucker approximation to some classes of function-related dth order tensors (d ≥ 2), say, by multi-variate polynomial interpolation [1].

(2) Sinc quadrature based approximation methods in the canonical format applied to a class of analytic function related tensors [11].

(3) Truncated HOSVD and RHOSVD, for quasi-optimal Tucker approximation of the full-format, respectively, canonical tensors [46].

Direct analytic approximation methods by sinc quadrature/interpolation are of principal importance. Basic examples are given by the tensor representation of Green's kernels, the elliptic operator inverse and analytic matrix-valued functions. In all cases, the algebraic methods for rank reduction by the ALS-type iterative Tucker/canonical approximation can be applied.

Further improvement and enhancement of algebraic tensor approximation methods can be based on the combination of advanced nonlinear iteration, multigrid tensor methods, greedy algorithms, hybrid tensor representations, and the use of new problem adapted tensor formats.

2.3. Tucker-to-Canonical Transform

In the rank reduction scheme for the canonical rank-R tensors, we use successively the canonical-to-Tucker (C2T) transform and then the Tucker-to-canonical (T2C) tensor approximation.

First, we notice that the canonical rank of a tensor $A \in V_{\mathbf n}$ has the upper bound (see [27, 46])

$$\operatorname{rank}(A) \le \min_{1\le\ell\le d} n_{\hat\ell}, \tag{13}$$

where $n_{\hat\ell}$ is given by (10). The rank bound (13) applied to the Tucker core tensor of size r × r × r indicates that the ultimate canonical rank of a large-size tensor in $V_{\mathbf n}$ has the upper bound r². Notice that for function-related tensors the Tucker rank scales logarithmically in both the approximation accuracy and the discretization grid size (see the proof for some classes of functions in [51]).

The following remark shows that the maximal canonical rank of the Tucker core of a 3rd order tensor can easily be reduced to a value below r² by an SVD-based procedure applied to the matrix slices of the Tucker core tensor β. Though not practically attractive for arbitrary high-order tensors, the simple algorithm described in Remark 2.6 below has proved useful for the treatment of small 3rd order Tucker core tensors within the rank reduction algorithms described in the previous sections.

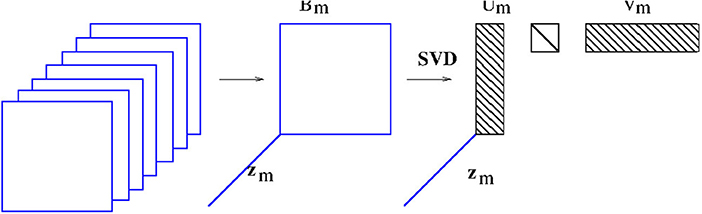

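Remark 2.6. Let d = 3 for the sake of clarity [27, 46]. There is a simple SVD-based procedure to reduce the canonical rank of the core tensor β within accuracy ε > 0. Denote by $B_m \in \mathbb{R}^{r\times r}$, m = 1, …, r, the two-dimensional slices of β in a fixed mode and represent

$$\beta = \sum_{m=1}^{r} B_m \otimes z_m, \qquad z_m \in \mathbb{R}^r,$$

where $z_m(m) = 1$ and $z_m(j) = 0$ for j = 1, …, r, j ≠ m (there are exactly d possible decompositions). Let $p_m$ be the minimal integer such that the singular values $\sigma_{m,k}$ of $B_m$ satisfy $\sigma_{m,k} \le \varepsilon$ for k = p_m + 1, …, r (if $\sigma_{m,r} > \varepsilon$, then set $p_m = r$). Then, denoting by

$$B^{(p_m)}_m = \sum_{k=1}^{p_m} \sigma_{m,k}\, y_{m,k}\, x^T_{m,k}$$

the corresponding rank-$p_m$ approximation to $B_m$ (obtained by truncating the SVD $B_m = \sum_{k=1}^{r} \sigma_{m,k}\, y_{m,k}\, x^T_{m,k}$), we arrive at the rank-R canonical approximation to β,

$$\beta_{(R)} := \sum_{m=1}^{r} B^{(p_m)}_m \otimes z_m, \tag{15}$$

providing the error estimate

$$\|\beta - \beta_{(R)}\| = \Big(\sum_{m=1}^{r} \sum_{k=p_m+1}^{r} \sigma_{m,k}^2\Big)^{1/2} \le \varepsilon \Big(\sum_{m=1}^{r} (r - p_m)\Big)^{1/2} \le \varepsilon\, r.$$

Representation (15) is a sum of rank-$p_m$ terms, so that the total rank is bounded by $R \le p_1 + \cdots + p_r \le r^2$. The approach can be extended to arbitrary d ≥ 3 with the bound $R \le r^{d-1}$.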

Figure 4 illustrates the canonical decomposition of the core tensor by using the SVD of the slices $B_m$ of the core tensor β, yielding the matrices of left and right singular vectors and a diagonal matrix of small size $p_m \times p_m$ containing the truncated singular values. It also shows the vector $z_m = [0, \dots, 0, 1, 0, \dots, 0]$, whose entries are all equal to 0 except for 1 at the m-th position.
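Remark 2.6 translates into a few lines of NumPy. The sketch below fixes the third mode for slicing (any of the three modes would do), uses the absolute threshold ε on the slice singular values, and is tested on a hypothetical core of CP rank 4 plus small noise; the function name `core_to_cp_by_slices` is an assumption.

```python
import numpy as np

def core_to_cp_by_slices(beta, eps):
    """Canonical approximation of an r1 x r2 x r3 Tucker core via SVDs of its mode-3 slices."""
    r3 = beta.shape[2]
    f1, f2, f3 = [], [], []
    for m in range(r3):
        Y, s, Xt = np.linalg.svd(beta[:, :, m], full_matrices=False)
        p = int(np.sum(s > eps))                 # p_m: singular values kept in slice m
        z = np.zeros((r3, p))
        z[m, :] = 1.0                            # p copies of the unit vector z_m
        f1.append(Y[:, :p] * s[:p])              # singular values absorbed into mode 1
        f2.append(Xt[:p, :].T)
        f3.append(z)
    return [np.hstack(f1), np.hstack(f2), np.hstack(f3)]

rng = np.random.default_rng(5)
r = 8
G = [rng.standard_normal((r, 4)) for _ in range(3)]
beta = np.einsum("ar,br,cr->abc", *G) + 1e-8 * rng.standard_normal((r, r, r))
eps = 1e-6
F = core_to_cp_by_slices(beta, eps)
approx = np.einsum("ar,br,cr->abc", *F)
print(F[0].shape[1], np.linalg.norm(beta - approx))   # R = sum of p_m, small error
```

Each slice of this test core has numerical rank at most 4, so the resulting canonical rank stays well below the generic bound r² = 64.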

It is worth noting that the rank reduction for the rank-R core tensor of small size $r_1 \times \cdots \times r_d$ can also be performed by the cascading ALS algorithms in the CP format applied to the canonical input tensor, as was done in [50]. Moreover, a number of numerical examples presented in this paper and in the cited literature (for function-generated tensors) demonstrate a substantial reduction of the initial canonical rank R.

3. Calculation of 3D Integrals with the Newton Kernel

The first application of the RHOSVD was the calculation of the 3D grid-based Hartree potential operator in the Hartree-Fock equation,

$$V_H(x) = \int_{\mathbb{R}^3} \frac{\rho(y)}{\|x - y\|}\, dy, \qquad x \in \mathbb{R}^3,$$

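where the electron density,

$$\rho(y) = 2 \sum_{i=1}^{N_{orb}} \big(\varphi_i(y)\big)^2, \qquad \varphi_i(y) = \sum_{k=1}^{N_b} c_{ki}\, g_k(y), \tag{17}$$

is represented in terms of the molecular orbitals $\varphi_i$, which are expanded in a Gaussian-type orbital (GTO) basis $\{g_k\}_{k=1}^{N_b}$. The Hartree potential describes the repulsion energy of the electrons in a molecule. The intermediate goal here is the calculation of the so-called Coulomb matrix,

$$J_{km} = \int_{\mathbb{R}^3} g_k(x)\, g_m(x)\, V_H(x)\, dx, \qquad k, m = 1, \dots, N_b,$$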

which represents the Hartree potential in the given GTO basis.

In fact, the calculation of this 3D convolution operator with the Newton kernel requires high accuracy, and it has to be repeated many times in the course of the iterative solution of the Hartree-Fock nonlinear eigenvalue problem. The presence of nuclear cusps in the electron density poses an additional challenge for the computation of the Hartree potential operator. Traditionally, these calculations are based on an involved analytical evaluation of the corresponding integrals in a separable Gaussian basis set using the erf function. Tensor-structured calculation of multi-dimensional convolution integral operators with the Newton kernel was introduced in [27, 29, 46].

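The molecule is embedded in a computational box Ω = [−b, b]³ ⊂ ℝ³. An equidistant n × n × n tensor grid $\omega_{3,n} = \{x_{\mathbf i}\}$, $\mathbf i \in \mathcal{I} := \{1, \dots, n\}^3$, with mesh size h = 2b/(n + 1) is used. In the calculation of integral terms, the Gaussian basis functions $g_k(x) = g^{(1)}_k(x_1)\, g^{(2)}_k(x_2)\, g^{(3)}_k(x_3)$, x ∈ ℝ³, are approximated by sampling their values at the centers of the discretization intervals using one-dimensional piecewise constant basis functions in each variable $x_\ell$, ℓ = 1, 2, 3, yielding their rank-1 tensor representation,

$$\mathbf G_k = \mathbf g^{(1)}_k \otimes \mathbf g^{(2)}_k \otimes \mathbf g^{(3)}_k \in \mathbb{R}^{n\times n\times n}, \qquad k = 1, \dots, N_b. \tag{18}$$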

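Given the discrete tensor representation (18) of the basis functions, the electron density is approximated using 1D Hadamard products of rank-1 tensors as

$$\Theta = 2 \sum_{i=1}^{N_{orb}} \sum_{k,m=1}^{N_b} c_{ki}\, c_{mi}\, \big(\mathbf g^{(1)}_k \odot \mathbf g^{(1)}_m\big) \otimes \big(\mathbf g^{(2)}_k \odot \mathbf g^{(2)}_m\big) \otimes \big(\mathbf g^{(3)}_k \odot \mathbf g^{(3)}_m\big).$$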

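For the convolution operator, the representation of the Newton kernel by a canonical rank-$R_N$ tensor [1] is used (see Section 4.1 for details),

$$\mathbf P_N = \sum_{q=1}^{R_N} \mathbf p^{(1)}_q \otimes \mathbf p^{(2)}_q \otimes \mathbf p^{(3)}_q.$$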

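The initial rank of the electron density in the canonical tensor format Θ (cf. (17)) is large even for small molecules. Rank reduction by the RHOSVD-based C2T transform followed by the T2C transform reduces the rank, Θ ↦ Θ′, by several orders of magnitude, from ~10⁴ to ~10². Then the 3D tensor representation of the Hartree potential is calculated by the 3D tensor product convolution, which is a sum of tensor products of 1D convolutions,

$$\mathbf V_H = \Theta' * \mathbf P_N = \sum_{m=1}^{R'} \sum_{q=1}^{R_N} c_m \big(\mathbf u^{(1)}_m * \mathbf p^{(1)}_q\big) \otimes \big(\mathbf u^{(2)}_m * \mathbf p^{(2)}_q\big) \otimes \big(\mathbf u^{(3)}_m * \mathbf p^{(3)}_q\big), \qquad \Theta' = \sum_{m=1}^{R'} c_m\, \mathbf u^{(1)}_m \otimes \mathbf u^{(2)}_m \otimes \mathbf u^{(3)}_m.$$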

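The Coulomb matrix entries $J_{km}$ are obtained by 1D scalar products of $\mathbf V_H$ with the Galerkin basis consisting of rank-1 tensors,

$$J_{km} \approx \langle \mathbf G_k \odot \mathbf G_m, \mathbf V_H \rangle, \qquad k, m = 1, \dots, N_b.$$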

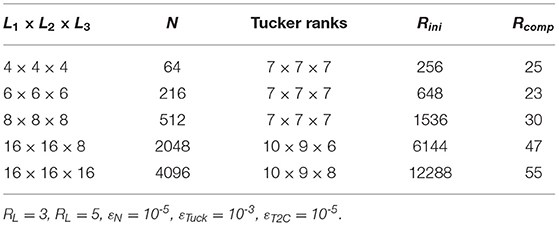

The cost of the 3D tensor product convolution is $O(n \log n)$ instead of $O(n^3 \log n)$ for the standard benchmark 3D convolution using the 3D FFT. Table 1 shows CPU times (sec) for the Matlab computation of $V_H$ for the H₂O molecule [46] on a SUN station using 8 Opteron Dual-Core/2600 processors (times for the 3D FFT for n ≥ 1024 are obtained by extrapolation). C2T denotes the time for the canonical-to-Tucker rank reduction.
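The separable structure behind the 3D tensor product convolution can be checked on a toy example: the full discrete convolution of two canonical tensors is again a canonical tensor whose side vectors are 1D convolutions of the input side vectors. The sketch below uses `np.convolve` (direct, O(n²) per pair, whereas O(n log n) requires FFT-based 1D convolution) and validates the result against a zero-padded 3D FFT. It illustrates the principle only and omits the mesh-size scaling needed to approximate the convolution integral; `cp_convolve` is a hypothetical name.

```python
import numpy as np

def cp_convolve(fa, wa, fb, wb):
    """Full discrete convolution of two 3D canonical tensors via 1D convolutions."""
    out = []
    for Ua, Ub in zip(fa, fb):
        cols = [np.convolve(Ua[:, i], Ub[:, j])          # length na + nb - 1
                for i in range(Ua.shape[1]) for j in range(Ub.shape[1])]
        out.append(np.stack(cols, axis=1))
    return out, np.outer(wa, wb).ravel()                 # rank Ra * Rb

def full_conv3d_fft(A, B):
    """Reference: zero-padded 3D FFT convolution of two full tensors."""
    shape = [a + b - 1 for a, b in zip(A.shape, B.shape)]
    FA = np.fft.fftn(A, s=shape, axes=(0, 1, 2))
    FB = np.fft.fftn(B, s=shape, axes=(0, 1, 2))
    return np.real(np.fft.ifftn(FA * FB, axes=(0, 1, 2)))

rng = np.random.default_rng(7)
fa = [rng.standard_normal((8, 2)) for _ in range(3)]
fb = [rng.standard_normal((8, 3)) for _ in range(3)]
wa, wb = rng.standard_normal(2), rng.standard_normal(3)
fc, wc = cp_convolve(fa, wa, fb, wb)
C = np.einsum("ar,br,cr,r->abc", *fc, wc)
A = np.einsum("ar,br,cr,r->abc", *fa, wa)
B = np.einsum("ar,br,cr,r->abc", *fb, wb)
print(np.max(np.abs(C - full_conv3d_fft(A, B))))       # agreement up to round-off
```

The number of 1D convolutions equals the product of the input ranks, which is why the density rank is reduced by the RHOSVD before convolving with the Newton kernel.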

Table 1. Times (sec) for the C2T transform and the 3D tensor product convolution vs. 3D FFT convolution.

The grid-based tensor calculation of multi-dimensional integrals in quantum chemistry provides the required high accuracy by using large grids, while the ranks are controlled by the prescribed ε in the rank truncation algorithms. The results of the tensor-based calculations have been compared with benchmark computations by the MOLPRO package, showing an accuracy of the order of 10⁻⁷ hartree in the resulting ground state energy (see [2, 27]).

4. RHOSVD in the Range-Separated Tensor Formats

The range-separated (RS) tensor formats have been introduced in [32] as the constructive tool for low-rank tensor representation (approximation) of function related data discretized on Cartesian grids in ℝd, which may have multiple singularities or cusps. Such highly non-regular data typically arise in computational quantum chemistry, in many-particle dynamics simulations and many-particle electrostatics calculations, in protein modeling and in data science. The key idea of the RS representation is the splitting of the short- and long-range parts in the functional data and further low-rank approximation of the rather regular long-range part in the classical tensor formats.

In this respect, the RHOSVD method becomes an essential ingredient of the rank reduction algorithms for the "long-range" input tensor, which usually inherits a large initial rank.

4.1. Low-Rank Approximation of Radial Functions

First, we recall the grid-based method for the low-rank canonical representation of spherically symmetric kernel functions p(||x||), $x \in \mathbb{R}^d$, d = 2, 3, …, by their projection onto a finite set of basis functions defined on a tensor grid. The approximation theory by sums of Gaussians for the class of analytic potentials p(||x||) was presented in [1, 11, 51, 52]. The particular numerical schemes for the rank-structured representation of the Newton and Slater kernels

$$p(\|x\|) = \frac{1}{\|x\|} \quad \text{and} \quad p(\|x\|) = e^{-\lambda\|x\|}, \quad \lambda > 0, \qquad x \in \mathbb{R}^3,$$

discretized on a fine 3D Cartesian grid in the form of a low-rank canonical tensor were described in [11, 51].

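In what follows, for ease of exposition, we confine ourselves to the case d = 3. In the computational domain Ω = [−b, b]³, let us introduce the uniform n × n × n rectangular Cartesian grid $\Omega_n$ with mesh size h = 2b/n (n even). Let $\{\psi_{\mathbf i}\}$ be a set of tensor-product piecewise constant basis functions, $\psi_{\mathbf i}(x) = \prod_{\ell=1}^{3} \psi^{(\ell)}_{i_\ell}(x_\ell)$, labeled by the 3-tuple index $\mathbf i = (i_1, i_2, i_3)$, $i_\ell \in I_\ell = \{1, \dots, n\}$, ℓ = 1, 2, 3. The generating kernel p(||x||) is discretized by its projection onto the basis set $\{\psi_{\mathbf i}\}$ in the form of a third order tensor of size n × n × n, defined entry-wise as

$$\mathbf P := [p_{\mathbf i}] \in \mathbb{R}^{n\times n\times n}, \qquad p_{\mathbf i} = \int_{\mathbb{R}^3} \psi_{\mathbf i}(x)\, p(\|x\|)\, dx. \tag{22}$$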

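The low-rank canonical decomposition of the 3rd order tensor P is based on exponentially fast convergent sinc-quadratures for approximating the Laplace-Gauss transform of the analytic function p(z), z ∈ ℂ, specified by a certain weight $\hat p(t) > 0$,

$$p(z) = \int_{\mathbb R_+} \hat p(t)\, e^{-t^2 z^2}\, dt \approx \sum_{k=-M}^{M} p_k\, e^{-t_k^2 z^2} \quad \text{for } |z| > 0, \tag{23}$$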

with a proper choice of the quadrature points $t_k$ and weights $p_k$. The sinc-quadrature-based approximation of the generating function by the short-term Gaussian sums in Equation (23) is applicable to the class of functions analytic in a certain strip |Im z| ≤ D of the complex plane that decay polynomially or exponentially on the real axis. We refer to the basic results in [11, 52, 53], where the exponential convergence of the sinc-approximation in the number of terms (i.e., the canonical rank) was analyzed for certain classes of analytic integrands.

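Now, for any fixed x = (x1, x2, x3) ∈ ℝ3 such that ||x|| > a > 0, we apply the sinc-quadrature approximation Equation (23) to obtain the separable expansion

$$p(\|x\|) = \int_{\mathbb R_+} \hat p(t)\, e^{-t^2\|x\|^2}\, dt \approx \sum_{k=-M}^{M} p_k\, e^{-t_k^2\|x\|^2} = \sum_{k=-M}^{M} p_k \prod_{\ell=1}^{3} e^{-t_k^2 x_\ell^2}, \tag{24}$$

providing an exponential convergence rate in M,

$$\Big|\, p(\|x\|) - \sum_{k=-M}^{M} p_k\, e^{-t_k^2\|x\|^2} \Big| \le \frac{C}{a}\, e^{-\beta\sqrt{M}}, \quad \text{with some } C, \beta > 0. \tag{25}$$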

In the case of the Newton kernel, we have p(z) = 1/z and $\hat p(t) = 2/\sqrt{\pi}$, so that the Laplace-Gauss transform representation reads

$$\frac{1}{z} = \frac{2}{\sqrt{\pi}} \int_{\mathbb R_+} e^{-t^2 z^2}\, dt, \quad z > 0,$$

which can be approximated by the sinc quadrature Equation (24) with a particular choice of the quadrature points $t_k$, providing the exponential convergence rate as in Equation (25) [11, 51].
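The sketch below shows one way to realize the Newton quadrature in a few lines. It is a minimal illustration under our own assumptions and is not the quadrature of [11, 51]. We substitute t = e^u and apply the trapezoidal (sinc) rule with a step `step` on the nodes u_k = k·step, k = −M, …, M. This gives $t_k = e^{k\cdot\mathrm{step}}$ and $p_k = (2/\sqrt{\pi})\,\mathrm{step}\,t_k$. The values step = 0.25 and M = 100 are illustrative choices, and the function names are hypothetical.

```python
import numpy as np

def newton_sinc(step=0.25, M=100):
    """Gaussian-sum quadrature for 1/z = (2/sqrt(pi)) * int_0^inf exp(-t^2 z^2) dt.

    The substitution t = exp(u) and the trapezoidal (sinc) rule on u_k = k*step,
    k = -M..M, give 1/z ~ sum_k p_k exp(-t_k^2 z^2) with R = 2M + 1 terms.
    """
    u = step * np.arange(-M, M + 1)
    t = np.exp(u)                            # quadrature points t_k
    p = 2.0 / np.sqrt(np.pi) * step * t      # weights p_k > 0 (dt = e^u du)
    return t, p

def gauss_sum(z, t, p):
    """Evaluate sum_k p_k exp(-t_k^2 z^2) for an array of z values."""
    z = np.asarray(z, dtype=float)[..., None]
    return np.sum(p * np.exp(-(t * z) ** 2), axis=-1)

t, p = newton_sinc()
z = np.linspace(1.0, 100.0, 1000)
rel_err = np.max(np.abs(gauss_sum(z, t, p) * z - 1.0))
print(f"R = {t.size} terms, max relative error on [1, 100]: {rel_err:.1e}")
```

Values of z closer to the singularity require larger nodes $t_k$, i.e., a larger M. This is where the lower bound a in Equation (25) enters.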

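In the case of the Yukawa potential, the Laplace-Gauss transform reads

$$\frac{e^{-\kappa z}}{z} = \frac{2}{\sqrt{\pi}} \int_{\mathbb R_+} e^{-\kappa^2/(4t^2)}\, e^{-t^2 z^2}\, dt, \quad z > 0,\ \kappa > 0.$$

The analysis of the sinc quadrature approximation error for this case can be found, in particular, in [1, 51], Section 2.4.7.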

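Combining (22) and (24), and taking into account the separability of the Gaussians, we arrive at the low-rank approximation to each entry of the tensor P = [pi],

$$p_{\mathbf i} \approx \sum_{k=-M}^{M} p_k \int_{\mathbb R^3} \psi_{\mathbf i}(x)\, e^{-t_k^2\|x\|^2}\, dx = \sum_{k=-M}^{M} p_k \prod_{\ell=1}^{3} \int_{\mathbb R} \psi^{(\ell)}_{i_\ell}(x_\ell)\, e^{-t_k^2 x_\ell^2}\, dx_\ell.$$

Define the vector (recall that $p_k > 0$)

$$\mathbf p^{(\ell)}_k = p_k^{1/3}\Big[b^{(\ell)}_{i_\ell}(t_k)\Big]_{i_\ell=1}^{n} \in \mathbb R^{n}, \qquad b^{(\ell)}_{i_\ell}(t_k) = \int_{\mathbb R} \psi^{(\ell)}_{i_\ell}(x_\ell)\, e^{-t_k^2 x_\ell^2}\, dx_\ell;$$

then the 3rd order tensor P can be approximated by the R-term (R = 2M + 1) canonical representation

$$\mathbf P \approx \mathbf P_R = \sum_{k=-M}^{M} \mathbf p^{(1)}_k \otimes \mathbf p^{(2)}_k \otimes \mathbf p^{(3)}_k \in \mathbb R^{n\times n\times n}. \tag{29}$$

Given a threshold ε > 0, in view of Equation (25), we can choose $M = O(\log^2\varepsilon)$ such that in the max-norm

$$\|\mathbf P - \mathbf P_R\|_\infty \le \varepsilon\, \|\mathbf P\|_\infty.$$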
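The sketch below builds the CP representation (29). To keep it short, it assumes collocation at the cell centres of Ωn instead of the Galerkin projection (22), so the vectors $\mathbf p^{(\ell)}_k$ simply sample $p_k^{1/3} e^{-t_k^2 x^2}$. It also reuses the illustrative sinc parameters (step = 0.25, M = 100), which are not taken from the text. Because the grid is symmetric, the three factor matrices coincide. The full tensor is formed only to check the error; the CP format itself needs just 3nR numbers.

```python
import numpy as np

def newton_cp_factor(b=5.0, n=32, step=0.25, M=100):
    """Factor matrix of a rank-(2M+1) CP approximation of 1/||x|| on [-b, b]^3.

    Collocation at cell centres (for even n the origin is not a grid point);
    column k is p_k^(1/3) * exp(-t_k^2 x_i^2) and is shared by all three modes.
    """
    x = -b + (np.arange(n) + 0.5) * (2.0 * b / n)
    t = np.exp(step * np.arange(-M, M + 1))
    p = 2.0 / np.sqrt(np.pi) * step * t
    U = np.cbrt(p)[None, :] * np.exp(-(x[:, None] * t[None, :]) ** 2)
    return x, U

x, U = newton_cp_factor()
P_R = np.einsum("ik,jk,lk->ijl", U, U, U)      # full n^3 tensor, for checking only
X1, X2, X3 = np.meshgrid(x, x, x, indexing="ij")
P = 1.0 / np.sqrt(X1**2 + X2**2 + X3**2)       # exact collocation tensor
rel_max_err = np.max(np.abs(P_R - P) / P)
print(f"CP rank {U.shape[1]}, n = {x.size}, max relative error {rel_max_err:.1e}")
```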

In the case of a continuous radial function p(||x||), say, the Slater potential, we use the collocation-type discretization at the grid points including the origin, x = 0, so that the univariate mode size becomes n → n1 = n + 1. In what follows, we use the same notation PR for collocation-type tensors (for example, for the Slater potential); the particular meaning will be clear from the context.

4.2. The RS Tensor Format Revisited

The range-separated (RS) tensor format was introduced in [32] for the efficient representation of the collective free-space electrostatic potential of large biomolecules. This rank-structured representation of the collective electrostatic potential of many-particle systems of general type essentially reduces the cost of computing their interaction energy, and it provides a convenient form for performing other algebraic transforms. The RS format also proved useful for the range-separated tensor representation of the Dirac delta in ℝd [34]. Building on that, it enables the regularization of the Poisson-Boltzmann equation (PBE) by decomposing the solution into short- and long-range parts, where the short-range part of the solution is evaluated by simple tensor operations without solving the PDE. The smooth long-range part is calculated by solving the PBE with a modified right-hand side, obtained by using the RS decomposition of the Dirac delta, so that it no longer contains singularities. We refer to [33, 35] for a detailed description of the approach.

First, we recall the definition of the range-separated (RS) tensor format, see [32], for the representation of d-tensors $\mathbf A \in \mathbb R^{n_1\times\cdots\times n_d}$. The RS format serves for the hybrid tensor approximation of discretized functions with multiple cusps or singularities. It allows the target tensor to be split into highly localized components approximating the singularities and a component with global support that admits a low-rank tensor approximation. Such functions typically arise in computational quantum chemistry, in many-particle modeling, and in the interpolation of multi-dimensional data measured at a certain set of spatial points in ℝd.

In the following definition of the RS-canonical tensor format, we use the notion of a localized canonical tensor U0, which is characterized by a small support whose diameter is a few grid points. This tensor will be used as the reference one for the presentation of the short-range part of the RS tensor. To that end, we use the operation $\mathrm{Replica}_{x_\nu}(\mathbf U_0)$, which replicates U0 into a given grid point xν. In this construction, we assume that the chosen grid points xν are well separated, i.e., the distance between each pair of points is not less than a given threshold nδ > 0.

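Definition 4.1. (RS-canonical tensors, [32]). Let U0 be a given rank-Rs reference localized CP tensor. The RS-canonical tensor format defines the class of d-tensors $\mathbf A \in \mathbb R^{n_1\times\cdots\times n_d}$ represented as a sum of a rank-Rl CP tensor $\mathbf U_{long} = \sum_{k=1}^{R_l} \xi_k\, \mathbf u^{(1)}_k \otimes \cdots \otimes \mathbf u^{(d)}_k$ and a cumulated CP tensor $\mathbf U_{short} = \sum_{\nu=1}^{N_0} c_\nu\, \mathbf U_\nu$, such that

$$\mathbf A = \mathbf U_{long} + \mathbf U_{short},$$

where Ushort is generated by the localized reference CP tensor U0, i.e., $\mathbf U_\nu = \mathrm{Replica}_{x_\nu}(\mathbf U_0)$, with rank(Uν) = rank(U0) ≤ Rs, and where, given the threshold nδ > 0, the effective support of Uν is bounded by diam(supp Uν) ≤ 2nδ in index size.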

Each RS-canonical tensor is, therefore, uniquely defined by the following parametrization: the rank-Rl canonical tensor Ulong, the rank-Rs reference canonical tensor U0 with small mode size bounded by 2nδ, and the list of coordinates and weights of the N0 particles in ℝd. The storage size is linear in both the dimension and the univariate grid size,

$$\mathrm{stor}(\mathbf A) = O\big(d R_l n + (d+1) N_0 + d R_s n_\delta\big), \quad n = \max_\ell n_\ell.$$

The main benefit of the RS-canonical tensor decomposition is the almost uniform bound on the CP/Tucker rank of the long-range part $\mathbf U_{long}$ of the multi-particle potential discretized on a fine n × n × n spatial grid. It was proven in [32] that the canonical rank of the long-range part scales logarithmically in both the number of particles N0 and the approximation precision; see also Proposition 4.5.

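Given the rank-R CP decomposition Equation (29), based on the sinc-quadrature approximation Equation (24) of the discretized radial function p(||x||), we define the two subsets of indices $\mathcal K_l := \{k : t_k \le 1\}$ and $\mathcal K_s := \{k : t_k > 1\}$, and then introduce the RS-representation of this tensor as follows,

$$\mathbf P_R = \mathbf P_{R_l} + \mathbf P_{R_s}, \tag{31}$$

where

$$\mathbf P_{R_l} = \sum_{k \in \mathcal K_l} \mathbf p^{(1)}_k \otimes \mathbf p^{(2)}_k \otimes \mathbf p^{(3)}_k, \qquad \mathbf P_{R_s} = \sum_{k \in \mathcal K_s} \mathbf p^{(1)}_k \otimes \mathbf p^{(2)}_k \otimes \mathbf p^{(3)}_k.$$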
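To make the index sets $\mathcal K_l$ and $\mathcal K_s$ concrete, the sketch below splits an illustrative Gaussian sum for 1/r into its long- and short-range radial profiles. The quadrature parameters (t = e^u substitution, step 0.25, M = 100) are our own assumption, not those of [32]. The printout shows three things: the long-range part stays bounded at the origin, the short-range part is negligible beyond a few units of distance, and the two parts add up to 1/r.

```python
import numpy as np

step, M = 0.25, 100
t = np.exp(step * np.arange(-M, M + 1))     # illustrative sinc points for 1/r
p = 2.0 / np.sqrt(np.pi) * step * t         # weights
is_long = t <= 1.0                          # K_l = {k : t_k <= 1}
is_short = ~is_long                         # K_s = {k : t_k > 1}

def profile(r, mask):
    """Radial profile sum_{k in mask} p_k exp(-t_k^2 r^2)."""
    r = np.asarray(r, dtype=float)[..., None]
    return np.sum(p[mask] * np.exp(-(t[mask] * r) ** 2), axis=-1)

print("R_l =", is_long.sum(), " R_s =", is_short.sum())
print("long part at r = 0:", profile(0.0, is_long))      # finite: no singularity
print("short part at r = 4:", profile(4.0, is_short))    # essentially zero
print("sum at r = 1.5:", profile(1.5, is_long) + profile(1.5, is_short), "vs", 1 / 1.5)
```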

This representation allows one to reduce the cost of calculating the interaction energy of a many-particle system. Recall that the electrostatic interaction energy of N charged particles is given by

$$E_N = \frac{1}{2} \sum_{i=1}^{N} \sum_{j \ne i} \frac{z_i z_j}{\|x_i - x_j\|},$$

and it can be computed by direct summation in O(N²) operations. The following statement is a modification of Lemma 4.2 in [32] (see [54] for more details).

Lemma 4.2. [54] Let the effective support of the short-range components in the reference potential PR for the Newton kernel not exceed the minimal distance between particles, σ > 0. Then the interaction energy EN of the N-particle system can be calculated by using only the long-range part of the tensor P representing the total potential sum on the grid,

$$E_N = \frac{1}{2}\Big( \langle \mathbf z, \mathbf p_l \rangle - \mathbf P_{R_l}(0) \sum_{i=1}^{N} z_i^2 \Big),$$

in O(RlN) operations, where Rl is the canonical rank of the long-range component Pl of P.

Here, z ∈ ℝN is the vector composed of all charges of the multi-particle system, and $\mathbf p_l \in \mathbb R^N$ is the vector of samples of the collective electrostatic long-range potential Pl at the nodes corresponding to the particle locations. Thus, the term $\frac{1}{2}\langle \mathbf z, \mathbf p_l \rangle$ denotes the "non-calibrated" interaction energy associated with the long-range tensor component Pl, while PRl denotes the long-range part of the tensor representing the single reference Newton kernel, and PRl(0) is its value at the origin.
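The identity of Lemma 4.2 can be checked numerically on a small, hypothetical particle system. The system has 27 particles on a lattice with spacing 4, so σ = 4 exceeds the effective support of the short-range Gaussians in our illustrative sinc sum, and the charges are positive random numbers. The sketch evaluates the long-range kernel pairwise. It therefore verifies the energy formula but not the O(RlN) complexity, which relies on sampling the rank-Rl grid tensor Pl.

```python
import numpy as np

step, M = 0.25, 100
t = np.exp(step * np.arange(-M, M + 1))     # illustrative sinc points for 1/r
p = 2.0 / np.sqrt(np.pi) * step * t         # weights
is_long = t <= 1.0                          # K_l = {k : t_k <= 1}

def newton_long(r):
    """Long-range part of the Gaussian-sum Newton kernel at distances r."""
    r = np.asarray(r, dtype=float)[..., None]
    return np.sum(p[is_long] * np.exp(-(t[is_long] * r) ** 2), axis=-1)

# Hypothetical system: 27 particles on a lattice with spacing 4 (sigma = 4),
# positive random charges z_i.
rng = np.random.default_rng(0)
g = 4.0 * np.arange(3)
x = np.array(np.meshgrid(g, g, g, indexing="ij")).reshape(3, -1).T
z = rng.uniform(0.5, 1.5, size=len(x))

D = np.linalg.norm(x[:, None, :] - x[None, :, :], axis=-1)
off = ~np.eye(len(x), dtype=bool)
E_direct = 0.5 * np.sum(np.outer(z, z)[off] / D[off])       # O(N^2) direct sum

# p_l: collective long-range potential sampled at the particles (pairwise here;
# on the grid it would be read off the rank-R_l tensor P_l).
p_l = newton_long(D) @ z
E_long = 0.5 * (z @ p_l - newton_long(0.0) * np.sum(z**2))
print(f"direct: {E_direct:.10f}  long-range formula: {E_long:.10f}")
```

The diagonal terms of `newton_long(D)` contribute exactly $\mathbf P_{R_l}(0)\sum_i z_i^2$ to ⟨z, p_l⟩. The calibration term in the lemma removes this self-interaction.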

Lemma 4.2 indicates that the interaction energy does not depend on the short-range part in the collective potential, and this is the key point for the construction of energy preserving regularized numerical schemes for solving the basic equations in bio-molecular modeling by using low-rank tensor decompositions.

4.3. Multi-Linear Operations in RS Tensor Formats

In what follows, we address the important question of how the basic multi-linear operations can be implemented in the RS tensor format by using the RHOSVD rank compression. The point is that commonly used numerical schemes and iterative algorithms involve many sums and products of functions, as well as actions of differential and integral operators. Each such operation makes the tensor structure of the data more complicated, which calls for robust rank reduction schemes.

Another important aspect is related to the use of large (fine-resolution) discretization grids. Their size is limited by the storage of the full input tensors, O(nd) (curse of dimensionality), which represent the discrete functions and operators to be approximated in low-rank tensor format. Remarkably, the tensor decomposition of the special class of functions that allow the sinc-quadrature approximation can be performed on practically arbitrarily large grids, because the storage and numerical costs of such schemes scale linearly in the univariate grid size, O(dn). Hence, once such low-rank approximations have been constructed for a certain set of "reproducing" radial functions, one can build the low-rank RS representation at linear complexity, O(dn), for a wide class of functions and operators by using rank-truncated multi-linear operations. Examples of such "reproducing" radial functions are commonly used in our computational practice.

First, consider the Hadamard product of two tensors PR and QR1 corresponding to the pointwise product of two generating multi-variate functions centered at the same point. The RS representation of the product tensor is based on the observation that the long-range part of the Hadamard product of two tensors in RS-format is basically determined by the product of their long-range parts.

Lemma 4.3. Suppose that the RS representations Equation (31) of the tensors PR and QR1 are constructed based on the sinc-quadrature CP approximation Equation (29). Then the long-range part of the Hadamard product of these RS-tensors,

$$\mathbf Z = \mathbf P_R \odot \mathbf Q_{R_1},$$

can be represented by the product of their long-range parts, Zl = Pl ⊙ Ql, with subsequent rank reduction. Moreover, we have rank(Zl) ≤ rank(Pl) · rank(Ql).

Proof. We consider the case of collocation tensors and suppose that each skeleton vector in the CP tensors PR and QR1 is given by the restriction of a certain Gaussian to the set of grid points. Choose an arbitrary short-range component in PR and some component in QR1, generated by the Gaussians $e^{-t_k^2 x^2}$ (with $t_k > 1$) and $e^{-t_m^2 x^2}$, respectively. Then the effective support of the product of these two terms is smaller than that of each factor, in view of the identity

$$e^{-t_k^2 x^2}\, e^{-t_m^2 x^2} = e^{-(t_k^2 + t_m^2)\, x^2},$$

valid for arbitrary tk, tm > 0. This means that each term that includes a short-range factor remains short-range. Hence, the long-range part of Z takes the form Zl = Pl ⊙ Ql, with subsequent rank reduction.
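The following sketch makes the mechanism of the proof explicit for CP tensors whose skeleton vectors are sampled Gaussians. The factors of a Hadamard product are column-wise (face-splitting) products of the factors of the two operands, so ranks multiply. Each product column is again a Gaussian with exponent $t_k^2 + t_m^2$, so it is never wider than either factor. The function names and the Gaussian parameters are hypothetical choices for illustration.

```python
import numpy as np

def cp_full(factors, w):
    """Full 3D array of the CP tensor sum_r w_r u_r ⊗ v_r ⊗ s_r."""
    U1, U2, U3 = factors
    return np.einsum("r,ir,jr,kr->ijk", w, U1, U2, U3)

def cp_hadamard(A, B):
    """Hadamard product of CP tensors A = (factors, weights), B = (factors, weights).

    Column (a, b) of every new factor is the entrywise product of column a of A and
    column b of B, so rank(A ⊙ B) <= rank(A) * rank(B).
    """
    (fa, wa), (fb, wb) = A, B
    f = [(Ua[:, :, None] * Ub[:, None, :]).reshape(Ua.shape[0], -1) for Ua, Ub in zip(fa, fb)]
    return f, np.outer(wa, wb).ravel()

# Skeleton vectors are sampled Gaussians exp(-t^2 x^2), as in the proof above.
x = np.linspace(-3.0, 3.0, 20)
tA, tB = np.array([0.3, 0.8, 2.0]), np.array([0.5, 1.5])

def gauss(ts):
    return np.exp(-(x[:, None] * ts[None, :]) ** 2)

A = ([gauss(tA)] * 3, np.ones(3))
B = ([gauss(tB)] * 3, np.ones(2))
Z = cp_hadamard(A, B)

# Each product column is again a Gaussian with exponent t_k^2 + t_m^2 (never wider).
t_prod = np.sqrt(np.add.outer(tA**2, tB**2)).ravel()
print(np.allclose(Z[0][0], gauss(t_prod)))                  # True
print(np.allclose(cp_full(*Z), cp_full(*A) * cp_full(*B)))   # True
print("rank of the product:", Z[1].size)                     # 6
```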

The sum of several tensors in RS format can easily be split into short- and long-range parts by grouping the respective components of the summands. Another important operation is the operator-function product in RS tensor format (see the example in [34] related to the action of the Laplacian on the singular Newton kernel, resulting in the RS decomposition of the Dirac delta). This topic will be considered in detail elsewhere.

4.4. Representing the Slater Potential in RS Tensor Format

In what follows, we consider the RS-canonical tensor format for the rank-structured representation of the Slater function

$$G(x) = e^{-\lambda\|x\|}, \quad \lambda > 0,\ x \in \mathbb R^3,$$

which is of principal significance in electronic structure calculations (say, based on the Hartree-Fock equation), since it represents the cusp behavior of the electron density in the local vicinity of the nuclei. This function (or its approximation) is considered the best candidate for the localized basis functions of atomic orbital basis sets. Another direction is related to the construction of an accurate low-rank global interpolant for big scattered data, to be considered in the next section. In this way, we calculate a data-adaptive basis set living on the fine Cartesian grid in the region of the target data. The main challenge, however, is the presence of point singularities, which are hard to approximate in problem-independent polynomial or trigonometric basis sets.

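The construction of the low-rank RS approximation to the Slater function is based on the generalized Laplace transform representation of the Slater function written in the form $G(\rho) = e^{-2\sqrt{\alpha\rho}}$, with $\rho = \|x\|^2$ and $\alpha = \lambda^2/4$, which reads

$$G(\rho) = e^{-2\sqrt{\alpha\rho}} = \frac{\sqrt{\alpha}}{\sqrt{\pi}} \int_{\mathbb R_+} t^{-3/2}\, e^{-\alpha/t}\, e^{-\rho t}\, dt.$$

This corresponds to the choice $\hat G(t) = \frac{\sqrt{\alpha}}{\sqrt{\pi}}\, t^{-3/2} e^{-\alpha/t}$ in the canonical form of the Laplace transform representation of G(ρ),

$$G(\rho) = \int_{\mathbb R_+} \hat G(t)\, e^{-\rho t}\, dt.$$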

Denote by GR the rank-R canonical approximation to the function G(ρ) discretized on the n × n × n Cartesian grid.

Lemma 4.4. ([51]) For given threshold ε > 0 let ρ ∈ [1, A]. Then the (2M + 1)-term sinc-quadrature approximation of the integral in (34) with

ensures the max-error of the order of O(ε) for the corresponding rank-(2M + 1) CP approximation GR to the tensor G.
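A sketch of a Gaussian-sum approximation of the Slater function based on the Laplace representation above is given below. It uses the same discretization assumptions as our Newton sketches (substitution t = e^u, trapezoidal rule with step 0.25, M = 100). These parameters are illustrative and are not those of Lemma 4.4. The node τk plays the role of $t_k^2$ in Equation (24), so the long-range terms are those with τk ≤ 1. Since $e^{-\tau\|x\|^2}$ factorizes over the coordinates, every term is a rank-1 tensor on the grid.

```python
import numpy as np

def slater_sinc(lam, step=0.25, M=100):
    """Gaussian-sum approximation exp(-lam*r) ~ sum_k w_k exp(-tau_k r^2).

    Based on G(rho) = int_0^inf Ghat(t) exp(-rho t) dt, rho = r^2, alpha = lam^2/4,
    Ghat(t) = sqrt(alpha/pi) t^(-3/2) exp(-alpha/t); the substitution t = exp(u)
    and the trapezoidal rule on u_k = k*step give nodes tau_k and weights w_k.
    """
    alpha = lam**2 / 4.0
    tau = np.exp(step * np.arange(-M, M + 1))
    w = step * np.sqrt(alpha / np.pi) * tau**-0.5 * np.exp(-alpha / tau)
    return tau, w

tau, w = slater_sinc(lam=1.0)
r = np.linspace(0.1, 10.0, 500)
approx = np.exp(-np.outer(r**2, tau)) @ w
err = np.max(np.abs(approx - np.exp(-r)))
print(f"max abs error on [0.1, 10]: {err:.1e}")
print("long-range terms (tau_k <= 1) with weight > 1e-14:", np.sum((tau <= 1) & (w > 1e-14)))
```

Many of the 2M + 1 weights underflow to zero. In practice, such terms would be dropped, which is one reason the long-range rank of the Slater function is small.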

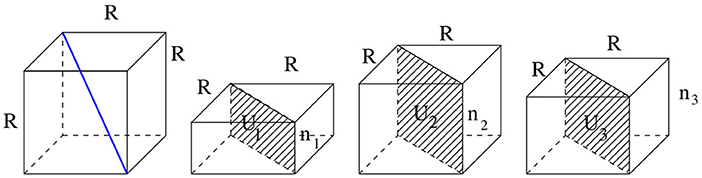

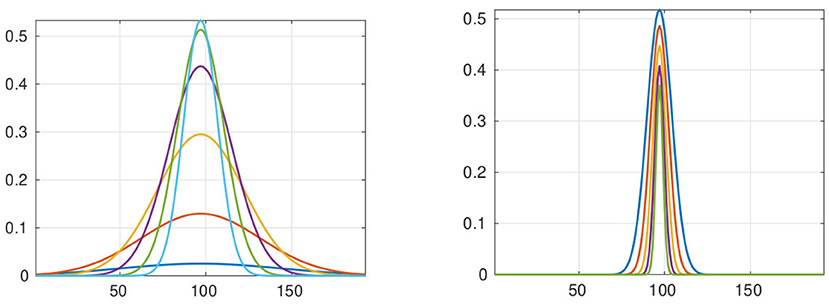

Figure 5 illustrates the RS splitting for the tensor GR = GRl + GRs representing the Slater potential G(x) = e−λ||x||, λ = 1, discretized on the n × n × n grid with n = 1024. The rank parameters are chosen as R = 24, Rl = 6 and Rs = 18. Notice that for this radial function the long-range part (Figure 5, left) includes far fewer canonical vectors than in the case of the Newton kernel. This suggests a smaller total canonical rank for the long-range part of large sums of Slater-like potentials arising, for example, in the representation of molecular orbitals and the electron density in electronic structure calculations. For instance, the wave function of the hydrogen atom is given by the Slater function e−μ||x||. In the following section, we consider the application of the RS tensor format to the interpolation of scattered data in ℝd.

Figure 5. Long-range (left) and short-range (right, a base 10 logarithmic scale) canonical vectors for the Slater function with the grid size n = 1024, R = 24, Rl = 6, λ = 1.

4.5. Application of RHOSVD to Scattered Data Modeling

In scattered data modeling, the problem is the low-parametric approximation of multi-variate functions f: ℝd → ℝ from samples at a finite set of pairwise distinct points. Here, the function f might be the surface of a solid body, the solution of a PDE, a many-body potential field, multi-parametric characteristics of physical systems, or other multi-dimensional data.

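A traditional way of recovering f from a sampling vector $f|_{\mathcal X} = (f(x_1), \ldots, f(x_N))$, $\mathcal X = \{x_1, \ldots, x_N\}$, is to construct a functional interpolant $P_N: \mathbb R^d \to \mathbb R$ such that $P_N|_{\mathcal X} = f|_{\mathcal X}$, i.e.,

$$P_N(x_j) = f(x_j), \quad j = 1, \ldots, N. \tag{35}$$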

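Using radial basis (RB) functions, one can find interpolants PN in the form

$$P_N(x) = \sum_{j=1}^{N} c_j\, p(\|x - x_j\|) + Q(x), \quad c_j \in \mathbb R, \tag{36}$$

where p = p(r): [0, ∞) → ℝ is a fixed RB function, r = ||·|| is the Euclidean norm on ℝd, and Q(x) is a (low-degree) polynomial term. In further discussion, we set Q(x) = 0. For example, the following RB functions are commonly used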

The other examples of RB functions are defined by Green's kernels or by the class of Matérn functions [23].
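For moderate N, the interpolation problem (35) with the Slater RB function p(r) = e^{−λr} and Q = 0 can be solved directly. The sketch below does this with a dense solve on hypothetical random data. The cost is O(N³) operations and O(N²) storage, which is exactly what becomes prohibitive for large N. The Slater kernel is positive definite, so the system matrix is symmetric positive definite for pairwise distinct points and the system is uniquely solvable. The helper names are our own.

```python
import numpy as np

def slater_matrix(Y, Z, lam):
    """Matrix [exp(-lam * ||y_i - z_j||)] for the Slater RB function."""
    return np.exp(-lam * np.linalg.norm(Y[:, None, :] - Z[None, :, :], axis=-1))

# Hypothetical scattered data: N random points in [-1, 1]^3 and a smooth test function.
rng = np.random.default_rng(1)
N, lam = 200, 1.0
X = rng.uniform(-1.0, 1.0, size=(N, 3))
f = np.sin(np.pi * X[:, 0]) * np.cos(X[:, 1]) + X[:, 2] ** 2

A = slater_matrix(X, X, lam)      # symmetric positive definite system matrix
c = np.linalg.solve(A, f)         # coefficients c_j of P_N (with Q = 0)

def P_N(Y):
    """Evaluate the interpolant P_N(y) = sum_j c_j exp(-lam ||y - x_j||)."""
    return slater_matrix(Y, X, lam) @ c

print(np.allclose(P_N(X), f))     # interpolation conditions P_N(x_j) = f(x_j)
print(P_N(np.zeros((1, 3))))      # value of the interpolant at the origin
```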

We discuss the following computational tasks (A) and (B).

(A) For a fixed coefficient vector $\mathbf c = (c_1, \ldots, c_N)^T \in \mathbb R^N$, efficiently represent the interpolant PN(x) on a fine tensor grid in ℝd, providing (a) O(1)-fast point evaluation of PN in the computational volume Ω and (b) the computation of various integral-differential operations on that interpolant (say, gradients, scalar products, convolution integrals, etc.).

(B) Find the coefficient vector c that solves the interpolation problem Equation (35) in the case of large N.

Problem (A) exactly fits the RS tensor framework, so the RS tensor approximation solves it at low computational cost, provided that the sum of the long-range parts of the interpolating functions can easily be approximated in the low-rank CP tensor format. We consider the case of interpolation by Slater functions exp(−λr) in more detail.

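Problem (B): Suppose that we use some favorable preconditioned iteration for computing the coefficient vector c ∈ ℝN from

$$A_{p,\mathcal X}\, \mathbf c = \mathbf f, \qquad \mathbf f = (f(x_1), \ldots, f(x_N))^T, \tag{37}$$

with the distance-dependent symmetric system matrix $A_{p,\mathcal X} = [p(\|x_i - x_j\|)]_{i,j=1}^{N} \in \mathbb R^{N\times N}$. We assume that the set of sampling points $\mathcal X = \{x_j\}_{j=1}^{N}$ coincides with Ωh, the n⊗d-set of grid points located on a tensor grid, i.e., N = nd. Introduce the d-tuple multi-indices i ↦ 𝐢 = (i1, …, id) and j ↦ 𝐣 = (j1, …, jd), and reshape $A_{p,\mathcal X}$ into the tensor form

$$\mathbf A_{p,\mathcal X} = \big[p(\|x_{\mathbf i} - x_{\mathbf j}\|)\big] \in \bigotimes_{\ell=1}^{d} \mathbb R^{n\times n},$$

which can be decomposed by using the RS-based splitting

$$\mathbf A_{p,\mathcal X} = \mathbf A_{R_s} + \mathbf A_{R_l},$$

generated by the RS representation of the weighted potential sum in Equation (36). Here, $\mathbf A_{R_s}$ is a banded matrix with a dominating diagonal part, while $\mathbf A_{R_l}$ is a matrix of low Kronecker rank. This implies a storage bound of O(N + dRln) and ensures fast matrix-vector multiplication. By introducing an additional rank-structured representation of c, the solution of Equation (37) can be further simplified.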
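The benefit of the low-Kronecker-rank structure for matrix-vector products can be illustrated by the following sketch, which rests on simplifying assumptions of our own. The points form a small tensor grid, and we use the whole illustrative Gaussian sum for the Slater kernel without RS splitting, so that $\mathbf A_{p,\mathcal X} \approx \sum_k w_k\, G_k \otimes G_k \otimes G_k$ with 1D Gaussian matrices Gk. In the RS splitting of the text, the terms with τk > 1 would instead form the banded part $\mathbf A_{R_s}$. The product is applied mode-wise, and the N × N matrix is formed only as a reference.

```python
import numpy as np

def slater_sinc(lam, step=0.25, M=120):
    """exp(-lam r) ~ sum_k w_k exp(-tau_k r^2) (illustrative sinc parameters)."""
    alpha = lam**2 / 4.0
    tau = np.exp(step * np.arange(-M, M + 1))
    w = step * np.sqrt(alpha / np.pi) * tau**-0.5 * np.exp(-alpha / tau)
    keep = w > 0.0                               # drop weights that underflowed to zero
    return tau[keep], w[keep]

n, lam = 6, 1.0
x = np.linspace(0.0, 1.0, n)                     # 1D grid; sampling set = x^{⊗3}, N = n^3
tau, w = slater_sinc(lam)
# G[k] is the n x n matrix exp(-tau_k (x_a - x_i)^2)
G = np.exp(-tau[:, None, None] * (x[None, :, None] - x[None, None, :]) ** 2)

def kron_matvec(c):
    """y = sum_k w_k (G_k ⊗ G_k ⊗ G_k) c, applied mode-wise to c reshaped as n x n x n."""
    C = c.reshape(n, n, n)
    Y = np.zeros_like(C)
    for wk, Gk in zip(w, G):
        Y += wk * np.einsum("ai,bj,cl,ijl->abc", Gk, Gk, Gk, C, optimize=True)
    return Y.ravel()

# Dense reference: grid points in the same (C-order) numbering as c.reshape(n, n, n)
pts = np.array(np.meshgrid(x, x, x, indexing="ij")).reshape(3, -1).T
A = np.exp(-lam * np.linalg.norm(pts[:, None, :] - pts[None, :, :], axis=-1))
c = np.random.default_rng(2).standard_normal(n**3)
rel = np.linalg.norm(kron_matvec(c) - A @ c) / np.linalg.norm(A @ c)
print(f"Kronecker rank {w.size}, relative matvec error {rel:.1e}")
```

Each term costs O(n⁴) = O(N^{4/3}) operations instead of O(N²). With Toeplitz or low-rank 1D factors, this could be reduced further.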

The above approach can be applied to the data sparse representation for the class of large covariance matrices in the spatial statistics, see for example [23, 55].

In the application of tensor methods to data modeling, we consider the interpolation of 3D scattered data by a large sum of Slater functions

$$G_N(x) = \sum_{j=1}^{N} c_j\, e^{-\lambda\|x - x_j\|}, \quad x_j \in \mathbb R^3. \tag{38}$$

Given the coefficients cj, we address the question of how to efficiently represent the interpolant GN(x) on a fine Cartesian grid in ℝ3 by using the low-rank (i.e., low-parametric) CP tensor format, such that each value on the grid can be calculated in O(1) operations. The main problem is that the generating Slater function e−λ||x|| has a cusp at the origin, so the interpolant has very low regularity. As a result, the tensor rank of the function GN(x) in Equation (38) discretized on a large n × n × n grid increases almost proportionally to the number N of sampling points xj, which in general may be very large. This increase in the canonical rank has been observed in a number of numerical tests. Hence, the straightforward tensor approximation of GN(x) does not work in this case.

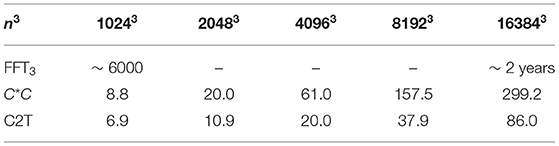

Tables 2 and 3 illustrate the stability of the canonical rank with respect to the number N of sampling points in the case of random and function-related distributions of the weighting coefficients cj in the long-range part of the Slater interpolant Equation (38).

The generating Slater radial function can be proven to have a low-rank RS canonical tensor decomposition by using the sinc-approximation method (see Section 4.1).

To complete this section, we present a numerical example demonstrating the application of the RS tensor representation to scattered data modeling in ℝ3. We denote by $\mathbf G_R \in \mathbb R^{n\times n\times n}$ the rank-R CP tensor approximation of the reference Slater potential e−λ||x|| discretized on the n × n × n grid Ωn, and introduce its RS splitting GR = GRl + GRs, with Rl + Rs = R. Here, Rl ≈ R/2 is the rank parameter of the long-range part of GR. Assume that all measurement points xj in Equation (38) are located on the discretization grid Ωn. Then the tensor representation of the long-range part of the total interpolant GN can be obtained as the sum of the properly replicated reference potential GRl, via the shift-and-windowing transform $\mathcal W_j$, j = 1, …, N,

$$\mathbf G_{N,l} = \sum_{j=1}^{N} c_j\, \mathcal W_j(\mathbf G_{R_l}), \tag{39}$$

which includes about NRl terms. Hence, for a large number N of measurement points, rank reduction is indispensable.

It can be proven (by a slight modification of the arguments in [32]) that both the CP and Tucker ranks of the N-term sum in Equation (39) depend only logarithmically (not linearly) on N.

Proposition 4.5. (Uniform rank bounds for the long-range part of the Slater interpolant). Let the long-range part GN,l of the total Slater interpolant in Equation (39) be composed of those terms in Equation (24) which satisfy the relation tk ≤ 1, where $M = O(\log^2\varepsilon)$. Then the total ε-rank r0 of the Tucker approximation to the canonical tensor sum GN,l is bounded by

where the constant C does not depend on the number of particles N, as well as on the size of the computational box, [−b, b]3.

Proof. (Sketch) The main argument of the proof is based on the fact that the grid function GN,l has a band-limited Fourier image, such that the frequency interval depends weakly (logarithmically) on N. We then represent all Gaussians in the truncated Fourier basis and perform the summation in the fixed set of orthogonal trigonometric basis functions, which defines the orthogonal Tucker representation with a controllable rank parameter.

The numerical illustrations below demonstrate the CP rank obtained by the RHOSVD decomposition of the long-range part GN,l of the multi-point tensor interpolant via Slater functions.

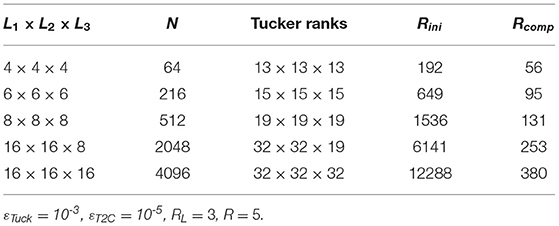

Now, we generate a tensor composed of a sum of Slater functions, discretized by collocation over the n⊗3 representation grid with n = 384 and placed at the nodes of a sampling L1 × L2 × L3 lattice with randomly chosen weights cj ∈ [−5, 5] for every node. Every single Slater function is generated as a canonical tensor by using sinc-quadratures for the approximation of the related Laplace transform. Table 2 shows the ranks of the long-range part of this tensor composed of Slater potentials located at the nodes of lattices of increasing size. N indicates the number of nodes, while Rini and Rcomp are the initial and compressed canonical ranks of the resulting long-range part tensor, respectively. Tucker ranks correspond to the ranks in the canonical-to-Tucker decomposition step. The threshold value for the Slater potential generator is , while the tolerance thresholds for the rank reduction procedure are given by and . We observe that the ranks of the long-range part of the potential increase only slightly with the size N of the 3D sampling lattice.
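The compression step behind Table 2 can be sketched on a much smaller, hypothetical configuration. It uses a 3 × 3 × 3 lattice of Slater centres with λ = 1 and positive weights, a 64³ grid, and the illustrative sinc parameters of our earlier sketches. The long-range part (terms with τk ≤ 1) is assembled as a CP tensor with one term per pair (centre, quadrature node). The RHOSVD then projects it onto the leading left singular vectors of the normalized side matrices and forms the Tucker core. Only the canonical-to-Tucker step is shown; the subsequent Tucker-to-canonical conversion is omitted, and all names are our own.

```python
import numpy as np

def slater_long_cp(centres, coef, x, lam, step=0.25, M=100):
    """CP factors/weights of sum_j c_j * (long-range Gaussian sum of exp(-lam||x - x_j||))."""
    alpha = lam**2 / 4.0
    tau = np.exp(step * np.arange(-M, M + 1))
    wk = step * np.sqrt(alpha / np.pi) * tau**-0.5 * np.exp(-alpha / tau)
    sel = (tau <= 1.0) & (wk > 1e-14 * wk.max())   # long-range, non-negligible terms
    tau, wk = tau[sel], wk[sel]
    # mode-l factor: column (j, k) is exp(-tau_k (x - x_{j,l})^2), a shifted 1D Gaussian
    factors = [np.exp(-tau[None, None, :] * (x[:, None, None] - centres[None, :, l, None]) ** 2)
               .reshape(x.size, -1) for l in range(3)]
    return factors, np.outer(coef, wk).ravel()

def rhosvd(factors, w, eps):
    """Reduced HOSVD of a 3D CP tensor: returns Tucker bases Z and core beta."""
    norms = [np.linalg.norm(U, axis=0) for U in factors]
    w = w * norms[0] * norms[1] * norms[2]             # unit-norm columns, weights absorb norms
    factors = [U / nrm for U, nrm in zip(factors, norms)]
    Z = []
    for U in factors:
        Q, s, _ = np.linalg.svd(U, full_matrices=False)
        Z.append(Q[:, s > eps * s[0]])                 # truncated SVD of the side matrix
    V = [Zl.T @ U for Zl, U in zip(Z, factors)]        # projected side matrices
    core = np.einsum("r,ar,br,cr->abc", w, *V, optimize=True)
    return Z, core

rng = np.random.default_rng(3)
g = np.array([-4.0, 0.0, 4.0])
centres = np.array(np.meshgrid(g, g, g, indexing="ij")).reshape(3, -1).T   # 27 nodes
coef = rng.uniform(0.5, 1.5, size=len(centres))
x = np.linspace(-6.0, 6.0, 64)

factors, w = slater_long_cp(centres, coef, x, lam=1.0)
Z, core = rhosvd(factors, w, eps=1e-6)
full = np.einsum("r,ir,jr,kr->ijk", w, *factors, optimize=True)
tucker = np.einsum("abc,ia,jb,kc->ijk", core, *Z, optimize=True)
rel_err = np.linalg.norm(tucker - full) / np.linalg.norm(full)
print("initial CP rank:", w.size, " Tucker ranks:", core.shape, f" rel. error {rel_err:.1e}")
```

Positive weights are used so that a simple relative error check is meaningful. With unit-norm columns, the RHOSVD error is bounded by ‖ξ‖ times the sum over modes of the discarded singular value tails, and for positive data ‖ξ‖ does not exceed the norm of the tensor.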

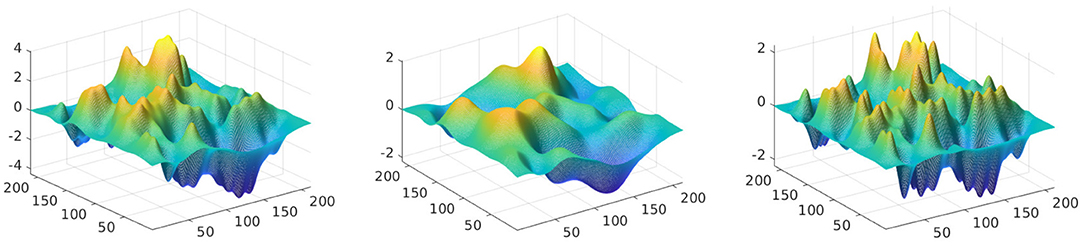

Figure 6 demonstrates the full-, long-, and short-range components of the multi-Slater tensor constructed as the weighted sum of Slater functions with randomly chosen weights cj ∈ [−5, 5]. The generating nodes are located on the 12 × 12 × 4 3D lattice. The parameters of the tensor interpolant are as follows: λ = 0.5, the representation grid is of size n⊗3 with n = 384, R = 8, Rl = 3, and the number of samples is N = 576 (the figures show a zoomed part of the grid). The initial CP rank of the sum of N0 interpolating Slater potentials is about 4,468. The middle and right pictures show the long- and short-range parts of the composite tensor, respectively. The initial rank of the canonical tensor representing the long-range part is RL = 2304, which is reduced by the C2C procedure via RHOSVD to Rcc = 71. The rank truncation threshold is ε = 10−3.

Figure 6. Full-, long- and short-range components of the multi-Slater tensor. Slater kernels with λ = 0.5 and with random amplitudes in the range of [−5, 5] are placed in the nodes of a 12 × 12 × 4 lattice using 3D grid of size n⊗3 with n = 384, R = 8, Rl = 3 and the number of nodes N = 576.

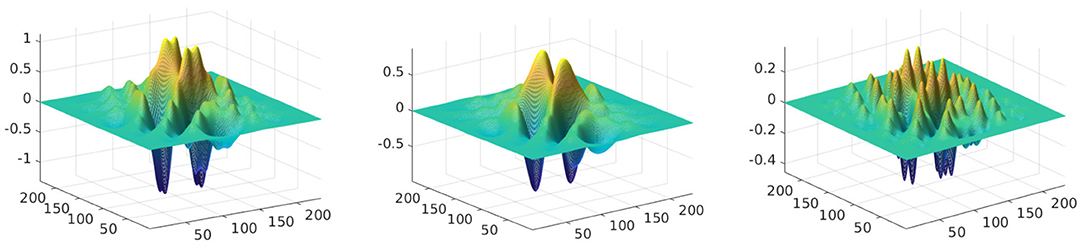

Figure 7 and Table 3 demonstrate the decomposition of the multi-Slater tensor with the amplitudes cj in the nodes (xj, yj, zj) modulated by the function of the (x,y,z)-coordinates

with a1 = 6 and a2 = 0.1, , , i.e., cj = F(xj, yj, zj).

Figure 7. Full-, long- and short-range components of the multi-Slater tensor. Slater kernels with λ = 0.5 and with amplitudes modulated by the function Equation (41) using the nodes of a 12 × 12 × 4 lattice on 3D grid of size n⊗3 with n = 384, R = 8, Rl = 3 and the number of nodes N = 576.

Next, we generate a tensor composed of a sum of discretized Slater functions on a sampling lattice L1 × L2 × L3, living on the 3D representation grid of size n⊗3 with n = 232. The amplitudes of the individual Slater functions are modulated by the function Equation (41) of the x, y, z-coordinates at every node of the lattice. Table 3 shows the rank of the long-range part of this multi-Slater tensor with respect to the increasing size of the lattice. N = L1L2L3 is the number of nodes, and Rini and Rcomp are the initial and compressed canonical ranks, respectively. Tucker ranks are shown at the canonical-to-Tucker decomposition step. The threshold value for the Slater potential generation is , and the thresholds for the canonical-to-canonical rank reduction procedure are given by and . Table 3 demonstrates a very moderate increase of the reduced rank of the long-range part of the Slater potential sum with the size of the 3D sampling lattice.

Figure 7 demonstrates the full-, long-, and short-range components of the multi-Slater tensor. Slater kernels with λ = 0.5 and with amplitudes modulated by the function Equation (41) of the (x, y, z)-coordinates are placed at the nodes of a 12 × 12 × 4 sampling lattice, living on the 3D grid of size n⊗3 with n = 384, R = 8, Rl = 3, and with the number of sampling nodes N = 576.

5. Representing Green's Kernels in Tensor Format

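In this section, we demonstrate how the RHOSVD can be applied for the efficient tensor decomposition of various singular radial functions composed by polynomial expansions of a few reference potentials already precomputed in low-rank tensor format. Let a low-rank CP tensor A be given, further considered as a reference tensor. The low-rank representation of the tensor-valued polynomial function

$$\mathbf P(\mathbf A) = a_0 \mathbf 1 + a_1 \mathbf A + a_2 \mathbf A^2 + \cdots + a_n \mathbf A^n,$$

where the multiplication of tensors is understood in the sense of the pointwise Hadamard product and $\mathbf 1$ denotes the tensor of all ones, can be calculated by n-fold application of the RHOSVD, using the Horner scheme in the form

$$\mathbf P(\mathbf A) = a_0 \mathbf 1 + \mathbf A \odot \Big( a_1 \mathbf 1 + \mathbf A \odot \big( a_2 \mathbf 1 + \cdots + \mathbf A \odot ( a_{n-1} \mathbf 1 + a_n \mathbf A ) \cdots \big) \Big).$$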

A similar scheme can also be applied in the case of multivariate polynomials.
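The Horner recursion can be sketched on CP tensors with two elementary operations. Adding a constant appends one rank-1 term built from all-ones vectors, and the Hadamard product multiplies the ranks. This minimal sketch deliberately omits the truncation. In the scheme described above, an RHOSVD-based rank reduction would follow each Hadamard product, and that step is what keeps the ranks bounded. The `cp_*` names are hypothetical.

```python
import numpy as np

def cp_full(cp):
    """Full 3D array of a CP tensor given as (list of factor matrices, weights)."""
    (U1, U2, U3), w = cp
    return np.einsum("r,ir,jr,kr->ijk", w, U1, U2, U3)

def cp_add_const(cp, a):
    """cp + a * (all-ones tensor): one extra rank-1 term."""
    f, w = cp
    return [np.hstack([U, np.ones((U.shape[0], 1))]) for U in f], np.append(w, a)

def cp_hadamard(A, B):
    """Entrywise product of two CP tensors; the rank is multiplied."""
    (fa, wa), (fb, wb) = A, B
    f = [(Ua[:, :, None] * Ub[:, None, :]).reshape(Ua.shape[0], -1) for Ua, Ub in zip(fa, fb)]
    return f, np.outer(wa, wb).ravel()

def cp_poly_horner(A, coeffs):
    """a_0 + A*(a_1 + A*(... + A*(a_{m-1} + a_m A))) with Hadamard products, m >= 1."""
    f, w = A
    acc = (f, coeffs[-1] * w)                        # a_m * A
    for a in reversed(coeffs[1:-1]):                 # a_{m-1}, ..., a_1
        acc = cp_hadamard(A, cp_add_const(acc, a))   # RHOSVD truncation would go here
    return cp_add_const(acc, coeffs[0])

rng = np.random.default_rng(4)
A = ([rng.standard_normal((5, 2)) for _ in range(3)], rng.standard_normal(2))
coeffs = [1.0, -2.0, 0.5, 3.0]                      # P(A) = 1 - 2A + 0.5A^2 + 3A^3
P = cp_poly_horner(A, coeffs)
X = cp_full(A)
print("CP rank without truncation:", P[1].size)     # 15
print(np.allclose(cp_full(P), 1.0 - 2.0 * X + 0.5 * X**2 + 3.0 * X**3))   # True
```

Even for this cubic polynomial of a rank-2 tensor, the untruncated rank grows to 15. This growth is why a rank reduction after each Hadamard step is essential.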

For the examples considered in this section, we make use of the discretized Slater $e^{-\|x\|}$ and Newton $\frac{1}{\|x\|}$, x ∈ ℝd, kernels as the reference tensors. The following statement was proven in [11, 51] (see also Lemma 4.4).

Proposition 5.1. The radial functions $e^{-\|x\|}$ and $\frac{1}{\|x\|}$, x ∈ ℝd, discretized on the n⊗d-grid, which enter the representation of various Green's kernels and fundamental solutions of elliptic operators with constant coefficients, both allow a low-rank CP tensor approximation. The corresponding rank-R representations can be calculated in O(dRn) operations without precomputing and storing the target tensor in full (entry-wise) format.

Tensor decompositions of discretized singular kernels such as ||x||, $\frac{1}{\|x\|^m}$, m ≥ 2, and $e^{-\kappa\|x\|}/\|x\|$ can now be calculated by applying the RHOSVD to polynomial combinations of the reference potentials in Proposition 5.1. The most important benefit of the presented techniques is the opportunity to compute rank-R tensor approximations without precomputing and storing the target tensor in full format.

In what follows, we present particular examples of singular kernels in ℝd that can be treated by the techniques presented above. Consider the fundamental solution of the advection-diffusion operator $\mathcal L_d$ with constant coefficients in ℝd

If , then for d ≥ 3 it holds

where ωd is the surface area of the unit sphere in ℝd [56–58]. Notice that for d ≥ 3 the radial function $\frac{1}{\|x\|^{d-2}}$ allows the RS decomposition of the corresponding discrete tensor representation based on the sinc quadrature approximation. This implies the RS representation of the kernel function η0(x), since the function is already separable. From the computational point of view, both the CP and RS canonical decompositions of the discretized kernels can be computed by successive application of the RHOSVD approximation to products of canonical tensors for the discretized Newton potential $\frac{1}{\|x\|}$.

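In the particular case without the advection term, we obtain the fundamental solution of the operator $-\Delta + \kappa^2$ for d = 3, also known as the Yukawa (for κ ∈ ℝ+) or Helmholtz (for κ ∈ ℂ) Green's kernel,

$$\frac{1}{4\pi}\,\frac{e^{-\kappa\|x\|}}{\|x\|}, \quad x \in \mathbb R^3.$$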

For the Yukawa kernel, tensor representations using Gaussian sums are considered in [1, 2]; see also the references therein.

The Helmholtz equation with Im κ > 0 (corresponding to diffraction potentials) arises in problems of acoustics, electromagnetics and optics. We refer to [59] for a detailed discussion of this class of fundamental solutions. Fast algorithms for the oscillating Helmholtz kernel have been considered in [1]. However, in this case the construction of the RS tensor decomposition remains an open question.

In the case of the 3D biharmonic operator $\mathcal L = \Delta^2$, the fundamental solution reads

$$\eta(x) = -\frac{\|x\|}{8\pi}, \quad x \in \mathbb R^3.$$

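The hydrodynamic potentials correspond to the classical Stokes operator

$$-\nu \Delta \mathbf u + \nabla p = \mathbf f, \qquad \operatorname{div} \mathbf u = 0,$$

where u is the velocity field, p denotes the pressure, and ν is the constant viscosity coefficient. The solution of the Stokes problem in ℝ3 can be expressed by the hydrodynamic potentials

$$\mathbf u(x) = \int_{\mathbb R^3} \Theta(x - y)\, \mathbf f(y)\, dy, \qquad p(x) = \int_{\mathbb R^3} \frac{\langle x - y, \mathbf f(y) \rangle}{4\pi \|x - y\|^3}\, dy,$$

with the fundamental solution

$$\Theta(x) = \frac{1}{8\pi\nu}\Big( \frac{I}{\|x\|} + \frac{x x^T}{\|x\|^3} \Big), \quad x \in \mathbb R^3.$$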

The existence of a low-rank RS tensor representation for the hydrodynamic potential is based on the same argument as in Remark 5.1. In turn, in the case of the biharmonic fundamental solution, we use the identity

$$\|x\| = \frac{\|x\|^2}{\|x\|} = \frac{x_1^2 + \cdots + x_d^2}{\|x\|},$$

where the numerator has separation rank d. The latter representation can also be applied for the calculation of the respective tensor approximations.
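The identity above translates directly into CP arithmetic. ||x||² is an exact rank-d CP tensor, and its Hadamard product with a rank-R CP approximation of 1/||x|| gives a rank-dR CP approximation of ||x||, which the RHOSVD would then compress. The sketch below checks this for d = 3. It assumes collocation at cell centres and the same illustrative sinc parameters as in our earlier sketches, which do not come from the text.

```python
import numpy as np

b, n, step, M = 5.0, 32, 0.25, 100
x = -b + (np.arange(n) + 0.5) * (2.0 * b / n)              # cell centres (origin excluded)
t = np.exp(step * np.arange(-M, M + 1))                    # illustrative sinc points
p = 2.0 / np.sqrt(np.pi) * step * t
U = np.cbrt(p) * np.exp(-(x[:, None] * t[None, :]) ** 2)   # CP factor of 1/||x||, all modes

# ||x||^2 = x1^2 ⊗ 1 ⊗ 1 + 1 ⊗ x2^2 ⊗ 1 + 1 ⊗ 1 ⊗ x3^2: a rank-3 (= d) CP tensor
one, sq = np.ones(n), x**2
S = [np.stack([sq, one, one], axis=1),
     np.stack([one, sq, one], axis=1),
     np.stack([one, one, sq], axis=1)]

# Hadamard product (rank 3 * R): column (a, k) of mode l is S[l][:, a] * U[:, k]
F = [(Sl[:, :, None] * U[:, None, :]).reshape(n, -1) for Sl in S]
norm_x = np.einsum("ir,jr,kr->ijk", *F)                    # full tensor, for checking only
X1, X2, X3 = np.meshgrid(x, x, x, indexing="ij")
exact = np.sqrt(X1**2 + X2**2 + X3**2)
rel_err = np.max(np.abs(norm_x - exact) / exact)
print(f"CP rank {F[0].shape[1]} (= 3R), max relative error {rel_err:.1e}")
```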

Here, we demonstrate how the RHOSVD allows one to easily compute the low-rank Tucker/CP approximation of the discretized singular potential $\frac{1}{\|x\|^3}$, x ∈ ℝ3, as well as its RS-representation, given the RS representation of the tensor P ∈ ℝn⊗3 discretizing the Newton kernel. In this example, we use the discretization of $\frac{1}{\|x\|^3}$ in the form

$$\mathbf P^{(3)} = \mathbf P \odot \mathbf P \odot \mathbf P,$$

where P(3) denotes the collocation projection discretization of $\frac{1}{\|x\|^3}$. The low-rank Tucker/CP approximation to P(3) can be computed by direct application of the RHOSVD to the above product-type representation. The RS representation of P(3) is calculated based on Lemma 4.3. Given the RS-representation Equation (31) of the discretized Newton kernel, PR, we define the low-rank CP approximation to the discretized singular part of the hydrodynamic potential, P(3), by

$$\mathbf P^{(3)}_R = \mathbf P_R \odot \mathbf P_R \odot \mathbf P_R.$$

In view of Lemma 4.3, the long-range part of the RS decomposition of $\mathbf P^{(3)}_R$ can be computed by the RHOSVD approximation to the Hadamard product of tensors

$$\mathbf P^{(3)}_{R_l} = \mathbf P_{R_l} \odot \mathbf P_{R_l} \odot \mathbf P_{R_l}.$$

Figure 8 visualizes the tensor $\mathbf P^{(3)}_R$ as well as its long-range part $\mathbf P^{(3)}_{R_l}$.

The potentials are discretized on an n × n × n Cartesian grid with n = 257, and the rank truncation threshold is chosen as ε = 10−5. The CP rank of the Newton kernel is R = 19, while we set Rl = 10. This results in the initial ranks 19³ = 6859 and 10³ = 1000 for the RHOSVD decomposition of $\mathbf P^{(3)}_R$ and $\mathbf P^{(3)}_{R_l}$, respectively. The RHOSVD decomposition reduces these large rank parameters to R′ = 122 (the Tucker rank is r = 13) and (the Tucker rank is r = 8), respectively.
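The rank arithmetic quoted above (a rank-R factor cubed by Hadamard products gives rank R³) can be reproduced with a small sketch. Under our own illustrative assumptions, we use a 24-term Newton sum (t = e^u, step 0.5, k = −19, …, 4), which is accurate to roughly 10⁻³ for 1 ≤ ||x|| ≤ 10. We also use a hypothetical 8³ collocation grid with coordinates in [1, 5], chosen away from the origin. The check confirms that P ⊙ P ⊙ P approximates 1/||x||³ and has CP rank R³ before any RHOSVD compression.

```python
import numpy as np

step = 0.5
t = np.exp(step * np.arange(-19, 5))        # 24 sinc points t_k = e^{k*step}, k = -19..4
p = 2.0 / np.sqrt(np.pi) * step * t
R = t.size

x = np.linspace(1.0, 5.0, 8)                # hypothetical grid with sqrt(3) <= ||x|| <= 5 sqrt(3)
U = np.cbrt(p) * np.exp(-(x[:, None] * t[None, :]) ** 2)   # CP factor of 1/||x||

def hadamard(Ua, Ub):
    """Column-wise (face-splitting) product: factor of a CP Hadamard product."""
    return (Ua[:, :, None] * Ub[:, None, :]).reshape(Ua.shape[0], -1)

U3 = hadamard(hadamard(U, U), U)            # CP factor of P ⊙ P ⊙ P, same for all modes
P3 = np.einsum("ir,jr,kr->ijk", U3, U3, U3, optimize=True)
X1, X2, X3 = np.meshgrid(x, x, x, indexing="ij")
exact = (X1**2 + X2**2 + X3**2) ** -1.5
rel_err = np.max(np.abs(P3 - exact) / exact)
print(R, U3.shape[1], 19**3)                # 24 13824 6859
```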

6. RHOSVD for Rank Reduction in 3D Elliptic Problem Solvers

An efficient rank reduction procedure based on the RHOSVD is a prerequisite for the development of tensor-structured solvers for three-dimensional elliptic problems. Such solvers reduce the computational complexity to an almost linear scale, O(nR), contrary to the usual O(n³) complexity.

Assume that all input data of the governing PDE are given in low-rank tensor form. A convenient tensor format for these problems is the canonical tensor representation of the governing operator, of the initial guess, and of the right-hand side. The commonly used numerical techniques are based on iterative schemes that include, at each step, multiple matrix-vector and vector-vector algebraic operations, each of which enlarges the tensor rank of the output in an additive or multiplicative way. It turns out that in common practice the most computationally intensive step of rank-structured algorithms is the adaptive rank truncation, which makes the rank truncation procedure ubiquitous.

We notice that in PDE-based mathematical models, the total numerical complexity of a particular computational scheme, i.e., the overall cost of the rank truncation, is determined by two factors. It is the product of the number of calls to the rank truncation algorithm (essentially the number of iterations) and the cost of a single RHOSVD transform (mainly determined by the rank parameter of the input tensor). In turn, both complexity characteristics depend on the quality of the rank-structured preconditioner, so the whole solution process can be optimized by a trade-off between the Kronecker rank of the preconditioner and the complexity of its implementation.

In the course of preconditioned iterations, the tensor ranks of the governing operator, the preconditioner and the iterand are multiplied. Therefore, robust rank reduction is a mandatory procedure for such techniques applied to the iterative solution of elliptic and pseudo-differential equations in rank-structured tensor format.

In particular, the RHOSVD was applied to the numerical solution of PDE-constrained optimal control problems, including the case of fractional operators [36, 39], where a complexity of order O(nR log n) was demonstrated. In higher dimensions, rank reduction in the canonical format can be performed directly (i.e., without intermediate use of the Tucker approximation) by using the cascading ALS iteration in the CP format. See [50] for the tensor-structured solution of stochastic/parametric PDEs.

7. Conclusions

We discuss theoretical and computational aspects of the RHOSVD as a tool for approximating tensors in low-rank Tucker/canonical formats. We show that this rank reduction technique is the principal ingredient in tensor-based computations for real-life problems in scientific computing and data modeling. We recall the rank reduction scheme for canonical input tensors, which is based on the RHOSVD followed by a Tucker-to-canonical transform. We present a detailed error analysis of the low-rank RHOSVD approximation to canonical tensors (possibly with large input rank) and prove a uniform bound for the relative approximation error.
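The RHOSVD step itself can be sketched in a few lines. Assume a canonical input Σ_ν ξ_ν u_ν^(1) ⊗ … ⊗ u_ν^(d) with unit-norm vectors u_ν^(ℓ). First, compute the thin SVD of each n × R side matrix U^(ℓ) = [u_1^(ℓ), …, u_R^(ℓ)]. Next, keep the r_ℓ leading left singular vectors Z^(ℓ). Finally, project the canonical vectors onto them to obtain the Tucker core, which is again a rank-R canonical tensor but of size r_1 × … × r_d. The function also returns an a-priori bound, ‖ξ‖ Σ_ℓ (Σ_{k>r_ℓ} σ_{ℓ,k}²)^{1/2}. This bound follows from a triangle-inequality argument of the kind used in the proof of Theorem 2.3 in the Appendix; the exact form given here is our own restatement. The function names and the Gaussian test data are hypothetical, and the subsequent Tucker-to-canonical transform is not shown.

```python
import numpy as np

def rhosvd(weights, factors, ranks):
    """RHOSVD of sum_nu xi_nu u_nu^(1) x ... x u_nu^(d) with unit-norm u_nu^(l).
    Returns orthonormal Tucker factors Z^(l) (n x r_l), the r_1 x ... x r_d core,
    and the a-priori bound ||xi|| * sum_l sqrt(sum_{k > r_l} sigma_{l,k}^2)."""
    Z, tail = [], 0.0
    for U, r in zip(factors, ranks):
        # thin SVD of the n x R side matrix; only left singular vectors are kept
        Zl, s, _ = np.linalg.svd(U, full_matrices=False)
        Z.append(Zl[:, :r])
        tail += np.sqrt(np.sum(s[r:] ** 2))
    bound = np.linalg.norm(weights) * tail
    # core = sum_nu xi_nu (Z^(1)^T u_nu^(1)) x ... x (Z^(d)^T u_nu^(d))
    proj = [Zl.T @ U for Zl, U in zip(Z, factors)]
    letters = "abcdefghij"[: len(factors)]
    subs = ",".join(f"{c}z" for c in letters)
    core = np.einsum(f"z,{subs}->{letters}", weights, *proj)
    return Z, core, bound

def tucker_full(Z, core):
    """Expand core x_1 Z^(1) x_2 ... x_d Z^(d) into a full array."""
    inner = "abcdefghij"[: len(Z)]
    outer = "ABCDEFGHIJ"[: len(Z)]
    subs = ",".join(f"{o}{i}" for o, i in zip(outer, inner))
    return np.einsum(f"{inner},{subs}->{outer}", core, *Z)

# Usage: rank-30 canonical tensor built from shifted Gaussians (hypothetical data)
rng = np.random.default_rng(2)
n, R, d = 40, 30, 3
x = np.linspace(-5.0, 5.0, n)
weights = rng.uniform(0.5, 1.5, R)
factors = []
for _ in range(d):
    U = np.exp(-(x[:, None] - rng.uniform(-2.0, 2.0, R)[None, :]) ** 2)
    norms = np.linalg.norm(U, axis=0)
    factors.append(U / norms)   # unit-norm canonical vectors
    weights = weights * norms   # column norms absorbed into xi
Z, core, bound = rhosvd(weights, factors, ranks=[8, 8, 8])
A_full = np.einsum("z,az,bz,cz->abc", weights, *factors)  # only for checking
err = np.linalg.norm(A_full - tucker_full(Z, core))
```

The dominant cost is d thin SVDs of n × R matrices, O(d n R min(n, R)). The full n^d array is formed above only to check the error.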

We recall that the first application of the RHOSVD was the rank-structured computation of the 3D convolution transform with the nonlocal Newton kernel in ℝ³, which is the basic operation in Hartree-Fock calculations.
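Convolution is separable, which explains why rank reduction is needed here. For rank-one terms, (a¹ ⊗ a² ⊗ a³) ∗ (b¹ ⊗ b² ⊗ b³) = (a¹ ∗ b¹) ⊗ (a² ∗ b²) ⊗ (a³ ∗ b³). A 3D convolution of canonical tensors therefore reduces to 1D convolutions, but the result has rank R_a·R_b. The sketch below is our illustration under simplifying assumptions: the weights are absorbed into the factors, the random factors merely stand in for a density and for a canonical approximation of the Newton kernel, and the helper names are hypothetical. It checks the separable result against a direct FFT-based 3D convolution.

```python
import numpy as np

def cp_convolve(fa, fb):
    """Full convolution of two canonical tensors given by factor lists
    (weights absorbed into the factors). Each pair (i, j) of rank-one terms yields
    the rank-one term (a_i^(1) * b_j^(1)) x ... x (a_i^(d) * b_j^(d)) built from
    1D convolutions, so the result has rank R_a * R_b."""
    out = []
    for A, B in zip(fa, fb):
        cols = [np.convolve(A[:, i], B[:, j])
                for i in range(A.shape[1]) for j in range(B.shape[1])]
        out.append(np.stack(cols, axis=1))
    return out

def full(factors):
    """Full 3D array of a canonical tensor with unit weights (checking only)."""
    return np.einsum("az,bz,cz->abc", *factors)

def conv_full_nd(X, Y):
    """Reference: full linear convolution of two d-dimensional arrays via padded FFTs."""
    shape = [a + b - 1 for a, b in zip(X.shape, Y.shape)]
    return np.real(np.fft.ifftn(np.fft.fftn(X, shape) * np.fft.fftn(Y, shape)))

# Usage: random factors stand in for a density (rank 2) and a kernel (rank 3)
rng = np.random.default_rng(3)
n, d = 12, 3
fa = [rng.standard_normal((n, 2)) for _ in range(d)]
fb = [rng.standard_normal((n, 3)) for _ in range(d)]
fc = cp_convolve(fa, fb)
print(fc[0].shape)  # (23, 6): length 2n - 1, rank 2 * 3
```

The separable path costs O(R_a R_b n²) per mode with direct 1D convolutions, instead of working with n³-sized arrays. However, its output rank R_a·R_b has to be reduced again, for example by the RHOSVD.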

The RHOSVD is the basic tool for the multilinear algebra in the RS tensor format, which applies sinc-analytic tensor approximation methods to the important class of radial functions in ℝᵈ. The RS format enables efficient low-rank decompositions of tensors generated by functions with multiple local cusps or singularities, because it separates their short- and long-range parts. As an example, we construct the RS tensor representation of the discretized Slater function exp(−λ‖x‖), x ∈ ℝᵈ. We then describe the RS tensor approximation of various Green's kernels obtained by combining this function with other potentials. In particular, combining it with the Newton kernel yields the Yukawa potential. In this way, we introduce the concept of reproducing radial functions. This concept paves the way for efficient RS tensor decompositions of a wide range of function-related multidimensional data, obtained by combining the multilinear algebra in the RS tensor format with the RHOSVD rank reduction technique.
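The following is a minimal sketch of range separation for the Slater function. It assumes a Gaussian-sum representation obtained from the identity exp(−λr) = λ/(2√π) ∫_0^∞ t^{−3/2} exp(−λ²/(4t) − r²t) dt, discretized by the sinc (trapezoidal) rule after the substitution t = e^s. Every term c_k exp(−t_k‖x‖²) is separable in the Cartesian coordinates. The sum is therefore a canonical tensor whose rank equals the number of terms. We split the terms by their exponent t_k. This yields a short-range part that is negligible away from the origin and a smooth long-range part. The threshold t_cut = 1, the step size and the function names are our illustrative choices, and this is not necessarily the construction used in the paper.

```python
import numpy as np

def slater_gaussian_sum(lam, h=0.25, M=160):
    """Gaussian sum exp(-lam*r) ~ sum_k c_k exp(-t_k r^2) from the sinc (trapezoidal)
    rule applied, with t = e^s, to
    exp(-lam*r) = lam/(2*sqrt(pi)) * int_0^inf t^(-3/2) exp(-lam^2/(4t) - r^2 t) dt."""
    s = h * np.arange(-M, M + 1)
    t = np.exp(s)
    c = lam / (2.0 * np.sqrt(np.pi)) * h * np.exp(-s / 2.0 - lam**2 * np.exp(-s) / 4.0)
    keep = c > 1e-16 * c.max()   # drop terms that underflow to (numerical) zero
    return c[keep], t[keep]

def eval_sum(c, t, r):
    """Evaluate sum_k c_k exp(-t_k r^2) at the radii r."""
    return np.exp(-np.outer(r**2, t)) @ c

def range_split(c, t, t_cut):
    """Short-range part: sharp Gaussians (t_k > t_cut); long-range part: the rest."""
    short = t > t_cut
    return (c[short], t[short]), (c[~short], t[~short])

# Usage with lam = 1 and the illustrative threshold t_cut = 1
lam = 1.0
c, t = slater_gaussian_sum(lam)
r = np.linspace(0.0, 10.0, 501)
approx = eval_sum(c, t, r)
err = np.max(np.abs(approx - np.exp(-lam * r)))
(cs, ts), (cl, tl) = range_split(c, t, t_cut=1.0)
short_far = np.max(np.abs(eval_sum(cs, ts, r[r >= 6.0])))
print(len(c), len(cs), len(cl))  # canonical ranks of the total, short and long parts
```

For r ≥ 6, every short-range term is damped by at least exp(−36). For many shifted centers, only the smooth long-range parts then need to be summed and rank-reduced, while the short-range parts stay local.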

Our next example concerns the application of the RHOSVD to low-rank tensor interpolation of scattered data. Our numerical tests demonstrate the efficiency of this approach for a multi-Slater interpolant with many measurement points. We apply the RHOSVD to data generated with random or function-modulated sample amplitudes. In both cases, we demonstrate numerically that the rank of the long-range part remains small and depends only weakly on the number of samples.

Finally, we note that the described RHOSVD algorithms have proven efficient in a number of recent applications. These include rank reduction in tensor-structured iterative solvers for PDE-constrained optimal control problems (including fractional control), the construction of range-separated tensor representations for calculating the electrostatic potentials of many-particle systems (arising in protein modeling), and the numerical analysis of large scattered data in ℝᵈ.

Data Availability Statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author/s.

Author Contributions

BK: mathematical theory, basic concepts, and manuscript preparation. VK: basic concepts, numerical simulations, and manuscript preparation. All authors contributed to the article and approved the submitted version.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Footnotes

1. Otherwise, one cannot avoid the “curse of dimensionality”; see the cost of the HT/TT SVD above.

References

1. Khoromskij BN. Tensor Numerical Methods in Scientific Computing. Berlin: De Gruyter Verlag (2018).

2. Khoromskaia V, Khoromskij BN. Tensor Numerical Methods in Quantum Chemistry. Berlin: De Gruyter Verlag (2018).

4. Comon P, Luciani X, De Almeida ALF. Tensor decompositions, alternating least squares and other tales. J Chemometr. (2009) 23:393–405. doi: 10.1002/CEM.1236

5. Smilde A, Bro R, Geladi P. Multi-Way Analysis With Applications in the Chemical Sciences. Wiley (2004).

6. Cichocki A, Lee N, Oseledets I, Pan AH, Zhao Q, Mandic DP. Tensor networks for dimensionality reduction and large-scale optimization: part 1 low-rank tensor decompositions. Found Trends Mach Learn (2016) 9:249–429. doi: 10.1561/2200000059

7. De Lathauwer L, De Moor B, Vandewalle J. A multilinear singular value decomposition. SIAM J. Matrix Anal. Appl. (2000) 21:1253–78. doi: 10.1137/S0895479896305696

8. Ten Berge JMF, Sidiropoulos ND. On uniqueness in CANDECOMP/PARAFAC. Psychometrika (2002) 67:399–409.

9. Sidiropoulos ND, De Lathauwer L, Fu X, Huang K, Papalexakis EE, Faloutsos C. Tensor decomposition for signal processing and machine learning. IEEE Trans Signal Process. (2017) 65:3551–82. doi: 10.1109/TSP.2017.2690524

10. Golub GH, Van Loan CF. Matrix Computations. Baltimore, MD: Johns Hopkins University Press (1996).

11. Hackbusch W, Khoromskij BN. Low-rank Kronecker product approximation to multi-dimensional nonlocal operators. Part I. Separable approximation of multi-variate functions. Computing. (2006) 76:177–202. doi: 10.1007/s00607-005-0144-0

12. Khoromskij BN, Khoromskaia V. Low rank Tucker-type tensor approximation to classical potentials. Central Eur J Math. (2007) 5:523–50. doi: 10.2478/s11533-007-0018-0

13. Marcati C, Rakhuba M, Schwab C. Tensor rank bounds for point singularities in ℝ³. E-preprint arXiv:1912.07996, (2019).

14. Hitchcock FL. The expression of a tensor or a polyadic as a sum of products. J Math Phys. (1927) 6:164–89.

15. Harshman RA. Foundations of the PARAFAC procedure: models and conditions for an “explanatory” multimodal factor analysis. UCLA Working Papers in Phonetics. (1970) 16:1–84.

16. Tucker LR. Some mathematical notes on three-mode factor analysis. Psychometrika (1966) 31:279–311.

17. Oseledets IV, Tyrtyshnikov EE. Breaking the curse of dimensionality, or how to use SVD in many dimensions. SIAM J Sci Comput. (2009) 31:3744–59. doi: 10.1137/090748330

18. Oseledets IV. Tensor-train decomposition. SIAM J Sci Comput. (2011) 33:2295–317. doi: 10.1137/090752286

19. Hackbusch W, Kühn S. A new scheme for the tensor representation. J. Fourier Anal. Appl. (2009) 15:706–22. doi: 10.1007/s00041-009-9094-9

20. Khoromskij BN. O(d log N)-quantics approximation of N-d tensors in high-dimensional numerical modeling. Construct Approx. (2011) 34:257–89. doi: 10.1007/S00365-011-9131-1

21. Oseledets I. Constructive representation of functions in low-rank tensor formats. Constr. Approx. (2013) 37:1–18. doi: 10.1007/s00365-012-9175-x

22. Kressner D, Steinlechner M, Uschmajew A. Low-rank tensor methods with subspace correction for symmetric eigenvalue problems. SIAM J Sci Comput. (2014) 36:A2346–68. doi: 10.1137/130949919

23. Litvinenko A, Keyes D, Khoromskaia V, Khoromskij BN, Matthies HG. Tucker tensor analysis of Matern functions in spatial statistics. Comput. Meth. Appl. Math. (2019) 19:101–22. doi: 10.1515/cmam-2018-0022

24. Rakhuba M, Oseledets I. Fast multidimensional convolution in low-rank tensor formats via cross approximation. SIAM J Sci Comput (2015) 37:A565–82. doi: 10.1137/140958529

25. Uschmajew A. Local convergence of the alternating least squares algorithm for canonical tensor approximation. SIAM J Mat Anal Appl (2012) 33:639–52. doi: 10.1137/110843587

26. Hackbusch W, Uschmajew A. Modified iterations for data-sparse solution of linear systems. Vietnam J Math. (2021) 49:493–512. doi: 10.1007/s10013-021-00504-9

27. Khoromskaia V. Numerical Solution of the Hartree-Fock Equation by Multilevel Tensor-structured methods. Berlin: TU Berlin (2010).

28. Khoromskaia V, Khoromskij BN. Grid-based lattice summation of electrostatic potentials by assembled rank-structured tensor approximation. Comp. Phys. Commun. (2014) 185:3162–74. doi: 10.1016/j.cpc.2014.08.015

29. Khoromskij BN, Khoromskaia V, Flad H-J. Numerical solution of the Hartree-Fock equation in multilevel tensor-structured format. SIAM J. Sci. Comput. (2011) 33:45–65. doi: 10.1137/090777372

30. Dolgov SV, Khoromskij BN, Oseledets I. Fast solution of multi-dimensional parabolic problems in the TT/QTT formats with initial application to the Fokker-Planck equation. SIAM J. Sci. Comput. (2012) 34:A3016–38. doi: 10.1137/120864210

31. Kazeev V, Khammash M, Nip M, Schwab C. Direct solution of the chemical master equation using quantized tensor trains. PLoS Comput Biol. (2014) 10:e1003359. doi: 10.1371/journal.pcbi.1003359

32. Benner P, Khoromskaia V, Khoromskij BN. Range-separated tensor format for many-particle modeling. SIAM J Sci Comput. (2018) 40:A1034–62. doi: 10.1137/16M1098930

33. Benner P, Khoromskaia V, Khoromskij BN, Kweyu C, Stein M. Regularization of Poisson–Boltzmann type equations with singular source terms using the range-separated tensor format. SIAM J Sci Comput. (2021) 43:A415–45. doi: 10.1137/19M1281435

34. Khoromskij BN. Range-separated tensor decomposition of the discretized Dirac delta and elliptic operator inverse. J Comput Phys. (2020) 401:108998. doi: 10.1016/j.jcp.2019.108998

35. Kweyu C, Khoromskaia V, Khoromskij B, Stein M, Benner P. Solution decomposition for the nonlinear Poisson-Boltzmann equation using the range-separated tensor format. arXiv preprint arXiv:2109.14073. (2021).

36. Heidel G, Khoromskaia V, Khoromskij BN, Schulz V. Tensor product method for fast solution of optimal control problems with fractional multidimensional Laplacian in constraints. J Comput Phys. (2021) 424:109865. doi: 10.1016/j.jcp.2020.109865

37. Dolgov S, Kalise D, Kunisch K. Tensor decomposition methods for high-dimensional Hamilton–Jacobi–Bellman equations. SIAM J. Sci. Comput. (2021) 43:A1625–50. doi: 10.1137/19M1305136

38. Dolgov S, Pearson JW. Preconditioners and tensor product solvers for optimal control problems from chemotaxis. SIAM J. Sci. Comput. (2019) 41:B1228–53. doi: 10.1137/18M1198041

39. Schmitt B, Khoromskij BN, Khoromskaia V, Schulz V. Tensor method for optimal control problems constrained by fractional three-dimensional elliptic operator with variable coefficients. Numer. Lin Algeb Appl. (2021) 1–24:e2404. doi: 10.1002/nla.2404

40. Bachmayr M, Schneider R, Uschmajew A. Tensor networks and hierarchical tensors for the solution of high-dimensional partial differential equations. Found Comput Math. (2016) 16:1423–72. doi: 10.1007/s10208-016-9317-9

41. Boiveau T, Ehrlacher V, Ern A, Nouy A. Low-rank approximation of linear parabolic equations by space-time tensor Galerkin methods. ESAIM Math Model Numer Anal (2019) 53:635–58. doi: 10.1051/m2an/2018073

42. Espig M, Hackbusch W, Litvinenko A, Matthies HG, Zander E. Post-processing of high-dimensional data. (2019) E-Preprint arXiv:1906.05669.

43. Lubich C, Rohwedder T, Schneider R, Vandereycken B. Dynamical approximation of hierarchical Tucker and tensor-train tensors. SIAM J Matrix Anal. Appl. (2013) 34:470–94. doi: 10.1137/120885723

44. Litvinenko A, Marzouk Y, Matthies HG, Scavino M, Spantini A. Computing f-divergences and distances of high-dimensional probability density functions–low-rank tensor approximations. E-preprint arXiv:2111.07164. (2021).

45. Grasedyck L. Hierarchical singular value decomposition of tensors. SIAM. J. Matrix Anal. Appl. (2010) 31:2029. doi: 10.1137/090764189

46. Khoromskij BN, Khoromskaia V. Multigrid tensor approximation of function related arrays. SIAM J. Sci. Comput. (2009) 31:3002–26. doi: 10.1137/080730408

47. Ehrlacher V, Grigori L, Lombardi D, Song H. Adaptive hierarchical subtensor partitioning for tensor compression. SIAM J. Sci. Comput. (2021) 43:A139–63. doi: 10.1137/19M128689X

48. Kressner D, Uschmajew A. On low-rank approximability of solutions to high-dimensional operator equations and eigenvalue problems. Lin Algeb Appl. (2016) 493:556–72. doi: 10.1016/J.LAA.2015.12.016

49. Oseledets IV, Rakhuba MV, Uschmajew A. Alternating least squares as moving subspace correction. SIAM J Numer Anal. (2018) 56:3459–79. doi: 10.1137/17M1148712

50. Khoromskij BN, Schwab C. Tensor-structured Galerkin approximation of parametric and stochastic elliptic PDEs. SIAM J. Sci. Comput. (2011) 33:364–85. doi: 10.1137/100785715

51. Khoromskij BN. Structured rank-(r_1, …, r_d) decomposition of function-related tensors in ℝᵈ. Comput. Meth. Appl. Math. (2006) 6:194–220. doi: 10.2478/cmam-2006-0010

54. Khoromskaia V, Khoromskij BN. Prospects of Tensor-Based Numerical Modeling of the Collective Electrostatics in Many-Particle Systems. Comput Math Math Phys. (2021) 61:864–86. doi: 10.1134/S0965542521050110

55. Matérn B. Spatial Variation, Vol. 36 of Lecture Notes in Statistics. 2nd Edn. Berlin: Springer-Verlag (1986).

8. Appendix: Proofs of Theorem 2.3 and Lemma 2.5

Proof of Theorem 2.3.

Proof. Using the contracted product representations of and , and introducing the ℓ-mode residual

with notations we arrive at the following expansion for the approximation error in the form

where

This leads to the error bound (by the triangle inequality)

providing the estimate (in view of , ℓ = 1, …, d, ν = 1, …, R)

Furthermore, since U^(ℓ) has normalized columns, i.e., we obtain for ℓ = 1, …, d, ν = 1, …, R. Now the error estimate follows

The case R < n can be analyzed along the same lines.□

Proof of Lemma 2.5.

Proof. (A) The canonical vectors of any test element on the left-hand side of (11),

can be chosen in , that means

Indeed, assuming

we conclude that does not affect the cost function in (11) because of the orthogonality of V^(ℓ). Hence, setting , and plugging (A2) into (A1), we arrive at the desired Tucker decomposition of Z, This implies

On the other hand, we have

This proves (11).