- ¹Department of Industrial Engineering, University of Florence, Florence, Italy

- ²Interuniversity Center of Integrated Systems for the Marine Environment, Genoa, Italy

Underwater robots are nowadays employed for many different applications; over the last decades, a wide variety of robotic vehicles, differing in shape, size, navigation system, and payload, have been developed by both companies and research institutes. While market needs constitute the real benchmark for commercial vehicles, novel approaches developed during research projects represent the standard for academia and research bodies. An interesting opportunity for comparing the performance of autonomous vehicles lies in robotics competitions, which serve as a useful testbed for state-of-the-art underwater technologies and as a chance for the constructive evaluation of the strengths and weaknesses of the participating platforms. In this framework, over the last few years, the Department of Industrial Engineering of the University of Florence has participated in multiple robotics competitions, employing different vehicles. In particular, in September 2017 the team from the University of Florence took part in the European Robotics League Emergency Robots competition held in Piombino (Italy) using FeelHippo AUV, a compact and lightweight Autonomous Underwater Vehicle (AUV). Despite its size, FeelHippo AUV possesses a complete navigation system, able to offer good navigation accuracy, and diverse payload acquisition and analysis capabilities. This paper reports the main field results obtained by the team during the competition, with the aim of showing how it is possible to achieve satisfying performance (in terms of both navigation precision and payload data acquisition and processing) even with small-size vehicles such as FeelHippo AUV.

1. Introduction

Unmanned underwater vehicles, both teleoperated and autonomous, are nowadays employed for many applications, effectively helping human operators perform a wide variety of tasks (or even replacing them during their execution) (CADDY; Mišković et al., 2016). Underwater vehicles come in different shapes and sizes: from those with a length of several meters and a weight of hundreds of kilograms (e.g., Rigaud, 2007; Furlong et al., 2012; Kaiser et al., 2016) to more compact and lightweight ones (for instance Hiller et al., 2012; Crowell, 2013; McCarter et al., 2014). While bigger vehicles naturally allow the use of more complex instrumentation and can carry heavy payloads, smaller vehicles are commonly associated with lower performance and limited payload carrying capabilities. Hence, one of the current challenges that designers of small vehicles need to face is the optimization of the available space on board.

In this framework, the Mechatronics and Dynamic Modeling Laboratory (MDM Lab) of the Department of Industrial Engineering of the University of Florence (UNIFI DIEF) has been active in the field of underwater robotics since 2011, participating in different robotics-related research projects and developing and building several AUVs since then. Furthermore, throughout the years, UNIFI DIEF took part in multiple student and non-student robotics competitions. A team from UNIFI DIEF (UNIFI Team) took part in the Student Autonomous Underwater Vehicles Challenge - Europe (SAUC-E) competition (Ferri et al., 2015) in 2012, 2013, and 2016, while in 2015 the team participated in euRathlon (Ferri et al., 2016); finally, it took part in the European Robotics League (ERL) Emergency Robots competition in September 2017 (Ferri et al., 2017).

This paper reports the field experience of the UNIFI Team at ERL Emergency Robots 2017, held in Piombino (Italy), from the 15th to the 23rd of September. During the nine competition days, the robots of the participating teams competed in a set of tasks in the land, air, and sea domains. This paper focuses on the results obtained in the sea domain with FeelHippo AUV: in particular, it will be shown how this vehicle, despite its small size, possesses a complete navigation system capable of offering satisfying accuracy while navigating autonomously; at the same time, it will be demonstrated how the diverse payload the vehicle is equipped with can be exploited for different purposes. In other words, the mechatronic design has been conceived as a suitable trade-off between portability and high performance.

Other AUVs used in student robotics competitions can be found for example in Fietz et al. (2014) and Carreras et al. (2018) (the winner of ERL Emergency Robots 2017). The remainder of the paper is organized as follows: section 2 and section 3 are dedicated to the description of FeelHippo AUV; while the former focuses on the mechanical design of the vehicle and on the onboard devices, the latter describes its software architecture, giving an overview of its navigation system and describing some of its payload analysis and processing capabilities. Section 4 reports the most significant results obtained during the competition, and section 5 concludes the paper.

2. FeelHippo AUV: Description

FeelHippo AUV has been designed and developed specifically for the participation in student robotics competitions; it has been used by a team of UNIFI DIEF during SAUC-E 2013, euRathlon 2015, and ERL Emergency Robots in 2017.

In addition to student competitions, FeelHippo AUV has been used for short navigation missions, mainly in shallow waters, from 2015 onward. Thanks to the sensors added for the competition, the level of performance achieved was satisfying; hence, it was decided to incorporate such devices within the standard equipment of the vehicle. From early to mid 2017, FeelHippo AUV underwent a major overhaul, in terms of both mechanical components (identifying those parts and subsystems that could be redesigned to increase overall functionality) and navigation sensors (the permanent integration of new instrumentation indeed required a general revision of the electronics of the vehicle, in order to optimize the occupied volume). In particular, the old oil-filled thrusters were replaced with thrusters manufactured by BlueRobotics and tailored to underwater applications. In addition, a new DVL by Nortek has been placed under the center of gravity of the vehicle, whereas the previous one was positioned in the stern; as a consequence, the stability of the vehicle is increased. More information concerning the payload can be found in the following. In its current version (as of end 2017, Figure 1), FeelHippo AUV can be efficiently used as a small survey and inspection AUV, suitable for use in present and future research projects or, generally speaking, autonomous sea operations.

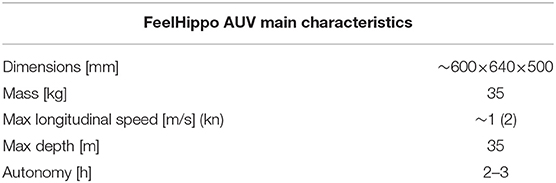

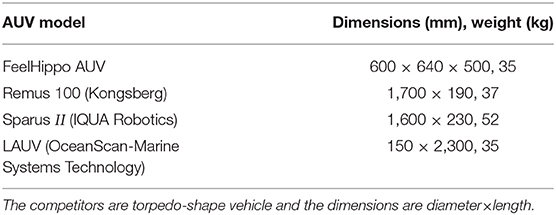

The main characteristics of the vehicle are reported in Table 1; the reduced dimensions and weight, together with the convenient handles visible in Figure 1, allow for easy transportation and deployment (no more than two people are required, and even deployment from shore is possible).

The central body of FeelHippo AUV is composed of a Plexiglass® hull with an internal diameter of 200 mm and a thickness of 5 mm, which houses all the non-watertight hardware and electronics. Two metal flanges constitute the connection between the central body and the Plexiglass® domes at each end of the main hull, and two O-rings ensure a watertight connection between the central body and the domes.

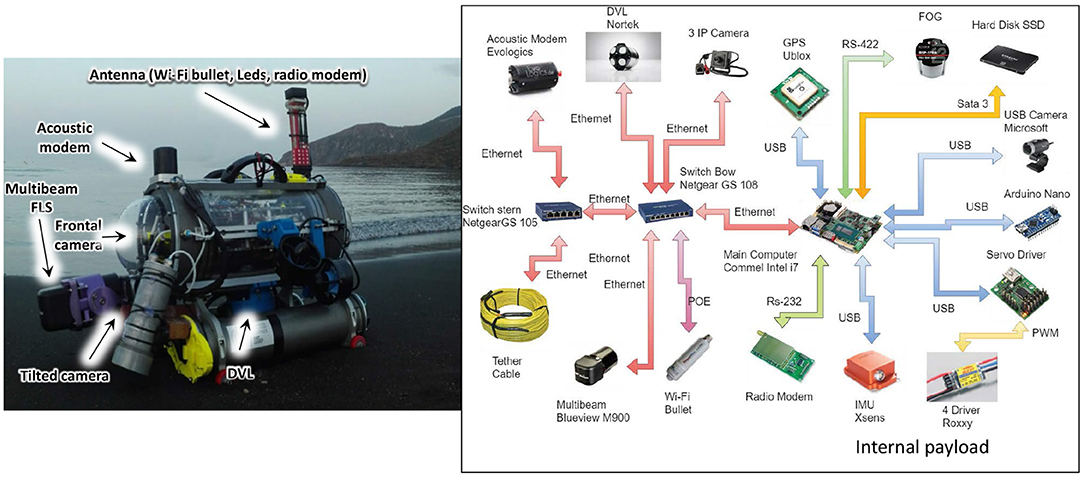

Four thrusters in vectored configuration (two on the stern and two on the lateral sides, tilted by 45°), used to control translational motion and yaw (limited roll and pitch are guaranteed by hydrostatic stability), are connected to the central frame by custom-made 3-D printed plastic parts. Concerning the internal electronics, all the components are mounted on two parallel Plexiglass® planes, placed on linear guides that facilitate assembly and maintenance operations (allowing the internal components to be easily extracted from the central body of the vehicle). An Intel i7 Mobile CPU is used for onboard processing, while the sensor set FeelHippo AUV is equipped with includes:

• U-blox 7P precision Global Positioning System (GPS);

• Xsens MTi-300 AHRS, composed of triaxial accelerometers, gyroscopes and magnetometers;

• Nortek DVL1000 Doppler Velocity Log (DVL), measuring linear velocity and also acting as Depth Sensor (DS). The device has been placed under the central body of the AUV; indeed, since this component is quite heavy (~2.7 kg in air), this choice increases stability in water;

• KVH DSP 1760 single-axis high precision Fiber Optic Gyroscope (FOG) for a precise measurement of the vehicle heading.

As regards communication, in addition to a WiFi access point and a radio modem, an EvoLogics S2CR 18/34 acoustic modem is used underwater; moreover, a custom-made antenna houses four rows of RGB LEDs, used for easy optical communication of the state of the vehicle (e.g., low battery, acquisition of the GPS fix, mission start) while the vehicle is on the surface. Regarding payload, the following devices are currently mounted on the vehicle:

• One Microsoft Lifecam Cinema forward-looking camera, which also allows teleoperated guidance;

• One bottom-looking ELP 720p MINI IP camera;

• Two lateral ELP 1080p MINI IP cameras, used for stereo vision;

• One Teledyne BlueView M900 2D Forward-Looking SONAR (FLS).

A scheme of the connections (logical and physical) among the components of the vehicle is reported in Figure 2. Despite its reduced size, FeelHippo AUV can carry a diverse payload, both optical and acoustic. Furthermore, thanks to its particular structure, additional small devices (e.g., supplementary cameras or LED illuminators) can be added to the main body of the vehicle with ease. More information about FeelHippo AUV versions from 2013 to 2017 can be found in Fanelli (2019), whereas more recent versions are described in Franchi et al. (2019). A comparison (in terms of dimensions and weight) with other competitors is reported in Table 2.

With the aim of highlighting the compactness of FeelHippo AUV, its physical data are compared with other AUVs present on the market.

3. FeelHippo AUV: Software Architecture

The software architecture is modular, with independent processes that share information through an adapted TCP/IP protocol called Transmission Control Protocol for Robot Operating System (TCPROS) (Amaran et al., 2015; ROS). Section 3.1 gives a quick overview of the Guidance, Navigation, and Control (GNC) system, whereas section 3.2 describes how the acoustic payload is managed.

3.1. FeelHippo AUV: Guidance, Navigation, and Control System

Thanks to the navigation sensors available on board, introduced in section 2, FeelHippo AUV is capable of successfully performing autonomous navigation missions for the full extent of its battery charge without the need to resurface: thanks to a careful mechanical design, the vehicle is able to house position, depth, inertial, magnetic field, and velocity sensors inside its main body, thus featuring a complete navigation system used to compute the pose of the AUV in real time. Additionally, thanks to the presence of an acoustic modem, the vehicle is able to receive acoustic position fixes sent by dedicated instrumentation (e.g., Long, Short, or Ultra-Short BaseLine systems), which can be integrated within its GNC system and exploited to correct the pose estimated on board while underwater (or in any GPS-denied scenario).

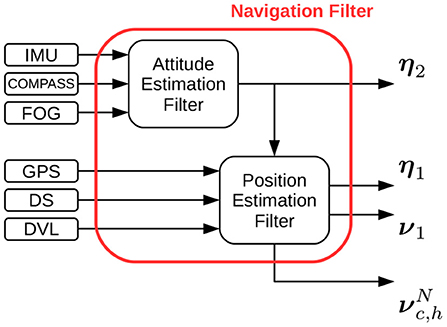

The navigation filter of FeelHippo AUV is the same as that of the other AUVs of the MDM Lab, exploiting all the features developed at the University of Florence during past and present research projects; hence, this section only briefly reviews the core concepts.

The navigation system is used to determine an accurate estimate of the pose of the vehicle with respect to a local Earth-fixed reference frame whose axes point, respectively, North, East, and Down (NED frame). Resorting to the classic notation used to describe the motion of underwater vehicles (Fossen et al., 1994), this quantity is denoted with η = [η1ᵀ η2ᵀ]ᵀ, where η1 indicates the position of the AUV and η2 its orientation (expressed through a triplet of Euler angles; roll, pitch, and yaw are used in this context). Additionally, let us denote with ν = [ν1ᵀ ν2ᵀ]ᵀ the velocity (linear and angular) of the vehicle with respect to a body-fixed reference frame, and with τ ∈ ℝ⁶ the vector of forces and moments acting on the AUV.

A parallel structure has been chosen (refer to Figure 3): attitude is independently estimated using IMU, compass, and FOG data, and constitutes an input that is fed to the position estimation filter. In particular, the attitude estimation filter is based on the nonlinear observer proposed in Mahony et al. (2008), whose principle is to integrate the angular rates measured by the gyroscopes and to correct the obtained values by exploiting accelerometers and magnetometers. The structure of the original filter proposed in Mahony et al. (2008) has then been suitably modified in order to better adapt it to the underwater field of application (Allotta et al., 2015; Costanzi et al., 2016); in particular, a real-time strategy to detect external magnetic disturbances (which would detrimentally affect the yaw estimate) has been developed in order to maintain the accuracy of the computed estimate in a wide variety of possible environmental conditions, promptly discarding corrupted compass readings and relying on the high-precision single-axis FOG.
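As a rough illustration of the predict-and-correct principle described above, the following single-axis sketch integrates a gyroscope rate and applies a small compass correction only when the compass reading is trusted. It is not the observer of Mahony et al. (2008) nor the disturbance detection strategy of Costanzi et al. (2016): the norm-based gate, the function names, and the values of `gain` and `norm_tol` are assumptions made purely for illustration.

```python
import math


def wrap_angle(angle):
    """Wrap an angle to the interval (-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def yaw_update(yaw, gyro_z, dt, compass_yaw=None, mag_norm=None,
               expected_norm=1.0, norm_tol=0.1, gain=0.05):
    """One step of a single-axis complementary yaw filter (illustrative only).

    yaw           : previous yaw estimate [rad]
    gyro_z        : yaw rate from the gyroscope or FOG [rad/s]
    compass_yaw   : yaw derived from the magnetometers [rad], or None
    mag_norm      : norm of the measured magnetic field (normalized units)
    expected_norm : norm expected in an undisturbed environment (assumed known)
    norm_tol      : hypothetical relative tolerance used to flag disturbances
    gain          : hypothetical correction gain
    """
    # Prediction: integrate the angular rate.
    yaw_pred = wrap_angle(yaw + gyro_z * dt)

    # No compass data available: rely on the rate integration only.
    if compass_yaw is None or mag_norm is None:
        return yaw_pred

    # Placeholder disturbance gate (assumption): discard the compass reading
    # when the measured field norm departs too much from the expected one.
    if abs(mag_norm - expected_norm) > norm_tol * expected_norm:
        return yaw_pred

    # Correction: pull the prediction slightly toward the compass reading.
    innovation = wrap_angle(compass_yaw - yaw_pred)
    return wrap_angle(yaw_pred + gain * innovation)
```

With a trusted compass the estimate drifts slowly toward the magnetic heading; when the reading is flagged as disturbed, the estimate evolves on the gyroscope alone, which is where a low-drift FOG becomes valuable.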

As regards position estimation, in addition to being able to navigate in dead reckoning (which has proven to be satisfyingly reliable, despite its straightforward philosophy, if the adopted sensors are sufficiently accurate), the vehicle can resort to an Unscented Kalman Filter (UKF)-based estimator. Such a filter makes use of a mixed kinematic/dynamic vehicle model (so as to capture more information about the evolution of the system than a purely kinematic model, while placing a lower burden on the processing unit of the vehicle than a complete dynamic model), taking into account longitudinal dynamics only (most of the motion of a torpedo-shaped AUV takes place along the direction of forward motion, since it usually constitutes the direction of minimal resistance).

The reader can refer to Allotta et al. (2016), Caiti et al. (2018), and Costanzi et al. (2018) for more details.
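The dead reckoning strategy mentioned above can be sketched in a few lines: body-frame velocities (such as those measured by a DVL) are rotated into the NED frame using the yaw from the attitude filter and then integrated over time. This is a minimal planar sketch under stated assumptions (negligible roll and pitch, constant time step); the function names are illustrative and do not come from the vehicle software.

```python
import math


def dead_reckoning_step(north, east, yaw, u, v, dt):
    """Advance a planar NED position by one time step.

    north, east : current position in the NED frame [m]
    yaw         : heading [rad], 0 = North, positive toward East
    u, v        : surge and sway velocities in the body frame [m/s]
    dt          : time step [s]
    """
    # Rotate the body-frame velocity into the NED frame (roll/pitch neglected).
    north_dot = u * math.cos(yaw) - v * math.sin(yaw)
    east_dot = u * math.sin(yaw) + v * math.cos(yaw)
    # Forward Euler integration.
    return north + north_dot * dt, east + east_dot * dt


def integrate_path(samples, dt, start=(0.0, 0.0)):
    """Integrate a sequence of (yaw, u, v) samples starting from `start`."""
    north, east = start
    for yaw, u, v in samples:
        north, east = dead_reckoning_step(north, east, yaw, u, v, dt)
    return north, east


if __name__ == "__main__":
    # Ten seconds heading East at 0.5 m/s, sampled at 1 Hz.
    samples = [(math.pi / 2, 0.5, 0.0)] * 10
    print(integrate_path(samples, dt=1.0))
```

Because every velocity and heading error is integrated, the position error of such a scheme grows with time, which is why sensor accuracy and periodic position fixes (GPS or acoustic) matter.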

3.2. Payload Acquisition and Processing

Object detection and mapping is a typical problem in the underwater domain. Research on this topic is crucial for both AUVs and Remotely Operated Vehicles (ROVs), allowing them to understand their surroundings. Unfortunately, different and a priori unknown scenarios, which affect the robot-environment interaction, need to be faced. In particular, poor visibility conditions in murky and turbid waters can compromise the operation of optical devices. To overcome these issues, FeelHippo AUV is equipped, as stated in section 2, with an FLS. This section proposes an acoustic-based buoy detection algorithm, reinforced by exploiting the known geometric dimensions of a static target.

3.2.1. FLS-Based Buoy Detection

The main concepts behind the algorithm are outlined below:

• The acoustic video is acquired by one Teledyne BlueView M900 2D FLS and is then split, in real time, into a sequence of 8-bit grayscale images;

• Each frame is blurred with a Gaussian filter, leading to a smoother image;

• In order to detect high-reflection areas, which are likely to belong to a target object rather than to reverberation caused by clutter, a direct binary threshold is applied to all the acoustic images. Let us define the source image as src, the destination image (namely the one obtained after applying the binary threshold) as dst, and the threshold value as threshold ∈ [0, 255]: each pixel of dst is set to the maximum value (255) where the corresponding pixel of src exceeds threshold, and to 0 elsewhere. Note that the interval limits depend on the depth of the image; as mentioned above, 8-bit grayscale images are considered;

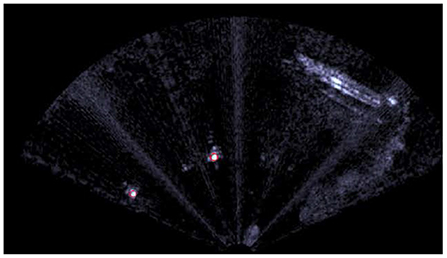

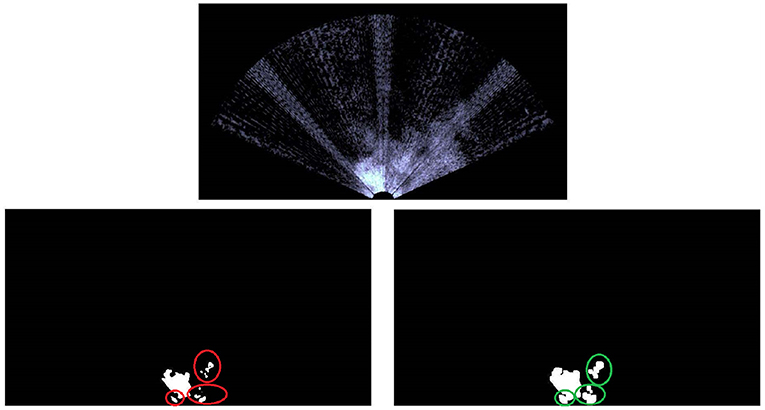

• Each frame is modified by means of morphological dilations. Because of environmental disturbances, some speckle areas, which do not belong to any buoy, can appear. Morphological operations make these areas coalesce, so that they can be easily ignored, avoiding false-positive detections. The situation is clearly visible in Figure 4, where the high-reflection areas are due to the bubbles in front of the vehicle;

• At this point, several white bounded sets are present, and geometric boolean requirements need to be met to distinguish buoy-like objects from the background. The main assumption behind the proposed method is the knowledge (even rough) of the shape and dimensions of the target to detect. The technique exploits simple geometric conditions and, accordingly, is meant to identify targets that resemble elementary geometric shapes (circles, ellipses, rectangles). Typical buoys fall within the scope of applicability of the proposed algorithm, which thus represents a good trade-off between simplicity and generality. Four geometric properties, leading to four boolean conditions, are considered, and all of them need to be satisfied. First, the area of each bounded set is checked: the condition is satisfied if the area lies between a minimum (amin) and a maximum (AMAX) value, so that regions that are too small or too big are ignored. Second, the circularity, defined in Equation (1), is checked against a minimum and a maximum value; it measures how closely the bounded set resembles a circle, and values different from one account for ellipticity.

$$\text{circularity} = \frac{4\pi A}{P^{2}} \tag{1}$$

where A is the area of the bounded set and P its perimeter. Third, the convexity, defined in Equation (2) as the ratio between the area of the set and the area of its convex hull (the smallest convex set that contains the original set), is checked with another go/no-go condition.

$$\text{convexity} = \frac{A}{A_{ch}} \tag{2}$$

where A is the area of the bounded set and Ach is the area of its convex hull; hence, convexity ∈ (0, 1].

Lastly, the inertia ratio, defined in Equation (3), is checked. The goal is to detect whether the object is elongated along a particular direction. Note that the moments of inertia are computed with respect to the center of mass of the set.

where Imax and Imin are respectively the maximum and the minimum moment of inertia (the inertia along the principal axes) and IR ∈ ℝ+.
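The four go/no-go conditions described above can be sketched on a polygonal contour as follows. This is a minimal illustration, not the authors' implementation: circularity is taken as 4πA/P² (the usual definition, equal to one for a circle), convexity as the ratio between the area of the set and that of its convex hull, and the inertia ratio is assumed here to be Imin/Imax, which is only one possible convention. The function names and the `limits` dictionary are hypothetical.

```python
import math


def _signed_area(poly):
    """Signed polygon area (shoelace formula), positive if counterclockwise."""
    return 0.5 * sum(x0 * y1 - x1 * y0
                     for (x0, y0), (x1, y1) in zip(poly, poly[1:] + poly[:1]))


def _convex_hull(points):
    """Convex hull via Andrew's monotone chain (counterclockwise order)."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def shape_descriptors(contour):
    """Area, circularity, convexity and inertia ratio of a simple polygon."""
    poly = [(float(x), float(y)) for x, y in contour]
    if _signed_area(poly) < 0:          # enforce counterclockwise orientation
        poly.reverse()
    edges = list(zip(poly, poly[1:] + poly[:1]))
    area = _signed_area(poly)
    perimeter = sum(math.hypot(x1 - x0, y1 - y0) for (x0, y0), (x1, y1) in edges)
    hull_area = abs(_signed_area(_convex_hull(poly)))

    # Centroid and second moments of area (standard polygon formulas).
    cx = cy = ixx = iyy = ixy = 0.0
    for (x0, y0), (x1, y1) in edges:
        a = x0 * y1 - x1 * y0
        cx += (x0 + x1) * a
        cy += (y0 + y1) * a
        ixx += (y0 * y0 + y0 * y1 + y1 * y1) * a
        iyy += (x0 * x0 + x0 * x1 + x1 * x1) * a
        ixy += (x0 * y1 + 2 * x0 * y0 + 2 * x1 * y1 + x1 * y0) * a
    cx, cy = cx / (6 * area), cy / (6 * area)
    # Shift the moments to the center of mass (parallel axis theorem).
    ixx = ixx / 12 - area * cy * cy
    iyy = iyy / 12 - area * cx * cx
    ixy = ixy / 24 - area * cx * cy
    # Principal moments of inertia.
    mean, dev = 0.5 * (ixx + iyy), math.hypot(0.5 * (ixx - iyy), ixy)
    i_max, i_min = mean + dev, mean - dev

    return {
        "area": area,
        "circularity": 4 * math.pi * area / perimeter ** 2,
        "convexity": area / hull_area,
        "inertia_ratio": i_min / i_max,   # assumed convention
    }


def is_buoy_like(desc, limits):
    """All conditions must hold; `limits` maps each key to (min, max)."""
    return all(lo <= desc[key] <= hi for key, (lo, hi) in limits.items())
```

For a 2 × 1 rectangle, for instance, the sketch yields a convexity of 1 and an inertia ratio of 0.25, so a lower bound on the inertia ratio would reject such an elongated region while accepting a roughly circular one.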

Figure 4. Top: the image acquired by the FLS (note the bubbles in front of the vehicle, which create a strong acoustic echo; see the white area). Bottom left: the image after binary thresholding. Bottom right: the thresholded image after morphological dilation. The red circles mark the speckle areas and the green ones their subsequent aggregation.
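The thresholding and dilation steps illustrated in Figure 4 can be reproduced on a toy image with the pure-Python sketch below. It assumes a 3 × 3 square structuring element and a plain nested-list image, both chosen only for illustration; in a library such as OpenCV the corresponding operations would presumably be `cv2.threshold` with `cv2.THRESH_BINARY` and `cv2.dilate`.

```python
def binary_threshold(src, threshold, max_value=255):
    """Set pixels above `threshold` to `max_value` and all others to 0."""
    return [[max_value if px > threshold else 0 for px in row] for row in src]


def dilate(img, iterations=1):
    """Morphological dilation with a 3x3 square structuring element.

    Each output pixel takes the maximum over its 3x3 neighborhood, so nearby
    bright speckles grow and merge into a single connected region.
    """
    height, width = len(img), len(img[0])
    for _ in range(iterations):
        out = [[0] * width for _ in range(height)]
        for r in range(height):
            for c in range(width):
                out[r][c] = max(
                    img[rr][cc]
                    for rr in range(max(0, r - 1), min(height, r + 2))
                    for cc in range(max(0, c - 1), min(width, c + 2))
                )
        img = out
    return img


if __name__ == "__main__":
    # Two bright speckles separated by one dark pixel (8-bit grayscale).
    frame = [
        [10, 20, 10, 15, 10],
        [10, 200, 30, 210, 10],
        [10, 20, 10, 15, 10],
    ]
    binary = binary_threshold(frame, threshold=128)
    merged = dilate(binary)
    for row in merged:
        print(row)
```

After one dilation the two speckles of the toy frame form a single blob, whose area and shape can then be tested against the geometric conditions so that it is not mistaken for a buoy.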

Unfortunately, as stated by Hurtós et al. (2015), FLS imagery is affected by a low Signal-to-Noise Ratio (SNR), poor resolution, and intensity variations that depend upon viewpoint changes, so some false-positive detections might arise anyway.

Assuming a static target (a buoy very often falls within this class), a position-based clustering algorithm can be exploited with the aim of removing false positives. If several detections accumulate within a small region, then what is insonified by the FLS has a high probability of being the buoy. In other words, the presence of spurious noise and moving objects (e.g., fish) can be managed by the proposed technique, leading to a robust solution. The key idea exploits the solution proposed in Ester et al. (1996) where, basically, elements with many nearby neighbors are grouped together, whereas points that lie too far from their closest neighbors are classified as outliers.
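The density-based clustering idea of Ester et al. (1996), commonly known as DBSCAN, can be sketched as follows on geolocalized detections. This is a compact, unoptimized illustration (neighbor search is brute force), and the parameter values used in the example are hypothetical rather than those adopted during the competition.

```python
import math


def dbscan(points, eps, min_pts):
    """Label each 2D point with a cluster index (0, 1, ...) or -1 for noise.

    eps     : neighborhood radius [same unit as the points]
    min_pts : minimum number of neighbors (the point itself included)
              required for a point to be a core point
    """
    unvisited, noise = None, -1
    labels = [unvisited] * len(points)

    def neighbors(i):
        xi, yi = points[i]
        return [j for j, (xj, yj) in enumerate(points)
                if math.hypot(xi - xj, yi - yj) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not unvisited:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = noise           # may later become a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == noise:
                labels[j] = cluster     # border point reached from a core point
            if labels[j] is not unvisited:
                continue
            labels[j] = cluster
            reachable = neighbors(j)
            if len(reachable) >= min_pts:
                queue.extend(reachable)  # j is a core point: keep expanding
    return labels


if __name__ == "__main__":
    detections = [
        (10.0, 5.0), (10.1, 5.0), (9.9, 5.1), (10.0, 4.9), (10.1, 5.1),  # buoy A
        (20.0, 5.0), (20.1, 5.1), (19.9, 4.9), (20.0, 5.1),              # buoy B
        (3.0, 17.0), (28.0, 1.0),                                        # spurious
    ]
    print(dbscan(detections, eps=0.5, min_pts=3))
```

Detections that repeatedly fall on the same static target end up in one cluster, while isolated echoes (for instance from a passing fish or a boat hull) are labeled as noise and can be dropped.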

To locate the exact position of the detected targets, starting from the known position of the vehicle, an imaging geometry model needs to be defined; the reader can refer to Franchi et al. (2018) for more information. In short, exploiting the work of Johannsson et al. (2010), Ferreira et al. (2014), Hurtós et al. (2015), and Walter (2008), a simplified linear model, where the FLS can be treated as an orthographic camera, is adopted.
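To give an idea of how a sonar detection can be referred to the NED frame, the sketch below projects a range and bearing measurement onto the horizontal plane starting from the vehicle pose. It is not the imaging model of Franchi et al. (2018): it assumes that the FLS sits at the vehicle origin, is aligned with the forward axis, and that elevation, roll, and pitch can be neglected. All names are illustrative.

```python
import math


def geolocate_detection(veh_north, veh_east, veh_yaw, target_range, bearing):
    """Project a sonar detection onto the NED horizontal plane.

    veh_north, veh_east : vehicle position in the NED frame [m]
    veh_yaw             : vehicle heading [rad], 0 = North, positive toward East
    target_range        : slant range of the detection [m], used as if planar
    bearing             : bearing in the sonar image [rad], positive to starboard
    """
    # Absolute direction of the acoustic return in the NED frame.
    angle = veh_yaw + bearing
    north = veh_north + target_range * math.cos(angle)
    east = veh_east + target_range * math.sin(angle)
    return north, east


if __name__ == "__main__":
    # Vehicle heading East; target 10 m dead ahead.
    print(geolocate_detection(0.0, 0.0, math.pi / 2, 10.0, 0.0))
```

Treating the slant range as a horizontal distance is acceptable only when the target is close to the depth of the sonar or the range is large compared with the vertical offset; otherwise the elevation ambiguity of the FLS introduces a position bias.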

4. ERL Emergency Robots 2017 Experimental Results

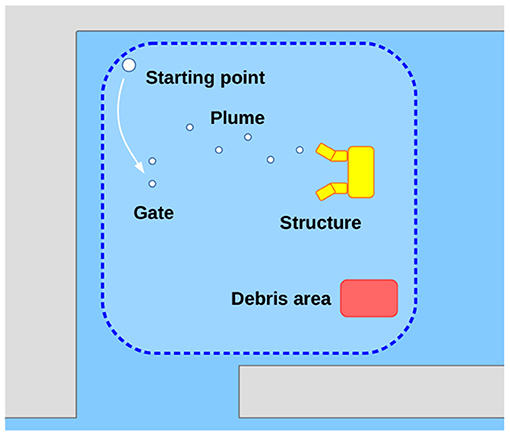

This section reports some of the results obtained during the robotics competition ERL Emergency Robots 2017, held in Piombino (Italy) in September 2017. In particular, the data shown here refer to multiple autonomous missions performed by FeelHippo AUV during the sea domain trials throughout the competition [refer to Ferri et al. (2017) for more details about the challenge]. Robots were asked to act in the following (recreated) catastrophic scenario: after an earthquake and a tsunami hit the shoreline area where a nuclear plant is located, evacuation procedures are issued; however, several people working at the plant are missing. Additionally, the premises have suffered significant damage, with their lower sections flooded; furthermore, several pipes of the plant (both on land and underwater) are leaking radioactive material. Concerning the sea domain, the area of interest was a rectangular arena of ~50 × 50 m. Beyond a starting gate, composed of two submerged buoys, lay the area of interest, where an underwater plastic pipe assembly represented the (flooded) lower section of the plant. Obviously, no substance was actually leaking; a set of five numbered underwater buoys was used to represent the leaking fluid plume (leading to a particular component of the pipe assembly, where a specific marker represented the breakage). In addition, several objects anchored on the seabed (e.g., tables and chairs) indicated a debris area where it was likely to find the body (i.e., a mannequin dressed in easily visible orange) of one of the missing workers. See Figure 5 for a graphical representation of the arena and of the objects of interest (note that the picture is not to scale, and the positions of the depicted objects are not meant to represent actual shapes or dimensions).

Each participating team was allotted an exclusive time slot in the arena; from the starting point, the vehicle had to submerge, pass through the gate (without touching it, and providing optical or acoustic images of the gate itself), and then perform different tasks without resurfacing. Among these tasks (the list given here is not exhaustive), each AUV was asked to inspect and map the area and the objects of interest (e.g., the plume, the gate, the underwater pipe assembly, the debris area) and to identify in real time the mission targets, such as the leaking pipe and the missing worker. A specific score, based on the degree of completeness and on the quality of the provided data (navigation and/or payload data, used to guarantee the veracity of the team's claims on each submission), was assigned to each task. Hence, each AUV had to (a) precisely navigate through the arena, closely following the planned path, in order to (b) efficiently make use of its own payload and payload processing algorithms, mapping the arena and identifying the objects of interest during navigation so as to score as many points as possible. In light of the above-mentioned considerations, this section is divided into two parts: at first, the focus is on the navigation performance of the vehicle, showing how FeelHippo AUV is able to follow a desired trajectory without incurring an unacceptable growth of the position estimation error over time; then, it is shown how the payload the vehicle is equipped with can be suitably used to accomplish the goals of the competition. Despite its reduced size, the optimized mechatronic design of FeelHippo AUV indeed leads to a compact yet highly functional vehicle.

4.1. Navigation Results

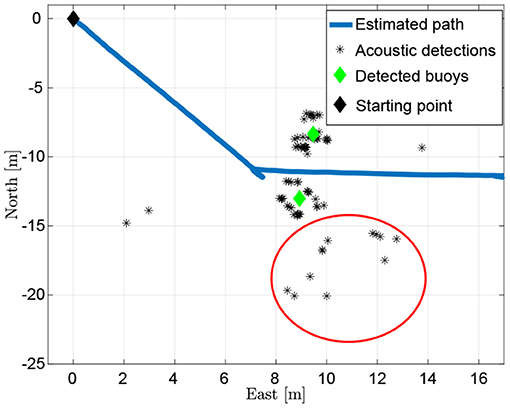

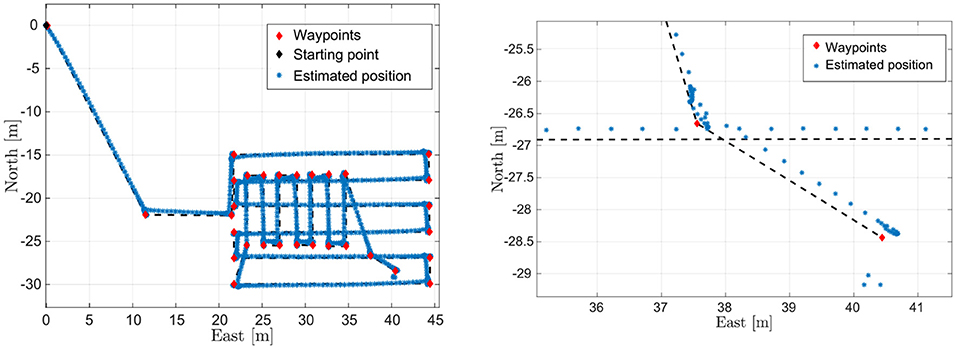

The results reported in this section refer to the mission performed by FeelHippo AUV during the final trial of the competition; hence, the path executed by the vehicle was planned according to the estimated positions of the objects of interest, evaluated from the in-water runs executed during the previous days. In particular, after passing through the gate, the vehicle autonomously performed a lawnmower path with West-East aligned transects, to cover as much as possible of the area of interest. Then, a second lawnmower path, perpendicular to the first, was executed in the northern part of the arena, where the plume buoys were supposed to be. Two final waypoints were included in the direction of the debris area in order to try to identify the objects composing the area itself or even the mannequin representing the worker.
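A lawnmower path with West-East aligned transects, like the one described above, can be generated from the bounds of the survey area and the spacing between transects. The sketch below is only an illustration: the coordinates are local NED offsets in meters, and the bounds and spacing are hypothetical values, not the ones used in the competition.

```python
def lawnmower_waypoints(north_min, north_max, east_min, east_max, spacing):
    """Return (north, east) waypoints of a lawnmower path with W-E transects.

    Consecutive transects are separated by `spacing` along the North axis
    and are traversed in alternating directions.
    """
    waypoints = []
    north = north_min
    eastbound = True
    while north <= north_max + 1e-9:
        start, end = (east_min, east_max) if eastbound else (east_max, east_min)
        waypoints.append((north, start))   # beginning of the transect
        waypoints.append((north, end))     # end of the transect
        eastbound = not eastbound          # reverse direction for the next one
        north += spacing
    return waypoints


if __name__ == "__main__":
    for wp in lawnmower_waypoints(0, 10, 0, 40, 5):
        print(wp)
```

A second, perpendicular pattern such as the one executed in the northern part of the arena can be obtained by swapping the roles of the North and East coordinates.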

Figure 6 shows the position estimate computed by FeelHippo AUV during the execution of the autonomous mission. The first waypoint (the starting point of Figure 5) was located at 42.954164° N, 10.6018952° E; the task was executed at the desired depth of 1 m (except for the last two waypoints, located at a depth of 3.5 m), with a desired longitudinal speed of 0.5 m/s and a covered path of about 240 m. The discontinuity visible in the lower-right corner of Figure 6 is due to the error between the path estimated onboard the vehicle while navigating underwater and the GPS fix acquired after resurfacing. Such error is <1 m after about 21 min of navigation (or, equivalently, <1% of the total length of the path), highlighting the satisfying accuracy of the navigation system of the vehicle. It is worth remembering that FeelHippo AUV performed the whole underwater mission autonomously, without resurfacing; communication from the ground control station to the vehicle (except for mission starts and possible emergency aborts) was specifically forbidden by the competition rules.
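As a quick check of the relative figure quoted above, using only the numbers reported in the text (a resurfacing error below 1 m and a covered path of about 240 m), and denoting with e the resurfacing error and with L the path length (symbols introduced here for convenience):

$$\frac{e}{L} < \frac{1\ \text{m}}{240\ \text{m}} \approx 0.42\% < 1\%$$

The bound of 1% of the path length is therefore met with some margin.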

Figure 6. Left: the path estimated by FeelHippo AUV. Right: close-up of the resurfacing position.

4.2. Payload Processing Results

FeelHippo AUV was asked to autonomously (and possibly in real time) find the seven buoys located in the sea domain arena (see Figure 5). Their physical characteristics in terms of color (orange), shape (approximately spherical), and dimensions (radius around 0.3 m) were known a priori.

The buoy detection took place while FeelHippo AUV was following the path described in section 4.1. The starting gate, composed of two buoys, is visible in Figure 7, whereas the result of the proposed solution is depicted in Figure 8. In the former, the rubber boat from which the judges monitored the course of the competition can be noticed in the top-right corner. In the latter, false-positive detections occur due to the presence of the rubber boat (note the red circle); nevertheless, given their scattered nature, the clustering algorithm is able to handle the situation. In particular, before applying the clustering algorithm, 82 detections take place, of which 67 are true positives and 15 are false positives. It is worth highlighting that the detection operation, as well as the target geolocalization, was conducted in real time, and that no additional geometric constraint (for example, the known distance between the buoys that compose the starting gate) was exploited for target detection.
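From the counts reported above, the fraction of correct detections before clustering (the precision, a term introduced here for convenience) follows directly:

$$\text{precision} = \frac{TP}{TP + FP} = \frac{67}{67 + 15} = \frac{67}{82} \approx 81.7\%$$

Roughly one raw detection out of five is therefore spurious, which is the share the clustering step is meant to remove.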

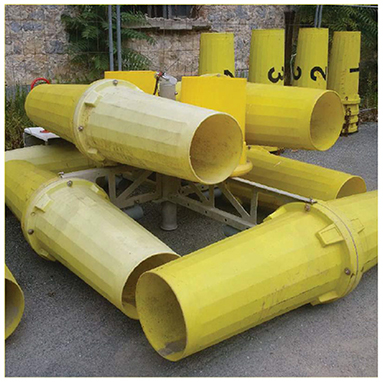

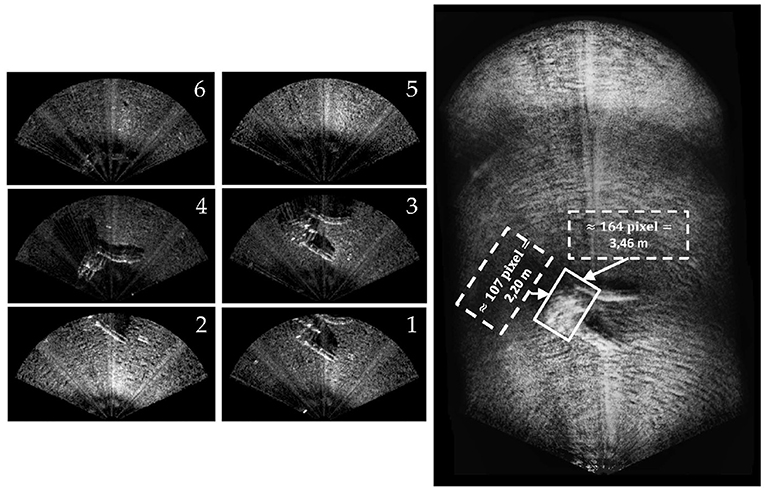

After the end of the competition, a 2D mosaic of the area around the structure (see Figures 5, 9), namely the underwater plastic pipe assembly representing the (flooded) lower section of the plant, was built. To this end, a new mission, where the FLS was mounted with a small tilt angle (~20° with respect to the water surface), was executed. For a detailed description of the acoustic mosaic formation process, the interested reader can refer to Franchi et al. (2018). The proposed solutions make use of the OpenCV library (OpenCV). The collected dataset was composed of 72 FLS images recorded along a 20 m transect. The maximum FLS range was set to 10 m and the FOV of the device was 130° (not adjustable by the user). A few FLS frames and the final composite are reported in Figure 10; in the latter, the covered area is ~500 m². Furthermore, the real dimensions of the underwater structure (which were known a priori) are in accordance with the size that can be obtained from Figure 10: the structure dimensions are about 2.20 × 3.20 × 1.20 m, whereas the dimensions obtained from the mosaic are 2.20 × 3.46 m. More information concerning the conversion from pixels to meters is presented by the authors in Franchi et al. (2018).
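The agreement between the real and the reconstructed dimensions can be quantified from the figures given above. The shorter side (2.20 m) is matched, while for the longer side the relative deviation is:

$$\frac{|3.46 - 3.20|}{3.20} = \frac{0.26}{3.20} \approx 8.1\%$$

that is, a discrepancy of 0.26 m on the longer side of the structure.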

Figure 9. The structure placed on the sea bottom (Ferri et al., 2017).

Figure 10. The 2D mosaic of the underwater structure. On the right, the dimension of the underwater structure (retrieved by means of the mosaic) is reported.

5. Conclusion

The paper shows how FeelHippo AUV, despite its small size, represents a compact and complete underwater platform, which can be employed in different application scenarios.

In particular, a reliable and versatile navigation system, able to achieve satisfying accuracy, is described in section 3.1: the vehicle can exploit a dead reckoning strategy as well as a UKF-based solution, and the field results show a relative error <1% after about 21 min of autonomous navigation.

As regards payload acquisition and processing, an acoustic-based object detection algorithm (in our case, applied to underwater buoys) is presented in section 3.2.1, together with the substantial improvements obtained through clustering techniques (usable in the presence of static targets; see Figure 8). Good detection performance, even with limited visibility ranges, is shown and, in addition, a real-time implementation is proposed. Lastly, an underwater acoustic mosaic is presented in section 4.2; the presented solution is shown to provide a satisfying 2D underwater reconstruction of areas in the order of hundreds of square meters. Future work will involve machine learning-based detection techniques and a mixed detection approach that resorts to an FLS and an optical camera.

The UNIFI Team was awarded Second-in-Class in “Pipe inspection and search for missing workers (Sea+Air)” during ERL Emergency Robots 2017.

Data Availability Statement

The datasets generated for this study are available on request to the corresponding author.

Author Contributions

MF: algorithm development, experiments, results validation, and writing. FF: experiments and results validation. MB: experiments and writing. AR: results validation, writing, and activities supervision. BA: writing and activities supervision.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors would like to acknowledge the support of Mr. Nicola Palma and Mr. Tommaso Merciai in the development of the clustering algorithm and the acoustic mosaicing (section 4.2). Moreover, special thanks go to the whole UNIFI Team.

References

Allotta, B., Caiti, A., Costanzi, R., Fanelli, F., Fenucci, D., Meli, E., et al. (2016). A new AUV navigation system exploiting unscented Kalman filter. Ocean Eng. 113, 121–132. doi: 10.1016/j.oceaneng.2015.12.058

Allotta, B., Costanzi, R., Fanelli, F., Monni, N., and Ridolfi, A. (2015). Single axis FOG aided attitude estimation algorithm for mobile robots. Mechatronics 30, 158–173. doi: 10.1016/j.mechatronics.2015.06.012

Amaran, M. H., Noh, N. A. M., Rohmad, M. S., and Hashim, H. (2015). A comparison of lightweight communication protocols in robotic applications. Proc. Comp. Sci. 76, 400–405. doi: 10.1016/j.procs.2015.12.318

CADDY (2019). Official Website of the CADDY Project. Available online at: http://www.caddy-fp7.eu (accessed January 2020).

Caiti, A., Costanzi, R., Fenucci, D., Allotta, B., Fanelli, F., Monni, N., et al. (2018). “Marine robots in environmental surveys: current developments at isme—localisation and navigation,” in Marine Robotics and Applications (Springer), 69–86.

Carreras, M., Candela, C., Ribas, D., Palomeras, N., Magí, L., Mallios, A., et al. (2018). Testing sparus ii auv, an open platform for industrial, scientific and academic applications. arXiv preprint arXiv:1811.03494.

Costanzi, R., Fanelli, F., Meli, E., Ridolfi, A., Caiti, A., and Allotta, B. (2018). Ukf-based navigation system for auvs: online experimental validation. IEEE J. Ocean. Eng. 44, 633–641. doi: 10.1109/JOE.2018.2843654

Costanzi, R., Fanelli, F., Monni, N., Ridolfi, A., and Allotta, B. (2016). An attitude estimation algorithm for mobile robots under unknown magnetic disturbances. IEEE/ASME Trans. Mech. 21, 1900–1911.

Crowell, J. (2013). “Design challenges of a next generation small auv,” in Oceans-San Diego, 2013 (San Diego, CA: IEEE), 1–5.

Ester, M., Kriegel, H.-P., Sander, J., and Xu, X. (1996). “A density-based algorithm for discovering clusters in large spatial databases with noise,” in KDD'96: Proceedings of the Second International Conference on Knowledge Discovery and Data Mining, eds E. Simoudis, J. Han, and U. M. Fayyad (Portland, OR: AAAI Press), 226–231.

Fanelli, F. (2019). Development and Testing of Navigation Algorithms for Autonomous Underwater Vehicles. Springer.

Ferreira, F., Djapic, V., Micheli, M., and Caccia, M. (2014). Improving automatic target recognition with forward looking sonar mosaics. IFAC Proc. Vol. 47, 3382–3387. doi: 10.3182/20140824-6-ZA-1003.01485

Ferri, G., Ferreira, F., and Djapic, V. (2015). “Boosting the talent of new generations of marine engineers through robotics competitions in realistic environments: the sauc-e and eurathlon experience,” in OCEANS 2015-Genova (Genoa: IEEE), 1–6.

Ferri, G., Ferreira, F., and Djapic, V. (2017). “Multi-domain robotics competitions: the cmre experience from sauc-e to the european robotics league emergency robots,” in OCEANS 2017-Aberdeen (Aberdeen, UK: IEEE), 1–7.

Ferri, G., Ferreira, F., Djapic, V., Petillot, Y., Franco, M. P., and Winfield, A. (2016). The eurathlon 2015 grand challenge: The first outdoor multi-domain search and rescue robotics competition—a marine perspective. Mar. Techn. Soc. J. 50, 81–97. doi: 10.4031/MTSJ.50.4.9

Fietz, D., Hagedorn, D., Jähne, M., Kaschube, A., Noack, S., Rothenbeck, M., et al. (2014). Robbe 131: The Autonomous Underwater Vehicle of the auv Team Tomkyle. Available online at: https://auv-team-tomkyle.de/?page_id=411

Fossen, T. I. (1994). Guidance and Control of Ocean Vehicles. Chichester; New York, NY: Wiley.

Franchi, M., Ridolfi, A., and Zacchini, L. (2018). “A forward-looking sonar-based system for underwater mosaicing and acoustic odometry,” in Autonomous Underwater Vehicles (AUV), 2018 IEEE/OES (Porto: IEEE).

Franchi, M., Ridolfi, A., Zacchini, L., and Benedetto, A. (2019). “Experimental evaluation of a Forward-Looking SONAR-based system for acoustic odometry,” in Proceedings of OCEANS'19 MTS/IEEE MARSEILLE, Marseille (FR) (IEEE).

Furlong, M. E., Paxton, D., Stevenson, P., Pebody, M., McPhail, S. D., and Perrett, J. (2012). “Autosub long range: a long range deep diving auv for ocean monitoring,” in Autonomous Underwater Vehicles (AUV), 2012 IEEE/OES (Southampton, UK: IEEE), 1–7.

Hiller, T., Steingrimsson, A., and Melvin, R. (2012). “Expanding the small auv mission envelope; longer, deeper & more accurate,” in Autonomous Underwater Vehicles (AUV), 2012 IEEE/OES (Southampton, UK: IEEE), 1–4.

Hurtós, N., Ribas, D., Cufí, X., Petillot, Y., and Salvi, J. (2015). Fourier-based registration for robust forward-looking sonar mosaicing in low-visibility underwater environments. J. Field Robot. 32, 123–151. doi: 10.1002/rob.21516

Johannsson, H., Kaess, M., Englot, B., Hover, F., and Leonard, J. (2010). “Imaging sonar-aided navigation for autonomous underwater harbor surveillance,” in 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (Taipei: IEEE), 4396–4403.

Kaiser, C. L., Yoerger, D. R., Kinsey, J. C., Kelley, S., Billings, A., Fujii, J., et al. (2016). “The design and 200 day per year operation of the autonomous underwater vehicle sentry,” in Autonomous Underwater Vehicles (AUV), 2016 IEEE/OES (Tokyo: IEEE), 251–260.

Mahony, R., Hamel, T., and Pflimlin, J.-M. (2008). Nonlinear complementary filters on the special orthogonal group. IEEE Trans. Automat. Control 53, 1203–1218. doi: 10.1109/TAC.2008.923738

McCarter, B., Portner, S., Neu, W. L., Stilwell, D. J., Malley, D., and Minis, J. (2014). “Design elements of a small auv for bathymetric surveys,” in Autonomous Underwater Vehicles (AUV), 2014 IEEE/OES (Oxford, MS: IEEE), 1–5.

Mišković, N., Bibuli, M., Birk, A., Caccia, M., Egi, M., Grammer, K., et al. (2016). Caddy—cognitive autonomous diving buddy: two years of underwater human-robot interaction. Mar. Technol. Soc. J. 50, 54–66. doi: 10.4031/MTSJ.50.4.11

opencv (2019). Official Website of the Open Source Computer Vision Library. Available online at: www.opencv.org (accessed January 2020).

Rigaud, V. (2007). Innovation and operation with robotized underwater systems. J. Field Robot. 24, 449–459. doi: 10.1002/rob.20195

ROS (2019). Official Website of Robot Operating System (ROS). Available online at: http://www.ros.org (accessed January 2020).

Keywords: underwater robots, autonomous underwater vehicle, robotics competitions, autonomous navigation, acoustic mosaicing

Citation: Franchi M, Fanelli F, Bianchi M, Ridolfi A and Allotta B (2020) Underwater Robotics Competitions: The European Robotics League Emergency Robots Experience With FeelHippo AUV. Front. Robot. AI 7:3. doi: 10.3389/frobt.2020.00003

Received: 24 April 2019; Accepted: 08 January 2020;

Published: 31 January 2020.

Edited by:

Enrica Zereik, Italian National Research Council (CNR), Italy

Reviewed by:

Antonio Vasilijevic, University of Zagreb, Croatia

Filippo Campagnaro, University of Padova, Italy

Copyright © 2020 Franchi, Fanelli, Bianchi, Ridolfi and Allotta. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Matteo Franchi, matteo.franchi@unifi.it
