- 1Department of Oncology and Hemato-Oncology, University of Milan, Milan, Italy

- 2Applied Research Division for Cognitive and Psychological Science, IEO, European Institute of Oncology IRCCS, Milan, Italy

In the near future, Artificial Intelligence (AI) is expected to participate more and more in decision making processes, in contexts ranging from healthcare to politics. For example, in the healthcare context, doctors will increasingly use AI and machine learning devices to improve precision in diagnosis and to identify therapy regimens. One hot topic regards the necessity for health professionals to adapt shared decision making with patients to include the contribution of AI into clinical practice, such as acting as mediators between the patient with his or her healthcare needs and the recommendations coming from artificial entities. In this scenario, a “third wheel” effect may intervene, potentially affecting the effectiveness of shared decision making in three different ways: first, clinical decisions could be delayed or paralyzed when AI recommendations are difficult to understand or to explain to patients; second, patients' symptomatology and medical diagnosis could be misinterpreted when adapting them to AI classifications; third, there may be confusion about the roles and responsibilities of the protagonists in the healthcare process (e.g., Who really has authority?). This contribution delineates such effects and tries to identify the impact of AI technology on the healthcare process, with a focus on future medical practice.

Introduction

In the last few years, Artificial Intelligence (AI) has been on the rise, and some think that this technology will define the contemporary era as automation and factory tools defined the industrial revolutions, or as computers and the web characterized recent decades (1–3). These technologies, based on machine learning, promise to become more than simple “tools”; rather, they will be interlocutors of human operators that can help in complex tasks involving reasoning and decision making. The expression “machine learning” refers to a branch of computer science devoted to developing algorithms able to learn from experience and the external environment, improving performance over time (4–6). More specifically, algorithms are able to detect associations, similarities, and patterns in data, allowing predictions to be made on the likelihood of uncertain outcomes.

AIs and machine learning are present in a number of commonly used technologies, such as email, social media, mobile software, and digital advertising. However, the near future of AI is not that it will continue to work outside of the end users' awareness, as it mostly does nowadays; on the contrary, AI promises to become an active collaborator with human operators in a number of tasks and activities. AIs are able to analyze enormous quantities of data of various contents and formats, even when these data are dynamically changing (Big Data). AIs identify associations and differences between data and provide human operators with outputs that are impossible to achieve by humans alone, at least in the same amount of time.

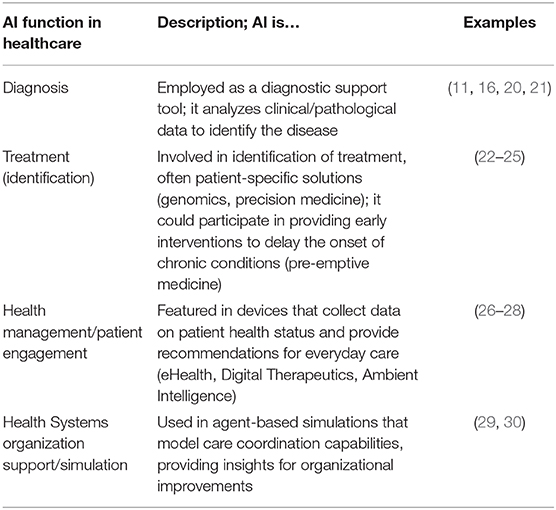

Examples of such outputs are medical diagnoses and the identification of therapy regimens to be administered to patients. Health professionals (physicians especially) will increasingly interact with AIs to get information on their patients that will hopefully be more exact, specific, and based on objective data (7–9). Diagnostic decision support could be considered the main application area for AI-based innovation in medical practice (10, 11). Basically, machine learning devices are trained to classify stimuli based on initial examples. For instance, tumor types can be identified by comparing a patient's CT scans with information coming from the scientific literature (12, 13); the same can be done with pictures of skin lesions (14), optical coherence tomography in the case of sight diseases (15–17), or the integration of clinical observations and medical tests for other diseases (18, 19). While diagnosis is recognized by many as the main area for AI implementation in medicine and healthcare, others could be envisaged, as summarized in Table 1.

However, the study of AI in healthcare in its social-psychological aspects is still an underrepresented area. One important field is that of “Explainable Artificial Intelligence” (commonly abbreviated as XAI), namely the research on AI's transparency and ability to explain its own elaboration processes. The US Defense Advanced Research Projects Agency (DARPA) launched a program on XAI (31), and the European Parliament demands a “right to explanation” in automated decision making (32). Indeed, one issue with AI implementation in professional practice regards the fact that it is supposed to be used by non-experts: doctors, marketers, or military personnel are not expected to become experts in informatics or AI development, yet they will have to interact with artificial entities to make important decisions in their fields. While one could easily agree with the analyses and outputs of AIs, trusting them and taking responsibility for decisions that will affect the “real world” is no easy task. For this reason, XAI is identified by many scholars as a priority for technological innovation. Miller and colleagues (33, 34) maintain that AI developers and engineers should turn to social sciences in order to understand what an explanation is, and how it could be effectively implemented within AIs' capacities. For example, Vellido (35) proposed that AIs learn to make their processes transparent via visual aids that help a human user to understand how a given conclusion has been reached; Pravettoni and Triberti (36) highlighted that explanation is rooted in interaction and conversation, so that a complete, sophisticated XAI would be reached when artificial entities were able to communicate with human users in a realistic manner (e.g., answering questions, learning basic forms of perspective-taking, etc.).
In any case, besides working on AI-human interfaces, another field of great interest is that of AI's impact on professional practice or the prediction of possible organizational, practical, and social issues that will emerge in the context of implementation.

Though the contribution of AIs to medical practice is promising, their impact on the clinician-patient relationship is still an understudied topic. From a psychosocial point of view, it is possible that new technologies will influence the relationship between clinicians and patients in several ways. Indeed, the introduction of AI into the healthcare context is changing the ways in which care is offered to patients: the information given by AIs on diagnosis, treatment, and drugs will be used to make decisions in any phase of the healthcare journey (e.g., choices on treatment or lifestyle changes, deciding to inform relatives of one's health status, communication of bad news).

According to a patient-centered perspective, such care choices should be made by the patient and the doctor within a mutual collaboration, which points to the popular concept of shared decision making. This concept has become fundamental in the debate on patient-centered approaches to care, with the number of scientific publications on the subject rising more than 600% from 2000 to 2013 (37). Reviews show that the communication process and relationship quality between doctors and patients have a significant effect on patients' well-being and quality of life, so that the proper communication style can alleviate the traumatic aspects of illness (38–40). However, when prefiguring the adoption of AIs participating in diagnosis and therapy identification, it is possible that the very concept of “shared decision” should be updated, taking into consideration the contribution of artificial entities.

Who to Share Decision Making With?

Shared decision making has been proposed as an alternative paradigm to the “paternalistic” one (41, 42). The latter model dominated disease-centered medicine, with the physician being authoritative and autonomous, giving recommendations to patients without taking into consideration their full understanding, personal needs, and feelings. While the “paternalistic” physician intended to act in the best interest of the patient, such an approach may be ineffective or counterproductive in the end, because the patient may neither understand nor follow the recommendations (43). Shared decision making is a process by which patients and health professionals discuss and evaluate the options for a particular medical decision, in order to find the best available treatment that is based on knowledge that is accessible and comprehensible for both and satisfies the needs of both (44–46). During this process, the patient is made aware of diagnostic and treatment pathways, as well as of related risks and benefits; also, the patient's point of view is taken into consideration in terms of preferences and personal concerns (47–49).

In other words, shared decision making entails a process of communication and negotiation between the health professional and the patient in which both medical information (e.g., diagnosis, therapy, prognosis) and patient's concerns (e.g., doubts and request for clarification, lifestyle changes, worries for the future, etc.) are exchanged.

In the near future, where AI is expected to take a role in medical practice, it is important to understand its influence on shared decision making and on the patient-doctor relationship as a whole. In most of the health systems around the world, the patient has the right to be informed about which tools, resources, and approaches are being employed to treat his or her case; a patient will have to know that the diagnosis or even the medical prescriptions first came out of a machine, not through the human doctor's effort. Presumably, just the knowledge of the presence of a “machine” in the healthcare process could influence the attitudes of doctors and patients: from a psychological point of view, attitudes toward something may develop before any direct experience of it (e.g., as a product of hearsay, social norms, etc.), and influence subsequent conduct (50). Indeed, while medicine itself is inherently open to innovation and technology, some health professionals harbor negative attitudes toward technology for care (51, 52), the main reasons being the perceived risk of patient de-humanization (53, 54) or the fear that tools they are not confident in mastering may be used against them in medical controversies (55). Similarly, patients who do not feel confident in using technology (low “computer self-efficacy”) benefit less from eHealth resources than other patients who do feel confident (56, 57), and technological systems for healthcare are not expected to work as desired if development is not tailored to users' actual needs and context of use (26). Though these data regard types of technologies different from AIs, they clearly show that technological innovation in the field of health is hardly a smooth process. While it is clear how the “technical” part of medicine (e.g., improving diagnosis correctness) would benefit from AIs and machine learning devices, their impact on the patient-doctor relationship is mostly unknown.

Furthermore, with the development of eHealth (58, 59) and the diffusion of interactive AIs as commonly used tools (e.g., home assistants), it is possible that chronic patients (e.g., patients with obesity, arthritis, anorexia, heart disease, diabetes) will be assisted by AIs in their everyday health management. For example, the US Food and Drug Administration (FDA) has made “significant strides in developing policies that are appropriately tailored to ensure that safe and effective technology reaches users” [US FDA (60), p.2], promoting the development of Software as a Medical Device (SaMD), devices that play a role in diagnosis or treatment (not only health or wellness management). FDA-approved digital therapeutics include, for example, reSET developed by PEAR Therapeutics (61), which delivers cognitive-behavioral therapy to patients suffering from substance abuse (62). It is possible that similar future resources will include AIs that directly interact with patients based on natural language processing.

In other words, AIs will not be just a “new app” on doctors' devices, but active interlocutors, able to deliver diagnosis, prognosis, and intervention materials to both the doctor and the patient. According to Topol (7), AI's implementation in care could potentially have positive effects, but this depends mainly on doctors' attitudes: for example, if AI were to take on administrative and technical tasks in medicine, doctors would have the occasion to recover the “lost time” for consultation with and empathic listening to their patients, so as to improve shared decision making. In this sense, AI would become an active go-between for care providers and patients.

This considered, it becomes fundamental to understand whether we should expect structural changes in the very context of shared decision making and medical consultation. Will patients interact with AIs directly? Will doctors encounter difficulties and obstacles in adapting their work practices to include technologies able to participate in diagnosis and treatment? Are patient-doctor decisions to be shared with artificial entities too?

At the present time, the scientific literature lacks research data to fully respond to these questions. However, by considering the literature on health providers' reactions to technological innovation and the psychology of medicine, it is possible to prefigure some social-psychological phenomena that could occur in the forthcoming healthcare scenarios in order to prepare to manage undesirable side-effects.

A “Third Wheel” Effect

In common language, the expression “third wheel” refers to someone who is superfluous with respect to a couple. Typically, the focus of the expression is on this third person, who unintentionally finds him or herself in the company of a couple of lovers and feels excluded and out of place. On the other side of the relationship, the couple may be unaware of the stress caused to the third wheel, or they may feel awkward and uneasy because of the unwanted presence. In other words, a third wheel is someone who is perceived as an adjunct, something unnecessary, who may spoil the mood and negatively influence others' experience. Despite just being a popular idiom, this expression is sometimes used in psychological research to describe relationship issues as experienced by research participants (63): new technology, specifically social media, has been called a third wheel as well because of its possible negative influence on relationship quality (64, 65).

We propose to employ the expression “third wheel” to highlight an emergent phenomenon relating to the implementation of artificial entities in real-life contexts: while technologies become more and more autonomous, able to talk, to “think,” and to actively participate in decision making, their role within complex relationships may be unclear to the human interlocutors, and new obstacles to decision making could arise.

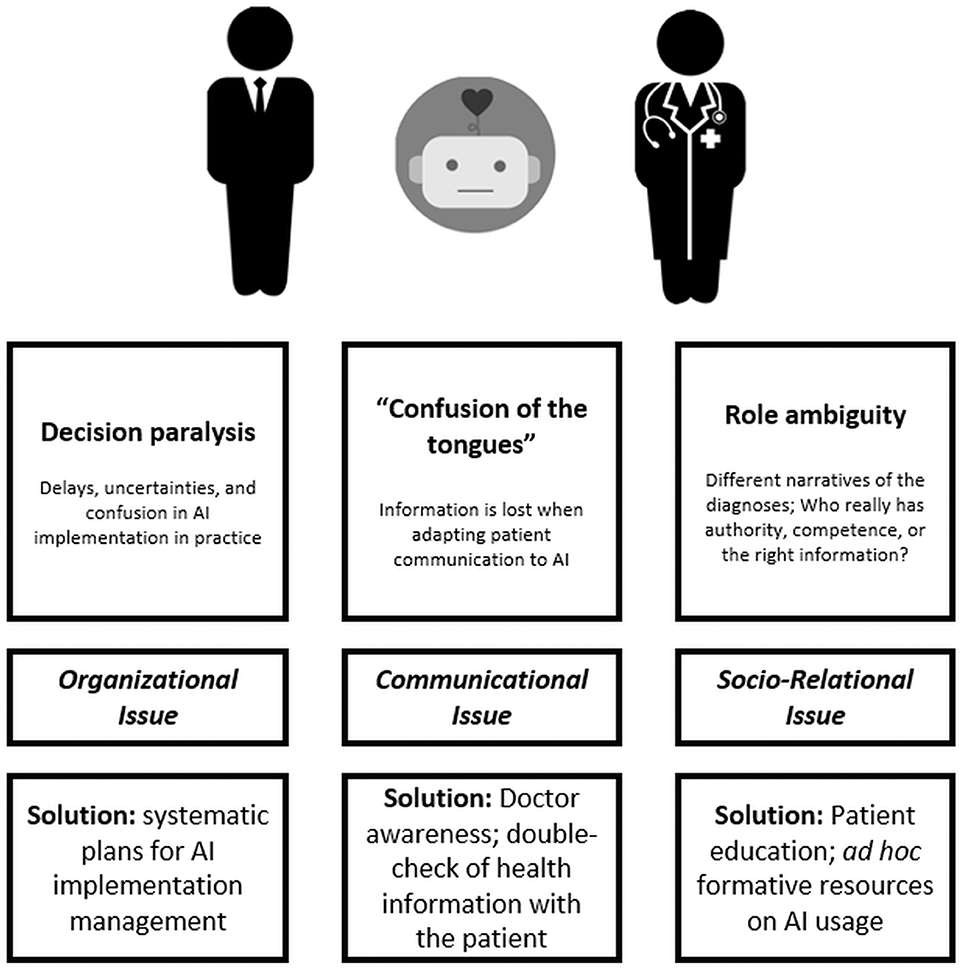

We identified three main ways a third wheel effect may appear in medical consultation aided by artificial intelligence: decision paralysis (or a risk of delay); “Confusion of the Tongues”; and role ambiguity. In the next sections, these will be described in detail.

Decision Paralysis, or a Risk of Delay

As previously stated, current AIs are not transparent in their elaboration processes; that is, their interlocutors may have no clear representation of how AIs have reached a given conclusion: this could generate “trust issues,” especially when important decisions should be taken on the basis of these conclusions. According to Topol and his seminal book Deep Medicine (7), one positive consequence of AI implementation in medical practice that we could hope for is giving back time to doctors to devote to empathic consultation and patient-centered medicine. Indeed, if AIs were to take on technical and administrative tasks in medicine, doctors could devote their attention to patients as individuals and improve the “human side” of their profession. However, we should take into account that doctors using AIs will need to contextualize and justify the AIs' role within practice and the relationship with the patient. It could be said that doctors will become “mediators” between their artificial allies and the patients: AI's conclusions and recommendations should be reviewed by the doctor, approved and refined, and explained to the patient, answering his or her questions. On the other hand, future technologies could include opportunities for direct interaction between AIs and patients: for example, digital therapeutics, or eHealth applications providing assistance to patients and caregivers in the management and treatment of chronic diseases, could potentially include access to the AI providing diagnosis and therapy guidelines. However, we can foresee that a patient would still need approval and guidance from the human doctor for modifications to the treatment schedule, medication intake, specific changes to lifestyle, and everyday agenda.

Such a “mediation” role could be time-consuming and, at least at an organizational level, generate decision paralysis or delays. Imagine that a hospital tumor board has to make a decision on a patient's diagnosis and only half of the board members agree with the AIs' recommendation; or, that a patient receives an important indication from the AI (e.g., stop taking a medication because wearable devices registered unwanted side effects), but he or she struggles to get in contact with his or her doctor to gain reassurance that this is the right thing to do.

These are examples of the implementation of AIs giving rise to a risk of delay. Though the technical processes could be accelerated, organizational and practical activities could be affected by the complex inclusion of an additional figure in the decision making process.

“Confusion of the Tongues”

The psychoanalyst Sandor Ferenczi used the expression “confusion of the tongues” to identify the obstacles inherent to communication between adults and children, who are inexorably heterogeneous in their mental representations of relationships and emotional experience. Since then, it has proved an effective expression to refer to interlocutors misinterpreting one another without realizing it.

The expression could be useful when we consider the utilization of AI in medical diagnosis, especially when the latter should be communicated to the patient. The physician is not a simple “translator” of information from the AI to the patient; on the contrary, he or she should play an active role during the process. Let us consider an example: an AI requires that the information on the patient's state is entered according to formats, categories, and languages that it is able to understand and analyze (e.g., data); however, it is possible that not all the relevant information for diagnosis could be transformed as such. How can doctors enter an undefined symptom, a general malaise, or a vague physical discomfort, if the patient himself or herself is hardly able to describe it? Even if trained in the understanding of natural language, the AI will not be able to integrate such information in its original form; this is not related to some sort of malfunction; rather, the AI does not have access to the complex and subtle emotional intelligence abilities that a human doctor can employ when managing a consultation with a patient. Specifically, one risk is that doctors would try to adapt symptoms to AIs' language and capabilities, for example by forcing the information coming from the patient into predefined categories; this could be related to an exaggerated faith in the technology itself, which could lead human users to overestimate its abilities (66).

This could lead to patients not being motivated to report doubts, feelings, and personal impressions; indeed, patients can feel when doctors are not really listening to them (67, 68) and could experience a number of negative emotions ranging from anger to demoralization and a sense of abandonment (69–71). Such experiences have a detrimental effect both on the success of shared decision making and on therapy effectiveness because the patient will not adhere to the recommendations (72, 73). In other words, in this way, the new technology would become a source of patient reification, neglecting important elements that only humans' emotional intelligence can grasp.

Role Ambiguity

When the press started to write about Artificial Intelligence in medicine, a number of authoritative medical sources expressed a firm belief: AI will not replace doctors; it will only help them to do their jobs better, especially by analyzing complex medical data. However, we still have no clear idea about the perception of AIs on the side of patients; though it is obvious that AIs will not take over doctors' work, what will the patients think?

According to a recent survey by PwC of 11,000 patients from twelve different countries, 54% of the interviewees were amenable to the idea of being cured by artificial entities, 38% were against it, and the rest were uncertain. The highest rates of acceptance can be traced to developing countries, which are open to any innovation in medicine, while countries used to high-level care systems were more critical.

This points to the need for AI innovation to be communicated and explained to patients in the right way, by justifying its added value but also by avoiding the risk that technology takes the place of human doctors in patients' perception. For example, as shown by some of the first implementations of the AI system Watson for Oncology by IBM (74, 75), it may happen that the diagnosis provided by AI does not mirror completely the ideas and assessments implemented by the doctor. It is possible that physicians, patients, and AIs will provide different narratives of diagnosis, prognosis, and treatment. This situation of ambiguity and disagreement could lead the patient to experience uncertainty, not knowing what opinion to follow, who really has authority, and who is actually working to help him or her.

When the doctor has to explain the role of AI in the consultation, he or she will have to reassure the patient that the recourse to such a technology is a desirable strategy to employ to provide the best possible consultation and treatment. However, in the perception of the patient, this communication may contain an implicit message, for example, that someone else is doing the doctor's work. A recent case was reported in the news worldwide where a patient and his family received a terminal diagnosis from the doctor on a moving robot interface: the family was shocked by the experience and perceived the use of the machine as an insensitive disservice (76).

This is an extreme example where a machine has been introduced in a delicate phase of the healthcare process: even if the machine was not acting autonomously, the effect was disastrous. Obviously, it is fundamental to build an empathic relationship with patients, especially with those dealing with the reality of death and grief (77, 78); while speaking through a machine is “technically the same” as speaking in person, a grieving patient or his or her caregiver could reasonably feel talking to a robot to be a tragically absurd situation. This example shows how the implementation of technology should be analyzed not only in terms of functionality and technical effectiveness but also from the point of view of patients (79), taking into account their reaction and its consequences for patient health engagement as well as their commitment to shared decision making.

But if the AI is as good as my doctor, or maybe even better, whom should I trust? A similar issue exists in medicine already: when multidisciplinary care is offered, patients may experience anxiety and confusion because they have to schedule appointments with several doctors and are not sure who to refer to with specific questions or who to listen to when recommendations are (or appear to them) contradictory (80); patients may find it difficult to trust health providers when the recommendation received is unexpected or counterintuitive (81) and they sometimes consult multiple health professionals, searching for infinite alternative options, as if the cure were some goods to buy, a maladaptive conduct known as “doctor shopping” (82). In other words, when there is disagreement, doubt, or ambiguity in diagnosis and treatment, its effect on the patient's perception and behavior should be taken into consideration and adequately managed within the consultation.

On the side of the doctor, the description of symptoms, diagnosis, and prognosis given by the AI could be clearer and more understandable than the patient's; indeed, AI uses medical language and adopts the perspective of a medical professional, relying on objective data and scientific literature. While a patient could often experience difficulties when trying to explain his or her experience, AI could provide a different “narrative” of the diagnosis that the doctor would perceive as more comprehensible and reassuring. In this case, it is possible that the patient's testimony would be undermined or partially ignored, thus losing track of the nuances and peculiarity of the patient's actual situation, which only a fine-grained analysis of the subjective testimony could detect.

To sum up, AI could potentially take the place of the doctor in the patient's perception or, conversely, the place of the patient in the doctor's perception.

Discussion

In this contribution, we tried to identify possible dysfunctional effects of AI's inclusion in medical practice and consultation, conceptualizing them as multiple forms of a “third wheel” effect; besides prefiguring them, it is possible to sketch solutions to the issues to be explored by means of future research.

The three forms of the “third wheel” effect may affect three important areas of the medical consultation: organizational, communicational, and socio-relational aspects, respectively (see Figure 1 for a summary of the concept).

First, doctors and patients could experience a decision paralysis: decisions could be delayed when AIs' recommendations are difficult to understand or to explain to patients. Decision paralysis may affect the organizational aspects of healthcare contexts. It refers to how AI technology will be implemented in healthcare systems that may struggle to adapt their timings, procedures, and organizational boundaries to innovation. Tackling these issues entails making plans for the management of AI implementation that take into consideration not only the benefits of AI for the “technical” aspects of medical activities but also the behavior of organizational units toward AI outcomes and how these outcomes fit into care practice processes.

Second, the presence of AIs could lead to a “confusion of the tongues” between doctors and patients, because patients' health information could be lost or transformed when adapted to AI's classifications. “Confusion of the tongues” affects communication between doctors and patients. It refers to the actual possibility for patients and doctors to understand each other and enact a desirable process of shared decision making. The solution to these possible issues involves the design of training resources for doctors that make them aware of how AI implementation could be perceived by patients; desirable practices within patient-doctor communication would include double-checking health-related information to address possible confusion arising from the delivery of relevant information mediated by AI.

Lastly, the involvement of AIs could cause confusion regarding roles in patient-doctor relationships when ambiguity or disagreement arises about treatment recommendations. Role ambiguity acts on socio-relational aspects in healthcare contexts. It refers to the unwanted effects on trust and quality of relationship related to the addition of an artificial interlocutor within the context. These relational aspects are important prerequisites for achieving a desirable healthcare collaboration. Therefore, solutions to role ambiguity issues would entail proper patient education on the usage of AI in their own healthcare journey, especially when intelligent technology resources interact with them directly, mediating treatment (e.g., eHealth). Future studies on the ethical implementation of AI in medical treatment should consider patients' perception of these tools and forecast under which conditions patients may feel “put aside” by their doctor because health advice and treatment are delivered by autonomous technologies.

The identification of the psychosocial effects of AI on medical practice is speculative in nature: we should wait until these technologies become actual protagonists in a renovated approach to clinical practice in order to collect data about their effects on the scenario. As a limitation of the present study, we did not report research data; rather, we tried to sketch possible correlates of AI implementation in healthcare based on the literature in health psychology and the social science of technology implementation issues. We believe that consideration of such established phenomena may help pioneers of AI in healthcare to forecast (and possibly manage in advance) issues that will characterize AI implementation as well. Future studies may employ technology acceptance measures to explore health professionals' and patients' attitudes toward artificial intelligence. Moreover, qualitative research methods (e.g., ethnographic observation) could be employed within the pioneer contexts where AIs start to be used in medical consultation, in order to capture the possible obstacles to practice consistent with the third wheel effect prefigured here.

Author Contributions

ST conceptualized the ideas presented in the article and wrote a first draft. ID helped to refine the theoretical framework and edited the manuscript. GP contributed with important intellectual content and supervised the whole process. All authors contributed to revision, read and approved the submitted version.

Funding

ST and GP were supported by MIUR - Italian Ministry of University and Research (Departments of Excellence Italian Law n.232, 11th December 2016) for University of Milan. ID was supported by Fondazione Umberto Veronesi.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

1. Barrat J. Our Final Invention: Artificial Intelligence and the End of the Human Era. New York, NY: St. Martin's Press. (2013).

2. Li B, Hou B, Yu W, Lu X, Yang C. Applications of artificial intelligence in intelligent manufacturing: a review. Front Inform Technol Electron Eng. (2017) 18:86–96. doi: 10.1631/FITEE.1601885

3. Makridakis S. The forthcoming artificial intelligence (AI) revolution: its impact on society and firms. Futures. (2017) 90:46–60. doi: 10.1016/j.futures.2017.03.006

4. Angermueller C, Pärnamaa T, Parts L, Stegle O. Deep learning for computational biology. Mol Systems Biol. (2016) 12:878. doi: 10.15252/msb.20156651

5. Ghahramani Z. Probabilistic machine learning and artificial intelligence. Nature. (2015) 521:452–9. doi: 10.1038/nature14541

6. Lawrynowicz A, Tresp V. Introducing Machine Learning. In: Lehmann J, Volker J, editors. Perspectives on Ontology Learning, Berlin: IOS Press. (2011)

8. Wartman SA, Combs CD. Reimagining medical education in the age of AI. AMA J Ethics. (2019) 21:146–52. doi: 10.1001/amajethics.2019.146

9. Wooster E, Maniate J. Reimagining our views on medical education: part 1. Arch Med Health Sci. (2018) 6:267–9. doi: 10.4103/amhs.amhs_142_18

10. Amato F, López A, Peña-Méndez EM, Vanhara P, Hampl A, Havel J. Artificial neural networks in medical diagnosis. J Appl Biomed. (2013) 11:47–58. doi: 10.2478/v10136-012-0031-x

11. Jiang F, Jiang Y, Zhi H, Dong Y, Li H, Ma S, et al. Artificial intelligence in healthcare: past, present and future. Stroke Vasc Neurol. (2017) 2:230–43. doi: 10.1136/svn-2017-000101

12. Fakoor R, Nazi A, Huber M. Using deep learning to enhance cancer diagnosis and classification. In: Proceedings of the International Conference on Machine Learning (Vol. 28). New York, NY: ACM (2013).

13. Hu Z, Tang J, Wang Z, Zhang K, Zhang L, Sun Q. Deep learning for image-based cancer detection and diagnosis – A survey. Pattern Recognit. (2018) 83:134–49. doi: 10.1016/j.patcog.2018.05.014

14. Esteva A, Kuprel B, Novoa RA, Ko J, Swetter SM, Blau HM, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature. (2017) 542:115–8. doi: 10.1038/nature21056

15. De Fauw J, Ledsam JR, Romera-Paredes B, Nikolov S, Tomasev N, Blackwell S, et al. Clinically applicable deep learning for diagnosis and referral in retinal disease. Nat Med. (2018) 24:1342–50. doi: 10.1038/s41591-018-0107-6

16. Gargeya R, Leng T. Automated identification of diabetic retinopathy using deep learning. Ophthalmology. (2017) 124:962–9. doi: 10.1016/j.ophtha.2017.02.008

17. Gulshan V, Peng L, Coram M, Stumpe MC, Wu D, Narayanaswamy A, et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA. (2016) 316:2402–10. doi: 10.1001/jama.2016.17216

18. Abiyev RH, Ma'aitah MKS. Deep convolutional neural networks for chest diseases detection. J Healthc Eng. (2018) 2018:4168538. doi: 10.1155/2018/4168538

19. Litjens G, Sánchez CI, Timofeeva N, Hermsen M, Nagtegaal I, Kovacs I, et al. Deep learning as a tool for increased accuracy and efficiency of histopathological diagnosis. Sci Rep. (2016) 6:26286. doi: 10.1038/srep26286

20. Bahaa K, Noor G, Yousif Y. The Artificial Intelligence Approach for Diagnosis, Treatment Modelling in Orthodontic. In: Naretto S, editor. Principles in Contemporary Orthodontics. InTech (2011).

21. Ramesh AN, Kambhampati C, Monson JR, Drew PJ. Artificial intelligence in medicine. Ann R Coll Surg Engl. (2004) 86:334–8. doi: 10.1308/147870804290

22. Gubbi S, Hamet P, Tremblay J, Koch C, Hannah-Shmouni F. Artificial intelligence and machine learning in endocrinology and metabolism: the dawn of a new era. Front Endocrinol. (2019) 10:185. doi: 10.3389/fendo.2019.00185

23. Somashekhar SP, Sepúlveda MJ, Puglielli S, Norden AD, Shortliffe EH, Rohit Kumar C, Ramya Y. Watson for oncology and breast cancer treatment recommendations: agreement with an expert multidisciplinary tumor board. Ann Oncol. (2018) 29:418–23. doi: 10.1093/annonc/mdx781

24. Liu C, Liu X, Wu F, Xie M, Feng Y, Hu C. Using artificial intelligence (Watson for Oncology) for treatment recommendations amongst Chinese patients with lung cancer: feasibility study. J Med Internet Res. (2018) 20:e11087. doi: 10.2196/1108

25. Itoh H, Hayashi K, Miyashita K. Pre-emptive medicine for hypertension and its prospects. Hypertens Res. (2019) 42:301–5. doi: 10.1038/s41440-018-0177-3

26. Triberti S, Barello S. The quest for engaging AmI: patient engagement and experience design tools to promote effective assisted living. J Biomed Inform. (2016) 63:150–6. doi: 10.1016/j.jbi.2016.08.010

27. Davenport T, Kalakota R. The potential for artificial intelligence in healthcare. Future Healthc J. (2019) 6:94. doi: 10.7861/futurehosp.6-2-94

28. Triberti S, Durosini I, Curigliano G, Pravettoni G. Is explanation a marketing problem? the quest for trust in artificial intelligence and two conflicting solutions. Public Health Genomics. (2020). doi: 10.1159/000506014. [Epub ahead of print].

29. Kalton A, Falconer E, Docherty J, Alevras D, Brann D, Johnson K. Multi-agent-based simulation of a complex ecosystem of mental health care. J Med Syst. (2016) 40:1–8. doi: 10.1007/s10916-015-0374-4

30. Hamet P, Tremblay J. Artificial intelligence in medicine. Metabolism. (2017) 69:S36–40. doi: 10.1016/j.metabol.2017.01.011

31. Gunning D. Explainable Artificial Intelligence (XAI): Program Update November 2017. Defense Advanced Research Projects Agency (DARPA) (2017). Retrieved from: https://www.darpa.mil/attachments/XAIProgramUpdate.pdf

32. Edwards L, Veale M. Enslaving the algorithm: from a “right to an explanation” to a “right to better decisions”? IEEE Secur Priv. (2018) 16:43–54. doi: 10.1109/MSP.2018.2701152

33. Miller T. Explanation in artificial intelligence: insights from the social sciences. Artif Intell. (2019) 267:1–38. doi: 10.1016/j.artint.2018.07.007

34. Miller T, Howe P, Sonenberg L. Explainable AI: beware of inmates running the asylum or: how I learnt to stop worrying and love the social and behavioural sciences. In: Proc. IJCAI Workshop Explainable AI (XAI). (2017). p. 36–42.

35. Vellido A. The importance of interpretability and visualization in machine learning for applications in medicine and health care. Neural Comput Appl. (2019) 1–15. doi: 10.1007/s00521-019-04051-w

37. Légaré F, Thompson-Leduc P. Twelve myths about shared decision making. Patient Educ Couns. (2014) 96:281–6. doi: 10.1016/j.pec.2014.06.014

38. Barry MJ, Edgman-Levitan S. Shared decision making — the pinnacle of patient-centered care. N Engl J Med. (2012) 366:780–1. doi: 10.1056/NEJMp1109283

39. Simpson M, Buckman R, Stewart M, Maguire P, Lipkin M, Novack D, Till J. Doctor-patient communication: the Toronto consensus statement. BMJ. (1991) 303:1385–7. doi: 10.1136/bmj.303.6814.1385

40. Stewart MA. Effective physician-patient communication and health outcomes: a review. CMAJ. (1995) 152:1423–33.

41. Bragazzi NL. From P0 to P6 medicine, a model of highly participatory, narrative, interactive, and “augmented” medicine: Some considerations on Salvatore Iaconesi's clinical story. Patient Prefer Adherence. (2013) 7:353–9. doi: 10.2147/PPA.S38578

42. Müller-Engelmann M, Keller H, Donner-Banzhoff N, Krones T. Shared decision making in medicine: the influence of situational treatment factors. Patient Educ Couns. (2011) 82:240–6. doi: 10.1016/j.pec.2010.04.028

43. Spencer KL. Transforming patient compliance research in an era of biomedicalization. J Health Soc Behav. (2018) 59:170–84. doi: 10.1177/0022146518756860

44. Ford S, Schofield T, Hope T. What are the ingredients for a successful evidence-based patient choice consultation?: a qualitative study. Soc Sci Med. (2003) 56:589–602. doi: 10.1016/S0277-9536(02)00056-4

45. Marzorati C, Pravettoni G. Value as the key concept in the health care system: how it has influenced medical practice and clinical decision-making processes. J Multidiscip Healthc. (2017) 10:101–6. doi: 10.2147/JMDH.S122383

46. Fioretti C, Mazzocco K, Riva S, Oliveri S, Masiero M, Pravettoni G. Research studies on patients' illness experience using the narrative medicine approach: a systematic review. BMJ Open. (2016) 6:e011220. doi: 10.1136/bmjopen-2016-011220

47. Chawla NV, Davis DA. Bringing big data to personalized healthcare: A patient-centered framework. J Gen Intern Med. (2013) 28:660–5. doi: 10.1007/s11606-013-2455-8

48. Corso G, Magnoni F, Provenzano E, Girardi A, Iorfida M, De Scalzi AM, et al. Multicentric breast cancer with heterogeneous histopathology: a multidisciplinary review. Future Oncol. (2020) 16:395–412. doi: 10.2217/fon-2019-0540

49. Riva S, Antonietti A, Iannello P, Pravettoni G. What are judgment skills in health literacy? A psycho-cognitive perspective of judgment and decision-making research. Patient Prefer Adherence. (2015) 9:1677–86. doi: 10.2147/PPA.S90207

50. Terry DJ, Hogg MA. Attitudes, Behavior, and Social Context: The Role of Norms and Group Membership. Hove, UK: Psychology Press (1999).

51. Huryk LA. Factors influencing nurses' attitudes towards healthcare information technology. J Nurs Manag. (2010) 18:606–12. doi: 10.1111/j.1365-2834.2010.01084.x

52. Jacobs RJ, Iqbal H, Rana AM, Rana Z, Kane MN. Predictors of osteopathic medical students' readiness to use health information technology. J Am Osteopath Assoc. (2017) 117:773. doi: 10.7556/jaoa.2017.149

53. Kossman SP, Scheidenhelm SL. Nurses' perceptions of the impact of electronic health records on work and patient outcomes. CIN – Comput Inform Nurs. (2008) 26:69–77. doi: 10.1097/01.NCN.0000304775.40531.67

54. Weber S. A qualitative analysis of how advanced practice nurses use clinical decision support systems. J Am Acad Nurse Pract. (2007) 19:652–67. doi: 10.1111/j.1745-7599.2007.00266.x

55. Liberati EG, Ruggiero F, Galuppo L, Gorli M, González-Lorenzo M, Maraldi M, et al. What hinders the uptake of computerized decision support systems in hospitals? a qualitative study and framework for implementation. Implement Sci. (2017) 12:113. doi: 10.1186/s13012-017-0644-2

56. Cho J, Park D, Lee HE. Cognitive factors of using health apps: systematic analysis of relationships among health consciousness, health information orientation, eHealth literacy, and health app use efficacy. J Med Internet Res. (2014) 16:e125. doi: 10.2196/jmir.3283

57. Kelley H, Chiasson M, Downey A, Pacaud D, Payton FC, Paré G, et al. The clinical impact of ehealth on the self-management of diabetes: a double adoption perspective. J Assoc Inf Syst. (2011) 12:208–34. doi: 10.17705/1jais.00263

58. Afra P, Bruggers CS, Sweney M, Fagatele L, Alavi F, Greenwald M, et al. Mobile software as a medical device (SaMD) for the treatment of epilepsy: development of digital therapeutics comprising behavioral and music-based interventions for neurological disorders. Front Hum Neurosci. (2018) 12:171. doi: 10.3389/fnhum.2018.00171

59. Sverdlov O, van Dam J, Hannesdottir K, Thornton-Wells T. Digital therapeutics: an integral component of digital innovation in drug development. Clin Pharmacol Ther. (2018) 104:72–80. doi: 10.1002/cpt.1036

60. US Food and Drug Administration. Proposed Regulatory Framework for Modifications to Artificial Intelligence/Machine Learning (AI/ML) Based Software as a Medical Device (SAMD)—Discussion Paper and Request for Feedback. (2019). Available online at: https://www.fda.gov/files/medical%20devices/published/US-FDA-Artificial-Intelligence-and-Machine-Learning-Discussion-Paper.pdf

61. Pear Therapeutics Inc. reSET for Substance Use Disorder. (2018). Retrieved from: https://peartherapeutics.com/reset/ (accessed April 16, 2020).

62. Budney AJ, Borodovsky JT, Marsch LA, Lord SE. Technological innovations in addiction treatment. In: Danovitch I, Mooney L, editors. The Assessment and Treatment of Addiction. St. Louis, MO: Elsevier (2019). p. 75–90.

63. Cosson B, Graham E. ‘I felt like a third wheel’: Fathers' stories of exclusion from the ‘parenting team’. J Fam Stud. (2012) 18:121–9. doi: 10.5172/jfs.2012.18.2-3.121

64. Clayton RB. The third wheel: The impact of Twitter use on relationship infidelity and divorce. Cyberpsychol Behav Soc Netw. (2014) 17:425–30. doi: 10.1089/cyber.2013.0570

65. Halpern D, Katz JE, Carril C. The online ideal persona vs. the jealousy effect: two explanations of why selfies are associated with lower-quality romantic relationships. Telemat Inform. (2017) 34:114–23. doi: 10.1016/j.tele.2016.04.014

66. Jochemsen H. Medical practice as the primary context for medical ethics. In: Weisstub D, Diaz Pintos G, editors. Autonomy and Human Rights in Health Care: An International Perspective. Dordrecht: Springer (2008).

67. Charon R. Narrative medicine as witness for the self-telling body. J Appl Commun Res. 37:118–31. doi: 10.1080/00909880902792248

68. Smith SK, Dixon A, Trevena L, Nutbeam D, McCaffery KJ. Exploring patient involvement in healthcare decision making across different education and functional health literacy groups. Soc Sci Med. (2009) 69:1805–12. doi: 10.1016/j.socscimed.2009.09.056

69. Harris CR, Darby RS. Shame in physician-patient interactions: patient perspectives. Basic Appl Soc Psychol. (2009) 31:325–34. doi: 10.1080/01973530903316922

70. Kee JWY, Khoo HS, Lim I, Koh MYH. Communication skills in patient-doctor interactions: learning from patient complaints. Health Prof Educ. (2017) 4:97–106. doi: 10.1016/j.hpe.2017.03.006

71. Miaoulis G, Gutman J, Snow MM. Closing the gap: the patient-physician disconnect. Health Mark Q. (2009) 26:56–8. doi: 10.1080/07359680802473547

72. Ozawa S, Sripad P. How do you measure trust in the health system? A systematic review of the literature. Soc Sci Med. (2013) 91:10–4. doi: 10.1016/j.socscimed.2013.05.005

73. Taber JM, Leyva B, Persoskie A. Why do people avoid medical care? a qualitative study using national data. J Gen Intern Med. 30:290–7. doi: 10.1007/s11606-014-3089-1

74. Rajkomar A, Dean J, Kohane I. Machine learning in medicine. N Engl J Med. (2019) 380:1347–58.

75. Ross C, Swetlitz I, Thielking M. IBM Pitched Watson as a Revolution in Cancer Care: it's Nowhere Close. Boston (2017). Retrieved from: https://www.statnews.com/2017/09/05/watson-ibm-cancer/ (accessed April 16, 2020).

76. Nichols G. Terminal patient learns he's going to die from a robot doctor. (2019). Retrieved from: https://www.zdnet.com/article/terminal-patient-learns-hes-going-to-die-from-a-robot-doctor/ (accessed March 26, 2019).

77. Durosini I, Tarocchi A, Aschieri F. Therapeutic assessment with a client with persistent complex bereavement disorder: a single-case time-series design. Clin Case Stud. (2017) 16:295–312. doi: 10.1177/1534650117693942

78. Rosner R, Pfoh G, Kotoučová M. Treatment of complicated grief. Eur J Psychotraumatol. (2011) 2:7995. doi: 10.3402/ejpt.v2i0.7995

79. Wiederhold BK. Can artificial intelligence predict the end of life…And do we really want to know? Cyberpsychol Behav Soc Netw. (2019) 22:297. doi: 10.1089/cyber.2019.29149.bkw

80. Kedia SK, Ward KD, Digney SA, Jackson BM, Nellum AL, McHugh L, et al. “One-stop shop”: lung cancer patients' and caregivers' perceptions of multidisciplinary care in a community healthcare setting. Transl Lung Cancer Res. (2015) 4:456–64. doi: 10.3978/j.issn.2218-6751.2015.07.10

81. Briet JP, Hageman MG, Blok R, Ring D. When do patients with hand illness seek online health consultations and what do they ask? Clin Orthop Relat Res. (2014) 472:1246–50. doi: 10.1007/s11999-014-3461-9

Keywords: decision making, artificial intelligence, ehealth, patient-doctor relationship, technology acceptance, healthcare process, patient-centered medicine

Citation: Triberti S, Durosini I and Pravettoni G (2020) A “Third Wheel” Effect in Health Decision Making Involving Artificial Entities: A Psychological Perspective. Front. Public Health 8:117. doi: 10.3389/fpubh.2020.00117

Received: 03 December 2019; Accepted: 23 March 2020; Published: 28 April 2020.

Edited by:

Koichi Fujiwara, Nagoya University, Japan

Copyright © 2020 Triberti, Durosini and Pravettoni. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Stefano Triberti, stefano.triberti@unimi.it
