- 1 Clinical and Translational Science Center, Harvard Medical School, Boston, MA, United States

- 2 Prevention Research Center, Harvard T.H. Chan School of Public Health, Boston, MA, United States

- 3 Center for Community-Based Research, Dana Farber Cancer Institute, Boston, MA, United States

- 4 Division of General Internal Medicine, Massachusetts General Hospital, Boston, MA, United States

Background: Strong partnerships are critical to integrate evidence-based prevention interventions within clinical and community-based settings, offering multilevel and sustainable solutions to complex health issues. As part of Massachusetts' 2012 health reform, the Prevention and Wellness Trust Fund (PWTF) funded nine local partnerships throughout the state to address hypertension, pediatric asthma, falls among older adults, and tobacco use. The initiative was designed to improve health outcomes and reduce healthcare costs through prevention and disease management strategies.

Purpose: To describe the mixed-methods study design used to investigate PWTF implementation.

Methods: The Consolidated Framework for Implementation Research guided the development of this evaluation. First, the study team conducted semi-structured qualitative interviews with leaders from each of the nine partnerships to document partnership development and function, intervention adaptation and delivery, and the influence of contextual factors on implementation. The interview findings were used to develop a quantitative survey to assess the implementation experiences of 172 staff from clinical and community-based settings and a social network analysis to assess changes in the relationships among 72 PWTF partner organizations. The quantitative survey data on ratings of perceived implementation success were used to purposively select 24 staff for interviews to explore the most successful experiences of implementing evidence-based interventions for each of the four conditions.

Conclusions: This mixed-methods approach for evaluation of implementation of evidence-based prevention interventions by PWTF partnerships can help decision-makers set future priorities for implementing and assessing clinical-community partnerships focused on prevention.

Introduction

The delivery of preventive services in community-based and clinical settings has tremendous potential to improve population health. However, these community and clinic-based preventive activities are rarely coordinated (1), even with evidence that clinical-community partnerships can improve health outcomes including smoking abstinence, perceived physical health, cholesterol levels and hypertension (2, 3). The potential of community-clinical partnerships to improve health is further emphasized by the finding that neighborhood or community-level determinants of health also impact the way patients interact with the healthcare system as measured by hospital readmissions (4) and emergency room visits (5). As healthcare systems become increasingly accountable for improving the health of populations, strategies for linking clinical systems and community-based partners are becoming essential (6).

Clinical-community collaborations offer an opportunity to create multi-level, sustainable change. Thousands of coalitions, alliances, and other forms of inter-organizational, health-focused partnerships were formed over the past two decades (7–9). These intersectoral partnerships are critical for addressing complex public health challenges. They can marshal complementary human and social capital, embed interventions in the broader public health system, and offer opportunities to address problems that cannot be solved by an organization or sector in isolation (8–11). Although collaboration across sectors or institution types is not without its challenges (12), coalitions and intersectoral partnerships have successfully addressed health disparities broadly (13) and have improved diabetes, HIV/AIDS, and substance abuse outcomes (14–16).

The clinical-community partnerships in this project implemented evidence-based interventions addressing hypertension, pediatric asthma, falls among older adults, and tobacco use throughout Massachusetts. In 2012, as the second stage of Massachusetts' ground-breaking health reform initiative, the legislature passed Massachusetts General Law Chapter 224 (17). Among other provisions, the law established the Prevention and Wellness Trust Fund (PWTF), which provided more than $42 million over 4 years to nine community-clinical partnerships. The Massachusetts Department of Public Health (MDPH) led the initiative, competitively selecting nine partnerships in diverse communities across the state and providing technical assistance to implement specified evidence-based interventions. The conditions and interventions were chosen because they were judged more likely than others to show changes in outcomes and costs, and a positive return on investment, within 3 years. The nine chosen communities had a higher prevalence of the priority conditions than the state as a whole, were more racially and ethnically diverse, and had higher rates of poverty than the state average (18). The funded partnerships varied in configuration and served populations ranging from 40,000 to 140,000 people; some were single cities, others included multiple cities and towns, and one constituted an entire county. Fifteen percent of the state population resides within the areas served by the nine funded partnerships. All partnerships included a city/regional planning agency, a clinical health provider, and a community-based organization, and they ranged in size from 6 to 15 participating organizations. More details on the PWTF partnerships, decisions, interventions, and model are available in the project final report (19).

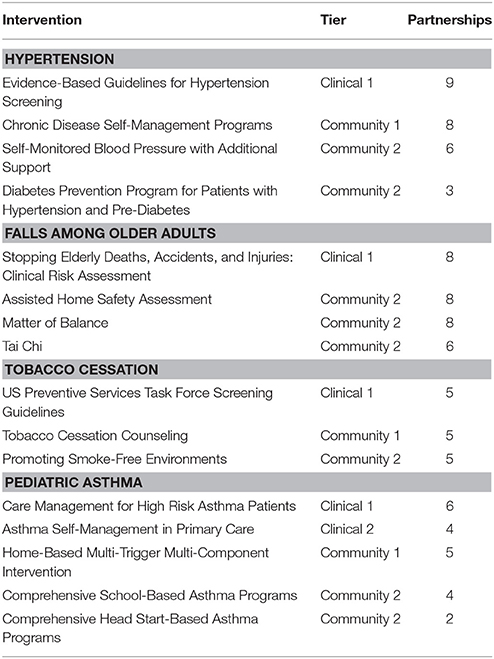

The initiative began in 2014 with a 6- to 9-month planning stage focused on capacity building. Communities developed partnerships among clinical providers and community-based organizations that linked and coordinated clinical and community-based strategies. The request for response specified that at least one intervention must involve bi-directional referrals between clinical and community organizations, with feedback loops. For example, a community health center might partner with the YMCA to develop a system in which patients screened as hypertensive or at risk for falls are referred to community programming and, conversely, YMCA members who express needs for clinical services are referred to the community health center. For most partnerships, full implementation began in early 2015. Table 1 lists the clinical and community evidence-based interventions for each health condition. Of the nine partnerships, all selected hypertension, eight selected falls among older adults, six selected pediatric asthma, and five selected tobacco cessation. MDPH provided grantee support, such as individualized technical assistance in evidence-based interventions, learning sessions to facilitate knowledge development and sharing across all grantees, and quality improvement evaluation. Partnerships were encouraged to culturally adapt interventions to meet the needs of their local communities.

Table 1. Clinical and community interventions implemented as part of the Prevention and Wellness Trust Fund.

The communities were required to jointly fund a rigorous independent evaluation of the PWTF to determine if it met its explicit legislative objectives: (1) a reduction in the prevalence of preventable health conditions; (2) a reduction in health care costs or the growth in health care cost trends associated with these conditions; and (3) an assessment of which populations benefited from any reduction. While not specified in the authorizing legislation, the Prevention and Wellness Advisory Board (PWAB) created by Chapter 224 strongly recommended the additional systematic collection of data that illustrate the implementation experiences in PWTF communities.

The purpose of this paper is to present a mixed methods approach to assess the PWTF implementation experience. While an outcome evaluation is critical to establishing success, embedding quantitative surveys and qualitative interviews that assess how these partnerships function and what contextual factors influence implementation will help to provide actionable findings. This paper draws upon implementation science, social network analysis, and a mixed methods design to understand these complexities.

First, the field of dissemination and implementation science is concerned with generating knowledge beyond clinical trials and effectiveness research to investigate change in real-world settings. In this study, we define implementation “as the way and degree to which an intervention is put into place in a given setting” (20). Fundamental to implementation science is the concept of integrating evidence-based interventions within a community or clinical setting and creating partnerships and delivery systems that support their use. At the core of this science is inquiry into the contextual factors that influence successful implementation of evidence-based interventions. To ground our inquiry, we applied the Consolidated Framework for Implementation Research (CFIR), an established framework that supports identification of actionable factors that influence success within five domains: the inner setting, the outer setting, characteristics of individuals, characteristics of the intervention, and process (21).

Next, it was important to examine the composition, structure, and functions of the PWTF partnerships in the context of implementing evidence-based interventions. Social network analysis is a natural fit for evaluation of the function and impact of community-clinical partnerships, as it focuses on relationships (here, between organizations) and takes a systems perspective (22). Social network analysis has been applied effectively to the study of a range of collaborative efforts among organizations engaged in health promotion activities (23–25). Using the methods of social network analysis, it becomes possible to assess the form and function of a network, identify key actors and the types of resources exchanged across the network, assess the sustainability and strength of relationships, assess opportunities to strengthen the network's impact on a set of health outcomes, and assess challenges or drawbacks to collaboration (11). In this way, social network analysis affords the opportunity to explore the ways in which community-clinical partnership networks can be utilized to create change and achieve intended implementation outcomes (and ultimately, intended health outcomes) in the organizations and communities of interest.

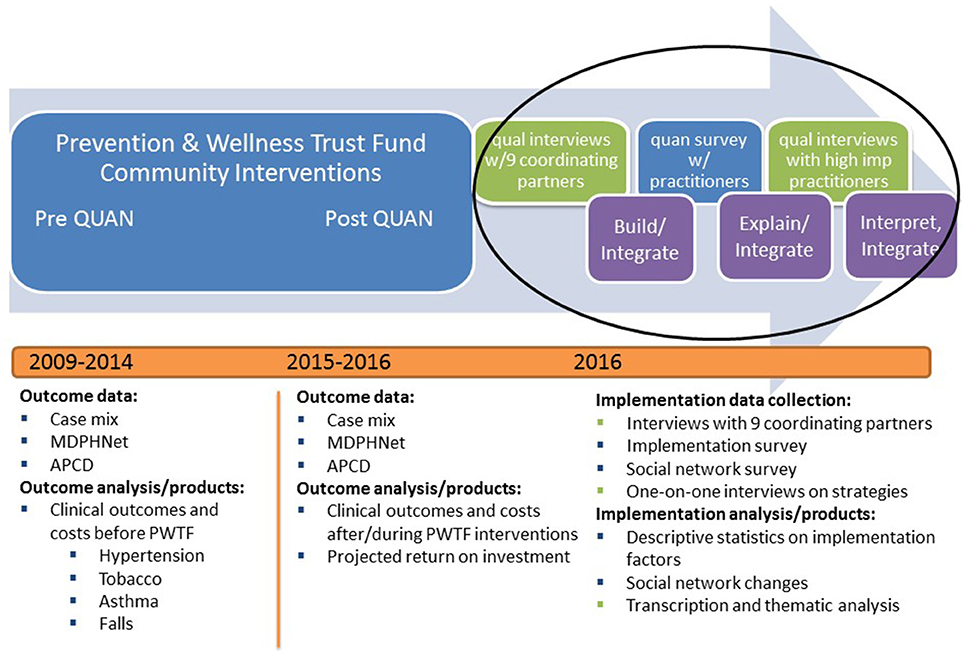

Finally, mixed methods research is the collection and analysis of quantitative and qualitative data, which is often employed to understand complex research problems for which one methodology is not sufficient (26). Mixed methods studies must use rigorous quantitative and qualitative methods and explicitly integrate or link these two types of data for a more comprehensive investigation of the topic at hand (26). Using mixed methods can be helpful for understanding the perceptions of practitioners and end-users of a given evidence-based intervention (27). A mixed methods design also aligns well with the need to conduct multi-level assessments of implementation efforts (e.g., collecting data at the community, clinic, provider, and patient levels) (28, 29). In this study, we use a multi-phase, explanatory sequential mixed methods design embedded in a large evaluation project to gain a more comprehensive understanding of implementation of the Prevention and Wellness Trust Fund interventions (Figure 1) (26, 30). Building three rapid phases of data collection and analysis upon one another is intended to explain what success looks like in this state-wide implementation of clinical-community linkages to build population-level disease prevention and management systems.

Figure 1. Multi-phase explanatory sequential mixed methods design embedded in the Prevention and Wellness Trust Fund Evaluation.

This mixed methods external evaluation will be useful to a variety of stakeholders, including legislators and other policymakers who need to know what PWTF accomplished and what next steps are indicated; implementing communities and agencies who need to know what worked and what didn't, and for whom; and other communities that want to learn from the PWTF experience.

Materials and Methods

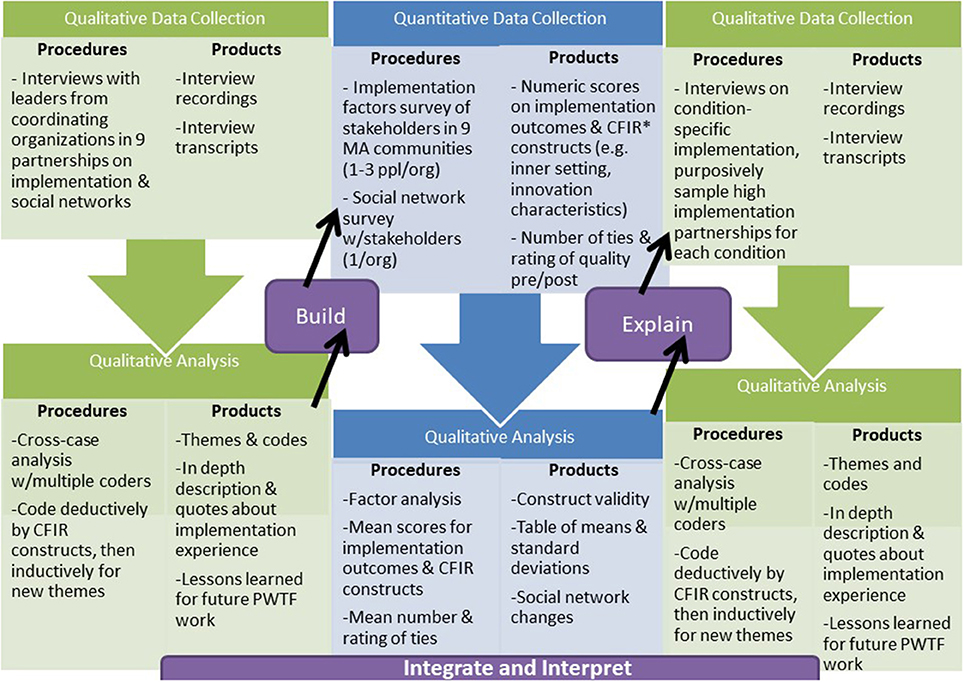

We used a multi-phase, explanatory sequential mixed methods design embedded in a larger evaluation to investigate what interventions work, for whom, and in what settings, which are key issues at the core of implementation science (see Figure 1). First, we conducted semi-structured qualitative telephone interviews (lasting about 1.5 h) with at least two leaders from each of the nine partnerships. Key informant interviews are in-depth discussions that offer insight into participants' perceptions and opinions and are suited to exploratory research (31). They are often conducted with individuals, but we chose to conduct them with leadership teams to capture both a high-level perspective and a sense of day-to-day implementation efforts. The interview findings were used to develop a quantitative survey to assess the implementation experiences of 172 staff from participating clinical and community-based organizations and a social network analysis to assess changes in the relationships among 72 PWTF organizations. The quantitative survey data on ratings of perceived implementation success were used to purposively select 24 staff for interviews. These 1.5-h interviews (in person whenever possible) were intended to explore the most successful experiences of implementing evidence-based hypertension, falls, tobacco, and asthma interventions. We chose interviews at this stage rather than staff focus groups because we sampled different cadres of staff (e.g., physicians, partnership coordinators, community health workers). We expected that some staff would be more comfortable describing challenges or barriers to implementation in one-on-one interviews than in focus groups, which might have included more senior staff and leaders from their communities. Each phase of the mixed methods implementation evaluation is described in detail below and depicted visually in Figures 1 and 2, with details on the project timeline, data collection and analysis activities, and products.
The Consolidated Framework for Implementation Research (CFIR) guided the development of this evaluation (21). The Harvard Office of Human Research Administration (IRB) determined that full review and approval were not required for this study. The study was approved by Office of Human Research Administration staff, and the proposal was reviewed by the Department of Public Health's Institutional Review Board.

Figure 2. Step-by-step protocol for the multi-phase, explanatory mixed methods design for the Prevention and Wellness Trust Fund implementation evaluation.

Phase 1: Qualitative Interviews With Coordinating Partners

The Phase 1 key informant interviews, conducted in March 2016, served as an initial, high-level qualitative exploration of the implementation experience in each partnership and helped us adapt existing survey items to identify contextual influences on PWTF implementation in Phase 2.

Sampling, Recruitment, and Administration

Each partnership had one organization that served as the coordinating partner, responsible for leading and managing the initiative. The Massachusetts Department of Public Health identified participants from the coordinating partners for the Phase 1 qualitative interviews. The 2–4 key informants from each community included the current PWTF project manager, plus additional interviewees with broad knowledge of the project. Participants included health department directors, community health center senior leadership, healthcare system administrators, and past project managers in communities that had experienced leadership turnover. Before the interviews, the study team emailed each PWTF project manager a one-page overview detailing the purpose and expectations of each phase of the implementation evaluation. All 1.5-h interviews with coordinating partner teams were scheduled via email and conducted by telephone at the partners' convenience. All coordinating partners agreed to participate in Phase 1 interviews.

Measures

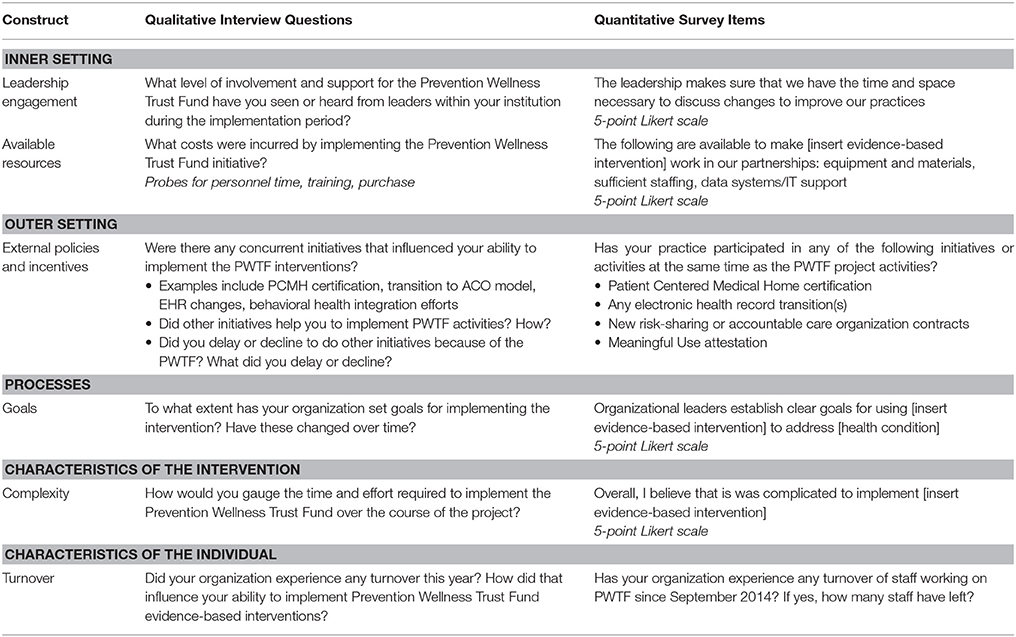

Implementation constructs explored in the Phase 1 interviews included the implementation experience and the contextual influences on implementation. To capture the implementation experience, we included prompts related to buy-in among leadership and staff, details of intervention adaptation and delivery, the role of community health workers in supporting community-clinical partnerships to implement evidence-based interventions, and the connection between intervention implementation and health equity. The research team adapted an existing CFIR-based interview guide (32) to the PWTF settings and outcomes, attending to each of the five CFIR domains: inner setting (e.g., leadership engagement, resources), characteristics of the intervention (e.g., complexity, relative advantage), characteristics of individuals (e.g., role, turnover), outer setting (e.g., community context), and process (e.g., planning, engaging champions) (21). The full interview guide is available in Supplementary Material 1, and examples of qualitative interview questions appear in Table 2.

Table 2. Sample qualitative interview and quantitative survey questions aligned with the Consolidated Framework for Implementation Research (CFIR).

The social network analysis portion of the interview guide examined two classes of networks: (a) intra-partnership networks (relationships between PWTF organizations within each of the nine partnerships) and (b) inter-partnership networks (relationships between the nine partnerships). For the intra-partnership network assessment, the first step was to define the set of organizations of interest; in this case, all organizations involved with PWTF implementation (33). For each partnership, we used the list from MDPH as a starting point and reviewed it with partners to revise as needed. Second, the interview guide included prompts to define relationships of interest. The literature suggests that important relationships linked to creating practice change in healthcare settings include communication, collaboration or competition, exertion of influence, and exchange of resources (25, 34). We asked about these and also prompted respondents to identify other important interactions or exchanges that supported their PWTF goals. Finally, we asked a set of questions to explore the role of additional, unofficial partners in the PWTF initiative. For example, a given community-based organization may be the official delivery site for a given evidence-based intervention but may link with other local organizations for recruitment or other activities.

For the inter-partnership assessment, the interview guide focused on relationships among the nine participating partnerships, as they had been brought together as part of a quality improvement learning collaborative to support PWTF goals. The interviews focused on the range of network relationships involved in implementing evidence-based interventions through the PWTF. We also asked about the range of benefits derived from engaging with other partnerships and expected sustainability of these relationships.

Data Management and Analysis

Interview recordings were transcribed verbatim. Data were managed and prepared for analysis using NVivo qualitative data analysis software Version 11 (QSR International Pty Ltd. 2012. Melbourne, Australia). The research team reviewed transcripts for key constructs to include in the Phase 2 quantitative implementation and social network surveys. We conducted a cross-case analysis that began with deductive coding according to contextual factors from the CFIR and then inductively added codes for new patterns and themes. We supported rigor through analyst triangulation: two researchers coded all interviews to ensure multiple perspectives (35, 36). Interview data were integrated with the Phase 2 survey and Phase 3 interview data, looking for concordant and discordant results (26).

Phase 2: Quantitative Surveys

During May and June 2016, in Phase 2 of this evaluation, we fielded two online surveys to quantitatively identify the contextual factors that influenced implementation of the evidence-based interventions and to assess the social networks within and between partnerships. Results from both surveys informed the adaptation of an existing interview guide (32) for the follow-up in-depth interviews in Phase 3.

Sampling, Recruitment, and Administration

The research team worked with the Massachusetts Department of Public Health and coordinating partners to generate a list of all organizations that were part of each partnership. Next, coordinating partners indicated the health conditions and evidence-based interventions associated with each organization and listed the names, roles/titles, and email addresses for 1–3 contacts at each organization who were involved with implementing the evidence-based interventions. They were asked to include clinical staff of varying levels (doctors, nurses, and medical assistants), practitioners in community-based settings, and community health workers. One week prior to launching the surveys, the study team emailed each PWTF project manager to disseminate a one-page overview detailing the broad content areas of focus on the survey. Project managers from each partnership shared the overview with participants.

Both surveys were conducted online via REDCap electronic data capture tools (37). The implementation survey was administered to all contacts identified by the coordinating partners (N = 214). The social network survey was administered to one representative at each organization, designated as the organization's lead for the PWTF (N = 90). Participants were invited by email to complete the surveys and were given a 2-week window to respond, with reminders sent 1 week and 1 day before the official close. Coordinating partners helped encourage survey participation. As an incentive, participants who completed the implementation survey were entered into a raffle for a $75 gift card. A total of 172 individuals completed the implementation survey (response rate = 80%) and 72 people completed the social network survey (response rate = 80%).
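As a quick check on the reported figures, each response rate is the number of completed surveys divided by the number of people invited (rounded to the nearest percent):

```latex
\text{Implementation survey: } \frac{172}{214} \approx 0.804 \approx 80\%,
\qquad
\text{Social network survey: } \frac{72}{90} = 0.80 = 80\%
```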

Measures

The research team adapted existing validated survey items (38, 39) to the PWTF settings and outcomes using findings from the Phase 1 interviews. Items assessed the perceived degree of implementation for each evidence-based intervention as well as contextual domains in the CFIR (21). A 4-point Likert scale captured the degree of implementation, with the following ratings: 0 (no implementation); 1 (“we are in the early stages of implementation”); 2 (“we have implemented this strategy, but inconsistently”); and 3 (“we have implemented this intervention fully and systematically”). The CFIR survey items were measured on a 5-point Likert scale with responses ranging from 1 (strongly disagree) to 5 (strongly agree). We also included items to capture title, role, age, gender, race/ethnicity, education, language spoken, and years of experience. Adaptations to the survey were based on qualitative data provided by the coordinating partners in Phase 1. For instance, sufficient staffing and data systems/IT support were frequently named as important resources influencing implementation; therefore, we created discrete items to assess these factors quantitatively. Using the qualitative data to adapt the quantitative survey allowed us to measure the frequency of these contextual influences across the large pool of 172 clinical and community-based implementers. The full survey is available in Supplementary Material 2, and Table 2 includes examples of survey items.

The quantitative intra-partnership social network analysis used the list of organizations involved with PWTF implementation from the Phase 1 interviews and asked each organization about its relationships with all other members of its partnership. For example, if a given partnership included 7 organizations, we surveyed each organization about its relationships with the other 6. The social network analysis focused on a core set of relationships identified in Phase 1 as important for implementation: collaboration, sharing information/resources, sending referrals, receiving referrals, providing/receiving technical assistance or capacity-building, and providing/receiving access to community members. We also asked about the expected sustainability of reported connections after funding ends. Finally, we prompted respondents to identify up to five additional partners involved in executing the evidence-based program or strategy. The quantitative inter-partnership social network analysis asked about the same set of relationships with the other partnerships and, again, about the expected sustainability of connections after funding ends.
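To illustrate how roster-based answers of this kind can be turned into network measures, the sketch below uses a hypothetical partnership of seven organizations (labelled A to G) with made-up referral ties. Because each organization reports only on the other six, there are 7 × 6 = 42 possible directed ties; density is the share of those ties actually reported, and in-degree counts how many partners report sending referrals to an organization, one simple way to spot key actors. The organization labels, tie data, and the choice of density and in-degree as example metrics are illustrative assumptions; the evaluation's own network analyses were run in UCINET and SAS.

```python
"""Sketch: turning roster-style survey answers into simple network metrics.

Organization labels and referral ties are hypothetical illustration data.
"""

# Hypothetical roster for one partnership with 7 member organizations.
ORGS = ["A", "B", "C", "D", "E", "F", "G"]

# Hypothetical answers: each organization lists the partners it SENDS referrals to.
# As in the roster design, each one is asked only about the other 6 members.
SENDS_REFERRALS_TO = {
    "A": {"B", "C", "D"},
    "B": {"A", "E"},
    "C": {"A"},
    "D": {"A", "F"},
    "E": set(),
    "F": {"G"},
    "G": {"A"},
}


def check_roster(orgs, ties):
    """Reject self-ties and named partners that are not on the roster."""
    for source, targets in ties.items():
        if source in targets:
            raise ValueError(f"{source} reports a tie to itself")
        unknown = targets - set(orgs)
        if unknown:
            raise ValueError(f"{source} names non-members: {sorted(unknown)}")


def density(orgs, ties):
    """Share of the n * (n - 1) possible directed ties that were reported."""
    n = len(orgs)
    reported = sum(len(targets) for targets in ties.values())
    return reported / (n * (n - 1))


def in_degree(orgs, ties):
    """How many partners report sending referrals to each organization."""
    counts = {org: 0 for org in orgs}
    for targets in ties.values():
        for target in targets:
            counts[target] += 1
    return counts


if __name__ == "__main__":
    check_roster(ORGS, SENDS_REFERRALS_TO)
    print(f"density: {density(ORGS, SENDS_REFERRALS_TO):.2f}")  # 10 of 42 ties
    degrees = in_degree(ORGS, SENDS_REFERRALS_TO)
    print("most-referred-to organization:", max(degrees, key=degrees.get))
```

The same structure extends to the other relationship types (collaboration, technical assistance, and so on) by keeping one tie dictionary per relationship.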

Data Management and Analysis

The research team analyzed quantitative survey data in SAS v9.4 (SAS Institute: Cary, NC). We calculated descriptive statistics (e.g., means of implementation outcomes and CFIR constructs) for all outcomes. For each partnership, a summary score for each evidence-based intervention was created by averaging the implementation ratings from all respondents in that partnership. These summary scores, on the 4-point implementation scale, were used to classify partnerships as “high implementation” for each health condition. High implementation partnerships for a given condition had summary scores for each evidence-based intervention that were higher than the PWTF average. Social network data were analyzed using a combination of the dedicated network analysis software UCINET (Analytic Technologies: Lexington, KY) and SAS v9.4. Quantitative social network analyses focused on relationships within the official set of network members for each partnership, and these analyses linked social network metrics with implementation outcomes.
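The scoring and classification rule can be sketched as follows, assuming ratings on the survey's 0–3 implementation scale. Each partnership's summary score for an intervention is the mean rating across its respondents, and a partnership is flagged as “high implementation” for a condition when every intervention it reported on under that condition scores above the PWTF-wide average. The partnership labels, intervention names, and ratings are hypothetical, and the benchmark is assumed here to be the mean of all individual ratings for an intervention (the mean of partnership summary scores would be an equally plausible reading); the actual analysis was run in SAS.

```python
"""Sketch: partnership summary scores and a 'high implementation' flag.

Ratings use the survey's 0-3 scale (0 = no implementation ... 3 = fully and
systematically implemented). Partnerships, interventions, and ratings are
hypothetical.
"""
from collections import defaultdict
from statistics import mean

# One row per respondent answer: (partnership, condition, intervention, rating).
RESPONSES = [
    ("P1", "hypertension", "home_bp_monitoring", 3),
    ("P1", "hypertension", "home_bp_monitoring", 2),
    ("P1", "hypertension", "chw_referral", 3),
    ("P2", "hypertension", "home_bp_monitoring", 1),
    ("P2", "hypertension", "chw_referral", 2),
    ("P3", "hypertension", "home_bp_monitoring", 2),
    ("P3", "hypertension", "chw_referral", 1),
]


def summary_scores(responses):
    """Mean rating per (partnership, condition, intervention)."""
    groups = defaultdict(list)
    for partnership, condition, intervention, rating in responses:
        groups[(partnership, condition, intervention)].append(rating)
    return {key: mean(ratings) for key, ratings in groups.items()}


def pwtf_averages(responses):
    """Assumed benchmark: mean of all individual ratings per intervention."""
    groups = defaultdict(list)
    for _, condition, intervention, rating in responses:
        groups[(condition, intervention)].append(rating)
    return {key: mean(ratings) for key, ratings in groups.items()}


def high_implementation(responses, condition):
    """Partnerships scoring above the benchmark on every intervention they report."""
    scores = summary_scores(responses)
    benchmarks = pwtf_averages(responses)
    beats = defaultdict(list)
    for (partnership, cond, intervention), score in scores.items():
        if cond == condition:
            beats[partnership].append(score > benchmarks[(cond, intervention)])
    return sorted(p for p, results in beats.items() if all(results))


if __name__ == "__main__":
    print(high_implementation(RESPONSES, "hypertension"))
```

With these made-up ratings, both intervention benchmarks equal 2.0, so only P1 (scores 2.5 and 3.0) clears the bar for hypertension.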

Phase 3: Qualitative Interviews With Implementers

In July and August 2016, during the final phase of our evaluation, we conducted follow-up in-depth interviews with practitioners charged with implementation. The interviews focused on developing a more comprehensive understanding of the experience of implementing the evidence-based hypertension, falls, asthma, and tobacco interventions in real-world clinical and community settings.

Sampling, Recruitment, and Administration

The research team sampled “high implementation” partnerships for participation in the Phase 3 interviews. The 4-point summary scores from Phase 2 surveys were used to classify partnerships as “high implementation” using self-reported scores for each health condition.

After high implementation partnerships were identified, the research team sampled 4–6 individuals (including at least one clinical partner and one community partner) from each partnership for interviews. These individuals were purposively sampled from the list of implementation survey respondents to yield information-rich interviews. For instance, Phase 3 interviews on falls among older adults in one partnership included a community health worker who conducted falls assessments and referrals within a community health center, a falls prevention coordinator from an elder services organization responsible for home safety assessments, instructors leading Matter of Balance and Tai Chi classes at the YMCA and through city recreation programs, and the director of a local non-profit organization.

All 1.5-h interviews were scheduled via email and, whenever possible, conducted in person at the participants' convenience (two interviews were conducted by phone). Interviews were audio recorded and transcribed verbatim. Participants were compensated with a $25 gift card. All people invited for Phase 3 interviews agreed to participate.

Measures

Similar to the Phase 1 formative interviews, the research team adapted an existing CFIR-based interview guide (32) to the PWTF settings and outcomes. The adaptation included tailoring the interview to investigate findings from the Phase 2 quantitative surveys: targeted probes for the CFIR items with the highest or lowest average survey ratings were added to explore barriers and facilitators to implementation in greater depth. For example, respondents' extreme ratings of intervention complexity and of resources such as staffing led our team to add probes to better understand what intervention complexity and staffing constraints looked like from the perspectives of those implementing the interventions in real-world settings. Implementation constructs explored in the Phase 3 follow-up interviews included the experience of implementing specific evidence-based interventions and the contextual influences on implementation. Elements of the implementation experience included buy-in among leadership and staff, how interventions were adapted and delivered, the role of community health workers, and strategies to address health equity. Clinical partners were also asked to discuss how quality of care initiatives affected implementation of the PWTF interventions (40). All five CFIR domains were explored in this phase for each target health condition (21). The full interview guide appears in Supplementary Material 3, and Table 2 provides examples of qualitative interview items.
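One way to operationalize the selection of targeted probes is to rank CFIR survey items by their mean agreement rating (1 = strongly disagree to 5 = strongly agree) and take a few items from each end. The item names, ratings, and the cutoff of two items per end below are hypothetical choices for illustration; the study does not specify how many items were probed or how ties were handled.

```python
"""Sketch: flag CFIR survey items with extreme mean ratings for interview probes.

Item names and ratings are hypothetical; ratings use a 1-5 agreement scale.
"""
from statistics import mean

# Hypothetical item -> respondent ratings (1 = strongly disagree, 5 = strongly agree).
ITEM_RATINGS = {
    "leadership_engagement": [5, 4, 5, 4],
    "intervention_complexity_manageable": [2, 1, 2, 2],
    "sufficient_staffing": [1, 2, 2, 1],
    "data_systems_it_support": [3, 3, 2, 4],
    "relative_advantage": [4, 5, 4, 4],
}


def extreme_items(item_ratings, k=2):
    """Return the k highest- and k lowest-rated items by mean rating."""
    ranked = sorted(item_ratings, key=lambda item: mean(item_ratings[item]))
    lowest = ranked[:k]
    highest = ranked[::-1][:k]
    return highest, lowest


if __name__ == "__main__":
    high, low = extreme_items(ITEM_RATINGS)
    print("highest-rated items:", high)
    print("lowest-rated items:", low)
```

Whether a high or low mean signals a facilitator or a barrier depends on how each item is worded, so the interview team would still interpret the flagged items before writing probes.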

The analysis of Phase 2 network data highlighted the diversity of partnership structure for organizations working together to implement evidence-based interventions through the PWTF. We explored this further by asking implementers to describe their experiences with community-clinical linkages as part of the PWTF initiative. We also asked a series of questions about partnership sustainability to compare and contrast descriptions provided by implementers vs. descriptions provided by partnership leaders (Phase 1).

Data Management and Analysis

All interview audio recordings were transcribed. Data were managed and prepared for analysis using NVivo qualitative data analysis software Version 11 (QSR International Pty Ltd. 2012. Melbourne, Australia). We conducted a cross-case analysis that began with deductive coding according to contextual factors from the CFIR and then inductively added codes for new patterns and themes (35, 36). A second researcher coded one-third (8 of 24) of the transcripts to build consensus around all codes and themes. Phase 3 interview data were integrated with the survey and Phase 1 key informant interview data, looking for concordant and discordant results (26).

Discussion

This paper describes the design of a mixed methods approach for evaluating the implementation of clinical-community partnerships through the Prevention and Wellness Trust Fund. This study design will help us gain a comprehensive understanding of this complex approach for engaging communities in implementing evidence-based interventions across Massachusetts. To create an evaluation protocol that was truly mixed methods, rather than simply multi-method, it was critical to identify explicit, strategic points in the evaluation process at which to integrate our qualitative and quantitative data (41). In our multi-phase, explanatory sequential mixed methods design embedded in the larger PWTF evaluation, data were integrated or linked in several ways. First, while the initially mandated evaluation focused solely on analysis of large quantitative datasets of medical claims, hospital discharges, and aggregated electronic health records, the PWTF advisory board and our study team also prioritized embedding qualitative data in the larger evaluation to understand the complexities of local implementation experiences. Second, we integrated quantitative and qualitative data to build implementation survey measures: the initial key informant interviews were used to prioritize and adapt survey items for a tailored quantitative assessment of partnership social networks and implementation of the PWTF evidence-based interventions with a broader sample of implementation stakeholders in Phase 2. Third, the study followed up on the surveys with a second round of interviews to explain the quantitative results in greater depth. In this explanatory process, we used quantitative data on perceived level of implementation to sample “high implementation” partnerships and to create qualitative probes examining contextual implementation factors that were quantitatively rated as influential.
This complex design presented two related challenges: each phase depended on the success of earlier phases, and we had to determine how much data were sufficient before moving to each subsequent mixed methods phase. For example, we had to decide how much quantitative analysis of the online survey to conduct before using it to inform the sampling and adaptation of the qualitative follow-up interviews.

The Prevention and Wellness Trust Fund sought to build and use partnerships to implement complex interventions in complex systems (42), meaning that a group of connected, “un-siloed” interventions addressing four priority health conditions were implemented in coordination across a variety of settings (e.g., hospitals, community health centers, schools, YMCAs, housing). By measuring the function and impact of partnerships within and between communities implementing evidence-based prevention programs, this evaluation is designed to better understand how to set up and support community-based prevention efforts. Accountable Care Organizations, which strive to develop clinical-community partnerships to improve population health, may use PWTF as a prototype. Grounded in implementation science, our interviews and surveys may help identify best practices for tailoring evidence-based interventions to unique contexts and constituents. The mixed methods study design also allows us to detail the challenges of clinical-community linkages, which are vital both in the narrow sense of promoting the use of specific evidence-based programs or practices and in the broader sense of supporting sustainable, community-level systems change (43).

The use of a mixed methods approach to understanding the implementation of evidence-based practices in clinical-community partnerships draws on the strengths of both qualitative and quantitative methods, but it is not without limitations. First, time constraints presented challenges in several ways, given that the external evaluation was funded only for the second year of a three-year implementation period. Limited time meant that our study could conduct in-depth follow-up interviews only with people implementing the interventions in “high implementation” partnerships. With more time, we could also have conducted follow-up interviews in less successful partnerships to explore their implementation processes and contextual factors in greater depth, furthering our understanding of implementation challenges. Time also limited our ability to use more objective quantitative measures, such as program reach or changes in clinical outcomes, to sample “high implementation” partnerships. Second, we were limited in our ability to evaluate how partnerships were trained to address health equity and how they subsequently implemented interventions to do so, because only one interview question addressed this topic.

In sum, this paper details the research protocol for the external evaluation of the implementation of the Prevention and Wellness Trust Fund. Subsequent implementation research from this project aims to describe how the hypertension, falls, asthma, and tobacco evidence-based interventions were implemented and to identify actionable contextual factors that influenced implementation in the nine partnerships. The mixed methods approach will provide data that appeal to a range of constituents, from scientists to policymakers to public health and clinical practitioners. The findings from this study will be valuable for understanding what PWTF has accomplished and for helping other communities that plan to set up or support community-clinical partnerships to deliver evidence-based preventive services.

Author Contributions

RL served as the lead author on the paper, contributing to conceptualization, literature summary, development of data collection measures, drafting/editing all sections of the paper, tables, and figures. SR contributed to conceptualization of the paper, literature summary, development of data collection measures, and drafting/editing methods and discussion. GK contributed to conceptualization of the paper, development of data collection measures, and drafting/editing the introduction and methods. CD served as senior author on the paper, contributing to conceptualization of the paper, development of data collection measures, and drafting/editing the introduction and discussion.

Funding

The study was funded by the Commonwealth of Massachusetts/Department of Public Health (INTF4250HH2500224018), NIH/NIMH (2002362059), and NIH/NCATS (4UL1TR001102-04). The views and opinions in this publication do not necessarily reflect the views and opinions of the Massachusetts Department of Public Health.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

In addition to our funder, which provided valuable details on the PWTF model described in the introduction, we would like to thank the almost 200 practitioners and leaders throughout Massachusetts who shared their perspectives on implementation of PWTF through interviews and surveys, as well as James Daly, Amy Cantor, and Queen Alike, who helped to conduct interviews and analyze data for the project.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpubh.2018.00150/full#supplementary-material

References

1. Krist AH, Shenson D, Woolf SH, Bradley C, Liaw WR, Rothemich SF, et al. Clinical and community delivery systems for preventive care: an integration framework. Am J Prev Med. (2013) 45:508–16. doi: 10.1016/j.amepre.2013.06.008

2. Krist AH, Woolf SH, Frazier CO, Johnson RE, Rothemich SF, Wilson DB, et al. An electronic linkage system for health behavior counseling: effect on delivery of the 5A's. Am J Prev Med. (2008) 35(5 Suppl.):S350–8. doi: 10.1016/j.amepre.2008.08.010

3. Porterfield DS, Hinnant LW, Kane H, Horne J, McAleer K, Roussel A. Linkages between clinical practices and community organizations for prevention: a literature review and environmental scan. Am J Prev Med. (2012) 42(6 Suppl. 2):S163–71. doi: 10.1016/j.amepre.2012.03.018

4. Herrin J, St Andre J, Kenward K, Joshi MS, Audet AM, Hines SC. Community factors and hospital readmission rates. Health Serv Res. (2015) 50:20–39. doi: 10.1111/1475-6773.12177

5. Baltrus P, Xu J, Immergluck L, Gaglioti A, Adesokan A, Rust G. Individual and county level predictors of asthma related emergency department visits among children on Medicaid: a multilevel approach. J Asthma. (2017) 54:53–61. doi: 10.1080/02770903.2016.1196367

6. Sequist TD, Taveras EM. Clinic-community linkages for high-value care. N Engl J Med. (2014) 371:2148–50. doi: 10.1056/NEJMp1408457

7. Butterfoss FD, Goodman RM, Wandersman A. Community coalitions for prevention and health promotion: factors predicting satisfaction, participation and planning. Health Educ Q. (1996) 23:65–79.

8. Lasker RD, Weiss ES, Miller R. Partnership synergy: a practical framework for studying and strengthening the collaborative advantage. Milbank Q. (2001) 79:179–205. doi: 10.1111/1468-0009.00203

9. Roussos ST, Fawcett SB. A review of collaborative partnerships as a strategy for improving community health. Annu Rev Public Health. (2000) 21:369–402. doi: 10.1146/annurev.publhealth.21.1.369

10. Provan KG, Nakama L, Veazie MA, Teufel-Shone NI, Huddleston C. Building community capacity around chronic disease services through a collaborative interorganizational network. Health Educ Behav. (2003) 30:646–62. doi: 10.1177/1090198103255366

11. Provan KG, Veazie MA, Staten LK, Teufel-Shone NI. The use of network analysis to strengthen community partnerships. Public Adm Rev. (2005) 65:603–13. doi: 10.1111/j.1540-6210.2005.00487.x

12. Goerzen A, Beamish PW. The effect of alliance network diversity on multinational enterprise performance. Strateg Manage J. (2005) 26:333–54. doi: 10.1002/smj.447

13. Hennessey LS, Smith ML, Esparza AA, Hrushow A, Moore M, Reed DF. The community action model: a community-driven model designed to address disparities in health. Am J Public Health. (2005) 95:611–6. doi: 10.2105/AJPH.2004.047704

14. Giachello AL, Arrom JO, Davis M, Sayad JV, Ramirez D, Nandi C, et al. Reducing diabetes health disparities through community-based participatory action research: the Chicago Southeast Diabetes Community Action Coalition. Public Health Rep. (2003) 118:309–23. doi: 10.1093/phr/118.4.309

15. Parker R, Aggleton P. HIV and AIDS-related stigma and discrimination: a conceptual framework and implications for action. Soc Sci Med. (2003) 57:13–24. doi: 10.1016/S0277-9536(02)00304-0

16. Shults RA, Elder RW, Nichols JL, Sleet DA, Compton R, Chattopadhyay SK, et al. Effectiveness of multicomponent programs with community mobilization for reducing alcohol-impaired driving. Am J Prev Med. (2009) 37:360–71. doi: 10.1016/j.amepre.2009.07.005

17. Commonwealth of Massachusetts Executive Office of Health and Human Services. The Prevention and Wellness Trust Fund (2017) Available online at: http://www.mass.gov/eohhs/gov/departments/dph/programs/community-health/prevention-and-wellness-fund/

18. Massachusetts Department of Public Health. PWTF 2015 Annual Report, Appendix G: PWTF Partnership Baselines Data (2016).

19. Massachusetts Department of Public Health. Joining Forces: Adding Public Health Value to Healthcare Reform. The Prevention and Wellness Trust Fund Final Report (2017). Available online at: http://www.mass.gov/eohhs/gov/departments/dph/programs/community-health/prevention-and-wellness-fund/annual-reports.html

20. Brownson R, Colditz G, Proctor E. Dissemination and Implementation Research in Health: Translating Science to Practice. New York, NY: Oxford University Press (2012).

21. Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. (2009) 4:50. doi: 10.1186/1748-5908-4-50

22. Luke DA, Harris JK. Network analysis in public health: history, methods, and applications. Annu Rev Public Health. (2007) 28:69–93. doi: 10.1146/annurev.publhealth.28.021406.144132

23. Ramanadhan S, Salhi C, Achille E, Baril N, D'Entremont K, Grullon M, et al. Addressing cancer disparities via community network mobilization and intersectoral partnerships: a social network analysis. PLoS ONE. (2012) 7:e32130. doi: 10.1371/journal.pone.0032130

24. Valente TW, Chou CP, Pentz MA. Community coalitions as a system: effects of network change on adoption of evidence-based substance abuse prevention. Am J Public Health. (2007) 97:880–6. doi: 10.2105/AJPH.2005.063644

25. Valente TW, Coronges KA, Stevens GD, Cousineau MR. Collaboration and competition in a children's health initiative coalition: a network analysis. Eval Program Plann. (2008) 31:392–402. doi: 10.1016/j.evalprogplan.2008.06.002

26. Creswell JW, Plano Clark VL. Designing and Conducting Mixed Methods Research. 2nd ed. Los Angeles, CA: Sage (2011).

27. Palinkas LA, Horwitz SM, Chamberlain P, Hurlburt MS, Landsverk J. Mixed-methods designs in mental health services research: a review. Psychiatr Serv. (2011) 62:255–63. doi: 10.1176/appi.ps.62.3.255

28. Aarons GA, Fettes DL, Sommerfeld DH, Palinkas LA. Mixed methods for implementation research: application to evidence-based practice implementation and staff turnover in community-based organizations providing child welfare services. Child Maltreat. (2012) 17:67–79. doi: 10.1177/1077559511426908

29. Palinkas LA, Aarons GA, Horwitz S, Chamberlain P, Hurlburt MS, Landsverk J. Mixed method designs in implementation research. Adm Policy Ment Health. (2011) 38:44–53. doi: 10.1007/s10488-010-0314-z

30. Creswell JW, Klassen AC, Plano Clark VL, Smith KC for the Office of Behavioral and Social Sciences Research. Best Practices for Mixed Methods Research in the Health Sciences. National Institutes of Health (2011).

31. Fontana A, Frey JH. Interviewing: the art of science. In: Denzin NK, Lincoln YS, editors. Handbook of Qualitative Research. Thousand Oaks, CA: SAGE Publications (1994). p. 361–76.

32. Damschroder LJ, Lowery JC. Evaluation of a large-scale weight management program using the consolidated framework for implementation research (CFIR). Implement Sci. (2013) 8:51. doi: 10.1186/1748-5908-8-51

33. Wasserman S, Faust K. Social Network Analysis: Methods and Applications. New York, NY: Cambridge University Press (1994).

35. Patton MQ. Qualitative Research and Evaluation Methods. 3rd ed. Thousand Oaks, CA: Sage Publications (2002).

36. Damschroder LJ, Goodrich DE, Robinson CH, Fletcher CE, Lowery JC. A systematic exploration of differences in contextual factors related to implementing the MOVE! weight management program in VA: a mixed methods study. BMC Health Serv Res. (2011) 11:248. doi: 10.1186/1472-6963-11-248

37. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap)–a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. (2009) 42:377–81. doi: 10.1016/j.jbi.2008.08.010

38. Fernandez ME, Walker TJ, Weiner BJ, Calo WA, Liang S, Risendal B, et al. Developing measures to assess constructs from the Inner Setting domain of the Consolidated Framework for Implementation Research. Implement Sci. (2018) 13:52. doi: 10.1186/s13012-018-0736-7

39. Liang L, Kegler M, Fernandez ME, Weiner B, Jacobs S, Williams R, et al. Measuring Constructs from the Consolidated Framework for Implementation Research in the Context of Increasing Colorectal Cancer Screening at Community Health Centers. In: 7th Annual Conference on the Science of Dissemination and Implementation. Bethesda, MD (2014).

40. Kruse G, Hays H, Orav E, Palan M, Sequist T. Meaningful use of the Indian Health Service electronic health record. Health Serv Res. (2017) 52:1349–63. doi: 10.1111/1475-6773.12531

41. Fetters MD, Curry LA, Creswell JW. Achieving integration in mixed methods designs: principles and practices. Health Serv Res. (2013) 48(6 Pt 2):2134–56. doi: 10.1111/1475-6773.12117

42. Shiell A, Hawe P, Gold L. Complex interventions or complex systems? Implications for health economic evaluation. BMJ. (2008) 336:1281–3. doi: 10.1136/bmj.39569.510521.AD

Keywords: implementation science, mixed methods research, asthma, hypertension, falls, tobacco

Citation: Lee RM, Ramanadhan S, Kruse GR and Deutsch C (2018) A Mixed Methods Approach to Evaluate Partnerships and Implementation of the Massachusetts Prevention and Wellness Trust Fund. Front. Public Health 6:150. doi: 10.3389/fpubh.2018.00150

Received: 30 November 2017; Accepted: 03 May 2018;

Published: 05 June 2018.

Edited by:

Donna Shelley, School of Medicine, New York University, United States

Reviewed by:

Christopher Mierow Maylahn, New York State Department of Health, United States

Geraldine Sanchez Aglipay, University of Illinois at Chicago, United States

Copyright © 2018 Lee, Ramanadhan, Kruse and Deutsch. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Rebekka M. Lee, rlee@hsph.harvard.edu
