- 1Macro Agriculture Research Institute, College of Resource and Environment, Huazhong Agricultural University, Wuhan, China

- 2Key Laboratory of Arable Land Conservation (Middle and Lower Reaches of Yangtze River), Ministry of Agriculture, Wuhan, China

- 3Aerial Application Technology Research Unit, USDA-Agricultural Research Service, College Station, TX, United States

- 4Department of Biological Systems Engineering, University of Nebraska–Lincoln, Lincoln, NE, United States

- 5College of Engineering, Huazhong Agricultural University, Wuhan, China

- 6College of Plant Science and Technology, Huazhong Agricultural University, Wuhan, China

- 7Anhui Engineering Laboratory of Agro-Ecological Big Data, Anhui University, Hefei, China

- 8College of Science, Huazhong Agricultural University, Wuhan, China

Rapeseed is an important oil crop in China. Timely estimation of rapeseed stand count at early growth stages provides useful information for precision fertilization, irrigation, and yield prediction. Based on the nature of rapeseed, the number of tillering leaves is strongly related to its growth stages. However, no field study has been reported on estimating rapeseed stand count by the number of leaves recognized with convolutional neural networks (CNNs) in unmanned aerial vehicle (UAV) imagery. The objectives of this study were to provide a case for rapeseed stand counting with reference to the existing knowledge of the number of leaves per plant and to determine the optimal timing for counting after rapeseed emergence at leaf development stages with one to seven leaves. A CNN model was developed to recognize leaves in UAV-based imagery, and rapeseed stand count was estimated with the number of recognized leaves. The performance of leaf detection was compared using sample sizes of 16, 24, 32, 40, and 48 pixels. Leaf overcounting occurred when a leaf was much bigger than others as this bigger leaf was recognized as several smaller leaves. Results showed CNN-based leaf count achieved the best performance at the four- to six-leaf stage with F-scores greater than 90% after calibration with overcounting rate. On average, 806 out of 812 plants were correctly estimated on 53 days after planting (DAP) at the four- to six-leaf stage, which was considered as the optimal observation timing. For the 32-pixel patch size, root mean square error (RMSE) was 9 plants with relative RMSE (rRMSE) of 2.22% on 53 DAP, while the mean RMSE was 12 with mean rRMSE of 2.89% for all patch sizes. A sample size of 32 pixels was suggested to be optimal accounting for balancing performance and efficiency. The results of this study confirmed that it was feasible to estimate rapeseed stand count in field automatically, rapidly, and accurately. This study provided a special perspective in phenotyping and cultivation management for estimating seedling count for crops that have recognizable leaves at their early growth stage, such as soybean and potato.

Introduction

Next to soybean and oil palm, rapeseed (Brassica napus L.) is the third largest oil crop worldwide (Wang et al., 2010; Berrocoso et al., 2015; Bouchet et al., 2016). Statistical data from the Food and Agriculture Organization of the United Nations have shown that the world production of rapeseed in 2016 was more than 68 million tons, mainly from Canada (19.5 million tons), China (13.1 million tons), and India (6.8 million tons)1. Increasing rapeseed yield is a major focus for rapeseed researchers and cultivators (Godfray et al., 2010). The crop stand count at early growth stages is one of the most important parameters for the prediction of yield, density, and growth status (Jin et al., 2017; Liu S. et al., 2017; Zhao et al., 2018). Rapeseed leaf development in early growth stages includes cotyledons completely unfolded, first leaf unfolded, two leaves unfolded, three leaves unfolded, until nine, or more leaves unfolded (Weber and Bleiholder, 1990; Lancashire et al., 1991). Overlapping is intense throughout the entire leaf development stage. Moreover, small and irregular spacing makes rapeseed seedlings clustered. As a result, it is hard to detect and count each individual rapeseed seedling (Zhao et al., 2018). The traditional way of counting rapeseed seedlings at early growth stages is based on ground-level investigation which is labor-intensive and time-consuming (Jin et al., 2017; Liu T. et al., 2017; Naito et al., 2017). Since rapeseed seedlings are in small plant size, irregular spacing and complex overlapping at their early growth stages, timing of rapeseed seedling counting by field investigation depends on empiricism. Accordingly, the obtained records and data are subjective (Bucksch et al., 2014; Deng et al., 2018). The most serious problem is that ground-level investigation is destructive to the field crops (Jin et al., 2017; Liu S. et al., 2017; Deng et al., 2018). In addition, manual investigation brings more external factors into the plant growth environment, resulting in artificial error (Zhao et al., 2018). Therefore, an objective, precise, and automated rapeseed stand counting method will benefit researchers and producers (Araus and Cairns, 2014).

In recent years, plant scientists worldwide have shown great interest in phenotyping since this technology will bring a brand new perspective for agricultural planting and breeding (Furbank and Tester, 2011; Yang et al., 2014; Naito et al., 2017). Phenotyping provides a new tool to reveal phenotype traits determined by environmental and genetic factors (White et al., 2012; McCouch et al., 2013; Ghanem et al., 2015) and to estimate the growth status of plants and crops (Sadras et al., 2013; Maimaitijiang et al., 2017; Yang et al., 2017). Remote sensing technology provides an efficient means for crop phenotype data collection (Verger et al., 2014; Shi et al., 2016; Yu et al., 2016; Wendel and Underwood, 2017), which can record phenotyping traits, such as plant height, canopy temperature, architecture, stress, and color (Walter et al., 2012; Rahaman et al., 2015; Mir et al., 2019). In particular, UAVs draw much attention due to their unique advantages, such as noninvasive observation at low altitude, high resolution, frequent data collection, and deployment flexibility (Zhang and Kovacs, 2012; Ballesteros et al., 2014; Huang et al., 2016). Accordingly, UAVs are used as a platform to collect data and estimate vegetation growth parameters including biomass (Bendig et al., 2014), leaf area index (Córcoles et al., 2013), height (Van Iersel et al., 2018), yield (Geipel et al., 2014), canopy cover, and structure (Cunliffe et al., 2016). Overall, as a powerful and reliable platform, UAVs have shown their advantages to be used to collect crop data for phenotyping.

In studies of crop stand counting, Gnädinger and Schmidhalter (2017) found maize plant numbers had a strong correlation (R2 = 0.89) with the enhanced color digital counts using UAV imagery. Jin et al. (2017) extracted 13 object features containing color and texture from UAV images and further employed SVM for wheat classification, counting, and density estimation. Their results indicated that wheat density can be estimated when wheat plants had one to two leaves (Jin et al., 2017). Besides, field imagery for crop stand counting has been applied in some other crops such as corn (Shi et al., 2013; Varela et al., 2018), potato (Zheng et al., 2016; Sankaran et al., 2017), and cotton (Chen et al., 2018) with various remote sensing platforms. However, in most previous studies of crop stand counting, data were derived only from one observation at a certain day during the growth stages, and to our knowledge, there has been little research with data from multiple observations for rapeseed.

Object identification, classification, and counting are major tasks in image analysis (Davies, 2009; Blaschke, 2010; Ma et al., 2017; Zanotta et al., 2018). Several approaches have been developed for fast image processing and classification (Schowengerdt, 2012), including SVM, RF. These approaches are developed from using individual spectral, spatial, or textural information to integrating all the information (Linker et al., 2012). Gnädinger and Schmidhalter (2017) used regression analysis to find the correlation between maize plant number and green digital pixel counts based on spectral information. Jin et al. (2017) employed SVM model to classify wheat and to estimate seedling count and density by using spectral and textural features. These approaches can be used for counting trees (Gomes et al., 2018), fruits (Qureshi et al., 2017), and flowers based on satellite remote sensing imagery or UGV/UAV remote sensing imagery. Nevertheless, these regular non-automatic approaches required manual extraction of distinct features, which was time-consuming for a multi-observation study.

Convolutional neural networks have drawn wide attention due to their automated processing and good performance in image analysis (Lee et al., 2017; Pound et al., 2017; Sindagi and Patel, 2017). The CNN application for object detection and counting has been reported in previous studies, such as crowd detection and counting in public events (Sheng et al., 2016; Zhang et al., 2015, 2016) and animal detection and counting in the wild (Arteta et al., 2016). CNN approach is also reported to be applied for classification, detection, and counting for seeds, fruits, flowers, crops, and leaves in agriculture (Grinblat et al., 2016; Ramos et al., 2017). Pound et al. (2017) showed that CNNs were effective to distinguish, identify, and count wheat plants and their ears in glasshouse condition. Madec et al. (2019) used faster region-based CNN (Faster-RCNN) to detect and count wheat ears in a field and estimated the density of ears via high-resolution RGB imagery captured by a camera fixed on a boom. Besides, there are some studies using CNN for tree detection and counting. Li et al. (2016) employed a CNN algorithm to detect and estimate oil palm trees from four-band satellite images with 0.6 m spatial resolution, and they reported that more than 96% of trees were correctly detected. Csillik et al. (2018) used a customized CNN model for citrus tree detection and counting with overall accuracy greater than 95%. These studies demonstrated that CNN could be applied to remote sensing imagery captured from both satellites and UAVs. Crop stand counting using CNN has not been widely reported, especially for rapeseed stand counting. Ribera et al. (2017) reported a CNN model to estimate the number of sorghum plants by UAV imagery with a best mean absolute percentage error of 6.7%, which provided a solution for large field plant counting research using CNN. Using CNN in a field or in the wild is of more practical significance (Simonyan and Zisserman, 2014).

However, the situation of crops grown in the field was complex. Specifically, rapeseed seedlings grown in the field were in irregular spacing, complex overlapping, and different plant sizes, which made its counting difficult. Nevertheless, one of rapeseed phenotyping traits that UAV imagery collected was its leaf canopy. During the early growth stages of leaf development, rapeseed leaves play an important role reflecting their growth status (Weber and Bleiholder, 1990; Lancashire et al., 1991). Therefore, it is feasible to recognize individual rapeseed leaves with CNN and perform stand counting with reference to the existing knowledge of the number of rapeseed leaves per plant. The objectives of this study were to (1) recognize and count the individual rapeseed canopy leaves through UAV imagery with CNN, (2) establish and examine the models identifying the number of leaves per rapeseed seedling, and (3) propose an optimal timing to estimate rapeseed stand count.

Materials and Methods

Study Area and Experimental Design

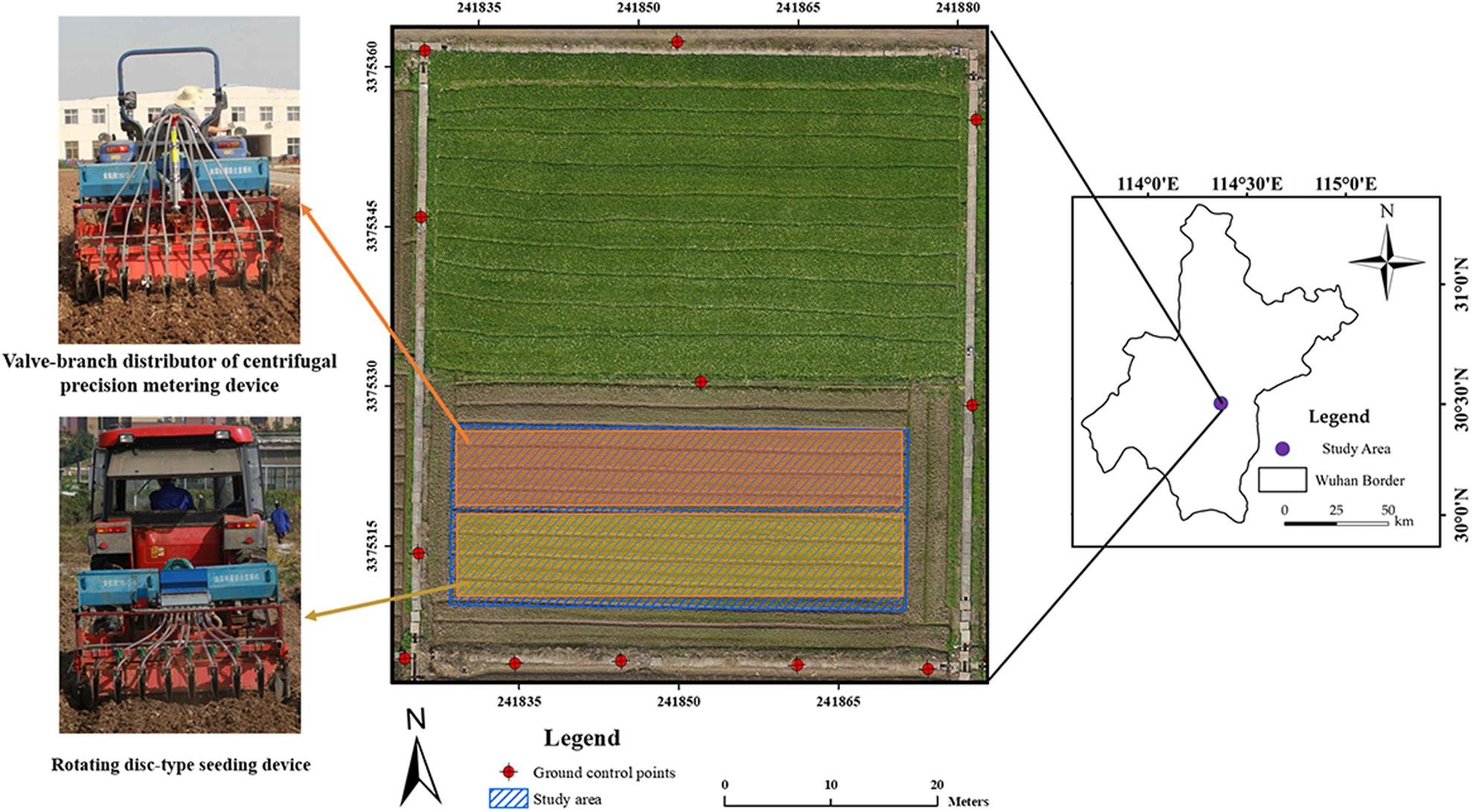

Figure 1 shows the study area and GCPs. The study area with center coordinates (30°28′57.11″N, 114°18′39.45″E) located near Huazhong Agricultural University in Wuhan, China. It covered an area about 50 m × 30 m with an average elevation of 27 m. In the field, a rapeseed cultivar named Huayouza 62 (B. napus L.) was sown with two planters including a valve-branch distributor-based centrifugal precision metering device and a rotating disk-type seeding device on November 4, 2017 (Figure 1). According to the experimental design, rapeseed was seeded by the two devices in eight rows simultaneously with 20 cm row spacing at a seeding rate of 5.5 kg/ha. No weed control management was implemented after sowing. There were 12 GCPs permanently arranged surrounding the study area. Reach RS+ (Emlid, United States) was used to collect GPS information.

Image Acquisition System

The image acquisition system was composed of a DJI rotocopter Matrice 600 UAV (DJI, Shenzhen, China) and a Nikon D800 camera (Nikon, Japan) in this study. The UAV could hover for 35 min without a payload. In this study, each mission took about 13 min with a payload of 2 kg. A GPS module was integrated into the UAV, which was tested with a horizontal accuracy of 0.5 m and a vertical accuracy of 1.5 m. The Nikon D800 camera fitted with a Nikon 50.0 mm f/1.4D lens was mounted on the UAV to collect nadir RGB images of the rapeseed field during flights. The complementary metal oxide semiconductor (CMOS) sensor of the camera had a size of 35.9 mm × 24.0 mm, capturing images with 7,360 × 4,912 pixels. The camera was also integrated with a GPS device to geotag the images and a wireless control to trigger the camera capturing imagery every 1.0 s automatically. An SD memory card was used to store JPEG images with a 24-bit format.

Each flight mission followed the same camera configuration during the whole rapeseed leaf growth stage. The frontal and side overlaps of the flight path were 80.0 and 70.0%, respectively. Two perpendicular flight paths were conducted to cover and image the rapeseed canopy. In this study, the UAV was flown at a ground speed of 3 m/s and at a height of approximately 30 m above ground level, and spatial resolution of image was about 0.18 cm.

Unmanned Aerial Vehicle Data Collection and Preprocessing

Image collection was scheduled from November 17, 2017, the 14th DAP, to January 12, 2018, with an interval of 7 days. This period covered the whole rapeseed leaf development stage (Weber and Bleiholder, 1990; Lancashire et al., 1991). Some adjustments were made because of rainy or heavy windy weather. As a result, data collection started on November 17, 2017, and ended on January 10, 2018, with specific dates on 14, 23, 32, 39, 46, 53, 58, and 68 DAP from 12:30 p.m. to 2:00 p.m. The one- to three-leaf stage was before 40 DAP and the three- to four-leaf stage lasted from 40 to 50 DAP. The four- to six-leaf stage ranged from 50 to 60 DAP, and the seven-leaf stage and beyond was after 60 DAP.

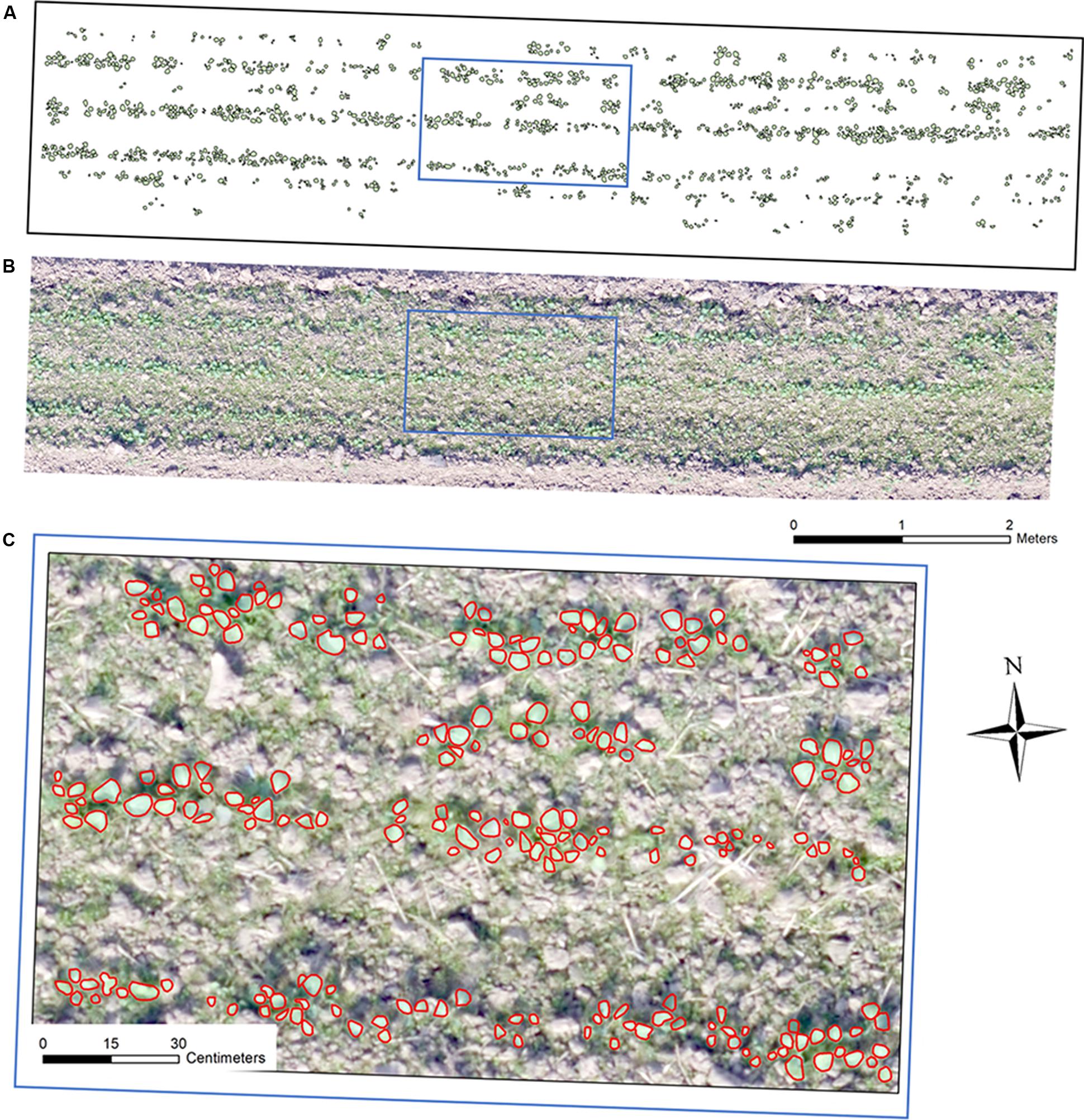

Free software Capture NX-D 1.2.1 from Nikon (Nikon, Japan) was utilized to correct geometric distortion for the UAV captured individual images. Secondly, an ortho-mosaic image for each collection was generated using Pix4Dmapper software (Pix4D, Switzerland). In this step, the 12 GCPs were added for image mosaicking. This study used eight sample plots (four plots per seeding device) for analysis, which were subsets from each ortho-mosaic image by ArcMap 10.3 (ESRI, United States). Each plot was in the size of 9.5 m × 2 m (Figure 2B).

Figure 2. Ortho-mosaic RGB image of a sample plot with a size of 9.5 m × 2 m: (A) manual interpreted and annotated rapeseed leaves, (B) ortho-mosaic RGB plot image, (C) RGB image overlapping with annotated rapeseed leaves.

These eight sample plots were divided into an image training dataset containing six sample plots and a test dataset consisting of two sample plots during the whole processing and analysis. Since manual counting in the field could possibly change the real field condition, making the data unreliable for multiple observations, this study manually interpreted and annotated the rapeseed leaves over the eight sample plots for each observation date using ArcMap 10.3 (an illustration in Figure 2), which was mainly described in the next section. This study also used image-based manual rapeseed seedling count as the ground truth reference. Data of 14 and 23 DAP were not used because rapeseed seedlings were too small to distinguish in imagery. Therefore, there were six remaining observation dates over the eight study plots (six for training and two for testing) in this study.

All the processing and analysis were executed on a computer with an Intel (R) Core (TM) i7-6800K CPU and one NVIDIA GeForce GTX 1060 6GB GPU, and the memory of the computer was 32 GB.

Image Processing and Data Analysis

Rapeseed Leaf Recognition Based on a Convolutional Neural Network

Convolutional neural networks are the most popular machine learning algorithms applied to various computer vision tasks, such as numeral recognition, face recognition, and handwriting recognition. Some software packages such as Python and Matlab provide a convenient environment for CNN modeling, and there are some open-source CNN codes online. However, it is still hard for a nonprofessional machine learning researcher to implement the entire flowchart of CNN modeling, including CNN software operation environment configuration, codes modification, as well as parameter adjustment. In addition, such CNN software is not suitable for processing geospatial information and geoinformation analysis. Thus, this study employed the easy-to-use image analysis software eCognition Developer 9.3 (Trimble, United States), which contains a CNN module (Trimble, 2018). The module can be used to recognize objects in images based on the Google TensorFlowTM library-create (Csillik et al., 2018; Trimble, 2018).

This approach was a patch-based CNN algorithm according to the categorization reviewed by Sindagi and Patel (2017). It was convenient and interactive when researchers use CNN for image analysis in this software. The operation in this software was mainly composed of four steps: (1) to generate labeled sample patches; (2) to create a CNN; (3) to train a CNN; and (4) to apply a CNN (Trimble, 2018). In this study, the labeled sample patches were cropped from the training sample plot images according to three classes including rapeseed leaves, weeds, and bare soil.

To label the three classes, the rapeseed leaves from the eight sample plots for each observation date were manually interpreted and annotated using ArcMap 10.3, as shown in Figure 2A. The labeled leaves were used as a reference, representing the ground truth leaves. The overlapping of rapeseed leaves was unavoidable, and each recognized canopy rapeseed leaf was outlined. Furthermore, the weeds increased with time since there was no weed control management in the field to maintain the original ecological scene. As DAP increased, the rapeseed leaves and weeds were much distinguishable by size, color, texture, and pattern in high-resolution UAV images. Therefore, after interpreting and annotating the rapeseed leaves, we used ExG-ExR, a color vegetation index whose ability for green pixel identification was confirmed (Meyer and Neto, 2008; Zhao et al., 2018) to label the remaining green pixels as weeds by eCognition Developer. The pixels that were not in green were labeled as bare soil through normalized green minus red difference index (NGRDI). The pixels whose values of NGRDI less than 0 were classified as bare soil. In this study, the input data of eCognition Developer include RGB imagery in TIFF format exported from ArcMap, the manual-annotated rapeseed leaf polygon shapefile exported from ArcMap.

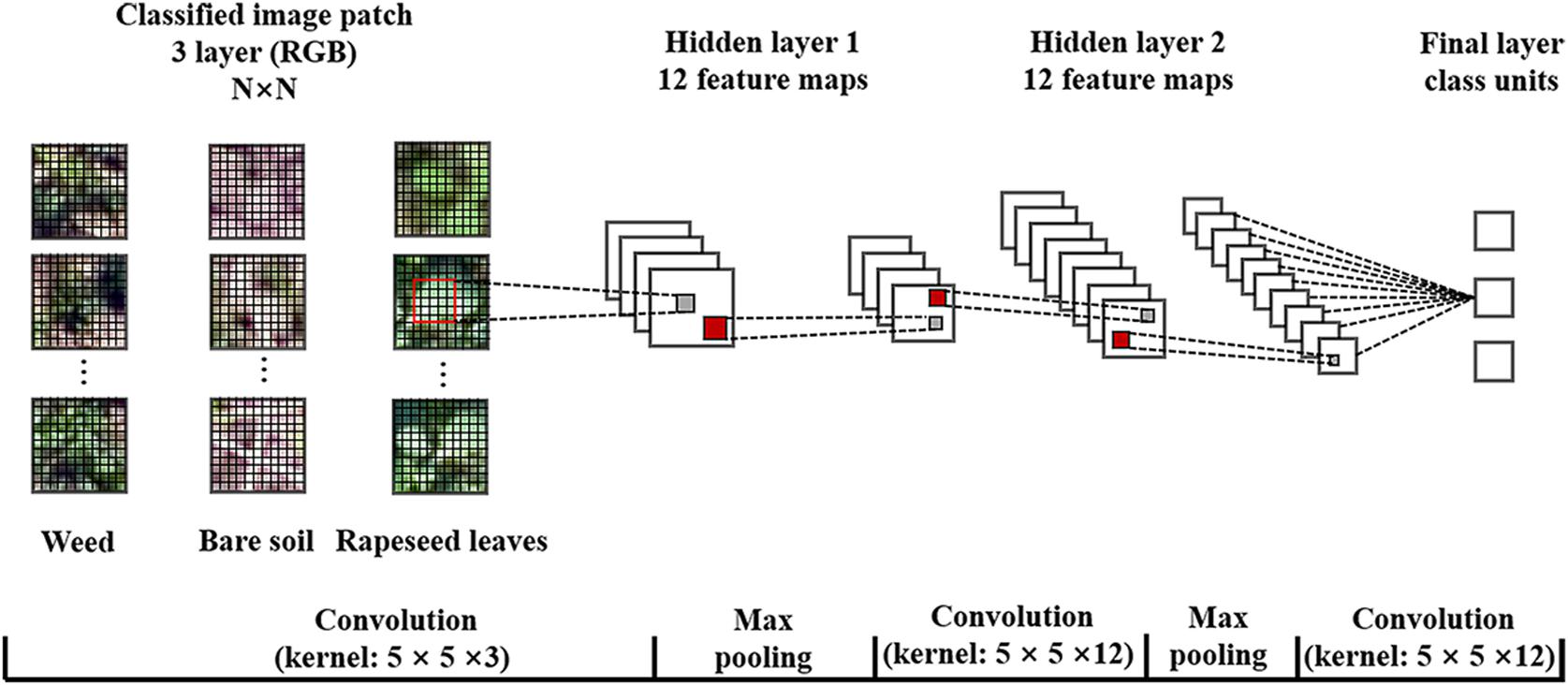

The parameters during the operation included sample patch size, number of hidden layers, kernel size, number of feature maps, max pooling, and learning rate. According to the rapeseed leaf development stages from one leaf to more than seven leaves during the investigation period, this study generated five sizes of sampling patches for CNN training, which included 16 × 16, 24 × 24, 32 × 32, 40 × 40, and 48 × 48. In addition, the default number of feature maps was 12, while the learning rate was at 0.0005 in the software. Based on the five patch sizes, the optimal kernel size was 5 × 5 after trial-and-error processing. Therefore, this study structured an initial CNN model as shown in Figure 3, containing two hidden layers, two max pooling layers, and one fully connected layer. A previous study using a similar CNN model showed great performance for oil palm tree detection and counting with satellite images (Li et al., 2016). We generated 20,000 sample patches of rapeseed leaves, 10,000 weed patches, and 10,000 bare soil patches from the training dataset in each observation date for five sample patches totally.

Figure 3. Structure and parameters of the convolutional neural network (CNN) model used in this study. RGB, red-green-blue.

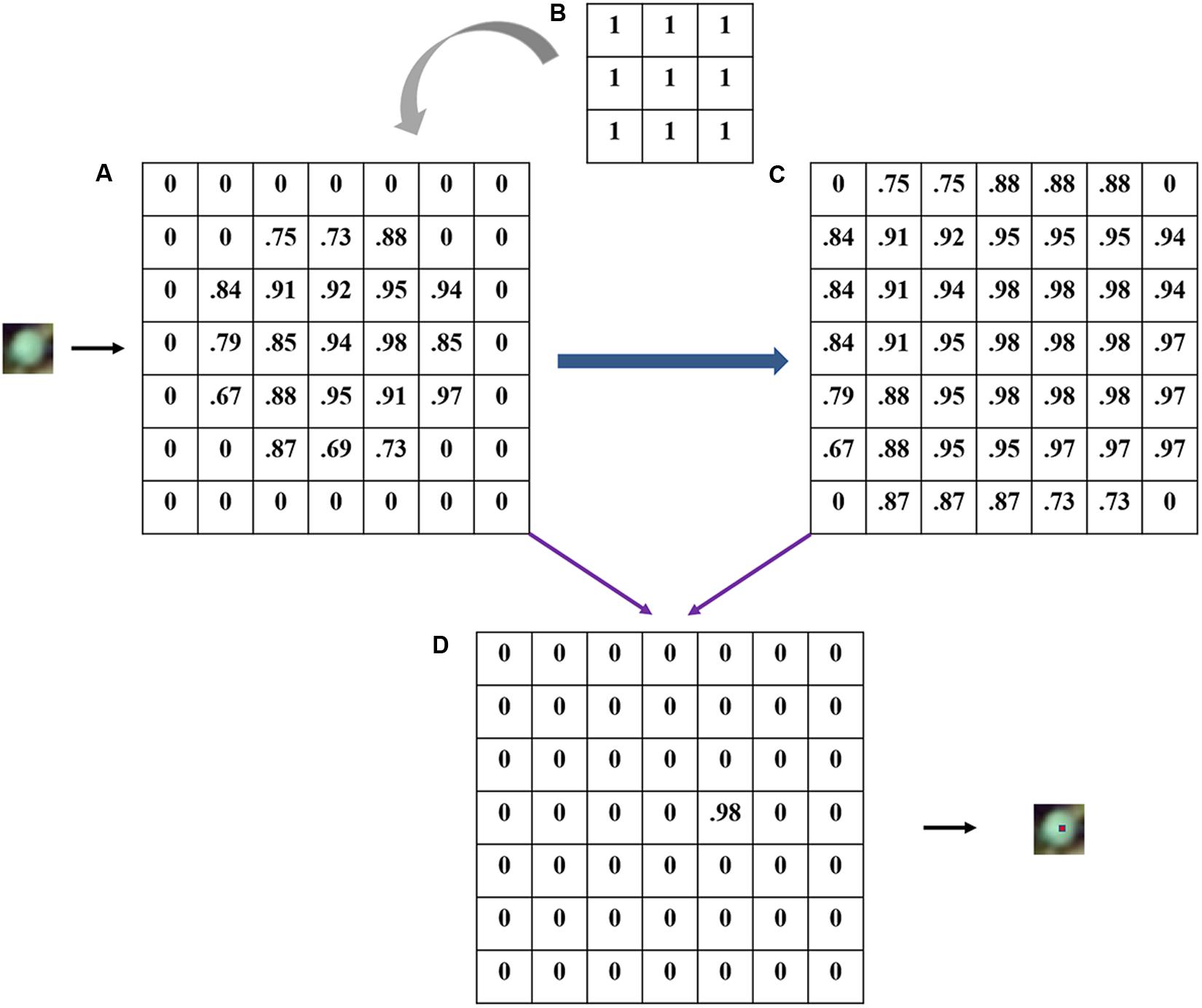

The number of recognized rapeseed leaves was counted after the dilate operation in the outputted heat map, according to the number of local maximal points. A heat map with each pixel value ranging from 0 to 1 was output by the CNN model, representing the possibility of the target class for rapeseed leaves. High pixel values close to 1 in the heat map indicated a high possibility of rapeseed leaves, while values close to 0 indicated a low possibility (Csillik et al., 2018; Trimble, 2018). Based on the pixel values, local maximal pixels whose threshold ranged from 0.5 to 1 (step on 0.01) were iteratively searched to locate a rapeseed leaf through the dilate operation in eCognition as the locations of rapeseed leaves were expected to coincide with local maximal and high values in the heat map (Figure 4).

Figure 4. An illustration of local maximal possibility detection with 3 × 3 filter (actual 5 × 5 in the study): (A) possibility heat map; (B) a 3 × 3 dilation filter; (C) the maximal layer after dilating; (D) locating target.

In this study, the size of the dilation filter was defined as 5 × 5, which was the same as the kernel size in CNN. Afterward, a maximal layer was generated, reflecting the maximum value in the matrix of 25 pixels in the heat map (Figure 4C). Accordingly, when a pixel in the maximal layer has the same value with the pixel in the same location of the heat map, this pixel inherited the value. Otherwise, the pixel was valued 0 (Figure 4D). Each located point was considered to represent a CNN-detected rapeseed leaf. Thus, the number of local maximal points was considered as the number of recognized rapeseed leaves.

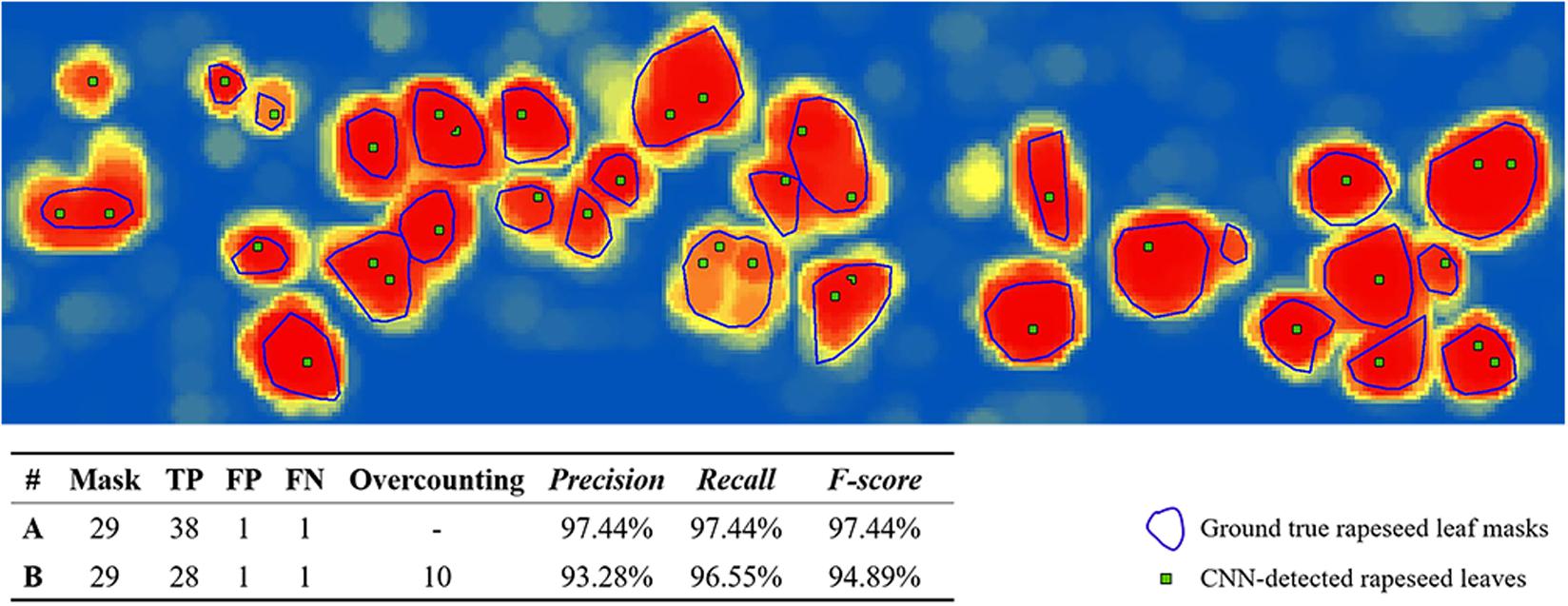

Overcounting occurred when the number of CNN-detected rapeseed leaves sometimes was larger than the actual number of rapeseed leaves, as a big leaf might correspond to several located maximum points (Figure 5). In Figure 4, if a pixel far away from the pixel of 0.98 also had a value of 0.98, two targets might be located in Figure 4D. Merging the adjacent points with a tolerance was a useful means for tree canopy recognition and counting (Li et al., 2016), but this method cannot be applied to rapeseed leaf counting in this study since the boundary of rapeseed leaves was less distinct than that of a tree canopy. Moreover, the sizes of rapeseed leaves were also different. Therefore, it was difficult to determine a precise distance for merging rapeseed leaf located points. Inspired by the method of using ground truth masks for assessing the accuracy of the estimated plant centers (Chen et al., 2017), we used the manual interpreted ground truth rapeseed leaf outlines as masks to record and analyze the overcounting rate (Roc) of the local maximum points for each investigation (Figure 5).

Figure 5. Illustration of overcounting statistics. CNN, convolutional neural network; FN, false negative; FP, false positive; TP, true positive. A was calculated without overcounting rate, B was calculated with overcounting rate.

The illustration in Figure 5 was a subset of CNN recognition results on 53 DAP with the 32-pixel patch size and a local max value of 0.65. The blue lines showed rapeseed leaf outline masks, and each mask represented a ground truth rapeseed leaf. The green points illustrated the CNN-detected rapeseed leaves.

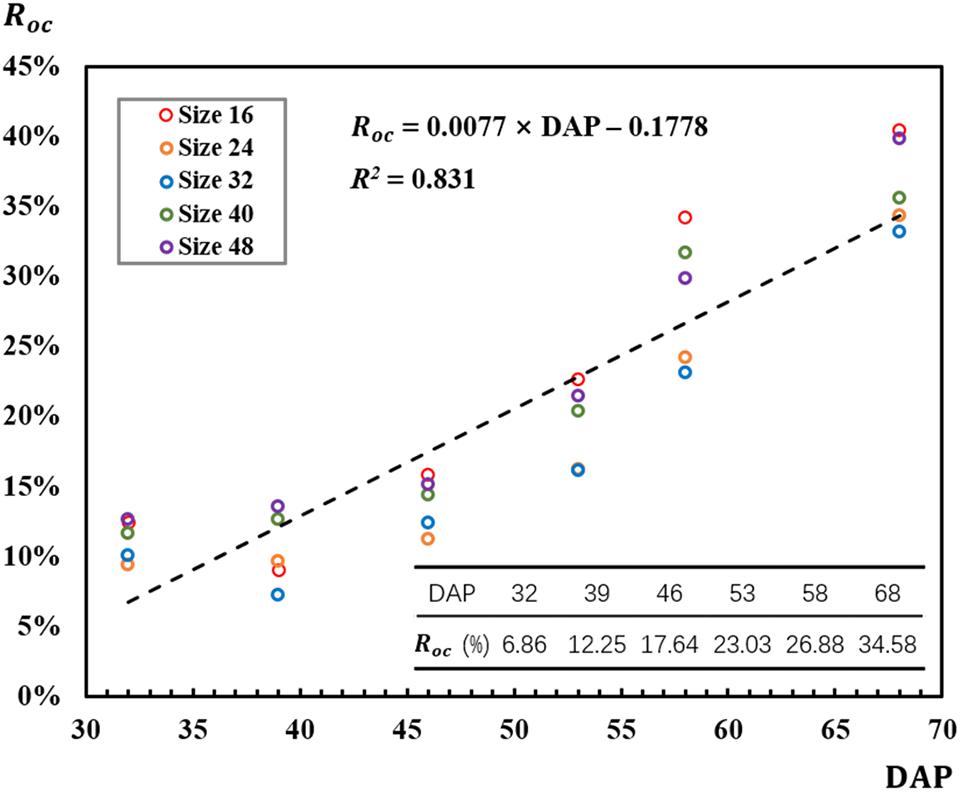

As mentioned, the sizes of leaves influenced the number of located maximum points representing the CNN-detected rapeseed leaves, and DAP was a significant factor of the leaf size. Thus, it could be assumed that in our study duration, the Roc might be related to DAP. For CNN detection and counting, Roc was calculated by:

where N is the number of CNN-detected rapeseed leaves, M is the number of ground truth rapeseed leaf masks, Ci is the number of located maximum points inside a ground truth rapeseed leaf mask for mask i, when mask i has more than one point.

Precision, Recall, and F-score were used in this study to evaluate leaf detection results (Xiong et al., 2017; Zhao et al., 2018). Precision and Recall are defined by true positive (TP), false positive (FP), and false negative (FN):

Combined with the situation of overcounting, the following equations can be inferred by Eqs. (1), (2), and (3) in this study:

The unique recognized local maximum point that is inside a ground truth mask is considered as a TP. Accordingly, TP here is the accurate number of CNN-detected rapeseed leaves. If a local maximum point is outside a mask, then this maximum point is considered as a FP. A mask is identified as a FN if there is no point recognized inside (Figure 5). F-score was used as the final exponent to evaluate the CNN-detected rapeseed leaf recognition accuracy. Precision, Recall, and F-score in this study can be expressed as follows:

Leave-One-Out Crossing Validation Regression Modeling for Rapeseed Seedling Counting

A strong relationship between the rapeseed seedling counting and the number of unfold leaves was expected because the identification of rapeseed at early growth stages was based on the number of unfolded leaves (for example, the one- to three-leaf stage and four- to six-leaf stage). In this study, the models that counting rapeseed seedlings through the number of manual-interpreted rapeseed leaves were first established and verified, which were considered as the reference describing the number of leaves per rapeseed seedling plant. Then the number of CNN-recognized rapeseed leaves was applied to these models to evaluate the accuracy of rapeseed stand count estimation at different growth stages.

The LOOCV regression modeling method was used to establish the models of seedling stand counting. LOOCV was effective in the case of small sample size. It was a special case of K-fold crossing validation, when K was equal to the number of samples. One sample was excluded for validation, and the rest samples were used for training. The same operation was repeated for K times so that each sample could be used for validation so that the results were unbiased. The LOOCV regression modeling was conducted by Python Spyder in Anaconda3 (64-bit) (Anaconda Software Distribution, 2016. Computer software).

In this study, there were six training sample plots for each investigation. Thus, K was 6, and LOOCV regression modeling was repeated for six iterations. The optimal rapeseed seedling model parameters of the corresponding DAP were further obtained by calculating an average value of the iteration results. Mean absolute error, RMSE, and coefficient of determination () were used to verify these models. They were calculated as follows:

where n is the number of sample plots, yi is the investigated ground truth rapeseed seedling stand count for sample i, is the model-predicted rapeseed seedling stand count for sample i, is the average value of the investigated ground truth rapeseed seedling stand count for all samples in each observation date.

Performance Evaluation of Counting Seedlings

The number of CNN-recognized rapeseed leaves was used to evaluate the eventual performance of counting seedlings in this study. The number of CNN-recognized rapeseed leaves corresponding to the best value of F-score was applied to the models. Relative RMSE (rRMSE) was calculated as follows:

where is the average value of the investigated ground truth rapeseed seedling stand count for all samples in each observation date.

Results

Counting Rapeseed Leaves Recognized by Convolutional Neural Networks

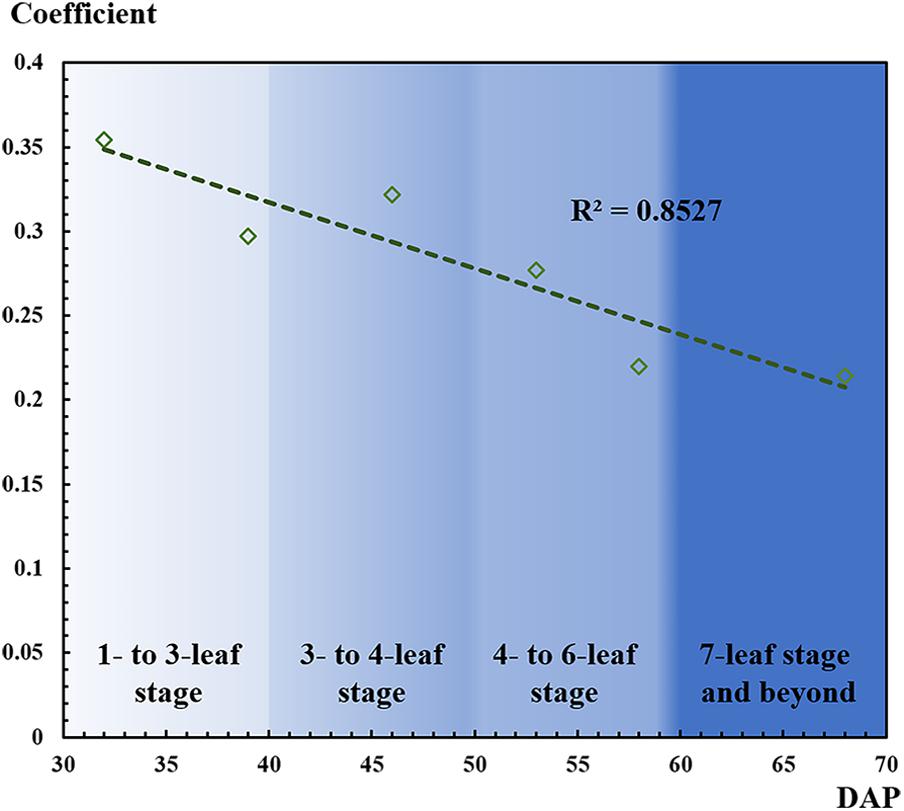

The strong correlation (R2 = 0.831) between Roc and DAP conformed the impact of DAP on Roc that Roc increased as DAP advanced during the observed rapeseed leaf development stages (Figure 6). The bigger leaves caused the more detected local maximal points for a leaf, thus resulting in the overcounting situation. As shown in Figure 6, Roc ranged from 7% on 39 DAP for the 32-pixel patch to 40% on 68 DAP for the 16-pixel patch. According to the correlation in Figure 6, Roc was estimated to be 6.86% for 32 DAP, 12.25% for 39 DAP, 17.64% for 46 DAP, 23.03% for 53 DAP, 26.88% for 58 DAP, and 34.58% for 68 DAP. In Figure 6, Roc for the 32-pixel patch (blue legend) was lower than that for the other four patch sizes in most DAPs.

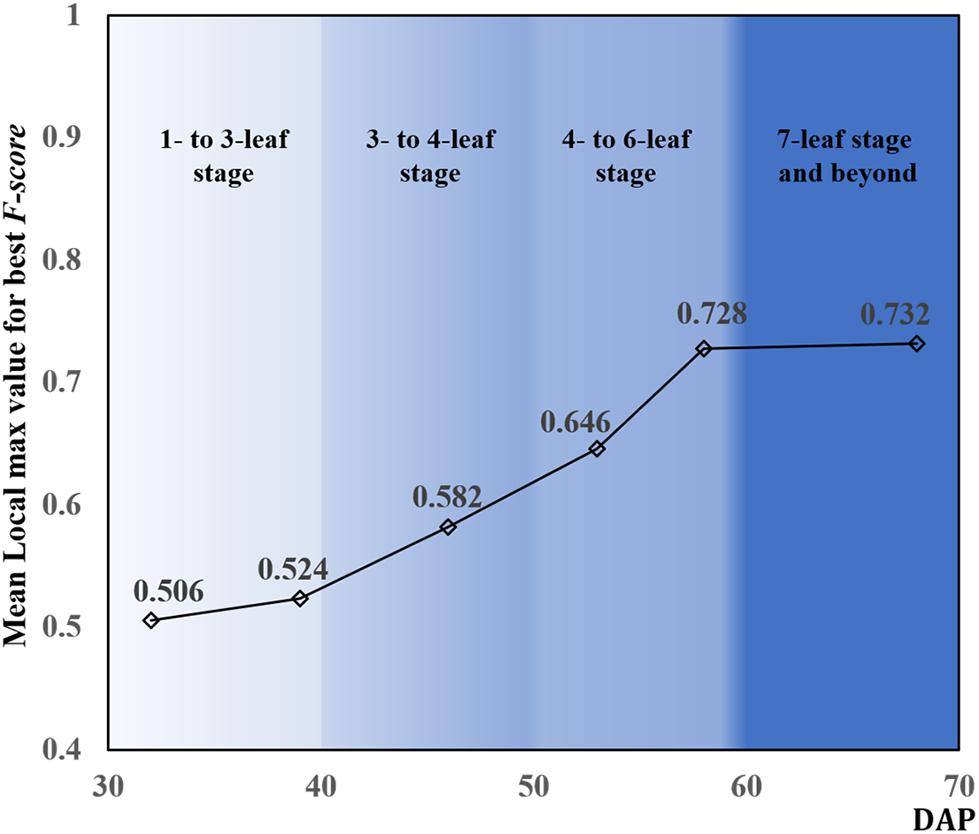

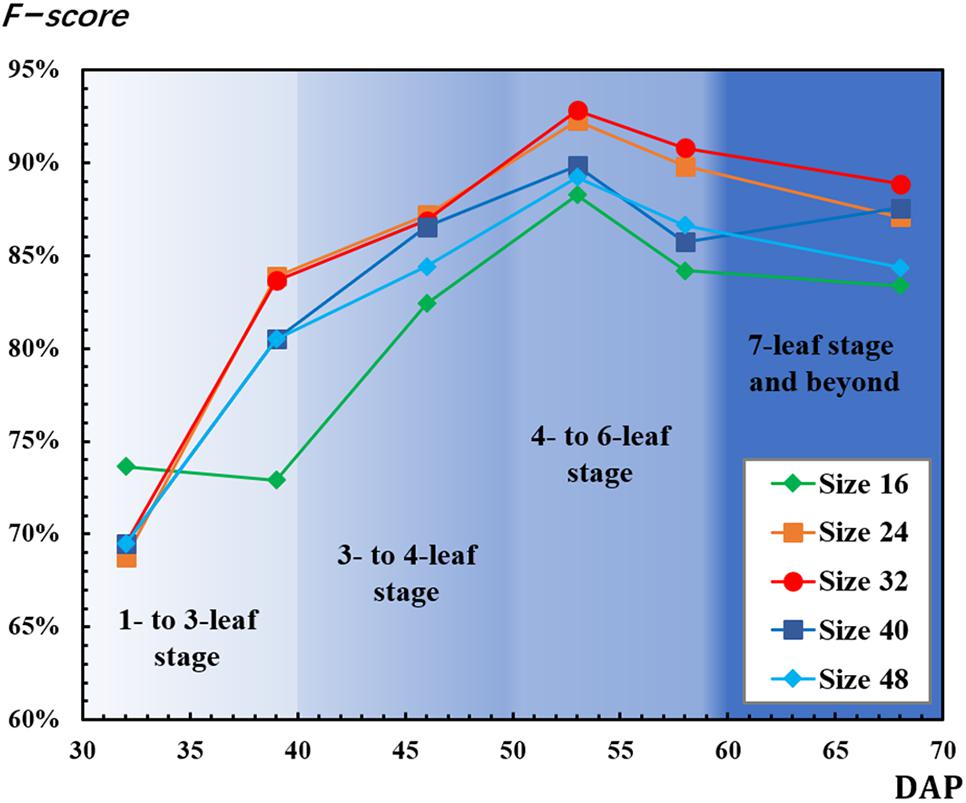

F-scores used for CNN-based recognition accuracy evaluation were calculated by equations (9), (10), and (11). The mean F-scores based on local maximal values ranging from 0.5 to 1 were calculated (Figure 7). For most of the patch sizes, F-scores increased from 32 to 53 DAP and decreased after 53 DAP. However, F-scores decreased from 32 to 39 DAP for the 16-pixel patch and increased from 58 to 68 DAP for the 40-pixel patch. For all patch sizes, F-scores had the highest values on 53 DAP. On 53 DAP, the ranking of F-score values from highest to lowest was 92.83% for the 32-pixel patch, 92.26% for the 24-pixel patch, 89.84% for the 40-pixel patch, 89.21% for the 48-pixel patch, and 88.26% for the 16-pixel patch.

Figure 7. Results of F-score for convolutional neural network (CNN)-detected rapeseed leaf recognition and counting.

Figure 8 shows the mean local max values achieving best F-score among the testing data during the whole observed leaf development stage. DAP also influenced the variation of local max values. The local max values increased with DAP and leveled off after 58 DAP. With these local max values, the number of rapeseed leaves recognized by CNN was counted for each observation.

Revealing the Number of Rapeseed Leaves per Seedling

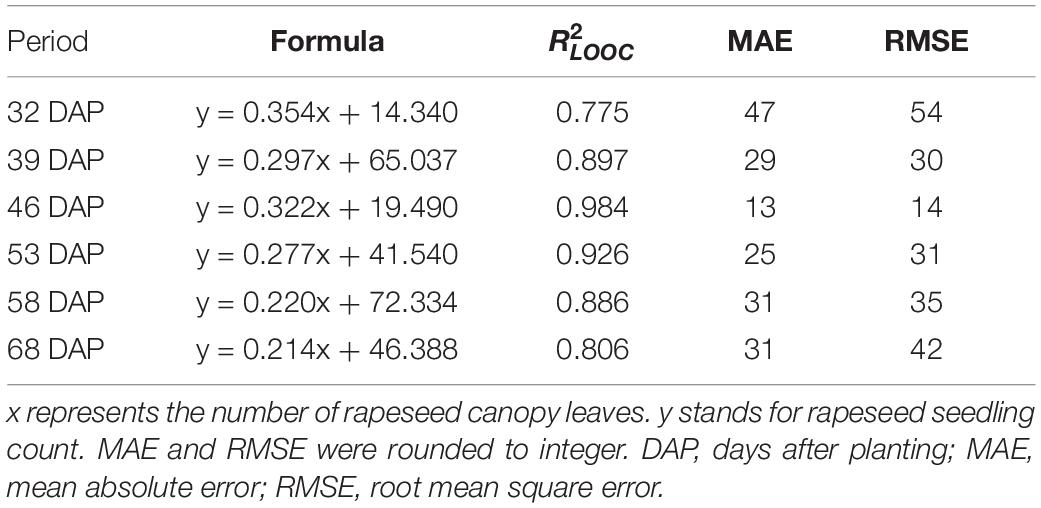

A strong correlation between the seedling counting and the number of canopy rapeseed leaves was established by using the LOOCV method over data of training sample plots for each observation (Table 1). All sub-models were significant (p-value < 0.05) except for the sub-model of the first iteration on 32 DAP exhibiting a p-value of 0.058. Afterward, the relationship defining the number of rapeseed leaves per seedling was revealed by the formulas in Table 1 for each observation.

These formulas were verified by the testing data. The ordering of from highest to lowest was 0.984 on 46 DAP, 0.926 on 53 DAP, 0.897 on 39 DAP, 0.886 on 58 DAP, 0.806 on 68 DAP, and 0.775 on 32 DAP. RMSE ranged from 14 plants to 54 plants, and MAE ranged from 13 plants to 47 plants. Results showed that the relationship revealing the number of rapeseed leaves per seedling obtained the best performance on 46 DAP and satisfactory performance on 53 DAP. As a result of the strong correlation revealing the number of rapeseed leaves per seedling, the approach using precise CNN-recognized leaves counting to estimate the seedling stand count was feasible and expected.

Performance of Estimating Seedling Stand Count With Convolutional Neural Network-Recognized Leaf Counting

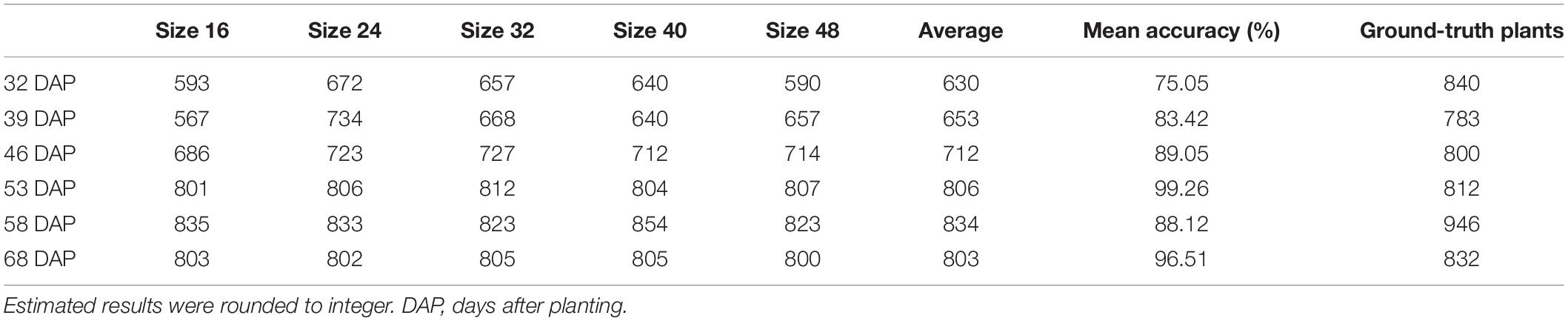

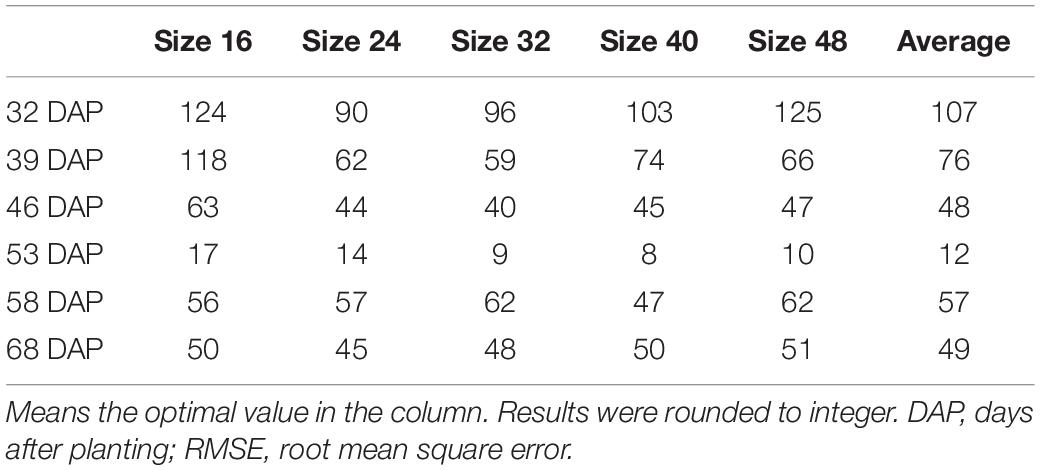

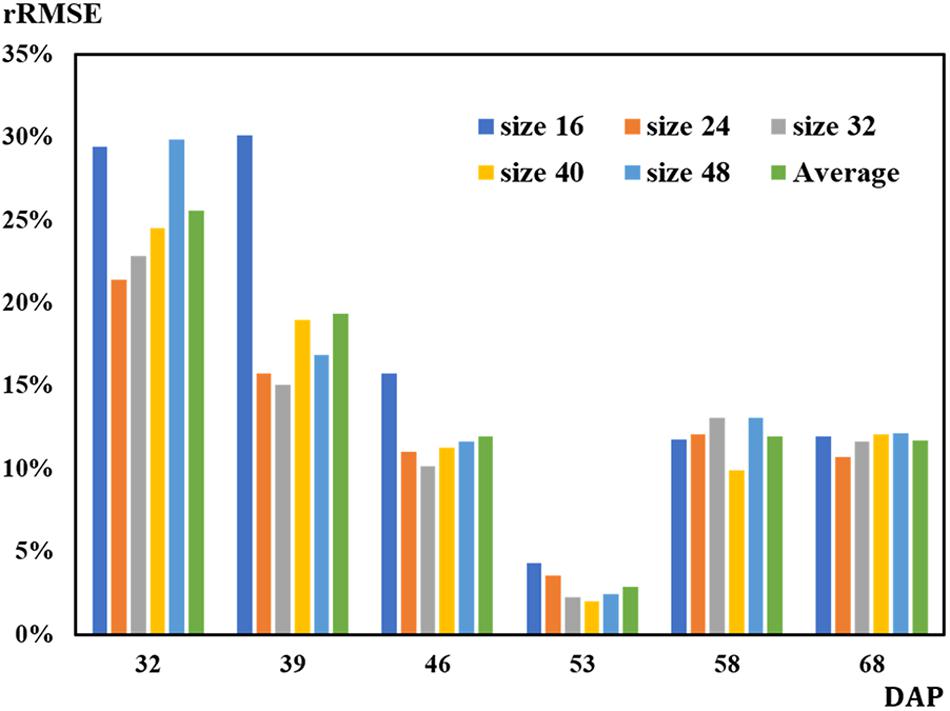

Convolutional neural networks-recognized leaf counting (see section “Counting Rapeseed Leaves Recognized By Convolutional Neural Networks”) was used to estimate seedling stand count according to the revealed relationship of the number of rapeseed leaves per seedling (Table 1 in section “Revealing the Number of Rapeseed Leaves Per Seedling”). Table 2 gives the results of estimating rapeseed seedling stand count. For all patch sizes, the best mean accuracy was achieved on 53 DAP with 99.26%. On average, 806 out of 812 plants were correctly estimated on 53 DAP at the four- to six-leaf stage. With the 32-pixel patch size, almost all the seedling stand counts were correctly estimated on 53 DAP. Some errors were counteracted mutually as a result of summing up the estimated seedling stand counts from two test sample plots. RMSE and rRMSE are presented in Table 3 and Figure 9, respectively.

On 32 DAP, a maximum mean RMSE of 107 plants was observed, while on 53 DAP, a minimum mean RMSE of 12 plants showing the best performance was obtained. On 53 DAP, the rRMSE was 1.99% for the 40-pixel patch size (Figure 9). For the 32-pixel patch size, its RMSE was nine plants with an rRMSE of 2.22%. The ordering of mean RMSE from lowest to highest was 12 on 53 DAP, 48 on 46 DAP, 49 on 68 DAP, 57 on 58 DAP, 76 on 39 DAP, and 107 on 32 DAP, with the best performance on 53 DAP. Similarly, the ordering of rRMSE was 2.89% on 53 DAP, 11.71% on 68 DAP, 11.97% on 46 DAP, 11.97% on 58 DAP, 19.36% on 39 DAP, and 25.59% on 32 DAP.

Discussion

Influence of Days After Planting Corresponding to Leaf Development Periods

Huayouza 62 (B. napus L.) used in the study is a member of the family Brassicaceae (Weber and Bleiholder, 1990; Lancashire et al., 1991). Based on the characteristics that its leaf development periods were highly related to DAP, the number of canopy leaves was used for seedling stand count modeling and estimation in this study. Chen et al. (2018) found that DAP had a strong correlation with the number of germinated seeds (R2 = 0.938) and with average plant size (R2 = 0.936). In our study, results also strongly illustrated that DAP played an important role on the leaf recognition and counting, revealing the relationship between seedling stand count and the number of leaves per seedling, and the eventual estimation of seedling stand count with CNN-recognized leaf counting. Finally, the best performance was achieved on 53 DAP for leaf recognition and counting as well as estimating seedling stand count with the number of CNN-recognized leaf counting (Figures 7, 9 and Tables 2, 3).

This study not only used DAP but also tried to associate it with the leaf development period and to determine the influence of DAP corresponding to the leaf development periods on rapeseed leaf counting (Figures 7, 8). DAP is an essential dimension unit to describe the growth process of crops, and it is easy to comprehend and quantify. Nevertheless, the growth situation based on DAP varies with different regions where crops and plants are cultivated with different treatments, even for the same cultivated variety. Sankaran et al. (2017) reported that it was hard to estimate the number of potato plants after 43 DAP because of the within-row canopy closure, even though the best correlation coefficient (r = 0.83) between manual plant counts and image-based counts was achieved on 32 DAP. This study presented a novel approach to understand the growth status of rapeseed seedlings and to count the seedlings in terms of leaf development periods.

In Figure 6, overcounting rate increased with DAP. From another perspective, the increasing overcounting rate was mainly caused by the development of leaves or the increasing size and number of rapeseed seedling canopy leaves. Rapeseed seedlings experience a process from new leaf unfolding to leaves gradually growing with DAP. In this study, rapeseed had more than seven leaves in the last observation period, which caused a serious overlapping. Moreover, the bigger leaf size influenced the recognition. According to the trend and strong correlation between overcounting rate and DAP (R2 = 0.831), when the number of leaves developed from one to more than seven leaves, overcounting rate might keep increasing.

As mentioned, as the number of leaves increased, leaf overlapping and saturation became more intensified. In Figure 7, F-score increased from the one- to six-leaf stage, and then decreased after that. This peak of F-score was mainly related to the leaf development. When rapeseed seedlings were in the one- to six-leaf stage, the number of unfolded leaves increased dramatically, and the characteristics of leaves became more and more obvious so that the recognition performance improved. After the six-leaf stage, leaf overlapping and saturation resulted in the decreasing recognition accuracy. On the other hand, in Figure 8, the local maximal value for CNN recognition did not increase after the six-leaf stage. Moreover, a strong correlation (R2 = 0.835) between the coefficient of the seedling counting models (Table 1) and DAP was found (Figure 10).

Figure 10. Correlation between coefficient of the seedling counting models and days after planting (DAP).

When a DAP was replaced by its corresponding leaf development stage, the coefficients were approximately the inverse of the number of leaves at the given stage. For example, at the one- to three-leaf stage, the coefficients on 32 and 39 DAP were 0.354 and 0.297, which were approximately the inverse of 3 (1/3 or 0.333). Similarly, at the four- to six-leaf stage, the coefficients on 53 and 58 DAP were 0.277 and 0.220, which were approximately the inverse of 5 (1/5 or 0.200). To some extent, this correlation demonstrated another perspective to understand the counting models through the leaf development stage, but more data are needed to verify the result more scientifically.

Another interesting finding related to the leaf development stage of rapeseed seedlings was that the timing of 53 DAP corresponding to the four- to six-leaf stage was near winter solstice in the Chinese calendar. Winter solstice, a meaningful solar term, is known as a significant time for winter rapeseed planting as well as for other agricultural activities. Many Chinese agronomic researchers and cultivating specialists regard winter solstice as a critical timing for rapeseed characteristic measurement. It is also considered as a mid-growth phase of rapeseed leaf development with about five leaves. In fact, the day of winter solstice was 4 days before 53 DAP. Therefore, the DAP corresponding to the leaf development stage was confirmed to be reliable in this study. Our findings offered a scientific explanation for the agricultural practice in China and should be referential for relevant studies and practices.

Influence of Parameters in Convolutional Neural Network

Influence of Patch Size

It is necessary to define patch sizes according to the specific purposes and the image target for the application of CNN. In this study, sample patches were cropped from the entire labeled UAV image. Moreover, the trained CNN model was applied to a large-scale field image. This study employed five sample patch sizes with 16, 24, 32, 40, and 48 pixels.

These sizes were determined mainly by the image resolution and the growth situation of rapeseed. Madec et al. (2019) assumed that resolution around 0.3 mm could allow to detect and count wheat ears for high-throughput phenotyping based on UAV-captured RGB images using Faster-RCNN. However, their research used imagery captured by a camera fixed on a boom, and their assumption was not verified with UAV images in their work. The imagery used in this study was captured by UAV in the field 30 m above ground level. If the altitude was more than 30 m, the resolution was not enough for recognition. If the UAV imagery was captured below 30 m, it would reduce the efficiency of field plot data collection. Furthermore, the turbulence generated by the UAV made the rapeseed leaves shake, leading to the unfocused targets in the imagery.

Meanwhile, the leaf size of rapeseed was another factor determining the patch size. In this study, an overlarge patch size such as a 128-pixel patch could contain redundant and useless information. On the contrary, an undersized patch would cause underfitting recognition results because the patch would be so small that the features between rapeseed leaves and weeds could not be distinguished. Employing a patch-based CNN algorithm (Sindagi and Patel, 2017) brought about a problem that the patch could not cover an oversized rapeseed leaf, resulting in a larger number of detected objects than the actual number called overcounting in this study (Figure 5). Therefore, the patch size of UAV imagery was limited by its imaging mode and resolution, field status, and the characteristics of objects.

In this study, the areas of the five patches were calculated and matched with the leaf size of rapeseed for each observation. The area of the 48-pixel patch was calculated to be 74.6 cm2, and the maximum area of rapeseed leaf obtained on 68 DAP was almost 63 cm2. Based on this, a sampling patch cropped with 48 pixels could meet the minimum requirement for covering an entire rapeseed leaf. However, each patch size was used for all the observations, thus the same patch size had a dynamic influence on the counting results as DAP changed. In Figure 7, the 16-pixel patch exhibited the optimal performance on 32 DAP, which was attributed to the fact that the 16-pixel patch could match the leaf size better on 32 DAP than on the other DAPs. On the other DAPs, the 24-pixel patch and the 32-pixel patch matched the leaf size better than the other patch sizes achieving better F-score in Figure 7.

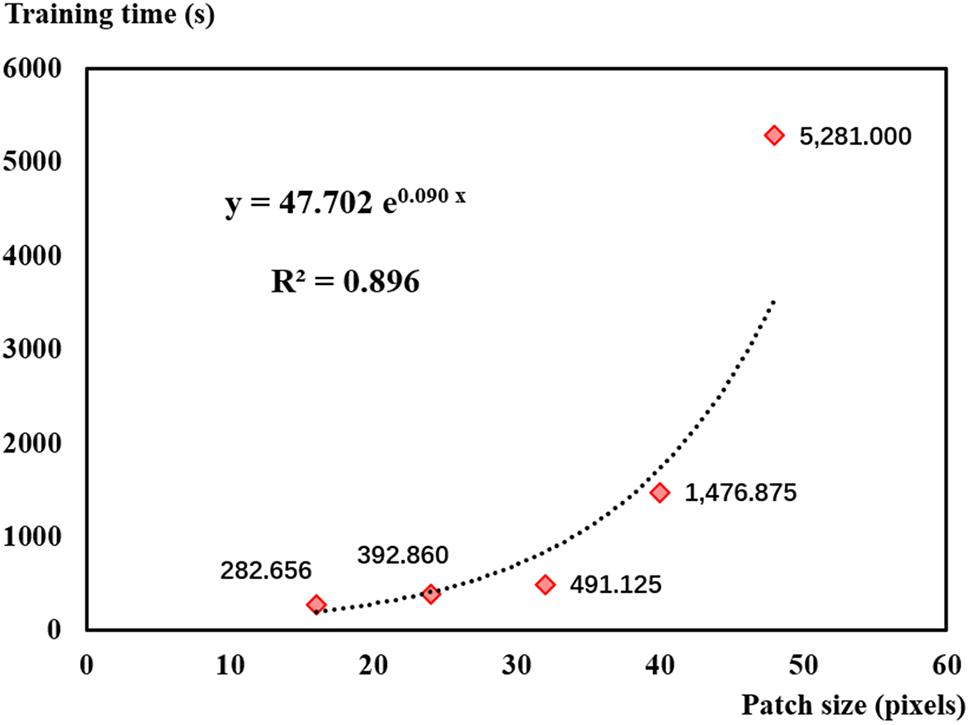

In addition, patch size significantly affected training time. Figure 11 shows that training time increased exponentially with patch size. Training time was more than 5,200 s (about 90 min) when using the 48-pixel patch. Although using the 16-pixel patch could save time, its results were not desirable in this study. According to Figure 11 and based on the results of leaf recognition and seedling estimation, the 32-pixel patch was selected as the optimal sampling size balancing the performance and efficiency in this study.

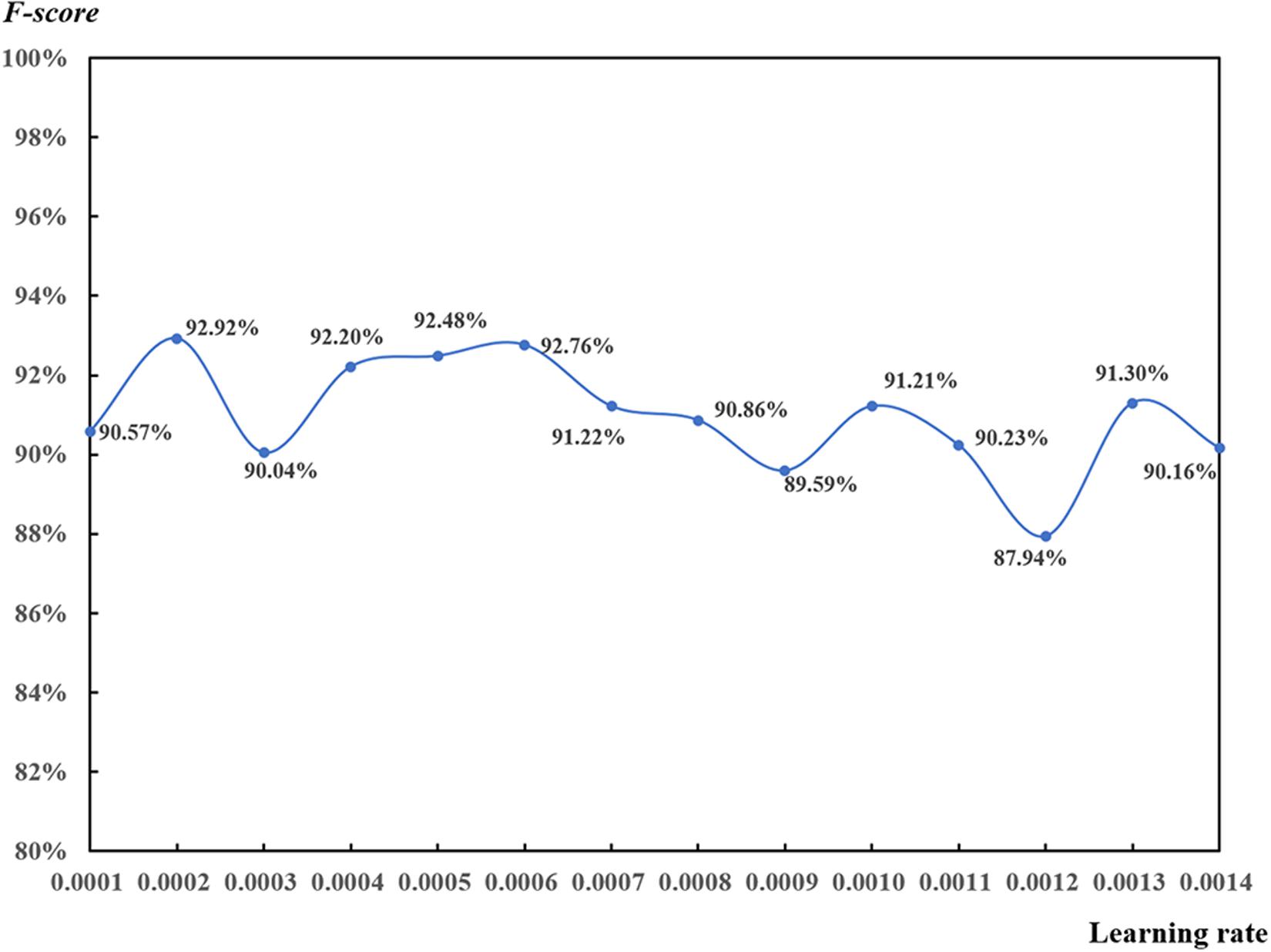

Influence of Learning Rate

Learning rates ranging from 0.0001 to 0.0014 (step on 0.0001) were used to analyze the learning rate function with the 32-pixel patch on 53 DAP based on the local max value of 0.65 (Figure 12). F-scores were higher than 90.00% for most of the results. However, it was difficult to determine the relationship between learning rate and F-score since there was an irregular fluctuation. In terms of the ranking of F-scores, the top four learning rates were 0.0002, 0.0006, 0.0005, and 0.0007 with all their corresponding F-scores higher than 92.00%.

Figure 12. Test of learning rate for the convolutional neural network-based rapeseed leaf recognition on 53 days after planting (32-pixel patch, local max value of 0.65).

Learning rate plays an important role in CNN models (Trimble, 2018). It defines the weights used to adjust the gradient descent optimization. If learning rate is too small, the learning process will be slowed down and may not be close to the optimal settings. If learning rate is too large, the model may not reach the minimum boundary and produce results of null values (Trimble, 2017). Learning rate is considered as a hyper-parameter in machine learning and deep learning, which is mostly set up based on practices and empiricism (Senior et al., 2013). Finally, learning rates ranging from 0.0004 to 0.0006 were suggested in this study.

Influence of Overcounting Rate

Overcounting is common in object identification and counting tasks, especially in large-scale scenes (Li et al., 2016). It is challenging to use CNN algorithms for object detection and counting in a large-scale scene. Most studies detected flowers, leaves, and crops in the lab because the scenes were well controlled and the results were less influenced by external factors. This study detected rapeseed leaves in a field-based scene. The existing leaf overlapping was one of the main reasons for overcounting. With leaf size increasing, the overlapping became more complex, which caused serious overcounting and inaccurate counting results.

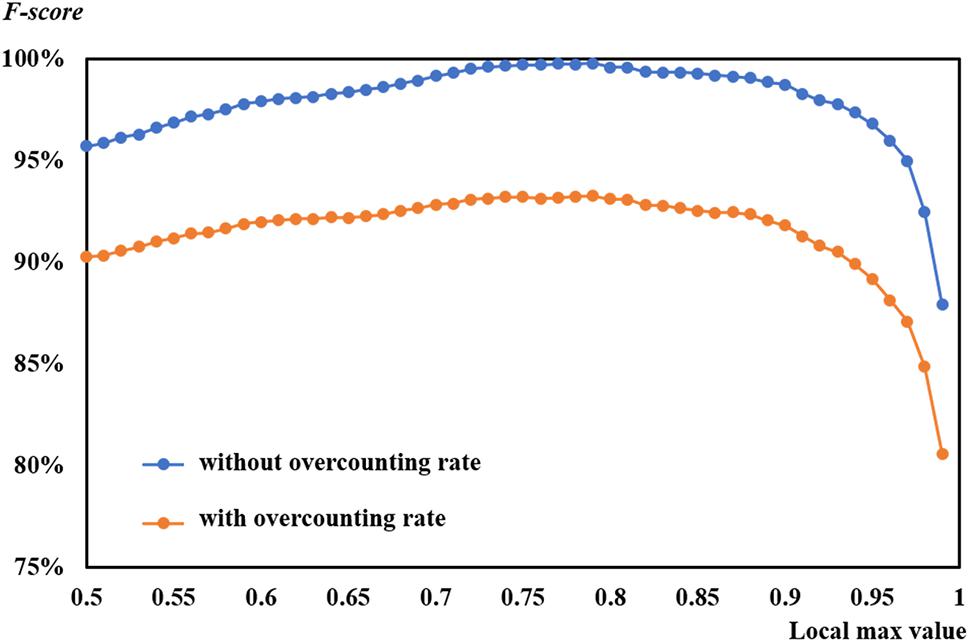

This study selected and employed different patch sizes, attempting to match the size of leaves and improve model performance. However, the overcounting was still difficult to avoid. Comparison was made between the F-scores with overcounting and those without overcounting based on the suggested learning rate of 0.0004 on 53 DAP (Figure 13). Even though two curves of F-scores exhibited a similar tendency, F-scores without overcounting calibration were higher than those with overcounting calibration. The former almost reached 100%, which was inaccurate.

Figure 13. Comparison of F-scores with and without overcounting rate at a learning rate of 0.0004 on 53 days after planting.

A graphic example of F-score with and without overcounting calibration is shown in Figure 5. As shown in the figure, precision without overcounting calibration was greater than that with overcounting calibration, resulting in an illusory high F-score. F-scores with overcounting calibration more accurately and objectively described the CNN-based leaf recognition performance. When the number of referencing masks was 29, the accurate number of detected rapeseed leaves was 28 using overcounting calibration. Without overcounting calibration, the number of detected rapeseed leaves was 38 (Figure 5). These results demonstrated that overcounting calibration was important to obtain precise rapeseed leaf counting. When overcounting calibration was considered, more reliable and objective rapeseed leaf counting results were achieved as demonstrated by the study.

Future Work

This study was a specific application of machine learning in agriculture for quantitative analysis of rapeseed seedling stand counting at a field level from UAV images. We aimed to estimate the rapeseed seedling count precisely and to offer a comprehensive study of field-based rapeseed seedling estimation throughout its early growth stages. Moreover, we tried to present a study showing general and user-friendly workflow for executing CNN methods. We also expected that our research offered another perspective in phenotyping and cultivation management for estimating seedling count for crops that have obvious tillering leaves at early growth stages such as soybean and potato. The results based on the particular case were desirable and promising. More detailed data and relevant technical information of our study were deposited to GitHub repository in “LARSC-Lab/Rapeseed_seedling_counting”.

In the future, we will focus on the data accumulation including data from multiple growing seasons and multiple fields in different locations. Moreover, multiple approaches are expected to be employed, and their performances will be compared to identify optimal methods and improve accuracy. A more comprehensive dataset of rapeseed from UAV imagery is expected to be completed and published in the future based on our work, which will promote the research in phenotyping for rapeseed and other crops.

Conclusion

Utilizing a consumer-grade camera mounted on a UAV for crop phenotyping and vegetation investigation in the field is feasible and efficient. This study attempted to estimate rapeseed stand count in UAV-captured RGB imagery with machine learning. CNN algorithm was used for rapeseed leaf identification and counting. Regression modeling coupled with LOOCV method was used to establish and optimize the relationship between the seedling counting and the number of rapeseed leaves. When the number of CNN-detected rapeseed leaves was brought into seedling counting models, the results demonstrated that our proposed framework performed well and achieved great accuracy. In summary, the following conclusions can be drawn from this study:

(1) The effectiveness of our proposed CNN framework on rapeseed leaf recognition and counting was verified in this study. Overcounting is a common problem during leaf recognition and counting. The overcounting rate was related to the DAP reflecting of the rapeseed growth conditions during leaf development. CNN-recognized rapeseed leaf counting incorporated with overcounting calibration was reliable with an overall F-score of more than 90%. On average, 806 out of 812 plants were correctly estimated on 53 DAP at the four- to six- leaf stage. RMSE was nine plants with rRMSE of 2.22% on 53 DAP for the 32-pixel patch size, while the mean RMSE was 12 with mean rRMSE of 2.89% for all patch sizes.

(2) This study demonstrated that DAP influenced the overcounting rate, CNN-recognized leaf results, and seedling counting models. On 46 and 53 DAP, the counting models presented desirable performance. Moreover, a strong correlation (R2 = 0.835) was also found between coefficients of counting models and DAP. The optimal observation period was on 53 DAP corresponding to the four- to six-leaf stage of rapeseed development.

(3) Based on machine learning approaches, this study proposed a framework for rapeseed stand counting in the field by high-resolution UAV imagery. Our future studies will focus on the collection and evaluation of multiple-year datasets to improve the robustness and reliability of the stand counting models.

Data Availability Statement

The “Rapeseed_seedling_counting” data that support the findings of this study are available in “LARSC-Lab/Rapeseed_seedling_counting” in GitHub, which can be found at https://github.com/LARSC-Lab/Rapeseed_seedling_counting.

Author Contributions

JZ, JX, and BZ designed and conducted the remote sensing part of the experiment. QL and GZ designed and conducted the agronomy part of the experiment. JZ, BZ, JX, and CH processed and analyzed the imagery as well as wrote the manuscript. CY guided the study design, advised in data analysis, and revised the manuscript. WY, YS, DZ, CW, TX, and ZJ were involved in the process of the experiment, ground data collection, or manuscript revision. All authors reviewed and approved the final manuscript.

Funding

This work was financially supported by the National Key Research and Development Program of China (2018YFD1000900) and the Fundamental Research Funds for the Central Universities (Grant Nos. 2662018PY101 and 2662018JC012).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We acknowledge the field staff of Huazhong Agricultural University for daily management of the field experiments. We are grateful to the reviewers for their valuable comments and recommendations to improve the manuscript.

Abbreviations

CNN, convolutional neural network; DAP, day after planting; GCP, ground control point; GPS, global positioning system; LOOCV, leave-one-out crossing validation; MAE, mean absolute error; RF, random forest; RMSE, root mean square error; rRMSE, relative root mean square error; SVM, support vector machine; UAV, unmanned aerial vehicle; UGV, unmanned ground vehicle.

Footnotes

References

Anaconda Software Distribution (2016). Computer Software. Vers. 2-2.4.0. Avaliable at: https://anaconda.com (accessed November, 2016).

Araus, J. L., and Cairns, J. E. (2014). Field high-throughput phenotyping: the new crop breeding frontier. Trends Plant Sci. 19, 52–61. doi: 10.1016/j.tplants.2013.09.008

Arteta, C., Lempitsky, V., and Zisserman, A. (2016). Counting in the Wild, in: European Conference on Computer Vision. Berlin: Springer, 483–498.

Ballesteros, R., Ortega, J. F., Hernández, D., and Moreno, M. A. (2014). Applications of georeferenced high-resolution images obtained with unmanned aerial vehicles. Part I: description of image acquisition and processing. Precis. Agric. 15, 579–592. doi: 10.1007/s11119-014-9355-8

Bendig, J., Bolten, A., Bennertz, S., Broscheit, J., Eichfuss, S., and Bareth, G. (2014). Estimating biomass of barley using crop surface models (CSMs) derived from UAV-Based RGB imaging. Remote Sens. 6, 10395–10412. doi: 10.3390/rs61110395

Berrocoso, J. D., Rojas, O. J., Liu, Y., Shoulders, J., González-Vega, J. C., and Stein, H. H. (2015). Energy concentration and amino acid digestibility in high-protein canola meal, conventional canola meal, and soybean meal fed to growing pigs. J. Anim. Sci. 93, 2208–2217. doi: 10.2527/jas.2014-8528

Blaschke, T. (2010). Object based image analysis for remote sensing. ISPRS J. Photogramm. Remote Sens. 65, 2–16. doi: 10.1016/j.isprsjprs.2009.06.004

Bouchet, A.-S., Laperche, A., Bissuel-Belaygue, C., Snowdon, R., Nesi, N., and Stahl, A. (2016). Nitrogen use efficiency in rapeseed. A review. Agron. Sustain. Dev. 36:38. doi: 10.1007/s13593-016-0371-0

Bucksch, A., Burridge, J., York, L. M., Das, A., Nord, E., Weitz, J. S., et al. (2014). Image-based high-throughput field phenotyping of crop roots. Plant Physiol. 166, 470–486. doi: 10.1104/pp.114.243519

Chen, R., Chu, T., Landivar, J. A., Yang, C., and Maeda, M. M. (2018). Monitoring cotton (Gossypium hirsutum L.) germination using ultrahigh-resolution UAS images. Precis. Agric. 19, 161–177. doi: 10.1007/s11119-017-9508-7

Chen, Y., Ribera, J., Boomsma, C., and Delp, E. (2017). “Locating crop plant centers from UAV-based RGB imagery,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Venice, 2030–2037. doi: 10.1111/tpj.14799

Córcoles, J. I., Ortega, J. F., Hernández, D., and Moreno, M. A. (2013). Estimation of leaf area index in onion (Allium cepa L.) using an unmanned aerial vehicle. Biosyst. Eng. 115, 31–42. doi: 10.1016/j.biosystemseng.2013.02.002

Csillik, O., Cherbini, J., Johnson, R., Lyons, A., and Kelly, M. (2018). Identification of citrus trees from unmanned aerial vehicle imagery using convolutional neural networks. Drones 2:39. doi: 10.3390/drones2040039

Cunliffe, A. M., Brazier, R. E., and Anderson, K. (2016). Ultra-fine grain landscape-scale quantification of dryland vegetation structure with drone-acquired structure-from-motion photogrammetry. Remote Sens. Environ. 183, 129–143. doi: 10.1016/j.rse.2016.05.019

Davies, E. R. (2009). The application of machine vision to food and agriculture: a review. Imaging Sci. J. 57, 197–217. doi: 10.1179/174313109X454756

Deng, L., Mao, Z., Li, X., Hu, Z., Duan, F., and Yan, Y. (2018). UAV-based multispectral remote sensing for precision agriculture: a comparison between different cameras. ISPRS-J. Photogramm. Remote Sens. 146, 124–136. doi: 10.1016/j.isprsjprs.2018.09.008

Furbank, R. T., and Tester, M. (2011). Phenomics – technologies to relieve the phenotyping bottleneck. Trends Plant Sci. 16, 635–644. doi: 10.1016/j.tplants.2011.09.005

Geipel, J., Link, J., and Claupein, W. (2014). Combined spectral and spatial modeling of corn yield based on aerial images and crop surface models acquired with an unmanned aircraft system. Remote Sens. 6, 10335–10355. doi: 10.3390/rs61110335

Ghanem, M. E., Marrou, H., and Sinclair, T. R. (2015). Physiological phenotyping of plants for crop improvement. Trends Plant Sci. 20, 139–144. doi: 10.1016/j.tplants.2014.11.006

Gnädinger, F., and Schmidhalter, U. (2017). Digital counts of maize plants by unmanned aerial vehicles (UAVs). Remote Sens. 9:544. doi: 10.3390/rs9060544

Godfray, H. C. J., Beddington, J. R., Crute, I. R., Haddad, L., Lawrence, D., Muir, J. F., et al. (2010). Food security: the challenge of feeding 9 billion people. Science 327, 812–818. doi: 10.1126/science.1185383

Gomes, M. F., Maillard, P., and Deng, H. (2018). Individual tree crown detection in sub-meter satellite imagery using marked point processes and a geometrical-optical model. Remote Sens. Environ. 211, 184–195. doi: 10.1016/j.rse.2018.04.002

Grinblat, G. L., Uzal, L. C., Larese, M. G., and Granitto, P. M. (2016). Deep learning for plant identification using vein morphological patterns. Comput. Electron. Agric. 127, 418–424. doi: 10.1016/j.compag.2016.07.003

Huang, Y., Thomson, S. J., Brand, H. J., and Reddy, K. N. (2016). Development and evaluation of low-altitude remote sensing systems for crop production management. Int. J. Agric. Biol. Eng. 9, 1–11.

Jin, X., Liu, S., Baret, F., Hemerlé, M., and Comar, A. (2017). Estimates of plant density of wheat crops at emergence from very low altitude UAV imagery. Remote Sens. Environ. 198, 105–114. doi: 10.1016/j.rse.2017.06.007

Lancashire, P. D., Bleiholder, H., Boom, T. V. D., Langelüddeke, P., Stauss, R., Weber, E., et al. (1991). A uniform decimal code for growth stages of crops and weeds. Ann. Appl. Biol. 119, 561–601.

Lee, S. H., Chan, C. S., Mayo, S. J., and Remagnino, P. (2017). How deep learning extracts and learns leaf features for plant classification. Pattern Recognit. 71, 1–13.

Li, W., Fu, H., Yu, L., and Cracknell, A. (2016). Deep learning based oil palm tree detection and counting for high-resolution remote sensing images. Remote Sens. 9:22. doi: 10.3390/rs9010022

Linker, R., Cohen, O., and Naor, A. (2012). Determination of the number of green apples in RGB images recorded in orchards. Comput. Electron. Agric. 81, 45–57. doi: 10.1016/j.compag.2011.11.007

Liu, S., Baret, F., Andrieu, B., Burger, P., and Hemmerlé, M. (2017). Estimation of wheat plant density at early stages using high resolution imagery. Front. Plant Sci. 8:739. doi: 10.3389/fpls.2017.00739

Liu, T., Li, R., Jin, X., Ding, J., Zhu, X., Sun, C., et al. (2017). Evaluation of seed emergence uniformity of mechanically sown wheat with UAV RGB imagery. Remote Sens. 9:1241. doi: 10.3390/rs9121241

Ma, L., Li, M., Ma, X., Cheng, L., Du, P., and Liu, Y. (2017). A review of supervised object-based land-cover image classification. ISPRS J. Photogramm. Remote Sens. 130, 277–293. doi: 10.1016/j.isprsjprs.2017.06.001

Madec, S., Jin, X., Lu, H., De Solan, B., Liu, S., Duyme, F., et al. (2019). Ear density estimation from high resolution RGB imagery using deep learning technique. Agric. For. Meteorol. 264, 225–234.

Maimaitijiang, M., Ghulam, A., Sidike, P., Hartling, S., Maimaitiyiming, M., Peterson, K., et al. (2017). (UAS)-based phenotyping of soybean using multi-sensor data fusion and extreme learning machine. ISPRS J. Photogramm. Remote Sens. 134, 43–58. doi: 10.1016/j.isprsjprs.2017.10.011

McCouch, S., Baute, G. J., and Bradeen, J. (2013). Agriculture: feeding the future. Nature 499, 23–24.

Meyer, G. E., and Neto, J. C. (2008). Verification of color vegetation indices for automated crop imaging applications. Comput. Electron. Agric. 63, 282–293. doi: 10.1016/j.compag.2008.03.009

Mir, R. R., Reynolds, M., Pinto, F., Khan, M. A., and Bhat, M. A. (2019). High-throughput phenotyping for crop improvement in the genomics era. Plant Sci. 282, 60–72. doi: 10.1016/j.plantsci.2019.01.007

Naito, H., Ogawa, S., Valencia, M. O., Mohri, H., Urano, Y., Hosoi, F., et al. (2017). Estimating rice yield related traits and quantitative trait loci analysis under different nitrogen treatments using a simple tower-based field phenotyping system with modified single-lens reflex cameras. ISPRS J. Photogramm. Remote Sens. 125, 50–62. doi: 10.1016/j.isprsjprs.2017.01.010

Pound, M. P., Atkinson, J. A., Wells, D. M., Pridmore, T. P., and French, A. P. (2017). “Deep learning for multi-task plant phenotyping,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, San Francisco, CA, 2055–2063.

Qureshi, W. S., Payne, A., Walsh, K. B., Linker, R., Cohen, O., and Dailey, M. N. (2017). Machine vision for counting fruit on mango tree canopies. Precis. Agric. 18, 224–244. doi: 10.1007/s11119-016-9458-5

Rahaman, M. M., Chen, D., Gillani, Z., Klukas, C., and Chen, M. (2015). Advanced phenotyping and phenotype data analysis for the study of plant growth and development. Front. Plant Sci 6:619. doi: 10.3389/fpls.2015.00619

Ramos, P. J., Prieto, F. A., Montoya, E. C., and Oliveros, C. E. (2017). Automatic fruit count on coffee branches using computer vision. Comput. Electron. Agric. 137, 9–22. doi: 10.1016/j.compag.2017.03.010

Ribera, J., Chen, Y., Boomsma, C., and Delp, E. J. (2017). “Counting plants using deep learning,” in Proceedings of the 2017 IEEE Global Conference on Signal and Information Processing (GlobalSIP). Presented at the 2017 IEEE Global Conference on Signal and Information Processing (GlobalSIP), Montreal, QC: IEEE, 1344–1348. doi: 10.1109/GlobalSIP.2017.8309180

Sadras, V. O., Rebetzke, G. J., and Edmeades, G. O. (2013). The phenotype and the components of phenotypic variance of crop traits. Field Crop. Res. 154, 255–259. doi: 10.1016/j.fcr.2013.10.001

Sankaran, S., Quirós, J. J., Knowles, N. R., and Knowles, L. O. (2017). High-resolution aerial imaging based estimation of crop emergence in potatoes. Am. J. Potato Res. 94, 658–663. doi: 10.1007/s12230-017-9604-2

Schowengerdt, R. A. (2012). Techniques for Image Processing and Classifications in Remote Sensing. Cambridge, MA: Academic Press.

Senior, A., Heigold, G., Ranzato, M., and Yang, K. (2013). “An empirical study of learning rates in deep neural networks for speech recognition,” in Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing. Presented at the ICASSP 2013 - 2013 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Vancouver, BC: IEEE, 6724–6728.

Sheng, B., Shen, C., Lin, G., Li, J., Yang, W., and Sun, C. (2016). Crowd counting via weighted vlad on dense attribute feature maps. IEEE Trans. Circ. Syst. Video Technol. 28, 1788–1797.

Shi, Y., Thomasson, J. A., Murray, S. C., Pugh, N. A., Rooney, W. L., Shafian, S., et al. (2016). Unmanned aerial vehicles for high-throughput phenotyping and agronomic research. PLoS One 11:e0159781. doi: 10.1371/journal.pone.0159781

Shi, Y., Wang, N., Taylor, R. K., Raun, W. R., and Hardin, J. A. (2013). Automatic corn plant location and spacing measurement using laser line-scan technique. Precis. Agric. 14, 478–494. doi: 10.1007/s11119-013-9311-z

Simonyan, K., and Zisserman, A. (2014). Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv preprint arXiv:1409.1556. Available online at: http://arxiv.org/abs/1409.1556

Sindagi, V. A., and Patel, V. M. (2017). A survey of recent advances in cnn-based single image crowd counting and density estimation. Pattern Recognit. Lett. 107, 3–16.

Van Iersel, W., Straatsma, M., Addink, E., and Middelkoop, H. (2018). Monitoring height and greenness of non-woody floodplain vegetation with UAV time series. ISPRS J. Photogramm. Remote Sens. 141, 112–123. doi: 10.1016/j.isprsjprs.2018.04.01

Varela, S., Dhodda, P., Hsu, W., Prasad, P. V., Assefa, Y., Peralta, N., et al. (2018). UAS) and supervised learning techniques. Remote Sens. 10:343. doi: 10.3390/rs10020343

Verger, A., Vigneau, N., Chéron, C., Gilliot, J.-M., Comar, A., and Baret, F. (2014). Green area index from an unmanned aerial system over wheat and rapeseed crops. Remote Sens. Environ. 152, 654–664. doi: 10.1016/j.rse.2014.06.006

Walter, A., Studer, B., and Kölliker, R. (2012). Advanced phenotyping offers opportunities for improved breeding of forage and turf species. Ann. Bot. 110, 1271–1279. doi: 10.1093/aob/mcs026

Wang, X., Liu, G., Yang, Q., Hua, W., Liu, J., and Wang, H. (2010). Genetic analysis on oil content in rapeseed (Brassica napus L.). Euphytica 173, 17–24. doi: 10.1007/s10681-009-0062-x

Weber, E., and Bleiholder, H. (1990). Erläuterunger zu den BBCH–Dezimal-codes für die entwicklungsstadien von mais, raps, faba-bohne, sonnenblume und erbse-mit abbildungen. Gesunde Pflanz 42, 308–321.

Wendel, A., and Underwood, J. (2017). Illumination compensation in ground based hyperspectral imaging. ISPRS J. Photogramm. Remote Sens. 129, 162–178. doi: 10.1016/j.isprsjprs.2017.04.010

White, J. W., Andrade-Sanchez, P., Gore, M. A., Bronson, K. F., Coffelt, T. A., Conley, M. M., et al. (2012). Field-based phenomics for plant genetics research. Field Crop. Res. 133, 101–112. doi: 10.1016/j.fcr.2012.04.003

Xiong, X., Duan, L., Liu, L., Tu, H., Yang, P., Wu, D., et al. (2017). Panicle-SEG: a robust image segmentation method for rice panicles in the field based on deep learning and superpixel optimization. Plant Methods 13:104. doi: 10.1186/s13007-017-0254-7

Yang, G., Liu, J., Zhao, C., Li, Z., Huang, Y., Yu, H., et al. (2017). Unmanned aerial vehicle remote sensing for field-based crop phenotyping: current status and perspectives. Front. Plant Sci. 8:1111. doi: 10.3389/fpls.2017.01111

Yang, W., Guo, Z., Huang, C., Duan, L., Chen, G., Jiang, N., et al. (2014). Combining high-throughput phenotyping and genome-wide association studies to reveal natural genetic variation in rice. Nat. Commun. 5:5087. doi: 10.1038/ncomms6087

Yu, N., Li, L., Schmitz, N., Tian, L., Greenberg, J. A., and Diers, B. W. (2016). Development of methods to improve soybean yield estimation and predict plant maturity with an unmanned aerial vehicle based platform. Remote Sens. Environ. 187, 91–101. doi: 10.1016/j.rse.2016.10.005

Zanotta, D. C., Zortea, M., and Ferreira, M. P. (2018). A supervised approach for simultaneous segmentation and classification of remote sensing images. ISPRS J. Photogramm. Remote Sens. 142, 162–173. doi: 10.1016/j.isprsjprs.2018.05.021

Zhang, C., and Kovacs, J. M. (2012). The application of small unmanned aerial systems for precision agriculture: a review. Precis. Agric. 13, 693–712. doi: 10.1007/s11119-012-9274-5

Zhang, C., Li, H., Wang, X., and Yang, X. (2015). “Cross-scene crowd counting via deep convolutional neural networks,” in Proceedings of the Computer Vision and Pattern Recognition (CVPR), 2015 IEEE Conference On, Piscataway, NJ: IEEE, 833–841.

Zhang, Y., Zhou, D., Chen, S., Gao, S., and Ma, Y. (2016). “Single-image crowd counting via multi-column convolutional neural network,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, 589–597.

Zhao, B., Zhang, J., Yang, C., Zhou, G., Ding, Y., Shi, Y., et al. (2018). Rapeseed seedling stand counting and seeding performance evaluation at two early growth stages based on unmanned aerial vehicle imagery. Front. Plant Sci. 9:1362. doi: 10.3389/fpls.2018.01362

Keywords: stand counting, field-based phenotyping, optimal observation timing, convolutional neural network, precision agriculture

Citation: Zhang J, Zhao B, Yang C, Shi Y, Liao Q, Zhou G, Wang C, Xie T, Jiang Z, Zhang D, Yang W, Huang C and Xie J (2020) Rapeseed Stand Count Estimation at Leaf Development Stages With UAV Imagery and Convolutional Neural Networks. Front. Plant Sci. 11:617. doi: 10.3389/fpls.2020.00617

Received: 07 August 2019; Accepted: 22 April 2020;

Published: 10 June 2020.

Edited by:

Tony Pridmore, University of Nottingham, United KingdomReviewed by:

Dong Xu, University of Missouri, United StatesAlexei E. Solovchenko, Lomonosov Moscow State University, Russia

Copyright © 2020 Zhang, Zhao, Yang, Shi, Liao, Zhou, Wang, Xie, Jiang, Zhang, Yang, Huang and Xie. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Chenglong Huang, aGNsQG1haWwuaHphdS5lZHUuY24=; Jing Xie, eGllamluZzYyNUBtYWlsLmh6YXUuZWR1LmNu

†These authors have contributed equally to this work

Jian Zhang

Jian Zhang Biquan Zhao

Biquan Zhao Chenghai Yang3

Chenghai Yang3 Yeyin Shi

Yeyin Shi Guangsheng Zhou

Guangsheng Zhou Dongyan Zhang

Dongyan Zhang Wanneng Yang

Wanneng Yang Chenglong Huang

Chenglong Huang Jing Xie

Jing Xie