- 1Theoretical Computer Science, University of Tartu, Tartu, Estonia

- 2Ketita Labs OÜ, Tartu, Estonia

One proposal to utilize near-term quantum computers for machine learning are Parameterized Quantum Circuits (PQCs). There, input is encoded in a quantum state, parameter-dependent unitary evolution is applied, and ultimately an observable is measured. In a hybrid-variational fashion, the parameters are trained so that the function assigning inputs to expectation values matches a target function. The no-cloning principle of quantum mechanics suggests that there is an advantage in redundantly encoding the input several times. In this paper, we prove lower bounds on the number of redundant copies that are necessary for the expectation value function of a PQC to match a given target function. We draw conclusions for the architecture design of PQCs.

1. Introduction

Quantum Information Processing proposes to exploit quantum physical phenomena for the purpose of data processing. Conceived in the early 80's [1, 2], recent breakthroughs in building controllable quantum mechanical systems have led to an explosion of activity in the field.

Building quantum computers is a formidable challenge—but so is designing algorithms which, when implemented on them, are able to exploit the advantage that quantum computing is widely believed by experts to have over classical computing on some computational tasks. A particularly compelling endeavor is to make use of near-term quantum computers, which suffer from limited size and the presence of debilitating levels of quantum noise. The field of algorithm design for Noisy Intermediate-Scale Quantum (NISQ) computers has scrambled over the last few years to identify fields of computing, paradigms of employing quantum information processing, and commercial use-cases in order to profit from recent progress in building programmable quantum mechanical devices—limited as they may be at present [3].

One use-case area where quantum advantage might materialize in the near term is that of Artificial Intelligence [3, 4]. The hope is best reasoned for generative tasks: several families of probability distributions have been theoretically proven to admit quantum algorithms for efficiently sampling from them, while no classical algorithm is able or is known to be able to perform that sampling task. Boson sampling is probably the most widely known of these sampling tasks, even though the advantage does not seem to persist in the presence of noise (cf. [5]); examples of some other sampling procedures can be found in references [6, 7].

Promising developments have also been made available in the case of quantum circuits that can be iteratively altered by manipulation of one or several parameters: Du et al. [8] consider so-called Parameterized Quantum Circuits (PQCs) and find that they, too, yield a theoretical advantage for generative tasks. PQCs are occasionally referred to as Quantum Neural Networks (QNNs) (e.g., in [9]) when aspects of non-linearity are emphasized, or as Variational Quantum Circuits [10]. We stick to the term PQC in this paper, without having in mind excluding QNNs or VQCs.

The PQC architectures which have been considered share some common characteristics, but an important design question is how the input data is presented. Input data refers either to a feature vector, or to output of another layer of a larger, potentially hybrid quantum-classical neural network. The fundamental choice is whether to encode digitally or in the amplitudes of a quantum state. Digital encoding usually entails preparing a quantum register in states |bx〉, where bx ∈ {0, 1}n is binary encoding of input datum x. Encoding in the amplitudes of a quantum state, on the other hand, refers to preparing a an n-bit quantum register in a state of the form , where ϕj, j = 0, …, 2n − 1 is a family of encoding functions which must ensure that |ϕx〉 is a quantum state for each x, i.e., that holds for all x. We refer the reader to the discussion of these concepts in Schuld and Petruccione [11] for further details.

The present paper deals with redundancy in the input data, i.e., giving the same datum several times. The most straightforward concept here is that of “tensorial” encoding [12]. Here, several quantum registers are prepared in a state which is the tensor product of the corresponding number of identical copies of a data-encoding state, i.e., |ϕx〉⊗⋯⊗|ϕx〉. For example, Mitarai et al. [13], propose the following construction: To encode a real number x close to 0, they choose the state

where is the 1-qubit Pauli rotation around the Y-axis (and σY the Pauli matrix). But then, to construct a PQC that is able to learn polynomials of degree n in a single variable, they encode the polynomial variable x into n identical copies, . It is noteworthy, and the starting point of our research, that the number of times that the input, x, is encoded redundantly, depends on the complexity of the learning task.

Encoding the input several times redundantly, as in tensorial encoding, is probably motivated by the quantum no-cloning principle. While classical circuits and classical neural networks can have fan-out—the output of one processing node (gate, neuron, …) can be the input to several others—the no-cloning principle of quantum mechanics forbids to duplicate data which is encoded in the amplitudes of a quantum state. This applies to PQCs, and, specifically, to the input that is fed into a PQC, if the input is encoded in the amplitudes of input states.

1.1. The Research Presented in This Paper

The no-cloning principle suggests that duplicating input data redundantly is unavoidable. The research presented in this paper aims to lower bound how often the data has to be redundantly encoded, if a given function is to be learned. The novelty in this paper lies in establishing that these lower bounds are possible. For that purpose, the cases for which we prove lower bounds are natural, but not overly complex, thus highlighting the principle over the application.

The objects of study of this paper are PQCs of the following form. The input consists of a single real number x, which is encoded into amplitudes by applying a multi-qubit Hamiltonian evolution of the form e−iη(x)H at one point (no redundancy), or several points in the quantum circuit. The function η and Hamiltonian H may be different at the different points the quantum circuit.

Hence, our definition of “input” is quite general, and allows, for example, that the input is given in the middle of a quantum circuit—mimicking the way how algorithms for fault-tolerant quantum computing operate on continuous data: the subroutine for accessing the data be called repeatedly; cf., e.g., the description of the input oracles in van Apeldoorn and Gilyén [14]. It should be pointed out, however, that general state preparation procedures as in Harrow et al. [15] and Schuld et al. [12] cannot not be studied with the tools of this paper, because they apply many operations with parameters derived from a collection of inputs, instead of a single input.

Our lower bound technique is based on Fourier analysis.

1.2. Example

Take, as example, the parameterized quantum circuits of Mitarai et al. [13] mentioned above. Comparing with (1) shows: The single real input x close to 0 is prepared by performing, at n different positions in the quantum circuit, Hamiltonian evolution with ηj(x): = arcsin(x), and Hj: = σY/2, for j = 1, …, n.

We say that the input x to the quantum circuits of Mitarai et al. are encoded with input redundancy n—meaning, the input is given n times.

The example highlights the ostensible wastefulness of giving the same data n times, and the question naturally arises whether a more clever application of possibly different rotations would have reduced the amount of input redundancy.

In the case of Mitarai et al.'s example, it can easily be seen—from algebraic arguments involving the quantum operations which are performed—that, in order to produce a polynomial of degree n, redundancy n is best possible for the particular way of encoding the value x by applying the Pauli rotation to distinct qubits, we leave that to the reader. However, already the question whether by re-using the same qubit a less “wasteful” encoding could have been achieved is quite not so easy. Our Fourier analysis based techniques give lower bounds for more general encodings, in particular, for applying arbitrary single-qubit Pauli rotations to an arbitrary set of qubits at arbitrary time during the quantum circuit.

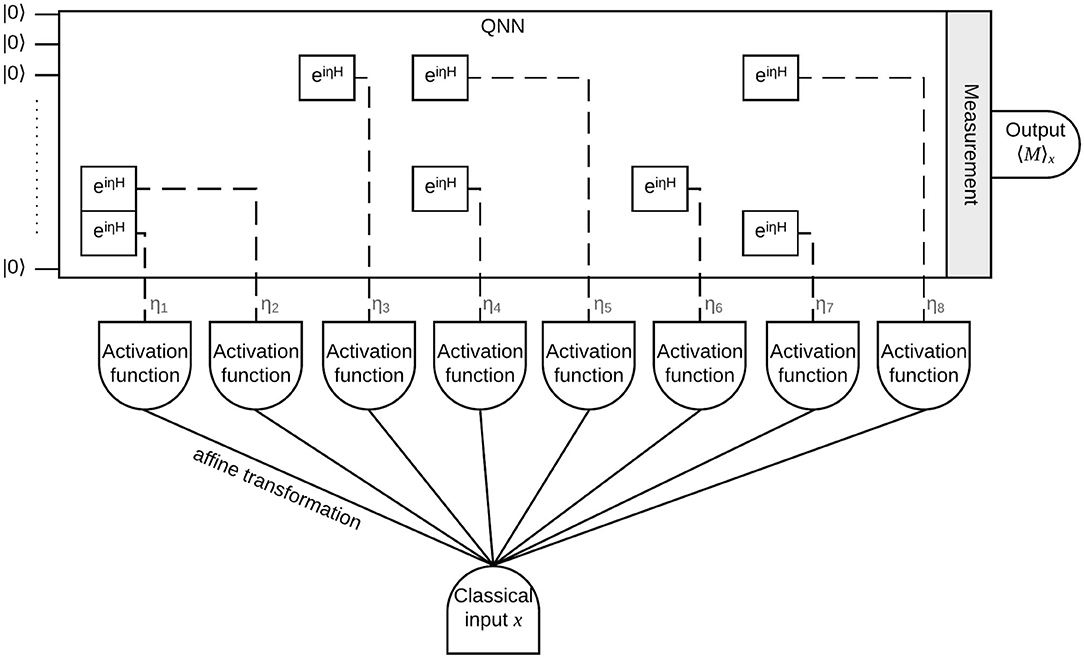

Figure 1 next page shows the schematic of quantum circuits with input x. The setup resembles that of a neural network layer. The j'th “copy” of the input is made available in the quantum circuit by, at some time, performing the unitary operation on one qubit, where ηj(x) = φ(ajx + bj), for an “activation function” φ. (We switch here to adding the factor 2π, to be compatible with our Fourier approach).

Figure 1. Schematic for the PQCs we consider. The classical input x, after being subjected to a transformations ηj(x) = φ(ajx + bj) with “activation function” φ, is fed into the QNN/PQC through Hamiltonian evolution operations . Quantum operations which do not participate in entering the input data into the quantum circuit are not shown; these include the operations which depend on the training parameters θ.

In the above-mentioned example in Mitarai et al. [13], the activation function is φ: = arcsin. Figure 1 aims at making clear that the input can be encoded by applying different unitary operations to different qubits, or to the same qubit several times, or any combination of these possibilities. Generalization of our results to several inputs is straightforward, if the activation functions in Figure 1 have a single input.

1.3. Our Results

As hinted above, our intention with this paper is to establish, in two natural examples, the possibility of proving lower bounds on input redundancy. The first example is what we call “linear” input encoding, where the activation function is φ(x) = x. The second example is Mitarai et al. [13] approach, where the activation function is φ(x) = arcsin(x).

For both examples, we prove lower bounds on the input redundancy in terms of linear-algebraic complexity measures of the target function. We find the lower bounds to be logarithmic, and the bounds are tight.

To the best of our knowledge, our results give the first quantitative lower bounds on input redundancy. These lower bounds, as well as other conclusions derived from our constructions, should directly influence design decisions for quantum neural network architectures.

1.4. Paper Organization

In the next section we review the background on the PQC model underlying our results. Sections 3 and 4 contain the results on linear and arcsine input encoding, respectively. We close with a discussion and directions of future work.

2. Background

2.1. MiNKiF PQCs

We now describe parameterized quantum circuits (PQCs) in more detail. Denote by

the quantum operations of an evolution with Hamiltonian H (operating on some set of qubits); the 2π factor is just a convenience for us and introduces no loss of generality. Following, in spirit, Mitarai et al. [13], in this paper we consider quantum circuits which apply quantum operations each of which is one of the following:

1. An operation as in (2), with a parameter α: = η which will encode input, x (i.e., η is determined by x);

2. An operation as in (2), with a parameter α: = θ which will be “trained” (we refer to these parameters as the training parameters);

3. Any quantum operation not defined by any parameter (although its effect can depend on θ, η, e.g., via dependency on measurement results).

Denote the concatenated quantum operation by . Now let M be an observable, and consider its expectation value on the state which results if the parameterized quantum circuit is applied to a fixed input state ρ0, e.g., ρ0: = |0〉〈0|. We denote the expectation value with parameters set to η, θ by f(η, θ):

The PQCs could have multiple outputs, but we do not consider that in this paper. We refer to PQCs of this type as MiNKiF PQCs, as [13] realized the fundamental property

where ej is the vector with a 1 in position j and 0 otherwise. This equation characterizes trigonometric functions. (The same relation holds obviously for derivatives in ηj direction.)

The setting we consider in this paper is the following.

• The training parameters, θ, have been trained perfectly and are thus ignored, in other words, omitting the θ argument, we conveniently consider f to be a function defined on ℝn (instead of on ℝn × ℝm);

• The inputs, x, are real numbers;

• The parameters η of f are determined by x, i.e., η is replaced by (φ(a1x + b1), …, φ(anx + bn)), where a, b ∈ ℝn; in other words, we study the function

We allow a, b to depend on the target function1.

This setting is restrictive only in as far as the input is one-dimensional; the reason for this restriction is that this paper aims to introduce and demonstrate a concept, and not be encyclopedic or obtain the best possible results.

This setting clearly includes the versions of amplitude encoding discussed in the introduction by applying operations UHj(φ(ajx+bj)) to |0〉〈0| states (of appropriately many qubits) for several j's, with suitable Hj's. However, the setting is more general in that it doesn't restrict to encode the input near the beginning of a quantum circuit, indeed, the order of the types of quantum operations is completely free.

To summarize, we study the functions

where

and

where . Then we ask the question: How large is the space of the x : f(η(x)), for a fixed activation function φ, but variable vectors a, b ∈ ℝn?

2.2. Fourier Calculus on MiNKiF Circuits

This paper builds on the simple observation of [16] that, under assumptions which are reasonable for near-term gate-based quantum computers, the Fourier spectrum, in the sense of the Fourier transform of tempered distributions, is finite and can be understood from the eigenvalues of the Hamiltonians. In particular, if, for each of the Hamiltonians Hj, j = 1, …, n, the differences of the eigenvalue of Hj are integer multiples of a positive number κj, then η↦f(η) is periodic.

Take, for example, the case of Pauli rotations ( in our notation): There, each of the Hj is of the form σuj/2 (with uj ∈ {x, y, z}). The eigenvalues of Hj are ±½, the eigenvalue differences are 0, ±1, and f : ℝn → ℝ is 1-periodic2 in every parameter, with Fourier spectrum contained in

More generally, if the Hj have eigenvalues, say, and for s = 1, …, Kj, then the eigenvalue differences are {−Kj, …, Kj}, and f : ℝn → ℝ is 1-periodic in every parameter, with Fourier spectrum contained in .

We refer to [16] for the (easy) details. In this paper, focusing on the goal of demonstrating the possibility to prove lower bounds on the input redundancy, we mostly restrict to 2-level Hamiltonians with eigenvalue difference 1 (such as one-half times a tensor product of Pauli matrices), which gives us the nice Fourier spectrum (7), commenting on other spectra only en passant.

For easy reference, we summarize the discrete Fourier analysis properties of the expectation value functions that we consider in the following remark. The proof of the equivalence of the three conditions is contained in the above discussions, except for the existence of a quantum circuit for a given multi-linear trigonometric polynomial, for which we defer [17], as it is not the topic of this paper.

REMARK 1. The following three statements are equivalent. If they hold, we refer to the function as an expectation value function, for brevity (suppressing the condition on the eigenvalues of the Hamiltonians). The input redundancy of the function is n.

1. The function f is of the form 5, where the Hj, j = 1, …, n, have eigenvalues ±½.

2. The function f is a real-valued function ℝn → ℝ which is 1-periodic in every parameter, and its Fourier spectrum is contained in . Hence,

where is the dot product (computed in ℝ), and the usual periodic Fourier transform of f, i.e., .

3. The function f is a multi-linear polynomial in the sine and cosine functions, i.e.,

(where “1” under the sum denotes the all-1 function).

3. Linear Input Encoding

We start discussing the case where the input parameters are affine functions of the input variable, e.g., φ = id and η(x) = x · a + b for some a, b ∈ ℝn, so the input redundancy is n.

For a ∈ ℝn define

with |·| denoting set cardinality; we refer to spread(a) as the spread of a. We point the reader to the fact that Ka is symmetric around 0 ∈ ℝ and 0 ∈ Ka, so that the spread is a non-negative integer.

For every k ∈ ℝ, consider the function

These functions are elements of the vector space ℂℝ of all complex-valued functions on the real line. We note the following well-known fact.

LEMMA 2. The functions χk,k ∈ ℝ, defined in (11) are linearly independent (in the algebraic sense, i.e., every finite subset is linearly independent).

Moreover, for every x0 ∈ ℝ and ε > 0, the restrictions of these functions to the interval ]x0 − ε, x0 + ε[ are linearly independent.

Proof: We refer the reader to Appendix 1 for the first statement and only prove the second one.

Suppose that for some finite set K ⊂ ℝ and complex numbers αk, k ∈ K we have for all z ∈ ]x0 − ε, x0 + ε[. Since g is analytic and non-zero analytic functions can only vanish on a discrete set, we then must also have g(z) = 0 for all z ∈ ℂ. This means that the linear dependence on an interval implies linear dependence on the whole real line. This proves the second statement, and the proof of Lemma 2 is completed.

We can now give the definition of the quantity which will lower-bound the input redundancy for linear input encoding.

DEFINITION 3. The Fourier rank of a function h : ℝ → ℝ at a point x0 ∈ ℝ is the infimum of the numbers r such that there exists an ε > 0, a set K ⊂ ℝ \ {0} of size 2r, and coefficients αk ∈ ℂ, k ∈ {0}∪K such that

Note that the Fourier rank can be infinite, and if it is finite, then it is a non-negative integer. Indeed, from h* = h it follows that , so that by the linear independence of the χ's (Lemma 2) we have , which means that in a minimal representation of h, the set K is symmetric around 0 ∈ ℝ.

EXAMPLES.

• Constant functions have Fourier rank 0 at every point.

• The trigonometric functions x ↦ cos(κx + ϕ), with κ ≠ 0, have Fourier rank 1 at every point.

• Trigonometric polynomials of degree d, , have Fourier rank d at every point, if αd ≠ 0, κd ≠ 0.

• The function x ↦ |sin(πx)| has Fourier rank 1 at every x0 ∈ ℝ \ ℤ and infinite Fourier rank at the points x0 ∈ ℤ.

• The function x ↦ x has infinite Fourier rank at every point.

THEOREM 4. Let f be an expectation value function, i.e., as in Remark 1. Moreover, let a, b ∈ ℝn, and h : ℝ → ℝ : x ↦ f(x · a + b). For every x0 ∈ ℝ, the Fourier rank of h at x0 is less than or equal to the spread of a.

Proof: With the preparations above, this is now a piece of cake. Let x0 ∈ ℝ and set ε : = 1. With Ka as defined in (10), for x ∈ ]x0 − ε, x0 + ε[, we have

where we let

This shows that h has a representation as in (12) with K: = Ka \ {0}. It follows that the Fourier rank of h is bounded from above by |Ka|/2 = spreada. This completes the proof of Theorem 4.

The theorem allows us to give the concrete lower bounds for the input redundancy.

COROLLARY 5. Let h be a real-valued function defined in some neighborhood of a point x0 ∈ ℝ.

Suppose that in a neighborhood of x0, h is equal to an expectation value function with linear input encoding, i.e., there is an n, a function f as in Remark 1, vectors a, b ∈ ℝn, and an ε > 0 such that h(x) = f(x · a + b) holds for all x ∈ ]x0 − ε, x0 + ε[.

The input redundancy, n, is greater than or equal to log3(r + 1), where r is the Fourier rank of h at x0.

To represent a function h by a MiNKiF PQC with linear input encoding in a tiny neighborhood of a given point x0, the input redundancy must be at least the logarithm of the Fourier rank of h at x0.

Proof of Corollary 5: For every a ∈ ℝn, we have , by the definition of Ka, and hence spread(a) ≤ (3n − 1)/2.

We allow that a, b are chosen depending on h (see the Remark 6 below). Theorem 4 gives us the inequality

which implies n ≥ log3(2r + 1) ≥ log3(r + 1), as claimed. (We put the +1 to make the expression well-defined for r = 0.) This concludes the proof of Corollary 5.

REMARK 6. If the entries of a are all equal up to sign, then we have spread(a) = n. It can be seen that if the entries of a are chosen uniformly at random in [0, 1], then spread(a) = (3n − 1)/2. Hence, it seems that some choices for a are better than others. Moreover, looking into the proof of Theorem 4 again, we see that the χk, k ∈ Ka, must suffice to represent (or approximate) the target function, and that the entries of b play a role in which coefficients αk can be chosen for a given a. Hence, it is plausible that the choices of a, b should depend on h.

REMARK 7. Our restriction to Hamiltonians with two eigenvalues leads to the definition of the spread in (10). If the set of eigenvalue distances of the Hamiltonian encoding the input ηj is Dj ⊂ ℝ, then, for the definition of the spread, we must put this:

Theorem 4 and Corollary 5 remain valid, with essentially the same proofs, but with a higher base for the logarithm.

4. Arcsine Input Encoding

We now consider the original situation of the example in Mitarai et al. [13], where the activation function is φ = arcsin. More precisely, for a, b ∈ ℝn, we consider

Abbreviating sj: = ajx + bj and for j = 1, …, n, Remark 13, gives us that the expectation value functions with arcsine input encoding are of the form

where we use the common shorthand [n]: = {1, …, n}, and set with τj(S, C) = sin if j ∈ S, τj(S, C) = cos if j ∈ C, and τj(S, C) = id otherwise.

Consider a formal expression of the form

where x is a variable (for arbitrary a, b ∈ ℝn and S, C ⊆ [n] with S ∩ C = Ø). We call it an sc-monomial of degree |S| + |C|. An sc-monomial can be evaluated at points x ∈ ℝ for which the expression under the square root is not a negative real number, i.e., in the interval

(which could be empty), and it defines an analytic function there. Note, though, that it can happen that an sc-monomial can be continued to an analytic function on a larger interval than Iμ. The obvious example where that happens is this: For j, j′ ∈ C with j ≠ j′ we have . In that case, the formal power series of the sc-monomial simplifies, and omitting the interval (also for j′) from (15) makes the intersection larger.

The following technical fact can be shown (cf. [17]).

LEMMA 8. Let be a linear combination of sc-monomials with degrees at most d, and suppose that ⋂jIμj ≠ Ø. If an analytic continuation of g to a function exists, then is a polynomial of degree at most d.

From this lemma, we obtain the following result.

COROLLARY 9. Let h : ℝ → ℂ be an analytic function, and x0 ∈ ℝ.

Suppose that in a neighborhood of x0, h is equal to an expectation value function with arcsine input encoding, i.e., there is an n, a function f as in Remark 1, vectors a, b ∈ ℝn, and an ε > 0 such that

1. −1 ≤ x · aj + bj ≤ +1 for all x ∈ ]x0 − ε, x0 + ε[, and

2. h(x) = f(arcsin(x · a + b)) holds for all x ∈ ]x0 − ε, x0 + ε[.

Then h is a polynomial, and the input redundancy, n, is greater than or equal to the degree of h.

To represent a polynomial h by a MiNKiF PQC with arcsine input encoding in a tiny neighborhood of a given point x0, the input redundancy must be at least the degree of h.

Proof of Corollary 9: Let us abbreviate g : x ↦ f(arcsin(x · a + b)):]x0 − ε, x0 + ε[ → ℝ. From the discussion above, we know that g is a linear combination of sc-monomials.

Both functions h and g are analytic, and they coincide on an interval. Hence, g has an analytic continuation, h, to the real line so that Lemma 8 is applicable, and states that h is a polynomial with degree at most n. This completes the proof of Corollary 9.

As indicated in the introduction, in the special case which is considered in Mitarai et al. [13]—where the input amplitudes are stored (by rotations) in n distinct qubits before any other quantum operation is performed—this can be proved by looking directly at the effect of a Pauli transfer matrix on the mixed state vector in the Pauli basis. Our corollary shows that this effect persists no matter how the arcsine-encoded inputs are spread over the quantum circuit.

The corollary allows us to lower bound the input redundancy for some functions.

EXAMPLES. There is no PQC with arcsine input encoding that represents the function x ↦ sinx (exactly) in a neighborhood any point. Indeed, the same holds for any analytic function defined on the real line which is not a polynomial: the exponential function, the sigmoid function, arcus tangens, …

Unfortunately, from these impossibility results, no approximation error lower bounds can be derived. Indeed, in their paper [13], Mitarai et al. point out that, due to the terms, the functions represented by the expectation values can more easily represent a larger class of functions than polynomials.

To give lower bounds for the representation of functions which are not analytic on the whole real line, we proceed as follows. For fixed n ≥ 1, x0 ∈ ℝ and a, b ∈ ℝn, denote by the vector space spanned by all functions of the form ]x0 − ε, x0+ε[ → ℝ : x ↦ f(arcsin(x · a + b)) for an3 ε > 0, where f ranges over all expectation value functions with input redundancy n, i.e., functions as in Remark 1, a, b ∈ ℝn satisfy −1 < ajx0 + bj < +1, and the arcsin is applied to each component of the vector.

PROPOSITION 10. The vector space has dimension at most 3n, and is spanned by the sc-monomials (14) of degree n.

Proof: With a, b fixed, there are at most 3n sc-monomials (14) of degree n, as S, C ⊆ [n] and S ∩ C = Ø hold. Hence, the statement about the dimension follows from the fact that the elements of are generated by sc-monomials.

The fact that the sc-monomials generate the expectation value functions with arcsine input-encoding of redundancy n is just the statement of (13) above. This concludes the proof of Proposition 10.

We can now proceed in analogy to the case of linear input encoding. Let us define the sc-rank at x0 of a function h defined in a neighborhood of x0 as the infimum over all r for which there exist sc-monomials μ1, …, μr, an ε > 0, and real numbers α1, …, αr such that x0∈⋂jIμu, and

Proposition 10 now directly implies the following result.

COROLLARY 11. Let h be a real-valued function defined in some neighborhood of a point x0 ∈ ℝ.

Suppose that in a neighborhood of x0, h is equal to an expectation value function with arcsine input encoding, i.e., there is an n, a function f as in Remark 1, vectors a, b ∈ ℝn, and an ε > 0 such that h(x) = f(arcsin(x · a + b)/(2π)) holds for all x ∈ ]x0 − ε, x0 + ε[.

The input redundancy, n, is greater than or equal to log3(r), where r is the sc-rank of h at x0.

To represent a function h by a MiNKiF PQC with arcsine input encoding in a tiny neighborhood of a given point x0, the input redundancy must be at least the logarithm of the sc-rank of h at x0.

We conclude the section with a note on the choice of the parameters a, b.

REMARK 12. It can be seen [17] that the dimension of the space is 3n, if aj, bj j = 1, …, n are chosen in general position, but only O(n) if a is a constant multiple of the all-ones vector. Moreover, as indicated in Proposition 10, the basis elements which span the space depend on a, b, and hence the space will in general be different for different choices of a, b. Again, we find that it is plausible that the choices of a, b should depend on the target function.

5. Conclusions and Outlook

To the best of our knowledge, our results give the first rigorous theoretical quantitative justification of a routine decision for the design of parameterized quantum circuit architectures: Input redundancy must be present if good approximations of functions are the goal.

Both activation functions we have considered give clear evidence that input redundancy is necessary, and grows at least logarithmically with the “complexity” of the function: The complexity of a function f with respect to a family of “basis functions” is the number of functions from the family which are needed to obtain f as a linear combination. In our results, the function family depends on the activation function. In the case of linear input encoding (activation function “identity”), the basis functions are trigonometric functions t ↦ e2πikt, whereas for the arcsin activation function, we obtain the basis monomials (14) already used, in a weaker form, in Mitarai et al. [13].

From Remarks 6 and 12 we see that the weights a, b, i.e., the coefficients in the affine transformation links in Figure 1, should have to be variable in order to ensure a reasonable amount of expressiveness in the function represented by the quantum circuit. We use the term variational input encoding to refer to the concept of training the parameters involved in the encoding with other model parameters. A recent set of limited experiments [18] indicate that variational input encoding improves the accuracy of Quantum Neural Networks in classification tasks.

While we emphasize the point that this paper demonstrates a concept—lower bounds for input redundancy can be proven—there are a few obvious avenues to improve our results.

Most importantly, our proofs rely on exactly representing a target function. This is an unrealistic scenario. The most pressing task is thus to give lower bounds on the input redundancy when an approximation of the target function with a desired accuracy ε > 0 in a suitable norm is sufficient.

Secondly, we thank an anonymous reviewer for pointing out to us that lower bounds for many more activation functions could be proved.

Finally, Remark 1 mentions that for every multi-linear trigonometric polynomial f, there is a PQC whose expectation value function is precisely f. It would be interesting to lower-bound a suitable quantum-complexity measure of the PQCs representing a function, e.g., circuit depth. While comparisons of the quantum vs. classical complexity of estimating expectation values have attracted some attention [19], to our knowledge, the same question in the “parameterized setting” has not been considered.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author Contributions

DT contributed the realization that lower bounds could be obtained, and sketches of the proofs. FG contributed the details of the proofs and the literature overview. All authors contributed to the article and approved the submitted version.

Funding

This research was supported by the Estonian Research Council, ETAG (Eesti Teadusagentuur), through PUT Exploratory Grant #620. DT was partly supported by the Estonian Centre of Excellence in IT (EXCITE, 2014-2020.4.01.15-0018), funded by the European Regional Development Fund.

Conflict of Interest

DT was employed by the company Ketita Labs OÜ.

The remaining author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This manuscript has been released as a pre-print on arXiv (arXiv:1901.11434).

Footnotes

1. ^Cf. Remarks 6 and 12. Indeed, our analysis suggests that the a, b should be training parameters if the goal is to achieve high expressivity; see the Conclusions.

2. ^This is where the factor 2π in the exponent is used.

3. ^Mathematically rigorously speaking, is the germ of functions at x0.

References

1. Feynman RP. Simulating physics with computers. Int J Theor Phys. (1982) 21:467–88. doi: 10.1007/BF02650179

3. Mohseni M, Read P, Neven H, Boixo S, Denchev V, Babbush R, et al. Commercialize quantum technologies in five years. Nat News. (2017) 543:171. doi: 10.1038/543171a

4. Perdomo-Ortiz A, Benedetti M, Realpe-Gómez J, Biswas R. Opportunities and challenges for quantum-assisted machine learning in near-term quantum computers. Quant Sci Technol. (2018) 3:030502. doi: 10.1088/2058-9565/aab859

5. Neville A, Sparrow C, Clifford R, Johnston E, Birchall PM, Montanaro A, et al. Classical boson sampling algorithms with superior performance to near-term experiments. Nat Phys. (2017) 13:1153. doi: 10.1038/nphys4270

6. Bremner MJ, Montanaro A, Shepherd DJ. Average-case complexity versus approximate simulation of commuting quantum computations. Phys Rev Lett. (2016) 117:080501. doi: 10.1103/PhysRevLett.117.080501

7. Farhi E, Harrow AW. Quantum supremacy through the quantum approximate optimization algorithm. arXiv. (2016) 160207674.

8. Du Y, Hsieh MH, Liu T, Tao D. The expressive power of parameterized quantum circuits. arXiv. (2018) 181011922.

9. Farhi E, Neven H. Classification with quantum neural networks on near term processors. arXiv. (2018) 180206002.

10. McClean JR, Romero J, Babbush R, Aspuru-Guzik A. The theory of variational hybrid quantum-classical algorithms. New J Phys. (2016) 18:023023. doi: 10.1088/1367-2630/18/2/023023

11. Schuld M, Petruccione F. Supervised Learning with Quantum Computers. Vol. 17. Cham: Springer (2018).

12. Schuld M, Bocharov A, Svore K, Wiebe N. Circuit-centric quantum classifiers. arXiv. (2018) 180400633.

13. Mitarai K, Negoro M, Kitagawa M, Fujii K. Quantum circuit learning. Phys Rev A. (2018) 98:032309. doi: 10.1103/PhysRevA.98.032309

14. van Apeldoorn J, Gilyén A. Improvements in quantum SDP-solving with applications. arXiv. (2018) 180405058.

15. Harrow AW, Hassidim A, Lloyd S. Quantum algorithm for linear systems of equations. Phys Rev Lett. (2009) 103:150502. doi: 10.1103/PhysRevLett.103.150502

18. Lei AW. Comparisons of Input Encodings for Quantum Neural Networks. Tartu: University of Tartu (2020).

19. Bravyi S, Gosset D, Movassagh R. Classical algorithms for quantum mean values. arXiv. (2019) 190911485.

Proof of Lemma 2

We have to prove that the functions χk, k ∈ ℝ, defined in (11) are linearly independent (in the algebraic sense, i.e., considering finite subsets of the functions at a time). There are several ways of proving this well-known fact; we give the proof that probably makes most sense to a physics readership: The Fourier transform (in the sense of tempered distributions) of the function χk is δ(k − *), the Dirac distribution centered on k. These generalized functions are clearly linearly independent for different values of k.

Keywords: parameterized quantum circuits, quantum neural networks, near-term quantum computing, lower bounds, input encoding

Citation: Gil Vidal FJ and Theis DO (2020) Input Redundancy for Parameterized Quantum Circuits. Front. Phys. 8:297. doi: 10.3389/fphy.2020.00297

Received: 22 May 2020; Accepted: 30 June 2020;

Published: 13 August 2020.

Edited by:

Vctor M. Eguíluz, Institute of Interdisciplinary Physics and Complex Systems (IFISC), SpainReviewed by:

Gian Luca Giorgi, Institute of Interdisciplinary Physics and Complex Systems (IFISC), SpainWilson Rosa De Oliveira, Federal Rural University of Pernambuco, Brazil

Copyright © 2020 Gil Vidal and Theis. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Francisco Javier Gil Vidal, ZnJhbmNpc2NvLmphdmllci5naWwudmlkYWwmI3gwMDA0MDt1dC5lZQ==; Dirk Oliver Theis, ZG90aGVpcyYjeDAwMDQwO3V0LmVl

Francisco Javier Gil Vidal

Francisco Javier Gil Vidal Dirk Oliver Theis

Dirk Oliver Theis