- 1Department of Astronomy and Astrophysics, Pennsylvania State University, University Park, PA, United States

- 2Center for Astrostatistics, Pennsylvania State University, University Park, PA, United States

- 3Department of Statistics, Pennsylvania State University, University Park, PA, United States

Celestial objects exhibit a wide range of variability in brightness at different wavebands. Surprisingly, the most common methods for characterizing time series in statistics—parametric autoregressive modeling—are rarely used to interpret astronomical light curves. We review standard ARMA, ARIMA, and ARFIMA (autoregressive moving average fractionally integrated) models that treat short-memory autocorrelation, long-memory 1/fα “red noise,” and nonstationary trends. Though designed for evenly spaced time series, moderately irregular cadences can be treated as evenly-spaced time series with missing data. Fitting algorithms are efficient and software implementations are widely available. We apply ARIMA models to light curves of four variable stars, discussing their effectiveness for different temporal characteristics. A variety of extensions to ARIMA are outlined, with emphasis on recently developed continuous-time models like CARMA and CARFIMA designed for irregularly spaced time series. Strengths and weakness of ARIMA-type modeling for astronomical data analysis and astrophysical insights are reviewed.

The Variability of Cosmic Populations

Except for five roving planets and an occasional comet or nova, the nighttime sky seems immutable to the human eye. The pattern and brightness of stars appears unchanging as from our childhood to old age. Myths from ancient Egyptian, Greek, and Australian Aboriginal cultures suggest that a few stars (such as Algol, Mira, and Aldeberan) were recognized as variables [1, 2]. As telescopic studies proliferated from the seventeenth through twenty first centuries, more variable stars were found with a wide range of characteristics. Some are periodic due to pulsations, rotationally modulated spots, or eclipses of binary companions. Others vary in irregular ways from magnetic flares, eruptions, pulsations, accretion of gas from companions, and most spectacularly, nova and supernova explosions. Ten thousand stars in two dozen categories were cataloged by Kukarkin and Parenago [3]; this catalog now has over 50,000 stars with >100 classes [4]. NASA's Kepler mission has recently shown that most ordinary stars are variable when observed with ~0.001% accuracy and dense cadences [5].

The study of celestial objects with variable brightness has broadened hugely in recent decades, emerging as a recognized discipline called “time domain astronomy” [6]. The brightest sources in the X-ray and gamma-ray sky are highly variable, typically from accretion of gas onto neutron stars and black holes. Timescales range from milliseconds to decades with a bewildering range of periodic, quasi-periodic, stochastic and bursting characteristics. The Galactic black hole binary GRS 1915+105 alone has a dozen modes of variability [7]. The radio sky has extragalactic quasars and blazars as well as Galactic pulsars and several varieties of fast radio bursts and transients. The non-photon gravitational wave observatories have recently emerged with rapid “chirps” from merging black hole and neutron star binaries [8]. A huge industry searching for distant supernova explosions is propelled by their utility in tracing the accelerated expansion of the Universe [9].

Given this tremendous growth in the volume and complexity of astronomical time series data, we can ask what methods are common for their characterization and analysis. In the statistical literature, the dominant methods are time domain parametric modeling. Popular introductory texts [10, 11] devote most of their chapters on autoregressive time domain models with only brief treatment of Fourier frequency domain methods. Prominent graduate level textbooks have a similar balance [12, 13]. Yet only few research papers each year in the astronomical literature mention autoregressive time domain methods: <10 papers/year prior to 2010, recently growing to ~25 papers/yr. In contrast, ~1,000 papers annually use “Fourier” or “power spectrum” procedures.

This radical dichotomy between the methodologies emphasized by statisticians and those used by astronomers motivates our investigation here. Perhaps the difference arises due to real differences in data characteristics and questions raised in a physical science like astronomy compared to questions raised in social sciences like econometrics that are treated in the statistical literature. This seems possible: time series texts oriented toward engineers [14, 15] and meteorologists [16] generally use spectral and wavelet analysis rather autoregressive modeling. Another difference is that social science time series mostly have evenly spaced observation times while astronomical time series are often irregularly spaced due to daily or annual celestial cycles and other causes. The recent growth in autoregressive models for astronomy is mostly restricted to the narrow field of continuous-time models that treat irregular time series [17].

Our goal here is to investigate whether standard time domain methods commonly taught and used in the social sciences can in fact be effective in characterizing astronomical time series, and whether this analysis path can lead to valuable scientific insights.

We start with a brief presentation of the mathematics of common time domain models, model fitting and validation, and implementation of modeling in public domain software. We proceed with application of standard methods to the brightness variations of four stars using both evenly- and irregularly-spaced light curves based on the work of Caceres et al. [submitted for publication, in preparation]. We continue with an outline of various extensions to the common autoregressive models, including new developments of continuous-time models appropriate for irregularly-spaced time series. We end with discussion on what astronomical insights can emerge from autoregressive modeling, and comments on the utility of this approach to future time domain studies in astronomy.

The ARMA, ARIMA, and ARFIMA Models

In this section, we give a simplified presentation of standard material on parametric autoregressive modeling. The suite of ARMA-related procedures was brought into prominence by the 1971 textbook of Box and Jenkins [[18] is the latest edition] and is sometimes known as the Box-Jenkins method. Additional coverage appears in time series analysis texts like Chatfield [10] and (in greater mathematical detail) Hamilton [12], and econometric textbooks like Enders [19], Greene [20], and Hyndman and Athanasopoulos [21]. The ARFIMA (also called FARIMA) model is less common than the ARIMA model (~0.05 M vs. ~2 M Google hits). Its mathematical foundations are treated in monographs such as Palma [22]. Autoregressive modeling is reviewed in the astronomical literature by Scargle [23], Koen and Lombard [24], and Caceres et al. [submitted for publication].

As elsewhere in statistical analysis, it is wise to examine the data nonparametrically prior to parametric modeling. The time series should be examined for its basic behavior with kernel density estimation (or similar nonparametric regression) smoothing to see trends. Box-plots, histograms and quantile-quantile plots show the distribution of intensity values. Most importantly here, the nonparametric autocorrelation function (ACF) gives the correlation between a series and time-lagged values of itself over its entire length. For an evenly-spaced time series x with time stamps t, mean value μ and standard deviation σ,

In realistic cases, the population mean and standard deviation are unknown and must be estimated from the data. As with Fourier and wavelet transforms, the full information in a time series is reproduced in the ACF if an unlimited number of coefficients are used. Theorems give the statistical distribution of the ACF for Gaussian white noise, so confidence intervals (such as P = 99%) can be constructed and hypothesis tests applied (Durbin-Watson and Breusch-Godfrey tests) to readily evaluate whether autocorrelation is present.

When the ACF shows significant signals, current values of the time series depend on past values. The time series violates the assumption of “independence” in i.i.d. and many standard statistical procedures can not be accurately applied. This is the situation where autoregressive modeling can be effective. Commonly, two forms of dependency on past values are treated as a linear regression. First, an autoregressive (AR) process has coefficients that quantify the dependence of current values on recent past values:

where ϵt is a normally (Gaussian) distributed random error with zero mean and constant variance, p is the order of the process (i.e., how many lags to model), and the a's are the corresponding coefficients for each lag up to order p. Second, a moving average (MA) process has coefficients that quantify the dependence of current values on recent past random shocks to the system:

where ϵt is the error term for the t-th time point, bi is the coefficient for each lagged error term up to order q. Here the past ϵ noise values can be viewed as random shocks to the system, and the b coefficients quantify the response to the shocks, ϵ. Adding these two equations together gives a combined ARMA(p,q) process. Coefficients are estimated by standard regression procedures such as maximum likelihood estimation.

The ARMA model has several assumptions including stationarity that requires constant mean and constant variance. A stationary time series exhibits the same behavior at the beginning, middle, and end of the dataset. Violations of constant variance (homoscedasticity) can be treated with nonlinear ARMA-type models with volatility like GARCH. Violations of stationarity can be tested and regimes of different behavior can be found using change point analysis.

Nonstationarity and variable mean values can sometimes be removed by fitting a global regression model such as a polynomial, but often an adequate detrending regression model cannot be found. A flexible nonparametric procedure called differencing can remove nonstationarity in many such cases. Here one applies the backshift operator B that replaces the time series xt by another consisting of the point-to-point difference in values:

The differenced time series can then be modeled as a stationary ARMA process, and the original time series with trends can be recreated by reversing or integrating the differenced time series. This combination of nonparametric differencing and integration with a parametric ARMA process is called the ARIMA(p,d,q) model where d represents the number of differencing operations applied and typically equals one.

Finally, and less intuitively, a fractional integrated procedure can be described by

where d can be a real (non-integer) order of differencing and B is the backshift operator defined above. When combined with ARMA modeling, this gives an ARFIMA(p,d,q) process. The advantage here is that fractional values of d quantifies a long-memory dependency of xt on past values in a single parameter. Mathematically, this fractional integration process corresponds to the Fourier behaviors known as 1/fα “red noise” where f is the frequency and α is the slope of a power law fit to the low-frequency Fourier power spectrum [22]. It can also be viewed as a binomial series expansion,

The parameter d is typically constrained to be in the range 0.0 − 0.5 to define a stationary model. Three commonly used parameters are related to each other as follows: d = α/2 and d = H − 1/2 where H is the econometricians' Hurst parameter.

In simple language, ARFIMA(p,d,q) is a time series model where the autoregressive and moving average components treat many short-memory dependencies on recent past values, and the fractional integration component treats both many forms of trend and a 1/fα-type long-memory dependency. Best fit parameters for ARMA -type coefficients are typically calculated with maximum likelihood estimation for different values of p, d, and q, called the order of the model. The optimal order is then selected to optimize the Akaike Information Criterion (AIC), a penalized maximum likelihood measure that balances improvements in likelihood with increases in model complexity.

ARMA, ARIMA, and ARFIMA models are attractive for astronomical time series analysis for various reasons. First, the models are very flexible, successfully modeling an astonishing variety of irregular or quasi-periodic, smooth or choppy, constant or variable mean light curves. Second, the dimensionality of the model is relatively low with a moderate computational burden of the numerical optimization. In contrast, local regression methods, such as Gaussian Processing regression, may have thousands of parameters with O(N3) computational burden although some computational efficiency has been achieved [25]. Third, error analysis on the parameters naturally emerges through the likelihood regression analysis. Fourth, they are extensible to situations involving multivariate time series, combinations of stochastic and deterministic behaviors, change points, and (moderately) irregular observation spacing. Autoregressive modeling is not well-adapted to situations with strictly periodic variations (where the signal is compactly concentrated in Fourier power coefficients) or with sudden eruptive events (where the nonstationary amplitude is not greatly reduced by differencing).

Altogether, ARIMA-type models have proved to be useful to a very wide range of physical and human-generated dynamic systems in engineering, econometrics, Earth sciences, and other fields. But despite these advantages, non-trivial ARIMA models have been used only rarely in time domain astronomy for compact radio sources [26], Mira stars [27] variability, and quasars [28]. ARFIMA models appear in only a single study on solar flare activity [29].

Testing the Model

The first action that every analyst should take after ARIMA-type modeling is model validation based, in part, on residual analysis. This should follow any regression procedure for two reasons. First, even though the “best fit” has been obtained in a maximum likelihood sense, the entire model family may not apply to the dataset under study; this problem is called “model misspecification.” Second, the model may be correctly specified but its underlying mathematical assumptions may be violated. For example, many regression procedures assume the errors (ϵ in Equation 1) are “normal i.i.d.”; that is, they are independently and identically distributed following a Gaussian distribution.

As with any regression analysis, the unsequenced values of model residuals can be tested for normality using the nonparametric Anderson-Darling or Shapiro-Wilks tests. The Jarque-Bera test for normality examined the skewness and kurtosis of the residual distribution. The Breusch-Pagen and White tests are used to test whether the residuals have constant variance or whether heteroscedasticity is present; this is a violation of the “identically” requirement in i.i.d.

A suite of specialized “time series diagnostics” have been developed by econometricians that use the time sequence of residuals to test model validity in various ways. These are hypothesis tests for specific characteristics where the statistic has a known distribution for the null hypothesis of Gaussian white noise. Thus quantitative probabilities for deviations from Gaussian white noise can be obtained under many circumstances.

The most well-known of these diagnostics is the Durbin-Watson test of serial autocorrelation; i.e., whether the AR(1) coefficient of the residuals is consistent with zero. The Breusch-Godfrey test generalizes this to test significance of AR(p) where p is specified by the user. The Box-Pierce and Ljung-Box test are “portmanteau” tests that address all values of p simultaneously. Other nonparametric tests (such as the Wald-Wolfowitz runs test that groups the residuals into a binary “+” and “−” variable) may also be effective in testing the existence of temporal structure in model residuals.

Several statistics test for nonstationarity in the residuals. A simple nonparametric correlation coefficient like Kendall's τ can test for overall correlation. Global regressions can reveal large-scale trends and local regressions smooth the residuals and reveal small-scale structure. But it is possible for a time series to be nonstationary but without trend. One of these conditions is called “unit root” where the time series does not revert to the original mean after experiencing a shock. The physicists' random walk is an example of a nonstationary diverging process. The augmented Dickey-Fuller and Kwiatkowski-Phillips-Schmidt-Shin (KPSS) statistics test for trend stationarity against a unit root.

Software Implementations in R

Software for autoregressive modeling is widespread. In Python, statsmodel.tsa and related libraries have ~70 functions implementing AR, ARMA, ARIMA, and VAR (vector autoregressive) model fitting. The kalmanf function estimates ARMA models with exact maximum likelihood estimation using the Kalman filter. The regime_switching function implements a nonlinear Markov switching dynamic regression. Time series diagnostics, trend regressors and other ancillary functions are included.

Matlab has several dozen infrastructure functions for manipulating time series objects. But its power is in the Econometrics Toolbox with extensive methodology (and tutorials) including: time series decomposition, filtering, ARIMA regression with non-i.i.d. errors, ARMAX estimation with exogenous covariates, GARCH models, multivariate VAR, AIC model selection, state space modeling with the Kalman filter, time series diagnostics, simulation and forecasting. This Matlab software costs several tens of dollars for a student, several hundred dollars for a university researchers, and several thousand dollars for a commercial license.

The largest collection of autoregressive software resides in the public domain R statistical software environment [30]. Base-R software includes infrastructure for “ts” (evenly spaced) and “zoo” (irregularly spaced) time series objects as well as basic ARIMA and ARIMAX functionality. But the bulk of advanced time series methods are implemented in some of the >12,000 add-on CRAN packages. Mature and extensive CRAN packages for ARIMA-type modeling include acp, arfima, BAYSTAR, BigVAR, bsts, carfima, carx, cents, cts, dse, fGarch, FGN, FitAR, FitARMA, forecast, glarma, imputeTS, ltsa, MARSS, mclcar, mlVAR, MSBVAR, MTS, partsm, perARMA, portes, prophet, robustarima, rugarch, sparsevar, stochvol, stsm, TSA, tsdecomp, tsDyn, tseries, and vars. Recently, the continuous-time CARFIMA model has been implemented in CRAN package carfima. Together these packages provide more than a thousand functions relating to autoregressive modeling. The package capabilities are briefly listed and classified in the CRAN Task View on Time Series Analysis. An elementary introduction to R for astronomical time series appears in the text by Feigelson and Babu [31].

Important diagnostics for goodness-of-fit for time series and model fit residuals (such as the Durbin-Watson, Bruesch-Godfrey, Box-Pierce, Augmented Dickey-Fuller, and KPSS tests) are available in CRAN packages such as tseries [32] and lmtest [33]. These tests are described in econometrics textbooks [e.g., [19, 20]] and in Wikipedia.

The ARIMA and ARFIMA fits in Figures 1–4 here were obtained using the auto.arima and arfima functions in forecast, one of the most popular CRAN packages that is describe in the ebook by Hyndman and Athanasopoulos [21]. The data manipulation and plotting was performed using base-R. Benchmarking shows that the auto.arima and arfima codes provide AIC-optimized maximum likelihood fits in O(N) wall-time: ~0.4 CPU-sec for ≤1,000 datapoints, in 4 CPU-sec for 10,000 datapoints, and so forth. This is sufficiently efficient for analyzing large astronomical surveys. However, the new carfima code (below) is much slower.

Figure 1. Space-based Kepler mission photometric light curve of a quiet star with autoregressive modeling. Left panels from top to bottom: Original light curve; differenced light curve; ARIMA model residuals; ARFIMA model residuals. Right panels show the autocorrelation function at each stage.

Application to Stellar Photometry

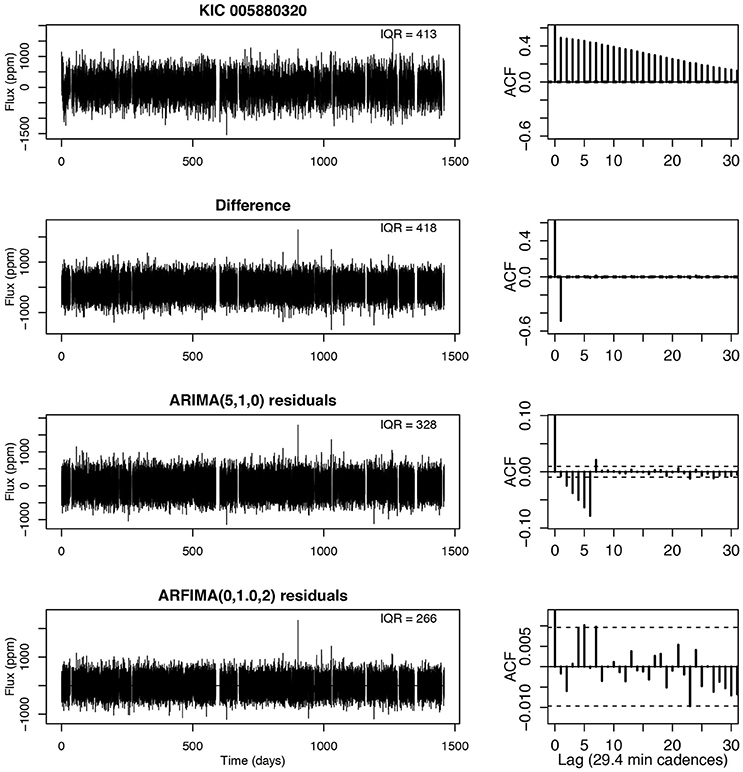

KIC 005880320 (Figure 1)1

We start our application of autoregressive models with a seemingly simple case: a magnetically quiet star observed nearly1 continuously for four years by NASA's Kepler mission with an evenly spaced 29.4 min cadence. This solar-type star, also called Kepler 758, has four confirmed “super-Earth” planets with periods ranging from 5 to 20 days and transit depth ranging from 100 to 300 ppm (parts per million). The periodic dips from the transiting planets are readily seen in periodograms based on the autoregressive model residuals Caceres et al. [in preparation].

Here we examine the appearance of the light curve from its original state through differencing, ARIMA and ARFIMA modeling (left panels). Quantitative measures include the inter-quartile range (IQR) and the autocorrelation function (ACF) at each stage of the analysis (right panels). Although the photometric behavior of the original light curve looks to the eye like near-random noise, it is highly autocorrelated with ACF(lag=1)~0.4 with memory extending to a day or more (upper right). The differencing operator does not reduce the noise level (IQR is unchanged) but reduces the long-memory autocorrelation leaving behind a strong short-memory component with ACF(1)≃−0.6. The maximum likelihood ARIMA fit required five coefficients but left behind significant autocorrelation over several lags. But the ARFIMA fit with only two coefficients, despite a d value close to zero, reduces the residuals to near-white noise.

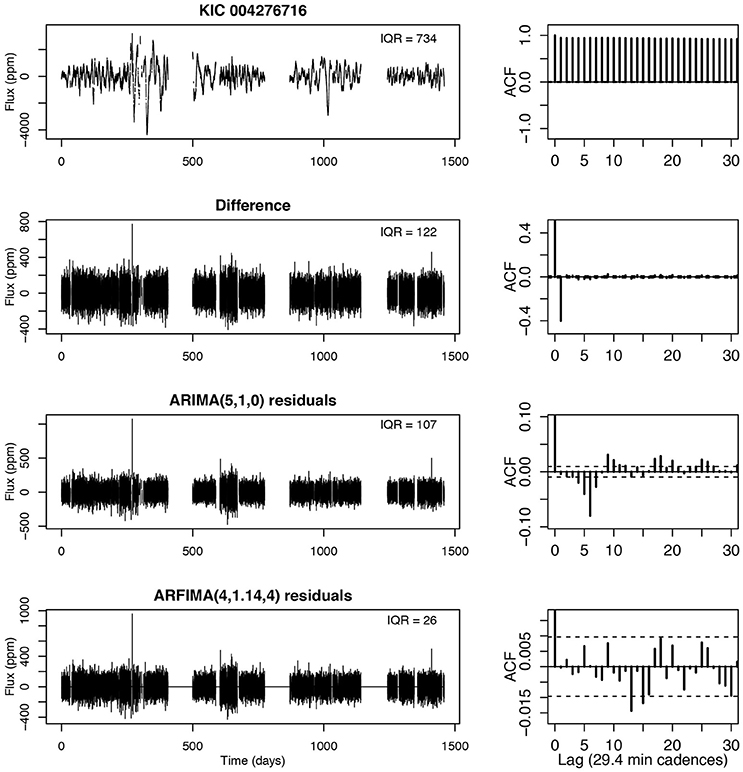

KIC 004276716 (Figure 2)

This star is a bright K3-type star that is observed for 14 of the 17 quarters of the Kepler mission with an unconfirmed candidate planetary transit with orbital period around 20 days. It is much more magnetically active than the star shown in Figure 1, exhibiting photometric variations from large spots that emerge and disappear after a few 30 day rotation periods. The differencing operator removed nearly all of the long-memory autocorrelation and reduced the overall IQR by a factor of 6, and a few outliers are revealed. An ARIMA model with order-6 improved the fit somewhat but the ARFIMA model with order-8 reduces the noise level several times again and eliminates all remaining autocorrelation. Here a significant (d = 1.14) long-memory component is present. Note that the residual noise levels seems variable (heteroscedastic), so it may be worthwhile trying GARCH modeling.

Caceres et al. [in preparation] has modeled the full sample of ~200,000 Kepler stars with ARIMA and ARFIMA, and find the behavior shown in Figures 1, 2 is typical for most, but not all, of the stellar variations. Generally, the broader family of ARFIMA models do a better job in reducing autocorrelation, although most of the noise is removed by ARIMA models. Very simple models such as AR(1) or ARMA(1,1) that do not include detrending by differencing are not adequate to model most stellar light curves. Fortunately for their scientific goals, Caceres et al. [submitted for publication, in preparation] find that the periodic planentary transit signals are mostly not incorporated into the maximum likelihood autoregressive fits, as they occupy only a small fraction of the data points. Their Transit Comb Filter periodogram, designed to treat a box-like transit after the differencing operator is applied, is able to recover most of the Kepler confirmed planets and uncover new planetary cadidates.

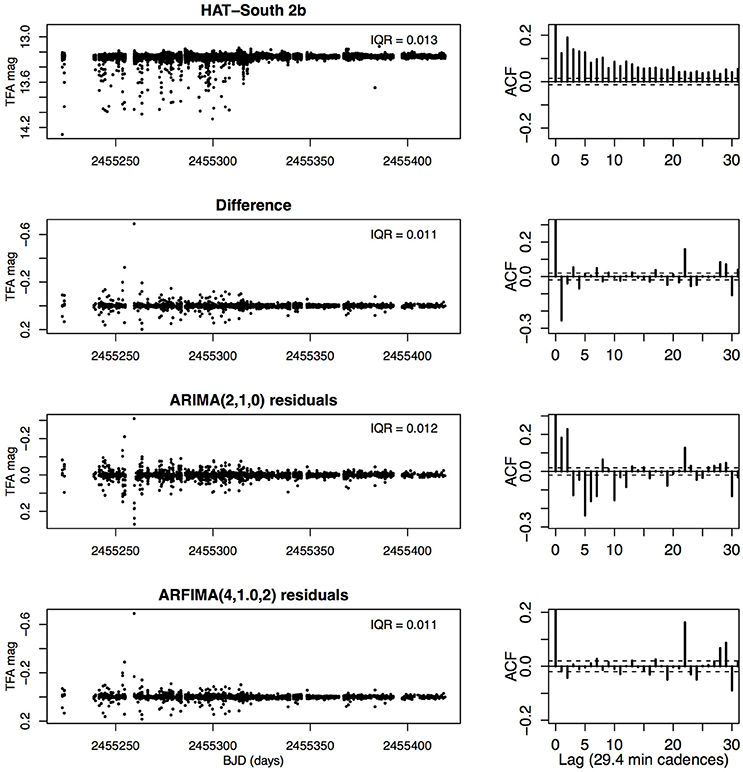

HATS-2b (Figure 3)

Ground-based surveys suffer two major cadence problems compared to space-based surveys: a given star is not observable for ~6 months every year due to solar motion, and a given star is not observable for daylight hours from a single site. The Hungarian Automated Telescope South network seeks to mitigate the latter problem with three telescopes on different continents allowing nearly continuous coverage during a 6 month season [34]. In ground-based surveys, most of the autocorrelated noise arises from uncorrected atmospheric variations at the telescope rather than intrinsic variability of the star. Nonetheless, planetary transits can be recovered from ground-based light curves in a small fraction of cases. HATS-2 is a magnetically active K-type star with a hot Jupiter in a 32 h orbit [35].

Figure 3. Ground-based light curve for the planet-bearing star HATS-2b from the Hungarian Automated Telescope South network showing ~6 months of brightness variations. Except for the top panels, the irregular observation times have been binned onto a fixed sequence of 29.4 min time bins.

The ground-based light curve here is quite different in behavior from the space-based Kepler light curve. The observing cadence is often dense with an observation every ~5 min, but irregular gaps in observations over the 6-month period are common. Strong dips in brightness occur due to passing clouds or other atmospheric phenomena. The irregular spacing is binned onto a fixed time grid; we arbitrarily choose the same 29.4 min time interval characteristics of the Kepler data. The result is that the original ~20,000 observations fill ~10,000 evenly spaced time bins of which 76% have missing entries. The more typical ground-based surveys with only one telescope will have >90% missing data.

Here we find that the autoregressive modeling suppresses the strong outliers attributable to clouds, but does not improve the overall noise amplitude: IQR = 0.013 mag (magnitude) in the original light curve and 0.011 mag in the ARFIMA residuals. But the autocorrelation in the original light curve is nearly entirely removed by the ARFIMA fit, although the ARIMA fit was less successful. Experimenting with different bin widths we find that increased bin sizes reduces the IQR somewhat but increases the residual ACF. We are finding that searching for planetary signals in the autoregressive residuals using our Transit Comb Filter periodogram is approximately as sensitive as the standard search for signals in the original lightcurve using the Box Least Squares periodogram (Stuhr et al., in preparation).

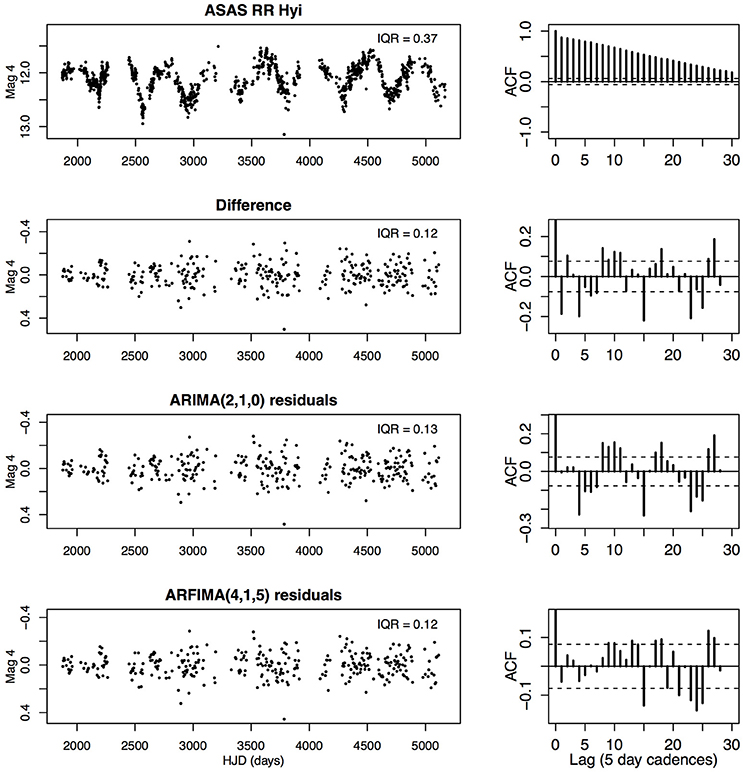

RR Hyi (Figure 4)

The final light curve examined here has ~1,000 irregularly spaced observations spread over ~10 years. The star is RR Hyi (= DV Oct), a poorly studied quasi-periodic long-period variable star at a location near the southern celestial pole that is visible for most of the year. The photometric observations are from the All Sky Automated Survey [36]. Most observations of RR Hyi are separated by hours to a few days. We bin the observations onto a fixed grid with arbitrarily chosen width of 5 days. The resulting light curve for autoregressive analysis has 660 observations of which 38% are missing.

Figure 4. Ground-based light curve for the variable star RR Hyi from the All-Sky Astronomical Survey (ASAS) showing ~10 years brightness variations. Except for the top panels, the irregular observation times have been binned onto a fixed sequence of 5 day time bins. Left panels from top to bottom: Original light curve; differenced light curve; ARIMA model residuals; ARFIMA model residuals. Right panels show the autocorrelation function at each stage.

The original light curve is obviously strongly autocorrelated on many scales. Most of the autocorrelation is removed by the differencing operation and the IQR noise level is reduced by a factor of three. However, neither ARIMA nor ARFIMA fits give any significant improvement in either noise or autocorrelation. This is a case where parametric autoregressive modeling is ineffective; only the nonparametric differencing detrending step has any useful effect. The failure is likely due to the small dataset with many gaps that misses much of the short-memory autocorrelation of the stellar variations2.

Extensions to ARIMA

In addition to ARFIMA for 1/fα noise processes, there are many mathematical variants of ARMA and ARIMA modeling. They are typically developed for economics time series analysis and are described in econometric textbooks like Enders [19], Greene [20], and Hyndman and Athanasopoulos [21].

Seasonality

Many economic variables have periodic variations with a 12-month period. These might be due to agricultural planting cycles, holiday sales cycles, or annual corporate reports. These periodic components are added to autoregressive components, just as a star's rotational period or a planetary orbit might produce periodicities superposed on a stochastic flaring pattern.

Exogeneous Covariates

Here a deterministic component is added to a stochastic ARMA-type model. It is typically a linear model applied to new variables over the same time intervals as the original time series. A common approach is the ARMAX(p, q) model

where the first two terms are the usual ARMA model for the temporal variable x, and the third term has linear dependence on r new temporal variables y. The exogeneous covariates can be any function linear in the parameters: periodic, or some deterministic trend (e.g., polynomial), or some tabulated variables without a simple mathematical form. To improve their modeling of exoplanetary transits hidden in stochastic variability, Caceres et al. [submitted for publication] create a function with periodic box-like dips with unknown depth. The advantage accrued here is that the transit depth is treated as just an additional parameter of the model with best fit value and confidence interval established by maximum likelihood estimation. The use of exogeneous covariates thus allows an astrophysically important parameter to be obtained along with ARMA parameters of less astrophysical interest.

Volatility

In the standard ARMA model, the autoregressive components are combined with a standardized Gaussian white noise component with constant variance, ϵ = N(0, σ2). But the noise can often be heteroscedastic where its variance is temporally variable. A common approach are the autoregressive conditional heteroscedastic or ARCH models initiated by Engle [38] and Bollerslev [39] where the squares of the residuals ϵ2 is itself an autoregressive or ARMA process,

The generalized ARCH or GARCH model is slightly more complex with both AR and MA components for the disturbances to the original ARMA process. GARCH models proved to be wonderfully effective for economic time series such as inflation and stock market variations; the Google search engine gives over million hits for “GARCH.” Many extensions have been developed: GARCH-M or GARCH-in-mean process; IGARCH or integrated GARCH; TGARCH or threshold GARCH; EGARCH or exponential GARCH; NAGARCH or nonlinear asymmetrical GARCH; COGARCH or continuous time GARCH; and dozens more. Astronomical volatility can be seen in solar magnetic activity and its consequent space weather measures, but it is not clear that GARCH-like models are effective for modeling these time series.

Regime Switching

An important class of nonlinear time series represents situations where the system jumps between different regimes of stationary ARMA-type relationships, such as high and low states seen in some astrophysical accretion systems. The switching mechanism is controlled by a hidden variable; if this variable is first-order autoregressive, then we have a Markov switching model. These models can be combined with GARCH and deterministic trends.

State Space Modeling

This is a broader approach to parametric modeling of complicated time series where autoregressive and other stochastic behaviors can be combined with linear or nonlinear deterministic trends or periodic components [40]. Functional behaviors can be linear or nonlinear, and can involve derivatives of state variables. Noise can be Gaussian or non-Gaussian, white or autoregressive. State space models are defined hierarchically with one level describing relationships between “state variables” defining the temporal behavior of the system and other levels describing how the underlying system relates to observables. Heterscedastic measurement errors common in astronomical data, for example, can be treated in a natural fashion. The coefficients of the model are calculated by least squares or maximum likelihood estimation, often through a recursive algorithm such as the Kalman filter.

Continuous Time Modeling for Irregular Astronomical Time Series

A mathematical limitation of classical autoregressive modeling is the restriction to evenly spaced datasets. However, astronomers need methods that are robust to irregularly spaced observing cadences limited by daily and annual celestial cycles as well as telescope allocation constraints. The underlying signal is typically generated by a continuous process of complex, often nonlinear, physical mechanisms that are not fully understood such as accretion disks and magnetohydrodynamical turbulence. Analysis is commonly based on Fourier analysis, but its underlying theorems apply only for the limited situation of a sinusoidal signal superposed on Gaussian white noise. This model is often inadequate to characterize the often multi-scale nonstationary structure in the time series. Finally, a variety of analysis techniques are needed to capture different properties in order to classify heterogeneous ensembles of time series, such as the 40 billion unevenly spaced light curves expected from the forthcoming Large Synoptic Survey Telescope.

In the stellar light curve applications above, we show that a sufficiently dense cadence of irregular observations can be successfully binned or interpolated onto a fixed time grid with “missing data,” allowing classical ARIMA-type modeling to proceed. However, a more effective approach may be generalizations of stochastic difference equations to stochastic differential equations. These have been studied mathematically starting in the 1940s to model physical phenomena such as Brownian motion and econometric variables [41, 42]. Just as ARMA processes play a central role in the representation of time series with evenly spaced observations, CARMA (continuous-time autoregressive moving average) processes play an analogous role in the representation of time series with continuous time parameter allowing direct treatment of irregular time sampling. Many variants are studied: Brownian motion and fractional Brownian motion with long-range dependence, Ornstein-Uhlenbeck process with exponential damping, Lévy process with heavy-tailed and asymmetric time series. The interest in CARMA-type processes is motivated mainly by the successful application of stochastic differential equation models to problems in finance.

In astronomy, a continuous autoregressive CAR(p) process was first applied by Koen [43]. A simple CAR(1) model, variously known as the Ornstein-Uhlenbeck process and damped random walk model, was brought into use for modeling quasar light curves in irregular astronomical time series by Kelly et al. [17] and generalized to CARMA(p, q) by Kelly et al. [28]. The latter paper gives the power spectral density, and autocovariance function at lag τ for the CARMA model. Kelly's software implementation called carma_pack on Github provides a Markov chain Monte Carlo sampler, an adaptive Metropolis algorithm combined with parallel tempering, for performing Bayesian inference on these continuous time autoregressive moving average models. This model has been used in dozens of astronomical studies, particularly for study of variability of quasars for which unevenly spaced observations are commonly available [e.g., [44, 45]].

CARMA models have favorable mathematical properties for astronomical use, although the statistical theory of continuous stochastic processes can be difficult [46]. Mathematically, the power spectrum density of a CARMA process is a sum of Lorentzian functions with centroid, widths, and normalizations of the Lorentzian functions as free parameters [47]. This provides a significant amount of flexibility for modeling complicated power spectra, such as accretion disk systems with quasi-periodic oscillations and red noise, suggesting that CARMA modeling may be applicable to many classes of astronomical variables. Moreover, CARMA models have a state space representation enabling the use of the Kalman filter for calculating their likelihood function. The computational complexity of calculating the likelihood function for CARMA models can therefore scale linearly with the number of data points in the light curve, an advantage for application to massive time-domain data sets.

The CARMA models account for irregular sampling and measurement errors, and are thus valuable for quantifying variability, forecasting and interpolating light curves, and variability-based classification. But they suffer the same limitations as ARMA(p, q) for regularly spaced data: it assumes (weak) stationarity of behavior across the light curve (for instance, no trend in brightness) and only treats short-memory autocorrelation out to max(p, q) time intervals. ARFIMA, on the other hand, treats nonstationarity and a powerlaw model of long-memory behavior in addition to these elements.

The mathematical development of continuous-time generalizations of ARIMA and ARFIMA have only recently begun; the effort is reviewed by Brockwell [48]. Theorems underlying CARIMA are presented by McElroy [49] and for CARFIMA (also called FICARMA) by Brockwell and Marquardt [50] and Brockwell [48]. A maximum likelihood estimation procedure for CARFIMA is developed by Tsai and Chan [51] with R public software implementation released by Tak and Tsai [52]. We encourage time domain astronomers to try modeling with their carfima R/CRAN package when faced with irregular light curves of variability that includes trend and long-memory “red noise” characteristics. It will be particularly valuable to compare the performance of standard ARIMA with “missing data” (above) and continuous-time CARIMA on real and simulated astronomical light curves.

Insights From Autoregressive Modeling

Most often, the goal of the econometrician is to forecast future values of the time series. But the astronomer is rarely concerned about forecasting brightness of their target star or quasar. (An exception is solar activity that affects our terrestrial environment.) The astronomer has other goals:

Identifying Outliers

As we saw with the four stars examined above, data points that are dramatically different from most autoregressive residuals are not necessarily distinctive in the original time series. Common astronomical procedures such as “repeated 3-sigma clipping” to remove outliers will be less effective than expected if autocorrelation is present. CRAN package forecast provides some tools for outlier detection in the presence of autocorrelation.

Identifying Change Points

While GRS 1915+105 with dozens of distinct modes of variability is exceptional, it is not uncommon that an astrophysical system will exhibit high and low states or other systematic changes in behavior. Time series methods are available for identifying change points include classic CUSUM and F tests and newer PELT and CROPS algorithms. These tests are implemented in R through CRAN packages like strucchange, changepoint, BCP, ECP, and CPM.

Characterizing 1/fα Red Noise

The characterization of long-memory processes such as 1/f-type noise may be relevant to the understanding of stochastic astrophysical processes like turbulence or accretion. But statistically, this can be biased. The astronomers' common procedure of linear least squares regression on the log-spectral power density, known in econometrics as the Geweke-Porter-Hudak [53] estimator, can be biased. It depends on unguided choices of frequency cutoff, detrending, spectral tapering and smoothing. A dozen other approaches to estimating α (or equivalently, d or H) are in use with no consensus on a single best approach [54]. We have been surprised that time series with increasing spectral power at low frequencies can often be adequately modeled with short-memory low-dimensional ARIMA models without long-range power law components; that is, it can be difficult to distinguish stochastic red noise from other behaviors. Altogether, we urge caution in quantitative assessment of red noise in astronomical time series.

Classifying Time Series

Increasingly, large ensembles of astronomical time series are collected from wide-field surveys without prior knowledge of the cause of variability in each object. Considerable efforts are made to classify (often irregularly spaced) light curves to identify transients and variable types in Big Data collections of light curves using training sets and machine learning techniques [e.g., [55–61]]. Autoregressive modeling results may be useful features to help discriminate variable classes. These might include the amplitude of AR(1) or MA(1) coefficients, the model order p and q, the presence of a significant d value in ARFIMA, and time series diagnostics of autoregressive model residuals.

Understanding Astrophysical Processes

It is not uncommon that astrophysical theory of (magneto)hydrodynamic situations such as accretion disks characterize the behavior in terms of Fourier coefficients. However, it seems reasonable that characterization of astrophysical models in terms of autoregressive coefficients should also be possible. The potential for new insights from this direction of research is unknown.

Concluding Comments

Astronomers have tended to use a narrow suite of time series methods, particularly Fourier analysis. This is appropriate when the physical phenomenon is strictly periodic with roughly sinusoidal shape such as radial velocity of circular Keplerian orbits or acoustic oscillations. The power spectrum concentrates periodic signals but not stochastic behaviors such as autoregression. There is real danger that inaccurate or even incorrect inferences will be made when autoregressive behaviors are present but are ignored by simplistic methods that assume the interesting signal is embedded only in Gaussian white noise. For example, detection of sudden flare events with a “3-sigma” criterion typically assumes Gaussian white noise whereas the effective sample size is reduced for autocorrelated signals. The Bayesian Blocks procedure [62] for detecting intensity changes in count data similarly assumes Poisson white noise.

Over several decades, researchers in many fields have found that low-dimensional autoregressive ARIMA-type models that quantify dependence on past behavior are amazingly effective in modeling many forms of variability, far more flexibly than low-dimensional (e.g., global polynomial) models that involve dependence on time alone. Extensive and user-friendly software is available for ARIMA-type modeling, particularly in the public domain R and Matlab statistical software environments.

Parametric modeling with any mathematical formalism is only useful for time series if the variability behavior is encompassed by the chosen mathematical model. In statistical parlance, the model must be correctly specified. But this constraint can be checked. Once a mathematical family of models is chosen and fitted to the data by maximum likelihood estimation, diagnostic tests can be applied to confirm that the model is indeed successful; for instance, testing that the model residuals are simple Gaussian white noise. Caceres et al. [in preparation] find that the aperiodic variability seen in most stars observed by NASA's Kepler mission can be effectively treated by ARIMA-type models.

A particular limitation of autoregressive modeling is the insistence on evenly spaced observation times. However, in our examination of stellar light curves above, we converted time series with irregular observation times into a sequence of regular intervals by simple binning with “missing data” in empty time slots. Density estimation (smoothing) or imputation procedures can also be applied; ARIMA modeling itself can be used for imputation, filling in missing data in astronomical time series. An alternative approach is to use continuous-time autoregressive models such as CARMA that treats irregular observation times directly with continuous time models.

Our emphasis here is on situations where a significant component of the variation exhibit autoregressive behaviors so that future values depend on past levels and changes. These components could arise as nuisance effects such as atmospheric fluctuations at the telescope, or they could be intrinsic to the astrophysical systems under study. While our treatment has concentrated on stellar photometric light curves, the methodology can readily be applied to other situations arising in astronomical research: optical or X-ray brightness variations from accreting supermassive black holes (quasars and Seyfert galaxies); complex signals in gravitational wave detectors; rapid atmospheric variations in ground-based active optics instruments; space weather induced background in satellite observatories; and so forth.

It is important to recognize that the scope of modern time series methodology is vast, propelled by complex problems in econometrics, signal processing, engineering, and Earth science systems. We have covered only a small portion of the field here. For example, change point analysis could help in study of astronomical transients, noise can be removed from astronomical signals with wavelet or sparsity methods, and irregularly sampled astronomical time series can be smoothed with local regression procedures. We encourage astronomers to be more adventuresome in investigating the utility of sophisticated time series methods, including ARIMA-type autoregressive modeling, to address problems in the burgeoning field of time domain astronomy.

Author Contributions

All authors listed have made a substantial, direct and intellectual contribution to the work, and approved it for publication.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We thank Andrew Stuhr (Penn State) for substantial assistance in the data analysis, and Joel Hartman (Princeton) for collaboration on the HAT South analysis. This work is supported by NSF grant AST-1614690 and NASA grant 80NSSC17K0122.

Footnotes

1. ^Figure details. The light curves in the upper-left panels of Figures 1, 2 are obtained from NASA's MAST archive center at Web page http://archive.stsci.edu/kepler/data_search. The quantity plotted is PDC flux in parts-per-million about the mean. Known instrumental effects have been removed and the the quarters have been “stitched” together to a common mean. The dashed lines on the autocorrelation function represent 95% confidence limits for Gaussian white noise. The HATS-2 light curve in Figure 3 was obtained from https://hatsouth.org/planets/lightcurves.html. We use photometry from aperture 1 after Transit Filter Algorithm is applied to reduce instrumental and atmospheric variations. The light curve in the upper-left panel of Figure 4 was obtained from ASAS's Web page http://www.astrouw.edu.pl/asas. Photometry from different years were “stitched” together without any adjustments,.

2. ^It is interesting to compare our ARIMA treatment here to a time-frequency and wavelet treatment of a similar quasi-periodic variable star, RV Tau, by Thibaut and Roques [37]

References

1. Wilk SR Mythological evidence for ancient observations of variable stars. J Amer Assoc Variable Star Observers (1996) 24:129–33.

2. Hamacher DW. Observations of red-giant variable stars by Aboriginal Australians. Aust J Anthropol. arxiv 1709.04634[PrePrint]. (2018). doi: 10.1111/taja.12257

3. Kukarkin BV, Parenago PP. General Catalogue of Variable Stars. Moscow: U.S.S.R. Academy of Sciences (1948).

4. Samus NN, Kazarovets EV, Durlevich OV, Kireeva NN, Pastukhova EN. General catalogue of ariable stars: Verson GCVS 5.1. Astron Rep. (2017) 61:80–8. doi: 10.1134/S1063772917010085

5. Gilliland RL, Chaplin WJ, Jenkins JM, Ramsey LW, Smith JC. Kepler mission stellar and instrument noise properties revisited. Astron J. (2015) 150:133. doi: 10.1088/0004-6256/150/4/133

6. Griffin E, Hanisch H, Seaman R. New horizons in time-domain astronomy. In: Proceedings IAU Symposium No. 285. Cambridge, UK: Cambridge University Press (2012).

7. Belloni T, Klein-Wolt M, Méndez M, van der Klis M, van Paradijs J. A model-independent analysis of the variability of GRS 1915+105. Astron Astrophys. (2000) 355:271–90.

8. Abbott BP, Abbott R, Abbott TD, Abernathy MR, Acernese F, Ackley K, et al. Observation of gravitational waves from a binary black hole merger. Phys Rev Lett. (2016) 116:061102. doi: 10.1103/PhysRevLett.116.061102

9. Riess AG, Filippenko AV, Challis P, Clocchiattia A, Diercks A, Garnavich PM, et al. Observational evidence from supernovae for an accelerating universe and a cosmological constant. Astron J. (1998) 116:1009–38. doi: 10.1086/300499

10. Chatfield C. The Analysis of Time Series: An Introduction. 6th ed. Boca Raton, FL: CRC/Chapman & Hall (2003).

11. Brockwell PJ, Davis RA. Introduction to Time Series and Forecasting. 3rd ed. New York, NY: Springer (2016).

13. Shumway RH, Stoffer DS. Time Series Analysis and Its Applications with R Examples. 4th ed. (2017). Cham: Springer.

14. Percival DB, Walden AT. Spectral Analysis for Physical Applications. Cambridge: Cambridge University Press (1993).

15. Percival DB, Walden AT. Wavelet Methods for Time Series Analysis. Cambridge: Cambridge University Press (2000).

16. Duchon C, Hale R. Time Series Analysis in Meteorology and Climatology. Oxford, UK: Wiley (2012).

17. Kelly BC, Bechtold J, Siemiginowska A. Are the variations of quasar optical flux driven by thermal fluctuations? Astrophys J. (2009) 698:895–910. doi: 10.1088/0004-637X/698/1/895

18. Box GE, Jenkins GM, Reinsel GC, Ljung GM. Time Series Analysis: Forecasting and Control. 5th ed. Hoboken NJ: Wiley (2016).

21. Hyndman RJ, Athanasopoulos G. Forecasting: Principles and Practice. 2nd ed. Available online at: https://otexts.org/fpp2 (2017).

23. Scargle JD. Studies in astronomical time series analysis. I - Modeling random processes in the time domain. Astrophys J Suppl. (1981) 45:1–71. doi: 10.1086/190706

24. Koen C, Lombard F. The analysis of indexed astronomical time series. Part One. Basic methods. Mon Not R Astro Soc. (1993) 263:287. doi: 10.1093/mnras/263.2.287

25. Forman-Mackey D, Agol E, Ambikasaran S, Angus R. Fast and scalable Gaussian process modeling with applications to astronomical time series. Astron J. (2017) 154:220. doi: 10.3847/1538-3881/aa9332

26. Lazio TJ, Waltman EB, Ghigo FD, Fiedler RL, Forster RS, Johnston KJ. A dual-frequency, multiyear monitoring program of compact radio sources. Astrophs J Suppl. (2001) 136:265–392. doi: 10.1086/322531

27. Templeton MR, Karovska M. Long-period variability of o Ceti. Astrophys J. (2009) 691:1470–8. doi: 10.1088/0004-637X/691/2/1470

28. Kelly BC, Becker AC, Sobolewska M, Siemiginowska A, Ulltey P. Flexible and scalable methods for quantifying stochastic variability in the era of massive time-domain astronomical data sets. Astrophys J. (2014) 788:33. doi: 10.1088/0004-637X/788/1/33

29. Stanislavsky AA, Murnecki K, Magdziarz M, Weron A, Weron K. Modeling of solar flare activity from empirical time series of soft X-ray solar emission. Astrophys J. (2009) 693:1877–82. doi: 10.1088/0004-637X/693/2/1877

30. R Core Team R: A Language and Environment for Statistical Computing. Vienna: R Foundation for Statistical Computing. Available online at: https://www.r-project.org (2018).

31. Feigelson ED, Babu GJ. Modern Statistlcal Methods for Astronomy with R Applications. Cambridge, UK: Cambridge University Press (2012).

32. Trapletti A, Hornik K. tseries: Time Series Analysis and Computational Finance. R package v. 0.10-44. Available online at: https://CRAN.R-project.org/package=tseries (2018).

33. Zeileis A, Hothorn T. Diagnostic checking in regression relationships. R News (2002) 2:7–10. Available online at: https://CRAN.R-project.org/doc/Rnews/

34. Bakos GA, Csubry Z, Penev K, Bayliss D, Jordán A, Afonso C, et al. HATSouth: a global network of fully automated identical wide-field telescopes. Publ Astro Soc Pacific (2013) 125:154. doi: 10.1086/669529

35. Mohler-Fischer M, Mancini L, Hartman J, Bakos GB, Penev K, Bayliss D, et al. HATS-2b: a transiting extrasolar planet orbiting a K-type star showing starspot activity. Astro Astrophys. (2013) 558:A55. doi: 10.1051/0004-6361/201321663

37. Thibaut C, Roques S. Time-scale and time-frequency analysis of irregularly sampled astronomical time series. EURASIP J Adv Signal Process. (2005) 10:1155.

38. Engle RF. Autoregressive conditional heteroscedasticity with estimates of the variance of United Kingdom inflation. Econometrica (1982) 50:987–1007. doi: 10.2307/1912773

39. Bollerslev T. Generalized autoregressive conditional heteroskedasticity. J Econ. (1986) 31:307–27. doi: 10.1016/0304-4076(86)90063-1

40. Durbin J, Koopman SJ. Time Series Analysis by State Space Methods. 2nd ed. Oxford: Oxford University Press (2012).

42. Bergstrom AR. Continuous Time Econometric Modelling. Oxford, UK: Oxford University Press (1990).

43. Koen C. The analysis of irregularly observed stochastic astronomical time-series - I. Basics of linear stochastic differential equations. Mon Not R Astro Soc. (2005) 361:887–92. doi: 10.1111/j.1365-2966.2005.09213.x

44. Graham MJ, Djorgovski SG, Stern D, Drake AJ, Mahabal AA, Donalek C, et al. A systematic search for close supermassive black hole binaries in the Catalina Real-time Transient Survey. Mon Not R Astro Soc. (2015) 453:1562–76. doi: 10.1093/mnras/stv1726

45. Guo H, Wang J, Cai Z, Sun M. How far is quasar UV/optical variability from a damped random walk at low frequency? Astrophys J. (2017) 847:132. doi: 10.3847/1538-4357/aa8d71

46. Garnier H, Wang L. (eds.). Identification of Continuous-Time Models from Sampled Data. London: Springer (2008).

47. Brockwell PJ. Lévy-driven continuous time ARMA processes. Ann Inst Stat Math. (2001) 53:113–24. doi: 10.1023/A:1017972605872

48. Brockwell PJ. Recent results in the theory and applications of CARMA processes. Ann Inst Stat Math. (2014) 66:647–85. doi: 10.1007/s10463-014-0468-7

49. McElroy TS. Forecasting continuous-time process with applications to signal extraction. Ann Inst Stat Math. (2013) 65:439–56. doi: 10.1007/s10463-012-0373-x

50. Brockwell PJ, Marquardt T. Lévy-driven and fractionally integrated ARMA processes with continuous time parameter. Stat Sin. (2005) 15:477–94.

51. Tsai H, Chan KS. Maximum likelihood estimation of linear continuous time long memory processes with discrete time data. J R Stat Soc Ser B. (2005) 67:703–16. doi: 10.1111/j.1467-9868.2005.00522.x

52. Tak H, Tsai H. Carima: Continuous-Time Fractionally Integrated ARMA Process for Irregularly Spaced Long-Memory Time Series Data. R package version 1.0.0. Available online at: https://cran.r-project.org/web/packages/carfima/index.html (2017).

53. Geweke J, Porter-Hudak S. The estimation and application of long memory time series models. J Time Ser Anal. (1983) 4:221–38. doi: 10.1111/j.1467-9892.1983.tb00371.x

54. Rea W, Oxley L, Reale M, Brown J. Not all estimators are born equal: the empirical properties of some estimators of long memory. Math Comput Simulat. (2013) 93:1016. doi: 10.1016/j.matcom.2012.08.005

55. Bloom JS, Richards JW, Nugent PE, Quimby RM, Starr DL, Poznanski D, et al. Automating discovery and classification of transients and variable stars in the synoptic survey era. Publ Astro Soc Pacific (2012) 124:1175. doi: 10.1086/668468

56. Faraway J, Mahabal A, Sun J, Wang X, Zhang L. Modeling light curves for improved classification. Stat Anal Data Mining (2016) 9:1–11. doi: 10.1002/sam.11305

57. Morii M, Ikeda S, Tominaga N, Tanaka M, Morokuma T, Ishiguro K, et al. Machine-learning selection of optical transients in the Subaru/Hyper Suprime-Cam survey. Pub Astro Soc Japan (2016) 68:104. doi: 10.1093/pasj/psw096

58. Cabrera-Vives G, Reyes I, Förster F, Estévez P, Maureira JC. Deep-HiTS: rotation invariant convolutional neural network for transient detection. Astrophys J. (2017) 836:97. doi: 10.3847/1538-4357/836/1/97

59. Wright DE, Lintott CJ, Smartt SJ, Smith KW, Fortson L, Trouille L, et al. A transient search using combined human and machine classifications. Mon Not R Astro Soc. (2017) 472:1315–23. doi: 10.1093/mnras/stx1812

60. Zinn JC, Kochanek CS, Kozlowski S, Udalski A, Szymanski K, Soszynski I, et al. Variable classification in the LSST era: exploring a model for quasi-periodic light curves. Mon Not R Astro Soc. (2017) 468:2189–205. doi: 10.1093/mnras/stx586

61. Castro N, Protopapas P, Pichara K. Uncertain classification of variable stars: handling observational GAPS and noise. Astron J. (2018) 155:16.

Keywords: time domain astronomy, irregularly sampled time series, variable stars, quasars, statistical methods, time series analysis, autoregressive modeling, ARIMA

Citation: Feigelson ED, Babu GJ and Caceres GA (2018) Autoregressive Times Series Methods for Time Domain Astronomy. Front. Phys. 6:80. doi: 10.3389/fphy.2018.00080

Received: 09 February 2018; Accepted: 10 July 2018;

Published: 07 August 2018.

Edited by:

Martin Anthony Hendry, University of Glasgow, United KingdomReviewed by:

Haroldo Valentin Ribeiro, Universidade Estadual de Maringá, BrazilAlberto Vecchiato, Osservatorio Astrofisico di Torino (INAF), Italy

Copyright © 2018 Feigelson, Babu and Caceres. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Eric D. Feigelson, ZWRmQGFzdHJvLnBzdS5lZHU=

Eric D. Feigelson

Eric D. Feigelson G. Jogesh Babu2,3

G. Jogesh Babu2,3