
ORIGINAL RESEARCH article

Front. Neurosci., 21 August 2020

Sec. Neural Technology

Volume 14 - 2020 | https://doi.org/10.3389/fnins.2020.00882

This article is part of the Research Topic Closed-loop Interfaces for Neuroelectronic Devices and Assistive Robots.

Martí de Castro-Cros1*, Marc Sebastian-Romagosa2, Javier Rodríguez-Serrano2, Eloy Opisso3, Manel Ochoa3, Rupert Ortner2, Christoph Guger2,4,5 and Dani Tost1,6,7

Objective: To evaluate whether introducing gamification in BCI rehabilitation of the upper limbs of post-stroke patients has a positive impact on their experience without altering their efficacy in creating motor mental images (MI).

Design: A game was designed specifically to fit the pace and goals of an established BCI rehabilitation protocol. Rehabilitation was based on double feedback: functional electrostimulation and animation of a virtual avatar of the patient’s limbs. The game added a narrative on top of this visual feedback, with an external goal to achieve (protecting pieces of cheese from a rat character). A pilot study was performed with 10 patients and a control group of six volunteers. Each participant completed two rehabilitation sessions, each made up of one calibration stage and two training stages; some stages used the game and others did not. Classification accuracy was used as a measure of MI efficacy. Users’ opinions were gathered through a questionnaire. No potentially identifiable human images or data are presented in this study.

Results: The gamified rehabilitation presented in the pilot study did not affect the efficacy of MI, but it improved the users’ experience, making it more fun.

Conclusion: These preliminary results are encouraging to continue investigating how game narratives can be introduced in BCI rehabilitation to make it more gratifying and engaging.

Stroke is a leading cause of severe physical disability. According to the World Health Organization, 15 million people suffer a stroke worldwide each year; five million of them die and another five million are left permanently disabled (Donkor, 2018). Upper-limb impairments affect 60% of stroke survivors. Rehabilitation of these patients is key to improving their ability to perform activities of daily living and, consequently, their independence and quality of life (Pindus et al., 2018). Various technologies have been used to support upper-limb rehabilitation, including assistive robotic systems, camera tracking, and motion sensors. Among them, the Mental Imagery Brain Computer Interface (MI-BCI) has emerged as a cost-effective, non-invasive rehabilitation technology, especially indicated for patients with a limited range of motion, fatigue, or pain (van Dokkum et al., 2015; Remsik et al., 2016; Cervera et al., 2018).

The strategy of MI-BCI rehabilitation is to exploit users’ ability to create a mental image of a movement. BCI systems use electroencephalography (EEG), with electrodes placed over the patient’s head, to capture changes in functional cortical activation while patients try to create a mental image of a functional motor movement. The EEG signal exhibits event-related synchronization and desynchronization of neural rhythms that can be correlated with the laterality of the mental image (McFarland et al., 2000; Neuper et al., 2006). Thus, machine learning algorithms can be trained to determine in real time whether the mental image is correct (Chavarriaga et al., 2017).

Feedback is an essential feature of EEG-BCI rehabilitation. EEG-BCI signal analysis can be used to trigger functional electrostimulation (FES) (Quandt and Hummel, 2014) and to control robotic orthoses that assist the execution of motor activity (Ang et al., 2015). In this way, the disrupted sensorimotor loop is closed. This loop closure has been shown to be a key factor in inducing neural plasticity and, therefore, in improving functional behavior. Visual feedback is necessary to learn how to create mental images. In addition, during routine use of BCI, it gives users self-awareness and an assessment of how they are performing. The suitability of different forms of feedback has been discussed (Lotte et al., 2013; Jeunet et al., 2016). On the one hand, symbolic widgets such as progress bars and arrows are simple and fast to implement, but they have been found difficult to understand and may even distract users (Kosmyna and Lécuyer, 2017; Škola et al., 2019). On the other hand, embodied avatar representations of the patient’s limb promote Action Observation mechanisms and activate the Mirror Neuron Network (MNN), thus inducing cortical plasticity (Pichiorri et al., 2015; Zhang et al., 2018). Moreover, the sense of embodiment that a realistic avatar provides has a positive impact on BCI control (Petit et al., 2015; Alimardani et al., 2016).

BCI sessions are based on the repetition of exercises; they are cognitively demanding and can reduce patient engagement in rehabilitation. Gamification is defined as the introduction of game-design elements and principles, such as narratives, scores, and awards, into non-game contexts to increase a person’s satisfaction and interest in performing activities by providing intrinsically motivating playful experiences (Richter et al., 2015). Gamification has become a popular research topic with applications in a variety of domains, from corporate business transformation to education and health (Zichermann and Linder, 2013). However, some studies in domains such as education have shown that it is not always effective. Moreover, it can even reduce the efficacy of the activity it aims to make more motivating (Hamari et al., 2014). The effects of gamification depend greatly on the context and on the users. In particular, rewards, badges, and leaderboards should be used with caution, as they may backfire (Hanus and Fox, 2015).

Gamification has been widely used in conventional upper-arm rehabilitation to alleviate the repetitiveness of sessions and to increase motivation and engagement (Burke et al., 2009; Bermúdez-Badia et al., 2016). Commercial computer games have been adapted, and new games have been designed specifically to enhance the rehabilitation experience (Bermúdez-Badia and Cameirão, 2012). These games use the patients’ movements as the game’s input. Movement is measured through various tracking systems (Llorens et al., 2015), which replace conventional input devices such as the mouse and joysticks.

Introducing gamification into BCI rehabilitation is challenging because using brain signals as the only user input narrows the range of possible game narratives. Moreover, to keep the benefits of embodiment (Borrego et al., 2019), games should somehow integrate the avatar of the patient’s upper limb. This is why existing studies typically involve driving or navigation tasks: for instance, destroying asteroids using the left or right hand (Vourvopoulos et al., 2016) or rowing boats while trying to collect flags (Vourvopoulos et al., 2019). Existing gamified BCI solutions have mostly been tested with volunteers who had not had a stroke, so there is a lack of data on actual patients. Little is known about how introducing external stimuli, such as game elements other than the avatar’s limb, affects the efficacy of the training activity.

In this paper, we present a preliminary experimental study on gamified BCI post-stroke functional rehabilitation of the upper limbs. The goal of the study is to analyze how gamification affects the efficacy of the treatment and the patients’ experience.

The BCI system used in this study is recoveriX® (g.tec medical engineering GmbH, Austria). The system analyzes EEG brain signals and provides multimodal feedback through a virtual reality avatar of the upper limbs and proprioceptive feedback through FES (Irimia et al., 2016, 2017; Cho et al., 2016). The EEG caps were equipped with 16 active electrodes (g.LADYbird or g.Scarabeo, g.tec medical engineering GmbH) located according to the international 10/10 system (an extension of the 10/20 system): FC5, FC1, FCz, FC2, FC6, C5, C3, C1, Cz, C2, C4, C6, CP5, CP1, CP2, CP6. A reference electrode was placed on the right earlobe and a ground electrode at position Fpz.

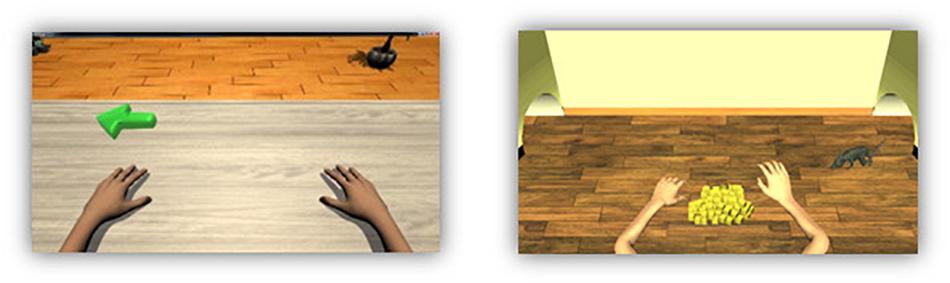

The game was developed on top of this system with two main requirements. First, it could not alter the pace of the rehabilitation. Second, to avoid altering the user’s sense of identification with the virtual forearm, the game could not modify the gesture of the avatar. Under these constraints, the narrative was restricted to a game in which the only action of the avatar was raising and lowering the wrist. Moreover, to make the virtual situation as similar as possible to the real one, we avoided driving-like actions that imply virtual navigation of the avatar. We also wanted to provide feedback on both the current exercise and the progress of the training stage so far. The resulting game asks the user to compete with a rat to protect food. Figure 1 shows the “standard” avatar and the new game appearance. At the beginning of the session, 80 pieces of cheese (one for each exercise) are placed between the two virtual arms. At each exercise, a rat appears from the right or left corner of the room (the side of the wrist that must move) and stands near the pile of cheese during the cue sub-stage. In the feedback sub-stage, the game receives a stream of Boolean events indicating whether the mental image is correct. The avatar’s hand moves accordingly, and the FES is activated; when an event is incorrect, both the visual feedback and the electrical stimulation are disabled. In the relax sub-stage, when the virtual arm lowers, the rat runs away empty-handed if five consecutive events were classified as correct; otherwise, it takes a piece of cheese. The size of the pile is thus an indicator of the overall progress of the training stage. In addition, a scoring panel was added to reinforce the user’s awareness of their performance. This panel could be deactivated, shown intermittently, or displayed constantly. The game was implemented with Unity and connected to recoveriX®, replacing the non-gamified version. It is available on request from the corresponding author by email.

Figure 1. Standard avatar and new game appearance. On the left is the avatar used in the recoveriX system; the green arrow indicates the hand with which the movement should be performed. On the right is the new animated game; both arms are in the same position as in the standard avatar. In front of the virtual subject are 80 pieces of cheese that the user should try to keep. The rat indicates which hand should move.
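The trial logic of the game can be sketched as a small state machine. This is a minimal Python illustration under stated assumptions, not the Unity implementation: `CheeseGame`, `resolve_trial`, and `longest_streak` are hypothetical names, the "five consecutive correct events" rule is read as "at least one run of five correct events during the feedback sub-stage", and the +1 score increments for the user (Jasper) and for the rat are assumed.

```python
from dataclasses import dataclass
from typing import Sequence

N_CHEESE = 80        # one piece of cheese per trial, as described in the article
REQUIRED_STREAK = 5  # consecutive correct events needed to save the cheese


def longest_streak(events: Sequence[bool]) -> int:
    """Return the length of the longest run of consecutive True events."""
    best = current = 0
    for correct in events:
        current = current + 1 if correct else 0
        best = max(best, current)
    return best


@dataclass
class CheeseGame:
    """Hypothetical model of the game state (not the Unity code)."""
    cheese: int = N_CHEESE
    player_score: int = 0  # shown as "Jasper" on the scoring panel (assumed +1 per saved piece)
    rat_score: int = 0     # assumed +1 per stolen piece

    def resolve_trial(self, events: Sequence[bool]) -> bool:
        """Resolve the relax sub-stage; return True if the rat leaves empty-handed."""
        if longest_streak(events) >= REQUIRED_STREAK:
            self.player_score += 1
            return True
        self.cheese = max(self.cheese - 1, 0)  # the rat takes one piece
        self.rat_score += 1
        return False


if __name__ == "__main__":
    game = CheeseGame()
    game.resolve_trial([True] * 6)         # streak of 6: the cheese is saved
    game.resolve_trial([True, False] * 4)  # no streak of 5: the rat takes a piece
    print(game.cheese, game.player_score, game.rat_score)  # 79 1 1
```

Keeping the rule as a pure function of the event list makes it easy to try other thresholds without touching the rest of the game state.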

Ten stroke patients with upper-limb hemiparesis and six healthy subjects were recruited for this study. The stroke patients were recruited from Institut Guttmann. All participants were volunteers. The inclusion criteria for stroke patients were: (i) residual hemiparesis, (ii) stroke onset at least 4 days before the first assessment, (iii) functional restriction in the upper extremities. Additionally, the following criteria were applied to all participants: (iv) ability to understand written and spoken instructions, (v) stable neurological status, (vi) willingness to participate in the study and to understand and sign the informed consent, (vii) ability to attend meetings. Ethics approval was obtained from the Ethics Committee of Institut Guttmann, Barcelona, Spain. Finally, all participants were informed about the goals of the project and provided written informed consent before participating in the study.

All participants followed the same procedure: control users in the research lab and patients in the rehabilitation institution. They performed two training sessions separated by a minimum of 1 day and a maximum of 2 weeks. Each session was composed of three runs or stages: Calibration (C-S1, C-S2), Training 1 (T1-S1, T1-S2), and Training 2 (T2-S1, T2-S2). Each run was composed of 80 trials (80 movements) and lasted 12 min. There was a rest of about 5 min between stages. Figure 2 describes the timing of each trial. Each movement started with a cue, and 2 s later the system presented an arrow pointing to the movement direction. The participant was instructed to start the MI right after the cue and to sustain it for the next 6 s. During this period, the user had to imagine dorsiflexion of the wrist, and the feedback devices were activated. After the feedback period, the system played a sound to mark the end of the exercise and gave 2 s of rest before the next trial.

The EEG data were band-pass filtered (0.5–30 Hz) to increase the signal-to-noise ratio (SNR) and remove unnecessary components. We also applied a 50 Hz notch filter to reduce line noise. We then created 8 s epochs of EEG data for every trial and divided them into two classes: left and right.
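A minimal SciPy sketch of this preprocessing step follows. The sampling rate (256 Hz), the 4th-order Butterworth design, the notch quality factor Q = 30, and zero-phase (forward-backward) filtering are all assumptions; the article only specifies the 0.5–30 Hz pass band, the 50 Hz notch, and 8 s epochs split into left and right classes. Function names are hypothetical.

```python
import numpy as np
from scipy.signal import butter, filtfilt, iirnotch, sosfiltfilt

FS = 256.0  # assumed sampling rate (Hz); not stated in this section


def preprocess(eeg: np.ndarray, fs: float = FS) -> np.ndarray:
    """Zero-phase 0.5-30 Hz band-pass followed by a 50 Hz notch.

    eeg: array of shape (n_channels, n_samples).
    """
    # Butterworth band-pass (order 4 is an assumption), in SOS form for numerical stability.
    sos = butter(4, [0.5, 30.0], btype="bandpass", fs=fs, output="sos")
    out = sosfiltfilt(sos, eeg, axis=-1)
    # Notch at the 50 Hz line frequency; Q = 30 is an assumed quality factor.
    b, a = iirnotch(50.0, 30.0, fs=fs)
    return filtfilt(b, a, out, axis=-1)


def make_epochs(eeg: np.ndarray, onsets: np.ndarray, fs: float = FS,
                length_s: float = 8.0) -> np.ndarray:
    """Cut 8 s epochs starting at each trial onset (given in samples).

    Returns an array of shape (n_trials, n_channels, n_samples_per_epoch).
    """
    n = int(round(length_s * fs))
    return np.stack([eeg[:, s:s + n] for s in onsets])


def split_by_class(epochs: np.ndarray, labels: np.ndarray) -> dict:
    """Group epochs into the 'left' and 'right' classes."""
    return {c: epochs[labels == c] for c in ("left", "right")}
```

Forward-backward filtering suits offline analysis because it adds no phase delay; an online system would instead need causal filters.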

Each epoch was band-pass filtered (8–30 Hz), and artifact rejection was applied (the same as in the lateralization coefficient). A common spatial patterns (CSP) filter was computed from the current frames and used to obtain four spatially filtered channels from the 16 EEG channels. For every frame, we defined 14 timepoints, 0.5 s apart, from 1.5 to 8 s. For each timepoint, we calculated a set of four features.
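The CSP step can be sketched as the standard two-class generalized eigenvalue problem on class-average covariance matrices, keeping the two filters at each end of the eigenvalue spectrum to obtain four components. This is an illustrative sketch, not the recoveriX code (which is not public): the trace normalization, the 2 + 2 filter selection, and the function names are assumptions, and artifact rejection is left out.

```python
import numpy as np
from scipy.linalg import eigh

# 14 evaluation timepoints, 0.5 s apart, from 1.5 s to 8 s after trial onset
TIMEPOINTS_S = np.arange(1.5, 8.01, 0.5)


def trial_covariance(x: np.ndarray) -> np.ndarray:
    """Trace-normalised spatial covariance of one epoch (n_channels, n_samples)."""
    c = np.cov(x)
    return c / np.trace(c)


def fit_csp(epochs: np.ndarray, labels: np.ndarray, n_filters: int = 4) -> np.ndarray:
    """Fit two-class CSP filters; returns W with shape (n_filters, n_channels).

    epochs: (n_trials, n_channels, n_samples), already band-passed to 8-30 Hz.
    """
    classes = np.unique(labels)
    if classes.size != 2:
        raise ValueError("CSP needs exactly two classes")
    c_a = np.mean([trial_covariance(x) for x in epochs[labels == classes[0]]], axis=0)
    c_b = np.mean([trial_covariance(x) for x in epochs[labels == classes[1]]], axis=0)
    # Generalised eigenproblem C_a w = lambda (C_a + C_b) w; eigh sorts lambda ascending.
    _, vecs = eigh(c_a, c_a + c_b)
    half = n_filters // 2
    # Smallest eigenvalues favour class-b variance, largest favour class-a variance.
    picks = np.r_[np.arange(half), np.arange(-half, 0)]
    return vecs[:, picks].T


def apply_csp(w: np.ndarray, epochs: np.ndarray) -> np.ndarray:
    """Project (n_trials, n_channels, n_samples) to (n_trials, n_filters, n_samples)."""
    return np.einsum("fc,tcs->tfs", w, epochs)
```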

For each timepoint, we calculated the variance of each spatially filtered signal over a 1.5 s window. The resulting four features for each timepoint were normalized and log-transformed. Using all the features from all the timepoints and the entire frame collection, we trained a linear discriminant analysis (LDA) classifier.
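The windowed log-variance features can be sketched as below. The article states the 1.5 s window, the 14 timepoints, the normalization, and the logarithm; it does not say where the window sits relative to each timepoint or how the variances are normalized, so this sketch assumes that each window ends at its timepoint and that the four variances are divided by their sum. The sampling rate and the function names are likewise assumptions. `pooled_training_set` shows one reading of "using all the features from all the timepoints": each (trial, timepoint) pair becomes one training sample.

```python
import numpy as np

FS = 256.0                                # assumed sampling rate (Hz)
TIMEPOINTS_S = np.arange(1.5, 8.01, 0.5)  # 14 timepoints, 1.5 s to 8 s
WINDOW_S = 1.5                            # variance window length given in the article


def log_variance_features(csp_signals: np.ndarray, fs: float = FS) -> np.ndarray:
    """Windowed, normalised log-variance features.

    csp_signals: (n_trials, n_components, n_samples) CSP outputs of 8 s epochs.
    Returns an array of shape (n_trials, n_timepoints, n_components).
    Assumption: each 1.5 s window ends at its timepoint.
    """
    win = int(round(WINDOW_S * fs))
    feats = []
    for t in TIMEPOINTS_S:
        end = int(round(t * fs))
        var = csp_signals[:, :, end - win:end].var(axis=-1)
        var = var / var.sum(axis=1, keepdims=True)  # normalise across components
        feats.append(np.log(var))
    return np.stack(feats, axis=1)


def pooled_training_set(features: np.ndarray, labels: np.ndarray):
    """Stack every (trial, timepoint) feature vector into one training set."""
    n_trials, n_tp, n_feat = features.shape
    return features.reshape(n_trials * n_tp, n_feat), np.repeat(labels, n_tp)
```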

The accuracy of the CSP and LDA classifier was assessed with 10-fold cross-validation. In each fold, a classifier was trained on 90% of the frames (training set) and tested on the remaining frames (testing set). This was repeated 10 times, ultimately yielding a mean accuracy for each class (left and right hand) at every timepoint. Finally, for each class, the MI accuracy was calculated as the maximum (Max. Accuracy) or the mean (Mean Accuracy) across all timepoints. The LDA classifier was not modified from the original version (Irimia et al., 2016) to support the gamification pilot. Its code is not publicly available.
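The cross-validation scheme can be sketched with a two-class Fisher LDA written directly in NumPy. Several details are assumptions rather than facts from the article: folds are stratified by class, one LDA is trained per fold on all timepoints pooled, correct predictions are pooled across folds before dividing by the number of trials per class, and the input features are taken as already computed. The article does not say whether the CSP filter is refit inside each fold; refitting it would avoid leaking test data into the spatial filter. All function names are hypothetical.

```python
import numpy as np


def lda_fit(x: np.ndarray, y: np.ndarray, classes: np.ndarray):
    """Two-class Fisher LDA with a pooled covariance and equal priors."""
    x0, x1 = x[y == classes[0]], x[y == classes[1]]
    mu0, mu1 = x0.mean(axis=0), x1.mean(axis=0)
    pooled = ((len(x0) - 1) * np.cov(x0, rowvar=False)
              + (len(x1) - 1) * np.cov(x1, rowvar=False)) / (len(x) - 2)
    w = np.linalg.solve(pooled, mu1 - mu0)
    b = -w @ (mu0 + mu1) / 2.0
    return w, b


def stratified_folds(labels: np.ndarray, k: int, rng: np.random.Generator) -> np.ndarray:
    """Assign each trial to one of k folds, balancing the classes across folds."""
    folds = np.empty(labels.size, dtype=int)
    for c in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == c))
        folds[idx] = np.arange(idx.size) % k
    return folds


def cross_validate(features: np.ndarray, labels: np.ndarray, k: int = 10, seed: int = 0):
    """k-fold CV of a pooled LDA, scored per class and per timepoint.

    features: (n_trials, n_timepoints, n_features); labels: (n_trials,).
    Returns accuracies with shape (2, n_timepoints), one row per class.
    """
    rng = np.random.default_rng(seed)
    classes = np.unique(labels)
    folds = stratified_folds(labels, k, rng)
    _, n_tp, n_feat = features.shape
    correct = np.zeros((classes.size, n_tp))
    for f in range(k):
        train, test = folds != f, folds == f
        # One classifier per fold, trained on all timepoints of the ~90% training trials.
        w, b = lda_fit(features[train].reshape(-1, n_feat),
                       np.repeat(labels[train], n_tp), classes)
        for t in range(n_tp):
            pred = np.where(features[test, t] @ w + b > 0, classes[1], classes[0])
            for ci, c in enumerate(classes):
                mask = labels[test] == c
                correct[ci, t] += np.sum(pred[mask] == c)
    totals = np.array([np.sum(labels == c) for c in classes])[:, None]
    return correct / totals


def summarise(acc: np.ndarray):
    """Max. Accuracy and Mean Accuracy over timepoints, per class."""
    return acc.max(axis=1), acc.mean(axis=1)
```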

The calibration run is used to train the LDA classifier; thus, during this run, the online feedback provided to users is always positive. After the calibration run, all participants were switched to “Training” mode, in which feedback is triggered by the MI in real time. During Training 1, feedback is based on the classifier built after Calibration, and during Training 2 it is based on an enhanced version of the classifier that uses data from the two previous stages. Each session started from scratch; thus, Session 2 did not use the classifier of Session 1.

During both sessions, subjects sat at a table facing the computer screen. They wore headphones to listen to the instructions and sounds.

In the first session, Calibration (C-S1) and Training 1 (T1-S1) were performed without the game, using only the regular avatar, while Training 2 (T2-S1) used the game without any time or score feedback (no feedback). In the second session, all stages used the game: C-S2 with no feedback, T1-S2 showing score and time every ten exercises (intermittent feedback), and T2-S2 showing time and score constantly (constant feedback).

The feedback received by the users is shown in Figure 1. As mentioned, there are two kinds of feedback: time and score. Time is shown as a cheese-shaped clock, and the score is shown as text that distinguishes the user’s score, labeled Jasper, from the rat’s score.

For this study, two variables were analyzed: BCI performance and user experience. BCI performance was studied using the MI accuracy of each run, computed as described above. User experience was assessed with a questionnaire.

The users’ opinions about the game were gathered with a customized version of the System Usability Scale (SUS) composed of eight items rated on a 1–5 Likert scale, where 1 is the worst and 5 the best (see Table 1).

In addition, all participants were asked how often they played video games, on a five-point scale (never, sometimes, often, usually, always), and whether they had previous experience with BCI technology. The answers and all collected data are available in the Git repository: https://github.com/nosepas1/BCI_gamification_data.

Statistical analysis was performed with MATLAB R2017a and a Python script using scipy.stats, NumPy, and pandas. The first step was to compare the baselines of the two groups of participants: age, gender, and MI accuracy. First, the Shapiro–Wilk test (SWT) was performed to assess the normality of the variables. For comparisons between groups (“Healthy” and “Stroke”), the independent-samples t-test was used when normality could be assumed, and the Mann–Whitney U test otherwise.
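The decision rule between the two tests can be sketched with `scipy.stats`. This is an illustration, not the authors’ script: requiring both groups to pass the Shapiro–Wilk test at α = 0.05 before using the t-test is our reading of the description, and `compare_groups` is a hypothetical helper.

```python
import numpy as np
from scipy import stats

ALPHA = 0.05


def compare_groups(a, b, alpha: float = ALPHA):
    """Compare two independent samples (e.g. Healthy vs. Stroke).

    Shapiro-Wilk is run on each group; if neither rejects normality at `alpha`,
    an independent-samples t-test is used, otherwise a two-sided Mann-Whitney U test.
    Returns (test_name, statistic, p_value).
    """
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    _, p_a = stats.shapiro(a)
    _, p_b = stats.shapiro(b)
    if p_a > alpha and p_b > alpha:
        stat, p = stats.ttest_ind(a, b)
        return "t-test", float(stat), float(p)
    stat, p = stats.mannwhitneyu(a, b, alternative="two-sided")
    return "Mann-Whitney U", float(stat), float(p)
```

With groups as small as six and ten subjects, the Shapiro–Wilk test has little power, so a non-significant result is weak evidence of normality; the rule above simply mirrors the procedure as described.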

To analyze the impact of the serious game combined with BCI on the user’s concentration, the MI accuracies of every subject were compared across all game modes; because these are repeated measurements of the same subjects, independence could not be assumed. The selected test was repeated measures ANOVA (Girden, 1992; Norman and Streiner, 2008; Singh et al., 2013; Verma, 2015), which compares results of the same group of participants at different time points. It requires two assumptions: normality (Shapiro–Wilk test, P > 0.05) and sphericity (Mauchly’s test of sphericity, P > 0.05).
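SciPy has no built-in repeated measures ANOVA, so the sketch below computes the one-way version from its sums-of-squares decomposition and implements Mauchly’s test with the usual chi-square approximation. It is not the authors’ MATLAB code; the function names are hypothetical, and the orthonormal contrasts are obtained with `scipy.linalg.null_space`.

```python
import numpy as np
from scipy import stats
from scipy.linalg import null_space


def rm_anova(y):
    """One-way repeated measures ANOVA.

    y: (n_subjects, k_conditions), e.g. MI accuracy per subject and run.
    Returns (F, df_conditions, df_error, p).
    """
    y = np.asarray(y, dtype=float)
    n, k = y.shape
    grand = y.mean()
    ss_cond = n * np.sum((y.mean(axis=0) - grand) ** 2)  # between conditions
    ss_subj = k * np.sum((y.mean(axis=1) - grand) ** 2)  # between subjects
    ss_err = np.sum((y - grand) ** 2) - ss_cond - ss_subj  # residual
    df_cond, df_err = k - 1, (k - 1) * (n - 1)
    f = (ss_cond / df_cond) / (ss_err / df_err)
    return f, df_cond, df_err, stats.f.sf(f, df_cond, df_err)


def mauchly(y):
    """Mauchly's test of sphericity. Returns (W, chi2_stat, df, p)."""
    y = np.asarray(y, dtype=float)
    n, k = y.shape
    p = k - 1
    # k x p orthonormal contrasts, each orthogonal to the vector of ones.
    contrasts = null_space(np.ones((1, k)))
    s = np.cov(y @ contrasts, rowvar=False)  # p x p covariance of the contrasts
    w = np.linalg.det(s) / (np.trace(s) / p) ** p
    correction = 1.0 - (2 * p**2 + p + 2) / (6.0 * p * (n - 1))
    chi2_stat = -(n - 1) * correction * np.log(w)
    df = p * (p + 1) // 2 - 1
    return w, chi2_stat, df, stats.chi2.sf(chi2_stat, df)
```

With the four runs compared in this study (T1-S1, T2-S1, T1-S2, T2-S2), p = 3 and Mauchly’s statistic has p(p + 1)/2 - 1 = 5 degrees of freedom; as a sanity check, `stats.chi2.sf(9.595, 5)` is about 0.088, which matches the P value reported for the sphericity test.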

Finally, a quantitative analysis of the answers in the questionnaire of each participant was carried out.

Six healthy subjects and ten stroke patients were enrolled in the study; seven were female and nine male. The mean age of the healthy group was 35.3 years (SD = 16.0), ranging from 23 to 58 years. The mean age of the stroke group was 55.8 years, ranging from 26 to 79 years. In the Stroke group, four patients had been affected on their right side and six on their left side. The mean time since stroke was 33 months (SD = 22.8); seven patients were in the subacute phase, three in the chronic phase, and none in the acute phase. None of the participants had previous experience with BCIs, except two patients who had used the recoveriX® system years before. Control users had no known previous neurological disorder.

The accuracy obtained in the first training run of the first session (T1-S1) is taken as a baseline reference for each subject. As mentioned above, in run T1-S1 participants used the standard visual feedback with a personalized classifier generated in the calibration run of Session 1 (C-S1). Thus, the accuracy obtained in T2-S1, T1-S2, and T2-S2 is compared with that of T1-S1. Equality of the baselines cannot be assumed, because there is a statistically significant age difference between the groups: the age variable of the healthy group is not normally distributed (SWT: P = 0.022), and the Mann–Whitney U test shows a significant difference between the ages of the two groups (P = 0.031). To assess how much the age difference could influence BCI performance, the correlation between age and maximum classification accuracy (the maximum accuracy of the second run in the first session, T1-S1) was studied. Across all participants, both age and MI accuracy follow a normal distribution (SWT: age P = 0.075; accuracy P = 0.096). The Pearson correlation test shows no significant correlation between age and accuracy (rho = −0.195, P = 0.505). Thus, MI accuracy can be compared between groups. However, because of the small sample size, no general conclusion can be drawn about the relationship between age and accuracy.

Comparing the accuracy obtained in the first training run, T1-S1 (after system calibration), with an unpaired t-test shows no statistically significant difference in BCI performance between the healthy and stroke groups (|t| = 1.475, P = 0.166; SWT P > 0.05).

To detect differences in accuracy across the visual feedback modalities, the MI accuracy of each run was analyzed using repeated measures ANOVA. All datasets can be considered normally distributed: the Shapiro–Wilk test was not significant at the α = 0.05 level for any of them. Mauchly’s test of sphericity indicated that the assumption of sphericity had not been violated, χ2(5) = 9.595, P = 0.088.

Table 2 shows the results of the accuracy comparison using repeated measures ANOVA. The multiple comparisons showed no statistically significant differences in accuracy across the gamified conditions with different visual feedback modalities (see Figure 3 and Table 3). The same comparison was repeated using only the data from the healthy group or from the stroke group, and no significant differences were detected.

Although none of the ANOVA tests showed significant differences, inspection of Figure 3 suggests a possible trend toward higher mean accuracy across sessions. However, no conclusive results can be drawn because of the small number of subjects.

User satisfaction was assessed after the last session with a questionnaire of eight questions rated from 1 to 5. To quantify the results, we computed each participant’s average score and the average score of each question.
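The two averages can be sketched as follows, assuming the answers are stored as a participants × questions array of 1–5 ratings. The function name is hypothetical, and the use of the sample SD (ddof = 1) is an assumption.

```python
import numpy as np


def summarise_questionnaire(scores) -> dict:
    """Summarise 1-5 Likert answers.

    scores: (n_participants, n_questions), eight questions per participant here.
    """
    scores = np.asarray(scores, dtype=float)
    if scores.min() < 1 or scores.max() > 5:
        raise ValueError("Likert ratings must lie between 1 and 5")
    per_participant = scores.mean(axis=1)  # individual score
    return {
        "per_participant": per_participant,
        "group_mean": float(per_participant.mean()),
        "group_sd": float(per_participant.std(ddof=1)),  # sample SD (assumed)
        "per_question": scores.mean(axis=0),  # average of each question
    }
```

Because every participant answers all eight items, the mean of the per-participant scores equals the mean of the per-question averages. The group figures reported below are also mutually consistent: (10 × 4.23 + 6 × 4.15) / 16 = 4.20.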

Table 4 shows the questionnaire results by group and gaming experience. The first column shows the group name, the second the group size, the third the average total questionnaire score (computed from the per-question averages), and the next eight columns the group’s average result for each question. Figure 4 shows the questionnaire results of each group.

All participants gave high scores to all questions: overall user satisfaction was 4.20 out of 5 (SD = 0.45). The stroke group gave higher scores, 4.23 (SD = 0.35), than the healthy group, 4.15 (SD = 0.63). In general, the best-rated aspect of the game is the clarity of its rules (Q4). The healthy group also highlighted its ease of use (Q3). The lowest-rated aspect is how fun the game is (Q1). In the informal debriefing after the sessions, users said they were pleased with the game but suggested some enhancements, such as introducing variations in the animation of the rat, which is always the same, and adding new auditory stimuli. Attention and somnolence are a recurring problem in stroke patients; this is not always discussed but should be considered in the design of experiments. In this case, patients agreed that the activity had an appropriate duration to avoid these problems. Stress was not quantitatively measured. However, in the debriefing session, patients did not mention any change in fatigue or stress levels when using the gamified version of the training.

Finally, no significant correlation was found between the questionnaire score and accuracy.

The objective of this experiment was to explore how the proposed serious game can affect users’ concentration and performance of a BCI system for stroke functional rehabilitation.

Although the Healthy and Stroke groups presented significant differences in age, this imbalance does not seem to affect the analysis, because there is no linear correlation between age and accuracy (Pearson’s test; rho = −0.195, P = 0.505). However, the number of subjects is too small to generalize this conclusion; in future experiments with more subjects, ages will be stratified. Furthermore, there were no differences in MI accuracy between the Healthy group and the Stroke group (t-test, |t| = 1.475, P = 0.166).

BCI performance was studied through a multiple comparison analysis of the MI accuracy calculated after each run with the different avatar versions. The repeated measures ANOVA showed no significant differences in either the mean or the maximum accuracy (Tables 2, 3 and Figure 3). These first results suggest that combining BCI with the new gamified avatar has no negative effect on BCI performance. However, as shown in Figure 3A, the point clouds of T1-S2 and T2-S2 are slightly higher than that of T1-S1 (the MI accuracy baseline). This difference is more evident in the mean accuracy plot (Figure 3C). One plausible explanation is that the scoring window encourages the user to focus more on the MI task.

The questionnaire results show a high level of user satisfaction (see Figure 4). On the one hand, ease of use and clarity of the rules are the best-scored features in both groups. Notably, previous gaming experience was not associated with a better user experience or better BCI performance. All users also reported that the new avatar helped them improve their concentration (Q6) and reduced their boredom (Q7), which is consistent with the trend observed in Figure 3C. On the other hand, all participants gave the lowest scores to the entertainment level (Q1) and visual attractiveness (Q2). As observed in previous experiments (Lledó et al., 2016), visual attractiveness is a desirable objective, but patients sometimes prefer simpler versions of a task. Future versions of the game could therefore offer alternative visual appearances. The difficult part is to make the game more entertaining without increasing the cognitive load of the task and, consequently, decreasing BCI performance. Hence, other narrative threads could be tested and stratified into levels to assess how a story affects users’ performance and motivation. Moreover, the difficulty of the game could be adapted to the user’s performance: the better the results, the higher the threshold for a correct response.

The main limitations of the study are the small number of subjects and the age difference between groups. In addition, more sessions are needed to evaluate whether the results observed in this pilot study generalize. Furthermore, new variables could be considered, such as stress and fatigue, which are frequent in this type of rehabilitation. Finally, some emotional variables could be included for comparison with user performance.

Nevertheless, combining games with BCI therapy seems a promising step toward improving user experience, increasing adherence to treatment, and improving patients’ functional outcomes.

A game-based rehabilitation instrument has been developed as an improvement of the existing recoveriX system for post-stroke upper-limb rehabilitation. A pilot study was carried out to test the impact of the game on the rehabilitation process. Sixteen subjects (6 healthy and 10 stroke patients) were recruited to perform two sessions of BCI therapy using different visual feedback modalities. The first run (80 trials) of each session was used to calibrate the system by creating a personal LDA classifier. In the second run of the first session (T1-S1), all participants performed 80 trials using the “standard” VR avatar. In the third run of the first session (T2-S1), the participants used a new animated version based on the standard avatar. In the second run of the second session (T1-S2), users trained with the new avatar combined with a pop-up window that briefly showed the score and time every ten trials. In the third run of the second session (T2-S2), the appearance was the same as in T1-S2, but the score window was displayed constantly. The objective of these last two runs was to add further stimuli intended to improve concentration without harming MI accuracy.

The results show no significant difference in baseline MI accuracy between the healthy group and the stroke group. Moreover, there were no significant differences between training with and without the game, nor between the different forms of scoring feedback. Thus, the added scoring and time stimuli do not appear to affect performance. Concerning users’ opinions, they were all positive about the game’s level of entertainment, clarity of rules, narrative, and visual attractiveness. Participants reported that the game did not hinder them in creating a mental image and that they felt less bored. Finally, there was a consensus about the interest of gamifying stroke rehabilitation sessions. The main limitations of this study are the small sample size and the small number of rehabilitation sessions. However, the results are encouraging to continue investigating how to bring gamification elements to post-stroke rehabilitation.

The datasets generated for this study are available on request to the corresponding author.

The studies involving human participants were reviewed and approved by the Institut Guttmann ethics committee. The patients/participants provided their written informed consent to participate in this study. Written, informed consent was obtained from the individuals for the publication of any potentially identifiable images or data included in this article.

MC-C contributed to the implementation and execution of the methodology and writing the manuscript. MS-R collaborated in the design of the experiment, the analysis of the results, and writing the manuscript. JR-S participated in the design of the experiment, the setup of the brain computer interface, and the integration of the game. EO and MO collaborated in the organization of the pilot study with patients. EO reviewed the manuscript. RO and CG contributed to the design of the BCI technology. DT supervised the research and contributed to the design of the game, the analysis of the results, and writing the manuscript. All authors contributed to the article and approved the submitted version.

MC-C, MS-R, JR-S, and RO were employed by the company g.tec medical engineering Spain S.L. CG is CEO of the company g.tec medical engineering Spain S.L. and g.tec medical engineering GmbH.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnins.2020.00882/full#supplementary-material

Alimardani, M., Nishio, S., and Ishiguro, H. (2016). The importance of visual feedback design in BCIs; from embodiment to motor imagery learning. PLoS One 11:e0161945. doi: 10.1371/journal.pone.0161945

Ang, K. K., Chua, K. S. G., Phua, K. S., Wang, C., Chin, Z. Y., Kuah, C. W. K., et al. (2015). A randomized controlled trial of EEG-based motor imagery brain-computer interface robotic rehabilitation for stroke. Clin. EEG Neurosci. 46, 310–320. doi: 10.1177/1550059414522229

Bermúdez-Badia, S., and Cameirão, M. S. (2012). The neurorehabilitation training toolkit (NTT): a novel worldwide accessible motor training approach for at-home rehabilitation after stroke. Stroke Res. Treat. 2012:802157.

Bermúdez-Badia, S., Fluet, G. G., Llorens, R., and Deutsch, J. E. (2016). “Virtual reality for sensorimotor rehabilitation post stroke: design principles and evidence,” in Neurorehabilitation Technology, eds D. Reinkensmeyer, and V. Dietz, (Cham: Springer), 573–603. doi: 10.1007/978-3-319-28603-7_28

Borrego, A., Latorre, J., Alcañiz, M., and Llorens, R. (2019). Embodiment and presence in virtual reality after stroke. A comparative study with healthy subjects. Front. Neurol. 10:1061. doi: 10.3389/fneur.2019.01061

Burke, J. W., McNeill, M. D. J., Charles, D. K., Morrow, P. J., Crosbie, J. H., and McDonough, S. M. (2009). Optimising engagement for stroke rehabilitation using serious games. Vis. Comput. 25:1085. doi: 10.1007/s00371-009-0387-4

Cervera, M. A., Soekadar, S. R., Ushiba, J., Millán, J. D. R., Liu, M., Birbaumer, N., et al. (2018). Brain-computer interfaces for post-stroke motor rehabilitation: a meta-analysis. Ann. Clin. Transl. Neurol. 5, 651–663. doi: 10.1002/acn3.544

Chavarriaga, R., Fried-Oken, M., Kleih, S., Lotte, F., and Scherer, R. (2017). Heading for new shores! Overcoming pitfalls in BCI design. Brain Comput. Interfaces 4, 60–73. doi: 10.1080/2326263x.2016.1263916

Cho, W., Sabathiel, N., Ortner, R., Lechner, A., Irimia, D. C., Allison, B. Z., et al. (2016). Paired associative stimulation using brain-computer interfaces for stroke rehabilitation: a pilot study. Eur. J. Transl. Myol. 26:6132.

Donkor, E. S. (2018). Stroke in the 21st century: a snapshot of the burden, epidemiology, and quality of life. Stroke Res. Treat. 2018:3238165.

Hamari, J., Koivisto, J., and Sarsa, H. (2014). “Does gamification work? - A literature review of empirical studies on gamification,” in Proceedings of the 2014 47th Hawaii International Conference on System Sciences, (Piscataway, NJ: IEEE), 3025–3034.

Hanus, M. D., and Fox, J. (2015). Assessing the effects of gamification in the classroom: a longitudinal study on intrinsic motivation, social comparison, satisfaction, effort, and academic performance. Comput. Educ. 80, 152–161. doi: 10.1016/j.compedu.2014.08.019

Irimia, D., Sabathiel, N., Ortner, R., Poboroniuc, M., Coon, W., Allison, B. Z., et al. (2016). “recoveriX: a new BCI-based technology for persons with stroke,” in Proceedings of the 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), (Piscataway, NJ: IEEE), 1504–1507.

Irimia, D. C., Cho, W., Ortner, R., Allison, B. Z., Ignat, B. E., Edlinger, G., et al. (2017). Brain-computer interfaces with multi-sensory feedback for stroke rehabilitation: a case study. Artif. Organs 41, E178–E184.

Jeunet, C., Jahanpour, E., and Lotte, F. (2016). Why standard brain-computer interface (BCI) training protocols should be changed: an experimental study. J. Neural Eng. 13:036024. doi: 10.1088/1741-2560/13/3/036024

Kosmyna, N., and Lécuyer, A. (2017). Designing guiding systems for brain-computer interfaces. Front. Hum. Neurosci. 11:396. doi: 10.3389/fnhum.2017.00396

Lledó, L. D., Díez, J. A., Bertomeu-Motos, A., Ezquerro, S., Badesa, F. J., Sabater-Navarro, J. M., et al. (2016). A comparative analysis of 2D and 3D tasks for virtual reality therapies based on robotic-assisted neurorehabilitation for post-stroke patients. Front. Aging Neurosci. 8:205. doi: 10.3389/fnagi.2016.00205

Llorens, R., Noé, E., Naranjo, V., Borrego, A., Latorre, J., and Alcañiz, M. (2015). Tracking systems for virtual rehabilitation: objective performance vs. subjective experience. A practical scenario. Sensors 15, 6586–6606. doi: 10.3390/s150306586

Lotte, F., Larrue, F., and Mühl, C. (2013). Flaws in current human training protocols for spontaneous brain-computer interfaces: lessons learned from instructional design. Front. Hum. Neurosci. 7:568. doi: 10.3389/fnhum.2013.00568

McFarland, D. J., Miner, L. A., Vaughan, T. M., and Wolpaw, J. R. (2000). Mu and beta rhythm topographies during motor imagery and actual movements. Brain Topogr. 12, 177–186.

Neuper, C., Müller-Putz, G. R., Scherer, R., and Pfurtscheller, G. (2006). Chapter 25 Motor imagery and EEG-based control of spelling devices and neuroprostheses. Prog. Brain Res. 159, 393–409. doi: 10.1016/s0079-6123(06)59025-9

Norman, G., and Streiner, D. (2008). Biostatistics: The Bare Essentials, 3rd Edn. Toronto: McGraw-Hill Education, 200.

Petit, D., Gergondet, P., Cherubini, A., and Kheddar, A. (2015). “An integrated framework for humanoid embodiment with a BCI,” in Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), (Piscataway, NJ: IEEE), 2882–2887.

Pichiorri, F., Morone, G., Petti, M., Toppi, J., Pisotta, I., Molinari, M., et al. (2015). Brain-computer interface boosts motor imagery practice during stroke recovery. Ann. Neurol. 77, 851–865. doi: 10.1002/ana.24390

Pindus, D. M., Mullis, R., Lim, L., Wellwood, I., Rundell, A. V., Aziz, N. A. A., et al. (2018). Stroke survivors’ and informal caregivers’ experiences of primary care and community healthcare services – A systematic review and meta-ethnography. PLoS One 13:e0192533. doi: 10.1371/journal.pone.0192533

Quandt, F., and Hummel, F. C. (2014). The influence of functional electrical stimulation on hand motor recovery in stroke patients: a review. Exp. Transl. Stroke Med. 6:9.

Remsik, A., Young, B., Vermilyea, R., Kiekoefer, L., Abrams, J., Evander-Elmore, S., et al. (2016). A review of the progression and future implications of brain-computer interface therapies for restoration of distal upper extremity motor function after stroke. Expert Rev. Med. Devices 13, 445–454. doi: 10.1080/17434440.2016.1174572

Richter, G., Raban, D. R., and Rafaeli, S. (2015). “Studying gamification: the effect of rewards and incentives on motivation,” in Gamification in Education and Business, eds T. Reiners, and L. C. Wood, (Cham: Springer), 21–46. doi: 10.1007/978-3-319-10208-5_2

Singh, V., Rana, R. K., and Singhal, R. (2013). Analysis of repeated measurement data in the clinical trials. J. Ayurveda Integr. Med. 4:77. doi: 10.4103/0975-9476.113872

Škola, F., Tinková, S., and Liarokapis, F. (2019). Progressive training for motor imagery brain-computer interfaces using gamification and virtual reality embodiment. Front. Hum. Neurosci. 13:329. doi: 10.3389/fnhum.2019.00329

van Dokkum, L. E. H., Ward, T., and Laffont, I. (2015). Brain computer interfaces for neurorehabilitation: its current status as a rehabilitation strategy post-stroke. Ann. Phys. Rehabil. Med. 58, 3–8. doi: 10.1016/j.rehab.2014.09.016

Vourvopoulos, A., Ferreira, A., and Badia, S. B. I. (2016). “NeuRow: an immersive VR environment for motor-imagery training with the use of Brain-Computer Interfaces and vibrotactile feedback,” in Proceedings of the International Conference on Physiological Computing Systems, Vol. 2, (Portugal: SCITEPRESS), 43–53.

Vourvopoulos, A., Pardo, O. M., Lefebvre, S., Neureither, M., Saldana, D., Jahng, E., et al. (2019). Effects of a brain-computer interface with virtual reality (VR) neurofeedback: a pilot study in chronic stroke patients. Front. Hum. Neurosci. 13:210. doi: 10.3389/fnhum.2019.00210

Zhang, J. J. Q., Fong, K. N. K., Welage, N., and Liu, K. P. Y. (2018). The activation of the mirror neuron system during action observation and action execution with mirror visual feedback in stroke: a systematic review. Neural Plast. 2018:2321045.

Keywords: brain computer interface, gamification, stroke, rehabilitation, functional rehabilitation, serious game

Citation: de Castro-Cros M, Sebastian-Romagosa M, Rodríguez-Serrano J, Opisso E, Ochoa M, Ortner R, Guger C and Tost D (2020) Effects of Gamification in BCI Functional Rehabilitation. Front. Neurosci. 14:882. doi: 10.3389/fnins.2020.00882

Received: 13 February 2020; Accepted: 28 July 2020;

Published: 21 August 2020.

Edited by:

Nicolas Garcia-Aracil, Miguel Hernández University of Elche, Spain

Reviewed by:

Xiaoli Li, Beijing Normal University, China

Copyright © 2020 de Castro-Cros, Sebastian-Romagosa, Rodríguez-Serrano, Opisso, Ochoa, Ortner, Guger and Tost. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Martí de Castro-Cros, marti.de.castro@upc.edu

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
