- ¹ Brain & Mind Research Center, Nagoya University, Nagoya, Japan

- ² Neuroimaging and Informatics Group, National Center for Geriatrics and Gerontology, Obu, Japan

- ³ Graduate School of Engineering, Nagoya Institute of Technology, Nagoya, Japan

- ⁴ Department of Radiology, Osaka University Graduate School of Dentistry, Osaka, Japan

In this study, we investigated how dynamic changes in brain activation during neurofeedback training affect the classification of the brain states associated with the target tasks. We hypothesized that ongoing activation patterns could change during a neurofeedback session due to learning effects and, in the process, could affect the performance of brain state classifiers trained on data obtained before the session. Using a motor imagery paradigm, we then examined an incremental training approach in which classifiers were continuously updated to account for these activation changes. Our results supported the hypothesis that neurofeedback training is associated with dynamic changes in brain activation, characterized by an initially more widespread activation followed by a more focused and localized activation pattern. By updating the trained classifiers after each feedback run, we achieved a significant improvement in classifying the brain states associated with the target motor imagery tasks. These findings suggest the importance of accounting for brain activation changes during neurofeedback in order to provide participants with more reliable and accurate feedback, which is critical for an effective neurofeedback application.

Introduction

Real-time functional magnetic resonance imaging (fMRI), coupled with machine learning algorithms, has enabled the real-time identification of different brain states during fMRI scans (LaConte et al., 2007; LaConte, 2011; Shibata et al., 2011; Sitaram et al., 2011; Bagarinao et al., 2018). Using support vector machines (SVM), a type of supervised machine learning algorithm, LaConte et al. (2007) demonstrated the first real-time decoding of brain states corresponding to left and right button presses. In the same study, they also demonstrated the classifier's ability to decode other cognitive and emotional states, albeit in a small number of participants. Sitaram et al. (2011) later extended this approach to the online classification and feedback of multiple emotional states. Shibata et al. (2011) used sparse logistic regression to decode target activation patterns from localized brain regions and used neurofeedback based on this decoding to induce perceptual learning.

Recently, we used multivariate pattern analysis with SVM to demonstrate the importance of feedback information in improving volitional recall of brain activation patterns during motor imagery training (Bagarinao et al., 2018). Specifically, we examined the effect of neurofeedback on recalling activation patterns associated with motor imagery tasks. For this purpose, participants underwent extended motor imagery training consisting of two scanning sessions, one with feedback and the other without. Consistency in recalling motor-imagery-relevant activation patterns was assessed using SVM. The results showed that with feedback, participants recalled relevant activation patterns significantly better than without feedback. For the session with feedback, we used data from an initial scan to train SVMs, which were then applied in the subsequent feedback runs (LaConte, 2011). We observed that the SVMs' classification performance tended to decrease with each successive feedback run.

One of the implicit assumptions of brain state classification with supervised learning algorithms is that the measured system (i.e., the brain) is stationary (Vapnik, 1999; Hastie et al., 2009). However, in most neurofeedback studies, the goal of training is for participants to learn the target tasks. This can take the form of up- or down-regulating activity in circumscribed brain regions (DeCharms et al., 2004; deCharms et al., 2005; Caria et al., 2007; Zotev et al., 2011, 2018; Hamilton et al., 2016; Sherwood et al., 2016), inducing specific patterns of brain activity (Shibata et al., 2011; Amano et al., 2016; Cortese et al., 2016, 2017; Koizumi et al., 2016), or enhancing connectivity between regions (Koush et al., 2013, 2017; Kim et al., 2015). Earlier studies have shown that neurofeedback training can change functional brain networks (Haller et al., 2013) and can alter the connectivity profiles of specific brain regions, for example, the right inferior frontal gyrus (Rota et al., 2011) and the insular cortex (Lee et al., 2011). All of these imply dynamic changes in brain activity as participants learn the target tasks, which runs counter to the stationarity assumption.

For neurofeedback to be effective, the feedback information also needs to be reliable and representative of the ongoing brain activity or activation pattern that participants are meant to control volitionally. Otherwise, the feedback signal will not help participants self-regulate their brain activity. This is clearly seen in neurofeedback studies that used sham controls (deCharms et al., 2005; Rota et al., 2009; Caria et al., 2010; Zotev et al., 2018). Under the sham condition, the feedback signal provided to the participants does not reflect their actual activation, and these studies have shown that the desired effect is usually not achieved, underscoring the importance of accurate feedback information for effective neurofeedback training. To account for activation patterns that change with learning and to continue providing relevant feedback, the system itself must adapt as training progresses.

In this study, we hypothesized that during neurofeedback training, activation patterns could change dynamically as participants learned to perform the target tasks. To account for these changes, we examined whether continuously updating the trained classifiers after every feedback scan could improve classification performance and thus provide more accurate feedback to the participants. For this, we used a brain–machine interface (BMI) system that combined a motor imagery paradigm with real-time fMRI-based neurofeedback (Bagarinao et al., 2018). Motor imagery, a covert cognitive process in which an action is mentally simulated but not actually performed (Grèzes and Decety, 2000; Hétu et al., 2013), was chosen because of its potential as an effective neurorehabilitation tool for improving motor function (Jackson et al., 2001; Zimmermann-Schlatter et al., 2008; Malouin and Richards, 2013). We used linear SVMs to classify brain states associated with the different motor imagery tasks and evaluated their performance during feedback runs.

Materials and Methods

Participants

We recruited 30 healthy young participants (15 males and 15 females) aged 20 to 25 years (mean age = 21.7 years, standard deviation = 1.3 years). None of the participants had a history of neurological or psychiatric disorders; all were right-handed, as assessed by a handedness inventory for Japanese individuals (Hatta and Hotta, 2008), and all had a Mini-Mental State Examination score greater than 26. This study was approved by the Institutional Review Board of the National Center for Geriatrics and Gerontology of Japan. All participants gave written informed consent before joining the study.

Experimental Paradigm and Tasks

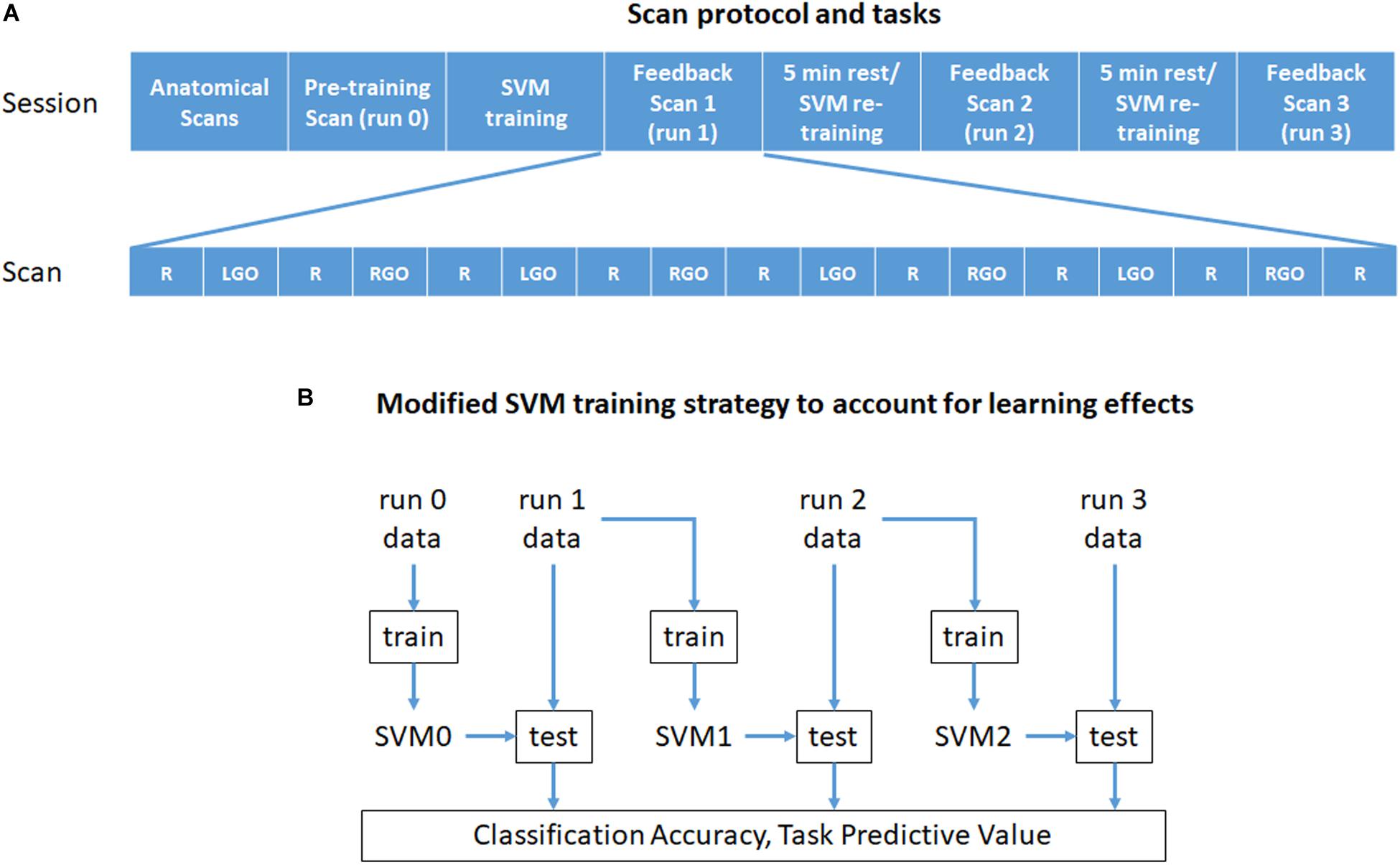

All participants underwent real-time fMRI scanning at the National Center for Geriatrics and Gerontology in Japan. The outline of the experimental protocol and tasks is given in Figure 1A. Each scanning session consisted of the following scans: (1) an anatomical localizer scan, (2) a T1-weighted high-resolution anatomical scan, and (3) four motor imagery task fMRI scans (runs 0 to 3). Each task fMRI scan consisted of nine rest (R) blocks and eight task blocks, each lasting 30 s. The task blocks comprised four blocks of imagined left hand gripping and opening (LGO) and four blocks of imagined right hand gripping and opening (RGO). No feedback was provided during the first motor imagery scan (run 0), whereas feedback signals were given during the remaining three scans (runs 1–3). Between feedback scans, participants were given 5 min of rest.

Figure 1. Outline of (A) the experiment protocol and tasks and (B) the modified training strategy used in this study. Each scan block in (A) is 30 s long. R, rest; LGO, imagined left hand gripping and opening; RGO, imagined right hand gripping and opening; SVM, support vector machine.
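As a sanity check on the timing, the block design can be expanded into one label per acquired volume. The sketch below is a minimal illustration under an assumed ordering: a rest block precedes every task block and the run ends with rest, and LGO and RGO alternate starting with LGO. The actual task order is not stated here, so `build_labels` and its default ordering are hypothetical.

```python
TR_S = 2.0         # repetition time in seconds (Imaging Parameters)
BLOCK_S = 30.0     # every rest and task block lasts 30 s
N_TASK_BLOCKS = 8  # four LGO blocks + four RGO blocks per run


def build_labels(task_order=None):
    """Return one label ('R', 'LGO' or 'RGO') per acquired volume.

    Hypothetical ordering: a rest block precedes every task block and the
    run ends with rest (R T R T ... T R); unless task_order is given,
    LGO and RGO alternate starting with LGO.
    """
    if task_order is None:
        task_order = ["LGO", "RGO"] * (N_TASK_BLOCKS // 2)
    vols_per_block = int(BLOCK_S / TR_S)  # 15 volumes per block
    labels = ["R"] * vols_per_block
    for task in task_order:
        labels += [task] * vols_per_block
        labels += ["R"] * vols_per_block
    return labels


labels = build_labels()
print(len(labels))  # 17 blocks x 15 volumes = 255
print(labels.count("R"), labels.count("LGO"), labels.count("RGO"))  # 135 60 60
```

The 255 labels match the total number of functional volumes per scan reported under Imaging Parameters, and 60 volumes per task is the denominator of the per-run task measures used later.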

Participants were also given time to practice the motor imagery tasks outside the scanner. During this practice session, participants were first asked, without time constraints, to imagine gripping and opening their right or left hand to get a sense of the motor imagery tasks. Once they felt comfortable with the imagery tasks, they were asked to actually perform the movements and to remember the physical sensation of these movements as an additional guide for the imagery. Finally, participants performed half of the task protocol, corresponding to two blocks each of LGO and RGO with rest blocks in between, using the same timing (30 s per block) and visual cue presentation at the stimulus computer. This was done to familiarize participants with the actual tasks and timing of the scans. Participants were also instructed to continue performing the same imagery task even if the robot did not respond during the scans.

Imaging Parameters

All scans were acquired using a Siemens Magnetom Trio (Siemens, Erlangen, Germany) 3.0 T MRI scanner with a 12-channel head coil. Anatomical T1-weighted images were acquired using a 3D MPRAGE (Magnetization Prepared Rapid Acquisition Gradient Echo, Siemens) pulse sequence (Mugler and Brookeman, 1990) with the following parameters: repetition time (TR) = 2.53 s, echo time (TE) = 2.64 ms, 208 sagittal slices with 1-mm thickness and a 50% distance factor, field of view (FOV) = 250 mm, 256 × 256 matrix, and voxel size of about 1.0 mm × 1.0 mm × 1.0 mm. Functional images were acquired using a gradient echo (GE) echo planar imaging (EPI) sequence with the following parameters: TR = 2.0 s, TE = 30 ms, flip angle (FA) = 80 degrees, 37 axial slices with 3.0-mm thickness and a 30% distance factor, FOV = 192 mm, 64 × 64 matrix, voxel size = 3.0 mm × 3.0 mm × 3.0 mm, and a total of 255 volumes per scan.
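The nominal voxel sizes and EPI slice geometry follow from simple arithmetic. This is a minimal sketch, assuming the Siemens convention in which the distance factor is the inter-slice gap expressed as a fraction of slice thickness; the function names are hypothetical. Under that convention, the 3.0-mm EPI slices are separated by 0.9-mm gaps, so slice centres lie 3.9 mm apart even though the nominal voxel is 3.0 mm isotropic.

```python
def in_plane_voxel_mm(fov_mm, matrix):
    """Nominal in-plane voxel size: field of view divided by matrix size."""
    return fov_mm / matrix


def slice_spacing_mm(thickness_mm, distance_factor):
    """Centre-to-centre slice spacing, assuming the Siemens convention that
    the gap between slices equals distance_factor * thickness."""
    return thickness_mm * (1.0 + distance_factor)


def slab_coverage_mm(n_slices, thickness_mm, distance_factor):
    """Extent along the slice axis: n slices plus (n - 1) gaps."""
    gap = distance_factor * thickness_mm
    return n_slices * thickness_mm + (n_slices - 1) * gap


epi_voxel = in_plane_voxel_mm(192, 64)          # 3.0 mm
t1_voxel = in_plane_voxel_mm(250, 256)          # ~0.98 mm ("about 1.0 mm")
epi_spacing = slice_spacing_mm(3.0, 0.30)       # 3.9 mm between slice centres
epi_coverage = slab_coverage_mm(37, 3.0, 0.30)  # 37 * 3 + 36 * 0.9 = ~143.4 mm
run_duration_s = 255 * 2.0                      # 255 volumes x TR 2 s = 510 s
```

The 510-s run duration also equals 17 blocks of 30 s each, which is consistent with the nine rest and eight task blocks per scan.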

Neurofeedback Training

For the feedback scans, we used our previously reported BMI system (Bagarinao et al., 2018). The system employed a small humanoid robot (KHR-3V, Kondo Science, Japan) whose arms could be controlled (e.g., raised or lowered) via a USB connection to the real-time analysis system. During a real-time fMRI scan, the system operates as follows. Each acquired image volume is immediately sent to the analysis system for preprocessing. The preprocessed data are then fed into a previously trained SVM for real-time classification. If the target brain activation pattern is identified, the analysis system sends a command (e.g., raise left arm) to the humanoid robot for immediate execution. The robot's action is captured by a video camera, and the live video feed is presented to the participant via a projector.
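The per-volume processing cycle described above can be sketched as a simple loop. This is an illustrative outline only, not the system's actual code: `preprocess`, `classify` and `send_command` are hypothetical callables standing in for the SPM-based preprocessing chain, the trained SVM and the USB link to the robot, and the command string is invented.

```python
from typing import Callable, Iterable, List, Sequence


def run_feedback_loop(
    volumes: Iterable[Sequence[float]],
    preprocess: Callable[[Sequence[float]], Sequence[float]],
    classify: Callable[[Sequence[float]], str],
    send_command: Callable[[str], None],
    target_label: str,
    command: str,
) -> List[str]:
    """Classify each volume as it arrives and trigger the robot on a hit.

    All callables are hypothetical stand-ins: `preprocess` for the
    realignment/normalization/smoothing/masking chain, `classify` for the
    trained SVM, and `send_command` for the USB link to the robot.
    """
    predictions = []
    for volume in volumes:               # one iteration per TR
        features = preprocess(volume)
        label = classify(features)
        predictions.append(label)
        if label == target_label:        # target pattern identified
            send_command(command)        # e.g. raise the left arm
    return predictions


# Toy usage with one-voxel "volumes" and a threshold classifier.
sent: List[str] = []
preds = run_feedback_loop(
    volumes=[[0.2], [0.9], [0.7]],
    preprocess=lambda v: v,
    classify=lambda f: "LGO" if f[0] > 0.5 else "R",
    send_command=sent.append,
    target_label="LGO",
    command="raise_left_arm",   # hypothetical command string
)
print(preds)  # ['R', 'LGO', 'LGO']
print(sent)   # ['raise_left_arm', 'raise_left_arm']
```

Passing the components in as callables keeps the loop itself independent of the imaging software and the robot hardware, which makes it easy to test with stubs as shown.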

In this study, SVMs were trained to classify the different brain states associated with the imagery tasks, and the trained SVMs were used to classify, in real time, the ongoing brain state of the participants during feedback scans. Specifically, during task blocks, each acquired image volume was processed immediately by the analysis system and classified by the trained SVM, and depending on the classification result, a command signal was sent to the humanoid robot. If the SVM classified the volume as task (LGO/RGO) rather than rest, the robot arm corresponding to the imagery task was raised by 11 degrees; otherwise, the arm remained stationary. Thus, during a task block, the robot's arm was raised in 11-degree increments depending on the SVM's output, so that the higher the classification accuracy, the higher the arm was raised. At the end of each task block, the arm was reset to its initial downward position. During rest blocks, the robot stayed still and participants focused their attention on a cross mark positioned on the robot's body. The feedback was designed to be consistent with the task design: because classification was based on rest vs. task, the robot did not move when the classification was incorrect (i.e., the SVM predicted rest during a task block) and moved when it was correct.
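The arm update rule implies that the arm's final angle in a task block is 11 degrees times the number of volumes classified as the task, so it directly encodes the block-level hit rate. A minimal sketch of that rule follows; `arm_trajectory` is a hypothetical helper. With 15 volumes per 30-s block at TR = 2 s, a perfectly classified block would reach 165 degrees; whether the robot's joint range actually allows this is not stated here.

```python
STEP_DEG = 11  # arm raised 11 degrees per volume classified as the task


def arm_trajectory(block_predictions, task_label):
    """Arm angle (degrees above rest position) after each volume of one
    task block. The arm moves only when the prediction matches the task;
    the angle starts from 0 because the arm is reset after every block."""
    angle = 0
    trajectory = []
    for pred in block_predictions:
        if pred == task_label:
            angle += STEP_DEG
        trajectory.append(angle)
    return trajectory


# 15 volumes per 30-s block (TR = 2 s); 12 of them classified as LGO.
preds = ["LGO"] * 10 + ["R"] * 3 + ["LGO"] * 2
final_angle = arm_trajectory(preds, "LGO")[-1]
print(final_angle)  # 12 * 11 = 132
```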

Unlike previous approaches, we used the incremental training strategy outlined in Figure 1B to train the SVMs during feedback scans. Specifically, data from run 0 were used to train the initial SVMs (SVM0) to classify rest vs. LGO (R vs. LGO) or rest vs. RGO (R vs. RGO) brain states. The trained SVMs were then used to classify, in real time, the volumes acquired during feedback scan 1 (run 1). After the scan, the SVMs were retrained using only the newly acquired data from run 1; data from run 0 were not included. The retrained SVMs (SVM1) were then used to classify the data in the following feedback scan (run 2). This updating process was repeated until the last feedback scan.
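The difference between the incremental strategy and the conventional fixed-classifier approach lies entirely in which run's data train the model applied to each feedback run. The sketch below isolates that schedule. It is illustrative only: the study used linear SVMs (LIBSVM, C = 1), whereas here the trainer is a pluggable function and the demo swaps in a simple nearest-centroid classifier (explicitly not an SVM) so the example runs with the standard library alone. In Python, a LIBSVM-backed `sklearn.svm.SVC(kernel="linear", C=1.0)` could be wrapped to fit the same `train` signature. The 2-D toy data, in which the task pattern drifts across runs, are invented.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Run = Tuple[List[Sequence[float]], List[int]]   # (feature vectors, labels)
Model = Callable[[Sequence[float]], int]


def evaluate_schedule(runs: List[Run], train: Callable[[Run], Model],
                      update: bool = True) -> Dict[int, float]:
    """Return accuracy on each feedback run (runs[1:]).

    update=True : the model applied to run k was trained on run k-1 only
                  (SVM0 from run 0, SVM1 from run 1, ...).
    update=False: the run-0 model is reused for every feedback run.
    """
    model = train(runs[0])          # initial model from the no-feedback run
    scores = {}
    for k in range(1, len(runs)):
        X, y = runs[k]
        correct = sum(model(x) == t for x, t in zip(X, y))
        scores[k] = correct / len(y)
        if update:
            model = train(runs[k])  # retrain on this run's data only
    return scores


def nearest_centroid(run: Run) -> Model:
    """Stand-in trainer (NOT an SVM): predict the class with the closer mean."""
    X, y = run
    dims = len(X[0])
    means = {}
    for c in set(y):
        members = [x for x, t in zip(X, y) if t == c]
        means[c] = [sum(x[d] for x in members) / len(members) for d in range(dims)]

    def predict(x: Sequence[float]) -> int:
        return min(means, key=lambda c: sum((x[d] - means[c][d]) ** 2
                                            for d in range(dims)))
    return predict


def toy_run(task_point):
    """Two rest volumes at the origin (label 0), two task volumes (label 1)."""
    return ([(0.0, 0.0), (0.0, 0.0), task_point, task_point], [0, 0, 1, 1])


# Invented 2-D data in which the task pattern drifts across runs.
runs = [toy_run((2.0, 0.0)), toy_run((1.5, 1.5)),
        toy_run((0.0, 2.0)), toy_run((0.0, 2.0))]
print(evaluate_schedule(runs, nearest_centroid, update=True))
# {1: 1.0, 2: 1.0, 3: 1.0}
print(evaluate_schedule(runs, nearest_centroid, update=False))
# {1: 1.0, 2: 0.5, 3: 0.5}
```

Setting `update=False` reproduces the conventional train-once scheme; with drifting patterns, its accuracy degrades in later runs while the updated model keeps tracking the most recent pattern.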

For online and real-time image preprocessing, we used Statistical Parametric Mapping (SPM8, Wellcome Trust Center for Neuroimaging, London, United Kingdom) running on Matlab (R2016b, MathWorks, Natick, MA, United States). Data obtained during run 0 were preprocessed immediately after the scan (online preprocessing). The acquired functional images were realigned using the two-pass approach implemented in SPM8. In the first pass, the images were co-registered to the first image in the series using a rigid-body transformation (three translation and three rotation parameters), and the mean functional image, Imean, was computed. In the second pass, the realigned images were co-registered to this mean image. The mean image was also used as the reference when aligning all functional images acquired during feedback scans. After realignment, the participant's T1-weighted image was co-registered to Imean and segmented into component images, including gray matter (GM), white matter (WM), and cerebrospinal fluid (CSF). The segmentation step also generated the transformation from subject space to the Montreal Neurological Institute (MNI) template space. Using this transformation, the realigned functional images were normalized to MNI space, resampled to 3 mm × 3 mm × 3 mm voxels, and smoothed with an 8-mm full-width-at-half-maximum (FWHM) 3D Gaussian kernel. Finally, we applied a whole-brain mask to exclude voxels outside the brain. These preprocessed data were then used to train SVM0. Although normalization is not strictly necessary for single-subject analysis, we performed this step so that results could be easily validated and compared across participants. Normalization also made it easier to use a common whole-brain mask to restrict the SVM analysis to voxels within the brain.
During feedback scans, each functional image was preprocessed immediately after acquisition (real-time preprocessing). The image was first realigned to Imean estimated from run 0, then normalized to MNI space, resampled to 3 mm × 3 mm × 3 mm voxels, smoothed with an 8-mm FWHM 3D Gaussian kernel, masked with the same whole-brain mask used for run 0, and incrementally detrended. The resulting data were used as input to the trained SVMs for real-time classification of the brain's activation pattern. The SVM output was also detrended to correct for possible classifier drift (LaConte et al., 2007).
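The exact incremental detrending model is not specified here, so the following is only one common choice, offered as an assumption: for each voxel (and likewise for the SVM output), a linear trend is re-estimated by ordinary least squares over all samples received so far, using running sums so each update costs O(1), and the fitted value at the current time point is subtracted. `IncrementalDetrender` is a hypothetical class; a real-time system would keep one state per voxel or vectorize over voxels.

```python
class IncrementalDetrender:
    """Remove a linear trend from a stream of samples, one sample at a time.

    Assumption (not necessarily the study's method): the trend is refit by
    ordinary least squares on all samples seen so far, via running sums,
    and the fitted value at the current time point is subtracted.
    """

    def __init__(self):
        self.n = 0
        self.sum_t = 0.0
        self.sum_tt = 0.0
        self.sum_y = 0.0
        self.sum_ty = 0.0

    def update(self, y):
        t = float(self.n)            # time index of this sample (0, 1, 2, ...)
        self.n += 1
        self.sum_t += t
        self.sum_tt += t * t
        self.sum_y += y
        self.sum_ty += t * y
        n = self.n
        denom = n * self.sum_tt - self.sum_t ** 2
        if denom == 0:               # only one sample so far: remove the mean
            return y - self.sum_y / n
        slope = (n * self.sum_ty - self.sum_t * self.sum_y) / denom
        intercept = (self.sum_y - slope * self.sum_t) / n
        return y - (intercept + slope * t)


# Pure linear drift is removed; a late transient survives detrending.
d = IncrementalDetrender()
residuals = [d.update(100.0 + 0.5 * t) for t in range(10)]     # all ~0

d2 = IncrementalDetrender()
with_spike = [d2.update(100.0 + 0.5 * t + (5.0 if t == 9 else 0.0))
              for t in range(10)]                               # last value > 0
```

Because the fitted trend includes the current sample, a genuine change at the newest time point is partly absorbed by the fit; the residual is attenuated but keeps its sign.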

Offline Data Analysis

We also performed offline analyses of the acquired data in SPM12 to generate activation maps associated with the imagery tasks. The T1-weighted images were first segmented into component images, including GM, WM, and CSF, using SPM's unified segmentation approach (Ashburner and Friston, 2005), which also yielded the bias-corrected anatomical image and the transformation from subject space to MNI space. For the functional images, the first 5 volumes were discarded to allow the signal to reach a steady state. The remaining images were realigned to the mean functional image, co-registered to the bias-corrected anatomical image, normalized to MNI space using the transformation obtained from the segmentation step, resampled to 2 mm × 2 mm × 2 mm voxels, and spatially smoothed with an 8-mm FWHM 3D Gaussian filter.

Using the preprocessed functional images, we generated activation maps for each imagery task for each participant. Each task (LGO/RGO) was modeled with a box-car function convolved with SPM's canonical hemodynamic response function, and the six estimated realignment parameters were included as covariates of no interest to account for head motion. Contrast images were computed for the LGO and RGO tasks and for the comparison between the two tasks (LGO > RGO). One-sample t-tests of the resulting contrast maps were performed to generate group-level activation maps. All statistical maps were corrected for multiple comparisons at the cluster level (p < 0.05, family-wise error correction, FWEc) with a cluster-defining threshold (CDT) of p = 0.001, as implemented in SPM12. To test our hypothesis of changing activation patterns during feedback scans, we performed a one-way repeated-measures analysis of variance (ANOVA) on each participant's contrast images from feedback runs 1 to 3 for both LGO and RGO tasks, followed by post hoc paired-sample t-tests between pairs of feedback runs. We used the Neuromorphometrics atlas available in SPM12 to label cortical areas in the statistical maps, and surface projections of the activation maps were generated using BrainNet Viewer (Xia et al., 2013).
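To make the task regressor concrete, the sketch below builds a box-car and convolves it with a double-gamma HRF. It approximates SPM's default canonical HRF (`spm_hrf`: a response gamma with a 6-s delay minus an undershoot gamma with a 16-s delay, scaled by 1/6, over a 32-s window, normalized to unit sum). As simplifying assumptions, the HRF is sampled directly at `dt` (SPM works at a finer microtime resolution before downsampling), and the convolution is truncated to the box-car length; all function names are hypothetical.

```python
import math


def gamma_pdf(t, shape, scale=1.0):
    """Gamma probability density (0 for t <= 0)."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t / scale) / (math.gamma(shape) * scale ** shape)


def canonical_hrf(dt=1.0, length_s=32.0):
    """Double-gamma HRF approximating SPM's canonical form: response gamma
    (shape 6) minus undershoot gamma (shape 16) / 6, normalized to unit sum."""
    n = int(length_s / dt) + 1
    h = [gamma_pdf(i * dt, 6) - gamma_pdf(i * dt, 16) / 6.0 for i in range(n)]
    total = sum(h)
    return [v / total for v in h]


def convolve_boxcar(boxcar, hrf):
    """Causal convolution, truncated to the length of the box-car."""
    return [sum(boxcar[i - k] * hrf[k] for k in range(min(i + 1, len(hrf))))
            for i in range(len(boxcar))]


hrf = canonical_hrf(dt=2.0)              # sampled at TR = 2 s
boxcar = [0.0] * 15 + [1.0] * 15         # 30 s rest, then 30 s task (15 volumes each)
regressor = convolve_boxcar(boxcar, hrf)
```

The resulting regressor stays at zero during rest, rises with a delay of a few seconds after task onset, and approaches a plateau near 1 by the end of the 30-s block, which is the predicted BOLD time course that the GLM fits to each voxel.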

For more precise and detailed analyses, we also performed offline SVM analyses of the preprocessed data using the same training strategy outlined in Figure 1B. Three SVM classification models were investigated: R vs. LGO, R vs. RGO, and LGO vs. RGO. The third model was added to evaluate how well the imagined movements of the left and right hands could be discriminated. Because feedback training involved only the first two models, the performance of the third model could serve as an indirect measure of imagery task learning: the higher its classification performance, the better participants were able to generate distinct activation patterns for the two tasks, even though the feedback was based solely on rest vs. task (LGO/RGO) classification. To evaluate the first two models, we computed the task predictive value (TPV), defined as the ratio of the number of correctly classified task volumes to the total number of task volumes. Specifically, for the R vs. LGO model, TPV was the ratio of correctly classified LGO volumes to the total number of LGO volumes within the run; TPV for the R vs. RGO model was defined analogously. For the LGO vs. RGO model, we used accuracy, defined as the total number of correctly classified LGO and RGO volumes divided by the total number of LGO and RGO volumes. Rest volumes were excluded from these measures because rest blocks were not monitored during the scan and participants might have practiced the task during rest blocks, which could lead to inaccurate classification. We also investigated the effect of the SVM retraining strategy on the estimated classification measures using a one-way repeated-measures ANOVA.

In all SVM analyses, we used a linear SVM with the regularization parameter C set to its default value of 1, because this value provided robust classification performance on the same classification problem in our previous study (Bagarinao et al., 2018). All analyses were performed in Matlab (R2016b, MathWorks, Natick, MA, United States) using in-house scripts and LIBSVM (Chang and Lin, 2011), a free library for SVMs.
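The two performance measures can be written out directly. Because rest volumes are ignored, TPV is the sensitivity (recall) of the task class, and the LGO vs. RGO accuracy is an ordinary accuracy restricted to task volumes. The helper names and toy label sequences below are hypothetical illustrations.

```python
def task_predictive_value(true_labels, predicted, task):
    """TPV: correctly classified task volumes / all task volumes in the run.

    Rest volumes are ignored, so TPV is the sensitivity (recall) of the task class.
    """
    task_idx = [i for i, t in enumerate(true_labels) if t == task]
    hits = sum(predicted[i] == task for i in task_idx)
    return hits / len(task_idx)


def task_accuracy(true_labels, predicted, tasks=("LGO", "RGO")):
    """Accuracy restricted to task volumes (rest volumes excluded)."""
    idx = [i for i, t in enumerate(true_labels) if t in tasks]
    return sum(predicted[i] == true_labels[i] for i in idx) / len(idx)


# R vs. LGO model: the rest volume misclassified as LGO does not affect TPV.
truth = ["R", "LGO", "LGO", "LGO", "LGO", "R"]
pred = ["LGO", "LGO", "R", "LGO", "LGO", "R"]
tpv = task_predictive_value(truth, pred, "LGO")   # 3 / 4 = 0.75

# LGO vs. RGO model: only LGO and RGO volumes are scored.
truth2 = ["R", "LGO", "LGO", "RGO", "RGO"]
pred2 = ["LGO", "LGO", "RGO", "RGO", "RGO"]
acc = task_accuracy(truth2, pred2)                 # 3 / 4 = 0.75
```

A consequence of this definition is that TPV cannot penalize false alarms during rest; a classifier that always predicts the task would score a TPV of 1, which is why the rest-exclusion rationale above matters when interpreting the values.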

To identify regions that contributed significantly to the trained SVMs' classification performance, we performed a one-sample t-test on the weights obtained from all participants for each classification model using SPM12. This tested whether the mean weight at each voxel across participants was significantly greater or less than 0. The resulting statistical maps were corrected for multiple comparisons at the cluster level using FWEc p < 0.05 (CDT p = 0.001) as implemented in SPM12.
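At each voxel, the group test on SVM weights reduces to a one-sample t statistic computed across participants. The sketch below shows only that voxelwise step; cluster formation and FWE correction are left to SPM12. It assumes all weight maps are already in the same normalized voxel order, and the values are invented. The same function applied to per-participant differences between two runs gives the paired-sample t statistic used in the post hoc tests.

```python
import math
from statistics import mean, stdev


def one_sample_t(values, mu0=0.0):
    """t statistic and degrees of freedom for H0: mean(values) == mu0.
    Raises ZeroDivisionError if all values are identical (SD = 0)."""
    n = len(values)
    return (mean(values) - mu0) / (stdev(values) / math.sqrt(n)), n - 1


def voxelwise_t(weight_maps):
    """weight_maps: one flattened list of SVM weights per participant,
    all in the same (normalized) voxel order. Returns (t, df) per voxel."""
    return [one_sample_t(list(voxel)) for voxel in zip(*weight_maps)]


# Three participants, two voxels (invented values).
maps = [[1.0, 0.5], [2.0, -0.5], [3.0, 0.0]]
stats = voxelwise_t(maps)
# voxel 0: mean 2, SD 1, t = 2 / (1 / sqrt(3)) = 2 * sqrt(3), df = 2
# voxel 1: mean 0, t = 0
```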

Validation Analyses

To further demonstrate the advantages of updating SVMs over a series of neurofeedback training runs, we performed additional offline validation analyses using the preprocessed data. These analyses assessed classification performance under the typical approach, in which SVMs are trained only on data from run 0 and tested on data from feedback runs 1–3 without any training update, for comparison with the incremental approach. The same three SVM classification models (R vs. LGO, R vs. RGO, and LGO vs. RGO) were evaluated using the same performance measures (TPV for the first two models and accuracy for the third).

Results

Improvement in Classification Performance With SVM Re-training

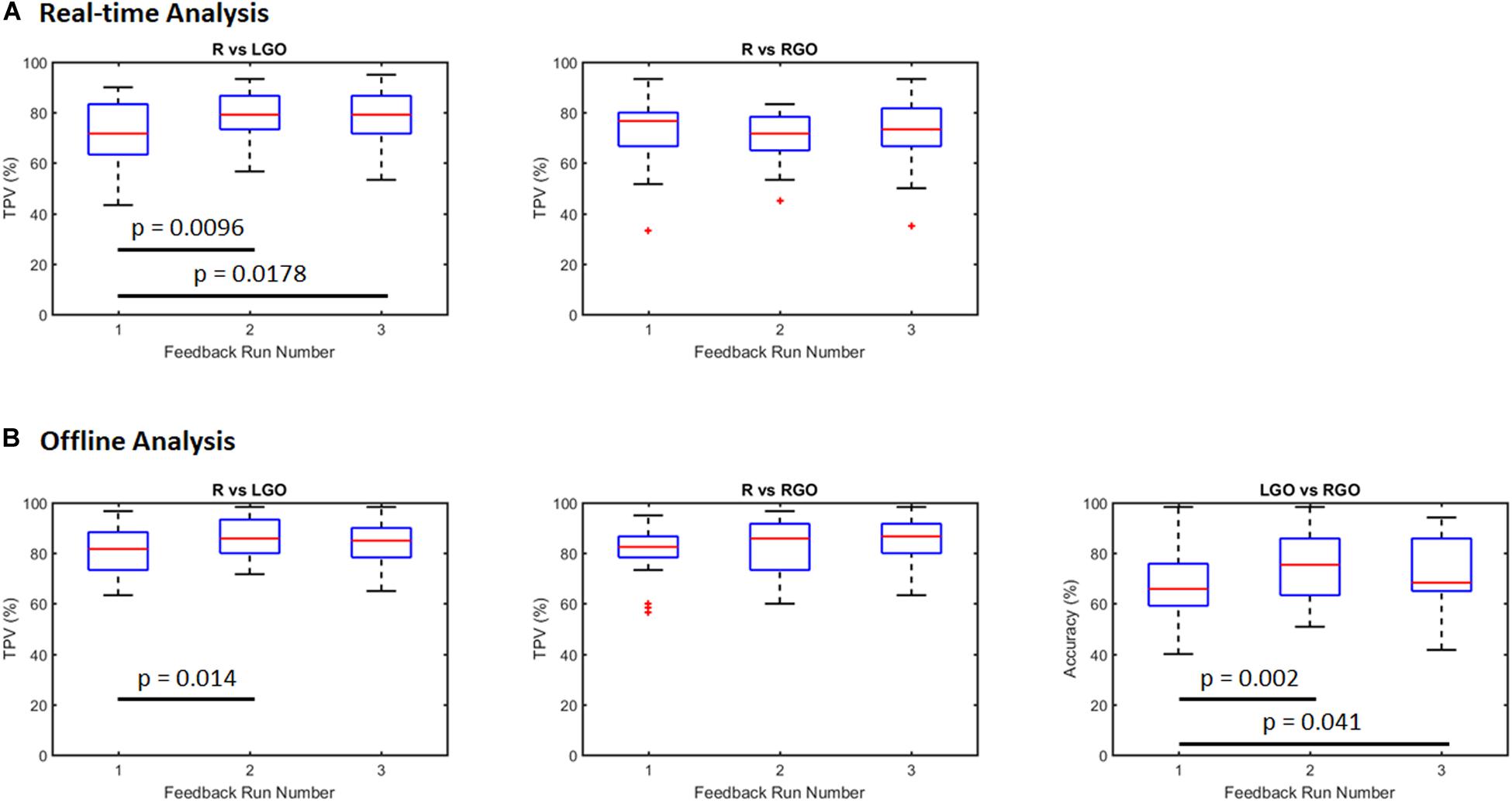

Figure 2 shows box plots of the classification performance of the SVMs trained using the incremental approach; means and standard deviations are summarized in Table 1. For the real-time SVM performance (Figure 2A), the mean TPV values were 71.06%, 78.83%, and 78.28% for R vs. LGO and 72.78%, 70.22%, and 72.78% for R vs. RGO during feedback runs 1, 2, and 3, respectively. A one-way repeated-measures ANOVA showed a significant main effect of run on TPV for R vs. LGO classification (F(2,58) = 4.99, p = 0.010), driven by improvements between runs 1 and 2 (p = 0.0096, post hoc paired-sample t-test) and between runs 1 and 3 (p = 0.0178). For R vs. RGO classification, TPV did not change significantly across feedback runs (F(2,58) = 0.536, p = 0.588). TPV values for all participants are listed in Supplementary Table S1.

Figure 2. Classification performance for support vector machines (SVMs) trained using the incremental strategy outlined in Figure 1B (A) during real-time neurofeedback training (real-time analysis) and (B) offline analysis of the same data set. R, rest; LGO, imagined left hand gripping and opening; RGO, imagined right hand gripping and opening; TPV, task predictive value.
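With 30 participants and three feedback runs, a one-way repeated-measures ANOVA has 3 − 1 = 2 effect degrees of freedom and (3 − 1)(30 − 1) = 58 error degrees of freedom, which matches the reported degrees of freedom (2 and 58). The sketch below computes F by partitioning the total sum of squares into run, participant and residual terms. It is an illustration with invented data, applies no sphericity correction (whether one was used is not stated here), and omits the p-value, which needs the F distribution (e.g., `scipy.stats.f.sf(F, df1, df2)`).

```python
from statistics import mean


def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA without sphericity correction.

    data[i][j] = score of participant i in condition (run) j.
    Returns (F, df_effect, df_error).
    """
    n, k = len(data), len(data[0])
    grand = mean(v for row in data for v in row)
    cond_means = [mean(row[j] for row in data) for j in range(k)]
    subj_means = [mean(row) for row in data]
    ss_total = sum((v - grand) ** 2 for row in data for v in row)
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)   # between runs
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)   # between participants
    ss_err = ss_total - ss_cond - ss_subj                      # run x participant residual
    df_cond, df_err = k - 1, (k - 1) * (n - 1)
    F = (ss_cond / df_cond) / (ss_err / df_err)
    return F, df_cond, df_err


data = [[1, 2, 3], [2, 3, 5], [3, 5, 6]]   # 3 participants x 3 runs (invented)
F, df1, df2 = rm_anova_oneway(data)         # F = 32.0 with df = (2, 4)

example30 = [[i, i + 1 + (i % 2), i + 3] for i in range(30)]  # 30 x 3 (invented)
_, d1, d2 = rm_anova_oneway(example30)      # df = (2, 58)
```

Removing the participant sum of squares from the error term is what distinguishes the repeated-measures design from a between-subjects ANOVA: stable individual differences in overall TPV do not dilute the test of the run effect.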

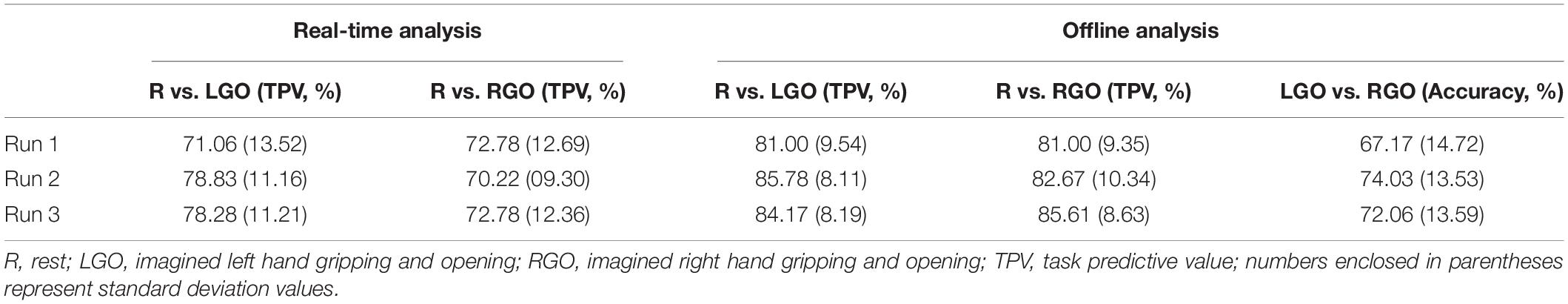

Table 1. Support vector machine (SVM) classification performance for the different classification models.

For the offline analysis (Figure 2B), classification performance improved compared with that obtained during real-time analysis, mostly owing to better image preprocessing and additional optimization in the offline analysis. The mean TPV values were 81.00%, 85.78%, and 84.17% for R vs. LGO classification and 81.00%, 82.67%, and 85.61% for R vs. RGO classification during feedback runs 1 to 3, respectively. The mean accuracies for LGO vs. RGO classification were 67.17%, 74.03%, and 72.06%. One-way repeated-measures ANOVAs showed significant main effects of run on TPV for R vs. LGO classification (F(2,58) = 3.9107, p = 0.0255) and on accuracy for LGO vs. RGO classification (F(2,58) = 5.2610, p = 0.0079). Again, TPV for R vs. RGO classification did not change significantly (F(2,58) = 2.3711, p = 0.1024). Post hoc paired-sample t-tests showed a significant improvement in TPV between runs 1 and 2 for R vs. LGO classification (p = 0.014). LGO vs. RGO accuracy also improved significantly between runs 1 and 2 (p = 0.002) and between runs 1 and 3 (p = 0.041). TPV values and classification accuracies for all participants are listed in Supplementary Table S2.

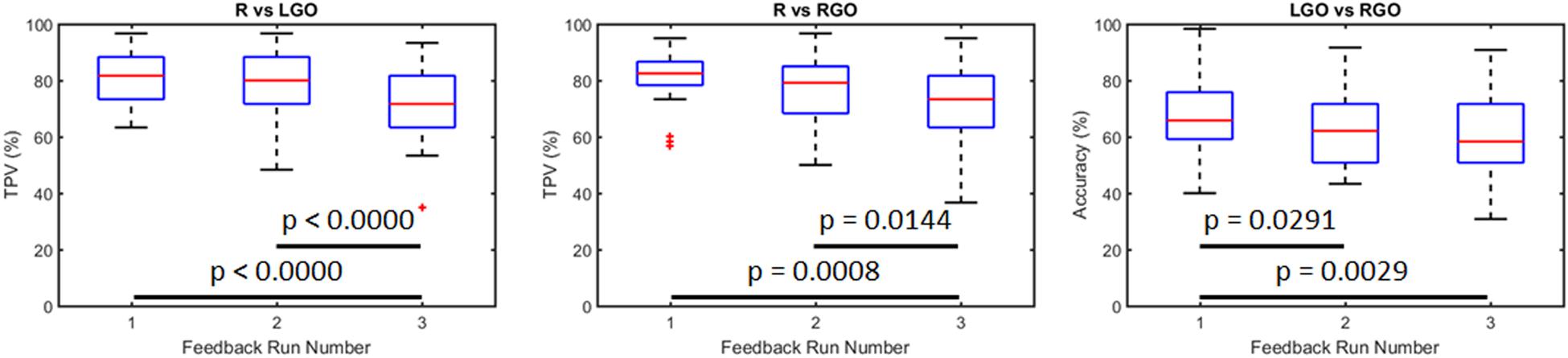

SVM Performance in the Validation Analyses

Figure 3 shows SVM performance in the validation analyses of the preprocessed data. When the SVMs were trained only on data from run 0 and tested on data from runs 1 to 3, the mean TPV values for R vs. LGO classification were 81.00%, 78.00%, and 71.44%, respectively. For R vs. RGO classification, the mean TPV values for runs 1 to 3 were 81.00%, 76.89%, and 71.72%, respectively, and for LGO vs. RGO classification, the mean accuracies were 67.17%, 63.44%, and 60.56%, respectively. One-way repeated-measures ANOVAs showed significant main effects of run on TPV for R vs. LGO classification (F(2,58) = 18.4031, p < 0.0001) and R vs. RGO classification (F(2,58) = 9.0382, p = 0.0004), as well as on accuracy for LGO vs. RGO classification (F(2,58) = 7.0906, p = 0.0018). Post hoc paired-sample t-tests showed significant decreases in TPV between runs 1 and 3 (p < 0.0001 for R vs. LGO and p = 0.0008 for R vs. RGO) and between runs 2 and 3 (p < 0.0001 for R vs. LGO and p = 0.0144 for R vs. RGO), as well as in accuracy between runs 1 and 2 (p = 0.0291) and between runs 1 and 3 (p = 0.0029) for the LGO vs. RGO model. These results suggest that without retraining, SVM performance decreases significantly across feedback runs. TPV values and accuracies for all participants are listed in Supplementary Table S3.

Figure 3. Classification performance for support vector machines trained using data from run 0 and tested using data from feedback runs 1 to 3 without training update. R, rest; LGO, imagined left hand gripping and opening; RGO, imagined right hand gripping and opening; TPV, task predictive value.

Changes in Activation Patterns During Feedback Runs

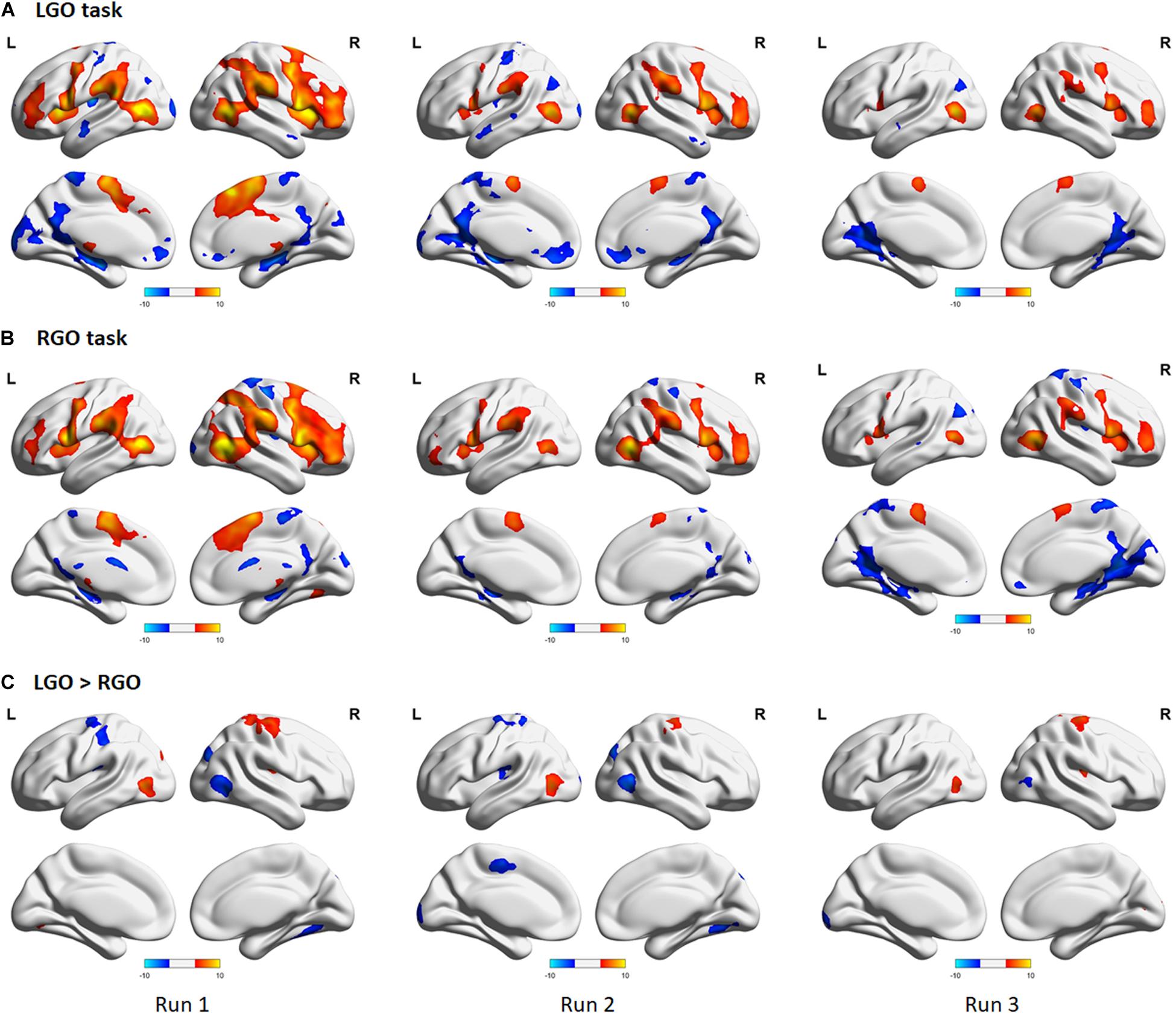

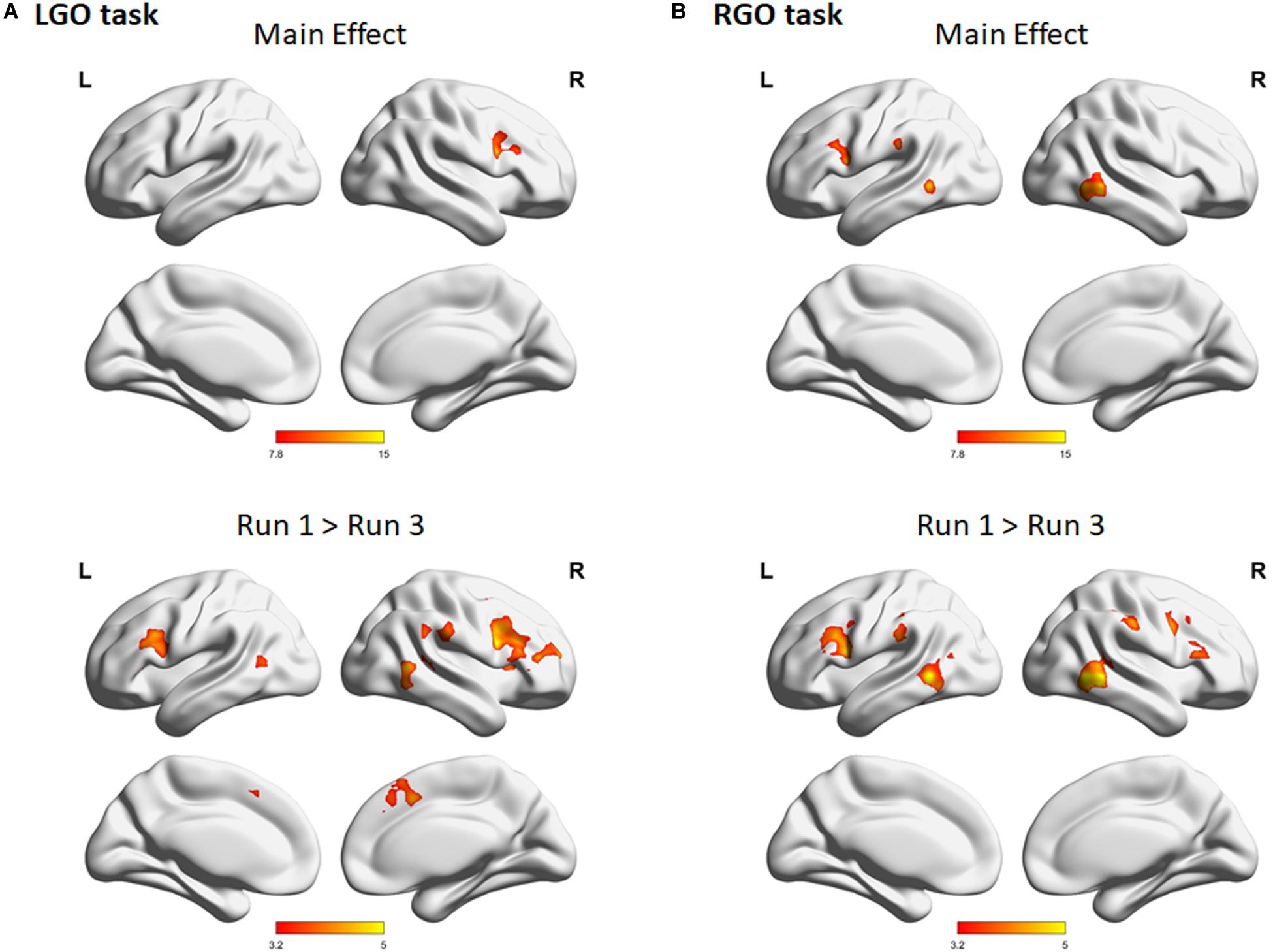

Figure 4 shows the group-level activation maps for the different tasks during feedback runs 1 to 3, generated using one-sample t-tests of the corresponding subject-level activation maps. The activation pattern changed from feedback run 1 to run 3: it was more widespread in run 1 and more focused on task-relevant brain regions in run 3. Clusters showing significant activation or deactivation during feedback runs 1 to 3 are summarized in Tables 2–4, respectively.

Figure 4. Group activation maps for imagined (A) left hand gripping and opening (LGO) and (B) right hand gripping and opening (RGO) tasks and (C) the contrast (LGO > RGO) between the two tasks obtained using a one-sample t-test from individual contrast maps for feedback runs 1 to 3 (left to right). All statistical maps were corrected for multiple comparisons at the cluster level using family-wise error correction (FWEc) p < 0.05 with a cluster-defining threshold (CDT) set at p = 0.001.

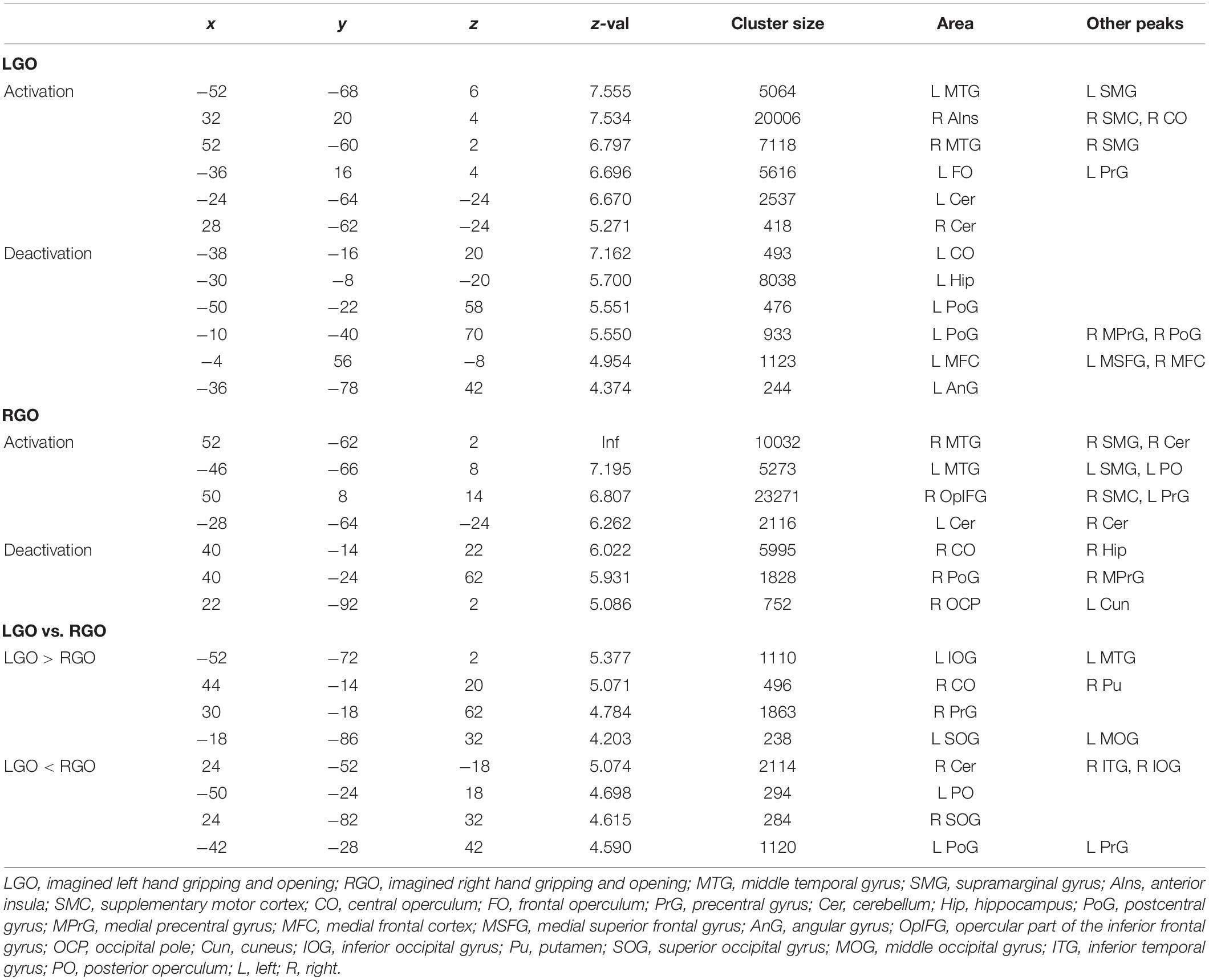

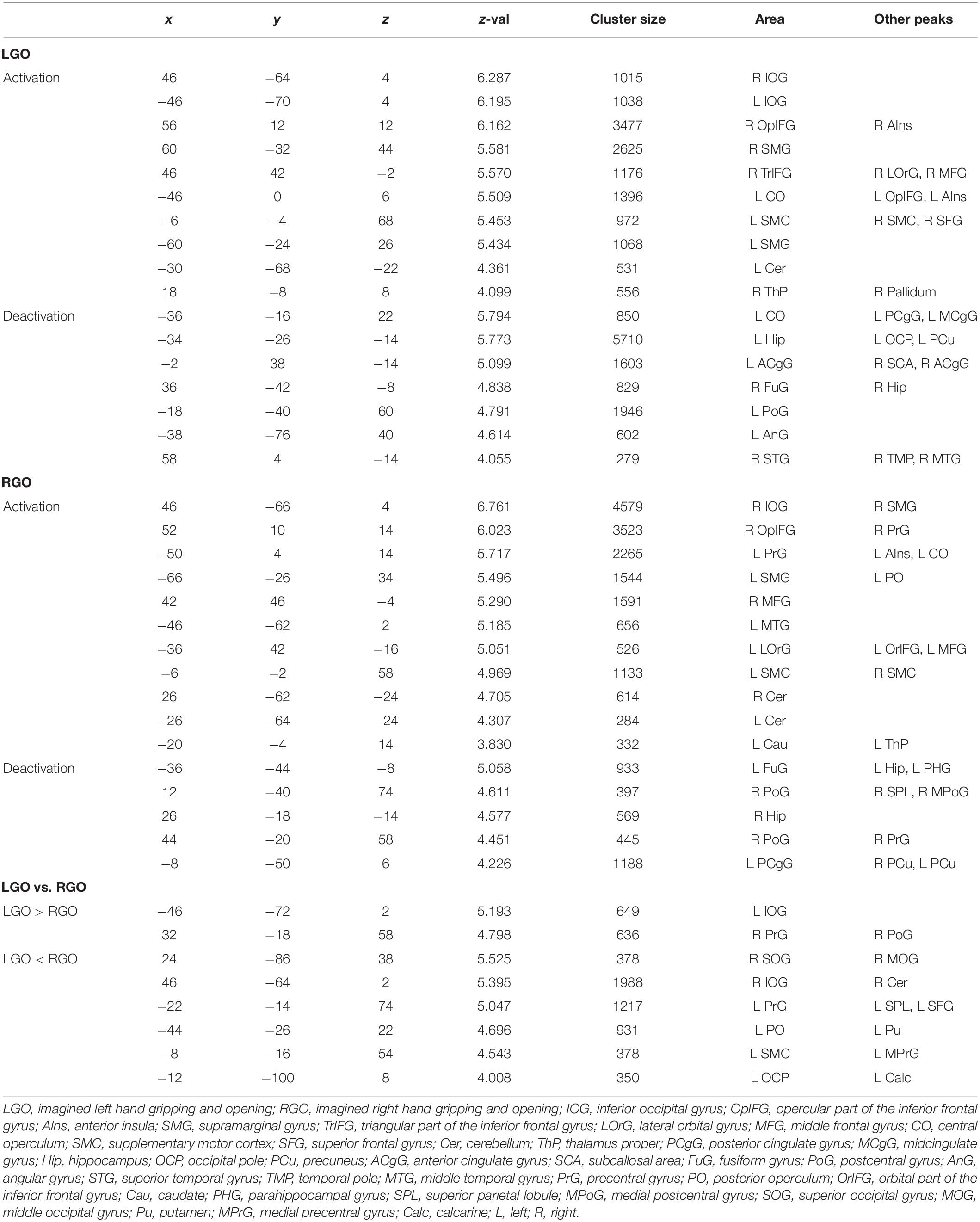

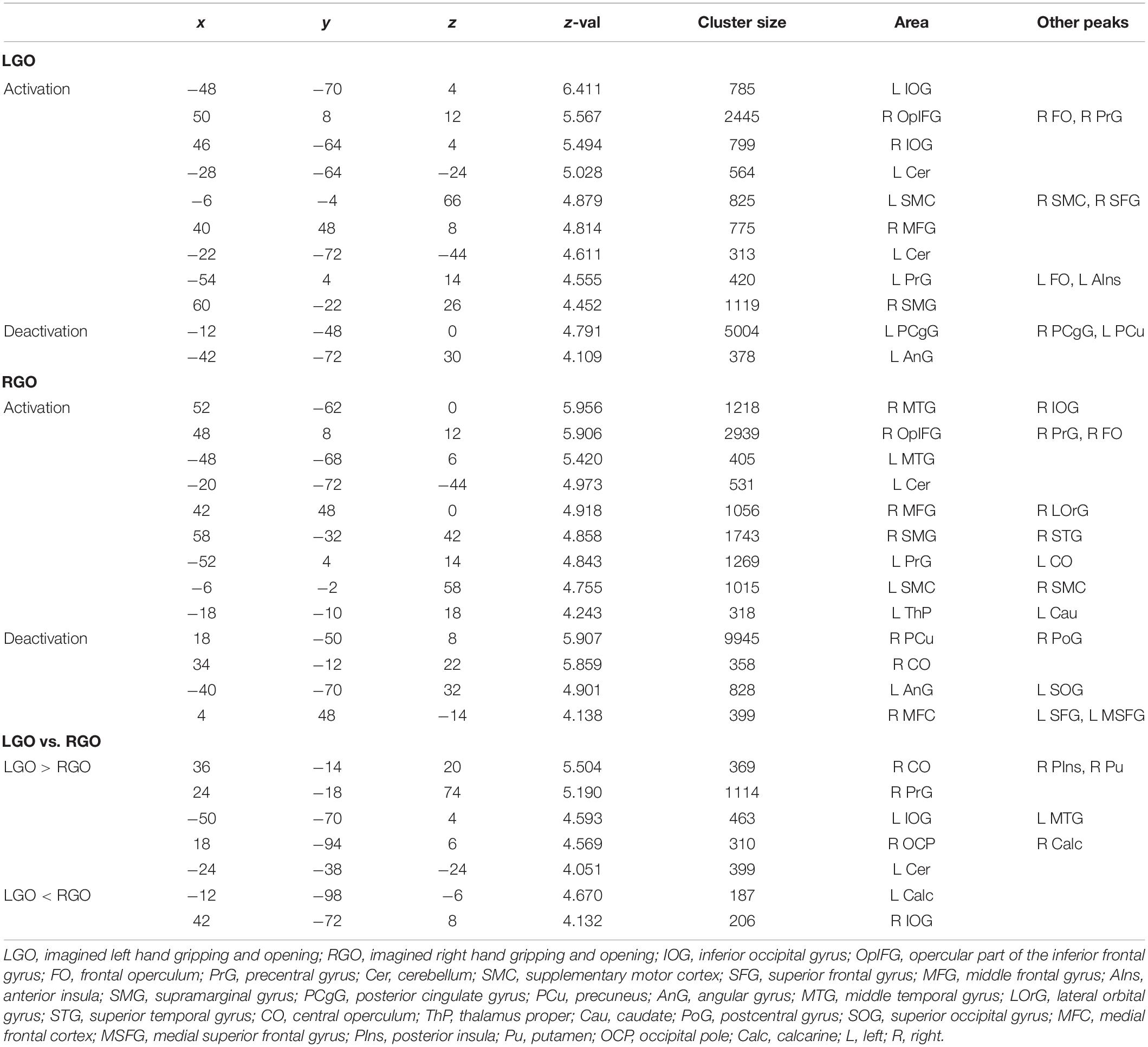

Table 2. Clusters showing significant (FWEc p < 0.05) activation and deactivation during feedback run 1 for the different motor imagery tasks.

Table 3. Clusters showing significant (FWEc p < 0.05) activation and deactivation during feedback run 2 for the different motor imagery tasks.

Table 4. Clusters showing significant (FWEc p < 0.05) activation and deactivation during feedback run 3 for the different motor imagery tasks.

For the LGO task (Figure 4A), regions that were consistently activated across feedback runs included the bilateral inferior occipital gyrus/middle temporal gyrus, right opercular part of the inferior frontal gyrus/anterior insula, left cerebellum (not shown in the figure), bilateral supplementary motor cortex/right superior frontal gyrus, right middle frontal gyrus, left precentral gyrus/anterior insula/frontal and central operculum, and right supramarginal gyrus. Regions activated only during feedback runs 1 and 2 included the left supramarginal gyrus and right thalamus proper/pallidum. The left cerebellum (inferior portion, not shown) was activated during feedback runs 1 and 3, while the right cerebellum (not shown) was activated only during feedback run 1. On the other hand, consistent deactivations across feedback runs were observed in the bilateral posterior cingulate gyrus and the left angular gyrus. The left central operculum, left hippocampus, bilateral anterior cingulate gyrus/medial frontal cortex, right fusiform gyrus/hippocampus, and the left postcentral gyrus/medial precentral gyrus were deactivated during feedback runs 1 and 2, while the right superior temporal gyrus/temporal pole/middle temporal gyrus was deactivated during feedback run 2.

For the RGO task (Figure 4B), regions that were consistently activated across feedback runs included the right middle temporal gyrus/inferior occipital gyrus, right opercular part of the inferior frontal gyrus/precentral gyrus, left middle temporal gyrus, left cerebellum (not shown), right middle frontal gyrus, right supramarginal gyrus, left precentral gyrus, bilateral supplementary motor cortex, and the left thalamus proper. The left supramarginal gyrus and the right cerebellum were activated only during feedback runs 1 and 2, while the left lateral part of the orbital gyrus was activated only during feedback run 2. On the other hand, only the right postcentral gyrus was consistently deactivated across feedback runs, while the bilateral precuneus/posterior cingulate gyrus and the left fusiform gyrus/parahippocampal gyrus were deactivated during feedback runs 2 and 3, the right central operculum during feedback runs 1 and 3, the left angular gyrus and right medial frontal cortex during feedback run 3, and the right occipital pole during feedback run 1.

Contrasting the two tasks (Figure 4C), regions showing consistently higher activation in the LGO task than in the RGO task across all feedback runs included the right precentral gyrus and the left inferior occipital gyrus/middle temporal gyrus. The right central operculum showed higher activation in the LGO task only during feedback runs 1 and 3, the left superior occipital gyrus during feedback run 1, and the right occipital pole and left cerebellum only during feedback run 3. In contrast, only the right inferior occipital gyrus showed consistently higher activation in the RGO task than in the LGO task across all feedback runs. The right superior occipital gyrus, left precentral gyrus, left posterior operculum, and right cerebellum also showed higher activation in the RGO task during feedback runs 1 and 2, and the left calcarine cortex during runs 2 and 3. Finally, the left supplementary motor cortex showed higher activation in the RGO task during feedback run 2, and the left postcentral gyrus during feedback run 1.

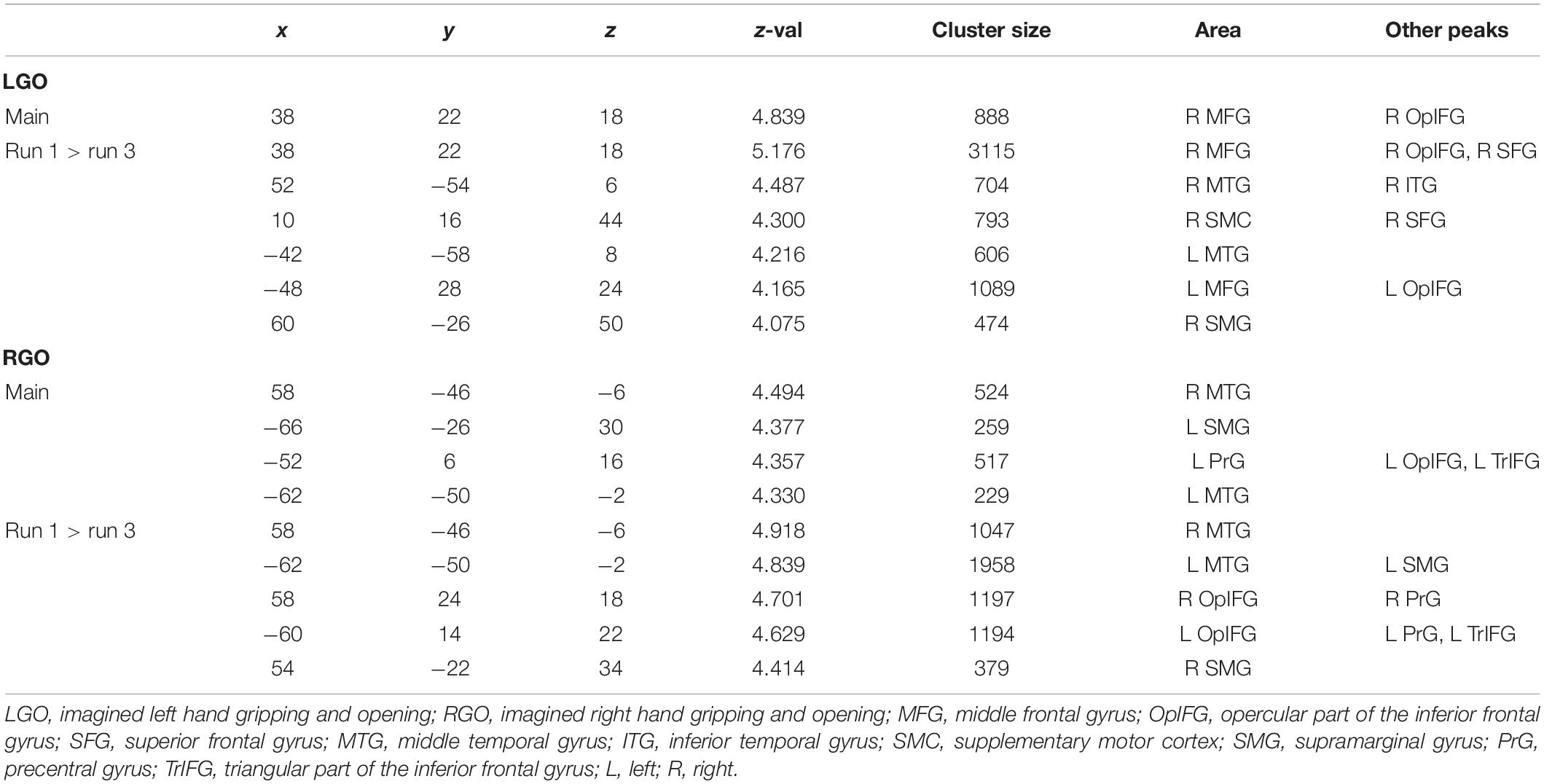

The one-way repeated-measures ANOVA showed a significant main effect of feedback run for both tasks (Figure 5). For the LGO task (Figure 5A), significant changes in activation were found in the right middle frontal gyrus/opercular part of the inferior frontal gyrus, and for the RGO task (Figure 5B), in the bilateral middle temporal gyrus, left supramarginal gyrus, and left precentral gyrus. These changes were mostly driven by a decrease in activation in these regions between feedback runs 1 and 3 (Figure 5, bottom panels). Other regions showing a significant decrease in activation included the bilateral middle temporal gyrus, right supplementary motor cortex, left middle frontal gyrus, and right supramarginal gyrus for the LGO task, and the right opercular part of the inferior frontal gyrus/precentral gyrus and right supramarginal gyrus for the RGO task. Clusters showing significant changes across feedback runs, with peak MNI coordinates and cluster sizes, are listed in Table 5.

Figure 5. Regions showing a significant main effect in a one-way repeated-measures analysis of variance for the imagined (A) LGO and (B) RGO tasks. Results of post hoc paired-sample t-tests for both tasks are shown in the bottom panels. All statistical maps were corrected for multiple comparisons using FWEc p < 0.05 with CDT p = 0.001.

Table 5. Clusters showing a significant main effect in a one-way repeated-measures analysis of variance for the different tasks across feedback runs.

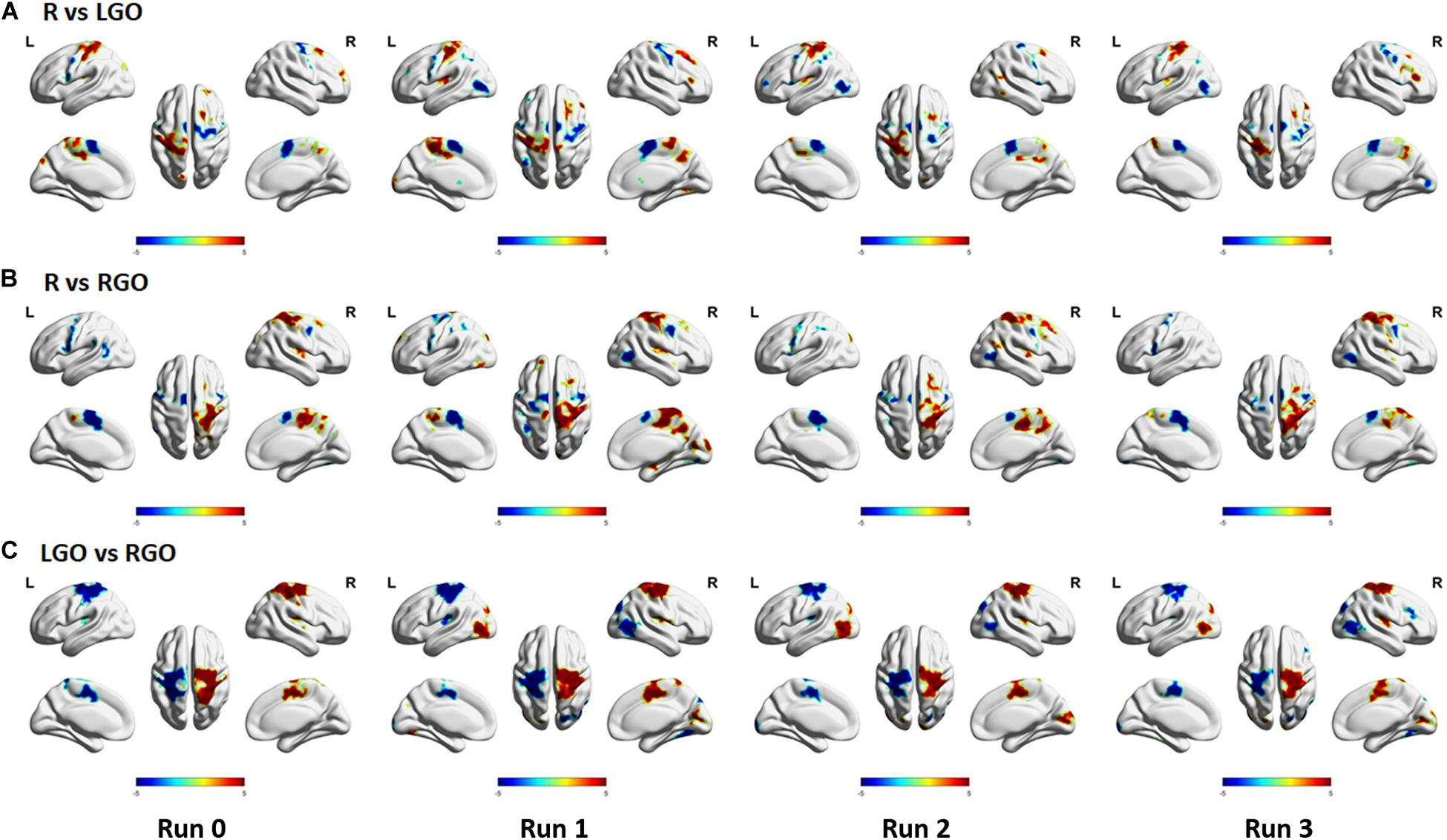
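As a minimal, single-voxel sketch of the one-way repeated-measures ANOVA reported above, the function below computes the F statistic with feedback run as the within-subject factor and participants as a blocking factor. It assumes a complete participants × runs table of activation estimates (for example, per-run contrast values at one voxel); the function name and toy numbers are hypothetical, and sphericity correction, p-values, and the voxelwise FWEc cluster correction behind Figure 5 are deliberately left out.

```python
from typing import List, Tuple


def rm_anova_oneway(data: List[List[float]]) -> Tuple[float, int, int]:
    """One-way repeated-measures ANOVA for a single voxel (hypothetical helper).

    data[i][j] is the activation estimate of participant i in feedback run j.
    Returns (F, df_runs, df_error). No sphericity correction is applied.
    """
    n = len(data)     # participants
    k = len(data[0])  # feedback runs (levels of the within-subject factor)
    grand = sum(sum(row) for row in data) / (n * k)
    run_means = [sum(row[j] for row in data) / n for j in range(k)]
    subj_means = [sum(row) / k for row in data]

    ss_runs = n * sum((m - grand) ** 2 for m in run_means)   # effect of feedback run
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)  # between-participant variance, removed from error
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_error = ss_total - ss_runs - ss_subj                  # run x participant residual

    df_runs, df_error = k - 1, (n - 1) * (k - 1)
    f_stat = (ss_runs / df_runs) / (ss_error / df_error)
    return f_stat, df_runs, df_error


# Toy data (hypothetical): three participants whose activation decreases from run 1 to run 3.
toy = [
    [3.0, 2.0, 1.0],
    [4.0, 2.0, 1.0],
    [5.0, 3.0, 1.0],
]
f_stat, df1, df2 = rm_anova_oneway(toy)
print(f"F({df1}, {df2}) = {f_stat:.2f}")
```

Removing the participant sum of squares from the error term is what distinguishes the repeated-measures design from an ordinary one-way ANOVA: stable between-participant differences in overall activation do not dilute the run effect.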

Significant SVM Weights

Significant SVM weights of the three classification models are shown in Figure 6. Regions with generally higher activity during rest than during task (LGO/RGO) in Figures 6A,B, or during the LGO task than during the RGO task in Figure 6C, are shown in red–yellow, whereas the opposite condition is shown in blue–light blue. Most of the relevant regions in the sensorimotor network appeared to have significant weights across runs. An important difference is the absence of the middle temporal gyrus/inferior occipital gyrus from the significance maps for run 0 compared with those for runs 1–3, which is mainly due to the absence of feedback in run 0. Moreover, despite the decreasing difference in activation intensity between the LGO and RGO tasks across feedback runs (Figure 4C), regions in the sensorimotor network consistently showed significant weights across feedback runs. The total number of voxels with significant weights for the three classification models also decreased from run 1 to run 3, although this is not readily apparent in the figure. The clusters with significant (FWEc p < 0.05, CDT p = 0.001) SVM weights for the three classification models are listed in Supplementary Tables S4–S6.

Figure 6. Significant weights (FWEc p < 0.05 with CDT p = 0.001) of the different SVM classification models, obtained using a one-sample t-test of the SVM weights from all participants: (A) R vs. LGO, (B) R vs. RGO, and (C) LGO vs. RGO. The red–yellow color map indicates higher activity during rest (R) than during task (LGO/RGO) in the R vs. LGO and R vs. RGO models, and higher activity during the LGO task than during the RGO task in the LGO vs. RGO model. The blue–light blue color map indicates the opposite.
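The group-level maps in Figure 6 rest on a one-sample t-test of the participants' SVM weights at each voxel. A minimal sketch of that voxelwise statistic follows, assuming each participant's weight map has already been brought into a common template space and flattened into a list; the helper names and toy values are hypothetical, and the subsequent thresholding (CDT p = 0.001) and FWEc cluster correction would be done in a neuroimaging package rather than here.

```python
import math
from statistics import mean, stdev
from typing import List


def one_sample_t(values: List[float]) -> float:
    """t statistic for H0: mean(values) == 0, with df = len(values) - 1."""
    sd = stdev(values)
    if sd == 0.0:
        raise ValueError("zero variance across participants; t is undefined")
    return mean(values) / (sd / math.sqrt(len(values)))


def voxelwise_t(weight_maps: List[List[float]]) -> List[float]:
    """weight_maps[p][v] is the SVM weight of voxel v for participant p (hypothetical layout)."""
    n_voxels = len(weight_maps[0])
    return [one_sample_t([wmap[v] for wmap in weight_maps]) for v in range(n_voxels)]


# Toy weights for three participants and two voxels (values are hypothetical).
maps = [
    [1.0, 0.5],
    [2.0, -0.5],
    [3.0, 0.0],
]
t_map = voxelwise_t(maps)
print([round(t, 3) for t in t_map])
```

The first voxel, whose weight has the same sign in every participant, gets a large t value; the second, whose weights cancel across participants, gets t = 0 and would not survive any threshold, which is how a group map can shed regions that individual classifiers weighted inconsistently.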

Discussion

Using a motor imagery paradigm, we investigated the effect of dynamic changes in brain activation during neurofeedback training on the classification of the different brain states associated with the target tasks. These changes could be driven by learning effects and possibly other factors. Our findings confirmed our hypothesis that brain activation patterns could change dynamically as training progresses. By continuously updating the trained SVMs after every feedback run, we attained a significant improvement in classifying the different brain states associated with the target motor imagery tasks. For BMI applications, this improvement could lead to better control of the system; for neurofeedback training, it could provide more reliable feedback information to the participants, which is necessary for successful training.

The goal of neurofeedback training is for participants to learn the target tasks using feedback information derived directly from their ongoing brain activity. This implies that brain activation could change dynamically as training progresses. Consistent with this, we observed more widespread activation during feedback run 1, followed by more focused and localized patterns in succeeding runs. This initial widespread activity could be driven by the recruitment of additional brain regions needed to learn the new target skill. As participants acquired the skill, irrelevant regions would no longer be activated, whereas relevant ones would become more prominent. A similar trend has been observed for connectivity patterns during neurofeedback training, where connectivity was initially widespread among several regions and later confined to a small number of regions after participants presumably consolidated their regulation skills (Rota et al., 2011). Thus, these activation changes should be considered when identifying different brain states during neurofeedback training.

Previous neurofeedback studies using brain state classification trained their classifiers on datasets acquired before training and then used them to classify the different brain states in subsequent feedback scans (see the approach in the validation analyses). Many of these studies observed that the performance of classifiers trained this way usually decreased across feedback runs. LaConte (2011) attributed this effect to learning, suggesting that activation patterns changed during feedback training as participants learned the target tasks. Using a simple motor learning task, that study demonstrated that the classification accuracy of the trained SVM decreased in participants who learned the task, whereas in those who did not, the accuracy remained the same or even increased. In our previous study (Bagarinao et al., 2018), we observed the same trend, although the decrease was not statistically significant across the three feedback runs. To further support these findings, we performed validation analyses (see section “Validation Analyses”) in which we trained SVMs using data from run 0 and used them to classify data from runs 1 to 3. These supplemental analyses were primarily performed to demonstrate that, without re-training, the classifiers’ performance would be significantly affected by the changing activation pattern (Figure 4). As Figure 3 clearly shows, the classifiers’ performance decreased with each feedback run. Using only two participants, Sitaram et al. (2011) also demonstrated that participants learned to improve their performance (measured in terms of classification accuracy) with an incremental method of re-training the classifiers. Our findings of increased TPV and accuracy values with continuous SVM re-training extend their result to a larger number of participants.
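To make the two training schedules concrete, the sketch below contrasts a classifier trained once on run 0 (as in the validation analyses) with one retrained before each feedback run on all data acquired so far. It is a toy under stated assumptions: pooling every earlier run is only one plausible reading of "continuously updating" and is not necessarily the authors' exact procedure; the data are synthetic two-voxel LGO/RGO patterns whose discriminative voxel shifts after run 0; and a small Pegasos-style linear SVM stands in for a library SVM (e.g., LIBSVM) so the example has no dependencies. All function names are hypothetical.

```python
from typing import List, Tuple

Sample = Tuple[List[float], int]  # (voxel pattern, label: +1 = LGO, -1 = RGO)


def train_linear_svm(data: List[Sample], lam: float = 0.1, epochs: int = 500) -> List[float]:
    """Pegasos-style subgradient descent on the L2-regularized hinge loss.

    A constant 1.0 feature is appended so the bias is learned as an ordinary
    weight. Samples are visited in a fixed cyclic order, which keeps this
    sketch deterministic (the original Pegasos samples at random).
    """
    w = [0.0] * (len(data[0][0]) + 1)
    t = 0
    for _ in range(epochs):
        for x, y in data:
            t += 1
            eta = 1.0 / (lam * t)
            xb = x + [1.0]
            margin = y * sum(wi * xi for wi, xi in zip(w, xb))
            w = [(1.0 - eta * lam) * wi for wi in w]  # shrink from the L2 term
            if margin < 1.0:  # hinge loss active: step toward the sample
                w = [wi + eta * y * xi for wi, xi in zip(w, xb)]
    return w


def predict(w: List[float], x: List[float]) -> int:
    score = sum(wi * xi for wi, xi in zip(w, x + [1.0]))
    return 1 if score >= 0.0 else -1


def accuracy(w: List[float], data: List[Sample]) -> float:
    return sum(predict(w, x) == y for x, y in data) / len(data)


def make_run(informative_voxel: int, n_trials: int = 2, n_voxels: int = 2) -> List[Sample]:
    """Hypothetical toy run in which a single voxel carries the LGO/RGO difference."""
    run: List[Sample] = []
    for k in range(n_trials):
        amp = 1.0 + 0.5 * k
        for label in (1, -1):
            x = [0.0] * n_voxels
            x[informative_voxel] = label * amp
            run.append((x, label))
    return run


# Run 0 (no feedback): voxel 0 separates the tasks; feedback runs 1-3: voxel 1 does.
runs = [make_run(0)] + [make_run(1) for _ in range(3)]

fixed_w = train_linear_svm(runs[0])  # validation-style: train once on run 0
fixed_acc: List[float] = []
incremental_acc: List[float] = []
for k in range(1, len(runs)):
    fixed_acc.append(accuracy(fixed_w, runs[k]))
    pooled = [s for run in runs[:k] for s in run]  # every run acquired before run k
    incremental_acc.append(accuracy(train_linear_svm(pooled), runs[k]))

print("fixed:      ", fixed_acc)
print("incremental:", incremental_acc)
```

With this synthetic shift, the run-0 classifier stays at chance in every feedback run because it has no weight on voxel 1, while the retrained classifier is also at chance in run 1 (its training data are still run 0 only) and separates the tasks once run 1 is pooled in. Pooling all earlier runs is a design choice; a sliding window over the most recent runs would adapt faster at the cost of fewer training samples.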

One could also argue that even without SVM re-training, classification performance could still improve as participants learned the task, since the activated regions were becoming better defined (Figure 4). However, SVMs trained on data with a more widespread activation pattern could assign significant weights to regions that were initially active but not truly relevant to the task. Without re-training, these regions would still contribute to the classification even after they were no longer active, thus degrading the SVM’s performance, as clearly demonstrated by the validation analyses (Figure 3). With re-training, regions irrelevant to the task could be assigned non-significant weights, improving performance. Indeed, as shown in Figure 6 and Supplementary Tables S4–S6, the number of voxels with significant weights decreased with each feedback run, suggesting that some regions with significant weights in earlier runs were no longer significant in feedback run 3. Although other confounding factors (e.g., learning) during neurofeedback training could also influence the SVM’s performance, the results reasonably indicate that the observed improvement in TPV values and accuracies was most likely driven by SVM re-training.
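The stale-weight argument can be illustrated with a linear decision function f(x) = w · x + b and hand-picked, hypothetical numbers (not values from this study). A rest-vs-task model trained while a transiently recruited region was active keeps a weight on that region; once the region falls silent, the focused task pattern no longer crosses the decision boundary, whereas a retrained model that drops the weight classifies it correctly. The sign convention (f > 0 means task) is an assumption made for this sketch.

```python
from typing import List


def decision_value(w: List[float], b: float, x: List[float]) -> float:
    """Linear decision function f(x) = w . x + b (f > 0 -> task, f <= 0 -> rest)."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


# Hypothetical two-region patterns: [task-relevant region, transiently recruited region].
rest = [0.0, 0.0]
task_early = [1.0, 1.0]  # widespread pattern in the first feedback run
task_late = [1.0, 0.0]   # focused pattern after learning; transient region silent

# Weights a model might learn from early data: both regions count toward "task".
w_stale, b_stale = [1.0, 1.0], -1.5
# Weights after retraining on later data: the transient region is dropped.
w_new, b_new = [2.0, 0.0], -1.0

early = decision_value(w_stale, b_stale, task_early)  # 0.5: task detected
stale = decision_value(w_stale, b_stale, task_late)   # -0.5: task missed
retrained = decision_value(w_new, b_new, task_late)   # 1.0: task detected
rest_score = decision_value(w_new, b_new, rest)       # -1.0: rest detected
```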

A consequence of the incremental training approach is that the learned activation may not converge toward the preferred activation pattern. This could happen, for example, if participants explore different strategies for the target task: the SVMs then adjust to the changed activation patterns and provide updated feedback to the participants, which in turn affects the participants’ choice of strategy. This “adaptive” training is an intriguing scenario but is unlikely with the current protocol. Here, we used two predefined tasks whose activation patterns are known in advance. Moreover, participants were instructed to continue performing the same task even when there was no response from the robot (no movement). To check whether the training results are consistent with the training goals, one can examine the resulting activation map, which can be generated more readily with real-time fMRI than with other modalities. Alternatively, one can include a control task against which the target task can be contrasted, as in this study. This is particularly useful for detecting cases where participants simply perform any activity that contrasts with the rest condition. Lastly, one can also include behavioral measures to evaluate training success. Adaptive training is an interesting problem that will need more detailed investigation, possibly with a different task design, and is beyond the scope of the current paper.

Although the primary focus of the paper is to examine the efficacy of incremental training in improving SVMs’ classification performance when activation patterns change dynamically during training, our findings also indicate possible effects of this improvement in classification on the participants’ ability to learn task-relevant activation patterns. In our previous study (Bagarinao et al., 2018) comparing participants’ performance of motor imagery tasks with and without feedback, we showed that participants generated more consistent task-relevant brain activation patterns during sessions with neurofeedback than during sessions without feedback, suggesting the importance of the feedback information. In that study, the SVMs were trained only on data from run 0 (the run without feedback) and were subsequently used in the succeeding feedback runs. Under this condition, we found no significant improvement in classification performance across feedback runs, and the SVMs’ performance even tended to decrease with each feedback run. We also did not observe significant changes in activation across feedback runs. In contrast, we have demonstrated here that with incremental SVM training, accuracy improved across feedback runs. This improvement was also accompanied by significant changes in brain activation toward more focal activation in task-relevant brain regions. Although the feedback presentation differed between the two studies, which limits direct comparison, the motor imagery tasks were the same. Taken together, the findings of these two studies suggest that the improvement in SVM performance may have provided participants with better feedback information, which in turn led them to generate more focal, task-relevant activation patterns. To fully address this association, more detailed investigations, such as a study with a direct control group, will be necessary.

In terms of activation, we identified several regions activated during feedback runs. Some of these were consistent with regions usually associated with motor imagery tasks, including the supplementary motor cortex, premotor cortex, prefrontal cortex, posterior parietal cortex, cerebellum, and basal ganglia (O’Shea and Moran, 2017; Tong et al., 2017). Interestingly, we did not find activation in the contralateral primary motor cortex (M1), an important target region for neurorehabilitation applications, for either imagery task at the group level. Instead, the ipsilateral M1 was consistently deactivated in all feedback runs for the RGO task and in the first two runs for the LGO task (Figure 4), consistent with previous results (Chiew et al., 2012). Findings concerning M1 activation during motor imagery are inconsistent. A meta-analysis of 75 papers showed that only 22 of the 122 experiments reported M1 activation (Hétu et al., 2013). In a recent fMRI-based neurofeedback study, Mehler et al. (2019) also reported that M1 could not be activated during the imagery task despite the feedback provided, supporting our finding. In contrast, they reported consistent activation of the supplementary motor cortex, which is similar to what we observed.

The activation of other regions could be related to processes associated with neurofeedback training. In a recent review, Sitaram et al. (2017) proposed three neurofeedback-related networks: the dorsolateral prefrontal cortex, thalamus, lateral occipital cortex, and posterior parietal cortex for the control of visual neurofeedback; the dorsal striatum for neurofeedback learning; and the ventral striatum, anterior cingulate cortex, and anterior insula for neurofeedback reward. In this study, we also observed activation in some of these regions. For instance, we observed consistent activation of the left/right inferior occipital gyrus/middle temporal gyrus, regions implicated in the control of visual neurofeedback. These regions were also active when feedback was provided during the motor imagery task, but not without feedback (Bagarinao et al., 2018). Interestingly, some of the regions associated with neurofeedback-related processes overlap with those of the motor imagery task (e.g., posterior parietal cortex, anterior insula, and others). This could be because neurofeedback training also involves some form of imagery.

Comparing across feedback runs, we observed that some regions showed a significant decrease in activation with training. One such region is the inferior occipital gyrus/middle temporal gyrus. This region has been implicated in several neurofeedback studies (Berman et al., 2012; Marchesotti et al., 2017; Bagarinao et al., 2018) and, as mentioned earlier, could be associated with the control of visual feedback (Sitaram et al., 2017). The decrease in activity of this region with training could indicate that participants were gradually relying less on the feedback information as they learned the imagery tasks. Similar decreases in activation were also observed in the contralateral middle frontal gyrus/opercular part of the inferior frontal gyrus. Higher activation of this region has been associated with early learning or with incomplete motor sequence acquisition (Müller et al., 2002). Like the inferior occipital gyrus, this region may be involved in the initial learning of the motor imagery tasks and become less active as the new skill is acquired.

Classification of the LGO task also improved significantly across feedback runs, more so than classification of the RGO task (Figure 2). Since most participants were right-handed, this may reflect the influence of handedness on learning motor imagery. Earlier studies have demonstrated that motor imagery abilities are unbalanced between the dominant and non-dominant hands (Maruff et al., 1999), with the dominant hand showing better performance (Guillot et al., 2010; Paizis et al., 2014). This behavioral difference could produce asymmetrical brain activation. In a magnetoencephalography study, Boe et al. (2014) showed that the non-dominant hand induced stronger event-related desynchronization in the ipsilateral than in the contralateral sensorimotor cortex. This greater activation was interpreted as an indication of the control group’s inability to perform motor imagery with the non-dominant hand. Our data also showed consistent deactivation in the ipsilateral sensorimotor region during the RGO (dominant) task, but not during the LGO (non-dominant) task (Figure 4). These differences in activation may explain why classification of the LGO task improved more across feedback runs than that of the RGO task. In this case, motor imagery with the non-dominant hand exhibited a stronger and more dynamic activation pattern during training, leading to classification improvements, whereas that of the dominant hand appeared more stable, resulting in more consistent classification.

Finally, we note the relevance of our findings for motor imagery training, particularly its clinical applications. Several studies have examined motor imagery training as a no-cost, safe, and easy way to enhance motor function. Motor imagery training has been employed to improve athletes’ performance (Feltz and Landers, 1983), provide additional benefits to conventional physiotherapy (Zimmermann-Schlatter et al., 2008), and enhance motor recovery following stroke (Jackson et al., 2001; Sharma et al., 2006; de Vries and Mulder, 2007). Motor imagery can also be used to identify potential sources of residual functional impairment in well-recovered stroke patients (Sharma et al., 2009a, b). When combined with neurofeedback, motor imagery training has been shown to be more effective for neurorehabilitation. For example, adding a BMI system to motor imagery training to provide online contingent sensory feedback of brain activity has been shown to be effective in improving clinical parameters of post-stroke motor recovery (Ramos-Murguialday et al., 2013; Ono et al., 2014; Frolov et al., 2017). In healthy participants, neurofeedback has been shown to significantly improve volitional recall of motor imagery activation patterns (Bagarinao et al., 2018). In these approaches, the BMI systems actively decode brain activity and display the outcome to the user, creating feedback that reflects task performance. Pilot studies using real-time fMRI-based neurofeedback for motor function recovery in stroke patients have also shown some promise (Sitaram et al., 2012; Liew et al., 2016). Using motor-imagery-based strategies, patients were able to increase connectivity between cortical and subcortical regions (Liew et al., 2016) and improve regulation of activity in regions relevant to motor function (Sitaram et al., 2012; Lioi et al., 2020).
Lioi et al. (2020) also proposed an adaptive, multi-target motor imagery training approach. They used two target regions whose contributions to the feedback signal were weighted, with the weights adjusted in the latter part of the training. In principle, this is similar to the proposed incremental SVM training approach. With an SVM, regions are weighted according to their contribution to the classification, and with incremental training, these weights can be adjusted as the activation pattern changes over the course of training. Thus, further optimizing neurofeedback-based motor imagery training with the incremental strategy could help improve the reliability of feedback information during motor imagery training. This approach could also be used to accommodate learning strategies, which may vary among individuals.

One limitation of the current study is the small number of feedback runs available for continuously assessing the improvement in accuracy with SVM re-training. We used only a limited number of runs to minimize task fatigue, which would increase with more feedback runs and could itself introduce changes in activation patterns. Another limitation is the lack of independent instruments to assess the improvement in participants’ motor imagery performance during or after training. In the absence of such instruments, we used an indirect measure based on the accuracy of classifying the LGO task against the RGO task. Since the feedback information was mainly based on rest vs. task (LGO/RGO) performance, improvement in LGO vs. RGO classification would suggest better separation of the tasks’ activation patterns, which could be taken as an indication of improved motor imagery performance in both tasks.

Conclusion

Our results confirmed our hypothesis that activation patterns could change dynamically during neurofeedback training as participants learned to perform the target motor imagery tasks. To account for these changes, we employed a training strategy that continuously updates the trained classifiers after every feedback run, which improved the SVMs’ overall classification performance. This is important for providing more reliable and accurate feedback information to participants during neurofeedback training, an essential factor that could affect the effectiveness of neurofeedback in clinical settings.

Data Availability Statement

The datasets generated for this study will not be made publicly available due to privacy and legal reasons. Requests to access these datasets should be directed to TN at nakai.toshiharu@nitech.ac.jp.

Ethics Statement

This study was reviewed and approved by the Institutional Review Board of the National Center for Geriatrics and Gerontology of Japan. All participants provided written informed consent before joining the study.

Author Contributions

EB, AY, SK, and TN conceived and designed the study. KT and SK assembled and programmed the humanoid robot for real-time fMRI application. EB developed the real-time fMRI analysis system. EB, AY, KT, and TN performed the experiments and analyzed the data. TN gave technical support and supervised the whole research procedure. EB and TN wrote the draft of the manuscript. All authors reviewed and approved the final version of the manuscript.

Funding

EB was supported by Grants-in-Aid for Scientific Research from the Japan Society for the Promotion of Science (KAKENHI Grant Number 26350993). TN was supported by Grants-in-Aid for Scientific Research from the Japan Society for the Promotion of Science (KAKENHI Grant Numbers 15H03104, 16K13063, and 19H04025) and the Kayamori Foundation of Information Science Advancement (FY 2017).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnins.2020.00623/full#supplementary-material

References

Amano, K., Shibata, K., Kawato, M., Sasaki, Y., and Watanabe, T. (2016). Learning to associate orientation with color in early visual areas by associative decoded fMRI neurofeedback. Curr. Biol. 26, 1861–1866. doi: 10.1016/j.cub.2016.05.014

Ashburner, J., and Friston, K. J. (2005). Unified segmentation. Neuroimage 26, 839–851. doi: 10.1016/j.neuroimage.2005.02.018

Bagarinao, E., Yoshida, A., Ueno, M., Terabe, K., Kato, S., Isoda, H., et al. (2018). Improved volitional recall of motor-imagery-related brain activation patterns using real-time functional MRI-based neurofeedback. Front. Hum. Neurosci. 12:158. doi: 10.3389/fnhum.2018.00158

Berman, B. D., Horovitz, S. G., Venkataraman, G., and Hallett, M. (2012). Self-modulation of primary motor cortex activity with motor and motor imagery tasks using real-time fMRI-based neurofeedback. Neuroimage 59, 917–925. doi: 10.1016/j.neuroimage.2011.07.035

Boe, S., Gionfriddo, A., Kraeutner, S., Tremblay, A., Little, G., and Bardouille, T. (2014). Laterality of brain activity during motor imagery is modulated by the provision of source level neurofeedback. Neuroimage 101, 159–167. doi: 10.1016/j.neuroimage.2014.06.066

Caria, A., Sitaram, R., Veit, R., Begliomini, C., and Birbaumer, N. (2010). Volitional control of anterior insula activity modulates the response to aversive stimuli. A real-time functional magnetic resonance imaging study. Biol. Psychiatry 68, 425–432. doi: 10.1016/j.biopsych.2010.04.020

Caria, A., Veit, R., Sitaram, R., Lotze, M., Weiskopf, N., Grodd, W., et al. (2007). Regulation of anterior insular cortex activity using real-time fMRI. Neuroimage 35, 1238–1246. doi: 10.1016/j.neuroimage.2007.01.018

Chang, C.-C., and Lin, C.-J. (2011). LIBSVM: a library for support vector machines. ACM Trans. Intell. Syst. Technol. 2, 1–27. doi: 10.1145/1961189.1961199

Chiew, M., LaConte, S. M., and Graham, S. J. (2012). Investigation of fMRI neurofeedback of differential primary motor cortex activity using kinesthetic motor imagery. Neuroimage 61, 21–31. doi: 10.1016/j.neuroimage.2012.02.053

Cortese, A., Amano, K., Koizumi, A., Kawato, M., and Lau, H. (2016). Multivoxel neurofeedback selectively modulates confidence without changing perceptual performance. Nat. Commun. 7:13669. doi: 10.1038/ncomms13669

Cortese, A., Amano, K., Koizumi, A., Lau, H., and Kawato, M. (2017). Decoded fMRI neurofeedback can induce bidirectional confidence changes within single participants. Neuroimage 149, 323–337. doi: 10.1016/j.neuroimage.2017.01.069

de Vries, S., and Mulder, T. (2007). Motor imagery and stroke rehabilitation: a critical discussion. J. Rehabil. Med. 39, 5–13. doi: 10.2340/16501977-0020

deCharms, R. C., Christoff, K., Glover, G. H., Pauly, J. M., Whitfield, S., and Gabrieli, J. D. E. (2004). Learned regulation of spatially localized brain activation using real-time fMRI. Neuroimage 21, 436–443. doi: 10.1016/j.neuroimage.2003.08.041

deCharms, R. C., Maeda, F., Glover, G. H., Ludlow, D., Pauly, J. M., Soneji, D., et al. (2005). Control over brain activation and pain learned by using real-time functional MRI. Proc. Natl. Acad. Sci. U.S.A. 102, 18626–18631. doi: 10.1073/pnas.0505210102

Feltz, D., and Landers, D. (1983). The effects of mental practice on motor skill learning and performance: a meta-analysis. J. Sport Psychol. 5, 25–57. doi: 10.1123/jsp.5.1.25

Frolov, A. A., Mokienko, O., Lyukmanov, R., Biryukova, E., Kotov, S., Turbina, L., et al. (2017). Post-stroke rehabilitation training with a motor-imagery-based brain-computer interface (BCI)-controlled hand exoskeleton: a randomized controlled multicenter trial. Front. Neurosci. 11:400. doi: 10.3389/fnins.2017.00400

Grèzes, J., and Decety, J. (2000). Functional anatomy of execution, mental simulation, observation, and verb generation of actions: a meta-analysis. Hum. Brain Mapp. 12, 1–19. doi: 10.1002/1097-0193(200101)12:1<1::aid-hbm10>3.0.co;2-v

Guillot, A., Louis, M., and Collet, C. (2010). “Neurophysiological substrates of motor imagery ability,” in The Neurophysiological Foundations of Mental and Motor Imagery, eds A. Guillot, and C. Collet, (New York, NY: Oxford University Press), 109–124. doi: 10.1093/acprof:oso/9780199546251.003.0008

Haller, S., Kopel, R., Jhooti, P., Haas, T., Scharnowski, F., Lovblad, K. O., et al. (2013). Dynamic reconfiguration of human brain functional networks through neurofeedback. Neuroimage 81, 243–252. doi: 10.1016/j.neuroimage.2013.05.019

Hamilton, J. P., Glover, G. H., Bagarinao, E., Chang, C., Mackey, S., Sacchet, M. D., et al. (2016). Effects of salience-network-node neurofeedback training on affective biases in major depressive disorder. Psychiatry Res. 249, 91–96. doi: 10.1016/j.pscychresns.2016.01.016

Hastie, T., Tibshirani, R., and Friedman, J. (2009). The Elements of Statistical Learning, 2nd Edn. New York, NY: Springer.

Hatta, T., and Hotta, C. (2008). Which inventory should be used to assess Japanese handedness: comparison between Edinburgh and H. N. handedness inventories. J. Hum. Environ. Stud. 6, 45–48. doi: 10.4189/shes.6.2_45

Hétu, S., Grégoire, M., Saimpont, A., Coll, M. P., Eugène, F., Michon, P. E., et al. (2013). The neural network of motor imagery: an ALE meta-analysis. Neurosci. Biobehav. Rev. 37, 930–949. doi: 10.1016/j.neubiorev.2013.03.017

Jackson, P. L., Lafleur, M. F., Malouin, F., Richards, C., and Doyon, J. (2001). Potential role of mental practice using motor imagery in neurologic rehabilitation. Arch. Phys. Med. Rehabil. 82, 1133–1141. doi: 10.1053/apmr.2001.24286

Kim, D. Y., Yoo, S. S., Tegethoff, M., Meinlschmidt, G., and Lee, J. H. (2015). The inclusion of functional connectivity information into fMRI-based neurofeedback improves its efficacy in the reduction of cigarette cravings. J. Cogn. Neurosci. 27, 1552–1572. doi: 10.1162/jocn_a_00802

Koizumi, A., Amano, K., Cortese, A., Shibata, K., Yoshida, W., Seymour, B., et al. (2016). Fear reduction without fear through reinforcement of neural activity that bypasses conscious exposure. Nat. Hum. Behav. 1:0006. doi: 10.1038/s41562-016-0006

Koush, Y., Meskaldji, D.-E., Pichon, S., Rey, G., Rieger, S. W., Linden, D. E. J., et al. (2017). Learning control over emotion networks through connectivity-based neurofeedback. Cereb. Cortex 27, 1193–1202. doi: 10.1093/cercor/bhv311

Koush, Y., Rosa, M. J., Robineau, F., Heinen, K., Rieger, W. S., Weiskopf, N., et al. (2013). Connectivity-based neurofeedback: dynamic causal modeling for real-time fMRI. Neuroimage 81, 422–430. doi: 10.1016/j.neuroimage.2013.05.010

LaConte, S. M. (2011). Decoding fMRI brain states in real-time. Neuroimage 56, 440–454. doi: 10.1016/j.neuroimage.2010.06.052

LaConte, S. M., Peltier, S. J., and Hu, X. P. (2007). Real-time fMRI using brain-state classification. Hum. Brain Mapp. 28, 1033–1044. doi: 10.1002/hbm.20326

Lee, S., Ruiz, S., Caria, A., Veit, R., Birbaumer, N., and Sitaram, R. (2011). Detection of cerebral reorganization induced by real-time fMRI feedback training of insula activation: a multivariate investigation. Neurorehabil. Neural Repair 25, 259–267. doi: 10.1177/1545968310385128

Liew, S. L., Rana, M., Cornelsen, S., Fortunato De Barros Filho, M., Birbaumer, N., Sitaram, R., et al. (2016). Improving motor corticothalamic communication after stroke using real-time fMRI connectivity-based neurofeedback. Neurorehabil. Neural Repair 30, 671–675. doi: 10.1177/1545968315619699

Lioi, G., Butet, S., Fleury, M., Bannier, E., Lécuyer, A., Bonan, I., et al. (2020). A multi-target motor imagery training using bimodal EEG-fMRI neurofeedback: a pilot study in chronic stroke patients. Front. Hum. Neurosci. 14:37. doi: 10.3389/fnhum.2020.00037

Malouin, F., and Richards, C. L. (2013). “Clinical applications of motor imagery in rehabilitation,” in Multisensory Imagery, eds S. Lacey, and R. Lawson, (New York, NY: Springer), 397–419. doi: 10.1007/978-1-4614-5879-1_21

Marchesotti, S., Martuzzi, R., Schurger, A., Blefari, M. L., del Millán, J. R., Bleuler, H., et al. (2017). Cortical and subcortical mechanisms of brain-machine interfaces. Hum. Brain Mapp. 38, 2971–2989. doi: 10.1002/hbm.23566

Maruff, P., Wilson, P. H., De Fazio, J., Cerritelli, B., Hedt, A., and Currie, J. (1999). Asymmetries between dominant and non-dominant hands in real and imagined motor task performance. Neuropsychologia 37, 379–384. doi: 10.1016/S0028-3932(98)00064-5

Mehler, D. M. A., Williams, A. N., Krause, F., Lührs, M., Wise, R. G., Turner, D. L., et al. (2019). The BOLD response in primary motor cortex and supplementary motor area during kinesthetic motor imagery based graded fMRI neurofeedback. Neuroimage 184, 36–44. doi: 10.1016/j.neuroimage.2018.09.007

Mugler, J. P., and Brookeman, J. R. (1990). Three-dimensional magnetization-prepared rapid gradient-echo imaging (3D MP RAGE). Magn. Reson. Med. 15, 152–157. doi: 10.1002/mrm.1910150117

Müller, R. A., Kleinhans, N., Pierce, K., Kemmotsu, N., and Courchesne, E. (2002). Functional MRI of motor sequence acquisition: effects of learning stage and performance. Cogn. Brain Res. 14, 277–293. doi: 10.1016/S0926-6410(02)00131-3

Ono, T., Shindo, K., Kawashima, K., Ota, N., Ito, M., Ota, T., et al. (2014). Brain-computer interface with somatosensory feedback improves functional recovery from severe hemiplegia due to chronic stroke. Front. Neuroeng. 7:19. doi: 10.3389/fneng.2014.00019

O’Shea, H., and Moran, A. (2017). Does motor simulation theory explain the cognitive mechanisms underlying motor imagery? A critical review. Front. Hum. Neurosci. 11:72. doi: 10.3389/fnhum.2017.00072

Paizis, C., Skoura, X., Personnier, P., and Papaxanthis, C. (2014). Motor asymmetry attenuation in older adults during imagined arm movements. Front. Aging Neurosci. 6:49. doi: 10.3389/fnagi.2014.00049

Ramos-Murguialday, A., Broetz, D., Rea, M., Läer, L., Yilmaz, Ö., Brasil, F. L., et al. (2013). Brain-machine interface in chronic stroke rehabilitation: a controlled study. Ann. Neurol. 74, 100–108. doi: 10.1002/ana.23879

Rota, G., Handjaras, G., Sitaram, R., Birbaumer, N., and Dogil, G. (2011). Reorganization of functional and effective connectivity during real-time fMRI-BCI modulation of prosody processing. Brain Lang. 117, 123–132. doi: 10.1016/j.bandl.2010.07.008

Rota, G., Sitaram, R., Veit, R., Erb, M., Weiskopf, N., Dogil, G., et al. (2009). Self-regulation of regional cortical activity using real-time fMRI: the right inferior frontal gyrus and linguistic processing. Hum. Brain Mapp. 30, 1605–1614. doi: 10.1002/hbm.20621

Sharma, N., Baron, J. C., and Rowe, J. B. (2009a). Motor imagery after stroke: relating outcome to motor network connectivity. Ann. Neurol. 66, 604–616. doi: 10.1002/ana.21810

Sharma, N., Pomeroy, V. M., and Baron, J. C. (2006). Motor imagery: a backdoor to the motor system after stroke? Stroke 37, 1941–1952. doi: 10.1161/01.STR.0000226902.43357.fc

Sharma, N., Simmons, L. H., Jones, P. S., Day, D. J., Carpenter, T. A., Pomeroy, V. M., et al. (2009b). Motor imagery after subcortical stroke. Stroke 40, 1315–1324. doi: 10.1161/STROKEAHA.108.525766

Sherwood, M. S., Kane, J. H., Weisend, M. P., and Parker, J. G. (2016). Enhanced control of dorsolateral prefrontal cortex neurophysiology with real-time functional magnetic resonance imaging (rt-fMRI) neurofeedback training and working memory practice. Neuroimage 124, 214–223. doi: 10.1016/j.neuroimage.2015.08.074

Shibata, K., Watanabe, T., Sasaki, Y., and Kawato, M. (2011). Perceptual learning incepted by decoded fMRI neurofeedback without stimulus presentation. Science 334, 1413–1415. doi: 10.1126/science.1212003

Sitaram, R., Lee, S., Ruiz, S., Rana, M., Veit, R., and Birbaumer, N. (2011). Real-time support vector classification and feedback of multiple emotional brain states. Neuroimage 56, 753–765. doi: 10.1016/j.neuroimage.2010.08.007

Sitaram, R., Ros, T., Stoeckel, L., Haller, S., Scharnowski, F., Lewis-Peacock, J., et al. (2017). Closed-loop brain training: the science of neurofeedback. Nat. Rev. Neurosci. 18, 86–100. doi: 10.1038/nrn.2016.164

Sitaram, R., Veit, R., Stevens, B., Caria, A., Gerloff, C., Birbaumer, N., et al. (2012). Acquired control of ventral premotor cortex activity by feedback training. Neurorehabil. Neural Repair 26, 256–265. doi: 10.1177/1545968311418345

Tong, Y., Pendy, J. T., Li, W. A., Du, H., Zhang, T., Geng, X., et al. (2017). Motor imagery-based rehabilitation: potential neural correlates and clinical application for functional recovery of motor deficits after stroke. Aging Dis. 8, 364–371. doi: 10.14336/AD.2016.1012

Vapnik, V. N. (1999). An overview of statistical learning theory. IEEE Trans. Neural Networks 10, 988–999. doi: 10.1109/72.788640

Xia, M., Wang, J., and He, Y. (2013). BrainNet Viewer: a network visualization tool for human brain connectomics. PLoS One 8:e68910. doi: 10.1371/journal.pone.0068910

Zimmermann-Schlatter, A., Schuster, C., Puhan, M., Siekierka, E., and Steurer, J. (2008). Efficacy of motor imagery in post-stroke rehabilitation: a systematic review. J. Neuroeng. Rehabil. 5:8.

Zotev, V., Krueger, F., Phillips, R., Alvarez, R. P., Simmons, W. K., Bellgowan, P., et al. (2011). Self-regulation of amygdala activation using real-time fMRI neurofeedback. PLoS One 6:e24522. doi: 10.1371/journal.pone.0024522

Keywords: real-time fMRI, motor imagery, neurofeedback, support vector machines, incremental training, brain state, learning

Citation: Bagarinao E, Yoshida A, Terabe K, Kato S and Nakai T (2020) Improving Real-Time Brain State Classification of Motor Imagery Tasks During Neurofeedback Training. Front. Neurosci. 14:623. doi: 10.3389/fnins.2020.00623

Received: 30 September 2019; Accepted: 19 May 2020;

Published: 24 June 2020.

Edited by:

Javier Gonzalez-Castillo, National Institutes of Health (NIH), United States

Reviewed by:

Giulia Lioi, Inria Rennes - Bretagne Atlantique Research Centre, France

Matthew Scott Sherwood, Wright State University, United States

Copyright © 2020 Bagarinao, Yoshida, Terabe, Kato and Nakai. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Epifanio Bagarinao, ebagarinao@met.nagoya-u.ac.jp
