- ¹School of Information Science and Engineering, Shandong University, Qingdao, China

- ²Institute of Brain and Brain-Inspired Science, Shandong University, Jinan, China

- ³Department of Anesthesiology, Qilu Hospital of Shandong University, Jinan, China

- ⁴Tensor Learning Unit, RIKEN AIP, Tokyo, Japan

- ⁵School of Automation, Guangdong University of Technology, Guangzhou, China

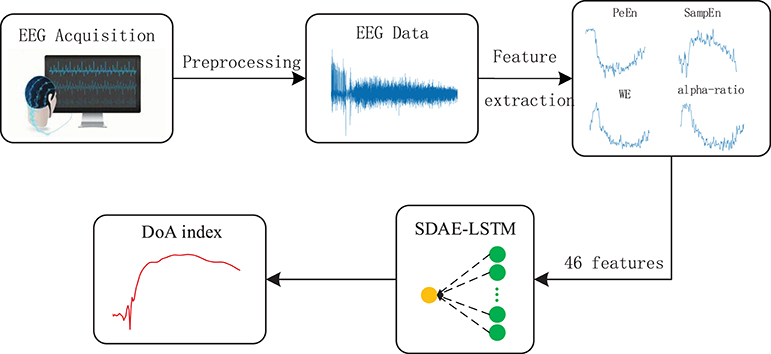

Electroencephalogram (EEG) signals contain valuable information about the different physiological states of the brain, with a variety of linear and nonlinear features that can be used to investigate brain activity. Monitoring the depth of anesthesia (DoA) with EEG is an ongoing challenge in anesthesia research. In this paper, we propose a novel method based on Long Short-Term Memory (LSTM) and a sparse denoising autoencoder (SDAE) that combines hybrid EEG features to monitor the DoA. The EEG signals were first preprocessed (e.g., by filtering), and then more than ten features, including sample entropy, permutation entropy, spectra, and alpha-ratio, were extracted. We then integrated these features to extract their essential structure and learn an efficient temporal model for monitoring the DoA. Compared with methods using a single feature, the proposed model estimated the depth of anesthesia with a higher prediction probability (Pk). Experimental results on the dataset demonstrated that our proposed method performed better than permutation entropy, alpha-ratio, LSTM alone, and other traditional indices.

1. Introduction

Electroencephalogram (EEG) signals have been widely used in various clinical applications, including disease diagnosis and monitoring the depth of anesthesia (Zhang et al., 2001; Bruhn et al., 2006; Jameson and Sloan, 2006). Traditionally, anesthesiologists estimate the DoA by asking patients questions. This approach is imprecise in clinical practice, and its accuracy depends on the experience of the anesthesiologist. Misjudging a patient's DoA during surgery is serious and dangerous. If anesthesia is not deep enough, the patient may be aware during the operation and suffer severe psychological trauma. Conversely, if too much anesthetic is used, the patient will be too deeply anesthetized, which impairs recovery and can even be life-threatening. It is therefore important to monitor the DoA accurately. EEG-based methods have been adopted as efficient clinical monitoring techniques because EEG features track time-varying brain states and are convenient to acquire.

In recent years, many methods have been developed for monitoring the DoA (Jiao et al., 2018; Jin et al., 2018). The bispectral (BIS) index, proposed by Rampil (1998), expresses the DoA on a scale from 0 to 100. This algorithm has limitations during burst suppression patterns (BSP), where it can give a high BIS value while the patient is still anesthetized (Kearse et al., 1994). The EEG signal is non-stationary and exhibits nonlinear or chaotic behavior (Elbert et al., 1994; Natarajan et al., 2004), and many studies have shown that nonlinear analysis is useful for EEG in medical applications. Feature extraction based on nonlinear dynamics is therefore widely used for monitoring anesthesia depth, for example, the Hurst exponent (Alvarez-Ramirez et al., 2008), detrended fluctuation analysis (Jospin et al., 2007), entropies (Bruhn et al., 2000; Chen et al., 2007), and frequency band power ratios (Drummond et al., 1991).

The wavelet transform is an effective tool for extracting and analyzing the essential structure of a signal in the time-frequency domain (Rezek and Roberts, 1998). As a time-frequency method, the wavelet transform automatically adjusts the size of the time window to match the frequency content of the signal, which makes it well suited to signal analysis and processing. Many researchers have therefore developed wavelet entropy algorithms for DoA monitoring, such as Shannon wavelet entropy (SWE), Tsallis wavelet entropy (TWE), and Renyi wavelet entropy (RWE) (Rosso et al., 2006; Särkelä et al., 2007; Maszczyk and Duch, 2008). Wavelet entropy represents the relative energy associated with each frequency band, can detect similarities between signal segments (Puthankattil and Joseph, 2012; Benzy and Jasmin, 2015), and measures the degree of order/disorder of a signal. Permutation entropy is a complexity measure for time series analysis. It is simple and computationally inexpensive, which makes it useful for monitoring dynamic changes in complex time series, and it is robust against artifacts in EEG in the awake state (Shalbaf et al., 2013). However, permutation entropy is not effective in deep anesthesia because of the high-frequency waves that occur during suppression periods (Cao et al., 2004; Li et al., 2008, 2010; Olofsen et al., 2008). Sample entropy was developed from approximate entropy and estimates the irregularity and complexity of a time series from its reconstructed vectors. Compared with approximate entropy, sample entropy excludes self-matches, depends less on the length of the time series, and is more consistent over a wide range of conditions (Richman and Moorman, 2000; Yoo et al., 2012). It can track the state of brain activity under high doses of anesthetic drugs but requires noise-free data (Shalbaf et al., 2012).

Several ratios of electrical activity in different frequency bands have been proposed as indices of anesthesia depth (Drummond et al., 1991). Shah et al. (1988) showed that the ratio of alpha and beta power to delta power appears to be a useful tool for identifying stages of isoflurane anesthesia.

In addition to traditional signal processing methods, learning-based methods have been widely used in EEG signal processing with good results (Zhang et al., 2015, 2018; Wu et al., 2019), and in recent years they have also performed well for monitoring the DoA. Shalbaf et al. (2013) used an artificial neural network to classify the DoA with sample entropy and permutation entropy as features. Because different features extracted from the EEG represent different aspects of the signal, using multiple parameters to assess the depth of anesthesia is effective. Shalbaf et al. (2018) integrated the Beta index, SWE, sample entropy, and detrended fluctuation analysis as multiple features and used an adaptive neuro-fuzzy inference system to classify the stages of DoA. Using five indices (middle frequency, spectral edge frequency, approximate entropy, sample entropy, and permutation entropy) as the inputs of an artificial neural network, Liu et al. (2016) obtained a combined index and found that it was more accurate than any single index for monitoring DoA. Liu et al. (2019) used EEG spectral information as the input of a convolutional neural network (CNN) trained to classify the DoA, which achieved better results.

A recurrent neural network (RNN) is a neural network with memory that can exploit temporal information when analyzing time series. However, traditional RNNs suffer from vanishing or exploding gradients; the long short-term memory (LSTM) network, an improved RNN, alleviates this problem to a certain extent (Hochreiter and Schmidhuber, 1997). LSTM has been successfully applied in various fields, such as handwriting recognition (Graves and Schmidhuber, 2009), machine translation (Sutskever et al., 2014), and speech recognition (Graves et al., 2013).

An autoencoder can learn an efficient representation of the input data through unsupervised learning (Vincent et al., 2008; Baldi, 2012). Li et al. (2015) used the Lomb-Scargle periodogram and a denoising autoencoder to estimate spectral power from incomplete EEG and showed that this method is suitable for decoding incomplete EEG. A denoising sparse autoencoder has been shown to extract features from data and improve their robustness (Meng et al., 2017). Qiu et al. (2018) proposed a seizure detection method based on a denoising sparse autoencoder, which achieved high classification accuracy.

In this paper, we investigate an anesthesia depth monitoring method that combines hybrid features with a sparse denoising autoencoder (SDAE) and LSTM. We preprocessed the noisy EEG signal with a sixth-order Butterworth filter. Permutation entropy, sample entropy, wavelet entropy, frequency band power, and the frequency spectrum were then extracted as features and input into the SDAE-LSTM (sparse denoising autoencoder and long short-term memory) network to estimate the DoA. The combination of these features compensates for the shortcomings of individual features, and it proved effective in our experiments. Combining an SDAE with LSTM allows the system to exploit the temporal information in EEG, increases its robustness, and suppresses noise. The experimental results demonstrated that the proposed hybrid method achieves a higher prediction probability (Pk) and better prediction performance than traditional approaches.

The remainder of this paper is organized as follows. Section 2 introduces the dataset, the baseline methods commonly used for DoA monitoring, and our SDAE-LSTM network. Section 3 presents the experimental results of SDAE-LSTM and compares them with those of other methods. Finally, Sections 4 and 5 provide the discussion and conclusions.

2. Materials and Methods

2.1. Data

The dataset used for evaluation comes from a previous study (McKay et al., 2006); it was collected at Waikato Hospital in Hamilton, New Zealand, and contains data from 20 patients aged 18–63 years. These patients were scheduled for elective general, orthopedic, or gynecological surgery. The experiment was reviewed and approved by the Waikato Hospital ethics committee, and all subjects provided written informed consent (McKay et al., 2006).

All trials were performed under the conditions specified by the American Society of Anesthesiologists. The raw EEG, unprocessed sevoflurane concentration, processed end-tidal sevoflurane concentration, response entropy (RE), and state entropy (SE) of each patient were recorded in the dataset. A commercial GE electrode system, consisting of a self-adhesive flexible band holding three electrodes, was applied to each patient's forehead to record EEG data at 100 samples/s. The end-tidal sevoflurane concentration, measured at the mouth, was also sampled at 100 samples/s (McKay et al., 2006). A plug-in M-Entropy module was used to measure RE and SE (0.2 values/s each); the module's sampling rate is 1,600 Hz, its frequency bandwidth is 0.5–118 Hz, and its amplifier noise level is < 0.5 µV. Patients first inhaled fresh gas at 4 L/min and were then given 3% sevoflurane for 2 min, followed immediately by a 7% inspired concentration. When RE had decreased to 20, 7% sevoflurane was continued for a further 2 min. Finally, sevoflurane was turned off.

We used the continuous EEG recording of each patient throughout the whole procedure rather than segmenting the signal.

2.2. Feature Extraction

2.2.1. Sample Entropy

Sample entropy (SampEn) was developed by Richman and Moorman (2000) to quantify the complexity of finite time series; larger values of SampEn reflect a more irregular signal. Given a time series x(i), 1 ≤ i ≤ N, it can be reconstructed into N − m + 1 vectors Xm(i), defined as:

$$X_m(i) = [x(i), x(i+1), \ldots, x(i+m-1)], \quad 1 \le i \le N-m+1$$

Let d be the distance between the vectors Xm(i) and Xm(j), given by the maximum absolute difference of their corresponding elements:

$$d[X_m(i), X_m(j)] = \max_{0 \le k \le m-1} \left| x(i+k) - x(j+k) \right|$$

The probability $C_i^m(r)$ that Xm(j) lies within distance r of Xm(i) is calculated as:

$$C_i^m(r) = \frac{n_i(m, r)}{N - m}$$

where ni(m, r) is the number of vectors Xm(j), j ≠ i, that are similar to Xm(i), that is, that satisfy d[Xm(i), Xm(j)] ≤ r. For embedding dimension m, the template matches are averaged over all vectors:

$$B^m(r) = \frac{1}{N-m+1} \sum_{i=1}^{N-m+1} C_i^m(r)$$

Setting m = m + 1 and repeating the above steps gives $B^{m+1}(r)$, and the SampEn of the time series is estimated by:

$$\mathrm{SampEn}(m, r, N) = -\ln \frac{B^{m+1}(r)}{B^{m}(r)}$$

where ln is the natural logarithm. The SampEn index depends on three parameters: N is the length of the time series, r is the tolerance that determines whether two patterns are similar, and m is the length of the compared sequences. In this paper, we set N = 500, r = 0.2, and m = 2, following Bruhn et al. (2000) and Liang et al. (2015).
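The steps above can be sketched in NumPy as follows. This is a minimal illustration, not the authors' implementation: it follows the common Richman and Moorman counting convention (the same N − m templates are used for m and m + 1), and it assumes the tolerance r = 0.2 is scaled by the standard deviation of the series, which the text does not state explicitly.

```python
import numpy as np

def sample_entropy(x, m=2, r=0.2):
    """SampEn(m, r, N); r is ASSUMED to be a fraction of the signal's standard deviation."""
    x = np.asarray(x, dtype=float)
    n = len(x)
    tol = r * np.std(x)

    def count_matches(dim):
        # Use the same N - m templates for dim = m and dim = m + 1
        templates = np.array([x[i:i + dim] for i in range(n - m)])
        count = 0
        for i in range(len(templates) - 1):
            # Chebyshev distance to all later templates (self-matches excluded)
            d = np.max(np.abs(templates[i + 1:] - templates[i]), axis=1)
            count += int(np.sum(d <= tol))
        return count

    b = count_matches(m)        # matches of length m
    a = count_matches(m + 1)    # matches of length m + 1
    if a == 0 or b == 0:
        return np.inf           # undefined when no matches are found
    return -np.log(a / b)
```

A regular signal such as a sine wave yields a much smaller value than white noise of the same length, which is the behavior the index relies on.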

2.2.2. Permutation Entropy

Permutation entropy (PeEn) provides a simple and robust DoA estimation method with low computational complexity. It quantifies the regularity of the EEG signal and takes the temporal order of the values into account (Li et al., 2008). Given a time series XN = [x1, x2, …, xN] with N points, XN can be reconstructed as:

$$X_i = [x_i, x_{i+\tau}, \ldots, x_{i+(m-1)\tau}], \quad i = 1, 2, \ldots, N-(m-1)\tau$$

where τ is the time delay and m denotes the embedding dimension. Then, the elements of each Xi can be rearranged in increasing order:

$$x_{i+(j_1-1)\tau} \le x_{i+(j_2-1)\tau} \le \cdots \le x_{i+(j_m-1)\tau}$$

There are J = m! possible permutations for embedding dimension m, so each vector Xi can be represented by the symbol (j1, j2, …, jm) of its permutation. Let P1, …, PJ be the probabilities of the distinct symbols in XN. Based on Shannon entropy, permutation entropy is defined as:

$$\mathrm{PeEn} = -\sum_{j=1}^{J} P_j \ln P_j$$

The calculation of PeEn depends on the length of the time series N, the pattern length m, and the time lag τ, which were set to N = 500, m = 4, and τ = 1, as proposed in Su et al. (2016).
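A minimal sketch of the PeEn computation, assuming NumPy and the parameters above (m = 4, τ = 1). Ordinal patterns are obtained with `np.argsort`; the optional `normalize` flag is an addition of this sketch that divides by ln(m!) so the result lies in [0, 1].

```python
import math
from collections import Counter

import numpy as np

def permutation_entropy(x, m=4, tau=1, normalize=False):
    """Shannon entropy of the ordinal patterns of the embedded vectors X_i."""
    x = np.asarray(x, dtype=float)
    n_vectors = len(x) - (m - 1) * tau
    # Each X_i = [x_i, x_{i+tau}, ..., x_{i+(m-1)tau}] is mapped to its permutation symbol
    patterns = Counter(
        tuple(np.argsort(x[i:i + (m - 1) * tau + 1:tau], kind="stable"))
        for i in range(n_vectors)
    )
    p = np.array(list(patterns.values()), dtype=float) / n_vectors  # P_j
    h = -np.sum(p * np.log(p))
    return h / math.log(math.factorial(m)) if normalize else h
```

A monotonic ramp produces a single pattern and therefore zero entropy, while white noise spreads its vectors over nearly all 24 patterns and approaches the maximum ln(4!).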

2.2.3. Wavelet Entropy

Wavelet entropy is based on the wavelet transform with multiple scales and orientations (Särkelä et al., 2007). A suitable wavelet basis is selected and the original signal is decomposed at different scales, where Cj(k) denotes the decomposition coefficients at scale j. The wavelet energy Ej at scale j is defined as:

$$E_j = \sum_{k=1}^{L_j} \left| C_j(k) \right|^2$$

where Lj denotes the number of coefficients at decomposition scale j. The total energy of the signal can therefore be expressed as:

$$E_{tot} = \sum_{j} E_j$$

Then, the wavelet energy at each scale j is divided by the total energy to obtain the relative wavelet energy:

$$p_j = \frac{E_j}{E_{tot}}$$

Finally, the wavelet entropy is calculated as:

$$\mathrm{WE} = -\sum_{j} p_j \ln p_j$$
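The paper does not name its wavelet basis or decomposition depth, so the sketch below assumes a Haar wavelet with five levels, implemented directly in NumPy to stay self-contained. Each detail band and the final approximation band is treated as one scale j.

```python
import numpy as np

def haar_dwt_levels(x, levels):
    """Multilevel Haar DWT (ASSUMED basis); returns [cD1, ..., cD_levels, cA_levels]."""
    a = np.asarray(x, dtype=float)
    coeffs = []
    for _ in range(levels):
        if len(a) % 2:                      # pad odd length by repeating the last sample
            a = np.append(a, a[-1])
        even, odd = a[0::2], a[1::2]
        coeffs.append((even - odd) / np.sqrt(2))   # detail coefficients C_j(k)
        a = (even + odd) / np.sqrt(2)              # approximation passed to the next level
    coeffs.append(a)
    return coeffs

def wavelet_entropy(x, levels=5):
    energies = np.array([np.sum(c ** 2) for c in haar_dwt_levels(x, levels)])  # E_j
    p = energies / energies.sum()           # relative wavelet energy p_j
    p = p[p > 0]                            # 0 * ln 0 is taken as 0
    return -np.sum(p * np.log(p))
```

A slow sine concentrates its energy in the coarsest bands and gives a low value, whereas white noise spreads energy across all bands; with six bands the entropy cannot exceed ln 6.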

2.2.4. Alpha-Ratio

The alpha-ratio is the logarithm of the relative power of two distinct frequency bands and can be calculated as follows:

$$\alpha\text{-ratio} = \log \frac{E_{30-42.5}}{E_{6-12}}$$

where E30−42.5 and E6−12 represent the spectral energy in the 30–42.5 and 6–12 Hz bands, respectively (Drummond et al., 1991; Jensen et al., 2006).
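A periodogram-based sketch of the alpha-ratio, assuming a sampling rate of 100 Hz (matching the EEG recording rate in Section 2.1) and the natural logarithm; the base of the logarithm is not specified in the text.

```python
import numpy as np

def band_energy(x, fs, f_lo, f_hi):
    """Sum of periodogram power between f_lo and f_hi (inclusive), in Hz."""
    spectrum = np.abs(np.fft.rfft(x)) ** 2
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    mask = (freqs >= f_lo) & (freqs <= f_hi)
    return spectrum[mask].sum()

def alpha_ratio(x, fs=100.0):
    # log(E_30-42.5 / E_6-12); natural log is an ASSUMPTION of this sketch
    return np.log(band_energy(x, fs, 30.0, 42.5) / band_energy(x, fs, 6.0, 12.0))
```

The ratio is negative when 6–12 Hz activity dominates and positive when 30–42.5 Hz activity dominates.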

2.3. Our Work

2.3.1. Long Short-Term Memory (LSTM)

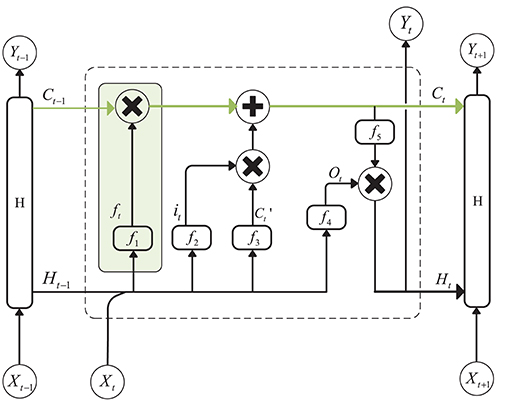

A recurrent neural network is an efficient tool for analyzing sequential data such as EEG signals. LSTM, an improvement on the RNN model, plays a significant role in solving the vanishing gradient problem during RNN training. LSTM is a special RNN that introduces self-loops and gates so that gradients can flow over long durations. Compared with an ordinary recurrent network, each LSTM cell has the same inputs and outputs but more parameters (Goodfellow et al., 2016). The structure is shown in Figure 1 (Hochreiter and Schmidhuber, 1997):

Figure 1. Structure of Long Short-Term Memory. The green line above the graph represents the cell state. The green box represents the gate, which controls the updating of the cell state.

The cell state is updated as follows, where ⊙ denotes element-wise multiplication:

$$C_t = f_t \odot C_{t-1} + i_t \odot \tilde{C}_t$$

$\tilde{C}_t$ denotes the candidate values created by a tanh layer:

$$\tilde{C}_t = \tanh\left( W_{xc} X_t + W_{hc} H_{t-1} + b_c \right)$$

In the above equation, Wxc and Whc are the weights applied to the input Xt at the current time step and to the hidden state Ht−1 at the previous time step, and bc is the bias. In the cell state update, ft and it are the forget gate and the input gate, respectively, computed as:

$$f_t = f_1\left( W_{xf} X_t + W_{hf} H_{t-1} + b_f \right), \qquad i_t = f_2\left( W_{xi} X_t + W_{hi} H_{t-1} + b_i \right)$$

where f1 and f2 are sigmoid functions that map the gate values to the range between 0 and 1. The hidden state Ht at the current time step can be written as:

$$H_t = o_t \odot f_5(C_t)$$

where ot is the output gate:

$$o_t = f_4\left( W_{xo} X_t + W_{ho} H_{t-1} + b_o \right)$$

f4 is also a sigmoid function. Wxo and Who are the weights from the input Xt and the hidden state Ht−1 to the output gate, bo is the output gate bias, and f5 is an activation function, such as tanh.

The weights and biases of the LSTM must be learned through training. One training algorithm that can be used for this is Bayesian regularization back-propagation.
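One time step of the LSTM equations can be traced in NumPy. This sketch assumes f1, f2, and f4 are logistic sigmoids and f5 is tanh; the dictionary layout of the weights (`W[g] = (W_xg, W_hg)`) is a hypothetical convention for illustration, not the network used in this study.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM time step.

    W[g] = (W_xg, W_hg) and b[g] hold the weights and bias of gate g,
    where g is 'f' (forget), 'i' (input), 'o' (output), or 'c' (candidate).
    """
    def affine(g):
        W_x, W_h = W[g]
        return W_x @ x_t + W_h @ h_prev + b[g]

    f_t = sigmoid(affine("f"))           # forget gate, f1 = sigmoid
    i_t = sigmoid(affine("i"))           # input gate, f2 = sigmoid
    c_tilde = np.tanh(affine("c"))       # candidate values from the tanh layer
    c_t = f_t * c_prev + i_t * c_tilde   # cell state update (element-wise)
    o_t = sigmoid(affine("o"))           # output gate, f4 = sigmoid
    h_t = o_t * np.tanh(c_t)             # hidden state, f5 = tanh
    return h_t, c_t
```

With all weights and biases set to zero, every gate equals 0.5 and the candidate is 0, so the cell state is simply halved at each step; this is a quick sanity check of the update rule.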

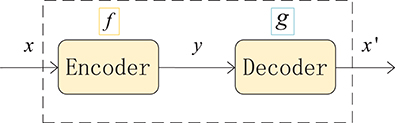

2.3.2. Autoencoder

An autoencoder (AE) is a kind of neural network that attempts to reconstruct its original input, as shown in Figure 2. The dotted blue box is an AE model consisting of two parts, an encoder and a decoder. The encoder converts the input signal x into a hidden representation y, and the decoder maps y back to an output signal x′, which is a reconstruction of x.

The purpose of an autoencoder is to recover the input x as closely as possible. In practice, however, the quantity of interest is the intermediate encoding, that is, the mapping from the input to the code. If the encoding y is constrained to differ from the input x and the system can still restore x from y, then y must carry the essential information of the original data, making it an effective, automatically learned representation of the data.

Figure 2. Structure of an autoencoder. The network consists of two parts: an encoder represented by the function f and a decoder, g. The encoder compresses the input into a hidden layer representation, and the decoder reconstructs the input from the hidden layer.

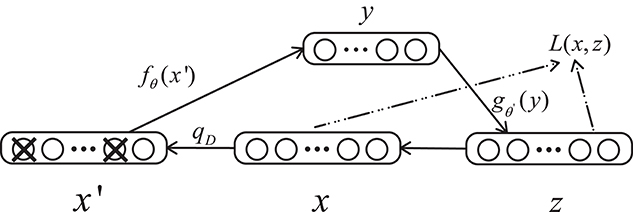

A denoising autoencoder (DAE) is an extension of the autoencoder proposed by Vincent et al. (2008). To prevent over-fitting, noise is added to the input data (the input layer of the network), which makes the learned encoder W more robust and enhances the generalization ability of the model. A schematic diagram of a denoising autoencoder is shown in Figure 3, where x is the original input data. The DAE sets each input-layer node to 0 with a certain probability to obtain a corrupted, noisy version of x (Vincent et al., 2010).

Figure 3. Structure of a denoising autoencoder. x represents the input data, x′ is the corrupted input, and L is the loss function.
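The corruption and reconstruction steps of a single sparse denoising autoencoder layer can be sketched as follows. The masking noise follows the description above and the squared reconstruction loss matches the loss L named in Figure 4; the KL-divergence sparsity penalty and the values of `drop_prob`, `rho`, and `beta` are assumptions, since the text does not specify how sparsity is enforced.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def mask_corrupt(x, drop_prob, rng):
    """Masking noise: each input value is set to 0 with probability drop_prob."""
    keep = rng.random(x.shape) >= drop_prob
    return x * keep

def sdae_loss(x, W_enc, b_enc, W_dec, b_dec, drop_prob=0.2, rho=0.05, beta=3.0, seed=0):
    """Denoising reconstruction loss plus an ASSUMED KL sparsity penalty.

    x has shape (n_samples, n_features). The encoder sees the corrupted input,
    but the reconstruction is compared with the clean x.
    """
    rng = np.random.default_rng(seed)
    x_tilde = mask_corrupt(x, drop_prob, rng)
    y = sigmoid(x_tilde @ W_enc + b_enc)      # encoder f: hidden code y
    x_rec = y @ W_dec + b_dec                 # decoder g: reconstruction x'
    recon = 0.5 * np.mean(np.sum((x_rec - x) ** 2, axis=1))   # squared loss
    rho_hat = np.clip(y.mean(axis=0), 1e-8, 1 - 1e-8)         # mean activation per hidden unit
    kl = np.sum(rho * np.log(rho / rho_hat)
                + (1 - rho) * np.log((1 - rho) / (1 - rho_hat)))
    return recon + beta * kl
```

In a stacked setup, the hidden code y of one trained layer would become the input x of the next layer; the usage check below uses the 46 → 92 sizes of the first layer reported in Section 3.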

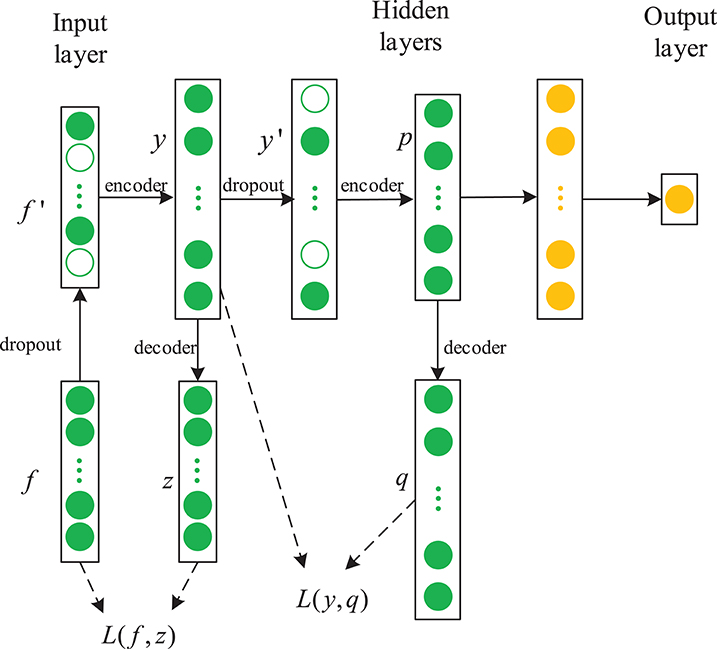

2.3.3. Our Proposed Method: SDAE-LSTM

In this paper, we propose a novel framework combining a sparse denoising autoencoder with the LSTM to predict anesthesia depth. Figure 4 shows the structure of SDAE-LSTM.

Figure 4. Structure of an SDAE-LSTM network. The green circles represent the layers of SDAEs and the yellow circles are LSTM layers. f′ represents the corrupted input features, y represents the encoded data, and L is a squared loss function.

First, we used a notch filter to remove power-line interference and a sixth-order Butterworth filter to remove frequencies < 0.8 Hz and > 50 Hz. Next, the entropies, spectrum, and other features were extracted, and wavelet thresholding was used to smooth the features. The features were then used to train the SDAE-LSTM network, with the sevoflurane effect-site concentration calculated by the PK model from the end-tidal concentration as the label. The SDAEs were trained layer by layer: after the first SDAE was trained, its encoder output was used as the input of the second SDAE, and the encoder output of the second SDAE was used as the input to the LSTM, which was trained to predict anesthesia depth. After the LSTM was trained, the whole network was fine-tuned. The anesthesia depth index was finally obtained from the output of the SDAE-LSTM. Figure 5 illustrates the entire proposed framework.

Figure 5. Depiction of our proposed framework. The SDAE-LSTM is shown in condensed form: 46 features are input to the network, which outputs a single index for monitoring the DoA. Details of the SDAE-LSTM structure are shown in Figure 4.

The extracted features capture different patterns of the EEG. Permutation entropy is robust against artifacts in EEG in the awake state (Shalbaf et al., 2013), while sample entropy can track the state of brain activity under high doses of anesthetic drugs (Shalbaf et al., 2012). Wavelet entropy measures the degree of order/disorder of the signal and provides information about the underlying dynamic processes associated with it (Rosso et al., 2001). The frequency spectrum and the alpha-ratio can also be used to detect EEG activity and have been proposed as indices of anesthesia depth in previous studies (Shah et al., 1988; Drummond et al., 1991). We finally chose 46 features: 40 spectral values (30–50 Hz, one feature point every 0.5 Hz), 2 average spectral values (over 30–47 and 47–50 Hz), 3 entropies (permutation entropy, sample entropy, and wavelet entropy), and the alpha-ratio. These 46 features were combined into a 46 × N feature matrix as the input to the neural network.
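The 42 spectral features described above could be computed per analysis window as in the sketch below. It assumes a 100 Hz sampling rate, interprets "every 0.5 Hz from 30–50 Hz" as the 40 grid points 30.0, 30.5, …, 49.5 Hz, and interpolates the periodogram onto that grid; these are interpretations, not details given in the text.

```python
import numpy as np

def spectral_features(x, fs=100.0):
    """42 spectral features: 40 grid points (30.0-49.5 Hz, 0.5 Hz step) plus two band means."""
    power = np.abs(np.fft.rfft(x)) ** 2 / len(x)       # periodogram
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    grid = np.arange(30.0, 50.0, 0.5)                   # 40 points: 30.0, 30.5, ..., 49.5 Hz
    points = np.interp(grid, freqs, power)              # spectrum sampled on the grid
    mean_30_47 = power[(freqs >= 30) & (freqs < 47)].mean()
    mean_47_50 = power[(freqs >= 47) & (freqs <= 50)].mean()
    return np.concatenate([points, [mean_30_47, mean_47_50]])
```

Appending the three entropies and the alpha-ratio to this vector gives 46 values per window, and stacking the windows column-wise (for example with `np.column_stack`) yields the 46 × N input matrix.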

2.4. PK/PD Model

The PK/PD model describes the relationship between anesthetic drug concentration and the EEG index. It consists of two parts: pharmacodynamics and pharmacokinetics. The pharmacokinetic side of the model describes how the blood concentration of the drug changes with time, and the pharmacodynamics side represents the relationship between the drug concentration at the effect site and the measured index (McKay et al., 2006).

According to McKay et al. (2006), the effect-site concentration of sevoflurane is related to the partial pressure at the effect site, which can be calculated with the classical first-order effect-site model:

$$\frac{dC_{eff}}{dt} = k_{eo} \left( C_{et} - C_{eff} \right)$$

where Cet is the end-tidal concentration, Ceff is the effect-site concentration, and keo denotes the first-order rate constant for efflux from the effect compartment.

We used a nonlinear inhibitory sigmoid Emax curve to describe the relationship between Ceff and the measured index:

$$\mathrm{Effect} = E_{max} - \left( E_{max} - E_{min} \right) \frac{C_{eff}^{\gamma}}{EC_{50}^{\gamma} + C_{eff}^{\gamma}}$$

where Effect is the EEG index, Emax and Emin are the maximum and minimum Effect, respectively, EC50 is the drug concentration that produces 50% of the maximum change in Effect, and γ is the slope of the concentration-response relationship.
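The effect-site model and the Emax curve can be evaluated numerically as below. The sketch integrates the differential equation with forward Euler, which is stable only when dt · keo < 1; the parameter values in the usage checks are illustrative, not the values fitted by McKay et al. (2006).

```python
import numpy as np

def effect_site_concentration(c_et, dt, keo, c0=0.0):
    """Forward-Euler solution of dCeff/dt = keo * (Cet - Ceff).

    c_et: end-tidal concentration samples taken every dt seconds; keo in 1/s.
    """
    c_eff = np.empty(len(c_et))
    c = c0
    for k, c_in in enumerate(c_et):
        c = c + dt * keo * (c_in - c)
        c_eff[k] = c
    return c_eff

def inhibitory_emax(c_eff, e_max, e_min, ec50, gamma):
    """Inhibitory sigmoid Emax model: Effect falls from e_max toward e_min as Ceff rises."""
    c_g = np.power(c_eff, gamma)
    return e_max - (e_max - e_min) * c_g / (ec50 ** gamma + c_g)
```

For a constant end-tidal concentration, Ceff rises monotonically toward that value, and at Ceff = EC50 the Effect lies exactly halfway between Emax and Emin.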

2.5. Prediction Probability

To evaluate the performance of the DoA methods, the prediction probability (Pk) statistic is used to measure the correlation between the measured EEG index and the drug effect-site concentration. Prediction probability was proposed by Smith et al. (1996) as a single statistic expressing the predictive performance of an anesthetic depth index.

Given two randomly drawn data points with different Ceff, Pk is the probability that the measured EEG index correctly predicts the ordering of their Ceff values. Let Pc, Pd, and Ptx be the respective probabilities that two data points drawn at random, independently and with replacement, from the population are a concordance, a discordance, or an x-only tie (tied in the index x but not in the observed depth y). The only other possibility is that the two data points are tied in observed depth y; therefore, Pc + Pd + Ptx is the probability that the two data points have distinct values of observed anesthetic depth. Pk is defined as:

$$P_K = \frac{P_c + \frac{1}{2} P_{tx}}{P_c + P_d + P_{tx}}$$

A value of 1 means that the index predicts the depth of anesthesia perfectly, and a value of 0.5 means that the index performs no better than chance. Because some indices (e.g., the entropies) decrease as anesthetic depth increases, a Pk value below 0.5 is replaced by 1 − Pk.
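Pk can be estimated from paired samples by counting concordant, discordant, and x-only-tied pairs. This sketch counts each unordered pair once, which gives the same ratio as sampling with replacement, because a point paired with itself is tied in depth and excluded, and ordered pairs are counted symmetrically. It assumes at least one pair with distinct depth values and applies the 1 − Pk fold described above.

```python
import itertools

def prediction_probability(index, depth):
    """Pk of Smith et al. (1996) from all pairs with distinct observed depth."""
    concordant = discordant = x_ties = 0
    for (x1, y1), (x2, y2) in itertools.combinations(zip(index, depth), 2):
        if y1 == y2:
            continue                      # tied in observed depth: excluded
        if x1 == x2:
            x_ties += 1                   # tied in the index only
        elif (x1 - x2) * (y1 - y2) > 0:
            concordant += 1
        else:
            discordant += 1
    pk = (concordant + 0.5 * x_ties) / (concordant + discordant + x_ties)
    return max(pk, 1.0 - pk)              # fold indices that decrease with depth
```

For example, the index [1, 1, 2, 2] against depths [1, 2, 3, 4] has four concordant pairs and two x-only ties, giving Pk = (4 + 1) / 6 ≈ 0.833.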

3. Experimental Results

In this section, we present the experimental results of the DoA index estimation using the SDAE-LSTM network and compare it with permutation entropy, sample entropy, wavelet entropy, alpha-ratio, and our proposed network without SDAE. The sevoflurane effect concentration calculated by the PK model based on end-tidal concentration was used as the label for network training.

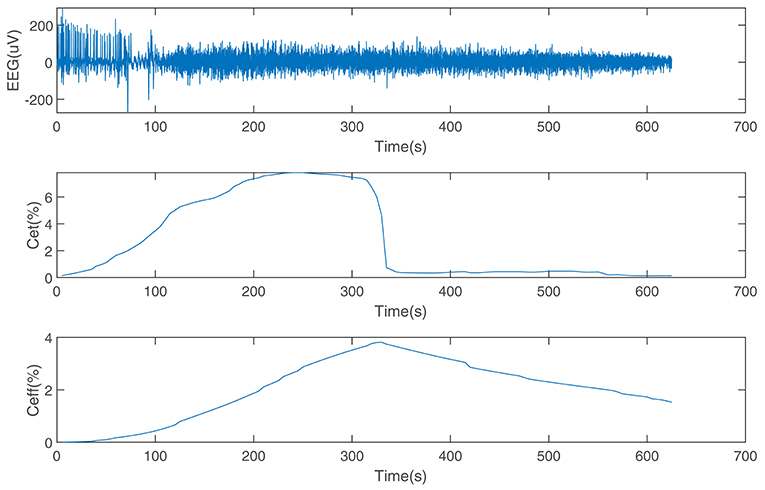

The performance of the SDAE-LSTM network was tested on the dataset introduced in Section 2, which consists of EEG data recorded from 20 subjects during anesthesia, from the awake state to deep anesthesia. Figure 6 shows the preprocessed EEG signal, the end-tidal concentration, and the effect-site concentration of one patient during sevoflurane anesthesia, from the awake state to anesthesia and back to recovery. Results were obtained by leave-one-subject-out cross-validation: in each fold, one subject's EEG data were held out as the test set, the order of the remaining 19 subjects' data was shuffled, and these 19 subjects' data were used to train the SDAE-LSTM network, which then estimated the index for the held-out subject. The result of one run was the average over the 20 folds. We repeated this procedure 100 times and report the average as the final result, which indicates that the method is effective and reproducible. For the baseline methods, the results were averaged over the 20 subjects. The comparison was performed on a desktop computer with an Intel Xeon CPU at 2.6 GHz and 64 GB DDR4 memory under Windows Server 2008, using Matlab R2017a and Python 3.5.
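The evaluation protocol can be sketched as a leave-one-subject-out loop repeated over several runs. `evaluate` is a hypothetical callable standing in for training the SDAE-LSTM on the training subjects and computing the Pk of the held-out subject.

```python
import random
from statistics import mean

def leave_one_subject_out(subject_ids, rng):
    """Yield (test_subject, training_subjects); the training order is shuffled in each fold."""
    for test in subject_ids:
        train = [s for s in subject_ids if s != test]
        rng.shuffle(train)
        yield test, train

def repeated_cv_score(evaluate, subject_ids, n_repeats=100, seed=0):
    """Average over n_repeats runs of the mean fold score.

    `evaluate(test, train)` is HYPOTHETICAL: it should train a model on `train`
    and return the Pk of the held-out subject `test`.
    """
    rng = random.Random(seed)
    run_means = []
    for _ in range(n_repeats):
        folds = leave_one_subject_out(subject_ids, rng)
        run_means.append(mean(evaluate(test, train) for test, train in folds))
    return mean(run_means)
```

With 20 subjects, each run produces 20 folds of 19 training subjects, and the reported value is the mean over runs of the per-run fold averages.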

Figure 6. EEG data from a patient and the end-tidal concentration and effect-site concentration of sevoflurane.

The network we used consists of five layers: an input layer with 46 nodes, three hidden layers with 92, 12, and 18 nodes, respectively, and an output layer with one node. The first three layers form the SDAE, and the last two layers form the LSTM.

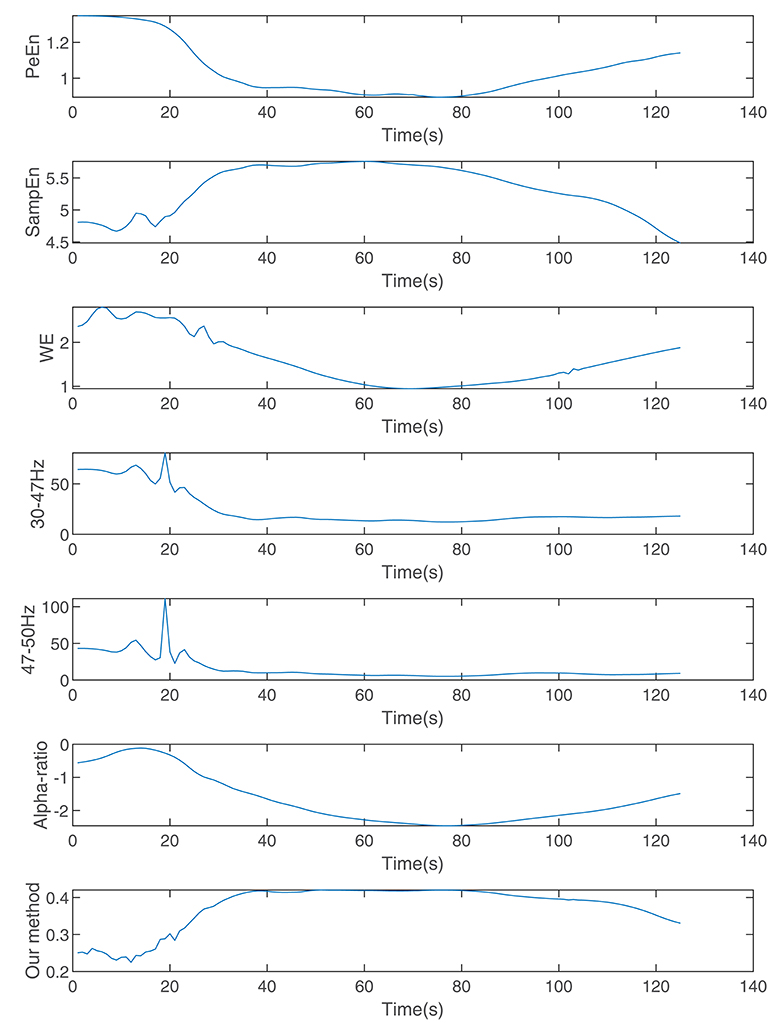

Figure 7 shows the permutation entropy, sample entropy, alpha-ratio, and the index of our method computed during anesthesia. As the drug effect-site concentration increased, the entropies decreased and the index calculated by our method increased.

Figure 7. The indices calculated by wavelet entropy, sample entropy, alpha-ratio, the average spectra of 30–47 and 47–50 Hz, permutation entropy, and our method during anesthesia.

In addition, we used the Kolmogorov-Smirnov test to check whether the Pk values of the 20 subjects were normally distributed, and paired t-tests to assess whether our proposed method is more effective than the other methods.

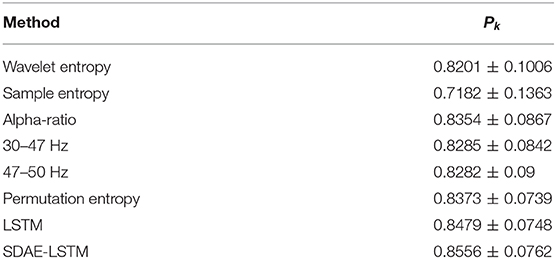

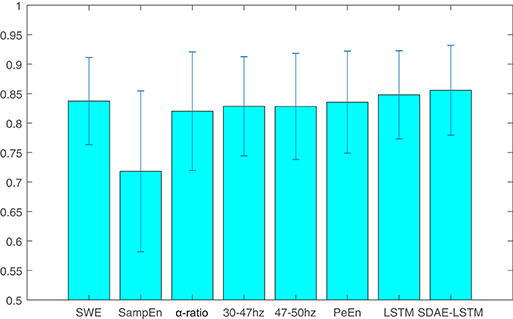

We compared the results of our proposed network with those of other methods, including permutation entropy, sample entropy, wavelet entropy, alpha-ratio, and the network without SDAE. The Pk values of these methods are presented in Table 1 and Figure 8. Among the traditional indices, permutation entropy achieved the highest Pk (0.8373); the LSTM network without SDAE reached 0.8479, and our proposed SDAE-LSTM network achieved the highest Pk of all methods (0.8556). Among the methods compared here, this combination therefore gives the highest Pk for anesthesia monitoring. Paired t-tests showed that the proposed method achieved significantly higher Pk than sample entropy, SWE, the 30–47 and 47–50 Hz average spectra, and the alpha-ratio (p < 0.05), whereas the improvement over permutation entropy did not reach significance at this level (SDAE-LSTM>PeEn: p < 0.1222, SDAE-LSTM>SampEn: p < 0.00013, SDAE-LSTM>SWE: p < 0.0202, SDAE-LSTM>30–47 Hz: p < 0.0201, SDAE-LSTM>47–50 Hz: p < 0.0431, SDAE-LSTM>alpha-ratio: p < 0.0477).

Table 1. Pk values of wavelet entropy, sample entropy, alpha-ratio, the average spectra of 30–47 and 47–50 Hz, permutation entropy, LSTM, and SDAE-LSTM.

Figure 8. The average and standard deviation of the Pk values of wavelet entropy, sample entropy, alpha-ratio, the average spectra of 30–47 and 47–50 Hz, permutation entropy, LSTM, and SDAE-LSTM. The blue lines represent the standard deviation, and the blue rectangles represent the average values.

4. Discussion

In this study, we propose a new method for monitoring the depth of anesthesia using EEG signals. We used wavelet entropy, permutation entropy, sample entropy, alpha-ratio, and the frequency spectrum as features extracted from EEG signals. EEG is a complex dynamic signal with multiple linear and nonlinear features and is very sensitive to noise, so a single linear or nonlinear method cannot capture all aspects of brain activity. The performance of these traditional methods may also vary across patients and types of surgery. Moreover, muscle relaxants, anesthetics, and similar drugs used during surgery affect the EEG, making the analysis of clinical signs unreliable (Shalbaf et al., 2015).

We therefore proposed a new method based on LSTM and an SDAE. LSTM is a neural network for processing sequence data. Compared with feedforward neural networks, it is better suited to processing and predicting events separated by relatively long intervals and delays in a time series, so it can exploit the temporal information present in EEG. An SDAE is an unsupervised deep neural network that improves on the basic autoencoder. The sparsity constraint applied to the hidden layer makes the learned representation sparse, and corrupting the input data in the denoising autoencoder enhances the robustness of the system, making it suitable for analyzing EEG signals with a low signal-to-noise ratio.

Pharmacokinetic/pharmacodynamic (PK/PD) modeling and prediction probability were used to evaluate the effectiveness of the SDAE-LSTM model for monitoring DoA. PK/PD modeling can be used to establish the relationship between the anesthetic concentration at the effect site and the EEG index, and it has been used successfully to assess EEG indices (McKay et al., 2006). The PK side of the model describes how the drug concentration in the blood changes over time. Prediction probability, proposed by Smith et al. (1996), expresses the predictive accuracy of a DoA index as a single statistic.

We used a dataset collected at a New Zealand hospital containing data from 20 patients anesthetized with sevoflurane, recording the entire process from the beginning to the end of anesthesia. Cross-validation was used to assess the effectiveness of our method, which we compared with traditional methods. Our method achieved a Pk value of 0.8556 for predicting the depth of anesthesia, about 1.8 percentage points higher than the best traditional index (permutation entropy, 0.8373) and 0.77 percentage points higher than the LSTM network alone (0.8479).

5. Conclusion

This paper presents a method for monitoring the DoA using EEG, which can help provide a safer, more reliable, and more effective clinical environment for anesthetized patients. Permutation entropy, wavelet entropy, sample entropy, alpha-ratio, and the frequency spectrum were extracted as EEG features and fed into an SDAE-LSTM network. Compared with traditional methods, the proposed method achieved a higher Pk. This approach of combining hybrid features in an SDAE-LSTM network can therefore be used in future studies of anesthesia depth monitoring.

Data Availability Statement

The dataset for this article is not publicly available. We asked for the dataset from one of the authors of McKay et al. (2006) by email. Requests to access the datasets should be directed to bG9nYW4udm9zcyYjeDAwMDQwO3dhaWthdG9kaGIuaGVhbHRoLm56.

Author Contributions

QiaW and JL contributed to the conception and design of the study. RL and CL implemented the algorithm and performed the numerical experiments. RL wrote the draft of the manuscript. QiW preprocessed the EEG data. QZ performed the statistical analysis. All authors contributed to manuscript revision and read and approved the submitted version.

Funding

This work was supported by the Fundamental Research Funds of Shandong University (Grant No. 2017JC013), the Shandong Province Key Innovation Project (Grant Nos. 2017CXGC1504, 2017CXGC1502), and the Natural Science Foundation of Shandong Province (Grant No. ZR2019MH049).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Alvarez-Ramirez, J., Echeverria, J. C., and Rodriguez, E. (2008). Performance of a high-dimensional R/S method for Hurst exponent estimation. Phys. A Statis. Mechan. Appl. 387, 6452–6462. doi: 10.1016/j.physa.2008.08.014

Baldi, P. (2012). “Autoencoders, unsupervised learning, and deep architectures,” in Proceedings of ICML Workshop on Unsupervised and Transfer Learning (Edinburgh), 37–49.

Benzy, V., and Jasmin, E. (2015). A combined wavelet and neural network based model for classifying depth of anaesthesia. Proc. Comput. Sci. 46, 1610–1617. doi: 10.1016/j.procs.2015.02.093

Bruhn, J., Myles, P., Sneyd, R., and Struys, M. (2006). Depth of anaesthesia monitoring: what's available, what's validated and what's next? Br. J. Anaesth. 97, 85–94. doi: 10.1093/bja/ael120

Bruhn, J., Röpcke, H., and Hoeft, A. (2000). Approximate entropy as an electroencephalographic measure of anesthetic drug effect during desflurane anesthesia. Anesthesiol. J. Am. Soc. Anesthesiol. 92, 715–726. doi: 10.1097/00000542-200003000-00016

Cao, Y., Tung, W.-w., Gao, J., Protopopescu, V. A., and Hively, L. M. (2004). Detecting dynamical changes in time series using the permutation entropy. Phys. Rev. E 70:046217. doi: 10.1103/PhysRevE.70.046217

Chen, W., Wang, Z., Xie, H., and Yu, W. (2007). Characterization of surface EMG signal based on fuzzy entropy. IEEE Trans. Neural Syst. Rehabil. Eng. 15, 266–272. doi: 10.1109/TNSRE.2007.897025

Drummond, J., Brann, C., Perkins, D., and Wolfe, D. (1991). A comparison of median frequency, spectral edge frequency, a frequency band power ratio, total power, and dominance shift in the determination of depth of anesthesia. Acta Anaesthesiol. Scandinav. 35, 693–699. doi: 10.1111/j.1399-6576.1991.tb03374.x

Elbert, T., Ray, W. J., Kowalik, Z. J., Skinner, J. E., Graf, K. E., and Birbaumer, N. (1994). Chaos and physiology: deterministic chaos in excitable cell assemblies. Physiol. Rev. 74, 1–47.

Goodfellow, I., Bengio, Y., and Courville, A. (2016). Deep Learning. Cambridge, MA: MIT Press.

Graves, A., Mohamed, A.-R., and Hinton, G. (2013). “Speech recognition with deep recurrent neural networks,” in 2013 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (Vancouver, BC: IEEE), 6645–6649.

Graves, A., and Schmidhuber, J. (2009). “Offline handwriting recognition with multidimensional recurrent neural networks,” in Advances in Neural Information Processing Systems, eds D. Koller, D. Schuurmans, Y. Bengio, and L. Bottou (Vancouver, BC: Curran Associates, Inc.), 545–552.

Jameson, L. C., and Sloan, T. B. (2006). Using EEG to monitor anesthesia drug effects during surgery. J. Clin. Monit. Comput. 20, 445–472. doi: 10.1007/s10877-006-9044-x

Jensen, E. W., Litvan, H., Revuelta, M., Rodriguez, B. E., Caminal, P., Martinez, P., et al. (2006). Cerebral state index during propofol anesthesia: a comparison with the bispectral index and the A-line ARX index. Anesthesiol. J. Am. Soc. Anesthesiol. 105, 28–36. doi: 10.1097/00000542-200607000-00009

Jiao, Y., Zhang, Y., Chen, X., Yin, E., Jin, J., Wang, X., et al. (2018). Sparse group representation model for motor imagery EEG classification. IEEE J. Biomed. Health Inform. 23, 631–641. doi: 10.1109/JBHI.2018.2832538

Jin, Z., Zhou, G., Gao, D., and Zhang, Y. (2018). EEG classification using sparse Bayesian extreme learning machine for brain–computer interface. Neural Comput. Appl. 1–9. doi: 10.1007/s00521-018-3735-3

Jospin, M., Caminal, P., Jensen, E. W., Litvan, H., Vallverdú, M., Struys, M. M., et al. (2007). Detrended fluctuation analysis of EEG as a measure of depth of anesthesia. IEEE Trans. Biomed. Eng. 54, 840–846. doi: 10.1109/TBME.2007.893453

Kearse, J. L. Jr., Manberg, P., Chamoun, N., deBros, F., and Zaslavsky, A. (1994). Bispectral analysis of the electroencephalogram correlates with patient movement to skin incision during propofol/nitrous oxide anesthesia. Anesthesiology 81, 1365–1370.

Li, D., Li, X., Liang, Z., Voss, L. J., and Sleigh, J. W. (2010). Multiscale permutation entropy analysis of EEG recordings during sevoflurane anesthesia. J. Neural Eng. 7:046010. doi: 10.1088/1741-2560/7/4/046010

Li, J., Struzik, Z., Zhang, L., and Cichocki, A. (2015). Feature learning from incomplete EEG with denoising autoencoder. Neurocomputing 165, 23–31. doi: 10.1016/j.neucom.2014.08.092

Li, X., Cui, S., and Voss, L. J. (2008). Using permutation entropy to measure the electroencephalographic effects of sevoflurane. Anesthesiol. J. Am. Soc. Anesthesiol. 109, 448–456. doi: 10.1097/ALN.0b013e318182a91b

Liang, Z., Wang, Y., Sun, X., Li, D., Voss, L. J., Sleigh, J. W., et al. (2015). EEG entropy measures in anesthesia. Front. Comput. Neurosci. 9:16. doi: 10.3389/fncom.2015.00016

Liu, Q., Cai, J., Fan, S.-Z., Abbod, M. F., Shieh, J.-S., Kung, Y., et al. (2019). Spectrum analysis of EEG signals using CNN to model patient's consciousness level based on anesthesiologists' experience. IEEE Access. 7, 53731–53742. doi: 10.1109/ACCESS.2019.2912273

Liu, Q., Chen, Y.-F., Fan, S.-Z., Abbod, M. F., and Shieh, J.-S. (2016). A comparison of five different algorithms for EEG signal analysis in artifacts rejection for monitoring depth of anesthesia. Biomed. Signal Proc. Control 25, 24–34. doi: 10.1016/j.bspc.2015.10.010

Maszczyk, T., and Duch, W. (2008). “Comparison of Shannon, Rényi and Tsallis entropy used in decision trees,” in International Conference on Artificial Intelligence and Soft Computing (Zakopane: Springer), 643–651.

McKay, I. D., Voss, L. J., Sleigh, J. W., Barnard, J. P., and Johannsen, E. K. (2006). Pharmacokinetic-pharmacodynamic modeling the hypnotic effect of sevoflurane using the spectral entropy of the electroencephalogram. Anesthes. Analges. 102, 91–97. doi: 10.1213/01.ane.0000184825.65124.24

Meng, L., Ding, S., and Xue, Y. (2017). Research on denoising sparse autoencoder. Int. J. Mach. Learn. Cybernet. 8, 1719–1729. doi: 10.1007/s13042-016-0550-y

Natarajan, K., Acharya, R., Alias, F., Tiboleng, T., and Puthusserypady, S. K. (2004). Nonlinear analysis of EEG signals at different mental states. BioMed. Eng. Online 3:7. doi: 10.1186/1475-925X-3-7

Olofsen, E., Sleigh, J., and Dahan, A. (2008). Permutation entropy of the electroencephalogram: a measure of anaesthetic drug effect. Br. J. Anaesthes. 101, 810–821. doi: 10.1093/bja/aen290

Puthankattil, S. D., and Joseph, P. K. (2012). Classification of EEG signals in normal and depression conditions by ANN using RWE and signal entropy. J. Mechan. Med. Biol. 12:1240019. doi: 10.1142/S0219519412400192

Qiu, Y., Zhou, W., Yu, N., and Du, P. (2018). Denoising sparse autoencoder-based ictal EEG classification. IEEE Trans. Neural Syst. Rehabil. Eng. 26, 1717–1726. doi: 10.1109/TNSRE.2018.2864306

Rampil, I. J. (1998). A primer for EEG signal processing in anesthesia. Anesthesiol. J. Am. Soc. Anesthesiol. 89, 980–1002.

Rezek, I., and Roberts, S. J. (1998). Stochastic complexity measures for physiological signal analysis. IEEE Trans. Biomed. Eng. 45, 1186–1191.

Richman, J. S., and Moorman, J. R. (2000). Physiological time-series analysis using approximate entropy and sample entropy. Am. J. Physiol. Heart Circulat. Physiol. 278, H2039–H2049. doi: 10.1152/ajpheart.2000.278.6.H2039

Rosso, O., Martin, M., Figliola, A., Keller, K., and Plastino, A. (2006). EEG analysis using wavelet-based information tools. J. Neurosci. Methods 153, 163–182. doi: 10.1016/j.jneumeth.2005.10.009

Rosso, O. A., Blanco, S., Yordanova, J., Kolev, V., Figliola, A., Schürmann, M., et al. (2001). Wavelet entropy: a new tool for analysis of short duration brain electrical signals. J. Neurosci. Methods 105, 65–75. doi: 10.1016/S0165-0270(00)00356-3

Särkelä, M. O., Ermes, M. J., van Gils, M. J., Yli-Hankala, A. M., Jäntti, V. H., and Vakkuri, A. P. (2007). Quantification of epileptiform electroencephalographic activity during sevoflurane mask induction. Anesthesiol. J. Am. Soc. Anesthesiol. 107, 928–938. doi: 10.1097/01.anes.0000291444.68894.ee

Shah, N., Long, C., and Bedford, R. (1988). “delta-shift”: an EEG sign of awakening during light isoflurane anesthesia. Anesthes. Analges. 67:206.

Shalbaf, A., Saffar, M., Sleigh, J. W., and Shalbaf, R. (2018). Monitoring the depth of anesthesia using a new adaptive neurofuzzy system. IEEE J. Biomed. Health Inform. 22, 671–677. doi: 10.1109/JBHI.2017.2709841

Shalbaf, R., Behnam, H., and Moghadam, H. J. (2015). Monitoring depth of anesthesia using combination of EEG measure and hemodynamic variables. Cognit. Neurodynam. 9, 41–51. doi: 10.1007/s11571-014-9295-z

Shalbaf, R., Behnam, H., Sleigh, J., and Voss, L. (2012). Measuring the effects of sevoflurane on electroencephalogram using sample entropy. Acta Anaesthesiol. Scandinav. 56, 880–889. doi: 10.1111/j.1399-6576.2012.02676.x

Shalbaf, R., Behnam, H., Sleigh, J. W., Steyn-Ross, A., and Voss, L. J. (2013). Monitoring the depth of anesthesia using entropy features and an artificial neural network. J. Neurosci. Methods 218, 17–24. doi: 10.1016/j.jneumeth.2013.03.008

Smith, W. D., Dutton, R. C., and Smith, T. N. (1996). Measuring the performance of anesthetic depth indicators. Anesthesiol. J. Am. Soc. Anesthesiol. 84, 38–51.

Su, C., Liang, Z., Li, X., Li, D., Li, Y., and Ursino, M. (2016). A comparison of multiscale permutation entropy measures in on-line depth of anesthesia monitoring. PLoS ONE 11:e0164104. doi: 10.1371/journal.pone.0164104

Sutskever, I., Vinyals, O., and Le, Q. V. (2014). “Sequence to sequence learning with neural networks,” in Advances in Neural Information Processing Systems (Montreal, QC: Curran Associates, Inc.), 3104–3112.

Vincent, P., Larochelle, H., Bengio, Y., and Manzagol, P.-A. (2008). “Extracting and composing robust features with denoising autoencoders,” in Proceedings of the 25th International Conference on Machine Learning (Helsinki: ACM), 1096–1103.

Vincent, P., Larochelle, H., Lajoie, I., Bengio, Y., and Manzagol, P.-A. (2010). Stacked denoising autoencoders: learning useful representations in a deep network with a local denoising criterion. J. Mach. Learn. Res. 11, 3371–3408.

Wu, Q., Zhang, Y., Liu, J., Sun, J., Cichocki, A., and Gao, F. (2019). Regularized group sparse discriminant analysis for P300-based brain-computer interface. Int. J. Neural Syst. 29:1950002. doi: 10.1142/S0129065719500023

Yoo, C. S., Jung, D. C., Ahn, Y. M., Kim, Y. S., Kim, S.-G., Yoon, H., et al. (2012). Automatic detection of seizure termination during electroconvulsive therapy using sample entropy of the electroencephalogram. Psychiat. Res. 195, 76–82. doi: 10.1016/j.psychres.2011.06.020

Zhang, X.-S., Roy, R. J., and Jensen, E. W. (2001). EEG complexity as a measure of depth of anesthesia for patients. IEEE Trans. Biomed. Eng. 48, 1424–1433. doi: 10.1109/10.966601

Zhang, Y., Nam, C. S., Zhou, G., Jin, J., Wang, X., and Cichocki, A. (2018). Temporally constrained sparse group spatial patterns for motor imagery BCI. IEEE Trans. Cybernet. 49, 3322–3332. doi: 10.1109/TCYB.2018.2841847

Keywords: autoencoder, LSTM, EEG, anesthesia, feature extraction

Citation: Li R, Wu Q, Liu J, Wu Q, Li C and Zhao Q (2020) Monitoring Depth of Anesthesia Based on Hybrid Features and Recurrent Neural Network. Front. Neurosci. 14:26. doi: 10.3389/fnins.2020.00026

Received: 28 February 2019; Accepted: 10 January 2020;

Published: 07 February 2020.

Edited by:

Junhua Li, University of Essex, United Kingdom

Reviewed by:

Sowmya V, Amrita Vishwa Vidyapeetham University, India

Nicoletta Nicolaou, University of Nicosia, Cyprus

Tao Zhou, Inception Institute of Artificial Intelligence (IIAI), United Arab Emirates

Copyright © 2020 Li, Wu, Liu, Wu, Li and Zhao. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Qiang Wu, wuqiang@sdu.edu.cn
