- National-Regional Key Technology Engineering Laboratory for Medical Ultrasound, Guangdong Provincial Key Laboratory of Biomedical Measurements and Ultrasound Imaging, Health Science Center, School of Biomedical Engineering, Shenzhen University, Shenzhen, China

The presence of pathologies in magnetic resonance (MR) brain images poses challenges for various image analysis tasks, such as registration, atlas construction and atlas-based segmentation. We propose a novel method for the simultaneous recovery and segmentation of pathological MR brain images. Low-rank and sparse decomposition (LSD) approaches have been widely used in this field, decomposing pathological images into (1) low-rank components as recovered images, and (2) sparse components as pathological segmentation. However, conventional LSD approaches often fail to recover images reliably because they lack an effective constraint between the low-rank and sparse components. To tackle this problem, we propose a transformed low-rank and structured sparse decomposition (TLS2D) method. TLS2D integrates a structured sparsity constraint, LSD and image alignment into a unified scheme, which is robust for distinguishing pathological regions. Furthermore, well-recovered images are obtained by combining the structured sparse component with computed image saliency to form an adaptive sparsity constraint for TLS2D. The efficacy of the proposed method is verified on synthetic and real MR brain tumor images. Experimental results demonstrate that our method provides satisfactory image recovery and tumor segmentation.

1. Introduction

Automated image computing routines (e.g., segmentation, registration, atlas construction) that can analyze magnetic resonance (MR) brain tumor scans are essential for improved disease diagnosis, treatment planning and follow-up of individual patients (Iglesias and Sabuncu, 2015; Mai et al., 2015; Menze et al., 2015; Chen et al., 2018). Recently, deep learning has been displacing traditional computer-aided diagnosis techniques by learning rich multi-level features from large training repositories for image representation and analysis (Litjens et al., 2017; Shen et al., 2017). Various deep convolutional neural network architectures have been developed for brain tumor segmentation (Pereira et al., 2016; Havaei et al., 2017; Kamnitsas et al., 2017; Zhao et al., 2018). Despite their satisfactory performance, deep learning based approaches require a large number of labeled images to train a segmentation model. Collecting and labeling useful training samples can take a long time and is therefore sometimes clinically impractical. In addition, the presence of pathologies in MR brain images complicates most other image analyses, such as image registration, atlas construction and atlas-based anatomical segmentation. Recovering pathological regions with normal brain appearances can facilitate these subsequent image computing procedures; for example, the recovered images could be used for atlas construction and patient-specific follow-up (Joshi et al., 2004; Liu et al., 2014; Zheng et al., 2017; Han et al., 2018). However, few deep learning based methods have been developed for pathological medical image recovery.
In contrast, the low-rank and sparse decomposition (LSD) scheme (Wright et al., 2009; Candès et al., 2011), which learns normal image appearances from unlabeled population data, has been widely employed to decompose pathological MR brain images into recovered normal brain appearances and pathological regions (Liu et al., 2015; Tang et al., 2018).

Although low-rank and sparse analysis for computational brain tumor segmentation has attracted considerable attention during the last decade, several challenges remain. First, conventional LSD methods must be computed on a series of aligned images (Otazo et al., 2015; Tang et al., 2018), because image misalignment causes undesired structural differences that interfere with the representation of the sparse component. Image alignment should therefore be conducted before or during the LSD computation; however, image alignment is itself a challenging task. Second, a specific spatial constraint should be imposed on the sparse component to enforce the structured sparsity of the tumor region within the whole image. Third, LSD methods often produce recovered images (i.e., low-rank components) with distorted pathological regions (Liu et al., 2015), due to the lack of an effective constraint between the low-rank and sparse components. It is thus essential to adaptively balance the low-rank and sparse components so as to reliably recover tumor regions while retaining normal brain regions.

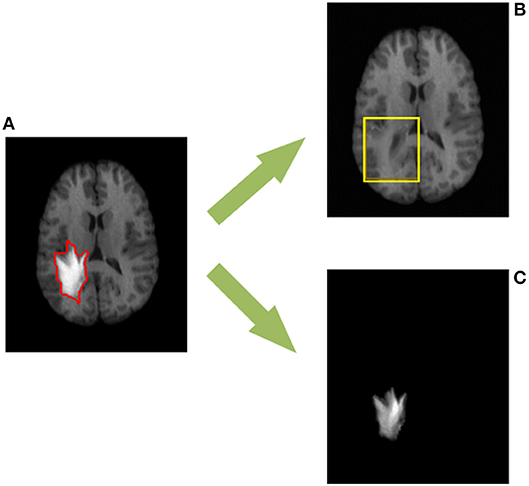

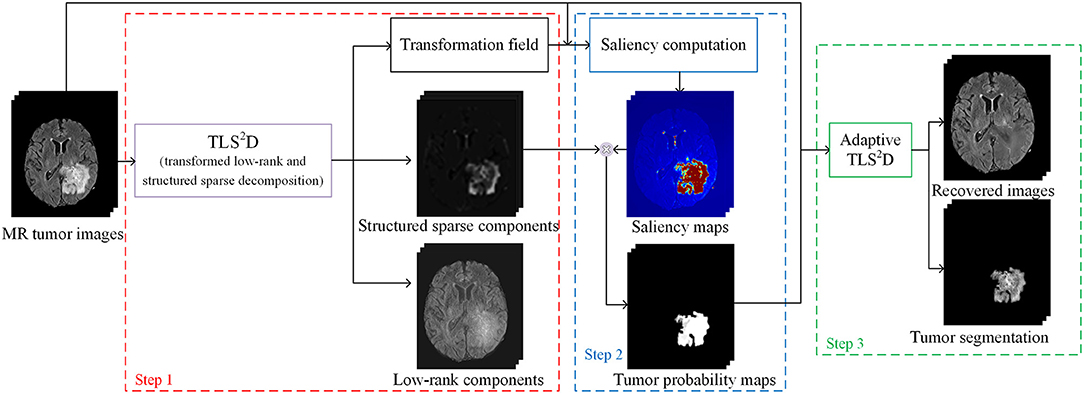

To address the aforementioned issues, this paper presents a novel method for the simultaneous recovery and segmentation of pathological MR brain images (see Figure 1). Specifically, we propose a transformed low-rank and structured sparse decomposition (TLS2D) method. TLS2D integrates a structured sparsity constraint, LSD and image alignment into a unified framework, which is robust for extracting pathological regions. Furthermore, well-recovered images are obtained by combining the structured sparse component with computed image saliency to form an adaptive sparsity constraint for TLS2D. Experimental results on synthetic and real MR brain tumor images demonstrate that the proposed TLS2D can effectively extract and recover tumor regions.

Figure 1. The proposed TLS2D method can decompose (A) the MR brain tumor image into (B) the recovered MR image with quasi-normal brain appearances, and (C) the extracted tumor region. The red contour in (A) indicates the manually delineated tumor boundary. The yellow box in (B) indicates the reliably recovered region.

2. Methods

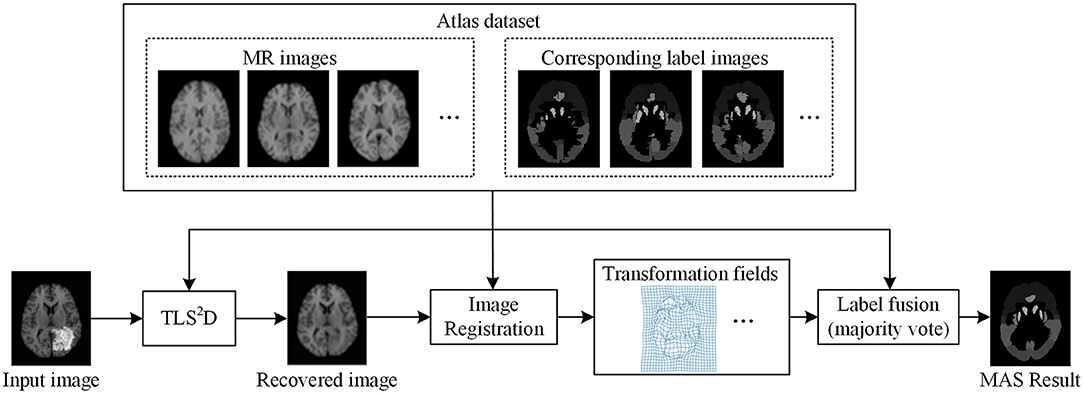

The proposed recovery and segmentation framework is shown in Figure 2. TLS2D first iteratively aligns all images and decomposes the aligned images into low-rank and structured sparse components. The structured sparse components are then combined with computed saliency maps to generate tumor probability maps, which serve as the adaptive sparsity constraint. The final recovery and segmentation are obtained by imposing this adaptive sparsity constraint on TLS2D.

Figure 2. The illustration of the whole recovery and segmentation framework using the proposed transformed low-rank and structured sparse decomposition (TLS2D) method.

The following subsections briefly review classical LSD, elaborate on the novel TLS2D, and then present the details of the overall recovery and segmentation framework.

2.1. Review of Low-Rank and Sparse Decomposition (LSD)

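Suppose we are given n previously aligned MR brain images $\{A_i\}_{i=1}^{n} \subset \mathbb{R}^{w\times h}$, where w and h denote the width and height of each image, respectively. We vectorize each image matrix $A_i$ to form the i-th column of $A = [\mathrm{vec}(A_1), \ldots, \mathrm{vec}(A_n)] \in \mathbb{R}^{m\times n}$, where m = w × h.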
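The vectorization step can be illustrated with a short NumPy sketch. The column-major ordering and the helper names `stack_images` and `unstack_column` are our own assumptions; any fixed ordering works as long as the same one is used to vectorize images and to map columns back onto the image grid (the mat(·) operator used in Section 2.2).

```python
import numpy as np


def stack_images(images):
    """Vectorize each w x h image and place it as a column of A (shape m x n, m = w * h)."""
    # Column-major (Fortran) order is an assumption; any fixed ordering works.
    return np.stack([np.asarray(img, dtype=float).ravel(order="F") for img in images], axis=1)


def unstack_column(column, w, h):
    """Inverse of vectorization: reshape a length-m column back into a w x h image."""
    return np.asarray(column).reshape((w, h), order="F")


# Usage: three toy 4 x 5 "images" give a 20 x 3 data matrix A.
images = [np.arange(20, dtype=float).reshape(4, 5) + k for k in range(3)]
A = stack_images(images)
print(A.shape)  # (20, 3)
```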

The conventional LSD method decomposes A into a low-rank matrix L and a sparse matrix S, where L represents the linearly correlated normal images and S represents the sparse tumor regions. The decomposition can be obtained by solving the following convex optimization:

$$\min_{L,S}\ \|L\|_* + \lambda\|S\|_1 \quad \text{s.t.} \quad A = L + S, \qquad (1)$$

where $\|L\|_*$ is the nuclear norm of L (i.e., the sum of its singular values), $\|S\|_1$ is the ℓ1 norm of S (i.e., the sum of the absolute values of its entries), and the regularization parameter λ balances the low-rank and sparse components. The optimization in Equation (1) can be solved by the augmented Lagrange multiplier (ALM) method (Lin et al., 2010).

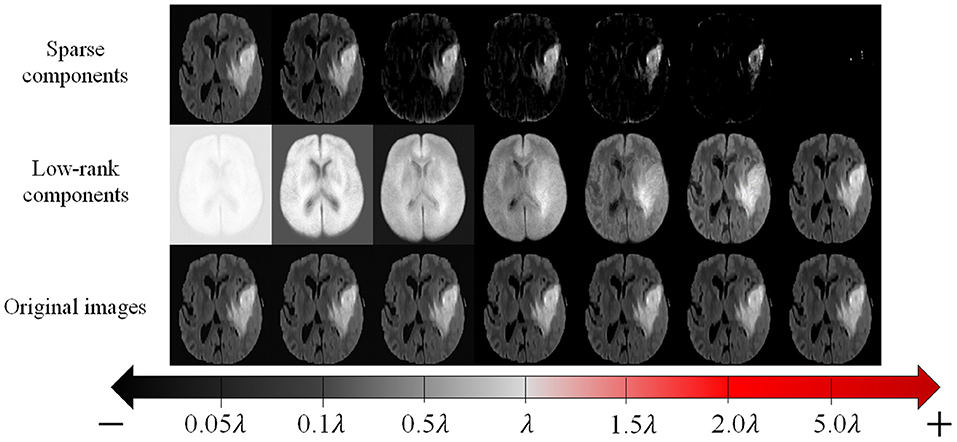

To realize practical and reliable recovery and segmentation of pathological MR images, three issues in LSD remain to be addressed: (1) all images must be aligned in the same spatial domain; (2) S should be structured sparse, to better represent the contiguous tumor region within the whole image; (3) as illustrated in Figure 3, as the parameter λ becomes smaller, the low-rank images recover tumor regions more reliably but also become more blurred in originally normal regions. Therefore, λ should differ between normal and tumor regions, so as to adaptively balance the low-rank and sparse components and reliably recover tumor regions while retaining normal brain regions.

Figure 3. The original MR images (bottom row), and corresponding recovered low-rank components (middle row) and sparse components (top row) given by the conventional LSD method, with different values of the regularization parameter λ.

2.2. Transformed Low-Rank and Structured Sparse Decomposition (TLS2D)

To tackle these issues in LSD, we propose a transformed low-rank and structured sparse decomposition. First, since the tumor region usually occupies a contiguous portion of the brain image, it is reasonable to model it using a structured sparsity norm. Inspired by the structured sparsity in Jia et al. (2012), we introduce a structured sparsity norm Ω(S) to model the tumor region, and define the low-rank and structured sparse decomposition (LS2D) as:

$$\min_{L,S}\ \|L\|_* + \lambda\,\Omega(S) \quad \text{s.t.} \quad A = L + S, \qquad (2)$$

where

$$\Omega(S) = \sum_{i=1}^{n}\sum_{g\in G}\big\|\mathrm{mat}(S_i)_g\big\|_\infty. \qquad (3)$$

In Equation (3), $S_i \in \mathbb{R}^{m}$ is the i-th column of S, and $\mathrm{mat}(S_i) \in \mathbb{R}^{w\times h}$ is its matrix form. We define G as the set of 3 × 3 overlapping patch groups in mat(S_i), where g ∈ G denotes one such group and $\mathrm{mat}(S_i)_g$ the entries of mat(S_i) within g. Each group overlaps 6 pixels with each horizontally or vertically adjacent group. $\|\cdot\|_\infty$ is the ℓ∞ norm (i.e., the maximum absolute value within group g). The structured sparsity norm Ω(S) in Equation (2) encourages the nonzero entries of S to be spatially clustered, and thus better represents the tumor region.
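The structured sparsity norm in Equation (3) can be evaluated directly with a sliding 3 × 3 window. The NumPy sketch below is our own illustration: it assumes the groups are all 3 × 3 windows lying fully inside the image with a stride of one pixel (which gives the stated 6-pixel overlap between horizontally or vertically adjacent groups) and ignores boundary padding, which the text does not specify. It contrasts a contiguous 3 × 3 blob with nine scattered pixels; both have ℓ1 norm 9, but Ω is much smaller for the blob, which is why minimizing Ω favors clustered sparse components.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def omega_single(S_img, group=3):
    """Sum over all overlapping group x group windows of the window's max absolute value."""
    windows = sliding_window_view(np.abs(S_img), (group, group))
    return windows.max(axis=(-2, -1)).sum()


def omega(S, w, h):
    """Omega(S) for an m x n matrix S whose columns are vectorized w x h images."""
    return sum(omega_single(S[:, i].reshape((w, h), order="F")) for i in range(S.shape[1]))


# A contiguous 3 x 3 blob versus nine isolated pixels in a 10 x 10 image.
blob = np.zeros((10, 10))
blob[4:7, 4:7] = 1.0
scattered = np.zeros((10, 10))
scattered[np.ix_([1, 4, 7], [1, 4, 7])] = 1.0
print(omega_single(blob), omega_single(scattered))  # 25.0 64.0
```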

During the decomposition, spatial mismatch between images may produce undesired sparse noise. To alleviate this, we perform image alignment within the decomposition procedure (Zheng et al., 2017). The proposed TLS2D is defined as follows:

$$\min_{L,S,\tau}\ \|L\|_* + \lambda\,\Omega(S) \quad \text{s.t.} \quad A\circ\tau = L + S, \qquad (4)$$

where τ denotes a set of n affine transformations τ1, τ2, …, τn that warp the images in A into alignment, and $A\circ\tau = [\mathrm{vec}(A_1\circ\tau_1), \ldots, \mathrm{vec}(A_n\circ\tau_n)]$.

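The optimization of TLS2D in Equation (4) is non-convex and difficult to solve directly due to the nonlinearity of τ. To tackle this issue, we iteratively linearize around the current estimate of τ, following Boyd et al. (2011) and Wang et al. (2018). Specifically, we linearize the constraint using the local first-order Taylor approximation of each image, $\mathrm{vec}(A_i\circ(\tau_i + \Delta\tau_i)) \approx \mathrm{vec}(A_i\circ\tau_i) + J_i\,\Delta\tau_i$, which in matrix form reads $A\circ(\tau + \Delta\tau) \approx A\circ\tau + \sum_{i=1}^{n} J_i\,\Delta\tau_i\,\epsilon_i^{\top}$, where $\Delta\tau = \{\Delta\tau_i\}_{i=1}^{n}$ and each $\tau_i \in \mathbb{R}^{p}$ is defined by p transformation parameters; $J_i = \frac{\partial}{\partial\zeta}\mathrm{vec}(A_i\circ\zeta)\big|_{\zeta=\tau_i} \in \mathbb{R}^{m\times p}$ is the Jacobian of image $A_i$ with respect to the transformation $\tau_i$, and $\{\epsilon_i\}$ denotes the standard basis for $\mathbb{R}^n$. Thus, Equation (4) can be relaxed into the following optimization:

$$\min_{L,S,\Delta\tau}\ \|L\|_* + \lambda\,\Omega(S) \quad \text{s.t.} \quad A\circ\tau + \sum_{i=1}^{n} J_i\,\Delta\tau_i\,\epsilon_i^{\top} = L + S. \qquad (5)$$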
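To make the linearization concrete, the NumPy sketch below builds a toy warped image whose transformation is a pure translation (p = 2) of an analytic Gaussian blob, estimates the Jacobian J_i by central finite differences, and compares the first-order prediction with the exact warp. The blob, the translation-only model, the function names and the finite-difference Jacobian are illustrative assumptions; the paper itself uses affine transformations.

```python
import numpy as np


def translated_blob(tau, w=32, h=32, sigma=3.0):
    """Toy vec(A_i o tau_i): a Gaussian blob shifted by tau = (tx, ty), returned as a vector."""
    x, y = np.meshgrid(np.arange(w, dtype=float), np.arange(h, dtype=float), indexing="ij")
    cx, cy = w / 2 + tau[0], h / 2 + tau[1]
    img = np.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2 * sigma ** 2))
    return img.ravel(order="F")


def numerical_jacobian(warp, tau, eps=1e-4):
    """Central-difference estimate of J = d vec(warp(tau)) / d tau, shape (m, p)."""
    tau = np.asarray(tau, dtype=float)
    columns = []
    for k in range(tau.size):
        step = np.zeros_like(tau)
        step[k] = eps
        columns.append((warp(tau + step) - warp(tau - step)) / (2 * eps))
    return np.stack(columns, axis=1)


tau = np.array([0.5, -0.3])
delta_tau = np.array([0.2, 0.1])
J = numerical_jacobian(translated_blob, tau)
exact = translated_blob(tau + delta_tau)
linear = translated_blob(tau) + J @ delta_tau  # first-order Taylor prediction
change = np.linalg.norm(exact - translated_blob(tau))
error = np.linalg.norm(exact - linear)
print(J.shape)  # (1024, 2)
```

For a small increment the linear prediction captures most of the change, which is what allows the iterative scheme to replace the nonlinear constraint with the linear one in Equation (5) around each new estimate of τ.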

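The resulting convex program in Equation (5) can be solved by the ALM method (Lin et al., 2010). We formulate the following augmented Lagrangian function:

$$\mathcal{L}_\mu(L,S,\Delta\tau,Y) = \|L\|_* + \lambda\,\Omega(S) + \langle Y, h(L,S,\Delta\tau)\rangle + \frac{\mu}{2}\big\|h(L,S,\Delta\tau)\big\|_F^2, \qquad (6)$$

where $h(L,S,\Delta\tau) = A\circ\tau + \sum_{i=1}^{n} J_i\,\Delta\tau_i\,\epsilon_i^{\top} - L - S$; $Y \in \mathbb{R}^{m\times n}$ is the Lagrange multiplier and μ is a positive penalty parameter; ⟨·, ·⟩ denotes the matrix inner product, and $\|\cdot\|_F$ is the Frobenius norm. The ALM algorithm then estimates both the optimal solution and the Lagrange multiplier by iteratively solving the following four subproblems:

$$\begin{aligned} L^{t+1} &= \arg\min_{L}\ \mathcal{L}_\mu(L, S^{t}, \Delta\tau^{t}, Y^{t}),\\ S^{t+1} &= \arg\min_{S}\ \mathcal{L}_\mu(L^{t+1}, S, \Delta\tau^{t}, Y^{t}),\\ \Delta\tau^{t+1} &= \arg\min_{\Delta\tau}\ \mathcal{L}_\mu(L^{t+1}, S^{t+1}, \Delta\tau, Y^{t}),\\ Y^{t+1} &= Y^{t} + \mu\, h(L^{t+1}, S^{t+1}, \Delta\tau^{t+1}), \end{aligned} \qquad (7)$$

where the superscript t denotes the iteration. In each iteration, the first problem in Equation (7) can be expressed as

$$L^{t+1} = \arg\min_{L}\ \frac{1}{\mu}\|L\|_* + \frac{1}{2}\big\|L - H_L\big\|_F^2, \qquad (8)$$

where $H_L = A\circ\tau + \sum_{i=1}^{n} J_i\,\Delta\tau_i^{t}\,\epsilon_i^{\top} - S^{t} + \frac{1}{\mu}Y^{t}$. The problem in Equation (8) has a simple closed-form solution given by soft thresholding of the singular values (Parikh and Boyd, 2014). Letting $(U, \Sigma, V) = \mathrm{svd}(H_L)$ be the singular value decomposition of $H_L$, we have $L^{t+1} = U\,\mathcal{S}_{1/\mu}(\Sigma)\,V^{\top}$, where $\mathcal{S}_{\varepsilon}(x) = \mathrm{sign}(x)\,[|x| - \varepsilon]_+$ is the soft thresholding operator and $[\cdot]_+ = \max(\cdot, 0)$.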
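The closed-form L-update in Equation (8) amounts to singular value soft thresholding. A minimal NumPy sketch follows; the function name `update_L` is hypothetical, and H_L and μ are assumed to be supplied by the surrounding ALM loop.

```python
import numpy as np


def soft_threshold(x, eps):
    """S_eps(x) = sign(x) * max(|x| - eps, 0), applied element-wise."""
    return np.sign(x) * np.maximum(np.abs(x) - eps, 0.0)


def update_L(H_L, mu):
    """Solve min_L (1/mu)||L||_* + 0.5 * ||L - H_L||_F^2 by shrinking the singular values of H_L."""
    U, sigma, Vt = np.linalg.svd(H_L, full_matrices=False)
    return (U * soft_threshold(sigma, 1.0 / mu)) @ Vt


# Singular values 3.0, 1.0 and 0.2 shrunk by 1/mu = 0.5 become 2.5, 0.5 and 0.
L_example = update_L(np.diag([3.0, 1.0, 0.2]), mu=2.0)
```

Singular values below 1/μ are set to zero, so the update also lowers the rank of the estimate, which is how the nuclear norm promotes a low-rank L.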

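The second problem in Equation (7) can be rewritten as

$$S^{t+1} = \arg\min_{S}\ \frac{\lambda}{\mu}\,\Omega(S) + \frac{1}{2}\big\|S - H_S\big\|_F^2, \qquad (9)$$

where $H_S = A\circ\tau + \sum_{i=1}^{n} J_i\,\Delta\tau_i^{t}\,\epsilon_i^{\top} - L^{t+1} + \frac{1}{\mu}Y^{t}$. The problem in Equation (9) is the proximal operator associated with the structured sparsity norm, which can be computed by solving a quadratic min-cost flow problem (Mairal et al., 2010).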
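Solving Equation (9) exactly for overlapping groups requires the min-cost flow algorithm of Mairal et al. (2010), which we do not reproduce here. As a simpler building block, the sketch below computes the proximal operator of t·‖·‖∞ for a single group via the Moreau decomposition, prox(v) = v − Π_t(v), where Π_t is the Euclidean projection onto the ℓ1 ball of radius t (computed with a standard sort-based method). For non-overlapping groups this operator could be applied to each group independently; that separable case is our simplification, not the solver used in the paper.

```python
import numpy as np


def project_l1_ball(v, radius):
    """Euclidean projection of vector v onto {x : ||x||_1 <= radius} (sort-based)."""
    if radius <= 0:
        return np.zeros_like(v)
    if np.abs(v).sum() <= radius:
        return v.copy()
    u = np.sort(np.abs(v))[::-1]
    cumulative = np.cumsum(u)
    k = np.arange(1, u.size + 1)
    rho = np.nonzero(u * k > cumulative - radius)[0][-1]
    theta = (cumulative[rho] - radius) / (rho + 1.0)
    return np.sign(v) * np.maximum(np.abs(v) - theta, 0.0)


def prox_linf(v, t):
    """Prox of t * ||.||_inf via Moreau decomposition: v minus its projection onto the radius-t l1 ball."""
    v = np.asarray(v, dtype=float)
    return v - project_l1_ball(v, t)


print(prox_linf([3.0, 1.0, -2.0], 1.0))  # [ 2.  1. -2.]
```

The ℓ∞ prox lowers only the largest magnitudes in the group, so a whole group is driven to zero together once t exceeds its ℓ1 norm; this group-wise behavior is what lets Ω(S) favor clustered sparse entries.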

Then given the current estimated Lt+1 and St+1, the solution of the third problem in Equation (7) can be calculated as

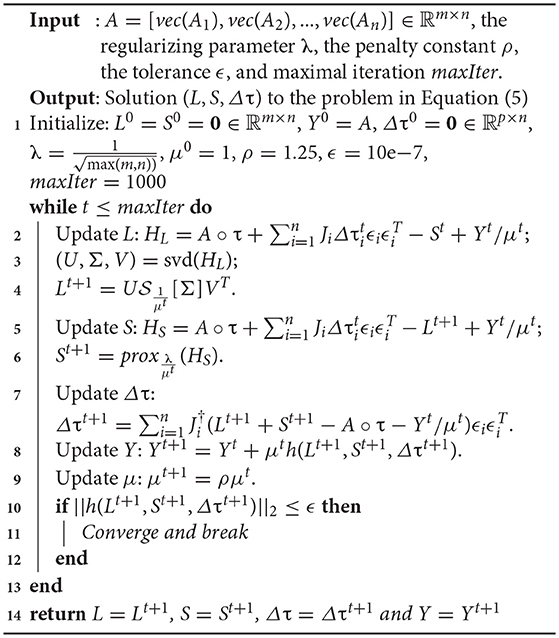

where denotes the Moore-Penrose pseudoinverse of Ji. We summarize the solver for Equation (4) in Algorithm 1.
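The per-image Δτ update for the third subproblem of Equation (7) is a least-squares solve through the pseudoinverse. The sketch below assumes the Jacobians J_i are given as a list of m × p arrays (here p = 6, as for a 2D affine transformation) and that A∘τ, L, S and Y are m × n arrays; the function name `update_delta_tau` is hypothetical. The usage example constructs a residual that is exactly reachable, so the true increments are recovered.

```python
import numpy as np


def update_delta_tau(L, S, A_tau, Y, mu, jacobians):
    """delta_tau_i = pinv(J_i) @ (L + S - A_tau - Y / mu)[:, i] for each image i."""
    R = L + S - A_tau - Y / mu
    return [np.linalg.pinv(J_i) @ R[:, i] for i, J_i in enumerate(jacobians)]


rng = np.random.default_rng(0)
m, n, p = 50, 4, 6
jacobians = [rng.standard_normal((m, p)) for _ in range(n)]
true_steps = [rng.standard_normal(p) for _ in range(n)]
L = rng.standard_normal((m, n))
S = np.zeros((m, n))
Y = rng.standard_normal((m, n))
mu = 2.0
# Choose A_tau so that J_i @ true_steps[i] exactly closes each column's residual.
A_tau = L + S - Y / mu - np.stack([J @ d for J, d in zip(jacobians, true_steps)], axis=1)
estimated = update_delta_tau(L, S, A_tau, Y, mu, jacobians)
```

Because each column of the constraint involves only its own τ_i, the update decouples into n small p-dimensional least-squares problems.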

2.3. Recovery and Segmentation Framework

In our recovery and segmentation framework, we first employ the proposed TLS2D to align all MR images while obtaining the low-rank and structured sparse images (see Figure 2). The low-rank images at this step are blurred in the tumor region and cannot yet reliably recover normal image appearances. To address this problem, we leverage the obtained structured sparse component to adjust the regularization parameter λ in Equation (4), yielding an adaptive sparsity constraint.

Specifically, we compute the saliency maps of the MR images using the saliency filters method of Perazzi et al. (2012). A saliency map indicates how strongly each pixel attracts human visual attention, with value 1 denoting the highest attention and 0 denoting no attention. Following Perazzi et al. (2012), to calculate the saliency of an image we first abstract it into perceptually homogeneous elements using SLIC superpixels (Achanta et al., 2012). We then employ a set of high-dimensional Gaussian filters (Adams et al., 2010) to calculate two contrast measures (i.e., the uniqueness and the spatial distribution of elements), and combine these two measures to predict the final saliency of each pixel. In pathological MR images, the most salient part should be the tumor region. We then obtain the tumor probability map of an image by computing the element-wise product of its binary structured sparse image and its corresponding saliency map, as shown in Figure 2. The tumor probability map indicates the probability of each pixel belonging to the tumor region. We denote the vectorized tumor probability maps of all n images by $P \in [0,1]^{m\times n}$.
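The combination step can be sketched as follows. The binarization rule for the structured sparse image is not specified in the text, so the threshold parameter `tau_bin` below is a hypothetical choice (by default, any nonzero sparse entry counts as tumor); the saliency map is assumed to be precomputed and scaled to [0, 1], and the function name is our own.

```python
import numpy as np


def tumor_probability_map(sparse_img, saliency, tau_bin=0.0):
    """Element-wise product of a binarized structured sparse image and a saliency map."""
    binary = (np.abs(sparse_img) > tau_bin).astype(float)  # hypothetical binarization rule
    return binary * np.clip(saliency, 0.0, 1.0)


sparse_img = np.array([[0.0, 0.4], [-0.7, 0.0]])
saliency = np.array([[0.9, 0.8], [0.6, 0.1]])
P_example = tumor_probability_map(sparse_img, saliency)
```

Pixels outside the sparse support get probability 0 regardless of their saliency, so saliency only grades pixels that TLS2D has already flagged as sparse.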

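Finally, we use the tumor probability map to adaptively adjust the regularization parameter λ in Equation (4). We define the adaptive TLS2D to obtain the final tumor segmentation and well-recovered quasi-normal images:

$$\min_{L,S,\tau}\ \|L\|_* + \Omega\big(\lambda(\mathbf{1} - P)\odot S\big) \quad \text{s.t.} \quad A\circ\tau = L + S, \qquad (11)$$

where $\mathbf{1} \in \mathbb{R}^{m\times n}$ is the all-ones matrix, λ(1 − P) is the adaptive regularization matrix, and ⊙ denotes the element-wise (Hadamard) product. Pixels with a high tumor probability receive a small sparsity penalty and are therefore more readily assigned to S, which lets L recover them with normal appearance, whereas pixels with a low tumor probability keep a penalty close to λ, which preserves normal regions. In this way, the sparse constraints for tumor and normal regions are set differently, so TLS2D can reliably recover tumor regions while retaining normal regions.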
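The effect of the adaptive regularization matrix can be seen in a simplified setting. The sketch below replaces the structured norm Ω in the adaptive TLS2D objective by an element-wise weighted ℓ1 penalty, whose proximal step is weighted soft thresholding with thresholds λ(1 − P)/μ; this substitution and the function names are our own assumptions for illustration only. Entries with tumor probability near 1 are barely shrunk and stay in S, while entries with probability near 0 are shrunk by the full λ/μ.

```python
import numpy as np


def adaptive_weights(P, lam):
    """Adaptive regularization matrix lam * (1 - P)."""
    return lam * (np.ones_like(P) - P)


def weighted_soft_threshold(X, W):
    """Element-wise prox of sum(W * |X|): shrink each entry by its own threshold."""
    return np.sign(X) * np.maximum(np.abs(X) - W, 0.0)


X = np.array([[0.8, 0.8, 0.8]])  # identical candidate sparse entries
P = np.array([[1.0, 0.5, 0.0]])  # tumor probabilities
lam, mu = 1.0, 1.0
S_update = weighted_soft_threshold(X, adaptive_weights(P, lam) / mu)
```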

3. Experiments and Results

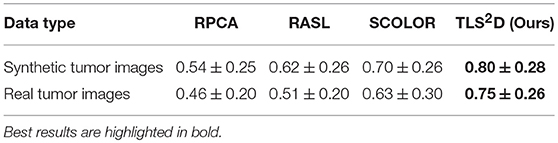

The proposed TLS2D method was evaluated on both synthetic and real MR brain tumor images. We also extensively compared our method with state-of-the-art methods, including Robust Principal Component Analysis (RPCA) (Candès et al., 2011), Robust Alignment by Sparse and Low-rank decomposition (RASL) (Peng et al., 2012), and Spatially COnstraint LOw-Rank (SCOLOR) (Tang et al., 2018). Specifically, RPCA is one of the most classical and successful low-rank and sparse decomposition schemes; RASL accounts for spatial mismatch between images by adding image alignment to the low-rank based decomposition; and SCOLOR imposes a spatial constraint on the sparse component to enforce its structured sparsity.

The metrics employed to quantitatively evaluate recovery and segmentation performance were the structural similarity index (SSIM) (Wang et al., 2004) and the Dice index (Chang et al., 2009), respectively. SSIM is a widely used metric that evaluates the similarity of two images using structural information. The SSIM of two images x and y is:

$$\mathrm{SSIM}(x,y) = \frac{(2\mu_x\mu_y + c_1)(2\sigma_{xy} + c_2)}{(\mu_x^2 + \mu_y^2 + c_1)(\sigma_x^2 + \sigma_y^2 + c_2)}, \qquad (12)$$

where μx and μy are the means of x and y; σx² and σy² are the variances of x and y, respectively; σxy is the covariance of x and y; and c1 and c2 are two constants that stabilize the division. The Dice index measures the overlap of two regions and is calculated as:

$$\mathrm{Dice}(T,G) = \frac{2|T\cap G|}{|T| + |G|}, \qquad (13)$$

where T and G denote the segmented tumor region and the ground truth, respectively.
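Both metrics are straightforward to compute. The sketch below evaluates the SSIM expression once over the whole image, which is a simplification: the standard SSIM of Wang et al. (2004) averages this expression over local windows, and the paper does not state its window settings. The constants c1 = (0.01·D)² and c2 = (0.03·D)², with D the dynamic range of the pixel values, follow Wang et al. (2004); treating two empty masks as a perfect match in `dice` is our own convention.

```python
import numpy as np


def ssim_global(x, y, data_range=1.0, k1=0.01, k2=0.03):
    """Single-window SSIM: the SSIM expression evaluated over the whole image."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    c1, c2 = (k1 * data_range) ** 2, (k2 * data_range) ** 2
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = ((x - mx) * (y - my)).mean()
    return ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2))


def dice(T, G):
    """Dice = 2|T and G| / (|T| + |G|) for binary masks T (segmentation) and G (ground truth)."""
    T = np.asarray(T, dtype=bool)
    G = np.asarray(G, dtype=bool)
    total = T.sum() + G.sum()
    return 1.0 if total == 0 else 2.0 * np.logical_and(T, G).sum() / total


print(dice([1, 1, 0, 0], [1, 0, 1, 0]))  # 0.5
```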

3.1. Validation on Synthetic MR Brain Tumor Images

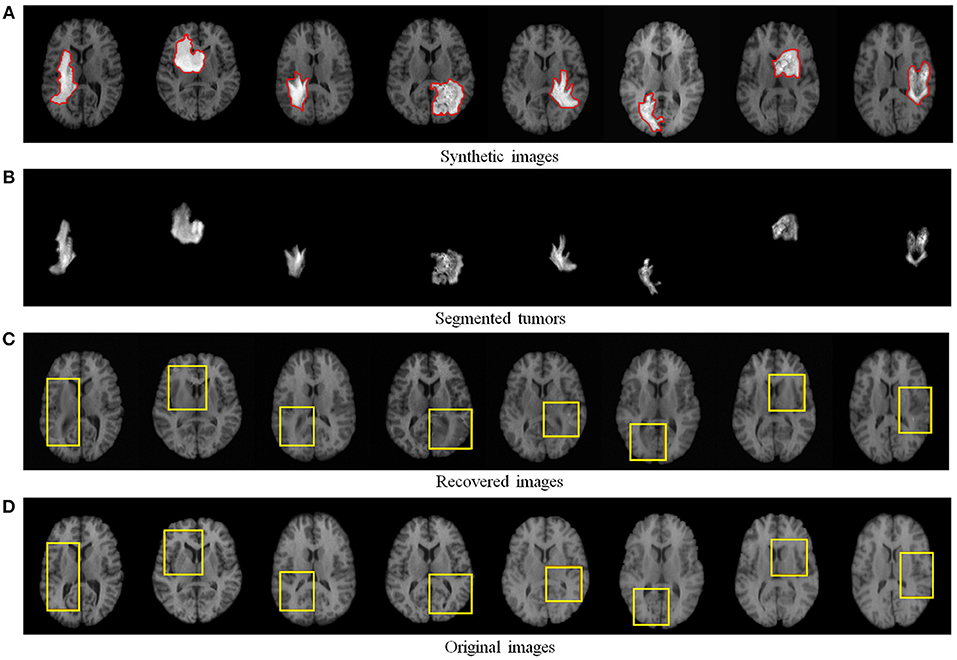

We first quantitatively evaluated the recovery performance of our method on synthetic tumor images, which are based on images from the public LPBA40 dataset (Shattuck et al., 2008). LPBA40 includes 40 normal T1-weighted MR brain images; some examples are shown in Figure 4D. We generated the synthetic tumor images by fusing tumor regions derived from the real MR brain tumor dataset BRATS2018 (Menze et al., 2015) into these normal images (see Figure 4A).

Figure 4. Recovery and segmentation of (A) synthetic MR brain tumor images: (B) the segmented tumors, (C) the recovered images with normal brain appearances, (D) the corresponding original MR images from LPBA40 (Shattuck et al., 2008). Yellow boxes illustrate the reliably recovered regions.

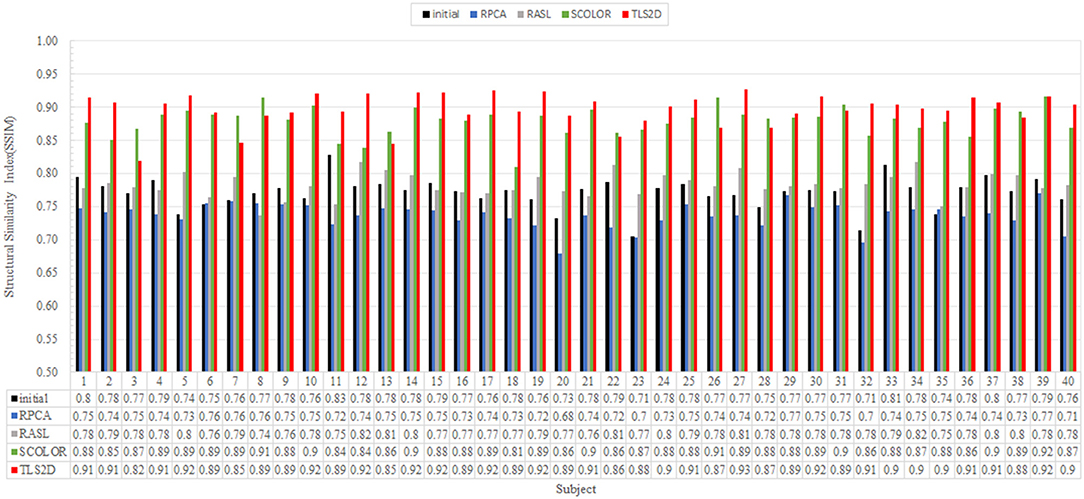

Figure 4 visualizes some recovery and segmentation results obtained by our method. Our method reliably extracts the tumor regions and recovers them with normal brain appearances. Figure 5 further shows the SSIM values between the original MR images and the images recovered by different methods; TLS2D consistently yields the appearance most similar to the original LPBA40 images. In addition, Table 1 lists the Dice indices of the tumor regions segmented by different methods, where TLS2D achieves the best segmentation performance.

Figure 5. The structural similarity index (SSIM) between each of the original MR images and the corresponding recovered images by different methods. The “Initial” indicates the SSIM between the synthetic tumor images and the corresponding original images.

3.2. Evaluation on Real MR Brain Tumor Images

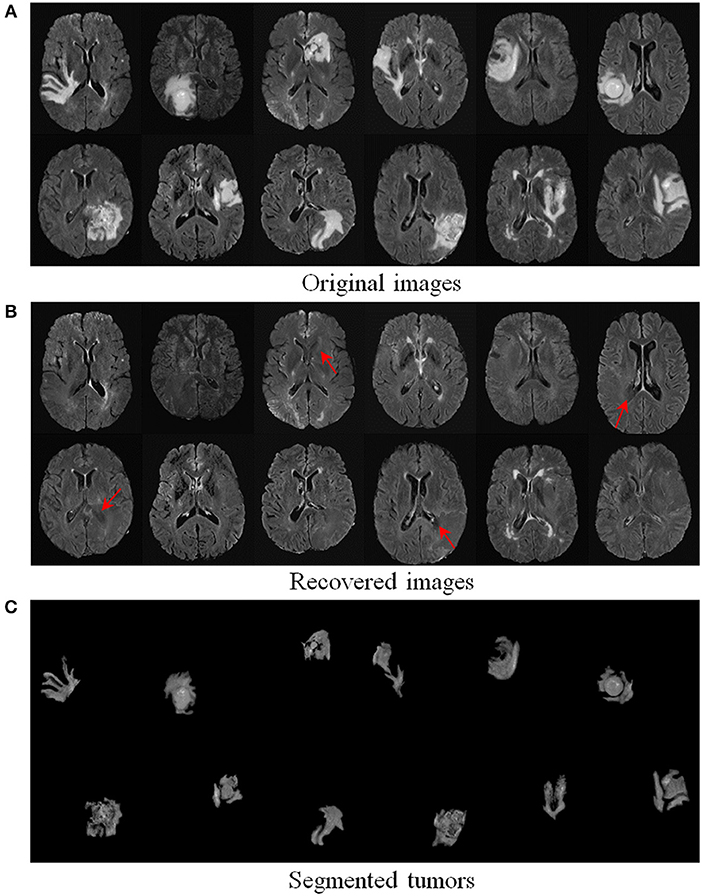

We further evaluated the efficacy of our method on 124 real T2-weighted FLAIR MR brain tumor images from the BRATS2018 dataset (Menze et al., 2015). Table 1 shows that TLS2D achieves the best tumor segmentation results. Figure 6 illustrates some example recovery and segmentation results obtained by our method, which are satisfactory. The images recovered by our method exhibit plausible brain structures (see red arrows in Figure 6B).

Figure 6. Recovery and segmentation of (A) real MR brain tumor images from BRATS2018 (Menze et al., 2015); (B) the recovered images with normal brain appearances, (C) the tumor segmentation results. Red arrows indicate well recovered brain structures.

3.3. Application to Multi-Atlas Segmentation

Recovering pathological regions with normal brain appearances benefits other image computing tasks, such as multi-atlas segmentation (MAS). MAS registers multiple normal brain atlases to a new brain image and maps their anatomical labels onto it to segment the brain. Conventional MAS methods may perform poorly on images containing tumors, because the appearance changes induced by the tumors make it difficult to register the atlases to the brain tumor image. We conducted multi-atlas segmentation on the recovered images to demonstrate the benefit of our image recovery.

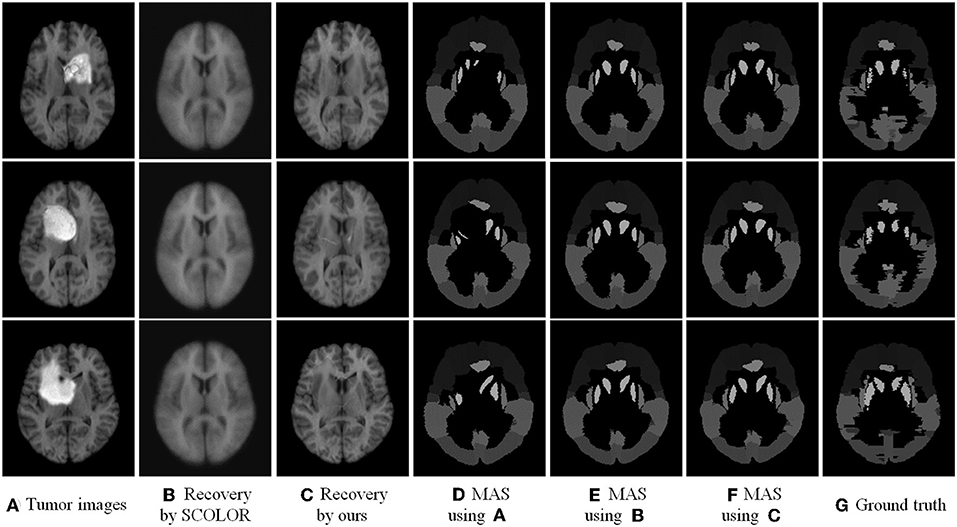

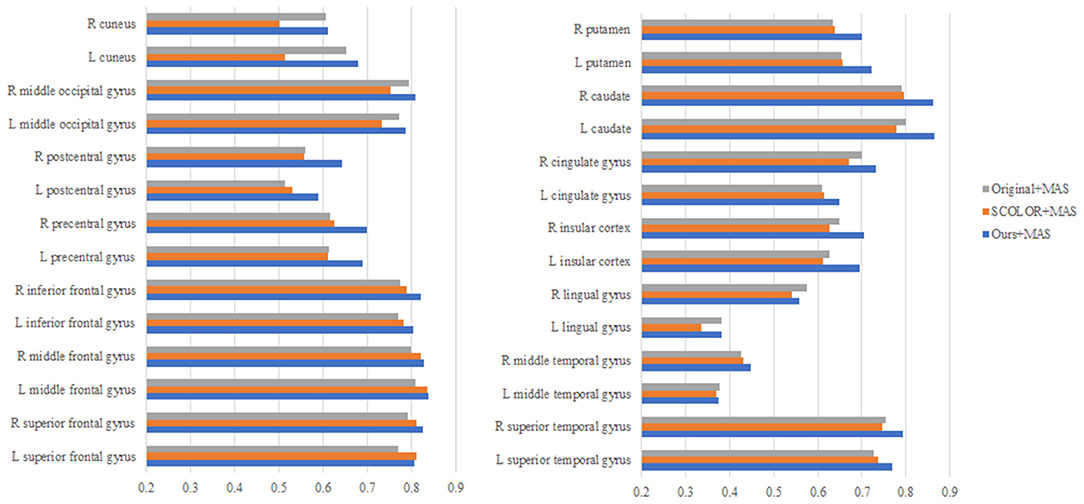

We used 40 T1-weighted MR images and their corresponding segmentation labels from LPBA40 (Shattuck et al., 2008) to conduct MAS. In each MAS run, we chose one image to generate a synthetic tumor image and used the remaining 39 images as atlases. As shown in Figure 7, we then used TLS2D to obtain the recovered image, and applied an intensity-based non-rigid registration method (Myronenko and Song, 2010) to map the atlases to the recovered image, followed by majority-vote label fusion for brain segmentation. Figure 8 shows some MAS results obtained using the recovered images and the original images, respectively. The brain segmentations using our recovered images outperform those using the original tumor images, especially in tumor-occupied regions. Figure 8 also shows that our method produces much clearer recovered images than SCOLOR. Figure 9 further illustrates the average Dice indices of different brain regions over the 40 segmented brain tumor images using MAS+original images, MAS+SCOLOR recovered images and MAS+our recovered images, respectively. MAS using our recovered images consistently achieves higher Dice indices than MAS using the original images or the SCOLOR recovered images, which demonstrates that our method is potentially useful for improving MAS when images contain pathological regions.
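Majority-vote label fusion assigns each pixel the label proposed by most of the warped atlases. The sketch below assumes the atlas label maps have already been registered to the recovered image and contain non-negative integer labels; the function name is our own, and ties are broken toward the smaller label, which is our choice since the text does not specify a tie rule.

```python
import numpy as np


def majority_vote(warped_labels):
    """Fuse a list of same-shaped integer label maps by per-pixel majority vote."""
    stack = np.stack([np.asarray(lab, dtype=int) for lab in warped_labels], axis=0)
    n_labels = stack.max() + 1
    # votes[k] counts, for every pixel, how many atlases propose label k.
    votes = np.stack([(stack == k).sum(axis=0) for k in range(n_labels)], axis=0)
    return votes.argmax(axis=0)  # argmax returns the first (smallest) label on ties


atlases = [np.array([0, 1, 2]), np.array([0, 1, 1]), np.array([2, 1, 1])]
print(majority_vote(atlases))  # [0 1 1]
```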

Figure 8. Multi-atlas segmentation results: (A) brain tumor images, (B) the recovered images by SCOLOR method, (C) our recovered images with quasi-normal brain appearances, (D) the MAS results by using original tumor images, (E) the MAS results by using the recovered images from SCOLOR, (F) the MAS results by using our recovered images, and (G) the segmentation ground truth.

Figure 9. The average Dice indices of different brain regions of 40 segmented brain tumor images using MAS+original images, MAS+SCOLOR recovered images, and MAS+our recovered images, respectively.

4. Discussion and Conclusion

In this study, we have proposed a novel low-rank based method, called transformed low-rank and structured sparse decomposition (TLS2D), for the reliable recovery and segmentation of pathological MR brain images. By integrating structured sparsity, image alignment, and an adaptive spatial constraint into a unified matrix decomposition framework, our method robustly extracts pathological regions and reliably recovers quasi-normal MR appearances. The recovered images benefit subsequent image computing procedures, such as atlas-based segmentation. We have compared TLS2D with several state-of-the-art low-rank based approaches on synthetic and real MR brain images: RPCA, a conventional low-rank and sparse decomposition method; RASL, which embeds image alignment into the LSD framework; and SCOLOR, which imposes a spatial constraint on the sparse component. Experimental results show that our method consistently outperforms all compared methods, which demonstrates the contribution of the proposed transformed low-rank and structured sparse decomposition with an adaptive sparsity constraint to simultaneous recovery and segmentation.

Computer-aided methods that assist clinicians in analyzing MR brain tumor scans are of essential significance for improved diagnosis, treatment planning and patient follow-up. Automated tumor segmentation is the primary research task in analyzing pathological images, and has been extensively investigated in the literature (Gordillo et al., 2013; Menze et al., 2015; Zhou et al., 2017). However, beyond tumor segmentation, the presence of pathologies in MR images poses challenges for other image computing tasks, such as intensity-/feature-based image registration (Sotiras et al., 2013) and atlas-based segmentation of brain structures (Cabezas et al., 2011), due to the structural and appearance changes in pathological brain images. Recovering pathological regions with normal brain appearances is therefore beneficial for most image computing procedures. To this end, we integrate the registration, segmentation and recovery procedures into a unified decomposition framework. The proposed TLS2D is a generic method for analyzing MR brain tumor scans. It is worth noting that although our method provides recovered images with quasi-normal brain appearances, the recovered regions may contain some artifacts around the original tumor boundary, as shown in Figure 8. This is mainly due to the difference in sparsity constraints between the inner side of the boundary (tumor region) and the outer side (normal region). Even so, our recovered images are more similar to normal brain images than the original pathological images are, and are thus more convenient for other image computing tasks, such as the multi-atlas segmentation shown in Section 3.3.

The tumor region usually occupies a contiguous portion of the MR brain image, so the distribution of tumor pixels is not sparse pixel-wise but structurally sparse. This motivates us to model the tumor region using a structured sparsity norm. Because the structured sparsity norm described in Jia et al. (2012) effectively encourages the sparse component to form structured patterns and is easy to implement within the low-rank and sparse decomposition scheme, we employ it (Jia et al., 2012) to model the tumor region in this study. Note that this structured sparsity could be replaced by sparsity in a different basis (e.g., a wavelet basis), but such sparsity would need to account for the spatial connectivity of the sparse pixels.

The tumor segmentation performance of our method could still be improved, especially compared with state-of-the-art deep learning based segmentation models (Pereira et al., 2016; Havaei et al., 2017). However, these deep learning based methods typically require a large number of high-quality labeled images to train a medical image segmentation model. Some recent approaches (Mlynarski et al., 2018; Shah et al., 2018) proposed mixed-supervision schemes that train deep neural networks with a minority of images with high-quality per-pixel labels and a majority of images with coarse-level annotations (bounding boxes, landmarks or image-level annotations); however, preparing annotations such as bounding boxes and landmarks is still laborious. Compared with deep learning based methods, the advantage of TLS2D is that it does not require labeled images to train a segmentation model; it extracts tumor regions by analyzing normal MR image appearances learned from unlabeled population data. Moreover, the segmentation results of our method could reduce the manual labeling effort required from clinicians; for example, they could serve as label information for the semi-supervised training of deep learning based segmentation models (Papandreou et al., 2015; Bai et al., 2017).

Author Contributions

CL, YW, TW, and DN: responsible for study design; CL: implemented the research and conducted the experiments; CL and YW: conceived the experiments, analyzed the results, wrote the main manuscript text and prepared the figures. All authors reviewed the manuscript.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The work described in this paper was supported in part by the National Natural Science Foundation of China (No. 61701312), in part by the Natural Science Foundation of Shenzhen University (No. 2018010), and in part by the Shenzhen Peacock Plan under Grant KQTD2016053112051497.

References

Achanta, R., Shaji, A., Smith, K., Lucchi, A., Fua, P., Süsstrunk, S., et al. (2012). SLIC superpixels compared to state-of-the-art superpixel methods. IEEE Trans. Pattern Anal. Mach. Intell. 34, 2274–2282. doi: 10.1109/TPAMI.2012.120

Adams, A., Baek, J., and Davis, M. A. (2010). Fast high-dimensional filtering using the permutohedral lattice. Comput. Graph. Forum 29, 753–762. doi: 10.1111/j.1467-8659.2009.01645.x

Bai, W., Oktay, O., Sinclair, M., Suzuki, H., Rajchl, M., Tarroni, G., et al. (2017). “Semi-supervised learning for network-based cardiac MR image segmentation,” in International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI) (Quebec City, QC: Springer), 253–260.

Boyd, S., Parikh, N., Chu, E., Peleato, B., and Eckstein, J. (2011). Distributed optimization and statistical learning via the alternating direction method of multipliers. Found. Trends Mach. Learn. 3, 1–122. doi: 10.1561/2200000016

Cabezas, M., Oliver, A., Lladó, X., Freixenet, J., and Cuadra, M. B. (2011). A review of atlas-based segmentation for magnetic resonance brain images. Comput. Methods Programs Biomed. 104, e158–e177. doi: 10.1016/j.cmpb.2011.07.015

Candès, E. J., Li, X., Ma, Y., and Wright, J. (2011). Robust principal component analysis? J. ACM (JACM) 58, 11. doi: 10.1145/1970392.1970395

Chang, H.-H., Zhuang, A. H., Valentino, D. J., and Chu, W.-C. (2009). Performance measure characterization for evaluating neuroimage segmentation algorithms. Neuroimage 47, 122–135. doi: 10.1016/j.neuroimage.2009.03.068

Chen, H., Dou, Q., Yu, L., Qin, J., and Heng, P.-A. (2018). Voxresnet: deep voxelwise residual networks for brain segmentation from 3D MR images. NeuroImage 170, 446–455. doi: 10.1016/j.neuroimage.2017.04.041

Gordillo, N., Montseny, E., and Sobrevilla, P. (2013). State of the art survey on MRI brain tumor segmentation. Magn. Reson. Imaging 31, 1426–1438. doi: 10.1016/j.mri.2013.05.002

Han, X., Kwitt, R., Aylward, S., Bakas, S., Menze, B., Asturias, A., et al. (2018). Brain extraction from normal and pathological images: a joint PCA/image-reconstruction approach. NeuroImage 176, 431–445. doi: 10.1016/j.neuroimage.2018.04.073

Havaei, M., Davy, A., Warde-Farley, D., Biard, A., Courville, A., Bengio, Y., et al. (2017). Brain tumor segmentation with deep neural networks. Med. Image Anal. 35, 18–31. doi: 10.1016/j.media.2016.05.004

Iglesias, J. E., and Sabuncu, M. R. (2015). Multi-atlas segmentation of biomedical images: a survey. Med. Image Anal. 24, 205–219. doi: 10.1016/j.media.2015.06.012

Jia, K., Chan, T. H., and Ma, Y. (2012). “Robust and practical face recognition via structured sparsity,” in European Conference on Computer Vision (ECCV) (Florence: Springer), 331–344.

Joshi, S., Davis, B., Jomier, M., and Gerig, G. (2004). Unbiased diffeomorphic atlas construction for computational anatomy. Neuroimage 23, S151–S160. doi: 10.1016/j.neuroimage.2004.07.068

Kamnitsas, K., Ledig, C., Newcombe, V. F., Simpson, J. P., Kane, A. D., Menon, D. K., et al. (2017). Efficient multi-scale 3D cnn with fully connected CRF for accurate brain lesion segmentation. Med. Image Anal. 36, 61–78. doi: 10.1016/j.media.2016.10.004

Lin, Z., Chen, M., Wu, L., and Ma, Y. (2010). The augmented Lagrange multiplier method for exact recovery of corrupted low-rank matrices. arXiv [Preprint] arXiv:1009.5055.

Litjens, G., Kooi, T., Bejnordi, B. E., Setio, A. A. A., Ciompi, F., Ghafoorian, M., et al. (2017). A survey on deep learning in medical image analysis. Med. Image Anal. 42, 60–88. doi: 10.1016/j.media.2017.07.005

Liu, X., Niethammer, M., Kwitt, R., McCormick, M., and Aylward, S. (2014). “Low-rank to the rescue–atlas-based analyses in the presence of pathologies,” in International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI) (Cambridge, MA: Springer), 97–104.

Liu, X., Niethammer, M., Kwitt, R., Singh, N., McCormick, M., and Aylward, S. (2015). Low-rank atlas image analyses in the presence of pathologies. IEEE Trans. Med. Imaging 34, 2583–2591. doi: 10.1109/TMI.2015.2448556

Mai, J. K., Majtanik, M., and Paxinos, G. (2015). Atlas of the Human Brain. Cambridge, MA: Academic Press.

Mairal, J., Jenatton, R., Bach, F. R., and Obozinski, G. R. (2010). “Network flow algorithms for structured sparsity,” in Advances in Neural Information Processing Systems (Vancouver, BC), 1558–1566.

Menze, B. H., Jakab, A., Bauer, S., Kalpathy-Cramer, J., Farahani, K., Kirby, J., et al. (2015). The multimodal brain tumor image segmentation benchmark (BRATS). IEEE Trans. Med. Imaging 34, 1993–2024. doi: 10.1109/TMI.2014.2377694

Mlynarski, P., Delingette, H., Criminisi, A., and Ayache, N. (2018). Deep learning with mixed supervision for brain tumor segmentation. arXiv [Preprint] arXiv:1812.04571.

Myronenko, A., and Song, X. (2010). Intensity-based image registration by minimizing residual complexity. IEEE Trans. Med. Imaging 29, 1882–1891. doi: 10.1109/TMI.2010.2053043

Otazo, R., Candès, E., and Sodickson, D. K. (2015). Low-rank plus sparse matrix decomposition for accelerated dynamic MRI with separation of background and dynamic components. Magn. Reson. Med. 73, 1125–1136. doi: 10.1002/mrm.25240

Papandreou, G., Chen, L.-C., Murphy, K. P., and Yuille, A. L. (2015). “Weakly-and semi-supervised learning of a deep convolutional network for semantic image segmentation,” in Proceedings of the IEEE International Conference on Computer Vision (ICCV) (Santiago), 1742–1750.

Parikh, N., and Boyd, S. (2014). Proximal algorithms. Found. Trends Optim. 1, 127–239. doi: 10.1561/2400000003

Peng, Y., Ganesh, A., Wright, J., Xu, W., and Ma, Y. (2012). RASL: robust alignment by sparse and low-rank decomposition for linearly correlated images. IEEE Trans. Pattern Anal. Mach. Intell. 34, 2233–2246. doi: 10.1109/TPAMI.2011.282

Perazzi, F., Krähenbühl, P., Pritch, Y., and Hornung, A. (2012). “Saliency filters: contrast based filtering for salient region detection,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (Providence, RI), 733–740.

Pereira, S., Pinto, A., Alves, V., and Silva, C. A. (2016). Brain tumor segmentation using convolutional neural networks in MRI images. IEEE Trans. Med. Imaging 35, 1240–1251. doi: 10.1109/TMI.2016.2538465

Shah, M. P., Merchant, S., and Awate, S. P. (2018). “MS-Net: mixed-supervision fully-convolutional networks for full-resolution segmentation,” in International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI) (Granada: Springer), 379–387.

Shattuck, D. W., Mirza, M., Adisetiyo, V., Hojatkashani, C., Salamon, G., Narr, K. L., et al. (2008). Construction of a 3D probabilistic atlas of human cortical structures. NeuroImage 39, 1064–1080. doi: 10.1016/j.neuroimage.2007.09.031

Shen, D., Wu, G., and Suk, H.-I. (2017). Deep learning in medical image analysis. Annu. Rev. Biomed. Eng. 19, 221–248. doi: 10.1146/annurev-bioeng-071516-044442

Sotiras, A., Davatzikos, C., and Paragios, N. (2013). Deformable medical image registration: a survey. IEEE Trans. Med. Imaging 32, 1153–1190. doi: 10.1109/TMI.2013.2265603

Tang, Z., Ahmad, S., Yap, P. T., and Shen, D. (2018). Multi-atlas segmentation of MR tumor brain images using low-rank based image recovery. IEEE Trans. Med. Imaging 37, 2224–2235. doi: 10.1109/TMI.2018.2824243

Wang, Y., Zheng, Q., and Heng, P. A. (2018). Online robust projective dictionary learning: shape modeling for MR-TRUS registration. IEEE Trans. Med. Imaging 37, 1067–1078. doi: 10.1109/TMI.2017.2777870

Wang, Z., Bovik, A. C., Sheikh, H. R., and Simoncelli, E. P. (2004). Image quality assessment: from error visibility to structural similarity. IEEE Trans. Image Process. 13, 600–612. doi: 10.1109/TIP.2003.819861

Wright, J., Ganesh, A., Rao, S., Peng, Y., and Ma, Y. (2009). “Robust principal component analysis: exact recovery of corrupted low-rank matrices via convex optimization,” in Advances in Neural Information Processing Systems (Vancouver, BC), 2080–2088.

Zhao, X., Wu, Y., Song, G., Li, Z., Zhang, Y., and Fan, Y. (2018). A deep learning model integrating FCNNs and CRFs for brain tumor segmentation. Med. Image Anal. 43, 98–111. doi: 10.1016/j.media.2017.10.002

Zheng, Q., Wang, Y., and Heng, P. A. (2017). “Online robust image alignment via subspace learning from gradient orientations,” in Proceedings of the IEEE International Conference on Computer Vision (ICCV) (Venice), 1771–1780.

Keywords: MR brain images, image recovery, tumor segmentation, structured sparsity, low-rank, matrix decomposition

Citation: Lin C, Wang Y, Wang T and Ni D (2019) Low-Rank Based Image Analyses for Pathological MR Image Segmentation and Recovery. Front. Neurosci. 13:333. doi: 10.3389/fnins.2019.00333

Received: 13 January 2019; Accepted: 21 March 2019; Published: 09 April 2019.

Edited by:

Yangming Ou, Harvard Medical School, United States

Reviewed by:

Xiaoxiao Liu, CuraCloud Corporation, United States

Suyash P. Awate, Indian Institute of Technology Bombay, India

Copyright © 2019 Lin, Wang, Wang and Ni. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yi Wang, onewang@szu.edu.cn
