- 1Institute for Bioengineering of Catalunya, Barcelona, Spain

- 2Barcelona Institute for Science and Technology, Barcelona, Spain

- 3Universitat Pompeu Fabra, Barcelona, Spain

- 4Institució Catalana de Recerca i Estudis Avançats, Barcelona, Spain

The grand quest for a scientific understanding of consciousness has given rise to many new theoretical and empirical paradigms for investigating the phenomenology of consciousness as well as the clinical disorders associated with it. A major challenge in this field is to formalize computational measures that can reliably quantify global brain states from data. In particular, information-theoretic complexity measures such as integrated information have been proposed as measures of conscious awareness. This suggests a new framework to quantitatively classify states of consciousness. However, it has proven increasingly difficult to apply these complexity measures to realistic brain networks. In part, this is due to the high computational costs incurred when implementing these measures on realistically large networks. Nonetheless, complexity measures for quantifying states of consciousness are important for assisting clinical diagnosis and therapy. This article is meant to serve as a lookup table of measures of consciousness, with particular emphasis on clinical applicability. We consider both principle-based complexity measures and empirical measures tested on patients. We address challenges facing these measures with regard to realistic brain networks and, where necessary, suggest possible resolutions.

1. Introduction

In patients with disorders of consciousness (DoC), such as locked-in syndrome, coma or the vegetative state, levels of consciousness are clinically assessed through both behavioral tests and neurophysiological recordings. In particular, these methods are used to assess levels of wakefulness (or arousal) and awareness (Laureys et al., 2004; Laureys, 2005). These observations have culminated in a two-dimensional operational definition of clinical consciousness. Assessments of awareness use behavioral and/or neurophysiological (fMRI or EEG) protocols to gauge performance on cognitive tasks (Fingelkurts et al., 2012; De Pasquale et al., 2015). Assessments of wakefulness are based on metabolic markers (when reporting is not possible) such as estimates of glucose uptake in the brain, calibrated from positron emission tomography (PET) imaging (Bodart et al., 2017). A clinical definition of consciousness is useful when one wants to classify closely associated states and disorders of consciousness, for instance, when identifying clusters of related disorders on a bivariate scale, where wakefulness and awareness label orthogonal axes. Under healthy conditions, these two scales are almost linearly correlated, as in conscious wakefulness (which shows high levels of arousal accompanying high levels of awareness), or in various stages of sleep (displaying low arousal with low awareness in deep sleep and slightly increased awareness during dreams). However, in pathological states, wakefulness without awareness can be observed in the vegetative state (Laureys et al., 2004), while transiently reduced awareness is observed following seizures (Blumenfeld, 2012). Patients in the minimally conscious state show intermittent and limited non-reflexive and purposeful behavior (Giacino et al., 2002; Giacino, 2004), whereas patients with hemi-spatial neglect display reduced awareness of stimuli contralateral to the side where brain damage has occurred (Parton et al., 2004). Nevertheless, while awareness is directly associated with consciousness, the role of wakefulness is less direct. Of course, in all organic life forms metabolic processes are necessary precursors of consciousness, if it exists at all in those organisms. Still, it is not clear whether wakefulness does more than merely serve as a necessary condition for biological consciousness (Fingelkurts et al., 2014). It is also not known whether states of consciousness can exist that do not depend on any form of wakefulness.

Given the above proposals for classifying states and disorders of consciousness, the topic of this article will concern complexity measures that describe awareness. The question is how can one reliably quantify states of awareness from patient data? This is particularly important for non-communicative patients as in coma or minimal consciousness (MC). It is for this reason that a variety of dynamical complexity measures have been developed for consciousness research. In this article, we first describe theoretically-grounded complexity measures and the challenges one faces when applying these measures to realistic brain data. We then outline alternative empirical approaches to classify states and disorders of consciousness. We end with a discussion on how these two approaches might inform each other.

2. Measures of Integrated Information

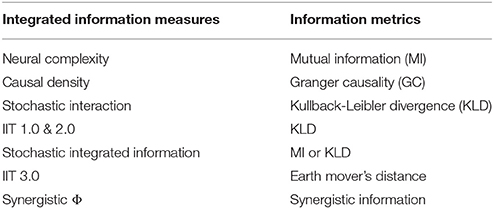

Dynamical complexity measures are designed to capture both the network's topology and its causal dynamics. The most prominent of these measures is integrated information (also known as Φ), which was introduced in Tononi et al. (1994). Φ is defined as the quantity of information generated by a system as a whole, over and above that of its parts, taking into account the system's causal dynamical interactions. This reflects the intuition going back to William James that conscious states are integrated, yet diverse. Φ seeks to formalize this intuition in terms of the system's complexity. It is believed that complexity stems from simultaneous integration and differentiation of information generated by the system's collective states. Here differentiation refers to local functional specialization, while integration, as a complementary design principle, results in global or distributed coordination within the system. It is this interplay that generates integrated yet diversified information, which is believed to support cognitive and behavioral states in conscious agents. Early proposals formalizing integrated information can be found in Tononi et al. (1994), Tononi and Sporns (2003), and Tononi (2004). More recently, considerable progress has been made toward the development of a normative theory and applications of integrated information (Balduzzi and Tononi, 2008; Barrett and Seth, 2011; Tononi, 2012; Arsiwalla and Verschure, 2013; Oizumi et al., 2014; Arsiwalla and Verschure, 2016a,c; Krohn and Ostwald, 2016; Tegmark, 2016; Arsiwalla et al., 2017b; Arsiwalla and Verschure, 2017). As a whole vs. parts measure, integrated information has been defined in several different ways. Some examples are neural complexity (Tononi et al., 1994), causal density (Seth, 2005), Φ from integrated information theory: IIT 1.0, 2.0 & 3.0 (Tononi, 2004; Balduzzi and Tononi, 2008; Oizumi et al., 2014), stochastic interaction (Wennekers and Ay, 2005; Ay, 2015), stochastic integrated information (Barrett and Seth, 2011; Arsiwalla and Verschure, 2013, 2016b) and synergistic Φ (Griffith and Koch, 2014; Griffith, 2014). In Table 1 we summarize these theoretical measures along with their associated information metrics.

However, computing integrated information for large neurophysiological datasets has been challenging, due both to computational difficulties and to limits on the domains where these measures can be implemented. For instance, many of these measures use the minimum information partition of the network. Finding it involves evaluating a large number of network configurations (more precisely, a number given by the Bell number of the network size), which makes the computational cost extremely high for large networks. As for domains of applicability, the measure of Balduzzi and Tononi (2008) has been formulated for deterministic, discrete-state, Markovian systems with the maximum entropy distribution. On the other hand, the measure of Barrett and Seth (2011) has been designed for stochastic, continuous-state, non-Markovian systems. The latter also admits dynamics with any empirical distribution (though in practice, it is easier to use with Gaussian distributions). The definition in Barrett and Seth (2011) is based on mutual information, whereas Balduzzi and Tononi (2008) use an integrated information measure based on Kullback-Leibler divergence. However, it has also been noted that in some cases the measure of Barrett and Seth (2011) gives negative values, which complicates its interpretation. In contrast, the Kullback-Leibler based measure of Balduzzi and Tononi (2008) computes the information generated during state transitions and remains positive in the regime of stable dynamics. This lends it a natural interpretation as an integrated information measure. Note that both measures (Balduzzi and Tononi, 2008; Barrett and Seth, 2011) use a normalization scheme in their calculations. Normalization is used for the purpose of determining the network partition that minimizes integrated information. However, it turns out that a normalization-dependent choice of partition ends up affecting the computed value of Φ, thereby introducing ambiguity into the computation. An alternative measure based on “Earth-Mover's distance” was proposed in Oizumi et al. (2014) for discrete-state systems. This does away with normalization. However, this formulation lies outside the scope of standard information theory and is challenging to compute on large networks.
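To give a sense of how quickly the partition search grows, the following minimal Python sketch computes Bell numbers (the number of ways to partition a set of n elements) using the Bell triangle. The function name `bell_numbers` is our own choice for illustration; the sketch only counts partitions and does not evaluate any integrated information measure.

```python
def bell_numbers(n_max):
    """Return [B_0, ..., B_n_max], where B_n counts the partitions of an n-element set."""
    bells = [1]   # B_0 = 1 (the empty set has exactly one partition)
    row = [1]     # first row of the Bell triangle
    for _ in range(n_max):
        # Each new row starts with the last entry of the previous row;
        # every further entry adds the entry above-left to its left neighbour.
        new_row = [row[-1]]
        for value in row:
            new_row.append(new_row[-1] + value)
        row = new_row
        bells.append(row[0])
    return bells


if __name__ == "__main__":
    for n, b in enumerate(bell_numbers(20)):
        print(f"n = {n:2d}  partitions = {b}")
```

Already for a network of 10 nodes there are 115,975 partitions to consider, which illustrates why an exhaustive search becomes impractical for networks with hundreds of nodes.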

More recently, the above-mentioned issues, including normalization and scaling of computational costs with network size, have been addressed in Arsiwalla and Verschure (2016b). This study developed a formulation of stochastic integrated information based on the Kullback-Leibler divergence between the conditional multivariate distribution on the set of network states vs. the corresponding factorized distribution over its parts. The corresponding measure makes use of the maximum information partition rather than the minimum information partition used in previous measures. Using this formulation, Φ can be computed for large-scale networks with linear stochastic dynamics, for stationary (attractor) as well as non-stationary states (Arsiwalla and Verschure, 2016b). This work also demonstrated the first computation of integrated information for resting-state data of the human brain connectome. The connectome network is reconstructed from cortical white matter tractography. The data used for this computation consists of 998 voxels with approximately 28,000 weighted symmetric connections (Hagmann et al., 2008). Arsiwalla and Verschure (2016b) show that the topology and dynamics of the healthy human brain in the resting-state generate greater information complexity than a weight-preserving random rewiring of the same network (for network dynamics of the connectome refer to Arsiwalla et al., 2013; Betella et al., 2014; Arsiwalla et al., 2015a,b). While this formulation of integrated information indeed works for very large networks, it is still limited to linearized dynamics. Of course, this works well in the vicinity of attractors, as in the resting-state. However, it would be desirable to extend this formulation to include the non-linearities existing in brain dynamics.
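Schematically, the construction described above can be written as a Kullback-Leibler divergence between a conditional distribution over whole-network states and its factorized counterpart over parts. The notation below is assumed here for illustration and is not taken from the original paper: X and X' denote the network state at two time points, and M^k, M'^k denote the corresponding states of the k-th part. For linear stochastic dynamics the distributions involved are Gaussian, in which case the divergence has the standard closed form given in the second line, which depends only on means and covariance matrices.

```latex
\Phi \;=\; D_{\mathrm{KL}}\!\left( p(\mathbf{X} \mid \mathbf{X}') \,\Big\|\, \prod_{k=1}^{n} p(\mathbf{M}^{k} \mid \mathbf{M}'^{k}) \right)

D_{\mathrm{KL}}\!\left( \mathcal{N}(\boldsymbol{\mu}, \Sigma) \,\big\|\, \mathcal{N}(\tilde{\boldsymbol{\mu}}, \tilde{\Sigma}) \right)
 \;=\; \tfrac{1}{2} \left[ \operatorname{tr}\!\left( \tilde{\Sigma}^{-1} \Sigma \right)
 + (\tilde{\boldsymbol{\mu}} - \boldsymbol{\mu})^{\mathsf{T}} \tilde{\Sigma}^{-1} (\tilde{\boldsymbol{\mu}} - \boldsymbol{\mu})
 - d + \ln \frac{\det \tilde{\Sigma}}{\det \Sigma} \right]
```

Here d is the dimension of the state vector. The exact conditioning, the choice of partition and the treatment of non-stationary states follow Arsiwalla and Verschure (2016b) and are not reproduced in this sketch.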

3. Empirical Measures

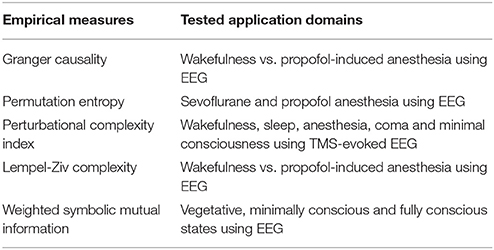

Ideally, integrated information was intended as a measure of awareness, one that could account for informational differences between states and also disorders of consciousness. However, as described above, for realistic brain dynamics and physiological data that task has in fact proven difficult. On the other hand, the basic conceptualization of consciousness in terms of integration and differentiation of causal information has motivated several empirical measures that seek to classify consciousness-related disorders from patient data. For example, Barrett et al. (2012) investigated changes in conscious levels using Granger Causality (GC) as a causal connectivity measure. Given two stationary time-series signals, Granger Causality measures “the extent to which the past of one assists in predicting the future of the other, over and above the extent to which the past of the latter already predicts its own future” (Granger, 1969), thus quantifying causal relations between two signaling sources. This was tested using electroencephalographic (EEG) data from subjects undergoing propofol-induced anesthesia. Recordings were made with source-localized signals at the anterior and posterior cingulate cortices. There, Barrett et al. (2012) found “a significant increase in bi-directional GC in most subjects during loss of consciousness, especially in the beta and gamma frequency ranges.”
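The definition of Granger Causality quoted above can be illustrated with a minimal time-domain sketch. It assumes a bivariate autoregressive model with a single lag and no intercept, and reports the log ratio of residual variances of the restricted model (own past only) and the full model (own past plus the other signal's past). This is a simplification chosen for illustration; it is not the spectral, source-localized analysis of Barrett et al. (2012), and all function names are hypothetical.

```python
import math
import random


def _demean(series):
    mean = sum(series) / len(series)
    return [v - mean for v in series]


def _residual_variance(target, regressors):
    """Mean squared OLS residual of `target` on one or two regressors (no intercept)."""
    if len(regressors) == 1:
        (a,) = regressors
        beta = sum(u * t for u, t in zip(a, target)) / sum(u * u for u in a)
        residuals = [t - beta * u for u, t in zip(a, target)]
    else:
        a, b = regressors
        # Solve the 2x2 normal equations in closed form.
        saa = sum(u * u for u in a)
        sbb = sum(v * v for v in b)
        sab = sum(u * v for u, v in zip(a, b))
        sat = sum(u * t for u, t in zip(a, target))
        sbt = sum(v * t for v, t in zip(b, target))
        det = saa * sbb - sab * sab
        beta_a = (sat * sbb - sab * sbt) / det
        beta_b = (saa * sbt - sab * sat) / det
        residuals = [t - beta_a * u - beta_b * v for u, v, t in zip(a, b, target)]
    return sum(r * r for r in residuals) / len(residuals)


def granger_causality(source, target):
    """Lag-1 Granger causality from `source` to `target` (log variance ratio)."""
    source, target = _demean(source), _demean(target)
    future = target[1:]
    own_past, other_past = target[:-1], source[:-1]
    restricted = _residual_variance(future, [own_past])
    full = _residual_variance(future, [own_past, other_past])
    return math.log(restricted / full)


def simulate_coupled_pair(n=2000, seed=0):
    """Toy data in which x drives y with a one-step delay, but not vice versa."""
    rng = random.Random(seed)
    x, y = [0.0], [0.0]
    for _ in range(n - 1):
        x.append(0.5 * x[-1] + rng.gauss(0, 1))
        y.append(0.5 * y[-1] + 0.8 * x[-2] + rng.gauss(0, 1))
    return x, y


if __name__ == "__main__":
    x, y = simulate_coupled_pair()
    print("GC x -> y:", granger_causality(x, y))
    print("GC y -> x:", granger_causality(y, x))
```

On the simulated pair, the value from x to y should be clearly larger than the value from y to x, reflecting the one-directional coupling built into the toy data. Applications to EEG typically use higher model orders and frequency-resolved variants.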

Other measures of causal connectivity include permutation entropy (Olofsen et al., 2008), transfer entropy (which extends Granger causality to the non-Gaussian case) (Wibral et al., 2014), symbolic transfer entropy (Staniek and Lehnertz, 2009) and permutation conditional mutual information (Li and Ouyang, 2010). Among these, permutation entropy has been tested on EEG data from patients administered sevoflurane and propofol anesthesia. This measure has been successful in tracking anesthetic-related changes in EEG patterns such as loss of high frequencies, spindle-like waves and delta waves. The other causal connectivity measures in this list have so far been applied to data from neuronal cultures or psychophysical paradigms. Many of them have shown results superior to Granger causality in the above-mentioned works. This suggests that they might be promising candidates as empirical consciousness measures.
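As an illustration of the first measure in this list, permutation entropy can be sketched as the Shannon entropy of the distribution of ordinal patterns found in short windows of a signal. The sketch below is a generic textbook-style version; the embedding order, the delay and the normalization by log2(order!) are assumptions made here, not the settings used in Olofsen et al. (2008).

```python
import math
import random
from collections import Counter


def ordinal_pattern(window):
    """Indices of the window's samples sorted by value (ties broken by position)."""
    return tuple(sorted(range(len(window)), key=lambda i: window[i]))


def permutation_entropy(signal, order=3, delay=1, normalize=True):
    """Shannon entropy (in bits) of the ordinal patterns of length `order`."""
    span = (order - 1) * delay
    patterns = Counter(
        ordinal_pattern(signal[i:i + span + 1:delay])
        for i in range(len(signal) - span)
    )
    total = sum(patterns.values())
    entropy = -sum((c / total) * math.log2(c / total) for c in patterns.values())
    if normalize:
        # log2(order!) is the entropy of a uniform distribution over all patterns.
        entropy /= math.log2(math.factorial(order))
    return entropy


if __name__ == "__main__":
    rng = random.Random(0)
    noise = [rng.gauss(0, 1) for _ in range(1000)]
    ramp = list(range(1000))
    print("white noise:", permutation_entropy(noise))
    print("monotonic ramp:", permutation_entropy(ramp))
```

A monotonic ramp contains a single ordinal pattern and therefore has zero permutation entropy, while white noise approaches the normalized maximum of 1.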

An important measure that has been used as a clinical classifier of conscious states is the Perturbational Complexity Index (PCI), which was introduced by Casali et al. (2013) and tested on TMS-evoked potentials measured with EEG. PCI is calculated “by perturbing the cortex with transcranial magnetic stimulation (TMS) in order to engage distributed interactions in the brain and then compressing the resulting spatiotemporal EEG responses to measure their algorithmic complexity, based on the Lempel-Ziv compression.” For a given segment of EEG data, the Lempel-Ziv algorithm quantifies complexity by counting the number of distinct patterns present in the data. For instance, this can be proportional to the size of a computer file after applying a data compression algorithm. Computing the Lempel-Ziv compressibility requires binarizing the time-series data, based either on event-related potentials or with respect to a given threshold. Using PCI, Casali et al. (2013) were able to discriminate levels of consciousness during wakefulness, sleep, and anesthesia, as well as in patients who had emerged from coma and recovered a minimal level of consciousness. Later, the Lempel-Ziv complexity was also used by Schartner et al. (2015) on spontaneous high-density EEG data recorded from subjects undergoing propofol-induced anesthesia. Once again, a robust decline in complexity was observed during anesthesia. These are complexity measures based on data compression algorithms. A qualitative comparison between a data compression measure inspired by PCI and Φ was made in Virmani and Nagaraj (2016). While compression-based measures do seem to capture certain aspects of Φ, the exact relationship between the two is not completely clear. Nonetheless, these empirical measures have been useful for clinical purposes, in terms of broadly discriminating disorders of consciousness.
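The counting of distinct patterns described above can be sketched as follows. The code binarizes a signal around its median (one possible choice of threshold, assumed here) and counts the phrases in a Lempel-Ziv (1976) style parsing of the resulting binary string. It illustrates only the compression step; it is not the PCI pipeline of Casali et al. (2013), which additionally involves TMS-evoked responses, source modeling, statistical thresholding and normalization. Function names are hypothetical.

```python
import random
import statistics


def binarize(signal):
    """Map each sample to '1' if it exceeds the median of the signal, else '0'."""
    threshold = statistics.median(signal)
    return "".join("1" if v > threshold else "0" for v in signal)


def lempel_ziv_complexity(bits):
    """Number of phrases in a Lempel-Ziv (1976) parsing of a binary string."""
    n = len(bits)
    i, count = 0, 0
    while i < n:
        length = 1
        # Extend the current phrase while it can still be copied from the
        # text that precedes its last symbol.
        while i + length <= n and bits[i:i + length] in bits[:i + length - 1]:
            length += 1
        count += 1
        i += length
    return count


if __name__ == "__main__":
    rng = random.Random(0)
    irregular = binarize([rng.gauss(0, 1) for _ in range(200)])
    periodic = "01" * 100
    print("irregular signal:", lempel_ziv_complexity(irregular))
    print("periodic signal:", lempel_ziv_complexity(periodic))
```

A periodic string is parsed into very few phrases, whereas an irregular string of the same length yields many more, which is the sense in which the phrase count tracks signal diversity. This direct implementation is slow for long recordings and is meant only for short illustrative inputs.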

Another relevant complexity measure is the weighted symbolic mutual information (wSMI), introduced by King et al. (2013). This is a measure of global information sharing across brain areas. It evaluates “the extent to which two EEG channels present nonrandom joint fluctuations, suggesting that they share common sources.” This is done by first transforming continuous signals into discrete symbols, and subsequently computing the joint probabilities of symbol pairs between two EEG channels. Before computing the symbolic mutual information between two time-series signals, a weighting of symbols is introduced to disregard conjunctions of either identical or opposite-sign symbols from the two signal trains, as those cases could potentially arise from common source artifacts. In King et al. (2013) wSMI was estimated for 181 EEG recordings from awake but noncommunicating patients diagnosed with various disorders of consciousness (including 143 from patients in vegetative and minimally conscious states). This measure of information sharing was found to systematically increase with consciousness. In particular, it was able to distinguish patients in the vegetative state, minimally conscious state, and fully conscious state. In Table 2 we summarize empirical complexity measures commonly used in consciousness research along with their domains of application.
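The steps described above (symbolization, joint symbol probabilities, and zero weight for identical or opposite symbol pairs) can be sketched as below. Several choices here are assumptions made for illustration: ordinal patterns of three samples serve as symbols, the "opposite" of a symbol is taken to be the pattern produced by the sign-flipped signal, and the result is normalized by log(order!). The parameter values and preprocessing of King et al. (2013) are not reproduced, and the function names are hypothetical.

```python
import math
import random
from collections import Counter


def symbolize(signal, order=3, delay=1):
    """Turn a signal into a sequence of ordinal-pattern symbols."""
    span = (order - 1) * delay
    return [
        tuple(sorted(range(order), key=lambda j: signal[i + j * delay]))
        for i in range(len(signal) - span)
    ]


def weighted_symbolic_mi(x, y, order=3, delay=1):
    """Symbolic mutual information with zero weight on identical/opposite pairs."""
    sx, sy = symbolize(x, order, delay), symbolize(y, order, delay)
    n = min(len(sx), len(sy))
    sx, sy = sx[:n], sy[:n]
    joint = Counter(zip(sx, sy))
    px, py = Counter(sx), Counter(sy)
    total = 0.0
    for (a, b), count in joint.items():
        # Weight 0: identical symbols, or symbols that are mirror images of each
        # other (the pattern a sign-flipped copy would produce, ignoring ties).
        if a == b or a == b[::-1]:
            continue
        p_ab = count / n
        total += p_ab * math.log(p_ab / ((px[a] / n) * (py[b] / n)))
    return total / math.log(math.factorial(order))


if __name__ == "__main__":
    rng = random.Random(0)
    x = [rng.gauss(0, 1) for _ in range(2000)]
    shifted = x[1:]                  # shares structure with x at a one-sample lag
    flipped = [-v for v in x]        # sign-flipped copy, as a common source might give
    print("x vs. shifted copy:", weighted_symbolic_mi(x, shifted))
    print("x vs. identical copy:", weighted_symbolic_mi(x, x))
    print("x vs. sign-flipped copy:", weighted_symbolic_mi(x, flipped))
```

Identical and sign-flipped copies receive a value of zero because every symbol pair they produce is discarded by the weighting, while the shifted copy produces a positive value from the remaining symbol pairs.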

4. Discussion

The paradigm-shifting proposal that consciousness might be measurable in terms of information generated as a whole, over the sum of its parts, by the causal dynamics of the brain, has led to precise quantitative formulations of information-theoretic complexity measures. These complexity measures seek to operationalize the intuition that the complexity associated with consciousness arises from simultaneous integration and differentiation of the brain's structural and dynamical hierarchies. However, progress in this direction has faced practical challenges such as high computational cost upon scaling with network size. This is especially true with regard to realistic neuroimaging or physiological datasets. Even in the approach of Arsiwalla and Verschure (2016b), where both the scaling and the normalization problems have been solved, the formulation is still applicable only to linear dynamical systems. A possible way to extend this formulation to non-linear systems such as the brain might be to first solve the Fokker-Planck equations for these systems (as probability distributions will no longer remain Gaussian) and subsequently estimate entropies and conditional entropies numerically to compute Φ. Another solution to the problem might be to construct statistical estimators for the covariance matrices from data and then compute Φ.

In the meantime, at least for clinical purposes, it has been useful to have empirical complexity measures. These work as classifiers which very broadly discriminate between states of consciousness, such as wakefulness and anesthesia, or between generic disorders of consciousness. However, these measures do not strictly correspond to integrated information. Some of them are based on signal compression, which does capture differentiation, though not directly integration. So far these methods have been applied on the scale of EEG datasets. One has yet to demonstrate their computational feasibility for larger datasets (which might only be a matter of time though). All in all, bottom-up approaches suggest important features that might help inform or constrain implementations of principle-based approaches. However, the latter are indispensable for ultimately understanding causal aspects of information generation and flow in the brain.

This article is intended as a lookup table spanning the landscape of both theoretically-motivated and empirically-based complexity measures used in current consciousness research. Even though, for the purpose of this article, we have treated complexity as a global correlate of consciousness, there are indications that multiple complexity types, based on cognitive and behavioral control, might be important for a more precise classification of various states of consciousness (Arsiwalla et al., 2016b, 2017c; Bayne et al., 2016). This latter observation alludes to the need for an integrative systems approach to consciousness research (Fingelkurts et al., 2009; Arsiwalla et al., 2017a), especially one that is grounded in cognitive architectures and helps understand control mechanisms underlying systems level neural information processing (Arsiwalla et al., 2016a; Moulin-Frier et al., 2017).

Author Contributions

Both XA and PV contributed to the design, analysis, interpretation and writing of the manuscript.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This work has been supported by the European Research Council's CDAC project: The Role of Consciousness in Adaptive Behavior: A Combined Empirical, Computational and Robot based Approach (ERC-2013- ADG 341196).

References

Arsiwalla, X. D., Betella, A., Bueno, E. M., Omedas, P., Zucca, R., and Verschure, P. F. (2013). “The dynamic connectome: a tool for large-scale 3d reconstruction of brain activity in real-time,” in ECMS, 865–869. doi: 10.7148/2013-0865

Arsiwalla, X. D., Dalmazzo, D., Zucca, R., Betella, A., Brandi, S., Martinez, E., et al. (2015a). Connectomics to semantomics: addressing the brain's big data challenge. Proc. Comput. Sci. 53, 48–55. doi: 10.1016/j.procs.2015.07.278

Arsiwalla, X. D., Herreros, I., Moulin-Frier, C., Sanchez, M., and Verschure, P. F. (2016a). Is Consciousness a Control Process? Amsterdam: IOS Press.

Arsiwalla, X. D., Herreros, I., Moulin-Frier, C., and Verschure, P. (2017a). “Consciousness as an evolutionary game-theoretic strategy,” in Conference on Biomimetic and Biohybrid Systems (Cham: Springer), 509–514.

Arsiwalla, X. D., Herreros, I., and Verschure, P. (2016b). On Three Categories of Conscious Machines. Cham: Springer International Publishing.

Arsiwalla, X. D., Mediano, P. A., and Verschure, P. F. (2017b). Spectral modes of network dynamics reveal increased informational complexity near criticality. Proc. Comput. Sci. 108, 119–128. doi: 10.1016/j.procs.2017.05.241

Arsiwalla, X. D., Moulin-Frier, C., Herreros, I., Sanchez-Fibla, M., and Verschure, P. F. (2017c). The morphospace of consciousness. arXiv:1705.11190.

Arsiwalla, X. D., and Verschure, P. (2016a). Computing Information Integration in Brain Networks. Cham: Springer International Publishing.

Arsiwalla, X. D., and Verschure, P. (2017). “Why the brain might operate near the edge of criticality,” in International Conference on Artificial Neural Networks (Cham: Springer), 326–333.

Arsiwalla, X. D., and Verschure, P. F. (2016b). The global dynamical complexity of the human brain network. Appl. Netw. Sci. 1:16. doi: 10.1007/s41109-016-0018-8

Arsiwalla, X. D., and Verschure, P. F. M. J. (2013). “Integrated information for large complex networks,” in The 2013 International Joint Conference on Neural Networks (IJCNN), (Dallas, TX), 1–7.

Arsiwalla, X. D., and Verschure, P. F. M. J. (2016c). High Integrated Information in Complex Networks Near Criticality. Cham: Springer International Publishing.

Arsiwalla, X. D., Zucca, R., Betella, A., Martinez, E., Dalmazzo, D., Omedas, P., et al. (2015b). Network dynamics with brainx3: a large-scale simulation of the human brain network with real-time interaction. Front. Neuroinform. 9:2. doi: 10.3389/fninf.2015.00002

Ay, N. (2015). Information geometry on complexity and stochastic interaction. Entropy 17, 2432–2458. doi: 10.3390/e17042432

Balduzzi, D., and Tononi, G. (2008). Integrated information in discrete dynamical systems: motivation and theoretical framework. PLoS Comput. Biol. 4:e1000091. doi: 10.1371/journal.pcbi.1000091

Barrett, A. B., Murphy, M., Bruno, M.-A., Noirhomme, Q., Boly, M., Laureys, S., et al. (2012). Granger causality analysis of steady-state electroencephalographic signals during propofol-induced anaesthesia. PLoS ONE 7:e29072. doi: 10.1371/journal.pone.0029072

Barrett, A. B., and Seth, A. K. (2011). Practical measures of integrated information for time-series data. PLoS Comput. Biol. 7:e1001052. doi: 10.1371/journal.pcbi.1001052

Bayne, T., Hohwy, J., and Owen, A. M. (2016). Are there levels of consciousness? Trends Cogn. Sci. 20, 405–413. doi: 10.1016/j.tics.2016.03.009

Betella, A., Bueno, E. M., Kongsantad, W., Zucca, R., Arsiwalla, X. D., Omedas, P., et al. (2014). “Understanding large network datasets through embodied interaction in virtual reality,” in Proceedings of the 2014 Virtual Reality International Conference, VRIC '14 (New York, NY: ACM), 23:1–23:7.

Blumenfeld, H. (2012). Impaired consciousness in epilepsy. Lancet Neurol. 11, 814–826. doi: 10.1016/S1474-4422(12)70188-6

Bodart, O., Gosseries, O., Wannez, S., Thibaut, A., Annen, J., Boly, M., et al. (2017). Measures of metabolism and complexity in the brain of patients with disorders of consciousness. NeuroImage 14, 354–362. doi: 10.1016/j.nicl.2017.02.002

Casali, A. G., Gosseries, O., Rosanova, M., Boly, M., Sarasso, S., Casali, K. R., et al. (2013). A theoretically based index of consciousness independent of sensory processing and behavior. Sci. Transl. Med. 5:198ra105. doi: 10.1126/scitranslmed.3006294

De Pasquale, F., Caravasso, C. F., Péran, P., Catani, S., Tuovinen, N., Sabatini, U., et al. (2015). Functional magnetic resonance imaging in disorders of consciousness: preliminary results of an innovative analysis of brain connectivity. Funct. Neurol. 30:193. doi: 10.11138/FNeur/2015.30.3.193

Fingelkurts, A. A., Fingelkurts, A. A., Bagnato, S., Boccagni, C., and Galardi, G. (2012). Toward operational architectonics of consciousness: basic evidence from patients with severe cerebral injuries. Cogn. Process. 13, 111–131. doi: 10.1007/s10339-011-0416-x

Fingelkurts, A. A., Fingelkurts, A. A., Bagnato, S., Boccagni, C., and Galardi, G. (2014). Do we need a theory-based assessment of consciousness in the field of disorders of consciousness? Front. Hum. Neurosci. 8:402. doi: 10.3389/fnhum.2014.00402

Fingelkurts, A. A., Fingelkurts, A. A., and Neves, C. F. (2009). Phenomenological architecture of a mind and operational architectonics of the brain: the unified metastable continuum. New Math. Nat. Comput. 5, 221–244. doi: 10.1142/S1793005709001258

Giacino, J. T. (2004). The vegetative and minimally conscious states: consensus-based criteria for establishing diagnosis and prognosis. NeuroRehabilitation 19, 293–298.

Giacino, J. T., Ashwal, S., Childs, N., Cranford, R., Jennett, B., Katz, D. I., et al. (2002). The minimally conscious state definition and diagnostic criteria. Neurology 58, 349–353. doi: 10.1212/WNL.58.3.349

Granger, C. W. J. (1969). Investigating causal relations by econometric models and cross-spectral methods. Econometrica 37, 424–438. doi: 10.2307/1912791

Griffith, V., and Koch, C. (2014). Quantifying Synergistic Mutual Information. Berlin, Heidelberg: Springer.

Hagmann, P., Cammoun, L., Gigandet, X., Meuli, R., Honey, C. J., Wedeen, V. J., et al. (2008). Mapping the structural core of human cerebral cortex. PLoS Biol. 6:e159. doi: 10.1371/journal.pbio.0060159

King, J.-R., Sitt, J. D., Faugeras, F., Rohaut, B., El Karoui, I., Cohen, L., et al. (2013). Information sharing in the brain indexes consciousness in noncommunicative patients. Curr. Biol. 23, 1914–1919. doi: 10.1016/j.cub.2013.07.075

Laureys, S. (2005). The neural correlate of (un) awareness: lessons from the vegetative state. Trends Cogn. Sci. 9, 556–559. doi: 10.1016/j.tics.2005.10.010

Laureys, S., Owen, A. M., and Schiff, N. D. (2004). Brain function in coma, vegetative state, and related disorders. Lancet Neurol. 3, 537–546. doi: 10.1016/S1474-4422(04)00852-X

Li, X., and Ouyang, G. (2010). Estimating coupling direction between neuronal populations with permutation conditional mutual information. NeuroImage 52, 497–507. doi: 10.1016/j.neuroimage.2010.05.003

Moulin-Frier, C., Puigbò, J.-Y., Arsiwalla, X. D., Sanchez-Fibla, M., and Verschure, P. F. (2017). Embodied artificial intelligence through distributed adaptive control: An integrated framework. arXiv:1704.01407.

Oizumi, M., Albantakis, L., and Tononi, G. (2014). From the phenomenology to the mechanisms of consciousness: integrated information theory 3.0. PLoS Comput. Biol. 10:e1003588. doi: 10.1371/journal.pcbi.1003588

Olofsen, E., Sleigh, J., and Dahan, A. (2008). Permutation entropy of the electroencephalogram: a measure of anaesthetic drug effect. Brit. J. Anaesth. 101, 810–821. doi: 10.1093/bja/aen290

Parton, A., Malhotra, P., and Husain, M. (2004). Hemispatial neglect. J. Neurol. Neurosurg. Psychiatry 75, 13–21.

Schartner, M., Seth, A., Noirhomme, Q., Boly, M., Bruno, M.-A., Laureys, S., et al. (2015). Complexity of multi-dimensional spontaneous eeg decreases during propofol induced general anaesthesia. PLoS ONE 10:e0133532. doi: 10.1371/journal.pone.0133532

Seth, A. K. (2005). Causal connectivity of evolved neural networks during behavior. Network 16, 35–54. doi: 10.1080/09548980500238756

Staniek, M., and Lehnertz, K. (2009). Symbolic transfer entropy: inferring directionality in biosignals. Biomed. Tech. 54, 323–328. doi: 10.1515/BMT.2009.040

Tononi, G. (2004). An information integration theory of consciousness. BMC Neurosci. 5:42. doi: 10.1186/1471-2202-5-42

Tononi, G. (2012). Integrated information theory of consciousness: an updated account. Arch. Ital. Biol. 150, 56–90.

Tononi, G., and Sporns, O. (2003). Measuring information integration. BMC Neurosci. 4:31. doi: 10.1186/1471-2202-4-31

Tononi, G., Sporns, O., and Edelman, G. M. (1994). A measure for brain complexity: relating functional segregation and integration in the nervous system. Proc. Natl. Acad. Sci. U.S.A. 91, 5033–5037. doi: 10.1073/pnas.91.11.5033

Virmani, M., and Nagaraj, N. (2016). A compression-complexity measure of integrated information. arXiv:1608.08450.

Wennekers, T., and Ay, N. (2005). Stochastic interaction in associative nets. Neurocomputing 65, 387–392. doi: 10.1016/j.neucom.2004.10.033

Keywords: consciousness in the clinic, computational neuroscience, complexity measures, information theory, clinical scales

Citation: Arsiwalla XD and Verschure P (2018) Measuring the Complexity of Consciousness. Front. Neurosci. 12:424. doi: 10.3389/fnins.2018.00424

Received: 29 December 2017; Accepted: 04 June 2018;

Published: 27 June 2018.

Edited by:

Mikhail Lebedev, Duke University, United States

Copyright © 2018 Arsiwalla and Verschure. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Xerxes D. Arsiwalla, eC5kLmFyc2l3YWxsYUBnbWFpbC5jb20=

Paul Verschure, cGF1bC52ZXJzY2h1cmVAZ21haWwuY29t

Xerxes D. Arsiwalla

Paul Verschure1,2,3,4*