
REVIEW article

Front. Hum. Neurosci., 11 September 2018

Sec. Cognitive Neuroscience

Volume 12 - 2018 | https://doi.org/10.3389/fnhum.2018.00361

A central question in neuroscience and psychology is how the mammalian brain represents the outside world and enables interaction with it. Significant progress on this question has been made in the domain of spatial cognition, where a consistent network of brain regions that represent external space has been identified in both humans and rodents. In rodents, much of the work to date has been done in situations where the animal is free to move about naturally. By contrast, the majority of work carried out to date in humans is static, due to limitations imposed by traditional laboratory-based imaging techniques. In recent years, significant progress has been made in bridging the gap between animal and human work by employing virtual reality (VR) technology to simulate aspects of real-world navigation. Despite this progress, VR studies often fail to fully simulate important aspects of real-world navigation, where information derived from self-motion is integrated with representations of environmental features and task goals. In the current review article, we provide a brief overview of animal and human imaging work to date, focusing on commonalities and differences in findings across species. Following on from this, we discuss VR studies of spatial cognition, outlining limitations and developments, before introducing mobile brain imaging techniques and describing technical challenges and solutions for real-world recording. Finally, we discuss how these advances in mobile brain imaging technology provide an unprecedented opportunity to illuminate how the brain represents complex multifaceted information during naturalistic navigation.

Navigation is a challenging, high-order cognitive problem. Even in the simplest setting, such as traveling to a landmark in plain sight over open terrain (e.g., towards a church spire), navigating involves determining the required direction of travel and estimating how far to proceed. In reality, however, navigation is rarely this straightforward. Everyday experience typically requires navigation in multiple distinct contexts, varying in time course, familiarity and environmental complexity. For example, walking to work typically involves following a learned route to a known location, whereas walking to a new location for the first time involves mapping out an entirely new route to the goal. In addition to differences in the nature of the navigation required, differences in the properties of the surrounding environment and in the distance to be covered also exert a defining influence on the combination of cognitive processes involved in navigation (e.g., crossing a toy-strewn nursery vs. driving to the airport). Despite the variety and complexity of the cognitive processes involved, four decades of electrophysiological and neuroimaging research has successfully identified a number of distinct brain regions that are critical for specific operational aspects of navigation.

A wealth of evidence indicates that hippocampal regions are central to navigation when reaching a goal involves internal representations or “cognitive mapping” of a familiar environment (e.g., O’Keefe and Nadel, 1978; Burgess, 2008; Spiers and Barry, 2015). The parahippocampal cortex and retrosplenial cortex are also known to play distinct roles in navigation, with the former facilitating encoding and recognition of local environmental scenes, and the latter supporting orientation and direction toward unseen goals in the broader environment (Epstein, 2008; Vann et al., 2009; although see Chadwick and Spiers, 2014). Moreover, it has been proposed that the retrosplenial cortex translates between allocentric (world-referenced) representations in the medial temporal lobe and egocentric (self-referenced) representations in the posterior parietal cortex (Byrne et al., 2007). In addition, the cerebellum is thought to track self-motion (Rochefort et al., 2011), while the prefrontal cortex is implicated in route planning and is also known to be involved in decision-making and strategy shifts during navigation (Poucet et al., 2004; Spiers, 2008). As will be described below, single-unit electrophysiological work in rodents has also identified brain regions with spatially tuned firing correlates (for reviews see Taube, 2007; Moser et al., 2008; Derdikman and Moser, 2010; Hartley et al., 2014).

While it is clear that significant progress has been made in identifying the core neural mechanisms involved in navigation, limitations in ecological validity leave important questions unanswered—particularly in relation to the integration of the multifaceted cues required in complex scenarios. In the following section we provide a selective overview of evidence at a cellular level, derived from studies employing lab-based imaging techniques in rodents and humans. After outlining some limitations associated with existing approaches, including recent virtual reality (VR) studies, we highlight developments in mobile imaging technology that provide an exciting opportunity to understand the complexity of real-world navigation in humans.

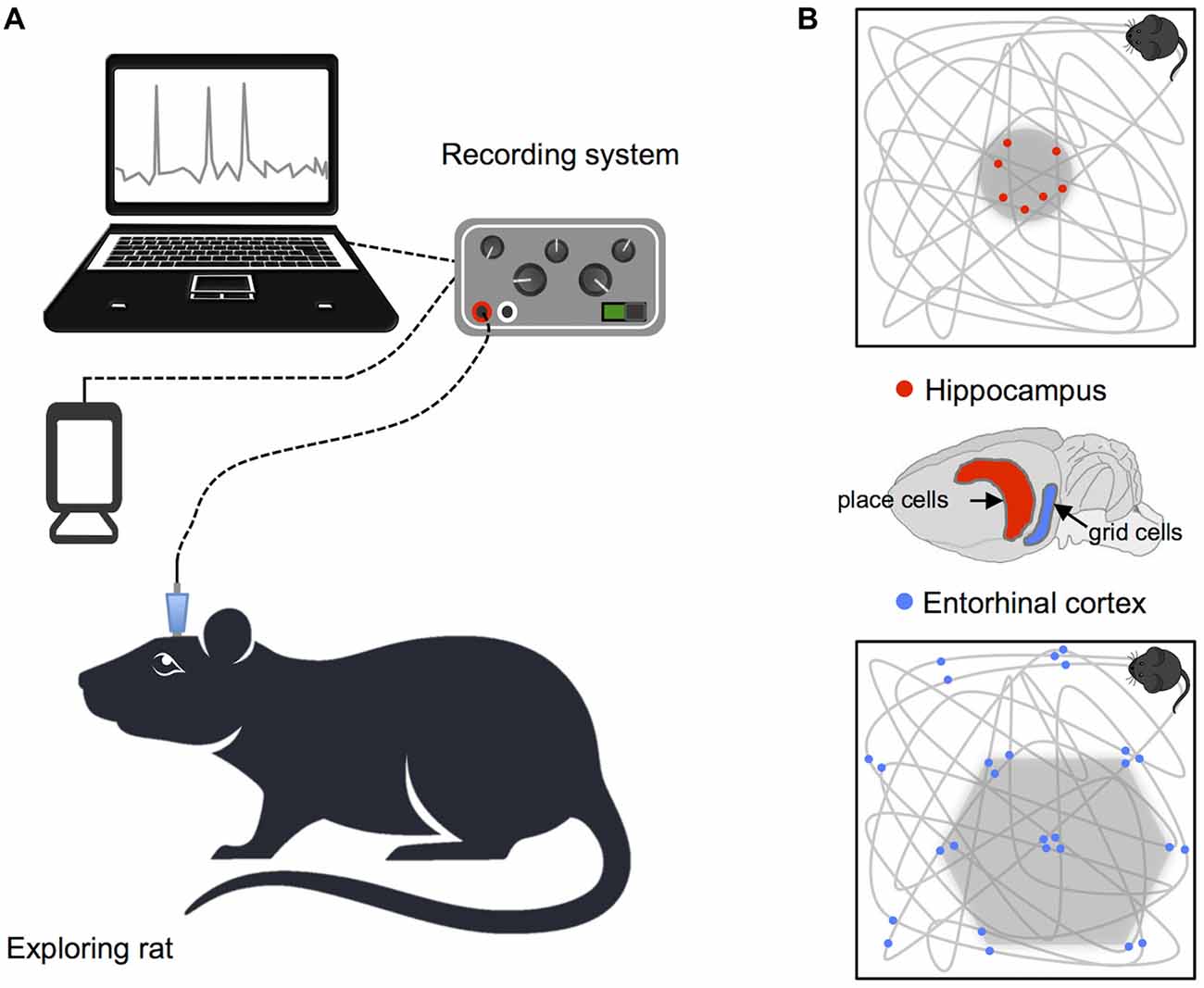

Studies of animals have led to significant insights regarding how neural systems contribute to identification of spatial location and orientation in the environment. Indeed, the bulk of our current understanding of neural representations of space in the hippocampal formation is derived from single-cell electrophysiological recordings in rodents moving freely around purpose-built enclosures. In a pioneering study, place cells with spatially specific receptive fields indicating the animal’s current position were identified in the CA3 and CA1 regions of the hippocampus (O’Keefe and Dostrovsky, 1971). Since this groundbreaking discovery, subsequent research has identified a number of additional cell types that combine to support self-localization and orientation in rodents (see Figure 1). For example, grid cells in the entorhinal cortex code for position with multiple receptive fields that fire at regularly spaced locations, forming a hexagonal pattern that maps out the available space (Fyhn et al., 2004; Hafting et al., 2005). In addition, head direction cells in the limbic system track facing direction, and border cells found in the subiculum, presubiculum, parasubiculum and entorhinal cortex signal proximity to the boundaries of the environment (Taube et al., 1990; Solstad et al., 2008; Lever et al., 2009; Boccara et al., 2010). Equivalent cells supporting spatial representation have also been found in mice and bats (Fyhn et al., 2008; Yartsev et al., 2011; Rubin et al., 2014; Finkelstein et al., 2015), and cells with functions corresponding to rodent place, head direction and grid cells have also been identified in non-human primates (Robertson et al., 1999; Hori et al., 2003; Killian et al., 2012).

Figure 1. Recording procedure in rodents and basic navigation cells. (A) Schematic illustration of the setup for recording single-cell data. (B) Examples of place and grid cell firing patterns: the gray lines show the animal’s path while exploring a square enclosure, and the small dots highlight locations at which single neurons fired action potentials. The brain diagram highlights the location of place cells in the hippocampus and grid cells in the entorhinal cortex.
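The hexagonal firing pattern of grid cells described above is often approximated, for illustration, by summing three plane waves oriented 60° apart. The sketch below assumes that textbook model; the function name `grid_rate`, its parameters and the chosen field spacing are hypothetical and are not taken from the studies cited here.

```python
import numpy as np


def grid_rate(x, y, spacing=0.5, orientation=0.0, phase=(0.0, 0.0)):
    """Idealized grid-cell firing rate, normalized to [0, 1], at position (x, y).

    Assumes the common three-plane-wave model of a grid cell (an illustration,
    not a fit to recorded data). `spacing` is the distance between neighbouring
    firing fields, in the same units as x and y.
    """
    k = 4 * np.pi / (np.sqrt(3) * spacing)  # wave number that yields the requested field spacing
    dx = np.asarray(x, dtype=float) - phase[0]
    dy = np.asarray(y, dtype=float) - phase[1]
    total = 0.0
    for j in range(3):
        theta = orientation + j * np.pi / 3  # wave directions 60 degrees apart
        total = total + np.cos(k * (np.cos(theta) * dx + np.sin(theta) * dy))
    # The sum of the three cosines spans [-1.5, 3]; rescale it to [0, 1].
    return (total + 1.5) / 4.5


# Rate map over a 1 m x 1 m enclosure sampled on a 100 x 100 grid.
xs, ys = np.meshgrid(np.linspace(0.0, 1.0, 100), np.linspace(0.0, 1.0, 100))
rate_map = grid_rate(xs, ys)
```

Plotting `rate_map` as an image shows firing peaks arranged on a triangular lattice, the idealized counterpart of the dot patterns in Figure 1B.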

Although a significant amount of research has been done on the single-unit correlates of spatial representations in rodents, the options for using invasive recording techniques in humans are necessarily limited (Hartley et al., 2014). Measurement at the cellular level in humans involves implantation of microelectrodes (depth or surface) and is primarily used to monitor seizure activity in patients with epilepsy. However, a small number of studies have employed intracranial recording during virtual navigation tasks, demonstrating the presence of place cells in the hippocampal formation, path cells (tracking direction of movement) in the entorhinal cortex, and grid cells in the cingulate cortex that resemble those found in rodents (Ekstrom et al., 2003; Jacobs et al., 2010, 2013). Moreover, in both rodents and humans, comparison of movement with slow or still periods has revealed increases in theta power during motion (e.g., Ekstrom et al., 2005). These findings are interpreted as evidence of functional parallels between hippocampal oscillations in humans and those found in rodents during navigation.

While similarities clearly exist in the basic neural mechanisms implicated in navigation across mammalian species, differences are also apparent, particularly in the nature of theta rhythms. In rodents, active navigation is associated with dominant hippocampal theta oscillations in the 4–10 Hz range, linked to self-motion and to the temporal coordination of firing patterns in place, grid and head direction cells during navigation (and a wide variety of other behaviors; see Buzsáki, 2005; Jacobs, 2014 for discussion). By contrast, in humans, hippocampal oscillations have been observed at a lower frequency, between 1 Hz and 4 Hz (Jacobs et al., 2007), and exhibit shorter bursts than are observed in rodents (Watrous et al., 2011). Moreover, cross-species differences in the operation of theta rhythms have emerged that appear to challenge the central role of theta oscillations in human navigation. For example, evidence from non-human primates has revealed variability in the frequency of the dominant theta oscillation (Stewart and Fox, 1991; Skaggs et al., 2007). Additionally, work with bats has demonstrated the operation of grid cells in the entorhinal cortex despite the absence of theta oscillations during active navigation (Yartsev et al., 2011), although this finding has proved somewhat controversial. Crucially, the slow movement speed of crawling bats is thought to be responsible for the failure to find the pattern of theta oscillations commonly observed in freely moving rodents, highlighting the importance of matching movement speed when comparing animals (see Barry et al., 2012). This point is not trivial, as evidence of cross-species differences in the frequency and duration of navigation-related theta oscillations (Watrous et al., 2013) is based on recordings in quite different scenarios (i.e., rats completed a Barnes-style maze while humans performed a virtual navigation task).
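To make the frequency bands above concrete, the sketch below estimates the average power of a signal within a band from a simple periodogram. It is a minimal illustration under stated assumptions: the band edges come from the text, while the function name, sampling rate and synthetic test signals are hypothetical, and published analyses typically use more robust estimators (e.g., Welch averaging or wavelets).

```python
import numpy as np

RODENT_THETA_HZ = (4.0, 10.0)  # rodent hippocampal theta range cited above
HUMAN_LOW_HZ = (1.0, 4.0)      # lower-frequency human hippocampal oscillations cited above


def band_power(signal, fs, band):
    """Mean periodogram power of `signal` (sampled at `fs` Hz) within band = (low, high) Hz."""
    signal = np.asarray(signal, dtype=float)
    signal = signal - signal.mean()  # remove the DC offset
    spectrum = np.abs(np.fft.rfft(signal)) ** 2 / len(signal)
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    return spectrum[in_band].mean()


# Synthetic examples: 4 s (1000 samples) at 250 Hz of a 7 Hz and a 2.5 Hz oscillation.
fs = 250.0
t = np.arange(1000) / fs
theta_like = np.sin(2 * np.pi * 7.0 * t)
slow_like = np.sin(2 * np.pi * 2.5 * t)
theta_dominates = band_power(theta_like, fs, RODENT_THETA_HZ) > band_power(theta_like, fs, HUMAN_LOW_HZ)
slow_dominates = band_power(slow_like, fs, HUMAN_LOW_HZ) > band_power(slow_like, fs, RODENT_THETA_HZ)
```

Applied separately to movement and stillness epochs, the same function gives one simple way to express the movement-related theta increases mentioned earlier.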

The preceding section emphasizes similarities and differences in the pattern of neural activity underlying navigation across species. Given that the bulk of knowledge about the role of the hippocampal formation in navigation is derived from work with rats, demonstrating parallels across species is valuable. Critically, however, cross-species neural differences have also been clearly demonstrated. Observations of variability in the frequency profile of hippocampal oscillations across species could be of little functional significance (largely reflecting differences in neuroanatomical organization or in methodology), or they could suggest that the cognitive and neural processes underlying navigation are not entirely equivalent in humans and animals. One key issue raised by existing work is a limitation inherent to traditional neuroimaging: whilst rats and other non-human animals can be examined during free movement, human studies rarely achieve equivalent realism. The fact that rodent studies reveal specific functional modulations of theta as a consequence of motion illustrates the importance of incorporating movement into human studies. With this in mind, we turn to the use of VR environments for investigating human navigation.

In recent years, there has been a move toward increasing ecological validity in human imaging studies by employing VR technology to simulate real-world scenarios (Maguire et al., 1997; Riecke et al., 2002; Shelton and Gabrieli, 2002; Spiers and Maguire, 2006). Key strengths of VR include the flexibility and manipulability of the simulated environment, which make it possible to run experimental tasks that are difficult or impossible to implement in real-world settings, while also providing a high degree of experimental control (e.g., Kearns et al., 2002). Significant progress in unraveling the complexities of spatial navigation has been made by studies combining fMRI with VR. For example, work to date demonstrates interactions between hippocampal and striatal systems supporting flexible navigation (Brown et al., 2012), the presence of grid-like signals in the entorhinal cortex during VR navigation (Doeller et al., 2010) and imagined navigation (Horner et al., 2016), decoding of goal direction in the entorhinal cortex (Chadwick and Spiers, 2014), and processing of environmental novelty in the hippocampus (Kaplan et al., 2014). In addition, combining MEG and fMRI has revealed memory-related increases in theta power during self-initiated movement (by button press) in a VR environment, accompanied by increased activity in the hippocampus (Kaplan et al., 2012). While it is clear that VR combined with static imaging techniques has uncovered a wealth of information, limits on the degree of presence that can be obtained, together with the absence of idiothetic (self-motion) information, may partially disrupt the formation of accurate spatial representations.
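Grid-like signals in fMRI are commonly detected as a six-fold (60°-periodic) modulation of the signal by movement direction. The sketch below shows the core regression step of such a hexadirectional analysis, assuming a per-trial signal and movement direction are already available; the function name and synthetic data are hypothetical, and this is not presented as the exact pipeline of Doeller et al. (2010).

```python
import numpy as np


def estimate_grid_orientation(directions_rad, signal):
    """Fit signal ~ b0 + b1*cos(6*theta) + b2*sin(6*theta) by least squares.

    Returns (phi, amplitude): the putative grid orientation in [0, pi/3)
    and the amplitude of the six-fold modulation.
    """
    theta = np.asarray(directions_rad, dtype=float)
    design = np.column_stack([np.ones_like(theta), np.cos(6 * theta), np.sin(6 * theta)])
    beta, *_ = np.linalg.lstsq(design, np.asarray(signal, dtype=float), rcond=None)
    # cos(6*(theta - phi)) = cos(6*phi)*cos(6*theta) + sin(6*phi)*sin(6*theta)
    phi = np.mod(np.arctan2(beta[2], beta[1]) / 6.0, np.pi / 3)
    amplitude = np.hypot(beta[1], beta[2])
    return phi, amplitude


# Synthetic check: a signal modulated six-fold around a grid orientation of 10 degrees.
theta = np.linspace(0.0, 2 * np.pi, 360, endpoint=False)
signal = 2.0 + 0.5 * np.cos(6 * (theta - np.deg2rad(10.0)))
phi, amp = estimate_grid_orientation(theta, signal)
```

Because the orientation is only defined modulo 60°, analyses of this kind typically estimate it on one part of the data and test for the six-fold effect on an independent part, to avoid circularity.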

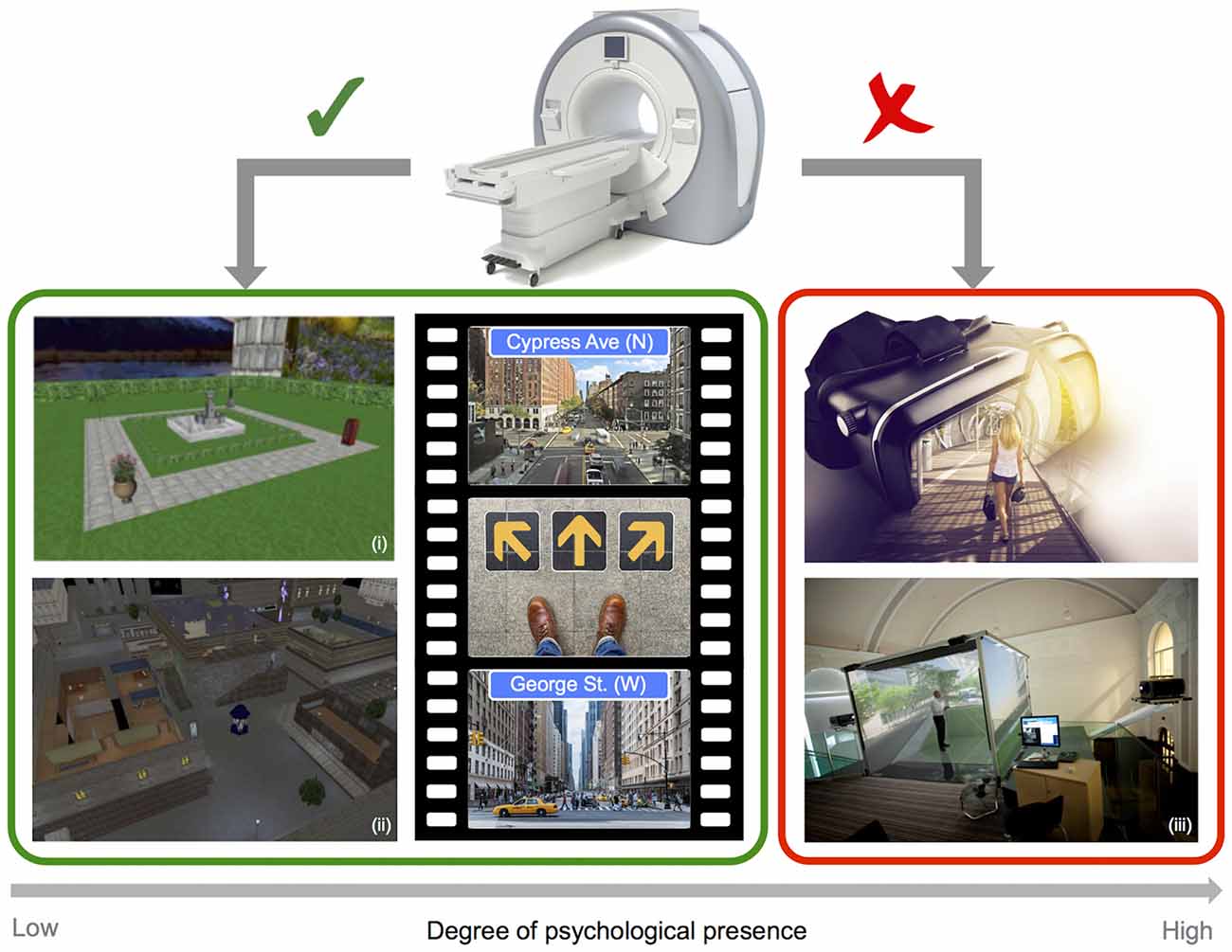

In the case of functional imaging work, scanner limitations restrict the sorts of VR technology that can be employed, and therefore the degree of immersion and psychological presence that can be obtained (see Figure 2). In most cases, imaging studies of navigation in humans employ video-game-style VR displays, where participants control motion through the rendered environment via button presses or joystick input. The approach of combining functional imaging and VR to investigate spatial navigation can therefore be seen as somewhat limited, on the one hand by the use of 2D displays, and on the other by the absence of combined visual and proprioceptive feedback (Slater et al., 1998). Both of these factors clearly affect the degree of immersion and presence in the virtual environment. Navigating through a VR environment that gives a sense of motion while the body is physically still can result in sensory conflict, and behavioral studies have indicated that this conflict can impair navigation performance compared with natural walking (Chance et al., 1998; Waller et al., 2004; Ruddle and Lessels, 2006). Importantly, changes in the degree of presence in VR have, in turn, been linked to significant changes in patterns of neural activity. A recent EEG study in humans explicitly compared the effects of 2D (desktop) and 3D (single-wall) interactive VR maze environments. Differences were found in the level and distribution of alpha activity (8–12 Hz) as a function of the degree of reported spatial presence, with greater presence ratings in the 3D environment associated with stronger parietal activation than in the less immersive 2D environment (Kober et al., 2012). While this study investigated spatial presence in VR rather than navigation per se, the findings clearly highlight the potential importance of realism for imaging experiments.
Importantly, differences in alpha were apparent in posterior parietal regions that prior fMRI and EEG studies have associated with egocentric representations of the environment (e.g., Plank et al., 2010; Schindler and Bartels, 2013). Another recent study employing intrahippocampal EEG recordings during real-world and virtual navigation tasks demonstrated clear differences in theta, with oscillations peaking at a lower frequency for virtual than for real movement (Bohbot et al., 2017). Moreover, outside the context of navigation, movement speed has been shown to influence the prevalence of high-frequency theta oscillations (6–12 Hz), providing a parallel with prior work in moving rodents (Aghajan et al., 2017). Given that the presence or absence of physical motion systematically alters the pattern of neural activity, it seems clear that static VR approaches to navigation, while undoubtedly valuable, have some limitations.

Figure 2. Imaging and virtual reality (VR). Example screenshots of VR-style navigation tasks typically employed in the scanner (left) and images depicting fully immersive 3D VR technology (right), highlighting limitations in the degree of psychological presence that can be obtained when combining a VR approach with static imaging techniques (i: adapted from Chadwick et al., 2015; ii: adapted from King et al., 2005; iii: courtesy of Matt Wain photography).
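The shift in peak theta frequency between virtual and real movement described above can be quantified by locating the largest spectral peak within a search range. A minimal sketch, assuming a Hann-windowed periodogram and a hypothetical 2–12 Hz search range; the function name and test signals are illustrative only.

```python
import numpy as np


def peak_frequency(signal, fs, search_hz=(2.0, 12.0)):
    """Frequency (Hz) of the largest periodogram peak within search_hz."""
    signal = np.asarray(signal, dtype=float)
    signal = signal - signal.mean()
    windowed = signal * np.hanning(len(signal))  # taper to reduce spectral leakage
    spectrum = np.abs(np.fft.rfft(windowed)) ** 2
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    in_range = (freqs >= search_hz[0]) & (freqs <= search_hz[1])
    return freqs[in_range][np.argmax(spectrum[in_range])]


fs = 250.0
t = np.arange(2000) / fs  # 8 s of data, giving 0.125 Hz frequency resolution
slower = peak_frequency(np.sin(2 * np.pi * 5.0 * t), fs)
faster = peak_frequency(np.sin(2 * np.pi * 8.0 * t), fs)
```

In a comparison of conditions, `peak_frequency` would be applied to matched segments recorded during virtual and real movement; the frequency resolution is set by segment length, so short bursts limit how finely a shift can be resolved.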

Ultimately, if different patterns of activation can be observed depending on the level of presence and immersion in virtual environments, it is reasonable to expect that neural signals of real-world navigation could also differ from those observed in VR functional imaging studies, particularly for navigation tasks that require updating of orientation and path integration. Efficient updating of egocentric and allocentric spatial representations requires the combination of visual, vestibular and kinesthetic information, and navigators have to adopt different strategies to compensate for the lack of natural movement in static studies (for discussion see Gramann, 2013). Of course, this lack of idiothetic information is relevant not only for studies investigating spatial navigation, but for cognitive neuroscience in general, with evidence supporting embodied accounts of cognition and demonstrating differences in brain states during movement (for discussion see Ladouce et al., 2017). To increase experimental control (e.g., Acharaya et al., 2016) and, in some instances, improve the resolution of neural recordings (Harvey et al., 2009), a number of recent navigation studies have also employed virtual environments with rodents, facilitating cross-species comparison. Importantly, this work also highlights that self-motion has a fundamental role in establishing orientation in the environment, modulating navigation-related neural patterns. In rodents, direct comparison of firing patterns in hippocampal place cells across real and virtual navigation tasks reveals that a high proportion of cells (75%) are movement dependent, and also demonstrates an overall reduction in theta power and frequency during static virtual navigation (Chen et al., 2013).
Changes in the firing patterns of place cells during random foraging in two-dimensional body-fixed VR have also been observed, with reliance on distal visual cues alone in VR resulting in a marked reduction in spatial selectivity (Aghajan et al., 2015). Similarly, in both humans and nonhuman primates, hippocampal neurons have been found to exhibit weak spatial selectivity when relying on distal visual cues in VR (i.e., in the absence of proximal or vestibular cues; Rolls, 1999; Ekstrom et al., 2003).
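Spatial selectivity of the kind discussed here is often summarized with a spatial information score (bits per spike), computed from a binned occupancy map and spike counts as the sum over bins of p_i · (λ_i/λ) · log2(λ_i/λ), where p_i is the occupancy probability of bin i, λ_i its firing rate and λ the mean rate. The cited studies may have used other or additional selectivity measures; the sketch below, with hypothetical names and toy data, only illustrates the general idea.

```python
import numpy as np


def spatial_information(occupancy_s, spike_counts):
    """Spatial information (bits per spike) from per-bin occupancy (s) and spike counts."""
    occupancy_s = np.asarray(occupancy_s, dtype=float)
    spike_counts = np.asarray(spike_counts, dtype=float)
    visited = occupancy_s > 0                                  # ignore bins never visited
    p = occupancy_s[visited] / occupancy_s[visited].sum()      # occupancy probability per bin
    rate = spike_counts[visited] / occupancy_s[visited]        # firing rate per bin (Hz)
    mean_rate = np.sum(p * rate)
    if mean_rate == 0:
        return 0.0
    ratio = rate / mean_rate
    nz = ratio > 0                                             # 0 * log2(0) is taken as 0
    return float(np.sum(p[nz] * ratio[nz] * np.log2(ratio[nz])))


# Toy 4-bin track with equal occupancy.
occupancy = [10.0, 10.0, 10.0, 10.0]                      # seconds spent in each bin
sharp = spatial_information(occupancy, [40, 0, 0, 0])     # all spikes in one bin
flat = spatial_information(occupancy, [10, 10, 10, 10])   # no spatial preference
```

A marked reduction in spatial selectivity, as reported for place cells relying on distal visual cues alone, would appear as scores moving from values like `sharp` toward values like `flat`.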

Taken together, the evidence demonstrates that static, fixed-location VR studies have the advantage of experimental control but do not capture the full sensory richness of actual movement through an environment (for review see Taube et al., 2013). Importantly, this conclusion receives clear support from lesion work in rats, which has independently confirmed the importance of vestibular information for spatial representations (Stackman and Taube, 1997; Stackman et al., 2002). A number of authors have highlighted that although humans have a bias toward the use of visual information (which is inherently easy to manipulate in VR experiments), vestibular, somatosensory, auditory and proprioceptive cues also contribute to navigation (e.g., Brandt et al., 2005; Israël and Warren, 2005; Frissen et al., 2011; Ekstrom et al., 2014; Aghajan et al., 2015; see Taube et al., 2013 for discussion). Theoretically, an important distinction is drawn between two forms of information involved in self-location: sensory perception of environmental features, and self-motion information from visual (optic flow), vestibular, proprioceptive and motor systems (Taube et al., 2013; Barry and Burgess, 2014). In reality, however, navigation involves multisensory integration, with cue salience potentially influencing how cortical hierarchies integrate spatially relevant information in any given situation. Ultimately, the broader utility of VR simulations depends upon their ability to accurately represent multiple key features of real-world navigation, including self-motion. Over the last few decades VR technology has developed significantly, such that the most advanced systems can now combine visual, auditory and haptic (tactile) stimulation while responding to body position and movement using accelerometers, gyroscopes and magnetometers, providing a sense of physical presence and interaction with the virtual environment (Bohil et al., 2011).
Indeed, some recent research has focused on investigating spatially relevant cues in VR systems, including visual-vestibular interactions in self-motion (Kim et al., 2015), distance perception (Kelly et al., 2014), and the addition of olfactory cues (Ischer et al., 2014). Overall, most of the evidence to date supports the view that fully immersive 3D VR systems (e.g., CAVE, HMD) can be used to render and manipulate real-world environments reliably for use across a number of applications.
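The distinction between environmental cues and self-motion information can be illustrated with a dead-reckoning (path integration) sketch: position is updated purely from linear speed and angular velocity, the kind of signals idiothetic cues provide, without reference to any landmark. The function and its parameters are hypothetical and the model is deliberately idealized.

```python
import numpy as np


def path_integrate(speeds, turn_rates, dt, start=(0.0, 0.0), heading=0.0):
    """Dead reckoning: estimate a path from self-motion signals alone.

    speeds:     linear speed at each step (units per second)
    turn_rates: angular velocity at each step (radians per second)
    Returns (path, final_heading); path has one more row than there are steps.
    """
    x, y = start
    path = [(x, y)]
    for v, w in zip(speeds, turn_rates):
        heading += w * dt                  # rotational (e.g., vestibular) update
        x += v * np.cos(heading) * dt      # translation along the current heading
        y += v * np.sin(heading) * dt
        path.append((x, y))
    return np.array(path), heading


# Walk 10 steps straight ahead, turn 90 degrees left, then walk 5 more steps.
speeds = [1.0] * 15
turns = [0.0] * 10 + [np.pi / 2] + [0.0] * 4
path, final_heading = path_integrate(speeds, turns, dt=1.0)
```

Adding random noise to `turn_rates` makes the estimated position drift progressively, a simple way to see why self-motion signals and environmental features need to be combined.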

Crucially, movement devices have also been developed to provide a sense of natural movement when exploring VR environments, including the “VirtuSphere” and the “CyberWalk” omnidirectional treadmill (Hardiess et al., 2015). The “VirtuSphere” is essentially a large-scale hamster ball on a wheeled platform: the user stands inside it wearing an HMD, and the sphere can roll in any direction based on user input. However, this system has not been widely adopted for research applications to date; preliminary findings failed to demonstrate an increase in reported presence, and participants indicated that getting to grips with movement in the sphere was effortful (Skopp et al., 2014). Moreover, work contrasting natural, semi-natural (VirtuSphere) and non-natural (gamepad) locomotion in VR, using path deviation as a measure of accuracy, demonstrated that performance in the VirtuSphere was significantly worse than with both other methods of locomotion, which was attributed to differences in the motions and forces involved in initiating and terminating walking in the VirtuSphere compared with real-world walking (Nabiyouni et al., 2015). Omnidirectional treadmill systems like CyberWalk facilitate more natural walking through large-scale virtual environments, with evidence indicating that spatial updating performance on the CyberWalk treadmill did not differ from performance during natural walking (Souman et al., 2011). In rats, similar spatial representations (i.e., grid cells, head direction cells and border cells) have also been observed during VR navigation on a treadmill that allowed body rotation and during real-world navigation, although some differences in the firing pattern of grid cells were observed, with greater spacing in VR than in real-world settings (Aronov and Tank, 2014). In essence, advances in VR technology, including the devices used to interact with these systems, make it possible to simulate multiple aspects of real-world navigation.
However, high-validity VR systems of this kind cannot be combined with functional imaging (i.e., fMRI) in human participants. Recent advances in mobile imaging technology now provide an exciting opportunity to capture neural signals related to navigation during motion.

Two different technologies facilitating mobile capture of neural activity in real-world contexts have evolved in recent years: EEG and functional near-infrared spectroscopy (fNIRS). EEG is recorded using electrodes placed at specific locations across the surface of the scalp (e.g., over frontal, temporal, parietal and occipital regions), and provides a real-time measure of neural activity. EEG cannot be used to investigate deep brain structures, but static EEG has been used in the past to investigate oscillations associated with spatial navigation. For example, work has shown that theta over frontal midline sites is directly related to task difficulty during virtual maze navigation (Bischof and Boulanger, 2003), and to processing of relevant landmarks while navigating in VR (Weidemann et al., 2009; Kober and Neuper, 2011). In a virtual tunnel navigation task, homing responses consistent with the use of an egocentric reference frame were accompanied by stronger alpha blocking in the right primary visual cortex, while responses compatible with the use of an allocentric reference frame were accompanied by stronger alpha blocking in inferior parietal, occipito-temporal and retrosplenial cortices (Gramann et al., 2010b). During path integration in VR, adoption of an egocentric reference frame has been associated with modulations of alpha in the parietal, motor and occipital cortices and of theta in the frontal cortices, while adoption of an allocentric reference frame has been associated with performance-related desynchronization in the 8–13 Hz frequency range and synchronization in the 12–14 Hz range in the retrosplenial complex (Lin et al., 2015). While these static EEG studies are open to the same criticism as the fMRI work outlined above, they clearly demonstrate the utility of EEG for addressing key questions, highlighting the potential value of a mobile EEG approach.
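The alpha blocking and the desynchronization and synchronization effects described above are commonly expressed as a percentage change in band power relative to a reference (baseline) period. A minimal sketch, assuming band power has already been estimated for baseline and event windows; sign conventions differ between papers (some report desynchronization as a positive number), and the function name and values are hypothetical.

```python
import numpy as np


def relative_power_change(baseline_power, event_power):
    """Percentage band-power change from a reference period.

    Negative values indicate event-related desynchronization (a power decrease,
    e.g., alpha blocking); positive values indicate synchronization.
    """
    reference = np.mean(baseline_power)
    return 100.0 * (np.mean(event_power) - reference) / reference


# Hypothetical alpha-band power values (arbitrary units) for three trials.
alpha_change = relative_power_change([10.0, 12.0, 8.0], [5.0, 6.0, 4.0])
```

Here the event windows carry half the baseline alpha power, which this convention reports as a 50% desynchronization (a value of -50).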

Small, lightweight, battery-powered EEG amplifiers were primarily developed for consumer applications (e.g., gaming, ambulatory health monitoring), but recently there has been rapid development of commercial devices better suited to research applications (see Park et al., 2015 for discussion of mobile imaging in the context of sports performance). Importantly, recent work contrasting wireless mobile and laboratory-based amplifiers reports a high degree of correlation across systems, demonstrating that it is now possible to capture reliable EEG data using mobile technology (De Vos et al., 2014). Moreover, in the last few years there has been steady growth in the number of studies successfully employing mobile EEG technology to query aspects of cognitive function in real-world contexts (e.g., Gramann et al., 2010a; Wascher et al., 2014). In a recent formative study, mobile EEG was employed to investigate the influence of real-world environments on the formation of episodic memories (Griffiths et al., 2016). Participants were presented with a series of words spaced out along a pre-designated route in an open-field environment, before performing a free recall test. Results replicated subsequent memory effects (contrasting encoding activity for subsequently remembered vs. forgotten items) reported in lab-based studies, with power decreases in the low to mid frequency range (<30 Hz), including ubiquitous beta power decreases over the left inferior frontal gyrus and temporal pole regions, providing a clear demonstration that EEG data can be reliably obtained in naturalistic settings. Importantly, the study also set out to investigate the neural correlates of spatial and temporal context clustering. Crucially, this study goes a step beyond merely validating the use of EEG in real-world contexts: it clearly demonstrates the potential utility of a mobile EEG approach, highlighting the importance of environmental context.
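A subsequent memory effect is, at its core, a contrast between encoding-period activity for items that were later remembered and items that were later forgotten. The sketch below computes that contrast for one channel and frequency band and assesses it with a simple label-permutation test; the names, data and test are illustrative assumptions, not the statistical pipeline of Griffiths et al. (2016).

```python
import numpy as np


def subsequent_memory_effect(power, remembered, n_permutations=5000, seed=0):
    """Mean power for remembered minus forgotten items, with a permutation p-value.

    power:      per-item band power during encoding (one channel, one band)
    remembered: boolean per item, True if the item was later recalled
    """
    power = np.asarray(power, dtype=float)
    remembered = np.asarray(remembered, dtype=bool)
    observed = power[remembered].mean() - power[~remembered].mean()
    rng = np.random.default_rng(seed)
    null = np.empty(n_permutations)
    for i in range(n_permutations):
        shuffled = rng.permutation(remembered)  # break the link between power and memory outcome
        null[i] = power[shuffled].mean() - power[~shuffled].mean()
    # Two-sided p-value; the +1 terms keep p strictly above zero.
    p_value = (np.sum(np.abs(null) >= abs(observed)) + 1) / (n_permutations + 1)
    return observed, p_value


# Hypothetical beta power for eight words: lower power for later-remembered words.
power = [1.0, 1.0, 1.0, 1.0, 3.0, 3.0, 3.0, 3.0]
remembered = [True] * 4 + [False] * 4
effect, p_value = subsequent_memory_effect(power, remembered)
```

A negative `effect` corresponds to the power decreases for remembered items described above; real analyses repeat this across channels and frequencies and must correct for those multiple comparisons.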

Another method that can be used to obtain complementary information in real-world contexts is fNIRS, which uses beams of near-infrared light to monitor cerebral blood flow and hemodynamic response. In basic terms, near-infrared light is beamed onto the surface of the scalp and the level of oxygenation in the underlying region is inferred from the degree of absorption detected as light exits the head, providing an indirect measure of neural activity (Leff et al., 2011). Importantly, comparison with fMRI across a range of cognitive tasks has shown high correspondence between measures, despite the lower spatial resolution of fNIRS, in the range of centimeters (Cui et al., 2011). Over the last two decades fNIRS has been applied to a wide range of topic areas, but the bulk of research has still been conducted under laboratory conditions (see Ferrari and Quaresima, 2012 for review). Recently, reports of battery-operated wearable/wireless multi-channel systems being used to obtain reliable data in freely moving subjects have begun to emerge, demonstrating that fNIRS is a powerful tool for brain measurement during motion in real-world contexts (e.g., Atsumori et al., 2010; Muehlemann et al., 2013; Piper et al., 2014). Importantly, fNIRS was successfully employed in a recent study contrasting the mental workload associated with route following from maps presented on an augmented reality wearable display or on a hand-held device during navigation (McKendrick et al., 2016), providing the first demonstration of the utility of fNIRS for measuring aspects of real-world spatial cognition. Moreover, one of the key advantages claimed for fNIRS is the ease of integration with other methods, including EEG (e.g., Chen L. C. et al., 2015; Ahn et al., 2016), making it possible to obtain complementary spatial and temporal information simultaneously.
While the potential utility of a mobile imaging approach is clear, moving navigation research out of the lab and into the real world raises new challenges that need to be addressed with innovation in experimental design, recording procedures and data analysis.
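The inference from light absorption to oxygenation described for fNIRS is usually formalized with the modified Beer-Lambert law, in which the change in optical density at each wavelength is a weighted sum of changes in oxygenated (HbO) and deoxygenated (HbR) hemoglobin, scaled by source-detector distance and a differential pathlength factor (DPF); measuring at two wavelengths gives two equations in two unknowns. The sketch below assumes that formulation; the extinction coefficients, distance and DPF values are placeholders chosen for illustration, not tabulated constants, and the function names are hypothetical.

```python
import numpy as np


def optical_density_change(intensity, baseline_intensity):
    """Change in optical density from detected light intensity (base-10 log assumed)."""
    return -np.log10(np.asarray(intensity, dtype=float) / baseline_intensity)


def mbll(delta_od, extinction, distance_cm, dpf):
    """Solve the modified Beer-Lambert law for [delta_HbO, delta_HbR].

    delta_od:   shape (2,) or (2, n_samples), one row per wavelength.
    extinction: 2 x 2 matrix, rows = wavelengths, columns = (HbO, HbR).
    dpf:        differential pathlength factor for each wavelength.
    Units of the result follow the units chosen for `extinction`.
    """
    path_cm = distance_cm * np.asarray(dpf, dtype=float)             # effective path length per wavelength
    system = np.asarray(extinction, dtype=float) * path_cm[:, None]  # scale each wavelength row
    return np.linalg.solve(system, np.asarray(delta_od, dtype=float))


# Placeholder numbers only: NOT real extinction coefficients or DPFs.
EXTINCTION = np.array([[1.5, 3.8],   # first wavelength
                       [2.5, 1.8]])  # second wavelength
DPF = np.array([6.0, 5.5])
DISTANCE_CM = 3.0

true_change = np.array([0.5, -0.2])  # hypothetical [dHbO, dHbR]
simulated_od = (EXTINCTION * (DISTANCE_CM * DPF)[:, None]) @ true_change
recovered = mbll(simulated_od, EXTINCTION, DISTANCE_CM, DPF)
```

Passing a (2, n_samples) array of optical density changes converts a whole recording at once, since `np.linalg.solve` accepts a matrix right-hand side.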

There are a number of challenges associated with recording neural signals in active humans, which have been discussed in depth elsewhere (e.g., see Thompson et al., 2008; Makeig et al., 2009; Gramann et al., 2011, 2014), including handling of motion artifacts, tracking movements in space and synchronizing multiple physiological measures. Over the last decade, significant progress has been made not only in the development of mobile imaging systems, but also in methods for handling the specific challenges presented by recording data during active navigation in real-world contexts. For example, standardized open-source frameworks have been developed that facilitate integration of multiple physiological methods and allow processing and analysis of mobile EEG and body imaging data (e.g., Lab Streaming Layer: Kothe, 2014; MoBILAB: Ojeda et al., 2014; see Reis et al., 2014 for an overview of software and hardware solutions). A key problem inherent in imaging cognition in action using EEG is motion artifacts. Traditional cognitive experiments record EEG with participants seated in a dimly lit room, and movement is heavily discouraged to avoid contamination of the neural signal. In lab-based studies, time periods of the recording exhibiting strong electrical potentials associated with eye movements and muscle contraction are typically rejected, or the artifacts are removed using regression procedures. Advances in spatial filtering methods such as Independent Component Analysis (ICA) make it possible to isolate the motion-related artifacts inherent in mobile EEG data. ICA performs a linear decomposition of EEG data into maximally independent components and can be used to separate brain activity from eye movements, muscle activity and non-brain signals such as line noise (Delorme et al., 2007).
Importantly, a number of mobile EEG studies demonstrate that motion artifacts can be adequately addressed using this approach during natural motion and interaction with the environment (e.g., Gwin et al., 2010; Jungnickel and Gramann, 2016; Zink et al., 2016).
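The regression procedure mentioned above, the classic lab-based alternative to ICA, estimates how much of each EEG channel is explained by simultaneously recorded EOG channels and subtracts that portion. The sketch below is a minimal least-squares version with hypothetical names and synthetic data; it does not implement ICA, and a known limitation is that it can also remove genuine brain activity that spreads into the EOG channels.

```python
import numpy as np


def regress_out_eog(eeg, eog):
    """Subtract the EOG-explained part of each EEG channel (ordinary least squares).

    eeg: (n_channels, n_samples); eog: (n_eog, n_samples).
    Returns (cleaned_eeg, coefficients), coefficients shaped (n_eog, n_channels).
    """
    eeg = np.asarray(eeg, dtype=float)
    eog = np.asarray(eog, dtype=float)
    eog_c = eog - eog.mean(axis=1, keepdims=True)
    eeg_c = eeg - eeg.mean(axis=1, keepdims=True)
    # Solve eeg_c.T ~= eog_c.T @ coeffs for the propagation coefficients.
    coeffs, *_ = np.linalg.lstsq(eog_c.T, eeg_c.T, rcond=None)
    return eeg - (eog_c.T @ coeffs).T, coeffs


# Synthetic example: a 10 Hz "brain" rhythm contaminated by a scaled ocular signal.
rng = np.random.default_rng(0)
fs = 250.0
t = np.arange(5000) / fs
brain = np.sin(2 * np.pi * 10.0 * t)
eog = 5.0 * rng.standard_normal(5000)
eeg = (brain + 0.4 * eog)[None, :]  # one contaminated channel
cleaned, coeffs = regress_out_eog(eeg, eog[None, :])
```

The estimated coefficient recovers the simulated propagation factor of 0.4, and the cleaned channel closely tracks the underlying rhythm.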

Another key problem with conducting mobile experiments in the real world is establishing the onset of task-related cognitive operations. In the lab, computer software is often used to send a TTL pulse to the EEG amplifier signaling the onset of an event of interest, allowing the continuous EEG data to be separated into time periods associated with different experimental conditions for comparison. At this stage, the majority of mobile EEG studies continue to rely on input from a computer or tablet for accurate timestamping, but recent work has demonstrated that accurate timestamping can also be achieved by appeal to concurrently recorded data streams such as motion capture (Jungnickel and Gramann, 2016). In addition, an open-source framework has been developed that supports automated identification and labeling of events, using pattern identification based on recordings of participants performing related tasks (EEG-Annotate: Su et al., 2018). Ultimately, obtaining accurate timestamps in naturalistic settings requires continued innovation tailored to the specifics of the intended experimental design. As adoption of mobile imaging methods grows, technical solutions to the unique problems associated with capturing real-world neuroimaging data will continue to be developed, but it is clear that significant progress has been made over the last decade in recording and analysis procedures for mobile EEG data and in their integration with other physiological measures.
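Deriving event markers from a concurrently recorded stream, rather than from a software trigger, can be sketched as follows: movement onsets are detected as upward threshold crossings in motion-capture speed, converted to EEG sample indices, and used to cut epochs. This assumes both streams already share a common clock up to a known offset (for example after synchronization with a framework such as Lab Streaming Layer); all names, thresholds and data are hypothetical and do not reproduce the cited studies' methods.

```python
import numpy as np


def movement_onsets(speed, fs_mocap, threshold, min_gap_s=1.0):
    """Times (s) at which speed rises above `threshold`, at least min_gap_s apart."""
    above = np.asarray(speed, dtype=float) > threshold
    rising = np.flatnonzero(~above[:-1] & above[1:]) + 1  # below -> above transitions
    onsets, last = [], -np.inf
    for idx in rising:
        t = idx / fs_mocap
        if t - last >= min_gap_s:  # ignore jitter right after an onset
            onsets.append(t)
            last = t
    return np.array(onsets)


def to_eeg_samples(times_s, fs_eeg, clock_offset_s=0.0):
    """Map event times on the motion-capture clock to EEG sample indices."""
    return np.round((np.asarray(times_s) + clock_offset_s) * fs_eeg).astype(int)


def cut_epochs(eeg, onsets, pre, post):
    """Cut (n_channels, n_samples) EEG into (n_events, n_channels, pre + post) epochs."""
    epochs = [eeg[:, s - pre:s + post] for s in onsets
              if s - pre >= 0 and s + post <= eeg.shape[1]]
    if not epochs:
        return np.empty((0, eeg.shape[0], pre + post))
    return np.stack(epochs)


# Synthetic example: two walking bouts in 10 s of motion capture at 100 Hz.
speed = np.zeros(1000)
speed[200:300] = 1.2
speed[600:700] = 1.2
onset_times = movement_onsets(speed, fs_mocap=100.0, threshold=0.5)
onset_samples = to_eeg_samples(onset_times, fs_eeg=500.0)
epochs = cut_epochs(np.zeros((4, 5000)), onset_samples, pre=100, post=200)
```

Events too close to the start or end of the recording are dropped rather than padded, which keeps every epoch the same length.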

Like EEG, fNIRS signal quality can be affected by factors relevant to real-world mobile studies, such as motion artifacts, interference from ambient light, sensitivity to changes in temperature and the presence of hair (Pringle et al., 1999; McIntosh et al., 2010; Orihuela-Espina et al., 2010; Brigadoi et al., 2014). Recently, innovations in sensor technology have addressed some of these issues, with the development of hair-penetrating brush optodes (Khan et al., 2012) and photodiode sensors that are not susceptible to ambient light (for a detailed discussion of hardware see Scholkmann et al., 2014). Just as an EEG recording reflects a combination of activity from brain and non-brain sources, changes in fNIRS signals may reflect not a neuronally induced hemodynamic response but task-related changes in systemic activity (e.g., changes in heart rate, blood pressure or breathing rate) or extracerebral hemodynamics (Tachtsidis and Scholkmann, 2016). Recording concurrent physiological measures of systemic activity (e.g., heart rate, respiration, skin conductance) and tracking head motion can help to separate brain from non-brain sources, using filtering methods based on principal component analysis (PCA) and ICA to remove movement-related or physiological artifacts (Herold et al., 2017; see Brigadoi et al., 2014 for a comparison of motion artifact correction methods). As has been noted elsewhere, a more critical limitation of using fNIRS to obtain a direct measure of cognitive processing in natural environments is its low temporal resolution: fNIRS has a temporal resolution on the order of seconds, whereas fast cognitive processes occur on a sub-second timescale (e.g., see Gramann et al., 2011).
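PCA-based filtering of fNIRS data rests on the observation that motion artifacts are often large and shared across many channels, so they tend to dominate the leading principal components. The minimal sketch below assumes an (n_channels, n_samples) array and simply projects out a fixed number of leading components. Published correction methods are more selective about which components and time windows they correct (see Brigadoi et al., 2014 for comparisons). The function name and the synthetic test data are hypothetical.

```python
import numpy as np


def pca_remove_components(X, n_remove=1):
    """Remove the n_remove highest-variance spatial components from X.

    X: (n_channels, n_samples) fNIRS data. Returns a cleaned array of the same shape.
    """
    means = X.mean(axis=1, keepdims=True)
    Xc = X - means
    # Left singular vectors are the channel-space principal directions,
    # ordered by decreasing variance.
    U, s, Vt = np.linalg.svd(Xc, full_matrices=False)
    Uk = U[:, :n_remove]
    # Project the leading components out of the data and restore channel means.
    return Xc - Uk @ (Uk.T @ Xc) + means


if __name__ == "__main__":
    # Hypothetical demo: 8 channels of low-amplitude signal plus one global motion spike.
    rng = np.random.default_rng(0)
    hemo = 0.1 * rng.standard_normal((8, 3000))
    motion = np.zeros(3000)
    motion[1000:1020] = 5.0
    X = hemo + np.linspace(0.5, 1.5, 8)[:, None] * motion
    clean = pca_remove_components(X, n_remove=1)
    print("peak |signal| in artifact window, before/after:",
          np.abs(X[:, 1000:1020]).max(), np.abs(clean[:, 1000:1020]).max())
```

The trade-off is that a spatially widespread hemodynamic response can also load on the leading components, so removing too many of them risks discarding genuine brain signal along with the artifact.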
Ultimately, scalp EEG and fNIRS cannot be used to investigate deep brain structures (e.g., the hippocampus) that have been the focus of the bulk of research in humans employing single-cell recording or fMRI techniques, but obtaining complementary information that elucidates the spatial and temporal dynamics of real-world navigation is critical. While there are undoubtedly challenges inherent in recording EEG and fNIRS data during motion in real-world contexts, we believe that these issues can and will be adequately addressed over the coming years. Importantly, adopting a mobile imaging approach to studying navigation should not be seen merely as a means of validating existing findings, but as a complementary approach that makes it possible to address different kinds of questions about how neural indices of navigation are influenced by dynamic natural environments and physical movement through space.

The current review article has highlighted some of the problems that exist for studying human navigation, echoing longstanding concerns that have been expressed about animal navigation: “(…) the role of these various systems can best be understood if one takes into consideration an animal’s behavior in its natural habitat” (Nadel, 1991). As described above, studies of the neural systems involved in navigation have primarily been conducted either in freely moving rodents or in humans viewing virtual settings while largely immobile. As outlined earlier, self-motion cues are known to be important for navigation tasks that involve updating orientation for path integration and using egocentric and allocentric spatial representations. In recent years, there has been an increase in behavioral work examining aspects of navigation using a hybrid VR-real world approach, in which natural motion is yoked to movement within virtual environments (e.g., Chen X. et al., 2015; Chen et al., 2017; He and McNamara, 2018; Sjolund et al., 2018); this approach more closely parallels work carried out with rats. However, it is not generally combined with imaging techniques (although see Ehinger et al., 2014; Gehrke et al., 2018; Liang et al., 2018), so from a theoretical perspective, how the brain integrates sensory perception of environmental features with self-motion information from visual (optic flow), vestibular, proprioceptive and motor systems to support spatial navigation in humans remains an open question. VR will remain a powerful method for studying neural activity during active navigation, as it permits manipulations that are difficult or impossible to realize in the real world. Moreover, combining immersive VR and omnidirectional treadmills with imaging techniques would represent a significant advance, particularly in enabling investigation of path integration and reference frames in large-scale environments.
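Path integration, in its simplest form, amounts to integrating self-motion signals over time to maintain a running estimate of position and heading, from which a homing vector back to the start can be read off. The sketch below is a purely illustrative dead-reckoning model under simplifying assumptions: noise-free speed and yaw-rate signals, flat 2D ground, simple Euler integration, and counter-clockwise turns taken as positive. It is not a model proposed in the cited studies, and the function names are hypothetical.

```python
import numpy as np


def path_integrate(speed, yaw_rate, dt, start_xy=(0.0, 0.0), start_heading=0.0):
    """Dead-reckon a 2D trajectory from sampled self-motion signals.

    speed: forward speed per sample (m/s); yaw_rate: angular velocity per
    sample (rad/s, counter-clockwise positive); dt: sample interval (s).
    Returns x, y, heading arrays (heading in radians).
    """
    speed = np.asarray(speed, dtype=float)
    yaw_rate = np.asarray(yaw_rate, dtype=float)
    # Heading is the running integral of angular velocity.
    heading = start_heading + np.cumsum(yaw_rate) * dt
    # Each step's displacement is taken along the current heading.
    x = start_xy[0] + np.cumsum(speed * np.cos(heading) * dt)
    y = start_xy[1] + np.cumsum(speed * np.sin(heading) * dt)
    return x, y, heading


def homing_vector(x_end, y_end, heading_end, home=(0.0, 0.0)):
    """Distance to home and bearing relative to current heading, in [-pi, pi)."""
    dx, dy = home[0] - x_end, home[1] - y_end
    distance = np.hypot(dx, dy)
    bearing = np.arctan2(dy, dx) - heading_end
    bearing = (bearing + np.pi) % (2 * np.pi) - np.pi
    return distance, bearing


if __name__ == "__main__":
    # Hypothetical L-shaped walk: 10 m straight, a 90-degree left turn, 10 m straight.
    dt = 0.1
    leg_v, leg_w = np.ones(100), np.zeros(100)
    turn_v, turn_w = np.zeros(1), np.full(1, (np.pi / 2) / dt)
    x, y, h = path_integrate(np.concatenate([leg_v, turn_v, leg_v]),
                             np.concatenate([leg_w, turn_w, leg_w]), dt)
    dist, bearing = homing_vector(x[-1], y[-1], h[-1])
    print(f"home is {dist:.2f} m away, {np.degrees(bearing):.0f} deg from current heading")
```

Because every step builds on the previous estimate, any bias in the speed or yaw-rate signals accumulates without bound. This is one reason landmark information is usually thought to be needed to recalibrate path integration over large distances.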

It has been argued that the spatial scale of common navigation tasks plays a critical role in determining which processes are engaged, limiting the inferences that can be drawn from animal studies performed in small-scale space about the neural mechanisms of human spatial navigation in large-scale environments (Wolbers and Wiener, 2014). Prior evidence has also highlighted that a combination of egocentric and allocentric coding strategies is needed to support navigation in large-scale space (Wiener et al., 2013). In essence, mazes employed to investigate navigation in rodents are often simplistic and designed to play to the animals’ strengths (e.g., radial arm maze, T-maze). By contrast, real-world navigation is inherently complex, varying as a function of the availability of visible landmarks or boundaries, and requiring route planning and integration over much larger distances to reach goals that cannot be perceived from the starting location. In addition, in real-world environments such as cities, navigating solely on the basis of orientation with respect to the final destination is not always an effective strategy, and a successful outcome requires a greater understanding of how small sub-regions of space link together to form a flexible map of the environment. Successful navigation in real-world environments involves appreciating our own orientation with respect to the surrounding environment, planning routes beyond our current field of view, and constantly updating our frame of reference as we move towards the goal destination, a situation that is difficult to recreate in the lab.
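The distinction between egocentric and allocentric coding can be made concrete as a coordinate transform. A landmark's allocentric (world) coordinates stay fixed, whereas its egocentric coordinates (how far ahead of and to the left of the navigator it lies) must be recomputed whenever the navigator moves or turns. The sketch below assumes a flat 2D world with heading measured counter-clockwise from the x-axis. The function names are hypothetical, and the example is not drawn from the cited work.

```python
import numpy as np


def allo_to_ego(landmark_xy, observer_xy, heading):
    """World (allocentric) coordinates -> observer-centred (egocentric).

    Returns (forward, left): distance along the heading, and perpendicular
    to it (positive to the observer's left).
    """
    dx, dy = np.subtract(landmark_xy, observer_xy)
    forward = dx * np.cos(heading) + dy * np.sin(heading)
    left = -dx * np.sin(heading) + dy * np.cos(heading)
    return forward, left


def ego_to_allo(forward, left, observer_xy, heading):
    """Inverse transform: egocentric (forward, left) -> world coordinates."""
    x = observer_xy[0] + forward * np.cos(heading) - left * np.sin(heading)
    y = observer_xy[1] + forward * np.sin(heading) + left * np.cos(heading)
    return x, y


if __name__ == "__main__":
    # Hypothetical walk: facing north (heading pi/2) along the y-axis past a
    # landmark fixed at (1, 5). Its egocentric position changes at every step.
    landmark = (1.0, 5.0)
    for y in (0.0, 5.0, 10.0):
        f, l = allo_to_ego(landmark, (0.0, y), np.pi / 2)
        print(f"at y={y:4.1f}: landmark {f:+.1f} m ahead, {l:+.1f} m to the left")
```

Running the inverse transform from any pose recovers the same world coordinates, which is the sense in which an allocentric code is stable while an egocentric one has to be continually updated.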

Our current view of navigation is limited by the simplified conditions under which it is typically examined. In addition to the potential questions highlighted above regarding the nature of theta rhythms during real-world motion, and the influence of spatial scale on brain activity associated with egocentric and allocentric navigation strategies, the adoption of a mobile approach will open up a range of new questions for investigation. Critically, a mobile approach will provide an unprecedented opportunity for cognitive neuroscientists to investigate how the brain supports interaction with, and navigation through, dynamic, noisy environments while people are engaged in additional tasks (e.g., walking, talking, driving). How does the brain integrate spatial information from multiple sensory cues and maintain spatial representations in short- and long-term memory to support active navigation? How do we plan an optimal route when dealing with multiple destinations? How do the details of the environment (e.g., urban design) influence navigation? Does navigating in a busy city differ from navigating in open space? What happens while interacting with navigation technologies, and how do they affect spatial cognition? These are just some examples of the kinds of questions that a mobile imaging approach will enable researchers to address. Importantly, adopting a mobile approach will undoubtedly improve ecological validity in navigation research, facilitating assessment of natural behavior. Of course, there is a trade-off between ecological validity and experimental control, and progress in investigating real-world aspects of navigation will require methodological innovation. Ultimately, the real world cannot be entirely controlled: details of the environment to be navigated will vary (e.g., temporary landmarks, imposed detours, weather, lighting, traffic, people).
However, part of the appeal of a real-world imaging approach lies precisely in understanding how constantly changing environmental factors influence spatial cognition. In this context, methods for adequately monitoring the world, and interactions with it, must be carefully considered during experimental design. Importantly, developments in other technologies such as eye-trackers, GPS devices, wearable video recorders, accelerometers, mobile phones and augmented reality wearable displays make it possible to track relevant behaviors and manipulate details of the natural environment, enabling navigation research to move out of the lab and into the real world.

By definition, real-world navigation is complex: multi-sensory inputs, including self-motion cues, are used to establish an organism’s location in space and to continuously track progress toward a goal location in large-scale environments. While mobile EEG and fNIRS cannot provide the spatial resolution of fMRI or the precision of intracranial recording, these approaches can provide data on real-world navigation in humans, an approach to spatial cognition that has not previously been possible. Over the course of this review, we have highlighted the importance of idiothetic cues for orientation, path integration and the use of egocentric and allocentric spatial representations, and we believe that examination of these issues will benefit most from adopting a mobile approach. While we highlighted some limitations of static VR approaches, there is no doubt that the development of input devices that support movement is a significant advance. Moreover, we believe that combining fully immersive VR with mobile imaging techniques provides a good balance of ecological validity and experimental control. However, fully immersive VR technology and omnidirectional treadmills are expensive, and they also require dedicated testing areas and specialist skills to program realistic virtual environments. Furthermore, some questions can only be adequately addressed outside of the laboratory, for example, contrasting navigation performance in familiar (e.g., hometown) and unfamiliar environments. At this stage, it remains unclear to what extent current neurobiological models will be supported, augmented or undermined. What is clear, however, is that a move towards mobile imaging will provide a more complete picture, one that can fully capture the complexities of real-world navigation.

JP performed the reference search and wrote the article. PD and DD provided feedback on drafts and edited the final submission.

This work did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

JP and DD are members of the SINAPSE collaboration (www.sinapse.ac.uk), a pooling initiative funded by the Scottish Funding Council and the Chief Scientific Office of the Scottish Executive.

Acharya, L., Aghajan, Z. M., Vuong, C., Moore, J. J., and Mehta, M. R. (2016). Causal influence of visual cues on hippocampal directional selectivity. Cell 164, 197–207. doi: 10.1016/j.cell.2015.12.015

Aghajan, Z. M., Acharya, L., Moore, J. J., Cushman, J. D., Vuong, C., and Mehta, M. R. (2015). Impaired spatial selectivity and intact phase precession in two-dimensional virtual reality. Nat. Neurosci. 18, 121–128. doi: 10.1038/nn.3884

Aghajan, Z. M., Schuette, P., Fields, T. A., Tran, M. E., Siddiqui, S. M., Hasulak, N. R., et al. (2017). Theta oscillations in the human medial temporal lobe during real-world ambulatory movement. Curr. Biol. 27, 3743–3751.e3. doi: 10.1016/j.cub.2017.10.062

Ahn, S., Nguyen, T., Jang, H., Kim, J. G., and Jun, S. C. (2016). Exploring neuro-physiological correlates of drivers’ mental fatigue caused by sleep deprivation using simultaneous EEG, ECG and fNIRS data. Front. Hum. Neurosci. 10:219. doi: 10.3389/fnhum.2016.00219

Aronov, D., and Tank, D. W. (2014). Engagement of neural circuits underlying 2D spatial navigation in a rodent virtual reality system. Neuron 84, 442–456. doi: 10.1016/j.neuron.2014.08.042

Atsumori, H., Kiguchi, M., Katura, T., Funane, T., Obata, A., Sato, H., et al. (2010). Noninvasive imaging of prefrontal activation during attention-demanding tasks performed while walking using a wearable optical topography system. J. Biomed. Opt. 15:046002. doi: 10.1117/1.3462996

Barry, C., and Burgess, N. (2014). Neural mechanisms of self-location. Curr. Biol. 24, R330–R339. doi: 10.1016/j.cub.2014.02.049

Barry, C., Bush, D., O’Keefe, J., and Burgess, N. (2012). Models of grid cells and theta oscillations. Nature 488, 103–107. doi: 10.1038/nature11276

Bischof, W. F., and Boulanger, P. (2003). Spatial navigation in virtual reality environments: an EEG analysis. Cyberpsychol. Behav. 6, 487–495. doi: 10.1089/109493103769710514

Boccara, C. N., Sargolini, F., Thoresen, V. H., Solstad, T., Witter, M. P., Moser, E. I., et al. (2010). Grid cells in pre- and parasubiculum. Nat. Neurosci. 13, 987–994. doi: 10.1038/nn.2602

Bohbot, V. D., Copara, M. S., Gotman, J., and Ekstrom, A. D. (2017). Low-frequency theta oscillations in the human hippocampus during real-world and virtual navigation. Nat. Commun. 8:14415. doi: 10.1038/ncomms14415

Bohil, C. J., Alicea, B., and Biocca, F. A. (2011). Virtual reality in neuroscience research and therapy. Nat. Rev. Neurosci. 12, 752–762. doi: 10.1038/nrn3122

Brandt, T., Schautzer, F., Hamilton, D. A., Bruning, R., Markowitsch, H. J., Kalla, R., et al. (2005). Vestibular loss causes hippocampal atrophy and impaired spatial memory in humans. Brain 128, 2732–2741. doi: 10.1093/brain/awh617

Brigadoi, S., Ceccherini, L., Cutini, S., Scarpa, F., Scatturin, P., Selb, J., et al. (2014). Motion artifacts in functional near-infrared spectroscopy: a comparison of motion correction techniques applied to real cognitive data. Neuroimage 85, 181–191. doi: 10.1016/j.neuroimage.2013.04.082

Brown, T. I., Ross, R. S., Tobyne, S. M., and Stern, C. E. (2012). Cooperative interactions between hippocampal and striatal systems support flexible navigation. Neuroimage 60, 1316–1330. doi: 10.1016/j.neuroimage.2012.01.046

Burgess, N. (2008). Spatial cognition and the brain. Ann. N Y Acad. Sci. 1124, 77–97. doi: 10.1196/annals.1440.002

Buzsáki, G. (2005). Theta rhythm of navigation: link between path integration and landmark navigation, episodic and semantic memory. Hippocampus 15, 827–840. doi: 10.1002/hipo.20113

Byrne, P., Becker, S., and Burgess, N. (2007). Remembering the past and imagining the future: a neural model of spatial memory and imagery. Psychol. Rev. 114, 340–375. doi: 10.1037/0033-295x.114.2.340

Chadwick, M. J., and Spiers, H. J. (2014). A local anchor for the brain’s compass. Nat. Neurosci. 17, 1436–1437. doi: 10.1038/nn.3841

Chadwick, M. J., Jolly, A. E., Amos, D. P., Hassabis, D., and Spiers, H. J. (2015). A goal direction signal in the human entorhinal/subicular region. Curr. Biol. 25, 87–92. doi: 10.1016/j.cub.2014.11.001

Chance, S. S., Gaunet, F., Beall, A. C., and Loomis, J. M. (1998). Locomotion mode affects the updating of objects encountered during travel: the contribution of vestibular and proprioceptive inputs to path integration. Presence 7, 168–178. doi: 10.1162/105474698565659

Chen, X., He, Q., Kelly, J. W., Fiete, I. R., and McNamara, T. P. (2015). Bias in human path integration is predicted by properties of grid cells. Curr. Biol. 25, 1771–1776. doi: 10.1016/j.cub.2015.05.031

Chen, G., King, J. A., Burgess, N., and O’Keefe, J. (2013). How vision and movement combine in the hippocampal place code. Proc. Natl. Acad. Sci. U S A 110, 378–383. doi: 10.1073/pnas.1215834110

Chen, X., McNamara, T. P., Kelly, J. W., and Wolbers, T. (2017). Cue combination in human spatial navigation. Cogn. Psychol. 95, 105–144. doi: 10.1016/j.cogpsych.2017.04.003

Chen, L. C., Sandmann, P., Thorne, J. D., Herrmann, C. S., and Debener, S. (2015). Association of concurrent fNIRS and EEG signatures in response to auditory and visual stimuli. Brain Topogr. 28, 710–725. doi: 10.1007/s10548-015-0424-8

Cui, X., Bray, S., Bryant, D. M., Glover, G. H., and Reiss, A. L. (2011). A quantitative comparison of NIRS and fMRI across multiple cognitive tasks. Neuroimage 54, 2808–2821. doi: 10.1016/j.neuroimage.2010.10.069

Derdikman, D., and Moser, E. I. (2010). A manifold of spatial maps in the brain. Trends Cogn. Sci. 14, 561–569. doi: 10.1016/j.tics.2010.09.004

Doeller, C. F., Barry, C., and Burgess, N. (2010). Evidence for grid cells in a human memory network. Nature 463, 657–661. doi: 10.1038/nature08704

Delorme, A., Sejnowski, T., and Makeig, S. (2007). Enhanced detection of artifacts in EEG data using higher-order statistics and independent component analysis. Neuroimage 34, 1443–1449. doi: 10.1016/j.neuroimage.2006.11.004

De Vos, M., Kroesen, M., Emkes, R., and Debener, S. (2014). P300 speller BCI with a mobile EEG system: comparison to a traditional amplifier. J. Neural Eng. 11:036008. doi: 10.1088/1741-2560/11/3/036008

Ehinger, B. V., Fischer, P., Gert, A. L., Kaufhold, L., Weber, F., Pipa, G., et al. (2014). Kinesthetic and vestibular information modulate α activity during spatial navigation: a mobile EEG study. Front. Hum. Neurosci. 8:71. doi: 10.3389/fnhum.2014.00071

Ekstrom, A. D., Arnold, A. E., and Iaria, G. (2014). A critical review of the allocentric spatial representation and its neural underpinnings: toward a network-based perspective. Front. Hum. Neurosci. 8:803. doi: 10.3389/fnhum.2014.00803

Ekstrom, A. D., Caplan, J. B., Ho, E., Shattuck, K., Fried, I., and Kahana, M. J. (2005). Human hippocampal theta activity during virtual navigation. Hippocampus 15, 881–889. doi: 10.1002/hipo.20109

Ekstrom, A. D., Kahana, M. J., Caplan, J. B., Fields, T. A., Isham, E. A., Newman, E. L., et al. (2003). Cellular networks underlying human spatial navigation. Nature 425, 184–188. doi: 10.1038/nature01964

Epstein, R. A. (2008). Parahippocampal and retrosplenial contributions to human spatial navigation. Trends Cogn. Sci. 12, 388–396. doi: 10.1016/j.tics.2008.07.004

Ferrari, M., and Quaresima, V. (2012). A brief review on the history of human functional near-infrared spectroscopy (fNIRS) development and fields of application. Neuroimage 63, 921–935. doi: 10.1016/j.neuroimage.2012.03.049

Finkelstein, A., Derdikman, D., Rubin, A., Foerster, J. N., Las, L., and Ulanovsky, N. (2015). Three-dimensional head-direction coding in the bat brain. Nature 517, 159–164. doi: 10.1038/nature14031

Frissen, I., Campos, J. L., Souman, J. L., and Ernst, M. O. (2011). Integration of vestibular and proprioceptive signals for spatial updating. Exp. Brain Res. 212, 163–176. doi: 10.1007/s00221-011-2717-9

Fyhn, M., Hafting, T., Witter, M. P., Moser, E. I., and Moser, M. B. (2008). Grid cells in mice. Hippocampus 18, 1230–1238. doi: 10.1002/hipo.20472

Fyhn, M., Molden, S., Witter, M. P., Moser, E. I., and Moser, M. B. (2004). Spatial representation in the entorhinal cortex. Science 305, 1258–1264. doi: 10.1126/science.1099901

Gehrke, L., Iversen, J. R., Makeig, S., and Gramann, K. (2018). The invisible maze task (IMT): interactive exploration of sparse virtual environments to investigate action-driven formation of spatial representations. bioRxiv [Preprint]. doi: 10.1101/278283

Gramann, K. (2013). Embodiment of spatial reference frames and individual differences in reference frame proclivity. Spat. Cogn. Comput. 13, 1–25. doi: 10.1080/13875868.2011.589038

Gramann, K., Ferris, D. P., Gwin, J., and Makeig, S. (2014). Imaging natural cognition in action. Int. J. Psychophysiol. 91, 22–29. doi: 10.1016/j.ijpsycho.2013.09.003

Gramann, K., Gwin, J. T., Bigdely-Shamlo, N., Ferris, D. P., and Makeig, S. (2010a). Visual evoked responses during standing and walking. Front. Hum. Neurosci. 4:202. doi: 10.3389/fnhum.2010.00202

Gramann, K., Onton, J., Riccobon, D., Mueller, H. J., Bardins, S., and Makeig, S. (2010b). Human brain dynamics accompanying use of egocentric and allocentric reference frames during navigation. J. Cogn. Neurosci. 22, 2836–2849. doi: 10.1162/jocn.2009.21369

Gramann, K., Gwin, J. T., Ferris, D. P., Oie, K., Jung, T. P., Lin, C. T., et al. (2011). Cognition in action: imaging brain/body dynamics in mobile humans. Rev. Neurosci. 22, 593–608. doi: 10.1515/RNS.2011.047

Griffiths, B., Mazaheri, A., Debener, S., and Hanslmayr, S. (2016). Brain oscillations track the formation of episodic memories in the real world. Neuroimage 143, 256–266. doi: 10.1016/j.neuroimage.2016.09.021

Gwin, J. T., Gramann, K., Makeig, S., and Ferris, D. P. (2010). Removal of movement artifact from high-density EEG recorded during walking and running. J. Neurophysiol. 103, 3526–3534. doi: 10.1152/jn.00105.2010

Hafting, T., Fyhn, M., Molden, S., Moser, M. B., and Moser, E. I. (2005). Microstructure of a spatial map in the entorhinal cortex. Nature 436, 801–806. doi: 10.1038/nature03721

Hardiess, G., Mallot, H. A., and Meilinger, T. (2015). “Virtual reality and spatial cognition,” in International Encyclopedia of the Social and Behavioral Sciences, 2nd Edn. ed. J. D. Wright (Oxford, UK: Elsevier), 133–137.

Hartley, T., Lever, C., Burgess, N., and O’Keefe, J. (2014). Space in the brain: how the hippocampal formation supports spatial cognition. Philos. Trans. R. Soc. Lond. B Biol. Sci. 369:20120510. doi: 10.1098/rstb.2012.0510

Harvey, C. D., Collman, F., Dombeck, D. A., and Tank, D. W. (2009). Intracellular dynamics of hippocampal place cells during virtual navigation. Nature 461, 941–946. doi: 10.1038/nature08499

He, Q., and McNamara, T. P. (2018). Spatial updating strategy affects the reference frame in path integration. Psychon. Bull. Rev. 25, 1073–1079. doi: 10.3758/s13423-017-1307-7

Herold, F., Wiegel, P., Scholkmann, F., Thiers, A., Hamacher, D., and Schega, L. (2017). Functional near-infrared spectroscopy in movement science: a systematic review on cortical activity in postural and walking tasks. Neurophotonics 4:041403. doi: 10.1117/1.NPh.4.4.041403

Hori, E., Tabuchi, E., Matsumura, N., Tamura, R., Eifuku, S., Endo, S., et al. (2003). Representation of place by monkey hippocampal neurons in real and virtual translocation. Hippocampus 13, 190–196. doi: 10.1002/hipo.10062

Horner, A. J., Bisby, J. A., Zotow, E., Bush, D., and Burgess, N. (2016). Grid-like processing of imagined navigation. Curr. Biol. 26, 842–847. doi: 10.1016/j.cub.2016.01.042

Ischer, M., Baron, N., Mermoud, C., Cayeux, I., Porcherot, C., Sander, D., et al. (2014). How incorporation of scents could enhance immersive virtual experiences. Front. Psychol. 5:736. doi: 10.3389/fpsyg.2014.00736

Israël, I., and Warren, W. H. (2005). “Vestibular, proprioceptive, and visual influences on the perception of orientation and self-motion in humans,” in Head Direction Cells and the Neural Mechanisms of Spatial Orientation, eds S. I. Wiener and J. S. Taube (Cambridge, MA: MIT Press), 347–381.

Jacobs, J. (2014). Hippocampal theta oscillations are slower in humans than in rodents: implications for models of spatial navigation and memory. Philos. Trans. R. Soc. Lond. B Biol. Sci. 369:20130304. doi: 10.1098/rstb.2013.0304

Jacobs, J., Kahana, M. J., Ekstrom, A. D., and Fried, I. (2007). Brain oscillations control timing of single-neuron activity in humans. J. Neurosci. 27, 3839–3844. doi: 10.1523/JNEUROSCI.4636-06.2007

Jacobs, J., Kahana, M. J., Ekstrom, A. D., Mollison, M. V., and Fried, I. (2010). A sense of direction in human entorhinal cortex. Proc. Natl. Acad. Sci. U S A 107, 6487–6492. doi: 10.1073/pnas.0911213107

Jacobs, J., Weidemann, C. T., Miller, J. F., Solway, A., Burke, J. F., Wei, X. X., et al. (2013). Direct recordings of grid-like neuronal activity in human spatial navigation. Nat. Neurosci. 16, 1188–1190. doi: 10.1038/nn.3466

Jungnickel, E., and Gramann, K. (2016). Mobile brain/body imaging (MoBI) of physical interaction with dynamically moving objects. Front. Hum. Neurosci. 10:306. doi: 10.3389/fnhum.2016.00306

Kaplan, R., Doeller, C. F., Barnes, G. R., Litvak, V., Düzel, E., Bandettini, P. A., et al. (2012). Movement-related theta rhythm in humans: coordinating self-directed hippocampal learning. PLoS Biol. 10:e1001267. doi: 10.1371/journal.pbio.1001267

Kaplan, R., Horner, A. J., Bandettini, P. A., Doeller, C. F., and Burgess, N. (2014). Human hippocampal processing of environmental novelty during spatial navigation. Hippocampus 24, 740–750. doi: 10.1002/hipo.22264

Kearns, M. J., Warren, W. H., Duchon, A. P., and Tarr, M. J. (2002). Path integration from optic flow and body senses in a homing task. Perception 31, 349–374. doi: 10.1068/p3311

Kelly, J. W., Hammel, W. W., Siegel, Z. D., and Sjolund, L. A. (2014). Recalibration of perceived distance in virtual environments occurs rapidly and transfers asymmetrically across scale. IEEE Trans. Vis. Comput. Graph. 20, 588–595. doi: 10.1109/TVCG.2014.36

Khan, B., Wildey, C., Francis, R., Tian, F., Delgado, M. R., Liu, H., et al. (2012). Improving optical contact for functional near-infrared brain spectroscopy and imaging with brush optodes. Biomed. Opt. Express 3, 878–898. doi: 10.1364/BOE.3.000878

Killian, N. J., Jutras, M. J., and Buffalo, E. A. (2012). A map of visual space in the primate entorhinal cortex. Nature 491, 761–764. doi: 10.1038/nature11587

Kim, J., Chung, C. Y., Nakamura, S., Palmisano, S., and Khuu, S. K. (2015). The Oculus Rift: a cost-effective tool for studying visual-vestibular interactions in self-motion perception. Front. Psychol. 6:248. doi: 10.3389/fpsyg.2015.00248

King, J. A., Hartley, T., Spiers, H. J., Maguire, E. A., and Burgess, N. (2005). Anterior prefrontal involvement in episodic retrieval reflects contextual interference. Neuroimage 28, 256–267. doi: 10.1016/j.neuroimage.2005.05.057

Kober, S. E., Kurzmann, J., and Neuper, C. (2012). Cortical correlate of spatial presence in 2D and 3D interactive virtual reality: an EEG study. Int. J. Psychophysiol. 83, 365–374. doi: 10.1016/j.ijpsycho.2011.12.003

Kober, S. E., and Neuper, C. (2011). Sex differences in human EEG theta oscillations during spatial navigation in virtual reality. Int. J. Psychophysiol. 79, 347–355. doi: 10.1016/j.ijpsycho.2010.12.002

Kothe, C. (2014). Lab Streaming Layer (LSL). Available online at: https://github.com/sccn/labstreaminglayer

Ladouce, S., Donaldson, D. I., Dudchenko, P. A., and Ietswaart, M. (2017). Understanding minds in real-world environments: toward a mobile cognition approach. Front. Hum. Neurosci. 10:694. doi: 10.3389/fnhum.2016.00694

Leff, D. R., Orihuela-Espina, F., Elwell, C. E., Athanasiou, T., Delpy, D. T., Darzi, A. W., et al. (2011). Assessment of the cerebral cortex during motor task behaviours in adults: a systematic review of functional near infrared spectroscopy (fNIRS) studies. Neuroimage 54, 2922–2936. doi: 10.1016/j.neuroimage.2010.10.058

Lever, C., Burton, S., Jeewajee, A., O’Keefe, J., and Burgess, N. (2009). Boundary vector cells in the subiculum of the hippocampal formation. J. Neurosci. 29, 9771–9777. doi: 10.1523/JNEUROSCI.1319-09.2009

Liang, M., Starrett, M. J., and Ekstrom, A. D. (2018). Dissociation of frontal-midline delta-theta and posterior α oscillations: a mobile EEG study. Psychophysiology 55:e13090. doi: 10.1111/psyp.13090

Lin, C. T., Chiu, T. C., and Gramann, K. (2015). EEG correlates of spatial orientation in the human retrosplenial complex. Neuroimage 120, 123–132. doi: 10.1016/j.neuroimage.2015.07.009

McIntosh, M. A., Shahani, U., Boulton, R. G., and McCulloch, D. L. (2010). Absolute quantification of oxygenated hemoglobin within the visual cortex with functional near infrared spectroscopy (fNIRS). Invest. Ophthalmol. Vis. Sci. 51, 4856–4860. doi: 10.1167/iovs.09-4940

Maguire, E. A., Frackowiak, R. S., and Frith, C. D. (1997). Recalling routes around London: activation of the right hippocampus in taxi drivers. J. Neurosci. 17, 7103–7110. doi: 10.1523/JNEUROSCI.17-18-07103.1997

Makeig, S., Gramann, K., Jung, T. P., Sejnowski, T. J., and Poizner, H. (2009). Linking brain, mind and behavior. Int. J. Psychophysiol. 73, 95–100. doi: 10.1016/j.ijpsycho.2008.11.008

McKendrick, R., Parasuraman, R., Murtza, R., Formwalt, A., Baccus, W., Paczynski, M., et al. (2016). Into the wild: neuroergonomic differentiation of hand-held and augmented reality wearable displays during outdoor navigation with functional near infrared spectroscopy. Front. Hum. Neurosci. 10:216. doi: 10.3389/fnhum.2016.00216

Moser, E. I., Kropff, E., and Moser, M.-B. (2008). Place cells, grid cells and the brain’s spatial representation system. Annu. Rev. Neurosci. 31, 69–89. doi: 10.1146/annurev.neuro.31.061307.090723

Muehlemann, T., Holper, L., Wenzel, J., Wittkowski, M., and Wolf, M. (2013). The effect of sudden depressurization on pilots at cruising altitude. Adv. Exp. Med. Biol. 765, 177–183. doi: 10.1007/978-1-4614-4989-8_25

Nabiyouni, M., Saktheeswaran, A., Bowman, D. A., and Karanth, A. (2015). “Comparing the performance of natural, semi-natural, and non-natural locomotion techniques in virtual reality,” in IEEE Symposium on 3D User Interfaces (3DUI), 2015 (Arles, France: IEEE), 3–10.

Nadel, L. (1991). The hippocampus and space revisited. Hippocampus 1, 221–229. doi: 10.1002/hipo.450010302

Ojeda, A., Bigdely-Shamlo, N., and Makeig, S. (2014). MoBILAB: an open source toolbox for analysis and visualization of mobile brain/body imaging data. Front. Hum. Neurosci. 8:121. doi: 10.3389/fnhum.2014.00121

O’Keefe, J., and Dostrovsky, J. (1971). The hippocampus as a spatial map. Preliminary evidence from unit activity in the freely-moving rat. Brain Res. 34, 171–175. doi: 10.1016/0006-8993(71)90358-1

O’Keefe, J., and Nadel, L. (1978). The Hippocampus as a Cognitive Map. New York, NY: Oxford University Press.

Orihuela-Espina, F., Leff, D. R., James, D. R. C., Darzi, A. W., and Yang, G. Z. (2010). Quality control and assurance in functional near infrared spectroscopy (fNIRS) experimentation. Phys. Med. Biol. 55, 3701–3724. doi: 10.1088/0031-9155/55/13/009

Plank, M., Müller, H. J., Onton, J., Makeig, S., and Gramann, K. (2010). “Human EEG correlates of spatial navigation within egocentric and allocentric reference frames,” in Proceedings of the 7th International Conference on Spatial Cognition VII (Berlin, Heidelberg: Springer), 191–206.

Park, J. L., Fairweather, M. M., and Donaldson, D. I. (2015). Making the case for mobile cognition: EEG and sports performance. Neurosci. Biobehav. Rev. 52, 117–130. doi: 10.1016/j.neubiorev.2015.02.014

Piper, S. K., Krueger, A., Koch, S. P., Mehnert, J., Habermehl, C., Steinbrink, J., et al. (2014). A wearable multi-channel fNIRS system for brain imaging in freely moving subjects. Neuroimage 85, 64–71. doi: 10.1016/j.neuroimage.2013.06.062

Poucet, B., Lenck-Santini, P. P., Hok, V., Save, E., Banquet, J. P., Gaussier, P., et al. (2004). Spatial navigation and hippocampal place cell firing: the problem of goal encoding. Rev. Neurosci. 15, 89–108. doi: 10.1515/revneuro.2004.15.2.89

Pringle, J., Roberts, C., Kohl, M., and Lekeux, P. (1999). Near infrared spectroscopy in large animals: optical pathlength and influence of hair covering and epidermal pigmentation. Vet. J. 158, 48–52. doi: 10.1053/tvjl.1998.0306

Reis, P. M., Hebenstreit, F., Gabsteiger, F., von Tscharner, V., and Lochmann, M. (2014). Methodological aspects of EEG and body dynamics measurements during motion. Front. Hum. Neurosci. 8:156. doi: 10.3389/fnhum.2014.00156

Riecke, B. E., van Veen, H. A. H. C., and Bülthoff, H. H. (2002). Visual homing is possible without landmarks: a path integration study in virtual reality. Presence Teleoperators Virtual Env. 11, 443–473. doi: 10.1162/105474602320935810

Robertson, R. G., Rolls, E. T., Georges-François, P., and Panzeri, S. (1999). Head direction cells in the primate pre-subiculum. Hippocampus 9, 206–219. doi: 10.1002/(sici)1098-1063(1999)9:3<206::aid-hipo2>3.0.co;2-h

Rochefort, C., Arabo, A., André, M., Poucet, B., Save, E., and Rondi-Reig, L. (2011). Cerebellum shapes hippocampal spatial code. Science 334, 385–389. doi: 10.1126/science.1207403

Rolls, E. T. (1999). Spatial view cells and the representation of place in the primate hippocampus. Hippocampus 9, 467–480. doi: 10.1002/(sici)1098-1063(1999)9:4<467::aid-hipo13>3.0.co;2-f

Rubin, A., Yartsev, M. M., and Ulanovsky, N. (2014). Encoding of head direction by hippocampal place cells in bats. J. Neurosci. 34, 1067–1080. doi: 10.1523/jneurosci.5393-12.2014

Ruddle, R. A., and Lessels, S. (2006). For efficient navigational search, humans require full physical movement, but not a rich visual scene. Psychol. Sci. 17, 460–465. doi: 10.1111/j.1467-9280.2006.01728.x

Schindler, A., and Bartels, A. (2013). Parietal cortex codes for egocentric space beyond the field of view. Curr. Biol. 23, 177–182. doi: 10.1016/j.cub.2012.11.060

Scholkmann, F., Kleiser, S., Metz, A. J., Zimmermann, R., Pavia, J. M., Wolf, U., et al. (2014). A review on continuous wave functional near-infrared spectroscopy and imaging instrumentation and methodology. Neuroimage 85, 6–27. doi: 10.1016/j.neuroimage.2013.05.004

Shelton, A. L., and Gabrieli, J. D. (2002). Neural correlates of encoding space from route and survey perspectives. J. Neurosci. 22, 2711–2717. doi: 10.1523/jneurosci.22-07-02711.2002

Skaggs, W. E., McNaughton, B. L., Permenter, M., Archibeque, M., Vogt, J., Amaral, D. G., et al. (2007). EEG sharp waves and sparse ensemble unit activity in the macaque hippocampus. J. Neurophysiol. 98, 898–910. doi: 10.1152/jn.00401.2007

Skopp, N. A., Smolenski, D. J., Metzger-Abamukong, M. J., Rizzo, A. A., and Reger, G. M. (2014). A pilot study of the virtusphere as a virtual reality enhancement. Int. J. Hum. Comput. Interact. 30, 24–31. doi: 10.1080/10447318.2013.796441

Slater, M., McCarthy, J., and Maringelli, F. (1998). The influence of body movement on subjective presence in virtual environments. Hum. Factors 40, 469–477. doi: 10.1518/001872098779591368

Sjolund, L. A., Kelly, J. W., and McNamara, T. P. (2018). Optimal combination of environmental cues and path integration during navigation. Mem. Cognit. 46, 89–99. doi: 10.3758/s13421-017-0747-7

Souman, J. L., Giordano, P. R., Schwaiger, M., Frissen, I., Thümmel, T., Ulbrich, H., et al. (2011). CyberWalk: enabling unconstrained omnidirectional walking through virtual environments. ACM Trans. Appl. Percept. 8:25. doi: 10.1145/2043603.2043607

Solstad, T., Boccara, C. N., Kropff, E., Moser, M. B., and Moser, E. I. (2008). Representation of geometric borders in the entorhinal cortex. Science 322, 1865–1868. doi: 10.1126/science.1166466

Spiers, H. J. (2008). Keeping the goal in mind: prefrontal contributions to spatial navigation. Neuropsychologia 46, 2106–2108. doi: 10.1016/j.neuropsychologia.2008.01.028

Spiers, H. J., and Barry, C. (2015). Neural systems supporting navigation. Curr. Opin. Behav. Sci. 1, 47–55. doi: 10.1016/j.cobeha.2014.08.005

Spiers, H. J., and Maguire, E. A. (2006). Thoughts, behaviour and brain dynamics during navigation in the real world. Neuroimage 31, 1826–1840. doi: 10.1016/j.neuroimage.2006.01.037

Stackman, R. W., Clark, A. S., and Taube, J. S. (2002). Hippocampal spatial representations require vestibular input. Hippocampus 12, 291–303. doi: 10.1002/hipo.1112

Stackman, R. W., and Taube, J. S. (1997). Firing properties of head direction cells in the rat anterior thalamic nucleus: dependence on vestibular input. J. Neurosci. 17, 4349–4358. doi: 10.1523/jneurosci.17-11-04349.1997

Stewart, M., and Fox, S. E. (1991). Hippocampal theta activity in monkeys. Brain Res. 538, 59–63. doi: 10.1016/0006-8993(91)90376-7

Su, K. M., Hairston, W. D., and Robbins, K. (2018). EEG-Annotate: automated identification and labeling of events in continuous signals with applications to EEG. J. Neurosci. Methods 293, 359–374. doi: 10.1016/j.jneumeth.2017.10.011

Tachtsidis, I., and Scholkmann, F. (2016). False positives and false negatives in functional near-infrared spectroscopy: issues, challenges and the way forward. Neurophotonics 3:031405. doi: 10.1117/1.nph.3.3.031405

Taube, J. S. (2007). The head direction signal: origins and sensory-motor integration. Annu. Rev. Neurosci. 30, 181–207. doi: 10.1146/annurev.neuro.29.051605.112854

Taube, J. S., Muller, R. U., and Ranck, J. B. (1990). Head-direction cells recorded from the postsubiculum in freely moving rats. I. Description and quantitative analysis. J. Neurosci. 10, 420–435. doi: 10.1523/jneurosci.10-02-00420.1990

Taube, J. S., Valerio, S., and Yoder, R. M. (2013). Is navigation in virtual reality with fMRI really navigation? J. Cogn. Neurosci. 25, 1008–1019. doi: 10.1162/jocn_a_00386

Thompson, T., Steffert, T., Ros, T., Leach, J., and Gruzelier, J. H. (2008). EEG applications for sport and performance. Methods 45, 279–288. doi: 10.1016/j.ymeth.2008.07.006

Vann, S. D., Aggleton, J. P., and Maguire, E. A. (2009). What does the retrosplenial cortex do? Nat. Rev. Neurosci. 10, 792–802. doi: 10.1038/nrn2733

Waller, D., Loomis, J. M., and Haun, D. B. M. (2004). Body-based senses enhance knowledge of directions in large-scale environments. Psychon. Bull. Rev. 11, 157–163. doi: 10.3758/bf03206476

Wascher, E., Heppner, H., and Hoffmann, S. (2014). Towards the measurement of event-related EEG activity in real-life working environments. Int. J. Psychophysiol. 91, 3–9. doi: 10.1016/j.ijpsycho.2013.10.006

Watrous, A. J., Fried, I., and Ekstrom, A. D. (2011). Behavioral correlates of human hippocampal delta and theta oscillations during navigation. J. Neurophysiol. 105, 1747–1755. doi: 10.1152/jn.00921.2010

Watrous, A. J., Lee, D. J., Izadi, A., Gurkoff, G. G., Shahlaie, K., and Ekstrom, A. D. (2013). A comparative study of human and rat hippocampal low-frequency oscillations during spatial navigation. Hippocampus 23, 656–661. doi: 10.1002/hipo.22124

Weidemann, C. T., Mollison, M. V., and Kahana, M. J. (2009). Electrophysiological correlates of high-level perception during spatial navigation. Psychon. Bull. Rev. 16, 313–319. doi: 10.3758/pbr.16.2.313

Wiener, J. M., de Condappa, O., Harris, M. A., and Wolbers, T. (2013). Maladaptive bias for extrahippocampal navigation strategies in aging humans. J. Neurosci. 33, 6012–6017. doi: 10.1523/jneurosci.0717-12.2013

Wolbers, T., and Wiener, J. M. (2014). Challenges for identifying the neural mechanisms that support spatial navigation: the impact of spatial scale. Front. Hum. Neurosci. 8:571. doi: 10.3389/fnhum.2014.00571

Yartsev, M. M., Witter, M. P., and Ulanovsky, N. (2011). Grid cells without theta oscillations in the entorhinal cortex of bats. Nature 479, 103–107. doi: 10.1038/nature10583

Keywords: spatial navigation, mobile brain imaging, virtual-reality (VR), EEG, fNIRS

Citation: Park JL, Dudchenko PA and Donaldson DI (2018) Navigation in Real-World Environments: New Opportunities Afforded by Advances in Mobile Brain Imaging. Front. Hum. Neurosci. 12:361. doi: 10.3389/fnhum.2018.00361

Received: 05 July 2018; Accepted: 23 August 2018;

Published: 11 September 2018.

Edited by:

Klaus Gramann, Technische Universität Berlin, Germany

Reviewed by:

Sebastian Ocklenburg, Ruhr-Universität Bochum, Germany

Copyright © 2018 Park, Dudchenko and Donaldson. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Joanne L. Park, joanne.park3@stir.ac.uk

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
