- ¹Department of Applied Mathematics, School of Mathematics and Statistics, Xi'an Jiaotong University, Xi'an, China

- ²Department of Electrical Engineering, City University of Hong Kong, Hong Kong, China

We propose an efficient algorithm for enhancing the contrast of dark images, based on the principle of stochastic resonance in a global feedback spiking network of integrate-and-fire neurons. Using linear approximation and direct simulation, we reveal how the peak signal-to-noise ratio depends on the spiking threshold and the feedback coupling strength. Guided by this theoretical analysis, we then develop a dynamical-system-based algorithm for enhancing dark images. The new algorithm gives an explicit formula for choosing a suitable spiking threshold for the image to be enhanced, and it replaces the commonly used perceptual quality metric with a more effective quantifying index, the variance of the image. Numerical tests verify the efficiency of the new algorithm. The investigation provides an example of the application of stochastic resonance, and it might help explain the biophysical mechanism behind visual perception.

Introduction

The phenomenon of stochastic resonance, discovered by Benzi et al. (1981), is a cooperative effect of noise and a weak signal in certain nonlinear systems, whereby a suitable amount of noise allows the weak signal to be amplified and detected (Nakamura and Tateno, 2019). Biological and engineering experiments using crayfish (Douglass et al., 1993; Pei et al., 1996), crickets (Levin and Miller, 1996), rats (Collins et al., 1996), humans (Cordo et al., 1996; Simonotto et al., 1997; Borel and Ribot-Ciscar, 2016; Itzcovich et al., 2017; van der Groen et al., 2018), and optical materials (Dylov and Fleischer, 2010) have suggested that noise may aid stimulus detection and visual perception.

As the visual perception of low-contrast images is important in many fields, such as medical diagnosis, flight security, and cosmic exploration, theoretical research on contrast enhancement based on stochastic resonance has become an interesting but challenging topic (Yang, 1998; Ditzinger et al., 2000; Sasaki et al., 2008; Patel and Kosko, 2011; Chouhan et al., 2013; Liu et al., 2019; Zhang et al., 2019). Simonotto et al. (1997) used the noisy static threshold model to recover a picture of Big Ben; Patel and Kosko (2011) proposed a watermark decoding algorithm using the discrete cosine transform and maximum-likelihood detection; Chouhan et al. (2013) explored contrast enhancement based on dynamic stochastic resonance in the discrete wavelet transform domain; and Liu et al. (2019) applied an optimal adaptive bistable array to remove noise from contaminated images. It is increasingly evident that stochastic resonance can serve as a visual processing mechanism in nervous systems and in neural engineering applications, although many theoretical and technical problems remain to be solved.

At least three issues need to be clarified. The first issue is model selection. In the existing literature, the neuron model commonly used for image enhancement is the static threshold model. Since the threshold neuron is too simplified to capture the evolution of the membrane voltage, a more realistic biological neuron model should be considered. The second issue is that existing algorithms lack important details. For example, they give almost no explanation of how to choose the critical threshold across which the pixel value of a black–white image switches. Since a suitable threshold is vital for image enhancement, the second question is what the critical threshold should be. The last issue concerns the quantifying index that helps one pick out the optimally detected image. A typical assumption is that a clear reference picture is available, but in most practical applications, how can one obtain such a reference picture, especially when taking photos in darkness?

To address these questions, in this paper we consider an integrate-and-fire neuron network with global feedback. The work has two parts. The first part is model preparation, in which we demonstrate stochastic resonance theoretically using linear approximation. In the second part, by incorporating physiological and biophysical aspects of visual perception, we propose an algorithm for boosting the contrast of images photographed in darkness. We give a criterion for determining the critical threshold and adopt the variance of the image to quantify the quality of the enhanced image. Our numerical tests demonstrate that the new algorithm is effective and robust.

Stochastic Resonance in an Integrate-and-Fire Neuronal Network

Consider a global feedback biological network of N integrate-and-fire neurons (Lindner and Schimansky-Geier, 2001; Sutherland et al., 2009). The subthreshold membrane potential of each constituent neuron is governed by

where Vi is the membrane potential, C is the capacitance, gL is the leak conductance, VL is the leak potential, and the external synaptic input is

with the excitatory synaptic current $\mathrm{Exc}_{n,k}(t)$ of rate $\lambda_{E,k}$ and the inhibitory synaptic current $\mathrm{Inh}_{n,l}(t)$ of rate $\lambda_{I,l}$, both modeled as i.i.d. homogeneous Poisson processes, and with $a_k$ ($1 \le k \le p$) and $b_l$ ($1 \le l \le q$) denoting the efficacies of the excitatory and inhibitory synapses, respectively. Assume that each neuron receives a subthreshold cosine signal, s(t) = εcos(Ωt), from the external environment. “Subthreshold” means that, in the absence of the synaptic current input (2), the membrane potential cannot cross the given spiking threshold from below (Kang et al., 2005). We use Vr to denote the reset potential; that is, whenever the membrane potential of the ith neuron reaches the threshold Vth from below, the neuron emits a spike and its membrane potential is immediately reset to Vr. Let $t_{i,k}$ be the kth spike time of the ith neuron; the output spike train of the ith neuron can then be written as $y_i(t) = \sum_k \delta(t - t_{i,k})$. In this network, the output spike trains of all constituent neurons are fed back to every neuron i (1 ≤ i ≤ N) through synaptic interaction.

Here, the global feedback interaction is implemented by convolving the sum of all the spike trains with a delayed alpha function. We fix the transmission delay τD = 1 and the synaptic time constant τS = 0.5. In Equation (3), a feedback strength G < 0 indicates inhibitory feedback and G > 0 excitatory feedback; when G = 0, Equation (1) reduces to a neuron array model for enhancing information transmission (Yu et al., 2012).
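The delayed alpha-function feedback can be sketched in Python as follows. This is a minimal illustration under stated assumptions, since Equation (3) itself is not reproduced in the text: we assume the unit-area kernel α(t) = (t/τS²)exp(−t/τS) for t > 0 and a 1/N normalization of the summed spike trains, following the form used by Lindner et al. (2005). The function names `alpha_kernel` and `global_feedback` are hypothetical.

```python
import math


def alpha_kernel(t, tau_s=0.5):
    """Alpha function t / tau_s**2 * exp(-t / tau_s) for t > 0, zero otherwise.

    The unit-area normalization is an assumption (Lindner et al., 2005 style).
    """
    if t <= 0.0:
        return 0.0
    return t / tau_s ** 2 * math.exp(-t / tau_s)


def global_feedback(t, spike_trains, G=0.5, tau_s=0.5, tau_d=1.0):
    """Hypothetical f(t) = (G/N) * sum_j sum_k alpha(t - tau_d - t_jk).

    spike_trains holds one list of spike times per neuron, so N = len(spike_trains).
    G > 0 is excitatory, G < 0 inhibitory, and G = 0 leaves the neurons uncoupled.
    """
    n_neurons = len(spike_trains)
    total = sum(alpha_kernel(t - tau_d - t_k, tau_s)
                for train in spike_trains for t_k in train)
    return G * total / n_neurons
```

Because of the delay τD, a spike emitted at time 0 has no effect before t = τD, and its influence then rises and decays on the time scale τS.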

For simplicity, we drop the subscripts k and l from the rates and the synaptic efficacies, so that λE = λI = λ, p = q, and b = ra, with r being the ratio of inhibitory to excitatory synaptic efficacy. Invoking the diffusion approximation transforms the synaptic current to

where (B1(t), B2(t), …, BN(t)) is an N-dimensional standard Brownian motion. With Equation (3) available, Equation (1) can be rewritten as

where ξi(t) is Gaussian white noise satisfying ⟨ξi(t)⟩ = 0 and ⟨ξi(t + s)ξj(t)⟩ = δij δ(s) for 1 ≤ i, j ≤ N.
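As a quick numerical check of the diffusion approximation, the following sketch compares the mean and variance per unit time of the net Poisson input a·Exc − ra·Inh with the values predicted by Campbell's theorem, ap(1 − r)λ and a²p(1 + r²)λ. The mean reproduces the synaptic part of μ defined below; how the variance maps onto the noise intensity D (for instance D = a²p(1 + r²)λ/2 if the noise term is written as √(2D)ξ) is an assumption, because the corresponding expression is not shown. All function names are hypothetical.

```python
import random


def diffusion_moments(a, p, lam, r):
    """Predicted mean and variance per unit time of the net input a*Exc - r*a*Inh.

    Exc and Inh are Poisson processes with total rate p*lam each (b = r*a);
    by Campbell's theorem, mean = a*p*lam*(1 - r) and var = a^2*p*lam*(1 + r^2).
    """
    mean_rate = a * p * lam * (1.0 - r)
    var_rate = a * a * p * lam * (1.0 + r * r)
    return mean_rate, var_rate


def poisson_count(rate, duration, rng):
    """Number of events of a homogeneous Poisson process in [0, duration)."""
    count, t = 0, rng.expovariate(rate)
    while t < duration:
        count += 1
        t += rng.expovariate(rate)
    return count


def sampled_moments(a, p, lam, r, duration=1.0, trials=4000, seed=1):
    """Monte Carlo estimate of the same moments from simulated Poisson input."""
    rng = random.Random(seed)
    samples = []
    for _ in range(trials):
        exc = poisson_count(p * lam, duration, rng)
        inh = poisson_count(p * lam, duration, rng)
        samples.append(a * exc - r * a * inh)
    mean = sum(samples) / trials
    var = sum((x - mean) ** 2 for x in samples) / (trials - 1)
    return mean / duration, var / duration
```

For a = 0.1, p = 5, λ = 10, and r = 0.5, `diffusion_moments` gives a mean of 2.5 and a variance of about 0.625 per unit time, and `sampled_moments` should agree to within Monte Carlo error.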

It has been shown that the firing rate is approximately a linear function of the external input near the equilibrium point (Gu et al., 2019), so we apply linear approximation theory (Lindner and Schimansky-Geier, 2001; Pernice et al., 2011; Trousdale et al., 2012) to calculate the response of each neuron. Let μ = ap(1 − r)λ + VL/τ and . Regarding each neuron as a linear filter of an external perturbation, we rewrite Equation (4) as Equation (5)

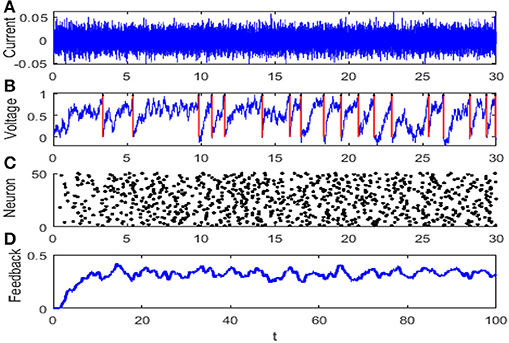

For simplicity, all variables are dimensionless and most of the parameters are taken from Lindner et al. (2005); in particular, time is measured in units of the membrane time constant τ. The dynamical evolution of the network is illustrated in Figure 1.
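The network dynamics can be explored with a short Euler–Maruyama simulation. The sketch below is not the authors' code: it assumes the dimensionless form dVi/dt = −Vi + μ + εcos(Ωt) + G·s(t) + √(2D)ξi(t), where s(t) is the population spike train divided by N, delayed by τD and filtered by the alpha kernel. Here the kernel is realized by two cascaded first-order filters with time constant τS, whose impulse response is exactly (t/τS²)exp(−t/τS). Default parameters mirror the Figure 1 caption; D is not stated there, so D = 0.01 is an arbitrary choice, and `simulate_network` is a hypothetical name.

```python
import math
import random


def simulate_network(N=50, mu=0.8, D=0.01, G=0.5, eps=0.1, omega=1.0,
                     v_t=1.0, v_r=0.0, tau_s=0.5, tau_d=1.0,
                     T=100.0, dt=0.01, seed=0):
    """Euler-Maruyama sketch of a globally coupled LIF network (time in units of tau).

    Assumed dynamics (hypothetical, after Lindner et al., 2005):
        dV_i/dt = -V_i + mu + eps*cos(omega*t) + G*s(t) + sqrt(2*D)*xi_i(t).
    Returns a list of (spike_time, neuron_index) pairs; tau_ref = 0.
    """
    rng = random.Random(seed)
    steps = int(round(T / dt))
    delay = int(round(tau_d / dt))
    v = [v_r] * N
    counts = [0] * steps            # population spike count in each time step
    x = s = 0.0                     # two cascaded filters -> alpha kernel
    noise = math.sqrt(2.0 * D * dt)
    spikes = []
    for n in range(steps):
        t = n * dt
        # Spikes emitted tau_d earlier arrive now; each one kicks x by 1/(N*tau_s).
        arrived = counts[n - delay] if n >= delay else 0
        x += -dt * x / tau_s + arrived / (N * tau_s)
        s += dt * (x - s) / tau_s
        drive = mu + eps * math.cos(omega * t) + G * s
        fired = 0
        for i in range(N):
            v[i] += dt * (drive - v[i]) + noise * rng.gauss(0.0, 1.0)
            if v[i] >= v_t:         # threshold crossing: spike, then reset
                spikes.append(((n + 1) * dt, i))
                v[i] = v_r
                fired += 1
        counts[n] = fired
    return spikes
```

With the subthreshold base current μ = 0.8 < VT and D = 0, the network stays silent, so every spike it produces for D > 0 is noise-induced; pooling the returned spike times gives a raster like Figure 1C.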

Figure 1. The evolution diagram of the integrate-and-fire neuron network: (A) diffusion approximation transforming the synaptic current with r = 1, (B) membrane potential of a neuron, where the red arrow denotes a spike time, (C) raster plot of the network, where each dot denotes a spike of the corresponding neuron at the corresponding time, and (D) feedback of the network. The parameters are set as μ = 0.8, VT = 1, VR = 0, G = 0.5, ε = 0.1, Ω = 1, τS = 0.5, τD = 1, τref = 0, and N = 50.

Stochastic resonance is frequently quantified by the spectral amplification factor (Liu and Kang, 2018) and the output signal-to-noise ratio (Kang et al., 2005). With the help of linear approximation theory, both quantities can be obtained explicitly for the homogeneous network. The spectral amplification factor is defined as the ratio of the power carried by the delta-like spike in the output spectrum at ±Ω to the power of the input signal, namely,

while the signal-to-noise ratio, defined as the ratio of the power of the signal component to that of the background noise, is given by

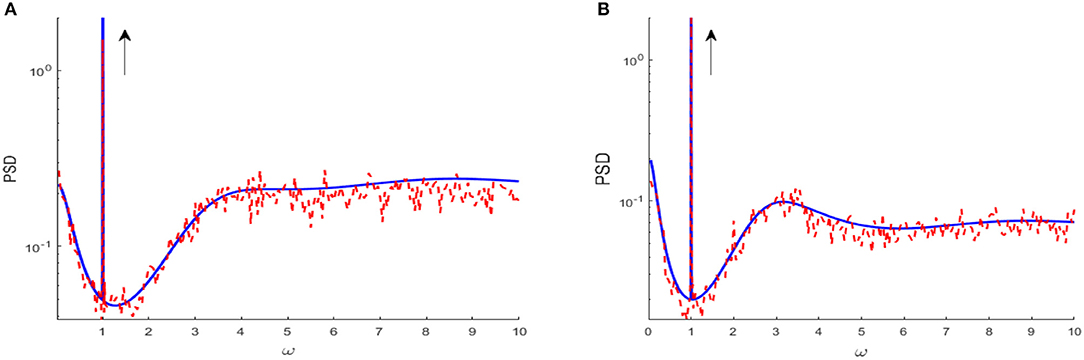

where A is the linear susceptibility, S0(ω, μ, D, VT) is the spectral density of fluctuations of the unperturbed system, and the remaining symbols denote the base current and the Fourier transform of the kernel in Equation (3). Gyy(ω) is the power spectral density of the output spike train, which consists of the signal component S1(ω) and the fluctuation component S2(ω). Within the linear-response regime, the power spectrum Gyy(ω) consists of a sharp peak at the signal frequency riding on the spectral density of fluctuations, as shown in Figure 2. Detailed derivations of the power spectral density Gyy(ω), the spectral amplification factor SAF, and the output signal-to-noise ratio SNR are given in the Appendix.
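For simulation data, the signal-to-noise ratio can also be estimated directly from spike times. The sketch below is a heuristic, not Equation (7): it evaluates the periodogram |Σk exp(−iωtk)|²/T of a pooled spike train at the driving frequency Ω and divides it by the mean periodogram at a few user-chosen nearby frequencies, which stand in for the background fluctuation spectrum. The function names and the choice of background frequencies are assumptions.

```python
import math


def spike_power(spike_times, omega, duration):
    """Periodogram of the spike train y(t) = sum_k delta(t - t_k) at frequency omega."""
    re = sum(math.cos(omega * t) for t in spike_times)
    im = sum(math.sin(omega * t) for t in spike_times)
    return (re * re + im * im) / duration


def estimate_snr(spike_times, omega, duration, background_freqs):
    """Crude SNR: power at the driving frequency omega divided by the mean
    power at nearby background frequencies (a heuristic, not Equation (7))."""
    signal = spike_power(spike_times, omega, duration)
    background = sum(spike_power(spike_times, w, duration)
                     for w in background_freqs) / len(background_freqs)
    return signal / background if background > 0.0 else math.inf
```

In practice, one would average the estimate over several trials and avoid background frequencies that fall on the zeros of the finite-record spectral window, where the periodogram of a nearly periodic train vanishes.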

Figure 2. Power spectral density obtained from linear approximation (blue solid curve) compared with simulation (red dashed curve) for D = 0.01 (A) and D = 0.1 (B). The parameters are set as μ = 0.8, VT = 1, VR = 0, G = 0.5, N = 3, ε = 0.1, Ω = 1, τS = 0.5, τD = 1, τref = 0, and τ = 1. The black arrow indicates the spectral line at the driving frequency, and the remaining part characterizes the spectral density of environmental fluctuations. The spectral line of the driving signal clearly rides on the fluctuation spectral density. The figure and the derivation of the power spectral density are explained in the Appendix.

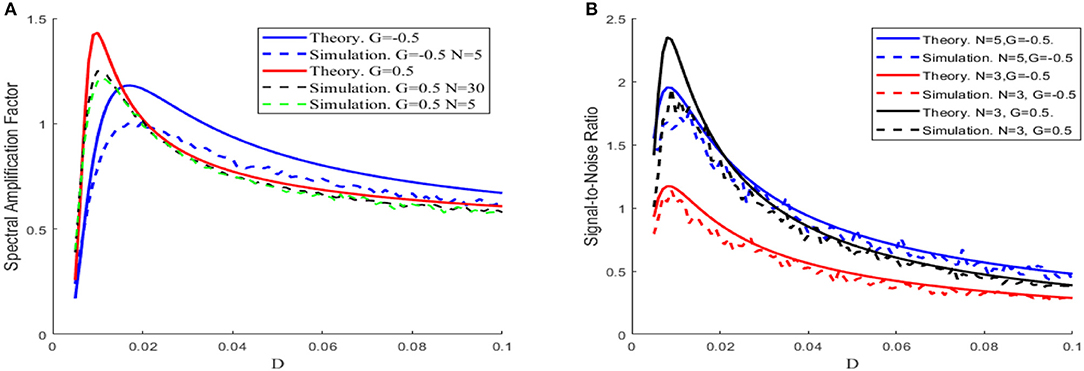

Equation (6) shows that the spectral amplification factor is independent of the network size, whereas Equation (7) shows that the signal-to-noise ratio is proportional to it. Compared with the simulation results, the theoretical results tend to overestimate both quantities (Figure 3), but the overestimation decreases as the network size increases. For this reason, the network size in Figures 4, 5 is chosen large enough that theory and simulation agree closely.

Figure 3. The theoretical (solid) and simulation (dashed) results for the spectral amplification factor (A) and the signal-to-noise ratio (B) vs. noise intensity, with μ = 0.8, VT = 1, VR = 0, ε = 0.1, Ω = 1, τS = 0.5, τD = 1, τref = 0, and τ = 1, for different network sizes N and different feedback strengths G.

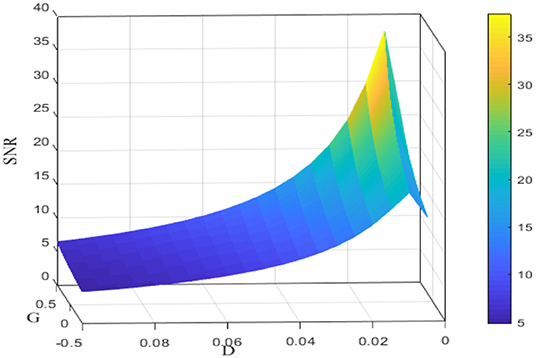

Figure 4. Signal-to-noise ratio for different noise intensities D and feedback strengths G. Data were obtained by numerical simulation of a network with μ = 0.8, VT = 1, VR = 0, N = 50, ε = 0.1, Ω = 1, τS = 0.5, τD = 1, τref = 0, and τ = 1. For fixed G, the signal-to-noise ratio first rises and then falls as a function of noise intensity D; for fixed D, it increases with the feedback strength G.

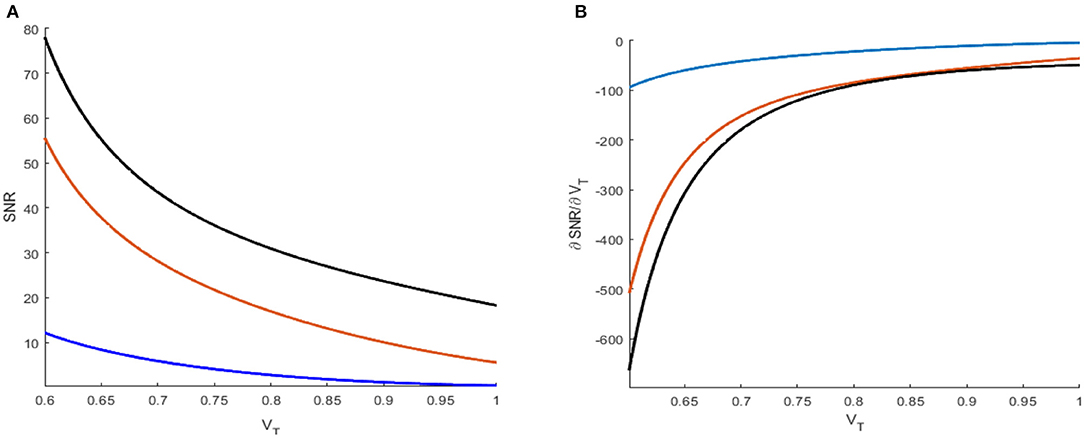

Figure 5. Signal-to-noise ratio (A) and its partial derivative with respect to threshold VT (B) under different reference currents μ = 0.2 (blue), 0.5 (orange), and 0.8 (black). The network size is N = 50.

Since the dependence of the spectral amplification factor and the signal-to-noise ratio on noise intensity is non-monotonic, one can conclude that stochastic resonance occurs for the parameters given in Figure 3. Figure 4 further shows the signal-to-noise ratio on the two-parameter plane of noise intensity and global feedback strength. For fixed feedback strength, the sharp peak indicates stochastic resonance in the global feedback network, while for fixed noise intensity, the signal-to-noise ratio increases with the feedback strength, suggesting that stronger feedback is beneficial for the resonance effect. We emphasize that the effect of inhibitory feedback on weak signal amplification differs from its effect on the intrinsic oscillation measure in Lindner et al. (2005), since the two concern different kinds of synchronization. Phenomenologically, the former is the synchronization between the external weak signal and the noise-induced firing activity, while the latter is the synchrony among the neurons of the population; the different quantifying indexes lead directly to different observations. Thus, from the viewpoint of weak signal detection, excitatory neural feedback is better than inhibitory neural feedback.

Note that in real neural activity, the spiking threshold may vary with changing conditions (Destexhe, 1998; Taillefumier and Magnasco, 2013), so it makes sense to consider the effect of the threshold on population activity. From Equation (7), one has

where

and

with Re(·) denoting the real part of a complex number. Here, Whittaker's notation (Abramowitz and Stegun, 1964) is used for the parabolic cylinder function, with the recursion property and
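For reference, the standard recurrence relations of the Whittaker parabolic cylinder function $D_a(z)$, which is related to the Abramowitz–Stegun function by $D_a(z) = U(-a - 1/2, z)$, are listed below. Which of them enter Equation (8) cannot be recovered from the text, so they are given only as a reminder of the identities; they can be checked directly with $D_0(z) = e^{-z^2/4}$, $D_1(z) = z\,e^{-z^2/4}$, and $D_2(z) = (z^2 - 1)\,e^{-z^2/4}$.

```latex
\begin{aligned}
D_{a+1}(z) - z\,D_a(z) + a\,D_{a-1}(z) &= 0,\\
D_a'(z) + \tfrac{z}{2}\,D_a(z) - a\,D_{a-1}(z) &= 0,\\
D_a'(z) - \tfrac{z}{2}\,D_a(z) + D_{a+1}(z) &= 0.
\end{aligned}
```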

The signal-to-noise ratio [Equation (7)] and its partial derivative with respect to the threshold [Equation (8)] are shown as functions of the threshold in Figures 5A,B, respectively. The monotonic decrease of the signal-to-noise ratio suggests that a smaller threshold is better for weak signal detection. These figures also show that increasing the distance between the base current and the firing threshold reduces the signal-to-noise ratio, consistent with Kang et al. (2005). Hence, keeping the distance between the base current and the firing threshold small should be an important consideration in designing visual perception applications of the global feedback network.

Stochastic Resonance Based Image Perception

Having demonstrated stochastic resonance in the model, in this section we propose an algorithm for visual perception guided by the above theoretical results. In fact, it is the theoretical evidence of stochastic resonance in the integrate-and-fire neuron network, presented in the section Stochastic Resonance in an Integrate-and-Fire Neuronal Network, that motivates this application. If noise at a certain level can amplify a weak harmonic signal via stochastic resonance, then a suitable amount of noise is likely to enhance a more realistic weak signal, such as a low-contrast image, via aperiodic stochastic resonance.

In stochastic resonance, since the external weak signal is harmonic, one can use the spectral amplification factor or the output signal-to-noise ratio as the quantifying index through frequency matching. In aperiodic stochastic resonance, however, the external weak signal is aperiodic, so one has to resort to a coherence measure that describes the shape matching involved, as in studies of neural information coding (Parmananda et al., 2005) and hearing enhancement (Zeng et al., 2000). The contrast of a low-contrast picture can be changed by noise and reaches a maximum when aperiodic stochastic resonance occurs; thus, we use the variance of the image as the quantifying index, as explained below. Even though the quantifying indexes for stochastic resonance and aperiodic stochastic resonance differ, we can still use the results of the model investigation as guidance. The numerical results in the section Stochastic Resonance in an Integrate-and-Fire Neuronal Network show that a positive feedback strength and a low threshold favor stochastic resonance, and we therefore take these two factors into account in the following algorithm design.

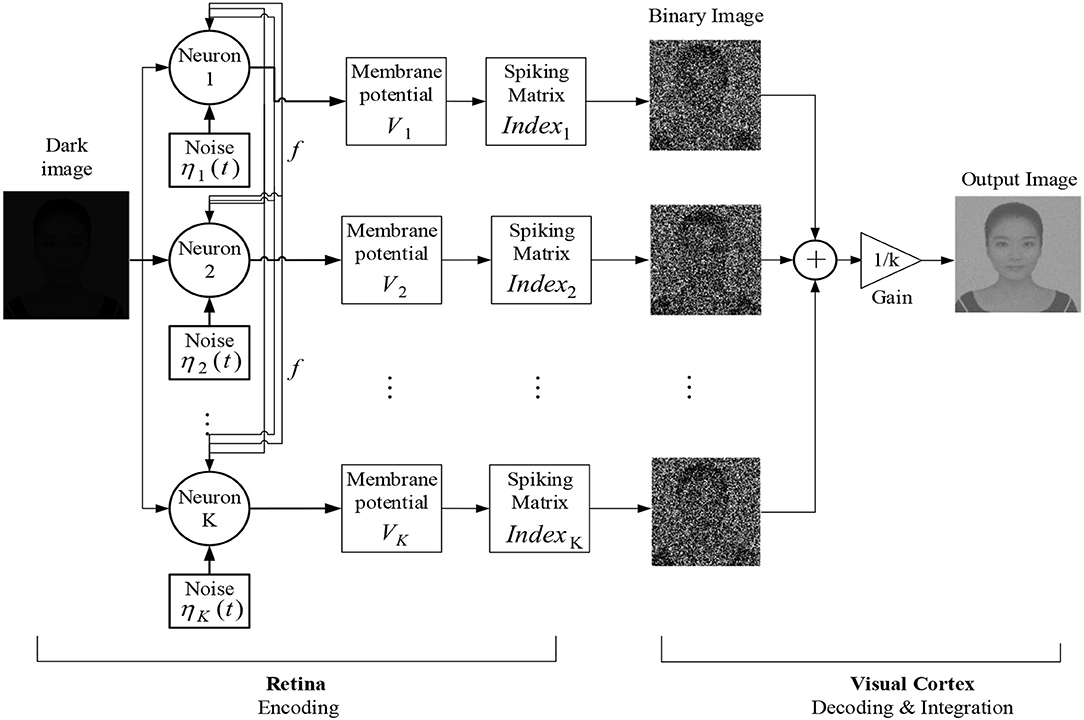

With this theoretical guidance in mind, we now present the algorithm for enhancing low-contrast images. By a dark image or an image of low contrast, we mean a picture taken in dark surroundings whose content cannot be discerned at first sight. We place the new algorithm within the framework of the fundamental process of visual formation (Purves, 2011; Li, 2019): the photoreceptors in the retina receive light and convert it into electrical signals (the encoding process), and these signals are then processed in the visual cortex (the decoding and integration process). The algorithm consists of three steps, as shown in the flow chart in Figure 6.

Figure 6. Schematic diagram of the dark image enhancement algorithm based on the global feedback integrate-and-fire network.

Step 1. Encoding

When light enters the eye, the retina first converts the optical signal into an electrical signal. There are two kinds of photoreceptors in the retina, rods and cones. Cones are active in bright light and support color vision, while rods are responsible for scotopic vision but cannot perceive color. Thanks to the rods, humans can still capture the shapes of objects in dim surroundings. We use the global feedback network [Equation (5)] of K integrate-and-fire neurons to simulate the perceptive process of rod cells. The membrane potential of each neuron is governed by

where the superscript indexes the pixels of the image and the subscript indexes the neurons, U(m, n) ∈ [0, 1] denotes the brightness of the input image, a Gaussian white noise term is assumed to describe the fluctuations arising from the rhythms and the distribution of the rod cells across the retina, and fm,n(t) is the same global feedback function as in Equation (3). Upon reaching the threshold Vth from below, the ith neuron immediately emits an action potential, and its membrane potential is then reset to Vr.
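The encoding step can be illustrated with the following sketch. It assumes, as a hypothesis, that each pixel (m, n) drives its own population of K neurons obeying dV/dt = −V + U(m, n) + G·s_mn(t) + √(2D)ξ(t), where s_mn is the delayed, alpha-filtered spike activity of the K neurons at that pixel divided by K, and that each neuron's spike count over the integration window is stored as Index_i(m, n). Defaults follow the Figure 7 caption (VT = 0.1, VR = 0, G = 0.12, τS = 0.05, τD = 0.01, one time unit with step 0.01), except that K is kept small because K = 1,000 is slow in pure Python; D = 0.05 is arbitrary. The name `encode` is hypothetical.

```python
import math
import random


def encode(image, K=20, D=0.05, G=0.12, v_t=0.1, v_r=0.0,
           tau_s=0.05, tau_d=0.01, T=1.0, dt=0.01, seed=0):
    """Hypothetical encoding: K noisy LIF 'rod' neurons per pixel.

    Returns index[i][m][n] = number of spikes fired by neuron i at pixel
    (m, n) during [0, T], using Euler-Maruyama integration.
    """
    rng = random.Random(seed)
    rows, cols = len(image), len(image[0])
    steps = int(round(T / dt))
    delay = max(1, int(round(tau_d / dt)))     # at least one step of delay
    noise = math.sqrt(2.0 * D * dt)
    index = [[[0] * cols for _ in range(rows)] for _ in range(K)]
    for m in range(rows):
        for n in range(cols):
            v = [v_r] * K
            counts = [0] * steps               # spikes per step at this pixel
            x = s = 0.0                        # cascaded filters -> alpha kernel
            for step in range(steps):
                arrived = counts[step - delay] if step >= delay else 0
                x += -dt * x / tau_s + arrived / (K * tau_s)
                s += dt * (x - s) / tau_s
                drive = image[m][n] + G * s    # pixel brightness as base current
                for i in range(K):
                    v[i] += dt * (drive - v[i]) + noise * rng.gauss(0.0, 1.0)
                    if v[i] >= v_t:            # spike, count it, and reset
                        index[i][m][n] += 1
                        v[i] = v_r
                        counts[step] += 1
    return index
```

Without noise, a dark pixel (U ≤ 0.05 with VT = 0.1) never reaches threshold within one time unit, so any spike recorded for such a pixel is noise-induced.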

Step 2. Decoding and Integration

The incoming information from the rod cells is decoded into binary images in the visual cortex. We justify this in two ways. First, neural information is carried by spikes, so the encoded information should take the form of a spike train rather than the continuous membrane potential. Second, rod cells contribute little to color vision, which leads to the loss of color perception in dim light (Purves, 2011; Owsley et al., 2016), so it is reasonable to assume that all the received spike trains can be transformed into binary images. Let the matrix $(\mathrm{Index}_i)_{M\times N}$ store the spiking information of the ith neuron at the encoding stage. Then, the corresponding binary image matrix $(\mathrm{Pic}_i)_{M\times N}$ decoded from the ith neuron can be written as

With the decoded information from each neuron available, the visual cortex, acting as the command center, integrates all the information into an overall gray image, which should be the picture we finally see in dark surroundings. The idea of integration is inspired by boosting (Friedman, 2002): if each binary image is regarded as the output of a weak learner, the combination of the weak learners acts as a strong learner and produces the gray image. We assume that the integration is a linear superposition, namely,

where $(\mathrm{Pic})_{M\times N}$ represents the integrated image.
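Because the decoding and superposition formulas are not shown, the sketch below assumes the simplest reading of the text: Pic_i(m, n) = 1 if the ith neuron at pixel (m, n) fired at least once and 0 otherwise, and Pic is the average of the K binary images, so the gray level of a pixel is the fraction of its neurons that fired. Both `decode` and `integrate` are hypothetical names.

```python
def decode(index_map):
    """Hypothetical decoding: a pixel is white (1) if the neuron fired at least once."""
    return [[1 if c > 0 else 0 for c in row] for row in index_map]


def integrate(index_maps):
    """Linear superposition: average the K binary images into one gray image."""
    K = len(index_maps)
    binaries = [decode(im) for im in index_maps]
    rows, cols = len(binaries[0]), len(binaries[0][0])
    return [[sum(b[m][n] for b in binaries) / K for n in range(cols)]
            for m in range(rows)]
```

Averaging rather than summing keeps the integrated image in [0, 1], on the same scale as the input brightness U.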

We wish to emphasize further the validity of using the principle of stochastic resonance in our perception algorithm. It is well known that noise is prevalent at the cellular level and that the level of fluctuation in a neural system can be self-adjusted (Faisal and Selen, 2008; Durrant et al., 2011). Moreover, biophysical experiments (Douglass et al., 1993; Collins et al., 1996; Cordo et al., 1996; Levin and Miller, 1996; Pei et al., 1996; Borel and Ribot-Ciscar, 2016; Itzcovich et al., 2017; van der Groen et al., 2018) have shown that biological systems can exploit the benefit of noise. We therefore assume that the human brain can select the perceived image of maximal contrast by means of the principle of stochastic resonance. Since the perceptive function of the brain is realized by neuronal populations, and the effect of stochastic resonance can be enhanced in uncoupled arrays or coupled ensembles, our visual perception algorithm should have some biological plausibility.

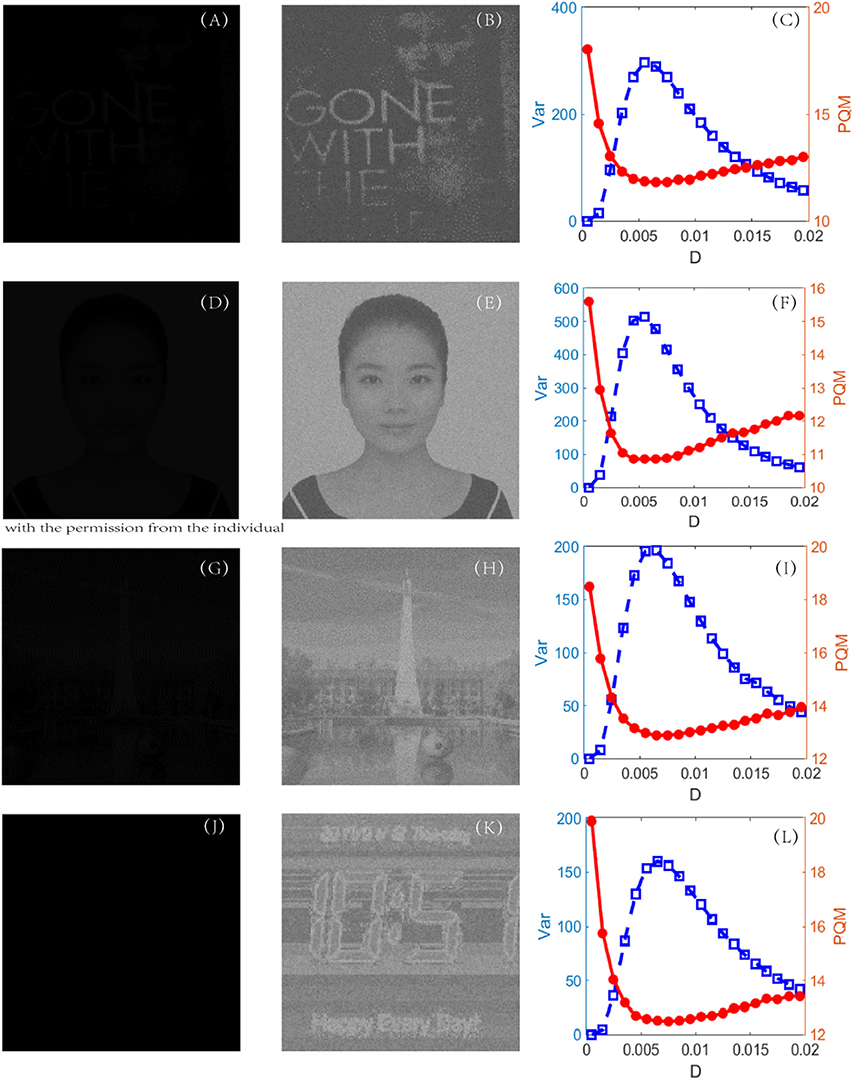

In all the detection experiments, each run of the new algorithm covers one unit of time, integrated by the Euler method with a step size of 0.01 time units. The dark-input images were either photos taken directly in a dark environment, such as that in Figure 7A, or were generated artificially by compressing originally bright images into dark inputs, as shown in Figures 7D, G, J. The recognized images of the best quality, namely, the best enhanced images, are shown in the second column. During the experiments, we found that three key details, namely, the quantifying index, the firing threshold, and the global feedback strength, need further explanation.

Figure 7. First column (A, D, G, and J): original dark-input images; second column (B, E, H, and K): enhanced images of the best quality; third column (C, F, I, and L): dependence of the variance (blue squares) and PQM (red dots) on noise intensity for each experiment. The parameters are set as K = 1,000, VT = 0.1, VR = 0, G = 0.12, τS = 0.05, τD = 0.01, and τ = 1. In each experiment, the peak of the variance always lies near the bottom of the PQM curve, indicating that the variance helps in recognizing the best-quality image.

Quantifying Index

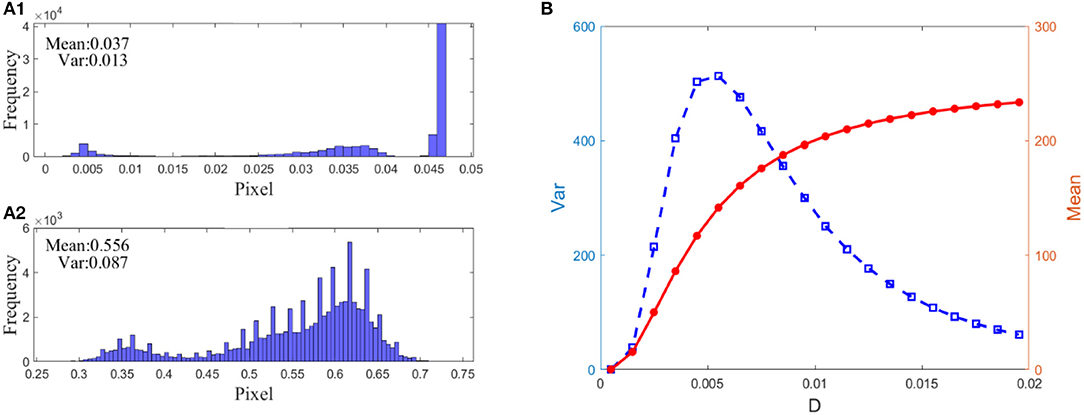

To evaluate image quality, the most frequently used indexes in the image processing literature are the peak signal-to-noise ratio and the mean-square error, both of which require known reference images. The perceptual quality metric (PQM) (Wang et al., 2002), another quantifying index used in visual perception, avoids the need for reference images. The closer the PQM is to 10, the better the image quality (Susstrunk and Winkler, 2003), but the PQM tends to become flat near its optimal value, as shown in Figure 7. Since this flatness makes it hard to pick out the optimal noise intensity for the best enhanced image, our objective here is to find a better quantifying index of perceptual quality. The index we propose is the variance of the image. For a given image $U_{M\times N}$, the variance is defined by

where Ū is the mean of the pixel matrix $U_{M\times N}$. The variance is a natural choice because it reflects the heterogeneity among all the pixels. Intuitively, the variance of a low-contrast image is quite low, whereas that of a high-contrast image is much higher. Figure 7 verifies this reasoning. First, when the PQM is closer to 10, the variance curve is nearer its peak; that is, the variance is equally capable of identifying the best picture in this task. Second, the curve of variance vs. noise intensity has a sharp peak, so the image of the best quality, namely, the best enhanced image, is easy to detect. This is the advantage of the variance over the PQM as a measure of perceptual quality, as shown in the third column of Figure 7. In addition, we note that the mean of the image is not suitable as a quantifying index. The mean measures the luminance of an image; although it takes different values for the dark input, the best enhanced image, and the image blurred by excessive noise, it grows monotonically as the noise intensity increases, as shown in Figure 8, and therefore it cannot identify the image with the best contrast. This comparison further highlights the applicability of the variance in visual perception.
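The variance index and the selection of the best enhanced image can be sketched as follows. The sketch assumes the population normalization 1/(MN) in the variance (the formula itself is not shown) and assumes that one enhanced image has already been produced for each candidate noise intensity D; the helper names are hypothetical.

```python
def image_mean(image):
    """Mean brightness of an image given as a list of rows."""
    pixels = [p for row in image for p in row]
    return sum(pixels) / len(pixels)


def image_variance(image):
    """Var(U) = (1/(MN)) * sum over (m, n) of (U(m, n) - mean)^2 (normalization assumed)."""
    mean = image_mean(image)
    pixels = [p for row in image for p in row]
    return sum((p - mean) ** 2 for p in pixels) / len(pixels)


def best_noise_intensity(images_by_noise):
    """Pick the noise intensity whose enhanced image has the largest variance."""
    return max(images_by_noise, key=lambda d: image_variance(images_by_noise[d]))
```

Replacing `image_variance` by `image_mean` in the selection would, according to Figure 8B, always favor the largest noise intensity, since the mean grows monotonically with D.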

Figure 8. Demonstration of the advantage of the variance over the mean: (A1) normalized histogram of the dark-input image in Figure 7D; (A2) normalized histogram of the best enhanced image in Figure 7E; (B) dependence of the variance (blue squares) and the mean (red dots) on noise intensity. As seen from (A1) and (A2), the dark-input image and the best enhanced image have different histograms. Nevertheless, the mean is not suitable for picking out the image of the best contrast, since it grows monotonically as noise intensity increases (B), even when the contrast of the detected image deteriorates again. By contrast, the bell-shaped variance curve is suitable.

Firing Threshold

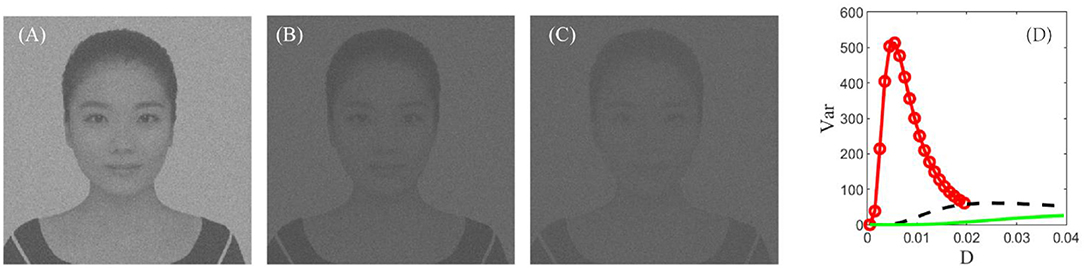

In real cortical activity, neurons can adopt an adaptive threshold that depends on the environment (Destexhe, 1998; Taillefumier and Magnasco, 2013), since the threshold directly affects neural electrical activity. Figure 9 shows that the threshold also strongly affects the performance of the visual perception algorithm: the choice of a suitable firing threshold is vital for the quality of the perceived image. Here, the threshold is chosen according to the following rule. First, compute the frequency histogram of the dark image and denote its maximum pixel value by max(U). Then, define the threshold by, where ceil(·) is the rounding function toward positive infinity. For example, the maximum pixel value of the image in Figure 7D is max(U) = 0.05, as seen from Figure 8A1; accordingly, the threshold is taken as 0.1. It is worth remarking that this choice keeps the distance between the base current and the firing threshold as small as possible, as suggested by the discussion following Figure 5.
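The threshold rule can be sketched as follows. Since the formula itself is missing from the text, this sketch assumes VT = ceil(max(U)/0.1)·0.1, that is, max(U) rounded up to the next multiple of 0.1, which reproduces the worked example max(U) = 0.05 → VT = 0.1; it is an illustration, not the authors' exact expression. The name `choose_threshold` and the `step` parameter are hypothetical.

```python
import math


def choose_threshold(image, step=0.1):
    """Hypothetical rule: round the maximum pixel value up to a multiple of `step`.

    Assumes V_T = ceil(max(U) / step) * step; an all-black image still gets
    V_T = step so that the resting potential V_R = 0 stays below threshold.
    """
    u_max = max(p for row in image for p in row)
    # Round first so that, e.g., 0.3 / 0.1 = 2.9999999999999996 maps to 3.
    k = max(1, math.ceil(round(u_max / step, 9)))
    return k * step
```

Rounding up rather than to the nearest multiple keeps every pixel's base current at or below the threshold, while keeping the gap between them small.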

Figure 9. The best enhanced image under VT = 0.1 (A), VT = 0.2 (B), and VT = 0.3 (C), respectively. (D) Dependence of the variance on the noise intensity under VT = 0.1 (red, circle), VT = 0.2 (black, dash), and VT = 0.3 (green, solid). Other parameters are the same as in Figure 7. This figure demonstrates that the best enhanced image depends on the spiking threshold, so choosing a suitable threshold is vital for image detection.

Feedback Strength

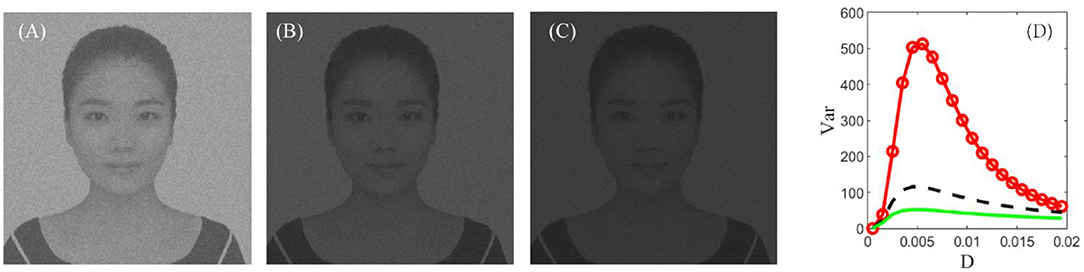

In the section Stochastic Resonance in an Integrate-and-Fire Neuronal Network, it was demonstrated that when the global feedback changes from inhibitory to excitatory, the peak of the signal-to-noise ratio increases, as shown in Figure 4. This theoretical observation prompts us to examine the influence of the feedback strength at the encoding stage on the enhanced images, as illustrated in Figure 10. Evidently, excitatory feedback yields the best enhancement among all the cases, so one can fix the feedback strength to be positive, as in Figure 7. We emphasize that this finding does not deny the important role of inhibition in visual perception (Roska et al., 2006). Both excitation and inhibition exist in the retina (Rizzolatti et al., 1974), and we assume that excitation is reflected in step 1 of our algorithm. That is, different neurons in the retina help each other detect the same target and exhibit a cooperative effect in a homogeneous network at the encoding stage. This cooperative effect helps the individual neurons of the network spike regularly, which is consistent with the description in Brunel (2000) that neurons exhibit a regular state when excitation dominates inhibition.

Figure 10. The best enhanced image under G = 0.12 (A), G = 0 (B), and G = −0.12 (C), respectively. (D) Dependence of the variance on the noise intensity under G = 0.12 (red, circle), G = 0 (black, dash), and G = −0.12 (green, solid). Other parameters are the same as in Figure 7. Clearly, excitatory feedback gives the best result among the types of global feedback considered, implying that different rods should cooperate when facing the same task.

Conclusion

We have proposed a visual perception algorithm that combines the stochastic resonance principle of a global feedback network of integrate-and-fire neurons with the biophysical process of visual formation. The results can be summarized in two closely related aspects. On the modeling side, we applied linear approximation and direct simulation to reveal stochastic resonance in a global feedback network of integrate-and-fire neurons. Both the spectral amplification factor and the output signal-to-noise ratio obtained from linear approximation are accurate when the network is sufficiently large. Using the results derived from linear approximation, we then found that a positive feedback strength is beneficial for boosting the output signal-to-noise ratio, while decreasing the distance between the base current and the firing threshold enhances the resonance effect. These theoretical observations are new, and they may also help in understanding the working mechanism of rod neurons.

On the algorithm side, by applying the global feedback network (5) of integrate-and-fire neurons to simulate the perceptive process of rod cells, we have developed a novel visual perception algorithm. In this algorithm, the firing threshold is so critical that an inappropriate choice leads to inefficient image enhancement. Inspired by the theoretical finding that a smaller distance between the base current and the firing threshold favors stochastic resonance, we proposed an explicit expression for a suitable firing threshold based on the histogram of the dark image. Moreover, we introduced the variance of the image, rather than the perceptual quality metric, as a more effective measure of the quality of the enhanced images. Extensive numerical tests have shown that the biologically inspired algorithm is effective and powerful. We emphasize that the visual perception algorithm is based on a dynamical system. We hope that it can be applied in fields where dark images are common, such as medical diagnosis, flight security, and cosmic exploration. The algorithm also offers an example of how dynamical systems research can guide neural engineering applications. In the near future, we will explore further problems, such as the recovery of incomplete images.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation, to any qualified researcher.

Author Contributions

YK guided and sponsored the research. YF carried out the simulations and the algorithm implementation. YF wrote the initial draft, YK rewrote it, and GC contributed language polishing and general guidance. The contributions of all the authors are important.

Funding

This work was financially supported by the National Natural Science Foundation under Grant No. 11772241.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fncom.2020.00024/full#supplementary-material

References

Abramowitz, M., and Stegun, I. A. (1964). Handbook of Mathematical Functions With Formulas, Graphs, and Mathematical Tables. U.S. Department of Commerce, National Bureau of Standards.

Benzi, R., Sutera, A., and Vulpiani, A. (1981). The mechanism of stochastic resonance. J. Phys. A. 14, L453–L457. doi: 10.1088/0305-4470/14/11/006

Borel, L., and Ribot-Ciscar, E. (2016). Improving postural control by applying mechanical noise to ankle muscle tendons. Exp. Brain Res. 234, 2305–2314. doi: 10.1007/s00221-016-4636-2

Brunel, N. (2000). Dynamics of sparsely connected networks of excitatory and inhibitory spiking neurons. J. Comput. Neurosci. 8, 183–208. doi: 10.1023/A:1008925309027

Chouhan, R., Kumar, C. P., Kumar, R., and Jha, R. K. (2013). Contrast enhancement of dark images using stochastic resonance in wavelet domain. Int. J. Mach. Learn. Comput. 2, 711–715. doi: 10.7763/IJMLC.2012.V2.220

Collins, J. J., Imhoff, T. T., and Grigg, P. (1996). Noise-enhanced information transmission in rat SA1 cutaneous mechanoreceptors via aperiodic stochastic resonance. J. Neurophysiol. 76, 642–645. doi: 10.1152/jn.1996.76.1.642

Cordo, P., Inglis, J. T., Verschueren, S., Collins, J. J., et al. (1996). Noise in human muscle spindles. Nature 383, 769–770. doi: 10.1038/383769a0

Destexhe, A. (1998). Spike-and-wave oscillations based on the properties of GABA(B) receptors. J. Neurosci. 18, 9099–9111. doi: 10.1523/JNEUROSCI.18-21-09099.1998

Ditzinger, T., Stadler, M., Strüber, D., and Kelso, J. A. S. (2000). Noise improves three-dimensional perception: stochastic resonance and other impacts of noise to the perception of autostereograms. Phys. Rev. E. 62, 2566–2575. doi: 10.1103/PhysRevE.62.2566

Douglass, J. K., Wilkens, L., Pantazelou, E., and Moss, F. (1993). Noise enhancement of information transfer in crayfish mechanoreceptors by stochastic resonance. Nature 365, 337–340. doi: 10.1038/365337a0

Durrant, S., Kang, Y., Stocks, N., and Feng, J. (2011). Suprathreshold stochastic resonance in neural processing tuned by correlation. Phys. Rev. E. 84:011923. doi: 10.1103/PhysRevE.84.011923

Dylov, D. V., and Fleischer, J. W. (2010). Nonlinear self-filtering of noisy images via dynamical stochastic resonance. Nat. Photonics 4, 323–328. doi: 10.1038/nphoton.2010.31

Faisal, A. A., Selen, L. P. J., and Wolpert, D. M. (2008). Noise in the nervous system. Nat. Rev. Neurosci. 9, 292–303. doi: 10.1038/nrn2258

Friedman, J. H. (2002). Stochastic gradient boosting. Comput. Stat. Data Anal. 38, 367–378. doi: 10.1016/S0167-9473(01)00065-2

Gu, Q. L., Li, S., Dai, W. P., Zhou, D., and Cai, D. (2019). Balanced active core in heterogeneous neuronal networks. Front. Comput. Neurosci. 12:109. doi: 10.3389/fncom.2018.00109

Itzcovich, E., Riani, M., and Sannita, W. G. (2017). Stochastic resonance improves vision in the severely impaired. Sci. Rep. 7:12840. doi: 10.1038/s41598-017-12906-2

Kang, Y., Xu, J., and Xie, Y. (2005). Signal-to-noise ratio gain of a noisy neuron that transmits subthreshold periodic spike trains. Phys. Rev. E 72:021902. doi: 10.1103/PhysRevE.72.021902

Levin, J. E., and Miller, J. P. (1996). Broadband neural encoding in the cricket cercal sensory system enhanced by stochastic resonance. Nature 380, 165–168. doi: 10.1038/380165a0

Li, Z. P. (2019). A new framework for understanding vision from the perspective of the primary visual cortex. Curr. Opin. Neurobiol. 58, 1–10. doi: 10.1016/j.conb.2019.06.001

Lindner, B., Doiron, B., and Longtin, A. (2005). Theory of oscillatory firing induced by spatially correlated noise and delayed inhibitory feedback. Phys. Rev. E. 72:061919. doi: 10.1103/PhysRevE.72.061919

Lindner, B., and Schimansky-Geier, L. (2001). Transmission of noise coded versus additive signals through a neuronal ensemble. Phys. Rev. Lett. 86, 2934–2937. doi: 10.1103/PhysRevLett.86.2934

Liu, J., Hu, B., and Wang, Y. (2019). Optimum adaptive array stochastic resonance in noisy grayscale image restoration. Phys. Lett. A. 383, 1457–1465. doi: 10.1016/j.physleta.2019.02.006

Liu, R. N., and Kang, Y. M. (2018). Stochastic resonance in underdamped periodic potential systems with alpha stable Lévy noise. Phys. Lett. A. 382, 1656–1664. doi: 10.1016/j.physleta.2018.03.054

Nakamura, O., and Tateno, K. (2019). Random pulse induced synchronization and resonance in uncoupled non-identical neuron models. Cogn. Neurodyn. 13, 303–312. doi: 10.1007/s11571-018-09518-5

Owsley, C., McGwin, G., Clark, M. E., Jackson, G. R., Callahan, M. A., Kline, L. B., et al. (2016). Delayed rod-mediated dark adaptation is a functional biomarker for incident early age-related macular degeneration. Ophthalmology 123, 344–351. doi: 10.1016/j.ophtha.2015.09.041

Parmananda, P., Santos, G. J. E., Rivera, M., and Showalter, K. (2005). Stochastic resonance of electrochemical aperiodic spike trains. Phys. Rev. E. 71:031110. doi: 10.1103/PhysRevE.71.031110

Patel, A., and Kosko, B. (2011). Noise benefits in quantizer-array correlation detection and watermark decoding. IEEE Trans. Signal Process. 59, 488–505. doi: 10.1109/TSP.2010.2091409

Pei, X., Wilkens, L. A., and Moss, F. (1996). Light enhances hydrodynamic signaling in the multimodal caudal photoreceptor interneurons of the crayfish. J. Neurophysiol. 76, 3002–3011. doi: 10.1152/jn.1996.76.5.3002

Pernice, V., Staude, B., Cardanobile, S., and Rotter, S. (2011). How structure determines correlations in neuronal networks. PLoS Comput. Biol. 7:e1002059. doi: 10.1371/journal.pcbi.1002059

Rizzolatti, G., Camarda, R., Grupp, L. A., and Pisa, M. (1974). Inhibitory effect of remote visual stimuli on visual response of cat superior colliculus: spatial and temporal factors. J. Neurophysiol. 37, 1262–1275. doi: 10.1152/jn.1974.37.6.1262

Roska, B., Molnar, A., and Werblin, F. S. (2006). Parallel processing in retinal ganglion cells: how integration of space-time patterns of excitation and inhibition form the spiking output. J. Neurophysiol. 95, 3810–3822. doi: 10.1152/jn.00113.2006

Sasaki, H., Sakane, S., Ishida, T., Todorokihara, M., Kitamura, T., and Aoki, R. (2008). Suprathreshold stochastic resonance in visual signal detection. Behav. Brain Res. 193, 152–155. doi: 10.1016/j.bbr.2008.05.003

Simonotto, E., Riani, M., Seife, C., Roberts, M., Twitty, J., and Moss, F. (1997). Visual perception of stochastic resonance. Phys. Rev. Lett. 78, 1186–1189. doi: 10.1103/PhysRevLett.78.1186

Susstrunk, S. E., and Winkler, S. (2003). Color image quality on the Internet. SPIE Electron. Imaging 5304, 118–131. doi: 10.1117/12.537804

Sutherland, C., Doiron, B., and Longtin, A. (2009). Feedback-induced gain control in stochastic spiking networks. Biol. Cybern. 100, 475–489. doi: 10.1007/s00422-009-0298-5

Taillefumier, T., and Magnasco, M. O. (2013). A phase transition in the first passage of a Brownian process through a fluctuating boundary with implications for neural coding. Proc. Natl. Acad. Sci. U.S.A. 110, 1438–1443. doi: 10.1073/pnas.1212479110

Trousdale, J., Hu, Y., Shea-Brown, E., and Josić, K. (2012). Impact of network structure and cellular response on spike time correlations. PLoS Comput. Biol. 8:e1002408. doi: 10.1371/journal.pcbi.1002408

van der Groen, O., Tang, M. F., Wenderoth, N., and Mattingley, J. B. (2018). Stochastic resonance enhances the rate of evidence accumulation during combined brain stimulation and perceptual decision-making. PLoS Comput. Biol. 14:e1006301. doi: 10.1371/journal.pcbi.1006301

Wang, Z., Sheikh, H. R., and Bovik, A. C. (2002). No reference perceptual quality assessment of JPEG compressed images. IEEE Int. Conf. Image Process 1, 477–480. doi: 10.1109/ICIP.2002.1038064

Yang, T. (1998). Adaptively optimizing stochastic resonance in visual system. Phys. Lett. A 245, 79–86. doi: 10.1016/S0375-9601(98)00351-X

Yu, T., Park, J., Joshi, S., Maier, C., and Cauwenberghs, G. (2012). “65K-neuron integrate-and-fire array transceiver with address-event reconfigurable synaptic routing,” in 2012 IEEE Biomed. Circuits Syst. Conf. Intell. Biomed. Electron. Syst. Better Life Better Environ. BioCAS 2012 - Conf. Publ., 21–24. doi: 10.1109/BioCAS.2012.6418479

Zeng, F. G., Fu, Q. J., and Morse, R. (2000). Human hearing enhanced by noise. Brain Res. 869, 251–255. doi: 10.1016/S0006-8993(00)02475-6

Keywords: stochastic resonance, spiking networks, visual perception, variance of image, contrast enhancement

Citation: Fu Y, Kang Y and Chen G (2020) Stochastic Resonance Based Visual Perception Using Spiking Neural Networks. Front. Comput. Neurosci. 14:24. doi: 10.3389/fncom.2020.00024

Received: 21 November 2019; Accepted: 17 March 2020; Published: 15 May 2020.

Edited by: Yu-Guo Yu, Fudan University, China

Copyright © 2020 Fu, Kang and Chen. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yanmei Kang, ymkang@xjtu.edu.cn
