- Independent Researcher, Saitama, Japan

Information processing in the large-scale network of the human brain is related to its cognitive functions. Because the brain must adapt to changing environments under biological constraints, these processes can be hypothesized to be optimized. Principles based on information optimization are therefore expected to play a central role in shaping the dynamics and topological structure of the brain network. Recent studies on the functional connectivity between brain regions, referred to as the functional connectome, have revealed characteristics of these networks, such as self-organized criticality of brain dynamics and small-world topology. However, these important attributes have been established separately, and their relation to the principle of information optimization is unclear. Here, we show that the principle of maximizing the mutual information entropy induces an optimal state at which small-world network topology and criticality in the activation dynamics emerge. Our functional connectome analyses show that, as the mutual information entropy increases, the coactivation pattern converges to the state of self-organized criticality, and a phase transition of the network topology, which is responsible for the small-world topology, arises simultaneously at the same point. The coincidence of these phase transitions at the same critical point indicates that the criticality of the dynamics and the phase transition of the network topology are essentially rooted in the same phenomenon, driven by mutual information maximization. As a consequence, the two different attributes of the brain, self-organized criticality and small-world topology, can be understood within a unified perspective under the information-based principle. Our study thus provides insight into the mechanism underlying information processing in the brain.

Introduction

The human brain maintains its performance during perception, cognition, and behavior through information processing in neuronal networks (Linsker, 1988; Gray et al., 1989; Sporns, 2002; Womelsdorf et al., 2007). Information processing is one of the central functions of the brain, which organizes neuronal networks into a hierarchical structure. In particular, integrative processing in the large-scale network, which interconnects segregated and functionally specialized regions of the brain (Tononi et al., 1994; Hilgetag and Grant, 2000; Sporns, 2013), is related to cognitive functions such as decision making (Friston, 2010; Clark, 2013; Park and Friston, 2013). To achieve efficient performance while meeting the requirements for rapid and flexible adaptation to changing environments (Bassett et al., 2006; Kitzbichler et al., 2009; Clark, 2013; Park and Friston, 2013; Mnih et al., 2015), information processing in the brain might be optimized (Friston, 2010). Since the brain occupies a finite volume, it is natural to assume that physical constraints, such as biological costs, require the brain to optimize its function with limited resources (Achard and Bullmore, 2006; Chen et al., 2006; Bassett et al., 2010; Bullmore and Sporns, 2012). In this context, principles based on information-theoretic quantities, such as free energy (Friston, 2010) and mutual information (Linsker, 1990), provide formulations that account for the mechanism underlying the function and structure of the brain. However, the details of this mechanism, and the effect of these principles on the structural and functional aspects of brain networks, remain an open issue.

Small-world topology and self-organized criticality are major attributes that facilitate information processing in the brain, yet their relation to the principle of information optimization is still unclear. Recent advances in neuroimaging techniques allow noninvasive observation of anatomical and functional pathways in the brain, leading to the elucidation of network structures and dynamic patterns referred to as the connectome (Sporns et al., 2005; Achard et al., 2006; Bassett and Bullmore, 2006; Shmuel et al., 2006; Hagmann et al., 2008; Greicius et al., 2009; Bullmore and Bassett, 2010; Biswal et al., 2010; Brown et al., 2012). Small-world topology is one of the common characteristics of complex networks that appear in a wide range of phenomena (Watts and Strogatz, 1998; Newmann and Watts, 1999), including functional connectivity in the brain (Achard et al., 2006; Bassett and Bullmore, 2006; van den Heuvel et al., 2008; van den Heuvel and Sporns, 2011). Owing to the abundance of hubs and highly connected nodes, small-world networks generally achieve robust and efficient information transfer (Albert et al., 2000; Latora and Marchiori, 2001). On the other hand, self-organized criticality provides an attractive hypothesis describing the dynamical state of the brain (Bak et al., 1987; Beggs and Plenz, 2003; Beggs, 2008). Self-organized criticality is an emergent property of a system: dynamical systems of interconnected nonlinear elements naturally evolve into a self-organized critical state without any external tuning. Through the successive large-scale signal propagation observed in the brain, the dynamics of individual units can induce rapid adaptive responses to external stimuli (Kitzbichler et al., 2009; Chialvo, 2010; Tagliazucchi et al., 2012).
Because small-world topology is an attribute arising in the critical state between random and ordered networks, criticality is considered a major cause of this network attribute. However, the relation between these two attributes, which are usually established separately, is not yet clearly understood.

In this study, we present direct evidence that small-world network topology and self-organized criticality are related through the maximization principle of the mutual information entropy. Targeting the large-scale brain network, we investigated the functional connectome constructed from resting-state functional MRI (fMRI) data, which record activation patterns in brain regions during the resting state and are expected to describe a common architecture of the human brain (Achard et al., 2006; Bassett and Bullmore, 2006; Fox and Raichle, 2007; Hagmann et al., 2008; van den Heuvel et al., 2008; Greicius et al., 2009; Honey et al., 2009; Biswal et al., 2010; Honey, 2010; van den Heuvel and Hulshoff Pol, 2010; Van Dijk et al., 2010; Hlinka et al., 2011). When the brain is conceptualized as an information processing system, successive patterns of activation and deactivation in different brain regions represent the processing associated with information transfer. Historically, studies based on measurements of the brain's responses to tasks or stimuli have succeeded in mapping specific cognitive functions onto distinct brain regions (e.g., Kanwisher et al., 1997). However, accumulated evidence from recent studies indicates that various cognitive functions arise from the more complex dynamics of interactions between distributed brain regions, rather than from activity localized in specific regions (Ghazanfar and Schroeder, 2006; Bressler and Menon, 2010). Further evidence indicates that these activities are efficiently modulated by brain regions that are negatively correlated with tasks and that show spontaneous neural activity even in the resting state (Fox et al., 2005; Menon and Uddin, 2010). Optimization of information processing is then accomplished by coordinating activation and deactivation in different brain regions.
Thus, activation correlations and anti-correlations between regions, which are calculated based on resting-state fMRI observations, provide basic information useful in understanding the above processes (Fox et al., 2005; Fox and Raichle, 2007; Uddin et al., 2009).

In our study, we use preprocessed functional connectome data consisting of matrices, each element of which represents the connectivity strength between two regions (Biswal et al., 2010; Brown et al., 2012). We analyze these data using topological and statistical methods (Barrat et al., 2004; Achard et al., 2006; Clauset et al., 2009; Takagi, 2010, 2017; Klaus et al., 2011). Based on an information transfer model reflecting the topological and functional aspects, we show that the requirement for maximizing the mutual information entropy drives the network to the critical state. We then show that a phase transition in the topological structure accompanies this maximization. Further, we show that, at this critical point, the distribution of the connectivity strength converges to a distribution model indicating self-organized criticality (Takagi, 2010, 2017). This evidence describes the relations between these attributes explicitly and indicates that they are essentially rooted in a single phenomenon driven by the maximization of the mutual information entropy. Thus, according to our results, the two different attributes of the brain, self-organized criticality and small-world topology, can be understood within a unified perspective under the information-based principle.

Materials and Methods

Functional Connectome Datasets

The functional connectome describes the large-scale network structure of the brain by a connectivity matrix, whose (i, j) element represents the connection weight wij. The weight wij was evaluated from the fMRI data as a correlation coefficient, where each node, i or j, corresponds to a single segmented region of the brain (Achard and Bullmore, 2006; Achard et al., 2006). In this study, we used preprocessed datasets of the connectivity matrix, which are directly available from the USC Multimodal Connectivity Database (Brown et al., 2012) at http://umcd.humanconnectomeproject.org/. Each matrix has N×N elements (wij), which correspond to the connectivity strengths between N = 177 brain regions, a number sufficient to cover the entire brain (Brown et al., 2012). The datasets used in this study contain 986 matrices, each constructed from the data of a different individual subject. They are derived from the functional connectome datasets of the “1,000 connectome project” (Biswal et al., 2010), which collects data obtained by resting-state fMRI (R-fMRI). These data reveal that, although individual differences can be observed, the connectome datasets share a common architecture.

Because the correlation coefficient captures only linear relationships between variables, its application to brain activity, which is nonlinear, has limitations. However, resting-state fMRI data have been reported to be nearly Gaussian, so the loss of connectivity information due to the use of linear correlation is relatively small (Hlinka et al., 2011). We therefore use this quantity as an approximate representation of the brain network.
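
The datasets above are distributed as precomputed matrices, so no time series are processed in this study. Purely as an illustration, the following Python sketch shows how a correlation-based connectivity matrix of this kind is commonly obtained from regional time series with NumPy's `np.corrcoef`. The synthetic signals, the sizes `T` and `N`, and the helper name `connectivity_matrix` are hypothetical and do not reproduce the UMCD preprocessing pipeline.

```python
import numpy as np

def connectivity_matrix(time_series: np.ndarray) -> np.ndarray:
    """Pearson correlation between regional time series (hypothetical helper).

    time_series: array of shape (T, N), T time points for N regions.
    Returns an N x N matrix w with w[i, j] in [-1, 1].
    """
    # rowvar=False: each column is treated as one region (variable)
    return np.corrcoef(time_series, rowvar=False)

rng = np.random.default_rng(0)
T, N = 200, 5                    # hypothetical sizes, not the 177-region atlas
x = rng.standard_normal((T, N))
x[:, 1] += 0.8 * x[:, 0]         # induce a positive correlation between regions 0 and 1
x[:, 2] -= 0.8 * x[:, 0]         # induce an anti-correlation between regions 0 and 2
w = connectivity_matrix(x)
print(np.round(w[0, :3], 2))
```

The result is a symmetric N × N matrix with ones on the diagonal and signed off-diagonal values, so both positive and negative connections appear, as discussed in the next subsection.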

Network Description

The connectivity matrix contains noise and artifacts (Eguiluz et al., 2005; Brown et al., 2012; Takagi, 2017), so noise reduction is required to depict the network structure accurately. In standard analyses, this noise is removed by applying a threshold to the matrix (wij): connections with small weights are removed, and the network is constructed from the remaining connections. This procedure is also relevant to brain network analysis because it extracts core structures consisting of strongly connected pathways (Hagmann et al., 2008).

Introducing the threshold wt for the connection weight wij, we obtained a network description consisting of the connections with |wij| > wt. Since responses of neuronal activity can be categorized as positive or negative (Shmuel et al., 2006), wij takes values in both the positive and the negative range, and we therefore applied the threshold to the absolute value |wij|.

This process simultaneously produces the topological description, which was defined by the adjacency matrix (Eguiluz et al., 2005; Bullmore and Bassett, 2010; Honey, 2010). In this matrix, each element aij was assigned the binarized value, 0 or 1, according to the absence or presence of the connection between nodes i and j. For the introduced threshold, the adjacency matrix takes aij = 1 for the (i, j) element with |wij| > wt and aij = 0 otherwise.
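
The thresholding step can be sketched with NumPy as follows, assuming a symmetric matrix `w` of correlation coefficients. The function name `threshold_network` is hypothetical, and excluding the diagonal (self-connections) is our assumption rather than a detail stated in the text.

```python
import numpy as np

def threshold_network(w: np.ndarray, w_t: float):
    """Keep connections with |w_ij| > w_t; return (signed weights, adjacency)."""
    keep = np.abs(w) > w_t               # threshold on the absolute value
    np.fill_diagonal(keep, False)        # assumption: self-connections are excluded
    a = keep.astype(int)                 # binary adjacency matrix a_ij
    w_thr = np.where(keep, w, 0.0)       # signed weights of the surviving connections
    return w_thr, a

w = np.array([[ 1.0,  0.6, -0.7],
              [ 0.6,  1.0,  0.2],
              [-0.7,  0.2,  1.0]])
w_thr, a = threshold_network(w, 0.5)
print(a)
```

With wt = 0.5, the negative connection −0.7 survives because the threshold acts on |wij|, while the weak 0.2 connection is removed.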

We use the largest connected component and the clustering coefficient, which are basic measures of network topology, to characterize the structure of the topological network. For an undirected graph based on the adjacency matrix, such as the one described above, connected components are connected subgraphs in which every pair of vertices is joined by a path. We measure the size of each connected component by the number of its vertices and then determine the largest connected component. In this paper, we measure this quantity using the R-package igraph (Barrat et al., 2004). In addition, the clustering coefficient C, also known as transitivity, measures the probability that two vertices adjacent to a common vertex are themselves connected (Watts and Strogatz, 1998). This quantity is an important indicator of the small-world network. Unlike random or regular networks, small-world networks are highly clustered, like regular lattices, yet have small characteristic path lengths, like random graphs (Watts and Strogatz, 1998). In our previous work (Takagi, 2017), the simultaneous emergence of a small average minimum path length and a large clustering coefficient was observed in the same datasets that we use in this study. We thus measure the clustering coefficient C as an indicator of small-world topology, also using the R-package igraph (Barrat et al., 2004).
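
The study computes both measures with the R-package igraph. As a dependency-free illustration, the Python sketch below computes the normalized largest-component size by breadth-first search and the global clustering coefficient (transitivity) as the ratio of closed to connected triples, which is the default definition of igraph's `transitivity`. Whether the study used this global form or an averaged local form is an assumption here, and the helper names are hypothetical.

```python
import numpy as np
from collections import deque

def largest_component_fraction(a: np.ndarray) -> float:
    """Largest connected component size divided by the number of nodes."""
    n = a.shape[0]
    seen = np.zeros(n, dtype=bool)
    best = 0
    for start in range(n):
        if seen[start]:
            continue
        # breadth-first search over the component containing `start`
        seen[start] = True
        queue, size = deque([start]), 0
        while queue:
            u = queue.popleft()
            size += 1
            for v in np.flatnonzero(a[u]):
                if not seen[v]:
                    seen[v] = True
                    queue.append(v)
        best = max(best, size)
    return best / n

def transitivity(a: np.ndarray) -> float:
    """Global clustering coefficient: 3 x triangles / connected triples."""
    a = a.astype(float)
    k = a.sum(axis=1)
    closed = np.trace(a @ a @ a)          # equals 6 x number of triangles
    triples = np.sum(k * (k - 1))         # equals 2 x number of connected triples
    return float(closed / triples) if triples > 0 else 0.0

# example: triangle 0-1-2, pendant edge 2-3, and a separate edge 4-5
a = np.zeros((6, 6), dtype=int)
for i, j in [(0, 1), (1, 2), (0, 2), (2, 3), (4, 5)]:
    a[i, j] = a[j, i] = 1
print(largest_component_fraction(a), transitivity(a))
```

In this example, the largest component {0, 1, 2, 3} holds 4 of the 6 nodes, and one triangle among five connected triples gives C = 3 × 1 / 5 = 0.6.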

Information Transfer Model

On the brain connectivity map represented by the connectivity matrix (wij) and the corresponding adjacency matrix (aij), information processing was represented by signal transmission. Information transfer in the brain can be described by the successive propagation of signals, represented by the activated state of each site (Bak et al., 1987; Beggs and Plenz, 2003; Beggs, 2008). To model information transfer, we defined the stimulus signals S = (s1, …, sN) and the responses R = (r1, …, rN) for a network of N nodes, assigning each node one of three states, si, rj ∈ {1, −1, 0}. Inactivated regions were assigned the 0 state, while the two states ±1 represent positive and negative activation, respectively, in accordance with the empirical fact that responses of neuronal activity can be categorized as positive and negative (Shmuel et al., 2006).

In our simulation, positive and negative activation occur with the same probability: each input signal si was assigned 1 with probability p and −1 with probability p, and 0 otherwise (with probability 1 − 2p). The parameter p indicates the strength of activity, which is related to energy consumption in the brain. Because brain activity fluctuates, we examined several values of this activation density.
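
The stimulus sampling described above can be sketched in Python as follows. It assumes, as the text states, that +1 and −1 each occur with probability p, so that 0 occurs with probability 1 − 2p and p cannot exceed 0.5; the function name `sample_stimuli` is hypothetical.

```python
import numpy as np

def sample_stimuli(n_nodes: int, p: float, rng: np.random.Generator) -> np.ndarray:
    """Draw s_i = +1 with prob. p, -1 with prob. p, and 0 with prob. 1 - 2p."""
    if not 0.0 <= p <= 0.5:
        raise ValueError("p must lie in [0, 0.5] so that 1 - 2p >= 0")
    return rng.choice([1, -1, 0], size=n_nodes, p=[p, p, 1.0 - 2.0 * p])

rng = np.random.default_rng(1)
s = sample_stimuli(177, 0.01, rng)   # N = 177 regions, medium density p = 0.01
print(np.count_nonzero(s), "of", s.size, "nodes activated")
```

Repeating this draw for each trial yields the random input signals over which the simulation statistics are collected.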

For a given set of signals S = (s1, …, sN) with randomly assigned values of si, we estimated the response rj using the total input signals received by each j-th node as

$$r_{s,j} = \sum_{i=1}^{N} a_{ij}\, w_{ij}\, s_i \qquad (1)$$

where wij is the connectivity between i and j given by the connectivity matrix, and aij is the adjacency matrix. The state of each responding node rj was determined from $r_{s,j}$ and the threshold wt.
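
A sketch of the response computation, assuming the total input is the weighted sum $r_{s,j} = \sum_i a_{ij} w_{ij} s_i$. The text states only that rj is determined from $r_{s,j}$ and the threshold wt; the three-state rule used here (rj = 1 if $r_{s,j}$ > wt, rj = −1 if $r_{s,j}$ < −wt, and 0 otherwise) is our assumption, as is the helper name `response`.

```python
import numpy as np

def response(s: np.ndarray, w: np.ndarray, a: np.ndarray, w_t: float) -> np.ndarray:
    """Three-state responses r_j from stimuli s (hypothetical helper)."""
    # total input to each node j: r_{s,j} = sum_i a_ij * w_ij * s_i
    r_s = (a * w).T @ s
    # assumed rule: +1 / -1 when the input exceeds w_t in magnitude, otherwise 0
    r = np.zeros(r_s.shape, dtype=int)
    r[r_s > w_t] = 1
    r[r_s < -w_t] = -1
    return r

w = np.array([[ 0.0,  0.6, -0.7],
              [ 0.6,  0.0,  0.2],
              [-0.7,  0.2,  0.0]])
a = (np.abs(w) > 0.5).astype(int)    # adjacency for w_t = 0.5
s = np.array([1, 0, 0])              # only node 0 is (positively) activated
print(response(s, w, a, 0.5))
```

Node 1 receives +0.6 and node 2 receives −0.7 from the activated node 0, so with wt = 0.5 they respond with +1 and −1, while node 0 itself receives no input and stays at 0.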

Mutual Information Entropy

The mutual information entropy is an indicator that measures the information transferred from imposed stimuli to responses. It is frequently used to evaluate information transfer in networks, including neural network models and real brain regions (Bak et al., 1987; Beggs and Plenz, 2003; Beggs, 2008). We therefore used this quantity to assess the efficiency of information transfer in our model.

For the set of stimulus signals S and the corresponding responses R, the mutual information is defined as H(R) − H(R|S), where H(R) is the information entropy of the response R and H(R|S) is the conditional entropy. In particular, for the transfer between nodes i and j, the mutual information entropy was estimated by the following equation

$$I(s_i; r_j) = H(s_i) + H(r_j) - H(s_i, r_j) \qquad (2)$$

where the entropies H(si) and H(rj) and the joint entropy H(si, rj) were calculated from the probabilities of each state si, rj ∈ {±1, 0}. Specifically, the entropy H(si) is defined as $H(s_i) = -\sum_{s_i \in \{\pm 1, 0\}} p(s_i) \log p(s_i)$, where p(si) is the probability of the state ±1 or 0 at the i-th node; H(rj) is defined by substituting rj for si. Likewise, H(si, rj) is calculated as $H(s_i, r_j) = -\sum_{s_i, r_j} p(s_i, r_j) \log p(s_i, r_j)$, where p(si, rj) is the joint probability of the combination of the si and rj states.
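
The quantity I(si; rj) = H(si) + H(rj) − H(si, rj) can be estimated from simulated trials by counting state frequencies. The sketch below is a plug-in estimator in Python; the logarithm base (2, giving bits) and the helper names are our assumptions, since the text specifies neither.

```python
import numpy as np
from collections import Counter

def entropy(counts) -> float:
    """Shannon entropy in bits of the empirical distribution given by counts."""
    c = np.asarray(list(counts), dtype=float)
    q = c[c > 0] / c.sum()
    return float(-(q * np.log2(q)).sum())

def mutual_information(s: np.ndarray, r: np.ndarray) -> float:
    """Plug-in estimate of I(s; r) = H(s) + H(r) - H(s, r) from paired samples."""
    h_s = entropy(Counter(s.tolist()).values())
    h_r = entropy(Counter(r.tolist()).values())
    h_sr = entropy(Counter(zip(s.tolist(), r.tolist())).values())
    return h_s + h_r - h_sr

s = np.array([1, -1, 0, 0] * 25)     # p(+1) = p(-1) = 1/4, p(0) = 1/2
print(mutual_information(s, s))      # response identical to the stimulus

s2 = np.array([1, 1, -1, -1])
r2 = np.array([0, 1, 0, 1])          # every state combination occurs equally often
print(mutual_information(s2, r2))
```

The first call returns 1.5 bits, the entropy of s itself, because the response copies the stimulus; the second returns 0 because the two states are statistically independent.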

Averaging Equation (2) over all the possible connections of each node gives the mutual information entropy for that node, and averaging this node-level quantity over all nodes gives the corresponding value for the whole network. In this study, we estimated the mutual information entropy according to this definition of the average. The optimal state with respect to information transfer is thus obtained by maximizing this quantity.
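
For the network-level average, a vectorized Python sketch is given below. It estimates I(si; rj) for all N × N node pairs at once from sample matrices of shape (trials, N), using the equivalent form I = Σ p(s, r) log2[p(s, r) / (p(s) p(r))], and then takes the mean over all pairs. Treating "all the possible connections" as all N × N pairs is our reading of the text, and the function names are hypothetical.

```python
import numpy as np

STATES = (-1, 0, 1)

def pairwise_mi(S: np.ndarray, R: np.ndarray) -> np.ndarray:
    """I(s_i; r_j) in bits for every pair (i, j), from (trials, N) sample matrices."""
    T = S.shape[0]
    # one-hot encoding of the three states: shape (T, N, 3)
    S1 = np.stack([S == v for v in STATES], axis=-1).astype(float)
    R1 = np.stack([R == v for v in STATES], axis=-1).astype(float)
    p_s = S1.mean(axis=0)                                  # p(s_i), shape (N, 3)
    p_r = R1.mean(axis=0)                                  # p(r_j), shape (N, 3)
    p_sr = np.einsum("tia,tjb->ijab", S1, R1) / T          # p(s_i, r_j), shape (N, N, 3, 3)
    prod = p_s[:, None, :, None] * p_r[None, :, None, :]   # p(s_i) p(r_j), same shape
    with np.errstate(divide="ignore", invalid="ignore"):
        # zero-probability cells contribute nothing to the sum
        terms = np.where(p_sr > 0, p_sr * np.log2(p_sr / prod), 0.0)
    return terms.sum(axis=(2, 3))

def network_mi(S: np.ndarray, R: np.ndarray) -> float:
    """Mean of I(s_i; r_j) over all N x N pairs (assumed form of the network average)."""
    return float(pairwise_mi(S, R).mean())

# toy example: 4 trials, 2 nodes, responses copied from the stimuli
S = np.array([[1, 1], [-1, -1], [1, -1], [-1, 1]])
R = S.copy()
print(pairwise_mi(S, R))
print(network_mi(S, R))
```

The toy example yields 1 bit on the diagonal (each response copies its own stimulus) and 0 off the diagonal (the two nodes' stimuli are independent), so the network average is 0.5. For N = 177, the joint-probability array has 177 × 177 × 9 entries, which fits easily in memory.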

Results

Mutual Information Entropy

We calculated the mutual information entropy according to the model of Equation (1) and the definition of Equation (2). To reduce noise and define the weight matrix (wij) and the adjacency matrix (aij), we introduced the cut-off threshold wt. To account for differences between individuals, we defined the threshold value wt based on the average connectivity ⟨|w|⟩ and the standard deviation σ|w| of each connectivity matrix. We calculated ⟨|w|⟩ and σ|w| and defined the cut-off threshold by

$$w_t = \langle |w| \rangle + n\,\sigma_{|w|} \qquad (3)$$

with a parameter n. Further, as explained in the previous section, to control the activation density of the input stimuli S, we introduced the activation probability p with which each node is randomly activated.
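
The per-subject threshold wt = ⟨|w|⟩ + n σ|w| can be sketched in Python as follows. Which matrix entries enter ⟨|w|⟩ and σ|w| is not specified in the text; here we assume the off-diagonal upper triangle (each undirected connection counted once) and the population standard deviation, and `cutoff_threshold` is a hypothetical name.

```python
import numpy as np

def cutoff_threshold(w: np.ndarray, n: float) -> float:
    """w_t = <|w|> + n * sigma_|w| for one connectivity matrix (hypothetical helper)."""
    iu = np.triu_indices_from(w, k=1)     # assumption: off-diagonal entries, each pair once
    abs_w = np.abs(w[iu])
    return float(abs_w.mean() + n * abs_w.std())   # std with ddof=0 (assumption)

w = np.array([[ 1.0,  0.6, -0.7],
              [ 0.6,  1.0,  0.2],
              [-0.7,  0.2,  1.0]])
for n in (-1.0, 0.0, 1.0):                # hypothetical grid of n values
    print(n, round(cutoff_threshold(w, n), 4))
```

Because wt is expressed in units of each subject's own σ|w|, a given n adapts to each individual's distribution of |w| rather than imposing one absolute cut-off on all 986 matrices.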

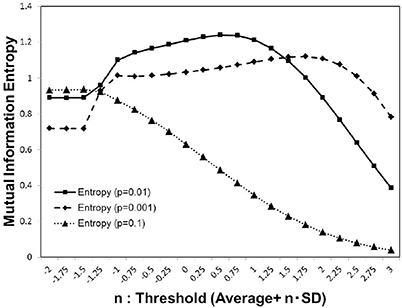

As shown in Figure 1, for each activation probability p = 0.1, 0.01, and 0.001, we estimated the average mutual information entropy for different cut-off threshold values defined by Equation (3). Comparing the peaks for these values of p, the maximum was recorded for p = 0.01, the medium density, while lower peak values were recorded in the other cases. The result in Figure 1, showing three different conditions, indicates that the density of signal activation is one of the major factors determining the efficiency of information processing.

Figure 1. Mutual information. We calculated the average value of the mutual information, Equation (2), using all 986 datasets of the functional connectivity matrices (Biswal et al., 2010; Brown et al., 2012). The threshold value was given by Equation (3), parameterized by n in units of the standard deviation. We used three different values of the activation probability, p = 0.001, 0.01, and 0.1, corresponding to the dashed, solid, and dotted lines, respectively. Each simulation was repeated 1,000 times with random input signals.

Largest Component Size and Phase Transition

To identify other factors that contribute to the increase in the mutual information entropy, we evaluated one of the basic measures of the network, the size of the largest connected component. Fragmentation of the connected network is expected to decrease the mutual information entropy, because information transfer between separated components is completely prohibited.

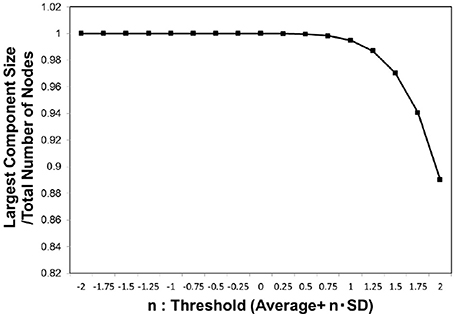

We then evaluated the largest component size against the threshold (Figure 2) for all individual datasets of the functional connectome. For the adjacency matrix obtained by applying the threshold values defined by Equation (3), we measured the size of the largest connected component. In this figure, the size is normalized by the total number of nodes, so that a value of 1 indicates a fully connected network.

Figure 2. Largest component size. We estimated the largest component size of the topological network representation obtained for the threshold values wt defined by Equation (3). The vertical axis indicates n, which parameterizes the threshold as in Equation (3). The largest component size on the horizontal axis was normalized by the total number of nodes, so that a value of 1 corresponds to a fully connected network. The values were averaged over all 986 datasets of the functional connectome (Biswal et al., 2010; Brown et al., 2012).

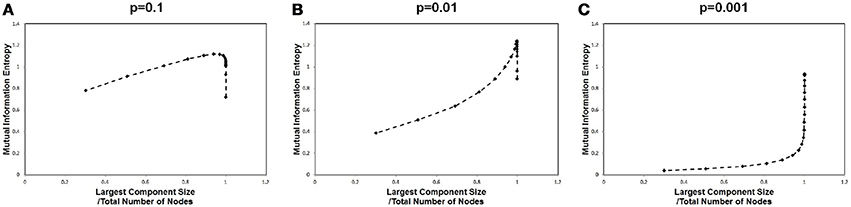

To identify the relation between the maximization in Figure 1 and the largest component size, we plotted the mutual information values against the corresponding largest component sizes for each cut-off threshold in Figure 3. A sharp peak with a discontinuous curve was observed for p = 0.01 (Figure 3B), whereas gradual changes appeared in the other cases, p = 0.1 and p = 0.001 (Figures 3A,C). The behavior in Figure 3B indicates that the largest component size is another major factor affecting the mutual information entropy. Further, it implies that the maximization of the mutual information is related to a phase transition in the topological structure. The phase transition observed in Figure 3B may be consistent with the argument that the brain operates near the critical state (Bak et al., 1987; Beggs and Plenz, 2003; Beggs, 2008; Kitzbichler et al., 2009; Chialvo, 2010; Tagliazucchi et al., 2012).

Figure 3. Mutual information and the largest component sizes. We plotted the mutual information value against the corresponding value of the largest component sizes. The mutual information data is the same as that in Figure 1, and the largest component sizes were taken from Figure 2 for each corresponding threshold value, where the largest component size was divided by the total number of nodes. (A) The estimated values for a high signal density (p = 0.1) are shown. (B) The estimated values for a medium signal density (p = 0.01) are shown. (C) The estimated values for a low signal density (p = 0.001) are shown.

This maximization might be explained by the criticality hypothesis (Bak et al., 1987; Beggs and Plenz, 2003; Beggs, 2008), which states that information transfer is maximized in the critical state. The critical state lies between the sub-critical state, with less activation, and the super-critical state, in which excess activation saturates the system. In the sub-critical state, activations die out owing to poor sensitivity to the stimulus, and signal transfer terminates quickly. In the super-critical state, by contrast, the system undergoes runaway excitation due to uncontrolled chain reactions. Information transmission is therefore expected to be maximized in the critical state. The results in Figure 3, showing the three different conditions, indicate that the medium density with p = 0.01 (Figure 3B) represents the critical state.

Small-World Topology and Phase Transition

The above results show that network topology is one of the factors contributing to the maximization of the mutual information entropy, and that this maximization is accompanied by a topological phase transition. To specify the relation between mutual information maximization and network topology, we investigated the behavior of the network topology around the critical point in greater detail.

Small-world topology is one of the common characteristics of complex networks and arises in the critical state between random and ordered networks (Watts and Strogatz, 1998; Newmann and Watts, 1999). Generally, it contributes to robust and efficient information transfer in various types of complex networks. The small-world architecture is considered relevant for understanding brain function, and empirical evidence supports this argument (Achard et al., 2006; Bassett and Bullmore, 2006; van den Heuvel et al., 2008; van den Heuvel and Sporns, 2011).

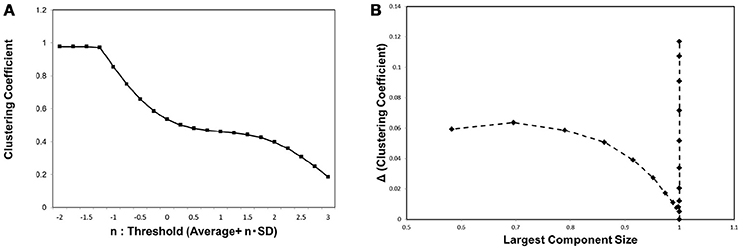

To characterize the behavior of the network topology around the critical point, we evaluated the clustering coefficient C. As explained in the Network Description subsection, this basic quantity is frequently used to characterize small-world networks, which exhibit relatively large clustering coefficients (Watts and Strogatz, 1998). Figure 4A shows the measured clustering coefficient. Around the critical point specified in Figure 3B, the clustering coefficient remains almost constant across threshold values. This stability agrees with the observation in the Watts-Strogatz model that the clustering coefficient is stable near the state of small-world topology (Watts and Strogatz, 1998). The small change in the clustering coefficient around the critical point shows that it retains relatively large values during this transition, which is consistent with the appearance of small-world topology around this critical point.

Figure 4. The clustering coefficient and the threshold. (A) We measured the clustering coefficient for each threshold value given by Equation (3). The average was calculated over all 986 datasets of the functional connectome (Biswal et al., 2010; Brown et al., 2012). (B) We plotted the changes in the clustering coefficient, ΔC, against the corresponding largest component sizes, as in Figure 3. The difference in the clustering coefficient was defined as ΔC = C(i) − C(i + 1), where i indexes the threshold values in panel (A), counted from the minimum value n = −2 (i = 0).

To provide further evidence for the relation between small-world topology and the phase transition, we measured the changes ΔC in this value and plotted them against the corresponding largest component size, as in Figure 3B. ΔC was defined as the difference in C between neighboring thresholds, ΔC(i) = C(i) − C(i + 1), for the i-th threshold value in our calculation. Exhibiting behavior similar to that in Figure 3B, the plot in Figure 4B identifies the critical point by a sharp peak at the same critical point as the mutual information entropy. Thus, there exists a phase transition in the network topology, which is responsible for the small-world feature, and we suggest that this phase transition contributes to the maximization of the mutual information entropy.
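
The difference ΔC(i) = C(i) − C(i + 1) is a one-line operation on the sequence of clustering coefficients ordered by threshold (i = 0 at the smallest n). The Python sketch below uses made-up values of C purely for illustration; `clustering_differences` is a hypothetical name.

```python
import numpy as np

def clustering_differences(C) -> np.ndarray:
    """Delta C(i) = C(i) - C(i + 1) for consecutive threshold values."""
    C = np.asarray(C, dtype=float)
    return C[:-1] - C[1:]                # equivalently -np.diff(C)

C = [0.50, 0.52, 0.53, 0.60]             # hypothetical C(i), i = 0 at the smallest n
dC = clustering_differences(C)
print(dC)
```

The result has one entry fewer than the threshold grid, so when ΔC is plotted against the largest component size, each ΔC(i) has to be paired with the size at one of the two thresholds it spans; which one the study used is not stated.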

Activation Pattern and the Self-Organized Criticality

In our model, Equation (1), the other factor that mainly contributes to information transfer is the connectivity strength wij. The distribution of wij is important for controlling the response, especially at hub nodes. At such a node j, the response to signals received from multiple sites i1, i2, … is determined by the combination of the corresponding weights $(w_{i_1 j}, w_{i_2 j}, \ldots)$. In these responses, highly weighted connections, which organize the core network in the brain, are dominant. The distribution of the connectivity strength is therefore another important factor that determines the efficiency of information transfer.

To describe the contribution of wij to the maximization of the mutual information entropy, we identified the statistical characteristics of wij around the critical point and clarified their relation to the criticality observed with the mutual information entropy. For this purpose, we assessed whether the distribution of wij obeys the prediction of self-organized criticality. In this state, characteristic scales are predicted to disappear, and the system behaves independently of scale (Bak et al., 1987). The emergence of a power law distribution is considered a typical characteristic of this state.

However, a straightforward application of the power law to the distribution of wij is precluded by the upper and lower limits of the correlation coefficient. We therefore used a distribution model derived from the power law and adapted to the restricted variable range (Takagi, 2010, 2017). In accordance with the restricted region |w| ≤ 1, we applied the power law to the variable , and obtained the expression , with a constant γ. Normalizing yields the expression of our distribution model,

To verify that the distribution follows this model, we assessed the quality of the distribution fit using the Kolmogorov-Smirnov (KS) distance (Clauset et al., 2009; Klaus et al., 2011). For the experimentally obtained cumulative distribution Pe(w) and the model distribution P(w) fitted to the data, the KS distance D is defined as

$$D = \max_{w} \left| P_e(w) - P(w) \right| \qquad (5)$$

which measures the maximum distance of the model from the experimental data.
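
The KS distance D = max |Pe(w) − P(w)| can be computed as in the Python sketch below. Since this sketch does not implement the distribution model of Equation (4), the example passes a uniform CDF on [0, 1] as a placeholder `model_cdf`; in the analysis described here, the model parameters would first be estimated by maximum likelihood. The empirical CDF is evaluated on both sides of each jump, which gives the standard two-sided KS statistic; the helper names are hypothetical.

```python
import numpy as np

def ks_distance(data, model_cdf) -> float:
    """D = max_w |P_e(w) - P(w)| between the empirical CDF of data and a model CDF."""
    x = np.sort(np.asarray(data, dtype=float))
    n = x.size
    F = model_cdf(x)                         # model CDF at each observation
    above = np.arange(1, n + 1) / n - F      # empirical CDF just after each jump
    below = F - np.arange(0, n) / n          # empirical CDF just before each jump
    return float(max(above.max(), below.max()))

def uniform_cdf(x):
    """Placeholder model: uniform distribution on [0, 1]."""
    return np.clip(x, 0.0, 1.0)

D = ks_distance([0.9, 0.1, 0.5], uniform_cdf)
print(D)
```

For this three-point sample, D = 7/30 ≈ 0.233, attained both just after 0.1 and just before 0.9. For a continuous model CDF, this two-sided form matches the one-sample statistic reported by `scipy.stats.kstest`.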

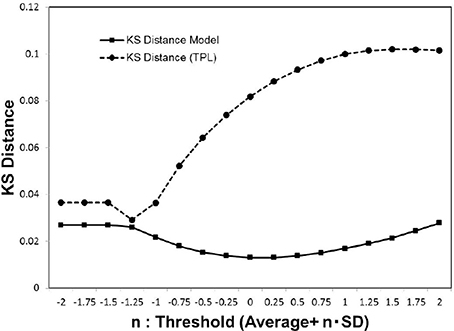

In Figure 5, we show the KS distance values for the noise-reduced weight matrix (wij), applying the cut-off threshold Equation (3). In the distribution fitting, the parameters of each distribution model were estimated by the maximum likelihood method. This was compared to the truncated power law, which is applied instead of the power law in most cases when the distribution has the upper limit (Achard et al., 2006). The exponentially truncated power law is described as , where α is a constant exponent, and xc is the truncation value or the cut-off. For the truncated power law, the maximum likelihood was estimated using R and the R-package brainwaver (http://cran.r-project.org/web/packages/brainwaver) (Achard et al., 2006).

Figure 5. Kolmogorov-Smirnov distance of the distribution models. We estimated the Kolmogorov-Smirnov (KS) distance for each cut-off threshold value. For the cumulative distribution P(|w|) of the experimental data wij satisfying |wij| > wt, the parameters of the truncated power law and of our model were estimated by the maximum likelihood method. We then computed the KS distance, Equation (5), for all datasets (Biswal et al., 2010; Brown et al., 2012) and calculated the averages.

As the plot in Figure 5 indicates, our model consistently yields lower values than the truncated power law model. Consequently, our model, Equation (4), provides a good fit to the distribution of (wij). Further, the sufficiently small value of its minimum distance indicates convergence to this distribution model.

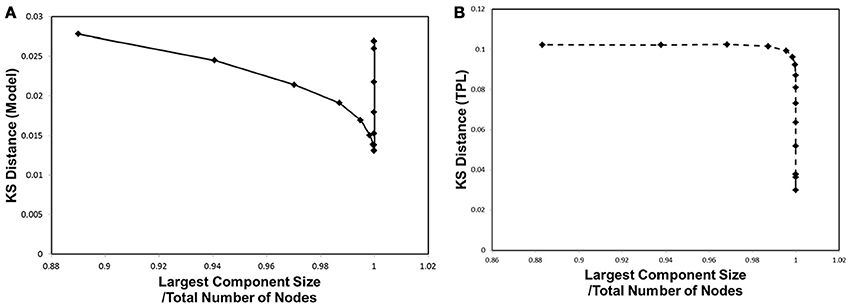

To relate these results to the phase transitions shown in Figures 3B and 4B, we combined Figures 2 and 5 into Figure 6, plotting the KS distance against the corresponding largest component size for each cut-off threshold. The result for our distribution model (Figure 6A) exhibits behavior similar to that in Figures 3B and 4B: the plot shows a sharp peak around the critical point, at which the phase transitions observed with the mutual information entropy and the topology appear.

Figure 6. Kolmogorov-Smirnov distance and phase transition. The Kolmogorov-Smirnov (KS) distance was plotted against the largest component size, as in Figures 3B and 4B. (A) The KS distance and the largest component size for our distribution model of wij. (B) The KS distance and the largest component size for the truncated power law distribution.

Compared with the result for the truncated power law distribution (Figure 6B), our model (Figure 6A) clearly exhibits the characteristics of a phase transition with a sharp peak, whereas no such peak is present for the truncated power law. In this figure, the distance for the truncated power law increases with wt, indicating that its deviation from the experimental data grows almost monotonically as noise is reduced. Our model, on the other hand, shows a decrease toward a minimum around the critical point. The sharp peak is a characteristic behavior observed only with our distribution model.

Discussion

Topology and Dynamics Patterns Under the Maximization of the Mutual Information Entropy

In this paper, we showed that maximization of the mutual information entropy in the large scale brain network induces small-world network topology and criticality in the activation dynamics. Our simulation results in Figure 3B indicate that the requirement for this maximization drives the network state to the critical point specified by the peak of this entropy.

Similar behavior was observed for the clustering coefficient (Figure 4B), indicating that the same mechanism induces a phase transition in the topological structure. This phase transition is responsible for the small-world topology, because small-world networks emerge in the transition between random and ordered networks (Watts and Strogatz, 1998). Further, the relation to the small-world topology is supported by our result in Figure 4A, which shows only a small change in the clustering coefficient around this point, indicating that the network retains relatively high transitivity there.
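The clustering coefficient used as a topology measure here can be illustrated with the following sketch. It computes the mean local clustering coefficient of an unweighted graph obtained by thresholding wij; whether Figure 4 uses exactly this unweighted definition or a weighted variant (Barrat et al., 2004) is not stated in this section, so that choice is an assumption, and the helper names are hypothetical. A ring lattice, the ordered end of the Watts-Strogatz construction, serves as a check.

```python
def threshold_graph(w, wt):
    """Adjacency sets of the unweighted graph keeping edges with |w_ij| > wt (hypothetical helper)."""
    n = len(w)
    return [{j for j in range(n) if j != i and abs(w[i][j]) > wt} for i in range(n)]


def average_clustering(adj):
    """Mean local clustering coefficient; nodes with fewer than two neighbours count as 0."""
    total = 0.0
    for nbrs in adj:
        k = len(nbrs)
        if k < 2:
            continue
        # Count edges among this node's neighbours, each unordered pair once.
        links = sum(1 for a in nbrs for b in nbrs if a < b and b in adj[a])
        total += 2.0 * links / (k * (k - 1))
    return total / len(adj)


def ring_lattice(n, k):
    """Ordered ring in which each node links to its k nearest neighbours on each side."""
    return [{(i + d) % n for d in range(-k, k + 1) if d != 0} for i in range(n)]


if __name__ == "__main__":
    print(average_clustering(ring_lattice(20, 2)))  # 0.5
```

For the ring lattice with four neighbours per node, three of the six neighbour pairs are linked, which is why the high clustering of the ordered end gives 0.5.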

In addition, this transition is accompanied by the emergence of self-organized criticality in the dynamics, as shown by the convergence of the coactivation pattern distribution to our distribution model (Figure 6A). Toward the critical point specified in Figures 3B and 4B, the distance between the empirical data and the distribution model, measured by the KS distance, decreased rapidly. The criticality of this state is supported by the fact that this distribution model was derived directly from the power law, one of the characteristic features of self-organized criticality.

These results support the view that the principle of mutual information maximization predominantly shapes the structural and functional aspects of the brain network. Thus, our results offer an explanation for the origin of these important attributes of the topology and dynamics of the functional connectome.

Criticality

Our results provide a unified perspective on the topological and functional aspects of the connectome under the concept of criticality. In Figure 1, we showed three different states, corresponding to the sub-critical state with low signals (p = 0.001), the critical state with medium signals (p = 0.01), and the super-critical state with high signals (p = 0.1). The criticality is shown explicitly in Figure 3B, in which the phase transition exhibits a sharp maximum of the mutual information. At this point, the mutual information entropy is maximized, and the optimal state with respect to information transfer therefore appears.
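The quantity maximized at the critical point can be illustrated with the standard definition of mutual information, I(X;Y) = H(X) + H(Y) - H(X,Y). The sketch below is a plug-in estimate, in bits, from paired samples of two discrete sequences, such as binarized node activity. It is not the signal transfer model of Equation (1), and representing node states as binary time series is an assumption made only for illustration; the function name is hypothetical.

```python
import math
from collections import Counter


def mutual_information(xs, ys):
    """Plug-in estimate of I(X;Y) in bits from paired discrete samples."""
    if len(xs) != len(ys) or not xs:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(xs)
    pxy = Counter(zip(xs, ys))
    px = Counter(xs)
    py = Counter(ys)
    mi = 0.0
    for (x, y), count in pxy.items():
        p = count / n
        # Sum of p(x,y) * log2( p(x,y) / (p(x) p(y)) ) over observed pairs.
        mi += p * math.log2(p / ((px[x] / n) * (py[y] / n)))
    return mi


if __name__ == "__main__":
    source = [0, 1] * 50
    print(mutual_information(source, source))                    # perfect relay
    print(mutual_information([0, 0, 1, 1] * 25, [0, 1, 0, 1] * 25))  # independent states
```

A perfect relay of a balanced binary signal carries one bit, while independent states carry none; the estimate is biased upward for short sequences, which matters when comparing sparse activity levels.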

This criticality in the mutual information entropy explains the origin of the small-world topology and of the criticality of the coactivation patterns. As represented in Equation (1), the information transfer depends on the topological structure, represented by the adjacency matrix (aij), and on the weight matrix of the connectivity strength (wij). As indicated by Figure 4B, the critical point of the clustering coefficient, one of the representative topology measures, coincides with that of the information entropy shown in Figure 3B. Thus, the maximization of the mutual information entropy induces the phase transition in the network topology. The resulting small-world network contributes to the efficiency of information transfer, because it contains hubs, or highly connected nodes, which shorten the path length between nodes.
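The claim that hubs shorten path lengths can be checked with the global efficiency of Latora and Marchiori (2001), the mean inverse shortest path length over all ordered node pairs. The following is a minimal sketch for unweighted graphs given as adjacency sets, using breadth-first search; the example graphs (a ring with and without one added hub) and the helper names are hypothetical illustrations, not data from this study.

```python
from collections import deque


def global_efficiency(adj):
    """Mean of 1/d_ij over ordered pairs i != j; unreachable pairs contribute 0."""
    n = len(adj)
    if n < 2:
        return 0.0
    total = 0.0
    for source in range(n):
        # Breadth-first search gives hop distances from this source.
        dist = {source: 0}
        queue = deque([source])
        while queue:
            u = queue.popleft()
            for v in adj[u]:
                if v not in dist:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        total += sum(1.0 / d for node, d in dist.items() if node != source)
    return total / (n * (n - 1))


def ring(n):
    """Cycle graph: each node linked to its two immediate neighbours."""
    return [{(i - 1) % n, (i + 1) % n} for i in range(n)]


def add_hub(adj):
    """Copy of adj with one extra node connected to every existing node."""
    hub = len(adj)
    new = [set(nbrs) | {hub} for nbrs in adj]
    new.append(set(range(hub)))
    return new


if __name__ == "__main__":
    print(global_efficiency(ring(20)), global_efficiency(add_hub(ring(20))))
```

Adding a single hub caps every shortest path at two steps, so efficiency rises even though the hub itself is the kind of node that may also collect excess signals.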

However, these hubs, which have a relatively large number of connections, can also have the opposite effect of inhibiting efficient communication. The signal transfer model, Equation (1), implies that excess signals simultaneously reaching a single hub node confuse the transfer and produce noise. We expect that, in the presence of this noise, the connectivity weights extract the important signals and thereby control the information transfer while avoiding confusion. The similar behavior observed in Figures 3B and 6A implies that the requirement for maximizing the mutual information entropy affects the distribution of the connectivity strength wij, which converges to the model indicating the critical state. These results support the argument that the criticality of the connectivity strength originates in that of the information transfer.

Thus, the maximization of the mutual information entropy explains the origin of both the phase transition in the topology and the criticality in the coactivation patterns. Although these two important attributes of the brain have been established separately, they are directly related through this maximization. These findings provide a unified perspective on self-organized criticality and small-world topology, under a mechanism driven by the maximization of the mutual information entropy.

Biological Constraint

Our findings also reveal the contribution of biological constraints to the mechanism regulating information transfer. We specified the critical point by the sharp peaks in Figures 3B, 4, and 6A. In these figures, the largest component size ratio of the network indicates that, at this point, the network structure undergoes a phase transition from the fully connected state to a fragmented one containing isolated components (Takagi, 2017). This state is relevant for maintaining brain activity, because the fully connected structure might allow the integration of signals (Tononi et al., 1994; Bassett and Bullmore, 2006; Bassett et al., 2006; Kitzbichler et al., 2009; Sporns, 2013) from functionally specialized regions in the brain (Tononi et al., 1994; Hilgetag and Grant, 2000; Sporns, 2013). Therefore, the fully connected network might be a minimum requirement for the integrated function of the brain (Tononi et al., 1994; Bassett and Bullmore, 2006; Hagmann et al., 2008; Sporns, 2013), and our result suggests that the brain network satisfies this constraint.

On the other hand, the same figures (Figures 3B, 4, and 6A) indicate that this criticality is obtained by removing excess connections under the above constraint of integration. At this critical point, the integrated, fully connected topology is preserved with the minimal number of connections: lower thresholds retain excess connections, whereas higher thresholds fragment the network. From the point of view of economic energy expenditure (Achard and Bullmore, 2006; Bassett and Bullmore, 2006; Chen et al., 2006; Bassett et al., 2010; Bullmore and Sporns, 2012), suppressing excess connections reduces both the wiring cost of the network and the biological energy consumption associated with its activity. Our results imply that this cost-effective state, which preserves function, is realized at the critical point (Takagi, 2017). We suggest that the requirements for reducing energy consumption and preserving the integrated state act as biological constraints that determine the optimal state of the brain network.
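The highest cut-off at which the network remains fully connected can be located without sweeping thresholds. The sketch below relies on a standard graph fact rather than on the procedure used in this study: with edges kept when |wij| > wt, the thresholded graph is connected exactly when wt is below the smallest edge weight on a maximum spanning tree of |wij|, which Kruskal's algorithm finds. It also counts the edges that survive just below that value, the sparsest fully connected network in the sweep. The function name, return format, and example matrix are hypothetical.

```python
import math


def connectivity_threshold(w):
    """Return (b, kept): edges with |w_ij| > wt form a connected graph iff wt < b;
    kept counts edges with |w_ij| >= b, i.e. those surviving just below b."""
    n = len(w)
    if n < 2:
        return math.inf, 0
    parent = list(range(n))

    def find(i):
        # Union-find root lookup with path halving.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    # Kruskal's algorithm on |w| in decreasing order builds a maximum spanning tree.
    edges = sorted(
        ((abs(w[i][j]), i, j) for i in range(n) for j in range(i + 1, n)),
        reverse=True,
    )
    bottleneck = math.inf
    merges = 0
    for weight, i, j in edges:
        ri, rj = find(i), find(j)
        if ri != rj:
            parent[ri] = rj
            bottleneck = weight  # decreasing order, so this is the smallest tree edge so far
            merges += 1
            if merges == n - 1:
                break
    kept = sum(1 for weight, _, _ in edges if weight >= bottleneck)
    return bottleneck, kept


if __name__ == "__main__":
    w = [
        [0.0, 0.9, 0.2, 0.1],
        [0.9, 0.0, 0.5, 0.1],
        [0.2, 0.5, 0.0, 0.05],
        [0.1, 0.1, 0.05, 0.0],
    ]
    print(connectivity_threshold(w))
```

Raising wt to the returned bottleneck disconnects at least one node, which is the fragmentation side of the transition described above.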

Concluding Remarks

The results of this study support the argument that the brain network is optimized for information processing. This study suggests the principle and the constraint underlying the mechanism of information transfer in the brain network. Specifically, our results suggest that, under the constraint of preserving a fully connected network structure, reducing energy consumption and maximizing information transfer are the principles governing the topological and functional aspects of the brain network. Thus, our results provide insight into the mechanism of information processing in the brain.

Based on the simulation presented here, we describe the dynamics of the brain network in response to the activation probability and the connectivity threshold, which are the major factors affecting the mutual information entropy. Our conclusion is consistent with empirical observations, such as the small-world topology and the criticality of the brain network, which are widely supported by various experimental and simulation results. However, how the requirement for optimization of information processing affects network development in real brains remains unknown.

Author Contributions

KT designed the study, conducted the simulations and data analyses, and wrote the manuscript.

Conflict of Interest Statement

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The reviewer YL and the handling Editor declared their shared affiliation.

Acknowledgments

We would like to thank Editage (www.editage.jp) for English language editing. We also thank the reviewers, whose comments helped improve and clarify this manuscript.

References

Achard, S., and Bullmore, E. (2006). Efficiency and cost of economical brain functional networks. PLoS Comput. Biol. 3:e17. doi: 10.1371/journal.pcbi.0030017

Achard, S., Salvador, R., Whitcher, B., Suckling, J., and Bullmore, E. (2006). A resilient, low-frequency, small-world human brain functional network with highly connected association cortical hubs. J. Neurosci. 26, 63–72. doi: 10.1523/JNEUROSCI.3874-05.2006

Albert, R., Jeong, H., and Barabási, A. (2000). Error and attack tolerance of complex networks. Nature 406, 378–382. doi: 10.1038/35019019

Bak, P., Tang, C., and Wiesenfeld, K. (1987). Self-organized criticality: an explanation of 1/f noise. Phys. Rev. Lett. 59, 381–384. doi: 10.1103/PhysRevLett.59.381

Barrat, A., Barthélemy, M., Pastor-Satorras, R., and Vespignani, A. (2004). The architecture of complex weighted networks. Proc. Natl. Acad. Sci. U.S.A. 101, 3747–3752. doi: 10.1073/pnas.0400087101

Bassett, D. S., and Bullmore, E. (2006). Small-world brain networks. Neuroscientist 12, 512–523. doi: 10.1177/1073858406293182

Bassett, D. S., Greenfield, D. L., Meyer-Lindenberg, A., Weinberger, D. R., Moore, S. W., Bullmore, E. T., et al. (2010). Efficient physical embedding of topologically complex information processing networks in brains and computer circuits. PLoS Comput. Biol. 6:e1000748. doi: 10.1371/journal.pcbi.1000748

Bassett, D. S., Meyer-Lindenberg, A., Achard, S., Duke, T., and Bullmore, E. (2006). Adaptive reconfiguration of fractal small-world human brain functional networks. Proc. Natl. Acad. Sci. U.S.A. 103, 19518–19523. doi: 10.1073/pnas.0606005103

Beggs, J. (2008). The criticality hypothesis: how local cortical networks might optimize information processing. Philos. Trans. A Math. Phys. Eng. Sci. 366, 329–343. doi: 10.1098/rsta.2007.2092

Beggs, J. M., and Plenz, D. (2003). Neuronal avalanches in neocortical circuits. J. Neurosci. 23, 11167–11177. doi: 10.1523/JNEUROSCI.23-35-11167.2003

Biswal, B. B., Mennes, M., Zuo, X.-N., Gohel, S., Kelly, C., Smith, S. M., et al. (2010). Toward discovery science of human brain function. Proc. Natl. Acad. Sci. U.S.A. 107, 4734–4739. doi: 10.1073/pnas.0911855107

Bressler, S. L., and Menon, V. (2010). Large-scale brain networks in cognition: emerging methods and principles. Trends Cogn. Sci. 14, 277–290. doi: 10.1016/j.tics.2010.04.004

Brown, J. A., Rudie, J. D., Bandrowski, A., Van Horn, J. D., and Bookheimer, S. Y. (2012). The UCLA multimodal connectivity database: a web-based platform for brain connectivity matrix sharing and analysis. Front. Neuroinform. 6:28. doi: 10.3389/fninf.2012.00028

Bullmore, E. T., and Bassett, D. S. (2010). Brain graphs: Graphical models of the human brain connectome. Annu. Rev. Clin. Psychol. 7, 113–140. doi: 10.1146/annurev-clinpsy-040510-143934

Bullmore, E., and Sporns, O. (2012). The economy of brain network organization. Nat. Rev. Neurosci. 13, 336–349. doi: 10.1038/nrn3214

Chen, B. L., Hall, D. H., and Chklovskii, D. B. (2006). Wiring optimization can relate neuronal structure and function. Proc. Natl. Acad. Sci. U.S.A. 103, 4723–4728. doi: 10.1073/pnas.0506806103

Clark, A. (2013). Whatever next? Predictive brains, situated agents, and the future of cognitive science. Behav. Brain Sci. 36, 181–204. doi: 10.1017/S0140525X12000477

Clauset, A., Shalizi, C., and Newman, M. (2009). Power-law distributions in empirical data. SIAM Rev. 51, 661–703. doi: 10.1137/070710111

Eguíluz, V. M., Chialvo, D. R., Cecchi, G. A., Baliki, M., and Apkarian, A. (2005). Scale-free brain functional networks. Phys. Rev. Lett. 94:018102. doi: 10.1103/PhysRevLett.94.018102

Fox, M. D., and Raichle, M. E. (2007). Spontaneous fluctuations in brain activity observed with functional magnetic resonance imaging. Nat. Rev. Neurosci. 8, 700–711. doi: 10.1038/nrn2201

Fox, M. D., Snyder, A. Z., Vincent, J. L., Corbetta, M., Van Essen, D. C., and Raichle, M. E. (2005). The human brain is intrinsically organized into dynamic, anticorrelated functional networks. Proc. Natl. Acad. Sci. U.S.A. 102, 9673–9678. doi: 10.1073/pnas.0504136102

Friston, K. (2010). The free-energy principle: a unified brain theory? Nat. Rev. Neurosci. 11, 127–138. doi: 10.1038/nrn2787

Ghazanfar, A. A., and Schroeder, C. E. (2006). Is neocortex essentially multisensory? Trends Cogn. Sci. 10, 278–285. doi: 10.1016/j.tics.2006.04.008

Gray, C. M., König, P., Engel, A. K., and Singer, W. (1989). Oscillatory responses in cat visual cortex exhibit inter-columnar synchronization which reflects global stimulus properties. Nature 338, 334–337. doi: 10.1038/338334a0

Greicius, M. D., Supekar, K., Menon, V., and Dougherty, R. F. (2009). Resting-state functional connectivity reflects structural connectivity in the default mode network. Cereb. Cortex 19, 72–78. doi: 10.1093/cercor/bhn059

Hagmann, P., Cammoun, L., Gigandet, X., Meuli, R., Honey, C. J., Wedeen, V. J., et al. (2008). Mapping the structural core of human cerebral cortex. PLoS Biol. 6:e159. doi: 10.1371/journal.pbio.0060159

Hilgetag, C. C., and Grant, S. (2000). Uniformity, specificity and variability of corticocortical connectivity. Philos. Trans. R. Soc. Lond. B Biol. Sci. 355, 7–20. doi: 10.1098/rstb.2000.0546

Hlinka, J., Palus, M., Vejmelka, M., Mantini, D., and Corbetta, M. (2011). Functional connectivity in resting-state fMRI: is linear correlation sufficient? NeuroImage 54, 2218–2225. doi: 10.1016/j.neuroimage.2010.08.042

Honey, C. (2010). Can structure predict function in the human brain? NeuroImage 52, 766–776. doi: 10.1016/j.neuroimage.2010.01.071

Honey, C. J., Sporns, O., Cammoun, L., Gigandet, X., Thiran, J. P., Meuli, R., et al. (2009). Predicting human resting-state functional connectivity from structural connectivity. Proc. Natl. Acad. Sci. U.S.A. 106, 2035–2040. doi: 10.1073/pnas.0811168106

Kanwisher, N., McDermott, J., and Chun, M. (1997). The fusiform face area: a module in human extrastriate cortex specialized for face perception. J. Neurosci. 17, 4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997

Kitzbichler, M. G., Smith, M. L., Christensen, S. R., and Bullmore, E. (2009). Broadband criticality of human brain network synchronization. PLoS Comput. Biol. 5:e1000314. doi: 10.1371/journal.pcbi.1000314

Klaus, A., Yu, S., and Plenz, D. (2011). Statistical analyses support power law distributions found in neuronal avalanches. PLoS ONE 6:e19779. doi: 10.1371/journal.pone.0019779

Latora, V., and Marchiori, M. (2001). Efficient behavior of small-world networks. Phys. Rev. Lett. 87:198701. doi: 10.1103/PhysRevLett.87.198701

Linsker, R. (1988). Self-organization in a perceptual network. Computer 21, 105–117. doi: 10.1109/2.36

Linsker, R. (1990). Perceptual neural organisation: some approaches based on network models and information theory. Annu. Rev. Neurosci. 13, 257–281. doi: 10.1146/annurev.ne.13.030190.001353

Menon, V., and Uddin, L. (2010). Saliency, switching, attention and control: a network model of insula function. Brain Struct. Funct. 214, 655–667. doi: 10.1007/s00429-010-0262-0

Mnih, V., Kavukcuoglu, K., Silver, D., Rusu, A. A., Veness, J., Bellemare, M. G., et al. (2015). Human-level control through deep reinforcement learning. Nature 518, 529–533. doi: 10.1038/nature14236

Newman, M., and Watts, D. (1999). Renormalization group analysis of the small-world network model. Phys. Lett. A 263, 341–346. doi: 10.1016/S0375-9601(99)00757-4

Park, H. J., and Friston, K. (2013). Structural and functional brain networks: from connections to cognition. Science 342:1238411. doi: 10.1126/science.1238411

Shmuel, A., Augath, M., Oeltermann, A., and Logothetis, N. (2006). Negative functional MRI response correlates with decreases in neuronal activity in monkey visual area V1. Nat. Neurosci. 9, 569–577. doi: 10.1038/nn1675

Sporns, O. (2002). Network analysis, complexity, and brain function. Complexity 8, 56–60. doi: 10.1002/cplx.10047

Sporns, O. (2013). Network attributes for segregation and integration in the human brain. Curr. Opin. Neurobiol. 23, 162–171. doi: 10.1016/j.conb.2012.11.015

Sporns, O., Tononi, G., and Kötter, R. (2005). The human connectome: A structural description of the human brain. PLoS Comput. Biol. 1:e42. doi: 10.1371/journal.pcbi.0010042

Tagliazucchi, E., Balenzuela, P., Fraiman, D., and Chialvo, D. (2012). Criticality in large-scale brain fMRI dynamics unveiled by a novel point process analysis. Front. Physiol. 3:15. doi: 10.3389/fphys.2012.00015

Takagi, K. (2010). Scale free distribution in an analytical approach. Physica A 389, 2143–2146. doi: 10.1016/j.physa.2010.01.034

Takagi, K. (2017). A distribution model of functional connectome based on criticality and energy constraints. PLoS ONE 12:e0177446. doi: 10.1371/journal.pone.0177446

Tononi, G., Sporns, O., and Edelman, G. (1994). A measure for brain complexity: relating functional segregation and integration in the nervous system. Proc. Natl. Acad. Sci. U.S.A. 91:5033.

Uddin, L. Q., Kelly, A. M., Biswal, B. B., Castellanos, F. X., and Milham, M. P. (2009). Functional connectivity of default mode network components: correlation, anticorrelation, and causality. Hum. Brain Mapp. 30, 625–637. doi: 10.1002/hbm.20531

van den Heuvel, M. P., and Hulshoff Pol, H. E. (2010). Exploring the brain network: a review on resting-state fMRI functional connectivity. Eur. Neuropsychopharmacol. 20, 519–534. doi: 10.1016/j.euroneuro.2010.03.008

van den Heuvel, M. P., and Sporns, O. (2011). Rich-club organization of the human connectome. J. Neurosci. 31, 15775–15786. doi: 10.1523/JNEUROSCI.3539-11.2011

van den Heuvel, M. P., Stam, C. J., Boersma, M., and Hulshoff Pol, H. E. (2008). Small world and scale-free organization of voxel based resting-state functional connectivity in the human brain. Neuroimage 43, 528–539. doi: 10.1016/j.neuroimage.2008.08.010

Van Dijk, K. R., Hedden, T., Venkataraman, A., Evans, K. C., Lazar, S. W., and Buckner, R. L. (2010). Intrinsic functional connectivity as a tool for human connectomics: theory, properties, and optimization. J. Neurophysiol. 103, 297–321. doi: 10.1152/jn.00783.2009

Watts, D. J., and Strogatz, S. H. (1998). Collective dynamics of 'small-world' networks. Nature 393, 440–442. doi: 10.1038/30918

Keywords: functional connectome, information processing, mutual information, phase transition, self-organized criticality, small-world network

Citation: Takagi K (2018) Information-Based Principle Induces Small-World Topology and Self-Organized Criticality in a Large Scale Brain Network. Front. Comput. Neurosci. 12:65. doi: 10.3389/fncom.2018.00065

Received: 19 February 2018; Accepted: 19 July 2018; Published: 07 August 2018.

Edited by:

Xiaoli Li, Beijing Normal University, China

Copyright © 2018 Takagi. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Kosuke Takagi, Y291dGFrYWdpQG1zZS5iaWdsb2JlLm5lLmpw
