- Laboratory of Soil Ecology and Microbiology, University of Rhode Island, Kingston, RI, United States

Traditional passive approaches to teaching, such as lectures, are not particularly effective at promoting student learning, or at developing the qualities that employers seek in graduates from soil science programs, such as problem-solving and critical thinking skills. In contrast, active learning approaches have been shown to promote these very qualities in students. Here, I discuss my use of active learning approaches to teach soil science at the introductory and advanced levels, with particular focus on problem-based learning (PBL), and combined just-in-time teaching (JITT) and peer instruction (PI). A brief description of each pedagogical approach is followed by evidence of its impact on student learning in general and, when available, its use in soil science courses. I describe and discuss my experiences using these approaches to teach introductory soil science (face-to-face and online), soil chemistry, and soil microbiology courses, and provide examples of some of the problems I use. I have found that the benefits to student learning in terms of student engagement, ownership of learning, and development of critical thinking and problem-solving skills are clear relative to traditional, passive approaches to teaching, and easily outweigh the additional effort required.

Introduction

Soil science courses have been traditionally taught in lecture format, in which the professor imparts knowledge to the students. For example, over half of introductory soil science courses in the US are taught using a strictly lecture format (Jelinski et al., 2019). This learning approach—in which students passively receive knowledge from an instructor—is not unique to soil science: it is how science courses have been taught for centuries.

Lecturing originated thousands of years ago, with the oral tradition of knowledge dissemination before development of the written word. Following the invention of writing, knowledge resided in books whose availability was limited because they had to be copied by hand. Given this constraint, lecturing was an effective way for transmission of knowledge: the lecturer—also known as a reader—read from a book that contained the knowledge to be transmitted, and the students wrote down this information, essentially creating their own hand-written book (Schmidt et al., 2015). Although the invention of the moveable type printing press in the fifteenth century greatly expanded the availability and affordability of books—obviating the need for lecturing—lecturing as a method of instruction in college education has, for better or worse, survived for over a thousand years.

The lecture has persisted in part for financial and logistical reasons: it allows face-to-face teaching of a large number of students with minimal institutional investment in personnel and infrastructure. The “sage on the stage” approach can be engaging, and can be an effective medium to explain difficult concepts, provided the instructor is sufficiently charismatic and adept at explaining (Schmidt et al., 2015). Nevertheless, lecturing is not particularly effective in promoting student learning. For example, students can learn as much (Costin, 1972) or more (Corey, 1934) from reading a textbook than from hearing the same material delivered in a lecture for the same amount of time. Furthermore, because it is a passive approach that tends to preclude questioning and the exchange of ideas among students and instructor, the exclusive use of lecturing does not allow for the development of critical thinking skills, one of the qualities that a liberal education is supposed to promote in students. Because students in lecture courses tend not to take ownership of their learning, lecturing also fails to promote life-long learning, an important trait for success in an ever-changing professional milieu.

Introductory soil science courses generally have a separate laboratory section that does not fare much better than the lecture in terms of passivity. Approximately 92% of introductory soil science courses in the US have a laboratory component (Jelinski et al., 2019). The traditional introductory soil science laboratory requires that the students follow a series of prescribed steps to achieve a result about the properties of a soil sample, such as structure, organic matter, pH, bulk density, or particle size distribution. The lab manual is a sort of cookbook with recipes that, if followed properly, produce the correct result. The field component of the laboratory section generally involves listening to the instructor describe landscapes and soil profiles in the context of soil genesis, classification, and land use. This approach leaves little room for active learning by, for example, applying the methods learned to testing hypotheses about what drives differences in soil properties at different spatial scales.

This passive approach to soil science education has consequences for the employability of our students. In a survey conducted in 2008 by the Soil Science Society of America, employers expressed dissatisfaction with soil science education, including the lack of field experience, poor written and verbal communication skills, and lack of critical thinking skills (Havlin et al., 2010). Furthermore, their comments indicated that the lack of problem-solving skills stemmed from lack of rigor or integration of knowledge within the curriculum (Havlin et al., 2010). Similar observations about the academic training of soil scientists have been reported in Australia (Field et al., 2011).

Soil science faculty around the globe have, over the past few decades, realized the need to revamp the curriculum to better prepare students for professional careers in soil science (e.g., Baveye et al., 2006; Hartemink and McBratney, 2008; Field et al., 2011; Jimenez et al., 2019; Jelinski et al., 2019). In the US, Jelinski et al. (2019) found that 44% of introductory soil science courses used teaching approaches that involved active learning environments. Among the innovative, active learning approaches to soil science education at the college level are the use of case studies in soil biogeochemistry (Duckworth and Harrington, 2018), problem-based learning in introductory soil science (Amador and Görres, 2004), jigsaw exercises (in which students learn part of an assignment and come together to share the information to complete the “jigsaw puzzle”; Daigh and Motschenbacher, 2017), integrating active learning strategies in combined lectures and labs (i.e., studio approach) (Andrews and Frey, 2015), and drive-thru labs in which the instructor demonstrates a process, uses examples to discuss soil properties, or teaches a procedure to a small group of students (Abit et al., 2018).

Here I describe some of the active learning approaches that I have used to teach soil science at the college level. I include my experiences using problem-based learning (PBL) to teach introductory soil science (face-to-face and online), as well as soil microbiology and soil chemistry courses. I also describe how I have used just-in-time teaching (JITT) and peer instruction (PI) in combination with traditional lectures to teach introductory soil science. For each pedagogical approach I provide a brief description, information on its pedagogical underpinnings and on its adoption and effectiveness, and an account of how I have used it in my courses.

Problem-Based Learning

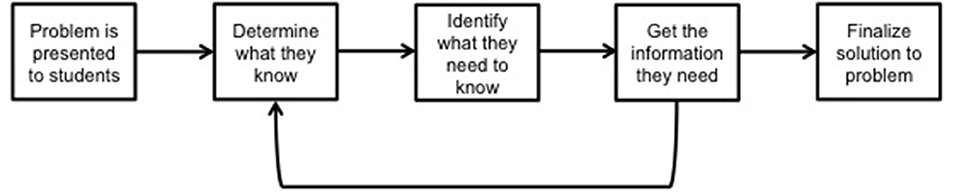

In a course using PBL, students work in permanent groups to develop solutions to authentic, open-ended problems. They learn the course content as they solve the problems. Problem-based learning involves a recursive series of steps, well-described by Boud and Feletti (1997) and summarized in Figure 1.

Problem-based learning is based on constructivist theories of learning, which argue that learning is an active process and requires that the learner construct knowledge. The constructed knowledge is linked to prior knowledge, which allows for continued revision of a person's understanding of the world around her. Constructivism draws from the ideas on learning of John Dewey and Jean Piaget, as well as William James, a pragmatist who contended that the value of an idea depends on its usefulness in the practical world, among others (Yilmaz, 2008).

The positive effects of PBL include improved learning (Norman and Schmidt, 1992), better student attitude and clinical performance (Vernon and Blake, 1993), promotion of life-long, self-directed learning (Candy, 1991; Vernon and Blake, 1993; Spencer and Jordan, 1999), and improved critical thinking skills (Tiwari et al., 2006). Among the negative aspects of PBL are resistance from students, the extra time it takes to develop effective problems and assessments, and difficulties, on the part of both students and faculty, with the shift in classroom power dynamic from “sage on the stage” to “guide on the side” (Amador et al., 2007). Although it would appear that PBL would be effective to teach only a limited number of students, it has been used successfully to teach courses with hundreds of students (Amador et al., 2007).

Problem-based learning has been used successfully to teach courses in a wide variety of fields, including nursing (Glen and Wilkie, 2017), medicine (Barrows, 1996), introductory science (Allen et al., 1996), writing (Pennell and Miles, 2009; Amador and Miles, 2016), hydrology (Lyon and Teutschbein, 2011), humanities (Hutchings and O'Rourke, 2002), and social sciences (Amador et al., 2007). A number of medical schools use PBL exclusively to train students, and students taught using the PBL curriculum demonstrate equal or better professional competencies than those taught regular curricula (Neville, 2009). Reports on the use of PBL to teach soil science are limited. Strivelli et al. (2011) successfully introduced a PBL component to a soil management course, and Amador and Görres (2004) developed an introductory soil science course that relied exclusively on PBL.

I have previously taught Introduction to Soil Science, a required course, to ~50 first- and second-year students, and currently teach Soil Microbiology and Soil Chemistry, both elective courses, to ~25 third- and fourth-year and graduate students, using PBL exclusively. The integrative nature of soil science as a discipline, which draws knowledge from a wide variety of scientific fields, fits well with the authentic, open-ended, ill-defined nature of the problems and the collaborative aspects of PBL, making it an effective approach to teach soil science courses.

The general structure of my PBL courses is similar for all of them. Students work in permanent groups of four or five, chosen at random, to develop solutions to real, open-ended problems over the course of the semester. The permanence of groups minimizes the time needed to develop effective group dynamics, which are disrupted by the formation of new groups. Students learn the course content as they gather information and integrate it into their solutions. For each part of a problem, they go through the PBL cycle (Figure 1), which involves students figuring out: (i) what they already know that can help them with a solution, (ii) what else they need to know to develop a solution, and (iii) how they will acquire the necessary information. The students discuss and integrate the new information into a solution as they deem appropriate and repeat the cycle until they are satisfied they have a reasonable solution.

Class Structure

Students go through the PBL cycle during the scheduled class time. In a typical sequence, I give them a paper copy of the first part of a problem and ask one student to read it aloud so that they can all start on the same page. I then ask them to take 15 to 20 min to develop two lists: one of what they know and how each item is relevant to solving the problem, and a second of what they need to know and how that is relevant to solving the problem. I circulate among the groups to eavesdrop on their discussions. One person from each group presents the two lists, and the rest of the class and I ask questions. As new groups present their lists, I ask them to focus on what is new to the discussion to avoid repetition. After all the groups have presented, they reconvene to consider the feedback they got from their fellow students and me and what they learned from the other groups, and to figure out who will be finding out what before class meets again. Researching what they need to know takes place outside of class time.

At the beginning of the next class, I ask students to discuss the information they've found within their group, integrating this with the information they knew to develop a solution. One student from each group presents the group's solution to the rest of the class, followed by questions and whole class discussion. If the students (and I) think a reasonable solution has been reached, we move on to the next part of the problem. Otherwise, we go through another PBL cycle.

Writing Problems

One of the hallmarks of PBL is the use of authentic, open-ended problems for which there is no unique solution. The open-ended nature of the problems distinguishes PBL from other pedagogical approaches that have a single correct answer, such as case studies. Open-ended problems mimic the kinds of problems students will encounter in their professional careers: they are messy and ill-defined, and the information necessary to develop a solution is often incomplete, unavailable, and/or erroneous. This type of problem can be developed from one's own experiences as a soil scientist and researcher, adapted from existing problems, such as end-of-chapter problems in a textbook, or written from scratch. A detailed account of problem design can be found in Amador et al. (2007).

An example of one of the problems I use in my soil microbiology course can be found in Supplementary Table 1. The problem is loosely based on my own experience as a consultant on a bioremediation project. The questions at the end of Part 1 are meant to help students focus their attention on the important aspects of the problem. In addition to the problem being authentic, open-ended, and ill-defined, there are a few more things to note in this example: (i) the student is the main character in the problem, because people respond to problems better when they can “see” themselves in a situation; (ii) the action in the story moves along to keep the students' attention; (iii) the Goldilocks rule applies, in that the problem is challenging, but not so hard that the students believe they cannot solve it, and not so easy that it is readily solvable; and (iv) humor makes the problem more approachable. After all, how difficult can something be if it's funny?

Assessment

Assessment in my face-to-face PBL courses comes at the end of the problem, when the students have developed a proposed solution. Different types of assessment are involved, depending on the course:

1. Presentations. In upper-level courses students make presentations at the end of every problem. The group works on the presentation outside class time and one person from each group makes the ~10 min presentation to the whole class describing their understanding of the problem, their solutions, what they were able to conclude, and remaining unsolved matters. This is followed by a discussion period in which the whole group is expected to participate in answering questions. I grade the presentation using a rubric that addresses the group's understanding of the problem, the soundness of their arguments, the group's ability to field questions, and the effectiveness of the visual aids. The grade for the presentation is shared by the whole group.

2. Exams. In the upper-level courses, students complete a take-home exam that tests their mastery of the concepts learned while solving the problem. They answer the exam individually. Once they are finished with the individual exam, they meet in their groups during class time to answer the same exam as a group. The individual exam counts for 80% of the exam grade; the group exam accounts for the remaining 20%. In the introductory course, students take an in-class, multiple-choice exam during the first half of the class period, for which they are allowed to use notes, but not the textbook, to answer the questions. Once they turn in their answers, they are given the answer key and, for half credit, are asked to explain why their incorrect answers are wrong. They are allowed to use the textbook during this second part of the exam.

3. Synthesis paper. In both introductory and upper-level courses one student from each group takes the lead in writing a paper in which they explain the group's understanding of the problem, their solutions to each part of the problem, and identify matters that remained unresolved. After I grade the paper and provide them with comments, they can revise and resubmit for a better grade. The grade for the paper is the same for all group members.

4. Peer evaluations. One of the fears of students working in groups is free-riders, who contribute little or nothing to group projects yet reap the same benefits as the group members who did the work (Maiden and Perry, 2011). I address this by having students anonymously evaluate each other's performance as group members. Students are provided with a copy of the peer evaluation form at the beginning of the course. The peer evaluation, adapted from Kitto and Griffiths (2001), produces a score between 0.7 and 1.1. This value is used as a multiplier to adjust the grade an individual actually receives for the group presentation and paper. For example, if the group's paper earned 90/100, a member who contributed above and beyond expectations and received an evaluation score of 1.1 would receive a grade of 99, whereas a member who hardly contributed, with a score of 0.7, would receive a grade of 63.
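The grade arithmetic described in items 2 and 4 can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names are hypothetical, scores are taken to be on a 0 to 100 scale, and no cap is applied to an adjusted grade, since the text does not say whether one is used.

```python
def exam_grade(individual: float, group: float) -> float:
    """Combine exam scores for the upper-level courses:
    80% individual exam, 20% group exam (weights from the text)."""
    return 0.8 * individual + 0.2 * group


def adjusted_group_grade(group_grade: float, peer_score: float) -> float:
    """Scale a shared group grade (presentation or paper) by the
    peer-evaluation multiplier, which ranges from 0.7 to 1.1."""
    if not 0.7 <= peer_score <= 1.1:
        raise ValueError("peer_score must be between 0.7 and 1.1")
    # Assumption: no cap at 100; the text does not specify one.
    return group_grade * peer_score


if __name__ == "__main__":
    # Worked example from the text: a paper graded 90/100.
    print(round(adjusted_group_grade(90, 1.1), 1))  # 99.0
    print(round(adjusted_group_grade(90, 0.7), 1))  # 63.0
    # Hypothetical exam scores: 85 individual, 95 group.
    print(round(exam_grade(85, 95), 1))  # 87.0
```

Rounding is applied only when printing, so that floating-point noise does not obscure the 99 and 63 given in the example.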

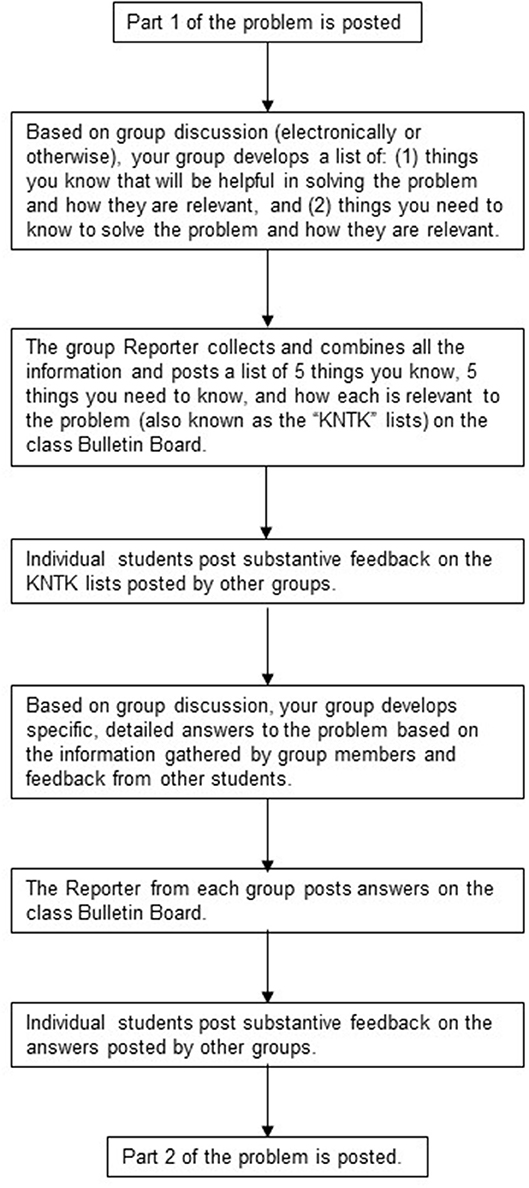

I have also used PBL to teach introductory soil science online in an asynchronous environment (Amador, 2012; Amador and Mederer, 2013). The problems remain the same as for the face-to-face class. The class centers on the PBL cycle, with the group interaction structured for the asynchronous online environment (Figure 2). Groups are presented with a problem and, by a deadline, generate and post lists of what they know and how that is relevant, and of what they need to know that is relevant. After these are posted, students from other groups are asked to post their thoughts on these lists. Following this comment period, groups post either another round of know/need-to-know lists or their solutions to that part of the problem, depending on discussion progress. This is again followed by comments from students from other groups. These online interactions are an important part of the assessment, which is one of the main differences from the face-to-face version of PBL, where student discussions are not assessed. Because students have more time to think and integrate information, the online discussions tend to be better thought out and expressed than those in a classroom setting. Assessment also includes an individual test and a group synthesis paper, although a presentation is not required.

Combined Just-in-Time Teaching and Peer Instruction

Given the passive nature of learning in a traditional lecture course, I have introduced a combination of just-in-time teaching (JITT) and peer instruction (PI) in my large (>100 students) introductory soil science lecture course.

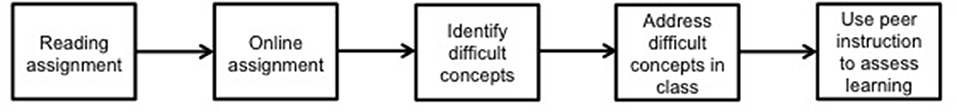

Just-in-time teaching involves students preparing for class by reading background materials—textbook or other sources—and completing assignments online based on those readings. The assignments, due shortly before class, provide feedback to the instructor on which concepts students understand and which they are having difficulty with. The instructor then uses this information to adjust classroom activities to improve learning (Figure 3). Just-in-time teaching was developed by Novak et al. (1999) to improve teaching and learning in introductory physics courses, and the approach has been adopted by faculty in the STEM disciplines and in the humanities (Simkins and Maier, 2010). Using JITT in lecture courses improves class attendance and study skills (Marrs et al., 2003), likelihood of reading the textbook before class (Howard, 2004), and conceptual thinking (Formica et al., 2010). However, if students are not provided with the context for the use of JITT, they can react negatively (Camp et al., 2010), which can be addressed by reiterating the reasons for using this approach.

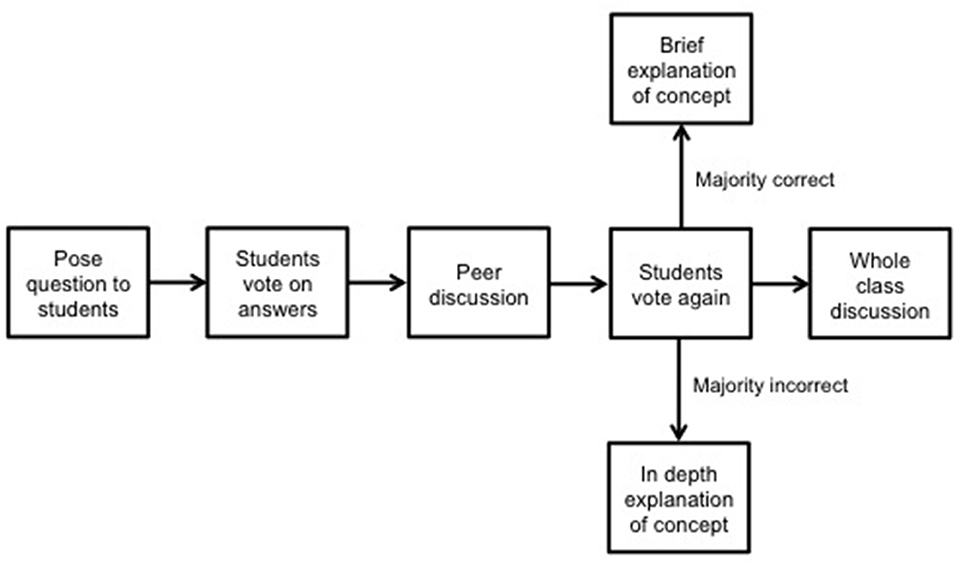

Peer instruction involves students answering multiple choice questions posed to the whole class during lecture (Figure 4). Students reflect on the question and commit to an answer by voting. If a high proportion of students vote for an incorrect answer, they are asked to discuss their answer with their neighbor (PI is also known as “turn to your neighbor”). Peer discussion is then followed by another round of voting, at which point the instructor decides whether more explanation is needed. Peer instruction has been championed by Eric Mazur at Harvard University, where it is used in introductory physics courses (Mazur and Hilborn, 1997).

Peer instruction improved student confidence and competence in STEM courses (Ochsner and Robinson, 2017), as well as learning in students in a variety of college courses, including physics (Crouch and Mazur, 2001; Fagen et al., 2002), physiology (Rao and DiCarlo, 2000), and computer science (Porter et al., 2013). It is often coupled with JITT, which serves as the source of the questions posed to the class. The combination of JITT and PI has been shown to improve learner participation and retention, and the amount of learner-centered time (Schuller et al., 2015).

I introduced a combination of JITT and PI into my Introduction to Soil Science lectures a few years ago. The intention was twofold: (i) to get students to read the textbook (Howard, 2004) and start grappling with the ideas and concepts covered in a lecture before they come to class, and (ii) to get them to think about and discuss these ideas and concepts after they have been presented in class. Bringing these active pedagogical approaches to my lectures has helped shift the class dynamic to improve student engagement, enhance critical thinking, and help students take ownership of their learning.

Just-in-time teaching in my course involves homework that is due a day or so before the lecture on a particular topic. Known as Application Problems (APs), the homework asks students to answer questions about open-ended, real-world problems. The sample problem focuses on soil ecological interactions and how these can affect decomposition of plant detritus (Supplementary Table 2). Answering the questions requires that students learn about a particular concept on their own, before the topic is discussed in lecture, from the assigned reading in their textbook, which is chosen based on its comprehensive, in-depth coverage of soil science topics (e.g., Weil and Brady, 2016). The students are free, and encouraged, to peruse the internet for explanatory blogs, videos, memes, etc. They are, however, expected to work on their own. The problems also ask that they apply what they have learned, for example, by making a decision or prediction based on the concepts learned.

The homework is submitted online and graded based primarily on whether the answers show an understanding of the concepts we want them to learn. Because the APs should be a low-stakes opportunity for students to assess how well they grasp the concepts, each AP accounts for 1% of the final grade (10% total). The teaching assistant who grades the APs also keeps track of concepts students found particularly difficult and reports this information to me, which allows me to adjust my lectures beforehand.

The peer instruction aspect takes place in lecture, after the students have completed the homework on the topics that will be taught in that lecture. After I lecture on a topic, I put up a slide with the setup of a problem, either a verbatim or abridged version of their homework (Supplementary Figure 1). This is followed by a series of multiple-choice questions about different aspects of the homework. After I put up the first question, I ask students to take some time to think about it and vote for what they think is the correct answer. I ask them to raise their hands for A, B, C, or D and quickly estimate the proportion of students that have the right answer. If I have crafted an effective set of choices for a question, there will be a good distribution of wrong and right answers. If that is the case, I then ask the students to turn to their neighbor and convince them of why their answer is right and the neighbor is wrong. After the buzz in the auditorium dies down, I ask students to vote again, and then reveal the correct answer. This is followed by a request for a student who chose the most popular incorrect answer to explain their reasoning, and for a student who chose the correct answer to explain her reasoning. I may expand on these explanations if I think it's warranted, and then move on to the next question. A set of peer instruction questions during a 75 min lecture takes 7 to 12 min, depending on the number of questions.

The voting in peer instruction can be assessed more quantitatively than by estimating numbers from a show of hands. A number of proprietary classroom response systems (i.e., clickers) as well as smartphone-based non-proprietary systems (Lee et al., 2013; Luo et al., 2016) are available to record, quantify, and display student responses. Because responses may be tracked to individual students, these systems have the potential to gather data on a particular student's participation (and thus, attendance) and level of understanding. Although the information can also be used to grade student responses, this can prove counter-productive because fear of choosing the wrong answer can inhibit participation (James, 2006).
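As a rough illustration of what quantifying the vote might look like, the following Python sketch tallies one round of responses, reports the fraction of correct answers, and identifies the most popular incorrect choice. It is not tied to any particular response system: the function names are hypothetical, and the 30 to 70% window used to decide whether answers are mixed enough for peer discussion is an assumption made for the example, not a value taken from my courses.

```python
from collections import Counter


def tally_votes(votes, correct):
    """Return per-choice counts and the fraction of correct votes."""
    counts = Counter(votes)
    total = sum(counts.values())
    fraction_correct = counts[correct] / total if total else 0.0
    return counts, fraction_correct


def most_popular_incorrect(counts, correct):
    """Return the wrong choice with the most votes, or None if there is none."""
    wrong = {choice: n for choice, n in counts.items() if choice != correct}
    return max(wrong, key=wrong.get) if wrong else None


def should_discuss(fraction_correct, low=0.3, high=0.7):
    """Assumed rule: hold peer discussion when answers are well mixed.
    The low/high bounds are hypothetical defaults."""
    return low <= fraction_correct <= high


if __name__ == "__main__":
    first_round = list("AABBBCDB")  # hypothetical votes; correct answer is B
    counts, frac = tally_votes(first_round, "B")
    print(dict(counts), frac)
    print(most_popular_incorrect(counts, "B"), should_discuss(frac))
```

Keeping the tally anonymous, as in this sketch, sidesteps the grading concern raised above, since no response is linked to an individual student.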

Conclusions

Active learning approaches in soil science education are an essential component of developing the critical thinking and problem-solving skills, and the capacity for life-long learning in our students, which they will need for professional success. I have found using PBL exclusively to be effective in fostering these qualities in teaching introductory soil science, soil microbiology and soil chemistry courses. The introduction of JITT and PI into my large lecture course has proven an effective means of getting students to engage with course content before lecture, with each other during lecture, and to take ownership of their learning. The short descriptions I have given here provide the reader with the briefest introduction to their implementation. For those interested in trying out these active learning approaches, a number of the references cited here provide detailed advice on their implementation.

Author Contributions

The author confirms being the sole contributor of this work and has approved it for publication.

Conflict of Interest Statement

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fenvs.2019.00111/full#supplementary-material

Supplementary Figure 1. Sample questions used for peer instruction during lecture.

References

Abit, S. M., Curl, P., Lasquites, J. J., and MacNelly, B. (2018). Delivery and student perceptions of drive-through laboratory sessions in an introductory-level soil science course. Nat. Sci. Educ. 47:170015. doi: 10.4195/nse2017.07.0015

Allen, D. E., Duch, B. J., and Groh, S. E. (1996). The power of Problem-Based Learning in teaching introductory science courses. New Dir. Teach. Learn. 1996, 43–52. doi: 10.1002/tl.37219966808

Amador, J. A. (2012). “Introduction to soil science: transforming a Problem-Based Learning course to online,” in Taking Your Course Online: An Interdisciplinary Journey, eds K. M. Torrens and J. A. Amador (Charlotte, NC: Information Age Publishing), 79–91.

Amador, J. A., and Görres, J. H. (2004). A Problem-Based Learning approach to teaching introductory soil science. J. Nat. Resour. Life Sci. Educ. 33, 21–27.

Amador, J. A., and Mederer, H. (2013). Migrating successful student engagement strategies to the virtual classroom: opportunities and challenges using jigsaw groups and Problem-Based Learning. J. Online Learn. Teach. 9, 89–105.

Amador, J. A., Miles, E. A., and Peters, C. B. (2007). The Practice of Problem-Based Learning. Bolton, MA: Anker Publishing.

Amador, J. A., and Miles, L. (2016). Live from Boone Lake: interdisciplinary Problem-Based Learning meets public science writing. J. Coll. Sci. Teach. 45, 36–42. doi: 10.2505/4/jcst16_045_06_36

Andrews, S. E., and Frey, S. D. (2015). Studio structure improves student performance in an undergraduate introductory soil science course. Nat. Sci. Educ. 44, 60–68. doi: 10.4195/nse2014.12.0026

Barrows, H. S. (1996). Problem-Based Learning in medicine and beyond: a brief overview. New Dir. Teach. Learn. 1996, 3–12. doi: 10.1002/tl.37219966804

Baveye, P., Jacobson, A. R., Allaire, S. E., Tandarich, J. P., and Bryant, R. B. (2006). Whither goes soil science in the United States and Canada? Soil Sci. 171, 501–518. doi: 10.1097/01.ss.0000228032.26905.a9

Boud, D., and Feletti, G. (1997). The Challenge of Problem-Based Learning. 2nd Edn. London: Kogan Page.

Camp, M. E., Middendorf, J., and Sullivan, C. S. (2010). “Using just-in-time teaching to motivate student learning,” in Just-in-Time Teaching: Across the Disciplines, Across the Academy, eds S. Simkins and M. Maier (Sterling, VA: Stylus Publishing), 25–38.

Candy, P. C. (1991). Self-Direction for Lifelong Learning: A Comprehensive Guide to Theory and Practice. San Francisco, CA: Jossey-Bass.

Corey, S. M. (1934). Learning from lectures vs. learning from readings. J. Ed. Psych. 25:459. doi: 10.1037/h0074323

Costin, F. (1972). Lecturing versus other methods of teaching: a review of research. Br. J. Educ. Technol. 3, 4–31. doi: 10.1111/j.1467-8535.1972.tb00570.x

Crouch, C. H., and Mazur, E. (2001). Peer instruction: ten years of experience and results. Am. J. Phys. 69:970. doi: 10.1119/1.1374249

Daigh, A. L. M., and Motschenbacher, J. M. D. (2017). Breaking tradition to create self-motivated, collaborative students. CSA News 62, 33–35. doi: 10.2134/csa2017.62.1028

Duckworth, O. W., and Harrington, J. M. (2018). “Student presentations of case studies to illustrate core concepts in soil biogeochemistry,” in A Collection of Case Studies (Madison, WI: ASA), 177–185. doi: 10.4195/jnrlse.2011.0012n

Fagen, A. P., Crouch, C. H., and Mazur, E. (2002). Peer instruction: results from a range of classrooms. Phys. Teach. 40:206. doi: 10.1119/1.1474140

Field, D. J., Koppi, A. J., Jarrett, L. E., Abbott, L. K., Cattle, S. R., Grant, C. D., et al. (2011). Soil science teaching principles. Geoderma 167, 9–14. doi: 10.1016/j.geoderma.2011.09.017

Formica, S. P., Easley, J. L., and Spraker, M. C. (2010). Transforming common-sense beliefs into Newtonian thinking through Just-In-Time Teaching. Phys. Rev. Spec. Topics Phys. Ed. Res. 6:020106. doi: 10.1103/PhysRevSTPER.6.020106

Glen, S., and Wilkie, K. (2017). Problem-Based Learning in Nursing: A New Model for a New Context. London: Macmillan International Higher Education.

Hartemink, A. E., and McBratney, A. (2008). A soil science renaissance. Geoderma 148, 123–129. doi: 10.1016/j.geoderma.2008.10.006

Havlin, J., Balster, N., Chapman, S., Ferris, D., Thompson, T., and Smith, T. (2010). Trends in soil science education and employment. Soil Sci. Soc. Am. J. 74, 1429–1432. doi: 10.2136/sssaj2010.0143

Howard, J. R. (2004). Just-in-time teaching in sociology or how I convinced my students to actually read the assignment. Teach. Sociol. 32, 385–390. doi: 10.1177/0092055X0403200404

Hutchings, B., and O'Rourke, K. (2002). Problem-based learning in literary studies. Arts Hum. Higher Ed. 1, 73–83. doi: 10.1177/1474022202001001006

James, M. C. (2006). The effect of grading incentive on student discourse in Peer Instruction. Am. J. Phys. 74:689. doi: 10.1119/1.2198887

Jelinski, N. A., Moorberg, C. J., Ransom, M. D., and Bell, J. C. (2019). A survey of introductory soil science courses and curricula in the United States. Nat. Sci. Ed. 48:180019. doi: 10.4195/nse2018.11.0019

Jimenez, L. S., Vega, N., Capa, E. D., Fierro, N. del C., and Quichimbo, P. (2019). Estilos y estrategia de enseñanza-aprendizaje de estudiantes universitarios de la Ciencia del Suelo. Rev. Electron. Invest. Educ. 21, 37–46. doi: 10.24320/redie.2019.21.e04.1935

Kitto, S. L., and Griffiths, L. G. (2001). “The evolution of problem-based learning in a biotechnology course,” in The Power of Problem-Based Learning, eds B. J. Duch, S. E. Groh, and D. E. Allen (Sterling, VA: Stylus), 121–130.

Lee, A. W. M., Ng, J. K. Y., Wong, E. Y. W., Tan, A., Lau, A. K. Y., and Lai, S. F. Y. (2013). Lecture rule No. 1: cell phones ON, please! A low-cost personal response system for learning and teaching. J. Chem. Educ. 90, 388–389. doi: 10.1021/ed200562f

Luo, T., Dani, D. E., and Cheng, L. (2016). Viability of using Twitter to support peer instruction in teacher education. Int. J. Social Media Interact. Learn. Environ. 4, 287–304. doi: 10.1504/IJSMILE.2016.081280

Lyon, S. W., and Teutschbein, C. (2011). Problem-based learning and assessment in hydrology courses: can non-traditional assessment better reflect intended learning outcomes? J. Nat. Resour. Life Sci. Educ. 40, 199–205. doi: 10.4195/jnrlse.2011.0016g

Maiden, B., and Perry, B. (2011). Dealing with free-riders in assessed group work: Results from a study at a UK university. Assess. Eval. Higher Ed. 36, 451–464. doi: 10.1080/02602930903429302

Marrs, K. A., Blake, R., and Gavrin, A. (2003). Use of warm up exercises in just in time teaching: determining students' prior knowledge and misconceptions in biology, chemistry, and physics. J. Coll. Sci. Teach. 33, 42–47.

Mazur, E., and Hilborn, R. C. (1997). Peer Instruction: A User's Manual, Vol. 5. Upper Saddle River, NJ: Prentice Hall.

Neville, A. J. (2009). Problem-based learning and medical education forty years on. Med. Prin. Pract. 18, 1–9. doi: 10.1159/000163038

Norman, G. R., and Schmidt, H. G. (1992). The psychological basis of problem-based learning: a review of the evidence. Acad. Med. 67, 557–565. doi: 10.1097/00001888-199209000-00002

Novak, G. M., Patterson, E. T., Gavrin, A. D., and Christian, W. (1999). Just-in-Time-Teaching: Blending Active Learning With Web Technology. Upper Saddle River, NJ: Prentice Hall.

Ochsner, T. E., and Robinson, J. S. (2017). The impact of a social interaction technique on students' confidence and competence to apply STEM principles in a college classroom. NACTA J. 61, 14–20.

Pennell, M., and Miles, L. (2009). “It actually made me think”: problem-based learning in the business communications classroom. Bus. Comm. Quart. 72, 377–394. doi: 10.1177/1080569909349482

Porter, L., Lee, C. B., and Simon, B. (2013). “Halving fail rates using peer instruction: a study of four computer science courses,” in SIGCSE '13 Proceedings of the 44th ACM Technical Symposium on Computer Science Education (Denver, CO), 177–182.

Rao, S. P., and DiCarlo, S. E. (2000). Peer instruction improves performance on quizzes. Adv. Physiol. Educ. 24, 51–55. doi: 10.1152/advances.2000.24.1.51

Schmidt, H. G., Wagener, S. L., Smeets, G. A. C. M., Keemink, L. M., and Van der Molen, H. T. (2015). On the use and misuse of lectures in higher education. Health Profess. Educ. 1, 12–18. doi: 10.1016/j.hpe.2015.11.010

Schuller, M. C., DaRosa, D. A., and Crandall, M. L. (2015). Using just-in-time teaching and peer instruction in a residency program's core curriculum: enhancing satisfaction, engagement, and retention. Acad. Med. 90, 384–391. doi: 10.1097/ACM.0000000000000578

Simkins, S., and Maier, M. (2010). Just-in-time Teaching: Across the Disciplines, Across the Academy. Sterling, VA: Stylus Publishing.

Spencer, J. A., and Jordan, R. K. (1999). Learner-centred approach in medical education. Brit. Med. J. 318, 1280–1283. doi: 10.1136/bmj.318.7193.1280

Strivelli, R. A., Krzic, M., Crowley, C., Dyanatkar, S. A., Bomke, A., Simard, S. W., et al. (2011). Integration of problem-based learning and web-based multimedia to enhance a soil management course. J. Nat. Resour. Life Sci. Educ. 40, 215–223. doi: 10.4195/jnrlse.2010.0032n

Tiwari, A., Lai, P., So, M., and Yuen, K. (2006). A comparison of the effects of problem-based learning and lecturing on the development of students' critical thinking. Med. Educ. 40, 547–554. doi: 10.1111/j.1365-2929.2006.02481.x

Vernon, D. T., and Blake, R. L. (1993). Does problem-based learning work? A meta-analysis of evaluative research. Acad. Med. 68, 550–563. doi: 10.1097/00001888-199307000-00015

Weil, R. R., and Brady, N. C. (2016). The Nature and Properties of Soils, 15th Edn. Upper Saddle River, NJ: Pearson.

Keywords: active learning, problem-based learning, just-in-time teaching, peer instruction, soil science education

Citation: Amador JA (2019) Active Learning Approaches to Teaching Soil Science at the College Level. Front. Environ. Sci. 7:111. doi: 10.3389/fenvs.2019.00111

Received: 05 March 2019; Accepted: 26 June 2019;

Published: 27 August 2019.

Edited by:

Philippe C. Baveye, AgroParisTech Institut des Sciences et Industries du Vivant et de L'environnement, France

Reviewed by:

Sudipta Rakshit, Tennessee State University, United States

Alix Vidal, Technical University of Munich, Germany

Copyright © 2019 Amador. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jose A. Amador, jamador@uri.edu
