- 1Department of Computer Science and Information Engineering, National Taiwan University, Taipei, Taiwan

- 2Institute of Information Science, Academia Sinica, Taipei, Taiwan

Attribute-aware CF models aim at rating prediction given not only the historical rating given by users to items but also the information associated with users (e.g., age), items (e.g., price), and ratings (e.g., rating time). This paper surveys work in the past decade to develop attribute-aware CF systems and finds that they can be classified into four different categories mathematically. We provide readers not only with a high-level mathematical interpretation of the existing work in this area but also with mathematical insight into each category of models. Finally, we provide in-depth experiment results comparing the effectiveness of the major models in each category.

1. Introduction

Collaborative filtering is arguably the most effective method for building a recommender system. It assumes that a user's preferences regarding items can be inferred collaboratively from other users' preferences. In practice, users' past records regarding items, such as explicit ratings or implicit feedback (e.g., binary access records), are typically used to infer similarity of taste among users for the purposes of recommendation. In the past decade, matrix factorization (MF) has become a widely adopted method of collaborative filtering. Specifically, MF learns a latent representation vector for a user and an item and computes their inner products as the predicted rating. The learned latent user/item factors are supposed to embed specific information about the user/item. That is, two users with similar latent representations will have similar tastes regarding items with similar latent vectors.

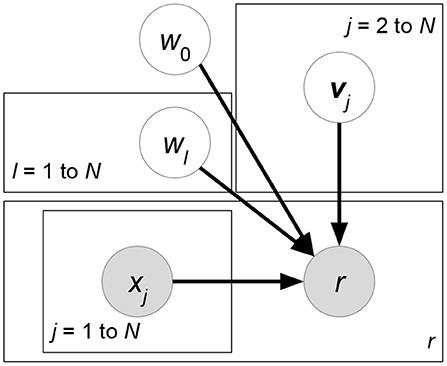

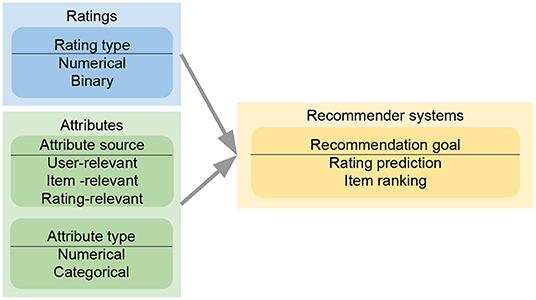

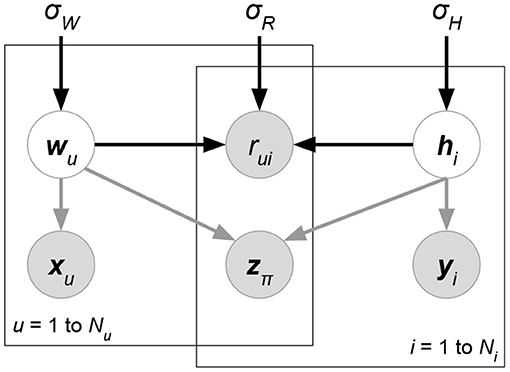

In the big data era, classical MF using only ratings suffers a serious drawback, as such a method is unable to exploit other accessible information such as the attributes of users/items/ratings. For instance, data could contain the location and time that a user rated an item. These rating-relevant attributes, or contexts, could be useful in determining the scale of user liking for an item. The side information or attributes relevant to users or items (e.g., the demographic information of users or the item genre) can also reveal useful information. Such side information is particularly useful for situations where the ratings of a user or an item are sparse, which is known as the cold-start problem for recommender systems. Therefore, researchers have formulated attribute-aware recommender systems (see Figure 1) aiming at leveraging not only the rating information but also the attributes associated with ratings/users/items to improve the quality of recommendation.

Figure 1. Interpretation of inputs, including ratings and attributes, in attribute-aware collaborative filtering-based recommender systems.

Researchers have proposed different methods to extend existing collaborative filtering models in recent years, such as factorization machines, probabilistic graphical models, kernel tricks, and models based on deep neural networks. We notice that those papers can also be categorized based on the type of attributes incorporated into the models. A class of recommender systems considers relevant side information, such as age, gender, the occupation of users, or the expiration and price of items when predicting ratings (e.g., Adams et al., 2010; Porteous et al., 2010; Fang and Si, 2011; Ning and Karypis, 2012; Zhou et al., 2012; Park et al., 2013; Xu et al., 2013; Kim and Choi, 2014; Lu et al., 2016; Zhao et al., 2016; Feipeng Zhao, 2017; Guo, 2017; Yu et al., 2017; Zhou T. et al., 2017). On the other hand, context-aware recommender systems (e.g., Shin et al., 2009; Karatzoglou et al., 2010; Li et al., 2010b; Baltrunas et al., 2011; Rendle et al., 2011; Hidasi and Tikk, 2012, 2016; Shi et al., 2012a, 2014a; Liu and Aberer, 2013; Chen et al., 2014; Nguyen et al., 2014; Hidasi, 2015; Liu and Wu, 2015) enhance themselves by considering the attributes appended to each rating (e.g., rating time, rating location). Other terms may be used to indicate attributes interchangeably such as metadata (Kula, 2015), features (Chen et al., 2012), taxonomy (Koenigstein et al., 2011), entities (Yu et al., 2014), demographic data (Safoury and Salah, 2013), categories (Chen et al., 2016), contexture information (Weng et al., 2009), etc. The above setups all share the same mathematical representation; thus, technically, we do not distinguish them in this paper. That is, we regard whichever information is associated with a user/item/rating as user/item/rating attributes, regardless of its nature. Therefore, a CF model that takes advantage of ratings as well as associated attributes is called an attribute-aware recommender in this paper.

Note that the attribute-aware recommender systems discussed in this paper are not equivalent to hybrid recommender systems. The former treat additional information as attributes, while the latter emphasize the combination of collaborative filtering-based methods and content-based methods. To be more precise, this review covers only works that assume unstructured and independent attributes, either in binary or numerical format, for each user, item, or rating. The reviewed models do not have prior knowledge of the dependency between attributes, such as the adjacent terms in a document or user relationships in a social network.

This review covers more than one hundred papers in this area in the past decade. We find that the majority of the works propose an extension of matrix factorization to incorporate attribute information in collaborative filtering. The main contribution of this paper is to not only provide a comprehensive review, but also provide a means to classify these works into four categories: (I) discriminative matrix factorization, (II) generative matrix factorization, (III) generalized factorization, and (IV) heterogeneous graphs. For each category, we provide the probabilistic interpretation of the models. The major distinction of these four categories lies in their representation of the interactions between users, items, and attributes. Discriminative matrix factorization models extend the traditional MF by treating the attributes as prior knowledge to learn the latent representation of users or items. Generative matrix factorization further considers the distributions of attributes and learns such, together with the rating distributions. Generalized factorization models view the user/item identity simply as a kind of attribute, and various models have been designed for determining the low-dimensional representation vectors for rating prediction. The last category of models proposes to represent the users, items, and attributes using a heterogeneous graph, where a recommendation task can be cast into a link-prediction task on the heterogeneous graph. In the following sections, we will elaborate on general mathematical explanations of the four types of model designs and discuss the similarities/differences among the models.

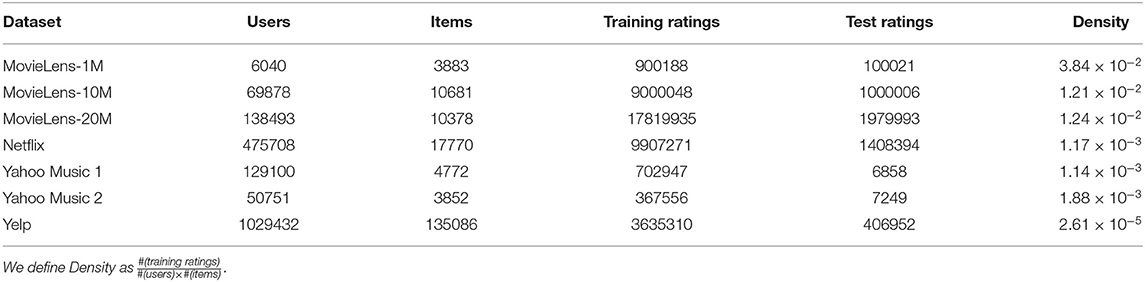

There have been four prior reviews (Adomavicius and Tuzhilin, 2011; Verbert et al., 2012; Bobadilla et al., 2013; Shi et al., 2014b) introducing attribute-aware recommender systems. We claim three major differences between our work and the existing papers. First, previous review papers mainly focused on grouping different types of attributes and discussing the distinctions of memory-based collaborative filtering and model-based collaborative filtering. In contrast, we are the first that have aimed at classifying the existing works based on the methodology proposed instead of the type of data used. We further provide mathematical connections for different types of models so that the readers can better understand the spirit of the design of different models as well as their technical differences. Second, we are the first to provide thorough experiment results (seven different models on eight benchmark datasets) to compare different types of attribute-award recommendation systems. Note that Bobadilla et al. (2013) is the only previous review work with experimental results. However, for that study, experiments were performed to compare different similarity measures in collaborative filtering algorithms instead of directly verifying the effectiveness of different attribute-aware recommender systems. Finally, we cover the latest work on attribute-aware recommender systems. We note that the existing review papers do not include forty papers after 2015. In recent years, several deep neural network-based solutions (Zhang et al., 2017) have achieved state-of-the-art performance for this task.

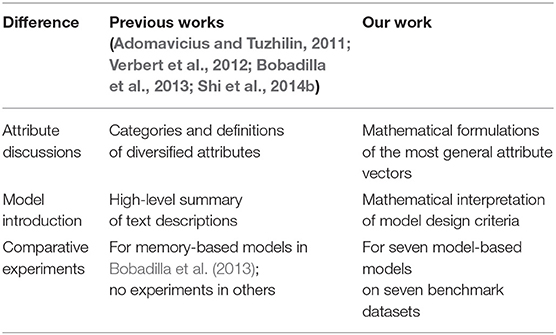

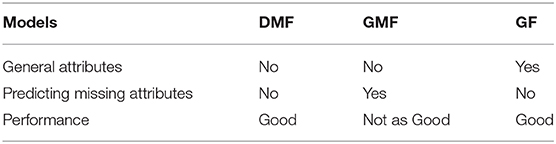

Table 1 shows comparisons between our work and previous reviews.

We will introduce the basic concepts behind recommender systems in section 2, followed by formal analyses of attribute-aware recommender systems in sections 3 and 4. A series of experiments detailed in section 5 were conducted to compare the accuracy and parameter sensitivity of six widely adopted models. Finally, section 6 concludes this review and identifies work to be done in the future.

2. Preliminaries

2.1. Problem Definition of Recommender Systems

Recommender systems act as skilled agents to assist users to conquer information overload while making selection decisions over items by providing customized recommendations. Users and items are general phrases denoting, respectively, entities actively browsing and making choices and entities being selected, such as goods and services.

Formally, recommender systems leverage one or more of the following three information sources to discover user preferences and generate recommendations: user-item interactions, side information, and contexts. User-item interactions, or ratings, are collected explicitly by prompting users to provide numerical feedback on items and are acquired implicitly by tracking user behaviors such as clicks, browsing time, or purchase history. The data are commonly represented as a matrix that encodes the preferences of users and is naturally sparse, since users normally interact with a limited fraction of items. Side information is rich information attached to an individual user or item that depicts user characteristics such as education and job or item properties such as description and product categories. Side information can take diverse structures with rich meaning, ranging over numerical status, texts, and images to videos, locations, and networks. On the other hand, context refers to all the information collected when a user interacts with an item, such as timestamps, locations, or textual reviews. This contextual information usually serves as an additional information source appended to the user-item interaction matrix.

The goal of recommender systems is to disclose unknown user preferences over items that users never interact with and recommend the most preferred items to them. In practice, recommender systems learn to generate recommendations based on three types of approaches: pointwise, pairwise, and listwise. The pointwise approach is the most common approach and demands recommendation systems to provide accurate numerical predictions on observed ratings. Items that a user never interacts with are then sorted by their rating predictions, and a number of items with the highest ratings are recommended to the user. On the other hand, a pairwise approach seeks to preserve the ordering of any pair of items based on ratings, while in the listwise approach, recommender systems aim to preserve the relative order of all rated items as a list for each user. The pairwise approach and listwise approach are together considered item rankings that only require recommender systems to output the ordering of items but not ratings for individual items.

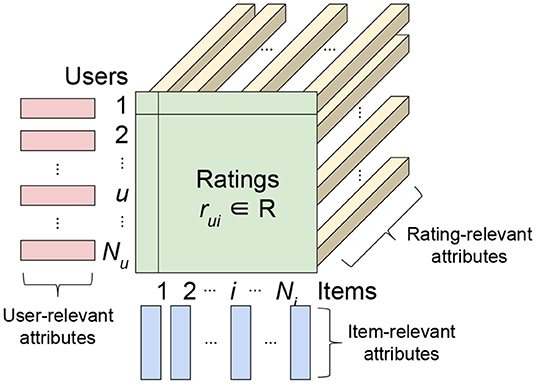

In most of the works covered in this paper, the task of attribute-aware recommendation is defined as predicting unknown ratings, that is: given Nu users, Ni items, a user-item rating matrix with only a small portion Nr ratings observed (i.e., there are a total of Nu × Ni − Nr missing ratings), side information of users (assuming each user has KX attributes), side information of items , and contexts , the goal is to build a model that is capable of predicting each of the unknown ratings in R.

Then, given any specific user, a recommender system can make recommendations based on the predicted ratings. Normally, items with higher ratings are recommended first. Note that the dimension KX, KY of side information attribute matrix X, Y might be zero, denoting that there is no side information about users or items. Likewise, if there is no contextual information about user-item interactions, KZ will be zero.

The core techniques or algorithms for realizing recommender systems are generally classified into three categories: content-based filtering, collaborative filtering, and hybrid filtering (Bobadilla et al., 2013; Shi et al., 2014b; Isinkaye et al., 2015). Content-based filtering generates recommendations based on properties of items and user-item interactions. Content-based techniques exploit domain knowledge and seek to transform item properties in raw attribute structures such as texts, images, or locations into numerical item profiles. Each item is represented as a vector, and the matrix of side information of items Y is constructed. A representation of each user is then created by aggregating profiles of items that this user interacted with, and a similarity measure is leveraged to retrieve a number of the most similar items as recommendations. Note that content-based filtering does not require information from any other user to make recommendations. Collaborative filtering strives to identify a group of users with similar preferences based on past user-item interactions and recommends items preferred by these users. Since discovering users with common preferences is generally based on user-item ratings R, collaborative filtering becomes the first choice when item properties are inadequate in describing their content, such as movies or songs. Hybrid filtering is the extension or combination of content-based and collaborative filtering. Examples of this are building an ensemble of the two techniques, using the item rating history of collaborative filtering as part of the item profiles for content-based filtering or extending collaborative filtering to incorporate user characteristics X or item properties Y. This review focuses on attribute-aware recommender systems that shed light not only on user-item interactions R but also on the side information of users or items X, Y and contexts Z, which is a subset of hybrid filtering.

2.2. Collaborative Filtering and Matrix Factorization

Collaborative filtering (CF) has become the prevailing technique for realizing recommender systems in recent years (Adomavicius and Tuzhilin, 2005, 2011; Shi et al., 2014b; Isinkaye et al., 2015). It assumes that the preferences users exhibit for items they have interacted with can be generalized and used to infer their preferences regarding items they have never interacted with by leveraging the records of other users with similar preferences. This section briefly introduces conventional CF techniques, which assume the availability of only user-item interactions or the rating matrix R. In practice, they are commonly categorized into memory-based CF and model-based CF (Adomavicius and Tuzhilin, 2005; Shi et al., 2014b; Isinkaye et al., 2015).

Memory-based CF directly exploits rows or columns in the rating matrix R as representations of users or items and identifies a group of similar users or items using a pre-defined similarity measure. Commonly used similarity metrics include the Pearson correlation, the Jaccard similarity coefficient, the cosine similarity, or their variants. Memory-based CF techniques can be divided into user-based and item-based approaches, which identify either a group of similar users or similar items, respectively. For user-based approaches, K nearest neighbors—or the K most similar users—are extracted, and their preferences or ratings regarding a target item are aggregated into a rating prediction using similarities between users as weights. The rating prediction for user u on item i, , can be formulated as:

where function sim(·) is a similarity measure, Z is the normalization constant, and Uu is the set of similar users to user u (Shi et al., 2014b). Rating predictions of item-based approaches can be formulated in a similar way. The calculated pairwise similarities between users or items act as the memory of the recommender system since they can be saved for generating later recommendations.

Model-based CF, on the other hand, takes the rating matrix R to train a predictive model with a set of parameters θ to make recommendations (Adomavicius and Tuzhilin, 2005; Shi et al., 2014b). Predictive models can be formulated as a function that outputs ratings for rating predictions or numerical preference scores for item ranking given a user-item pair (u, i):

Model-based CF then ranks and selects the K items with the highest ratings or scores rui as recommendations. Common core algorithms for model-based CF involve Bayesian classifiers, clustering techniques, graph-based approaches, genetic algorithms, and dimension-reduction methods such as Singular Value Decomposition (SVD) (Adomavicius and Tuzhilin, 2005, 2011; Bobadilla et al., 2013; Shi et al., 2014b; Isinkaye et al., 2015). Over the last decade, a class of latent factor models, called matrix factorization, has been popularized and is commonly adopted as the basis of advanced techniques because of its success in the development of algorithms for the Netflix competition (Koren et al., 2009; Koren and Bell, 2011). In general, latent factor models aim to learn a low-dimensional representation, or latent factor, for each entity and combine the latent factors of different entities using specific methods such as inner product, bilinear map, or neural networks to make predictions. As one of these latent factor models, matrix factorization for recommender systems characterizes each user and item by a low-dimensional vector and predicts ratings based on inner product.

Matrix factorization (MF) (Paterek, 2007; Koren et al., 2009; Koren and Bell, 2011; Shi et al., 2014b), in the basic form, represents each user u as a parameter vector and each item i as , where K is the dimension of latent factors. The prediction of user u's rating or preference regarding item i, denoted as , can be computed using the inner product:

which captures the interaction between them. MF seeks to generate rating predictions that are as close as possible to those recorded ratings. In matrix form, it can be written as finding W, H such that R ≈ W⊤H where . MF is essentially learning a low-rank approximation of the rating matrix, since the dimension of representations K is usually much smaller than the number of users Nu and items Ni. To learn the latent factors of users and items, the system tries to find W, H that minimizes the regularized square error on the set of entries of known ratings in R [denoted as δ(R)]:

where λW and λH are regularization parameters. MF tends to cluster users or items with similar rating configurations into groups in the latent factor space, which implies that similar users or items will be close to each other. Furthermore, MF assumes that the rank of rating matrix R or the dimension of the vector space generated by the rating configuration of users is far smaller than the number of users Nu. This implies that each user's rating configuration can be obtained by a linear combination of ratings from a group of other users since they are all generated by K principal vectors. Thus, MF is in the spirit of collaborative filtering, which is to infer a user's unknown ratings by the ratings of several other users.

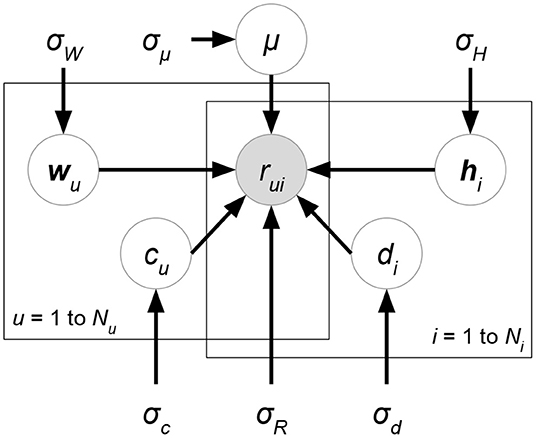

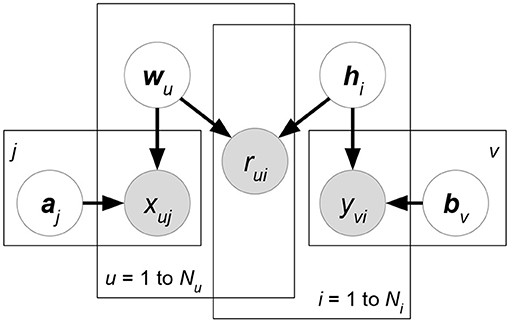

Biased matrix factorization (Paterek, 2007; Koren et al., 2009; Koren and Bell, 2011), an improvement of MF, models the characteristics of each user and each item and the global tendency that are independent of user-item interactions. The obvious drawback of MF is that only user-item interactions are considered in rating predictions. However, ratings usually contain universal shifts or exhibit systematic tendencies with respect to users and items. For instance, there might be a group of users inclined to give significantly higher ratings than others or a group of items widely considered to be of high-quality and that receive higher ratings. Besides, it is common that all ratings are non-negative, which implies that the overall average might not be close to zero and causes a difficulty for training small-value-initialized representations. Due to the issues mentioned above, biased MF augments MF rating predictions with linear biases that account for user-related, item-related, and global effects. The rating prediction is extended as follows:

where μ, ci, dj are global bias, bias of user i, and bias of item j, respectively. Biased MF then finds the optimal W, H, c, d, μ that minimizes the regularized square error as follows:

where denotes the squared Frobenius norm. The regularization parameter λ is tuned by cross-validation.

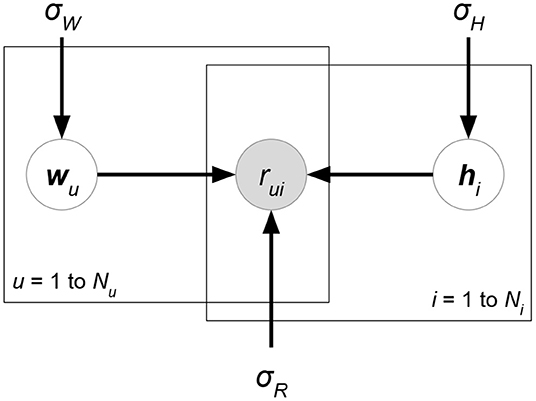

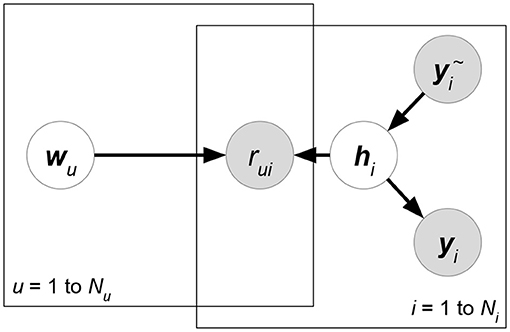

Probabilistic matrix factorization (PMF, Figure 2 and Biased PMF, Figure 3) (Salakhutdinov and Mnih, 2007, 2008) is a probabilistic linear model with observed Gaussian noise and can be viewed as a probabilistic extension of MF. PMF adopts the assumption that users and items are independent and represents each user or each item with a zero-mean spherical multivariate Gaussian distribution as follows:

where and are observed user-specific and item-specific noise. PMF then formulates the conditional probability over the observed ratings as

where δ(R) is the set of known ratings and denotes the Gaussian distribution with mean μ and variance σ2. Learning in PMF is conducted by maximum a posteriori (MAP) estimation, which is equivalent to maximizing the log of the posterior distribution of W, H:

where C is a constant independent of all parameters and K is the dimension of user or item representations. With Gaussian noise observed, maximizing the log-posterior is identical to minimizing the objective function with the form:

where . Note that (10) has exactly the same form as the regularized square error of MF, and gradient descent or its extensions can then be applied in training PMF.

Since collaborative filtering techniques only consider rating matrix R in making recommendations, they cannot discover the preferences of users or for items with scant user-item interactions. This problem is referred as the cold-start issue. In section 3, we will review recommendation systems that extend CF to incorporate contexts or rich side information regarding users and items to alleviate the cold-start problem.

3. Attribute-Aware Recommender Systems

3.1. Overview

Attribute-aware recommendation models are proposed to tackle the challenges of integrating additional information from user/item/rating. There are two strategies for designing attribute-aware collaborative filtering-based systems. One direction is to combine content-based recommendation models with CF models, which can directly accept attributes as content to perform recommendation. On the other hand, researchers also try to extend an existing collaborative filtering algorithm such that it leverages attribute information.

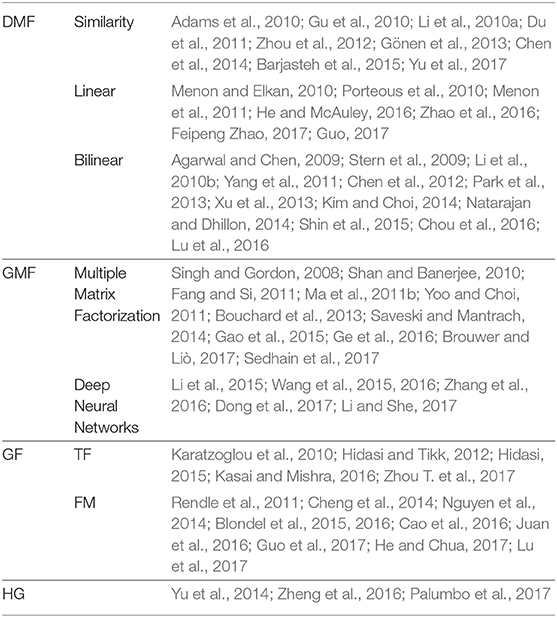

Rather, we will focus on four important factors in the design of an attribute-aware recommender system in current research, as shown in Figure 4. They are specifically discussed from sections 3.2 to 3.5. With respect to input data, attribute sources determine whether an attribute vector is relevant to users, items, or ratings. For example, age describes a user instead of an item; rating time must be appended to ratings, representing when the rating event occurred. Different models impose distinct strategies to integrate attributes of specific sources. Additionally, a model may constrain attribute types that can be used. For instance, graph-based collaborative filtering realizations define attributes as node types, which is not appropriate for numerical attributes. Rating type is the factor that is emphasized by most model designers. Besides usual numerical ratings, many recommendation models concentrate on binary rating data, where the ratings represent whether users interact with items. Finally, different recommender systems emphasize different recommendation goals. One is to predict the ratings from users to items through minimizing the error between the predicted and real ratings. Another is to produce the ranking among items given a user instead of caring about the real rating value of a single item. We then summarize the design categories of all the surveyed papers in a table in section 3.6.

Figure 4. Model design flow of an attribute-aware collaborative filtering-based recommender system. When reading ratings and attributes for a proposed approach, we have to consider the sources and the types of attributes or ratings, which could affect the recommendation goals and model designs. The evaluation of a proposed recommender system depends heavily on the chosen recommendation goals.

Throughout this paper, we will use to denote the attribute matrix, where each column xi represents a K-dimensional attribute vector of entity i. Here, an entity can refer to a user, an item, or a rating, determined by attribute sources (discussed in section 3.2). If attributes are limited categorically, then X ∈ {0, 1}K×N can be represented by one-hot encoding (discussed in section 3.3). Note that our survey does not include models designed specifically for a certain type of attribute but rather covers models that are general enough to accept different types of attributes. For example, Collaborative Topic Regression (CTR) (Wang and Blei, 2011) extends matrix factorization with Latent Dirichlet Allocation (LDA) to import text attributes. Social Regularization (Ma et al., 2011a) specifically utilizes user social networks to regularize the learning of matrix factorization. Neither model is included since they are not general enough to deal with general attributes.

3.2. Sources of Attributes

Attributes usually come from a variety of sources. Typically, side information refers to the attributes appended to users or items. In contrast, keyword contexts indicate the attributes relevant to ratings. Ratings from the same user can be attached to different contexts, such as “locations where users rate items.” Recommendation models considering rating-relevant attributes are usually called context-aware recommender systems. Although contexts in some papers could include user-relevant or item-relevant ones, in this paper, we tend to be precise and use the term contexts only for rating-relevant attributes.

Sections 3.2.1 and 3.2.2, respectively introduce different attribute sources. It is worth mentioning our observation as follows. Even though some of the models we surveyed demand side information while others require context information, we discover that the two sets of attributes can be represented in a unified manner and thus that both types of models can be applied. We will discuss such unified representation in sections 3.2.3 and 3.2.4.

3.2.1. Side Information: User-Relevant or Item-Relevant Attributes

In the surveyed papers, side information could refer to user-relevant attributes, item-relevant attributes, or both. User-relevant attributes determine the characteristics of a user, such as “age,” “gender,” “education,” etc. In contrast, item-relevant attributes describe the properties of an item, like “movie running time,” “product expiration data,” etc. Below, we discuss user-relevant attributes, but all the statements can be applied to item-relevant attributes. Given user-relevant attributes, we can express them with matrix , where Nu is the number of users. Each column of X corresponds to K attribute values of a specific user. The most important characteristic of user-relevant attributes is that they are assumed unchanged with the rating process of a user. For example, every rating from the same user shares the identical user-relevant attribute “age.” In other words, even without any ratings from a user in collaborative filtering, the user's rating behaviors on items could be still extracted from other users that have similar user-relevant attribute values. Attribute-aware recommender systems that address the cold-start user problems (i.e., there are few ratings of a user) typically adopt user-relevant attributes as their auxiliary information under collaborative filtering. The attribute leverage methods are presented in section 4.

Readers may ask why user-relevant attributes and item-relevant attributes are not distinguished. Our observations during this survey indicate that most of the recommendation approaches have symmetric model designs for users and items. In matrix factorization-based methods, rating matrix R is factorized into two matrices W and H, referring to user and item latent factors, respectively. However, matrix factorization does not change its learning results if we exchange the rows and columns of R. Despite the exchange of rows and columns, W and H just exchange what they learn from ratings: W for items but H for users.

On the basis of the above conclusions, some of the related work could be further extended, in our opinion. If one attribute-aware recommender system claims to be designed only for user-relevant attributes, then readers could use a symmetric model design for item-relevant attributes to obtain a more general model.

3.2.2. Contexts: Rating-Relevant Attributes

Collaborative filtering-based recommender systems usually define ratings as the interaction between users and items, though it is likely to have more than one interaction. Since ratings are still the focus of recommender systems, other types of interactions, or rating-relevant attributes, are called contexts in related work. For example, the “time” and the “location” that a user rates an item are recorded with the occurrence of the rating behavior. Rating-relevant attributes change with rating behaviors, and thus they could offer auxiliary data on why a user decides to give a rating to an item. Moreover, rating-relevant attributes could capture the rating preference change of a user. If we have time information appended to ratings, then attribute-aware recommender systems could discover users' preferences at different times.

The format of rating-relevant attributes is potentially more flexible than that of user-relevant or item-relevant ones. In section 4.3, we will introduce a factorization-based generalization of matrix factorization. In this class of attribute-aware recommender systems, even the user and item latent factors are not required to predict ratings; mere rating-relevant attributes can do it, using their corresponding latent factor vectors.

3.2.3. Converting Side Information to Contexts

Most attribute-aware recommender systems choose to leverage one of the attribute sources. Some proposed approaches specifically incorporate user- or item-relevant attributes, while others are designed for rating-relevant attributes only. It seems that existing approaches should be applied according to which attribute sources they use. However, we argue that the usage of attribute-aware recommender systems could be independent of attribute sources if we convert them to each other using a simple method.

Let be the user-relevant attribute matrix, where each column is the attribute set of user u. Similarly, let be the matrices of item-relevant attributes and rating-relevant attributes, respectively. Note that a column index of matrix Z is denoted by π(u, i), which is associated with user u and item i. A simple concatenation with respect to users and items can achieve the goal of expressing X or Y as Z, as shown below:

Equation (11) implies that we just extend the current rating-relevant attributes zπ(u,i) to using the attributes xu, yi from corresponding users or items. If training data do not consist of zπ(u,i), xu or yi, we can eliminate the notations on the right-hand side of (11). Advanced attribute selection or dimensionality reduction methods could extract effective dimensions in , but further improvement is beyond our scope. If missing attribute values exist in , then we suggest directly filling these attributes with 0. Please refer to section 3.2.4 for our reasoning.

3.2.4. Converting Contexts to Side Information

Following the topic in section 3.2.3, the reader may be curious about how to reversely convert rating-relevant attributes to user- or item-relevant ones. In the following paragraphs, we adopt the same notations as in section 3.2.3. Due to symmetric designs for X and Y, we demonstrate only the conversion from Z to X. Concatenation is still the simplest way to express Z as a part of X:

All the rating-relevant attributes z(u,1), z(u,2), …, z(u,Ni) from Ni items must be associated with user u. xu is thus extended to by appending these attributes. Note that there are a large number of missing attributes on the right-hand side of (12), since most items are never rated by user u in real-world data. Eliminating missing zπ(u,i), as what we do in section 3.2.3, reveals different dimensions between two user-relevant attributes . To our knowledge, there is no user-relevant attribute-aware recommender system allowing individual dimensions of user-relevant attributes.

Readers can use attribute imputation approaches to remove missing values in . However, we argue that simply filling missing elements with 0 is sufficient for attribute-aware recommender systems. We explain our reasons through the observations in section 3.3. For numerical attributes, (13)–(15) show the various attribute modeling methods. If attributes X are mapped through function f like (13) or (15), then zero attributes in f will cause no mapping effect (except constant intercept of f). If attributes X are fitted by latent factors onto function f such as (14), then typically in the objective design, we can skip the objective computation of missing attributes. As for categorical attributes, we exploit one-hot encoding to represent them with numerical values. Categorical attributes can then be handled as numerical attributes.

3.3. Attribute Types

In most cases, attribute-aware recommender systems accept a real-value attribute matrix X. However, we notice that some attribute-aware recommender systems require attributes to be categorical, which is typically represented by binary encoding. Specifically, this approach demands a binary attribute matrix where attributes of value 1 are modeled as discrete latent information in some way. A summary of the two types of attributes is given in sections 3.3.1 and 3.3.2.

It is trivial to put one-hot categorical attributes into numerical attribute-aware recommender systems, since binary values {0, 1} ⊂ ℝ. Nonetheless, putting numerical attributes into categorical attribute-aware recommendation systems runs the risk of losing attribute information (e.g., quantization processing).

3.3.1. Numerical Attributes

In our paper, numerical attributes refer to the set of real-valued attributes, i.e., attribute matrix X ∈ ℝK×N. We also classify integer attributes (like movie ratings {1, 2, 3, 4, 5}) to numerical attributes. Most of the relevant papers model numerical attributes as their default inputs in recommender systems, as is common in machine learning approaches.

There are three common model designs through which numerical attributes X affect recommender systems. First, we can map X to latent factor space by function fθ with parameters θ, and then fit the corresponding user or item latent factor vectors:

Second, like the reverse of (13), we define a mapping function fθ such that mapped values from user or item latent factors can be close to observed attributes:

Finally, numerical attributes can be put into function fθ, which is independent of existing user or item latent factors in matrix factorization:

Equations (13) and (14) are typically seen in user-relevant or item-relevant attributes, while rating-relevant attributes are often put into (15)-like formats. However, we emphasize that attribute-aware recommender systems are not limited to these three model designs.

3.3.2. Categorical Attributes

The values of a numerical attribute are ordered, while, on the other hand, the values of a categorical attribute show no ordered relations with each other. Given a categorical attribute Food ∈ {Rice, Noodles, Other}, the meanings of the values do not imply which one is larger than the other. Thus, it is improper to give categorical attributes ordered dummy variables, like Rice = 0, Noodles = 1, Other = 2, which could incorrectly imply Rice < Noodles < Other, misleading machine learning models. The most common solution to categorical attribute transformation is one-hot encoding. We generate d-dimensional binary attributes that correspond to the d values of a categorical attribute. Each of the d binary attributes indicates the current value of a categorical attribute. For example, we express attribute Food ∈ {{1, 0, 0}, {0, 1, 0}, {0, 0, 1}}. These correspond to the original values {Rice, Noodles, Other}. Since the values of the categorical attribute are unique, the mapped binary attributes contain only a 1, and others, 0. Once all the categorical attributes are converted to one-hot encoding expressions, we can apply them to existing numerical attribute-aware recommender systems.

Certain relevant papers are suitable for, or even adversely limited to, categorical attributes. Heterogeneous graph-based methods (section 4.4) add new nodes (e.g., Rice, Noodles, Other) to represent the values of categorical attributes. Following the concept of latent factor in matrix factorization, some methods propose to assign each categorical attribute value a low-dimensional latent factor vector (e.g., each of Rice, Noodles, Other has a latent factor vector w ∈ ℝK). These vectors are then jointly learned with classical user or item latent factors in attribute-aware recommender systems.

3.4. Rating Types

Although we always define the term ratings as the interactions between users and items in this paper, some previous works claim a difference between explicit opinions and implicit feedback. Taking the dataset MovieLens, for example, a user gives a rating value in {1, 2, 3, 4, 5} toward an item. The value denotes the explicit opinion, which quantifies the preference of the user for that item. How recommendation methods handle such type of ratings will be introduced in section 3.4.1.

Even though modeling explicit opinions is more beneficial for future recommendation, such data is more difficult to gather from users. Users may hesitate to show their preferences due to privacy considerations, or they may not be willing to spend time labeling explicit ratings. Instead, recommender systems are more likely to collect implicit feedback, such as user browsing logs. Such datasets record a series of binary values, each of which implies whether a user ever saw an item. User preferences behind implicit feedback assume that the items seen by a user must be more preferred by the user than those items that have never been seen. We discuss this type of rating in detail in section 3.4.2.

Some numerical rating data are controversial, like “the number of times a user clicks on the hyperlink to visit the page of an item.” Some of the related work may define such data as implicit feedback because the number of clicks is not equivalent to explicit user preferences. However, in this paper, we still identify them as explicit opinions. With respect to model designs, related recommendation approaches do not differentiate such data.

3.4.1. Explicit Opinions: Numerical Ratings

A numerical rating matrix r ∈ ℝ expresses users' opinions on items. Actually, numerical ratings in real-world scenarios are often represented by positive integers, such as MovieLens ratings r ∈ {1, 2, 3, 4, 5}. Though there are no explicit statements in related work, we suppose that a higher rating implies a more positive opinion.

Since, in most datasets, the gathered rating values are positive, there could be an unbiased learning problem. Matrix factorization could not learn the rating bias due to the non-zero mean of ratings (r) ≠ 0. Specifically, in vanilla matrix factorization, we have regularization terms and for user and item latent factor matrix W, H. That is, we require the expected value (W) = (H) = 0 from the viewpoint of corresponding normal distributions. Given rating rui of user u to item i, and assuming the independence of W, H as probabilistic matrix factorization does, we obtain the expected value of rating estimate , which cannot closely fit true ratings if (rui) ≠ 0. Biased matrix factorization can alleviate the problem by absorbing the non-zero mean with additional bias terms. Besides, we are able to normalize all the ratings (subtract the rating mean from every rating) to make matrix factorization prediction unbiased. Real-world numerical ratings also have finite maximum and minimum values. Some recommendation models choose to normalize the ratings to range r ∈ [0, 1] and then constrain the range of rating estimate using the sigmoid function .

3.4.2. Implicit Feedback: Binary Ratings

Today, more and more researchers are interested in the scenario of binary ratings r ∈ {0, 1} (i.e., implicit feedback), since such rating data are more accessible, for instance, “whether a user browsed the information about an item.” Online services do not require users to give explicit numerical ratings, which are often harder to gather than binary ones.

We observe only positive ratings r = 1; negative ratings r = 0 do not exist in training data. Taking browsing logs as an example, the data include the items that are browsed by a user (i.e., positive examples). Items not being in the browsing data could imply that they are either absolutely unattractive (r = 0) or just unknown (r ∈ {0, 1}) to the user. One-class collaborative filtering methods are proposed to address the problem. Such methods often claim two assumptions:

• An item must be attractive to a user (r = 1) as long as the user has seen the item.

• Since we cannot distinguish between the two reasons (absolutely unattractive or just unknown) why an item is unseen, such methods suppose that all the unseen items are less attractive (r = 0). However, the number of unseen items is practically much larger than that of seen items. To alleviate the problem of learning bias toward r = 0 together with learning speed, we exploit negative sampling, which sub-samples partial unseen ratings for training.

To build an objective function satisfying the above assumptions, we can choose either pointwise learning (section 3.5.1) or pairwise learning (section 3.5.2). The Area Under the ROC Curve (AUC), Normalized Discounted Cumulative Gain (NDCG), Mean Average Precision (MAP), precision, and recall are often used to justify the quality of recommender systems for binary ratings.

3.5. Recommendation Goals

Any recommender system needs human intervention to set up a training goal. Since collaborative filtering-based recommender systems rely on ratings, the most straightforward goal is to infer what rating will be given by a user for an unseen item, called rating prediction. If the ratings of every item can be accurately predicted, then for any user, a recommender system can just sort and recommend items based on highest predicted rating. In machine learning, such a goal for model-based recommender systems can be described as pointwise learning. That is, given a user-item pair, a pointwise learning recommendation model directly minimizes the error of predicted ratings and true ones. The related mathematical details are presented in section 3.5.1.

However, in general, our ultimate goal is to recommend unseen items to users without being concerned about how these items are rated. All unseen items in pointwise learning are finally ranked in descending order of their ratings. In other words, what we truly care about is the order of ratings, but not the true rating values. Also, some research papers figure out that a low error of rating prediction is not always equivalent to a high quality of recommended item lists. Recent model-based collaborative filtering models have begun to set optimization goals of item ranking. That is, for the same user, such models maximize the differences between high-rated items and low-rated ones in training data. The implementation of item ranking includes pairwise learning and listwise learning in machine-learning domains. Both learning ideas try to compare the potentially related ranks between at least two items for the same user. section 3.5.2 will present how to define optimization criteria for item ranking.

3.5.1. Rating Prediction: Pointwise Learning

In the training stage, given a ground-truth rating r, a recommender system needs to make a rating estimate that is expected to predict r. Model-based collaborative filtering methods (e.g., matrix factorization) build an objective function to be optimized (either maximization or minimization) for recommendation goals. For numerical ratings r ∈ ℝ (section 3.4.1) of users u to items i, we can minimize the error between the ground truth and the estimate as follows:

δ(R) is the set of training ratings, which are the non-missing entries in rating matrix R. As mentioned in section 3.4.1, if ground-truth ratings r are normalized to [0, 1] in data pre-processing, then in (16) we can put sigmoid function onto rating estimate that fits r more closely. With respect to probability, (16) is equivalent to maximizing normal likelihood:

where is the probability density function of a normal distribution with mean and variance σ2 is a predefined uncertainty between r and . Taking (−log) on (17) will give (16). Evidently the rating prediction problem can be addressed by regression models over ratings R with both (16) and (17).

For binary ratings r ∈ {0, 1} (section 3.4.2), other than (16) with the sigmoid function, such data can also be modeled as a binary classification problem. Specifically, we model r = 1 as the positive set and r = 0 as the negative set. A logistic regression (or Bernoulli likelihood) is then built for rating prediction:

The optimizations of (16) and (17) are based on an evaluation metric: Root Mean Squared Error (RMSE), whose formal definition is as follows:

For convenience of optimization, the regression models eliminate the root function from RMSE, essentially optimizing the MSE. Since the root function is monotonically increasing, minimizing MSE is equivalent to minimizing RMSE (19).

Even though a recommender system elects to optimize (18), the binary classification also attempts to minimize RMSE, except that rating estimate is replaced with sigmoid-applied version . Observing the maximization of (18), we obtain a conclusion: as r = 1, or as r = 0. In other words, (18) tries to minimize the error between and r ∈ {0, 1}, which has the same optimization goal as RMSE (19).

3.5.2. Item Ranking: Pairwise Learning and Listwise Learning

This class of recommendation goal requires a model to correctly rank two items in the training data, even though the model could inaccurately predict the value of a single rating. Since recommender systems are more concerned about item ranking for the same user u than ranking for different users, existing approaches sample item pairs (i, j) where rui > ruj, given fixed user u (i.e., item i is ranked higher than item j for user u), and then let rating estimate pair learn to rank the two items with . In particular, we can use the sigmoid function to model the probabilities in the pairwise comparison likelihood:

Taking (−log) on objective function (20) will give the log-loss function. Bayesian Personalized Ranking (BPR) (Rendle et al., 2009) first investigated the usage and the optimization of (20) for recommender systems. BPR shows that (20) maximizes a differentiable smoothness of the evaluation metric Area Under the ROC Curve (AUC):

where T is the number of training instances {(u, i, j) ∣ {rui, ruj} ⊆ δ(R), rui > ruj}. (x) ∈ {0, 1} denotes an indicator function whose output is 1 if and only if condition x is judged true. We show the connection between (20) and (21) below:

Under the condition of argmax, we approximate non-differentiable indicator function (x) by differentiable sigmoid function sig(x). The maximization of (22) is equivalent to optimizing (20) due to the monotonically increasing logarithmic function. AUC evaluates whether all the predicted item pairs follow the ground-truth rating comparisons in the whole item list. Our observations indicate that most of the reviewed approaches based on item ranking build their objective functions with AUC optimization. There are other choices of optimization functions to approximately maximize AUC, such as hinge loss:

In the domain of top-N recommendation, the item order outside top-N ranks is unimportant for recommender systems. Maximizing AUC could fail to recommend items, since AUC gives the same penalty to all items. That is, a recommender system could gain high AUC when it accurately ranks the bottom-N items, but it is not beneficial for real-world recommendation, since a user only pays attention to the top-N items. Listwise evaluation metrics like Mean Reciprocal Rank (MRR), Normalized Discounted Cumulative Gain (NDCG), and Mean Average Precision (MAP) are proposed to give different penalty values to item ranking positions. There have been attempts to optimize differential versions of the above metrics, such as CliMF (Shi et al., 2012b), SoftRank (Taylor et al., 2008), and TFMAP (Shi et al., 2012a).

Our observations of the surveyed papers indicate that recommender systems reading binary ratings (section 3.4.2) prefer to optimize an item-ranking objective function. Compared with numerical ratings (section 3.4.1), a single binary rating reveals less information on a user's absolute preference. Pairwise learning methods can capture more information by modeling a user's relative preferences, because the number of rating pairs rui = 1 > 0 = ruj is more than the number of ratings for each user.

3.6. Summary of Related Work

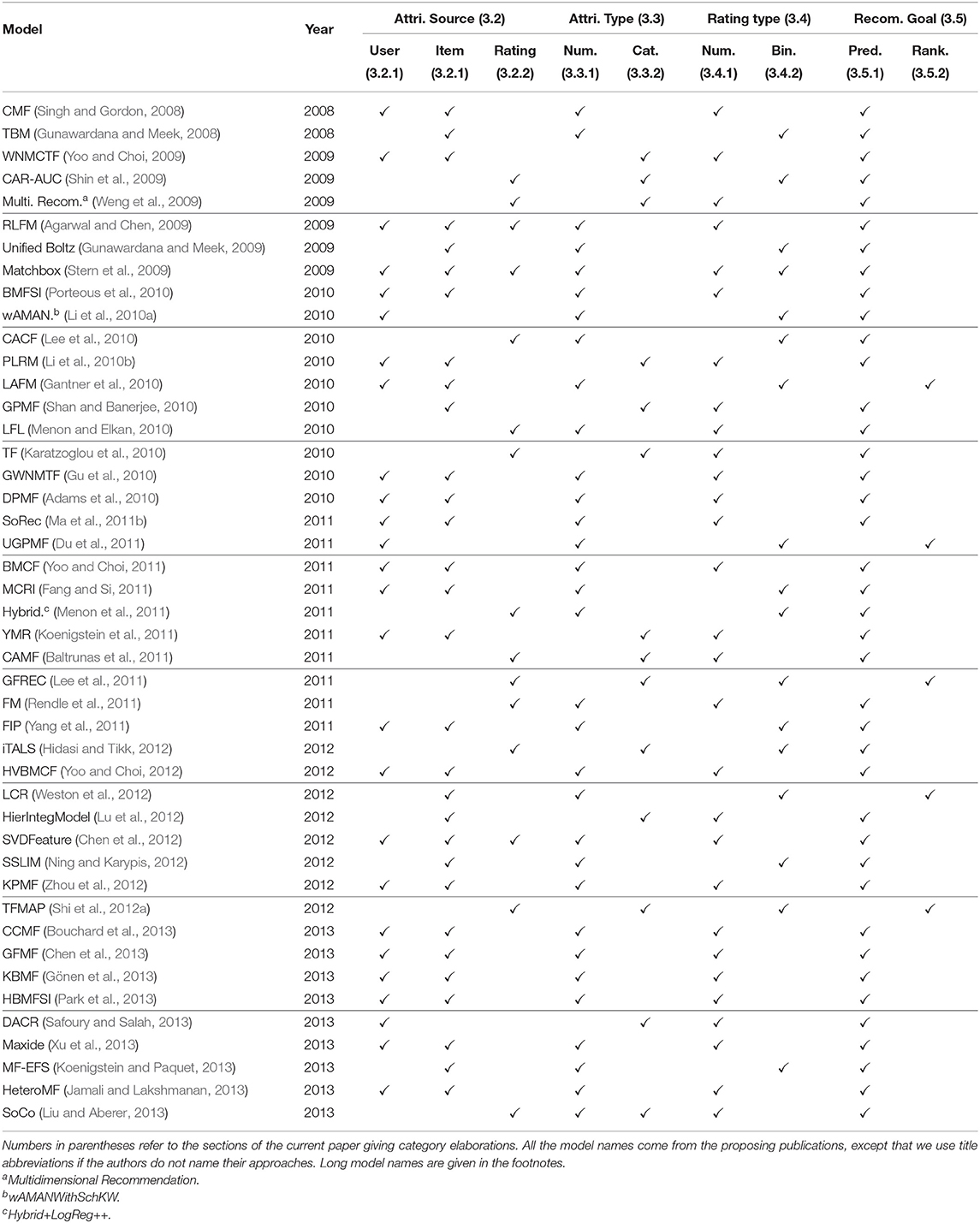

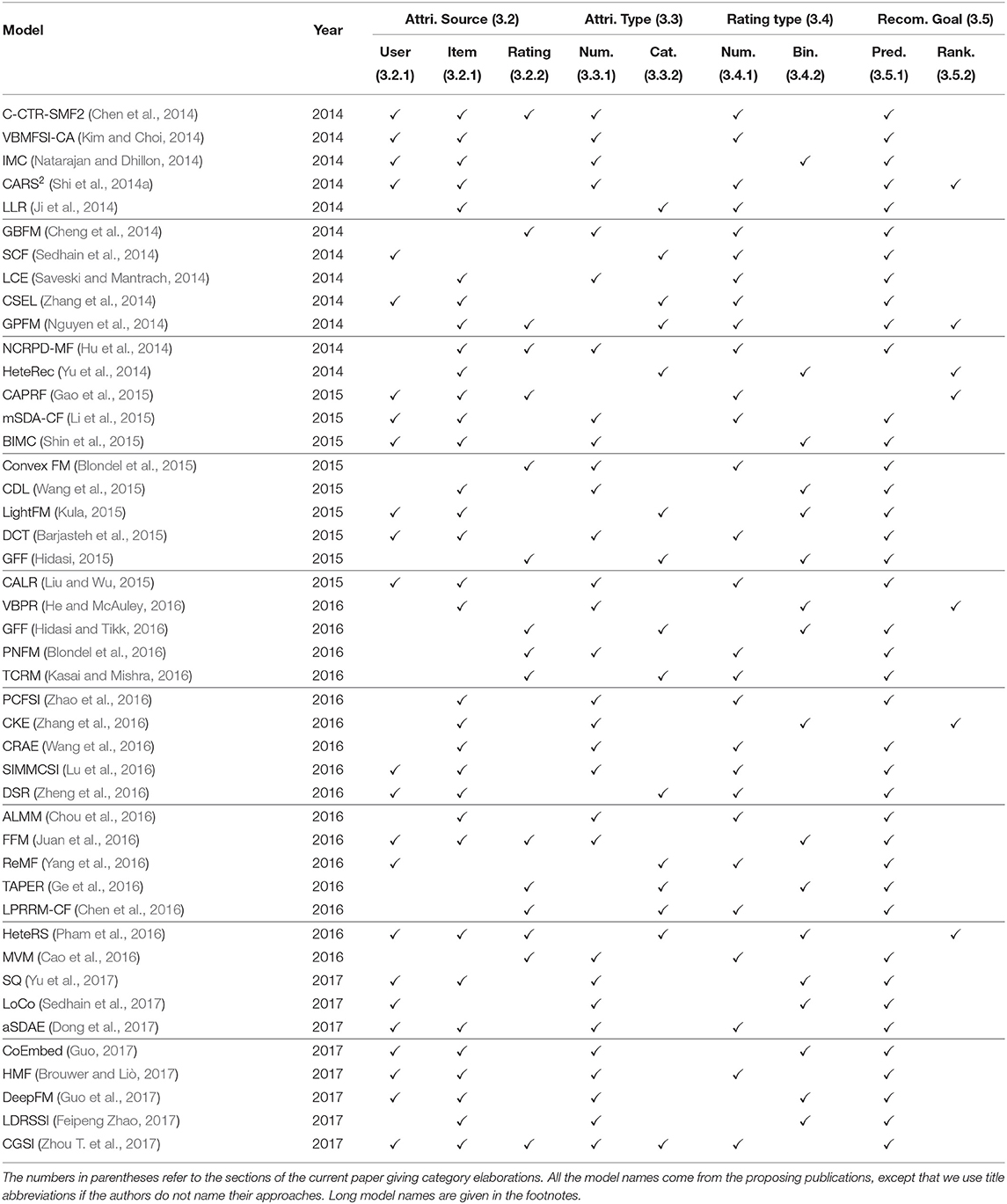

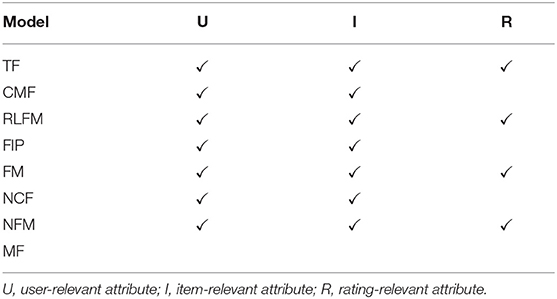

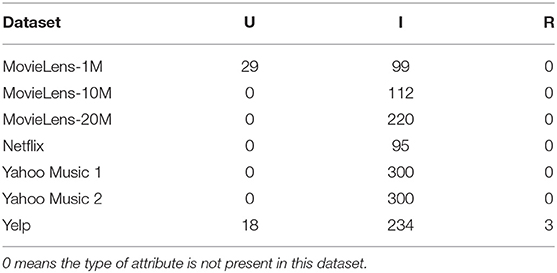

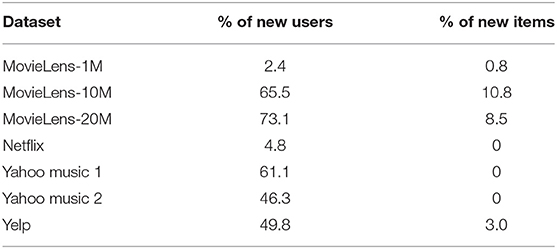

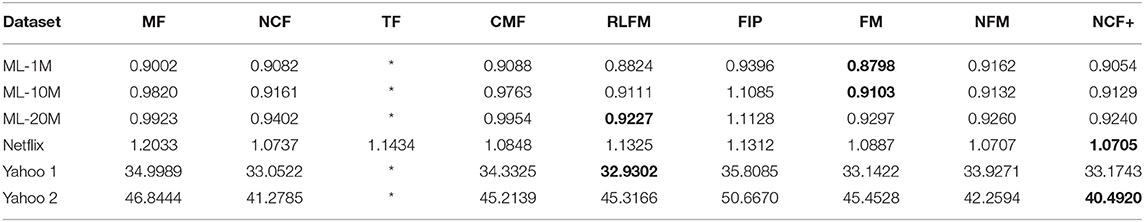

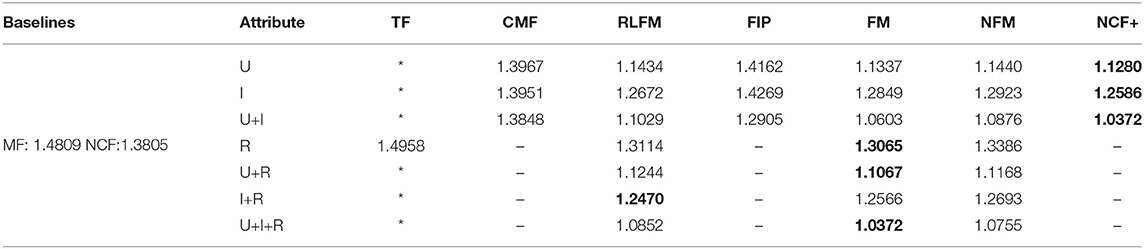

Having introduced the above categories for attribute-aware recommender systems, we list which category each publication we have surveyed belongs to in Tables 2–4. We trace back 10 years to summarize the recent trends in attribute-aware recommender systems.

4. Common Model Designs of Attribute-Aware Recommender Systems

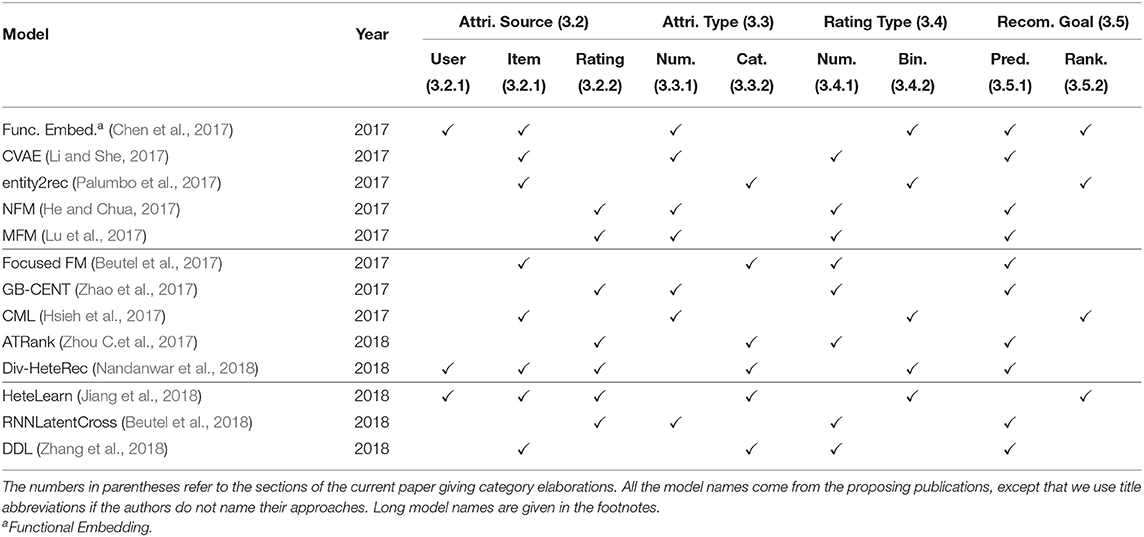

In this section, we formally introduce the common attribute integration methods of existing attribute-aware recommender systems. If collaborative filtering approaches are modeled by user or item latent factor structures like matrix factorization, then attribute matrices become either the prior knowledge of the latent factors (section 4.1) or the generation outputs from the latent factors (section 4.2). On the other hand, some approaches used are actually generalizations of matrix factorization (section 4.3). Besides, the interactions between users and items can be recorded by a heterogeneous network, which can incorporate attributes by simply adding attribute-representing nodes (section 4.4). The major distinction of these four categories lies in the representation of the interactions of users, items, and attributes. The discriminative matrix factorization models extend the traditional MF by learning the latent representation of users or items from the input attribute prior knowledge. Generative matrix factorization further considers the distributions of attributes and learns such together with the rating distributions. Generalized factorization models view the user/item identity simply as a kind of attribute, and various models have been designed for learning the low-dimensional representation vectors for rating prediction. The last category of models propose to represent the users, items, and attributes using a heterogeneous graph, where a recommendation task can be cast into a link prediction task on the heterogeneous graph. We classify each model into these categories in Table 5.

4.1. Discriminative Matrix Factorization

Intuitively, the goal of an attribute-aware recommender system is to import attributes to improve recommendation performance (either rating prediction or item ranking). In the framework of matrix factorization, an item is rated or ranked according to the latent factors of the item and its corresponding users. In order words, the learning of latent factors in classical matrix factorization depends only on ratings. Thus, the learning may fail due to a lack of training ratings. If we can regularize the latent factors using attributes or make attributes determine how to rate items then matrix factorization methods can be more robust to compensate for the lack of rating information in the training data, especially for those users or items that have very few ratings. In the following, we choose to describe attribute participation from probabilistic perspectives. Learning in Probabilistic Matrix Factorization (PMF) tries to maximize the posterior probability p(W, H ∣ R) of two latent factor matrices W (for users) and H (for items) given observed entries of training rating matrix R. Clearly, attribute-aware recommender systems claim that we are given an extra attribute matrix X. Then by Bayes' rule, the posterior probability can be shown as follows:

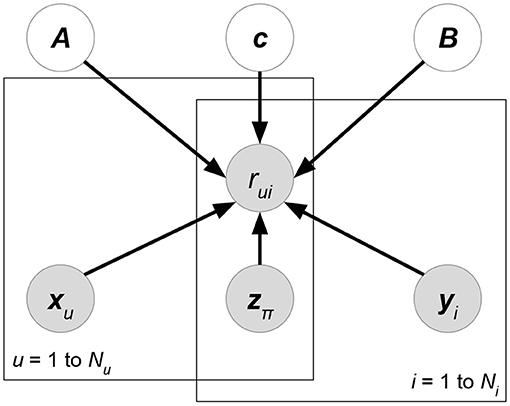

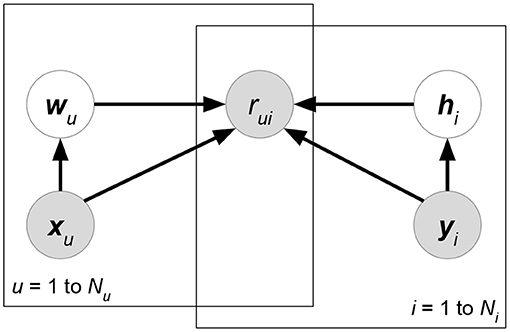

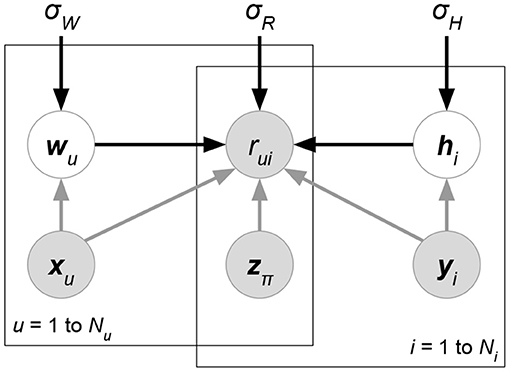

We eliminate the denominator p(R ∣ X) since it does not contain variables W, H for maximization. At the prior part, we follow the independence assumption W⊥H of PMF, though here the independence is given attribute matrix X. Now, compared with classical PMF, both likelihood p(R ∣ W, H, X) and prior p(W ∣ X)p(H ∣ X) could be affected by attributes X. Attributes in the likelihood can directly help predict or rank ratings, while attributes in the priors regularize the learning directions of latent factors. Moreover, some current works assume additional independence between attributes and the matrix factorization formulation. We give graphical interpretation of Discriminative Matrix Factorization in Figure 5. For ease of explanation, we suppose that all the random variables follow a normal distribution with mean μ and variance σ2, or a multivariate normal distribution with mean vector μ and covariance matrix Σ. Theoretically, the following models accept other probability distributions.

Figure 5. Graphical interpretation of a discriminative probabilistic matrix factorization whose attributes X, Y, Z are given for ratings and latent factors. User and item-relevant attributes X, Y could affect the generation of latent factors W, H, or ratings R, while rating-relevant attributes Z typically determine the rating prediction R. The models of this class may eliminate some of the gray arrows to imply additional independence assumptions between attributes and other factors.

We further introduce the sub-categories below.

4.1.1. Attributes in a Linear Model

This is the generalized form to utilize attributes in this category. Given the attributes, a weight vector is applied to perform linear regression together with classical matrix factorization . Its characteristics in mathematical form are shown in likelihood functions:

where θ = {α, β, γ}, while δ(R) denotes the non-missing ratings in the training data, and π(u, i) is the column index corresponding to user u and item i. denote attribute matrices relevant to user, item, and ratings, respectively, while α, β, γ are their corresponding transformation functions, where attribute space is mapped toward the rating space identical with . Most early models select simple linear transformations, i.e., α(x) = a⊤x, β(y) = b⊤y, γ(z) = c⊤z, which has shown a boost in performance, but recent works consider neural networks for non-linear α, β, γ mapping functions. A simple linear regression model can be expressed as a likelihood function of normal distribution with mean μ and variance σ2. Ideally, the distributions of latent factors W, H will have prior knowledge from attributes X, Y, but we have not yet observed an approach aiming at designing attribute-aware priors as the last two terms of (25).

• Bayesian Matrix Factorization with Side Information (BMFSI) (Porteous et al., 2010) is an example in this sub-category. On the basis of Bayesian Probabilistic Matrix Factorization (BPMF) (Salakhutdinov and Mnih, 2008), BMFSI uses a linear combination like (25) to introduce attribute information to rating prediction. It is formulated as:

where θ = {a, b} and δ(R) is the set of training ratings. The difference from (25) is that rating attributes z will be concatenated with either xu or yu, and thus we drop independent weight variable c in BMFSI. We ignore other attribute-free designs of BMFSI (e.g., the Dirichlet process).

4.1.2. Attributes in a Bilinear Model

This is a popular method when two kinds of attributes (usually user and item) are provided. Given user attribute matrix X and item attribute matrix Y, a matrix A is used to model the relation between them. The mathematical form can be viewed as follows:

where θ = {α, β, γ} are transformation functions from attribute space to rating space. In particular, function α learns the interior dependency between user attributes x and item attributes y, while β and γ find the extra factors by which x or y itself affects the rating result. Compared with (25), the advantage of (27) is that it further considers a set of rating factors that come from the intersections between user and item attributes. However, such a modeling approach cannot work if either user attributes or item attributes are not provided by training data. Prior studies commonly select a simple linear form, called bilinear regression:

In fact, as mentioned in Lu et al. (2016), can be absorbed into and written in the form by appending a new dimension whose value is fixed to 1 for each x and y.

Works in this category differ on whether the bilinear term is explicit or implicit. Also, the latent factor matrices W, H are inherently included in the bilinear form. Specifically, (28) implies that the form of the dot product of two linear-transformed attributes wu = Sxu and hi = Tyi since it can be reformed as where A = S⊤T. Some works such as the Regression-based Latent Factor Model (see below) choose to softly constrain wu ≈ Sxu and hi ≈ Tyi using priors p(W ∣ X) and, p(H ∣ Y).

• Matchbox (Stern et al., 2009) (Figure 6). Let X, Y, Z be the attribute matrices with respect to users, items, and ratings, respectively. Matchbox assumes a rating is predicted by the linear combinations of X, Y, Z:

where δ(R) is the set of non-missing entries in rating matrix R. xu, yi represents the attribute set of user u or item i. z(u,i) denotes the rating-relevant attributes associated with user u and item i. Note that (29) defines latent factors W = AX, H = BY, and then we just have to learn the shared weight matrices A, B. The prior distributions of A, B, c are further factorized, which assumes that all the weight entries in these matrices are independent of each other.

• Friendship-Interest Propagation (FIP) (Yang et al., 2011) (Figure 7). Following the notations from the previous RLFM introduction, FIP considers two types of attribute matrices: X and Y. Based on vanilla matrix factorization, FIP encodes attribute information by modeling the potential correlations between X and Y:

where matrix C forms the correlations between attribute matrices X and Y.

• Regression-based Latent Factor Model (RLFM) (Agarwal and Chen, 2009) (Figure 8). Given three types of attribute matrices: user-relevant X, item-relevant Y, and rating-relevant Z, RLFM models them in different parts of biased matrix factorization. X, Y serve as the hyperparameters of latent factors, while Z joins the regression framework to predict ratings together with latent factors. RLFM can be written as:

where θ = {c, d, A, B, α, β, γ} and δ(R) is the set of non-missing ratings for training. Biased matrix factorization adds two vectors c, d to learn the biases for each user or item. Parameters A, B, α, β, γ map attributes with latent factors (for X, Y) or rating prediction (for Z).

4.1.3. Attributes in a Similarity Matrix

In this case, a similarity matrix that measures the closeness of attributes between users or between items is presented. Given the user attribute matrix , where Nu is the number of users and D is the user attribute dimension, a similarity matrix is computed. There are many metrics for similarity calculation, such as Euclidean distance and kernel functions. The similarity matrix is then used for matrix factorization or other solutions. The special quality of this case is that human knowledge is involved in determining how the interactions between attributes should be modeled. One example is Kernelized Probabilistic Matrix Factorization, which utilizes both a user similarity matrix and item a similarity matrix.

• Kernelized Probabilistic Matrix Factorization (KPMF) (Zhou et al., 2012) (Figure 9). Let K, Nu, Ni be the number of latent factors, users, and items. Given user-relevant attribute matrix or item-relevant attribute matrix , we can always obtain a similarity matrix or where each entry stores a pre-defined similarity between a pair of users or items. KPMF then formulates the similarity matrix as the prior of its corresponding latent factor matrix:

Here, we use subscripts wu to denote the u-th column vector of a matrix W, while superscripts wk imply the k-th row vector of W. Intuitively, the similarity matrices control the learning preferences of user or item latent factors. If two users have similar user-relevant attributes (i.e., they have a higher similarity measure in SX), then their latent factors are forced to be closer during the matrix factorization learning.

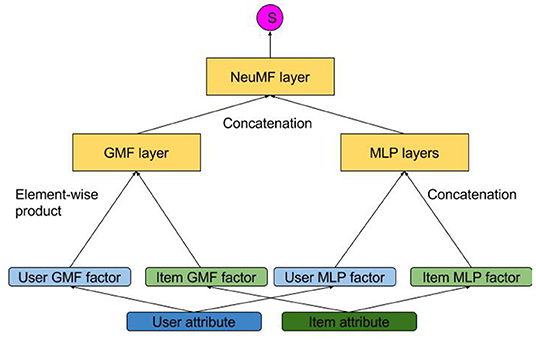

Neural Collaborative Filtering (He et al., 2017) (NCF) is a model that combines a linear structure and a neural network. He et al. (2018) further improves it by using CNN on top of the outer product of user and item embeddings. However, the authors only used user and item one-hot encoding vectors as their input. Though they mentioned that it could be easily modified to accommodate additional attributes, it was not clearly demonstrated. Therefore, we do not include it in either of the categories since it is beyond the scope of our survey (though it is similar to Discriminative Matrix Factorization). We still include NCF as a baseline model in the empirical comparison section, as well as including a slight modification of the model that takes additional attributes as inputs as a competitor. A brief introduction of the model and its variance will be given in section 5.1.1.

4.2. Generative Matrix Factorization

In Probabilistic Matrix Factorization (PMF), ratings are generated by the interactions of user or item latent factors. However, the PMF latent factors are not limited to rating generation. We can also generate attributes from latent factors. Mathematically, using Bayes' rule, we maximize a posteriori as follows:

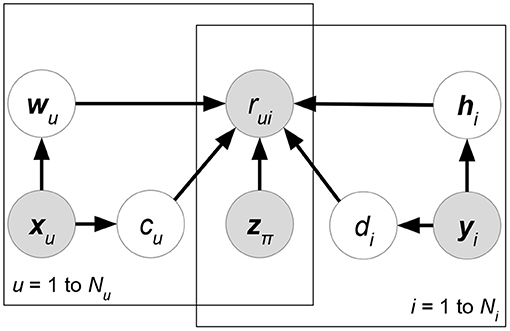

where p(R, X) does not affect the posterior maximization. We again assume independence R⊥X given latent factors W, H in (33), which is commonly adopted in related work. Furthermore, X may share either latent factors W [i.e., p(X ∣ W)] or H [i.e., p(X ∣ H)] with R but not both due to matrix factorization having more generalization capability. We give the graphical interpretation of Generative Matrix Factorization in Figure 10.

Figure 10. Graphical interpretation of generative probabilistic matrix factorization, whose attributes X, Y, Z, together with ratings, are generated or predicted by latent factors. User and item-relevant attributes X, Y could be generated by corresponding latent factors W, H, respectively. Rating-relevant attributes Z are likely to result from both W and H. For models of this class, some of the gray arrows are removed to represent their additional independence assumptions about attribute generation.

The following relevant works are classified in this category. For simplicity, all the probabilities follow a normal distribution, i.e, (i.e., squared loss objective) with mean μ and variance σ2 (or mean vector μ and covariance matrix Σ for multivariate normal distributions). However, the example models are never restricted to within normal distributions.

There are two different branches in this direction. On the one hand, earlier works again use the matrix factorization technique to generate attributes from user or item latent factors. This can be seen as a linear mapping between latent factors and attributes. On the other hand, with the help of deep neural networks, recent works combine matrix factorization and deep autoencoders to realize non-linear mappings for attribute generation. We will introduce them in the following sections.

4.2.1. Attributes in Multiple Matrix Factorization

Similar to PMF R ≈ W⊤H for rating distributions; attributes distributions are modeled using another matrix factorization form. Given user attribute matrix X, item attribute matrix Y, and rating attribute matrix Z, they can be factorized as X ≈ A⊤W, Y ≈ B⊤H of, low rank. Specifically, its objective function is written as:

where δ(R) denotes the non-missing entries of matrix R. The insight of (34) is to share the latent factors W, H in multiple factorization tasks. W is shared with user attributes, while H is shared with item attributes. Z requires the sharing of both W and H due to user- and item-specific rating attributes. Therefore, the side information of X, Y, and Z can indirectly transfer to rating prediction. Auxiliary matrices A, B, and C learn the mappings between latent factors and attributes. With respect to the mathematical form of matrix factorization, the expectation of feature values is linearly correlated with its corresponding latent factors.

• Collective Matrix Factorization (CMF) (Singh and Gordon, 2008) (Figure 11). Here, we introduce a common model in this sub-category. The CMF framework relies on the combination of multiple matrix factorization objective functions. CMF first builds the MF for rating matrix R. User- and item-relevant attribute matrices X, Y are then appended to the matrix factorization objectives. Overall, we have:

where δ(R), δ(X), δ(Y) denote the non-missing entries of matrix R, X, Y that are generated by latent factor matrices W, H, A, B of zero-mean normal priors (i.e., l2 regularization). In (35), W, H are shared by at least two matrix factorization objectives. Attribute information in X, Y is transferred to rating prediction R through sharing the same latent factors. Note that CMF is not limited to three matrix factorization objectives (35).

4.2.2. Attributes in Deep Neural Networks

In deep neural networks, an autoencoder is usually used to learn the latent representation of observed data. Specifically, the model tries to construct an encoder and a decoder , where the encoder learns to map from possibly modified attributes to low-dimensional latent factors, and the decoder recovers original attributes X from latent factors. Moreover, activation functions in autoencoders can reflect non-linear mappings between latent factors and attributes, which may capture the characteristics of attributes more accurately.

To implement an autoencoder, we first generate another attribute matrix from X. could be the same as X or could be different due to corruption, e.g., adding random noise. Autoencoders aim to predict the original X using latent factors that are inferred from generated . Here, attributes serve not only as the generation results X but also as the prior knowledge of latent factors. Let us review Bayes' Rule to figure out where autoencoders appear for generative matrix factorization:

p(R, Y ∣ Ỹ) is eliminated due to irrelevance in maximization of (36). By sharing latent factors W, H between autoencoders and matrix factorization, attribute information can affect the learning of rating prediction. Modeling with normal distributions, we can conclude that the expectation of attributes X is non-linearly mapped from latent factors W, H. Although latent factors have priors from attributes, we categorize relevant works as generative matrix factorization, since we explicitly model attribute distributions in the decoder part of autoencoders.

• Collaborative Deep Learning (CDL) (Wang et al., 2015) (Figure 12). This model uses a combination of collaborative filtering and Stacked Denoising Auto-Encoder (SDAE). Since the model claims to exploit item attributes Y only, in the following introduction we define in (36). In SDAE, input attribute Ỹ is not equivalent to Y due to the addition of random noise to Ỹ. CDL implicitly adds several independence assumptions (R⊥Ỹ ∣ W, H), (Y⊥W ∣ H, Ỹ), (W⊥Ỹ) to formulate its model. Then, using identical notations in CMF introduction, normal distributions are again applied to CDL:

Functions indicate the encoder and the decoder of SDAE. The two functions could be formed by multi-layer perceptrons whose parameters are denoted by θ, ϕ. The distribution of attribute matrix Y to be modeled in the decoder part can be clearly seen. Last but not least, the analysis from (36) to (37) implies that other ideas, user-relevant attributes for example, could be involved naturally in CDL, as long as we remove more independence assumptions.

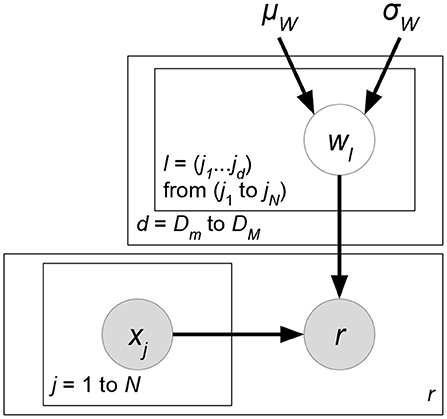

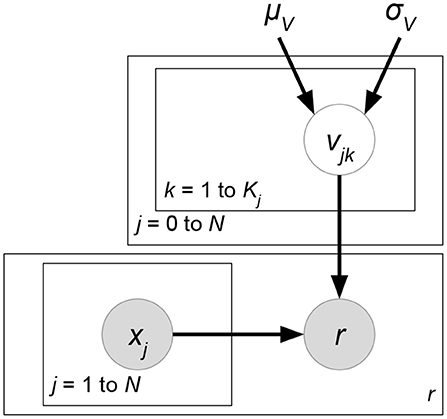

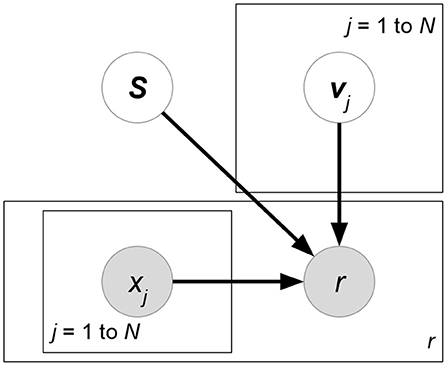

4.3. Generalized Factorization

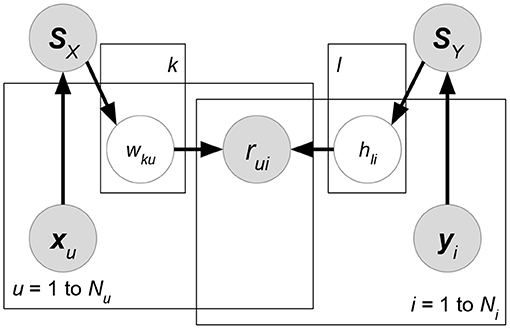

Thanks to the success of matrix factorization in recommender systems, advanced works have emerged on generalizing the concept of matrix factorization in order to extract more information from attributes or interactions between users and items. The works classified in either sections 4.1 or 4.2 propose to design attribute-aware components on the basis of PMF. They explicitly express an assumption of vanilla PMF: the existence of a latent factor matrix W to represent user preferences and another matrix H for items. However, the works classified in this section do not regard W and H as special features of the models. Rather, such works propose an expanded latent factor space shared by users, items, and attributes. Here, neither users nor items are special entities in a recommender system. They are simply considered as categorical attributes. Taking rating rui as an example, it implies that we have a one-hot user-encoding vector where all the entries are 0 except for the u-th entry; similarly, we also have a one-hot item-encoding vector of the i-th entry, 1. Thus, external attributes X can be involved simply in the matrix-factorization-based models, because now users and items are also attributes whose interactions commonly predict or rank ratings. We first propose the most generalized version of interpretation: Given a rating r and its corresponding attribute vector x ∈ ℝN, then we make rating estimate (Figure 13):

where δ(R) indicates the set of observed ratings in training data. Variable d ∈ {0} ∪ ℕ determines the dth-order multiplication interaction between attributes xj. As d = 0, we introduce an extra bias weight w0 ∈ ℝ in (38). The large number of parameters w ∈ ℝ is very likely to overfit training ratings due to the dimensionality curse. To alleviate overfitting problems, the ideas in matrix factorization are applied here. For higher values of d, it is assumed that each w is a function of low-dimensional latent factors:

where implies the K-dimensional (Kj ≪ N∀j) latent factor or representation vector for each element xj of x (Figure 14). Function fd maps these d vectors to a real-valued weight. Our learning parameters then become v. The overall number of parameters (Dm ≤ d ≤ DM) decreases from to where . Next, we prove that matrix factorization is a special case of (38). Let Dm = DM = 2 and x be the concatenation of one-hot encoding vectors of users as well as items. Also, we define . Then, for rating rui of user u to item i, we have:

where Nu denotes the number of users. Equation (40) is essentially equivalent to matrix factorization. In this class, the existing works either generalize or improve two early published models: Tensor Factorization (TF) (Figure 15) and the Factorization Machine (FM) (Figure 16). Both models can be viewed as special cases of (38). We introduce TF and FM in the sections below.

4.3.1. TF-Extended Models

Tensor Factorization (TF) (Karatzoglou et al., 2010) requires the input features to be categorical. Attribute vector x ∈ {0, 1}N is the concatenation of D one-hot encoding vectors. (D − 2) categorical rating-relevant attributes form their own binary one-hot representations. The additional two one-hot vectors represent IDs of users and items, respectively. As a special case of (38), TF fixes Dm = DM = D to build a single D-order interaction between attributes. Since weight function fD in (39) allows individual dimensions Kj for each latent factor vector vj, TF defines a tensor to exploit the tensor product of all latent factor vectors. In sum, (38) is simplified as follows:

where function f(·) = < · > denotes the tensor product. Note that attribute vectors x in TF must consist of exact C 1s due to one-hot encoding. Therefore, there exists only the matches j1 = l1, j2 = l2, …, jD = lD where all the attributes in these positions are set to 1.

4.3.2. FM-Extended Models

The Factorization Machine (FM) (Rendle et al., 2011) allows numerical attributes x ∈ ℝN as input, including one-hot representations of users and items. Although higher-order interactions between attributes could be formulated, FM focuses on at most second-order interactions. To derive FM from (38), let 0 = Dm ≤ d ≤ DM = 2 and in (39) be applied for the second-order interaction. We then begin to simplify (38):

which is exactly the formulation of FM. Note that FM implicitly requires all the latent factor vectors v to be of the same dimension K; however, this requirement could be removed from the viewpoint of our general form (38). Models in this category mainly differ in two aspects. First, linear mapping can be replaced by deep neural networks, which allows non-linear mapping of attributes. Second, FM only extracts first-order and second-order interactions. Further works such as Cao et al. (2016) extract higher-order interactions between attributes.

4.4. Modeling User-Item-Rating Interactions Using Heterogeneous Graphs

We notice several relevant works that perform low-rank factorization or representation learning in heterogeneous graphs, such as (Lee et al., 2011; Yu et al., 2014; Pham et al., 2016; Zheng et al., 2016; Palumbo et al., 2017; Jiang et al., 2018; Nandanwar et al., 2018). Note that these works do not require graph-structured data. Instead, they model the interactions between user, item, rating, and attribute as a heterogeneous graph. The interactions of users and items can be represented by a heterogeneous graph with two node types. An edge is unweighted for implicit feedback and weighted for explicit opinions. External attributes are typically leveraged by assigning them extra nodes in the heterogeneous graph. A heterogeneous graph structure is more suitable for categorical attributes, since each candidate value of attributes can be naturally assigned a node.

In heterogeneous graphs, recommendation can be viewed as a link prediction problem. Predicting a future rating corresponds to forecasting whether an edge will be built between user and item nodes. The existing works commonly adopt a two-stage algorithm to learn the model. First, we apply a random-walk or a meta-path algorithm to gather the similarities between users and items from a heterogeneous graph. The similarity information can be kept as multiple similarity matrices or network embedding vectors. Then, a matrix factorization model or other supervised machine learning algorithms are applied to extract discriminative features from the gathered similarity information, which is used for future rating prediction. Another kind of method is to first define the environment where ranking or similarity algorithms are applied. Defining the environment refers to either determining the heterogeneous graph structures or learning the transition probabilities between nodes from observed heterogeneous graphs. Having the environment, we can apply an existing algorithm (Rooted PageRank, for example) or a proposed method to gain the relative ranking scores for each item. In other words, the main difference between the two kinds of methods is whether the similarity calculation is in the first stage or the second stage. Both kinds of methods as abovementioned can be unified as a constrained likelihood maximization:

where a parameterized function fθ is specifically defined to estimate a similarity score s(u, i) of item i, given user u as a query. The calculation of a similarity score comes from the set ℙu,i of random walks or paths w from node u to i in the heterogeneous graph. The generation of ℙu,i considers the attribute node set X. Either or both of the likelihood and the constraint may involve the information of observed ratings δ(R) of rating matrix R for likelihood maximization or similarity calculation. In our estimation, the current heterogeneous-graph-based models do not directly solve the constrained optimization problem (Equation 43). Commonly, they first exploit a two-stage solution that either solves the likelihood maximization or satisfies the similarity constraint. The output is then cast into the other part of (Equation 43). With different definitions of fθ and p, the two-stage process may run only once or iteratively until convergence. The definition of s(u, i) in the surveyed papers includes PageRank (Lee et al., 2011; Jiang et al., 2018), PathSim (Yu et al., 2014), and so on. The likelihood function p guides the similarity-related parameters θ to fit the distribution objective of observed similarities s or ratings R. The objective may be given attributes X as auxiliary learning data. Minor works like (Lee et al., 2011) do not optimize the likelihood; instead, they directly compute the similarity constraint with pre-defined θ from a specifically designed heterogeneous graph.