ORIGINAL RESEARCH article

Front. Psychol., 21 September 2011

Sec. Psychology of Language

volume 2 - 2011 | https://doi.org/10.3389/fpsyg.2011.00222

This article is part of the Research Topic Near-Infrared Spectroscopy: Recent Advances in Infant Speech Perception and Language Acquisition Research

Previous research has shown that by the time of birth, the neonate brain responds specially to the native language when compared to acoustically similar non-language stimuli. In the current study, we use near-infrared spectroscopy to ask how prenatal language experience might shape the brain response to language in newborn infants. To do so, we examine the neural response of neonates when listening to familiar versus unfamiliar language, as well as to non-language stimuli. Twenty monolingual English-exposed neonates aged 0–3 days were tested. Each infant heard low-pass filtered sentences of forward English (familiar language), forward Tagalog (unfamiliar language), and backward English and Tagalog (non-language). During exposure, neural activation was measured across 12 channels on each hemisphere. Our results indicate a bilateral effect of language familiarity on neonates’ brain response to language. Differential brain activation was seen when neonates listened to forward Tagalog (unfamiliar language) as compared to other types of language stimuli. We interpret these results as evidence that the prenatal experience with the native language gained in utero influences how the newborn brain responds to language across brain regions sensitive to speech processing.

It is well known that the adult brain is specialized in its response to native language (Perani et al., 1996; Dehaene et al., 1997). Recent evidence has suggested that the human brain is tuned to language from the earliest stages of development. Only a few days after birth, neonates respond differently to language than to non-linguistic sounds. Very young infants demonstrate a preference for listening to speech over non-speech (Vouloumanos and Werker, 2007), and are capable of discriminating languages from different rhythmical classes (Mehler et al., 1988; Nazzi et al., 1998; Ramus et al., 2000). However, what is unknown from past research is the extent to which early prenatal experience with language may play a role in determining the organization of neonates’ neural tuning for language. In particular, no one has yet investigated whether the experience that neonates have with the native language while in utero influences the pattern and location of brain activity to familiar versus unfamiliar language. In the current study, we use near-infrared spectroscopy (NIRS) to take the first steps in exploring this question.

Research to date examining the neonate brain response to language versus non-language has shown that brain responses to familiar language are both stronger and more specialized when compared to the response to non-language (Dehaene-Lambertz et al., 2002, 2010; Pena et al., 2003). Using behavioral methods, a left hemisphere advantage for language has been inferred through dichotic listening to individual syllables as measured by high-amplitude sucking in newborns (Bertoncini et al., 1989), as well as through mouth asymmetries during babbling in 5 to 12-month-olds (Holowka and Petitto, 2002). In neuroimaging research, optical imaging studies with newborns have shown a greater left hemisphere response to audio recordings of forward versus backward speech (Pena et al., 2003), as well as evidence that the left hemisphere plays an important role in processing repetition structures in language (e.g., ABB versus ABC syllable sequences; Gervain et al., 2008). Similarly, fMRI studies with infants 2–3 months of age indicate differential responses in the left hemisphere to continuous forward versus backward speech (Dehaene-Lambertz et al., 2002), and to speech versus music (Dehaene-Lambertz et al., 2010). These functional studies are supported by structural MRI analyses indicating asymmetries at birth in the left hemisphere language areas of the brain (Dubois et al., 2010). All of the above studies, however, have focused on young infants’ neural response to familiar language, leaving open the question of how much responses may have been driven by language experience.

At birth, neonates are experiencing extra-uterine language for the first time. However, in utero they have had the opportunity to learn about at least some of the properties of language. The peripheral auditory system is mature by 26 weeks gestation (Eisenberg, 1976), and the properties of the womb are such that the majority of low-frequency sounds (less than 300 Hz) are transmitted to the fetal inner ear (Gerhardt et al., 1992). The low-frequency components of language that are transmitted through the uterus include pitch, some aspects of rhythm, and some phonetic information (Querleu et al., 1988; Lecanuet and Granier-Deferre, 1993). Moreover, the fetus has access to the mother’s speech via bone conduction (Petitjean, 1989). There is evidence that the fetus can hear and remember language sounds even before birth. Fetuses respond to and discriminate speech sounds (Lecanuet et al., 1987; Zimmer et al., 1993; Kisilevsky et al., 2003). Moreover, newborn infants show a preference for their mother’s voice at birth (DeCasper and Fifer, 1980) and show behavioral recognition of language samples of children’s stories heard only during the pregnancy (DeCasper and Spence, 1986). Finally, and of particular interest to our work, newborn infants born to monolingual mothers prefer to listen to their native language over an unfamiliar language from a different rhythmical class (Mehler et al., 1988; Moon et al., 1993). These studies suggest that infants may have learned about the properties of the native language while still in the womb.

In a recent extension of the work showing a preference for the native language at birth, Byers-Heinlein et al. (2010) investigated how prenatal bilingual experience influences language preference at birth. Infants from 0 to 5 days of age born to either monolingual English or bilingual English–Tagalog mothers were tested in a high-amplitude sucking procedure. Infants were played sentences in both English (a stress-timed language) and Tagalog (a Filipino language that is syllable-timed). Sentences from both languages were low-pass filtered (to a 400-Hz cut-off) to maintain the rhythmical information of each language while eliminating most surface segmental cues that may be different across languages. Byers-Heinlein et al. (2010) found that while all infants could discriminate English and Tagalog, the monolingual-exposed infants showed a preference for only English and the bilingual-exposed infants had a similar preference for both English and Tagalog. These results provide strong evidence that language preference at birth is influenced by the language heard in utero, even when infants have had prenatal experience with multiple languages. The neural correlates of this behavioral preference for familiar language(s) at birth are, however, unknown.

A recent neuroimaging study of infants’ processing of speech and non-speech has provided some support for the hypothesis that language experience may impact early neural specialization for processing some aspects of language (Minagawa-Kawai et al., 2011). Using NIRS, 4-month-old Japanese infants’ brain response was assessed while listening to a familiar language (Japanese), to an unfamiliar language (English), and to different non-speech sounds (emotional voices, monkey calls, and scrambled speech). Greater left hemisphere activation was reported for both familiar and unfamiliar language when compared to the non-speech conditions. Critically, activation was also significantly greater to the familiar language when compared to the unfamiliar language. This latter finding implies that by 4 months of age, the young brain responds differently to familiar versus unfamiliar language and is thus influenced by language experience. However, the infants studied by Minagawa-Kawai et al. (2011) were 4 months of age – meaning that these infants have dramatically more experience with their native language than newborn infants. It is unknown whether infants with only a few hours of post-natal experience will show a similar difference in neural activation to a familiar versus an unfamiliar language.

In contrast to the above studies demonstrating the impact of language experience on newborn infants’ language processing, other areas of research have uncovered aspects of language perception that appear unaffected by specific language experience early in development. For example, neonates’ rhythm-based language discrimination has been shown to be based on language-general abilities. Phonologists have traditionally classified the world’s languages into three main rhythmic categories: stress-timed (e.g., English, Dutch), syllable-timed (e.g., Spanish, French), and mora-timed (e.g., Japanese). This distinction is critically important to language learning as rhythmicity is associated with word order in a language (Nespor et al., 2008), rendering it one of the most potentially informative perceptual cues for bootstrapping language acquisition. Recent cross-linguistic investigations have more finely quantified the distinction between rhythmical classes, finding that languages fall into rhythmical class on the basis of two parameters: percent vowel duration within a sequence and the standard deviation of the duration of consonantal intervals (Ramus et al., 1999; see also Grabe and Low, 2002 for a different measurement scheme).
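The two rhythm parameters named above can be illustrated with a short sketch. The interval durations below are invented for illustration and are not taken from Ramus et al. (1999) or from the present stimuli; `rhythm_metrics` is a hypothetical helper name, and the use of the population standard deviation is an assumption.

```python
from statistics import pstdev

def rhythm_metrics(intervals):
    """Compute (%V, delta-C) for one utterance.

    intervals: list of (kind, duration_s) pairs, where kind is "V" for a
    vocalic interval and "C" for a consonantal interval.
    """
    vowel = [d for kind, d in intervals if kind == "V"]
    cons = [d for kind, d in intervals if kind == "C"]
    total = sum(vowel) + sum(cons)
    percent_v = 100.0 * sum(vowel) / total   # percent vowel duration
    delta_c = pstdev(cons)                   # SD of consonantal intervals (assumed population SD)
    return percent_v, delta_c

# Invented segmentation of a short utterance (durations in seconds).
utterance = [("C", 0.10), ("V", 0.10), ("C", 0.20),
             ("V", 0.10), ("C", 0.10), ("V", 0.20)]
percent_v, delta_c = rhythm_metrics(utterance)
print(f"%V = {percent_v:.1f}, deltaC = {delta_c:.4f} s")
```

On this kind of measure, an utterance with more variable consonantal intervals and a smaller vowel proportion would sit closer to the stress-timed end of the space.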

In a long series of studies, it has been demonstrated that young infants are able to discriminate languages from different rhythmical classes (Mehler et al., 1988; Nazzi et al., 1998; Ramus et al., 2000). This ability does not depend on familiarity with one or both of the languages being tested. Infants with prenatal experience with a single language can discriminate the native language from a rhythmically dissimilar unfamiliar language (Mehler et al., 1988), as well as discriminate two unfamiliar rhythmically different languages (Nazzi et al., 1998). Further, infants with prenatal bilingual exposure are able to discriminate their two native languages when those languages are from different rhythmical classes, even though both languages are familiar (Byers-Heinlein et al., 2010). These findings show that rhythm-based language discrimination in newborns is not based on experience with the native language, but instead on initial universal biases. It therefore may be the case that the early neural response to language in neonates also reflects similar language-universal processing.

The goal of the present study was to test whether neonates’ early brain specialization for language is driven exclusively by a universal preparation for language, or whether there is influence from prenatal language experience. To test these competing hypotheses, we measured the patterns and location of neonates’ brain response to a familiar (the primary language heard in utero) versus an unfamiliar language. Building on previous research, we compared the pattern and location of neural responses to forward speech versus backward speech in both a familiar and an unfamiliar language. We tested newborn infants, an age group that has not previously been tested for the influence of listening experience on neural organization.

We employed NIRS to measure neural activity in neonates when listening to familiar and unfamiliar language. Participants in the current study were born to monolingual English-speaking mothers, and each infant was tested in four language conditions. In two forward-language conditions, neonates were played sentences of adult-directed English and Tagalog. In two backward-language control conditions, infants were played the same English and Tagalog sentences reversed. Backward speech has often been used in neuroimaging studies exploring the brain response to language versus non-language, including both studies with adults (Perani et al., 1996; Carreiras et al., 2005) and infants (Dehaene-Lambertz et al., 2002; Pena et al., 2003). Backward language is believed to be a useful non-linguistic control because it matches the forward language in both intensity and pitch, but is distinctly non-linguistic, as humans are unable to produce some of the sound sequences created (such as backward aspirated stops), because words in backward speech do not have a proper syllable form, and because the prosodic structure of sentences is disturbed. As a consequence, infants fail to discriminate a pair of reversed languages despite succeeding in differentiating the same pair of forward-played languages (Mehler et al., 1988). This suggests that backward spoken language does not carry the same linguistic relevance as forward speech (Mehler et al., 1988; Ramus et al., 2000).

The language stimuli used in the current study were the identical low-pass filtered sentences used in the previously described behavioral study investigating language preference and discrimination in neonates conducted by Byers-Heinlein et al. (2010). Low-pass filtered speech has been used by many cross-linguistic preference and discrimination studies (Mehler et al., 1988; Nazzi et al., 1998; Byers-Heinlein et al., 2010), as the filtering is believed to eliminate surface acoustic and phonetic cue differences between languages that may lead to irrelevant preferences. The filtering allows much of the rhythmical structure of the language to remain intact, and infants’ ability to use rhythmical information to discriminate between languages is thought to remain the same across unfiltered and filtered language (Mehler et al., 1988). Filtered speech was used in the present study as it likely mimics the properties of language perceived in utero (Lecanuet and Granier-Deferre, 1993). As such, we expected that the neonate response to filtered speech should reflect how initial prenatal experience might shape the neural response to speech. It should be noted, however, that while there is considerable behavioral work on language preference and discrimination using filtered speech, ours is the first study to use filtered speech in a neuroimaging study addressing these questions. Given that some of the features of speech important to neural localization may be removed in filtering the stimulus (i.e., the fast phonetic change dynamics; Zatorre and Belin, 2001; Poeppel, 2003; Zatorre and Gandour, 2007), we anticipated that the neural response to filtered speech might differ from patterns of results found previously with unfiltered speech.

Twenty full-term, healthy neonates (ranging in age from 0 to 3 days, mean age = 1.6 days) born to English-speaking mothers were included in the analyses. All mothers reported speaking at least 90% English during their pregnancy, and no Tagalog (19 mothers reported speaking 100% English, and one mother 90% English and 10% Romanian). An additional 10 infants were tested, but were excluded due to the infant becoming awake or fussy and failing to complete the procedure (5), equipment failure (2), insufficient analyzable data (2), or parental interference (1). All infants were tested while asleep or during a quiet state of wakefulness. All parents of infants gave informed consent prior to beginning the experiment.

The language samples used in the current study were taken from those used by Byers-Heinlein et al. (2010). Stimuli consisted of six English sentences and six Tagalog sentences recorded by adult native speakers of each language and spoken in an adult-directed manner. All sentences were matched in pitch, duration, and number of syllables. Sentences were low-pass filtered to 400 Hz to remove surface segmental cues while maintaining rhythmical structure and prosody. Backward-language sentences were formed by reversing the English and Tagalog sentences using Praat (Boersma and Weenink, 2011). Sentence lengths in English ranged from 3.28 to 4.09 s, with a mean of 3.55 s. Sentence lengths in Tagalog ranged from 3.07 to 4.19 s, with a mean of 3.61 s.
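The two stimulus manipulations, low-pass filtering and time reversal, can be sketched as follows. This is not the procedure used to build the stimuli (the reversal was done in Praat, and the filter design is not specified in the text); the brick-wall FFT filter, the function names, and the synthetic two-tone signal are all assumptions chosen to keep the example self-contained.

```python
import numpy as np

def low_pass_fft(signal, fs, cutoff_hz=400.0):
    """Crude brick-wall low-pass: zero every FFT bin above cutoff_hz.

    A stand-in for whatever filter was actually used; real stimulus
    preparation would normally use a filter with a smoother roll-off.
    """
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    spectrum[freqs > cutoff_hz] = 0.0
    return np.fft.irfft(spectrum, n=len(signal))

def reverse(signal):
    """Backward version of a waveform: the same samples in reverse order."""
    return signal[::-1].copy()

# Synthetic "sentence": a 200 Hz component (kept) plus a 1000 Hz component (removed).
fs = 8000                          # sampling rate in Hz (assumed)
t = np.arange(fs) / fs             # 1 s of samples
low = np.sin(2 * np.pi * 200 * t)
high = np.sin(2 * np.pi * 1000 * t)

filtered = low_pass_fft(low + high, fs)
backward = reverse(filtered)

# Reversal leaves overall intensity (RMS) unchanged, which is one reason
# backward speech is a convenient acoustic control.
rms_forward = np.sqrt(np.mean(filtered ** 2))
rms_backward = np.sqrt(np.mean(backward ** 2))
```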

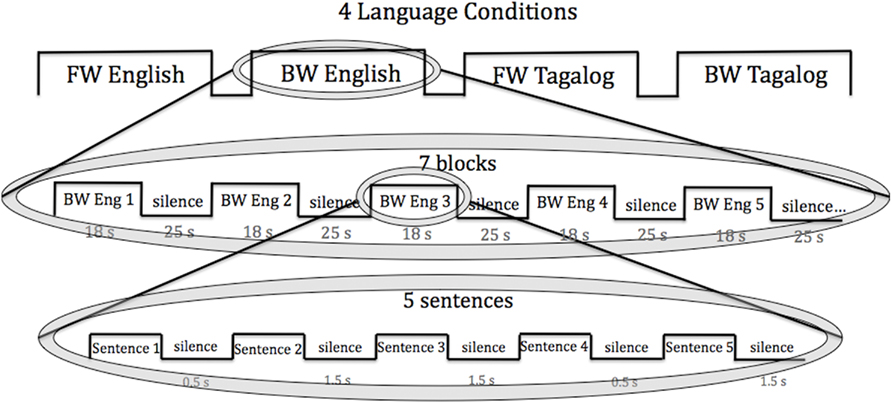

Each infant was tested in all four language conditions: forward English, forward Tagalog, backward English, and backward Tagalog. The conditions were randomly ordered across infants and presented consecutively. The blocked design was chosen as it has been used by many infant NIRS studies (e.g., Pena et al., 2003; for a review see Gervain et al., 2011). Each condition lasted 5.6 min. Within each condition, stimuli were organized within seven blocks that each lasted 18–20 s. Each block consisted of five sentences. There were six sentences in total for each language, and for each block five different sentences were randomly selected. Within a block, the five sentences were separated by brief pauses of variable length (0.5–1.5 s), following Gervain et al. (2008). Blocks were separated from each other by 25–35 s of silence. The total testing time for each infant was 22.4 min. The block design used is presented in Figure 1.

Figure 1. The block design used in the current study. Each infant was exposed to all four language conditions (FW, BW English; FW, BW Tagalog). Within each language condition, infants heard seven language blocks of five sentences. Each sentence was 3–4 s in length.
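The timing figures reported for the block design can be checked with simple arithmetic. The sketch below assumes that each of the seven blocks is paired with exactly one silent interval; the text does not state whether a condition starts or ends with silence, so the per-condition range is only approximate.

```python
N_CONDITIONS = 4            # FW/BW English, FW/BW Tagalog
BLOCKS_PER_CONDITION = 7
CONDITION_MIN = 5.6         # reported duration of one condition, in minutes

def condition_minutes(block_s, silence_s, n_blocks=BLOCKS_PER_CONDITION):
    """Duration of one condition if every block is followed by one silence."""
    return n_blocks * (block_s + silence_s) / 60.0

total_min = N_CONDITIONS * CONDITION_MIN   # reported total testing time
shortest = condition_minutes(18, 25)       # shortest blocks and silences
longest = condition_minutes(20, 35)        # longest blocks and silences

print(f"total testing time: {total_min:.1f} min")
print(f"one condition: between {shortest:.2f} and {longest:.2f} min")
```

The reported 5.6 min per condition falls inside the range implied by the block and silence durations, and four conditions give the stated 22.4 min total.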

Neonates were tested in a local maternity hospital, while asleep or at rest in a bassinet. Testing occurred in a silent, private experimental room. A Hitachi ETG-4000 NIRS machine with a source detector separation of 3 cm and two continuous wavelengths of 695 and 830 nm was used to record the NIRS signal, using a sampling rate of 10 Hz. For further technical details regarding the machine, see Gervain et al. (2011).

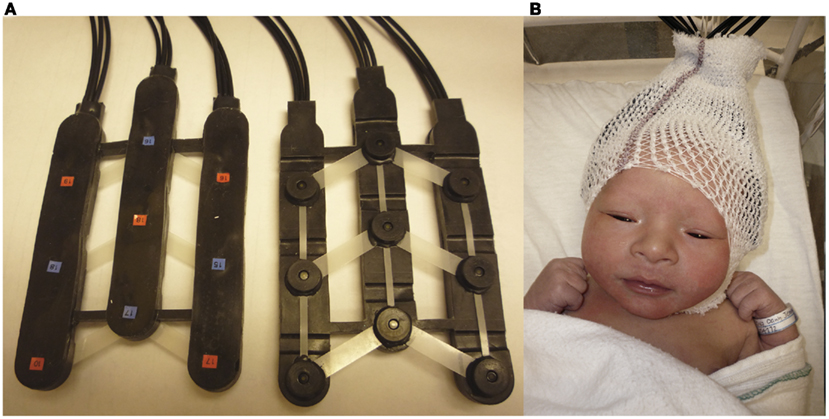

Two chevron-shaped probes were used, each consisting of nine 1 mm optical fibers. Of these nine fibers, five were emitters and four detectors. As such, there were 12 recording channels in each probe. One probe set was placed over the perisylvian area of the neonate’s scalp of the left hemisphere, with the second probe set over the symmetrical area of the right hemisphere. The chevron shape of the probes was situated to nestle above the infant’s ears (see Figure 2 for image of the probes, and probes placed on infant; see Figure 3 for probe configuration). A stretchy cap was used to keep the probes in place. The NIRS machine used a laser power of 0.75 mW.

Figure 2. (A) Picture of chevron-shaped probes used. (B) Picture of a neonate with probes placed upon the head.

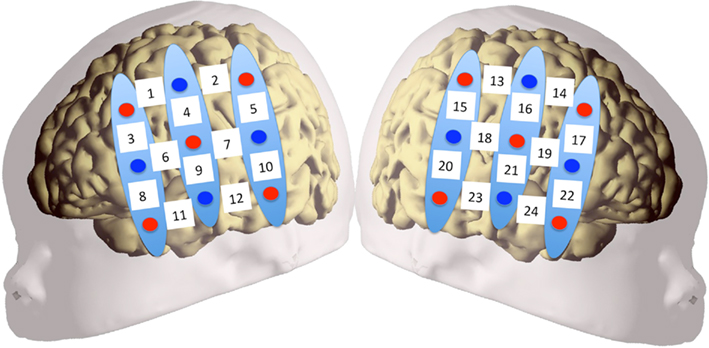

Figure 3. Configuration of probe sets overlaid on a schematic infant head. Red circles indicate emitter fibers, while blue circles indicate detectors. Separation in all emitter–detector channels was 3 cm. The probes were placed on the participants’ heads using surface landmarks, such as the ears or the vertex. Channels 1–12 were placed over the left hemisphere, while channels 13–24 were placed over the right. Probes were placed so that the bottom-most channels (11, 12 in LH; 23, 24 in RH) ideally nestled above the infant’s ear.
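The count of 12 channels per probe follows from the optode layout. The sketch below assumes that the nine fibers form a 3 × 3 lattice with emitters and detectors alternating in a checkerboard, and that a channel is any pair of adjacent emitter and detector; the grid coordinates are illustrative and do not reproduce the channel numbering in Figure 3.

```python
from itertools import product

GRID = 3  # assumed 3 x 3 lattice of optodes per probe

# Checkerboard assignment: corners and center are emitters, edge midpoints detectors.
optodes = {
    (r, c): ("emitter" if (r + c) % 2 == 0 else "detector")
    for r, c in product(range(GRID), repeat=2)
}

# A channel is formed by each emitter and each of its adjacent detectors.
channels = []
for (r, c), kind in optodes.items():
    if kind != "emitter":
        continue
    for dr, dc in ((0, 1), (1, 0), (0, -1), (-1, 0)):
        neighbor = (r + dr, c + dc)
        if optodes.get(neighbor) == "detector":
            channels.append(((r, c), neighbor))

n_emitters = sum(kind == "emitter" for kind in optodes.values())
n_detectors = sum(kind == "detector" for kind in optodes.values())
print(n_emitters, n_detectors, len(channels))
```

Under these assumptions the layout yields five emitters, four detectors, and 12 emitter–detector channels, matching the numbers given for each probe.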

A MacBook laptop or a Mac Mini desktop computer running PsyScope X (Build 36) controlled the experiment, playing the language stimuli and sending markers to the NIRS machine. The language stimuli were played through two speakers approximately 1.5 m from the infant’s head. The intensity of the stimuli was set to 70–75 dB.

Analyses were initially conducted on oxyHb and deoxyHb in a time window between 0 and 35 s after stimulus onset to capture the full time course of the hemodynamic response in each block (Gervain et al., 2008). Data were averaged across blocks within the same condition. Data were band-pass filtered between 0.01 and 0.7 Hz to remove low-frequency noise (i.e., slow drifts in Hb concentrations) as well as high-frequency noise (i.e., heartbeat). Movement artifacts were removed by identifying blocks in which a change in concentration greater than 0.1 mmol × mm occurred over a period of 0.2 s (i.e., two samples), and rejecting those blocks. On average, 3.69 blocks were retained for data analysis in the English FW, 3.17 in the English BW, 3.46 in the Tagalog FW, and 3.61 in the Tagalog BW condition. For all retained blocks, a baseline was established by linearly fitting the 5 s preceding the onset of the block and the 5 s beginning 15 s after the end of the block. This timeline is used to allow the hemodynamic response function that occurs in response to the experimental stimuli to return to the original steady state (Pena et al., 2003; Gervain et al., 2008).
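The artifact-rejection rule and the linear baseline can be sketched in a few lines. This is an illustration of the two steps as described, not the analysis code used in the study; the function names and the synthetic trace are assumptions, and band-pass filtering is omitted.

```python
FS_HZ = 10                  # sampling rate of the NIRS signal
ARTIFACT_THRESHOLD = 0.1    # mmol x mm
ARTIFACT_LAG = 2            # two samples = 0.2 s at 10 Hz

def has_motion_artifact(trace, threshold=ARTIFACT_THRESHOLD, lag=ARTIFACT_LAG):
    """True if the signal changes by more than threshold within lag samples."""
    return any(abs(trace[i + lag] - trace[i]) > threshold
               for i in range(len(trace) - lag))

def fit_line(xs, ys):
    """Ordinary least-squares slope and intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sxx
    return slope, mean_y - slope * mean_x

def baseline_correct(trace, onset, block_end, fs=FS_HZ):
    """Subtract a line fitted to the 5 s before onset and the 5 s that
    start 15 s after the end of the block (indices are in samples)."""
    post_start = block_end + 15 * fs
    idx = list(range(onset - 5 * fs, onset)) + list(range(post_start, post_start + 5 * fs))
    slope, intercept = fit_line(idx, [trace[i] for i in idx])
    return [y - (slope * i + intercept) for i, y in enumerate(trace)]

# Synthetic block: slow linear drift plus a 0.05 plateau while the stimulus plays.
onset, block_end = 50, 250                       # a 20 s block starting at 5 s
trace = [0.5 + 0.001 * i + (0.05 if onset <= i < block_end else 0.0)
         for i in range(450)]

corrected = baseline_correct(trace, onset, block_end)

spiky = list(trace)
spiky[120] += 0.5                                # simulated movement spike
print(has_motion_artifact(trace), has_motion_artifact(spiky))
```

Fitting the line to both baseline windows, rather than subtracting the pre-stimulus mean alone, removes slow drift across the block as well as the offset.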

The region of interest (ROI) was defined following Pena et al. (2003). Channels 7–12 and 19–24 were chosen as the ROI in each hemisphere. These ROIs correspond to the lower ROIs in Pena et al. (2003), which is the area where significant activation was found in that study. These ROIs comprise the temporal (auditory processing) brain areas, where one can expect to find the strongest speech-related response.

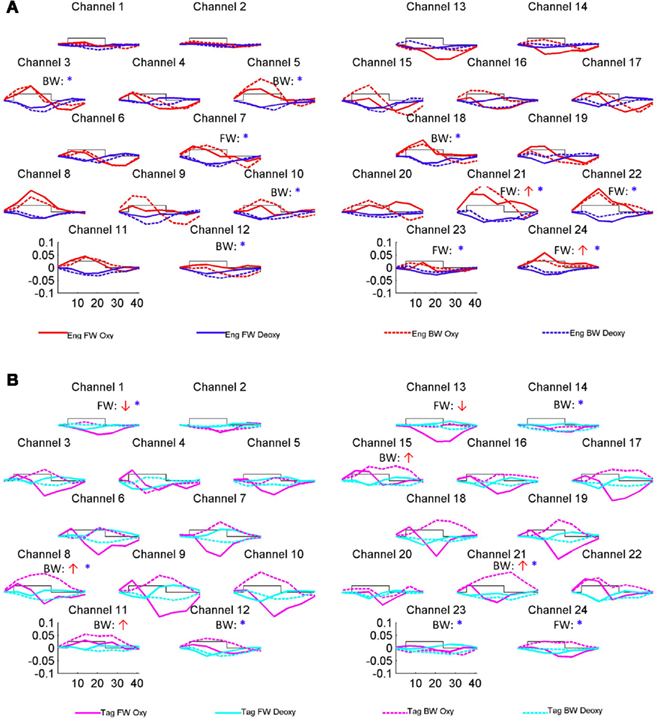

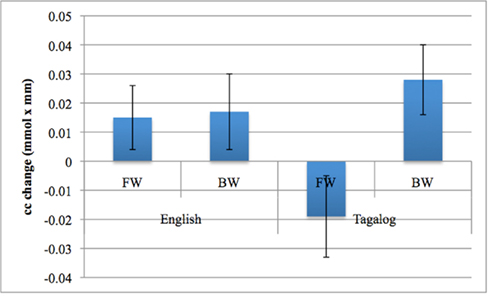

The grand average results of the experiment are presented in Figures 4 and 5. The figures show the averages of oxyHb and deoxyHb concentration change in all blocks for each condition across all infants. A table of the oxyHb results is presented in Figure 6. We conducted a repeated measures analysis of variance (ANOVA) within the target ROI (lower channels, as used by Pena et al., 2003) with factors Language (English/Tagalog) × Direction (BW/FW) × Hemisphere (LH/RH) separately for oxyHb and deoxyHb, similar to Pena et al.’s (2003) analysis. The ANOVA for oxyHb yielded a significant main effect for Direction [F(1,19) = 5.342, p = 0.032], as BW speech gave rise to a larger response than FW speech. The interaction between Language × Direction was marginally significant [F(1,19) = 3.882, p = 0.064], as FW Tagalog gave rise to a decrease in oxyHb (inverted response), whereas FW and BW English as well as BW Tagalog resulted in an increase in oxyHb (canonical response; significant and marginal Bonferroni post hoc tests: FW Tagalog versus BW Tagalog p = 0.002; FW Tagalog versus FW English p = 0.054; FW Tagalog versus BW English p = 0.077; Figure 7). A similar ANOVA with deoxyHb yielded no significant results.
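Because every factor in the 2 × 2 × 2 design has two levels, each effect has one numerator degree of freedom, and its F statistic equals the square of a one-sample t statistic computed on a per-infant contrast score. The sketch below illustrates this for the Direction main effect. All numbers are invented, the helper names are hypothetical, and this is not the software or data used in the study.

```python
from math import sqrt
from statistics import mean, stdev

def direction_contrast(cells):
    """BW minus FW for one infant, averaged over language and hemisphere.

    cells: dict mapping (language, direction, hemisphere) -> oxyHb change.
    """
    bw = mean(v for (_, direction, _), v in cells.items() if direction == "BW")
    fw = mean(v for (_, direction, _), v in cells.items() if direction == "FW")
    return bw - fw

def one_df_within_f(contrast_scores):
    """F(1, n - 1) for a one-df within-subject effect, as the squared t."""
    n = len(contrast_scores)
    t = mean(contrast_scores) / (stdev(contrast_scores) / sqrt(n))
    return t * t, (1, n - 1)

# One invented infant: canonical responses except an inverted FW Tagalog response.
infant = {
    ("English", "FW", "LH"): 0.02, ("English", "FW", "RH"): 0.02,
    ("English", "BW", "LH"): 0.03, ("English", "BW", "RH"): 0.03,
    ("Tagalog", "FW", "LH"): -0.02, ("Tagalog", "FW", "RH"): -0.02,
    ("Tagalog", "BW", "LH"): 0.03, ("Tagalog", "BW", "RH"): 0.03,
}
contrast = direction_contrast(infant)

# Invented contrast scores for four infants (arbitrary units).
f_value, dof = one_df_within_f([1.0, 2.0, 3.0, 2.0])
print(contrast, f_value, dof)
```

With 20 infants the denominator degrees of freedom are 19, as in the F(1,19) values reported above. The Language × Direction interaction can be tested the same way using the contrast (FW English − BW English) − (FW Tagalog − BW Tagalog).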

Figure 4. Grand average results of the experiment. Numbers and location of channels correspond to the placement shown in Figure 3. (A) The oxyHb and deoxyHb response to the English FW and BW conditions. (B) The oxyHb and deoxyHb response to the Tagalog FW and BW conditions. The arrows indicate significant changes in concentrations in channel-by-channel t-tests (p < 0.05, uncorrected) as compared to a zero baseline: the red upward arrow indicates significant increase in oxyHb (canonical response), the red downward significant decrease in oxyHb (inverted response), the blue asterisk a significant decrease in deoxyHb (canonical response). After correction for multiple comparisons, using the False Discovery Rate as defined by Benjamini and Hochberg (1995), none of these comparisons reached significance. The channel-wise results are therefore only suggestive.
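The false discovery rate correction mentioned in the caption can be sketched as the Benjamini and Hochberg (1995) step-up procedure. The p-values below are invented, and the number of tests (24, one per channel) is an assumption made for the example; the caption does not state how many comparisons were corrected together.

```python
def benjamini_hochberg(p_values, q=0.05):
    """Return a list of booleans, True where the null is rejected at FDR level q.

    Step-up rule: find the largest rank k with p_(k) <= k * q / m and
    reject the k smallest p-values.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    max_rank = 0
    for rank, i in enumerate(order, start=1):
        if p_values[i] <= rank * q / m:
            max_rank = rank
    rejected = [False] * m
    for rank, i in enumerate(order, start=1):
        if rank <= max_rank:
            rejected[i] = True
    return rejected

# Invented example: three channels pass p < 0.05 uncorrected, 21 do not.
channel_p = [0.03, 0.04, 0.045] + [0.5] * 21
uncorrected_hits = sum(p < 0.05 for p in channel_p)
corrected_hits = sum(benjamini_hochberg(channel_p))
print(uncorrected_hits, corrected_hits)
```

In this invented case the smallest p-value (0.03) exceeds its threshold of 0.05/24, so no channel survives correction even though three are significant uncorrected, which is the pattern the caption describes.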

Figure 7. The Language by Direction interaction in oxyHb activation obtained in the experiment. Error bars represent SE of the mean.

Our findings demonstrate that the neural processing of language is influenced by language experience even by the first few days of life. When newborn infants listened to English (familiar) and Tagalog (unfamiliar) language stimuli, we observed a difference in brain response. When processing forward-played sentences of English, neonates showed an increase in overall oxygenated hemoglobin across both hemispheres. In contrast, when infants listened to sentences of unfamiliar forward Tagalog, we observed a decrease in oxygenated hemoglobin. No language familiarity effects were found in the brain response to backward speech, as neonates had a similar neural response to backward English and backward Tagalog. While we observed different patterns of brain activation to forward English versus forward Tagalog, we did not find a consistent difference in the localization of brain activity between language conditions. For both English and Tagalog, similar patterns of activation were found in the temporal regions across the left and right hemispheres. Our results therefore suggest that prenatal language experience does shape how the brain responds to familiar and unfamiliar language. These results echo behavioral findings using the same stimuli (Byers-Heinlein et al., 2010), where neonates were shown to both prefer and discriminate a familiar language from an unfamiliar language. However, at least with the filtered language stimuli used in our study, we find no evidence that the neonate brain uses distinct brain regions to process different languages.

Our data also produced several unexpected findings. First, the lack of any observed hemisphere differences in neonates’ response to familiar or unfamiliar language contrasts with previous studies showing left hemisphere dominance for language processing in young infants in the area of the planum temporale (Dehaene-Lambertz et al., 2002; Pena et al., 2003). This is surprising given the left lateralization of neonate brain response to language found by Pena et al. (2003) which used very similar methodology to our study. We propose two hypotheses to explain the difference in hemispheric findings between the current study and Pena et al. (2003). One possibility is that the subtle differences in procedure led to the differential findings. While we attempted to place the probes in similar temporal areas to Pena et al. (2003) it is impossible to know if the placement was completely comparable across studies. It may be that slightly different brain regions are being measured, and that our study did not pick up on areas that are lateralized to language at birth (such as the planum temporale).

However, we believe that the difference in lateralization between our study and that of Pena et al. (2003) is more likely based on the stimuli used. While the current study used low-pass filtered samples of speech, Pena et al. (2003) used unfiltered speech. When low-pass filtering speech to 400 Hz, much of the segmental information, such as consonant formant transitions, is removed, while most of the prosodic information is retained. We believe that this alteration of the speech stimuli may cause neural activation that is bilateral rather than left hemisphere dominant. Several lines of research have demonstrated that different aspects of speech are processed in different brain areas (Zatorre and Belin, 2001; Poeppel, 2003; Zatorre and Gandour, 2007). While rapid changes in speech (such as formant transitions in consonants) result in a left hemisphere bias in processing, slower changes in speech (such as prosody) result in a right hemisphere bias. This sensitivity in brain processing has recently been evidenced in very young infants, including neonates (Homae et al., 2006; Telkemeyer et al., 2009; Minagawa-Kawai et al., 2011). We therefore suggest that filtered speech, as compared to unfiltered speech, would likely emphasize slower prosodic changes and de-emphasize faster consonant formant transitions in the speech, therefore resulting in bilateral activation. However, further research is needed to investigate this hypothesis, by directly comparing the neural response to filtered versus unfiltered speech.

A second unexpected finding in the current study was the lack of a differential brain response to forward and backward English. This finding also contrasts with the results from Pena et al. (2003), where greater left hemisphere activation was found for forward versus backward native language stimuli. Again, we propose that this result may be affected by the nature of the filtered speech used. As noted above, reversed speech is made non-linguistic in nature due to two factors: First, many of the consonants in backward speech cannot be produced by the human vocal tract. Second, the prosodic structure of speech is disturbed when reversed. While the filtering likely reduces the first cue to unnaturalness, the second cue still remains in filtered backward speech. One possible post hoc explanation for our pattern of results is that as the filtering does maintain rhythm, neonates may be able to detect a familiar rhythmical structure in both the forward and backward English, leading to similar neural processing of both types of English language stimuli. In contrast, the rhythm of Tagalog is unfamiliar in both forward and backward forms, meaning that there is no familiarity that might lead to similar processing of the two Tagalog conditions. As infants might not be able to detect that FW and BW Tagalog are the normal and reversed versions of the same stimuli or come from the same language, there is no reason to expect a similar response to these two conditions. However, this hypothetical possibility requires further refinement and testing.

Thirdly, the statistical analyses revealed a negative oxyHb response to forward Tagalog. This hemodynamic response shape to forward Tagalog requires further investigation. However, what is important to note is that the size and shape of the brain response to forward Tagalog is clearly different from the shape of the response we obtained in the English conditions, further underscoring the difference between the processing of the native and a non-native language.

Regardless of the basis of the differential brain response to English and Tagalog, our main finding remains that neonates showed a dissimilar pattern in how the brain responds to familiar versus unfamiliar language. We cannot make definitive claims as to the exact nature of this processing difference on the basis of the results from the current study. Nonetheless, the results do highlight this as an area prime for future research.

Our results raise several additional questions for future study. How much prenatal language experience is sufficient to shape the neural response to language? Do premature infants show a differential response to familiar and unfamiliar languages equivalent to that found in the current study with full-term infants? Furthermore, if infants are raised post-natally in surroundings where prenatal and post-natal language experience differs, how might the initial brain response to language shift during development? How much post-natal experience with an unfamiliar language is needed to alter neural activation? Finally, our evidence that prenatal language experience impacts neonates’ initial neural response to language raises the question of whether and how this early neural activation might impact later language processing and learning of familiar versus unfamiliar language.

In the current study, we provide the first exploration of whether the newborn infant’s neural processing of language is influenced by early language experience. We find a clear difference in how the neonate brain responds to familiar versus unfamiliar language. These results indicate that even prior to birth, the human brain is tuning to the language environment.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Benjamini, Y., and Hochberg, Y. (1995). Controlling the false discovery rate: a practical and powerful approach to multiple testing. J. R. Stat. Soc. Series B Stat. Methodol. 57, 289–300.

Bertoncini, J., Morais, J., Bijeljac-Babic, R., McAdams, S., Peretz, I., and Mehler, J. (1989). Dichotic perception and laterality in neonates. Brain Lang. 37, 591–605.

Boersma, P., and Weenink, D. (2011). Praat: Doing Phonetics by Computer [Computer Program], Version 5.2.12. Available at: http://www.praat.org/ [Retrieved January 28, 2011].

Byers-Heinlein, K., Burns, T. C., and Werker, J. F. (2010). The roots of bilingualism in newborns. Psychol. Sci. 21, 343–348.

Carreiras, M., Lopez, J., Rivero, F., and Corina, D. (2005). Neural processing of a whistled language. Nature 433, 31–32.

DeCasper, A. J., and Fifer, W. P. (1980). Of human bonding: newborns prefer their mother’s voices. Science 208, 1174–1176.

DeCasper, A. J., and Spence, M. J. (1986). Prenatal maternal speech influences newborns’ perception of speech sounds. Infant Behav. Dev. 9, 133–150.

Dehaene, S., Dupoux, E., Mehler, J., Cohen, L., Paulesu, E., Perani, D., van der Moortele, P., Lehéricy, S., and LeBihan, D. (1997). Anatomical variability in the cortical representation of first and second language. Neuroreport 8, 3809–3815.

Dehaene-Lambertz, G., Dehaene, S., and Hertz-Pannier, L. (2002). Functional neuroimaging of speech perception in infants. Science 298, 2013–2015.

Dehaene-Lambertz, G., Montavont, A., Jobert, A., Allirol, L., Dubois, J., Hertz-Pannier, L., and Dehaene, S. (2010). Language or music, mother or Mozart? Structural and environmental influences on infants’ language networks. Brain Lang. 114, 53–65.

Dubois, J., Benders, M., Lazeyras, F., Borradori-Tolsa, C., Ha-Vinh Leuchter, R., Mangin, J. F., and Hüppi, P. S. (2010). Structural asymmetries of perisylvian regions in the preterm newborn. Neuroimage 52, 32–42.

Eisenberg, R. B. (1976). Auditory Competence in Early Life: The Roots of Communicative Behavior. Baltimore: University Park Press.

Gerhardt, K. J., Otto, R., Abrams, R. M., Colle, J. J., Burchfield, D. J., and Peters, A. J. M. (1992). Cochlear microphonics recorded from fetal and newborn sheep. Am. J. Otolaryngol. 13, 226–233.

Gervain, J., Macagno, F., Cogoi, S., Pena, M., and Mehler, J. (2008). The neonate brain detects speech structure. Proc. Natl. Acad. Sci. U.S.A. 105, 14222–14227.

Gervain, J., Mehler, J., Werker, J. F., Nelson, C. A., Csibra, G., Lloyd-Fox, S., Shukla, M., and Aslin, R. N. (2011). Near-infrared spectroscopy: a report from the McDonnell infant methodology consortium. Dev. Cogn. Neurosci. 1, 22–46.

Grabe, E., and Low, E. L. (2002). “Durational variability in speech and the rhythm class hypothesis,” in Papers in Laboratory Phonology, eds C. Gussenhoven and N. Warner (Berlin: Mouton de Gruyter), 515–546.

Holowka, S., and Petitto, L. A. (2002). Left hemisphere cerebral specialization for babies while babbling. Science 297, 1515.

Homae, F., Watanabe, H., Nakano, T., and Taga, G. (2006). The right hemisphere of sleeping infant perceives sentential prosody. Neurosci. Res. 54, 276–280.

Kisilevsky, B. S., Hains, S. M. J., Lee, K., Xie, X., Ye, H. H., Zhang, K., and Wang, Z. (2003). Effects of experience on fetal voice recognition. Psychol. Sci. 14, 220–224.

Lecanuet, J. P., and Granier-Deferre, C. (1993). “Speech stimuli in the fetal environment,” in Developmental Neurocognition: Speech and Face Processing in the First Year of Life, eds B. De Boysson-Bardies, S. de Schonen, P. Jusczyk, P. MacNeilage, and J. Morton (Norwell, MA: Kluwer Academic Publishing), 237–248.

Lecanuet, J. P., Granier-Deferre, C., DeCasper, A. J., Maugeais, R., Andrieu, A. J., and Busnel, M. C. (1987). Perception and discrimination of language stimuli. Demonstration from the cardiac responsiveness. Preliminary results. C. R. Acad. Sci. III 305, 161–164.

Mehler, J., Jusczyk, P. W., Lambertz, G., Halsted, N., Bertoncini, J., and Amiel-Tison, C. (1988). A precursor of language acquisition in young infants. Cognition 29, 143–178.

Minagawa-Kawai, Y., van der Lely, H., Ramus, F., Sato, Y., Mazuka, R., and Dupoux, E. (2011). Optical brain imaging reveals general auditory and language-specific processing in early infant development. Cereb. Cortex 21, 254–261.

Moon, C., Cooper, R. P., and Fifer, W. P. (1993). Two-day-olds prefer their native language. Infant Behav. Dev. 16, 495–500.

Nazzi, T., Bertoncini, J., and Mehler, J. (1998). Language discrimination by newborns: toward an understanding of the role of rhythm. J. Exp. Psychol. Hum. Percept. Perform. 24, 756–766.

Nespor, M., Shukla, M., van de Vijver, R., Avesani, C., Schraudolf, H., and Donati, C. (2008). Different phrasal prominence realization in VO and OV languages. Lingue e Linguaggio 7, 1–28.

Pena, M., Maki, A., Kovacic, D., Dehaene-Lambertz, G., Koizumi, H., Bouquet, F., and Mehler, J. (2003). Sounds and silence: an optical topography study of language recognition at birth. Proc. Natl. Acad. Sci. U.S.A. 100, 11702–11705.

Perani, D., Dehaene, S., Grassi, F., Cohen, L., Cappa, S. F., Dupoux, E., Fazio, F., and Mehler, J. (1996). Brain processing of native and foreign languages. Neuroreport 7, 2439–2444.

Petitjean, C. (1989). Une condition de l’audition foetale: la conduction sonore osseuse. Conséquences cliniques et applications pratiques. MD dissertation, University of Franche-Comté, Besançon.

Poeppel, D. (2003). The analysis of speech in different temporal integration windows: cerebral lateralization as “asymmetric sampling in time.” Speech Commun. 41, 245–255.

Querleu, D., Renard, X., Versyp, F., Paris-Delrue, L., and Crepin, G. (1988). Fetal hearing. Eur. J. Obstet. Gynecol. Reprod. Biol. 28, 191–212.

Ramus, F., Hauser, M. D., Miller, C. T., Morris, D., and Mehler, J. (2000). Language discrimination by human newborns and cotton-top tamarin monkeys. Science 288, 349–351.

Ramus, F., Nespor, M., and Mehler, J. (1999). Correlates of linguistic rhythm in the speech signal. Cognition 73, 265–292.

Telkemeyer, S., Rossi, S., Koch, S. P., Nierhaus, T., Steinbrink, J., Poeppel, D., Obrig, H., and Wartenburger, I. (2009). Sensitivity of newborn auditory cortex to the temporal structure of sounds. J. Neurosci. 29, 14726–14733.

Vouloumanos, A., and Werker, J. F. (2007). Listening to language at birth: evidence for a bias for speech in neonates. Dev. Sci. 10, 159–164.

Zatorre, R. J., and Belin, P. (2001). Spectral and temporal processing in human auditory cortex. Cereb. Cortex 11, 946–953.

Zatorre, R. J., and Gandour, J. T. (2007). Neural specializations for speech and pitch: moving beyond the dichotomies. Philos. Trans. R. Soc. Lond. B Biol. Sci. 363, 1087–1104.

Keywords: language, near-infrared spectroscopy, neonates

Citation: May L, Byers-Heinlein K, Gervain J and Werker JF (2011) Language and the newborn brain: does prenatal language experience shape the neonate neural response to speech? Front. Psychology 2:222. doi: 10.3389/fpsyg.2011.00222

Received: 19 February 2011;

Paper pending published: 15 April 2011;

Accepted: 22 August 2011;

Published online: 21 September 2011.

Edited by:

Heather Bortfeld, University of Connecticut, USA

Reviewed by:

Nadege Roche-Labarbe, Massachusetts General Hospital, USA

Copyright: © 2011 May, Byers-Heinlein, Gervain and Werker. This is an open-access article subject to a non-exclusive license between the authors and Frontiers Media SA, which permits use, distribution and reproduction in other forums, provided the original authors and source are credited and other Frontiers conditions are complied with.

*Correspondence: Lillian May, Department of Psychology, University of British Columbia, 2136 West Mall, Vancouver, BC, Canada V6T 1Z4. e-mail: lamay@psych.ubc.ca

