Internal representation of task rules by recurrent dynamics: the importance of the diversity of neural responses

- 1 Center for Theoretical Neuroscience, College of Physicians and Surgeons, Columbia University, New York, NY, USA

- 2 Institute of Neuroinformatics, University of Zurich and Swiss Federal Institute of Technology Zurich, Zurich, Switzerland

- 3 Department of Neurobiology, Kavli Institute for Neuroscience, Yale University School of Medicine, New Haven, CT, USA

Neural activity of behaving animals, especially in the prefrontal cortex, is highly heterogeneous, with selective responses to diverse aspects of the executed task. We propose a general model of recurrent neural networks that perform complex rule-based tasks, and we show that the diversity of neuronal responses plays a fundamental role when the behavioral responses are context-dependent. Specifically, we found that when the inner mental states encoding the task rules are represented by stable patterns of neural activity (attractors of the neural dynamics), the neurons must be selective for combinations of sensory stimuli and inner mental states. Such mixed selectivity is easily obtained by neurons that connect with random synaptic strengths both to the recurrent network and to neurons encoding sensory inputs. The number of randomly connected neurons needed to solve a task is on average only three times as large as the number of neurons needed in a network designed ad hoc. Moreover, the number of needed neurons grows only linearly with the number of task-relevant events and mental states, provided that each neuron responds to a large proportion of events (dense/distributed coding). A biologically realistic implementation of the model captures several aspects of the activity recorded from monkeys performing context-dependent tasks. Our findings explain the importance of the diversity of neural responses and provide us with simple and general principles for designing attractor neural networks that perform complex computation.

Introduction

Neurons in the mammalian brain are highly heterogeneous (Soltesz, 2005; Marder and Goaillard, 2006) and show diverse responses to sensory stimuli and other events. This diversity is especially bewildering with regard to the prefrontal cortex, a brain structure that has been shown to be critically important for higher cognitive behaviors in numerous lesion (Petrides, 1982; Passingham, 1993; Murray et al., 2000), clinical (Petrides, 1985), and imaging (Boettiger and D’Esposito, 2005) studies. Indeed, single-neuron recordings from the prefrontal cortex have yielded a rich phenomenology: neurons have been found to respond to sensory stimuli and show persistent activity during working memory (Fuster and Alexander, 1971; Funahashi et al., 1989; Romo et al., 1999), reflect animals’ decisions or intended actions (Tanji and Hoshi, 2008) or rewards (Barraclough et al., 2004), and encode contexts, task rules (Wallis et al., 2001; Genovesio et al., 2005; Mansouri et al., 2006, 2007) and abstract concepts like numbers (Nieder and Miller, 2003). Typically, a single prefrontal cell is not merely responsive to a single event but shows selectivity to a combination of different aspects of the task being executed (mixed selectivity). These findings naturally pose the question: does such diversity of responses play a constructive computational role in complex cognitive tasks?

We found a computational role for the neuronal response diversity, which is directly related to the function of prefrontal cortex of actively maintaining a representation of behavioral rules (Goldman-Rakic, 1987; Miller and Cohen, 2001). This is in line with previous theoretical works that have shown that specific forms of mixed selectivity can be harnessed to perform computation such as complex sensorimotor transformations (Zipser and Andersen, 1988; Pouget and Sejnowski, 1997; Pouget and Snyder, 2000; Salinas and Abbott, 2001) and to model serial working memory (Botvinick and Watanabe, 2007) and visuomotor remapping (Salinas, 2004a) (see Discussion for more details).

Rules are prescribed guides for problem solving and flexible decision making, and they vary in their degree of abstraction. Examples include conditional (arbitrary) sensorimotor associations (if red light, then stop), task rules (respond if two stimuli match), and strategies for decision making (if win, stay; if lose, switch). We assumed that the rule in effect is actively maintained by a recurrent neural circuit. In particular, we hypothesized that the neural correlate of a rule is a self-sustained persistent pattern of activity (see e.g., Miller and Cohen, 2001). Small perturbations of these activity patterns are damped by the interactions between neurons, so that the state of the network remains close to one of the patterns of persistent activity. Hence these patterns are stable, and they are called attractors of the neural dynamics. Attractor network models have been previously studied for associative (Hopfield, 1982) and working memory (Amit, 1989; Wang, 2001) of sensory stimuli. In these models a sensory stimulus activates one of the strongly interacting populations of neurons and the memory of stimulus identity is maintained by the persistent activity of the activated population.

Our intention was to extend these models to the most general case in which every attractor corresponds to a particular rule, as assumed in studies on specific tasks (Amit, 1988; O’Reilly and Munakata, 2000; Xing and Andersen, 2000; Loh and Deco, 2005). In particular we wanted to understand how the rule can affect our decisions, and how external events can select the rule in effect. We assumed that every event generates a driving force that steers the neural activity toward a different stable pattern. Such a pattern corresponds to a new rule and depends on both the external event and the previous rule in effect.

In such a scenario, as we will show, the absence of neurons with mixed selectivity typically compromises the possibility of constructing a neural network that can perform the task. These difficulties are almost always encountered whenever the rules that determine the course of action depend on the context. For example, they are unavoidable in the case of the Wisconsin Card Sorting Test (WCST), when the subject needs to switch from one rule to another. The next rule to be selected clearly depends not only on the instruction to switch, but also on the previous rule in effect (context). The inability to switch in a WCST is often considered an indication of damage to the prefrontal cortex (Milner, 1963), a brain area with an abundance of mixed selectivity (see e.g., Asaad et al., 1998; Cromer et al., 2010; Rigotti et al., 2010).

We will then show that neurons with mixed selectivity and diverse response properties not only are necessary in our scenario to perform context-dependent tasks, but they are also sufficient to solve arbitrarily complicated tasks. Mixed selectivity is readily obtained by connecting cells with random connections to both the neurons in the recurrent circuit and to the neurons representing the external events. We will show that this simple form of heterogeneity grants the neural network the ability to implement arbitrarily complicated tasks. Surprisingly, it turns out that the number of randomly connected neurons needed to implement a particular task is not much larger than the minimal number of neurons required in a carefully designed neural circuit. This number grows only linearly with the number of inner mental states encoding the rules, and the task-relevant events, despite the combinatorial explosion of possible mixed selectivity responses. The randomly connected neurons possess response properties that are more diverse than required in a minimal circuit, as they respond to both necessary and unnecessary combinations of mental states and events. Moreover, such response properties are predicted to be pre-existent and universal as they should be observable before the learning process, independently from the task to be learned. Our work suggests that the observed diversity of the neural responses plays an important computational role, both in the acquisition and the execution of tasks in which our decision or our actions depend on the context.

Results

Modeling Complex Cognitive Tasks: the General Framework

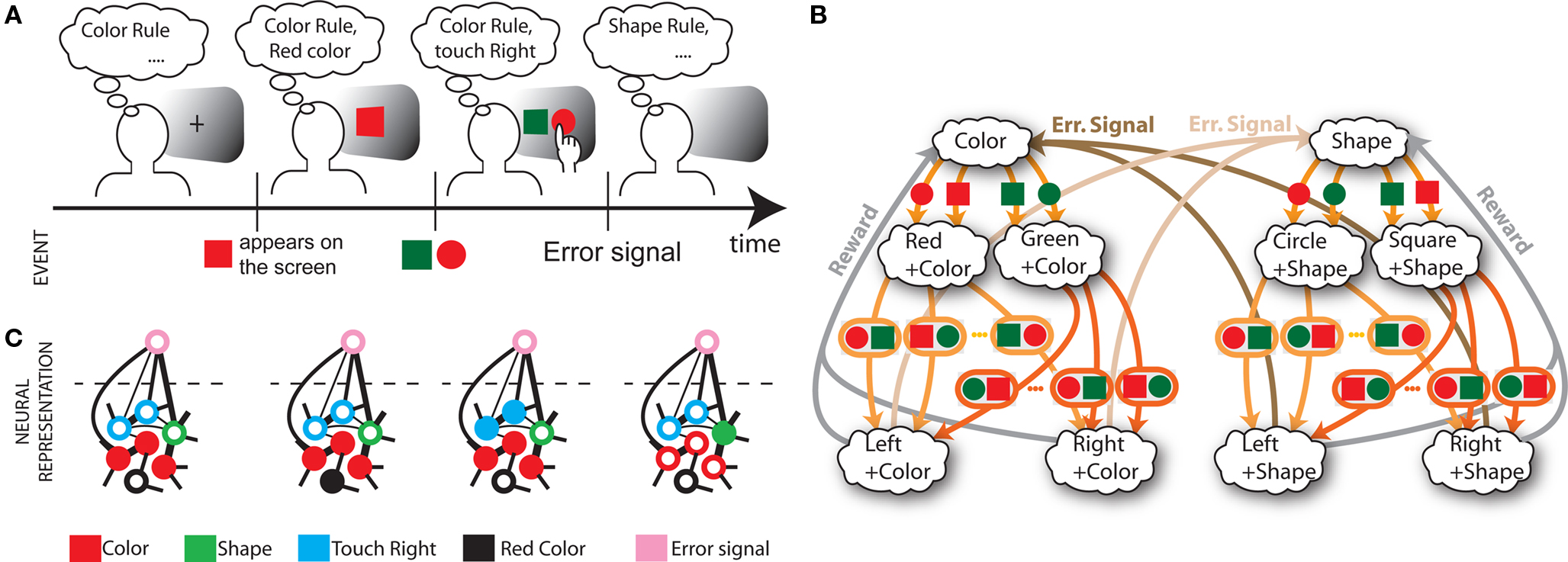

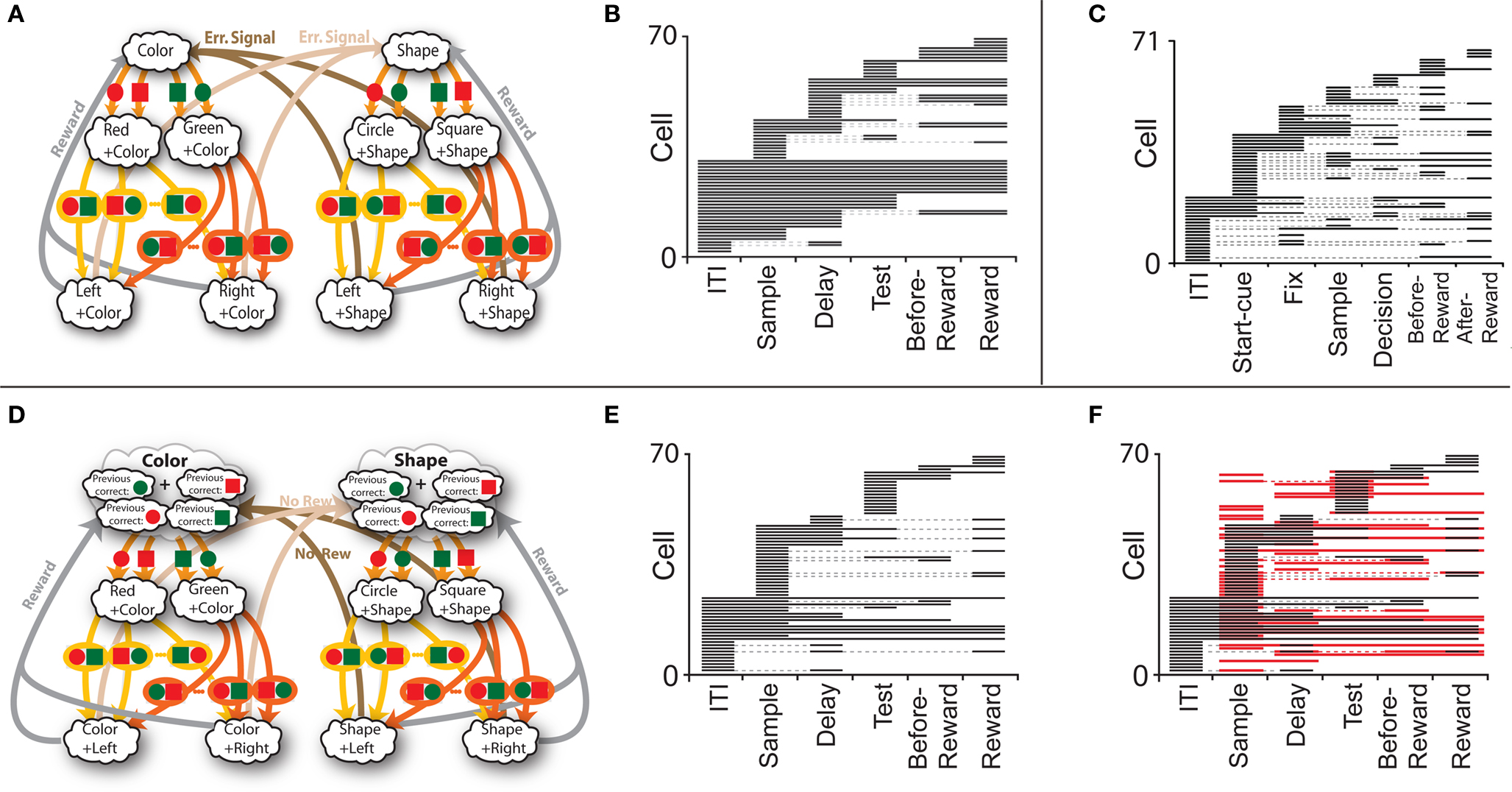

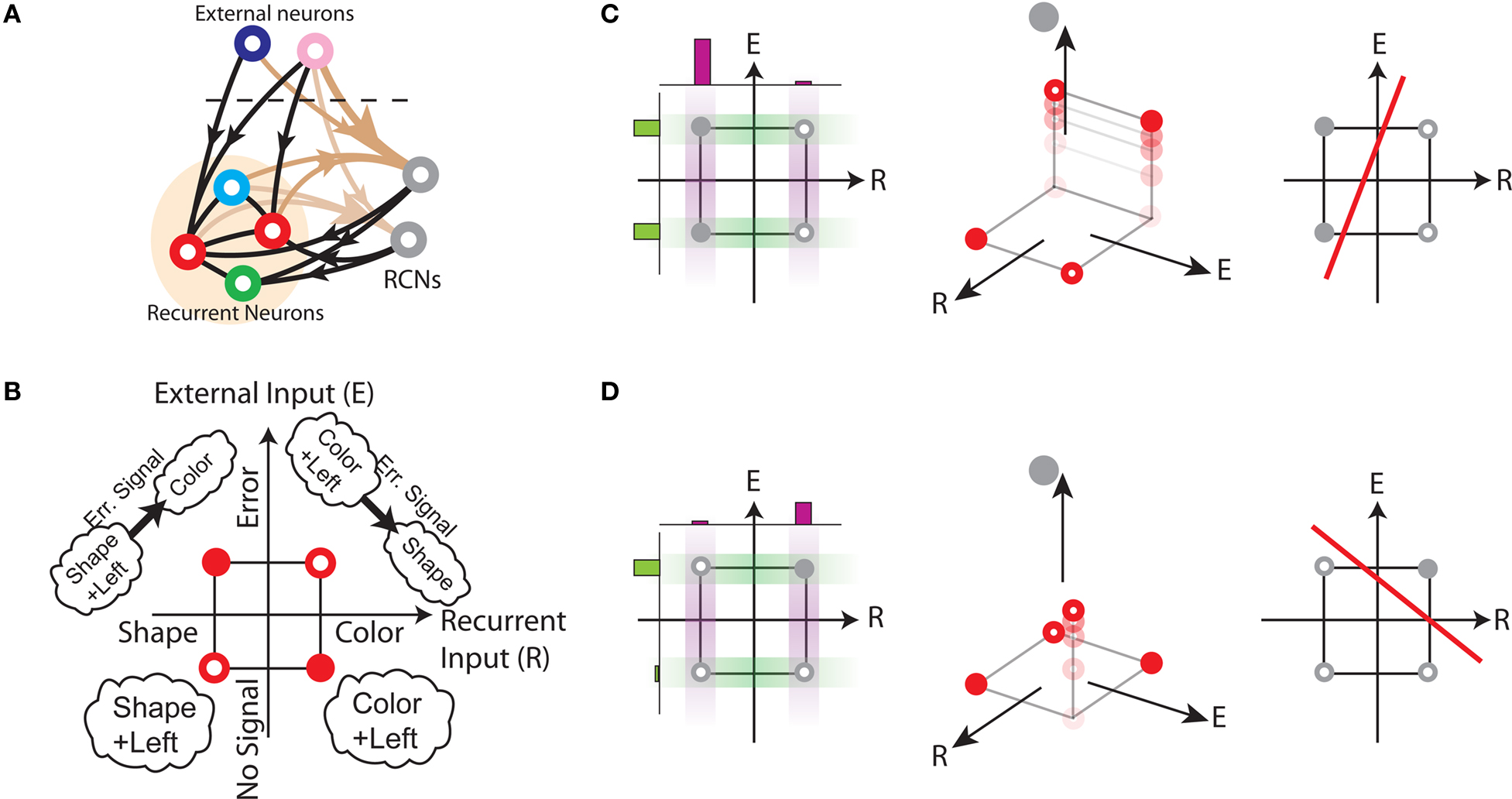

In order to model the most general rule-based behavior, we assume that subjects performing complex tasks go through a series of inner mental states, each representing an actively maintained disposition to behavior or an action that is being executed. Each state contains information about task-relevant past events and internal cognitive processes representing reactivated memories, emotions, intentions and decisions, and in general all factors that will determine or affect the current or future behavior, like the execution of motor acts. In Figure 1A we illustrate this scenario in the case of a simplified version of the Wisconsin Card Sorting Test (WCST). In a typical trial, the subject sees a sample stimulus on a screen and, after a delay, he is shown two test stimuli. He has to touch the test stimulus matching either the shape or the color of the sample, depending on the rule in effect. The subject has to determine the rule by trial and error; a reward confirms that the rule was correct, and an error signal prompts the subject to switch to the alternative rule. Every task-relevant event such as the appearance of a visual stimulus or the delivery of reward is hypothesized to induce a transition to a different mental state.

Figure 1. A context-dependent task. (A) A typical trial of a simplified version of the WCST, similar to the one used in the monkey experiment (Mansouri et al., 2006, 2007). The subject has to classify visual stimuli either according to their shape or according to their color. Before the trial starts, the subject keeps actively in mind the rule in effect (color or shape). Every event, like the appearance of a visual stimulus, modifies the mental state of the subject. An error signal indicates that it is necessary to switch to the alternative rule. (B) Scheme of mental states (thought balloons) and event-driven transitions (arrows) that enables the subject to perform the simplified WCST. (C) Neural representation of the mental states shown in (A): circles represent neurons, and colors denote their response preferences (e.g., red units respond when Color Rule is in effect). Filled circles are active neurons and black lines are synaptic connections. For simplicity, not all neurons and synaptic connections are drawn.

The neural correlate of a mental state is assumed to be a stable pattern of activity of a recurrent neural circuit. The same neural circuit can sustain multiple stable patterns corresponding to different mental states. Events like sensory stimuli, reward delivery, or error signals steer the neural activity toward a different stable pattern representing a new mental state. Such a pattern will in general depend on both the external event and the previous mental state.

In order to construct an attractor network that is able to perform a certain context-dependent task we need to find the synaptic couplings between neurons that satisfy the mathematical conditions for guaranteeing that the attractors are stable fixed points of the neural dynamics and that external events induce the desired transitions. Interestingly, we found that even in the example of very simple context-dependent motor tasks, these conditions cannot be fulfilled simultaneously, similarly to what happens in the case of semantic networks (Hinton, 1981). We will show that this is a general problem of all context-dependent tasks.

Fundamental Difficulties in Context-Dependent Tasks

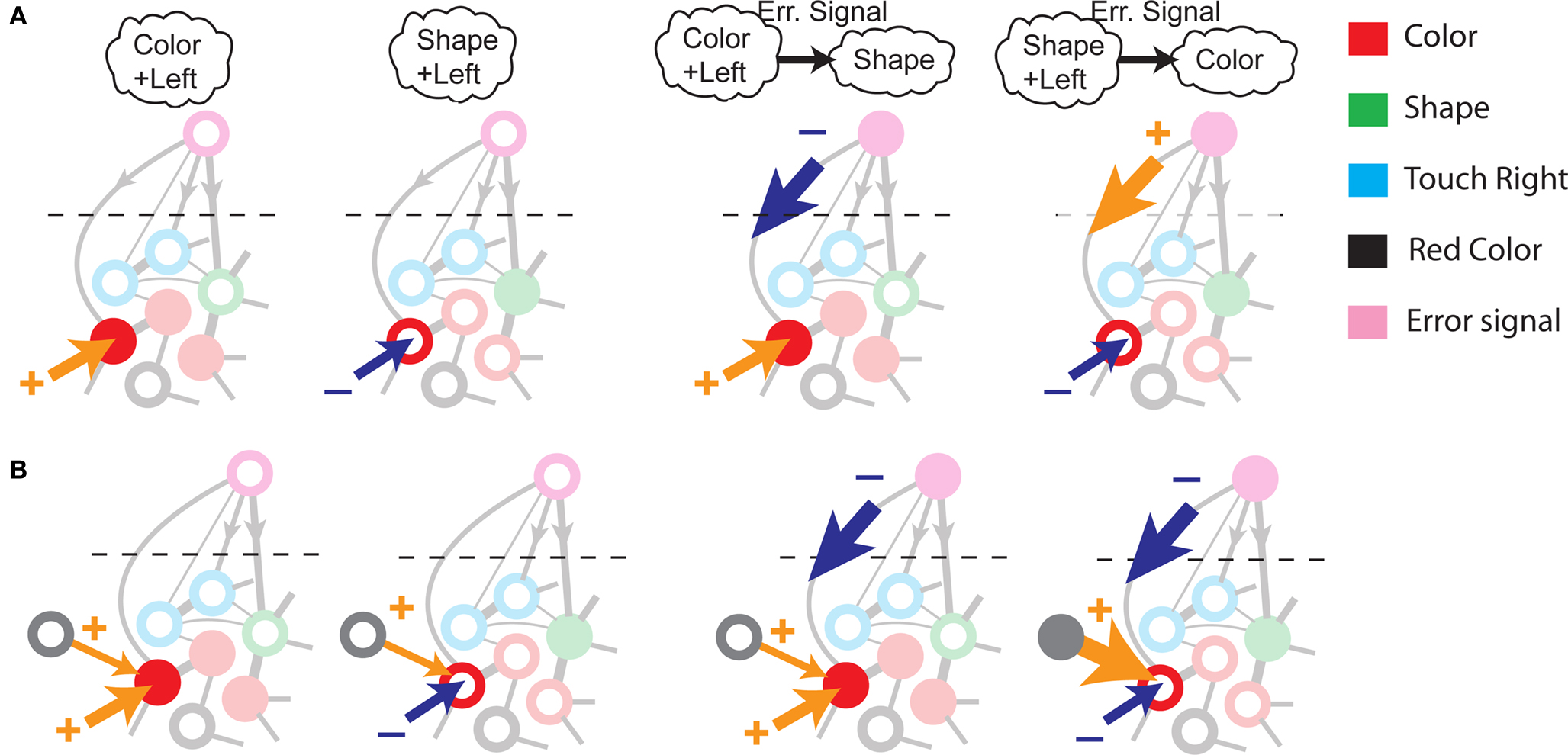

To illustrate the problem caused by context dependence, consider the rule switch induced by an error signal in the simplified WCST (see Figure 2A). In one context, e.g., when the Color Rule is in effect, the error signal induces a transition to the Shape Rule state at the top of the scheme of Figure 1B, whereas in the other, when starting from the Shape Rule, the same event determines the selection of the Color Rule state. In the first context the neurons of the recurrent circuit excite each other so as to sustain the pattern of persistent activity representing the Color Rule mental state. The overall recurrent input to neurons selective for Color Rule must therefore be excitatory enough to sustain the persistent activity state representing the Color Rule. On the other hand, in the Shape Rule state the overall current should be below the activation threshold (Figure 2A, left). In order to induce a rule switch, the additional synaptic input generated by the Error Signal should be inhibitory enough to overcome the recurrent input and inactivate these neurons when starting from the Color Rule mental state, and excitatory enough to activate them when starting from the Shape Rule state (Figure 2A, right). This is impossible to realize because the neural representation of the Error Signal is the same in the two contexts. This problem is equivalent to the well-known fact that the Boolean operation of exclusive OR (XOR) is not linearly separable, and it plagues most neural networks implementing context-dependent tasks.
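The conflict described above can be checked numerically. The following Python sketch is an illustration under simplifying assumptions, not the model used in this work: the Color Rule neuron is reduced to a single threshold unit receiving one weight from the Color population, one from the Shape population, and one from the Error Signal, and the threshold value, the weight grid, and all names are hypothetical. It counts how many of the four requirements (two attractors, two rule switches) each weight choice meets.

```python
from itertools import product

THETA = 0.5  # activation threshold (illustrative value)

# (color_active, shape_active, error_signal) -> required next activity of the Color neuron
CONDITIONS = [
    ((1, 0, 0), 1),  # Color Rule, no event: stay active (attractor)
    ((0, 1, 0), 0),  # Shape Rule, no event: stay inactive (attractor)
    ((1, 0, 1), 0),  # Color Rule + Error Signal: must switch off
    ((0, 1, 1), 1),  # Shape Rule + Error Signal: must switch on
]

def count_satisfied(weights, theta=THETA):
    """Number of conditions met by a threshold unit with the given weights."""
    met = 0
    for inputs, target in CONDITIONS:
        current = sum(w * x for w, x in zip(weights, inputs))
        if (current > theta) == bool(target):
            met += 1
    return met

# Exhaustive search over a coarse grid of (w_color, w_shape, w_error).
grid = [k * 0.25 for k in range(-12, 13)]
scores = {w: count_satisfied(w) for w in product(grid, repeat=3)}
best = max(scores.values())
solutions = [w for w, s in scores.items() if s == len(CONDITIONS)]

print("best number of conditions met:", best)
print("weight vectors meeting all four:", len(solutions))
```

A finer grid or a different threshold would not change the outcome: meeting the first and third conditions forces the Error Signal weight to be negative, while meeting the second and fourth forces it to be non-negative, so at most three conditions can hold at once.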

Figure 2. (A) Impossibility of implementing a context-dependent task in the absence of mixed selectivity neurons. We focus on one neuron encoding Color Rule (red). In the attractors (two panels on the left), the total recurrent synaptic current (arrow) should be excitatory when the Color Rule neuron is active, inhibitory otherwise. In case of rule switching (two panels on the right), generated by the Error Signal neuron (pink), there is a problem as the same external input should be inhibitory (dark blue) when starting from Color Rule and excitatory (orange) otherwise. (B) The effect of an additional neuron with mixed selectivity that responds to the Error Signal only when starting from Shape Rule. Its activity does not affect the attractors (two panels on the left), but it excites Color Rule neurons when switching from Shape Rule upon an Error Signal. In the presence of the mixed selectivity neurons, the current generated by the Error Signal can be chosen to be consistently inhibitory.

We illustrated the problem in a specific and schematic example, but more in general, a non-linear separability manifests itself whenever the same external event must activate a neural population in one context, and inactivate it in another, like a flip-flop. More formally, consider two attractors given by the activity patterns $\xi^1$ and $\xi^2$ (Color + Left and Shape + Left of the example of Figure 2). These represent two mental states preceding a particular event $E$ that will induce a transition to $\xi^3$ (Shape in the example) when starting from $\xi^1$, and to a different pattern $\xi^4$ (Color) when starting from $\xi^2$ ($E$ = Err. Signal in Figure 2). We need to impose the following two conditions to guarantee that the mental states are fixed points of the dynamics:

$$
\xi^1 \xrightarrow{\;E_0\;} \xi^1, \qquad \xi^2 \xrightarrow{\;E_0\;} \xi^2,
$$

where $E_0$ denotes the absence of any event (e.g., when the recurrent network receives only spontaneous activity). At the same time we need to impose the two conditions corresponding to the event-driven transitions:

$$
\xi^1 \xrightarrow{\;E\;} \xi^3, \qquad \xi^2 \xrightarrow{\;E\;} \xi^4,
$$

where $E$ represents the external event. We now prove that there is no set of synaptic weights that satisfies all the four conditions when for some neuron $i$ we have that

$$
\xi_i^1 = \xi_i^4 \neq \xi_i^2 = \xi_i^3 .
$$

We define as $I_i^\mu$ ($\mu = 1, 2$) the total synaptic current to neuron $i$ when the network is in one of the initial attractors $\xi^\mu$. For simplicity and without loss of generality, we assume that the external current in the absence of events is 0. We now consider a case in which the activity of neuron $i$ is different in the two initial mental states (i.e., when $(I_i^1 - \theta)(I_i^2 - \theta) < 0$, where $\theta$ is the threshold for neuronal activation). When the external input is activated upon the occurrence of an event, an extra current $H_i$ is injected into neuron $i$. The current is uniquely determined by the event and by the weights of the synapses connecting the external to the recurrent neurons. As a consequence it is the same for all initial mental states, that is for both attractors $\xi^1$ and $\xi^2$. We can now show that in the case in which the external event should modify the activation of neuron $i$ in both attractors, it is impossible to impose all conditions simultaneously. Indeed, consider, without loss of generality, a case in which for the attractors we have $I_i^1 > \theta$ and $I_i^2 < \theta$. We also assume that the transitions require that starting from mental state 1, the neuron is inactivated ($I_i^1 + H_i < \theta$), whereas starting from mental state 2 the neuron is activated ($I_i^2 + H_i > \theta$). We see that it is not possible to fulfill all these requirements simultaneously as $H_i$ should be negative enough to satisfy the condition for the first transition [$H_i < -(I_i^1 - \theta)$ with $I_i^1 - \theta > 0$, to satisfy the mental state stationarity condition], and, at the same time, positive enough to allow for the second transition. As the synaptic currents $I_i^\mu$ are determined by the given patterns of neural activity and by the synaptic weights, this result implies that there is no set of synaptic weights that can satisfy all conditions. Notice that this result does not depend on the specific representations of the mental states and external events, but only on the existence of neurons whose state is activated in one context and inactivated in another context by the same event (see Section “Constraints on the Types of Implementable Context-Dependent Transitions” in Appendix for a geometrical representation of this problem).
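The contradiction can be collected into a single display. As a compact restatement (the superscripts 1 and 2 on the recurrent current to neuron $i$, labelling the two initial attractors, are a notational assumption made here), the stationarity and transition conditions together require

$$
\begin{aligned}
I_i^1 > \theta \ \text{ and } \ I_i^1 + H_i < \theta \quad &\Longrightarrow \quad H_i < \theta - I_i^1 < 0, \\
I_i^2 < \theta \ \text{ and } \ I_i^2 + H_i > \theta \quad &\Longrightarrow \quad H_i > \theta - I_i^2 > 0,
\end{aligned}
$$

so the single event-driven current $H_i$ would have to be strictly negative and strictly positive at the same time.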

The probability of not encountering such a problem decreases exponentially with the number of transitions and with the number of neurons in the network, if the patterns of activities representing the mental states are random and uncorrelated (see Section “Constraints on the Types of Implementable Context-Dependent Transitions” in Appendix). This result indicates that it is very likely to encounter this problem every time our action or, more in general, our next mental state, depends on the context. We will show in the next sections that neurons with mixed selectivity solve the problem in the most general case and for any neural representation.

The Importance of Mixed Selectivity

The main problem in the example illustrated in Figure 2A originates from the assumption that each neuron is selective either to the inner mental state (Color or Shape Rule) or to the external input (such as the Error Signal). Indeed, consider an additional neuron that responds to the Error Signal only when the neural circuit is in the state corresponding to the Shape Rule. Such a neuron exhibits mixed selectivity as it is sensitive to both the inner mental state and the external input. Its average activity is higher in trials in which Shape Rule is in effect compared to the average activity in Color Rule trials. In particular, the average activity in time intervals during and preceding the Error Signal is higher when starting from Shape Rule than when starting from Color Rule. At the same time it is also selective to the Error Signal when we average across the two initial inner mental states corresponding to Color and Shape Rule. Neurons with such selectivity are widely observed in prefrontal cortex and we now show that their participation in the network dynamics solves the context dependence problem (see Figure 2B). The mixed selectivity neuron is inactive in the absence of external events, and hence it does not affect the mental state dynamics. However, it responds differently depending on the initial state preceding a transition induced by the Error Signal. This allows us to design the circuit in such a way that the Error Signal is consistently inhibitory. In this way, when starting from Color Rule, the external input inactivates the Color neurons, as required to induce a transition to the Shape Rule state. When starting from the Shape Rule, the mixed selectivity neuron is activated by the Error Signal and its excitatory output to the Color neuron can overcome the inhibitory current of the Error Signal and activate the Color neuron.

Notice that it is possible to find analogous solutions every time the neuron has mixed selectivity to the Error Signal and to the rule in effect. In fact, all neurons with mixed selectivity are activated in an odd number of cases out of the four possible situations (all combinations of the two rules, Shape or Color, in the presence/absence of the Error Signal illustrated in Figures 2A,B). Any of these mixed selectivity neurons can solve the problem, as opposed to neurons that are selective only to the inner mental state or only to the external input (see also Section “The Importance of Mixed Selectivity” in Appendix for the importance of mixed selectivity in the general case).
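The solution of Figure 2B can be written down explicitly for a toy circuit. The Python sketch below is a hand-wired illustration, not the trained network of this work: it assumes one Color neuron, one Shape neuron, one Error Signal input, and one mixed selectivity unit that fires only when the Shape Rule and the Error Signal coincide. All weights, thresholds, and function names are hypothetical values chosen for the example. For the Color neuron the Error Signal is consistently inhibitory, as in the text; for the Shape neuron the signs are mirrored, which is one possible choice among several.

```python
THETA = 0.5  # activation threshold of the rule neurons (illustrative value)

def step(current, theta):
    """McCulloch-Pitts unit: active (1) above threshold, inactive (0) otherwise."""
    return 1 if current > theta else 0

def mixed_unit(shape, error):
    """Hypothetical mixed selectivity neuron: active only for Shape Rule AND Error Signal."""
    return step(1.0 * shape + 1.0 * error, theta=1.5)

def next_state(color, shape, error):
    """One update of the two rule neurons given the current state and the event."""
    m = mixed_unit(shape, error)
    # Color neuron: self-excitation, inhibitory Error Signal, excitatory mixed unit.
    new_color = step(1.0 * color - 1.0 * error + 2.0 * m, THETA)
    # Shape neuron: self-excitation, excitatory Error Signal, inhibitory mixed unit.
    new_shape = step(1.0 * shape + 1.0 * error - 2.0 * m, THETA)
    return new_color, new_shape

def run(start, events):
    """Apply a sequence of events (0 = no event, 1 = Error Signal) and record the states."""
    state, trajectory = start, []
    for error in events:
        state = next_state(state[0], state[1], error)
        trajectory.append(state)
    return trajectory

COLOR_RULE, SHAPE_RULE = (1, 0), (0, 1)
print(run(COLOR_RULE, [0, 1, 0, 1, 1]))
```

Without an event each rule state reproduces itself, and each Error Signal flips the rule, which is the flip-flop behavior that no choice of weights could produce without the mixed unit.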

Randomly Connected Neurons Exhibit Mixed Selectivity

A neuronal circuit can be designed to endow the neurons with the necessary mixed selectivity (see e.g., Zipser and Andersen, 1988; Poggio, 1990; Pouget and Sejnowski, 1997; Pouget and Snyder, 2000; Salinas, 2004b). For example, neural network learning algorithms like backpropagation (see e.g., Hertz et al., 1991) are designed to solve non-linear separability problems similar to the one that we found in the case of context-dependent tasks. They rely on the introduction of neurons (hidden units) whose synapses are iteratively modified by a training procedure until the problem is solved. In all these cases, these additional neurons exhibit the mixed selectivity described in the previous section after a laborious training procedure.

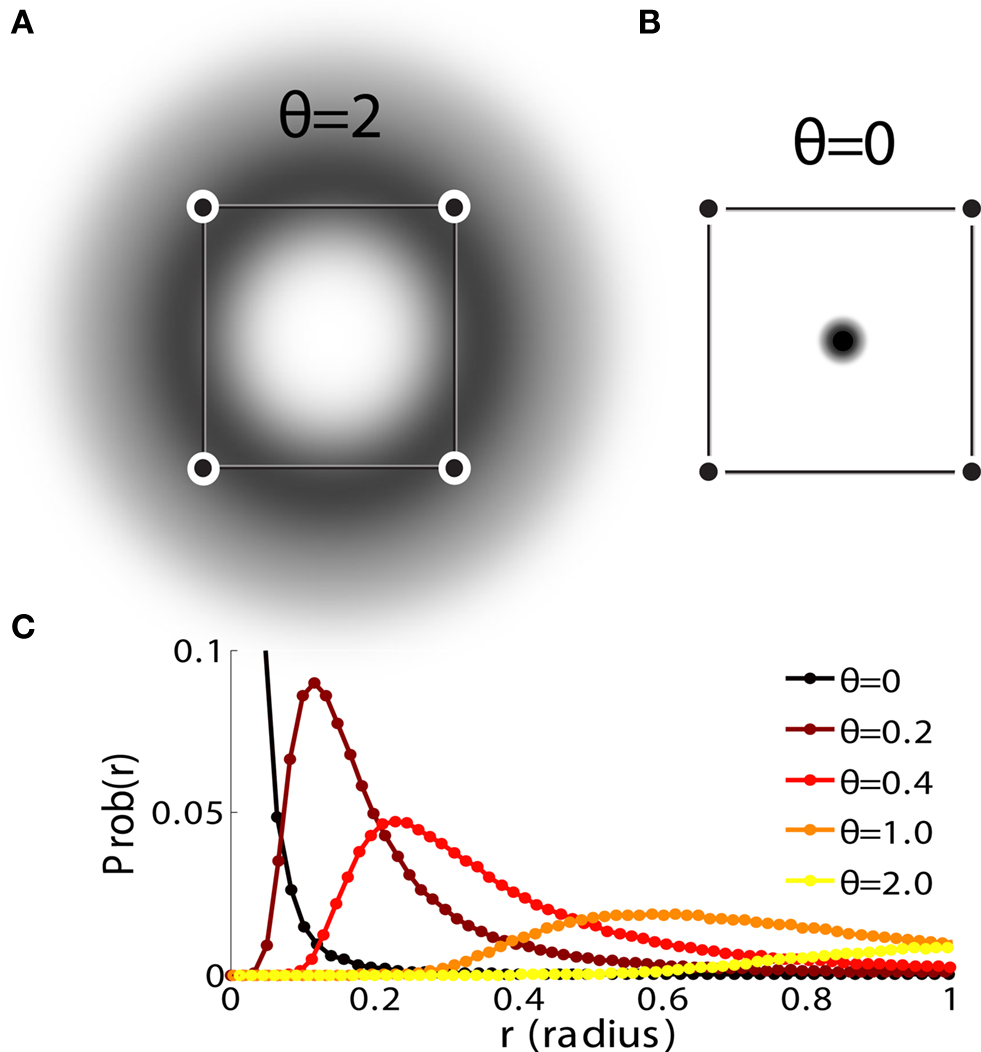

We found that there is a simple and surprisingly general solution to the problem of context dependence that does not require any training. The solution is based on the observation that neurons which receive inputs from the recurrent network and the external neurons with random synaptic weights (Randomly Connected Neurons, or RCNs) naturally exhibit mixed selectivity. Our neural network model exploits this fact and is composed of three populations of McCulloch–Pitts neurons (i.e., neurons that are active when the total synaptic current generated by the connected neurons is above some threshold θ, and inactive otherwise): (1) external neurons representing external events, (2) recurrent neurons encoding the mental state, (3) RCNs (see Figure 3A). The recurrent neurons receive inputs through plastic synaptic connections from all the neurons in the three populations. The RCNs receive connections from both the external and the recurrent neurons through synapses with fixed, Gaussian-distributed random weights (with zero mean).

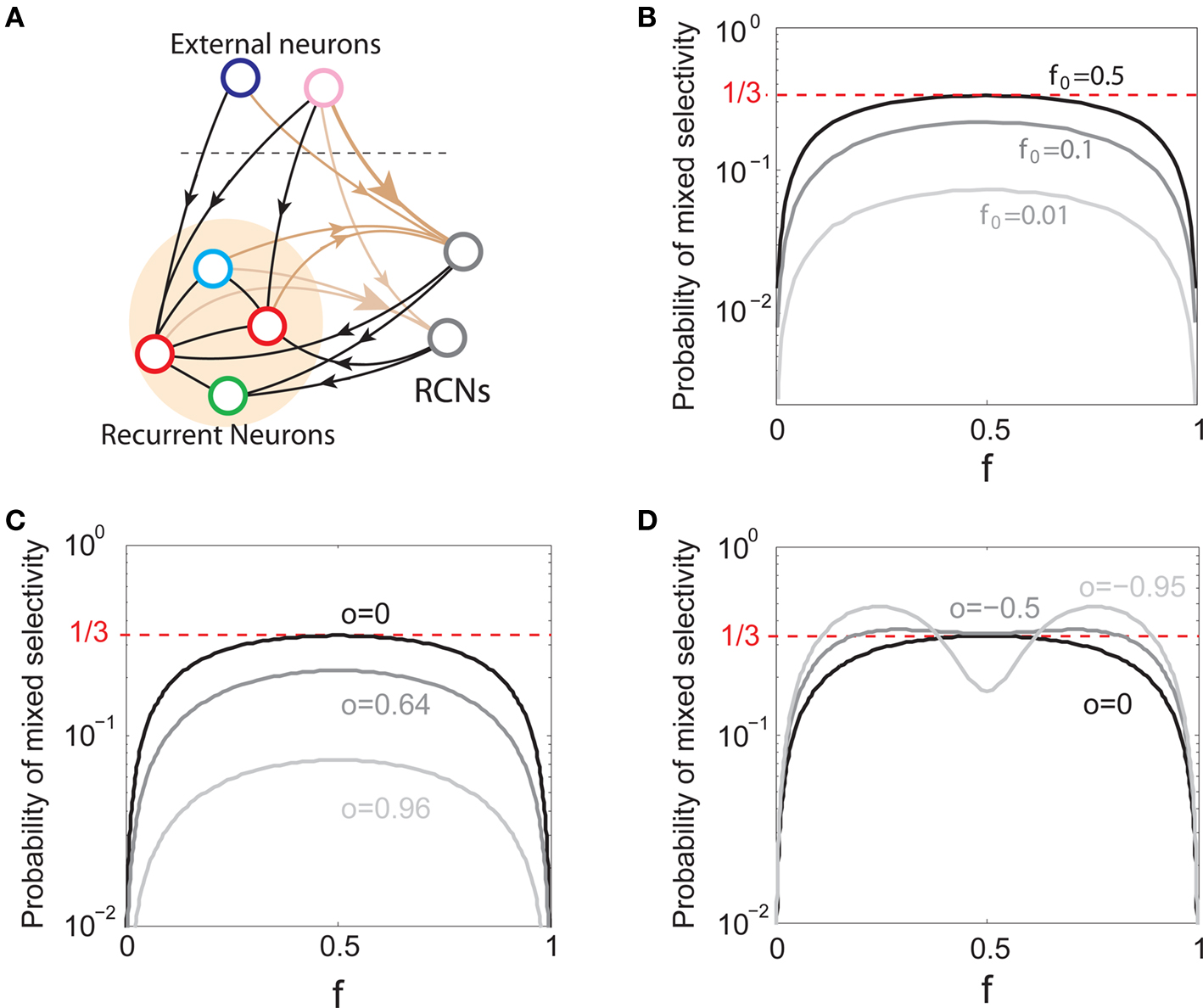

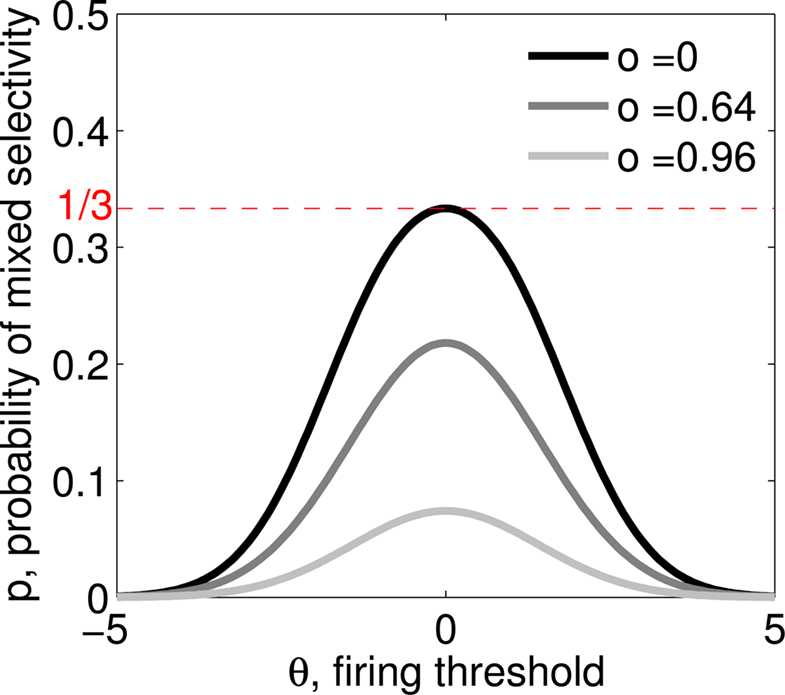

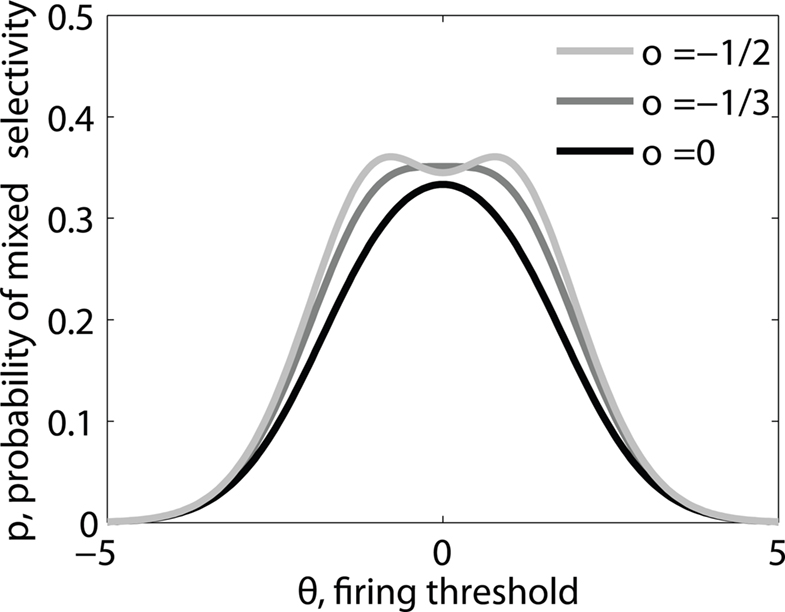

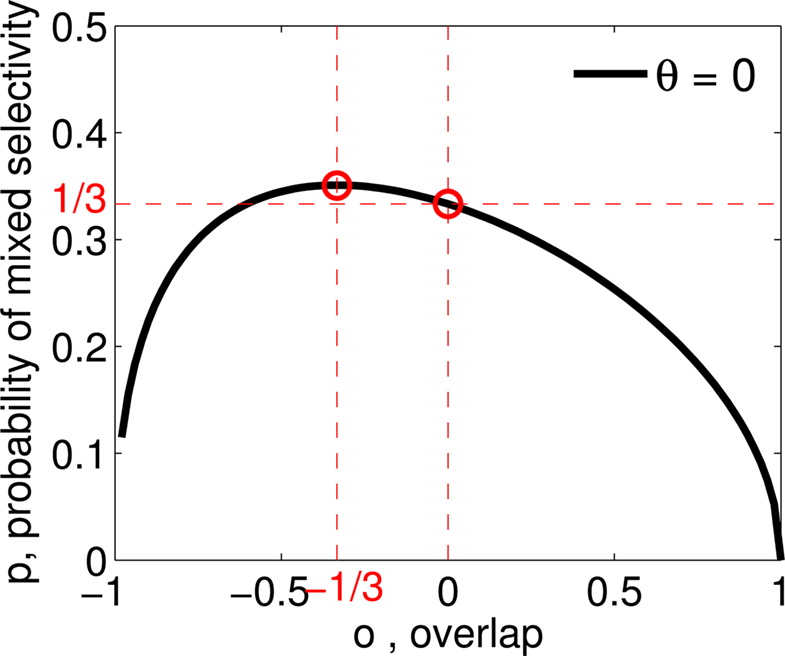

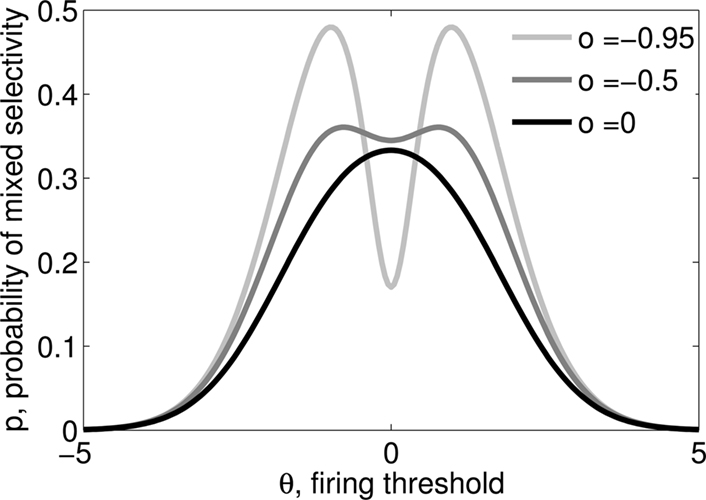

Figure 3. (A) Neural network architecture: randomly connected neurons (RCN) are connected both to the recurrent neurons and the external neurons by fixed random weights (brown). Each RCN projects back to the recurrent network by means of plastic synapses (black). Not all connections are shown. (B) Probability that an RCN displays mixed selectivity (on log scale) and hence solves the problem of Figure 2 as a function of f, the average fraction of input patterns to which each RCN responds. Different curves correspond to different coding levels f0 of the representations of the mental states and the external inputs. The peak is always at f = 1/2 (dense RCN representations). (C) Probability that an RCN has mixed selectivity as a function of f, as in (B), for different positive values of the overlap o between the two initial mental states, and the two external inputs corresponding to the spontaneous activity and the event. Again the peak is always at f = 1/2. The curve decays gently as o goes to 1. (D) As in (C), but for negative values of the overlap o, meaning that the patterns representing the mental states are anti-correlated. There are now two peaks, but notice that they remain close to f = 1/2 for all values of o.

If the activity threshold θ = 0, then every RCN responds on average to half of all possible input patterns (dense coding), as the total synaptic current is either positive or negative with equal probability. As the threshold θ increases, an RCN responds to a progressively decreasing fraction f of input patterns (sparse coding). For example, an RCN that by chance is strongly connected to both the Shape Rule recurrent neurons and to the Error Signal external neurons, will have the same mixed selectivity as the neuron represented in Figure 2B. Indeed, for a sufficiently high threshold θ, it would respond to the Error Signal only when Shape Rule neurons are active. It turns out that the probability that an RCN, as a mixed selectivity neuron, responds to an odd number of the possible combinations of the external input and the inner mental state can be as large as 1/3 when θ is small and f is close to 1/2 (see Figure 3B and Estimating the Number of Needed RCNs in Appendix). Surprisingly, this result implies that the number of RCNs needed to solve a context-dependent problem is on average only three times larger than the number of neurons needed in a carefully designed neural circuit.
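The value 1/3 can be reproduced with a small Monte Carlo experiment. The sketch below is an illustration under stated assumptions rather than the calculation of the Appendix: the two mental states and the two external inputs are represented by ±1 patterns of eight units each that are exactly orthogonal (so that "uncorrelated" holds exactly), each trial draws one RCN with independent zero-mean Gaussian weights, and an RCN counts as a mixed selectivity neuron when it is active for an odd number of the four state and input combinations. Pattern size, sample size, and all names are hypothetical.

```python
import random

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def rcn_statistics(theta=0.0, n_rcn=20000, seed=0):
    """Return (fraction of mixed selectivity RCNs, coding level f of the RCNs)."""
    rng = random.Random(seed)
    # Two orthogonal +/-1 patterns: identical on the first half, opposite on the second.
    state_1 = [1, 1, 1, 1, 1, 1, 1, 1]
    state_2 = [1, 1, 1, 1, -1, -1, -1, -1]
    # Same construction for spontaneous activity (event_0) and the event (event_1).
    event_0, event_1 = state_1, state_2
    n_mixed = 0
    n_active_total = 0
    for _ in range(n_rcn):
        # Fixed random weights of one RCN from the recurrent and external neurons.
        w_rec = [rng.gauss(0.0, 1.0) for _ in state_1]
        w_ext = [rng.gauss(0.0, 1.0) for _ in event_0]
        from_states = [dot(w_rec, s) for s in (state_1, state_2)]
        from_events = [dot(w_ext, e) for e in (event_0, event_1)]
        # Activity of the RCN for the four (state, input) combinations.
        n_active = sum(a + b > theta for a in from_states for b in from_events)
        n_mixed += n_active % 2          # odd count = mixed selectivity
        n_active_total += n_active
    return n_mixed / n_rcn, n_active_total / (4 * n_rcn)

p_dense, f_dense = rcn_statistics(theta=0.0)    # dense coding, f close to 1/2
p_sparse, f_sparse = rcn_statistics(theta=8.0)  # threshold at two standard deviations
print("theta = 0: P(mixed) =", round(p_dense, 3), " f =", round(f_dense, 3))
print("theta = 8: P(mixed) =", round(p_sparse, 3), " f =", round(f_sparse, 3))
```

With θ = 0 the estimate should fall close to 1/3, and raising the threshold makes the RCN responses sparse and lowers the fraction of mixed selectivity neurons, in line with the dependence on f described above.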

In general, the probability that an RCN is a mixed selectivity neuron depends on the coding level f0 (the fraction of active neurons in the recurrent and the external network), on the correlations between the representations of different mental states and different external inputs, and on the threshold θ that determines the coding level f of the RCNs. However, it does not depend on the values and the specific distribution of the random synaptic weights, provided that the synapses are not correlated to other synapses or to the input patterns. This means that the synaptic connections to the RCNs can be positive and negative, entirely positive (excitatory), or entirely negative (inhibitory), and the probability of finding a mixed selectivity neuron remains the same, provided that the threshold θ is properly shifted (see Estimating the Number of Needed RCNs in Appendix).

Dense representations of RCN patterns of activities (f = 1/2) are more efficient than sparse representations (f → 0 or f → 1), regardless of the coding level f0 of the representations of the mental states and the external inputs. This is illustrated in Figure 3B where the probability that an RCN responds as a mixed selectivity neuron is plotted against f for three values of f0. The proof is valid for patterns representing mental states and events that are random and uncorrelated. All curves have a maximum in correspondence of f = 1/2 and they are relatively flat for a wide range of f values. The maximum decreases gently as f0 approaches 0 (approximately as  ) because the overlap between different mental states and external inputs progressively increases, and this makes it difficult for an RCN to discriminate between different initial mental states, or different external inputs. For the same reasons, the maximum decreases in the same way as f0 tends to 1.


As the patterns representing mental states and events become progressively more correlated, the number of needed RCNs increases. In particular, in Figure 3C we show the probability of mixed selectivity as a function of f of the RCNs for different correlation levels between the patterns representing mental states and external events. The degree of correlation is expressed as the average overlap o between the two patterns representing the initial mental states (the same overlap is used for the two external events). o varies between −1 and 1, and it is positive and close to 1 for highly similar patterns (Figure 3C) or negative (Figure 3D), for anti-correlated patterns. The overlap o = 0 corresponds to the case of uncorrelated patterns. As o increases, it becomes progressively more difficult to find an RCN that can have a differential response to the two initial mental states. This is reflected by a probability that decreases approximately as  . For all curves plotted in Figure 3C, the maximum is always realized with f = 1/2. Interestingly, for anti-correlated patterns, the maximum splits in two maxima that are slightly above 1/3 (see Figure 3D). The maxima initially move away from f = 1/2 as the patterns become more anti-correlated, but then, for o < −5/6, they stop diverging from the mid point. The optimal value for f remains within the interval [0.3, 0.7] for the whole range of correlations.


In all the cases that we analyzed, which cover practically all possible statistics of the patterns for the mental states and the external events, the probability of finding an RCN that solves the context-dependent task is always surprisingly high, provided that the patterns of activities of the RCNs are not too sparse (i.e., when f is sufficiently close to 1/2, within the interval [0.3, 0.7]).

In this section we analyzed the probability that an RCN solves a single, generic, context-dependent problem. How does the number of needed RCNs scale with the complexity of an arbitrary task with multiple context dependencies? In order to answer this question, we first need to define the neural dynamics and construct a circuit that harnesses RCNs to implement an arbitrary scheme of mental states and event-driven transitions.

A General Recipe for Constructing Recurrent Networks that Implement Complex Tasks

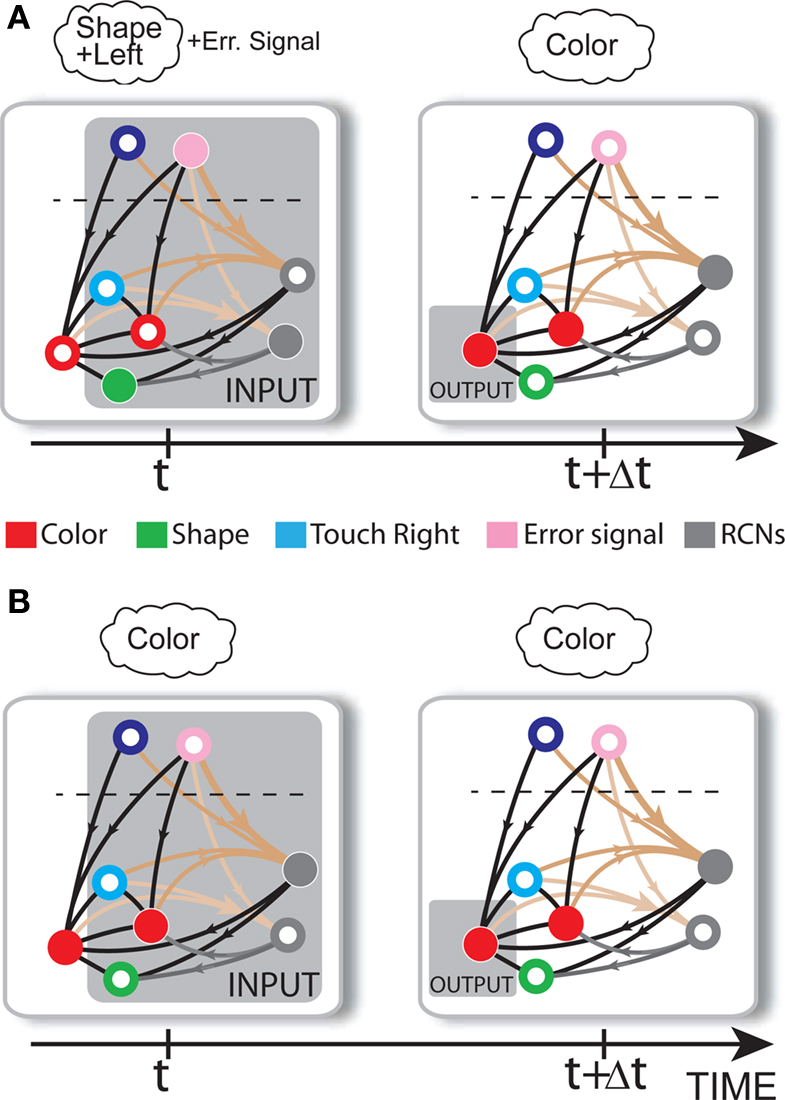

Consider our model shown in Figure 3A. Given a scheme of mental states and event-driven transitions like the one of Figure 1B, the weights of the plastic synaptic connections are modified according to a prescription that guarantees that the mental states are stable patterns of activity (attractors) and that the events steer the activity toward the correct mental state. In particular, for each attractor encoding a mental state, and for each event-driven transition, we modify the plastic synapses as illustrated in Figure 4. For example, for the transition from Shape + Left to Color induced by an Error Signal (Figure 4A), we clamp the recurrent neurons to the pattern of activity corresponding to the initial state (Shape + Left). We then compute the activity of the RCNs. We isolate in turn all the recurrent neurons and we modify their plastic synapses according to the perceptron learning rule (Rosenblatt, 1962) so that the total synaptic input drives the neurons to the activation state they should have at time t + Δt, after the transition has occurred. In the case of the mental states, we impose their stationarity by requiring that each pattern representing a mental state at time t reproduces itself at time t + Δt (see Figure 4B). In order to guarantee the stability of these patterns, we require that active neurons are driven by a current that is significantly larger than the minimal threshold value θ (i.e., I > θ + d, where d > 0 is known as a “learning margin”). Analogously, inactive neurons should be driven by a current I < θ − d. To prevent the stability condition from being trivially satisfied by inflating all synaptic weights, we require that the learning margin grow with the length of the vector representing all the synaptic weights on the dendritic tree (Krauth and Mezard, 1987; Forrest, 1988) (see Methods: Details of the Model for the details of the synaptic dynamics). When the learning procedure is repeated for all neurons, the patterns of activity corresponding to the mental states are cooperatively maintained in time through synaptic interaction and are robust to perturbations.
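The prescription for the mental states can be illustrated with a minimal sketch. It is not the implementation used for the simulations in this paper: it assumes ±1 neurons, a threshold θ = 0, no self-connections, and no RCNs, and the names `train_neuron`, `kappa`, and `lr` are hypothetical. Each neuron is isolated in turn and trained with a perceptron rule whose margin is proportional to the length of its weight vector, so that every stored pattern reproduces itself after one update.

```python
import math
import random

def train_neuron(inputs, targets, theta=0.0, kappa=0.5, lr=0.1, max_epochs=1000):
    """Perceptron learning with a margin for one isolated neuron.

    inputs  : +/-1 activity vectors at time t
    targets : desired state of this neuron at time t + dt (+1 active, -1 inactive)
    Returns the weight vector, or None if the conditions could not all be met.
    """
    w = [0.0] * len(inputs[0])
    for _ in range(max_epochs):
        updated = False
        for x, y in zip(inputs, targets):
            current = sum(wi * xi for wi, xi in zip(w, x))
            # The margin d scales with the weight-vector length, so inflating
            # all the weights cannot satisfy the condition trivially.
            d = kappa * math.sqrt(sum(wi * wi for wi in w))
            if y * (current - theta) <= d:  # violates I > theta + d or I < theta - d
                w = [wi + lr * y * xi for wi, xi in zip(w, x)]
                updated = True
        if not updated:
            return w
    return None

random.seed(0)
N, m = 60, 4  # illustrative sizes: 60 neurons, 4 mental states
states = [[random.choice((-1, 1)) for _ in range(N)] for _ in range(m)]

weights = []
for i in range(N):
    # Assumption: neuron i receives no input from itself.
    inputs = [[0 if j == i else s[j] for j in range(N)] for s in states]
    targets = [s[i] for s in states]  # stationarity: each pattern maps onto itself
    weights.append(train_neuron(inputs, targets))

def step(state):
    """One synchronous update of all neurons."""
    return [1 if sum(w * x for w, x in zip(weights[i], state)) > 0 else -1
            for i in range(N)]

stable = all(step(s) == s for s in states)
print("all mental states are fixed points:", stable)
```

An event-driven transition would be imposed in the same way, by using the pattern at time t (initial state plus event) as input and the target attractor as the desired output.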

Figure 4. Prescription for determining the plastic synaptic weights. (A) For event-driven transitions the synapses are modified as illustrated in the case of the transition from Shape + Left to Color induced by an Error Signal. The pattern of activity corresponding to the initial attractor (Shape + Left) is imposed to the network. Each neuron is in turn isolated (leftmost red neuron in this example), and its afferent synapses are modified so that the total synaptic current generated by the initial pattern of activity (time t, denoted by INPUT), drives the neuron to the desired activity in the target attractor (OUTPUT at time t + Δt). (B) For the mental states the initial and the target patterns are the same. The figure shows the case of the stable pattern representing the Color mental state. The procedure is repeated for every neuron and every condition.

All conditions corresponding to the mental states and the event-driven transitions can be imposed if there is a sufficient number of RCNs in the network. If it is not possible to satisfy all conditions simultaneously we keep adding RCNs and we repeat the learning procedure. We show that such a procedure is guaranteed to converge (see Estimating the Number of Needed RCNs in Appendix).

Dense Neural Representations Require a Number of Neurons that Grows Only Linearly with the Number of Mental States

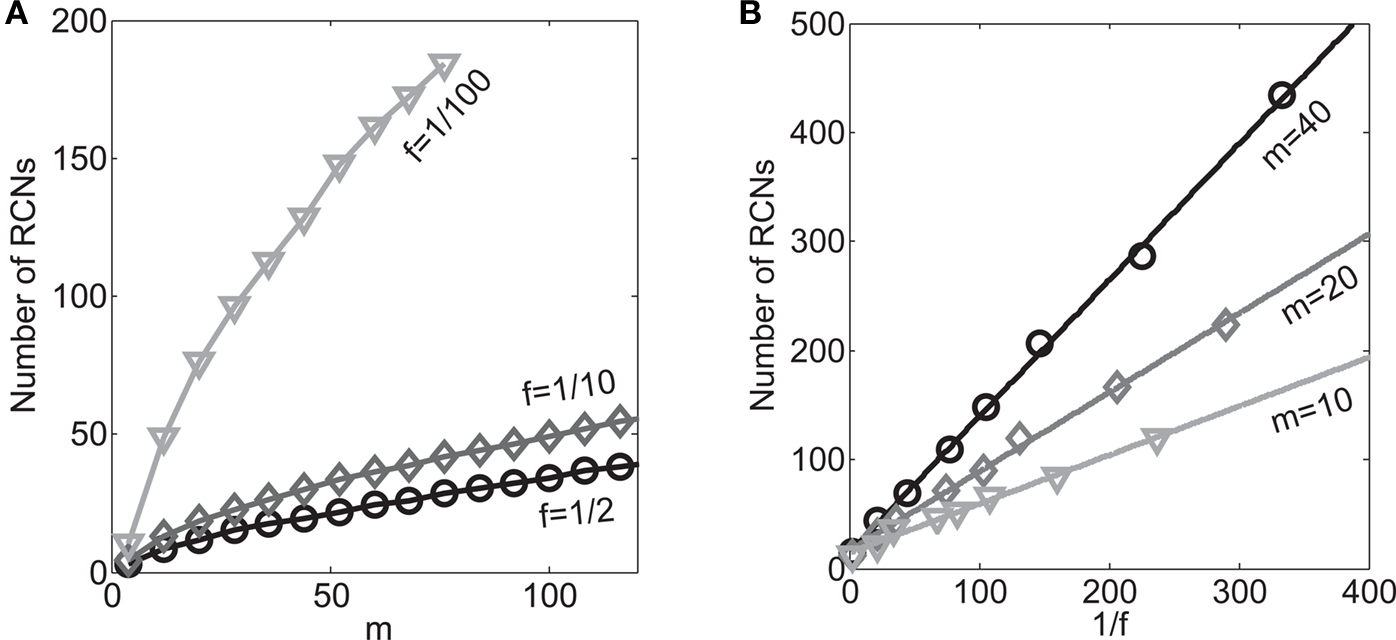

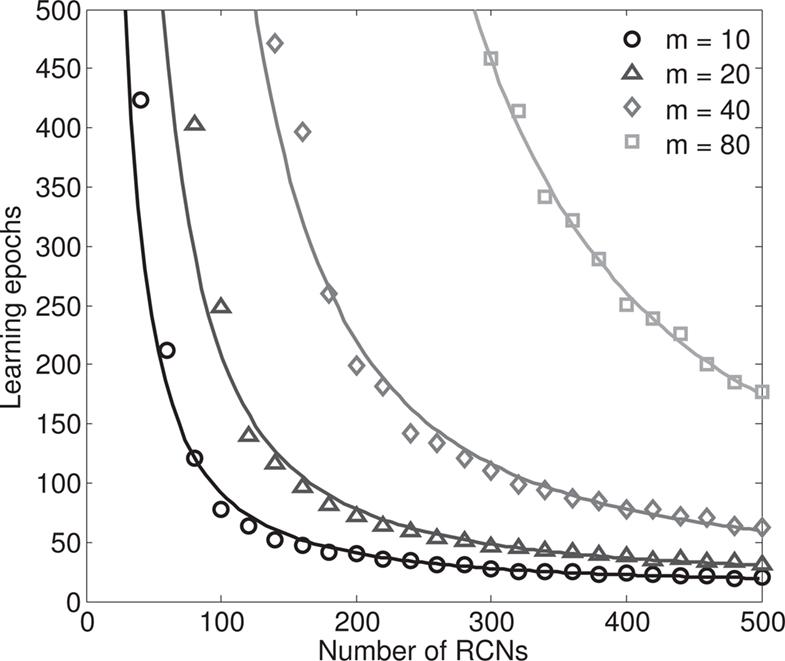

If we follow the prescription of the previous paragraph, how many RCNs do we need in order to implement a given scheme of mental states and event-driven transitions? Not surprisingly, the answer depends on the threshold θ for the activation of the RCNs, and hence on the RCNs’ coding level f. Indeed we have shown that the probability that an RCN solves a single context dependence problem depends on f, and that it is maximal for dense representations. We expected to observe a similar dependence in the full dynamic neural network implementing a complex scheme of multiple mental states and context-dependent event-driven transitions.

In the extreme limit of small f (ultra-sparse coding), each RCN responds only to a single, specific input pattern (f = 1/2^N, where 2^N is the total number of possible patterns and N is the number of synaptic inputs per RCN). We prove in Section “Estimating the Number of Needed RCNs” in Appendix that for the ultra-sparse case, any scheme of attractors and event-driven transitions can be implemented and the basins of attraction can have any arbitrary shape. Unfortunately, the number of necessary RCNs grows exponentially with the number of recurrent and external neurons. Such a dependence reflects the combinatorial explosion of possible patterns of neural activity that represent conjunctions of events.

On the other hand, with a larger f, it is more likely that an RCN solves our problem, as for the mixed selectivity neuron of Figure 2B. To quantify this effect we devised a benchmark to estimate how the number of necessary RCNs scales with f and the complexity of a context-dependent task in the case of multiple context dependencies. Specifically, we simulated a network with RCNs with coding level f implementing a set of r random transitions between m mental state attractors represented by random uncorrelated patterns. Since the result of a transition triggered by an external stimulus depends in general on the initial mental state, m can also be thought of as the number of distinct contexts. Additionally, in all these analyses we sought to make sure that the attractors representing the mental states had a finite basin of attraction ρB. This means that, whenever the activity pattern was in an initial configuration within a distance ρB > 0 from an attractor representing a mental state, then the network dynamics was required to relax into the corresponding attractor. Equivalently, every pattern of activity with an overlap greater than o = 1 − 2ρB with a given attractor pattern was required to evolve toward that attractor. For each set of parameters m, r, f, and ρB, we computed the minimal number of required total neurons in the network (recurrent and RCNs), so that the r transitions are correctly implemented and all the m mental states have a basin of attraction of at least ρB.
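The relation between the basin size ρB and the overlap o = 1 − 2ρB can be checked numerically. The sketch below assumes ±1 activity patterns and interprets ρB as the probability of flipping each neuron independently; the helper names `overlap` and `perturb` are hypothetical.

```python
import random

def overlap(a, b):
    """Normalized overlap between two +/-1 patterns (1 means identical)."""
    return sum(x * y for x, y in zip(a, b)) / len(a)

def perturb(pattern, rho, rng):
    """Flip each neuron independently with probability rho."""
    return [-x if rng.random() < rho else x for x in pattern]

rng = random.Random(1)
attractor = [rng.choice((-1, 1)) for _ in range(20000)]
noisy = perturb(attractor, 0.03, rng)
o = overlap(attractor, noisy)
print(f"overlap after a 3% perturbation: {o:.3f} (expected about 1 - 2*0.03 = 0.94)")
```

Each flipped neuron changes its contribution to the overlap from +1/N to −1/N, which is why a fraction ρB of flipped neurons lowers the overlap by 2ρB.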

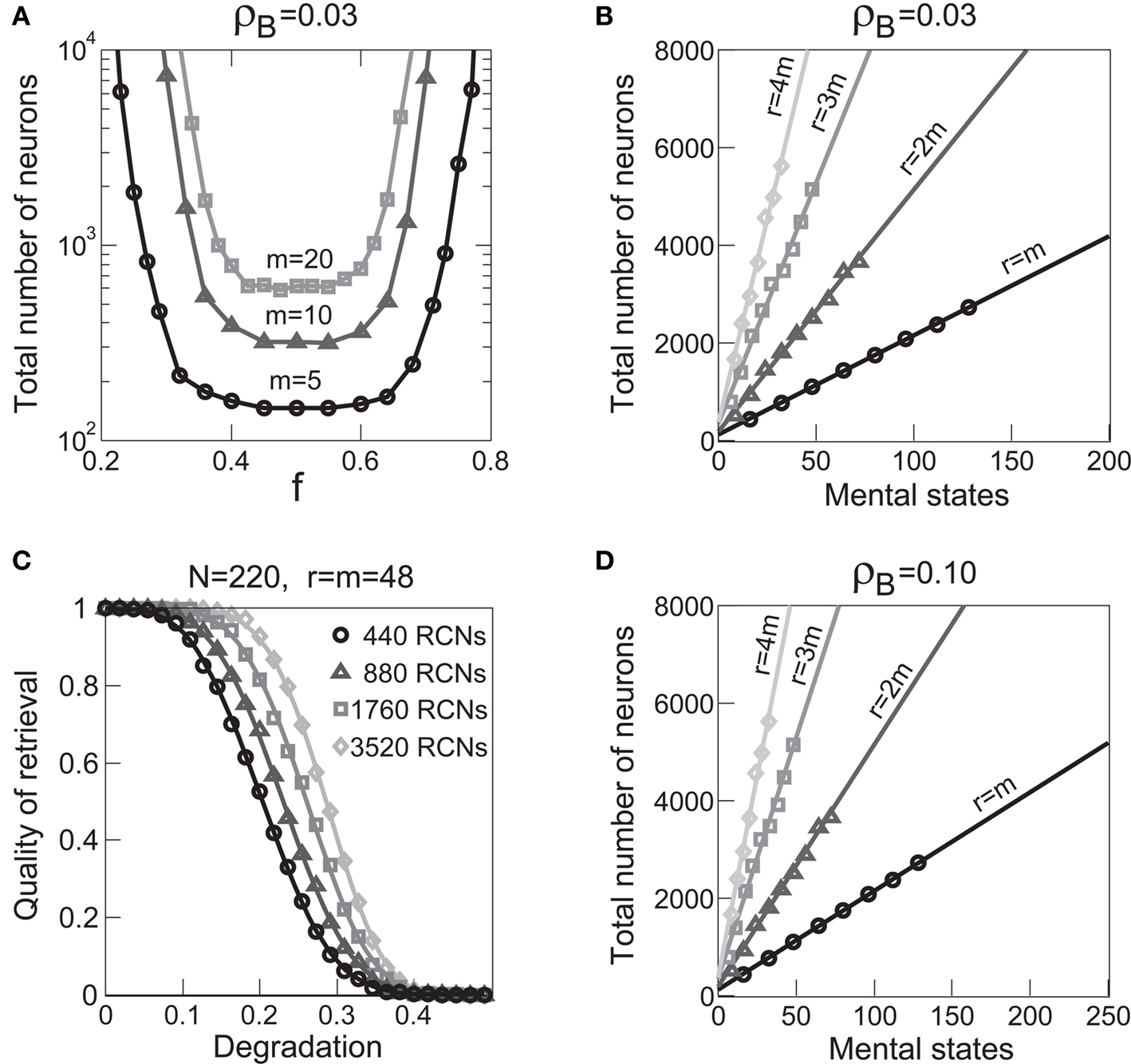

Figure 5A shows the required total number of neurons (recurrent and RCNs) as a function of the coding level f of the RCNs, found by varying the number of neurons so that the RCNs were always four times as many as the recurrent neurons. The results are shown for r = m transitions for three different numbers of contexts, m = 5, 10, 20. Consistent with the estimates of the probability that an RCN solves a single context dependence problem plotted in Figure 3, the minimal number of required neurons is attained for dense RCN patterns of activity (f = 1/2). With f = 1/2, we examined in Figure 5B how the minimal number of needed neurons depends on the task complexity, and in particular how it depends on the number of mental states m and transitions r. Notice that for the curves in Figure 5B labeled with r > m, the same event drives more than one transition, which is what typically happens in context-dependent tasks. The total number of neurons needed to implement the scheme of mental states and event-driven transitions and to keep the size of the basins of attraction constant increases linearly with m, and the slope turns out to be approximately proportional to the ratio r/m, the number of contexts in which each event can appear. In other words, the number of needed neurons increases linearly with the total number of conditions to be imposed for the stability of mental states and the event-driven transitions. This favorable scaling relation indicates that highly complicated schemes of attractor states and transitions can be implemented in a biological network with a relatively small number of neurons.

Figure 5. (A) Distributed/dense representations are the most efficient: total number of neurons (recurrent network neurons + RCNs) needed to implement r = m transitions between m random attractor states (internal mental states) as a function of f, the average fraction of inputs that activate each individual RCN. The minimal value is realized with f = 1/2. The three curves correspond to three different numbers of mental states m (5,10,20). The number of RCNs is 4/5 of the total number of neurons. (B) Total number of needed neurons to implement m random mental states and r transitions which are randomly chosen between mental states, with f = 1/2. The number of needed neurons grows linearly with m. Different curves correspond to different ratios between r and m. The size of the basin of attraction is at least ρB = 0.03 (i.e., all patterns with an overlap larger than o = 1 - 2ρB = 0.94 with the attractor are required to relax back into the attractor). (C) The size of basins of attraction increases with the number of RCNs. The quality of retrieval (fraction of cases in which the network dynamics flows to the correct attractor) is plotted against the distance between the initial pattern of activity and the attractor, that is the maximal level of degradation tolerated by the network to still be able to retrieve the attractor. The four curves correspond to four different numbers of RCNs. In all these simulations the number of recurrent neurons was kept fixed at N = 220 and m = r = 48. (D) Same as (B), but for larger basins of attraction, ρB = 0.10.

Scaling Properties of the Basins of Attraction

The RCNs have been introduced to solve the problems arising from the context dependence of some of the transitions. What is the effect of the RCNs on the size and the shape of the basins of attraction? The participation of the RCN population in the network dynamics effectively leads to the dilation of the space in which the patterns of neural activity are embedded. Specifically, as the number of RCNs increases, the absolute distance between the activity vectors representing different combinations of mental states and external inputs also increases. As a result, the patterns of activity representing the mental state and the external input become more distinguishable and easily separable by read-out neurons. This projection into a higher dimensional space is reminiscent of the support vector machines (SVM) strategy of pre-processing the data (Cortes and Vapnik, 1995).

The space dilation caused by the introduction of the RCNs can solve the non-linear separabilities generated by context dependence. At the same time it has the desirable property of approximately preserving the structure of the basins of attraction. Indeed, the total synaptic inputs to the RCNs have statistical properties that are similar to the ones of random projections. Random projections are simply obtained by multiplying the vectors representing the patterns in the original space by a matrix with random uncorrelated components. These projections preserve vector similarities with high probability if the projection space is large enough (Johnson and Lindenstrauss, 1984). As a consequence, random projections preserve the structure of the basins of attraction, because all points surrounding the attractor in the original space are mapped onto points which maintain the same spatial relation.

Because of the non-linearity due to the sigmoidal neuronal input–output relation, the RCNs distort the space and preserve similarities only with some degree of approximation. For instance, small distances are on average amplified more than large distances. However, similarly to what happens for random projections, the ranking of distances is preserved (again, on average). In other words, if pattern B is more similar to A than C is, the corresponding RCN representations are also likely to preserve the same similarity relations. This is an important property for preserving the topology of the basins of attraction.
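These two properties, preserved ranking and stronger amplification of small distances, can be illustrated with a small sketch. It makes simplifying assumptions that are not taken from the model in this paper: ±1 input patterns, Gaussian random weights, and a hard threshold at zero (which gives f = 1/2) in place of the sigmoidal input–output relation. The helper names `flip` and `rcn_layer` are hypothetical.

```python
import random

def overlap(a, b):
    """Normalized overlap between two +/-1 patterns."""
    return sum(x * y for x, y in zip(a, b)) / len(a)

def distance(a, b):
    """Fraction of neurons in which two +/-1 patterns differ."""
    return (1 - overlap(a, b)) / 2

def flip(pattern, n_flips, rng):
    """Return a copy of the pattern with exactly n_flips neurons inverted."""
    idx = set(rng.sample(range(len(pattern)), n_flips))
    return [-x if i in idx else x for i, x in enumerate(pattern)]

def rcn_layer(pattern, weights):
    """Randomly connected neurons with a hard threshold at zero (assumption)."""
    return [1 if sum(w * x for w, x in zip(row, pattern)) > 0 else -1
            for row in weights]

rng = random.Random(0)
n, k = 100, 1000                      # input neurons, RCNs (illustrative sizes)
A = [rng.choice((-1, 1)) for _ in range(n)]
B = flip(A, 10, rng)                  # close to A: distance 0.1
C = flip(A, 40, rng)                  # far from A: distance 0.4
W = [[rng.gauss(0, 1) for _ in range(n)] for _ in range(k)]
rA, rB, rC = (rcn_layer(p, W) for p in (A, B, C))

d_in = {"AB": distance(A, B), "AC": distance(A, C)}
d_out = {"AB": distance(rA, rB), "AC": distance(rA, rC)}
for pair in ("AB", "AC"):
    print(pair, "input distance:", d_in[pair], "RCN distance:", round(d_out[pair], 3),
          "amplification:", round(d_out[pair] / d_in[pair], 2))
```

In this setting B remains closer to A than C does in the RCN representation, while the small distance A–B is amplified by a larger factor than the large distance A–C.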

To summarize, the RCNs always increase the absolute distances between the input patterns of activity and preserve approximately the relative distances. The small distortions introduced by the non-linear input–output function have the beneficial effect of solving the non-linear separability due to context dependence, and the negative effect of partially disrupting the topology of the basins of attraction.

The effects on the capacity are illustrated in Figures 5B–D. The basin of attraction for a fixed point is estimated in Figure 5C. Starting from the fixed point, we perturbed the neurons of the recurrent network, and measured the fraction of perturbed patterns that relaxed back into the correct attractor. The fraction of correct relaxations stays at 1 when the initial patterns are close to the attractor and then it decreases with the fraction of perturbed neurons. As long as the fraction of correct relaxations is near 1, most of the patterns are within the basin of attraction. The different curves correspond to different numbers of RCNs (at a fixed number of recurrent neurons) and it is clear that the introduction of RCNs expands the basin of attraction, although the number of required neurons seems to grow exponentially with the size of the basin.

However, when the complexity of the task increases, the dependence of the number of RCNs on the number of mental states and the number of transitions remains linear for all the different sizes of basins of attraction that we studied. In order to preserve this scaling, we increased in the same proportion the number of neurons of the recurrent network and the RCNs, so that the RCNs can solve the non-linear separabilities, but at the same time they do not distort too much the distances in the original space of the recurrent network. In Figure 5D we show how the number of required neurons (recurrent neurons + RCNs) scales with the number of mental states for the benchmark of Figure 5B. The two figures differ in the required sizes for the basins of attraction. For Figure 5B the basin of attraction had to be large enough to guarantee that initial patterns with a perturbation as high as 3% (i.e., the probability of changing the state of each neuron is ρB = 0.03) would all relax back in the attractor. In Figure 5D the requirement about the basin of attraction was that initial patterns with a 10% perturbation would all relax back in the attractor. In both cases the number of needed neurons is linear in both the number of mental states m and the number of transitions r. In particular, if Nr is the total number of required neurons, we have that:

Nr ∼ α(r/m)m,

where α is a function of the number of transitions per state (r/m). In our case, α = βr/m, where β depends on the size of the basins of attraction. It is practically constant for ρB = 0.03, 0.10 (β ≃ 60) and it increases rapidly for larger basins with ρB = 0.20 (β ≃ 200, not shown in the figures). Our simulations show in all cases that the number of needed neurons increases linearly with the number of conditions to be imposed (i.e., the number of attractors plus the number of event-driven transitions), regardless of the size of the basin of attraction.
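As a rough worked example of this relation, substituting α = βr/m shows that the estimate depends only on the number of transitions r. The values of m and r below are illustrative choices, not data points read from the figures, and β ≃ 60 is simply the value quoted above for ρB = 0.03 and 0.10:

$$
N_r \sim \alpha\!\left(\tfrac{r}{m}\right) m = \beta\,\frac{r}{m}\,m = \beta\,r,
\qquad
\beta \simeq 60:\quad
r = m = 20 \;\Rightarrow\; N_r \approx 1200,
\qquad
r = 2m = 40 \;\Rightarrow\; N_r \approx 2400 .
$$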

The introduction of RCNs increases the absolute distances between the input patterns, and also has the beneficial effect of speeding up the learning process. Indeed, the convergence time of the perceptron algorithm that we use to impose all the conditions for attractors and transitions decreases with an increasing number of RCNs, as shown in Section “The Number of Learning Epochs Decreases with the Number of RCNs” in Appendix, Figure 8. This is also true when we impose that the basins of attraction must have a given fixed size, or in other words, that the generalization ability of the network remains unchanged for different numbers of RCNs.

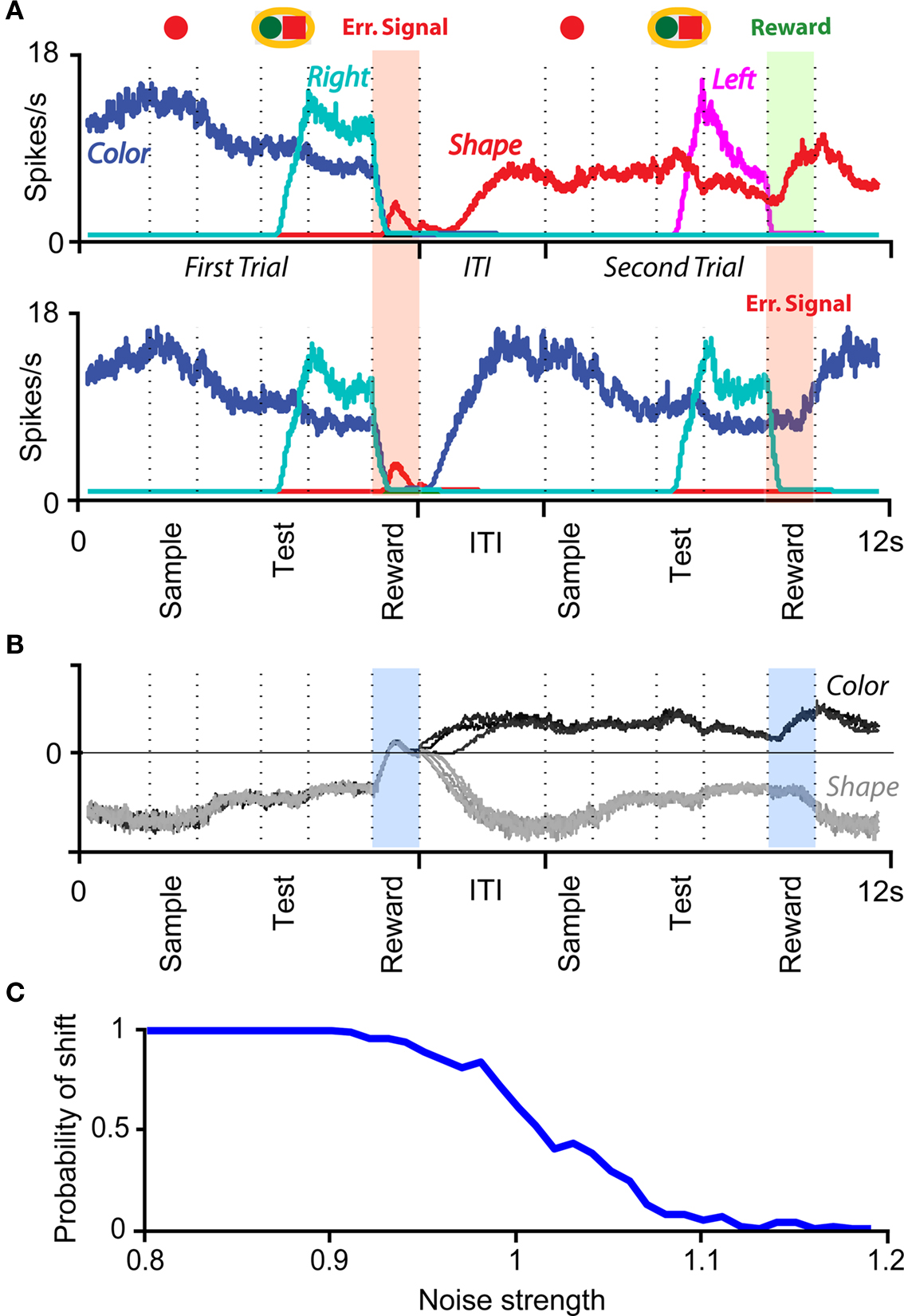

Modeling Rule-Based Behavior Observed in Monkey Experiments

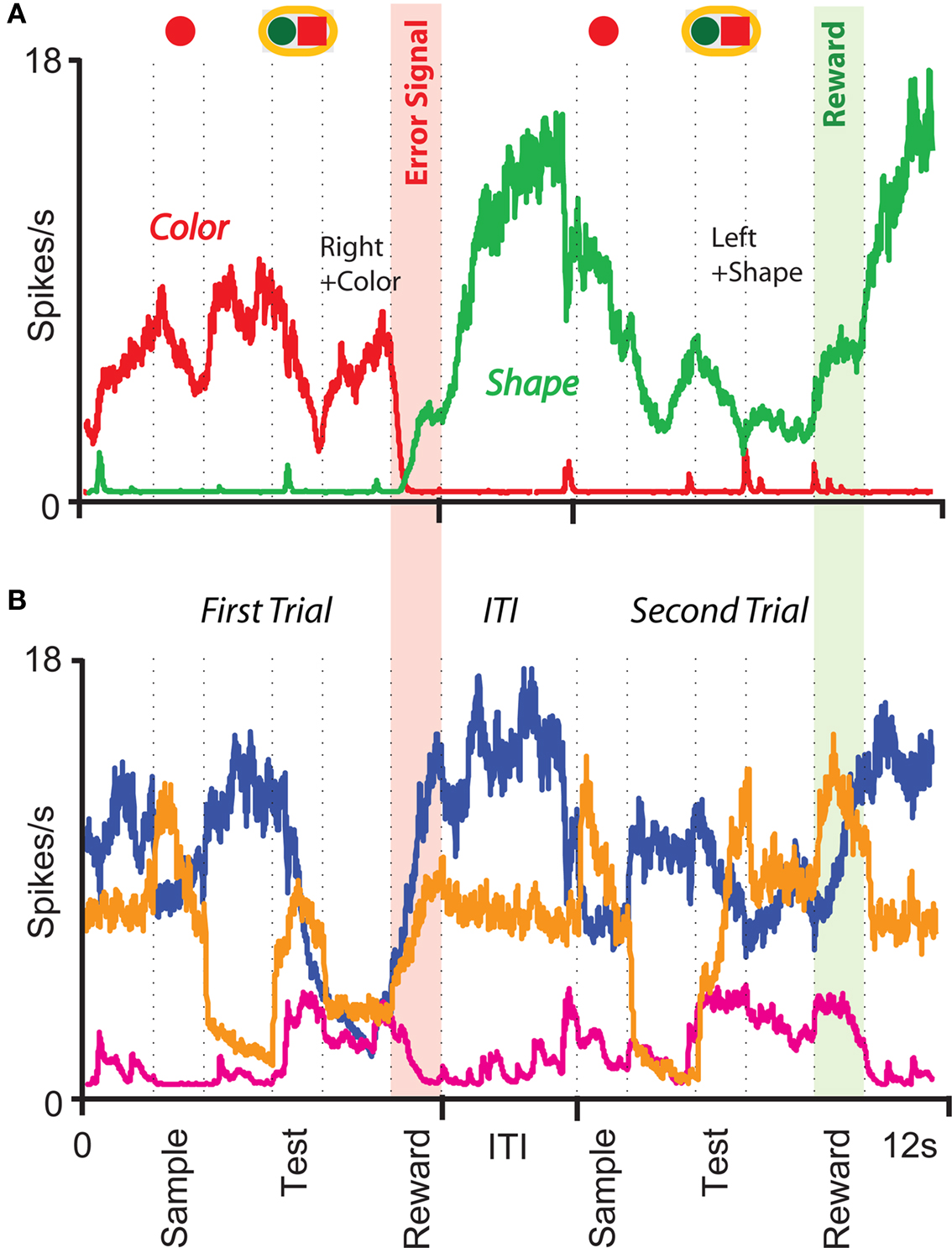

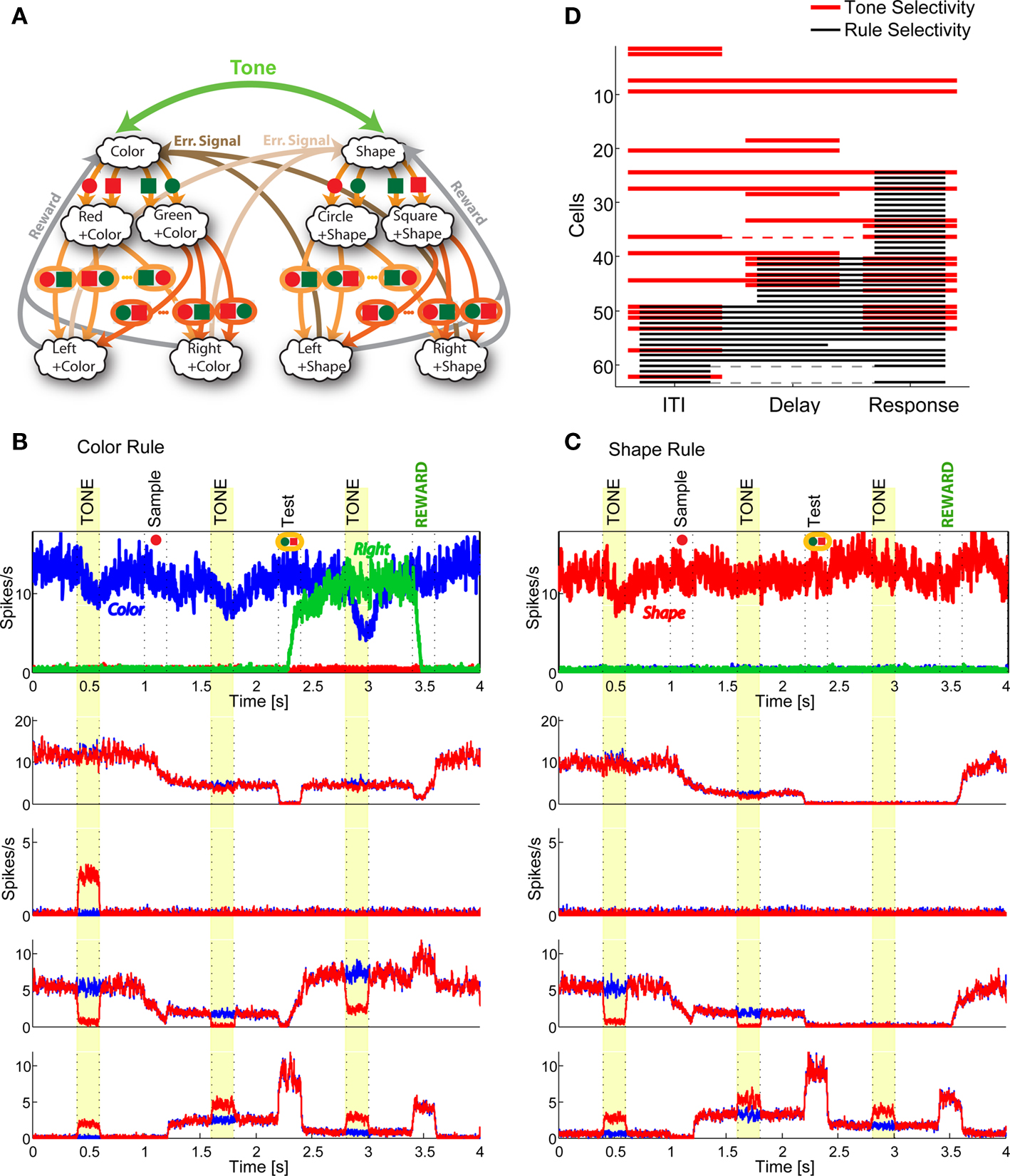

The prescription for building neuronal circuits that implement a given scheme of mental states and event-driven transitions is general, and it can be used for arbitrary schemes provided that there is a sufficient number of RCNs. To test our general theory, we applied our approach to a biologically realistic neural network model designed to perform a rule-based task which is analogous to the WCST described in Figure 1 (Mansouri et al., 2006, 2007), whose scheme is reproduced in Figure 7A. We implemented a network of more realistic rate-based model neurons with excitation mediated by AMPA and slow NMDA receptors, and inhibition mediated by GABAA receptors. Figure 6A shows the simulated activities of two rule-selective neurons during two consecutive trials after a rule shift. The rule in effect changes from Color to Shape just before the first trial, causing an erroneous response that is corrected in the second trial, after the switch to the alternative rule. Although the two neurons shown in Figure 6A are always selective to the rule, their activity is modulated by other events throughout all the epochs of the trials. This is due to the interaction with the other neurons in the recurrent network and with the RCNs. Figure 6B shows the activity of three RCNs. They typically have a rich behavior exhibiting mixed selectivity that changes depending on the epoch (and hence on the mental state). Two features of the simulated neurons have already been observed in experiments: (1) neurons show rule-selective activity in the inter-trial interval, as observed for a significant fraction of cells in PFC (Mansouri et al., 2006); (2) the selectivity to rules is intermittent, or in other words, neurons are selective to a different extent to the rules depending on the epoch of the trial. This second feature is analyzed in detail in the next section.

Figure 6. Simulation of a Wisconsin Card Sorting-type Task after a rule shift. (A) Simulated activity as a function of time of two sample neurons of the recurrent network that are rule selective. The first neuron (red) is selective to “color” and the second (green) to “shape”. The events and the mental states for some of the epochs of the two trials are reported above the traces. (B) Same as (A), but for three RCNs.

Figure 7. (A) Minimal scheme of mental states and event-driven transitions for the simplified WCST (same as in Figure 1B). (B) Rule selectivity pattern for 70 simulated cells: for every trial epoch (x-axis) we plotted a black bar when the neuron had a significantly different activity in shape and in color blocks. The neurons are sorted according to the first trial epoch in which they show rule selectivity. (C) Same analysis as in (B), but for spiking activity of single-units recorded in prefrontal cortex of monkeys performing an analog of the WCST (Mansouri et al., 2006). (D) Scheme of mental states and event-driven transitions with multiple states during the inter-trial interval. (E) Same as (B), but for the history-dependent scheme in (D). (F) Same as (E), but for the selectivity to the color of the sample (red bars). Short black bars indicate rule selectivity.

Observed Properties of Mixed Selectivity Neurons: Intermittent Selectivity in Simulations and Experiments

To analyze more systematically the selectivity of simulated mixed selectivity cells and to compare it to what is observed in prefrontal cortex, in Figure 7B we plotted for 70 cells whether they are significantly selective to the rule for every epoch of the trial. The cells are sorted according to rule selectivity in different epochs, starting from the neurons that are rule selective in the inter-trial interval. Whenever a cell is rule selective in a particular epoch, we draw a black bar. In the absence of noise, all cells would be selective to the rule, as every mental state is characterized by a specific collective pattern of activity and the activity of each neuron is unlikely to be exactly the same for two different mental states. However we consider a cell to be selective to the rule only if there are significant differences between the average activity in Shape trials and the average activity in Color trials. The results depend on the amount of noise in the simulated network, but the general features of selectivity described below remain the same for a wide range of noise levels.
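The selectivity criterion can be sketched as a two-sample comparison of trial-averaged activity. The statistical test is not specified in this section, so the sketch below assumes a Welch t-statistic with an illustrative critical value `t_crit`; the function names and the firing rates are made up for the example.

```python
import math
import statistics

def welch_t(a, b):
    """Welch t-statistic for the difference between two sample means."""
    se = math.sqrt(statistics.variance(a) / len(a) + statistics.variance(b) / len(b))
    return (statistics.mean(a) - statistics.mean(b)) / se

def is_rule_selective(shape_rates, color_rates, t_crit=3.0):
    """Assumed criterion: |t| above an illustrative critical value."""
    return abs(welch_t(shape_rates, color_rates)) > t_crit

# Made-up firing rates (Hz) of one cell in one epoch, one value per trial.
shape_trials = [10, 12, 11, 13, 12, 11]
color_trials_selective = [5, 6, 4, 5, 6, 5]          # clearly lower in Color trials
color_trials_nonselective = [11, 12, 10, 13, 11, 12]  # same mean as Shape trials

bar_drawn = is_rule_selective(shape_trials, color_trials_selective)
no_bar = is_rule_selective(shape_trials, color_trials_nonselective)
print("selective epoch:", bar_drawn, "| non-selective epoch:", no_bar)
```

Applying such a test to every cell and every epoch would yield one black bar per selective cell and epoch, as in Figure 7B. Larger noise increases the variance terms and therefore reduces the number of bars.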

The selectivity clearly changes over time, as the set of accessible mental states for which the activity is significantly different changes depending on the epoch of the trial. This intermittent selectivity is also observed in the experimental data (Mansouri et al., 2006) reproduced in Figure 7C. More recently it has also been observed by Cromer et al. (2010). The experimental selectivity is in general less significant than in the simulations for several reasons. In the experiment the neural activity is estimated on a limited number of trials from spiking neurons and hence the noise can be significantly higher than in the simulations. However, there might be a more profound reason for the discrepancy between experiments and simulations, which is related to the fact that the monkey might be using a strategy that is more complicated than the one represented in Figure 1B. If, indeed, we assume that the monkey keeps actively in mind not only the rule in effect, but also some other information about the previous trial that is not strictly essential for performing the task, then the number of accessible states during the inter-trial interval can be significantly larger, and this can strongly affect the selectivity pattern of Figure 7B. This is illustrated in Figures 7D–F, where we assumed that the monkey remembers not only the rule in effect, but also the last correct choice (see e.g., Barraclough et al., 2004 for a task in which the activity recorded in PFC contains information about reward history). In such a case the activity in the inter-trial interval is more variable from trial to trial and the pattern of selectivity resembles more closely the one observed in the experiment of Mansouri et al. (2006).

The statistics of the black bars depends on the structure of the neural representations of the mental states and on the statistics of the random connections to the RCNs. In particular, the correlations between mental states can generate correlations between patterns of selectivity in different epochs, and across neurons. The fact that rule selectivity is not a property inherent to the cell is a general feature of our network which will be demonstrated also for different types of selectivity, such as a stimulus feature or reward delivery (observed in the experiment of Mansouri et al., 2006). For example, the simulations in Figure 7F show for the same cells of Figure 7E the selectivity to the color of the sample stimulus (red bars), on top of the bars indicating rule selectivity. Obviously, there is no cell that is selective to the sample stimulus before it is presented (inter-trial interval), but in the remaining part of the trial the pattern of red bars seems to be as complex as the one for rule selectivity. Notice that some cells are selective to both the rule and the color in some epochs.

Predicted Features of Mixed Selectivity: Diversity, Pre-Existence, and Universality

The RCNs and the recurrent neurons show mixed selectivity that is predicted to exhibit features that are experimentally testable. In particular:

1. Mixed selectivity should be highly diverse, in time, as pointed out in the previous section (see also Lapish et al., 2008; Sigala et al., 2008), and in space, as different neurons exhibit significantly different patterns of selectivity. Such a diversity is predicted to be significantly higher than in the case of alternative models with hidden units, in which the synaptic connections are carefully chosen to have a minimal number of hidden units. According to our model, neurons with selectivity to behaviorally irrelevant conjunctions of events and mental states are predicted to be observable at any time (see Rigotti et al. (2010) for preliminary experimental evidence in orbito-frontal cortex and amygdala).

2. Mixed selectivity should pre-exist learning: neurons that are selective to behaviorally relevant conjunctions of mental states and events are predicted to be pre-existent to the learning procedure of a task.

3. Mixed selectivity should be “universal”: the neurons of the network have the necessary mixed selectivity to solve arbitrarily complicated tasks that involve the present and future mental states. Were we able to impose artificially an arbitrary pattern of activity representing a future mental state, we would observe neurons that are selective to conjunctions of that mental state and familiar or unfamiliar events, even before any learning takes place.

These three features are illustrated in Figure 8 where we make specific predictions in the case in which the simplified WCST illustrated in Figure 1 and analyzed in the previous section is modified to produce a rule switch whenever a tone is heard. We consider the situation in which the subject has already learned and is correctly performing the WCST. At some point, a new sensory stimulus (e.g., a tone) signals a rule switch, and the task is modified as indicated in Figure 8A. We now analyze the behavior of the simulated network before the new task is learned. Figures 8B,C show the activity of a few neurons as a function of time. The tone is a new event, and it is initially ignored by the network collective dynamics and the behavior is still controlled by the old scheme of mental states and event-driven transitions. In other words, the tone is unable to induce any transition from one mental state to another. In general the behavior would be unaffected by any distractor that is sufficiently dissimilar from the relevant sensory stimuli. This resistance to distractors has been observed in prefrontal circuits (Sakai et al., 2002).

Figure 8. Diversity, pre-existence and universality of neurons with mixed selectivity. (A) Extended WCST (eWCST): task switch is driven not only by an error signal, but also by a tone (green arrow). (B,C) All necessary mixed selectivities are pre-existent (i.e., they exist before learning). The simulated network is trained on the WCST of Figure 1D. We show the neural activity in trials preceding learning of eWCST. The neurons in the top panels of (B,C) encode the rule in effect and the motor response Right, as in Figure 6. (B) Shows one trial in which Color Rule is in effect, (C) a trial in which Shape Rule is in effect. The other plots represent the activity of four cells during the same trial in the absence (blue) and in the presence (red) of the tone. Some neurons are selective to the rule, but not to the tone (top). Some others have mixed selectivity to the tone and the rule (two central panels) even when the conjunctions of events are still irrelevant for the task (the network is not trained to solve eWCST). See in particular the neuron in the top central panel, that responds to the tone only when Color Rule is in effect. Finally, there are neurons that are selective to the tone but not to the rule. (D) Selectivity to the rule in effect (black) and to the tone (red) before learning of the eWCST (cf. Figure 7F). There are many neurons with the mixed selectivity that are necessary to solve the eWCST before any learning takes place.

Although the tone does not initially induce any transition from one mental state to another, the activity of individual neurons is visibly affected by it, and there are clearly cells that are already selective to the conjunction of tone and mental states even before the meaning of the tone is learned. The selectivity to the tone is shown in the four bottom panels of Figures 8B,C, in which we plot the activity of a few representative RCNs in the presence (red) and in the absence of the tone (blue). These neurons clearly show a selectivity to the conjunction of tone and mental states (see yellow stripes).

This kind of behavior reflects an efficient form of gating that allows the neural network to perform correctly the task, but, at the same time, to encode transiently in its activity the occurrence of a new event (the tone).

It is important to notice that these neurons that respond to conjunctions of tone and rule-encoding mental states are irrelevant for the simplified WCST, but they are nevertheless present in the network and observable (high diversity feature). As it turns out, they are essential for rule switching induced by the tone, as they solve the context dependence problem of the task to be learned, and they are already present before the learning process takes place (pre-existence feature). The mixed selectivity of the RCNs is also universal, as it would solve any other task with high probability (universality feature). The statistics of the selectivity to rules and to the tone of 62 RCNs are shown in Figure 8D. The black bars represent selectivity to rules, as in Figures 7B,C,E, and the red ones represent selectivity to the tone. In both cases, the selectivity in the different epochs is shown before the learning process takes place. Figure 8D shows that there is a large proportion of RCNs that exhibit mixed selectivity to rules and tone, and that can greatly facilitate the learning process (see discussion in Asaad et al., 1998 and Rigotti et al., 2010).

Discussion

Heterogeneity is a salient yet puzzling characteristic of neural activity correlated with high level cognitive processes such as decision making, working memory, and flexible sensorimotor mapping. Usually models are built to reflect the way we believe the brain solves a certain problem, and neurons with particular functional properties are carefully chosen to make the system work. In some cases these systems are tested to see whether they remain robust in spite of the presence of disorder and the diversity observed in the real brain. Instead, here we showed that heterogeneity actually plays a fundamental computational role in complex, context-dependent tasks. Indeed, it is sufficient to introduce neurons that are randomly connected in order to reflect a mixture of neural activity encoding the internal mental state and the neural signals representing external events. The introduction of these cells in the network is sufficient to enable the network to perform complex cognitive tasks and facilitates the process of learning. One of the main results of our work is that the number of necessary randomly connected neurons is surprisingly small and typically is comparable to the number of cells needed in carefully designed neural circuits. The randomly connected neurons have the advantage that they provide the network with a large variety of mixed selectivity neurons from the very beginning, even before the animal can correctly perform the task. Moreover, when the representations are dense, they are “universal” as they are likely to participate in the dynamics of multiple tasks.

Other Approaches Based on Hidden Units with Mixed Selectivity

Mixed selectivity has already been proposed as a solution to similar and different problems. For example, mixed selectivity to the retinal location of a visual stimulus and the position of the eyes can be used to generate a representation of the position of external objects and then determine the changes in joint coordinates needed to reach the object (Zipser and Andersen, 1988; Pouget and Sejnowski, 1997; Pouget and Snyder, 2000; Salinas and Abbott, 2001). Neurons with these response properties have been observed in the parietal cortex of behaving monkeys. Neurons with mixed selectivity to the identity of a visual stimulus and its ordinal position in a sequence have been used to model serial working memory (Botvinick and Watanabe, 2007). Mixed selectivity to stimulus identity and to a context signal has been used to model visuomotor remapping (Salinas, 2004a). More generally, complex non-linear functions of the sensory inputs, like motor commands, can be expressed as a linear combination of basis functions (Poggio, 1990). These non-linear functions can be implemented by summing the inputs generated by neurons with mixed selectivity to all possible combinations of the relevant aspects of the task (e.g., different features of the sensory stimuli). One of the unresolved issues related to this approach is that the number of needed mixed selectivity neurons increases exponentially with the number of relevant aspects of the task (combinatorial explosion). This should be contrasted with the linear scaling of our approach based on RCNs.

The solution that we propose is based on the introduction of additional neurons that are randomly connected and that modify the representation of inner mental states in the presence of external inputs. As discussed, our solution is simple and it reproduces the response properties of neurons recorded in prefrontal cortex. A similar solution to the context dependence problem has been proposed by Salinas (2004a,b), who harnessed gain modulation to solve the non-linear separabilities. His approach is similar to the basis function approach that was just discussed, in Section “Introduction,” as he introduces neurons whose activity depends on the product of a function of the identity of the stimulus and a function of the context signal. These neurons have mixed selectivity to the inner mental state encoding the context and to the sensory stimulus. Similarly to what we did with the RCNs, he also chose a random permutation of gain functions to generate these neurons. However, in contrast with what we did, the author decided not to model explicitly the neural circuit that actively maintains the context representation and produces the neurons with mixed selectivity. Moreover, and most importantly, he presented an interesting case study, but he did not systematically study the scaling properties of his neural system.

In the works discussed above, the neurons with mixed selectivity are the result of specific, prescribed synaptic weights. However, there are also more general learning rules to find the weights to hidden units that have the needed mixed selectivity. A classical example is the Boltzmann machine (Ackley et al., 1985), which has been designed to solve similar problems, in which attractors corresponding to non-linearly separable patterns are stabilized by the activity of hidden units. Recent extensions of the Boltzmann machine algorithm (O’Reilly and Munakata, 2000; Hinton and Salakhutdinov, 2006) can also deal with event-driven transitions from one attractor to another. Our approach is similar because our RCNs are analogous to the hidden units of the Boltzmann machines. However, in our case the synaptic connections to the RCNs are not plastic and we do not need to learn them.

We would like to stress that what we propose is not a real learning algorithm, but rather a prescription for finding the synaptic weights. A real, biologically plausible learning algorithm would probably require a significantly more complicated system, with many of the features discussed in O’Reilly and Munakata (2000). However, we believe that it is important to notice that our network can implement arbitrarily complicated schemes of attractors and event-driven transitions with a very simple prescription to find the desired synaptic configuration. This might greatly simplify and speed up a real learning algorithm. Moreover, mixed selectivity neurons that are predicted to be present even before the learning procedure starts can be used to learn mental states that represent rules or other abstract concepts. One example is the creation of mental states corresponding to different temporal contexts, as considered in Rigotti et al. (2010). Recently, it has also been shown (Dayan, 2007) that mixed selectivity neurons implemented with multilinear functions can play an important role in neural systems that implement both habits and rules during the process of learning complex cognitive tasks. Multilinearity implements conditional maps between the sensory input, the working memory state, and an output representing the motor response.

We assumed that the RCNs have fixed random synapses, but this does not imply that our network requires the existence of synapses that are not plastic. It might be possible that the statistics of the random synaptic weights vary on a timescale that is significantly longer than the timescales over which the tasks are learned. We still do not know whether the introduction of this form of learning can improve the performance of the network and to what extent, although we know that in general learning on multiple timescales can be greatly beneficial for memory performance (Fusi et al., 2005). We know that there are learning rules that modify the synaptic weights of neurons that are initially randomly connected without disrupting the performance of the network. This is the case for multilayer perceptrons with synapses that are initialized at random values, as discussed below.

Other Models Based on Randomly Connected Neurons

Networks of randomly connected neurons have been studied since the 1960s (Marr, 1969; Albus, 1971). In these works the authors, inspired by the ideas of P. H. Greene (Greene, 1965), realized that random subsets of input patterns can provide an efficient, compact representation of the information contained in the patterns. At the same time, these representations can be less correlated than the original patterns, and hence they can facilitate learning and memorization. In the neural circuit that we propose, we basically create with the RCNs a compressed representation of the inner mental state and the external input, and in this sense the RCNs play a similar role to the neurons of Greene (1965), Marr (1969), and Albus (1971). Moreover, the non-linearity introduced by the f–I curve of the RCNs contributes to increasing the distances between highly correlated patterns, similarly to the non-linearities introduced in the cited works. It is important to notice that the RCNs provide our recurrent circuit with an explicit dynamical process that decorrelates the patterns representing the mental states and the external inputs and, at the same time, the distances are dilated without disrupting the structure of the basins of attraction (see Scaling Properties of the Basins of Attraction). Simplified models in which the patterns of activity are assumed to be random and uncorrelated do not explicitly address the issue of how the original representations are decorrelated, and whether the topology of the basins of attraction is preserved (see e.g., Hopfield, 1982 for a classic example and Cerasti and Treves, 2010 for a more recent application of the same idea to the feed-forward pre-processing performed by the dentate gyrus).

More recently, randomly connected neurons have been used to generate complex temporal sequences and time-varying input–output relations (Maass et al., 2002; Jaeger and Haas, 2004; Sussillo and Abbott, 2009) and to compress, transmit and decompress information (Candes and Tao, 2004). In many other cases they have also been used implicitly in the form of random initial weights, for example in gradient descent learning algorithms like backpropagation (Zipser and Andersen, 1988). As shown in our manuscript, much of the mixed selectivity needed to solve non-linear separabilities might already be present in the initial conditions when the synaptic weights of hidden units start from a random configuration. We suspect that in many situations the learning rules would not need to modify these synapses to achieve a similar performance.

How Dense Should Neural Representations be?

Our results show that in order to solve the problems related to context dependence, the optimal representations for mental states, external inputs and for the patterns of activities of the RCNs should be dense. This means that the majority of the neurons is expected to respond to a large fraction of aspects of the task, and in general to complex conjunctions of events and inner mental states. Despite the lack of systematic studies providing a direct quantitative estimate of the average coding level f, dense representations have been widely reported in prefrontal cortex (Fuster and Alexander, 1971; Funahashi et al., 1989; Miller et al., 1996; Romo et al., 1999; Wallis et al., 2001; Nieder and Miller, 2003; Genovesio et al., 2005; Mansouri et al., 2006, 2007; Tanji and Hoshi, 2008).

The optimal fraction f for solving context dependence problems is 1/2, and this is not surprising as such a fraction would maximize the amount of information that can be stored in the neural patterns of activity of the RCNs. Indeed, RCNs have to provide the network with patterns of activity that contain the information about both the inner mental states and the external inputs. However, the observed f might be smaller than the optimal value 1/2 for at least two reasons. The first one is related to metabolic costs, as it is clear that sparser representations (small f) would require a lower neural activity and hence a lower energy consumption. The second one concerns the interference between different mental states. The same network probably also has to solve non-context-dependent tasks or subtasks, like simple one-to-one mappings. In such a case, elevated values of f can degrade the performance because of the interference of the memorized representations of the mental states, as already shown by several works on the importance of sparseness for attractor neural networks (see e.g., Amit, 1989). Fortunately, Figure 3B shows that the probability that an RCN solves a context-dependent problem is nearly flat around the maximum at f = 1/2, and it decreases rapidly only for significantly sparse representations. When all these factors are considered, the optimal f is more likely to be in an interval like 0.1–0.5.
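The information argument can be made concrete with the entropy of a single binary neuron that is active with probability f. This is only an illustrative proxy, assuming idealized binary and independent neurons; it is not the quantity plotted in Figure 3B, and the function name is hypothetical.

```python
import math

def binary_entropy(f):
    """Entropy in bits of a binary unit that is active with probability f."""
    if f <= 0.0 or f >= 1.0:
        return 0.0
    return -f * math.log2(f) - (1.0 - f) * math.log2(1.0 - f)

# Compare sparse and dense coding levels (values chosen for illustration only).
for f in (0.05, 0.1, 0.3, 0.4, 0.5):
    print(f"f = {f:.2f}: {binary_entropy(f):.3f} bits per neuron")
```

The entropy peaks at f = 1/2 and changes slowly near the peak, since its derivative vanishes there, whereas it drops markedly for sparse coding levels. This is qualitatively in line with a moderate reduction of f below 1/2 costing little.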

Stochastic Transitions

In our simulations of a WCST-type task, a transition from one rule to another was induced deterministically by an Error Signal or by the absence of an expected reward. However, the parameters of the network and the synaptic couplings can be tuned in such a way that certain transitions between states occur stochastically with some probability (see Stochastic Transitions Between Mental States in Appendix). Such a probability might depend on the production of neuromodulators like acetylcholine or norepinephrine, which have been hypothesized to signal expected and unexpected uncertainty (Yu and Dayan, 2005). In uncertain environments, where reward is not obtained with certainty even when the task is performed correctly, the animal should accumulate enough evidence before switching to a different strategy. Such a behavior could be implemented by assuming that an independent system keeps track of recent reward history and produces a neuromodulator controlling the probability of making a transition between the mental states corresponding to alternative strategies. This scenario could explain the observed behavior of the monkeys in the WCST-type task (Mansouri et al., 2006, 2007) in which, when task rule switching was signaled by a change of reward contingencies, they switched to a different rule with a probability close to 50%. A detailed analysis of the monkey behavior in the particular experiment that we modeled would be very interesting but goes beyond the scope of this work.
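One way to picture such a reward-history system is a leaky running average of recent reward omissions that sets the per-trial switching probability. The sketch below is purely hypothetical: the function name, the integration constant tau, and the ceiling p_max are arbitrary illustrative choices and are not taken from the model or from the Appendix.

```python
def switch_probabilities(rewards, tau=4.0, p_max=0.5):
    """Map a reward history (1 = rewarded, 0 = not rewarded) to per-trial switch probabilities.

    A leaky average of recent omissions plays the role of the neuromodulatory signal.
    tau and p_max are illustrative assumptions, not fitted values.
    """
    omission = 0.0
    probabilities = []
    for reward in rewards:
        # Move the running estimate a fraction 1/tau toward the latest outcome.
        omission += ((1.0 - reward) - omission) / tau
        probabilities.append(p_max * omission)
    return probabilities

# A single unrewarded trial after a run of rewards raises the probability only slightly;
# repeated omissions push it toward the ceiling p_max.
print(switch_probabilities([1, 1, 1, 0]))
print(switch_probabilities([1, 1, 0, 0, 0, 0]))
```

With this kind of integration, an isolated missing reward in an uncertain environment rarely triggers a rule switch, while a sustained change of reward contingencies does.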

Why Attractors?

One of the limitations on the number of implementable transitions in the absence of mixed selectivity units is due to the constraints related to the assumption that initial states are stable patterns of persistent activity, or, in other words, attractors of the neural dynamics. This is based on the assumption that rules are encoded and maintained internally over time as persistent neural activity patterns (Goldman-Rakic, 1987; Amit, 1989; Miller and Cohen, 2001; Wang, 2001). Given the price we have to pay, what is the computational advantage of representing mental states with attractors? One of the greatest advantages resides in the ability to generalize to different event timings, for instance to maintain internally a task rule as long as demanded behaviorally. In most tasks, all animals have a remarkable ability to disregard the information about the exact timing when such information is irrelevant, for example when they have to remember only the sequence of events, and not the times at which they occur. The proposed attractor neural networks with event-driven transitions can generalize to any timing without the necessity of re-training. Generalizing to different timings is a problem for alternative approaches that encode all the detailed time information (Maass et al., 2002, 2007; Jaeger and Haas, 2004) or for feed-forward models of working memory (Goldman, 2009). The networks proposed in Maass et al. (2002), Jaeger and Haas (2004), and Goldman (2009) can passively remember a series of past events, in the best case as in a delay line (Ganguli et al., 2008). The use of an abstract rule to solve a task requires more than a delay line for at least two reasons: (1) Delay lines can be used to generate an input that encodes the past sequence of recent events, and such an input can in principle be used to train a network to respond correctly in multiple contexts. However, the combinatorial explosion of all possible temporal sequences would make training costly and inefficient, as the network should be able to recognize the sequences corresponding to all possible instantiations of the rules. (2) Even if it is possible to train the network on all possible instantiations of the rule, it is still extremely difficult if not impossible to train the network on all possible timings. A delay line would treat as distinct two temporal sequences of events in which the event timings are different, whereas any attractor-based solution would immediately generalize to any timing.
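The contrast between the two kinds of memory can be sketched with a deliberately abstract example. The code below is an assumption-laden caricature, not the network model: the attractor network is reduced to a lookup table of event-driven transitions between two hypothetical rule states, and the delay line to a fixed-length buffer of the most recent time steps. All names and the transition table are hypothetical.

```python
from collections import deque

# Hypothetical event-driven transitions between two rule states.
TRANSITIONS = {("color", "error"): "shape", ("shape", "error"): "color"}

def attractor_readout(event_stream, start="color"):
    """Final rule state; time steps without a relevant event leave the state unchanged."""
    state = start
    for event in event_stream:
        state = TRANSITIONS.get((state, event), state)
    return state

def delay_line_readout(event_stream, length=6):
    """Contents of a buffer holding the last `length` time steps, gaps included."""
    buffer = deque([None] * length, maxlen=length)
    for event in event_stream:
        buffer.append(event)
    return tuple(buffer)

# Same sequence of events (an error, then a reward), different timings.
fast = ["error", "reward", None, None, None, None]
slow = ["error", None, None, None, "reward", None]

print(attractor_readout(fast), attractor_readout(slow))
print(delay_line_readout(fast) == delay_line_readout(slow))
```

The event-driven state ends in the same rule for both timings, whereas the buffer contents differ, so any readout trained on the delay line would have to see both timings separately.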

Models of working memory based on short-term synaptic plasticity (Hempel et al., 2000; Mongillo et al., 2008) can operate in a regime that is also insensitive to timing, but they require the presence of persistent activity and the imposition of the stability conditions on the synaptic matrix, similarly to what we proposed in our approach. Moreover, these attractor networks do not act like fast switches between steady states; instead, they are endowed with slow recurrent dynamics and exhibit transients such as quasi-linear ramping activity on a timescale of up to a second (Wang, 2002, 2008).

Conclusion